Abstract

Objectives

To assess the effectiveness of an intervention package comprising intense education, a range of reporting options, changes in report management and enhanced feedback, in order to improve incident‐reporting rates and change the types of incidents reported.

Design, setting and participants

Non‐equivalent group controlled clinical trial involving medical and nursing staff working in 10 intervention and 10 control units in four major city and two regional hospitals in South Australia.

Main outcome measures

Comparison of reporting rates by type of unit, profession, location of hospital, type of incident reported and reporting mechanism between baseline and study periods in control and intervention units.

Results

The intervention resulted in significant improvement in reporting in inpatient areas (additional 60.3 reports/10 000 occupied bed days (OBDs); 95% CI 23.8 to 96.8, p<0.001) and in emergency departments (EDs) (additional 39.5 reports/10 000 ED attendances; 95% CI 17.0 to 62.0, p<0.001). More reports were generated (a) by doctors in EDs (additional 9.5 reports/10 000 ED attendances; 95% CI 2.2 to 16.8, p = 0.001); (b) by nurses in inpatient areas (additional 59.0 reports/10 000 OBDs; 95% CI 23.9 to 94.1, p<0.001) and (c) anonymously (additional 20.2 reports/10 000 OBDs and ED attendances combined; 95% CI 12.6 to 27.8, p<0.001). Compared with control units, the study resulted in more documentation, clinical management and aggression‐related incidents in intervention units. In intervention units, more reports were submitted on one‐page forms than via the call centre (1005 vs 264 reports, respectively).

Conclusions

A greater variety and number of incidents were reported by the intervention units during the study, with improved reporting by doctors from a low baseline. However, there was considerable heterogeneity between reporting rates in different types of units.

Incident reporting is a tool that enables healthcare workers to disclose adverse events, defined as unintended injury caused by healthcare management rather than the patient's disease,1,2 and near misses. Information from reports enables contributing factors to be identified and corrections made to prevent recurrence of similar incidents.2 With recognition that hospitals should establish effective voluntary incident management systems,3,4,5 it is important that these meet the needs of those expected to use them.

There is evidence from studies comparing incident reporting with other techniques to detect adverse events6,7 and from clinicians themselves8,9 that many incidents are not reported. A number of studies have attempted to improve incident reporting10,11,12,13,14,15; however, a literature review has failed to identify any studies aimed at improving incident reporting using the rigorous study design of a matched controlled study.

This study aimed to improve reporting, to stimulate reporting of a greater variety of incidents by doctors and nurses, and to assess the effectiveness of the components of the intervention.

Methods

Study population

This study was undertaken in South Australia, Australia, which has a population of approximately 1.5 million people and 78 acute public hospitals (12 major city, 44 regional and 22 remote hospitals).16 Nursing and medical staff working in 10 control and 10 intervention units across two regional and four major city tertiary hospitals participated in the study. Table 1 gives the characteristics of these hospitals and units.

Table 1 Details of participating hospitals and units.

| | Control units (n = 10) | Intervention units (n = 10) |
|---|---|---|
| Hospital characteristics | | |
| Major city | | |
| Hospital 1 | – | ICU |
| Hospital 2 | Medical (cardiology) | Medical (neurology) |
| | ICU | ED |
| | Surgical (GI) | Surgical (GI) |
| Hospital 3 | General surgical | General medical |
| | ED | |
| Hospital 4 | Medical (neurology) | Medical (cardiology) |
| Regional | | |
| Hospital 5 | General medical | ED |
| | General surgical | |
| | ICU | |
| Hospital 6 | ED | General medical |
| | | General surgical |
| | | ICU |
| Department characteristics | | |
| Number of ICU beds | 21 | 42 |
| Major city | 15 | 34 |
| Regional | 6 | 8 |
| Number of OBDs in baseline and study period (for combined medical, surgical and ICU areas) | 90 142 | 97 134 |
| Major city | 68 399 | 76 251 |
| Regional | 21 743 | 20 883 |
| Number of patient discharges in baseline and study period | 15 916 | 15 043 |
| Major city | 10 434 | 10 419 |
| Regional | 5412 | 4624 |
| Number of ED attendances in baseline and study period | 78 264 | 66 669 |
| Major city | 60 949 | 44 057 |
| Regional | 17 315 | 22 612 |

ED, emergency department; GI, gastrointestinal; ICU, intensive care unit; OBDs, occupied bed days.

Study design

The two regional hospitals were purposively selected on the basis of their similar sizes16 and geographical isolation from each other. One of the regional hospitals employed doctors to work in its emergency department (ED), whereas in the other hospital general practitioners (GPs) worked on a rotational basis in the ED. In both hospitals, inpatient care was provided by GPs. To reduce contamination of the control and intervention units caused by doctors working across multiple inpatient areas, units were stratified into inpatient areas (including the intensive care unit (ICU), medical and surgical units) and EDs, and were block randomised.

In major city hospitals, intervention and control units were matched by type of unit (medical units, surgical units, ICUs and EDs) and services provided (neurology, cardiology and gastrointestinal surgery). Difficulty in recruiting necessitated non‐random allocation of control and intervention units, in order to ensure a spread of units across hospitals.

This study compared incident‐reporting rates and types of reports generated between (a) baseline and study period and between (b) control and intervention units. Intervention rollout was staggered, from June to August 2003, with units implementing the intervention over a 40‐week period.

Components of the intervention

We conducted focus groups using a topic guide with 14 doctors and 19 nurses8 and surveyed doctors and nurses across four major city and two regional hospitals (n = 773, response rate = 72.8%) using a questionnaire9 to identify barriers to reporting and current reporting practices. Validation of the questionnaire and the methods for conduct and analysis of the focus groups has been reported previously.8,9 This information guided the intervention design.

Results showed that many doctors did not know what or how to report, and that senior doctors particularly feared legal repercussions. Reporting was largely seen as the nurses' responsibility. Fear of disciplinary action, time constraints and lack of feedback were other identified barriers. In addressing these barriers, the following intervention components were developed:

Education

A manual was developed to improve knowledge of reportable incidents and to address fear of disciplinary action. Manuals were distributed to all GPs, medical heads of units, nurse unit managers and patient safety managers, and placed in tearooms and medical residents' rooms. A detailed verbal explanation of all aspects of the study was provided to all medical heads of units, nurse unit managers and patient safety managers, and to most GPs (project officers were unable to schedule a meeting with 20% of GPs). Posters explaining the types of incidents to be reported were strategically placed in handover rooms, medical staff rooms and nurses' stations. Incident forms were prominently displayed in the clinical areas.

Education sessions were scheduled in existing departmental meetings to explain the purpose of the study, changes in reporting processes, the ability to report anonymously, the importance of reporting near misses and the qualified privilege afforded under Commonwealth legislation to identifying information disclosed through the reporting system.

Reduce fear of reporting

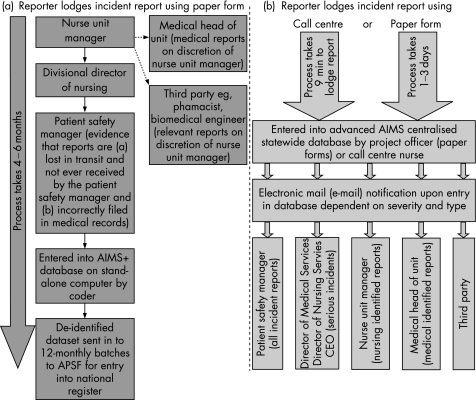

To address the concern that some nurse unit managers used reports against staff, reporting processes were redesigned (fig 1). Reports were initially sent to the patient safety manager. For identified reports, the severity of the incident was validated and action was taken by the nurse unit manager for nurse‐generated reports and by the medical head of unit for doctor‐generated reports. Anonymous reports were validated and managed only by the patient safety manager in each institution.

Figure 1 Incident reporting processes (a) at baseline and in control units during the study period and (b) in the intervention units during the study period. APSF, Australian Patient Safety Foundation; AIMS, Advanced Incident Management System; CEO, chief executive officer.

Each patient safety manager, medical head of unit and nurse unit manager from intervention units was encouraged to attend a two‐day root cause analysis (RCA) workshop, where they were taught how to investigate incidents effectively.17

Reduce reporting burden

A one‐page report form replaced the existing three‐page form. A freecall telephone service (1800NOTIFY) was introduced, enabling staff to report an incident 24 h a day, 7 days a week, to a registered nurse, who entered incident details directly into the statewide Advanced Incident Management System (AIMS) database. Online reporting was piloted in the major city ICUs, in addition to the call centre and paper‐based reporting.

Improve feedback

Four newsletters were distributed to doctors and nurses. These newsletters contained statistics, summaries of quality‐improvement investigations, and de‐identified RCA findings and recommendations from incidents reported in intervention units during the study period. Individual feedback was provided for incidents classified by reporters as resulting in death, permanent loss/lessening of function, additional surgery or increased length of stay. For less serious events, aggregate data were presented at departmental meetings scheduled at least every 3 months.

Statistical analysis

We assumed a type 1 error of 0.05, 90% power and a two‐sided test. Then, if the control group reporting rate was 100/10 000 occupied bed days (OBDs), a sample size of 10 375 OBDs in each group would be sufficient to demonstrate a 50% increase in reporting rate in the intervention group compared with the control group as being statistically significant. This requirement is reduced by the matched design, but increased because of the within‐hospital clustering. Further, a number of subgroup analyses were considered necessary. Hence, a study period was chosen to provide approximately 35 000 OBDs in each study arm at baseline and during the study period.
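The paper does not say which formula produced the figure of 10 375 OBDs. As a minimal sketch, assuming each occupied bed day is treated as an independent trial and the two reporting rates are compared as proportions with the pooled-variance normal approximation, the stated inputs (two-sided alpha of 0.05, 90% power, 100 vs 150 reports/10 000 OBDs) give a per-group requirement within a few OBDs of that figure. The function name `obd_per_group` is hypothetical.

```python
from math import ceil, sqrt
from statistics import NormalDist


def obd_per_group(p_control, p_intervention, alpha=0.05, power=0.90):
    """Per-group sample size for a two-sided comparison of two proportions.

    Pooled-variance normal approximation. Treating every occupied bed day
    as an independent trial is an assumption of this sketch, not something
    stated in the paper.
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided critical value
    z_beta = NormalDist().inv_cdf(power)           # quantile for the target power
    p_bar = (p_control + p_intervention) / 2
    null_sd = sqrt(2 * p_bar * (1 - p_bar))
    alt_sd = sqrt(p_control * (1 - p_control)
                  + p_intervention * (1 - p_intervention))
    delta = abs(p_intervention - p_control)
    return ceil(((z_alpha * null_sd + z_beta * alt_sd) / delta) ** 2)


# Control rate 100/10 000 OBDs and a 50% relative increase (150/10 000 OBDs).
n = obd_per_group(100 / 10_000, 150 / 10_000)
print(n)
```

This calculation ignores both the matching and the within‐hospital clustering, which is consistent with the much larger target of approximately 35 000 OBDs per arm chosen for the study.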

The relative change in reporting rates from baseline to the end of the study between the control and intervention units was assessed using Fisher's exact test. Reporting rates by type of unit (medical, surgical and ED) and location of hospital (major city and regional) were compared using negative binomial regression analysis, adjusting for clustering by hospitals. Poisson regression analysis using robust variance was used to assess changes in the ICU and designation of reporter by profession, since negative binomial regressions would not converge. Comparisons between intervention and control units adjusted for differences at baseline were undertaken by using an interaction term as the measure of effect.
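To illustrate what the interaction term measures: in a log‐linear rate model with period, group and period × group terms, the exponentiated interaction coefficient is the ratio of the two before‐to‐after rate ratios. The sketch below computes that unadjusted point estimate from the total inpatient counts printed in table 2. It is not the authors' fitted model: it ignores clustering by hospital and overdispersion, and it yields no p value. The function names are hypothetical.

```python
from math import exp, log


def rate(reports, exposure, per=10_000):
    """Reports per `per` units of exposure (OBDs or ED attendances)."""
    return reports / exposure * per


def interaction_rate_ratio(c_base, c_end, i_base, i_end):
    """Ratio of before-to-after rate ratios (intervention vs control).

    Each argument is a (reports, exposure) pair. On the log scale this is
    the difference between the two groups' changes in log rate.
    """
    change_intervention = log(rate(*i_end)) - log(rate(*i_base))
    change_control = log(rate(*c_end)) - log(rate(*c_base))
    return exp(change_intervention - change_control)


# Total inpatient reporting, counts as printed in table 2.
rrr = interaction_rate_ratio(
    c_base=(242, 44_380), c_end=(462, 45_762),
    i_base=(398, 48_073), i_end=(930, 49_061),
)
print(round(rrr, 2))
```

A value above 1 means the reporting rate rose proportionally more in intervention units than in control units.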

A principal incident type was allocated for each incident by coders, who were blinded to the location of the incident. Changes in types of incidents reported were analysed using log binomial regression analysis. Principal incident types were classified a priori into those likely and unlikely to implicate the nurse unit manager. The conventional level of p⩽0.05 was taken to represent statistical significance. Analysis was done using the STATA statistical software package V.7.0. Approval was obtained from the ethics committee of each participating hospital.

Results

Reporting rate

Despite matching by type of unit and hospital location, there was considerable heterogeneity between control and intervention units at baseline. Owing to the large size of the major city intervention ICU, there were twice as many ICU beds in intervention units (42 beds) as those in control units (21 beds), a significant difference (χ²(1) = 11.19, p = 0.001; table 1). At baseline, there were significantly more reports made in inpatient intervention units and in ED control units, and there were more anonymous reports generated in control units (table 2).

Table 2 Comparison of reporting rates by type of unit and location of hospital.

| Type of unit | Baseline: control reports | Baseline: intervention reports | Baseline: rate ratio* (95% CI) | End: control reports | End: intervention reports | End: rate ratio* (95% CI) | Significance of the interaction term† | Absolute difference (SEM)‡ |
|---|---|---|---|---|---|---|---|---|
| Reporting rates/10 000 OBDs | | | | | | | | |
| ICU | 22.3 (9/4043) | 89.5 (108/12 068) | 0.2 (0.1 to 0.5) | 17.0 (7/4107) | 118.2 (153/12 943) | 0.1 (0 to 0.3) | 0.094 | 34.0 (31.7) |
| Surgical | 39.7 (87/21 891) | 43.2 (47/10 880) | 0.9 (0.6 to 1.3) | 71.9 (140/19 458) | 150.8 (165/10 940) | 0.5 (0.4 to 0.6) | <0.001 | 76.3 (29.7) |
| Medical | 79.1 (146/18 446) | 96.7 (243/25 125) | 0.8 (0.7 to 1.0) | 141.0 (313/22 197) | 243.1 (612/25 178) | 0.6 (0.5 to 0.7) | <0.001 | 84.5 (30.4) |
| Total inpatient reporting | 54.5 (242/44 380) | 82.8 (398/48 073) | 0.7 (0.56 to 0.77) | 101.0 (462/45 762) | 189.6 (930/49 061) | 0.5 (0.5 to 0.6) | <0.001 | 60.3 (18.6) |
| Reporting rates/10 000 ED attendances | | | | | | | | |
| ED | 21.5 (85/39 504) | 6.3 (24/37 781) | 3.4 (2.2 to 5.4) | 22.2 (86/38 760) | 46.5 (181/28 888) | 0.4 (0.3 to 0.5) | <0.001 | 39.5 (11.5) |
| Reporting rates/10 000 OBDs and 10 000 ED attendances (combined) | | | | | | | | |
| Anonymous¶ | 2.1 (18/83 884) | 0.7 (6/85 854) | 5.9 (2.4 to 13.5) | 5.1 (43/84 552) | 23.9 (186/77 949) | 0.32 (0.21 to 0.53) | <0.001 | 20.2 (3.9) |
| Regional | 55.2 (112/20 283) | 73.5 (156/21 227) | 0.75 (0.6 to 1.0) | 59.7 (112/18 775) | 208.1 (471/22 628) | 0.29 (0.2 to 0.3) | <0.001 | 130.1 (26.6) |
| Major city | 33.8 (215/63 601) | 41.2 (266/64 627) | 0.82 (0.7 to 1.0) | 66.3 (436/65 747) | 97.4 (640/65 681) | 0.68 (0.6 to 0.8) | <0.001 | 23.7 (11.8) |

ED, emergency department; ICU, intensive care unit; OBD, occupied bed day.

*Comparison made using Fisher's exact test.

†Comparison between baseline and end of intervention made using negative binomial regression analysis adjusting for clustering by hospitals. The significance of the intervention effect was tested through the interaction term, with patient discharges as the exposure, with the exception of ICUs, where Poisson regression analysis with robust variance was used.

‡Absolute difference in change in reporting rates per 10 000 OBDs (inpatient units) or 10 000 ED attendances between intervention and control units at the end of the study compared with those at baseline.

¶Anonymous reports during the baseline period and in control units refer to those reports in which the professional designation of the reporter is not given. Anonymous reports during the study period in the intervention units refer to those reports where either the location (n = 63) or the professional designation of the reporter (n = 22), or both (n = 101), were not given by the reporter.

Compared with control units, the intervention resulted in an absolute increase of 60.3 reports/10 000 OBDs (95% CI 23.8 to 96.8 reports/10 000 OBDs) in inpatient areas (p<0.001), 39.5 reports/10 000 ED attendances (95% CI 17.0 to 62.0 reports/10 000 ED attendances; p<0.001) and 20.2 anonymous reports/10 000 ED attendances and OBDs combined (95% CI 12.6 to 27.8 reports/10 000 ED attendances and OBDs combined; p<0.001). Within inpatient areas, the largest improvement occurred in medical units, with an additional 84.5 reports/10 000 OBDs (95% CI 24.9 to 144.1 reports/10 000 OBDs). No improvement in reporting was demonstrated in the ICUs. There was heterogeneity between individual units, with rates in medical and surgical intervention units (n = 6) ranging from 113 to 431 reports/10 000 OBDs.
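The absolute differences quoted here can be traced back to table 2 with simple arithmetic. The sketch below, using hypothetical helper names, recomputes the total inpatient figure as a difference in differences of the printed rates, and then forms an interval as the estimate ± 1.96 × SEM. The paper does not state how its confidence intervals were derived; the normal‐approximation interval is an assumption that happens to reproduce the reported 23.8 to 96.8.

```python
def rate_per_10k(reports, exposure):
    """Reports per 10 000 OBDs (or ED attendances)."""
    return reports / exposure * 10_000


def difference_in_differences(c_base, c_end, i_base, i_end):
    """Change in the intervention rate minus change in the control rate."""
    return (i_end - i_base) - (c_end - c_base)


def wald_ci(estimate, sem, z=1.96):
    """Normal-approximation 95% interval (an assumption of this sketch)."""
    return estimate - z * sem, estimate + z * sem


# Total inpatient reporting rates as printed in table 2.
did = difference_in_differences(c_base=54.5, c_end=101.0,
                                i_base=82.8, i_end=189.6)
low, high = wald_ci(60.3, 18.6)  # estimate and SEM from table 2

print(round(rate_per_10k(242, 44_380), 1))  # control baseline rate
print(round(did, 1), round(low, 1), round(high, 1))
```

The same two steps applied to the ED row (39.5, SEM 11.5) and the anonymous row (20.2, SEM 3.9) give the other intervals quoted in this paragraph.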

Reporter designation

Most of the reports were generated by nurses (84%), followed by allied health professionals (11%) and doctors (5%). The intervention did not significantly improve reporting by doctors in inpatient areas, but did improve reporting in EDs. Conversely, reporting by nurses improved in the inpatient areas, but not in the EDs (table 3). With regard to anonymous reports, in 63 reports, the reporters identified only their profession (8 doctors and 55 nurses), 22 reports contained only the location of the incident and in 101 reports neither was disclosed. In hospitals with only one intervention unit (hospitals 1, 4 and 5), no paper reports were submitted anonymously.

Table 3 Comparison of reporting rates (incident reports/10 000 occupied bed days/emergency department attendances) by profession.

| Professional designation | Baseline: control reports | Baseline: intervention reports | Baseline: risk ratio* (95% CI) | End: control reports | End: intervention reports | End: risk ratio* (95% CI) | Significance of the interaction term† | Absolute difference (SEM)‡ |
|---|---|---|---|---|---|---|---|---|
| Reporting rates/10 000 OBDs | | | | | | | | |
| Doctors | 0.2 (1/44 380) | 0.6 (3/48 073) | 0.4 (0 to 3.4) | 0.7 (3/45 762) | 6.3 (31/49 061) | 0.1 (0 to 0.3) | 0.213 | 5.2 (3.6) |
| Nurses | 50.5 (224/44 380) | 80.3 (386/48 073) | 0.6 (0.5 to 0.7) | 88.5 (405/45 762) | 177.3 (870/49 061) | 0.5 (0.4 to 0.6) | <0.001 | 59.0 (17.9) |
| Allied health | 1.1 (5/44 380) | 5.2 (25/48 073) | 0.2 (0 to 0.5) | 3.3 (15/45 762) | 14.5 (71/49 061) | 0.3 (0.1 to 0.4) | <0.001 | 7.1 (4.4) |
| Reporting rates/10 000 ED attendances | | | | | | | | |
| Doctors | 0.8 (3/39 504) | 0 (0/37 781) | N/A | 0.3 (1/38 760) | 9.0 (26/28 888) | 0.03 (0 to 0.2) | 0.001 | 9.5 (3.7) |
| Nurses | 18.7 (74/39 504) | 5.8 (22/37 781) | 3.2 (2.0 to 5.3) | 19.3 (75/38 760) | 31.2 (90/28 888) | 0.6 (0.5 to 0.8) | 0.302 | 24.8 (9.2) |
| Allied health | 0.3 (1/39 504) | 0 (0/37 781) | N/A | 0.3 (1/38 760) | 21.1 (61/28 888) | 0.01 (0 to 0.06) | 0.001 | 21.1 (5.3) |

ED, emergency department; ICU, intensive care unit; N/A, not applicable; OBD, occupied bed day.

*Comparison made using Fisher's exact test.

†Comparison between baseline and end of intervention made using Poisson regression analysis, adjusting for clustering by hospitals, and allowing for robust estimates of standard errors. In order to test for the significance of the intervention effect, the statistical significance of the interaction term was assessed.

‡Absolute difference in change in reporting rates/10 000 OBDs (inpatient units) or 10 000 ED attendances between intervention and control units at the end of the study compared with those at baseline.

Reporting format

The majority (79%) of the 1275 reports lodged during the study period in intervention units were reported using the single‐page form, with 21% submitted through the call centre (table 4). The average time taken to report an incident through the call centre was 9 min 20 s; 57% of reports were logged out of office hours and 24% made on the weekend. Staff in major city hospitals used the call centre significantly more often than their regional counterparts (32.1% vs 4.1%, p<0.001). Regional units were the first to implement call centre reporting. Poor call centre use by regional hospitals is likely due to protracted report‐taking by a few inexperienced call centre operators in the first few weeks of commencing the project. More documentation‐related reports (13.1% vs 4.9% of all reports, p<0.001) and fewer human performance‐related reports (2.3% vs 7.2% of all reports, p<0.001) were generated using the one‐page form compared with the call centre method.

Table 4 Demographic profile of reports submitted using the one‐page form, call centre and online‐reporting options.

| | Reports generated using one‐page form, n = 1005 (%) | Reports generated using call centre, n = 264 (%) | Reports generated electronically, n = 6 | Number of incidents lodged | p Value* |
|---|---|---|---|---|---|
| Type of unit | | | | 1275 | <0.001 |
| Medical | 70.3 | 29.7 | N/A | 612 | |
| Surgical | 78.2 | 21.8 | N/A | 165 | |
| ICU | 86.9 | 9.1 | 3.9% | 153 | |
| ED | 97.2 | 2.8 | N/A | 181 | |
| Anonymous | 83.5 | 16.5 | N/A | 164 | |
| Total | 78.8 | 20.7 | 0.5% | | |
| Regional/metro location | | | | 1275 | |
| Regional | 95.9 | 4.1 | N/A | 518 | |
| Major city | 67.1 | 32.1 | 0.8% | 757 | <0.001 |
| Profession | | | | 1017† | |
| Doctor | 82.5 | 17.5 | | 57 | |
| Nurse | 74.8 | 24.6 | | 960 | 0.933 |

ED, emergency department; ICU, intensive care unit; N/A, not applicable.

*Analysis undertaken using Fisher's exact test.

†132 allied health and 123 anonymous reports not included in analysis.

Although staff were given training and access, only six reports were lodged online in the ICU. There was no significant difference by profession as to the reporting format used.

Change in types of incidents reported

Table 5 shows the comparison of principal incident types between intervention and control units at the end of the study period, having adjusted for baseline reporting practices. Although the number of falls‐related reports doubled in intervention units, falls declined significantly as a percentage of all incidents reported (from 36.1% to 23.8% of all reports, p<0.001). Intervention units reported increased numbers of documentation, clinical management, aggression and environmental incidents during the study period, compared with their baseline reporting practices and with reporting in the control units. More incidents were submitted anonymously for incidents in which line managers were often implicated (clinical management, organisational management, and behaviour and human performance incidents) compared with identified reports (17.9% vs 11.7%, p = 0.009).

Table 5 Comparison of reporting rates at baseline and during the study period according to the principal incident type in matched control and intervention departments.

| Principal incident type | Baseline: control % of reports (n) | Baseline: intervention % of reports (n) | Baseline: rate ratio* | End: control % of reports (n) | End: intervention % of reports (n) | End: rate ratio* | Comparison at end adjusted for baseline† p value |
|---|---|---|---|---|---|---|---|
| Falls | 39.9% (131) | 36.1% (152) | 1.1 (0.9, 1.4) | 48.0% (263) | 23.8% (304) | 2.01 (1.7, 2.3) | <0.001 |
| Medication | 24.4% (80) | 26.8% (113) | 0.91 (0.7, 1.2) | 25.2% (138) | 27.9% (356) | 0.90 (0.7, 1.1) | 0.942 |
| Documentation | 2.7% (9) | 4.5% (19) | 0.61 (0.3, 1.3) | 3.0% (16) | 11.4% (146) | 0.25 (0.1, 0.4) | 0.004 |
| Clinical management‡ | 7.3% (24) | 8.1% (34) | 0.91 (0.5, 1.5) | 5.3% (29) | 11.4% (146) | 0.46 (0.3, 0.7) | 0.035 |
| Accident/OH&S | 4.6% (15) | 5.5% (23) | 0.84 (0.4, 1.6) | 4.0% (22) | 4.8% (61) | 0.84 (0.5, 1.3) | 0.949 |
| Organisational management¶ | 3.7% (12) | 2.4% (10) | 1.54 (0.7, 3.7) | 5.7% (31) | 4.1% (53) | 1.36 (0.9, 2.0) | 0.868 |
| Behaviour/human performance | 6.1% (20) | 4.3% (18) | 1.43 (0.7, 2.7) | 3.5% (19) | 3.3% (42) | 1.05 (0.6, 1.8) | 0.339 |
| Blood/blood products | 0.3% (1) | 0.9% (4) | 0.32 (0, 2.6) | 1.1% (6) | 0.7% (8) | 1.74 (0.6, 5.1) | 0.147 |
| Medical device/equipment | 2.7% (9) | 6.9% (29) | 0.40 (0.2, 0.8) | 1.3% (7) | 4.8% (61) | 0.27 (0.1, 0.6) | 0.720 |
| Aggression | 4.0% (13) | 2.4% (10) | 1.67 (0.7, 3.9) | 1.8% (10) | 4.4% (56) | 0.41 (0.2, 0.8) | 0.011 |
| Security | 0.6% (2) | 0 | | 0.9% (5) | 1.2% (15) | 0.78 (0.2, 2.1) | 0.290 |
| Buildings/fittings/surroundings | 0.9% (3) | 0 | | 0 | 1.2% (15) | | 0.005 |
| Infection | 0 | 0.2% (1) | | 0.2% (1) | 0.3% (4) | 0.58 (0, 4.6) | 0.806 |
| Nutrition | 0.3% (1) | 0.7% (3) | 0.43 (0, 8.0) | 0.2% (1) | 0.4% (5) | 0.46 (0, 3.4) | 0.723 |
| Total | 100% (328) | 100% (421) | 0.045 | 100% (548) | 100% (1275) | <0.001 | <0.001 |

OH&S, Occupational Health and Safety.

*Fisher's exact test.

†End of study comparisons between intervention and control units were undertaken by formally testing the interaction term between period and study group in the generalised linear models.

‡Clinical management examples include procedures not done, delayed, incorrect or inadequate, wrong body part or site, unnecessary assessment, test or procedure, non‐adherence to rules, policy and procedure.

¶Organisational management examples include unavailable supplies, inadequate supervision/staffing level and after‐hour delays.
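Table 5 reports each incident type as a share of all reports, so a type can grow in absolute number while shrinking as a percentage. A minimal sketch using the falls counts for intervention units from table 5 (the helper name `share` is hypothetical):

```python
def share(count, total):
    """Percentage of all reports accounted for by one incident type."""
    return 100 * count / total


# Falls in intervention units: baseline 152 of 421, study period 304 of 1275.
baseline_share = share(152, 421)
end_share = share(304, 1275)
count_ratio = 304 / 152

print(round(baseline_share, 1), round(end_share, 1), count_ratio)
```

The number of falls reports doubles, but because the total number of reports roughly triples, the share of falls drops from about 36% to about 24%.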

Of the 57 doctor‐initiated reports made during the study period, most related to clinical management issues (n = 18) and medication errors (n = 13). Because the majority of all reports were submitted by nurses, the overall changes in incident types, shown in table 5, largely reflect those of nurses.

Addressing barriers to reporting

Education

One education session was provided in each unit for doctors before the project commenced. A number of sessions over a 2‐week period were provided to nursing staff. Allied health workers attended departmental meetings, interacted with staff and were exposed to promotional posters and incident forms displayed in intervention units, which may have improved their reporting from a very low baseline (table 3).

All nurse unit managers and patient safety managers, and three of the six major city medical heads of units, attended the RCA workshop. Despite the offer of a financial incentive (lost wages and all costs associated with attending), no GPs attended. Reporting by doctors in units where the medical heads of units attended the workshop was higher than in areas where this did not occur (35 vs 14 reports).

Feedback

There were 185 incidents in which severity was not classified by the reporter. Individual feedback was provided to reporters of the 41 (3.7%) incidents that resulted in death or permanent loss of function, and to reporters of the 90 (8.0%) incidents that caused permanent lessening of function, additional surgery or increased length of stay. Project officers provided aggregate feedback on less serious incidents to nurses monthly in all intervention units, in sessions at shift change, with the exception of the regional ICU, where feedback was given every 3 months. Feedback to doctors at departmental meetings was more difficult. In the four regional units, despite a number of attempts, only two feedback sessions were provided, because of a lack of participation by GPs. Units receiving ⩾3 feedback sessions in the 40‐week study period included the major city ED and two major city medical units. These three units produced more doctor‐generated reports than the seven other intervention units combined.

Discussion

The intervention resulted in more anonymous reports and more reports in EDs, medical and surgical units, but not in ICUs. There were a greater number of doctor‐initiated reports in the ED and more nurse‐generated reports in areas other than the ED.

The failure to improve reporting in ICUs may be due to the fact that, unlike other successful ICU reporting studies,10,12 project officers were unable to provide intense education, facilitation and feedback to the 200 nurses and 40 doctors. The lack of doctor‐initiated reports meant that feedback was limited to discussion of incidents generated in other areas, with potentially limited perceived relevance. Attributes of those units and groups demonstrating significant improvement in reporting are outlined in box 1.

Although other studies to improve incident reporting have had greater success than our study in inpatient areas7,11 and the ED,18,19 without a control unit for comparison, it is difficult to attribute the improvement to the intervention. With regard to reporting within professional groups, our doctor‐initiated reporting rate was lower than studies targeting only doctors using intense facilitation,10,15,20 and yet comparable with14 or higher than studies not restricted to doctors.21,22 As demonstrated in other studies targeting ⩾1 professional groups, the majority of reports in our study were generated by nurses.11,14

In our study, one‐fifth of all reports were generated using the call centre, which is higher than the 9% identified in a US study in primary practice.13 As with other studies in institutions offering a variety of reporting options, when given a choice, staff prefer to use paper‐based reporting to online reporting.

Possible reasons for the doubling of reports in surgical and medical control units during the study period include contamination by intervention unit staff and allied health professionals working in control units as part of normal hospital activity, and the impact of other quality activities in the hospital at the time of the intervention, including state‐wide RCA training.

The ability of the study to facilitate reporting of a more diverse range of incidents is important. In a climate in which staff are constantly facing time pressures, it is important that resources are focused on reporting incidents for which there is limited knowledge about contributing factors and in which important lessons can be learned.

Even though our intervention actively provided feedback and highlighted the ability to report anonymously, it lacked elements identified in other successful reporting systems.23 Reports were not sent to an independent organisation.24 Most reports were managed by nurse unit managers and medical heads of units who were not provided with additional time, financial assistance, or a risk register to assist in prioritising and taking action on the many more reports being generated. Unlike aviation,25 there was no consistent systematic evaluation of reports at a macro‐level by an independent expert trained at identifying underlying causes of incidents in the system.

There were a number of limitations to our study. Contamination of results was suggested by the finding that 15 call centre incidents and five one‐page reports were generated by staff in control units. Such contamination diminished the measurable effect of the intervention. Lack of randomisation in major city hospitals might have introduced selection bias. Baseline reporting in the intervention ICU was higher than in its control unit, whereas baseline reporting in the control ED was higher than in its intervention unit. Our analysis focused on the relative change between baseline and study period, to take into account the observed baseline differences. To reduce any selection bias associated with non‐randomised sampling of major city units, wherever possible, we matched unit types within the hospital (eg, matching medical subspecialty with medical subspecialty) and specialties between hospitals (eg, neurology unit in hospital 4 with neurology unit in hospital 2). However, units with higher reporting rates at baseline may be more amenable to strategies to increase reporting, or units with lower reporting rates at baseline may have greater potential for improvement.

Our finding that it is possible to improve reporting rates and diversify the types of incidents reported is encouraging. If voluntary reporting is not successful in identifying adverse events, particularly by doctors, then there is the real risk that governing bodies will not continue to support it, or that a more regulatory approach will be taken instead.

Box 1: Features distinctive in intervention units demonstrating improved incident reporting

Medical line managers attended root cause analysis training specifically designed to teach a systems approach to error management.

The initial education captured the majority of doctors.

Departmental education sessions were held at least every 10 weeks, with discussion of incidents conducted for at least 20 min.

Feedback provided clinically relevant incidents for discussion.

Posters and manuals were clearly displayed in clinical areas, describing what types of incidents staff should report.

Proficient call centre nurses captured reports in a timely manner.

On‐line reporting was not offered.

Acknowledgments

We thank the South Australian Safety and Quality Council for funding this project; the Australian Patient Safety Foundation for providing the AIMS® software; executive staff in the six hospitals for supporting the project; Jesia Berry, Marilyn Kingston, Michelle DeWit and Rhonda Bills for assisting in implementation of the study; and the doctors, nurses and allied health workers who submitted reports.

Abbreviations

ED - emergency department

GP - general practitioner

ICU - intensive care unit

OBD - occupied bed day

RCA - root cause analysis

Footnotes

Competing interests: None declared.

No reprints will be available.

References

- 1. Wilson R M, Runciman W B, Gibberd R W, et al. The Quality in Australian Health Care Study. Med J Aust 1995;163:458–471.

- 2. Runciman W B, Moller J. Iatrogenic injury in Australia. A report prepared by the Australian Patient Safety Foundation for the National Health Priorities and Quality Branch of the Department of Health and Aged Care of the Commonwealth Government of Australia. Adelaide, South Australia: Australian Patient Safety Foundation, 2001.

- 3. Australian Council for Safety and Quality in Health Care. Maximising national effectiveness to reduce harm and improve care: fifth report to the Australian Health Ministers' Conference. Canberra: Safety and Quality Council, 2004.

- 4. Expert Group on Learning from Adverse Events in the NHS. An organisation with a memory. London: Stationery Office, 2000.

- 5. Kohn L T, Corrigan J M, Donaldson M S. To err is human: building a safer health system. Washington, DC: National Academy Press, 2000:1–16.

- 6. Schmidek J M, Weeks W B. Relationship between tort claims and patient incident reports in the Veterans Health Administration. Qual Saf Health Care 2005;14:117–122.

- 7. Jha A K, Kuperman G J, Teich J M, et al. Identifying adverse drug events: development of a computer‐based monitor and comparison with chart review and stimulated voluntary report. J Am Med Inform Assoc 1998;5:305–314.

- 8. Kingston M J, Evans S M, Smith B J, et al. Attitudes of doctors and nurses towards incident reporting: a qualitative analysis. Med J Aust 2004;181:36–39.

- 9. Evans S M, Berry J G, Smith B J, et al. Attitudes and barriers to incident reporting: a collaborative hospital study. Qual Saf Health Care 2006;15:39–43.

- 10. O'Neil A C, Petersen L A, Cook E F, et al. Physician reporting compared with medical‐record review to identify adverse medical events. Ann Intern Med 1993;119:370–376.

- 11. Kivlahan C, Sangster W, Nelson K, et al. Developing a comprehensive electronic adverse event reporting system in an academic health center. Jt Comm J Qual Improv 2002;28:583–594.

- 12. Beckmann U, Bohringer C, Carless R, et al. Evaluation of two methods for quality improvement in intensive care: facilitated incident monitoring and retrospective medical chart review. Crit Care Med 2003;31:1006–1011.

- 13. Fernald D H, Pace W D, Harris D M, et al. Event reporting to a primary care patient safety reporting system: a report from the ASIPS collaborative. Ann Fam Med 2004;2:327.

- 14. Nakajima K, Kurata Y, Takeda H. A web‐based incident reporting system and multidisciplinary collaborative projects for patient safety in a Japanese hospital. Qual Saf Health Care 2005;14:123–129.

- 15. Weingart S N, Callanan L D, Ship A N, et al. A physician‐based voluntary reporting system for adverse events and medical errors. J Gen Intern Med 2001;16:809–814.

- 16. Australian Institute of Health and Welfare. Australian Hospital Statistics 2004–2005. Catalogue number HSE41. Canberra, ACT: Commonwealth Government of Australia, 2006.

- 17. Bagian J P, Lee C, Gosbee J, et al. Developing and deploying a patient safety program in a large health care delivery system: you can't fix what you don't know about. Jt Comm J Qual Improv 2001;27:522–532.

- 18. Fordyce J, Blank F S L, Pekow P, et al. Errors in a busy emergency department. Ann Emerg Med 2003;42:324–333.

- 19. Sucov A, Shapiro M J, Jay G, et al. Anonymous error reporting as an adjunct to traditional incident reporting improves error detection. Acad Emerg Med 2001;8:498–499.

- 20. Welsh C H, Pedot R, Anderson R J. Use of morning report to enhance adverse event detection. J Gen Intern Med 1996;11:454–460.

- 21. Sutton J, Standen P, Wallace A. Unreported accidents to patients in hospital. Nurs Times 1994;90:46–49.

- 22. Wolff A M, Bourke J, Campbell I A, et al. Detecting and reducing hospital adverse events: outcomes of the Wimmera clinical risk management program. Med J Aust 2001;174:621–625.

- 23. Leape L L. Reporting of adverse events. N Engl J Med 2002;347:1633–1638.

- 24. Beckmann U, West L F, Groombridge G J, et al. The Australian Incident Monitoring Study in Intensive Care: AIMS–ICU. The development and evaluation of an incident reporting system in intensive care. Anaesth Intensive Care 1996;24:314–319.

- 25. Billings C E. The NASA aviation safety reporting system: lessons learned from voluntary incident reporting. Enhancing patient safety and reducing errors in health care. Chicago, IL: National Patient Safety Foundation, 1999.