Abstract

Objective

An intervention consisting of an evidence-based medicine (EBM) course in combination with case method learning sessions (CMLSs) was designed to enhance the professional performance, self-efficacy and job satisfaction of occupational physicians.

Methods

A cluster randomized controlled trial was set up and data were collected through questionnaires at baseline (T0), directly after the intervention (T1) and 7 months after baseline (T2). The data of the intervention group [T0 (n = 49), T1 (n = 31), T2 (n = 29)] and control group [T0 (n = 59), T1 (n = 28), T2 (n = 28)] were analysed in mixed model analyses. Mean scores of the perceived value of the CMLS were calculated in the intervention group.

Results

The overall effect of the intervention over time comparing the intervention with the control group was statistically significant for professional performance (p < 0.001). Job satisfaction and self-efficacy changes were small and not statistically significant between the groups. The perceived value of the CMLS to gain new insights and to improve the quality of their performance increased with the number of sessions followed.

Conclusion

An EBM course in combination with case method learning sessions is perceived as valuable, and there is evidence that it enhances the professional performance of occupational physicians. However, it does not seem to influence their self-efficacy and job satisfaction.

Keywords: Evidence-based medicine, Continuing medical education, Knowledge management, Occupational physician, Professionalism

Introduction

After completing their formal education, physicians have the moral and ethical obligation to keep their knowledge up-to-date. After all, knowledge is dynamic and can be outdated within as little as 10 years (Donen 1998). Physicians must therefore commit themselves to continuing medical education (CME) in order to guarantee the quality of care (Davis et al. 2003). Yet it is difficult for physicians to remain abreast of new evidence and to integrate this evidence into their own clinical practice. Like all physicians, occupational physicians (OPs) are currently overwhelmed by the quantities of evidence being published, and they lack both a good knowledge infrastructure and the time required to keep up (Bakken 2001; Ely et al. 2002; Hugenholtz et al. 2007). Moreover, many OPs lack the skills in epidemiology and statistics that would allow them to evaluate the scientific evidence available to them with an adequate degree of accuracy (Sackett and Parkes 1998; McCluskey and Lovarini 2005; McConaghy 2006). As a result, OPs tend to depend on their routines or on the opinions of colleagues or experts when searching for answers to their questions about patient care (Ely et al. 2002; Schaafsma et al. 2004).

Efforts have been made to support OPs in the uptake of knowledge. More and more evidence-based guidelines and systematic reviews have been developed with a view to consolidating high-quality knowledge into a more practical form (Birrell and Beach 2001; Eakin and Mykhalovskiy 2003). Nevertheless, the implementation of these guidelines leaves much to be desired (Grol 2001; Gross et al. 2001; Grimshaw et al. 2006). In addition, the practice of evidence-based medicine (EBM) has been in vogue among OPs over the past few decades, thus integrating the best external evidence with individual clinical expertise and patients’ choices (Sackett et al. 1996; Timmermans and Kolker 2004).

As occupational health specialists, OPs must consider their patients’ working conditions, workplace environments, the management priorities, and labour legislation (Lurie 1994). They also have to deal with a whole range of diseases, making it harder for them to keep up with all the latest evidence. A survey among Dutch OPs revealed that most of them consult the most up-to-date evidence in their fields infrequently (averaging no more than three times per month) and that they make only sporadic use of practice guidelines (Noordam and de Vries 2002). These factors subsequently influence the quality of the advice they give to their patients.

In order to enhance the professional performance of OPs in The Netherlands by helping them make greater use of available knowledge, a multifaceted intervention has been developed. CME studies have already demonstrated that an intervention is more effective when designed in a multifaceted manner. Interventions designed for a small group of physicians from within a single discipline, and interactive interventions relying on problem-based learning strategies are proven success factors which were taken into account in designing the intervention (Davis et al. 1999; Smits et al. 2003).

The incorporation of these new elements into physicians’ routine practice was aimed at enhancing their job satisfaction. The new knowledge and improved skills gained by means of this scheme could, for example, help prevent the effects of boredom; boredom can occur among OPs as a result of routinization and repetitiveness of the job, as well as lack of opportunities for CME, all of which are factors that contribute to a reduction in job satisfaction (Kushnir et al. 2000; Bovier and Perneger 2003). In addition to the stated objective of enhancing physicians’ job satisfaction, it was also theorized that the intervention would have a positive impact on the occupational self-efficacy of OPs, given the fact that the status of OPs is considered to be lower than that of other medical specializations (Walsh 1986). By teaching them EBM and by supporting them in discussing the nature and practice of their profession, the intervention aims to improve their sense of self-confidence in the quality of the work that they perform.

This study combined a didactic EBM course with recurrent case method learning sessions (CMLSs) in small peer groups. It was hypothesized that when OPs are able to find, share, and integrate this knowledge, professional performance will improve and both job satisfaction and occupational self-efficacy will be enhanced.

Methods

Study population and inclusion

Study participants were Dutch OPs working for occupational health service (OHS) providers or in private practices. They were recruited both via OHS providers and calls for participants published in the Newsletter of The Netherlands Society of Occupational Medicine (NSOM). Potential participants received a brochure containing information about the study, and were requested to return the informed consent and application form if they decided to participate. Inclusion criteria for study participation were commitment of the participant’s OHS management and Internet access in the workplace.

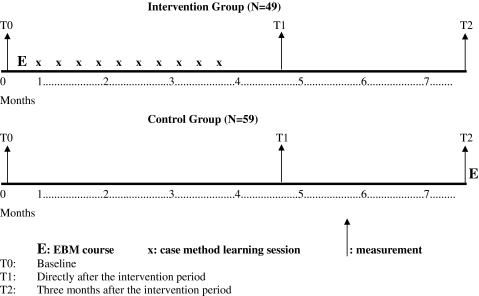

Study design and procedure

This study was a randomized controlled trial, with randomization on the peer group level. Before randomization, all participants were clustered into peer groups and each group was randomly assigned to either the intervention group or control group. The peer groups already existed or were formed by the researchers on a geographical basis. Block randomization, of four groups per block, was applied in cases where two or more groups from one OHS provider participated in the study. The intervention group participated in an EBM training programme, consisting of an EBM course paired with case method learning sessions, lasting for a period of 4 months. OPs randomized to the control group applied their usual standards of care during the 4-month intervention period. Pre- and post-test measurements were conducted by means of a questionnaire. Both the intervention group and the control group received the questionnaire on three occasions; one baseline questionnaire just before the intervention period (T0), one directly after the intervention period (T1), and a final one (T2) 3 months after the intervention period (see Fig. 1).
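The allocation step described above can be illustrated with a minimal sketch of permuted-block randomization at the cluster (peer group) level. It is not the authors' actual procedure: the function name `block_randomize`, the peer group labels, the fixed seed, and the 1:1 allocation within each block of four are all assumptions made for illustration.

```python
import random


def block_randomize(groups, block_size=4, seed=None):
    """Assign clusters (peer groups) to study arms in permuted blocks.

    Assumptions (not stated in the article): allocation is 1:1 within
    each block, and block_size is an even number.
    """
    if block_size % 2 != 0:
        raise ValueError("block_size must be even for 1:1 allocation")
    rng = random.Random(seed)
    allocation = {}
    for start in range(0, len(groups), block_size):
        block = groups[start:start + block_size]
        # Balanced label list for one block, shuffled independently per block.
        labels = ["intervention", "control"] * (block_size // 2)
        rng.shuffle(labels)
        for group, arm in zip(block, labels):
            allocation[group] = arm
    return allocation


# Hypothetical peer groups from a single OHS provider.
peer_groups = [f"peer_group_{i}" for i in range(1, 9)]
allocation = block_randomize(peer_groups, block_size=4, seed=2005)
for group, arm in allocation.items():
    print(group, "->", arm)
```

Because whole peer groups are allocated rather than individual OPs, every member of a group ends up in the same arm, which is why the analysis later needs to adjust for clustering.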

Fig. 1. Time frame of the randomized controlled trial

Intervention

OPs in the intervention group received an EBM course of three half-days spread over 2 weeks. During this course they learned the basics of EBM: a general introduction to EBM, instructions on searching for literature using PubMed, and techniques for critical appraisal of the literature. In addition, a member of each peer group volunteered to be the chairperson and received training in chairing case method learning sessions (CMLSs).

Upon completion of the EBM course, the OPs scheduled approximately ten CMLSs with their peer group (6–8 persons). These sessions took place every other week and lasted 1–1½ h. During these sessions, OPs were challenged to each discuss one case in a concise and structured format, and to give one another feedback on how and where to find knowledge on those cases. At the end of a session, the OPs chose a few cases (half of the total number of discussed cases) for which an evidence search was carried out by the respective OPs. This search was conducted by means of the EBM method learned using a standardized form and guided by peers’ feedback. The results of the search were presented briefly at the beginning of the next session.

During the intervention period, OPs from both the intervention group and the control group had access to the Internet and to a helpdesk offering support in conducting literature searches and accessing full-text articles. The control group received the EBM course after the study was completed. The intervention was pilot-tested and evaluated with one peer group, but no major adjustments of the original concept were deemed to be necessary. The study was carried out from September 2005 until the beginning of January 2006; both the EBM course and the CMLSs were accredited by the Dutch “Board of Registration of Doctors of Social Medicine” [Sociaal-Geneeskundigen Registratie-Commissie (SGRC)].

Process and outcome measures

Process measurement assessed the extent of OPs’ participation in the CMLSs. Participation was considered to be good if at least eight sessions were attended, moderate if six or seven sessions were attended, and poor if fewer than six sessions were attended. Furthermore, the length of each CMLS, the number of cases discussed per CMLS and the number of searches per CMLS were assessed.
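The participation thresholds can be written as a small classification rule. This is a sketch only; the function name `participation_category` is hypothetical, and the cut-offs are the ones stated in the text.

```python
def participation_category(sessions_attended: int) -> str:
    """Classify CMLS participation using the cut-offs given in the text.

    good: at least eight sessions; moderate: six or seven; poor: fewer than six.
    """
    if sessions_attended < 0:
        raise ValueError("sessions_attended cannot be negative")
    if sessions_attended >= 8:
        return "good"
    if sessions_attended >= 6:
        return "moderate"
    return "poor"


# Example: attendance counts for three hypothetical participants.
for attended in (9, 6, 3):
    print(attended, participation_category(attended))
```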

Professional performance, self-efficacy, and job satisfaction were the main outcomes measured by this study. In addition, the perceived utility of the CMLS was assessed.

Professional performance. Professional performance was defined as the self-reported practice of keeping up with and using knowledge in daily practice. A questionnaire was designed, modelled on a non-validated Dutch questionnaire developed by Schaafsma et al. (2004), which included questions to determine the amount of time spent on keeping up-to-date, and the extent of use of the Internet and literature databases.

Self-efficacy. The occupational self-efficacy of the OPs was defined as each individual person’s belief in his or her own ability to perform his or her job, and was measured using Schyns and von Collani’s validated occupational self-efficacy scale (reliability 0.92) (Schyns and von Collani 2002).

Job satisfaction. OPs’ job satisfaction was measured using 7 of the 13 subscales of the validated physician worklife survey (PWS). These subscales were: autonomy (reliability 0.70), personal time (reliability 0.79), patient care issues (reliability 0.74), relationships with colleagues (reliability 0.72), global job satisfaction (reliability 0.86), career satisfaction (reliability 0.88), and specialty satisfaction (reliability 0.82). Both the self-efficacy and job satisfaction questionnaires were translated into Dutch by a professional translator and one of the researchers (NH), and adjusted to the OPs’ specific situations (Linzer et al. 2000).

Perceived utility of the CMLS. To determine the utility of the CMLS, all participants filled out a short evaluation form after every session, concerning the contribution of the CMLS to gaining new insights and to improving the quality of their performance.

Statistical analysis

Due to the intensity of the intervention, our target sample size was 100 OPs, with 50 in the intervention and control groups alike. Differences in baseline characteristics were tested with t-tests for continuous variables and Chi-square tests for categorical variables. To define the effect of the intervention on professional performance, job satisfaction, and self-efficacy, intention to treat analyses were performed in mixed model analyses, based on repeated measurements with adjustments for the cluster randomization. First, the overall effect of the intervention over time was calculated on the basis of a comparison between the intervention group and the control group for professional performance, job satisfaction, and self-efficacy. Next, mean scores measuring the utility of the CMLSs were calculated in the intervention group, as expressed in the contribution of the CMLSs to this group’s gaining new insights into their field and to the quality of their performance. The utility of the CMLSs as related to the number of sessions attended was calculated by GLM analyses for repeated measures. Finally, subgroup analyses were performed within the intervention group to investigate potential predictors for high or low scores on professional performance, job satisfaction, and self-efficacy. The following predictors were analysed: the effect of participation in the CMLSs (good, moderate or poor); age (higher or lower than the median of 48 years) and experience as an OP (more or less than the median of 13 years). Statistical analyses were carried out using SPSS version 13.0.

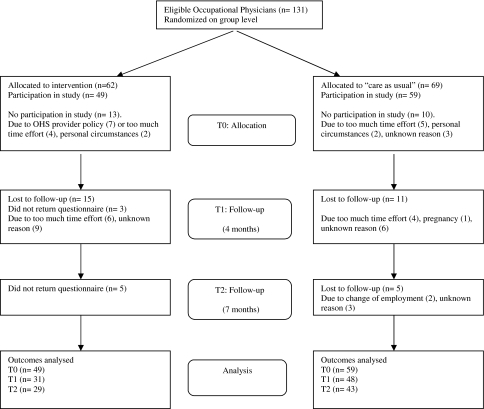

Results

In total, 131 OPs were recruited from 16 occupational health services and 8 private practices between May 2005 and September 2005. Twenty-three OPs withdrew before the start of the intervention, leaving 108 participating OPs. Primary reasons for withdrawal were a lack of support from the OHS provider or an objection to the expected amount of time required for the intervention. During the intervention period, 18 OPs in the intervention group and 16 OPs in the control group withdrew from the study (see Fig. 2).

Fig. 2. Flow chart of participants through trial

The personal characteristics of the participating OPs at baseline are shown in Table 1. With the exception of mean age (48 vs. 45 years) and years of experience as a medical doctor (MD) (20 vs. 17 years), there were no statistically significant differences between the intervention and the control group.

Table 1. Baseline characteristics of OPs

| Characteristics | Intervention group (n = 49) | Control group (n = 59) |
|---|---|---|
| Age in years, mean (SD)* | 48 (5.8) | 45 (6.7) |
| Women, n (%) | 23 (47) | 22 (37) |
| MD years of experience, mean (SD)* | 20 (5.7) | 17 (6.8) |
| OP years of experience, mean (SD) | 14 (6.1) | 13 (7.1) |
| Previous experience with EBM education, n (%) | 11 (22) | 9 (15) |
| Previous experience with critical appraisal, n (%) | 14 (29) | 14 (24) |
| Experience with research, n (%) | 23 (47) | 22 (37) |
| N of groups | 7 | 9 |

*p < 0.05 t-test

Process evaluation

Fewer than half of the OPs in the intervention group attended at least eight CMLSs. However, 80% of the OPs participated in at least six sessions, which was considered to be “moderate”. The length of the CMLS was on average almost 10 min longer than the intended 1 h. Each participant in the group was supposed to discuss one case during each session, so with six to eight participants per group an average of seven cases was expected per CMLS. The actual average number of cases discussed during a single CMLS was 5.4. Consequently, the number of searches per CMLS was slightly lower (3.1) than the intended 3.5 (half of the seven cases). There was no peer group with substantially deviating results on the process measurements.

Professional performance, self-efficacy, and job satisfaction

Table 2 shows professional performance, self-efficacy, and job satisfaction as measured at T0, T1, and T2 for both the intervention and control group. The overall effect of the intervention over time, comparing the intervention group with the control group, was statistically significant for professional performance (p < 0.001). Changes in the job satisfaction subscales and in self-efficacy were small and not statistically significant, either between or within the two groups. Post-hoc analyses demonstrated that OPs in the intervention group scored significantly higher on professional performance at T1 and T2. Job satisfaction scores on patient care issues rose over time for the intervention group and dropped for the control group, but this trend was not statistically significant. Specialty satisfaction scored lowest in both the intervention and control group, while global satisfaction and self-efficacy scored highest in both.

Table 2. Professional performance, self-efficacy, and job satisfaction (autonomy, personal time, patient care issues, relationships with colleagues, global job satisfaction, career satisfaction, and specialty satisfaction) in T0 (n = 49, n = 59), T1 (n = 28, n = 48) and T2 (n = 28, n = 43)

| Variables^a | Time point | Intervention group: mean | Intervention group: 95% CI | Control group: mean | Control group: 95% CI |
|---|---|---|---|---|---|
| Professional performance* | T0 | 20.53 | (19.67–21.39) | 21.17 | (20.36–21.99) |
| | T1 | 23.31 | (22.62–24.00) | 21.06 | (20.10–22.03) |
| | T2 | 22.97 | (22.32–23.61) | 20.37 | (19.39–21.35) |
| Self-efficacy | T0 | 3.91 | (3.77–4.04) | 3.99 | (3.87–4.11) |
| | T1 | 4.09 | (3.95–4.22) | 3.96 | (3.81–4.10) |
| | T2 | 4.04 | (3.90–4.18) | 3.97 | (3.85–4.08) |
| Job satisfaction: autonomy | T0 | 3.89 | (3.79–3.99) | 3.92 | (3.83–4.01) |
| | T1 | 3.89 | (3.73–4.05) | 3.87 | (3.75–3.99) |
| | T2 | 3.83 | (3.69–3.97) | 3.93 | (3.84–4.01) |
| Job satisfaction: personal time | T0 | 3.47 | (3.25–3.69) | 3.52 | (3.34–3.71) |
| | T1 | 3.61 | (3.35–3.87) | 3.42 | (3.22–3.63) |
| | T2 | 3.69 | (3.41–3.97) | 3.59 | (3.38–3.79) |
| Job satisfaction: patient care issues | T0 | 3.92 | (3.73–4.11) | 3.89 | (3.77–4.01) |
| | T1 | 3.97 | (3.75–4.19) | 3.85 | (3.66–4.03) |
| | T2 | 4.04 | (3.85–4.24) | 3.71 | (3.56–3.87) |
| Job satisfaction: relationships with colleagues | T0 | 3.67 | (3.49–3.86) | 3.81 | (3.68–3.95) |
| | T1 | 3.79 | (3.59–3.99) | 3.73 | (3.58–3.88) |
| | T2 | 3.70 | (3.53–3.86) | 3.74 | (3.59–3.90) |
| Job satisfaction: global job satisfaction | T0 | 4.03 | (3.83–4.23) | 3.98 | (3.83–4.14) |
| | T1 | 4.15 | (3.91–4.38) | 3.85 | (3.64–4.05) |
| | T2 | 4.08 | (3.90–4.27) | 3.93 | (3.72–4.14) |
| Job satisfaction: career satisfaction | T0 | 3.81 | (3.58–4.04) | 3.76 | (3.59–3.94) |
| | T1 | 3.88 | (3.66–4.11) | 3.73 | (3.54–3.91) |
| | T2 | 3.80 | (3.59–4.01) | 3.74 | (3.54–3.94) |
| Job satisfaction: specialty satisfaction | T0 | 3.35 | (3.10–3.60) | 3.29 | (3.12–3.49) |
| | T1 | 3.28 | (2.93–3.63) | 3.08 | (2.83–3.33) |
| | T2 | 3.34 | (3.06–3.63) | 3.23 | (2.98–3.47) |

* P < 0.001. High score corresponds to favourable outcome [0–5 for all variables, except professional performance (0–27)]

^a Overall tests of trends during the intervention, comparing the intervention group with the control group
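To make the size of the professional performance effect easier to read, the following sketch computes unadjusted changes from baseline from the Table 2 means. It is a descriptive illustration only, not the mixed model analysis reported in the study: it ignores clustering, dropout, and the confidence intervals, and the function names are hypothetical.

```python
# Mean professional performance scores (scale 0-27) copied from Table 2.
MEANS = {
    "intervention": {"T0": 20.53, "T1": 23.31, "T2": 22.97},
    "control": {"T0": 21.17, "T1": 21.06, "T2": 20.37},
}


def change_from_baseline(arm: str, time_point: str) -> float:
    """Unadjusted change in the group mean relative to T0."""
    return round(MEANS[arm][time_point] - MEANS[arm]["T0"], 2)


def between_group_difference(time_point: str) -> float:
    """Difference between the two groups' changes from baseline."""
    return round(
        change_from_baseline("intervention", time_point)
        - change_from_baseline("control", time_point),
        2,
    )


for tp in ("T1", "T2"):
    print(
        tp,
        change_from_baseline("intervention", tp),
        change_from_baseline("control", tp),
        between_group_difference(tp),
    )
```

On these raw means the intervention group gains roughly 2.8 points at T1 and 2.4 points at T2, while the control group stays level or declines slightly.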

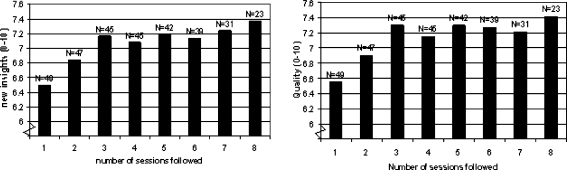

Perceived utility

The perceived utility of the CMLSs for gaining new insights and for improving the quality of the OP’s performance is shown in Fig. 3. From the figure, it is evident that the perceived utility increases during the first three sessions, but no significant increase was achieved.

Fig. 3. Intervention group OPs’ evaluation scores (mean) of the contribution of the CMLS to gaining new insights in their field and to the quality of their performance as related to the number of CMLS they followed

Subgroup analysis

The potential predictors of effect, namely participation in the CMLSs (good, moderate, or poor), age (higher or lower than the median of 48 years), and experience as an OP (more or less than the median of 13 years) showed no significant relation to professional performance scores within the intervention group.

Discussion

Main findings

This study shows that an EBM course in combination with CMLSs enhances the self-reported professional performance of OPs. However, it enhances neither their job satisfaction nor self-efficacy. In general, OPs in the intervention group complied fairly well with the pre-defined goals of the intervention. The perceived value of the CMLSs increased as the OPs attended more sessions.

Strengths and weaknesses of the study

The study is unique in that it implements EBM in a non-clinical setting within The Netherlands in order to enhance professional performance of OPs. Added to this is the fact that a randomized controlled trial (RCT) was used as the “gold standard” to determine the effectiveness of the intervention. A well-known problem in conducting RCTs is the recruitment of participants. In this study, given the intensity of the intervention, the number of OPs recruited (131, of whom 108 participated) is relatively high. This was accomplished by fitting the intervention, insofar as possible, into the daily practice of the OPs. We arranged accreditation for the CMLSs, and the OPs could exchange the time spent on the CMLSs for other (compulsory) forms of consultation. Furthermore, thanks to the use of mixed model analyses, we were able to get a maximum amount of data (data from T0, T1, and T2) from a minimum number of tests.

Nevertheless, some methodological aspects of this study must be considered. The first is the potential factor of a biased sample of OPs; only motivated OPs who were supported by their management participated in this study. This might explain the ceiling effect in the scores for job satisfaction and self-efficacy at baseline. The ceiling effect is well known in the medical field (Steen et al. 2003). Because all participants were close to their maximum score already, any additional increase in scores was difficult to achieve. It may be possible that, if the intervention were to be carried out with OPs who are less motivated and are less interested in EBM, a significant effect on job satisfaction and self-efficacy could be measured. Such a strategy would also, however, have run the risk of an even higher dropout rate for OPs. Moreover, the questionnaires used in this study measured generalized statements on job satisfaction and self-efficacy, rather than concepts that were specifically tailored to our intervention. The use of another, more targeted questionnaire might have shown greater enhancement of job satisfaction and self-efficacy among OPs; unfortunately, we could neither find such a validated questionnaire nor develop one ourselves. As job satisfaction and self-efficacy are multi-dimensional concepts, it may be that our intervention did not intervene to a sufficiently strong degree on all dimensions.

A second consideration is that it did not appear to be possible to estimate a reliable intracluster correlation coefficient (ICC), though this is essential for sample size calculations for a cluster randomized trial (Campbell et al. 2000). Consequently, the pre-defined number of 100 OPs (50 in each group) was set as the maximum for pragmatic reasons only. Unfortunately, despite being tailored to OPs’ daily practice, the intensity of the intervention caused a relatively high dropout rate in the intervention group, which might have contributed to the lack of change over time in all parameters other than professional performance. However, the lack of change over time in these parameters suggests that there was no selection process involved related to the professional quality, general motivation, or satisfaction of the participants.
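The role of the ICC in sample size planning can be illustrated with the standard design-effect formula for equal cluster sizes, DEFF = 1 + (m − 1) × ICC, where m is the cluster size. The sketch below is illustrative only: the ICC values are arbitrary assumptions (the study reports no estimate), and the cluster size of 7 is taken as the midpoint of the 6–8 persons per peer group.

```python
def design_effect(cluster_size: float, icc: float) -> float:
    """Variance inflation due to clustering, assuming equal cluster sizes."""
    if cluster_size < 1:
        raise ValueError("cluster_size must be at least 1")
    if not 0.0 <= icc <= 1.0:
        raise ValueError("icc must lie between 0 and 1")
    return 1.0 + (cluster_size - 1.0) * icc


def effective_sample_size(n_total: float, cluster_size: float, icc: float) -> float:
    """Number of independent participants that n_total clustered ones are worth."""
    return n_total / design_effect(cluster_size, icc)


# Hypothetical ICC values; the study did not report an estimate.
for icc in (0.01, 0.05, 0.10):
    deff = design_effect(7, icc)
    n_eff = effective_sample_size(100, 7, icc)
    print(f"ICC={icc:.2f}  design effect={deff:.2f}  effective n={n_eff:.1f}")
```

Even a modest ICC shrinks the effective sample size noticeably, which is why the absence of a reliable estimate limits what can be said about the power of the trial.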

Furthermore, the use of self-assessment for obtaining outcome measurements could have led participants to give desirable answers (Davis et al. 2006). In particular, because the OPs in the intervention group were trained in EBM, the training most likely heightened their attention to the use of up-to-date knowledge in their daily practice and may have increased positive answers on the questionnaire on self-reported professional performance.

Relation to other studies

Several studies have shown that while it is possible to enhance physicians’ knowledge and skills of EBM, actual change of practice and behaviour is much more complicated (Wyatt 2000; Bauchner et al. 2001; McCluskey and Lovarini 2005). This study did show that enhanced professional performance was sustained over time; it might be concluded, therefore, that designing the intervention in a multifaceted manner by adding the CMLSs to the didactic EBM course could have had an added value in this intervention.

Furthermore, the CMLSs can facilitate the so-called “communities of practice”, which are an important component of knowledge management. Whereas knowledge management is commonly applied in the business sector, health care is still behind in the knowledge management movement (Revere et al. 2007). Knowledge management offers a structured process for the generation, storage, distribution, and application of knowledge in organizations. This includes both tacit knowledge (personal experience) and explicit knowledge or evidence (Wyatt 2001). Communities of practice can facilitate the integration of tacit and explicit knowledge. In these communities, professionals identify themselves as having a common interest and come together at frequent intervals to collaborate on their shared interest. The result is that the knowledge used by the professionals becomes part of the collective knowledge developed within their community, ultimately leading to the improvement of their professional performance (Sandars and Heller 2006).

Our study showed no significant effect on the occupational self-efficacy of the OPs. It is difficult to compare this finding to results from other studies, since self-efficacy is generally treated as a mediating variable in studies aimed at behavioural change, as per Bandura’s self-efficacy theory (Bandura 1977). Forsetlund et al. (2003), for example, conducted a similar intervention study among public health physicians in Norway, but they measured self-efficacy as a mediating variable for job satisfaction as one of the outcomes. They did not, however, find statistically significant differences in self-efficacy or job satisfaction scales. The present study used self-efficacy as a standalone concept to indicate OPs’ confidence that they are doing a good-quality job, rather than as a predictor of an intention to change behaviour.

Possible mechanisms and implications

It was shown that practising EBM in a non-clinical setting is possible if existing barriers, such as access to the Internet, to databases and full-text articles, and availability of time, are overcome. Despite the tailor-made intervention, the motivated group of OPs, and the support received from OHS management, it is doubtful whether this intervention is suitable for every OP. Although fewer than 50% of the OPs participated in the intended eight sessions, 80% of them participated in at least six sessions, which was considered to be a “moderate” result. In our opinion, this gives an indication that the intervention is feasible for OPs. However, it would be advisable to reduce the frequency of the sessions from once every 2 weeks to once every month, in order to increase the opportunity for OPs to attend the sessions. Furthermore, it raises the question as to whether all OPs should even aim to be “EBM-ers”, or whether the majority can be satisfied with being “EBM users” (Akl et al. 2006). Perhaps there is no need for all OPs to become fully-trained EBM experts. It is recommended, however, that physicians at least learn the basics of EBM and then turn to clinical librarians specialized in occupational health or physicians specialized in EBM to answer any (complex) questions (Timmermans and Angell 2001; Plaice and Kitch 2003; Revere et al. 2007). The OPs who participated in this study were motivated individuals, and for that reason might be seen as advocates for the use of EBM in the occupational health setting. The use of advocates to achieve behaviour change has proven to be effective (Davis et al. 1999; Grimshaw et al. 2001; Sisk et al. 2004). It is assumed that, once EBM has proven to be effective and useful and is promoted by the OPs who participated in this study, more OPs are likely to take it up in their practice.

An additional advantage of the intervention is that by noting down their cases and searches, OPs make their knowledge explicit. This method allows them to make their cases, along with the corresponding EBM searches and answers, available to other potential users. Possible uses of such data are to collect it on a local intranet, or perhaps even the Internet, so that OPs could search the resulting databank for evidence-based answers to their case-related questions, before conducting a new search themselves.

Unanswered questions and future research

In this study, investigation was limited to the level of (the performance of) physicians. Potential enhancement of patient care, which usually yields a smaller measurable effect (Mansouri and Lockyer 2007), was not measured. Schaafsma et al. (2007) showed an enhancement of the evidence-based occupational physicians’ advice in sickness absence episodes among the participants of this study. It might be assumed that enhanced use of evidence as the basis for providing advice will serve to enhance patient care as well (Grol and Grimshaw 2003). However, to be able to determine this in a thorough manner, another study would have to be conducted.

Acknowledgments

The research group would like to thank the Medical Library of the Academic Medical Center, and Paul Smits and Carel Hulshof for providing the EBM course.

Open Access

This article is distributed under the terms of the Creative Commons Attribution Noncommercial License which permits any noncommercial use, distribution, and reproduction in any medium, provided the original author(s) and source are credited.

Reference

- Akl EA, Guyatt G, Maroun N, Neagoe G, Schünnemann HJ. EBM user and practitioner models for graduate medical education: what do residents prefer? Med Teach. 2006;28(2):192–194. doi: 10.1080/01421590500314207. [DOI] [PubMed] [Google Scholar]

- Bakken S. An informatics infrastructure is essential for evidence-based practice. J Am Med Inform Assoc. 2001;8(3):199–201. doi: 10.1136/jamia.2001.0080199. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bandura A. Self-efficacy: toward a unifying theory of behavioral change. Psychol Rev. 1977;84(2):191–215. doi: 10.1037/0033-295X.84.2.191. [DOI] [PubMed] [Google Scholar]

- Bauchner H, Simpson L, Chessare J. Changing physician behaviour. Arch Dis Child. 2001;84(6):459–462. doi: 10.1136/adc.84.6.459. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Birrell L, Beach J. Developing evidence-based guidelines in occupational health. Occup Med. 2001;51(2):73–74. doi: 10.1093/occmed/51.2.073. [DOI] [PubMed] [Google Scholar]

- Bovier PA, Perneger TV. Predictors of work satisfaction among physicians. Eur J Public Health. 2003;13(4):299–305. doi: 10.1093/eurpub/13.4.299. [DOI] [PubMed] [Google Scholar]

- Campbell M, Grimshaw J, Steen N. Sample size calculations for cluster randomised trials. J Health Serv Res Policy. 2000;5(1):12–16. doi: 10.1177/135581960000500105. [DOI] [PubMed] [Google Scholar]

- Davis D, O’Brien MAT, Freemantle N, Wolf FM, Mazmanian P, Taylor-Vaisey A. Impact of formal continuing medical education: do conferences, workshops, rounds, and other traditional continuing education activities change physician behavior or health care outcomes? JAMA. 1999;282(9):867–874. doi: 10.1001/jama.282.9.867. [DOI] [PubMed] [Google Scholar]

- Davis D, Evans M, Jadad A, Perrier L, Rath D, Ryan D, Sibbald G, Straus S, Rappolt S, Wowk M, Zwarenstein M. The case for knowledge translation: shortening the journey from evidence to effect. BMJ. 2003;327(7405):33–35. doi: 10.1136/bmj.327.7405.33. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Davis DA, Mazmanian PE, Fordis M, Van Harrison R, Thorpe KE, Perrier L. Accuracy of physician self-assessment compared with observed measures of competence: a systematic review. JAMA. 2006;296(9):1094–1102. doi: 10.1001/jama.296.9.1094.

- Donen N. No to mandatory continuing medical education, yes to mandatory practice auditing and professional educational development. CMAJ. 1998;158(8):1044.

- Eakin JM, Mykhalovskiy E. Reframing the evaluation of qualitative health research: reflections on a review of appraisal guidelines in the health sciences. J Eval Clin Pract. 2003;9(2):187–194. doi: 10.1046/j.1365-2753.2003.00392.x.

- Ely JW, Osheroff JA, Ebell MH, Chambliss ML, Vinson DC, Stevermer JJ, Pifer EA. Obstacles to answering doctors’ questions about patient care with evidence: qualitative study. BMJ. 2002;324(7339):710. doi: 10.1136/bmj.324.7339.710.

- Forsetlund L, Bradley P, Forsen L, Nordheim L, Jamtvedt G, Bjorndal A. Randomised controlled trial of a theoretically grounded tailored intervention to diffuse evidence-based public health practice. BMC Med Educ. 2003;3(2).

- Grimshaw JMM, Shirran LM, Thomas RB, Mowatt GM, Fraser CM, Bero LP, Grilli RM, Harvey EB, Oxman AM, O’Brien MAM. Changing provider behavior: an overview of systematic reviews of interventions. Med Care. 2001;39(8):II. doi: 10.1097/00005650-200108002-00002.

- Grimshaw J, Eccles M, Thomas R, MacLennan G, Ramsay C, Fraser C, Vale L. Toward evidence-based quality improvement. Evidence (and its limitations) of the effectiveness of guideline dissemination and implementation strategies 1966–1998. J Gen Intern Med. 2006;21(s2):S14–S20. doi: 10.1111/j.1525-1497.2006.00357.x.

- Grol RP. Successes and failures in the implementation of evidence-based guidelines for clinical practice. Med Care. 2001;39(8):II. doi: 10.1097/00005650-200108002-00003.

- Grol R, Grimshaw J. From best evidence to best practice: effective implementation of change in patients’ care. Lancet. 2003;362(9391):1225–1230. doi: 10.1016/S0140-6736(03)14546-1.

- Gross PAM, Greenfield SM, Cretin SP, Ferguson JM, Grimshaw JP, Grol RP, Klazinga NM, Lorenz WM, Meyer GSM, Riccobono CM, Schoenbaum SCM, Schyve PM, Shaw CM. Optimal methods for guideline implementation: conclusions from Leeds Castle meeting. Med Care. 2001;39(8):II85–II92.

- Hugenholtz NIR, Schreinemakers JF, A-Tjak MA, van Dijk FJH. Knowledge infrastructure needed for occupational health. Ind Health. 2007;45(1):13–18. doi: 10.2486/indhealth.45.13.

- Kushnir T, Cohen AH, Kitai E. Continuing medical education and primary physicians’ job stress, burnout and dissatisfaction. Med Educ. 2000;34(6):430–436. doi: 10.1046/j.1365-2923.2000.00538.x.

- Linzer M, Konrad TR, Douglas J, McMurray JE, Pathman DE, Williams ES, Schwartz MD, Gerrity M, Scheckler W, Bigby J, Rhodes E. Managed care, time pressure, and physician job satisfaction: results from the Physician Worklife Study. J Gen Intern Med. 2000;15(7):441–450. doi: 10.1046/j.1525-1497.2000.05239.x.

- Lurie SG. Ethical dilemmas and professional roles in occupational medicine. Soc Sci Med. 1994;38(10):1367–1374. doi: 10.1016/0277-9536(94)90274-7.

- Mansouri M, Lockyer J. A meta-analysis of continuing medical education effectiveness. J Contin Educ Health Prof. 2007;27(1):6–15. doi: 10.1002/chp.88.

- McCluskey A, Lovarini M. Providing education on evidence-based practice improved knowledge but did not change behaviour: a before and after study. BMC Med Educ. 2005;5(40).

- McConaghy JR. Evolving medical knowledge: moving toward efficiently answering questions and keeping current. Prim Care: Clin Off Pract. 2006;33(4):831–837. doi: 10.1016/j.pop.2006.10.002.

- Noordam and de Vries. Contouren worden zichtbaar: positioneringsonderzoek NVAB [Contours are becoming visible: positioning study of the Netherlands Society of Occupational Medicine] (in Dutch). Amsterdam: Adviesbureau voor organisatie en onderzoek; 2002.

- Plaice C, Kitch P. Embedding knowledge management in the NHS south-west: pragmatic first steps for a practical concept. Health Info Libr J. 2003;20(2):75–85. doi: 10.1046/j.1471-1842.2003.00417.x.

- Revere D, Turner AM, Madhavan A, Rambo N, Bugni PF, Kimball A, Fuller SS. Understanding the information needs of public health practitioners: a literature review to inform design of an interactive digital knowledge management system. J Biomed Inform. 2007;40(4):410–421. doi: 10.1016/j.jbi.2006.12.008.

- Sackett DL, Parkes J. Teaching critical appraisal: no quick fixes. Can Med Assoc J. 1998;158(2):203–204.

- Sackett DL, Rosenberg WMC, Gray JAM, Haynes RB, Richardson WS. Evidence based medicine: what it is and what it isn’t. BMJ. 1996;312(7023):71–72. doi: 10.1136/bmj.312.7023.71.

- Sandars J, Heller R. Improving the implementation of evidence-based practice: a knowledge management perspective. J Eval Clin Pract. 2006;12(3):341–346. doi: 10.1111/j.1365-2753.2006.00534.x.

- Schaafsma F, Hulshof C, van Dijk F, Verbeek J. Information demands of occupational health physicians and their attitude towards evidence-based medicine. Scand J Work Environ Health. 2004;30(4):327–330. doi: 10.5271/sjweh.802.

- Schaafsma F, Hugenholtz N, de Boer A, Smits P, Hulshof C, van Dijk F. Enhancing evidence-based advice of occupational health physicians. Scand J Work Environ Health. 2007;33(5):368–378. doi: 10.5271/sjweh.1156.

- Schyns B, von Collani G. A new occupational self-efficacy scale and its relation to personality constructs and organizational variables. Eur J Work Organ Psychol. 2002;11(2):219–241. doi: 10.1080/13594320244000148.

- Sisk JE, Greer AL, Wojtowycz M, Pincus LB, Aubry RH. Implementing evidence-based practice: evaluation of an opinion leader strategy to improve breast-feeding rates. Am J Obstet Gynecol. 2004;190(2):413–421. doi: 10.1016/j.ajog.2003.09.014.

- Smits PB, de Buisonje CD, Verbeek JH, van Dijk FJ, Metz JC, ten Cate OJ. Problem-based learning versus lecture-based learning in postgraduate medical education. Scand J Work Environ Health. 2003;29(4):280–287. doi: 10.5271/sjweh.732.

- Steen BR, Burghen EM, Hinds PSPRC, Srivastava DKP, Tong XM. Development and testing of the role-related meaning scale for staff in pediatric oncology. Cancer Nurs. 2003;26(3):187–194. doi: 10.1097/00002820-200306000-00003.

- Timmermans S, Angell A. Evidence-based medicine, clinical uncertainty, and learning to doctor. J Health Soc Behav. 2001;42(4):342–359. doi: 10.2307/3090183.

- Timmermans S, Kolker ES. Evidence-based medicine and the reconfiguration of medical knowledge. J Health Soc Behav. 2004;45:177–193.

- Walsh DC. Divided loyalties in medicine: the ambivalence of occupational medical practice. Soc Sci Med. 1986;23(8):789–796. doi: 10.1016/0277-9536(86)90277-7.

- Wyatt JC. Knowledge and the Internet. J R Soc Med. 2000;93(11):565–570. doi: 10.1177/014107680009301104.

- Wyatt JC. Management of explicit and tacit knowledge. J R Soc Med. 2001;94(1):6–9. doi: 10.1177/014107680109400102.