Abstract

Finding the global positioning of points in Euclidean space from a local or partial set of pairwise distances is a problem in geometry that emerges naturally in sensor networks and NMR spectroscopy of proteins. We observe that the eigenvectors of a certain sparse matrix exactly match the sought coordinates. This translates to a simple and efficient algorithm that is robust to noisy distance data.

Keywords: distance geometry, eigenvectors, multidimensional scaling, sensor networks

Determining the configuration of N points ri in ℝp (e.g., p = 2, 3) given their noisy distance matrix Δij is a long-standing problem. For example, the points ri may represent coordinates of cities in the United States. Distances between nearby cities, such as New York and New Haven, are known, but no information is given for the distance between New York and Chicago. In general, for every city, we are given distance measurements to its k ≪ N nearest neighbors or to neighbors that are, at most, δ away. The distance measurements Δij of dij = ‖ri − rj‖ may also incorporate errors. The problem is to find the unknown coordinates ri = (xi, yi) of all cities from the noisy local distances.

Even in the absence of noise, the problem is solvable only if there are enough distance constraints. By solvable, we mean that there is a unique set of coordinates satisfying the given distance constraints up to rigid transformations (rotations, translations, and reflections). Alternatively, we say that the problem is solvable only if the underlying graph consisting of the N cities as vertices and the distance constraints as edges is rigid in ℝp (see, e.g., ref. 1). Graph rigidity has drawn a lot of attention over the years; see refs. 1–5 for some results in this area.

When all possible N(N − 1)/2 pairwise distances are known, the corresponding complete graph is rigid, and the coordinates can be computed by using a classical method known as multidimensional scaling (MDS) (6–8). The underlying principle of MDS is to use the law of cosines to convert distances into an inner product matrix, whose eigenvectors are the sought coordinates. The eigenvectors computed by MDS are also the solution to a specific minimization problem. However, when most of the distance matrix entries are missing, this minimization becomes significantly more challenging because of the low rank-p constraint that is not convex. Various solutions to this constrained optimization problem have been proposed, including relaxation of the rank constraint by semidefinite programming (SDP) (9), regularization (10, 11), smart initialization (12), expectation maximization (13), and other relaxation schemes (14). Recently, the eigenvectors of the graph Laplacian (15) were used to approximate the large scale SDP problem by a much smaller one, noting that linear combinations of only a few Laplacian eigenvectors can well approximate the coordinates.
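As a concrete illustration of the MDS principle described above, the following minimal sketch (in Python with NumPy, which the article itself does not use) converts a complete distance matrix into an inner product matrix by double centering and reads the coordinates off its leading eigenvectors. The function names `classical_mds` and `pairwise_distances` and the random test points are illustrative assumptions.

```python
import numpy as np

def classical_mds(D, p):
    """Embed n points in R^p from a complete n x n matrix D of pairwise distances."""
    n = D.shape[0]
    J = np.eye(n) - np.ones((n, n)) / n        # centering matrix
    G = -0.5 * J @ (D ** 2) @ J                # inner product (Gram) matrix of the centered points
    evals, evecs = np.linalg.eigh(G)           # eigenvalues in ascending order
    top = np.argsort(evals)[::-1][:p]          # indices of the p largest eigenvalues
    scale = np.sqrt(np.clip(evals[top], 0.0, None))
    return evecs[:, top] * scale               # n x p coordinate matrix

def pairwise_distances(X):
    """Euclidean distances between all rows of X."""
    diff = X[:, None, :] - X[None, :, :]
    return np.sqrt((diff ** 2).sum(axis=-1))

rng = np.random.default_rng(0)
X_true = rng.standard_normal((6, 2))           # hypothetical ground-truth points
D = pairwise_distances(X_true)
X_mds = classical_mds(D, p=2)
```

The recovered `X_mds` agrees with `X_true` only up to a rigid transformation, so the natural check is that the pairwise distances are reproduced.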

The problem of finding the global coordinates from noisy local distances arises naturally in localization of sensor networks (16–18) and protein structuring from NMR spectroscopy (19, 20), where noisy measurements of neighboring hydrogen atoms are inferred from the nuclear Overhauser effect (NOE). The theory and practice involve many different areas, such as machine learning (15), optimization techniques (14), and rigidity theory (1, 4, 5, 17, 21, 22). We find it practically impossible to give a fair account of all of the relevant literature and refer the interested reader to the references within those listed.

In this article, we describe locally rigid embedding (LRE), a simple and efficient algorithm to find the global configuration from locally noisy distances, under the simplifying assumption of local rigidity. We assume that every city, together with its neighboring cities, forms a rigid subgraph that can be embedded uniquely (up to a rigid transformation). That is, there are enough distance constraints among the neighboring cities that make the local structure rigid. The “pebble game” (23) is a fast algorithm to determine graph rigidity. Thus, given a dataset of distances, the local rigidity assumption can be tested quickly by applying the pebble game algorithm N times, once for each city. In Summary, we discuss the restrictiveness of the local rigidity assumption (see refs. 17, 21, 22 for conditions that ensure rigidity) as well as possible variants of LRE when the assumption does not hold.

The essence of LRE follows the observation that one can construct an N × N sparse weight matrix W that has the property that the coordinate vectors are an eigenspace. The matrix is constructed in linear time and has only O(N) nonzero elements, which enables efficient computation of that small eigenspace. The matrix W is formed by preprocessing the local distance information to repeatedly embed each city and its neighboring points (e.g., by using MDS or SDP), which is possible by the assumption that every local neighborhood is rigid. Once the local coordinates are obtained, we calculate weights much like the locally linear embedding (LLE) recipe (24) and its multiple weights (MLLE) modification (25). Similar to recent dimensionality reduction methods (24, 26–28), the motif “think globally, fit locally” (29) is repeated here. This is just another example of the usefulness of eigenfunctions of operators on datasets and their ability to integrate local information into a consistent global solution. In contrast to propagation algorithms that start with some local embedding and incrementally embed additional points while accumulating errors, the global eigenvector computation of LRE takes into account all local information at once, which makes it robust to noise.

Locally Rigid Embedding

We start by embedding points locally. For every point ri (i = 1, …, N) we examine its ki neighbors ri1, ri2, …, riki for which we have distance information. We find a p-dimensional embedding of those ki + 1 points. If the local (ki + 1) × (ki + 1) distance matrix is fully known, then this embedding can be obtained by using MDS. If some local distances are missing, then the embedding can be found by using SDP or other optimization methods. The assumption of local rigidity ensures that in the noise-free case, such an embedding is unique up to a rigid transformation. Note that the neighbors are not required to be physically close but can, rather, be any collection of ki points whose mutual distance constraints make them rigid. This locally rigid embedding gives local coordinates for ri, ri1, …, riki up to translation, rotation, and, perhaps, reflection. We define ki weights Wi,ij that satisfy the following p + 1 linear constraints

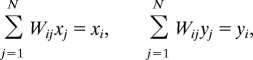

|

|

The vector equation (Eq. 1) means that the point ri is the center of mass of its ki neighbors, and the scalar equation (Eq. 2) means that the weights sum to 1. Together, these constraints make the weights invariant to rigid transformations (translation, rotation, and reflection) of the locally embedded points. It is possible to find such weights if ki ≥ p + 1. In fact, for ki > p + 1, there is an infinite number of ways in which the weights can be chosen. In practice, we choose the least-squares solution, i.e., the one that minimizes $\sum_{j=1}^{k_i} W_{i,i_j}^2$. This prevents certain weights from becoming too large and keeps the weights balanced in magnitude. We rewrite Eqs. 1 and 2 in matrix notation as

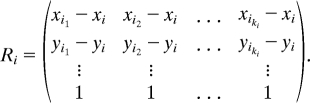

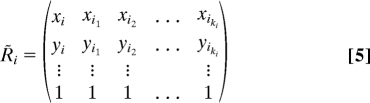

where b = (0, …, 0, 1)T ∈ℝp+1, wi = (Wi,i1, Wi,i2, …, Wi,iki)T, and Ri is a (p + 1) × ki matrix, whose first p rows are the relative coordinates, and the last row is the all ones vector

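$$ R_i = \begin{pmatrix} r_{i_1} - r_i & r_{i_2} - r_i & \cdots & r_{i_{k_i}} - r_i \\ 1 & 1 & \cdots & 1 \end{pmatrix}. $$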

It can be easily verified that the least-squares solution is

$$ w_i = R_i^T \left( R_i R_i^T \right)^{-1} b. $$

Note that some of the weights may be negative. In fact, negative weights are unavoidable for points on the boundary of the convex hull of the set.
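The weight computation for a single point can be sketched as follows. This is a minimal NumPy illustration, not the author's code: the function name `lre_weights` and the random test points are assumptions, and `numpy.linalg.lstsq` is used because it returns the minimum-norm solution of an underdetermined system, which is the least-squares choice described above.

```python
import numpy as np

def lre_weights(r_i, neighbors):
    """Minimum-norm weights w with sum_j w[j] * neighbors[j] = r_i and sum_j w[j] = 1.

    r_i is a length-p vector; neighbors is a k x p array of local coordinates.
    """
    k, p = neighbors.shape
    # First p rows: relative coordinates; last row: all ones.
    R = np.vstack([(neighbors - r_i).T, np.ones(k)])   # (p + 1) x k
    b = np.zeros(p + 1)
    b[-1] = 1.0
    w, *_ = np.linalg.lstsq(R, b, rcond=None)          # minimum-norm solution
    return w

rng = np.random.default_rng(0)
r_i = rng.standard_normal(2)                 # hypothetical point in the plane
neighbors = rng.standard_normal((5, 2))      # its k = 5 hypothetical neighbors
w = lre_weights(r_i, neighbors)

# The same weights are obtained after a rigid motion of the local frame.
theta = 0.7
Q = np.array([[np.cos(theta), -np.sin(theta)],
              [np.sin(theta),  np.cos(theta)]])
t = np.array([3.0, -1.0])
w_moved = lre_weights(r_i @ Q.T + t, neighbors @ Q.T + t)
```

For generic points some entries of `w` come out negative, in line with the remark above about points near the boundary of the convex hull.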

The computed weights Wi,ij (j = 1, …, ki) are assigned to the ith row of W, whereas all other N − ki row entries, including the diagonal element Wi,i, are set to zero. This procedure of locally rigid embedding is repeated for every i = 1, …, N, each time filling another row of the weight matrix W. Therefore, it takes O(N) operations to complete the construction of the weight matrix W, which ends up being a sparse matrix with k̄N nonzero elements, where $\bar{k} = \frac{1}{N}\sum_{i=1}^{N} k_i$ is the average degree of the graph (i.e., the average number of neighbors). Both the least-squares solution and the local embedding (MDS or SDP) are polynomial in ki, so repeating them N times is also O(N).

Next, we examine the spectrum of W. Observe that the all ones vector 1 = (1, 1, …, 1)T satisfies

$$ W\mathbf{1} = \mathbf{1} $$

because of Eq. 2. That is, 1 is an eigenvector of W with eigenvalue λ = 1. We will refer to 1 as the trivial eigenvector. If the locally rigid embeddings are free of any error (up to rotations and reflections), then the p coordinate vectors x = (x1, x2, …, xN)T, y = (y1, y2, …, yN)T, … are also eigenvectors of W with the same eigenvalue λ = 1

$$ W\mathbf{x} = \mathbf{x}, \qquad W\mathbf{y} = \mathbf{y}, \qquad \ldots $$

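This follows immediately from Eq. 1 by reading it one coordinate at a time,

$$ \sum_{j=1}^{k_i} W_{i,i_j}\, x_{i_j} = x_i, \qquad \sum_{j=1}^{k_i} W_{i,i_j}\, y_{i_j} = y_i, \qquad \ldots, \qquad i = 1, \ldots, N. $$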

Thus, the eigenvectors of W corresponding to λ = 1 give the sought coordinates, e.g., (xi, yi) for p = 2. It is remarkable that the eigenvectors give the global coordinates x and y despite the fact that different points have different local coordinate sets that are arbitrarily rotated, translated, and, perhaps, reflected with respect to one another.
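The eigenvector property can be checked numerically on a small synthetic example. The sketch below is an illustration under stated assumptions: the points are random, each neighborhood consists of the k nearest neighbors, the "local embedding" is simulated by applying a random rotation or reflection and shift to the true coordinates, and W is stored as a dense array for brevity (the article uses a sparse matrix).

```python
import numpy as np

rng = np.random.default_rng(1)
N, p, k = 40, 2, 6
X = rng.standard_normal((N, p))                         # hypothetical ground-truth coordinates
D = np.linalg.norm(X[:, None] - X[None, :], axis=-1)    # all pairwise distances

W = np.zeros((N, N))
b = np.zeros(p + 1)
b[-1] = 1.0
for i in range(N):
    nbrs = np.argsort(D[i])[1:k + 1]                    # k nearest neighbors, skipping i itself
    # Simulate an arbitrary local frame: random orthogonal Q and shift t.
    Q, _ = np.linalg.qr(rng.standard_normal((p, p)))
    t = rng.standard_normal(p)
    local = X[np.r_[i, nbrs]] @ Q.T + t                 # row 0 is the point i itself
    R = np.vstack([(local[1:] - local[0]).T, np.ones(k)])
    W[i, nbrs] = np.linalg.lstsq(R, b, rcond=None)[0]   # minimum-norm weights for row i

residual_ones = np.abs(W @ np.ones(N) - 1.0).max()      # W 1 = 1
residual_coords = np.abs(W @ X - X).max()               # W x = x and W y = y
```

Both residuals are at the level of rounding error even though every neighborhood was expressed in a different local frame.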

Note that W is not symmetric, so its spectrum may be complex. Although every row of W sums to 1, W has negative entries and is therefore not a stochastic matrix, so eigenvalues with ∣λ∣ > 1 are possible. Therefore, we compute the eigenvectors whose eigenvalues are closest to 1 in the complex plane.

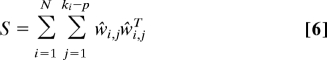

A symmetric weight matrix S with spectral properties similar to W can be constructed with the same effort (per D. A. Spielman, private communication). For each point ri, compute an orthonormal basis w̃i,1, …, w̃i,ki−p ∈ ℝki+1 for the nullspace of the (p + 1) × (ki + 1) matrix R̃i

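$$ \tilde{R}_i = \begin{pmatrix} r_i & r_{i_1} & \cdots & r_{i_{k_i}} \\ 1 & 1 & \cdots & 1 \end{pmatrix}. \tag{5} $$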

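In other words, we find w̃i,j that satisfy

$$ \tilde{R}_i \tilde{w}_{i,j} = 0, \qquad j = 1, \ldots, k_i - p, $$

and

$$ \tilde{w}_{i,j}^T \tilde{w}_{i,j'} = \begin{cases} 1, & j = j', \\ 0, & j \neq j'. \end{cases} $$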

The symmetric product Si = Σj=1ki−p w̃i,jw̃i,jT of the nullspace with itself is a (ki + 1) × (ki + 1) positive semidefinite (PSD) matrix. We sum the N PSD matrices Si (i = 1, …, N) to form an N × N PSD S by updating for each i only the corresponding (ki + 1) × (ki + 1) block of the large matrix

$$ S = \sum_{i=1}^{N} \sum_{j=1}^{k_i - p} \hat{w}_{i,j}\, \hat{w}_{i,j}^T, $$

where ŵi,j ∈ ℝN is obtained from w̃i,j by padding it with zeros. Similar to the nonsymmetric case, it can be verified that the p + 1 vectors 1, x, y, … belong to the nullspace of S

$$ S\mathbf{1} = S\mathbf{x} = S\mathbf{y} = \cdots = 0. $$

In fact, because the global graph of N points is rigid, any vector in the nullspace of S is a linear combination of those p + 1 vectors (the previous construction of W may not enjoy this nice property, i.e., it is possible to create examples for which the dimension of the eigenspace of W is greater than p + 1). In rigidity theory, we would call S a stress of the framework (1).
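The construction of S can be sketched in a few lines. As before, this is a hedged illustration rather than the author's implementation: the helper `null_space_basis` (an SVD-based nullspace computation), the random points, the k-nearest-neighbor patches, the simulated local frames, and the dense storage of S are all assumptions made for the example.

```python
import numpy as np

def null_space_basis(M, tol=1e-10):
    """Orthonormal basis (as columns) of the nullspace of M, computed from the SVD."""
    _, s, Vt = np.linalg.svd(M)                  # Vt is square: one row per column of M
    rank = int((s > tol * s.max()).sum())
    return Vt[rank:].T

rng = np.random.default_rng(2)
N, p, k = 40, 2, 6
X = rng.standard_normal((N, p))                  # hypothetical ground-truth coordinates
D = np.linalg.norm(X[:, None] - X[None, :], axis=-1)

S = np.zeros((N, N))
for i in range(N):
    patch = np.argsort(D[i])[:k + 1]             # point i together with its k nearest neighbors
    Q, _ = np.linalg.qr(rng.standard_normal((p, p)))
    local = X[patch] @ Q.T + rng.standard_normal(p)      # arbitrary local frame
    R_tilde = np.vstack([local.T, np.ones(k + 1)])       # (p + 1) x (k_i + 1)
    basis = null_space_basis(R_tilde)                    # (k_i + 1) x (k_i - p)
    S[np.ix_(patch, patch)] += basis @ basis.T           # add the padded PSD block

null_residual = max(np.abs(S @ np.ones(N)).max(), np.abs(S @ X).max())
smallest_eigenvalue = np.linalg.eigvalsh(S).min()
```

The check confirms that 1, x, and y lie in the nullspace and that S is PSD up to rounding; it does not by itself verify that the nullspace has dimension exactly p + 1, which depends on the rigidity of the global graph.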

From the computational point of view, we remark that, if needed, the eigenvector computation can be done in parallel. To multiply a given vector by the weight matrix W (or S), the storage of W (S) is distributed between the N points, such that the ith point stores only ki values of W (S) together with ki values of the given vector that correspond to the neighbors.

Multiplicity and Noise

The eigenvalue λ = 1 of W (or 0 for S) is degenerate with multiplicity p + 1. Hence, the corresponding p + 1 computed eigenvectors φ0, φ1, …, φp may be any linear combination of 1, x, y, …. We may assume that φ0 = 1 and that 〈φj, 1〉 = 0 for j = 1, …, p. We now look for a p × p matrix A that represents the linear transformation relating the original coordinate set ri = (xi, yi, …) to the eigenmap φi = (φ1i, φ2i, …)

$$ r_i = A \varphi_i. $$

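The squared distance between ri and rj is

$$ d_{ij}^2 = \| r_i - r_j \|^2 = (\varphi_i - \varphi_j)^T A^T A\, (\varphi_i - \varphi_j). \tag{7} $$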

Every pair of points for which the distance dij is known gives a linear equation for the coefficients of the matrix AT A. Solving that equation set results in AT A, whose Cholesky decomposition yields A (up to an orthogonal transformation).
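The recovery of A from known distances can be sketched as follows. The setup is synthetic and assumed for illustration only: an invertible matrix `A_true` generates the eigenmap from centered random points, a subset of pairs plays the role of the known distances, and the unknown entries of the symmetric matrix AT A are found by ordinary least squares before taking a Cholesky factor.

```python
import numpy as np

rng = np.random.default_rng(3)
N, p = 30, 2
X = rng.standard_normal((N, p))
X -= X.mean(axis=0)                                  # centered hypothetical coordinates r_i
A_true = np.array([[2.0, 0.5], [-1.0, 1.5]])         # hypothetical invertible mixing matrix
Phi = X @ np.linalg.inv(A_true).T                    # rows phi_i, so that r_i = A_true phi_i

# Pairs with a known distance (an arbitrary subset, for illustration).
pairs = [(i, j) for i in range(N) for j in range(i + 1, N) if (i + j) % 3 == 0]

iu = np.triu_indices(p)                              # unknowns: upper triangle of P = A^T A
mult = np.where(iu[0] == iu[1], 1.0, 2.0)            # off-diagonal entries appear twice
rows, rhs = [], []
for i, j in pairs:
    u = Phi[i] - Phi[j]
    rows.append(mult * np.outer(u, u)[iu])           # coefficients of one linear equation
    rhs.append(np.sum((X[i] - X[j]) ** 2))           # known squared distance d_ij^2
sol = np.linalg.lstsq(np.array(rows), np.array(rhs), rcond=None)[0]

P = np.zeros((p, p))
P[iu] = sol
P = P + P.T - np.diag(np.diag(P))                    # symmetric P = A^T A
L = np.linalg.cholesky(P)                            # P = L L^T, so A = L^T satisfies A^T A = P
X_rec = Phi @ L                                      # rows are A phi_i

D_true = np.linalg.norm(X[:, None] - X[None, :], axis=-1)
D_rec = np.linalg.norm(X_rec[:, None] - X_rec[None, :], axis=-1)
```

`X_rec` matches `X` only up to an orthogonal transformation, so the comparison is made on all pairwise distances, including those that were not used in the fit.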

The locally rigid embedding incorporates errors when the distances are noisy. The noise breaks the degeneracy and splits the eigenvalues. If the noise level is small, then we still expect the first p nontrivial eigenvectors to match the original coordinates, because the small noise does not overcome the spectral gap

$$ \min_{\lambda_i \neq 1} | 1 - \lambda_i |, $$

where λi are the eigenvalues of the noiseless W. For higher levels of noise, the noise may be large enough so that some of the first p eigenvalues cross the spectral gap, causing the coordinate vectors to spill over the remaining eigenvectors.

To overcome this problem, we allow A to be p × m instead of p × p, with m > p, but still m ≪ N. In other words, every coordinate is approximated as a linear combination of the m nontrivial eigenvectors φ1, …, φm, whose eigenvalues are the closest to 1. We replace Eq. 7 by a minimization problem for the m × m PSD matrix P = AT A

$$ \min_{P} \sum_{i \approx j} \left[ (\varphi_i - \varphi_j)^T P\, (\varphi_i - \varphi_j) - \Delta_{ij}^2 \right]^2, \tag{8} $$

where the sum is over all pairs i ≈ j for which there exists a distance measurement Δij. The unconstrained least-squares solution for P is often invalid: it may fail to be a PSD matrix, or its rank may exceed p. It was shown in ref. 15 that the optimization problem (Eq. 8) with the PSD constraint P ⪰ 0 can be formulated as an SDP by using the Schur complement lemma. The matrix A is reconstructed from the singular vectors of P corresponding to the largest p singular values.
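The last step, reconstructing a p × m matrix A from an m × m PSD matrix P, can be sketched as below. The function name `factor_rank_p` and the test matrix are assumptions; the sketch covers only the factorization and does not solve the SDP itself.

```python
import numpy as np

def factor_rank_p(P, p):
    """Return a p x m matrix A built from the p leading singular pairs of a symmetric PSD P.

    A^T A is then the best rank-p approximation of P.
    """
    U, s, _ = np.linalg.svd(P)                   # singular values in descending order
    return np.sqrt(s[:p])[:, None] * U[:, :p].T

rng = np.random.default_rng(4)
A0 = rng.standard_normal((2, 5))                 # hypothetical p = 2, m = 5
P = A0.T @ A0                                    # PSD matrix of rank 2
A = factor_rank_p(P, p=2)
```

When P has rank exactly p, as in this example, `A.T @ A` reproduces P; the factor A itself is determined only up to an orthogonal transformation, which corresponds to a rigid rotation or reflection of the recovered coordinates.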

Numerical Results

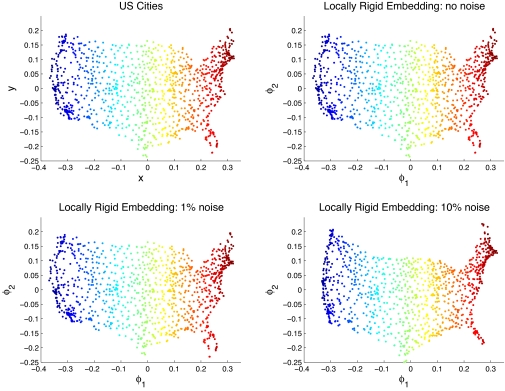

We consider a dataset of N = 1,097 cities in the United States; see Fig. 1 (Upper Left). For each city, we have distance measurements to its 18 nearest cities (ki = k = 18). For simplicity, we assume that the $\binom{18}{2}$ distances between the neighboring cities are also collected, so that MDS can be used to locally embed the neighboring cities. We tested the locally rigid embedding algorithm for three different levels of multiplicative Gaussian noise: no noise, 1% noise, and 10% noise. That is, Δij = dij[1 + N(0, δ²)], with δ = 0, 0.01, 0.1. We used SeDuMi (30) for solving the SDP (Eq. 8).
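The multiplicative noise model can be written down directly. In the sketch below, the function name `add_multiplicative_noise` is illustrative, and drawing a single perturbation per unordered pair (so that the noisy matrix stays symmetric) is an assumption that the text does not spell out.

```python
import numpy as np

def add_multiplicative_noise(D, delta, rng):
    """Return Delta_ij = d_ij * (1 + eps_ij), with eps_ij drawn from N(0, delta^2)."""
    n = D.shape[0]
    eps = rng.normal(0.0, delta, size=(n, n))
    eps = np.triu(eps, k=1)                  # one draw per unordered pair (assumption)
    eps = eps + eps.T                        # same perturbation for (i, j) and (j, i)
    return D * (1.0 + eps)

rng = np.random.default_rng(5)
X = rng.standard_normal((8, 2))              # hypothetical points
D = np.linalg.norm(X[:, None] - X[None, :], axis=-1)
noisy = {delta: add_multiplicative_noise(D, delta, rng) for delta in (0.0, 0.01, 0.1)}
```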

Fig. 1.

Locally rigid embedding of US cities. (Upper Left) Map of 1,097 cities in the United States. (Upper Right) The locally rigid embedding obtained from clean local distances. (Lower Left) LRE with 1% noise. (Lower Right) LRE with 10% noise. Cities are colored by their x coordinate.

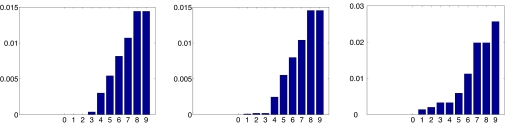

The behavior of the numerical spectrum for the different noise levels is illustrated in Fig. 2, where the magnitude of 1 − λi for the 10 eigenvalues that are closest to 1 is plotted. It is apparent in the noiseless case that λ = 1 is degenerate with multiplicity 3, corresponding to 1, x, y. Note that the spectral gap is quite small. The LRE finds the exact configuration without introducing any errors, as can be seen in Fig. 1 (Upper Right).

Fig. 2.

Numerical spectrum of W for different levels of noise: Clean distances (Left), 1% noise (Center), and 10% noise (Right).

Adding 1% noise breaks the degeneracy and splits the eigenvalues, and because of the small spectral gap, the coordinates spill over the fourth eigenvector. Therefore, for that noise level, we solved the minimization problem (Eq. 8) with P being a 3 × 3 matrix (m = 3). The largest singular values of P were 101.7, 42.5, and 0.13, indicating a successful two-dimensional embedding.

When the noise is increased more, the spectrum changes even further. We used m = 7 for the noise level of 10%. The largest singular values of the optimal P were 76.6, 8.3, 2.0, and 0.002, which implies that the added noise effectively increased the dimension of points from two to three. The LRE distorts boundary points but does a good job in the interior.

Algorithm

In this section, we summarize the various steps of LRE that should be taken to find the global coordinates from a dataset of vertices and distances by using the symmetric matrix S.

Input: A weighted graph G = (V, E, Δ), where Δij is the measured distance between vertices i and j and ∣V∣ = N is the number of vertices. G is assumed to be locally rigid.

- Allocate memory for a sparse N × N matrix S.
- For i = 1 to N:
  - Set ki to be the degree of vertex i.
  - Let i1, …, iki be the neighbors of i: (i, ij) ∈ E for j = 1, …, ki.
  - Use SDP or MDS to find an embedding ri, ri1, …, riki of i, i1, …, iki.
  - Form the (p + 1) × (ki + 1) matrix R̃i following Eq. 5.
  - Compute an orthonormal basis w̃i,1, …, w̃i,ki−p for the nullspace of R̃i.
  - Update $S \leftarrow S + \sum_{j=1}^{k_i - p} \hat{w}_{i,j} \hat{w}_{i,j}^T$ (the ŵi,j are obtained from w̃i,j by zero padding).
- Compute the m + 1 eigenvectors of S with eigenvalues closest to 0: φ0, φ1, …, φm, where φ0 is the all-ones vector.
- Use SDP as in Eq. 8 to find the coordinate vectors x1, …, xp as linear combinations of φ1, …, φm.
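The steps above can be sketched end to end for the noise-free case. This is a simplified illustration under several assumptions, not the implementation used for the numerical results: the data are a small jittered grid of hypothetical points, every patch has a complete local distance matrix so that classical MDS suffices, S is stored densely, m = p, and the SDP of Eq. 8 is replaced by an ordinary least-squares solve followed by a Cholesky factorization (adequate only when the distances are exact). All function names are illustrative.

```python
import numpy as np

def classical_mds(D, p):
    """Local embedding from a complete distance matrix (classical MDS)."""
    n = D.shape[0]
    J = np.eye(n) - np.ones((n, n)) / n
    evals, evecs = np.linalg.eigh(-0.5 * J @ (D ** 2) @ J)     # ascending eigenvalues
    return evecs[:, -p:] * np.sqrt(np.clip(evals[-p:], 0.0, None))

def build_stress_matrix(D, patches, p):
    """Sum the zero-padded nullspace projectors of all patches into a dense S."""
    N = D.shape[0]
    S = np.zeros((N, N))
    for patch in patches:
        local = classical_mds(D[np.ix_(patch, patch)], p)      # uses local distances only
        R_tilde = np.vstack([local.T, np.ones(len(patch))])
        _, s, Vt = np.linalg.svd(R_tilde)
        rank = int((s > 1e-10 * s.max()).sum())
        basis = Vt[rank:].T                                    # orthonormal nullspace basis
        S[np.ix_(patch, patch)] += basis @ basis.T
    return S

def lre_noise_free(D, patches, edges, p):
    """Coordinates (up to a rigid transformation) from exact local distances."""
    S = build_stress_matrix(D, patches, p)
    _, evecs = np.linalg.eigh(S)
    V = evecs[:, :p + 1]                      # eigenvectors with eigenvalues closest to 0
    V = V - V.mean(axis=0)                    # project out the all-ones vector
    Phi = np.linalg.svd(V, full_matrices=False)[0][:, :p]      # nontrivial eigenmap
    iu = np.triu_indices(p)
    mult = np.where(iu[0] == iu[1], 1.0, 2.0)
    rows, rhs = [], []
    for i, j in edges:                        # one linear equation per known distance
        u = Phi[i] - Phi[j]
        rows.append(mult * np.outer(u, u)[iu])
        rhs.append(D[i, j] ** 2)
    sol = np.linalg.lstsq(np.array(rows), np.array(rhs), rcond=None)[0]
    P = np.zeros((p, p))
    P[iu] = sol
    P = P + P.T - np.diag(np.diag(P))         # symmetric P = A^T A
    L = np.linalg.cholesky(P)                 # A = L^T
    return Phi @ L                            # rows are A phi_i

rng = np.random.default_rng(0)
gx, gy = np.meshgrid(np.arange(5.0), np.arange(5.0))
X = np.column_stack([gx.ravel(), gy.ravel()]) + 0.1 * (rng.random((25, 2)) - 0.5)
D = np.linalg.norm(X[:, None] - X[None, :], axis=-1)   # only local entries are read below
k = 8
patches = [np.argsort(D[i])[:k + 1] for i in range(len(X))]    # vertex i and its k neighbors
edges = [(int(q[0]), int(j)) for q in patches for j in q[1:]]
X_rec = lre_noise_free(D, patches, edges, p=2)
D_rec = np.linalg.norm(X_rec[:, None] - X_rec[None, :], axis=-1)
```

Although only distances within patches are used, `D_rec` reproduces all pairwise distances, including those between far-apart points. With noisy distances, the least-squares step would have to be replaced by the constrained problem of Eq. 8 with m > p.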

Summary and Discussion

We presented an observation that leads to a simple algorithm for finding the global configuration of points from their local noisy distances under the assumption of local rigidity. The LRE algorithm that uses the matrix W is similar to the LLE algorithm (24) for dimensionality reduction. The input to LLE is a set of high-dimensional data points, for which LLE constructs the weight matrix W by repeatedly solving overdetermined linear equation systems. In the global positioning problem, the input is not high-dimensional data points, but rather distance constraints that we preprocess (using MDS or SDP) to get a locally rigid low-dimensional embedding. Therefore, the weights are obtained by solving an underdetermined system (Eqs. 1–2) instead of an overdetermined system. The LRE variant that uses the symmetric matrix S is similar to the MLLE algorithm (25) in the sense that it uses more than a single weight vector, taking all basis vectors of the nullspace into account in the construction of S.

The assumption of local rigidity of the input graph is crucial for the algorithm to succeed. It is reasonable to ask whether or not this assumption holds in practice and how the algorithm can be modified to prevail even if the assumption fails to hold. These are important research questions that remain to be addressed. Even if a few vertices have corresponding subgraphs that are not rigid (nonlocalizable), so their weights are not included in the construction of the stress matrix S, the matrix S may still have a nullspace of dimension three. This can happen if the nonlocalizable vertices have enough localizable neighbors. Still, the algorithm fails when there are too many nonlocalizable vertices. For such nonlocalizable vertices, one possible approach is to consider larger subgraphs, such as the one containing all two-hop neighbors (the neighbors of the neighbors), hoping that the larger subgraph would be rigid. A different approach may be to consider smaller subgraphs, hoping that the removal of some neighbors would make the subgraph rigid. This is obviously possible if the vertex is included in a triangle (or some other small simple rigid subgraph), but at the risk that the small subgraph would be too small to be rigidly connected to the remaining graph. It is not clear which approach would work better; settling this requires numerical experiments, which are beyond the scope of the current article. There are many other interesting questions that follow from both the theoretical and algorithmic aspects, such as proving error bounds, finding other suitable operators for curves and surfaces, and dealing with specific constraints that arise from real-life datasets.

Acknowledgments.

I thank Ronald R. Coifman, who inspired this work. The center of mass operator emerged from my joint work with Yoel Shkolnisky on CryoEM. Dan Spielman is acknowledged for designing the symmetrized weight matrix. I would also like to thank Jia Fang, Yosi Keller, Yuval Kluger, and Richard Yang for valuable discussions on sensor networks, NMR spectroscopy, and rigidity. Finally, thanks are due to Rob Kirby for making valuable suggestions that improved the clarity of the manuscript. This work was supported by the National Geospatial Intelligence Agency (NGA) NGA University Research Initiatives, 2006.

Footnotes

The author declares no conflict of interest.

References

- 1. Biswas P, Liang TC, Toh KC, Wang TC, Ye Y. Semidefinite programming approaches for sensor network localization with noisy distance measurements. IEEE Trans Autom Sci Eng. 2006;3:360–371.

- 2. Aspnes J, et al. A theory of network localization. IEEE Trans Mobile Comput. 2006;5:1663–1678.

- 3. Ji X, Zha H. Sensor positioning in wireless ad-hoc sensor networks using multidimensional scaling. Proc IEEE INFOCOM. 2004;4:2652–2661.

- 4. Crippen GM, Havel TF. Distance Geometry and Molecular Conformation. New York: Wiley; 1988.

- 5. Berger B, Kleinberg J, Leighton FT. Reconstructing a three-dimensional model with arbitrary errors. J Assoc Comput Mach. 1999;47:212–235.

- 6. Weinberger KQ, Sha F, Zhu Q, Saul LK. Graph Laplacian regularization for large-scale semidefinite programming. In: Schölkopf B, Platt J, Hofmann T, editors. Advances in Neural Information Processing Systems (NIPS) 19. Cambridge, MA: MIT Press; 2007.

- 7. More JJ, Wu Z. Global continuation for distance geometry problems. Soc Ind Appl Math J Optimization. 1997;7:814–836.

- 8. Roth B. Rigid and flexible frameworks. Am Math Monthly. 1981;88:6–21.

- 9. Hendrickson B. The molecule problem: Exploiting structure in global optimization. Soc Ind Appl Math J Optimization. 1995;5:835–857.

- 10. Hendrickson B. Conditions for unique graph realizations. Soc Ind Appl Math J Comput. 1992;21:65–84.

- 11. Anderson BDO, et al. Graph properties of easily localizable networks. ACM Baltzer Wireless Networks (WINET). Dordrecht, The Netherlands: Springer; 2007.

- 12. Aspnes J, Goldenberg DK, Yang YR. On the computational complexity of sensor network localization. Proceedings of Algorithmic Aspects of Wireless Sensor Networks: First International Workshop (ALGOSENSORS), Lecture Notes in Computer Science 3121. Berlin, Germany: Springer; 2004. pp. 32–44.

- 13. Schoenberg IJ. Remarks on Maurice Frechet's article “Sur la definition axiomatique d'une classe d'espaces vectoriels distancies applicables vectoriellement sur l'espace de Hilbert.” Ann Math. 1935;36:724–732.

- 14. Young G, Householder AS. Discussion of a set of points in terms of their mutual distances. Psychometrika. 1938;3:19–22.

- 15. Cox TF, Cox MAA. Multidimensional Scaling. Monographs on Statistics and Applied Probability 88. Boca Raton, FL: Chapman & Hall/CRC; 2001.

- 16. Vandenberghe L, Boyd S. Semidefinite programming. Soc Ind Appl Math Rev. 1996;38:49–95.

- 17. Wright SJ, Wahba G, Lu F, Keles S. Framework for kernel regularization with application to protein clustering. Proc Natl Acad Sci USA. 2005;102:12332–12335. doi: 10.1073/pnas.0505411102.

- 18. Sun J, Boyd S, Xiao L, Diaconis P. The fastest mixing Markov process on a graph and a connection to a maximum variance unfolding problem. Soc Ind Appl Math Rev. 2006;48:681–699.

- 19. Gotsman C, Koren Y. Distributed graph layout for sensor networks. J Graph Algorithms Appl. 2005;9(3):327–346.

- 20. Srebro N, Jaakkola T. Weighted low-rank approximations. Proceedings of the Twentieth International Conference on Machine Learning (ICML). Menlo Park, CA: AAAI Press; 2003. pp. 720–727.

- 21. Roweis ST, Saul LK. Nonlinear dimensionality reduction by locally linear embedding. Science. 2000;290:2323–2326. doi: 10.1126/science.290.5500.2323.

- 22. Donoho DL, Grimes C. Hessian eigenmaps: Locally linear embedding techniques for high-dimensional data. Proc Natl Acad Sci USA. 2003;100:5591–5596. doi: 10.1073/pnas.1031596100.

- 23. Belkin M, Niyogi P. Laplacian eigenmaps for dimensionality reduction and data representation. Neural Comput. 2003;15:1373–1396.

- 24. Coifman RR, et al. Geometric diffusions as a tool for harmonic analysis and structure definition of data: Diffusion maps. Proc Natl Acad Sci USA. 2005;102:7426–7431. doi: 10.1073/pnas.0500334102.

- 25. Saul LK, Roweis ST. Think globally, fit locally: Unsupervised learning of low dimensional manifolds. J Mach Learn Res. 2003;4:119–155.

- 26. Zhang Z, Wang J. MLLE: Modified locally linear embedding using multiple weights. In: Schölkopf B, Platt J, Hofmann T, editors. Advances in Neural Information Processing Systems (NIPS) 19. Cambridge, MA: MIT Press; 2007. pp. 1593–1600.

- 27. Jacobs DJ, Hendrickson B. An algorithm for two-dimensional rigidity percolation: The pebble game. J Comput Phys. 1997;137:346–365.

- 28. Sturm JF. Using SeDuMi 1.02, a Matlab toolbox for optimization over symmetric cones. Optim Methods Software. 1999;11:625–653.