Abstract

Three methods for fitting the diffusion model (Ratcliff, 1978) to experimental data are examined. Sets of simulated data were generated with known parameter values, and from fits of the model to these data, we found that the maximum likelihood method was better than the chi-square and weighted least squares methods on two criteria: bias in the parameter estimates relative to the parameter values used to generate the data, and the standard deviations of the parameter estimates. These standard deviations can be used as measures of the variability in parameter estimates from fits to experimental data. We introduced contaminant reaction times and variability into the other components of processing besides the decision process and found that the maximum likelihood and chi-square methods failed, sometimes dramatically. But the weighted least squares method was robust to these two factors. We then present results from modifications of the maximum likelihood and chi-square methods, in which these factors are explicitly modeled, and show that the parameter values of the diffusion model are recovered well. We argue that explicit modeling is an important method for addressing contaminants and variability in nondecision processes and that it can be applied in any theoretical approach to modeling reaction time.

Sequential sampling models are currently the models most successful in accounting for data from simple two-choice tasks. Among these, diffusion models have been the ones most widely applied across a range of experimental procedures, including memory (Ratcliff, 1978, 1988), lexical decision (Ratcliff, Gomez, & McKoon, 2002), letter-matching (Ratcliff, 1981), visual search (Strayer & Kramer, 1994), decision making (Busemeyer & Townsend, 1993; Diederich, 1997; Roe, Busemeyer, & Townsend, 2001), simple reaction time (Smith, 1995), signal detection (Ratcliff & Rouder, 1998; Ratcliff, Thapar, & McKoon, 2001; Ratcliff, Van Zandt, & McKoon, 1999), and perceptual judgments (Ratcliff, 2002; Ratcliff & Rouder, 2000; Thapar, Ratcliff, & McKoon, 2002).

The experimental data to which the models are fit in two-choice tasks are accuracy rates and reaction time distributions for both correct and error responses. The ability of the models to deal with this range of data sets them apart from other models for two-choice decisions. Because multiple dependent variables need to be fit simultaneously and because the data can have contaminants, the fitting process is not straightforward. For these reasons, the model is a good testing ground for evaluating fitting methods.

In fitting any sequential sampling model to data, the aim is to find parameter values that allow the model to produce predicted reaction times and accuracy rates that are as close as possible to the empirical data. But so far in this domain, little attention has been paid to methods for fitting. Sometimes models have been fit by eye, by simply observing that they can capture the ordinal trends in the experimental data. Sometimes a standard criterion is used, such as chi square (e.g., Smith & Vickers, 1988), in which the difference between the observed and the predicted frequencies of reaction times in the reaction time distribution is minimized. Nonstandard criteria have also been used. For example, Ratcliff and Rouder (1998) and Ratcliff et al. (1999) fitted the ex-Gaussian distribution, used as a summary of reaction time distributions (Ratcliff, 1979; Ratcliff & Murdock, 1976), both to the data and to predictions from Ratcliff’s diffusion model. They then minimized the sum of squared differences between the ex-Gaussian parameters for predictions and for data plus the sum of squared differences between predicted and observed accuracy rates. Ratcliff and Rouder (2000) used a more direct sum-of-squares method in which quantile reaction times were used instead of the parameters of the summary (ex-Gaussian) distribution. The statistical properties of these fitting methods have not been examined.

In this article, we will examine three different methods for fitting the diffusion model to two-choice reaction time and accuracy data and will examine the properties of the estimators for the parameters of the model. The issues that arise have implications not only for fitting the diffusion model, but also for fitting summary models of single reaction time distributions (e.g., Heathcote, Brown, & Mewhort, 2002; Ratcliff, 1979; Van Zandt, 2000) and for fitting models in other domains. Throughout the article, we will discuss findings as they apply to the diffusion model case and also will place them in a more general context. Examples of the issues that have broad implications are the following. First, how should contaminant reaction times be handled empirically (can they be eliminated) and theoretically (can they be explicitly modeled)? Second, how robust is a method for estimating parameters, either to possible failures of a model’s assumptions or to contaminated data? Third, there are practical considerations, including computation speed. Fourth, an optimal fitting method should provide the best possible estimates of parameters. The estimates should be unbiased, and they should be consistent; that is, they should converge on the true parameter values as the number of observations increases. Fifth, the estimates should also have the smallest possible standard deviations, so that any single fit of a model to data produces estimates that are close to the true values. In Appendix A, we present a more formal discussion of the statistical factors involved in model fitting and parameter estimation.

In the model-fitting enterprise, sometimes there is no need for extremely accurate estimation of parameter values; finding a set of parameter values that produces predictions reasonably near the experimental data is enough to show that the model is capable of fitting the data. But there are many cases in which accurate estimation of parameter values is necessary. For example, any situation in which individual differences are examined requires reasonably accurate estimates of parameters. Also, if differences among the conditions in an experiment are to be examined, knowing the standard deviations in parameter estimates is important. To these ends, this article evaluates fitting methods in terms of their robustness and flexibility in fitting data and in terms of the accuracy with which they recover parameter values. Before explaining the fitting methods we evaluated and the results of the evaluations, we first present the diffusion model, which served both as our testing ground and as the model for which we needed good fitting methods.

THE DIFFUSION MODEL

Diffusion and random walk models form one of the major classes of sequential sampling models in the reaction time domain. The diffusion process is a continuous variant of the random walk process. The models best apply in situations in which subjects make two-choice decisions that are based on a single, “one-shot” cognitive process, decisions for which reaction times do not average much over 1 sec. The basic assumption of the models is that a stimulus test item provides information that is accumulated over time toward one of two decision criteria, each criterion representing one of the two response alternatives. A response is initiated when one of the decision criteria is reached. The researchers who have developed random walk and diffusion models take the approach that all the aspects of experimental data need to be accounted for by a model. This means that a model should deal with both correct and error reaction times, with the shapes of the full distributions of reaction times, and with the probabilities of correct versus error responses. It should be stressed that dealing with all these aspects of data is much more of a challenge than dealing with accuracy alone or with reaction time alone.

Random walk models have been prominent since the 1960s (Laming, 1968; Link & Heath, 1975; Stone, 1960). Diffusion models appeared in the late 1970s (Ratcliff, 1978, 1980, 1981). The random walk and diffusion models are close cousins and are not competitors of each other, although both compete with other sequential sampling models (e.g., accumulator models and counter models; see, e.g., LaBerge, 1962; Smith & Van Zandt, 2000; Smith & Vickers, 1988; Vickers, 1970, 1979; Vickers, Caudrey, & Willson, 1971). So, although we will deal with only one diffusion model in this article, most of the issues and qualitative results apply to other diffusion and random walk models, and the general approach applies to other sequential sampling models.

The earliest random walk models assumed that the accumulation of information occurred at discrete points in time, each piece of information being either fixed in size (e.g., Laming, 1968) or variable in size (Link, 1975; Link & Heath, 1975). The models were applied mainly in choice reaction time tasks and succeeded in accounting for accuracy and for mean reaction time for correct responses. They were also sometimes successful with mean error reaction times, but they rarely addressed the shapes of reaction time distributions.

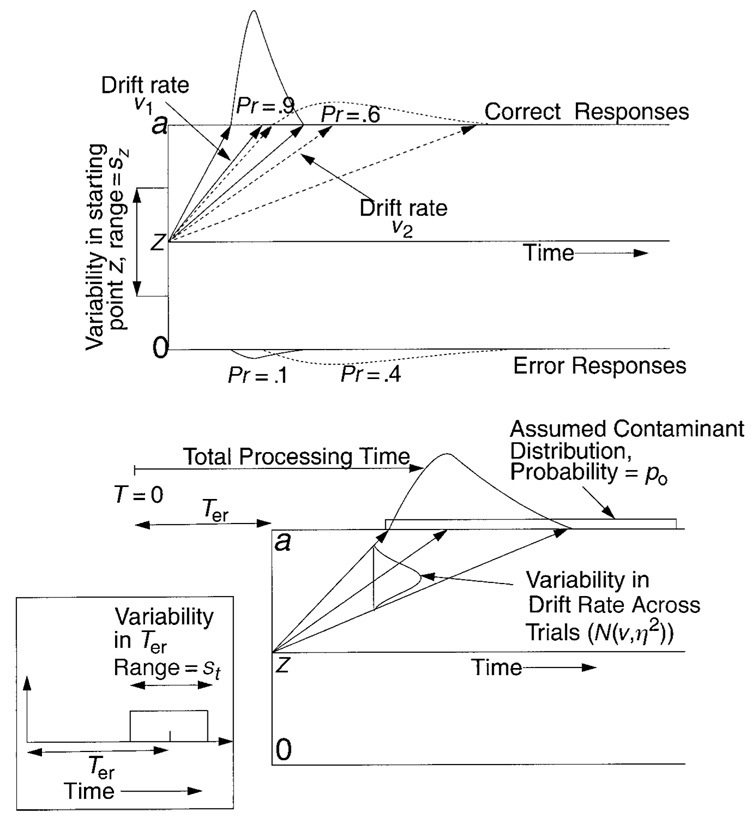

Ratcliff’s (1978) diffusion model is illustrated in Figure 1 in three panels, each showing different aspects of the model. Information is accumulated from a starting point z toward one or the other of the two response boundaries; a response is made when the process hits the upper boundary at a or the lower boundary at zero. The mean rate at which information is accumulated toward a boundary is called the drift rate. During the accumulation of information, drift varies around its mean with a standard deviation of s. This within-trial variability is large, and processes wander across a wide range, sometimes reaching the wrong boundary by mistake, which results in an error response. The top panel of the figure shows two processes, one with a drift rate of v1 (solid arrows) and the other with a drift rate of v2 (dashed arrows). Within-trial variability in drift rate leads to distributions of finishing times (reaction time distributions), one distribution for correct response times (at the top boundary for the processes shown in the figure) and another distribution for error responses (at the bottom boundary in the figure). The spread of the solid arrows shows the reaction time distribution for the process with drift rate v1, and the spread of the dashed arrows shows the reaction time distribution for the process with drift rate v2. The geometry of the diffusion process naturally maps out the right-skewed reaction time distributions typically observed in empirical data. The panel also shows how smaller drift rates (e.g., v2) lead to slower responses, with more chance of reaching the wrong boundary, and so to larger error rates.

Figure 1.

An illustration of the diffusion model and parameters. The top panel shows starting point variability and illustrates how accuracy and reaction time distribution shapes for correct and error responses change as a function of two different drift rates (v1 and v2). The bottom right panel illustrates variability in drift across trials (standard deviation η) and the distribution of contaminants. The bottom left panel shows variability in Ter, the nondecision component of reaction time.

The variability in drift rate within a trial, represented by the parameter s, is a scaling parameter: If it were doubled, for example, the parameters expressed in the same units as the accumulated information (boundary separation, starting point, drift rates, and the across-trial variability in starting point and drift) could also be doubled to produce predictions identical to those before the change. The parameter s is therefore fixed, not free, in fits of the model to data. It would be possible to fix another of the parameters instead, for example, boundary separation. But some empirical manipulations would be expected to affect boundary separation (e.g., speed–accuracy instructions), so that if such a parameter were fixed, the effects of the manipulation would show up in the values of other parameters and interpretation would be difficult. The most natural (and standard) assumption is to hold within-trial variability in drift constant across the whole range of different kinds of decisions in an experiment, from the easiest to the most difficult. We fixed s at 0.1, a value near those used in previous applications of the model (e.g., Ratcliff, 1978).
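The scaling argument can be checked numerically. The sketch below is a minimal Python illustration, assuming the standard closed-form expressions for a Wiener process with drift v, within-trial standard deviation s, absorbing boundaries at 0 and a, and starting point z, with no across-trial variability. The function names and parameter values are hypothetical. Multiplying v, a, z, and s by the same constant leaves both the response probability and the mean decision time unchanged, which is why one of these quantities has to be fixed.

```python
import math

def p_lower(v, a, z, s=0.1):
    """Probability of absorption at the lower boundary (0) before reaching a."""
    if v == 0:
        return (a - z) / a
    e_a = math.exp(-2 * v * a / s**2)
    e_z = math.exp(-2 * v * z / s**2)
    return (e_a - e_z) / (e_a - 1)

def mean_decision_time(v, a, z, s=0.1):
    """Mean absorption time (in the time units of v and s)."""
    if v == 0:
        return z * (a - z) / s**2
    # Optional stopping for X_t - v*t gives E[X_T] - z = v * E[T],
    # and E[X_T] = a * P(upper) because the lower boundary is at 0.
    p_upper = 1 - p_lower(v, a, z, s)
    return (a * p_upper - z) / v

# Hypothetical parameter values; time in seconds.
v, a, z, s = 0.25, 0.12, 0.06, 0.1
c = 2.0  # double s and every parameter expressed in evidence units
print(p_lower(v, a, z, s), p_lower(c * v, c * a, c * z, c * s))
print(mean_decision_time(v, a, z, s), mean_decision_time(c * v, c * a, c * z, c * s))
```

Both printed pairs agree up to floating-point rounding, whatever value of c is used.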

Variability in Parameters Across Trials

Besides variability in drift rate within each trial, there are several sources of variability across trials. For one, the mean drift rate for a given stimulus varies across trials (because subjects do not encode a stimulus in exactly the same way every time they encounter it). This variability is assumed to be normally distributed with a standard deviation of η, and it is illustrated in the bottom right panel of Figure 1.

Another source of variability is variability across trials in the starting point z (top panel of Figure 1). Variability across trials comes from a subject’s inability to hold the starting point of the accumulation of information constant across trials. The distribution of starting point values is assumed to be rectangular, with sz as its range. A rectangular distribution was chosen so that the starting point would be restricted to lie within the boundaries of the decision process.1
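The two across-trial distributions described above can be sampled as follows. This is a minimal sketch that assumes the rectangular starting-point distribution is centered on the mean starting point z (so it spans z − sz/2 to z + sz/2); the function name and the parameter values are hypothetical. The check at the top enforces the requirement that every starting point lie strictly between the boundaries.

```python
import numpy as np

def sample_trial_parameters(n, v, eta, z, sz, a, rng):
    """Draw per-trial drift rates and starting points.

    Drift ~ Normal(v, eta); starting point ~ Uniform(z - sz/2, z + sz/2),
    a rectangular distribution with range sz (assumed centered on z).
    """
    if not (z - sz / 2 > 0 and z + sz / 2 < a):
        raise ValueError("starting-point range must lie strictly inside (0, a)")
    drifts = rng.normal(v, eta, size=n)
    starts = rng.uniform(z - sz / 2, z + sz / 2, size=n)
    return drifts, starts

rng = np.random.default_rng(1)
drifts, starts = sample_trial_parameters(10_000, v=0.2, eta=0.08, z=0.05,
                                         sz=0.04, a=0.1, rng=rng)
print(drifts.mean(), drifts.std(), starts.min(), starts.max())
```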

Without variability in drift rate and starting point across trials, simple random walk and diffusion models predict that reaction times will be the same for correct and error responses when the two response boundaries are equidistant from the starting point; this is contrary to the data. There have been a number of attempts to account for error reaction times within random walk and accumulator model frameworks (e.g., Laming, 1968; Link & Heath, 1975; Smith & Vickers, 1988), and some of these were moderately successful. But the inability of most models to deal with the full range of effects led to a deemphasis of error reaction times in the literature: Error reaction times have relatively rarely been reported, and until recently there has been relatively little effort to deal with them theoretically.

However, recent work has shown how variability in drift rate and starting point can produce unequal correct and error reaction times (Laming, 1968; Ratcliff, 1978, 1981; Ratcliff et al., 1999; Smith & Vickers, 1988; Van Zandt & Ratcliff, 1995). Ratcliff (1978) showed that variability in drift across trials produces error reaction times that are slower, and Laming (1968) showed that variability in starting point across trials produces error reaction times that are faster. Ratcliff et al. (1999) and Ratcliff and Rouder (1998) showed that the combination of the two types of variability can produce accurate fits to both patterns. Most interestingly, the combination can produce a crossover, so that errors are slower than correct responses when accuracy is low and errors are faster than correct responses when accuracy is high. This crossover has been observed a number of times experimentally (Ratcliff & Rouder, 1998, 2000; Ratcliff et al., 1999; Smith & Vickers, 1988).

The diffusion process is a model of the decision process, and not of the other processes involved in a task, processes such as stimulus encoding, response output, memory access, retrieval cue assembly, and so on. The times required for these other processes are combined into one parameter, Ter (bottom left panel, Figure 1). From a theoretical perspective, it has always been recognized that there must be variability in Ter (and such variability has been used in the simple reaction time literature; cf. Smith, 1990). But it has never been clear what the addition of the extra parameter would buy for the sequential sampling models; it appeared that the success or failure of the models did not depend on it. However, we recently found sets of data for which the diffusion model fits missed badly in some conditions and discovered that this was due to large variability in the .1 quantile reaction times across conditions. Adding variability in Ter to the model corrected the fits (Ratcliff et al., 2002).

For purposes of modeling, Ter is assumed to be uniformly distributed (bottom left panel of Figure 1). The true distribution for Ter might be skewed or normal, but this distribution is convolved with the distribution from the decision process that has a larger standard deviation (by a factor of at least 4). The distribution of the convolution is determined almost completely by the distribution of the decision process, and so the precise shape of the distribution of Ter has little effect on predicted reaction time distribution shape. The standard deviation of the distribution of Ter determines the amount of variability in the .1 quantile reaction times across trials that can be accommodated by the model, and it also determines the size of the separation between the .1 and the .3 quantile reaction times relative to the case with no variability in Ter.
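A rough numerical check of the claim that the shape of the Ter distribution barely affects the predicted quantiles: the sketch adds either a uniform or a normal Ter (same mean and standard deviation) to the same set of decision times and compares the .1 to .9 quantiles. The gamma decision-time distribution is a stand-in assumption, not the diffusion model's own distribution, and all values are hypothetical; they were chosen so that the decision-time standard deviation is about 4.9 times that of Ter.

```python
import numpy as np

rng = np.random.default_rng(7)
n = 200_000
probs = [0.1, 0.3, 0.5, 0.7, 0.9]

# Stand-in decision times (gamma, SD about 0.14 s); hypothetical.
decision = rng.gamma(shape=2.0, scale=0.1, size=n)

ter_mean, st = 0.4, 0.1                 # hypothetical Ter mean and range (s)
ter_sd = st / np.sqrt(12)               # SD of a uniform with range st
ter_uniform = rng.uniform(ter_mean - st / 2, ter_mean + st / 2, size=n)
ter_normal = rng.normal(ter_mean, ter_sd, size=n)

# The same decision times are reused, so only the Ter shape differs.
q_uniform = np.quantile(decision + ter_uniform, probs)
q_normal = np.quantile(decision + ter_normal, probs)
max_diff_ms = 1000 * np.max(np.abs(q_uniform - q_normal))
print(f"SD ratio decision/Ter = {decision.std() / ter_sd:.1f}")
print(f"largest quantile difference = {max_diff_ms:.2f} ms")
```

Reusing the same decision times for both conditions keeps sampling noise from masking the (small) effect of the Ter shape.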

Simulating the Diffusion Process

The first step in an examination of fitting methods is to produce data to be fit. A computer program was written that, given input values for all the diffusion model parameters, generated simulated data from the model. That is, the program generated individual data points, each one a response choice with its associated reaction time. The aim was that the fitting methods should recover the correct parameter values—in other words, the parameter values from which the data were generated.

To produce simulated data from the diffusion process, a random walk approximation was used. Feller (1968, chap. 14) derived the diffusion process from the random walk by taking limits: As step size becomes small, the number of steps becomes large, and the probability of taking a step toward one boundary approaches .5. Specifically, if the random walk has a probability q of taking a step down, a step size in time of h, and a step size in space of δ, the random walk approaches the diffusion process as $\delta \to 0$, $h \to 0$, and $q \to 1/2$, such that $(p - q)\delta/h \to v$ and $4pq\delta^2/h \to s^2$, where $v$ and $s^2$ are constants and $p = 1 - q$. If these limits are applied to the expressions for reaction time distributions (first-passage times) and response probabilities, the diffusion process expressions are obtained (see Feller, 1968, chap. 14; Ratcliff, 1978).

To scale the random walk approximation so that the diffusion model parameters can be used, the step size and the probability of taking a step up or down need to be scaled, using the step size in time and the within-trial standard deviation in drift (s). First, we define the parameter h to be the step size in time (e.g., h might be 0.05 msec). In one time step, the process moves a distance δ up or down. We set $\delta = s\sqrt{h}$ and the probability of moving distance δ toward the lower boundary to $q = \frac{1}{2}\left(1 - \frac{v\sqrt{h}}{s}\right)$. The simulation starts from starting point z, and after each unit of time h, it takes a step of size δ up or down, until it terminates at 0 or a. With these definitions, as $h \to 0$, $\delta \to 0$; the mean displacement per unit time, $(p - q)\delta/h$, equals the drift rate v; the variance of the displacement per unit time, $4pq\delta^2/h = s^2(1 - v^2 h/s^2)$, approaches $s^2$; and so the random walk approaches the diffusion process.
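The step definitions can be checked directly: with δ = s√h and q = ½(1 − v√h/s), the drift per unit time equals v for every h, and the variance per unit time approaches s² as h shrinks. The sketch below assumes time is measured in seconds (so 0.05 msec is h = 5e-5); the function name and drift value are hypothetical.

```python
import math

def step_parameters(v, s, h):
    """Space step delta and down-step probability q for a time step h."""
    delta = s * math.sqrt(h)
    q = 0.5 * (1 - v * math.sqrt(h) / s)
    return delta, q

v, s = 0.3, 0.1                     # hypothetical drift; s fixed at 0.1
for h in (1e-3, 1e-4, 5e-5):        # time steps in seconds (5e-5 s = 0.05 msec)
    delta, q = step_parameters(v, s, h)
    p = 1 - q
    drift = (p - q) * delta / h             # mean displacement per unit time
    variance = 4 * p * q * delta**2 / h     # = s**2 * (1 - v**2 * h / s**2)
    print(f"h={h:g}: drift={drift:.6f}, variance={variance:.8f}")
```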

To implement this random walk approximation to the diffusion process in a computer program, it is more efficient to use integer arithmetic, rescaling distance so that a step is one unit up or down. This is accomplished by dividing a, z, and v by δ. To produce simulated data from the diffusion process, we reduced the step size in the random walk, in accordance with the limits stated above, until the random walk approximated the diffusion process. Steps with a size of 0.05 msec were used, and these produced mean reaction times within 0.1 msec and response probabilities within 0.1% of the values produced by explicit solutions for the diffusion model (see Appendix A).
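The integer-arithmetic version of the random walk can be sketched as below: positions are stored in units of δ, so each step is +1 or −1, and trials are simulated in parallel with NumPy. This is an illustrative sketch, not the authors' program; the function names and parameter values are hypothetical, and for speed it uses h = 0.1 msec rather than the 0.05 msec used in the text. The simulated response probability and mean decision time are compared with the explicit Wiener-process solutions.

```python
import numpy as np

def simulate_random_walk(v, a, z, s, h, n, rng):
    """Simulate n trials; returns (hit_upper, decision_time) arrays.

    Positions are integers (a and z divided by delta), so steps are +1 or -1.
    """
    delta = s * np.sqrt(h)
    q = 0.5 * (1 - v * np.sqrt(h) / s)          # probability of a step down
    top = int(round(a / delta))                 # upper boundary in step units
    pos = np.full(n, int(round(z / delta)))     # starting point in step units
    steps = np.zeros(n, dtype=np.int64)
    active = np.ones(n, dtype=bool)
    while active.any():
        idx = np.flatnonzero(active)
        up = rng.random(idx.size) >= q          # step up with probability 1 - q
        pos[idx] += np.where(up, 1, -1)
        steps[idx] += 1
        finished = (pos[idx] <= 0) | (pos[idx] >= top)
        active[idx[finished]] = False
    return pos >= top, steps * h

def explicit_solution(v, a, z, s):
    """Closed-form P(upper) and mean decision time for a Wiener process (v != 0)."""
    e_a, e_z = np.exp(-2 * v * a / s**2), np.exp(-2 * v * z / s**2)
    p_upper = 1 - (e_a - e_z) / (e_a - 1)
    return p_upper, (a * p_upper - z) / v

rng = np.random.default_rng(2024)
v, a, z, s, h = 0.25, 0.1, 0.05, 0.1, 1e-4      # hypothetical values; time in s
hit_upper, t = simulate_random_walk(v, a, z, s, h, 5000, rng)
p_exact, mean_exact = explicit_solution(v, a, z, s)
print(f"P(upper): simulated {hit_upper.mean():.3f}, explicit {p_exact:.3f}")
print(f"mean time: simulated {t.mean():.4f} s, explicit {mean_exact:.4f} s")
```

Reducing h (and therefore δ) tightens the approximation at the cost of more steps per trial, which is the trade-off described in the text.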

There are many other ways in which a diffusion process could be simulated (Tuerlinckx, Maris, Ratcliff, & De Boeck, 2001). The advantage of the random walk approximation is its generality. In some models, the drift rate is assumed to change with position in the process, as in the Ornstein–Uhlenbeck diffusion model (Busemeyer & Townsend, 1992, 1993; Smith, 1995), or with time during the process. For example, if a stimulus display is masked during processing, before a response has been produced, the drift rate could be assumed to fall after the mask appears (see Ratcliff & Rouder, 2000). In these cases, exact solutions are usually not available, but the program that simulates the diffusion process with the random walk is extremely easy to modify.
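To illustrate how little the random walk needs to change for a time-varying drift, the sketch below recomputes the step probability q at every time step from a user-supplied drift function. The "mask" scenario, in which drift drops to zero after 100 msec, is a hypothetical example, as are the function name and all parameter values. With the drop, fewer processes reach the upper boundary than with constant drift.

```python
import numpy as np

def simulate_time_varying(drift_at, a, z, s, h, n, rng):
    """Random walk in which drift_at(t) gives the drift rate at time t."""
    delta = s * np.sqrt(h)
    top = int(round(a / delta))
    pos = np.full(n, int(round(z / delta)))
    finish = np.full(n, np.nan)
    active = np.ones(n, dtype=bool)
    step = 0
    while active.any():
        # Only change from the constant-drift walk: q depends on current time.
        q = 0.5 * (1 - drift_at(step * h) * np.sqrt(h) / s)
        idx = np.flatnonzero(active)
        pos[idx] += np.where(rng.random(idx.size) >= q, 1, -1)
        step += 1
        done = (pos[idx] <= 0) | (pos[idx] >= top)
        finish[idx[done]] = step * h
        active[idx[done]] = False
    return pos >= top, finish

rng = np.random.default_rng(8)
params = dict(a=0.1, z=0.05, s=0.1, h=1e-4, n=3000, rng=rng)
constant, _ = simulate_time_varying(lambda t: 0.3, **params)
masked, _ = simulate_time_varying(lambda t: 0.3 if t < 0.1 else 0.0, **params)
print(f"P(upper): constant drift {constant.mean():.3f}, drift drop {masked.mean():.3f}")
```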

CONTAMINANT REACTION TIMES

In evaluating the three fitting methods, we addressed the issue of contaminant reaction times. We defined contaminants as responses that come from some process other than the diffusion decision process. One class of contaminants is outliers—response times outside the usual range of responses (either shorter or longer). Outliers are a serious problem in reaction time research. They can cause major problems in data analysis, because they can distort estimates of mean reaction time and standard deviation in reaction time (see Ratcliff, 1979). Also, outliers significantly reduce the power of an analysis of variance (see Ratcliff, 1993; Ulrich & Miller, 1994). The other class of contaminants is reaction times that overlap with the distribution of reaction times from the process being examined. These are also a problem for data analysis, although not as serious as the problem caused by outliers. Contaminants might arise, for example, from a guess or from a momentary distraction that is followed by a fast response.

Fast guesses, one kind of outlier, have in themselves been a topic for modeling. The influential “fast guess” model was developed to account for speed–accuracy tradeoffs when these tradeoffs were accomplished by increasing or decreasing the number of fast guesses (Ollman, 1966; Swensson, 1972; Yellott, 1971). Fast error responses are often called fast guesses, but this is usually not an accurate description. True fast guesses are guesses; that is, their accuracy is at chance (Swensson, 1972). So, in a condition in which there are fast error responses, it is necessary to determine whether all fast responses are at chance. If many fast responses are accurate and there are few fast errors, the fast errors are not fast guesses. It cannot be stressed enough that fast errors are not fast guesses unless all responses below some lower cutoff (e.g., the fastest 10%, 5%, or 1% of the responses) are at chance. This is the signature that is needed to identify fast guesses, and it can be used to eliminate subjects or to decide where to place lower cutoffs.

The method we have adopted for dealing with contaminants in data analyses is, first, to eliminate fast and slow outliers, using cutoffs. For fast outliers, we place an upper cutoff at, say, 300 msec and a lower cutoff at zero and examine how many reaction times appear in that range for each subject, examining the accuracy of these responses. If a subject has a significant number of responses (e.g., over 5% or 10%) that are fast and at chance, the subject is a candidate for elimination from the experiment (we occasionally find such noncooperative subjects). We then increase the upper cutoff (to, say, 350 msec) to see whether accuracy begins to rise above chance. Repeating this process with increasingly larger cutoff values allows us to determine a good choice of a cutoff for fast outliers.
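The cutoff search can be tabulated as follows: for each candidate cutoff, count the responses faster than it and compute their accuracy, looking for the point at which accuracy starts to rise above chance (.5 for two choices). The function name and the small data set are hypothetical; the data include four fast responses at chance, which is also a large enough proportion (4 of 12) to flag the subject as a candidate for elimination under the 5% or 10% criterion mentioned in the text.

```python
import numpy as np

def fast_response_accuracy(rts_ms, correct, cutoffs_ms):
    """For each candidate cutoff, return (cutoff, n_fast, proportion, accuracy)
    for the responses faster than the cutoff."""
    rts_ms = np.asarray(rts_ms, dtype=float)
    correct = np.asarray(correct, dtype=bool)
    rows = []
    for cutoff in cutoffs_ms:
        fast = rts_ms < cutoff
        n_fast = int(fast.sum())
        accuracy = float(correct[fast].mean()) if n_fast else float("nan")
        rows.append((cutoff, n_fast, n_fast / rts_ms.size, accuracy))
    return rows

# Hypothetical subject: four fast responses at chance, then regular responses.
rts = [150, 170, 190, 210, 360, 380, 420, 450, 500, 520, 600, 700]
acc = [1, 0, 1, 0, 1, 1, 1, 0, 1, 1, 1, 1]
for cutoff, n_fast, prop, accuracy in fast_response_accuracy(rts, acc, [300, 350, 400, 450]):
    print(f"< {cutoff} ms: n={n_fast}, proportion={prop:.2f}, accuracy={accuracy:.2f}")
```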

The method just described for setting a cutoff for fast outliers is workable in most situations. However, when an experimental task biases one response over the other (e.g., Ratcliff, 1985; Ratcliff et al., 1999), one response will typically be faster than the other, sometimes by as much as 100–200 msec in mean reaction time. This means that the shortest reaction times for the biased response can be up to 100 msec shorter than those for the nonbiased response. In this case, cutoffs will not allow fast guesses of the biased response to be distinguished from genuine responses from the decision process. Examining the shape of reaction time distributions is one possible way of detecting fast outliers: If the leading edge of the distribution has a long rise or if reaction times are less than 250 msec, the short reaction times should be viewed with suspicion. Also, if fast errors occurred only in a high-accuracy condition in which the correct response was the one biased against, these fast errors could be fast guesses of the biased response.

For slow outliers, we set an upper cutoff not by some fixed proportion of responses, but rather by determining a point above which few responses fall. The choice depends on the research goal; the cutoff might be smaller for hypothesis testing than for model fitting (see Ratcliff, 1993, for a discussion of the power of tests).

For model fitting, cutoffs can eliminate extremely fast and slow outliers. However, to eliminate all contaminants is impossible. The solution we adopted to deal with these remaining contaminants was to explicitly represent them in the fitting method and estimate their proportion (po). For the diffusion model simulations, we assumed that contaminants were generated only by a delay inserted in the usual decision process. Specifically, we assumed that on some proportion of the trials, a random delay was introduced into the response time. Thus, the observed response times in each condition were a mixture of responses from a regular diffusion process (with a probability of 1 − po) and contaminant responses (with a probability of po; see Figure 1, bottom right panel).
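Contaminated data of the kind just described can be generated by adding a random delay to a proportion po of the trials while leaving the response choice unchanged. In this sketch the delay is assumed to be uniform between 0 and a hypothetical maximum of 2 sec, and the clean reaction times are a gamma stand-in rather than diffusion model output; the text specifies only that the delay is random.

```python
import numpy as np

def add_contaminants(rts, p_o, max_delay, rng):
    """With probability p_o, add a delay drawn uniformly from [0, max_delay]
    (an assumed delay distribution) to a trial's RT.

    Returns the new RTs and a boolean mask marking contaminated trials.
    """
    rts = np.asarray(rts, dtype=float)
    is_contaminant = rng.random(rts.size) < p_o
    delays = rng.uniform(0.0, max_delay, size=rts.size)
    return np.where(is_contaminant, rts + delays, rts), is_contaminant

rng = np.random.default_rng(3)
clean = 0.3 + rng.gamma(2.0, 0.1, size=10_000)   # stand-in for diffusion RTs (s)
observed, mask = add_contaminants(clean, p_o=0.05, max_delay=2.0, rng=rng)
print(f"contaminated proportion: {mask.mean():.3f}")
```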

Our assumptions about contaminants are reasonable if subjects are cooperative and if they make errors only as a result of lapses of attention or other short interruptions. If subjects produce contaminants in other ways (e.g., they could guess in difficult conditions), different assumptions could be incorporated into the fitting program. Dealing with contaminants theoretically in this way can easily be extended to fitting summary distributions for reaction time distributions, as in Heathcote et al. (2002), Ratcliff (1979), Ratcliff and Murdock (1976), and Van Zandt (2000).

For clarity, it is worth noting that issues of fitting contaminant reaction times will not be addressed until the methods for fitting the diffusion model have been introduced and evaluated in detail. Issues concerning variability in Ter will be addressed even later in the text.

FITTING THE DIFFUSION MODEL

To fit the diffusion model to a set of data, characteristics of the data have to be compared with the model’s predictions for those characteristics. The three different fitting methods we evaluated each compare different characteristics, and each requires an expression for the model’s predictions. Collectively, the comparisons require the predicted probability densities for individual reaction times, the predicted cumulative probability distribution, and predicted values of accuracy for each of the experimental conditions. The expressions for all of these are given in Appendix B.

Because the expressions do not have closed forms and because some of the parameters’ values vary across trials (starting point, drift rate, and Ter), the predictions must be computed numerically as described in detail in Appendix B. Numerical computation allows the accuracy of the predictions to be adjusted by adjusting the number of terms in infinite series for the reaction time distributions or increasing the number of terms in numerical integration over starting point and drift variability (although the more accuracy desired, the longer the fits take). For the tests of the fitting methods described below, predicted values of reaction time and accuracy were computed to within 0.1 msec and .0001, respectively.

Maximum Likelihood Fitting Method

Given simulated data and expressions for the diffusion model’s predictions about the data, we can fit the model to the data to see how well the maximum likelihood method recovers parameter values. For the maximum likelihood method, the predicted defective probability density [f (ti)] is computed for each simulated reaction time (ti), for both correct and error responses. By a defective density, we mean nothing more than one that does not integrate to one (see Feller, 1968); it integrates to the probability of the response. The product of these defective density values is the likelihood [L = Πf (ti)] that is to be maximized by adjusting parameter values. Because the product of a large number of density values can exceed the numerical limits of the computer used to compute the likelihood (it can underflow to zero or overflow), the log of the likelihood is used instead; maximizing the likelihood is achieved with the same parameters as maximizing the log of the likelihood. Also, maximizing the log likelihood is the same as minimizing minus the log likelihood, and most optimization routines are designed for minimization.
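As an illustration of defective densities and the log likelihood, the sketch below uses the standard large-time series for the first-passage density of a Wiener process at the lower boundary, with the upper-boundary density obtained by reflection (v → −v, z → a − z). It omits across-trial variability in drift, starting point, and Ter, so it is a simplified stand-in for the expressions in Appendix B; the function names, number of series terms, and parameter values are assumptions. Integrating each defective density recovers the corresponding response probability, and the two sum to one.

```python
import numpy as np

def density_lower(t, v, a, z, s=0.1, n_terms=100):
    """Defective first-passage density at the lower boundary (large-time series)."""
    t = np.atleast_1d(np.asarray(t, dtype=float))[:, None]
    k = np.arange(1, n_terms + 1)[None, :]
    series = np.sum(k * np.sin(k * np.pi * z / a)
                    * np.exp(-k**2 * np.pi**2 * s**2 * t / (2 * a**2)), axis=1)
    return (np.pi * s**2 / a**2) * np.exp(-v * z / s**2 - v**2 * t[:, 0] / (2 * s**2)) * series

def density_upper(t, v, a, z, s=0.1, n_terms=100):
    """Upper boundary: reflect the process (v -> -v, z -> a - z)."""
    return density_lower(t, -v, a, a - z, s, n_terms)

def log_likelihood(times, hit_upper, v, a, z, s=0.1):
    """Sum of log defective densities; times are decision times (Ter removed)."""
    times = np.asarray(times, dtype=float)
    hit_upper = np.asarray(hit_upper, dtype=bool)
    dens = np.where(hit_upper, density_upper(times, v, a, z, s),
                    density_lower(times, v, a, z, s))
    return float(np.sum(np.log(dens)))

def trapezoid(y, x):
    return float(np.sum(np.diff(x) * (y[1:] + y[:-1]) / 2))

# Each defective density integrates to its response probability.
t_grid = np.linspace(1e-3, 5.0, 20001)
mass_lower = trapezoid(density_lower(t_grid, 0.25, 0.1, 0.05), t_grid)
mass_upper = trapezoid(density_upper(t_grid, 0.25, 0.1, 0.05), t_grid)
print(mass_lower, mass_upper, mass_lower + mass_upper)
print(log_likelihood([0.2, 0.35, 0.5], [True, True, False], 0.25, 0.1, 0.05))
```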

Ratcliff’s implementation of the maximum likelihood method involves using the predicted defective cumulative distribution function to obtain the defective cumulative probability for each reaction time, F(ti), and the defective cumulative probability for that reaction time plus an increment, F(ti + dt), where dt is small (e.g., 0.5 msec). Then, by using f (t) = [F(t + dt) − F(t)]/dt, the predicted defective probability density at point t can be obtained. Summing the logs of the predicted defective probability densities for all the reaction times gives the log likelihood. Again, minus the log likelihood is minimized.
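The finite-difference route to the density can be checked against a directly computed density. The sketch uses the standard series for the defective cumulative distribution at the lower boundary of a Wiener process (no across-trial variability) and compares [F(t + dt) − F(t)]/dt, with dt = 0.5 msec, to the series density. Function names, the number of terms, and parameter values are assumptions; the upper boundary would follow by reflection (v → −v, z → a − z).

```python
import numpy as np

S = 0.1  # within-trial SD, fixed as in the text

def cdf_lower(t, v, a, z, s=S, n_terms=100):
    """Defective probability of absorption at 0 by time t (series form)."""
    k = np.arange(1, n_terms + 1)
    lam = v**2 / s**2 + (np.pi * k * s / a) ** 2
    e_a, e_z = np.exp(-2 * v * a / s**2), np.exp(-2 * v * z / s**2)
    p_lower = (e_a - e_z) / (e_a - 1)
    series = np.sum(2 * k * np.sin(k * np.pi * z / a) * np.exp(-0.5 * lam * t) / lam)
    return p_lower - (np.pi * s**2 / a**2) * np.exp(-v * z / s**2) * series

def density_lower(t, v, a, z, s=S, n_terms=100):
    """Defective density at the lower boundary (series form)."""
    k = np.arange(1, n_terms + 1)
    series = np.sum(k * np.sin(k * np.pi * z / a) * np.exp(-(k * np.pi * s / a) ** 2 * t / 2))
    return (np.pi * s**2 / a**2) * np.exp(-v * z / s**2 - v**2 * t / (2 * s**2)) * series

def fd_density(t, dt, *params):
    """Forward-difference density, f(t) = [F(t + dt) - F(t)] / dt."""
    return (cdf_lower(t + dt, *params) - cdf_lower(t, *params)) / dt

params = (0.25, 0.1, 0.05)       # hypothetical v, a, z
for t0 in (0.2, 0.3, 0.5):
    print(t0, fd_density(t0, 5e-4, *params), density_lower(t0, *params))
```

The forward difference has an error of roughly dt/2 times the slope of the density, which is small here relative to the density itself.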

To minimize minus the log likelihood, Ratcliff used the SIMPLEX routine (Nelder & Mead, 1965; see also Appendix B). This routine takes starting values for each parameter and calculates the value of the function to be minimized. It then repeatedly changes the parameter values, sometimes one at a time and sometimes several together, so as to reduce the value of the objective function (the routine keeps a simplex of n + 1 candidate sets of parameter values and replaces the worst set by reflecting, expanding, or contracting the simplex). This process is repeated until either the parameters do not change from one iteration to the next by more than some small amount or the value to be minimized does not change by more than some small amount.
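A small end-to-end illustration of simplex minimization: the sketch simulates data from the integer random walk and recovers drift rate and boundary separation by minimizing minus the log likelihood. SciPy's `minimize(..., method="Nelder-Mead")` is used as a stand-in for the SIMPLEX routine, not as Ratcliff's program. The model is simplified (starting point fixed at a/2, no across-trial variability, no Ter), and all function names and values are hypothetical.

```python
import numpy as np
from scipy.optimize import minimize

S, H = 0.1, 1e-4   # within-trial SD and simulation time step (s); hypothetical

def simulate(v, a, n, rng, s=S, h=H):
    """Integer random walk starting at a/2; returns (hit_upper, decision_time)."""
    delta = s * np.sqrt(h)
    q = 0.5 * (1 - v * np.sqrt(h) / s)
    top = int(round(a / delta))
    pos = np.full(n, top // 2)
    steps = np.zeros(n, dtype=np.int64)
    active = np.ones(n, dtype=bool)
    while active.any():
        idx = np.flatnonzero(active)
        pos[idx] += np.where(rng.random(idx.size) >= q, 1, -1)
        steps[idx] += 1
        done = (pos[idx] <= 0) | (pos[idx] >= top)
        active[idx[done]] = False
    return pos >= top, steps * h

def density_lower(t, v, a, z, s=S, n_terms=150):
    """Defective density at the lower boundary (large-time series)."""
    k = np.arange(1, n_terms + 1)[None, :]
    series = np.sum(k * np.sin(k * np.pi * z / a)
                    * np.exp(-(k * np.pi * s / a) ** 2 * t[:, None] / 2), axis=1)
    return (np.pi * s**2 / a**2) * np.exp(-v * z / s**2 - v**2 * t / (2 * s**2)) * series

def neg_log_likelihood(params, hit_upper, times):
    v, a = params
    if a <= 0:
        return np.inf
    z = a / 2
    dens = np.where(hit_upper, density_lower(times, -v, a, a - z),  # upper by reflection
                    density_lower(times, v, a, z))
    if np.any(dens <= 0):
        return np.inf
    return -np.sum(np.log(dens))

rng = np.random.default_rng(11)
hit_upper, times = simulate(v=0.25, a=0.1, n=2000, rng=rng)
fit = minimize(neg_log_likelihood, x0=[0.15, 0.08], args=(hit_upper, times),
               method="Nelder-Mead")
v_hat, a_hat = fit.x
print(f"v = {v_hat:.3f}, a = {a_hat:.4f}")
```

Returning infinity for impossible parameter values is a simple way to keep a derivative-free search inside the admissible region.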

Tuerlinckx’s implementation of the maximum likelihood method uses the defective probability density of each reaction time obtained directly from the predictions of the model (rather than through the cumulative distribution function). Across-trial variability in drift is integrated over analytically, and so there is one less numerical integration for the density function. (We were able to integrate over drift variability analytically only for the density, not for the cumulative distribution function. Ratcliff used his expression for the distribution function, which is also used in the chi-square and weighted least squares programs, to produce the density numerically for the maximum likelihood method, partly so that his method and Tuerlinckx’s could be checked against each other.)
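Why drift variability can be integrated out of the density: in the standard large-time series for the lower-boundary density, the drift v enters only through the factor exp(−vz/s² − v²t/(2s²)), which is Gaussian in v, so its expectation under a normal drift distribution has a closed form. The expression in the code was derived here by completing the square and should be treated as a sketch to be checked against Appendix B, not as the paper's own equation. Names and values are hypothetical; the check compares the closed form with brute-force numerical integration over drift.

```python
import numpy as np

S = 0.1

def series_part(t, a, z, s=S, n_terms=100):
    """Drift-free part of the lower-boundary density series."""
    k = np.arange(1, n_terms + 1)
    return (np.pi * s**2 / a**2) * np.sum(
        k * np.sin(k * np.pi * z / a) * np.exp(-(k * np.pi * s / a) ** 2 * t / 2))

def density_lower(t, v, a, z, s=S):
    """Lower-boundary density for a fixed drift v."""
    return np.exp(-v * z / s**2 - v**2 * t / (2 * s**2)) * series_part(t, a, z, s)

def density_lower_drift_averaged(t, nu, eta, a, z, s=S):
    """Same density averaged over drift ~ Normal(nu, eta**2), in closed form."""
    r = 1 + eta**2 * t / s**2
    expo = (eta**2 * z**2 / s**4 - 2 * nu * z / s**2 - nu**2 * t / s**2) / (2 * r)
    return np.exp(expo) / np.sqrt(r) * series_part(t, a, z, s)

# Brute-force check: integrate the fixed-drift density against the normal pdf.
t, nu, eta, a, z = 0.3, 0.2, 0.1, 0.1, 0.04      # hypothetical values
v_grid = np.linspace(nu - 10 * eta, nu + 10 * eta, 4001)
weights = np.exp(-(v_grid - nu) ** 2 / (2 * eta**2)) / (eta * np.sqrt(2 * np.pi))
integrand = np.array([density_lower(t, v, a, z) for v in v_grid]) * weights
numeric = float(np.sum(np.diff(v_grid) * (integrand[1:] + integrand[:-1]) / 2))
closed = density_lower_drift_averaged(t, nu, eta, a, z)
print(numeric, closed)
```

The upper-boundary version follows by reflection, replacing nu with −nu and z with a − z.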

To minimize minus the log likelihood, Tuerlinckx used a constrained optimization routine (NPSOL; Gill, Murray, Saunders, & Wright, 1998; implemented in the NAG library) that searches for the minimum of minus the log likelihood by using finite difference approximations to the first derivatives of the objective function. Although NPSOL allows the user to supply the analytic partial derivatives (the slopes of the objective function with respect to each parameter), these were not used, because they are very complicated. In this application, the finite difference method is generally faster than the SIMPLEX method, because it uses more information about the objective function (its derivatives). But it is less robust, and numerical instability can cause it to fail to converge on the minimum. Tuerlinckx’s method is about five times faster than Ratcliff’s method.

Chi-Square Fitting Method

There are several ways of using a chi-square method for fitting the diffusion model to data; the one we chose was designed to maximize the speed of its computer implementation (and it is the method routinely used by Smith, 1995; Smith & Vickers, 1988).

The chi-square method we used works as follows. First, the simulated reaction times are grouped into bins, separately for correct and error responses. We used six bins: the two extreme bins each contain 10% of the observations, and the other four each contain 20%. The empirical reaction times that divide the data into the six bins are the .1, .3, .5, .7, and .9 quantiles. Inserting the five quantile reaction times for correct responses into the predicted cumulative probability function gives the expected cumulative probability of a correct response up to each quantile. Subtracting the cumulative probability at each quantile from that at the next higher quantile gives the proportion of responses expected between each pair of adjacent quantiles; the expected proportion in the lowest bin is the cumulative probability at the .1 quantile, and that in the highest bin is the predicted probability of a correct response minus the cumulative probability at the .9 quantile. Multiplying these proportions by the total number of observations in the condition gives the expected frequencies in each bin for correct responses. Doing the same thing for the five quantiles for error responses gives the expected frequencies in each of the bins for error responses. (If there were fewer than five errors in an experimental condition, five quantiles could not be computed, and the error reaction times for the condition were excluded from the chi-square computation.) The expected frequencies (E) are compared with the observed frequencies (O). The chi-square statistic is the sum over the 12 bins (6 for correct responses and 6 for error responses) of $(O - E)^2/E$, and these sums are then added across conditions. This chi-square statistic is the objective function to be minimized by parameter adjustment.
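The per-condition computation can be sketched for one response type as follows. The sketch assumes that the predicted cumulative function is defective (it tops out at the predicted probability of that response) and that expected proportions are multiplied by the total number of observations in the condition, which is how accuracy enters the statistic in the frequency example given later in this section. Observed counts are taken to be the nominal 10% and 20% bin shares. The shifted-exponential `toy_cdf` is hypothetical and stands in for the diffusion model’s F(t).

```python
import math

# Share of one response type's observations in each of the six bins
# bounded by its .1, .3, .5, .7, and .9 quantiles.
BIN_SHARES = [0.1, 0.2, 0.2, 0.2, 0.2, 0.1]

def chi_square_one_response(rt_quantiles, n_response, n_condition, defective_cdf, p_response):
    """Chi-square contribution of one response type (correct or error) in one condition.

    rt_quantiles:  the five observed quantile RTs for this response type (sec)
    n_response:    observed number of responses of this type
    n_condition:   total number of observations in the condition
    defective_cdf: predicted probability of this response by time t
    p_response:    predicted overall probability of this response
    """
    observed = [n_response * share for share in BIN_SHARES]
    # Five CDF evaluations, bracketed by 0 and the total response probability.
    cum = [0.0] + [defective_cdf(t) for t in rt_quantiles] + [p_response]
    expected = [n_condition * (hi - lo) for lo, hi in zip(cum[:-1], cum[1:])]
    return sum((o - e) ** 2 / e for o, e in zip(observed, expected))

def toy_cdf(t, p=0.9, ter=0.3, tau=0.1):
    """Hypothetical defective CDF: response probability p, shifted-exponential timing."""
    return 0.0 if t <= ter else p * (1.0 - math.exp(-(t - ter) / tau))

quantile_rts = [0.3 - 0.1 * math.log(1.0 - q) for q in (0.1, 0.3, 0.5, 0.7, 0.9)]
perfect = chi_square_one_response(quantile_rts, 225, 250, toy_cdf, 0.9)  # accuracy .9 observed
misfit = chi_square_one_response(quantile_rts, 200, 250, toy_cdf, 0.9)   # accuracy .8 observed
```

With matching quantiles and accuracy the statistic is essentially zero; when the observed accuracy is .8 but the model predicts .9, every correct-response bin is overpredicted by the same factor and the statistic becomes 625/225 ≈ 2.78, even though the quantile reaction times agree.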

For each condition, only five evaluations of F(t) are required for correct responses and five for error responses, regardless of the number of observations in the condition. As compared with Ratcliff’s maximum likelihood method, the program runs 50 times faster with 250 observations per condition and 200 times faster with 1,000 observations per condition. This is because maximum likelihood requires the density at every observation, and Ratcliff’s numerically computed density requires two evaluations of the distribution function (so 250 observations require 500 evaluations, as compared with 10 for the chi-square method).

Weighted Least Squares Fitting Method

In this method, the quantity minimized is the sum of the squared differences between observed and predicted accuracy values plus the weighted sum of the squared differences between observed and predicted quantile reaction times for correct and error responses. The minimized function is the sum over experimental conditions (computed separately for correct and error responses) of

$$4\,(\mathit{pr}_{\mathrm{th}} - \mathit{pr}_{\mathrm{ex}})^2 + \sum_i \mathit{wt}_i \, \mathit{pr}_{\mathrm{ex}} \, [Q_{\mathrm{th}}(i) - Q_{\mathrm{ex}}(i)]^2,$$

where pr is accuracy, Q(i) is the ith quantile reaction time in seconds, th stands for predicted, ex stands for experimental, and $\mathit{wt}_i$ was 2 for the .1 and .3 quantiles, 1 for the .5 and .7 quantiles, and 0.5 for the .9 quantile. Just as with the chi-square method, if there were fewer than five errors in a condition, five quantiles could not be computed, and the error reaction times for that condition were excluded from the least squares computation.
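The weighted least squares term translates directly into code. In this sketch, each `(p_th, p_ex, q_th, q_ex)` tuple is one response type in one condition, and passing `q_ex=None` drops the quantile part when there are fewer than five responses. Whether the accuracy part is also dropped in that case is not stated in the text, so keeping it is an assumption here; the function names are hypothetical.

```python
# Quantile weights from the text, for the .1, .3, .5, .7, and .9 quantiles.
WEIGHTS = [2.0, 2.0, 1.0, 1.0, 0.5]

def wls_term(p_th, p_ex, q_th, q_ex):
    """4 (p_th - p_ex)^2 + sum_i w_i * p_ex * (Q_th(i) - Q_ex(i))^2, RT quantiles in seconds."""
    accuracy_part = 4.0 * (p_th - p_ex) ** 2
    if q_ex is None:
        # Too few responses for five quantiles: quantile part dropped (accuracy kept: an assumption).
        return accuracy_part
    rt_part = sum(w * p_ex * (qt - qe) ** 2 for w, qt, qe in zip(WEIGHTS, q_th, q_ex))
    return accuracy_part + rt_part

def wls_objective(terms):
    """Sum wls_term over (p_th, p_ex, q_th, q_ex) tuples for all conditions and response types."""
    return sum(wls_term(*term) for term in terms)

# Hypothetical condition: correct responses with full quantiles, errors with too few responses.
value = wls_objective([
    (0.9, 0.88, [0.40, 0.45, 0.50, 0.56, 0.66], [0.41, 0.45, 0.49, 0.56, 0.70]),
    (0.1, 0.12, [0.42, 0.48, 0.55, 0.63, 0.80], None),
])
```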

The weights were chosen to roughly approximate the relative amounts of variability in the quantiles, weighting more heavily those quantile points for which variability was smaller. For simple linear regression, an appropriate weighting scheme is to weight each squared deviation by the reciprocal of the variance of the corresponding data point. This gives the smallest standard deviations in the estimates of the parameters (for normally distributed residuals). Whether our weights correspond to the relative variabilities of the quantile reaction times can be determined in two ways: by computing the theoretical standard deviations for the quantile points and by computing their standard deviations empirically from experiments. Theoretically, the asymptotic variance of a quantile reaction time at quantile q is $q(1 - q)/(N f^2)$, where N is the number of observations and f is the probability density at that quantile (Kendall & Stuart, 1977, Vol. 1, p. 252). We carried out this computation for two sets of parameter values (the first and sixth rows of Table 1, with drift rates of .3 and .1, respectively), computing the standard deviations in the quantile reaction times. We then divided the .5 quantile standard deviation by each of the others to give relative weights. For the five quantiles .1, .3, .5, .7, and .9, the ratios were 2.4, 1.6, 1.0, 0.7, and 0.3 for the first set of parameter values and 2.3, 1.6, 1.0, 0.6, and 0.3 for the sixth set. Thus, the weights we chose are approximately in the ratio of the standard deviations. The problem with this theoretical computation is that the expression for the variance is accurate only asymptotically, and our data have too few observations (especially in extreme error conditions) to be asymptotic. So additional work would be needed to derive expressions for the nonasymptotic case.
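The asymptotic calculation can be illustrated with a distribution whose quantiles and density are available in closed form. The sketch below uses an exponential distribution as a hypothetical stand-in for the diffusion model’s predicted distribution, so its numbers are not the diffusion values reported above. For the exponential, the ratio of the .5-quantile standard deviation to the q-quantile standard deviation works out to √((1 − q)/q), giving 3.0, 1.53, 1.0, 0.65, and 0.33 for the five quantiles: the same decreasing pattern, though not the same values.

```python
import math

def quantile_sd(q, n, quantile_fn, density_fn):
    """Asymptotic SD of the sample q-quantile: sqrt(q(1 - q) / (n f(x_q)^2))."""
    x_q = quantile_fn(q)
    return math.sqrt(q * (1.0 - q) / (n * density_fn(x_q) ** 2))

rate = 10.0  # hypothetical exponential with mean 0.1 sec

def quantile_fn(q):
    return -math.log(1.0 - q) / rate

def density_fn(x):
    return rate * math.exp(-rate * x)

qs = [0.1, 0.3, 0.5, 0.7, 0.9]
sds = [quantile_sd(q, 250, quantile_fn, density_fn) for q in qs]
ratios = [sds[2] / sd for sd in sds]  # .5-quantile SD divided by each quantile's SD
```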

Table 1.

Parameter Values for Simulations

| Parameter Set | a | Ter (sec) | η | sz | v1 | v2 | v3 | v4 |
|---|---|---|---|---|---|---|---|---|
| A | 0.08 | 0.300 | 0.08 | 0.02 | 0.40 | 0.25 | 0.10 | 0.00 |
| B | 0.08 | 0.300 | 0.16 | 0.02 | 0.40 | 0.25 | 0.10 | 0.00 |
| C | 0.16 | 0.300 | 0.08 | 0.02 | 0.30 | 0.20 | 0.10 | 0.00 |
| D | 0.16 | 0.300 | 0.16 | 0.02 | 0.30 | 0.20 | 0.10 | 0.00 |
| E | 0.16 | 0.300 | 0.08 | 0.10 | 0.30 | 0.20 | 0.10 | 0.00 |
| F | 0.16 | 0.300 | 0.16 | 0.10 | 0.30 | 0.20 | 0.10 | 0.00 |

Empirically, we calculated the standard deviations in quantile reaction times across subjects for a letter identification experiment (Thapar et al., 2002; see also Ratcliff & Rouder, 2000). Again, to represent the standard deviations as weights, we divided the standard deviation for the .5 quantile by each of the others. For three experimental conditions that spanned the range of accuracy values in the experiment (probability correct of .9 to .6), the ratios were the following: 1.3, 1.2, 1.0, 0.7, 0.4; 1.4, 1.2, 1.0, 0.8, 0.4; and 1.3, 1.1, 1.0, 0.8, 0.6. The squares of these ratios (relative variances) are not far from our selection of weights. Thus, given both the theoretical and the empirical calculations, we conclude that the weights we used (2, 2, 1, 1, and .5) were not unreasonable.
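The comparison between the squared empirical ratios and the chosen weights is simple arithmetic; the sketch squares the ratios reported above (each squared ratio is Var(.5)/Var(q), an inverse variance relative to the .5 quantile) and lines them up with the weights.

```python
# SD ratios (.5-quantile SD divided by each quantile's SD) for three conditions, from the text.
empirical_ratios = [
    [1.3, 1.2, 1.0, 0.7, 0.4],
    [1.4, 1.2, 1.0, 0.8, 0.4],
    [1.3, 1.1, 1.0, 0.8, 0.6],
]
chosen_weights = [2.0, 2.0, 1.0, 1.0, 0.5]
quantiles = [0.1, 0.3, 0.5, 0.7, 0.9]

# Squared ratio = Var(.5) / Var(q): inverse variance relative to the .5 quantile.
squared_ratios = [[round(r * r, 2) for r in row] for row in empirical_ratios]

# One tuple per quantile: (quantile, chosen weight, squared ratio in each of the three conditions).
comparison = list(zip(quantiles, chosen_weights, *squared_ratios))
```

The squared ratios are 1.69 to 1.96 for the .1 quantile, 1.21 to 1.44 for the .3 quantile, 0.49 to 0.64 for the .7 quantile, and 0.16 to 0.36 for the .9 quantile.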

Another issue concerning the optimality of the least squares method is that the data entering each term in the sums of squares should be independent of each other (see, e.g., Seber & Wild, 1989). If the data are not independent, all possible covariances among the quantities should be taken into account (Seber & Wild, 1989). In our case, it is clear that quantile reaction times for correct and error responses and accuracy values all covary. If a single parameter of the model is changed, all the quantile reaction times and accuracy values will change in a systematic way. Some of the covariances are known. For example, the asymptotic covariation between all the reaction time quantiles for one response (correct or error) is given by Kendall and Stuart (1977). But this is an asymptotic expression and would not apply accurately to error reaction times (for which there are few data points). Also, theoretical formulations for the covariations between correct and error reaction time quantiles and accuracy values are not available. It would be very difficult to find expressions for all the covariances required to produce an optimal version of the weighted least squares method. The weighted least squares method we employed should, therefore, be viewed as the kind of ad hoc method that is often used in fitting.
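For the quantiles of a single response type, the asymptotic covariance matrix has a known form: for q_i ≤ q_j, Cov(Q_i, Q_j) = q_i(1 − q_j)/(N f(x_i) f(x_j)), where x_i is the population quantile and f is the density. The sketch below computes it, again using a hypothetical exponential stand-in rather than the diffusion model. It covers only the within-response quantile covariances; no comparable expressions are given here for covariances involving accuracy or between correct and error quantiles.

```python
import math

def quantile_covariance(qs, n, quantile_fn, density_fn):
    """Asymptotic covariance matrix of sample quantiles for one response type.

    For q_i <= q_j: Cov(Q_i, Q_j) = q_i (1 - q_j) / (n f(x_i) f(x_j)).
    """
    f = [density_fn(quantile_fn(q)) for q in qs]
    size = len(qs)
    cov = [[0.0] * size for _ in range(size)]
    for i in range(size):
        for j in range(size):
            lo, hi = min(qs[i], qs[j]), max(qs[i], qs[j])
            cov[i][j] = lo * (1.0 - hi) / (n * (f[i] * f[j]))
    return cov

rate = 10.0  # hypothetical exponential stand-in (mean 0.1 sec)
cov = quantile_covariance([0.1, 0.3, 0.5, 0.7, 0.9], 250,
                          lambda q: -math.log(1.0 - q) / rate,
                          lambda x: rate * math.exp(-rate * x))
# Correlation between the .1 and .3 sample quantiles (about .51 here).
corr_01 = cov[0][1] / math.sqrt(cov[0][0] * cov[1][1])
```

The clearly nonzero correlation between neighboring quantiles illustrates why treating the quantile terms as independent is an approximation.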

To implement our weighted least squares method, the predicted reaction time for each of the quantiles must be computed, which requires the whole predicted cumulative reaction time distribution. We evaluated the predicted cumulative distribution at 400 reaction times and then used linear interpolation between adjacent values to determine the quantile reaction times. The SIMPLEX routine was used to minimize the weighted sum of squares, and the implementation ran at about the same speed as Ratcliff’s maximum likelihood program, that is, much slower than the chi-square method.
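Obtaining quantile reaction times from a tabulated cumulative distribution can be sketched as follows. The sketch assumes the tabulated function has already been normalized to the distribution of one response type (the defective F(t) divided by the predicted response probability), and it uses a hypothetical shifted-exponential CDF in place of the diffusion model’s, evaluated at 400 time points to match the text.

```python
import math

def quantiles_from_cdf(cdf, t_grid, probs):
    """Quantile RTs from a CDF tabulated on an increasing time grid, by linear interpolation.

    cdf should be normalized (reach 1); for one response type, pass F(t) / P(response).
    """
    values = [cdf(t) for t in t_grid]
    result = []
    for p in probs:
        for k in range(1, len(t_grid)):
            if values[k] >= p:
                t0, t1 = t_grid[k - 1], t_grid[k]
                c0, c1 = values[k - 1], values[k]
                # Linear interpolation between the two bracketing grid points.
                result.append(t0 + (p - c0) * (t1 - t0) / (c1 - c0) if c1 > c0 else t1)
                break
        else:
            raise ValueError(f"grid never reaches cumulative probability {p}")
    return result

def shifted_exp_cdf(t):
    # Hypothetical: Ter = 0.3 sec plus an exponential decision time with mean 0.1 sec.
    return 0.0 if t <= 0.3 else 1.0 - math.exp(-(t - 0.3) / 0.1)

grid = [0.3 + 2.0 * i / 399 for i in range(400)]  # 400 time points from 0.3 to 2.3 sec
qrts = quantiles_from_cdf(shifted_exp_cdf, grid, [0.1, 0.3, 0.5, 0.7, 0.9])
```

With a 5-msec grid spacing, the interpolated quantiles are within a small fraction of a millisecond of the exact values for this smooth CDF.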

In the weighted least squares method, accuracy is represented explicitly in the sum of squares, but it is not represented explicitly in the maximum likelihood and chi-square methods. In those methods, accuracy is represented by the predicted relative frequencies in the reaction time distributions for correct and error responses. For example, for 250 observations per condition and an accuracy value of .9, the total observed frequency for errors would be 25, and the total frequency for correct responses would be 225. In the fitting process, if the model predicted an accuracy of .8, it would overpredict errors (frequency of 50) and underpredict correct responses (frequency of 200). Then the fitting program would attempt to adjust these frequencies, subject to the other experimental conditions and other constraints.

PARAMETER VALUES

The parameter values were chosen to be representative of real experiments in which subjects are required to decide between two alternatives—for example, word or non-word in lexical decision or bright or dark in brightness discrimination. We simulated data for an experiment with four conditions, the conditions representing four levels of difficulty of a single independent variable, as, for example, in a lexical decision or recognition memory experiment with four levels of word frequency or in a two-choice signal detection experiment with four levels of brightness of the stimulus. We assumed that the levels of the variable are randomly assigned to trials in each experiment, so that subjects cannot anticipate which condition is to occur on any trial (see Ratcliff, 1978, Experiment 1). This means that subjects cannot adjust processing as a function of condition and, therefore, none of the parameters of the model except the parameter representing stimulus difficulty can change across levels of the manipulated variable.

The simulated data were generated from 12 different sets of parameter values, with the values chosen to span the ranges of values that are typical in fits of the diffusion model to real data. For the 6 sets shown in Table 1, the starting point z was midway between the two boundaries. The drift rates are all positive because, with the starting point midway between symmetric boundaries, negative drift rates would give the same results. (We also performed the same analyses with asymmetric boundaries; the results are qualitatively the same as those for the symmetric boundary case, and tables displaying the results can be found on Ratcliff’s or the Psychonomic Society’s Web pages.)

Ter, the parameter for the nondecision components of processing, was always set at 0.3 sec. This parameter largely determines the location of the leading edge of the reaction time distribution. When boundary separation is small, the leading edge is a little closer to Ter, and when boundary separation is large, the leading edge is a little farther from Ter. Changing the value of Ter does not change any of the other parameter estimates or the standard deviations in the parameter estimates, because a change in Ter shifts all the reaction times by the same fixed amount.

Drift rate (v) represents the quality of evidence driving the decision process—that is, the difficulty level of a stimulus. For the four levels of the independent variable, we selected four drift values that span the range from high accuracy (about 95% correct) to low accuracy (about 50% correct). The values for the four drift rates are different for different values of boundary separation because when boundary separation (a) is small, there is more chance that a process will hit the wrong boundary by mistake, and so a higher value of drift rate was used to produce the same high accuracy values as when boundary separation was larger.

We selected two values for variability in drift across trials (η, which is the standard deviation in a normal distribution) and two values for variability in starting point across trials (sz, which is the range in a uniform distribution), one relatively large and one relatively small, as compared with typical values obtained in fits to real data.

We used two values of a, 0.08 and 0.16, each with both symmetric and asymmetric boundaries. These values roughly bracket the values of boundary separation typically obtained in fits to real data.

Overall, the selected parameter values cover the range of values we have obtained when fitting the diffusion model to experimental data (Ratcliff, 2002; Ratcliff et al., 2002; Ratcliff & Rouder, 1998, 2000; Ratcliff et al., 2001; Ratcliff et al., 1999; Thapar et al., 2002).

EVALUATING FITTING METHODS

Our evaluation of the fitting methods will start by using models and simulated data with no contaminants or variability in Ter. Then we will introduce contaminants and report their effects on the fitting methods. We will then introduce corrections for contaminants in the fitting methods and will discuss their performance. Finally, we will introduce variability in Ter and will evaluate the performance of the methods first without and then with this variability explicitly modeled. This order also follows the chronology of our study of these issues.

We evaluated the methods by comparing the parameter values each method recovered from simulated data with the parameter values that were used to generate the data. We examined each method’s ability to recover the correct parameter values, whether the recovered values were biased away from the correct values in some consistent way, and the size of the standard deviations in the parameter values across fits to multiple simulated data sets. The standard deviations in the estimated parameter values are important for determining how much power is available for testing hypotheses about differences in parameter values across experimental conditions or subject populations. Also, the relative sizes of the standard deviations in the estimated parameter values from the different methods provide estimates of the relative efficiencies of the methods.

The diffusion model was used to produce the simulated empirical data as follows: Given a value for each of the diffusion model’s parameters, the model produces responses, each with its response time. For each set of parameter values for the model, 100 sets of simulated data were generated, each data set with either 250 or 1,000 observations for each of the four experimental conditions.
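The text does not specify how individual simulated trials were generated. One common approach, sketched here under stated assumptions, is an Euler approximation of the diffusion process with a small time step: drift for each trial drawn from a normal distribution with mean v and standard deviation η, starting point drawn uniformly over a range sz centered on a/2, within-trial standard deviation s = 0.1 (an assumed scaling convention), and a fixed Ter. The discrete step slightly overshoots the boundary, so the decision times are approximate. The function names are hypothetical.

```python
import math
import random

def simulate_trial(v, a, ter, eta, sz, s=0.1, dt=0.001, rng=random):
    """Simulate one diffusion-model trial by Euler steps.

    Returns (response, rt): response 1 for the upper (correct) boundary,
    0 for the lower (error) boundary; rt in seconds.
    """
    drift = rng.gauss(v, eta)                        # across-trial drift, normal with SD eta
    x = rng.uniform(a / 2 - sz / 2, a / 2 + sz / 2)  # starting point, uniform with range sz
    t = 0.0
    noise_sd = s * math.sqrt(dt)                     # within-trial noise per step
    while 0.0 < x < a:
        x += drift * dt + rng.gauss(0.0, noise_sd)
        t += dt
    return (1 if x >= a else 0), ter + t

def simulate_condition(n_obs, v, a, ter, eta, sz, rng):
    return [simulate_trial(v, a, ter, eta, sz, rng=rng) for _ in range(n_obs)]

rng = random.Random(1)
# Parameter set A of Table 1, easiest condition (v1 = 0.40), 250 observations.
data = simulate_condition(250, 0.40, 0.08, 0.300, 0.08, 0.02, rng)
accuracy = sum(r for r, _ in data) / len(data)
```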

All three methods of fitting the model to data involve computing some statistic (that is, some objective function) to represent how well the model fits the data. A minimization routine (see Appendix B) begins with starting values of the model’s parameters and then adjusts them to maximize or minimize (depending on the method) the objective function until the best fit is obtained between the predicted and the simulated accuracy values and reaction times. This process of finding the best-fitting parameter values was repeated for each of the 100 sets of simulated data, giving 100 sets of best-fitting parameter values, from which we calculated the mean and standard deviation of the 100 estimates for each parameter. This whole process was repeated for each of the three fitting methods, and then it was all repeated again with 100 sets of data generated from a different set of parameters. Altogether, 12 different sets of parameter values (6 with symmetric boundaries and 6 with asymmetric boundaries, the latter not reported here) were used to span the range of parameter values typical of fits of the diffusion model to data in past studies (Ratcliff, 2002; Ratcliff & Rouder, 1998, 2000; Ratcliff et al., 2001; Ratcliff et al., 1999; Thapar et al., 2002; other unpublished studies).
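The recovery procedure (simulate many data sets, fit each one, then summarize the estimates by their mean and standard deviation) can be sketched generically. To keep the example fast and self-contained, the model is a hypothetical one-parameter stand-in, 0.3 sec plus an exponential with mean τ, fitted by maximum likelihood with the shift treated as known; it is not the diffusion model.

```python
import random
import statistics

def recovery_study(simulate, fit, true_params, n_datasets=100, n_obs=250, seed=0):
    """Mean and SD of each parameter estimate across simulated data sets."""
    rng = random.Random(seed)
    estimates = [fit(simulate(true_params, n_obs, rng)) for _ in range(n_datasets)]
    return [(statistics.mean(col), statistics.stdev(col)) for col in zip(*estimates)]

def simulate_exponential(params, n_obs, rng):
    # Hypothetical stand-in model: RT = 0.3 sec + exponential with mean tau.
    (tau,) = params
    return [0.3 + rng.expovariate(1.0 / tau) for _ in range(n_obs)]

def fit_exponential(rts):
    # Maximum likelihood estimate of tau when the 0.3-sec shift is known.
    return (statistics.mean(rts) - 0.3,)

summary_250 = recovery_study(simulate_exponential, fit_exponential, (0.1,), n_obs=250)
summary_1000 = recovery_study(simulate_exponential, fit_exponential, (0.1,), n_obs=1000)
```

With this toy model, the standard deviation of the estimates shrinks by roughly a factor of 2 when the number of observations per data set goes from 250 to 1,000, as expected from 1/√N scaling.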

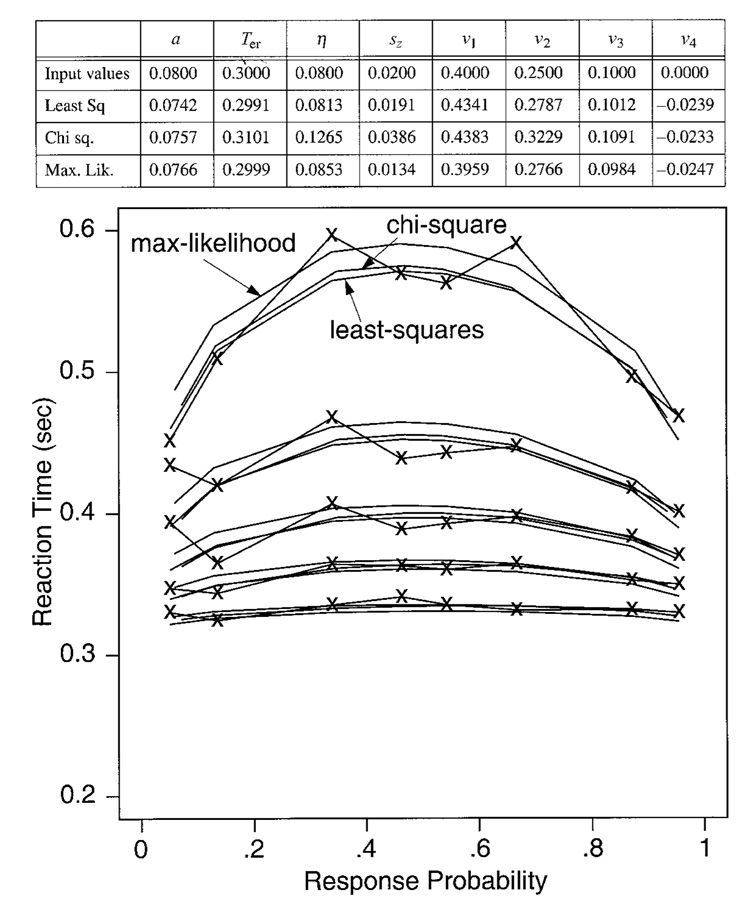

For all the 100 sets of data for all 12 sets of parameter values, Ratcliff examined the maximum likelihood, chi-square, and the weighted least squares methods, and Tuerlinckx examined the maximum likelihood method. Ratcliff examined the chi-square method with corrections for contaminants and variability in Ter, and Tuerlinckx examined the maximum likelihood method with these corrections.

The mean values of the best-fitting parameters and their standard deviations allow the three fitting methods to be compared on the basis of how well they allow recovery of the parameter values used to generate the simulated data, how variable the parameter estimates are across sets of data, and whether they produce systematic biases away from the true parameter values. For each set of simulations, we will discuss the overall behavior of the methods in terms of the results from the simulations. But only in the more important cases will we present tables of the mean values of the parameter estimates and the standard deviations in the estimates for the 100 sets of simulated data that were generated for each set of parameter values.

The conclusions of the fitting exercises are complex; each fitting method has advantages and disadvantages. To anticipate, we list here several main conclusions, with the caveat that any one set of simulations may depart from the main results. (1) When the simulated data contained contaminants, as real data often do, the maximum likelihood method was extremely sensitive to them. Although we developed procedures to correct for some classes of contaminants, even a few contaminants that could not be corrected for were sufficient to produce poor fits and poor parameter recovery. However, in the absence of contaminants, the maximum likelihood method produced unbiased parameter estimates and had the smallest standard deviations in the estimates of any of the methods. (2) The chi-square method was much more robust than the maximum likelihood method. A few uncorrected contaminants had little effect on the results of fitting: parameters were estimated with only small biases away from the values used to generate the data, and their standard deviations were only somewhat larger than those obtained with the maximum likelihood method. In addition, implementations of the chi-square method are much faster than implementations of either the maximum likelihood method or the weighted least squares method. (3) The weighted least squares method produced mean parameter estimates about as biased as those of the chi-square method, but with larger standard deviations. However, it was the most robust method in the face of contaminants. It could produce reasonable fits even in situations in which the other methods failed dramatically, although the recovered parameters were not the same as those used to generate the diffusion process portion of the data. The weighted least squares method is most useful as a guide to whether the diffusion model is capable of fitting a data set.

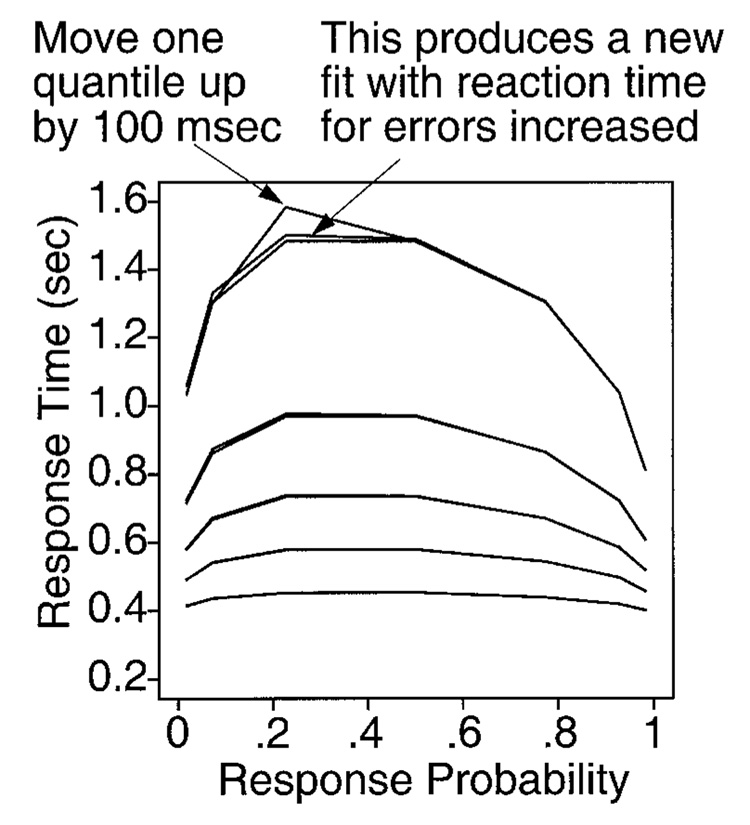

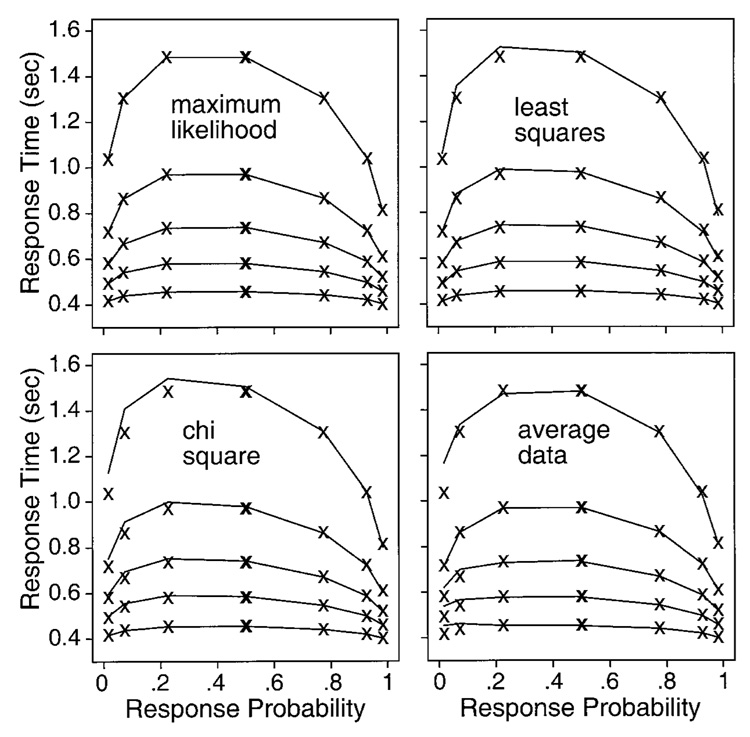

QUANTILE PROBABILITY FUNCTIONS

Fits of the diffusion model to data are complicated to display, because the data include two dependent variables—accuracy rates and correct and error reaction times—as well as distributions of reaction time. Traditionally, accuracy, mean reaction time, and reaction time distributions are all presented separately as a function of the conditions of an experiment. Here, we show a method of presenting all the dependent variables on the same plot so that their joint behavior can be better examined.

In earlier research, latency probability functions have been used to display the joint behavior of mean reaction time and accuracy. They are constructed by plotting mean reaction time on the y-axis and probabilities of correct and error responses on the x-axis (Audley & Pike, 1965). Responses with probabilities greater than .5 are typically correct responses, and so, data from correct responses typically fall to the right of the .5 point on the x-axis. Responses with probabilities less than .5 are typically errors and, so, typically fall to the left. Latency probability functions capture the joint behavior of reaction time and response probability, how fast the two change across experimental conditions, and how fast they change relative to each other. However, latency probability functions do not display information about reaction time distributions.

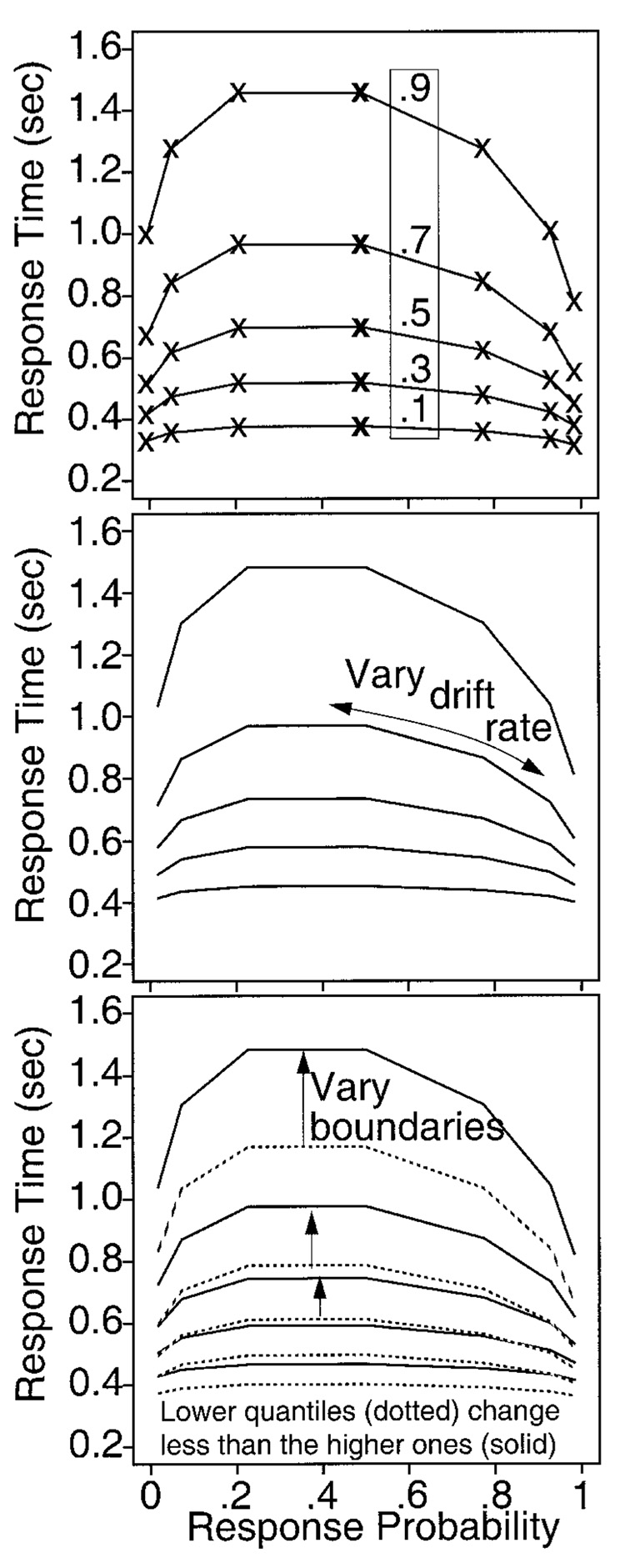

Ratcliff (2001) generalized latency probability functions to quantile probability functions, the method of presenting data that we use in this article. A quantile probability function plots quantiles of the reaction time distribution on the y-axis against probabilities of correct and error responses on the x-axis. In Figure 2, five quantiles are plotted, the .9, .7, .5, .3, and .1 quantiles, as labeled in the vertical rectangle, for four experimental conditions. For a given experimental condition with a probability of correct responses of, say, .8 (to the right of the vertical rectangle), the five quantile points form a vertical line above .8 on the x-axis. The spread among the points shows the shape of the distribution. The lower quantile points map the initial portion of the reaction time distribution, and the higher quantiles map the tail of the distribution. Because reaction time distributions are usually right skewed, the higher quantile points are spread apart more than the lower quantile points. Lines are drawn to connect the quantiles of the experimental conditions, one line to connect the first quantiles of all the experimental conditions, another line to connect the second quantiles, and so on. If, as is usually the case, responses with a probability of greater than .5 are correct responses and responses with a probability of less than .5 are error responses, the mirror image points on the x-axis around the probability of .5 point allow comparisons of the shapes of correct and error response time distributions. In the example presented in Figure 2, in the lowest accuracy condition, both correct and error responses have a probability of .5, and so their quantiles fall on top of each other. Also, comparing the quantile points across different probability values shows how distribution shape changes as a function of experimental condition. 
For example, if the whole distribution (all quantiles) becomes slower and slower as the difficulty of the experimental conditions increases (and probability of a correct response decreases), this is easily seen as parallel changes in all the quantiles. But if instead, the distribution becomes more skewed as the difficulty increases, the first quantiles for all the conditions will change little across conditions, and the last (longest) quantiles will change most, as is the case in the top panel of Figure 2.
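The points of a quantile probability function for a single condition can be computed as below: for each response type, the x-coordinate is its response proportion and the y-coordinates are its .1, .3, .5, .7, and .9 quantile reaction times. The sketch uses Python’s `statistics.quantiles` with the `inclusive` method; other quantile definitions give slightly different values for small samples. The five-response minimum mirrors the rule used for the chi-square and weighted least squares methods, and the function name and data are hypothetical.

```python
import statistics

def quantile_probability_points(responses, rts, min_count=5):
    """Points for one condition: [(response_proportion, [q.1, q.3, q.5, q.7, q.9]), ...].

    responses: 1 for correct, 0 for error; rts: matching reaction times (sec).
    Correct responses come first, then errors.
    """
    points = []
    n = len(responses)
    for label in (1, 0):
        times = sorted(t for r, t in zip(responses, rts) if r == label)
        if len(times) < min_count:
            continue  # too few responses to estimate five quantiles
        deciles = statistics.quantiles(times, n=10, method="inclusive")  # .1, .2, ..., .9
        points.append((len(times) / n, [deciles[0], deciles[2], deciles[4], deciles[6], deciles[8]]))
    return points

# Hypothetical condition at chance accuracy: correct and error points fall at .5.
responses = [1, 1, 1, 1, 1, 0, 0, 0, 0, 0]
rts = [0.4, 0.5, 0.6, 0.7, 0.8, 0.5, 0.6, 0.7, 0.8, 0.9]
points = quantile_probability_points(responses, rts)
```

Plotting each response type’s quantiles above its proportion, for every condition, and connecting like quantiles across conditions gives the quantile probability function.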

Figure 2.

An illustration of quantile probability plots. The top panel shows quantile probability functions, the middle panel illustrates how the quantiles change as drift rate changes, and the bottom panel illustrates the effect of changing boundary separation.

The parameters of the diffusion model each have a systematic effect on the quantile probability function. Varying drift rate moves the points left or right along the quantile probability function. Changes in drift rate can produce only a small change in the lowest quantile but a large change in the highest quantile (Figure 2, middle panel); that is, the distribution skews a lot while its leading edge shifts only a little. Increasing boundary separation both shifts and skews the distribution: the lowest quantile increases, and the highest quantile increases more (Figure 2, bottom panel).

If starting point variability across trials (sz) increases, the quantiles to the left of a probability of .5 (typically, errors) decrease, and they decrease most on the extreme left side of the plot. This can lead to errors that are faster than correct responses in the most accurate conditions. If variability in drift rate across trials (η) is increased, the plot becomes more asymmetric. With η = 0, sz = 0 and z = a/2, the quantile probability function is symmetric. As η is increased, the peak is lowered a little, and it moves to the left as error responses slow relative to correct responses.

When the points plotted on the quantile probability function are from an experiment in which subjects cannot change response criteria or strategies between experimental conditions, as in the experiments simulated here for tests of the fitting methods, then for the diffusion model, the shapes of the lines that connect the quantiles in the quantile probability plot are completely determined by just three parameters: a, η, and sz. This means that, in fitting the model, only drift rate can vary as a function of condition. The quantile probability function is what is called a parametric plot, with drift rate the parameter of the plot. Thus, besides providing a useful summary of the joint behavior of reaction time distribution shape and accuracy, the quantile probability function provides a stringent visual demonstration of how well the diffusion model fits the data. (For examples of patterns of data the diffusion model cannot fit, illustrated using quantile probability functions, see Ratcliff, 2002.)

Variability in Simulated Data

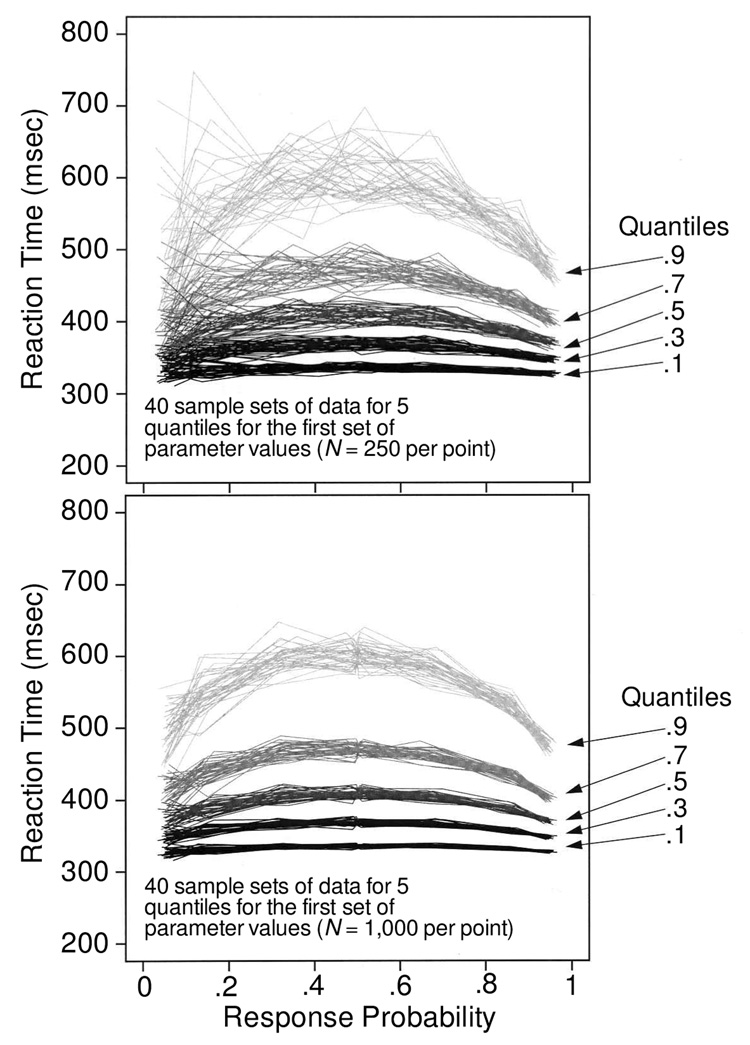

Quantile probability functions provide a vehicle with which to illustrate variability in the simulated data we used to test the three fitting methods. Variability in the data provides a backdrop for understanding why the variability in estimated parameters that we will present later is as large or as small as it is.

Figure 3 shows quantile probability functions for data simulated with the set of parameters shown in the first line of Table 1. To reduce clutter, only the quantile probability functions for 40 sets of data, not the full 100 sets, are shown. The “smears” on the figure are the 40 overlapping lines at each of five quantiles. The figure shows how much variability there is in the quantile points and that there is more variability when the quantiles are derived from only 250 observations per condition (top panel) than from 1,000 observations per condition (bottom panel). (The standard deviations of the quantile reaction times are inversely proportional to the square root of N, so quadrupling the number of observations halves the spread: the spread in the bottom panel is about half that in the top panel. The figures show how large the spread is for these sample sizes and these values of accuracy.)

Figure 3.

Quantile probability functions for 40 sets of simulated data for 250 observations per condition (top panel) and 1,000 observations per condition (bottom panel). These illustrate the variability in simulated data for the five different quantiles and how the variability changes, going from high-accuracy correct responses (right-hand side of the figure) to errors on the left-hand side of the figure.

With 250 observations per condition, the .9 quantile varies around its mean by as much as 300 msec (for errors at the extreme left of the figure, which come from conditions with high accuracy), and it varies by as much as 100 msec for correct responses from conditions with intermediate accuracy (in the middle of the figure). The .1 quantile varies little across the 40 sets of data, except for errors in the conditions with high accuracy. With 1,000 observations per condition, the location of the quantiles is much tighter, but even so, the .9 quantile for error responses in the high-accuracy conditions varies by as much as 100 msec.

It is possible to understand from these figures which parameters have greater or lesser variability associated with them. Ter and a are determined to a large degree by the position of the .1 quantile. The figures show that the .1 quantile is quite well located, without much variability. On the other hand, η and sz are largely determined by error reaction times, and these have a large amount of variability associated with them. For example, with 250 observations per condition (e.g., Figure 3, top panel), simulated data generated from parameters that should produce slow errors can easily, by chance, produce errors as fast as correct responses. This would produce a fitted value of variability in drift across trials (η) of zero. This means that with 250 observations per condition and η = 0.08, it is easily possible (e.g., 5% or 10% of the time) to obtain a set of data that produces a fitted value of η near zero. Therefore, we expect the estimates of η to be highly variable when fitting simulated data.

Figure 3 shows the variability that simulated data have associated with them. If the diffusion model is correct—that is, if real data are generated from a diffusion process—the same variability will be associated with real data. This is why we provide the standard deviations in the parameter values we obtain from fitting simulated data. These standard deviations give some reasonable idea of what we can expect from parameter estimates based on real data.

PARAMETER ESTIMATES FROM THE MAXIMUM LIKELIHOOD METHOD

For all three methods of fitting, we will report and discuss results for fitting the simulated data that were generated from the six sets of parameter values for which the starting point is equidistant from the boundaries (Table 1). We will report the means of the parameter estimates across the 100 data sets and the standard deviations in the estimates. In the series of fits described first, results will be shown and discussed for the data sets with 250 observations per condition. It turns out that the standard deviations for 1,000 observations per condition are almost exactly half as large as those for 250 observations per condition (i.e., the standard deviations are inversely proportional to the square root of the number of observations, and √(1,000/250) = 2). Therefore, we will later discuss the results with 1,000 observations per condition only briefly. The next sections will present simulated data and fitting methods with no contaminants and with no variability in Ter.

Means and Standard Deviations of Parameter Estimates

Recall that Ratcliff and Tuerlinckx obtained probability densities for reaction time distributions in different ways and used different fitting algorithms, which provides a check on the accuracy of the methods. The means of their parameter estimates agreed within 1% of each other, except for the variability parameters (η and sz), which agreed within 5%. For each parameter and each of the six sets of parameter values, we computed the correlation between Ratcliff’s and Tuerlinckx’s estimates across the 100 data sets. Averaged across the six sets, the correlations were about .9, except that the correlation was .72 for Ter and .58 for sz. The low correlations for the variability parameters were due mainly to the set of parameters for which η and sz were both large. Although the correlations for Ter were low, the standard deviations in Ter were small (3–10 msec), so the low correlations do not indicate large differences between Ratcliff’s and Tuerlinckx’s estimates of Ter. Ratcliff’s values will be reported in the tables that immediately follow, and Tuerlinckx’s values will be reported for the investigations of contaminants and variability in Ter that will be presented later.

Table 2 contains the means and standard deviations of the parameter estimates that were recovered from the 100 sets of data simulated for each set of parameters in Table 1, for the data sets with 250 observations per condition. Overall, the means of the parameter estimates are unbiased—that is, they are close to the true values of the parameters with which the simulated data were generated. The standard deviations in the estimates are small, averaging about 4% of the mean for a, 3–10 msec for Ter, about 10% of the mean for drift rates (v), but anywhere from 20% to 70% of the mean for standard deviation in drift across trials (η). When sz was small (0.02), its standard deviation was about the same size as the mean, but when it was larger, it was about 30% of the mean. The relative sizes of these differences among standard deviations are consistent across all three fitting methods. Below, we will describe the results for each of the parameters in turn.

Table 2.

Means and Standard Deviations of Parameter Values Recovered From the Maximum Likelihood Fitting Method (N = 250 per Condition)

| Statistic | Parameter Set | a | Ter (s) | η | sz | v1 | v2 | v3 | v4 |
|---|---|---|---|---|---|---|---|---|---|
| M | A | 0.0793 | 0.3010 | 0.0859 | 0.0206 | 0.4180 | 0.2612 | 0.1061 | −0.0021 |
| | B | 0.0788 | 0.3009 | 0.1596 | 0.0178 | 0.4057 | 0.2543 | 0.0958 | 0.0032 |
| | C | 0.1598 | 0.3039 | 0.0794 | 0.0220 | 0.3064 | 0.2024 | 0.1018 | −0.0007 |
| | D | 0.1590 | 0.2998 | 0.0762 | 0.1095 | 0.2963 | 0.1985 | 0.1002 | −0.0019 |
| | E | 0.1618 | 0.3055 | 0.1696 | 0.0319 | 0.3146 | 0.2092 | 0.1039 | −0.0007 |
| | F | 0.1602 | 0.3000 | 0.1605 | 0.1068 | 0.3027 | 0.2008 | 0.0986 | 0.0025 |
| SD | A | 0.0023 | 0.0029 | 0.0519 | 0.0144 | 0.0402 | 0.0292 | 0.0220 | 0.0195 |
| | B | 0.0029 | 0.0029 | 0.0496 | 0.0159 | 0.0442 | 0.0295 | 0.0218 | 0.0238 |
| | C | 0.0058 | 0.0080 | 0.0225 | 0.0255 | 0.0269 | 0.0194 | 0.0143 | 0.0104 |
| | D | 0.0076 | 0.0045 | 0.0291 | 0.0372 | 0.0294 | 0.0229 | 0.0176 | 0.0139 |
| | E | 0.0078 | 0.0097 | 0.0297 | 0.0313 | 0.0375 | 0.0299 | 0.0204 | 0.0143 |
| | F | 0.0077 | 0.0060 | 0.0295 | 0.0327 | 0.0325 | 0.0273 | 0.0227 | 0.0153 |
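The percentages quoted above can be recomputed directly from Table 2. The sketch below transcribes the table into NumPy arrays and prints, for each parameter, the range over parameter sets A through F of the coefficient of variation (SD divided by mean); v4 is left out because its true value is zero. The variable names are illustrative only.

```python
import numpy as np

# Means (M) and standard deviations (SD) transcribed from Table 2
# (maximum likelihood fits, N = 250 per condition). Rows are sets A-F.
PARAMS = ["a", "Ter", "eta", "sz", "v1", "v2", "v3", "v4"]
MEANS = np.array([
    [0.0793, 0.3010, 0.0859, 0.0206, 0.4180, 0.2612, 0.1061, -0.0021],
    [0.0788, 0.3009, 0.1596, 0.0178, 0.4057, 0.2543, 0.0958, 0.0032],
    [0.1598, 0.3039, 0.0794, 0.0220, 0.3064, 0.2024, 0.1018, -0.0007],
    [0.1590, 0.2998, 0.0762, 0.1095, 0.2963, 0.1985, 0.1002, -0.0019],
    [0.1618, 0.3055, 0.1696, 0.0319, 0.3146, 0.2092, 0.1039, -0.0007],
    [0.1602, 0.3000, 0.1605, 0.1068, 0.3027, 0.2008, 0.0986, 0.0025],
])
SDS = np.array([
    [0.0023, 0.0029, 0.0519, 0.0144, 0.0402, 0.0292, 0.0220, 0.0195],
    [0.0029, 0.0029, 0.0496, 0.0159, 0.0442, 0.0295, 0.0218, 0.0238],
    [0.0058, 0.0080, 0.0225, 0.0255, 0.0269, 0.0194, 0.0143, 0.0104],
    [0.0076, 0.0045, 0.0291, 0.0372, 0.0294, 0.0229, 0.0176, 0.0139],
    [0.0078, 0.0097, 0.0297, 0.0313, 0.0375, 0.0299, 0.0204, 0.0143],
    [0.0077, 0.0060, 0.0295, 0.0327, 0.0325, 0.0273, 0.0227, 0.0153],
])

# Coefficient of variation for the first seven parameters (v4 excluded,
# since dividing by a mean near zero is meaningless).
cv = SDS[:, :7] / MEANS[:, :7]

for name, col in zip(PARAMS[:7], cv.T):
    print(f"{name:>4}: {col.min():.0%} to {col.max():.0%} of the mean")
```

The printout shows the same ordering described in the text: a and Ter are tightly estimated, drift rates less so, and η and sz much less so.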

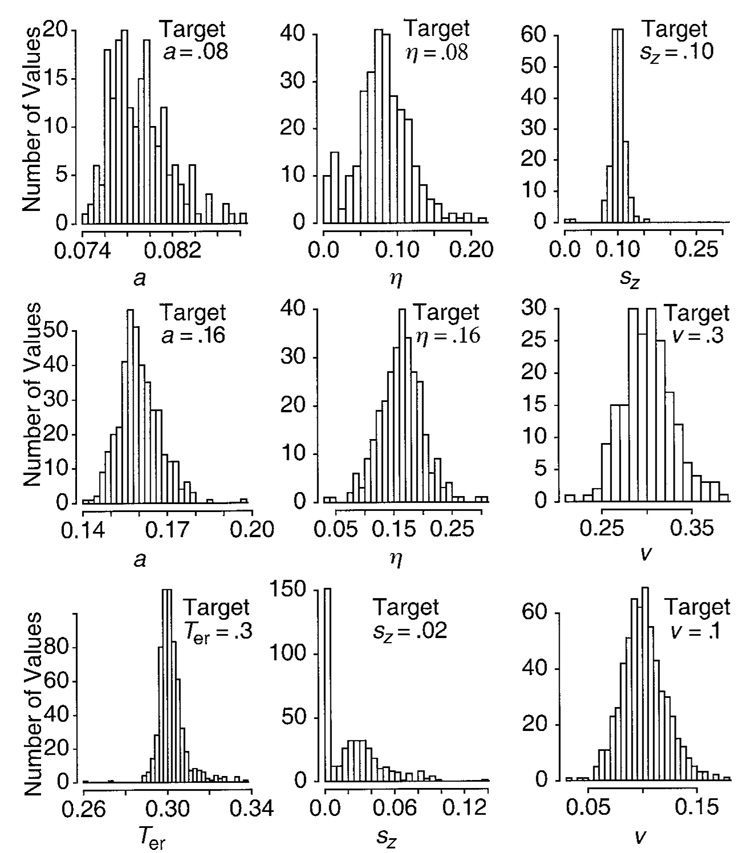

To show how the parameters vary across the random samples (the 100 data sets), Figure 4 presents nine histograms of the estimated parameter values. For two of the sets of parameter values used to generate the simulated data, a was 0.08. The first histogram (top left) shows all the estimates of a from the data generated with both of these sets. The histogram just below it shows the estimates of a for the data generated from the four sets of parameter values with a = 0.16. Before grouping the two sets of data for which a = 0.08 and the four for which a = 0.16, we made sure that there were no systematic differences among them. We used similar groupings for the histograms of the other parameters, always first checking that the estimates did not vary systematically as a function of the other parameter values.

Figure 4.

Histograms of the parameter values recovered from maximum likelihood fits of the diffusion model to simulated data (generated with the parameters in Table 1).

For the boundary separation parameter a, all the means of the estimates shown in Table 2 are within 4% of the target value (either 0.08 or 0.16), less than one standard deviation away. When the target value was 0.08, the distribution was only slightly skewed to the right. When the target value was 0.16, the distribution was symmetric and roughly normal. Thus, the fitting method recovers parameter values near the true values, with neither large deviations nor a bias toward either side of the target value.

The means of the estimates for Ter are also near the true value (300 msec). The histogram for the values of Ter (Figure 4, bottom leftmost panel) is symmetric around the true value, although there are a few straggling values in the tail. The standard deviation in the estimates is reasonably small, always less than about 10 msec: about 3 msec when boundary separation (a) is small and 5–10 msec when it is larger. This is because, when a is small, the .1 quantile is shorter, closer to the 300-msec value of Ter, and less variable, so Ter is more tightly constrained and its estimate has a smaller standard deviation. The same explanation holds for large versus small sz: With a large sz, more processes finish with reaction times near Ter than with a small sz, so the .1 quantile again lies closer to Ter.
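The link between boundary separation and the .1 quantile can be checked with a rough simulation. The sketch below assumes a plain Wiener diffusion with the conventional scaling s = 0.1, a starting point at a/2, a single drift rate of 0.3, Ter = 0.3 s, and no across-trial variability in drift, starting point, or Ter. It uses a simple Euler approximation, which introduces a small discretization bias, so the numbers are illustrative only. The function name `simulate_rts` is hypothetical.

```python
import numpy as np

def simulate_rts(a, v, ter=0.3, s=0.1, dt=0.0005, n=4000, seed=1):
    """Euler simulation of a Wiener diffusion starting at z = a/2 with
    absorbing boundaries at 0 and a. Returns reaction times (decision time
    plus Ter) for all trials, with both responses pooled."""
    rng = np.random.default_rng(seed)
    x = np.full(n, a / 2.0)           # current evidence on each trial
    t = np.zeros(n)                   # elapsed decision time on each trial
    active = np.ones(n, dtype=bool)   # trials that have not yet hit a boundary
    while active.any():
        k = int(active.sum())
        x[active] += v * dt + s * np.sqrt(dt) * rng.standard_normal(k)
        t[active] += dt
        # A trial stops as soon as its path leaves the interval (0, a).
        active &= (x > 0.0) & (x < a)
    return t + ter

for a in (0.08, 0.16):
    rt = simulate_rts(a, v=0.3)
    q10 = np.quantile(rt, 0.1)
    print(f"a = {a:.2f}: .1 quantile = {q10:.3f} s "
          f"({1000 * (q10 - 0.3):.0f} ms above Ter)")
```

With the smaller boundary separation, the .1 quantile sits much closer to Ter, which is the property the text relies on.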

The means of the estimates for drift rates are typically within 6% of the true values, with no systematic bias, and they do not vary systematically with the other parameters of the model (we show histograms only for drift rates of 0.3 and 0.1, because they are representative of all four drift rates). The standard deviations in the estimates of drift rates are about 10% of the mean for high drift rates and about 20% of the mean for low drift rates (disregarding the drift rate of zero). The standard deviation in drift rates is larger for the smaller value of boundary separation a than for the larger value. This is because the quantile probability function is flatter for the smaller value of a: Reaction times do not vary much across experimental conditions, so drift rates are constrained mainly by their position on the x-axis (accuracy) and less by their position on the y-axis (reaction time). For the larger boundary separation, the standard deviation in drift rates is also larger when the variability in drift across trials (η) is larger, again because the quantile probability functions are flatter when η is large.
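A rough way to see why reaction times vary less across conditions when a is small is to compute, for a simple Wiener process, the probability of reaching the upper boundary and the mean decision time in closed form. The sketch assumes s = 0.1, an unbiased starting point z = a/2, no across-trial variability, and four illustrative drift rates (0.1 to 0.4) that are not taken from Table 1. It uses the mean decision time as a stand-in for the full quantile probability function, and the function name `wiener_summary` is hypothetical.

```python
import math

def wiener_summary(v, a, s=0.1):
    """Return (P(upper boundary), mean decision time in s) for a Wiener process
    with drift v and diffusion coefficient s, starting at z = a/2, with
    absorbing boundaries at 0 and a. Both responses are pooled in the mean."""
    z = a / 2.0
    if v == 0:
        return 0.5, z * (a - z) / s**2
    k = 2.0 * v / s**2
    p_upper = (1.0 - math.exp(-k * z)) / (1.0 - math.exp(-k * a))
    # Optional stopping: E[X_T] - z = v * E[T], where X_T is either a or 0.
    mean_dt = (a * p_upper - z) / v
    return p_upper, mean_dt

drifts = [0.1, 0.2, 0.3, 0.4]  # illustrative values only
for a in (0.08, 0.16):
    results = [wiener_summary(v, a) for v in drifts]
    probs = [p for p, _ in results]
    times_ms = [1000.0 * t for _, t in results]
    print(f"a = {a:.2f}: P(upper) from {min(probs):.2f} to {max(probs):.2f}, "
          f"mean decision time spans {max(times_ms) - min(times_ms):.0f} ms")
```

Across the same drift rates, the mean decision time changes by far fewer milliseconds when a = 0.08 than when a = 0.16, so with small a the drift rates must be pinned down mostly by accuracy.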

The two variability parameters (η and sz) are estimated considerably less accurately than the other parameters (this is true for most models that use variability parameters; cf. Ratcliff, 1979). Although there do not appear to be any systematic biases in η away from the true values (0.16 and 0.08), the large standard deviations mean that any systematic bias would be hidden in the variability. The standard deviation in the estimate of η varies from about 0.03 to about 0.05, 20%–60% of the mean. The reason for the large variability in η is that its estimate is based on error reaction times, which are highly variable (see Figure 3) because there are often few of them (see Ratcliff et al., 1999). Thus, for some simulated data sets, the best-fitting value of η is near zero, and for others it is over twice as large as the true value used to generate the data. This is shown clearly in the histograms in Figure 4. For η = 0.08, there are a number of estimates near zero; these come from simulated data for which boundary separation a is 0.08. For η = 0.16, the distribution of estimates is narrower and more symmetric. The standard deviation also varies with boundary separation: When a is 0.08, it is larger, around 0.05, and when a is 0.16, it is smaller, around 0.03. Thus, the larger the value of a, the better the estimate of η.

The quality of the estimates of the parameter sz depends on its true value. When the value used to generate the data is small (0.02), an interval of two standard deviations around the mean includes both zero and a value twice as large as the true value (see the histogram in Figure 4). This extreme variability arises because the estimate of sz is determined by error reaction times in conditions with high accuracy, in which there are relatively few errors. When the true value of sz is 0.10, the estimates are near the true value, because the effect of sz on error reaction times is larger and so is less likely to be masked by random variation in the data. The distribution of estimated values for sz = 0.10 is symmetric (see the histogram in Figure 4), but there are still two values near zero.
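The two-standard-deviation intervals can be computed from Table 2. In the sketch below, the assignment of sets A, B, C, and E to a true sz of 0.02 and of sets D and F to 0.10 is inferred from the means in Table 2, because Table 1 is not reproduced in this section.

```python
# (mean, SD, inferred true value) of the sz estimates for each parameter set,
# taken from Table 2.
SZ = {
    "A": (0.0206, 0.0144, 0.02),
    "B": (0.0178, 0.0159, 0.02),
    "C": (0.0220, 0.0255, 0.02),
    "D": (0.1095, 0.0372, 0.10),
    "E": (0.0319, 0.0313, 0.02),
    "F": (0.1068, 0.0327, 0.10),
}

intervals = {}
for name, (mean, sd, true_value) in SZ.items():
    lower, upper = mean - 2 * sd, mean + 2 * sd   # mean +/- 2 SD
    intervals[name] = (lower, upper)
    print(f"{name}: [{lower:.4f}, {upper:.4f}]  "
          f"contains 0: {lower <= 0.0}, "
          f"contains 2x true value: {lower <= 2 * true_value <= upper}")
```

For every set with a small sz, the interval spans both zero and twice the true value; for the two sets with sz = 0.10, it excludes zero.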

In sum, the parameters a, Ter, and drift rates are estimated quite well, within 10% of the mean parameter value. For each of these parameters, the mean of the estimates from the 100 data sets falls close to the true value, there is no consistent bias toward values larger or smaller than the true value, and the standard deviation among the estimates is small relative to the size of the estimated value. But the variability parameters, η and sz, are estimated much more poorly, with standard deviations of 20%–70% of the mean parameter value. If good estimates of these parameters are needed, sample size must be increased by running experiments with many sessions per subject.
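As a rough guide to how much more data would be needed, one can assume that the standard deviation of an estimate shrinks in proportion to 1/√N. This is an asymptotic approximation that may hold only roughly at these sample sizes. The sketch uses the Table 2 value for η in set A and a hypothetical target precision; the helper `required_n` is hypothetical.

```python
import math

def required_n(n_current, sd_current, sd_target):
    """Observations per condition needed to shrink an estimate's SD from
    sd_current to sd_target, assuming SD is proportional to 1 / sqrt(N)."""
    return math.ceil(n_current * (sd_current / sd_target) ** 2)

# Set A, eta: SD = 0.0519 at N = 250 (Table 2). Hypothetical goal: an SD of
# 10% of the true value 0.08, i.e. 0.008.
print(required_n(250, 0.0519, 0.008))
```

Because the required N grows with the square of the desired reduction in SD, even modest gains in precision for η and sz call for many more observations per condition.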

Correlations Among Parameter Values

A potentially major problem in recovering parameters for a model is that the value of the estimate for one parameter may be significantly correlated with the value of another. In attempting to find the best-fitting parameter values, given the variability in the data, a fitting method may trade the value of one parameter off against the value of another. Using results from the 100 fits to the simulated data, we can investigate how serious this problem is.

This issue is especially critical when testing for differences among parameter values across experimental conditions. A larger value of a parameter in one condition than in another could be due to random variation, or it could be due to a genuine difference between the two conditions. If the other parameters of the model covary with the parameter at issue in the way expected from the model (that is, in the way the estimates trade off against one another) and the difference is just barely significant, the observed difference is less likely to be real than if the other parameters do not covary in this way.

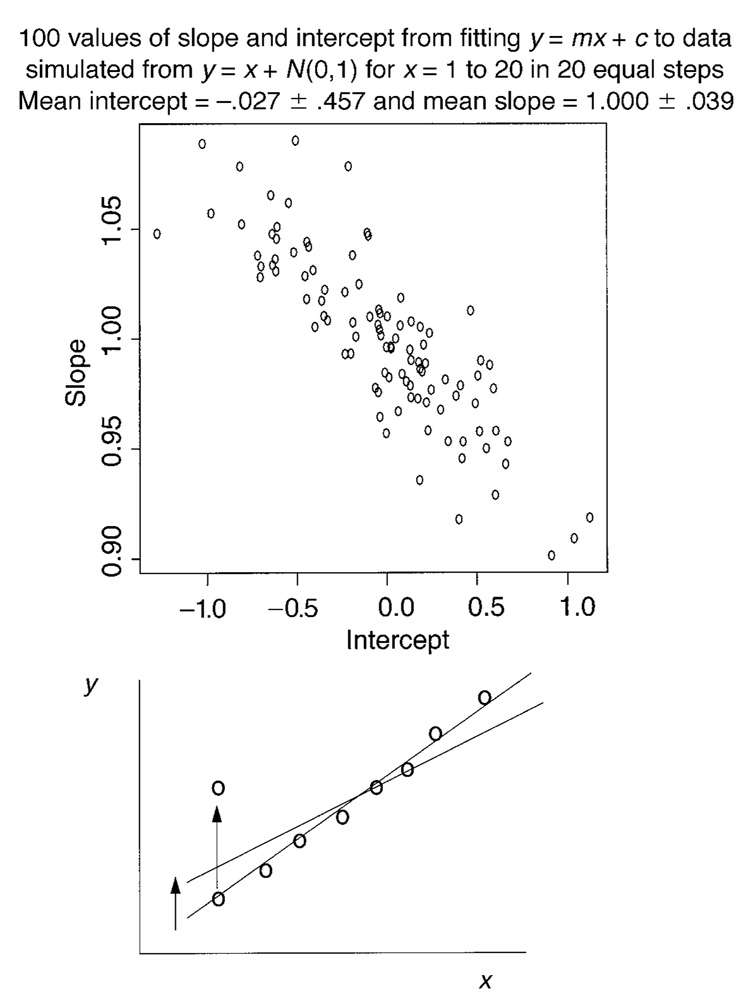

Before taking up the issue of covariation for the diffusion model, we will illustrate covariation between parameters with a simpler example, linear regression (straight-line fitting), for which there are only two parameters. Fitting the equation for a straight line, y = mx + c, to data provides estimates of slope m and intercept c. We generated 100 sets of data for a straight line, with 20 points on the line per data set. The 100 sets of data were generated with a slope of 1 and an intercept of zero, and to add variability, a value drawn from a normal distribution with a mean of zero and a standard deviation of 1 was added to each point: y = mx + c + N(0,1). A straight line was fitted to each data set by the least squares method (the standard method for linear regression), giving an estimate of slope and intercept for each data set. The slopes and intercepts are plotted in Figure 5 (top panel).
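The simulation just described can be sketched in a few lines. The x values (1 through 20) and the random seed are assumptions, since the text does not give them, so the resulting correlation will not match −.848 exactly.

```python
import numpy as np

rng = np.random.default_rng(2002)
x = np.arange(1, 21, dtype=float)          # 20 points per data set (assumed x values)
X = np.column_stack([x, np.ones_like(x)])  # design matrix: slope column, intercept column

estimates = []
for _ in range(100):
    # True line: m = 1, c = 0, plus N(0, 1) noise on every point.
    y = 1.0 * x + 0.0 + rng.normal(0.0, 1.0, size=x.size)
    (m_hat, c_hat), *_ = np.linalg.lstsq(X, y, rcond=None)  # least squares fit
    estimates.append((m_hat, c_hat))

estimates = np.array(estimates)            # shape (100, 2): slope, intercept
r = np.corrcoef(estimates[:, 0], estimates[:, 1])[0, 1]
print(f"correlation between slope and intercept estimates: {r:.3f}")
```

Plotting `estimates[:, 0]` against `estimates[:, 1]` gives the elliptical, negatively sloped cloud shown in the top panel of Figure 5.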

Figure 5.

An illustration of the covariation between parameter estimates for linear regression. The top panel shows values of slope and intercept from simulated data (falling in an elliptical shape), and the bottom panel shows how moving one data point up (by random variation) would decrease the slope and increase the intercept of the best-fitting straight line.

In linear regression, the estimates of the slope and the intercept are usually strongly negatively correlated across samples. The correlation is strongly negative when the x values are all positive and their mean is large relative to their spread. Figure 5, top panel, illustrates this negative correlation: Plotting the slopes against the intercepts produces a roughly elliptical cloud with a negative slope. For the 100 random samples in Figure 5, the correlation between the slopes and the intercepts is −.848.
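For ordinary least squares with n points x_1, ..., x_n and independent errors of variance σ², the sampling covariance of the estimates is σ²(XᵀX)⁻¹, which gives a closed form for the correlation (a standard result, sketched here for reference). With S_xx = Σ(x_i − x̄)²:

$$
\operatorname{Var}(\hat m)=\frac{\sigma^2}{S_{xx}},\qquad
\operatorname{Var}(\hat c)=\frac{\sigma^2\sum_i x_i^2}{n\,S_{xx}},\qquad
\operatorname{Cov}(\hat m,\hat c)=-\frac{\sigma^2\,\bar x}{S_{xx}},
$$

$$
\operatorname{corr}(\hat m,\hat c)
=-\frac{\bar x}{\sqrt{\tfrac{1}{n}\sum_i x_i^2}}
=-\frac{\bar x}{\sqrt{\bar x^{2}+\tfrac{1}{n}S_{xx}}}.
$$

Assuming x = 1, 2, ..., 20 (the x values are not given in the text), x̄ = 10.5 and Σx_i²/n = 143.5, so the correlation is about −.88; for x = 0, 1, ..., 19 it is about −.85. Either is close to the simulated value of −.848.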

The explanation for the negative correlation is straightforward. If a data point near either end of the line is moved far from the line, as illustrated in the bottom panel of Figure 5, the slope is raised, and the intercept is lowered (or vice versa). This gives a negative correlation. It is much less likely that the slope and the intercept are both raised in some random set of data (giving a positive correlation), because this would require a change in all the data points in the same direction.
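The single-point argument can be checked by refitting after moving one point. The sketch starts from 20 points lying exactly on y = x (x = 1 to 20, an assumption) and moves either the rightmost or the leftmost point up by 3 units; `fit_line` is a hypothetical helper.

```python
import numpy as np

x = np.arange(1, 21, dtype=float)
y = x.copy()                      # points exactly on y = x (m = 1, c = 0)

def fit_line(x, y):
    """Least squares slope and intercept of a straight line."""
    m, c = np.polyfit(x, y, 1)    # polyfit returns the highest-degree coefficient first
    return m, c

m0, c0 = fit_line(x, y)

y_right = y.copy()
y_right[-1] += 3.0                # push the rightmost point up
m1, c1 = fit_line(x, y_right)

y_left = y.copy()
y_left[0] += 3.0                  # push the leftmost point up instead
m2, c2 = fit_line(x, y_left)

print(f"right end moved: slope {m0:.3f} -> {m1:.3f}, intercept {c0:.3f} -> {c1:.3f}")
print(f"left end moved:  slope {m0:.3f} -> {m2:.3f}, intercept {c0:.3f} -> {c2:.3f}")
```

In both cases, slope and intercept move in opposite directions, which is the source of the negative correlation.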

When parameter estimates are evaluated, confidence intervals are often shown for a single parameter without reference to the behavior of other parameters. However, confidence regions can also be drawn for the joint behavior of parameters. For linear regression, the confidence region for the slope and the intercept is an ellipse whose major axis has a negative slope (e.g., Draper & Smith, 1966, p. 65). In Figure 5, this would be an ellipse around the points in the top panel. Joint confidence regions can be important, as the following example shows. If the slope of a fitted line is higher and the intercept is lower than the hypothesized population values, this can be the result of random variation in the data, but if both the slope and the intercept are higher, the values are more likely to differ from the population values. For example, in the top panel of Figure 5, imagine a point at slope = 1.07 and intercept = 0.6. This point would lie inside the individual confidence intervals for both the slope and the intercept (i.e., 1.07 lies within the vertical scatter of points, and 0.6 lies within the horizontal scatter of points). But the point would lie outside the joint confidence region (the ellipse that would contain 95% of the points in the top panel of Figure 5). So an understanding of joint confidence regions provides additional useful information when testing hypotheses about differences among parameter values.
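The slope = 1.07, intercept = 0.6 example can be made concrete with the standard least squares covariance matrix, σ²(XᵀX)⁻¹. The sketch assumes x values of 1 to 20 (not given in the text) and the known noise SD of 1 used for the simulated data. It compares separate z tests with the squared Mahalanobis distance, whose 95% cutoff for two parameters is the chi-square quantile −2 ln 0.05 ≈ 5.99.

```python
import math
import numpy as np

x = np.arange(1, 21, dtype=float)           # assumed x values
sigma = 1.0                                  # SD of the N(0, 1) noise
X = np.column_stack([x, np.ones_like(x)])
cov = sigma**2 * np.linalg.inv(X.T @ X)      # covariance of (slope, intercept) estimates

true = np.array([1.0, 0.0])                  # population slope and intercept
point = np.array([1.07, 0.6])                # hypothetical estimate from the text

se = np.sqrt(np.diag(cov))
z = (point - true) / se                      # separate z scores for slope and intercept
d = point - true
d2 = float(d @ np.linalg.solve(cov, d))      # squared Mahalanobis distance
crit = -2.0 * math.log(0.05)                 # 95% quantile of chi-square with 2 df

print("inside individual 95% intervals:", np.abs(z) < 1.96)
print(f"joint: D^2 = {d2:.1f} vs cutoff {crit:.2f}, inside ellipse: {d2 <= crit}")
```

Each coordinate passes its own test, yet the pair lies far outside the joint 95% ellipse, because a higher slope together with a higher intercept runs against the negative correlation.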

For linear regression, joint confidence regions can be determined analytically, and hypotheses can be tested about the joint behavior of the slope and the intercept. But for the diffusion model, such analytical results are almost impossible to produce. However, simulation methods can provide information that can be used more informally to determine whether joint behavior of parameter values is something that needs to be examined for a particular set of fits for a particular data set. This will not allow hypotheses to be tested but will provide enough information to determine whether the issue of correlated estimates needs to be considered in drawing conclusions about differences among parameter values across conditions or whether it can be ignored.

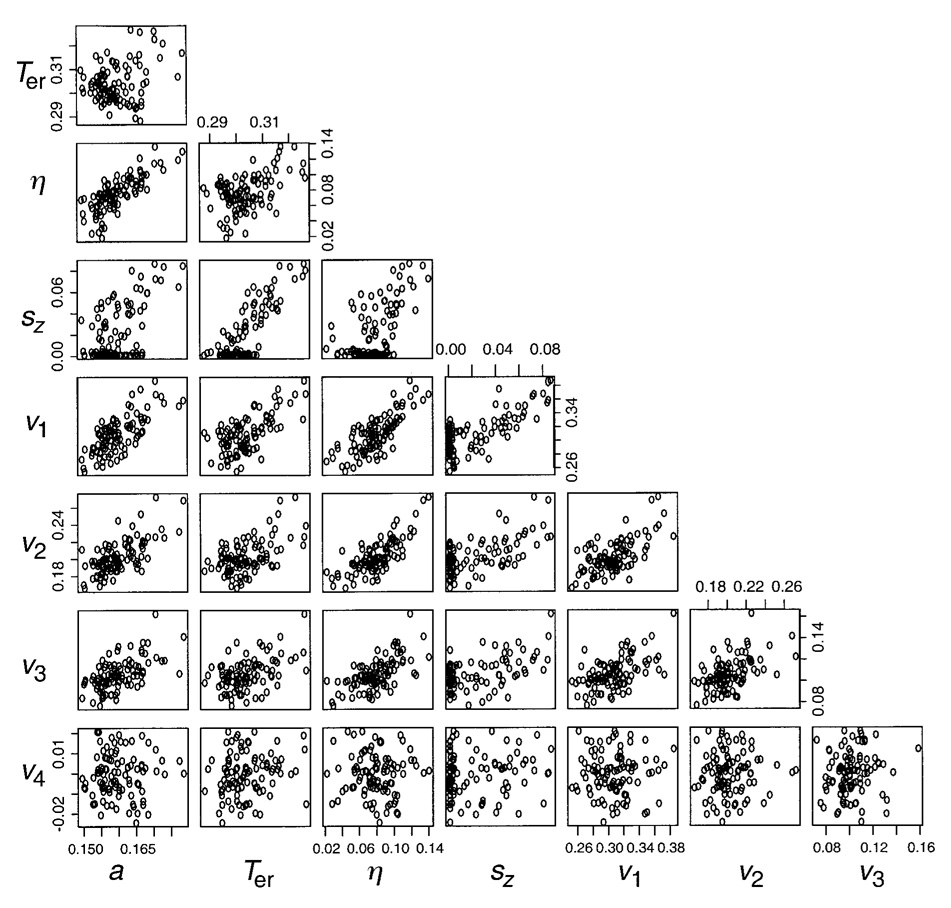

For the diffusion model, Table 3 contains the correlations between each pair of parameters for the maximum likelihood fits summarized in Table 2. For each of the 6 sets of parameter values, we computed the correlation between each pair of estimated parameters across the 100 data sets, and Table 3 shows the means of these correlations over the 6 sets. Figure 6 shows scatterplots of the 100 parameter estimates for the fits to data generated from 1 of the 6 sets of parameter values (the third set in Table 1). Each scatterplot plots the estimated value of one parameter against the estimated value of another, with one point per data set.
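A minimal sketch of the averaging described above, assuming the best-fitting estimates are stored in a NumPy array of shape (parameter sets, data sets, parameters). The function name and array layout are hypothetical, and the demonstration uses synthetic numbers, not the estimates behind Table 3.

```python
import numpy as np

def mean_correlation_matrix(estimates):
    """Average, over parameter sets, of the correlation matrix of the
    estimates across simulated data sets.

    estimates: array of shape (n_sets, n_datasets, n_params), e.g. (6, 100, 8),
    holding the best-fitting parameter values for every simulated data set.
    """
    estimates = np.asarray(estimates, dtype=float)
    # One correlation matrix per parameter set; columns are parameters.
    per_set = [np.corrcoef(est, rowvar=False) for est in estimates]
    return np.mean(per_set, axis=0)

# Synthetic stand-in for real fits: 8 "parameters" sharing one common factor,
# so every pair has a true correlation of 0.5.
rng = np.random.default_rng(0)
shared = rng.standard_normal((6, 100, 1))
fake_estimates = shared + rng.standard_normal((6, 100, 8))
R = mean_correlation_matrix(fake_estimates)
print(np.round(R, 2))
```

Applied to real fits, the result is a symmetric matrix with ones on the diagonal, laid out like Table 3.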

Table 3.

Correlations Among Parameter Values for Maximum Likelihood Fits (N = 250 per Condition)

| | a | Ter | η | sz | v1 | v2 | v3 | v4 |
|---|---|---|---|---|---|---|---|---|
| a | 1.0000 | .3980 | .8263 | .6238 | .7842 | .6819 | .5000 | −.0227 |
| Ter | .3980 | 1.0000 | .4257 | .7904 | .5295 | .4751 | .2890 | .0355 |
| η | .8263 | .4257 | 1.0000 | .5330 | .7837 | .7129 | .5270 | .0115 |
| sz | .6238 | .7904 | .5330 | 1.0000 | .6019 | .5233 | .3312 | .0165 |
| v1 | .7842 | .5295 | .7837 | .6019 | 1.0000 | .6534 | .4620 | −.0432 |
| v2 | .6819 | .4751 | .7129 | .5233 | .6534 | 1.0000 | .4378 | −.0300 |
| v3 | .5000 | .2890 | .5270 | .3312 | .4620 | .4378 | 1.0000 | .0171 |
| v4 | −.0227 | .0355 | .0115 | .0165 | −.0432 | −.0300 | .0171 | 1.0000 |

Figure 6.