Abstract

Objective:

The research analyzed evaluation data to assess medical student satisfaction with the learning experience when required PubMed training is offered entirely online.

Methods:

A retrospective study analyzed skills assessment scores and student feedback forms from 455 first-year medical students who completed PubMed training either through classroom sessions or an online tutorial. The class of 2006 (n = 99) attended traditional librarian-led sessions in a computer classroom. The classes of 2007 (n = 120), 2008 (n = 121), and 2009 (n = 115) completed the training entirely online through a self-paced tutorial. PubMed skills assessment scores and student feedback about the training were compared for all groups.

Results:

As evidenced by open-ended comments about the training, students who took the online tutorial were at least as satisfied with the learning experience as students who attended classroom sessions, with the classes of 2008 and 2009 reporting greater satisfaction (P<0.001) than the other 2 groups. The mean score on the PubMed skills assessment (91%) was the same for all groups of students.

Conclusions:

Student satisfaction improved and PubMed assessment scores did not change when instruction was offered online to first-year medical students. Comments from the students who received online training suggest that the increased control and individual engagement with the web-based content led to their satisfaction with the online tutorial.

Highlights

First-year medical students at Mount Sinai School of Medicine responded positively to an online PubMed tutorial and skills assessment created by librarians.

Students who took the online tutorial passed the PubMed skills assessment at the same high rate as students who attended in-class training led by librarians.

Feedback suggests that students preferred the individual control of the web-based content and the ease with which the online training fit into their crowded schedules.

Implications

Interactive online training encourages students to direct their own learning experience and can lead to greater student satisfaction.

Medical students of the Millennial generation may prefer flexible, self-paced assignments that can be completed at times and locations convenient to them.

Medical librarians can create online tutorials to successfully engage and instruct the next generation of medical students.

Introduction

MEDLINE searching and information retrieval skills are core competencies for medical students. Studies have shown that instruction in literature searching improves students' MEDLINE skills and increases their use of original research articles to solve clinical problems [1–3]. However, few studies have demonstrated the effectiveness of different formats of instruction for teaching searching skills or the format's relationship to student satisfaction with the learning experience.

Health sciences librarians and health educators have developed successful interactive web-based tutorials for teaching literature searching and MEDLINE skills [4–7], evidence-based medicine skills [8,9], and introductory library and online public access catalog (OPAC) skills [10]. Two studies comparing face-to-face classes covering searching and literacy skills with web-based tutorials for teaching medical students have concluded that both formats are equally effective [6,11]. However, one study found that students preferred face-to-face teaching over the tutorial [6], while the other found no difference in students' ratings of the training formats [11].

Similarly, many studies at undergraduate, non–health sciences libraries have found little difference between computer or online instruction and traditional in-class library instruction in student performance and satisfaction. This finding has prompted many authors to conclude that web-based instruction is a viable alternative to traditional library classes [12–18]. Additional studies have observed that students prefer the pace of an online library tutorial over the pace of a librarian-led lecture [15] and that students and faculty respond positively to computer-based library instruction [16,19].

However, contrasting findings have also been reported. Results of one study have shown that undergraduate students who attended in-class sessions achieved higher posttest scores than students completing online library instruction [20]. Other research has found that university students and faculty were not strongly in favor of replacing librarian-led instruction with web-based tutorials [21] and that college students who attended librarian-led instruction reported a higher level of learning [22].

This paper compares two formats for providing required PubMed instruction to medical students: a traditional classroom session and a self-paced online tutorial involving no face-to-face teaching. PubMed skills assessment scores and student feedback about the formats were compared to determine student satisfaction and skills assessment performance by instructional format.

Background

At Mount Sinai School of Medicine, “Introduction to PubMed” is a required component of the four-year library science/medical informatics–related curriculum. Delivered to all medical students during the first year of medical school, the required PubMed training addresses the fundamentals of retrieving and managing information from PubMed.

For several years, students were assigned alphabetically to one mandatory sixty-minute PubMed training session in the library's computer classroom. Instruction librarians taught the lessons, which included lecture, discussion, and hands-on searching practice. The classes took place during the rare open time slots found throughout the fall semester, with the result that some students attended sessions during the first week of the semester and others during the final weeks. Following their assigned session, students had one week to complete an online skills assessment and feedback form in Web-CT, the electronic course management system.

Online PubMed Tutorial

In 2004, librarians created an online version of the training, theorizing that the new format would more easily and flexibly fit into the busy fall semester schedule and give students the ability to direct their own learning experience. Creating a new tutorial rather than using an existing one allowed librarians to customize the content for first-year medical students, to add interactive elements, and to incorporate institution-specific information.

Instruction librarians used Dreamweaver MX to create the online PubMed tutorial [23]. The tutorial employs a split screen format to display the instructional material above an open PubMed window. Designed to encourage active learning, the split screen allows students to read about searching tools and techniques while simultaneously practicing live PubMed searches. The design also gives students complete control of the selection, sequence, and amount of time spent on each screen. Students are not required to view all of the content in the tutorial. Thus, more experienced PubMed users are able to omit introductory material and proceed to advanced sections.

The face-to-face instruction and the online tutorial both covered the scope and content of PubMed; ways to search using keywords, Medical Subject Headings (MeSH), and field tags; ways to display and manipulate search results; and ways to link to full-text articles. Instruction librarians completed a retrospective analysis using the skills assessment and student feedback data to compare the students enrolled in the traditional, librarian-led class (n = 99) to those enrolled in the online tutorial (n = 356).

Methods

First-year medical students in the class of 2006 (n = 99) were assigned alphabetically by last name to one of 10 mandatory, 60-minute PubMed training sessions. The training took place in a computer classroom with 9–10 students at a time. Upon arrival, each student signed in, and the instructor took attendance. All of the students in the class of 2006 attended 1 training session. Instruction librarians taught the classes using lecture, discussion, and hands-on searching practice. Following the class, each student had 1 week to complete the required online PubMed skills assessment and an anonymous student feedback form in Web-CT.

Over the following 3 years, first-year medical students in the classes of 2007 (n = 120), 2008 (n = 121), and 2009 (n = 115) completed the training entirely online through the PubMed tutorial. Librarians briefly introduced the requirements to the entire class through email (class of 2007) or in person during new student orientation (classes of 2008 and 2009). Students then worked independently over a 5-week period on the tutorial, the PubMed skills assessment, and the anonymous student feedback form. Over the course of the assignment, librarians sent 2 emails reminding students of the deadline. Additionally, to help measure student preference, librarians offered students taking the online tutorial the option to attend a scheduled in-class seminar instead of completing the training online.

All students completed similar PubMed skills assessments, each consisting of fifteen objective, multiple choice questions focused on practical searching techniques. To improve clarity and adjust for changes in PubMed over time, librarians revised the skills assessment questions before each new class. The revisions did not change the skills evaluated by the assessments. Each assessment was created and reviewed by the same group of librarians to ensure the same level of difficulty across years. The majority of the questions asked students to complete searches based on given instructions (Appendix A online). Each question had one objectively correct answer. Web-CT automatically graded all skills assessments using the answer key entered by librarians.

To collect student feedback about the instruction, all students (whether they completed in-person or tutorial-based instruction) were asked to submit their “overall comments and suggestions for the PubMed training” into an anonymous, open-ended survey box posted in Web-CT (Appendix B online). In addition, students who took the online tutorial were asked to rate the tutorial's organization and navigation, their own preference for taking the class online, and whether the training increased their knowledge of PubMed. They also reported the amount of time they spent reviewing the online tutorial (Appendix C online).

Four librarian reviewers—each experienced in reference, instruction, and PubMed searching—categorized the anonymous open-ended responses on a scale of 1–3 (1 = negative, 2 = neutral, 3 = positive). One of the reviewers had also contributed to developing the online tutorial and teaching the classroom sessions. The reviewers were blinded to student class enrollment, limiting the potential for bias. Comments illustrating each rating included: positive: “I learned a lot about PubMed and will now be a much more efficient searcher”; neutral: “This training was somewhat useful”; and negative: “This training was boring and seemed like a waste of time.” Mixed comments expressing both positive and negative observations were counted as neutral (example: “I found this class to be very useful but also tedious”). Fleiss's kappa score of inter-rater reliability was high (0.845).
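For readers unfamiliar with the statistic, the following is a minimal sketch of how Fleiss's kappa can be computed for a fixed panel of raters assigning items to categories. The ratings table below is invented purely for illustration (4 raters, 3 categories); it is not the study's data, and the function name is hypothetical.

```python
from typing import Sequence


def fleiss_kappa(counts: Sequence[Sequence[int]]) -> float:
    """Fleiss's kappa for a table where counts[i][j] is the number of
    raters who placed item i in category j."""
    n_items = len(counts)
    n_raters = sum(counts[0])
    if any(sum(row) != n_raters for row in counts):
        raise ValueError("every item must be rated by the same number of raters")

    # Observed agreement: share of agreeing rater pairs, averaged over items.
    per_item = [
        (sum(c * c for c in row) - n_raters) / (n_raters * (n_raters - 1))
        for row in counts
    ]
    p_observed = sum(per_item) / n_items

    # Chance agreement: based on the overall share of ratings in each category.
    n_categories = len(counts[0])
    shares = [
        sum(row[j] for row in counts) / (n_items * n_raters)
        for j in range(n_categories)
    ]
    p_expected = sum(s * s for s in shares)

    # Undefined (division by zero) if every rating falls in one category.
    return (p_observed - p_expected) / (1 - p_expected)


# Hypothetical ratings: columns are (negative, neutral, positive),
# each row is one comment rated by 4 reviewers. Not study data.
hypothetical_counts = [
    [4, 0, 0],
    [0, 4, 0],
    [0, 0, 4],
    [0, 1, 3],
    [3, 1, 0],
    [0, 0, 4],
]
print(round(fleiss_kappa(hypothetical_counts), 3))
```

Values near 1 indicate agreement well beyond chance, which is how the reported 0.845 can be read.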

The reviewers met as a panel to classify the responses that were not unanimous. The panel used a majority vote method to categorize the responses, with one member of the panel serving as the facilitator rather than a voter. SPSS software (version 14.0, SPSS, Chicago, IL) was used to perform statistical analyses on student feedback scores. Comparisons of student feedback scores (blinded rating of students' open-ended comments about the instruction) were made using ANOVA and Dunnett's T3 post hoc paired comparison test.
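As a rough illustration of the omnibus comparison, a one-way ANOVA F statistic can be reconstructed from group sizes, means, and standard deviations alone. The sketch below uses the summary values reported in the Results and assumes that each group's n equals the number of feedback forms submitted. It is not the authors' SPSS analysis, it yields no P value, and it does not reproduce the Dunnett's T3 post hoc test.

```python
# (n, mean, SD) per class, taken from the Results section.
# Assumption: n is the number of open-ended feedback forms submitted.
summary = {
    "2006 (classroom)": (70, 2.23, 0.837),
    "2007 (online pilot)": (79, 2.25, 0.741),
    "2008 (online)": (69, 2.69, 0.503),
    "2009 (online)": (83, 2.68, 0.544),
}


def anova_from_summary(groups):
    """Return (F, df_between, df_within) for a one-way ANOVA
    computed from (n, mean, SD) triples."""
    total_n = sum(n for n, _, _ in groups)
    grand_mean = sum(n * mean for n, mean, _ in groups) / total_n
    # Between-group sum of squares: spread of group means around the grand mean.
    ss_between = sum(n * (mean - grand_mean) ** 2 for n, mean, _ in groups)
    # Within-group sum of squares, recovered from each group's SD.
    ss_within = sum((n - 1) * sd ** 2 for n, _, sd in groups)
    df_between = len(groups) - 1
    df_within = total_n - len(groups)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


f_stat, df_between, df_within = anova_from_summary(list(summary.values()))
print(f"F({df_between}, {df_within}) = {f_stat:.2f}")
```

Because the reported means and SDs are rounded, the result is only approximate; a statistics package would be needed to convert it to a P value.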

Results

The open-ended student feedback forms were submitted by 70% (n = 70, class of 2006) of the students enrolled in the traditional class and by 65% (n = 79, class of 2007), 57% (n = 69, class of 2008), and 72% (n = 83, class of 2009) of the students participating in the web-based instruction.
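The response rates follow from the submission counts and the class sizes given in the Methods. A short check, assuming the enrollment figures are the correct denominators, suggests the reported percentages match whole-number truncation of each ratio rather than rounding:

```python
# (forms submitted, class size) per class year, from the Methods and Results.
responses = {
    2006: (70, 99),
    2007: (79, 120),
    2008: (69, 121),
    2009: (83, 115),
}

# Integer division truncates each percentage to a whole number.
truncated_rates = {
    year: (100 * submitted) // enrolled
    for year, (submitted, enrolled) in responses.items()
}

for year, (submitted, enrolled) in responses.items():
    exact = 100 * submitted / enrolled
    print(f"class of {year}: {exact:.1f}% (truncated: {truncated_rates[year]}%)")
```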

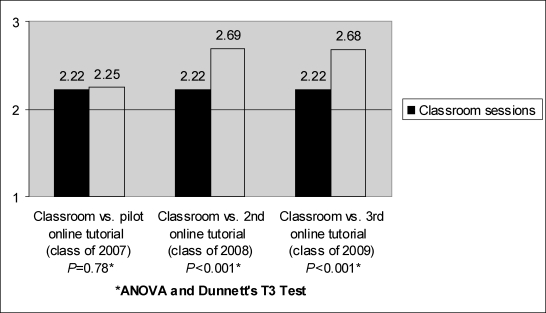

Mean student feedback scores, as classified by the blinded reviewers on a scale of 1–3 (1 = negative, 2 = neutral, 3 = positive) were: 2.23 for the traditional class (class of 2006, SD = 0.837), 2.25 for the pilot online tutorial (class of 2007, SD = 0.741), 2.69 for the second online tutorial (class of 2008, SD = 0.503), and 2.68 for the third online tutorial (class of 2009, SD = 0.544).

These results demonstrated a similar level of satisfaction with the learning experience for students enrolled in the traditional class (class of 2006) and the pilot online training (class of 2007) (P = 0.78). Students in the subsequent 2 online trainings, the classes of 2008 and 2009, reported a higher level of satisfaction compared to students in the traditional class or the pilot online class (P<0.001) (Figure 1).
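To give a feel for the size of these pairwise differences, the sketch below computes an unequal-variance (Welch-type) t statistic and its approximate degrees of freedom from the reported summary values. Dunnett's T3 compares a statistic of this kind against critical values from the studentized maximum modulus distribution; that lookup is not implemented here, so no P values are produced, and the group sizes are assumed to equal the number of feedback forms submitted.

```python
import math

# (n, mean, SD) per class year, from the Results section.
# Assumption: n is the number of open-ended feedback forms submitted.
summary = {
    2006: (70, 2.23, 0.837),
    2007: (79, 2.25, 0.741),
    2008: (69, 2.69, 0.503),
    2009: (83, 2.68, 0.544),
}


def welch_t(group_a, group_b):
    """Return (t, df) for two groups given as (n, mean, SD),
    without assuming equal variances."""
    n_a, mean_a, sd_a = group_a
    n_b, mean_b, sd_b = group_b
    var_a = sd_a ** 2 / n_a  # squared standard error of each mean
    var_b = sd_b ** 2 / n_b
    t_stat = (mean_a - mean_b) / math.sqrt(var_a + var_b)
    # Welch-Satterthwaite approximation to the degrees of freedom.
    df = (var_a + var_b) ** 2 / (
        var_a ** 2 / (n_a - 1) + var_b ** 2 / (n_b - 1)
    )
    return t_stat, df


t_06_07, df_06_07 = welch_t(summary[2006], summary[2007])
t_06_08, df_06_08 = welch_t(summary[2006], summary[2008])
print(f"2006 vs 2007: t = {t_06_07:.2f}, df = {df_06_07:.0f}")
print(f"2006 vs 2008: t = {t_06_08:.2f}, df = {df_06_08:.0f}")
```

The near-zero statistic for 2006 versus 2007 and the much larger one for 2006 versus 2008 are in line with the pattern of P values reported above.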

Figure 1.

Mean student feedback scores (by course format)

The PubMed skills assessment was graded on a scale of 1–100. All students (100%, n = 455) submitted the PubMed skills assessment. The mean assessment score for students in all groups was the same: 91%, with ranges of 48–100 (class of 2006), 37–100 (class of 2007), 63–100 (class of 2008), and 56–100 (class of 2009). Mode scores were: 96 (class of 2006) and 100 (classes of 2007, 2008, and 2009).

Student Responses to the Tutorial

Every student enrolled in the years that PubMed training was offered electronically (n = 356) chose to complete the tutorial online, even though they were each given the option to attend a classroom session instead. Of the 231 students who submitted feedback about the online tutorial (Table 1), only 13 (5.6%) reported that they “would have preferred to learn about PubMed through an in-class training session led by an instructor.” Additionally, 183 students (79.2%) agreed with the statement: “I found the tutorial well organized and easy to navigate.” Students also rated the tutorial as an effective learning tool: 194 students (84%) stated that “the training increased my knowledge of PubMed” (Table 2).
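The percentages in this paragraph can be rechecked against the 231 submitted feedback forms. The short labels below are shorthand introduced for this check, not wording from the survey:

```python
FEEDBACK_FORMS = 231  # online-tutorial students who submitted feedback

# Counts reported in the text; keys are shorthand labels, not survey wording.
counts = {
    "would have preferred in-class training": 13,
    "found tutorial well organized": 183,
    "training increased knowledge": 194,
}

# Each share is expressed as a percentage rounded to one decimal place.
shares = {
    label: round(100 * count / FEEDBACK_FORMS, 1)
    for label, count in counts.items()
}

for label, pct in shares.items():
    print(f"{label}: {pct}%")
```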

Table 1.

Student rating of the online tutorial, combined classes of 2007, 2008, and 2009

Table 2.

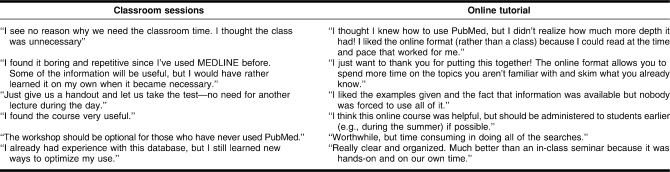

Sample comments from anonymous open-ended student feedback forms

Students' self-reported time spent on the tutorial varied greatly (Figure 2). The number of students taking 30 minutes or less to review the tutorial (107/231, 46%) was approximately the same as the number spending over 30 minutes (119/231, 51%).

Figure 2.

Self-reported time spent on tutorial, combined classes of 2007, 2008, and 2009 (by number of students reporting, n = 231)

Sample Comments from Student Feedback Forms

Although students participating in both class formats submitted positive, neutral, and negative comments, librarians observed that the content of the comments varied depending on the class format (Table 2). The comments provided insight into the students' perception of both class formats and their approach to the coursework.

Recurring comments from students enrolled in the classroom-based training included requests to make the training and skills assessment optional. Representative comments included: “This training could be offered to those who feel uncomfortable using PubMed” and “Consider having different level trainings for people with different levels of past experience with MEDLINE.” Other students expressed dissatisfaction at the timing of their assigned workshop—“The same week as a final” and “during our only lunch break”—reflecting the difficulty of scheduling multiple small groups of students in one computer classroom during the busy semester.

In contrast, the students who completed the online tutorial frequently commented on the freedom they had, because they could complete the training at any time and location during the five-week timeframe and because they could select the tutorial content that they wished to review. Representative comments included: “I had prior experience with PubMed and was able to quickly move through sections I already knew” and “I liked being able to go through the material independently and at my own pace.” Other students who took the web-based class described the tutorial as “efficient,” “relevant,” and “extremely useful.” In contrast to the students in the classroom sessions, students who completed the online tutorial rarely commented that the PubMed training or skills assessment should be optional, that training should offer different levels of instruction depending on student experience, or that the timing was inconvenient.

Discussion

First-year medical students clearly accepted the online training as a learning experience equal to or better than the classroom sessions. Students taking the online training also had the same high mean PubMed skills assessment score as those who attended librarian-led training. Furthermore, no student opted to attend an available classroom session instead of completing the training online, demonstrating that the tutorial was the more appealing and convenient option for students.

Students were also able to decide the length of time they spent viewing the tutorial and the content to access. Their self-reported time spent using the tutorial differed greatly, suggesting that some students needed extended time to review the basics, while others were prepared to complete the skills assessment after little or no time viewing the online tutorial. Comments from those who completed the online training suggest that the increased control and individual engagement with the web-based content improved their overall evaluation of the learning experience. Similarly, many comments from students who attended the classroom sessions suggest that they disliked the lack of control over the pace and content of the class.

Students in all classes responded positively to the online PubMed tutorial; however, the classes of 2008 and 2009 reported a higher level of satisfaction with the online tutorial than the class of 2007. Several factors might have contributed to the higher rating. First, the class of 2007 participated in the pilot offering of the online training, so some students encountered a few minor technological errors. Also, librarians introduced the pilot tutorial through email rather than during an in-person class meeting, which was not ideal for communicating the importance of the requirements and due date.

Limitations of this study included the use of self-reported data collection and the potential for response bias from students, though the open-ended feedback forms were collected anonymously to limit the possibility of response bias. However, students were not required to submit a feedback form; thus, some opinions were not recorded. Also, although librarians categorizing the open-ended responses were blinded to course enrollment to reduce bias, a potential for bias existed given that one of the four librarians was involved in developing the tutorial and teaching the classroom sessions. In addition, though librarians creating the instruction assessments attempted to ensure that the assessment difficulty level remained consistent across years and the similarity of annual assessment scores seemed to support comparable difficulty across assessments, some differences might have been present.

Because this was a retrospective study, data showing student performance on each question of the skills assessment were not available for all class years. If these data had been archived, more in-depth comparisons of PubMed skills mastery across groups would have been possible. Another limitation was the lack of student evaluations of the teaching abilities of the librarian instructors. The instructors were competent and experienced, but it is not known to what extent their teaching might have influenced student satisfaction. Additionally, students were not asked to rate the effectiveness of the classroom session in improving their knowledge of PubMed. If these data had been available, they would have served as a valuable comparison to the tutorial evaluations submitted by students in the online classes.

Future research could investigate which aspects of an online tutorial are most predictive of student satisfaction, whether pretests and posttests can determine the training format that increases learning the most, and whether online tutorials without live PubMed searching still result in high student satisfaction.

Conclusions

First-year medical students responded positively to learning PubMed through a self-paced online tutorial and passed a PubMed skills assessment at the same high rate as students receiving in-person librarian training. These results support the use of a well-designed online tutorial for teaching PubMed skills during the medical school curriculum.

This study differs from previous research comparing two class formats [6,11] because it shows a student preference for learning PubMed through an online tutorial rather than face-to-face classroom sessions. This research is also unique because it presented students with a self-paced tutorial rather than one with a predetermined length and sequence, allowing students to direct their own learning. These findings are consistent with published profiles of the future generation of library users, often referred to as Millennials [24]. Millennial students (typically described as born between the early to mid-1980s and the mid-2000s) as a group prefer to learn by exploring and experimenting. They also value immediate access and feedback and tend to be impatient and independent. Perhaps most significantly, they value flexibility in their work assignments [24].

The online PubMed tutorial allows students to select their own path through the training content and to learn at times and locations convenient to them. In addition, the live PubMed searching included in the tutorial gives students the ability to interact immediately with the training material. Creating opportunities for students to direct their own learning may be necessary for librarians to reach the next generation of medical students.

Footnotes

Based on a presentation at MLA '06, the 106th Annual Meeting of the Medical Library Association; Phoenix, AZ; May 23, 2006.

This article has been approved for the Medical Library Association's Independent Reading Program <http://www.mlanet.org/education/irp/>.

Supplemental Appendixes A, B, and C are available with the online version of this journal.

References

- 1. Gruppen L.D., Rana G.K., Arndt T.S. A controlled comparison study of the efficacy of training medical students in evidence-based medicine literature searching skills. Acad Med. 2005 Oct;80(10):940–4. doi: 10.1097/00001888-200510000-00014.

- 2. Dorsch J.L., Aiyer M.K., Meyer L.E. Impact of an evidence-based medicine curriculum on medical students' attitudes and skills. J Med Libr Assoc. 2004 Oct;92(4):397–406.

- 3. Ghali W.A., Saitz R., Eskew A.H., Gupta M., Quan H., Hershman W.Y. Successful teaching in evidence-based medicine. Med Educ. 2000 Jan;34(1):18–22. doi: 10.1046/j.1365-2923.2000.00402.x.

- 4. Loven B., Morgan K., Shaw-Kokot J., Eades L. Information skills for distance learning. Med Ref Serv Q. 1998 Fall;17(3):71–5. doi: 10.1300/j115v17n03_08.

- 5. Grant M.J., Brettle A.J. Developing and evaluating an interactive information skills tutorial. Health Info Libr J. 2006 Jun;23(2):79–86. doi: 10.1111/j.1471-1842.2006.00655.x.

- 6. Brandt K.A., Lehmann H.P. Teaching literature searching in the context of the World Wide Web. Proc Annu Symp Comput Appl Med Care. 1995. pp. 888–92.

- 7. Dixon L.A. A quiver full of arrows: recommended web-based tutorials for PubMed, PowerPoint, Ovid MEDLINE, and FrontPage. Med Ref Serv Q. 2002;21(2):55–64. doi: 10.1300/J115v21n02_06.

- 8. Mayer J., Schardt C., Ladd R. Collaborating to create an online evidence-based medicine tutorial. Med Ref Serv Q. 2001;20(2):79–82. doi: 10.1300/J115v20n02_08.

- 9. Schilling K., Wiecha J., Polineni D., Khalil S. An interactive web-based curriculum on evidence-based medicine: design and effectiveness. Fam Med. 2006 Feb;38(2):126–32.

- 10. Foust J.E., Tannery N.H., Detlefsen E.G. Implementation of a web-based tutorial. Bull Med Libr Assoc. 1999 Oct;87(4):477–9.

- 11. Schilling K. A comparison of web-based and traditional instruction for the acquisition of information literacy skills: impact of training on learning outcomes, information usage patterns, and student perceptions. In: Isaías P., Nunes M.B., editors. IADIS International Conference, WWW/Internet 2005; Lisbon, Portugal; 19 Oct 2005. IADIS; 2005. pp. 183–91.

- 12. Beile P.M., Boote D.N. Does the medium matter?: a comparison of a web-based tutorial with face-to-face library instruction on education students' self-efficacy levels and learning outcomes. Res Strategies. 2005;20(1–2):57–68.

- 13. Bracke P.J., Dickstein R. Web tutorials and scalable instruction: testing the waters. Ref Serv Rev. 2002;30(4):330–7.

- 14. Germain C.A., Jacobson T.E., Kaczor S.A. A comparison of the effectiveness of presentation formats for instruction: teaching first-year students. Coll Res Lib. 2000 Jan;61(1):65–72.

- 15. Holman L. A comparison of computer assisted instruction and classroom bibliographic instruction. Ref User Serv Q. 2000;40(1):53–60.

- 16. Kaplowitz J., Contini J. Computer-assisted instruction: is it an option for bibliographic instruction in large undergraduate survey classes. Coll Res Lib. 1998 Jan;59(1):19–27.

- 17. Nichols J., Shaffer B., Shockey K. Changing the face of instruction: is online or in-class more effective. Coll Res Lib. 2003 Sep;64(5):378–88.

- 18. Orme W.A. A study on the residual impact of the Texas Information Literacy Tutorial on the information-seeking ability of first year college students. Coll Res Lib. 2004 May;65(3):205–15.

- 19. Dixon L., Garrett M., Smith R., Wallace A. Building library skills: computer assisted instruction for undergraduates. Res Strategies. 1995;13(4):196–208.

- 20. Churkovich M., Oughtred C. Can an online tutorial pass the test for library instruction? an evaluation and comparison of library skills instruction methods for first year students at Deakin University. Aust Acad Res Lib. 2002 Mar;33(1):25–38.

- 21. Michel S. What do they really think? assessing student and faculty perspectives of a web-based tutorial to library research. Coll Res Lib. 2001 Jul;62(4):317–32.

- 22. Joint N. Information literacy evaluation: moving towards virtual learning environments. Electronic Libr. 2003;21(4):322–34.

- 23. Mount Sinai School of Medicine's PubMed tutorial [Internet]. New York, NY: Mount Sinai School of Medicine; 2004. [rev 2007; cited 4 Jan 2008]. <http://www.mssm.edu/library/tutorials/pubmed.html>.

- 24. Sweeney R.T. Reinventing library buildings and services for the Millennial generation. Libr Admin Manag. 2005;19(4):165.