Abstract

What is the relationship between retinotopy and object selectivity in human lateral occipital (LO) cortex? We used functional magnetic resonance imaging (fMRI) to examine sensitivity to retinal position and category in LO, an object-selective region positioned posterior to MT along the lateral cortical surface. Six subjects participated in phase-encoded retinotopic mapping experiments as well as block-design experiments in which objects from six different categories were presented at six distinct positions in the visual field. We found substantial position modulation in LO using standard nonobject retinotopic mapping stimuli; this modulation extended beyond the boundaries of visual field maps LO-1 and LO-2. Further, LO showed a pronounced lower visual field bias: more LO voxels represented the lower contralateral visual field, and the mean LO response was higher to objects presented below fixation than above fixation. However, eccentricity effects produced by retinotopic mapping stimuli and objects differed. Whereas LO voxels preferred a range of eccentricities lying mostly outside the fovea in the retinotopic mapping experiment, LO responses were strongest to foveally presented objects. Finally, we found a stronger effect of position than category on both the mean LO response and the distributed response across voxels. Overall, these results demonstrate that retinal position exhibits strong effects on neural response in LO and indicate that these position effects may be explained by retinotopic organization.

INTRODUCTION

The human visual system is capable of categorizing and identifying objects quickly and efficiently (Grill-Spector and Kanwisher 2005). This ability is robust to changes in viewing conditions such as view angle (Fang et al. 2006; Logothetis et al. 1994) or retinal position (Biederman and Cooper 1991; Ellis et al. 1989; Thorpe et al. 2001). A key goal in vision science is to understand how the brain generalizes across viewing conditions to enable this recognition behavior.

Object recognition is mediated by a hierarchy of visual cortical regions, extending from primary visual cortex (V1) in the occipital lobe anteriorly and ventrally along the cortical surface. Early visual cortex contains neurons with small visual receptive fields (RFs) organized topographically into a series of maps of the visual field (Sereno et al. 1995; Wandell et al. 2005). The representation of visual input in these regions is explicitly connected to the retinal position of local features. Conversely, neurons in anterior, “high-level” regions respond to more complex features, such as object shape (Malach et al. 1995; Tanaka 1996b) or category (Hung et al. 2005; Kanwisher et al. 1997), which may be integrated across a large extent of the visual field. Functional magnetic resonance imaging (fMRI) has identified several high-level object-selective regions in humans, including the lateral occipital complex (LOC) (Malach et al. 1995), which responds preferentially to object over nonobject stimuli. LOC is commonly divided into a lateral and posterior region, located laterally along occipital cortex and posterior to MT (LO) and a ventral and anterior subregion in the posterior part of the fusiform gyrus and occipitotemporal sulcus (pFus/pOTS) (Grill-Spector et al. 1999; Sayres and Grill-Spector 2006). Neural activity in these regions correlates with object recognition performance (Bar et al. 2001; Grill-Spector et al. 2000; James et al. 2000).

An outstanding question about higher-order visual regions such as LO is the degree to which neurons in these regions abstract information from the retinal inputs. Electrophysiology studies in monkeys suggest that high-level visual cortex may retain a substantial amount of sensitivity to retinal position. While results from inferotemporal (IT) neurons indicate that stimulus selectivity is preserved across different retinal positions (Ito et al. 1995; Kobatake and Tanaka 1994; Logothetis et al. 1995; Rolls 2000; Tanaka 1996a), neural firing rates are nonetheless modulated with position changes. Traditional RF estimates (Op De Beeck and Vogels 2000; Yoshor et al. 2007) indicate RF sizes of 8–20° in these regions. However, recent evidence indicates an unexpectedly strong sensitivity to position in some anterior IT neurons (DiCarlo and Maunsell 2003) for small shape stimuli.

There is growing evidence for position effects in human object-selective regions. fMRI studies have shown lateralization effects (higher response to contralateral compared with ipsilateral object stimuli) in LO (Grill-Spector et al. 1998; Hemond et al. 2007; McKyton and Zohary 2007; Niemeier et al. 2005). fMRI-adaptation studies have demonstrated that LO recovers from adaptation when the retinal position of objects is changed (Grill-Spector et al. 1999), suggesting sensitivity to object position. In addition, eccentricity bias effects have been reported more broadly across extrastriate cortex: for instance, face-preferring regions prefer foveal stimulus positions while house-preferring regions prefer peripheral positions (Hasson et al. 2003; Levy et al. 2001, 2004).

A largely separate body of literature has examined position effects using retinotopic mapping methods in which the visual field is mapped with a smoothly moving stimulus (Engel et al. 1997). Initial analyses of lateral occipital regions did not find strong evidence of retinotopic organization (Tootell and Hadjikhani 2001) and noted a discontinuity in the eccentricity representation around this region. More recently, however, there have been some reports of visual field maps that lie in the vicinity of LO. Larsson and Heeger (2006) identified two hemifield representations, designated LO-1 and LO-2, that lie anterior to dorsal V3 and V3a/b, and posterior to the hMT+ complex. Hansen and colleagues (2007) identified a region designated as a dorsal part of V4, the visual field coverage of which complements that of ventral V4 (but see Brewer et al. 2005) and the anatomical location of which likely corresponds to part or all of LO-1.

However, the relationship between visual field maps such as LO-1 and LO-2 and the object-selective region LO is not clear. While Larsson and Heeger (2006) describe LO-1 and LO-2 as belonging to the posterior extent of the lateral occipital complex (i.e., LO), it is unclear if these maps comprise the entirety of LO, or if both LO-1 and LO-2 would be included in the conventional definition of LO.

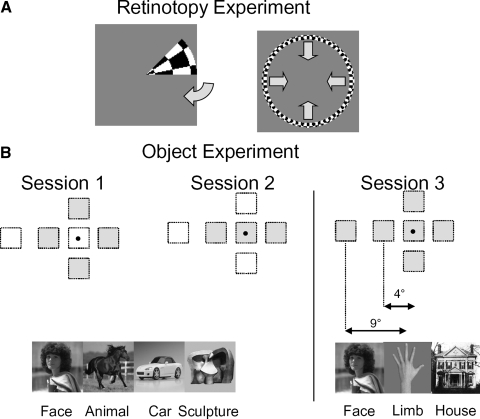

In this study, we sought to directly relate measurements of object selectivity with measurements of retinotopy. We localized the posterior extent of the LOC, region LO, using standard localizer methods (Grill-Spector et al. 2001; Malach et al. 1995). We then measured the degree of retinotopy in this region with conventional phase-encoded retinotopic mapping methods (Fig. 1A). We further tested the relationship between object category and position effects by performing a series of object experiments in which we presented different object categories (faces, animals, cars, sculptures, body parts, and houses) at different retinal positions in a block design (Fig. 1B) and examined to what extent position and category modulate LO responses. We asked: does LO have a consistent retinotopy? Does LO overlap with the visual field maps LO-1 and LO-2 (Larsson and Heeger 2006)? How do position effects measured with standard retinotopic methods relate to position effects for object stimuli? Do position and category separately modulate LO responses, and if so, what is the relative magnitude of each factor?

FIG. 1.

Design of our experiments. A: illustration of the stimuli in the retinotopic mapping experiments. The stimulus for 1 wedge and ring position is shown; arrows indicate the direction of motion of the wedge and ring. B: illustration of the 6 stimulus positions and object categories mapped in the object experiments and example object images for category. Shaded squares indicate the stimulus positions used in different sessions. The dot indicates the fixation point.

METHODS

Subjects

All MR imaging was performed at the Lucas Center at Stanford University. Six subjects participated in the study (4 males, 2 females), ages 25–37 yr. All subjects were right-handed and had normal or corrected-to-normal vision. Two subjects were the authors. Each subject participated in a session of the retinotopic mapping experiment, three scanning sessions for the block-design object experiment, and one functional localizer session for identifying object-selective regions of interest. All experiments were undertaken with the written consent of each subject, and procedures were approved in advance by the Stanford Internal Review Board on Human Subjects Research.

Stimuli

Stimuli were projected on to a screen mounted on the cradle for the imaging coil. Subjects lay on their backs in the bore of the MR scanner and viewed the screen through an angled first-surface mirror positioned ∼8 cm in front of their eyes. The mean distance between subjects' eyes and the stimulus image was 22 cm; the maximum size subtended by a stimulus at this distance was 28.5° (14.25° radius from the fovea).
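The viewing geometry above can be checked with the standard visual-angle relation, size = 2·d·tan(θ/2). The following is a minimal sketch, assuming a flat screen perpendicular to the line of sight and an extent centered on fixation; the function names are illustrative and not part of the original methods. Under these assumptions, 28.5° at 22 cm corresponds to a stimulus extent of roughly 11 cm.

```python
import math


def visual_angle_deg(size_cm: float, distance_cm: float) -> float:
    """Full visual angle (degrees) subtended by an extent centered on the line of sight."""
    return 2.0 * math.degrees(math.atan(size_cm / (2.0 * distance_cm)))


def extent_cm(angle_deg: float, distance_cm: float) -> float:
    """Physical extent (cm) that subtends `angle_deg` at `distance_cm`."""
    return 2.0 * distance_cm * math.tan(math.radians(angle_deg) / 2.0)


# Values taken from the text: 22 cm viewing distance, 28.5 deg maximum stimulus size.
viewing_distance_cm = 22.0
max_extent = extent_cm(28.5, viewing_distance_cm)
print(f"maximum stimulus extent: {max_extent:.1f} cm")
```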

LO LOCALIZER EXPERIMENT.

Each subject participated in one localizer session to define the LOC and region LO within this complex. Stimuli during these sessions were grayscale images of objects (faces, animals, cars, and sculptures) and scrambled objects (images divided into an 8 × 8 grid of tiles with the tile positions shuffled, see Supplemental Fig. S1), presented in a block design (12–16 s per block, 4–8 blocks per condition). Images were presented centrally and subtended ∼11°. Subjects fixated on a small (∼0.5°) fixation disc presented in the center of each image and were instructed to categorize the objects.

RETINOTOPIC MAPPING EXPERIMENTS.

The retinotopic mapping experiments employed clockwise rotating wedge and inward- and outward-moving ring stimuli, as described in Engel et al. (1997) and Sereno et al. (1995). These stimulus apertures contained 100% contrast checkerboard patterns with a spatial frequency of 0.85 cycle/°, phase-reversing at a temporal frequency of 2 Hz. Each scan consisted of eight stimulus presentation cycles, each lasting 24 s. Each retinotopic mapping session consisted of 2 to 4 scans of each stimulus type (ring and wedge, Fig. 1A).

For the ring stimuli, the last 2 s of each cycle consisted of a blank screen, separating the most foveal and peripheral stimuli. For the remaining 22 s, 11 rings of different sizes, with a constant separation between the inner and outer ring radii (2.8°), were presented. The positions of the rings overlapped by 50%; that is, each position in the visual field was covered by two rings, once in the inner half of the ring and once in the outer half. The innermost ring had an inner radius of 0° and appeared as a disk centered at the fovea. We verified the separability of responses between foveal and peripheral regions in the fMRI data by examining the distribution of voxels corresponding to the blank screen stimulus; there were few voxels with the phase corresponding to the onset of the blank compared with phases corresponding to ring stimuli, and eccentricity maps exclude voxels with this phase range.

The wedge stimuli consisted of 12 wedge apertures, each subtending an angle of 45°, rotating clockwise and extending out to the maximum 14° eccentricity. There was 33% overlap between each wedge and the preceding wedge.
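The 33% overlap follows from the wedge geometry if the 12 wedge positions are assumed to tile the full 360° evenly within one 24-s cycle (an assumption; the text does not state the step size explicitly). A short sketch of that arithmetic, with a hypothetical helper name:

```python
def wedge_schedule(n_positions: int = 12,
                   wedge_width_deg: float = 45.0,
                   cycle_s: float = 24.0):
    """Return (step in degrees, dwell time in s, fractional overlap, start angles).

    Assumes wedge positions are evenly spaced around the full circle and that
    each position is shown for an equal share of the cycle.
    """
    step_deg = 360.0 / n_positions
    dwell_s = cycle_s / n_positions
    # Fraction of each wedge that is shared with the preceding wedge.
    overlap = (wedge_width_deg - step_deg) / wedge_width_deg
    starts = [i * step_deg for i in range(n_positions)]
    return step_deg, dwell_s, overlap, starts


step_deg, dwell_s, overlap, starts = wedge_schedule()
print(step_deg, dwell_s, round(overlap, 3))
```

With these assumed values each wedge advances by 30° every 2 s, so 15° of each 45° wedge overlaps the preceding one.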

OBJECT EXPERIMENTS.

The object experiments utilized grayscale images of human faces, four-legged animals (e.g., dogs, horses), body parts (limbs: hands, feet, legs, and arms), cars, abstract sculptures, and houses. Objects were presented at a range of viewpoints and backgrounds (see Fig. 1B and Supplemental Fig. S1). Each experiment utilized 96–144 unique images from each category. Object images subtended 2.5° of visual angle at each position. We chose to use a constant image size because the foveal magnification factor in LO is unknown, and we wanted to vary only one parameter (position). Images were presented at six positions: centered at the fixation point (foveal); centered 4° above, below, to the left, and to the right of fixation; and centered 9° to the left of fixation. The experiment included three scanning sessions, each probing a subset of these positions (see Fig. 1B). In the first session, subjects viewed images presented 4° above, below, to the right or to the left of fixation. In the second session, subjects viewed images presented at the fixation, 4° to the left, and 4° to the right of fixation. In the third session, images were shown at all six positions.

Each session of the object experiment contained 8–12 runs of a block-design experiment. In each 12-s block different images from one category (face, animal, car, sculpture, body part, house) were shown at a specific position. Images were shown at a rate of 1 Hz. Image blocks were interleaved with baseline blocks (grayscale screen with fixation point) lasting 12 s. Each run contained one block for each position and category combination; thus the first session contained 16 blocks per run (4 categories × 4 positions), the second session 12 blocks per run, and the third session 18 blocks, split across two runs. The same set of object images was presented at each position within a scanning session. The presentation order of images was randomized across positions. For example, in the first session, the same set of 144 faces was presented in four different orders at each position.

Task.

During scanning, subjects were instructed to categorize each image, while maintaining fixation on a red fixation point (which was always present through the experiment, and which comprised a circle with ∼0.29° diam). Behavioral responses were collected during scanning using a magnet-compatible button box connected to the stimulus computer.

To ensure that subjects maintained fixation, subjects were instructed to respond within 1.5 s of a prompt, which was provided by the dimming of the fixation cross. The dimming prompt occurred randomly, with a 1.5- to 3.5-s interval between prompts, and was not synchronized to stimulus onsets. Key presses that occurred outside the 1.5-s period following a prompt were ignored. In addition, prompts whose response time spanned the transition between two blocks were excluded from behavioral analysis because the correct response was ambiguous (it could refer to the stimulus in either the first or the second block). Before scanning, subjects practiced this task to minimize false alarms. Runs for which overall categorization performance was low (<60%) due to a decrease in a subject's overall vigilance were excluded from fMRI and behavioral analyses (19 runs of 197 total runs across all subjects and sessions).

In our block-design task, accuracy did not depend on position (2-way ANOVA across sessions: all P values >0.07, ANOVAs on data for each session), which may reflect the predictability of the response (the same category repeated throughout a block). However, we note that subjects were somewhat less accurate at classifying objects at the 9° left position compared with other positions (P = 0.005, 1-tailed t-test; Table 1).

TABLE 1.

Mean behavioral performance during object experiments

| Position | Percentage Correct | Response Time, ms |
|---|---|---|
| Session 1 | | |
| Up 4° | 88.0 ± 5.2 | 705 ± 34 |
| Right 4° | 84.5 ± 7.1 | 707 ± 32 |
| Down 4° | 88.5 ± 3.5 | 720 ± 29 |
| Left 4° | 87.9 ± 4.5 | 708 ± 13 |
| Session 2 | | |
| Foveal | 81.1 ± 2.4 | 546 ± 36 |
| Right 4° | 85.4 ± 2.1 | 538 ± 17 |
| Left 4° | 82.8 ± 4.5 | 543 ± 20 |
| Session 3 | | |
| Foveal | 84.9 ± 5.3 | 739 ± 19 |
| Up 4° | 83.8 ± 3.7 | 729 ± 19 |
| Right 4° | 81.8 ± 3.9 | 698 ± 40 |
| Down 4° | 81.5 ± 1.4 | 755 ± 38 |
| Left 4° | 85.5 ± 7.0 | 727 ± 37 |
| Left 9° | 78.0 ± 1.0 | 738 ± 71 |

Values are means ± SD.

Anatomical scans

For each subject, we obtained a high-quality whole brain anatomical volume during a separate scanning session. These anatomies were collected using a 1.5 T GE Signa scanner and a head coil at the Lucas Imaging Center. Data across different functional imaging sessions were aligned to this common reference anatomy to use the same ROIs across sessions and restrict activations to gray matter. The whole brain anatomy was the average of three T1-weighted SPGR scans (TR = 1,000 ms, FA = 45°, 2 NEX, FOV = 200 mm, inplane resolution = 0.78 × 0.78 mm, slice thickness: 1.2 mm, sagittal prescription) which were aligned using the mutual-information coregistration algorithm in SPM (Maes et al. 1997): http://www.fil.ion.ucl.ac.uk/spm/. The reference anatomy was then segmented into gray and white matter using mrGray (http://white.stanford.edu/software/) and SurfRelax (http://www.cns.nyu.edu/∼jonas/software.html). The resulting segmentation was used to restrict activity to gray matter, and produce a mesh representation of the gray matter surface (Teo et al. 1997) for visualization of activations on the cortical surface.

Functional scans

Functional scans were performed using a 3T GE Signa scanner at the Stanford Lucas Imaging Center. We utilized a custom receive-only occipital quadrature RF surface coil (Nova Medical, Wilmington, MA). The dimensions of the coil are: interior dimension left/right = 9 in, exterior dimension left/right = 10⅛ in; height = 5¼ in; length = 7½ in. Subjects lay supine with the coil positioned beneath the head, and a front-angled mirror mounted overhead for viewing the stimulus.

RETINOTOPIC MAPPING EXPERIMENTS.

During retinotopic mapping sessions, we collected data from 32 oblique coronal slices oriented perpendicular to the calcarine sulcus, beginning at the posterior end of the brain and extending forward. Functional scans employed a T2*-weighted spiral-out trajectory (Glover 1999) gradient-recalled-echo pulse sequence with the following parameters: one-shot, TE = 30 ms, TR = 2,000 ms, flip angle = 76°, FOV = 200 mm, 32 slices that were 3 mm thick and with voxel size = 3.125 × 3.125 mm. fMRI data were co-registered to anatomical images taken at the same prescription. To acquire anatomical images, we used a T1-weighted SPGR pulse sequence (TR = 1,000 ms, FA = 45°, 2 NEX, FOV = 200 mm, resolution of 0.78 × 0.78 × 3 mm).

LO LOCALIZER.

We ran the LO localizer using the same resolution and prescription as the retinotopic mapping experiments described in the preceding text.

OBJECT EXPERIMENT.

Data for the object experiment were collected during three scanning sessions. For each session, we collected 26 slices oriented perpendicular to the calcarine sulcus covering occipito-temporal cortex of both cortical hemispheres, using 1.5-mm isotropic voxels. Functional scans used the following parameters: TE = 30 ms, TR = 2,000 ms, two-shot, flip angle = 77°, FOV = 192 mm. During each session, we acquired inplane anatomical images at the same prescription as the functional scans. Anatomical inplanes were used to co-register each session's data to the subject's whole brain anatomy.

Data analysis

TIME SERIES PROCESSING.

Blood-oxygen-level-dependent (BOLD) data were motion corrected via a robust affine transformation of each temporal volume in a data session to the first volume of the first scan (Nestares and Heeger 2000). This correction produced a motion estimate for each scan; scans with >1.5-mm estimated net motion were removed from the analysis. This included one retinotopy scan, and a total of 10 scans of 197 total scans from the object experiment (discarded high-motion scans were present for 3 of the 6 subjects). An average of 10 runs per session from each subject were collected that had <1.5 mm net motion.

Data were temporally high-pass filtered with a 1/40-Hz cutoff. We converted the time series into percent signal change by dividing each temporal volume by a baseline volume (which was an estimate of the mean response across a scan). As a baseline volume, we used the spatially low-pass-filtered version of the temporal mean BOLD image as described in Nestares and Heeger (2000). This procedure is similar to dividing each voxel by its mean response, but instead of using only each voxel's own mean response, the estimate of the mean response is also based on its neighboring voxels. We used this baseline to control for potential effects of large draining veins and partial volume effects (see Ress et al. 2007).
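The two preprocessing steps can be sketched for a single voxel as follows. This is an illustration under stated assumptions, not the pipeline used in the study: the filter is an ideal (brick-wall) Fourier filter because the text does not specify the filter type, the baseline is passed in as a single number rather than computed from a spatially smoothed mean image, and subtracting 1 so that the baseline maps to 0% is a convention assumed here. Function names are hypothetical.

```python
import cmath
import math


def highpass_fft(ts, tr_s=2.0, cutoff_hz=1.0 / 40.0):
    """Remove Fourier components below `cutoff_hz`, keeping the mean (k = 0)."""
    n = len(ts)
    # Naive O(n^2) DFT; adequate for a short single-voxel time series.
    spectrum = [
        sum(x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(ts))
        for k in range(n)
    ]
    for k in range(1, n):
        freq_hz = min(k, n - k) / (n * tr_s)  # frequency of bin k and its mirror
        if freq_hz < cutoff_hz:
            spectrum[k] = 0
    # Inverse DFT; the imaginary part is numerical noise for real input.
    return [
        (sum(c * cmath.exp(2j * math.pi * k * t / n)
             for k, c in enumerate(spectrum)) / n).real
        for t in range(n)
    ]


def percent_signal_change(ts, baseline):
    """Express a time series as percent change relative to `baseline`."""
    return [100.0 * (x / baseline - 1.0) for x in ts]


# Synthetic voxel: mean of 100, a slow drift, and an 8-cycle modulation.
n_vols = 96
raw = [100.0
       + 3.0 * math.sin(2 * math.pi * t / n_vols)       # drift below cutoff
       + 1.0 * math.sin(2 * math.pi * 8 * t / n_vols)   # signal above cutoff
       for t in range(n_vols)]
filtered = highpass_fft(raw)
psc = percent_signal_change(filtered, baseline=100.0)
```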

RETINOTOPIC MAPPING ANALYSIS.

Our retinotopic mapping experiments employed a standard phase-encoded retinotopy design (Engel et al. 1997; Sereno et al. 1995). For each subject, we analyzed the average of three to four polar angle and wedge mapping scans. Stimulus-driven modulation of the BOLD response in each functional voxel was identified by measuring the signal coherence of each voxel's time series at the stimulus frequency compared with all other frequencies (Engel et al. 1997). The phase was measured as the zero-crossing point of the sinusoid predictor relative to the start of each cycle, when the BOLD response was rising (∼6 s before the peak response). This phase was mapped into physical units by identifying the stimulus parameter (polar angle or eccentricity) corresponding to this time.
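As an illustration of the coherence and phase measures, the sketch below computes both for one voxel. It assumes a common normalization (amplitude at the stimulus frequency divided by the square root of the summed power over all nonzero frequencies up to Nyquist); the text does not give the exact formula, so this choice is an assumption, as are the function names. The rising zero crossing is taken to be a quarter cycle before the peak of the fitted sinusoid, which for a 24-s cycle is the 6-s offset mentioned above. No hemodynamic delay correction is included.

```python
import cmath
import math


def half_spectrum(ts):
    """DFT coefficients for k = 0..n/2 of the mean-removed time series."""
    n = len(ts)
    mean = sum(ts) / n
    return [
        sum((x - mean) * cmath.exp(-2j * math.pi * k * t / n)
            for t, x in enumerate(ts))
        for k in range(n // 2 + 1)
    ]


def coherence_and_phase(ts, n_cycles):
    """Return (coherence, phase in radians) at `n_cycles` cycles per scan.

    The phase is the delay of the best-fitting cosine, in [0, 2*pi).
    """
    spec = half_spectrum(ts)
    total = math.sqrt(sum(abs(c) ** 2 for c in spec[1:]))
    if total == 0.0:
        return 0.0, 0.0
    coherence = abs(spec[n_cycles]) / total
    phase = (-cmath.phase(spec[n_cycles])) % (2.0 * math.pi)
    return coherence, phase


def rising_zero_crossing_s(phase, cycle_s=24.0):
    """Time within the cycle at which the fitted sinusoid crosses zero while rising."""
    return ((phase / (2.0 * math.pi) - 0.25) % 1.0) * cycle_s


# Synthetic voxel: 8 cycles over 96 volumes (TR = 2 s), peaking 12 s into each cycle.
n_vols, n_cycles = 96, 8
voxel = [math.cos(2 * math.pi * n_cycles * t / n_vols - math.pi) for t in range(n_vols)]
coh, ph = coherence_and_phase(voxel, n_cycles)
print(round(coh, 3), round(rising_zero_crossing_s(ph), 2))
```

Converting the zero-crossing time to polar angle or eccentricity then requires the stimulus position at each time point, which depends on the stimulus schedule.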

To assess whether our LO data reflected significant modulations with position, we compared the coherence distribution in LO to that in a control ROI that was not expected to exhibit position effects. Our control ROI was a 30-mm gray matter disk defined on the dorsal bank of the superior temporal sulcus. For each subject and hemisphere, we randomly selected a number of voxels from this ROI equal to the number of voxels in LO for that hemisphere. We extracted the coherence values for these voxels from the same polar angle and eccentricity-mapping scans. We tested whether the observed distributions differed from this control ROI using a nonparametric Kolmogorov-Smirnov (K-S) test.
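The control comparison can be sketched as a size-matched random draw from the control ROI followed by a two-sample K-S statistic. The sketch below computes only the statistic D (the maximum distance between the two empirical cumulative distributions); a P value would come from a statistics library, for example `scipy.stats.ks_2samp`. The function names, the seed, and the synthetic coherence values are illustrative assumptions.

```python
import random
from bisect import bisect_right


def matched_control_sample(control_values, n_lo_voxels, seed=0):
    """Randomly draw as many control-ROI voxels as there are LO voxels."""
    rng = random.Random(seed)
    return rng.sample(control_values, n_lo_voxels)


def ks_statistic(sample_a, sample_b):
    """Two-sample Kolmogorov-Smirnov statistic: max |ECDF_a(x) - ECDF_b(x)|."""
    a_sorted, b_sorted = sorted(sample_a), sorted(sample_b)
    d = 0.0
    # The supremum is attained at one of the observed values.
    for x in a_sorted + b_sorted:
        ecdf_a = bisect_right(a_sorted, x) / len(a_sorted)
        ecdf_b = bisect_right(b_sorted, x) / len(b_sorted)
        d = max(d, abs(ecdf_a - ecdf_b))
    return d


# Synthetic coherence values for illustration only.
rng = random.Random(1)
lo_coherence = [rng.uniform(0.1, 0.6) for _ in range(200)]
control_coherence = [rng.uniform(0.0, 0.3) for _ in range(1000)]
control_subset = matched_control_sample(control_coherence, len(lo_coherence))
print(round(ks_statistic(lo_coherence, control_subset), 3))
```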

GENERAL LINEAR MODEL.

We applied a general linear model (GLM) to object experiment data on a voxel-by-voxel basis. We used the hemodynamic impulse response function used in SPM2 (http://www.fil.ion.ucl.ac.uk/spm/). Data were not spatially smoothed. To avoid potential false positive identification of voxels, we used a fairly stringent threshold for identifying LO (objects > scrambled object images, P < 10⁻⁵, voxel level, uncorrected).

We estimated BOLD response amplitudes for each stimulus condition by computing the beta coefficients from a GLM applied to the mean preprocessed (high-pass filtered and converted to percentage signal change) time series in the ROI for each subject, and computing the mean and SE of the mean across subjects. The beta value for each predictor reflects the difference between times when the stimulus is shown and the blank baseline period rather than differences relative to the mean signal. Because the predictors in the design matrix were scaled to have a maximum absolute value of 1, the values of the beta coefficients match the units of the time series, which is in units of percentage signal change.
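The scaling argument can be illustrated with a one-condition example: when a block predictor is scaled to a peak of 1 and the time series is in percent signal change, the fitted beta is itself in percent signal change relative to the blank baseline. The sketch below is a simplification under stated assumptions: it uses a single gamma-shaped kernel as a stand-in for the SPM2 response function (whose exact form is not reproduced here), fits one predictor plus a constant rather than the full design matrix, and uses hypothetical function names.

```python
import math


def gamma_hrf(tr_s=2.0, duration_s=24.0, shape=6.0, scale=1.0):
    """Gamma-shaped impulse response sampled at the TR (illustrative stand-in)."""
    times = [i * tr_s for i in range(int(duration_s / tr_s))]
    return [t ** (shape - 1.0) * math.exp(-t / scale) for t in times]


def block_predictor(n_vols, onsets_vol, block_len_vol, hrf):
    """Boxcar for the given block onsets, convolved with `hrf`, scaled to peak 1."""
    boxcar = [0.0] * n_vols
    for onset in onsets_vol:
        for i in range(onset, min(onset + block_len_vol, n_vols)):
            boxcar[i] = 1.0
    convolved = [
        sum(boxcar[t - k] * hrf[k] for k in range(len(hrf)) if t - k >= 0)
        for t in range(n_vols)
    ]
    peak = max(abs(v) for v in convolved)
    return [v / peak for v in convolved]


def fit_beta(ts, predictor):
    """Least-squares fit of ts = beta * predictor + intercept."""
    n = len(ts)
    mean_x = sum(predictor) / n
    mean_y = sum(ts) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(predictor, ts))
    sxx = sum((x - mean_x) ** 2 for x in predictor)
    beta = sxy / sxx
    return beta, mean_y - beta * mean_x


# Two 12-s blocks (6 volumes at TR = 2 s) separated by baseline periods.
predictor = block_predictor(n_vols=48, onsets_vol=[6, 30], block_len_vol=6, hrf=gamma_hrf())
# Synthetic ROI time series: 2% response riding on a 0.5% offset.
roi_ts = [0.5 + 2.0 * p for p in predictor]
beta, intercept = fit_beta(roi_ts, predictor)
print(round(beta, 3), round(intercept, 3))
```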

ROI selection

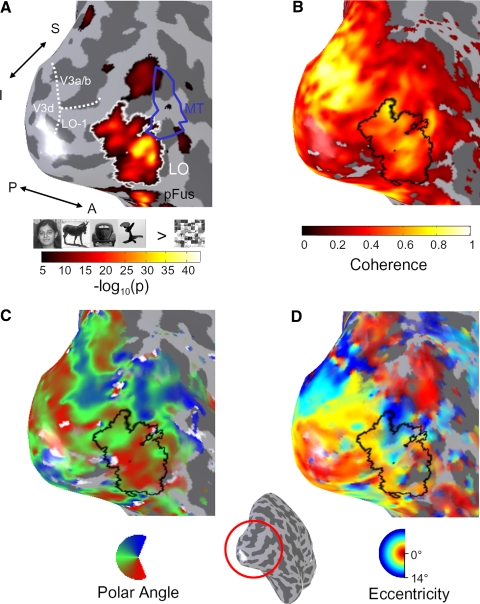

We identified LO based on an independent localizer scan using both functional and anatomical cues. LO was defined as a region that responded more strongly to objects (faces, animals, cars, and sculptures) than to scrambled objects (P < 10⁻⁵, GLM applied to each voxel) and was located along the lateral extent of the occipital lobe (Malach et al. 1995), adjacent and posterior to MT (Fig. 2A). This region is anatomically distinct from a separate object-selective region along the posterior fusiform gyrus and occipito-temporal sulcus (referred to as pFus or pOTS). All analyses in this paper are focused on the properties of LO.

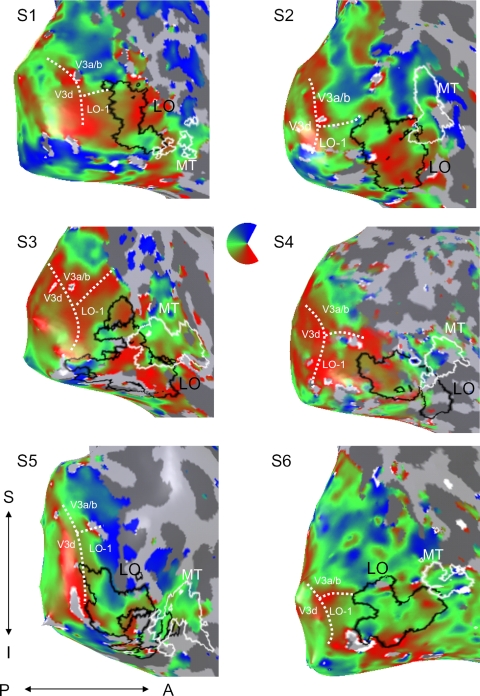

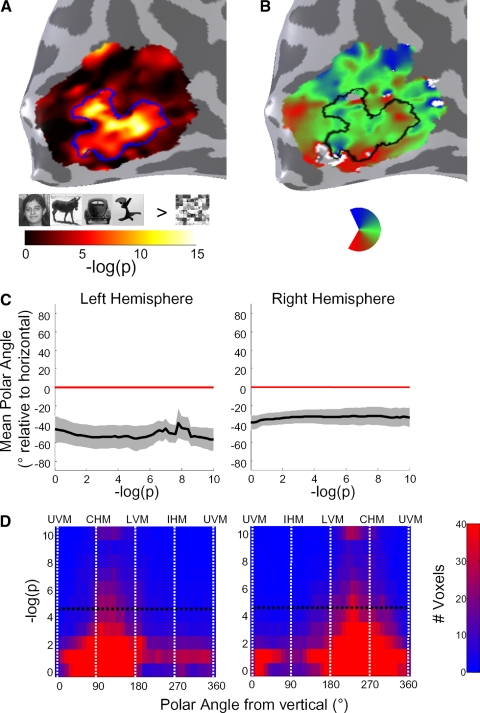

FIG. 2.

Activation maps in the lateral occipital (LO) object-selective region during localizer and retinotopy experiments. Results are shown on the inflated cortical surface representation of subject S2, zoomed in on the lateral occipital aspect of the right hemisphere. Inset: zoomed-out view of the right-hemisphere cortical reconstruction, showing the location of the zoomed region (red circle). A: localization of region LO. Overlay indicates −log₁₀(P) value of a t-test between object images (faces, cars, sculptures, and animals) and scrambled object images during a localizer scan, thresholded at P < 10⁻⁵, uncorrected. LO is outlined in white. Area MT is outlined in blue, as defined in a separate localizer. The boundary between areas V3d, V3a/b, and LO-1 is shown by the dotted white line. B: coherence map during a polar angle-mapping scan. C: polar angle map. D: eccentricity map. For B–D, overlays are thresholded at a coherence of 0.20, and LO is outlined in black. Direction labels: S, superior; I, inferior; P, posterior; A, anterior.

ROIs defined from visual field maps were identified by reversals in the representation of polar angle as described by Engel et al. (1997), Sereno et al. (1995), and Tootell et al. (1997). Region LO-1 was located anterior to V3d, adjacent to a forking (“Y”) of the lower visual representation near the boundaries with V3d and V3a/b as described in Larsson and Heeger (2006). Region LO-2, another hemifield representation described in Larsson and Heeger (2006), was located adjacent to LO-1 and had a visual field reversal (Fig. 3A). Our region labeled “V3a/b” includes V3a and, in some subjects, V3b as described in Wandell et al. (2007). Note that Smith et al. (1998) describe a slightly different V3b definition that would correspond to the lower quarter field of LO-1 in our study.

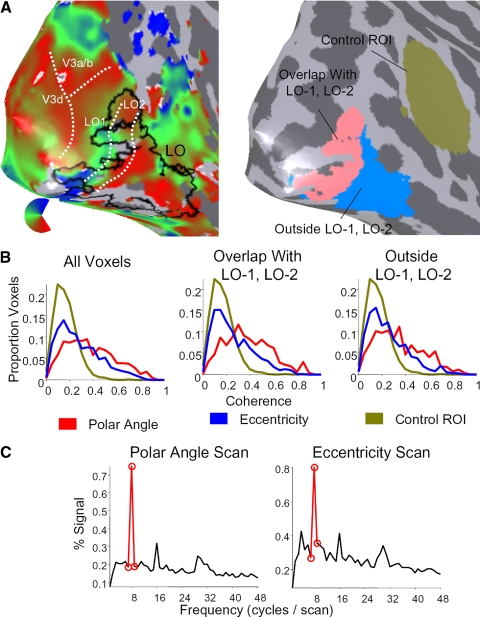

FIG. 3.

Retinotopic modulation for voxels in LO overlapping with, and outside of, visual field maps LO-1 and LO-2. A: separation of the object-selective region LO into 2 subsets. Left: polar angle map shown on right hemisphere cortical surface of subject S3 (same view as in Fig. 2) with boundaries between regions LO-1 and LO-2 marked in white, and the outline of LO marked in black. Right: 2 subsets of LO shown in the same view. The pink region of interest (ROI) is the region that overlaps with LO-1 and LO-2, whereas the blue ROI is the region lying outside these maps. The ochre ROI is a control ROI positioned along the superior temporal sulcus. B: histograms of signal coherence at the stimulus frequency during polar angle scans (red), eccentricity scans (blue), and in the control ROI (ochre; combined coherence from both polar angle and eccentricity scans). Left: data from all LO voxels; the middle panel shows data from the subset of LO which overlaps with visual field maps LO-1 or LO-2; right: data from the subset of LO lying outside LO-1 and LO-2. C: mean Fourier spectrum across all voxels for the subset of LO lying outside LO-1 and LO-2, during the polar angle (left) and eccentricity (right) scans. Power at the stimulus frequency is indicated in red.

We also defined subregions within LO for analyzing block experiment data (Fig. 6) based on the preferred polar angle and eccentricity estimates during retinotopy scans. For each subset, we selected voxels with a coherence of ≥0.20. The polar angle-defined bands were: “upper-preferring” voxels, with preferred polar angle within 45° of the upper vertical meridian (UVM); “horizontal-preferring” voxels, with preferred polar angles 45–135° from UVM in the contralateral visual field; and “lower-preferring” voxels, with preferred angles within 45° of the lower vertical meridian (LVM). Eccentricity bands were selected to include eccentricities that corresponded to the position of the object stimuli in the block design experiment. The eccentricity-defined subregions were: “foveal” voxels, with preferred eccentricity within 2° of the fovea; “2–6°” voxels; “7–11°” voxels; and “>11°” voxels, with preferred eccentricities between 11 and 14° from the fovea (outside the location where objects were presented).
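The band definitions above amount to a simple voxel classification rule, sketched here. How voxels falling exactly on a band boundary are assigned is not stated in the text, so the inclusive and exclusive edges below are assumptions, as are the function names and band labels. Voxels with preferred eccentricity between 6° and 7° fall in none of the listed bands and are left unassigned.

```python
COHERENCE_THRESHOLD = 0.20


def polar_band(angle_from_uvm_deg, coherence):
    """Classify a voxel by preferred polar angle, measured from the UVM (0-180 deg)
    within the contralateral visual field. Returns None below threshold."""
    if coherence < COHERENCE_THRESHOLD:
        return None
    if angle_from_uvm_deg <= 45.0:
        return "upper"
    if angle_from_uvm_deg >= 135.0:
        return "lower"
    return "horizontal"


def eccentricity_band(ecc_deg, coherence):
    """Classify a voxel by preferred eccentricity (deg). Returns None if the voxel
    is below threshold or falls outside every listed band."""
    if coherence < COHERENCE_THRESHOLD:
        return None
    if ecc_deg <= 2.0:
        return "foveal"
    if ecc_deg <= 6.0:
        return "2-6"
    if 7.0 <= ecc_deg <= 11.0:
        return "7-11"
    if 11.0 < ecc_deg <= 14.0:
        return ">11"
    return None


# Example voxels as (angle from UVM, eccentricity, coherence); values are made up.
voxels = [(160.0, 3.5, 0.42), (90.0, 9.0, 0.25), (20.0, 1.0, 0.10)]
for angle, ecc, coh in voxels:
    print(polar_band(angle, coh), eccentricity_band(ecc, coh))
```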

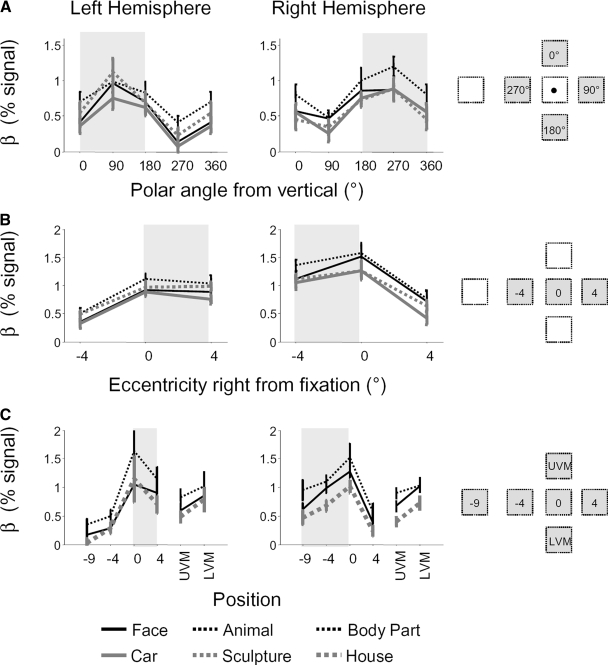

FIG. 6.

A: mean response amplitudes across LO to all objects compared with the baseline fixation condition for stimuli at 4 different polar angles with the same eccentricity (4°). B: mean response within 3 subsets of LO. Solid blue: voxels that preferred upper visual angles; dashed green: voxels that preferred horizontal visual angles; dotted red: voxels that preferred lower visual angles. For consistency with Fig. 5, the 0 = 360° condition data point is repeated. C: mean LO response to different eccentricities along the horizontal meridian relative to the fixation baseline. D: LO response to different eccentricities in 4 subsets of LO: solid red: preferred eccentricity is within 2° of the fovea; dashed yellow: 2–6° eccentricity; dotted cyan: 7–11° eccentricity; dash-dot blue: 11–14° eccentricity. Data are presented from session 3 in the object experiment; error bars represent SE across subjects. The visual field contralateral to each hemisphere is shaded.

PATTERN ANALYSES OF THE DISTRIBUTED RESPONSE ACROSS AN ROI.

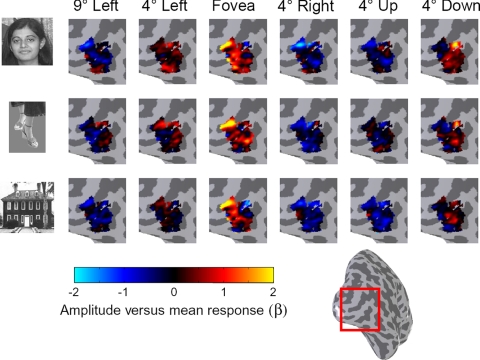

A distributed response pattern for each stimulus condition was estimated from the voxel-based GLM betas for each condition (Figs. 8 and 9). This analysis was restricted to LO voxels for which the GLM explained ≥10% of the variance of the voxel's time series during the object experiment.

FIG. 8.

Example LO distributed response patterns to different object categories and stimulus positions shown on the lateral aspect of the right hemisphere cortical surface for subject S2. Each panel shows the amplitude (beta weight from a general linear model) after subtracting the mean beta in each voxel across all conditions. Inset: inflated right cortical hemisphere, with red square indicating the zoomed region.

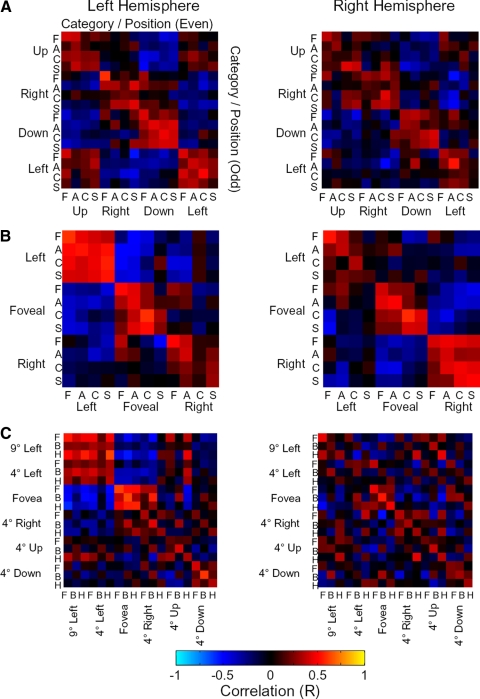

FIG. 9.

Mean cross-correlation matrices across subjects for the object experiment. Each element represents the correlation coefficient between LO response patterns for 2 conditions in even and odd runs. A: session 1 data; B: session 2 data; C: session 3 data. Abbreviations indicate categories of objects: F, face; A, animal; C, car; S, sculpture; B, body part; H, house. Positions are indicated at left and at the bottom.

The response pattern to a given stimulus (Fig. 8) was represented as a vector of length N (where N is the number of voxels exceeding the 10% threshold within 1 subject and 1 hemisphere). We subtracted the mean response (mean beta) across all stimuli from each voxel (see Haxby et al. 2001). This step controls for potential voxel effects (e.g., highly responsive vs. weakly responsive voxels), which may otherwise drive cross-correlations between response patterns.

To perform split-half analyses (Figs. 9 and 10 and Supplemental Figs. S6 and S7), we divided the data from each session into two independent subsets corresponding to even and odd runs. We computed the response pattern separately for each subset and calculated the Pearson's correlation coefficient (R) between pairs of response patterns to each stimulus. We computed the cross-correlation matrix of these coefficients across all stimulus pairings separately for each subject and hemisphere, and then computed the mean cross-correlation matrix across subjects (Fig. 9).
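The split-half procedure for one subject and hemisphere can be sketched as follows. Patterns are stored as a mapping from condition label to a list of per-voxel betas. Whether the voxel mean was removed within each half or over the whole session is not stated, so removing it within each half is an assumption here; the function names and example values are illustrative.

```python
import math


def center_voxels(patterns):
    """Subtract each voxel's mean beta across all conditions."""
    conditions = list(patterns)
    n_voxels = len(patterns[conditions[0]])
    voxel_means = [
        sum(patterns[c][v] for c in conditions) / len(conditions)
        for v in range(n_voxels)
    ]
    return {
        c: [patterns[c][v] - voxel_means[v] for v in range(n_voxels)]
        for c in conditions
    }


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length vectors."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def split_half_matrix(even_patterns, odd_patterns):
    """Correlate every even-run pattern with every odd-run pattern."""
    even_c = center_voxels(even_patterns)
    odd_c = center_voxels(odd_patterns)
    return {
        (a, b): pearson_r(even_c[a], odd_c[b])
        for a in even_c
        for b in odd_c
    }


# Toy betas for two conditions over four voxels (values are made up).
even = {"face_left": [1.0, 2.0, 3.0, 4.0], "face_right": [4.0, 3.0, 2.0, 1.0]}
odd = {"face_left": [1.1, 2.1, 2.9, 4.2], "face_right": [3.9, 3.2, 1.8, 1.0]}
matrix = split_half_matrix(even, odd)
print(round(matrix[("face_left", "face_left")], 2))
```

Averaging such matrices across subjects and hemispheres gives a group matrix of the kind shown in Fig. 9.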

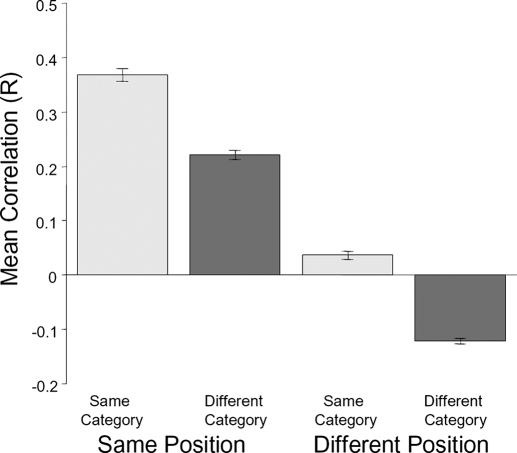

FIG. 10.

Mean correlations for different types of comparisons in the split-half analysis. Data reflect correlations from all 3 sessions in the object experiment. Error bars represent SE across all correlation coefficients.

RESULTS

Retinal position sensitivity in LO

LO was defined in each subject based on a conjunction of functional (higher responses to objects than scrambled objects, P < 10⁻⁵) and anatomical cues (see methods, Figs. 2A and 4). Region LO is located along the lateral surface of occipital cortex with the superior end roughly between V3a/b and MT, extending ventrally. In some subjects, LO overlapped somewhat with MT as per previous reports (Kourtzi et al. 2002). We observed consistent position modulation in LO as revealed by several measures. First, LO exhibited significant coherence values during retinotopic phase mapping experiments, reflecting strong BOLD modulation at the stimulus frequency compared with other frequencies (Engel et al. 1997) (Fig. 2B). Second, the preferred polar angle within LO varied smoothly along the cortical surface with a large proportion of voxels preferring contralateral lower visual field stimulation (Fig. 2C). Third, the representation of eccentricity proceeded smoothly along LO, from a confluent fovea near the occipital pole to a peripheral representation at the dorsal and anterior end (Fig. 2D). We analyzed each of these retinal position effects in turn, but first asked how much of this modulation results from the nearby visual field maps of LO-1 and LO-2.

FIG. 4.

The location of LO in relation to polar angle maps in individual subjects. The inflated right hemisphere cortical surface for each subject is shown, zoomed in on the lateral occipital aspect. Left hemisphere data are shown in Supplementary Fig. S2. The polar angle map is thresholded showing voxels with a coherence >0.20 (see methods). LO is outlined in black. MT is marked in white. The boundary (“Y” fork in lower representation) between V3d, V3a/b, and LO-1 is indicated by the dashed white lines. (LO-2 is not indicated to keep the outline of LO clear.) Direction labels: S, superior; I, inferior; P, posterior; A, anterior.

Relation of object-selective LO to visual field maps LO-1 and LO-2

We compared the location of our object-selective ROI, LO, to the visual field maps, LO-1 and LO-2 (Larsson and Heeger 2006). We first defined LO-1 and LO-2 on the cortical hemispheres for which the reversals defining the maps were sufficiently clear (see methods, Fig. 4 and Supplemental Fig. S2, Table 2). We were able to identify LO-1 in 10 of 12 hemispheres, and LO-2 in 6 of 12 hemispheres. For these hemispheres, we divided LO into two regions: one that overlapped with LO-1 and LO-2, and one that lay outside this region (Fig. 3A). LO-1 and LO-2 exhibited a modest degree of overlap with LO (see Table 2). On average, 8.1 ± 10.0% of LO voxels overlapped with LO-1, and 18.8 ± 11.8% of LO voxels overlapped with LO-2.

TABLE 2.

Measurement of the overlap between LO and LO-1 and LO-2 for each subject and hemisphere

| Subject | Hemisphere | Defined? | LO Voxels, mm³ | Overlap Voxels, mm³ | Percentage |
|---|---|---|---|---|---|
| LO-1 | | | | | |
| S1 | LH | Y | 2439 | 423 | 17.3 |
| S1 | RH | Y | 1106 | 95 | 8.6 |
| S2 | LH | Y | 1764 | 0 | 0 |
| S2 | RH | Y | 3878 | 0 | 0 |
| S3 | LH | Y | 2603 | 0 | 0 |
| S3 | RH | Y | 2708 | 191 | 7.1 |
| S4 | LH | N | 806 | 0 | 0 |
| S4 | RH | Y | 2170 | 0 | 0 |
| S5 | LH | Y | 1982 | 579 | 29.2 |
| S5 | RH | Y | 1735 | 279 | 16.1 |
| S6 | LH | N | 1725 | 0 | 0 |
| S6 | RH | Y | 1416 | 31 | 2.2 |
| LO-2 | | | | | |
| S1 | LH | Y | 2439 | 126 | 5.2 |
| S1 | RH | Y | 1106 | 334 | 30.2 |
| S2 | LH | N | 1764 | 0 | 0 |
| S2 | RH | Y | 3878 | 219 | 5.6 |
| S3 | LH | N | 2603 | 0 | 0 |
| S3 | RH | Y | 2708 | 874 | 32.3 |
| S4 | LH | N | 806 | 0 | 0 |
| S4 | RH | N | 2170 | 0 | 0 |
| S5 | LH | Y | 1982 | 322 | 16.2 |
| S5 | RH | N | 1735 | 0 | 0 |
| S6 | LH | N | 1725 | 0 | 0 |
| S6 | RH | Y | 1416 | 327 | 23.1 |

LO, lateral occipital.
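As a check on the summary values quoted in the text, the mean overlap percentages can be recomputed from Table 2. The sketch below uses only hemispheres in which the map was defined (rows marked Y). The ± term is assumed here to be a sample standard deviation across hemispheres; this is an inference from the numbers, not something stated in the text, and the helper name `overlap_summary` is hypothetical.

```python
import statistics

# (LO volume, overlap volume) in mm^3, copied from Table 2,
# restricted to hemispheres where the map was defined ("Y").
lo1_rows = [(2439, 423), (1106, 95), (1764, 0), (3878, 0), (2603, 0),
            (2708, 191), (2170, 0), (1982, 579), (1735, 279), (1416, 31)]
lo2_rows = [(2439, 126), (1106, 334), (3878, 219),
            (2708, 874), (1982, 322), (1416, 327)]

def overlap_summary(rows):
    """Return (mean, sample SD) of the overlap percentage across hemispheres.

    Assumption: the '±' value in the text is a sample standard deviation.
    """
    pct = [100.0 * overlap / total for total, overlap in rows]
    return statistics.mean(pct), statistics.stdev(pct)

lo1_mean, lo1_sd = overlap_summary(lo1_rows)
lo2_mean, lo2_sd = overlap_summary(lo2_rows)
print(f"LO-1: {lo1_mean:.1f} +/- {lo1_sd:.1f} %")
print(f"LO-2: {lo2_mean:.1f} +/- {lo2_sd:.1f} %")
```

Under this assumption the recomputed values agree with those reported in the text to within rounding.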

Retinotopic stimuli modulated subsets of LO lying within, as well as outside of, LO-1 and LO-2. We quantified the strength of position modulation in both subsets of LO by comparing the distribution of coherence values across voxels (Fig. 3B). A large proportion of voxels in LO exhibited high signal coherence at the stimulus frequency during both the polar angle (red) and eccentricity (blue) scans. These coherence values were significantly greater than in a control ROI selected along the superior temporal sulcus, which is not expected to have position sensitivity (see methods; 1-tailed Kolmogorov-Smirnov test for each subject and hemisphere: all P values <10⁻⁷). The strong coherence in LO voxels lying outside LO-1 and LO-2 can also be seen in the mean Fourier spectrum for these voxels (Fig. 3C): the power at the stimulus frequency is significantly larger than all other frequencies (Z score for polar angle scan: 14.65; eccentricity scan: 8.79). These results indicate that object-selective regions exhibit substantial position modulation with checkerboard stimuli, only part of which is attributable to the visual field maps LO-1 or LO-2.
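To make the coherence measure concrete, the sketch below computes it for a single voxel time series. It assumes a common definition for phase-encoded designs: the Fourier amplitude at the stimulus frequency divided by the square root of the summed squared amplitudes over all nonzero frequencies. The exact definition used here is given in methods and may differ in detail; the function names, the 96-sample scan length, and the 6 cycles per scan are hypothetical.

```python
import cmath
import math

def amplitude_spectrum(ts):
    """DFT amplitudes for frequencies 1..N//2 cycles per scan (DC skipped)."""
    n = len(ts)
    amps = []
    for k in range(1, n // 2 + 1):
        coef = sum(v * cmath.exp(-2j * math.pi * k * t / n)
                   for t, v in enumerate(ts))
        amps.append(abs(coef))
    return amps

def coherence(ts, stim_cycles):
    """Amplitude at the stimulus frequency relative to the whole spectrum.

    Assumed definition (see lead-in); returns a value between 0 and 1.
    """
    amps = amplitude_spectrum(ts)
    total = math.sqrt(sum(a * a for a in amps))
    return amps[stim_cycles - 1] / total if total > 0 else 0.0

# Hypothetical scan: 96 time points, stimulus completes 6 cycles.
n_points, stim_cycles = 96, 6
pure = [math.sin(2 * math.pi * stim_cycles * t / n_points)
        for t in range(n_points)]
# Same signal plus an equal-amplitude component at an unrelated frequency.
mixed = [p + math.sin(2 * math.pi * 10 * t / n_points)
         for t, p in enumerate(pure)]

coh_pure = coherence(pure, stim_cycles)
coh_mixed = coherence(mixed, stim_cycles)
```

A time series modulated only at the stimulus frequency yields a coherence near 1, and adding power at other frequencies lowers it, which is why a threshold such as coherence >0.20 selects voxels whose response follows the stimulus cycle.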

Lower visual field bias in LO

Having established that there is significant position modulation within LO, we examined the distribution of preferred polar angle and eccentricity in this region. We first examined the distribution of preferred polar angle in each subject (Fig. 4 and Supplementary Fig. S2).

The relative location of LO to polar angle reversals appeared to vary across subjects. For some subjects, the posterior boundary of LO was close to LO-1 and V3a/b (e.g., S1 and S2), while for others it was localized more anterior and inferior (e.g., S4).

Despite this variability, a large number of voxels in LO preferred the lower visual field (colored red on the polar angle maps).

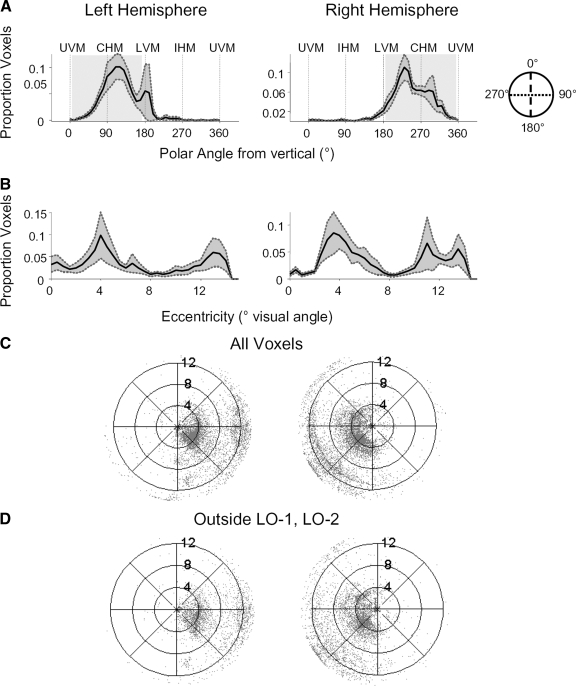

We quantified the apparent preference for the lower visual field by calculating the distribution of preferred polar angles across voxels with significant position modulation (coherence >0.20) in LO (Fig. 5A). A clear contralateral lower bias could be seen across subjects: more voxels preferred polar angles in the lower quadrant [extending from the contralateral horizontal meridian (CHM) to the LVM] of the visual field contralateral to the respective cortical hemisphere.

FIG. 5.

Distribution of preferred polar angle and eccentricity across LO voxels. For all panels, left represents left hemisphere data and the right represents right hemisphere data across all subjects and all voxels with a coherence >0.2. A: polar angle distribution (means ± SE across subjects) measured in degrees clockwise from the upper vertical meridian. UVM, upper vertical meridian; CHM, contralateral horizontal meridian; LVM, lower vertical meridian; IHM, ipsilateral horizontal meridian. B: eccentricity distribution (means ± SE across subjects) in degrees visual angle from the fovea. The visual field contralateral to each hemisphere is shaded. C and D: visual field coverage across subjects, for all LO voxels (C) and for those LO voxels lying outside LO-1 and LO-2 (D). Each point represents a voxel. Only sessions in which either LO-1 or LO-2 were identified are included.

There was a wide range of preferred eccentricities in LO (Fig. 5B). The distribution of preferred eccentricity was more variable between subjects and hemispheres, as reflected by the larger SE for the eccentricity distribution than for the polar angle distribution. However, there were more voxels that preferred either the parafovea (∼4°) or the far periphery (>12°) than mid eccentricities (∼8°).

The joint preferred distribution of polar angle and eccentricity in LO further illustrates the biases shown in the histograms (Fig. 5C). The polar plot is denser for the contralateral and lower visual field and denser around the parafoveal eccentricities. The general bias toward the contralateral lower visual field occurred across the LO as a whole (Fig. 5C) as well as within the subset of LO lying outside the maps LO-1 and LO-2 (Fig. 5D).

Lower meridian bias for object stimuli

We next asked whether the lower visual field bias observed with standard retinotopic mapping stimuli (checkerboard wedges and rings) predicts responses to object stimuli presented at different retinotopic locations (Fig. 1B). Based on our analyses of retinotopic effects, we would expect a stronger response to objects presented in the lower visual field and contralateral to each hemisphere. Because more voxels prefer lower polar angles, we may expect the lower bias to manifest itself in the mean response across the ROI. We may further expect the preference to be more prominent in the subset of LO preferring these angles during retinotopy scans.

We first compared the mean BOLD response amplitude across all LO voxels to object stimuli presented at the same eccentricity (4°) and different polar angles, along the UVM, LVM, and left and right horizontal meridians (LHM and RHM). Because of the pronounced lateralization effect in visual cortex, we will refer to the horizontal stimulus positions as ipsilateral (IHM) and contralateral (CHM) to the respective cortical hemispheres. We performed these analyses separately for sessions 1 and 3, with the same results.

Consistent with previous literature (Grill-Spector et al. 1998; Hemond et al. 2007; McKyton and Zohary 2007), we found a lateralization effect: responses to objects along the CHM were greater than responses along the IHM (Fig. 6A; 2-tailed t-test: all P < 10⁻⁶ across sessions, uncorrected). However, we also observed a lower bias: responses were stronger to objects along the LVM compared with the UVM (all P < 0.004). Regardless of the position, however, all object stimuli elicited a significantly higher response compared with the fixation baseline (all P < 10⁻¹⁵, t-test).

This lower bias was specific to particular subsets of LO. In Fig. 6B, we show the responses to objects within three subsets of LO, defined based on their preferred polar angle during retinotopy scans. Voxels preferring the lower visual field (within 45° of the LVM, see methods; red curve) responded significantly more to object stimuli presented on the LVM versus UVM (2-tailed t-test, P < 10⁻⁶). This lower bias also occurred (albeit weakly) in horizontal-preferring voxels (green curve, preferred angle within 45° of the CHM, P = 0.04, t-test). Upper-preferring voxels (blue curve, preferred angle within 45° of the UVM) did not show either a lower bias (P > 0.52) or an upper bias (P > 0.28).
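The assignment of voxels to polar angle subsets can be illustrated as follows. This is a sketch under stated assumptions: angles are expressed in degrees clockwise from the UVM in a contralateral-aligned frame (so CHM = 90° and LVM = 180°, following the convention in the Fig. 5 caption), voxels are required to exceed the coherence threshold of 0.20, and a voxel lying exactly 45° from a meridian is left unassigned. The function names and the boundary handling are hypothetical choices, not taken from methods.

```python
def angular_distance(a, b):
    """Smallest absolute difference between two angles, in degrees (0-180)."""
    d = abs(a - b) % 360.0
    return min(d, 360.0 - d)

# Meridian centers in degrees clockwise from the UVM (assumed convention).
MERIDIANS = {"upper": 0.0, "horizontal": 90.0, "lower": 180.0}

def assign_subset(pref_angle, coh, coh_threshold=0.20, half_width=45.0):
    """Return 'upper', 'horizontal', 'lower', or None for one voxel.

    A voxel is assigned only if its coherence exceeds the threshold and its
    preferred angle lies strictly within half_width degrees of a meridian.
    """
    if coh <= coh_threshold:
        return None
    for name, center in MERIDIANS.items():
        if angular_distance(pref_angle, center) < half_width:
            return name
    return None  # e.g., voxels preferring the ipsilateral field

# Made-up voxels: (preferred angle, coherence)
voxels = [(170.0, 0.50), (350.0, 0.45), (100.0, 0.30), (270.0, 0.60), (170.0, 0.10)]
subsets = [assign_subset(angle, coh) for angle, coh in voxels]
```

Using the circular distance rather than a plain difference ensures that a voxel preferring 350° is treated as lying 10° from the UVM.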

Foveal bias for object stimuli

We next analyzed the effects of presenting objects at different positions along the horizontal meridian (Fig. 6, C and D). Due to the wide range of preferred eccentricities in our retinotopic data (Fig. 5B), we did not have strong predictions for an eccentricity bias in this region.

LO responses to objects presented along the horizontal meridian exhibited a foveal preference (Figs. 5 and 6, C and D). The response to foveally presented objects was consistently higher than the response at any other position (P < 0.001, 1-tailed t-test). The foveal bias was observed even in a subset of LO selected to prefer eccentricities outside the fovea based on the retinotopic data (Fig. 6D, 2–6° subset, yellow line; P < 10⁻⁴). Subsets of LO predicted to prefer more peripheral locations (including a subset whose preferred eccentricity lies outside the stimulus presentation positions, >11°) responded substantially to both foveal and peripheral locations with no significant difference in response between foveal and contralateral positions (all P > 0.48).

The foveal bias observed for objects differs from the effects one might predict from retinotopic experiments. When objects were shown, LO tended to prefer more foveal stimulus positions than predicted by the retinotopy experiments, and different retinotopically defined eccentricity bands did not markedly differ in their sensitivity to the eccentricity of objects. We tested whether position effects varied in each subset with a two-way ANOVA, using object position and eccentricity band as factors. We observed a differential response across eccentricity bands [interaction eccentricity band × position; left hemisphere: F(9,11) = 2.9, P = 0.02; right hemisphere: F(9,11) = 2.2, P = 0.02].

Relationship between object category and position effects

LO has been implicated in object categorization (Bar et al. 2001; Grill-Spector et al. 2000, 2004) and responds to a wide range of object categories (Grill-Spector and Malach 2004; Grill-Spector et al. 2001). It has been proposed that the distributed responses across object-selective regions, including LO, may inform categorization decisions in the brain (Cox and Savoy 2003; Haxby et al. 2001). We therefore examined how the position effects we observed relate to object category. We addressed this question at two levels: first analyzing the mean response across LO and then examining the distribution of responses across voxels.

For all categories, LO responses had a similar sensitivity to position (Fig. 7). We examined category and position effects, and tested for potential interactions, with a series of ANOVAs, summarized in Table 3. For each hemisphere's data, and for each session in the object experiment, we observed significant effects of position (P < 10⁻⁸ across all ANOVAs). Category effects were less prominent and were only significant for some experimental sessions. These category effects were driven by a slightly higher response for animate categories (body parts, animals, and faces) over inanimate categories (cars, sculptures, and houses). Category effects were weaker (smaller F values) than position effects. Finally, category and position appeared to be independent: there were no significant interactions between category and position effects in LO (P > 0.45 for all ANOVAs).

FIG. 7.

Mean response amplitudes across LO during object experiments, for each category relative to the fixation baseline, for session 1 (A), session 2 (B), and session 3 (C). Icons at left indicate stimulus positions. Error bars reflect SE across subjects. The visual field contralateral to each hemisphere is shaded.

TABLE 3.

ANOVAs on the mean response in LO during object experiments

| LH | | | | RH | | | |
|---|---|---|---|---|---|---|---|
| Source | d.f. | F | Prob>F | Source | d.f. | F | Prob>F |
| Session 1 | | | | | | | |
| Position | 3 | 27.96 | 1.82E-12 | Position | 3 | 22.40 | 1.26E-10 |
| Category | 3 | 3.97 | 0.01 | Category | 3 | 5.41 | 0.00 |
| Position* category | 9 | 0.39 | 0.93 | Position* Category | 9 | 0.35 | 0.95 |
| Error | 80 | | | Error | 80 | | |
| Total | 95 | | | Total | 95 | | |
| Session 2 | | | | | | | |
| Position | 2 | 41.73 | 2.87E-12 | Position | 2 | 26.47 | 4.54E-09 |
| Category | 2 | 0.07 | 0.93 | Category | 2 | 2.11 | 0.13 |
| Position* category | 4 | 0.93 | 0.45 | Position* Category | 4 | 0.34 | 0.85 |
| Error | 63 | | | Error | 63 | | |
| Total | 71 | | | Total | 71 | | |
| Session 3 | | | | | | | |
| Position | 5 | 13.41 | 9.04E-10 | Position | 5 | 18.38 | 1.54E-12 |
| Category | 2 | 5.28 | 6.79E-03 | Category | 2 | 18.73 | 1.57E-06 |
| Position* category | 10 | 0.23 | 0.99 | Position* Category | 10 | 0.28 | 0.98 |
| Error | 90 | | | Error | 90 | | |
| Total | 107 | | | Total | 107 | | |

Two-way ANOVAs (effects of position and category) were run separately on each hemisphere. Results from three ANOVAs are presented, one for each object experiment session. Each bin in the ANOVA contained 6 measurements (mean response for each subject per position and category).

The relatively weak effect of category on the mean LO response may not be very surprising given that the mean response has been reported not to exhibit strong category preference (Grill-Spector 2003). Further, there is evidence that LO voxels reflect heterogeneous neural populations, both in terms of position (Grill-Spector et al. 1999; Figs. 5 and 6B) and category sensitivity (Cox and Savoy 2003; Haxby et al. 2001). We therefore examined the effects of these factors on the pattern of response across voxels.

Figure 8 illustrates LO response patterns in a single subject to a subset of our stimuli, three object categories presented at six retinal positions. Each image presents the distributed response to a given stimulus across all LO voxels (see methods). As can be seen in these images, patterns of response to different stimuli appeared more similar if the two stimuli were presented at the same retinal position (same column) than if they were of the same category (same row). For instance, responses to different stimuli presented foveally (3rd column) appear similar, whereas responses to faces at different positions (1st row) appear more distinct.

Split-half analyses on LO response patterns

We quantified the effects of position and category on LO response patterns by performing a split-half analysis (Figs. 9 and 10 and Supplementary Fig. S3; see methods). In this analysis, we divided the data from each scanning session into two independent halves, composed of odd and even runs. We then computed the correlation between response patterns to same (or different) categories in the same (or different) positions. In the cross-correlation matrices in Fig. 9, stimuli are organized first by position, then by category. The diagonal values represent the correlations between responses to stimuli of the same position and category (but blocks containing different exemplars of the category) in even and odd runs. Elements just off the diagonal reflect correlations between different categories at the same position. Elements further off the main diagonal reflect correlations across positions. As can be seen in the correlation matrices, same-position comparisons produced higher correlations than across-position comparisons. Categorical effects can also be seen: on-diagonal values are slightly higher than those just off the diagonal, both for within-position comparisons and for across-position comparisons. These effects are particularly noticeable in session 3 (Fig. 9C).

We used the values from the cross-correlation matrices to address questions about the relative effect of position or category on response patterns (Fig. 10 and Supplementary Fig. S3). We first assessed category effects: given that two sets of stimuli were presented at the same position, were LO responses more similar if the stimuli were drawn from the same versus different categories? We found that this was the case: the mean correlation was higher for same-category response patterns than for different-category response patterns (Fig. 10, 1st 2 bars; 1-tailed t-test for each session; all P values <10⁻⁴). We also found a category effect across retinal positions: given that two stimuli were presented at different positions, response patterns were less correlated if the category was different compared with the same (Fig. 10, last 2 bars: all P values <10⁻⁷).

By comparison, we did not see a corresponding category effect when we ran the same split-half analysis on V1 data (Supplemental Figs. S4 and S5): V1 response patterns showed a similar level of correlation regardless of whether the category was the same or different both for same-position (t-test: all P values >0.09, 1st 2 bars in Supplemental Fig. S4C) and across-position comparisons (all P values >0.28, last 2 bars in Supplemental Fig. S4C). This indicates that the category effects we observe in LO do not reflect low-level visual differences between stimulus classes.

We next assessed position effects: LO response patterns to the same category were substantially more similar if they were presented at the same position versus different positions (Fig. 10, 1st and 3rd bars: P < 10⁻⁷). Changing the retinal position of a stimulus appeared to eliminate the correlation altogether: the mean correlation for same-category, different-position comparisons was close to zero. This position effect also occurred for across-category stimulus comparisons (2nd and 4th bars: all P < 10⁻³⁰), with different-category, different-position response patterns being anti-correlated. The effects of changing position and category on response patterns appeared to be linear: two-way ANOVAs on the correlation coefficients for each session (factors: same vs. different category, same vs. different position) revealed no significant interactions between position and category (all F values <1.02, all P values >0.31).
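The four comparison types discussed above (same or different position, crossed with same or different category) can be read off a cross-correlation matrix as in the following sketch. The function name, the stimulus labels, and the toy matrix values are hypothetical and chosen only to mimic the qualitative ordering described in the text.

```python
from collections import defaultdict

def mean_by_comparison(matrix, labels):
    """Average correlations within each comparison type.

    matrix[i][j]: correlation between the even-run pattern for stimulus i
    and the odd-run pattern for stimulus j.
    labels[i]: (position, category) of stimulus i.
    """
    sums = defaultdict(float)
    counts = defaultdict(int)
    for i, (pos_i, cat_i) in enumerate(labels):
        for j, (pos_j, cat_j) in enumerate(labels):
            key = ("same-position" if pos_i == pos_j else "different-position",
                   "same-category" if cat_i == cat_j else "different-category")
            sums[key] += matrix[i][j]
            counts[key] += 1
    return {key: sums[key] / counts[key] for key in sums}

# Made-up example: 2 positions x 2 categories, ordered by position first.
labels = [("fovea", "face"), ("fovea", "car"),
          ("4deg", "face"), ("4deg", "car")]
toy_matrix = [[0.8, 0.6, 0.0, -0.2],
              [0.6, 0.8, -0.2, 0.0],
              [0.0, -0.2, 0.8, 0.6],
              [-0.2, 0.0, 0.6, 0.8]]
means = mean_by_comparison(toy_matrix, labels)
```

In the toy matrix, changing position lowers the mean correlation by 0.8 while changing category lowers it by 0.2, and the two reductions add without interaction, which is the pattern the ANOVA on the real data is testing for.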

Control for methods used to estimate response amplitudes

Do our split-half analysis results depend on our methods for estimating response amplitudes? We performed our split-half analyses after subtracting the mean response from each voxel as per other pattern analyses in the literature (Haxby et al. 2001). The motivation behind this step is to ensure that the analysis reflects the relative preference of each voxel to different stimuli and remove the main effect of voxels. This procedure is also used in analysis of optical imaging data, for instance in identifying orientation preferences (Zepeda et al. 2004).

One consideration when applying this step is whether the stimuli span a space of possible stimuli that may activate a neural population (e.g., a range of orientations). If a stimulus set only samples a small subset of the space, it may bias the results. For instance, it may subtract out as uninformative a voxel that responds to all stimuli in the subset although it may respond differentially to other stimuli that were not presented.

To examine this possibility, we repeated our split-half analysis without this normalization step. This control examined the distributed responses to different conditions relative to the blank baseline (which is independent from our experimental conditions). When the mean response is not removed from each voxel, LO response patterns to all stimuli were positively correlated (mean R = 0.43 across all comparisons, P < 10⁻³⁰, 1-sided t-test; Supplemental Fig. S5). Nevertheless, there was the same relative pattern among comparison types (2-way ANOVA: position effects: all F > 7.5, all P < 0.005; category effects: all F > 7.2; all P < 0.007) with the position effect being larger than the category effect (same-position, different-category correlation > same-category, different-position correlation: P < 10⁻⁷, t-test).
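The effect of the normalization step can be seen in a toy example. The sketch below subtracts from each voxel its mean response across stimuli and compares pattern correlations before and after. The data are made up, the function names are hypothetical, and the example only illustrates why raw patterns tend to be positively correlated when voxels differ in overall responsiveness.

```python
import math

def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def remove_voxel_means(patterns):
    """patterns[s][v] is the response of voxel v to stimulus s.
    Subtract from each voxel its mean response across all stimuli."""
    n_stim, n_vox = len(patterns), len(patterns[0])
    means = [sum(p[v] for p in patterns) / n_stim for v in range(n_vox)]
    return [[p[v] - means[v] for v in range(n_vox)] for p in patterns]

# Made-up responses (3 stimuli x 4 voxels) relative to a blank baseline.
# Voxels differ strongly in overall amplitude, weakly in stimulus preference.
raw = [[1.0, 3.0, 5.0, 7.2],
       [1.2, 3.0, 5.1, 7.0],
       [0.9, 3.3, 4.9, 7.1]]
centered = remove_voxel_means(raw)

r_raw = pearson_r(raw[0], raw[1])            # dominated by voxel offsets
r_centered = pearson_r(centered[0], centered[1])  # relative preferences only
```

Here the raw patterns correlate almost perfectly because every stimulus drives the same voxels strongly, whereas the centered patterns reflect only each voxel's relative preference among the presented stimuli.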

Overall our analyses of the relationship between position and category effects indicate that position more strongly affects voxel response patterns than category and that position and category do not exhibit a substantial interaction on LO responses.

DISCUSSION

Our results demonstrate that the object-selective region LO has substantial retinal position sensitivity, only part of which results from overlap with the visual field maps LO-1 and LO-2. This position sensitivity includes a strong bias for the contralateral lower visual field, both in terms of more voxels preferring the lower visual field, as well as stronger BOLD modulation for object stimuli presented in the contralateral and lower visual field, compared with the ipsilateral and upper visual field. The lower bias to objects is retinotopically specific within LO: voxels predicted to prefer lower angles by retinotopic mapping exhibit a stronger lower bias for objects.

We have also demonstrated that retinal position modulates LO responses more than category. Position strongly affects both the mean LO response as well as the pattern of response across voxels, whereas category effects are smaller in both cases.

Lower bias in LO

Our report of a lower bias is consistent with a previous result (Niemeier et al. 2005) that showed that the mean LO response to stimuli was higher in the lower than upper visual field. We expand on this finding by demonstrating that the lower bias can be accounted for by retinotopic organization within LO. Both a greater number of voxels preferring the lower visual field (Fig. 5A) and a larger preferential response to objects in the lower visual field within lower-preferring voxels (Fig. 6B) contribute to the effect on the mean response across the entire LO ROI. The fact that the lower bias generalizes across different stimuli (retinotopic checkerboards and different categories of object stimuli), experimental designs (phase-encoded retinotopy as well as block-design object experiments), and tasks (fixation color-detection task versus categorization) suggests that the polar angle sensitivity of neural populations in LO is fairly robust to the type of visual stimulus and task.

Behavioral implications?

One question which our results raise is whether the lower bias for object-selective regions has any behavioral correlates in recognition. The most well-described behavioral advantage for stimuli in the lower visual field is vertical meridian asymmetry (VMA). VMA refers to improved visual performance for certain tasks along the lower, compared with the upper, vertical meridian. These behaviors include discrimination of orientation, contrast, hue, and motion (Cameron et al. 2002; He et al. 1996; Levine and McAnany 2005), and illusory contour perception (Rubin et al. 1996). However, it is unclear if this asymmetry extends to object recognition. Little literature exists describing object recognition differences between UVM and LVM. Rubin and colleagues (Rubin et al. 1996) tested the hypothesis that general shape discrimination was improved in the lower visual field. They observed a lower detection threshold for illusory, but not luminance-defined, contours when presented in the lower visual field. However, more thorough testing of object recognition at different polar angles is necessary to resolve this question.

Recent results indicate that activity in early visual cortex (V1 and V2) may correspond to the VMA (Liu et al. 2006). One possibility is that the lower field bias observed in LO is propagated from biases already present in early visual cortex. However, this mechanism may not explain our results because it is thought to be specific to the vertical meridian, whereas our results show enhanced representation off the lower vertical meridian as well as along it (Fig. 5).

Eccentricity bias in LO

Compared with our polar angle results, our retinotopic mapping experiments indicated that LO does not have a sharp tuning for particular visual eccentricities. LO voxels prefer a range of eccentricities with a slightly smaller population preferring the parafovea and mid-eccentricities (around 6–10°; Fig. 5B). The lack of voxels preferring the fovea in our retinotopy experiment data is consistent with Hansen et al. (2007), who report a reduced representation for eccentricities around 2–4° in a lateral occipital region. The reduced representation for intermediate eccentricities may relate to a discontinuity in eccentricity representation reported in the vicinity of LO (Tootell and Hadjikhani 2001), although our findings indicate a less abrupt discontinuity. Our results diverge from these previous findings in one respect, however: whereas Tootell and Hadjikhani (2001) report a more consistent eccentricity than polar angle representation in these regions, we find that there is a fairly consistent distribution of polar angle preference across subjects.

Foveal bias for object stimuli

Our object experiment revealed a different pattern of eccentricity effects than our retinotopy experiments: LO generally preferred objects at more foveal positions.

Subsets of LO that had been defined to prefer particular eccentricities based on retinotopic data tended to prefer more foveal object presentation. For instance, the LO subset defined to prefer eccentricities around 4° in the retinotopy scans responded most strongly to foveal objects (Fig. 6D). One possible account for this effect relates to the perceptibility of stimuli in foveal versus peripheral positions (Thorpe et al. 2001). We did not scale our stimuli at different positions because we did not want to change both size and position simultaneously nor assume a particular magnification factor for object-selective regions. While this design ensured that we measured the effects of retinal position independent of size, lower retinal sampling in the periphery may have reduced the perceptibility of objects. This, in turn, may have resulted in lower neural activity for peripheral stimuli. This potential reduction in visibility would be limited: during the scan we did not find strong behavioral differences across peripheral and foveal positions (Table 1). We note, however, that there was a significant difference in accuracy for objects at the 9° eccentricity versus other positions (P = 0.005, 1-tailed t-test), although categorization was well above chance for all positions. Furthermore, perceptibility differences would not account for higher responses to stimuli outside the fovea than at the fovea in a subset of LO preferring peripheral locations during retinotopy scans (Fig. 6D, >11° band data). Another possibility is that neural populations in LO have different effective receptive field sizes for objects than nonobject stimuli, such as our checkerboard mapping stimuli. This hypothesis could be tested by performing traveling-wave retinotopic mapping using object stimuli as well as nonobject (e.g., checkerboard) stimuli.

Relationship of LO to visual field maps

Our observation that LO has a strong representation of the contralateral lower visual field raises the question of where this region lies with respect to visual field maps. The answer to this question does not appear to be straightforward: the parcellation of visual cortex between V3d and MT into visual field maps is a subject of debate (Hansen et al. 2007; Larsson and Heeger 2006; Tootell and Hadjikhani 2001; Wandell et al. 2007). One model proposes that this stretch of cortex contains at least two hemifield maps, LO-1 and LO-2 (Larsson and Heeger 2006). Another model proposes that this region of cortex contains a dorsal counterpart to the ventral map in hV4 with a complementary representation of the visual field (Hansen et al. 2007). While we have found the LO-1 / LO-2 model concordant with our retinotopic data, our results are not inconsistent with a dorsal V4 definition. The region defined by Hansen and colleagues as dorsal V4 (Hansen et al. 2007) would correspond to a posterior subset of our definition of LO-1, and is not likely to overlap with LO (see Table 2).

While we report a consistent relationship between polar angle representations and object selectivity, it does not appear that LO corresponds exclusively to a single visual field map. The lower bias we observe may have been accounted for simply by overlap with LO-1 and LO-2 given that the boundary between LO-1 and LO-2 does not always go to the upper vertical meridian (Larsson and Heeger 2006). However, we show that retinotopic modulation in LO extends substantially beyond these maps (Figs. 3–5).

This raises the possibility that additional visual field maps may exist anterior to these regions (but outside MT; see Figs. 3 and 4). These putative maps may only be quarter-field maps, or, alternately, they may be hemifield maps, which only partially overlap with object-selective regions. Because of the increasing receptive field size in these regions (Dumoulin and Wandell 2008; Yoshor et al. 2007), response differences across retinal positions may be small relative to the overall visual response (Dumoulin and Wandell 2008). Therefore the traditional wedge and ring mapping methods employed here may not be successful at clearly identifying the boundaries of such regions. Despite this, there is some indication of polar angle reversals anterior to LO-2 in our single-subject maps (Fig. 4 and Supplemental Fig. S2), which may indicate the approximate location of these maps. Refinements in retinotopic mapping methods, such as the use of a broader class of mapping stimuli and blank-baseline periods to better estimate position effects for large receptive fields, may enable a clearer estimation of potential visual field maps in these regions (Dumoulin and Wandell 2008).

Threshold-independent measurements

The possibility of additional visual field maps raises the question of why an object-selective cortical region may overlap with only part of a visual field map. One possibility may be that this is a thresholding issue: perhaps our fairly stringent object-selectivity criterion (P < 10⁻⁵; see methods) restricted LO to a subset of a potentially larger object-selective region, which may better correspond to a hemifield map. This appears unlikely for several reasons. First, we examined the distribution of preferred polar angle and eccentricity in the vicinity of LO without thresholding. We anatomically defined a disk ROI centered on LO with a 30-mm radius (Fig. 11A). Independent of statistical threshold, there appeared to be more voxels corresponding to the contralateral lower quarter field (Fig. 11, B and C). We tested whether the lower bias was sensitive to our choice of threshold by selecting subsets of the disk at a series of object-selectivity thresholds. For each threshold, we first selected those voxels that were both object-selective at that threshold and had a coherence >0.20 during the retinotopy scans. We then calculated the distribution of preferred polar angle across these voxels. Regardless of the object-selectivity threshold, the mean preferred polar angle was located below the contralateral horizontal meridian for each hemisphere (Fig. 11C). This lower field bias was statistically significant for all thresholds (P < 0.04, Bonferroni corrected for multiple comparisons; P < 10⁻³, uncorrected).
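The threshold sweep described above can be sketched as follows. Each voxel in the disk is represented by its object selectivity (−log(p)), its retinotopy coherence, and its preferred polar angle expressed relative to the contralateral horizontal meridian (positive above, negative below, as in Fig. 11C). Averaging the angles with a circular mean is an assumption made for this sketch, since the text does not specify how the mean was taken; the function names and voxel values are hypothetical.

```python
import math

def circular_mean_deg(angles):
    """Circular mean of angles given in degrees; result in (-180, 180]."""
    s = sum(math.sin(math.radians(a)) for a in angles)
    c = sum(math.cos(math.radians(a)) for a in angles)
    return math.degrees(math.atan2(s, c))

def mean_angle_by_threshold(voxels, thresholds, min_coherence=0.20):
    """Mean preferred angle among voxels passing each selectivity threshold.

    voxels: iterable of (neg_log_p, coherence, angle_from_chm_deg).
    Returns {threshold: mean angle, or None if no voxel passes}.
    """
    result = {}
    for thr in thresholds:
        angles = [angle for neg_log_p, coh, angle in voxels
                  if neg_log_p >= thr and coh > min_coherence]
        result[thr] = circular_mean_deg(angles) if angles else None
    return result

# Made-up voxels: (-log(p), coherence, angle relative to CHM in degrees)
disk_voxels = [(2.0, 0.30, 20.0), (3.5, 0.25, -10.0), (5.5, 0.40, -40.0),
               (7.0, 0.35, -60.0), (6.0, 0.10, 50.0)]
sweep = mean_angle_by_threshold(disk_voxels, thresholds=[2, 5, 10])
```

A negative mean at every threshold would correspond to the pattern reported in Fig. 11C, in which the mean preferred angle stays below the horizontal meridian regardless of how strictly object selectivity is defined.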

FIG. 11.

Disk analysis of the cortex around LO. A: object selectivity in a 30-mm disk centered on LO on the cortical surface, without any thresholding. Object selectivity is measured by the −log(p) value of a t-test for all object categories versus scrambled. Data are shown in a representative subject (S6). B: polar angle preference in the same disk as in A, without any thresholding. The P < 10⁻⁵ boundary for LO used for the main analyses is outlined in blue in A and black in B. C: mean polar angle across LO voxels as a function of object-selectivity threshold. x axis denotes the object-selectivity threshold used to select voxels within the disk; y axis denotes the mean polar angle across subjects, among voxels passing a given threshold (positive values = above horizontal, negative values = below horizontal). Error bars are SE across subjects. D: joint histograms of object selectivity and preferred polar angle for all voxels across subjects. The color intensity of each bin represents the count of voxels with preferred polar angle within the given x-axis range and object selectivity [−log(p)] within the y-axis range. Dashed line indicates the statistical threshold used to define LO for the main analyses.

Another way of stating our reported lower field bias is that within the anatomical vicinity of LO, the most highly object-selective voxels prefer the contralateral lower visual field. Figure 11D presents the joint distribution of preferred polar angle during retinotopy scans (x axis) and object selectivity during the localizer scans (y axis). Of those voxels with high object-selectivity measures, most prefer angles within the lower contralateral visual field. This raises the question of why, if LO is located along a hemifield representation, neural populations in the upper representation should be less object selective than those in the lower representation.
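A joint distribution of this kind can be computed with a two-dimensional histogram. The sketch below is illustrative only: the function names, bin counts, selectivity range, and angle convention (−90° = lower vertical meridian, +90° = upper vertical meridian of the contralateral hemifield) are assumptions, and the example values are made up.

```python
import numpy as np

def joint_histogram(polar_angle_deg, neg_log_p,
                    n_angle_bins=12, n_sel_bins=20, sel_max=20.0):
    """Voxel counts per (polar angle, object selectivity) bin."""
    angle_edges = np.linspace(-90.0, 90.0, n_angle_bins + 1)
    sel_edges = np.linspace(0.0, sel_max, n_sel_bins + 1)
    counts, _, _ = np.histogram2d(polar_angle_deg, neg_log_p,
                                  bins=[angle_edges, sel_edges])
    return counts, angle_edges, sel_edges

def lower_field_fraction(polar_angle_deg, neg_log_p, threshold=5.0):
    """Fraction of voxels above the selectivity threshold that prefer the lower field."""
    polar_angle_deg = np.asarray(polar_angle_deg, dtype=float)
    neg_log_p = np.asarray(neg_log_p, dtype=float)
    high = neg_log_p >= threshold
    if not high.any():
        return float("nan")
    return float(np.mean(polar_angle_deg[high] < 0.0))

# Made-up example voxels (angle in deg, selectivity as -log(p)).
example_angles = np.array([-45.0, -30.0, 10.0, 60.0])
example_selectivity = np.array([6.0, 7.0, 1.0, 8.0])
counts, angle_edges, sel_edges = joint_histogram(example_angles, example_selectivity)
print(counts.shape, int(counts.sum()))
print(lower_field_fraction(example_angles, example_selectivity))
```

The threshold of 5 in `lower_field_fraction` mirrors the criterion used to define LO, under the assumption that selectivity is expressed as a base-10 −log(p).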

Separable position and category effects in LO

Our retinotopic analyses predicted, and object experiments confirmed, substantial position effects for object stimuli in LO. Presenting objects at different retinal positions about 4° apart produced large changes in the mean BOLD response in LO (Figs. 6 and 7) and evoked LO response patterns that were not correlated (Figs. 8 and 10 and Supplemental Fig. S3). This expands on previous fMRI-adaptation results that showed that LO recovered significantly from adaptation when the same object was presented in different positions (Grill-Spector et al. 1999). By contrast, category produced a significant but smaller effect.

Effects of thresholding and amplitude estimation

As with our reported lower bias, position and category effects in our pattern analyses may have been sensitive to the threshold and contrast we used to define LO. To control for this possibility, we repeated our split-half analysis on the anatomically defined disk ROI that we used for Fig. 11. This analysis compared response patterns in the anatomical vicinity of LO without thresholding. We found the same pattern of responses in this disk as in LO (Supplemental Fig. S3B), with significant position effects (2-way ANOVA: F > 179, all P < 10⁻³⁰) and category effects (all F > 8.0, all P < 0.004), and larger position effects than category effects (same-position, different-category correlation > same-category, different-position correlation: P < 10⁻³⁰, t-test). These results stand in contrast to our V1 results, which showed strong position effects but not category effects (Supplemental Fig. S4).

Our pattern analysis results may also have depended on our method of estimating response amplitudes. We estimated responses by subtracting the mean response from each voxel (Figs. 8–10). Responses after this normalization step ostensibly reflect the relative preference of a voxel for a given stimulus compared with all other stimuli tested. This may reflect the actual stimulus preference of the underlying neural population, but only if the set of stimuli is reasonably balanced across the range of possible stimuli (Zepeda et al. 2004). Because we found the same relative magnitude of position and category effects without performing this normalization step (Supplemental Fig. S5), it is unlikely that, for the categories examined here, subtracting the mean response biased our results.
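The split-half comparison and the optional mean-subtraction step discussed in this section can be sketched as follows. This is a simplified illustration on synthetic data rather than the analysis code used in the study; the function name, array layout, and all simulation parameters are assumptions.

```python
import numpy as np

def split_half_correlations(half1, half2, subtract_mean=True):
    """Mean between-half pattern correlation for each condition relationship.

    half1, half2 : arrays of shape (n_positions, n_categories, n_voxels)
                   holding response amplitudes from independent data halves.
    subtract_mean: if True, remove each voxel's mean across all conditions
                   (computed separately within each half) before correlating.
    """
    n_pos, n_cat, n_vox = half1.shape
    a, b = half1, half2
    if subtract_mean:
        a = a - a.mean(axis=(0, 1), keepdims=True)
        b = b - b.mean(axis=(0, 1), keepdims=True)
    n_cond = n_pos * n_cat
    a = a.reshape(n_cond, n_vox)
    b = b.reshape(n_cond, n_vox)
    # Off-diagonal block: correlations of half-1 patterns with half-2 patterns.
    r = np.corrcoef(a, b)[:n_cond, n_cond:]
    pos = np.repeat(np.arange(n_pos), n_cat)  # position label of each row
    cat = np.tile(np.arange(n_cat), n_pos)    # category label of each row
    same_pos = pos[:, None] == pos[None, :]
    same_cat = cat[:, None] == cat[None, :]
    return {
        "same_pos_same_cat": float(r[same_pos & same_cat].mean()),
        "same_pos_diff_cat": float(r[same_pos & ~same_cat].mean()),
        "diff_pos_same_cat": float(r[~same_pos & same_cat].mean()),
        "diff_pos_diff_cat": float(r[~same_pos & ~same_cat].mean()),
    }

# Synthetic voxels with a strong position signal and a weak category signal
# (hypothetical parameters, chosen only to exercise the function).
rng = np.random.default_rng(0)
n_pos, n_cat, n_vox = 3, 2, 500
baseline = rng.normal(2.0, 1.0, n_vox)               # voxel-specific mean response
pos_pattern = rng.normal(0.0, 1.0, (n_pos, 1, n_vox))
cat_pattern = rng.normal(0.0, 0.3, (1, n_cat, n_vox))
signal = baseline + pos_pattern + cat_pattern
half1 = signal + rng.normal(0.0, 0.3, signal.shape)
half2 = signal + rng.normal(0.0, 0.3, signal.shape)

normalized = split_half_correlations(half1, half2, subtract_mean=True)
raw = split_half_correlations(half1, half2, subtract_mean=False)
print("mean-subtracted:", normalized)
print("raw:            ", raw)
```

In this simulation the ordering of same-position, different-category and same-category, different-position correlations is the same with and without mean subtraction, whereas the absolute correlation values shift because the shared voxel baseline inflates all raw correlations.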

Comparing effects of position and category

The broader consideration of spanning a stimulus space presents a challenge to interpreting fMRI response patterns. The limited temporal resolution of fMRI constrains the number of stimuli that can be tested in a scanning session, which makes it difficult to perform parametric manipulations of several factors. For instance, only a small number of categories can be experimentally tested out of the potentially vast number of categories humans can recognize. Further, it is unclear whether there is a “category space” that contains a clear metric relationship between different categories, and/or whether it is possible to sample from this hypothetical category space in a balanced way. Because of this concern, it is possible that categories beyond those tested here may produce a stronger category effect in LO than we report. Nevertheless, the categories we used include categories that have been demonstrated to produce strong differential responses in higher-order visual cortex in humans (Downing et al. 2001; Epstein and Kanwisher 1998; Kanwisher et al. 1997) and in monkeys (Kiani et al. 2007; Tsao et al. 2006).

Our data show that different object categories produce relatively small changes to both the mean (Fig. 7) and distributed (Figs. 8–10) response across LO. In comparison, a modest 4° change in an object's position produces signal changes in LO that are as large as or larger than this modulation. The 4° change in position is well within the range for which humans can categorize objects (Table 1), and object detection abilities extend well beyond this range of positions (Thorpe et al. 2001). This indicates a substantial difference between the position sensitivity of recognition behavior and that of neural populations in LO. A recent study (Schwarzlose et al. 2008) that used similar methods at three retinal positions (foveal, 5.25° up and 5.25° down) also reported position sensitivity in LO, although of a somewhat smaller magnitude. However, because that study did not include left and right lateralized positions, which are known to produce substantial signal modulations (Grill-Spector et al. 1998; Hemond et al. 2007; McKyton and Zohary 2007; Niemeier et al. 2005), it likely underestimated the magnitude of position effects.

What does “invariance” mean in the context of retinal position?

It is important to relate our results to the concept of position-invariant representations of objects and object categories. What exactly is implied by the term “invariance” depends on the scientific context. In some instances, this term is taken to reflect a neural representation that is abstracted so as to be independent of viewing conditions. A fully invariant representation, in this meaning of the term, is expected to be independent of retinal position information (Biederman and Cooper 1991; Ellis et al. 1989). However, in the context of studies of visual cortex, the term is more often considered to be a graded phenomenon in which neural populations are expected to retain some degree of sensitivity to visual transformations like position changes but in which the stimulus selectivity is preserved across these transformations (DiCarlo and Cox 2007; Kobatake and Tanaka 1994; Rolls 2000). In support of this view, a growing literature suggests that maintaining local position information within a distributed neural representation may actually aid invariant recognition (DiCarlo and Cox 2007; Dill and Edelman 2001; Newell et al. 2005). In addition, maintaining separable information about position and category may allow both recognition and localization of objects (DiCarlo and Cox 2007; Edelman and Intrator 2000). Our observation of independent position and category effects in LO is concordant with this account.

Our pattern analyses do not indicate strong position invariance in LO. For a hypothetical, strongly invariant neural population, we may expect high correlations between response patterns to stimuli from the same category at different positions. However, we found that these correlations were close to zero (Fig. 10 and Supplemental Fig. S3). Does this mean there is no position invariance in LO? Several considerations make this unlikely. First, as discussed in the preceding text, interpreting zero correlation in this analysis is complex due to the subtraction of the mean response for each voxel. Second, the relationship between the distributed BOLD response across voxels and the underlying neural population responses is not well understood. Position-invariant neural population responses may exist at a finer spatial scale than our fMRI experiments could reliably resolve.

Conclusions

Our results add to a growing body of evidence that retinal position effects persist throughout visual cortex (Hansen et al. 2007; Hasson et al. 2003; Hemond et al. 2007; Levy et al. 2001; McKyton and Zohary 2007; Niemeier et al. 2005). These effects occur in the context of a visual hierarchy with progressively increasing receptive fields in which LO is intermediate (Grill-Spector et al. 1999). To obtain a comprehensive picture of how this hierarchy achieves position-invariant behavior, it is important to place these different results in a common conceptual framework. Estimating receptive fields in extrastriate visual cortex is a promising direction for achieving this framework (Dumoulin and Wandell 2008; Yoshor et al. 2007) and will allow a quantitative comparison of the different position effects reported in the literature. Such estimates will additionally allow position effects to be related across spatial scales among single-unit physiology, optical imaging, and fMRI studies.

GRANTS

This work was supported by National Eye Institute Grants 5 R21 EY-016199-02 and 5 F31 EY-015937 and Whitehall Foundation Grant 2005-05-111-RES.

Acknowledgments

We thank S. Dumoulin for discussions and comments on the manuscript as well as assistance in delineating visual field maps and K. Weiner for comments on the manuscript.

The costs of publication of this article were defrayed in part by the payment of page charges. The article must therefore be hereby marked “advertisement” in accordance with 18 U.S.C. Section 1734 solely to indicate this fact.

Footnotes

The online version of this article contains supplemental data.

REFERENCES

- Bar M, Tootell RB, Schacter DL, Greve DN, Fischl B, Mendola JD, Rosen BR, Dale AM. Cortical mechanisms specific to explicit visual object recognition. Neuron 29: 529–535, 2001.

- Biederman I, Cooper EE. Evidence for complete translational and reflectional invariance in visual object priming. Perception 20: 585–593, 1991.

- Brewer AA, Liu J, Wade AR, Wandell BA. Visual field maps and stimulus selectivity in human ventral occipital cortex. Nat Neurosci 8: 1102–1109, 2005.

- Cameron EL, Tai JC, Carrasco M. Covert attention affects the psychometric function of contrast sensitivity. Vision Res 42: 949–967, 2002.

- Cox DD, Savoy RL. Functional magnetic resonance imaging (fMRI) “brain reading”: detecting and classifying distributed patterns of fMRI activity in human visual cortex. Neuroimage 19: 261–270, 2003.

- DiCarlo JJ, Cox DD. Untangling invariant object recognition. Trends Cogn Sci 11: 333–341, 2007.

- DiCarlo JJ, Maunsell JH. Anterior inferotemporal neurons of monkeys engaged in object recognition can be highly sensitive to object retinal position. J Neurophysiol 89: 3264–3278, 2003.

- Dill M, Edelman S. Imperfect invariance to object translation in the discrimination of complex shapes. Perception 30: 707–724, 2001.

- Downing PE, Jiang Y, Shuman M, Kanwisher N. A cortical area selective for visual processing of the human body. Science 293: 2470–2473, 2001.

- Dumoulin SO, Wandell BA. Population receptive field estimates in human visual cortex. Neuroimage 39: 647–660, 2008.

- Edelman S, Intrator N. (Coarse coding of shape fragments) + (retinotopy) approximately = representation of structure. Spat Vis 13: 255–264, 2000.

- Ellis R, Allport DA, Humphreys GW, Collis J. Varieties of object constancy. Q J Exp Psychol A 41: 775–796, 1989.

- Engel SA, Glover GH, Wandell BA. Retinotopic organization in human visual cortex and the spatial precision of functional MRI. Cereb Cortex 7: 181–192, 1997.

- Epstein R, Kanwisher N. A cortical representation of the local visual environment. Nature 392: 598–601, 1998.