Abstract

Objective

To examine whether self-assessment and reflection-in-action improve critical thinking among pharmacy students.

Methods

A 24-item standardized test of critical thinking was developed using questions adapted from previously published work. Participants were randomly allocated to 2 groups (conditions). Those in condition 1 completed the test with no interference; those in condition 2 completed the same test but were prompted at specific points to reflect and self-assess.

Results

A total of 94 undergraduate (BScPhm) pharmacy students participated in this study. Significant differences (p < 0.05) were observed between participants who completed the test under condition 1 and those who completed it under condition 2, suggesting that reflection and self-assessment may contribute positively to improvement in critical thinking.

Conclusions

Structured opportunities to reflect-in-action and self-assess may be associated with improved performance by pharmacy students on tasks related to critical thinking.

Keywords: critical thinking, self-assessment, reflection

INTRODUCTION

A central objective of higher education is the development of critical-thinking skills and propensities.1 Within the health professions, the importance of critical thinking is clear: practitioners must make numerous complex decisions affecting patient care, frequently based upon incomplete or imperfect information.2 Thus, practitioners must be able to extrapolate, interpolate, and make reasoned, defensible judgments based upon the best available information.

Many definitions of critical thinking have been proposed. These definitions have in common several key attributes, including the strategic use of available resources; purposeful, outcome-oriented analysis that avoids personal biases; and the ability to examine a situation from multiple perspectives and integrate these in a systematic manner. Halpern's definition of critical thinking is perhaps most resonant with the goals of health professional education: “the deliberate use of cognitive skills and strategies that increase the probability of a desirable outcome in a given situation.”3

Within health professions education, there have been attempts to introduce a culture of critical thinking.4-6 Examples of courses, assignments, or learning opportunities purported to teach and assess critical thinking have been shared. This “show-and-tell” approach to critical thinking has been somewhat atheoretical and has not adequately addressed fundamental questions of how critical thinking develops, evolves, or may be nurtured.7

Lack of critical thinking, sometimes referred to as flawed thinking, is ubiquitous, even among educated individuals and health care professionals.1 Cognitive psychologists have debated whether this ubiquity reflects a natural state; that is, whether irrationality and flawed thinking are the norm, and critical thinking and reason the exceptions.8 There is some evidence to suggest that critical thinking is a learned rather than natural behavior, and that most human beings are cognitively predisposed to flawed thinking.9 In particular, cognitive psychologists have examined the use of reasoning heuristics as a clue to the internal mental processes that guide reasoning and decision making in ambiguous and uncertain situations.10,11

Heuristics are the “rules of thumb” generated by each of us to guide judgment and decision making.11 They have been described as the specific rules, derived from personal experience, that govern individual thought and behavior, and as the process of gaining knowledge by intelligent guesswork rather than by pre-established formulas or criteria. Heuristic reasoning is a shortcut method of thinking that contrasts with algorithmic reasoning, which is built upon clear rules in an environment of abundant, incontestable evidence.10 Heuristic reasoning is an essential cognitive strategy in day-to-day life because few situations arise in which clear rules exist and abundant, incontestable evidence is available. In time-pressured situations, heuristic reasoning is the dominant (and appropriate) mode of analysis and decision making. For example, when deciding whether to purchase a piece of fruit, most individuals rely heavily on superficial observations (eg, how the fruit looks, feels, and smells) that invoke heuristic reasoning. Algorithmic reasoning in this context would lead us instead to ask questions such as “How long ago was this fruit picked?”, “What length of time is required for shipping?”, and “On average, what is the shelf-life of this kind of produce?”

In many low-stakes situations, such as day-to-day social interactions or decisions related to consumer purchases, heuristic reasoning generally serves us well enough. In most cases, the cognitive energy and time required to engage in algorithmic (rule-based) reasoning for every small decision are not worth the potentially improved outcome. However, in many situations, particularly in health care, relying on the same heuristic reasoning patterns we use in day-to-day life may increase the risk of systematic errors, assumptions, biases, and flawed thinking.12,13

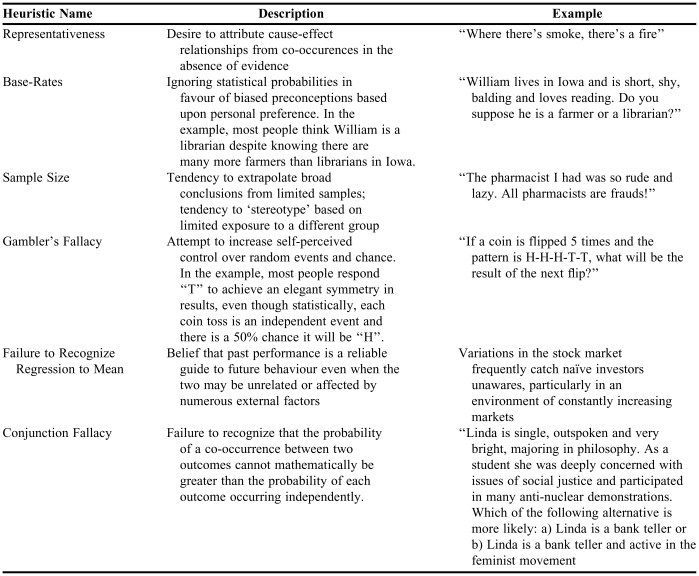

Cognitive psychologists have identified a variety of seemingly natural flawed thinking strategies that form heuristic reasoning patterns in most people; these are described in Appendix 1. Though not a comprehensive inventory of all heuristics, these 6 fallacies have been identified as among the most common flawed thinking strategies in the population.9-11 The consequences of such flawed thinking can range from humorous to aggravating to downright dangerous. Within medical education and practice, recent attention has focused on how flawed thinking arising from these heuristics can impair accurate and effective diagnosis; for example, physicians who use the representativeness heuristic (Appendix 1) may make biased or stereotyped assessments of patients.12,13

Clearly, critical thinking is not like citizenship or blood type; having passed a “test” of critical thinking neither guarantees nor predicts that an individual will avoid using heuristics in the next situation to arise (see Failure to Recognize Regression in Appendix 1). We hypothesize that a “missing link” in much of the critical thinking literature may be the role of self-assessment and reflection-in-action in helping individuals distinguish situations in which heuristic reasoning is more efficient and sufficiently effective from those in which algorithmic reasoning should be employed to optimize outcomes. This hypothesis is built upon a belief (proposed in the literature but not empirically tested) that self-assessment and reflection provide individuals with an opportunity to cognitively step outside of themselves and their immediate activity.14 This shift is thought to promote realignment of goals, methods, objectives, and outcomes, and may therefore help individuals move from heuristic to critical thinking in the appropriate circumstances.15

While there is literature on self-assessment, much of it has focused on tools used to facilitate or encourage this propensity.16,17 There is also literature on reflection, particularly in the health professions.18,19 For example, the concept of the “reflective practitioner,” a term first coined by Schon, has become widely accepted in education.20 However, there is currently a paucity of literature linking these concepts, and little empirical evidence to suggest any association between them. Elucidating the connection between self-assessment, reflection, and critical thinking may provide educators and practitioners with a more sophisticated understanding of how problems with heuristic reasoning may be overcome. Thus, the primary objective of this study was to examine whether self-assessment and reflection-in-action improved critical thinking among pharmacy students.

METHODS

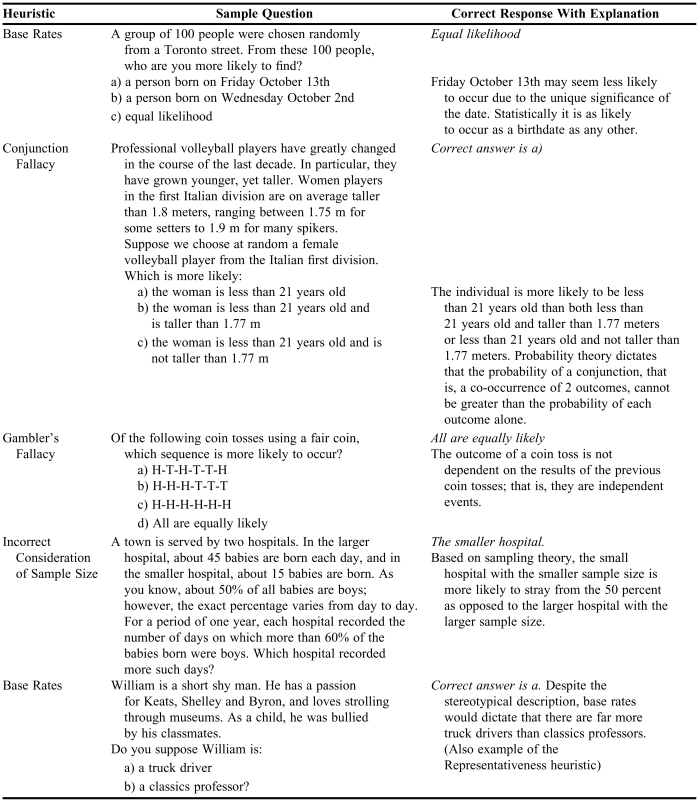

A 24-item written test instrument was developed containing items designed to test heuristic and algorithmic reasoning. Three to 5 items were developed for each of the heuristic strategies described in Appendix 1. In large part, these items were adapted from the cognitive psychology literature describing heuristic reasoning1,3,8,10,11; however, the 24-item instrument as a whole had not been used previously. Before the instrument was administered, a validation activity was undertaken with a group of 5 volunteers (senior-level pharmacy students) to ensure readability and a common understanding of questions, distractors, and items. The format of the questions varied slightly (eg, multiple choice, true/false, fill-in-the-blank). Each item had a correct answer based on algorithmic reasoning strategies and incorrect answers built around heuristic reasoning. Sample questions and answers are provided in Appendix 2.

For this study, volunteers were recruited from a senior-level pharmacy class (fourth year of the BScPhm program). Students were invited to participate in a study examining reasoning abilities. The study was approved in advance by the research ethics board, and all participants gave informed consent. Students were informed that the test was part of a study examining critical thinking, reflection, and self-assessment, that results of the test would in no way affect their academic standing or performance, and that individual results would not be shared with any instructors.

The study consisted of 2 separate testing conditions, and participants were randomly allocated to one of them. To control for potential age-related differences in reasoning skills, participants were first sorted by chronological age and then randomized to condition 1 or condition 2, ensuring equal representation of each age cohort in both groups.

In condition 1, the test was administered as a series of 24 items. Participants in condition 1 completed the test in a supervised setting, overseen by a research associate, in groups of 10-15. No communication between students was permitted during the test. Participants were required to answer the items in sequential order (ie, they were instructed that they could not return to previously completed items and change their responses, and the research associate in the room monitored compliance), and no external prompts or questions were used.

In condition 2, the testing conditions, the 24-item test, and the sequential ordering were the same; however, 2 additional cueing questions were inserted at item 13 and again at item 18 of the written test. The first question (self-assessment) asked participants to rate their confidence in their answer on a scale of 1 to 10 (with 10 being absolutely confident they had given the correct answer) and to briefly explain in writing how they arrived at this rating. The second question (reflection-in-action, ie, reflection in real time as described by Schon20) asked participants to briefly explain in writing, and justify, why they had selected that particular answer. Items 13 and 18 were chosen so that the first 50% of the test (items 1-12) was identical for conditions 1 and 2, and so that self-assessment and reflection could be reinforced three quarters of the way through the test.

To motivate students to perform as well as possible, a prize ($50 gift certificate to the University's bookstore) was offered to the student(s) with the highest score on the test.
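
The age-sorted randomization described above can be sketched in Python. The paper does not specify the exact procedure, so this is a minimal sketch under one assumption: after sorting by age, participants are randomized within consecutive pairs (blocks of 2), so that each condition receives one member of every age-adjacent pair. The function name `allocate_in_age_blocks` and the `(participant_id, age)` input format are hypothetical.

```python
import random


def allocate_in_age_blocks(participants, seed=None):
    """Assign participants to condition 1 or 2 after sorting them by age.

    Hypothetical sketch: consecutive participants in age order form blocks
    of 2, and the two conditions are shuffled within each block, so age is
    balanced across conditions. An odd final participant gets a random condition.

    participants: list of (participant_id, age) tuples (assumed format).
    Returns a dict mapping participant_id -> 1 or 2.
    """
    rng = random.Random(seed)
    ordered = sorted(participants, key=lambda p: p[1])  # youngest to oldest
    allocation = {}
    for i in range(0, len(ordered), 2):
        block = ordered[i:i + 2]
        conditions = [1, 2]
        rng.shuffle(conditions)  # random order of conditions within this block
        for (pid, _age), condition in zip(block, conditions):
            allocation[pid] = condition
    return allocation


if __name__ == "__main__":
    # Illustrative participants only; these are not study data.
    demo = [("P01", 22), ("P02", 24), ("P03", 23), ("P04", 22), ("P05", 27)]
    groups = allocate_in_age_blocks(demo, seed=1)
    for pid, age in sorted(demo, key=lambda p: p[1]):
        print(pid, age, "-> condition", groups[pid])
```

Randomizing within age-ordered pairs keeps the 2 group sizes within one participant of each other and places neighbouring ages in different conditions; shuffling the whole list at once would not guarantee that balance.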

Data from both groups were then analyzed and compared using Microsoft Excel. Because a correct answer had been defined a priori for each of the 24 questions, a total score on the instrument could be calculated for each participant. A Student t test (p < 0.05) was used to compare performance between the 2 groups on (1) the test overall; (2) the first 12 items; (3) items 13-18 (following the first external prompt, after both groups had completed the first 50% of the test under the same conditions); and (4) items 19-24 (following the second external prompt at the 75% point of the test). Student t tests (p < 0.05) were also used to establish the demographic comparability of the 2 groups.
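
The four comparisons above can be sketched as follows. This is an illustrative reconstruction, not the authors' Excel workflow: it assumes each participant's responses are stored as a list of 24 values (1 = correct, 0 = incorrect), and the names `SEGMENTS`, `segment_score`, `student_t`, `two_sided_p`, and `compare_conditions` are hypothetical. It implements the pooled-variance (Student) t test; `scipy.stats.ttest_ind`, whose default `equal_var=True` is the same test, would be the usual library route, but this version uses only the Python standard library and obtains the p-value by integrating the t density numerically.

```python
import math
import statistics

# Item ranges compared in the Methods (1-based, inclusive).
SEGMENTS = {
    "overall (items 1-24)": (1, 24),
    "items 1-12": (1, 12),
    "items 13-18": (13, 18),
    "items 19-24": (19, 24),
}


def segment_score(responses, first, last):
    """Number of correct answers (1s) among items first..last."""
    return sum(responses[first - 1:last])


def student_t(a, b):
    """Pooled-variance (Student) two-sample t statistic and degrees of freedom.
    Assumes at least one group has nonzero variance."""
    n1, n2 = len(a), len(b)
    pooled = ((n1 - 1) * statistics.variance(a)
              + (n2 - 1) * statistics.variance(b)) / (n1 + n2 - 2)
    t = (statistics.mean(a) - statistics.mean(b)) / math.sqrt(pooled * (1 / n1 + 1 / n2))
    return t, n1 + n2 - 2


def two_sided_p(t, df, steps=2000):
    """Two-sided p-value, 1 - 2 * P(0 <= T <= |t|), with the t density
    integrated by Simpson's rule (steps must be even)."""
    coef = math.exp(math.lgamma((df + 1) / 2) - math.lgamma(df / 2)) / math.sqrt(df * math.pi)

    def density(x):
        return coef * (1 + x * x / df) ** (-(df + 1) / 2)

    h = abs(t) / steps
    total = density(0.0) + density(abs(t))
    total += sum((4 if k % 2 else 2) * density(k * h) for k in range(1, steps))
    return max(0.0, 1.0 - 2.0 * total * h / 3.0)


def compare_conditions(cond1, cond2, alpha=0.05):
    """Per-segment comparison; returns label -> (t, df, p, significant)."""
    results = {}
    for label, (first, last) in SEGMENTS.items():
        a = [segment_score(r, first, last) for r in cond1]
        b = [segment_score(r, first, last) for r in cond2]
        t, df = student_t(a, b)
        p = two_sided_p(t, df)
        results[label] = (t, df, p, p < alpha)
    return results
```

Scoring items 13-18 and 19-24 separately is what allows the post-prompt segments to be contrasted with items 1-12, where both groups worked under identical conditions.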

RESULTS

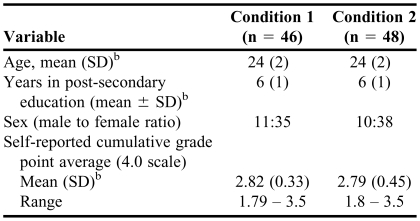

Of 139 students invited to participate in this study, 94 volunteered and were tested (response rate = 67.6%). All were senior-level (fourth-year) students enrolled in a BScPhm program. A demographic profile of participants is provided in Table 1.

Table 1.

Demographic Profile of Participants in a Study of the Use of Reflection-in-Action and Self-Assessment to Promote Critical Thinking Among Pharmacy Students

a Condition 1 = no interference during completion of test; condition 2 = specific prompts to reflect and self-assess provided to students at defined times

b p > 0.05

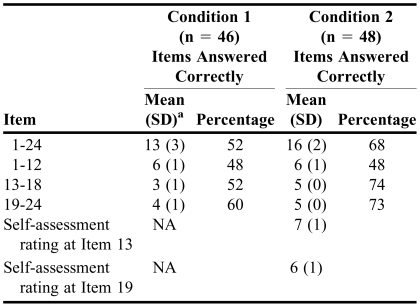

As illustrated in Table 2, there were no significant differences in performance between the 2 groups on the first 12 items; both groups showed a small improvement in performance (defined as selection of the correct answer, indicating the absence of heuristic reasoning). After the initial prompt to self-assess and reflect at question 13, significant differences in performance emerged: participants in condition 2 showed an immediate improvement in responses that was sustained for the remainder of the test (including after the second prompt to self-assess and reflect at question 18).

Table 2.

Test Performance Results of Pharmacy Students Participating in a Study to Determine Whether Use of Reflection-in-Action and Self-Assessment Promotes Critical Thinking, Mean (SD)

a p < 0.05; number of items answered correctly

DISCUSSION

The addition of self-assessment and reflection-in-action components in condition 2 appeared to positively influence performance on the second half of the test. Consistent with much of the literature on self-assessment, participants tended to overestimate their own abilities at the first self-assessment opportunity (the “above-average effect”14). Of interest, when asked to self-assess after question 18, participants appeared to underestimate their own abilities. Of particular relevance, however, was the improved performance on questions 13-24 after participants were asked both to self-assess and to reflect-in-action. We postulate that, having been prompted to self-assess and reflect, students recalibrated their responses going forward and began applying algorithmic rather than heuristic reasoning to all subsequent questions, not simply those on which they were specifically asked to reflect and self-assess. This reflection/self-assessment “echo” may have produced the overall improvement in performance. Consistent with Halpern's contention that both reflection and self-assessment prompt a cognitive reorientation,3 the results of this study suggest that simply alerting individuals to think (rather than respond automatically) may improve overall performance.

The intervention examined in this study was relatively straightforward, highly subjective, and not particularly challenging. Two simple self-cueing items were included on each occasion: “How confident are you that you have answered this question correctly?” coupled with “Briefly explain how you decided on the correct answer.” No formal education was provided on heuristic vs. algorithmic reasoning, and no additional critical thinking resources were provided. Importantly, the cueing questions were entirely self-directed; participants received no external feedback on their self-assessment or reflection-in-action.

Participants in this study represent a relatively homogeneous group with respect to age and experience. All had completed (and passed) formal courses in statistics and critical appraisal of medical literature, as well as modules in critical thinking, reading, and writing as part of their undergraduate studies. Somewhat surprisingly, students in condition 1 did not perform particularly well on this test, although (without prompting to self-assess or reflect) their performance improved somewhat over the course of the 24 items. This may be the result of a cueing effect: participants may have recognized that the test consisted of a series of “trick” questions and, consciously or subconsciously, become more discerning in their responses.

Of greater significance, however, was the magnitude of improvement noted in condition 2. These participants demonstrated a relatively immediate and sustained improvement in their performance following the first pair of self-assessment and reflection-in-action questions. This suggests there may be value in formal, structured, and directed self-assessment and reflection processes during a learning situation.

Results of this study also suggest that self-assessment and reflection-in-action may not be natural propensities for pharmacy students, but that once prompted to engage in these activities, pharmacy students demonstrate improved performance. Simply because pharmacy students are bright individuals with good academic records does not necessarily mean they will automatically or naturally self-assess and reflect without external prompting.

The study findings suggest that self-assessment and reflection-in-action need not be cumbersome or complicated in order to have a meaningful and measurable impact on performance. Two relatively simple questions strategically placed within an activity, with no external feedback, were followed by a significant improvement in performance on the remainder of the activity. There is nothing to suggest this improvement lasted beyond this activity; indeed, the fact that all participants had already completed coursework in critical thinking yet did not perform particularly well on the first half of the test suggests that pharmacy students must be constantly vigilant, and prompted, to ensure heuristic reasoning does not trump critical thinking. Arguably, these results suggest that participants have critical-thinking skills but not necessarily critical-thinking propensities, and that a challenge for educators is to find ways of reminding students to remind themselves to think critically. As suggested by the cognitive psychology literature and supported by this study, heuristic reasoning appears to be the default natural state of thinking, and overcoming it to engage in critical thinking requires cognitive energy, work, time, and prompting.

Mindful of the common errors in thinking associated with heuristic reasoning, it is important to note the limitations of this study. First, the sample size, location, and nature of participants all limit the generalizability of the findings to other settings. That caveat notwithstanding, results of this study align with findings from previous work examining heuristic reasoning.

A second limitation of this study is the design itself. While the study purports to examine the connection between self-assessment, reflection, and critical thinking, the 24-item instrument actually measures the absence of heuristic reasoning. For the purposes of this study, we assumed that the absence of heuristic reasoning indicates the presence of critical thinking, and clearly this is not always true. This assumption was made because of the methodological difficulty of testing critical thinking directly. The cognitive psychology literature has presented items, tests, and instruments that are useful for examining heuristic reasoning, but not algorithmic reasoning. While the absence of heuristic reasoning may not exactly equal critical thinking, there is support in the literature for the notion that it is an adequate proxy or approximation of critical thinking upon which to build a study such as this. Much of the literature on heuristic reasoning is over a decade old, and there has been little recent attention paid to this issue among cognitive psychologists.

A third limitation of this study is the instrument used to gather data. While each individual item had been cited and used in previous work, the instrument as a whole had not been subjected to thorough validation testing. In part, this reflects a general trend in the cognitive psychology literature from which the items were drawn; we found no reports of test-retest reliability testing of these items before their use in those studies. While basic principles of effective measurement suggest such testing should be undertaken, it would be difficult if not impossible because of the nature of the items themselves. Each item could be viewed as a “trick question,” to use a colloquialism. Test-retest evaluation of such questions may be significantly compromised by the cueing effect of simple exposure to each question. As demonstrated in this study with condition 1, simply taking a test with a series of questions such as these appears to “prime” individuals to anticipate and look for tricks, and this would compromise the integrity and value of any test-retest process.

We also relied upon self-reported grade point averages to establish comparability between participants in each condition of this study. In part, this was done to address the confidentiality concerns of the ethics review panel, since students' grades are considered private. However, reliance on self-reporting may have introduced inaccuracies, which may in turn have compromised our ability to establish comparability between the 2 groups.

Another limitation in a study of this sort relates to students' motivation to perform well. Students understood they were involved in a study and that its results would in no way affect their academic progress, grade point average, or any other feature of their day-to-day life. Consequently, students may not have had any particular motivation to do well or to perform to the best of their ability. While an incentive was used to encourage individuals to perform at their best, its effectiveness in doing so is questionable.

Despite these acknowledged limitations, the study provides an interesting perspective on the role of a relatively simple intervention to promote critical thinking. In this study, self-assessment coupled with reflection-in-action appeared to facilitate expression of participants' critical-thinking skills; participants who were not externally prompted to self-assess and reflect appeared less successful at internally prompting themselves to think critically. These results should not, however, be interpreted as a cause-effect relationship between external prompting to self-assess/reflect and improved performance. Further work is required to corroborate these findings and to demonstrate a stronger association between self-assessment/reflection and critical thinking.

With these limitations in mind, this study has important implications for pharmacy educators. First, while critical-thinking skills may be taught and assessed in formal courses, the conversion of such skills into self-directed critical-thinking propensities may be an important issue to address. Just because students can demonstrate critical thinking in a specific situation does not mean they will think critically in different, unobserved situations. Second, external prompting and reinforcement of self-assessment and reflection-in-action appear to contribute positively to the application of critical-thinking skills, while reliance on internal prompting to self-assess and reflect appears less successful. This suggests that educators must remain vigilant throughout an educational program and continuously prompt, encourage, and engage students in self-assessment and reflection-in-action, rather than simply assume that students will do this on their own. Finally, and perhaps most importantly, a subject for future research is how practitioners self-assess and reflect when they do not have instructors to prompt them.

CONCLUSIONS

This study has illustrated a positive association between prompted self-assessment and reflection-in-action and performance on tasks related to critical thinking, and has demonstrated that a relatively simple intervention may produce meaningful and measurable improvements in performance. Further research is required to more fully elucidate the nature and magnitude of the associations between self-assessment, reflection, and critical thinking. Educators must be mindful of the need for reinforcement and prompting to ensure that critical-thinking skills are matched with critical-thinking propensities.

ACKNOWLEDGEMENTS

This research was supported in part through funding by the Canadian Institutes of Health Research (CIHR) Summer Research Program.

Appendix 1. Modes of Heuristic Reasoning

Appendix 2. Sample Test Questions

REFERENCES

1. Stanovich KE. Who is rational? Studies of individual differences in reasoning. Mahwah, NJ: Lawrence Erlbaum Associates; 1999.

2. Sox HC, Blatt M, Higgins M, Marton K. Medical Decision Making. Boston, MA: Butterworth-Heinemann; 1988.

3. Halpern DF. Teaching critical thinking for transfer across domains: dispositions, skills, training, and metacognitive monitoring. Am Psychol. 1998;53:449–55. doi: 10.1037//0003-066x.53.4.449.

4. Tate S, Sills M. Development of critical reflection in the health professions. http://www.health.heacademy.ac.uk/publications/occasionalpaper/occasionalpaper04.pdf. Accessed: May 28, 2007.

5. DePaola DP, Slavkin HS. Reforming dental health professions education. J Dent Educ. 2004;68:1139–50.

6. Miller D. An assessment of critical thinking: can pharmacy students assess clinical studies like experts? Am J Pharm Educ. 2004;68(1):Article 5.

7. Kunda Z. Social Cognition: Making Sense of People. Cambridge, MA: MIT Press; 1999.

8. Kuhn D. Thinking as argument. Harvard Educ Rev. 1992;62:167–71.

9. Faust D. Research in human judgment and its application to clinical practice. Prof Psychol Res Pract. 1986;17:420–30.

10. Tversky A, Kahneman D. Judgment under uncertainty: heuristics and biases. Science. 1974;185:1124–31. doi: 10.1126/science.185.4157.1124.

11. Kahneman D, Tversky A. Choices, values, and frames. Am Psychol. 1984;39:341–50.

12. Groopman J. What's the trouble? How doctors think. http://www.newyorker.com/reporting/2007/01/29/070129fa_fact_groopman. Accessed: October 25, 2007.

13. Croskerry P. Achieving quality in clinical decision making: cognitive strategies and detection of bias. Acad Emerg Med. 2002;9:1184–1204. doi: 10.1111/j.1553-2712.2002.tb01574.x.

14. Kruger J. Lake Wobegon be gone! The “below-average” effect and the egocentric nature of comparative ability judgments. J Personality Soc Psych. 1999;77:221–32. doi: 10.1037//0022-3514.77.2.221.

15. Kruger J, Dunning D. Unskilled and unaware of it: how difficulties in recognizing one's own incompetence lead to inflated self-assessments. J Personality Soc Psych. 1999;77:1121–34. doi: 10.1037//0022-3514.77.6.1121.

16. Davis DA, Mazmanian PE, Fordis M, Van Harrison R, Thorpe KE, Perrier L. Accuracy of physician self-assessment compared with observed measures of competence: a systematic review. JAMA. 2006;296:1094–1102. doi: 10.1001/jama.296.9.1094.

17. Duffy FD, Holmboe ES. Self-assessment in life long learning and improving performance in practice: physician know thyself. JAMA. 2006;296:1137–9. doi: 10.1001/jama.296.9.1137.

18. Westberg J, Jason H. Collaborative Clinical Education: The Foundation of Effective Health Care. New York: Springer Publishing; 1993.

19. Kember D. Reflective Teaching and Learning in the Health Professions. New York: Blackwell Publishing; 2001.

20. Schon D. The Reflective Practitioner: How Professionals Think in Action. New York: Basic Books; 1983.