Abstract

Acoustic and perceptual similarities between Japanese and American English (AE) vowels were investigated in two studies. In study 1, a series of discriminant analyses, with F1, F2, and vocalic duration as input parameters, was performed to determine acoustic similarities between Japanese and AE vowels, each spoken by four native male speakers. In study 2, the Japanese vowels were presented to native AE listeners in a perceptual assimilation task, in which the listeners categorized each Japanese vowel token as most similar to an AE category and rated its goodness as an exemplar of the chosen AE category. Results showed that the majority of AE listeners assimilated all Japanese vowels into long AE categories, apparently ignoring temporal differences between 1- and 2-mora Japanese vowels. In addition, not all perceptual assimilation patterns reflected the context-specific spectral similarity patterns established by discriminant analysis. It was hypothesized that this incongruity between acoustic and perceptual similarity may be due to differences in the distributional characteristics of native and non-native vowel categories that affect the listeners’ perceptual judgments.

INTRODUCTION

Studies of cross-language speech perception have documented that the ease with which listeners can perceive specific contrasts among speech sounds is shaped by their linguistic environment during the early years of life (e.g., Best et al., 1988; Werker and Tees, 1984). Such early language-specific shaping in speech perception leads to great difficulty in learning a new phonological system as adults.

In order to explain the complex processes involved in cross-language speech perception and to predict difficulty in both perception and production experienced by adult second language (L2) learners, several models have been proposed. Among those, of particular interest here are the perceptual assimilation model (PAM) by Best (1995) and the speech learning model (SLM) by Flege (1995). Both PAM and SLM propose somewhat similar mechanisms in which learners’ native phonological system acts as a sieve in processing non-native phones. Both claim that the similarity between phonetic inventories of the learners’ native language (L1) and that of the L2 influences perception. In addition, both models suggest that differences in the phonetic realization (gestural and acoustic-phonetic details) of the “same” phonological segments in the two languages must be taken into consideration in establishing cross-language similarities, as well as the token variability within similar phonetic categories in the two languages—whether due to speaking styles, consonantal∕prosodic contexts, or individual speakers.

Thus far, numerous studies of vowels have attempted to provide evidence for PAM and SLM, but the majority has focused on how listeners from an L1 with fewer vowel categories assimilate vowels from larger L2 inventories that include vowels that do not occur as distinctive categories in the L1. The present study examined perceptual assimilation of L2 vowels from a small inventory by listeners whose L1 included more vowels differing in “quality” (spectral characteristics), but for whom vowel length was not phonologically contrastive, namely, perception of Japanese vowels by American English (AE) listeners.

The subsequent four sections present the two models in more detail, briefly describe the vowel systems of AE and Japanese, summarize previous studies that examined Japanese listeners’ perception of AE vowels (Strange et al., 1998; 2001) and AE listeners’ use of vowel duration in perceptual assimilation of German vowels (Strange et al., 2004; 2005), and present the design and research questions of the present study.

Perceptual assimilation model and speech learning model

PAM (Best, 1995) has its basis in the direct realist view of speech perception and hypothesizes that listeners directly perceive articulatory gestures from information specifying those gestures in the speech signal. It predicts relative difficulty in perceiving distinctions between non-native phones in terms of perceptual assimilation to and category goodness of the contrasting L2 phones with respect to L1 categories. According to PAM, if contrasting L2 phones are perceptually assimilated to two different L1 categories (two-category pattern), their discrimination should be excellent. However, if they are assimilated to a single L1 category as equally good (or poor) instances (single-category pattern), they are predicted to be very difficult to discriminate. On the other hand, if two L2 phones are assimilated to a single L1 category but are judged to differ in their “fit” to that category (category goodness pattern), their discrimination will be more accurate than single-category pairs but not as good as for two-category pairs. If both phones are perceived as speech sounds but cannot be assimilated consistently to any L1 category (uncategorizable), their discrimination will vary depending on their phonetic similarity to each other and perceptual similarity to the closest L1 categories. Finally, there can be cases where one L2 phone falls into an L1 category and the other falls outside the L1 phonological space (categorizable-uncategorizable pattern). In such a case, PAM predicts their discrimination to be very good.1

Although much of Flege and colleagues’ work has focused on the accentedness of L2 productions by inexperienced and experienced L2 learners, his SLM (Flege, 1995) also considers the effects of cross-language phonetic similarities in predicting relative difficulties in learning both to perceive and produce L2 phonetic categories. SLM hypothesizes that L2 learners initially perceptually assimilate L2 phones into their L1 phonological space along a continuum from “identical” through “similar” to “new” (equivalence classification) based on L2 phones’ phonetic similarities to L1 categories. If an L2 phone is “identical” to an L1 category, L1 patterns continue to be used, resulting in relatively little difficulty in perception and production. At the other extreme, if an L2 phone is phonetically “new” (very different from any L1 category), it will not be perceptually assimilated to any L1 category and eventually a new category will be created that guides both perception and production of the L2 phone. Therefore, perception and production will be relatively accurate for “new” L2 phones after some experience with the L2. However, if an L2 phone is phonetically “similar” to an L1 category, it will continue to be assimilated as a member of that L1 category. According to SLM, this pattern may lead to persistent perception and production difficulties because even though there may be a mismatch between L1 and L2 phones, learners continue to use their L1 category in perception and production. If two L2 phones are assimilated as “identical” or “similar” to a single L1 category, discrimination is predicted to be difficult, just as for PAM.

Studies have shown that the relative difficulty that late L2 learners have in discriminating L2 consonant and vowel contrasts varies considerably due to the phonetic context (i.e., allophonic variation) and token variability contributed by speakers, speaking rate, and speaking style differences (cf. Schmidt, 1996 for consonants; Gottfried, 1984 for vowels). This causes difficulties even for contrasts that may be distinctive in both languages but differ in phonetic detail across languages (cf. Pruitt et al., 2006, for perception of Hindi [d-t] and [dh-th] by AE listeners and Japanese listeners; Rochet, 1995, for perception of French vowels [i-y-u] by AE listeners and Brazilian Portuguese listeners). However, the majority of these reports are based on assimilation of more L2 categories into fewer L1 categories (“many-into-few” assimilation), and at present, it is not known whether PAM or SLM can account for the cases where learners are required to assimilate fewer L2 categories into a more differentiated L1 phonological inventory (“few-into-many” assimilation).

Japanese and American English vowel inventories

According to traditional phonological descriptions of Japanese and AE vowel inventories, the two languages differ markedly in their use of quality (tongue, lip, and jaw positions) and quantity (duration of vocalic gestures) to differentiate vowels. Japanese has a “sparse” system with five distinctive vowel qualities [i, e, a, o, Ɯ] that form five long (2-mora)-short (1-mora) pairs (Homma, 1992; Ladefoged, 1993; Shibatani, 1990). These vowels vary in height (three levels) and backness (front versus back). Because each quality occurs as a long-short pair, vowel quantity (duration) is phonologically contrastive; phonetically, long-short pairs are reported to be very similar in spectral structure (Hirata and Tsukada, 2004). (Hereafter, the Japanese vowels will be transcribed as short [i, e, a, o, Ɯ] and long [ii, ee, aa, oo, ƜƜ].) Only the mid, back vowels [o, oo] are rounded, and all vowels are monophthongal. In addition, the central and back vowels have distinctive palatalized forms [ja(a), jo(o), jƜ(Ɯ)] in the majority of consonantal contexts.

In contrast to Japanese, AE has a relatively “dense” vowel system, described as including 10-11 spectrally distinctive, nonrhotic vowels [iː, ɪ, eɪ, ε, æː, ɑː, ʌ, ɔː, oʊ, ʊ, uː] that vary in height (five levels) and backness (front versus back), one rhotic vowel [ɝ], and three true diphthongs [aɪ, ɔɪ, aʊ]. The mid, low to high, back vowels [ɔː, oʊ, ʊ, uː] are rounded, and [uː] can be palatalized in limited phonetic contexts (e.g., [ju] in “view, pew, cue”) and is allophonically fronted in coronal contexts (Hillenbrand et al., 1995; Strange et al., 2007). Although to a lesser extent than the true diphthongs, the mid, front [eɪ] and mid, back [oʊ] are diphthongized in slow speech and open syllable contexts, and several others of the so-called monophthongs show “vowel-inherent spectral change” in some contexts and some dialects (Hillenbrand et al., 1995; Nearey, 1989; Stack et al., 2006). In many dialects of AE, the distinction between [ɑː, ɔː] has been neutralized to a low, slightly rounded [ɒː]. Finally, while vowel length is not considered phonologically distinctive in AE, phonetically, “intrinsic duration” of AE vowels varies systematically (Peterson and Lehiste, 1960), with seven long vowels [iː, eɪ, æː, ɑː, ɔː, oʊ, uː] and four short vowels [ɪ, ε, ʌ, ʊ]. Vocalic duration also varies allophonically as a function of the voicing of the following consonant, the syllable structure, and other phonetic and phonotactic variables (Klatt, 1976; Ladefoged, 1993).

Previous cross-language vowel perception studies

Although they differ in detail, both PAM and SLM hypothesize that L2 learners’ perception and production error patterns can be predicted from the phonetic similarity between L1 and L2 phones because they posit that L2 learners initially attempt to perceptually assimilate L2 phones to L1 categories. However, it is important to establish phonetic similarity independently of discrimination difficulties in order to make these claims noncircular. In some studies, Best and her colleagues describe phonetic similarity in terms of gestural features (degree and place of constriction by the tongue, lip postures, velar gestures), using rather abstract definitions of gestural characteristics (e.g., Best et al., 2001). In many of Flege’s studies of vowels, acoustic-phonetic similarity has been established through comparisons of formant frequencies in an F1∕F2 space (e.g., Fox et al., 1995; Flege et al., 1999). Best, Flege, and other researchers working within these theoretical frameworks have also employed techniques that directly assess cross-language perceptual similarity of L2 phones, using either informal L1 transcriptions by non-native listeners or a cross-language identification or so-called perceptual assimilation task (see Strange, 2007 for a detailed description of these techniques). In our laboratory, listeners perform a perceptual assimilation task in which they are asked to label (using familiar key words) multiple tokens of each L2 vowel as most similar to (underlying representations of) particular L1 vowel categories, and to rate their “category goodness” as exemplars of the L1 category they chose on a Likert scale (e.g., 1=very foreign sounding; 7=very native sounding).
This technique has been successfully used in previous studies that investigated perceptual assimilation of larger L2 vowel inventories by listeners with smaller L1 vowel inventories (Strange et al., 1998; 2001; 2004; 2005), but has never been used to assess assimilation of L2 vowels by listeners from L1s with more vowels. The following is a summary of those previous assimilation studies.

Strange et al. (1998) examined the influence of speaking style and speakers (i.e., [hVb(ə)] syllables produced in lists [citation condition] versus spoken in continuous speech [sentence condition]) on the assimilation of 11 AE vowels [iː, ɪ, eɪ, ε, æː, ɑː, ʌ, ɔː, oʊ, ʊ, uː] by Japanese listeners living in Japan at the time of testing. Acoustic analysis showed that the duration ratios between long and short AE vowels were the same for both conditions (1.3), but the absolute durations of vowels in sentences were somewhat longer than those in citation form. The results of perceptual assimilation tests showed that none of the 11 AE vowels was assimilated extremely consistently (i.e., with >90% consistency within and across listeners) into a single Japanese category in either condition. Japanese listeners assimilated short AE vowels in both conditions primarily into 1-mora Japanese vowels (94% in citation versus 83% in sentence), whereas the proportion of long AE vowels assimilated to 2-mora Japanese vowels was twice as great in the sentence condition (85%) as in the citation condition (42%). This indicated that Japanese listeners were highly attuned to vowel duration when they were given a prosodic context in which to judge the (small) relative duration differences. As for spectral assimilation patterns, consistent patterns were observed for four vowels [iː, ɑː, ʊ, uː] in both conditions. Six vowels [ɪ, eɪ, ε, æː, oʊ, ɔː] were assimilated primarily to two Japanese spectral categories, but patterns changed between citation and sentence conditions for the first five of these vowels, reflecting the influence of speaking style. Finally, [ʌ] was assimilated to more than two Japanese categories, suggesting its “uncategorizable” status.

In Strange et al. (2001), only sentence materials were used but vowels were produced in six consonantal contexts [b-b, b-p, d-d, d-t, g-g, g-k]. Similar to Strange et al. (1998), none of the 11 AE vowels were consistently assimilated into a single Japanese category, with the pattern varying with context and∕or speaker for six vowels. Assimilation of long AE vowels to 2-mora Japanese categories also varied with speakers and contexts. More AE vowels were assimilated to 2-mora categories when followed by voiced consonants, indicating that Japanese listeners attributed (lengthened) vocalic duration to the vowels rather than to the consonants.

Strange et al. (2004; 2005) explored AE listeners’ assimilation of North German (NG) vowels. NG has 14 vowel qualities that form seven spectrally adjacent long-short pairs. However, the spectral overlap between the long-short pairs is less marked than for Japanese, and the long∕short duration ratios of spectrally similar vowels (1.9 in citation, 1.5 in sentence materials) are smaller than for Japanese but greater than for AE. Results of perceptual assimilation tests showed that AE listeners categorized the 14 NG vowels primarily according to their spectral similarity to AE vowels and largely ignored vowel duration differences.

The present study

The present study is part of a series investigating the influence of token variability on cross-language vowel perception. Thus far, as described above, we have investigated many-into-few assimilation patterns by systematically manipulating sources of variation (e.g., speaking style and consonantal context). The present study is the first of our few-into-many assimilation studies and focuses on the influence of speaking style. Other factors, such as consonantal context, speaking rate, and prosodic context, are left for future studies.

As in Strange et al. (1998), the effects of token variability were investigated using two utterance forms (i.e., citation and sentence) produced by multiple speakers, while phonetic context was held constant ([hVb(a)]). This specific consonantal context was chosen because in Japanese and AE, both [h] and [b] have minimal coarticulatory influence on the spectral characteristics of the vocalic nucleus. Thus, the vowels in these materials should reflect their “canonical” spectral structure that, in turn, specifies their “articulatory targets.” In addition, the CVCV disyllables are phonotactically appropriate in both languages, and the consonants are very similar. The acoustic measurements of Japanese vowels are considered of archival value because no study has compared the distributional characteristics of Japanese and American vowels in comparable materials, even though averages of spectral and duration measures for Japanese vowels have been reported (e.g., Han, 1962; Homma, 1981; Keating and Huffman, 1984; Hirata and Tsukada, 2004). While the AE corpus was partially described in our previous studies (Strange et al., 1998; 2004), the Japanese corpus has not been described in any published studies.

Based on the phonetic and phonological differences between AE and Japanese vowel inventories described above, it was predicted that most of the Japanese vowels would spectrally overlap one or more AE vowels. Because AE has twice as many spectral categories as Japanese, the SLM would predict that all Japanese vowels should be assimilated into some AE category as “identical” or “similar,” and none as “new,” with relatively high category goodness ratings. PAM would predict that uncategorizable or categorizable-uncategorizable patterns should not be observed, but that other patterns may occur as a function of token variability within a particular Japanese category, as manifested in differences in category goodness ratings.

ACOUSTIC SIMILARITY OF JAPANESE AND AE VOWELS

This section reports the results of both within-language and cross-language acoustic comparisons. Linear discriminant analysis (cf. Klecka, 1980) was chosen as the quantitative method because its conceptual framework resembles the perceptual assimilation process. Discriminant analysis is a multidimensional correlational technique that establishes classification rules to maximally distinguish two or more predefined nominal categories (here, vowel categories) from one another. The classification rules are specified by linear combinations of input variables (here, acoustic measures such as formant frequencies and vowel duration), with the weight for each variable chosen to maximize the separation of categories, based on the center of gravity (centroid) of each category and the within-category dispersion in the input set. By applying the classification rules established for the input set, the category membership of tokens in the input set can be re-evaluated (posterior classification), and the amount of overlap between categories can be quantified in terms of incorrectly classified tokens. It is also possible to classify a new set of data (test set) using the previously established classification rules.
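To make the classification logic concrete, the following is a minimal pure-Python sketch of linear discriminant classification. It assumes the textbook formulation with equal prior probabilities for all categories and a pooled within-category covariance matrix; the text does not state which software, priors, or options were used, so the function names (`fit_lda`, `classify`, `percent_correct`) are hypothetical illustrations, not the authors' procedure. Each token is a tuple of input values, for example (F1 Bark, F2 Bark) or (F1 Bark, F2 Bark, duration).

```python
from collections import defaultdict


def invert(matrix):
    """Invert a small square matrix by Gauss-Jordan elimination with partial pivoting."""
    n = len(matrix)
    # Augment the matrix with the identity: [A | I]
    aug = [[float(v) for v in row] + [1.0 if i == j else 0.0 for j in range(n)]
           for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("pooled covariance matrix is singular")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        scale = aug[col][col]
        aug[col] = [v / scale for v in aug[col]]
        for r in range(n):
            if r != col:
                factor = aug[r][col]
                aug[r] = [a - factor * b for a, b in zip(aug[r], aug[col])]
    # The right half of the augmented matrix now holds A^-1
    return [row[n:] for row in aug]


def fit_lda(tokens, labels):
    """Estimate category centroids and the inverse pooled within-category covariance."""
    groups = defaultdict(list)
    for x, y in zip(tokens, labels):
        groups[y].append(x)
    dim = len(tokens[0])
    # Center of gravity (centroid) of each category
    means = {y: [sum(col) / len(xs) for col in zip(*xs)] for y, xs in groups.items()}
    # Within-category scatter summed over categories, divided by N - K degrees of freedom
    pooled = [[0.0] * dim for _ in range(dim)]
    for y, xs in groups.items():
        for x in xs:
            d = [a - b for a, b in zip(x, means[y])]
            for i in range(dim):
                for j in range(dim):
                    pooled[i][j] += d[i] * d[j]
    dof = len(tokens) - len(groups)
    pooled = [[v / dof for v in row] for row in pooled]
    return means, invert(pooled)


def classify(model, x):
    """Return the category with the largest linear discriminant score (equal priors)."""
    means, inv_cov = model

    def score(m):
        # w = S^-1 m; score = x.w - 0.5 * m.w
        w = [sum(inv_cov[i][j] * m[j] for j in range(len(m))) for i in range(len(m))]
        return sum(a * b for a, b in zip(x, w)) - 0.5 * sum(a * b for a, b in zip(m, w))

    return max(means, key=lambda y: score(means[y]))


def percent_correct(model, tokens, labels):
    """Share of tokens (in %) assigned to their intended category."""
    hits = sum(classify(model, x) == y for x, y in zip(tokens, labels))
    return 100.0 * hits / len(tokens)
```

Calling `percent_correct` on the same tokens passed to `fit_lda` corresponds to posterior (re)classification of the input set; calling `classify` on tokens not used in fitting (for example, sentence tokens with citation-form rules, or Japanese tokens with AE rules) corresponds to classifying a test set.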

In the present study, three types of discriminant analysis were performed. First, by performing analyses with and without vowel duration as a variable, the contribution of spectral and duration cues in distinguishing vowel categories was evaluated for each language (within-language, within-condition analyses). Second, by using the classification rules for the citation materials, the extent of changes in acoustic structure across speaking styles in each language was characterized (within-language, cross-condition analyses). Lastly, using the classification rules for the AE materials in each speaking style, the similarity between the spectral characteristics of Japanese and AE vowels was evaluated for citation and sentence conditions separately (cross-language analyses).

Method

Speakers and stimulus materials

Four adult Japanese males (speakers 1–4; 32–39 yr old) served as speakers. All were native speakers of Tokyo dialect and had lived in Japan all their lives. All had at least eight years of English language education beginning in the seventh grade, but in the Japanese school system, little emphasis was placed on the spoken language. They had no training in phonetics and spoke very little English in their daily lives. They reported no hearing or speech problems.

Stimuli were five long∕short pairs of Japanese vowels [ii∕i, ee∕e, aa∕a, oo∕o, ƜƜ∕Ɯ]. These vowels were embedded in a nonsense [hVba] disyllable and recorded in two speaking styles. First, these disyllables were read singly in lists (citation form), each preceded by an identifying number and a pause, and spoken with falling intonation. Second, each [hVba] disyllable was embedded in a carrier sentence (sentence form), Kore wa [hVba] desu ne [koɾewa#hVba#des(Ɯ)ne], which translates to “This is [hVba], isn’t it?” Each speaker read four randomized lists of the 10 vowels in each form. Only the last three readings were used as stimuli; when problems with fluency or voice quality were detected, the token from the first reading was used as a replacement. Thus, a total of 240 tokens (10 vowels×3 repetitions×2 forms×4 speakers) were included in the final analysis.

All tokens were recorded in an anechoic sound chamber at ATR Human Information Processing Research Laboratories in Kyoto, Japan, using a condenser microphone (SONY ECM77-77s) connected to a DAT recorder (SONY PCM-2500A, B). Each speaker sat approximately 22 cm from the microphone. Speakers read stimuli from randomized lists with identification numbers preceding each token. Speakers were instructed to read at their natural rate without exaggerating the target nonsense syllables. Recorded lists were digitally transferred to Audio Interchange File Format (AIFF) files and down-sampled from 48 to 22.05 kHz, using a Power Macintosh 8100∕100 computer and SOUNDEDIT16 (Version 1.0.1, Macromedia). AIFF files of individual tokens for analysis and perceptual testing were created from the down-sampled files by deleting the identification numbers.

The AE corpus was produced and analyzed using similar techniques, except that the AE tokens were recorded in a sound booth at the University of South Florida (USF). Four male speakers of General American English each recorded four tokens of each of the 11 vowels in the two speaking styles; three tokens were retained for analysis. Further details of the stimulus preparation for the AE stimuli are reported elsewhere (Strange et al., 1998).

Acoustic analysis

Acoustic analysis was performed using a custom-designed spectral and temporal analysis interface in SOUNDSCOPE∕16 1.44 (PPC)™ speech analysis software (Copyright 1992 GW Instruments, Somerville, MA 02143). First, the vocalic duration of the target vowel was determined by locating the beginning and end of the target vowel in both the spectrographic and waveform representations of the stimuli. The vowel onset was the first pitch period after the preceding voiceless segment [h], and the vowel offset was the point at which the waveform amplitude dropped and the higher formants ceased in the spectrogram, indicating the start of the [b] closure; vocalic duration was calculated as the difference between these two time points. The first three formants (F1, F2, and F3) were measured at the 25%, 50%, and 75% temporal points of the vocalic duration. Only the measurements from the 50% point (midsyllable) are used in the discriminant analyses (average values for each vowel are reported in the Appendix). Linear predictive coding (LPC) spectra (14-pole, 1024-point) were computed over approximately three pitch periods (a 25-ms Hamming window) around the 50% point. When a cross-check with the spectrographic representation indicated that the LPC estimate reflected merged, spurious, or missed formants, the formant frequency was estimated manually from the wideband fast Fourier transform (FFT) representation of the windowed portion. In the very few cases in which the wideband FFT also failed, a narrowband FFT was used.
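The timing arithmetic above can be sketched in a few lines. This is an illustration under stated assumptions, not the SOUNDSCOPE procedure: the helper names are hypothetical, the window is assumed to be centered on the measurement point, and because the text reports formants as Bark values without naming a conversion formula, the sketch assumes the widely used Traunmüller (1990) approximation without its low- and high-end corrections.

```python
def measurement_points(onset_ms, offset_ms, proportions=(0.25, 0.50, 0.75)):
    """Return vocalic duration and the time points (ms) at which formants are measured."""
    duration = offset_ms - onset_ms
    if duration <= 0:
        raise ValueError("vowel offset must follow vowel onset")
    return duration, [onset_ms + p * duration for p in proportions]


def analysis_window(center_ms, width_ms=25.0):
    """Start and end (ms) of an analysis window assumed to be centered on a measurement point."""
    half = width_ms / 2.0
    return center_ms - half, center_ms + half


def hz_to_bark(f_hz):
    """Assumed Hz-to-Bark conversion: Traunmüller (1990), uncorrected."""
    return 26.81 * f_hz / (1960.0 + f_hz) - 0.53


# Hypothetical token: onset at 120 ms, offset at 310 ms, midpoint F1 = 300 Hz, F2 = 2200 Hz
duration, (t25, t50, t75) = measurement_points(120.0, 310.0)
start, end = analysis_window(t50)
f1_bark, f2_bark = hz_to_bark(300.0), hz_to_bark(2200.0)
```

Only the 50% point feeds the discriminant analyses described in the text; the 25% and 75% points are computed the same way.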

Results

Within-language comparisons: Within and across speaking styles

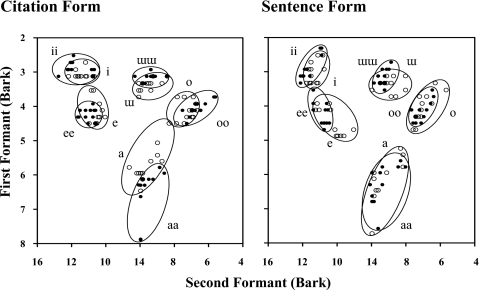

Figure 1 presents the target formant frequency data (F1∕F2 Bark values at vowel midpoint) for the 120 tokens of Japanese vowels produced in citation form (left panel) and the 120 tokens produced in sentence form (right panel). Ellipses surround all 12 tokens of each vowel (4 speakers×3 tokens); tokens of long vowels are represented by filled circles and tokens of short vowels by open circles. As Fig. 1 shows, there was little spectral overlap among the five long∕short vowel pairs in either speaking style, despite considerable acoustic variability within and across speakers. Deviant tokens were found for the low vowels: for [a], one each in the citation condition (by speaker 1) and in the sentence condition (by speaker 2); for [aa], two in the citation condition and one in the sentence condition (all by speaker 2). However, since these tokens (and all others) were readily identified by a native Japanese listener as the intended vowels, they were considered extreme cases of token variability and were not eliminated from the analysis. In contrast, long∕short pairs of the same vowel quality overlapped considerably, especially in the sentence materials. Thus, as predicted, duration appears to play an important role in separating these contrasting vowel categories.

Figure 1.

Formant 1∕formant 2 (bark) plots for ten Japanese vowels produced by four male speakers in [hVba] contexts in citation form (left panel) and sentence form (right panel): 12 tokens per vowel. Filled circles are for 2-mora vowels; open circles are for 1-mora vowels.

The duration ratios of Japanese long to short vowels (L∕S ratios) averaged 2.9 for both speaking styles (ranging from 2.6 to 3.4 across the five vowel pairs; see the Appendix for details). That is, long vowels in both forms were, on average, nearly three times as long as short vowels. Note that these L∕S ratios for Japanese vowels are greater than those for the German vowels reported in Strange et al. (2004) (average L∕S ratio=1.9 for citation form, 1.5 for sentence form). As previously reported, average L∕S ratios for AE vowels were markedly smaller than those for Japanese vowels (1.3 for both citation and sentence forms).
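The L∕S ratio computation can be sketched as follows. The text does not specify how the per-pair ratios were derived, so this sketch assumes that each pair's ratio is the mean long-vowel duration divided by the mean short-vowel duration and that the overall figure is the unweighted mean of the five per-pair ratios. The function names and the toy durations in the test are hypothetical, not data from this study.

```python
from statistics import mean


def ls_ratio(long_durations_ms, short_durations_ms):
    """Ratio of mean long-vowel duration to mean short-vowel duration for one vowel quality."""
    return mean(long_durations_ms) / mean(short_durations_ms)


def summarize_ls_ratios(pairs):
    """pairs maps a vowel quality to (long durations, short durations).

    Returns the per-pair ratios and their unweighted average (an assumed summary method).
    """
    ratios = {q: ls_ratio(longs, shorts) for q, (longs, shorts) in pairs.items()}
    return ratios, mean(list(ratios.values()))
```

Averaging per-pair ratios weights each vowel quality equally; pooling all long and all short durations first would instead let longer vowel qualities dominate the summary.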

In order to statistically evaluate the above observations, to quantify the spectral overlap among vowels, and to assess the contribution of duration in further differentiating vowel categories, two series of discriminant analyses were performed for each language corpus. The first analysis used spectral parameters alone (F1 and F2),2 and the second analysis used spectral parameters plus vocalic duration as input variables. In these analyses, “correct classification” was defined as the posterior classification of each token as the speaker-intended vowel category. For the formant values, as in the previous studies (Strange et al., 2004; 2005; 2007), only the measurements at vowel midpoint were entered. Table 1 presents the results for the Japanese citation and sentence forms (also depicted in Fig. 1) and for the comparable AE corpus. For both languages, the values in the first two rows represent the within-condition differentiation of vowels in the relevant input sets. The third row shows the results of cross-condition analyses (citation as input set, sentence as test set).

Table 1.

Percent correct classification in within-form and cross-form discriminant analyses, based on spectral parameters alone (F1∕F2 in Bark; middle column) and on spectral parameters plus vocalic duration (right column).

| Corpus and form | F1∕F2 (%) | F1∕F2 + duration (%) |
|---|---|---|
| Japanese corpus | | |
| Citation form | 77 | 98 |
| Sentence form | 65 | 100 |
| Cross-form (citation input, sentence test) | 62 | 98 |
| American English corpus | | |
| Citation form | 85 | 91 |
| Sentence form | 86 | 95 |
| Cross-form (citation input, sentence test) | 78 | 89 |

As these overall correct classification rates indicate, the ten Japanese vowels were not well differentiated by spectral parameters alone, especially in the sentence materials. However, almost all of the misclassifications were within long∕short vowel pairs (25 of 28 cases for citation form and 41 of 42 cases for sentence form). Therefore, when vowel duration was included as a parameter, classification became nearly perfect. Thus, the five Japanese spectral categories are well differentiated even when variability due to speaker differences is included in the corpus, and with the addition of duration, those five spectral categories are further separated into ten distinctive vowel categories.
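The error breakdown above can be reproduced with a short tally. This sketch assumes a hypothetical labeling convention in which a long vowel is written by doubling the short-vowel symbol (as in the transcriptions used here), so a misclassification counts as "within pair" when the first symbol of the intended and assigned labels matches. The helper names are illustrative only.

```python
def quality(label):
    """Collapse a Japanese vowel label to its spectral quality ('ii' -> 'i', 'ƜƜ' -> 'Ɯ')."""
    return label[0]


def error_summary(intended, classified):
    """Return (percent correct, number of errors, errors within long/short pairs)."""
    errors = [(t, c) for t, c in zip(intended, classified) if t != c]
    within_pair = sum(quality(t) == quality(c) for t, c in errors)
    pct = 100.0 * (len(intended) - len(errors)) / len(intended)
    return pct, len(errors), within_pair
```

As an arithmetic check against the citation-form figures reported above, 28 errors among 120 tokens give 100 × 92/120 ≈ 76.7, which rounds to the 77% in Table 1.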

For the comparable analyses of AE vowels, spectral differentiation (F1∕F2 Bark values alone) of the 11 vowels was somewhat better than for Japanese vowels, reflecting the fact that phonetically long and short AE vowels differ spectrally. As reported in Strange et al. (2004), many of the misclassifications of AE tokens were confusions between spectrally adjacent pairs [iː∕eɪ, eɪ∕ɪ, eɪ∕ε, æː∕ε, ɑː∕ʌ, ɔː∕oʊ, oʊ∕ʊ, uː∕ʊ] and between [ɑː] and [ɔː]. When duration was included as an input variable, correct classification rates for both forms improved, but to a lesser degree than for Japanese.

The next series of analyses was performed to quantify cross-condition similarity. When sentence materials were classified using the parameter weights and centers of gravity established for citation materials (F1∕F2 Bark values), only 62% of Japanese vowels were correctly classified. However, when both spectral and temporal parameters were included, only two tokens were misclassified, each as a vowel of the same temporal category ([ee] as [ii] and [Ɯ] as [o]). Note that the absolute durations of vowels in sentences were, on average, 15% shorter than those in citation form. These results indicate that the spectral differences among the five vowel categories are maintained across speaking styles and that, despite the changes in vowel duration between the two speaking styles, the temporal differences between 1- and 2-mora Japanese vowels are still large enough to differentiate the ten categories.

As for the cross-condition analysis of the AE vowels, it was predicted that including duration should help differentiate the 11 AE vowels in sentence form, but to a lesser degree than for Japanese, because there was less spectral overlap among AE vowels in each speaking style. As predicted, the correct classification rate in the cross-condition analysis improved by 11 percentage points when duration was included, but 15 tokens remained misclassified. These 15 tokens comprised six long∕short confusions (number of misclassified tokens in parentheses): [ɪ] as [eɪ] (1), [ε] as [æː] (1), [ʌ] as [ɑː] (1), [oʊ] as [ʊ] (1), and [uː] as [ʊ] (2); eight long∕long confusions: [eɪ] as [iː] (2), [ɑː] as [ɔː] (4), and between [ɔː] and [oʊ] (2); and one short∕short confusion: [ʊ] as [ʌ].

Cross-language spectral similarity

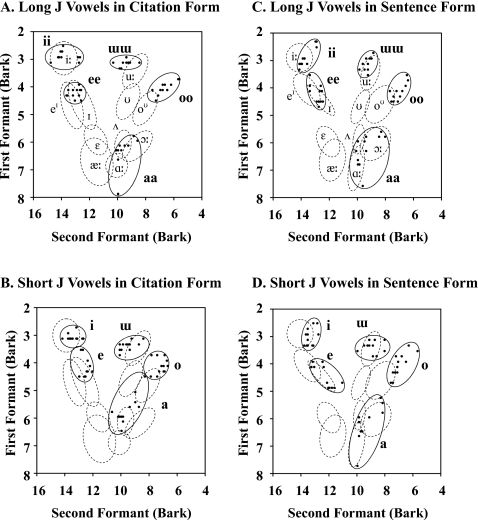

Additional discriminant analyses were performed in which the spectral parameters for the 11 AE vowels served as the input set, and those for the ten Japanese vowels served as the test set. That is, these analyses determined the spectral similarity of Japanese vowels to AE vowels using the classification rules established for AE vowel categories. Separate analyses were performed for the two speaking styles. Figure 2 displays the F1∕F2 Bark plots of Japanese and AE vowels. Distributions of vowels in the two languages are indicated by the ellipses (solid for Japanese and dashed for AE) that surround all 12 tokens of each vowel. Individual Japanese tokens are represented by filled circles; for clarity, individual AE tokens are not shown. Panels on the left are for citation materials, and the panels on the right are for sentence materials. The two top panels are for long Japanese vowels, and the two bottom panels are for short Japanese vowels. The same dashed ellipses for the 11 AE vowels in each form are shown in the background. As can be seen, most Japanese mid and short, low vowels were higher (lower F1 values) on average3 and revealed greater within-category variability than the comparable AE vowels while the front and back, high vowels were spectrally quite similar across languages.

Figure 2.

Formant 1∕formant 2 (Bark) plots for 2-mora (upper panels) and 1-mora Japanese vowels (lower panels) produced by four male speakers in [hVba] in citation form (left panels) and sentence form (right panels) superimposed on 11 AE vowels (dotted ellipses). Solid ellipses encircle all 12 tokens of a Japanese vowel; individual AE tokens are not plotted.

In the cross-language discriminant analysis for the citation materials, the citation AE vowel corpus was used as the input set (F1∕F2 Bark values as input parameters); then all tokens of the Japanese citation corpus were classified using the rules established for these AE categories. The results are summarized in Table 2, where Japanese vowels are grouped in terms of the duration (1-mora and 2-mora) and tongue height (high, mid, low), as shown in the first column. The third and fourth columns display the modal AE vowels and the number of Japanese vowel tokens (max=12) classified as most similar to the respective AE category. In order to make acoustic classification consistent with the dialect profile of the listeners who performed the perceptual assimilation task in study 2, and to make results comparable between studies, Japanese tokens classified into AE [ɑː] or [ɔː] were pooled ([ɑː-ɔː]). The last two columns show the classification of the remaining tokens.
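The logic of classifying Japanese tokens with rules derived from AE categories can be illustrated with a deliberately simplified stand-in. The study trained discriminant functions on individual AE tokens, which are not reported here, so this sketch instead assigns a Japanese vowel to the AE category whose Appendix mean is nearest in F1/F2 Bark space (a nearest-centroid rule, not a linear discriminant analysis). The Hz-to-Bark conversion used (Traunmüller's 1990 approximation, without its end corrections) is an assumption; the paper does not state which formula was applied.

```python
# Simplified nearest-centroid stand-in for the cross-language discriminant
# analysis: train on AE category means, classify a Japanese vowel.
import math


def hz_to_bark(f_hz: float) -> float:
    """Traunmüller (1990) Bark approximation, uncorrected form (assumed)."""
    return 26.81 * f_hz / (1960.0 + f_hz) - 0.53


# AE citation-form mean F1, F2 (Hz), taken from the Appendix.
AE_CITATION = {
    "iː": (312, 2307), "ɪ": (486, 1785), "eɪ": (472, 2062),
    "ε": (633, 1588), "æː": (730, 1568), "ɑː": (753, 1250),
    "ʌ": (635, 1189), "ɔː": (678, 1062), "oʊ": (500, 909),
    "ʊ": (489, 1148), "uː": (348, 995),
}


def classify(f1_hz, f2_hz, centroids):
    """Return the AE category whose mean is nearest in Euclidean Bark distance."""
    z1, z2 = hz_to_bark(f1_hz), hz_to_bark(f2_hz)

    def distance(label):
        c1, c2 = centroids[label]
        return math.hypot(z1 - hz_to_bark(c1), z2 - hz_to_bark(c2))

    best = min(centroids, key=distance)
    # Pool [ɑː] and [ɔː], as in Tables 2 and 3.
    return "ɑː–ɔː" if best in ("ɑː", "ɔː") else best


if __name__ == "__main__":
    print(classify(295, 2243, AE_CITATION))  # Japanese citation-form [ii] mean
    print(classify(437, 1785, AE_CITATION))  # Japanese citation-form [e] mean
```

Applied to category means, this rule agrees with the modal classifications in Table 2 for [ii] and [e], but because it ignores within-category covariance it cannot reproduce the token-level spread that the discriminant analysis captures.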

Table 2.

Acoustic similarity (F1∕F2 in Bark values) of Japanese and American English (AE) vowels: hVb(a) syllables produced in citation.

| Group | Japanese vowel | Modal classification: AE vowel | No. of tokens | Other categories: AE vowel | No. of tokens |
|---|---|---|---|---|---|
| 2-mora | | | | | |
| High | ii | iː | 12 | | |
| | ƜƜ | uː | 12 | | |
| Mid | ee | eɪ | 7 | ɪ | 5 |
| | oo | oʊ | 12 | | |
| Low | aa | ɑː–ɔː | 9 | ʌ | 3 |
| 1-mora | | | | | |
| High | i | iː | 12 | | |
| | Ɯ | uː | 9 | ʊ | 3 |
| Mid | e | ɪ | 8 | eɪ | 2 |
| | | | | iː | 1 |
| | | | | ʊ | 1 |
| | o | oʊ | 9 | uː | 3 |
| Low | a | ʌ | 7 | ɑː–ɔː | 2 |
| | | | | ε | 1 |
| | | | | oʊ | 1 |
| | | | | ʊ | 1 |

For the citation materials, 52 out of 60 tokens of 2-mora vowels were classified as spectrally comparable long AE vowels [iː, eɪ, ɑː-ɔː, oʊ, uː]. Some tokens of mid, front [ee] and low [aa] were spectrally similar to short AE categories ([ɪ] and [ʌ], respectively). In contrast, only 21 out of 60 tokens of 1-mora vowels were classified as spectrally comparable short AE vowels [ɪ, ε, ʌ, ʊ], suggesting that most 1-mora vowels are spectrally more similar to AE long vowels. The Japanese 1-mora mid, front and low vowels were classified into two or more spectrally adjacent AE vowels, indicating that they are intermediate among several AE categories.

Table 3 presents the results of the discriminant analysis (F1∕F2 Bark values) with the AE sentence materials as the input set and the Japanese sentence materials as the test set. The results resembled those for the citation materials: 47 out of 60 tokens of 2-mora Japanese vowels were classified into spectrally comparable long AE vowels, whereas only 12 out of 60 tokens of 1-mora vowels were classified as spectrally comparable short AE vowels. As in citation form, the high Japanese vowels [ii, i, ƜƜ, Ɯ] were consistently classified as spectrally similar to the long AE high vowels [iː, uː], while the mid, front [ee, e] and low vowels [aa, a] were classified as most similar to two or more AE categories. The mid, back vowel [o] in this form was more consistently classified as AE [oʊ].

Table 3.

Acoustic similarity (F1∕F2 in Bark values) of Japanese and American English (AE) vowels: hVb(a) syllables produced in sentences.

| Group | Japanese vowel | Modal classification: AE vowel | No. of tokens | Other categories: AE vowel | No. of tokens |
|---|---|---|---|---|---|
| 2-mora | | | | | |
| High | ii | iː | 12 | | |
| | ƜƜ | uː | 12 | | |
| Mid | ee | ɪ | 9 | eɪ | 3 |
| | oo | oʊ | 12 | | |
| Low | aa | ɑː–ɔː | 8 | ʌ | 4 |
| 1-mora | | | | | |
| High | i | iː | 10 | eɪ | 2 |
| | Ɯ | uː | 12 | | |
| Mid | e | ɪ | 8 | eɪ | 3 |
| | | | | ʊ | 1 |
| | o | oʊ | 12 | | |
| Low | a | ɑː–ɔː | 8 | ʌ | 3 |
| | | | | oʊ | 1 |
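The token counts cited in the two preceding paragraphs (52 and 21 of 60 for citation form; 47 and 12 of 60 for sentence form) can be re-derived from Tables 2 and 3. The sketch below transcribes both tables and counts 2-mora tokens assigned to long AE categories and 1-mora tokens assigned to short AE categories, treating every label other than [ɪ, ε, ʌ, ʊ] (including pooled [ɑː–ɔː]) as long.

```python
# Re-derive the length-matched token counts from Tables 2 and 3.
SHORT_AE = {"ɪ", "ε", "ʌ", "ʊ"}  # every other AE label is treated as long

# Japanese vowel -> {AE category: number of tokens}, transcribed from the tables.
TABLE2 = {  # citation form
    "2-mora": {"ii": {"iː": 12}, "ƜƜ": {"uː": 12}, "ee": {"eɪ": 7, "ɪ": 5},
               "oo": {"oʊ": 12}, "aa": {"ɑː–ɔː": 9, "ʌ": 3}},
    "1-mora": {"i": {"iː": 12}, "Ɯ": {"uː": 9, "ʊ": 3},
               "e": {"ɪ": 8, "eɪ": 2, "iː": 1, "ʊ": 1},
               "o": {"oʊ": 9, "uː": 3},
               "a": {"ʌ": 7, "ɑː–ɔː": 2, "ε": 1, "oʊ": 1, "ʊ": 1}},
}
TABLE3 = {  # sentence form
    "2-mora": {"ii": {"iː": 12}, "ƜƜ": {"uː": 12}, "ee": {"ɪ": 9, "eɪ": 3},
               "oo": {"oʊ": 12}, "aa": {"ɑː–ɔː": 8, "ʌ": 4}},
    "1-mora": {"i": {"iː": 10, "eɪ": 2}, "Ɯ": {"uː": 12},
               "e": {"ɪ": 8, "eɪ": 3, "ʊ": 1}, "o": {"oʊ": 12},
               "a": {"ɑː–ɔː": 8, "ʌ": 3, "oʊ": 1}},
}


def length_matched(table):
    """Return (2-mora tokens in long AE categories, 1-mora tokens in short ones)."""
    long_hits = sum(n for cats in table["2-mora"].values()
                    for cat, n in cats.items() if cat not in SHORT_AE)
    short_hits = sum(n for cats in table["1-mora"].values()
                     for cat, n in cats.items() if cat in SHORT_AE)
    return long_hits, short_hits


if __name__ == "__main__":
    print(length_matched(TABLE2), length_matched(TABLE3))
```

Each row of the transcription also sums to the 12 tokens per Japanese vowel, which serves as a check on the table reconstruction.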

Discussion

The results of acoustic comparisons between Japanese and AE vowels suggested the following.

(1) Regardless of speaking style, Japanese vowels were consistently classified into five nonoverlapping spectral categories. These five spectral categories were further differentiated into short (1-mora) and long (2-mora) vowels by vowel duration. Altogether, classification was almost perfect for all ten vowel categories when F1 and F2 at vowel midpoint and vowel duration were included in the analysis.

(2) AE vowels produced in both speaking styles were differentiated fairly well by mid-syllable formant frequencies, and some vowel pairs were further differentiated by vocalic duration. However, overall correct classification was not as high as for the Japanese vowels, indicating a more crowded vowel space.

(3) Within-language cross-condition discriminant analyses revealed that, in this phonetic context, both AE and Japanese vowels produced in sentences shared spectral and temporal characteristics with the citation materials, indicating that the acoustic distinctions among vowel categories are maintained in both Japanese and AE despite the effects of speaking style.

(4) Cross-language discriminant analyses using only the spectral parameters indicated that the Japanese high vowels [ii, i], [ƜƜ, Ɯ] and the mid, back vowels [oo, o] in both forms were spectrally similar to the AE long vowels [iː], [uː], and [oʊ], respectively, while the mid, front and low Japanese vowels in both speaking styles were spectrally intermediate between two or more AE categories. The Japanese 1-mora vowels [e, a] in citation form were especially variable in their spectral similarity to AE vowels.

In the next section, the results from the perceptual assimilation experiment are presented and discussed in relation to the acoustic comparisons between Japanese and AE vowels presented in this section.

PERCEPTUAL ASSIMILATION OF JAPANESE VOWELS BY AE LISTENERS

A listening experiment was performed using the Japanese corpus analyzed in Sec. 2. Native AE speakers with no experience with the Japanese language served as listeners and performed a perceptual assimilation task that involved two judgments: categorization of Japanese vowels using AE vowel category labels and rating the category goodness of the Japanese vowels as exemplars of the chosen AE vowel categories. Based on the results of the acoustic analyses, it was predicted that perceptual assimilation of Japanese high vowels [ii, i], [ƜƜ, Ɯ], and mid, back vowels [oo, o] would not be affected by speaking style and would be perceived as good exemplars of AE long vowels [iː], [uː], and [oʊ] unless AE listeners detected and responded on the basis of the considerable temporal differences between the 1- and 2-mora pairs. In comparison, perceptual assimilation of Japanese [e, ee] and [a, aa] was predicted to be less consistent (and possibly with poorer category goodness ratings) within and across listeners, reflecting the fact that spectrally, these vowels straddled more than one AE category. In addition, the effects of speaking style were expected to be observed for [ee, e, aa, a].

Method

Listeners

Twelve undergraduate students at USF (two males and ten females; mean age=26.2 yr) served as listeners and received extra course credit. They were recruited from introductory phonetics or phonology courses offered in the Department of Communication Sciences and Disorders. Some of them had completed at least one semester of phonetics by the time of testing. All were fluent only in American English, none had lived in a foreign country for an extended period, and all reported normal hearing. Ten of the listeners had lived in Florida for more than ten years, and two had lived in the northeastern United States for more than ten years. Four additional listeners were tested, but their data were excluded because they either failed to return for the second day of testing or were bilingual.

Stimulus materials

Stimulus materials were the 240 tokens of ten Japanese vowels (10 vowels×4 speakers×2 utterance forms×3 repetitions) acoustically analyzed in study 1.

Apparatus and instruments

All listening sessions, including familiarization, were conducted individually in a sound booth in the Speech Perception Laboratory of USF. Stimuli were presented through headphones (STAX SR LAMBDA semi-panoramic sound electrostatic ear speaker), and the listeners adjusted the volume to a comfortable level. For all sessions, stimulus presentation was controlled by a HYPERCARD 2.2 stack on a Macintosh Quadra 660AV computer with a 14 in. screen. The HYPERCARD stack used for the listening test had two components. The first, used for categorization, displayed 11 buttons labeled with IPA symbols for the 11 AE vowels [iː, ɪ, eɪ, ε, æː, ɑː, ʌ, ɔː, oʊ, ʊ, uː] and the corresponding [hVd] keywords (heed, hid, hayed, head, had, hod, hud, hawed, hoed, hood, who’d, respectively). The second appeared after the listener had categorized a stimulus vowel and displayed a seven-point Likert scale (1=foreign, 7=English) on which the listener rated the category goodness of the stimulus vowel as an exemplar of the chosen AE category.

Procedures

A repeated-measures design was employed in which each listener was presented with all tokens from all four speakers in both utterance forms. All listeners were tested on two days, one for citation materials and the other for sentence materials, with the order counterbalanced across listeners.

Familiarization

Before testing on day 1, all listeners completed an informed consent form and a language background questionnaire. They were then given a brief tutorial on the IPA and two task familiarization sessions. The IPA tutorial briefly described the relationship between the IPA symbols for the 11 AE vowels and their sounds, using the 11 [hVd] keywords; a HYPERCARD stack provided audio and text explanations.

Task familiarization had two parts: categorization only, and categorization plus category goodness judgment. Stimuli for the categorization-only part were [həC1VC2] disyllables, where the C1-C2 combinations were [b-d, b-t, d-d, d-t, g-d, g-t] and V was one of the 11 AE vowels. These disyllables were embedded in the carrier sentence “I hear the sound of [həC1VC2] some more.” The fifth author recorded each of the 11 vowels in at least one of the C1-C2 contexts, and an additional 44 tokens were recorded by a male native speaker of AE who was not the speaker of the AE stimuli used in study 1. In the categorization-only part, these 55 tokens were presented in four blocks: the first block presented the 11 tokens from the female speaker, the next two blocks each presented 11 tokens randomly selected from the male speaker’s 44 tokens, and the last block presented his remaining 22 tokens. The listeners indicated which of the 11 AE vowels they heard by clicking on one of the response buttons on the screen. The computer program provided feedback after each categorization response, and when a response was incorrect, the experimenter sitting beside the listener explained why.

In the second part, familiarization with the category goodness judgment was given using 56 German sentence-form tokens randomly chosen from the 560 tokens (14 vowels×4 speakers in 5 consonantal contexts) used in the Strange et al. (2005) study. A HYPERCARD stack provided a short task description, and the listeners practiced on the German tokens using the same interface as in testing. On each trial, the listeners categorized a German vowel in terms of the 11 AE categories and then rated its category goodness as an exemplar of the chosen AE category on the seven-point Likert scale (1=foreign, 7=English). No feedback was provided in this part.

Test

Each listener was tested on two days. On day 1, a testing session was given following the task familiarization. On day 2, the listeners were given only the testing session, which followed the same procedure as testing on day 1. Half of the listeners heard citation materials on day 1 and sentence materials on day 2; presentation order was reversed for the remaining listeners.

In each testing session, stimuli were blocked by speaker, and four blocks of 120 trials (10 vowels×3 tokens×4 repetitions) were presented for each speaking style. Block order was counterbalanced across listeners, and a short break was offered after the second block. On each trial, the same stimulus was presented twice: after the first presentation, the listener categorized the Japanese vowel by choosing one of the 11 AE responses; the stimulus was then presented again, and the listener rated its category goodness as an exemplar of the chosen AE category on a seven-point Likert scale (1=foreign, 7=English). The listeners were allowed to change their categorization response before making a rating response but were discouraged from doing so. A new trial began after the rating response was completed; thus, testing was listener paced.
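The trial counts implied by this design can be made explicit. In this sketch, TOKENS is read as the three recorded repetitions per vowel and speaker and PRESENTATIONS as the four test repetitions of each token; that reading of "3 tokens×4 repetitions" is our interpretation. On that reading, the number of responses per Japanese vowel per speaking style, pooled over the 12 listeners, comes to 576, the total used in the rating analysis reported under Results.

```python
# Counts implied by the listening-test design (our reading of the Method).
VOWELS = 10
SPEAKERS = 4
FORMS = 2           # citation and sentence
TOKENS = 3          # recorded repetitions per vowel and speaker
PRESENTATIONS = 4   # test repetitions of each token within a block
LISTENERS = 12

stimulus_set = VOWELS * SPEAKERS * FORMS * TOKENS
trials_per_block = VOWELS * TOKENS * PRESENTATIONS        # one speaker
trials_per_style = trials_per_block * SPEAKERS            # one session
responses_per_vowel_per_style = LISTENERS * SPEAKERS * TOKENS * PRESENTATIONS

if __name__ == "__main__":
    print(stimulus_set, trials_per_block, trials_per_style,
          responses_per_vowel_per_style)
```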

No specific instruction was given in familiarization or test regarding the use of vowel duration, number of spectral categories in Japanese, or differences between AE and Japanese vowel inventories since the focus of the current study was to examine how naïve L2 listeners classify foreign speech sounds using L1 categories.

Results and discussion

First, for the category goodness responses, median ratings across all listeners were computed for every AE category that received more than 10% of the 576 total responses to a given Japanese vowel. As predicted from the acoustic comparisons, this analysis yielded higher median ratings (6) for [ii, i], [ƜƜ, Ɯ], and [oo, o] in both conditions and for [ee] in the sentence condition, and slightly lower median ratings (5) for [e, aa, a] in both conditions and for [ee] in the citation condition.
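The rating summary just described (a median goodness rating per AE response category, retained only for categories chosen on more than 10% of the responses to one Japanese vowel) can be sketched as follows. The response data in the example are hypothetical and serve only to illustrate the cutoff; whether the original analysis used a strict or inclusive 10% threshold is taken from the wording "more than 10%".

```python
# Median goodness rating per AE category, above a response-share cutoff.
from collections import defaultdict
from statistics import median


def median_ratings(responses, threshold=0.10):
    """responses: list of (AE category, rating on the 1-7 scale) for one
    Japanese vowel. Returns {category: median rating} for categories chosen
    on more than `threshold` of all responses."""
    if not responses:
        return {}
    ratings = defaultdict(list)
    for category, rating in responses:
        ratings[category].append(rating)
    total = len(responses)
    return {
        category: median(values)
        for category, values in ratings.items()
        if len(values) / total > threshold
    }


if __name__ == "__main__":
    # Hypothetical responses to one Japanese vowel (11 trials).
    demo = [("eɪ", 6)] * 6 + [("eɪ", 5)] * 2 + [("ɪ", 4)] * 2 + [("ε", 3)]
    print(median_ratings(demo))  # [ε] (1 of 11) falls below the 10% cutoff
```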

To analyze the categorization results, each listener’s responses were transferred to a spreadsheet, and confusion matrices were constructed for (1) individual speakers, (2) individual listeners, and (3) each of the two speaking styles. Examination of these matrices revealed some differences among listeners for some vowels (not associated with dialect) and between speaking styles.

Table 4 presents the group data in which categorization responses were pooled across the speakers, repetitions, and listeners. In order for the results to be consistent with the majority of listeners’ dialect profile, AE response categories [ɑː] and [ɔː] were pooled as [ɑː-ɔː] in this analysis.4 The top part (A) of the table displays the categorization for citation materials, and the bottom part (B) presents the results for sentence materials. The first column lists the Japanese vowels grouped by the temporal categories (2-mora or 1-mora). The cells in columns 2–11 present percentages of responses. Numbers in boldface indicate the modal perceptual responses for each Japanese vowel; boxed entries indicate the spectrally most similar AE vowels for each Japanese vowel determined by the cross-language discriminant analyses presented in Sec. 2.
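The construction of the pooled percentages in Table 4, with AE [ɑː] and [ɔː] merged into one response category, can be sketched as below. The trial data are hypothetical; the function names are ours, not part of the original analysis, which was carried out in a spreadsheet.

```python
# Pooled confusion percentages with [ɑː] and [ɔː] merged, plus modal response.
from collections import Counter, defaultdict

POOL = {"ɑː": "ɑː–ɔː", "ɔː": "ɑː–ɔː"}


def confusion_percentages(trials):
    """trials: iterable of (Japanese vowel, AE response) pairs pooled over
    speakers, repetitions, and listeners.
    Returns {Japanese vowel: {AE category: percent of responses}}."""
    rows = defaultdict(Counter)
    for jp_vowel, response in trials:
        rows[jp_vowel][POOL.get(response, response)] += 1
    table = {}
    for jp_vowel, counts in rows.items():
        total = sum(counts.values())
        table[jp_vowel] = {cat: 100.0 * n / total for cat, n in counts.items()}
    return table


def modal_response(row):
    """AE category with the highest percentage in one row of the matrix."""
    return max(row, key=row.get)


if __name__ == "__main__":
    # Hypothetical responses to Japanese [a].
    trials = [("a", "ɑː")] * 3 + [("a", "ɔː")] * 3 + [("a", "ʌ")] * 4
    row = confusion_percentages(trials)["a"]
    print(row, modal_response(row))
```

In this toy example, pooling changes the modal response: unpooled, [ʌ] (4 responses) would outnumber either [ɑː] or [ɔː] (3 each), whereas the pooled [ɑː–ɔː] category receives 60%.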

Table 4.

Perceptual assimilation patterns: Categorization responses, expressed as percentages of total responses summed over speakers and listeners for citation-form (A) and sentence-form (B) materials. Bold=modal perceptual classification; boxed=modal acoustic classification.

| AE response categories | |||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|

| iː | ɪ | eɪ | ε | æː | ɑː–ɔː | ʌ | oʊ | ʊ | uː | ||

| (A) Citation 2-mora | |||||||||||

| ii | 99 | 1 | |||||||||

| ee | 2 | 94 | 5 | ||||||||

| aa | 2 | 89 | 9 | ||||||||

| oo | 1 | 99 | 1 | ||||||||

| ƜƜ | 1 | 2 | 5 | 92 | |||||||

| 1-mora | |||||||||||

| i | 95 | 4 | 1 | ||||||||

| e | 16 | 76 | 8 | ||||||||

| a | 3 | 57 | 39 | ||||||||

| o | 1 | 95 | 1 | 2 | |||||||

| Ɯ | 3 | 1 | 5 | 91 | |||||||

| (B) Sentence | |||||||||||

| 2-mora | |||||||||||

| ii | 99 | ||||||||||

| ee | 1 | 97 | 2 | ||||||||

| aa | 1 | 96 | 3 | ||||||||

| oo | 98 | 1 | |||||||||

| ƜƜ | 2 | 2 | 96 | ||||||||

| 1-mora | |||||||||||

| i | 98 | 2 | |||||||||

| e | 1 | 23 | 48 | 28 | |||||||

| a | 2 | 77 | 21 | ||||||||

| o | 95 | 4 | |||||||||

| Ɯ | 2 | 1 | 7 | 89 | |||||||

As predicted from the acoustic comparisons, the majority of Japanese vowels were assimilated to long AE vowels. All five 2-mora vowels [ii, ee, aa, oo, ƜƜ] were consistently assimilated to their long AE counterparts [iː, eɪ, ɑː-ɔː, oʊ, uː], respectively (overall percentages from 89% to 99%), and the high and mid, back 1-mora vowels [i, Ɯ, o] in both speaking styles were also assimilated to the long AE categories [iː, uː, oʊ], respectively (overall percentages from 89% to 98%). These patterns were consistent with the spectral similarity of these vowels to AE categories, except for [ee] in the sentence context. This suggests that AE listeners disregarded the temporal differences between these 1- and 2-mora Japanese vowels and perceived them as equally good exemplars of long AE vowels.

By contrast, the assimilation patterns for the other Japanese vowels were not straightforward. First, contrary to the prediction from acoustic similarity, Japanese 2-mora [ee] showed no influence of speaking style and was perceived as most similar to AE [eɪ] in both conditions. Even though some tokens of citation-form [ee] were spectrally most similar to AE [ɪ] (5 out of 12 tokens; see Table 2), this token variability did not show up in its assimilation pattern (94% to [eɪ]). For sentence-form [ee], 9 out of 12 tokens were spectrally most similar to AE [ɪ] (see Table 3), yet AE listeners were influenced neither by token variability nor by spectral similarity and almost unanimously assimilated it to AE [eɪ]. Note, however, that the average vocalic duration of sentence-form [ee] was 140 ms, closer to that of AE [eɪ] (132 ms) than to that of [ɪ] (94 ms). Therefore, although AE listeners ignored vowel duration for [ii, i, ƜƜ, Ɯ, oo, o], temporal similarity may have contributed to their perceptual assimilation of Japanese [ee].

As expected, the perceptual assimilation patterns for 1-mora [a] and [e] in both forms included more than one AE category. The modal responses were long AE vowels ([ɑː-ɔː] and [eɪ], respectively), but relatively large proportions of other responses were given ([ʌ] for [a] and [ɪ, ε] for [e]). As indicated in Table 4, these perceptual assimilation patterns were not entirely predictable from spectral similarity. Unlike the pattern for sentence-form [ee], they could not be fully accounted for even when vocalic duration was taken into consideration, which suggests the influence of other factors.

To account for the less consistent overall assimilation patterns observed for [e] and [a] in both speaking styles, further examination was performed to determine whether these patterns were due to individual differences across AE listeners or to within-listener inconsistency. The results revealed that a few listeners consistently made nonmodal responses for these vowels, and their responses can be classified as either spectra-based or duration-based categorizations. Spectra-based patterns were found for citation-form [e] and [a]: three listeners (2, 3, 11) accounted for 83% of the [ɪ] responses to citation [e]. Similarly, for citation-form [a], 52% of the [ʌ] responses were made by three listeners (1, 4, 10). Although sentence-form [a] was spectrally most similar to AE [ɑː-ɔː], three listeners (1, 2, 10) perceived this vowel as most similar to the AE short vowel [ʌ], suggesting the influence of duration. The response pattern for sentence [e] can be considered partially spectra-based. Six listeners made 68% of the nonmodal responses ([ɪ] or [ε]).5 Of these six, one listener (1) responded with AE [ɪ] and [ε] equally often (44%), two listeners (2, 3) made [ɪ] responses most often, and [ε] was the modal response for the three remaining listeners (8, 10, 11). The [ɪ] response was consistent with the spectral and possibly temporal similarity of 1-mora [e] to AE [ɪ]; other listeners may have chosen [ε] because 1-mora [e] (53 ms) was temporally more similar to [ε] (98 ms) than to [eɪ] (132 ms). Still, it is not clear why the majority of listeners chose spectrally and temporally less similar AE vowels when assimilating these Japanese vowels.
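The duration comparisons made in this and the preceding paragraphs reduce to picking, among a set of candidate AE categories, the one whose mean duration is closest to that of the Japanese vowel. A minimal sketch follows, using the sentence-form AE means from the Appendix; restricting the comparison to hand-picked candidate sets mirrors the comparisons in the text and is an assumption of this sketch, not a model of the listeners.

```python
# Nearest AE category by mean vocalic duration alone (sentence form, ms).
AE_SENTENCE_MS = {
    "iː": 108, "ɪ": 94, "eɪ": 132, "ε": 98, "æː": 147, "ɑː": 125,
    "ɔː": 152, "ʌ": 98, "oʊ": 126, "ʊ": 107, "uː": 115,
}


def nearest_by_duration(duration_ms, candidates):
    """Return the candidate AE vowel whose mean duration is closest."""
    return min(candidates, key=lambda v: abs(AE_SENTENCE_MS[v] - duration_ms))


if __name__ == "__main__":
    print(nearest_by_duration(140, ["eɪ", "ɪ"]))      # sentence-form [ee]
    print(nearest_by_duration(53, ["eɪ", "ε"]))       # sentence-form [e]
    print(nearest_by_duration(53, ["eɪ", "ε", "ɪ"]))  # [e], adding [ɪ]
```

When [ɪ] is added to the candidate set, duration alone places [ɪ] (94 ms, 41 ms away) marginally closer to 1-mora [e] than [ε] (98 ms, 45 ms away), consistent with the remark that the [ɪ] responses may reflect temporal as well as spectral similarity.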

GENERAL DISCUSSION

In the present study, the similarity of Japanese vowels to the AE vowel system was examined through acoustic comparisons and perceptual assimilation tasks, using stimulus materials produced by four male speakers from each language. Consonantal context was held constant and CV and VC coarticulatory effects were considered minimal, but the target vowels were produced in citation form and in a carrier sentence to examine the influence of token variability associated with speaking style as well as individual speakers.

Results of the acoustic analysis (Sec. 2) showed that Japanese has five distinctive spectral vowel categories that were further separated into five long-short pairs. In contrast, although the 11 AE categories were differentiated fairly well by spectral measures, the inclusion of stimulus duration improved classification only slightly. Token variability due to speaking style was small for both Japanese and AE. Cross-language acoustic comparisons using only spectral measures (F1 and F2 at vowel midpoint) indicated that Japanese vowels [ii, i, ƜƜ, Ɯ, oo, o] in both speaking styles were most similar to long AE vowels [iː, uː, oʊ], respectively, whereas classification of [ee, e, aa, a] straddled more than one AE category. Thus, it was predicted that if AE listeners rely primarily on the spectral cues, the perceptual assimilation patterns should reflect these acoustic similarity patterns, with highly consistent categorization of long and short [ii∕i, oo∕o, ƜƜ∕Ɯ], but less consistent assimilation of long and short [ee∕e, aa∕a].

Perceptual assimilation results revealed that the five 2-mora Japanese vowels [ii, ee, aa, oo, ƜƜ] produced in both speaking styles were consistently assimilated to comparable long AE vowels [iː, eɪ, ɑː-ɔː, oʊ, uː], respectively. Therefore, according to SLM (Flege, 1995), Japanese 2-mora vowels can be considered perceptually identical or highly similar to AE long vowels. On the other hand, the Japanese 1-mora vowels showed more varied patterns. The high vowels [i, Ɯ] and mid, back vowel [o] were consistently assimilated to AE vowels [iː, uː, oʊ], respectively. This is in accordance with the results of spectral comparisons, and suggests that AE listeners perceived these Japanese vowels as identical or highly similar to their spectrally most similar AE long vowels and disregarded their shorter duration. Considering that their 2-mora counterparts [ii, ƜƜ, oo] were also assimilated to these same AE categories, the assimilation patterns of these long∕short Japanese pairs can be considered examples of a “single-category pattern” in PAM (Best, 1995).

As for the remaining 1-mora vowels [e, a], the responses from a few listeners were accounted for by cross-language acoustic similarity (spectral, temporal, or both). On the other hand, the remaining AE listeners assimilated these Japanese vowels into the same AE categories [eɪ, ɑː-ɔː] as for their 2-mora counterparts and as equally good exemplars, suggesting a “single-category” assimilation pattern. Unlike the minority responses, these responses could not be explained by cross-language acoustic similarity patterns.

What, then, explains these majority responses? Recall that the perceptual task used in the present study has been used successfully to assess the assimilation of many L2 vowels by listeners whose L1s have smaller vowel inventories (Strange et al., 1998; 2001; 2004; 2005). In those studies, cross-language discriminant analyses predicted perceptual assimilation patterns well for most, but not all, non-native vowels that had counterparts in the L1. However, for some “new” vowels, such as the front rounded vowels of German, which are not distinctive in AE, context-specific spectral similarity did not predict the perceptual similarity patterns of naïve AE listeners. Since all five Japanese vowel qualities can be considered to be present in AE, it was hypothesized in the present study that the same perceptual assimilation task should effectively reveal perceptual similarity patterns based primarily on cross-language spectral similarity. The present results suggested otherwise in some cases. At least two explanations can be offered for these failures: one concerns the method of acoustic comparison, and the other the assumptions of the perceptual task.

As Hillenbrand et al. (1995) and others have shown for AE, formant trajectories for many spectrally adjacent short∕long vowel pairs tend to move in opposite directions in the vowel space, with the lax vowels (including long [æ]) moving toward more central positions and the tense vowels moving toward more peripheral positions. These formant movements have been shown to affect vowel perception by AE listeners (cf. Nearey and Assmann, 1986; Nearey, 1989; Strange et al., 1983). Therefore, even though both long and short Japanese vowels tend to be monophthongal, if they are spectrally similar to AE [eɪ, ɑː-ɔː] at some time point other than the vocalic midpoint, such similarity might influence AE listeners’ perceptual assimilation. Furthermore, if listeners perceive articulatory gestures in the acoustic signal, as PAM hypothesizes, similarity in formant trajectories may explain the perceptual results. To test this hypothesis, Japanese 1-mora vowels [e] and [a] were subjected to a series of cross-language discriminant analyses using F1 and F2 values at three time points, namely, the 25%, 50%, and 75% points of vowel duration. Separate analyses were performed for each speaking style using four combinations of the three time points: 25% only, 75% only, 25%+75%, and 25%+50%+75%. None of the four combinations yielded results different from those reported in Tables 2 and 3. Therefore, although other subtle cues might have influenced perceptual assimilation, it was deemed reasonable to rule out inadequacy of the present acoustic comparison as the reason it failed to predict the perceptual assimilation patterns for these vowels.
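The input vectors for the four time-point combinations can be sketched as follows: F1 and F2 are read off a sampled formant track at proportional points of the vowel's duration and concatenated. Linear interpolation between analysis frames, the function names, and the example track are all assumptions of this sketch; the paper does not describe its measurement procedure at this level of detail.

```python
# Build F1/F2 feature vectors at proportional time points within a vowel.
from bisect import bisect_left

# The four combinations of time points tested (proportions of vowel duration).
COMBINATIONS = [(0.25,), (0.75,), (0.25, 0.75), (0.25, 0.50, 0.75)]


def value_at(times, values, t):
    """Linearly interpolate a sampled track (times ascending) at time t."""
    if t <= times[0]:
        return values[0]
    if t >= times[-1]:
        return values[-1]
    i = bisect_left(times, t)  # times[i - 1] < t <= times[i]
    t0, t1 = times[i - 1], times[i]
    v0, v1 = values[i - 1], values[i]
    return v0 + (v1 - v0) * (t - t0) / (t1 - t0)


def features(times, f1, f2, onset, offset, proportions):
    """Concatenate (F1, F2) at each proportion of the vowel's duration."""
    duration = offset - onset
    vector = []
    for p in proportions:
        t = onset + p * duration
        vector.extend([value_at(times, f1, t), value_at(times, f2, t)])
    return vector


if __name__ == "__main__":
    # Hypothetical 5-frame track (ms, Hz) for one short vowel token.
    times = [0, 10, 20, 30, 40]
    f1 = [400, 420, 440, 460, 480]
    f2 = [1800, 1760, 1720, 1680, 1640]
    for combo in COMBINATIONS:
        print(combo, features(times, f1, f2, 0, 40, combo))
```

Each resulting vector would then replace the midpoint (F1, F2) pair as input to the discriminant analysis; the 25%+50%+75% combination yields a six-dimensional input.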

Turning to the second explanation: considering that both [e] and [a] were assimilated primarily to the AE categories [eɪ, ɑː-ɔː], which lie closer to the periphery of the vowel space than the spectrally more similar [ɪ, ʌ] (see Fig. 2), the present results suggest that other mechanisms not currently included in PAM or SLM may be at work. For example, although no strong claim is intended, the present results resemble the “peripherality bias” that Polka and Bohn (2003) proposed to account for asymmetries in infant vowel discrimination. Summarizing the results of their own and others’ studies, they showed that infants exhibit a bias for better discrimination in the category change paradigm when the stimuli change from a more central to a more peripheral vowel (e.g., [ʊ] to [o], [ε] to [æ], [y] to [u]). They concluded that this bias toward peripheral vowels may play an important role, with peripheral vowels serving as perceptual anchors for language acquisition. Assuming that this bias persists into adulthood and guides non-native speech learning, the present results for the Japanese 1-mora vowels, including [e, a], might be explained by a perceptual bias toward more peripheral vowels as follows. Judging from the acoustic similarity and the observed perceptual assimilation patterns, it can be hypothesized that AE listeners compared the incoming 1-mora Japanese vowels against both long and short AE vowels, but, owing to their lack of attunement to duration cues and to the peripherality bias, chose more peripheral vowels as most similar to the Japanese vowels.6

Finally, the present study compared Japanese and AE vowels produced in a relatively uncoarticulated [hVb] context in both citation and sentence conditions. Further research is underway comparing how cross-language acoustic and perceptual similarities vary when vowels are coarticulated in different consonantal contexts, at different speaking rates, and in different prosodic environments. Research on AE listeners’ perceptual assimilation of North German and Parisian French vowels (Strange et al., 2005) suggests that perceptual assimilation patterns often reflect context-independent patterns of acoustic similarity. For instance, AE listeners assimilate front, rounded vowels to back AE vowels rather than to front, unrounded AE vowels, even in contexts in which back AE vowels are not fronted. Because back AE vowels are fronted in coronal consonantal contexts, whereas front, unrounded AE vowels are never backed (Strange et al., 2007), AE listeners may treat front, rounded vowels as allophonic variants of back AE vowels. In citation-form utterances, AE listeners indicated that these front “allophones” were inappropriate in noncoronal contexts by rating them as poor exemplars of AE back vowels. In the sentence condition, however, front, rounded vowels were judged to be “good” instances of back AE vowels even when surrounded by labial consonants. Thus, while context-specific spectral similarity did not predict the assimilation of front, rounded vowels in noncoronal contexts, context-independent spectral similarity relationships did. Further research on the acoustic and perceptual similarity of Japanese and AE vowels is needed to determine whether context-independent spectral similarity patterns might account for the perceptual assimilation of Japanese [e, a], as well as of the remaining 1-mora vowels.

CONCLUSIONS

The present study set out to test whether the perceptual task used in previous studies is also useful for assessing the perceptual similarity of non-native vowels from a small vowel inventory by listeners whose native language has a relatively large vowel inventory. As in previous studies, the results showed that cross-language acoustic similarity may not always accurately predict the perceptual assimilation of non-native vowels. Thus, according to PAM and SLM, direct assessments of perceptual similarity relationships will be better predictors of the discrimination difficulties of L2 learners. It was hypothesized that the inconsistency between acoustic and perceptual similarity results may reflect comparison processes that are influenced by listeners’ knowledge of the distributional characteristics of phonetic segments in their native language. Under some stimulus and task conditions, listeners may be able to compare phonetically detailed aspects of non-native segments, while under others they may resort to a phonological level of analysis in making cross-language similarity judgments. Further research is needed to determine under what conditions AE listeners can use the large duration differences between Japanese 1-mora and 2-mora vowels when making perceptual similarity judgments and when attempting to differentiate these phonologically contrastive vowel pairs. In general, the findings of the present studies, as well as those of previous experiments on AE listeners’ perceptual assimilation of German vowels, suggest that vocalic duration differences are often ignored in relating non-native vowels to native categories.

ACKNOWLEDGMENTS

This research has been supported by NIDCD under Grant No. DC00323 to W. Strange and JSPS under Grant No. 17202012 to R. Akahane-Yamada. The authors are grateful to Katherine Bielec, Mary Carroll, Robin Rodriguez, and David Thornton for their support with HYPERCARD stack programming, subject running, and data analysis.

APPENDIX: AVERAGE FORMANT FREQUENCIES AND DURATIONS OF JAPANESE AND AMERICAN ENGLISH VOWELS

| Japanese | F1 (Hz) | F2 (Hz) | F3 (Hz) | Duration (ms) | AE | F1 (Hz) | F2 (Hz) | F3 (Hz) | Duration (ms) |
|---|---|---|---|---|---|---|---|---|---|
| Citation form | | | | | | | | | |
| ii | 295 | 2243 | 3123 | 138 | iː | 312 | 2307 | 2917 | 100 |
| ee | 443 | 1982 | 2563 | 154 | eɪ | 472 | 2062 | 2660 | 122 |
| aa | 709 | 1175 | 2343 | 165 | ɑː | 753 | 1250 | 2596 | 109 |
| | | | | | ɔː | 678 | 1062 | 2678 | 132 |
| oo | 423 | 732 | 2416 | 154 | oʊ | 500 | 909 | 2643 | 112 |
| ɯɯ | 322 | 1139 | 2288 | 146 | uː | 348 | 995 | 2374 | 104 |
| | | | | | æː | 730 | 1568 | 2519 | 123 |
| i | 317 | 2077 | 3027 | 51 | ɪ | 486 | 1785 | 2573 | 86 |
| e | 437 | 1785 | 2430 | 57 | ε | 633 | 1588 | 2553 | 91 |
| a | 615 | 1182 | 2289 | 53 | ʌ | 635 | 1189 | 2619 | 89 |
| o | 430 | 805 | 2375 | 54 | | | | | |
| ɯ | 349 | 1171 | 2302 | 47 | ʊ | 489 | 1148 | 2472 | 93 |
| long/short ratio = 2.9 | | | | | long/short ratio = 1.3 | | | | |
| Sentence form | | | | | | | | | |
| ii | 299 | 2189 | 3198 | 116 | iː | 303 | 2336 | 2961 | 108 |
| ee | 445 | 1925 | 2638 | 140 | eɪ | 423 | 2175 | 2722 | 132 |
| aa | 687 | 1146 | 2337 | 138 | ɑː | 754 | 1234 | 2609 | 125 |
| | | | | | ɔː | 660 | 1056 | 2571 | 152 |
| oo | 434 | 796 | 2400 | 127 | oʊ | 479 | 933 | 2571 | 126 |
| ɯɯ | 319 | 1128 | 2361 | 128 | uː | 342 | 1064 | 2422 | 115 |
| | | | | | æː | 714 | 1645 | 2456 | 147 |
| i | 312 | 2076 | 3115 | 37 | ɪ | 461 | 1826 | 2634 | 94 |
| e | 464 | 1770 | 2407 | 53 | ε | 627 | 1657 | 2544 | 98 |
| a | 672 | 1134 | 2288 | 49 | ʌ | 631 | 1232 | 2619 | 98 |
| o | 423 | 776 | 2345 | 47 | | | | | |
| ɯ | 348 | 1069 | 2343 | 38 | ʊ | 495 | 1202 | 2492 | 107 |
| long/short ratio = 2.9 | | | | | long/short ratio = 1.3 | | | | |
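The long/short ratios in the summary rows can be reproduced under an assumption the text does not state: that each ratio is the mean duration of the long vowels divided by the mean duration of the short vowels within one language and speaking style, with AE vowels grouped as long or short according to their rows in the table. A minimal Python sketch under that assumption (the names `DURATIONS` and `long_short_ratio` are hypothetical):

```python
from statistics import mean

# Vowel durations (ms) from the appendix, grouped by language, speaking style,
# and length category. AE [iː eɪ ɑː ɔː oʊ uː æː] are treated as "long" and
# AE [ɪ ε ʌ ʊ] as "short", following the table layout (an assumption).
DURATIONS = {
    ("Japanese", "citation"): {"long": [138, 154, 165, 154, 146],
                               "short": [51, 57, 53, 54, 47]},
    ("AE", "citation"): {"long": [100, 122, 109, 132, 112, 104, 123],
                         "short": [86, 91, 89, 93]},
    ("Japanese", "sentence"): {"long": [116, 140, 138, 127, 128],
                               "short": [37, 53, 49, 47, 38]},
    ("AE", "sentence"): {"long": [108, 132, 125, 152, 126, 115, 147],
                         "short": [94, 98, 98, 107]},
}


def long_short_ratio(long_ms, short_ms):
    """Ratio of the mean long-vowel duration to the mean short-vowel duration."""
    return mean(long_ms) / mean(short_ms)


for (language, style), groups in DURATIONS.items():
    ratio = long_short_ratio(groups["long"], groups["short"])
    print(f"{language:8s} {style:8s} {ratio:.1f}")
```

Under this assumption the sketch prints 2.9 for the Japanese vowels and 1.3 for the AE vowels in both conditions, matching the summary rows; the much larger Japanese ratio is the temporal difference that, per the abstract, AE listeners apparently ignored.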

Portions of this work were presented as “Perceptual assimilation of Japanese vowels by American English listeners: effects of speaking style,” at the 136th meeting of the Acoustical Society of America, Norfolk, VA, October 1998.

Footnotes

In addition to these cases of assimilation of speech sounds to L1 categories, PAM includes a case in which L2 sounds are perceived as nonspeech (i.e., nonassimilable); discrimination of such sounds can range from good to very good, depending on the psychoacoustic salience of their acoustic patterns.

Preliminary analyses of the Japanese vowels did not reveal appreciable differences between two-formant and three-formant analyses. Therefore, all discriminant analyses were performed using only F1 and F2 values.

The lower F1 values for Japanese mid and low vowels were deemed to reflect cross-language differences rather than differences in vocal tract size between the two speaker groups, given the acoustic similarity between Japanese [ii] and AE [i:].

According to Labov et al. (2006), differentiation between [ɑ:, ɔ:] by AE speakers in the Tampa area is variable overall, although the two vowels are produced distinctly before [n] and [t].

In some dialects of American English, [ɪ] and [ε] may be merged (Labov et al., 2006). However, the dialect profiles of the present listeners showed no such relationship with their response patterns.

It should be pointed out that the present study differs considerably in methodology from the studies with infants. Using synthetic stimuli, Johnson et al. (1993) found that phonetic targets for native vowels resemble hyperarticulated forms, but a peripherality bias in adult listeners’ responses to naturally produced stimuli has not yet been confirmed in any published study.

References

- Best, C. T. (1995). “A direct realist view of cross-language speech perception,” in Speech Perception and Linguistic Experience: Issues in Cross-Language Research, edited by W. Strange (York Press, Timonium, MD), pp. 171–204.

- Best, C. T., McRoberts, G. W., and Goodell, E. (2001). “Discrimination of non-native consonant contrasts varying in perceptual assimilation to the listener’s native phonological system,” J. Acoust. Soc. Am. 109, 775–794. doi:10.1121/1.1332378

- Best, C. T., McRoberts, G. W., and Sithole, N. N. (1988). “The phonological basis of perceptual loss for non-native contrasts: Maintenance of discrimination among Zulu clicks by English-speaking adults and infants,” J. Exp. Psychol. Hum. Percept. Perform. 14, 345–360. doi:10.1037//0096-1523.14.3.345

- Flege, J. E. (1995). “Second language speech learning: Theory, findings, and problems,” in Speech Perception and Linguistic Experience: Issues in Cross-Language Research, edited by W. Strange (York Press, Timonium, MD), pp. 233–277.

- Flege, J. E., MacKay, I. R. A., and Meador, D. (1999). “Native Italian speakers’ perception and production of English vowels,” J. Acoust. Soc. Am. 106, 2973–2987. doi:10.1121/1.428116

- Fox, R. A., Flege, J. E., and Munro, M. J. (1995). “The perception of English and Spanish vowels by native English and Spanish listeners: A multidimensional scaling analysis,” J. Acoust. Soc. Am. 97, 2540–2551. doi:10.1121/1.411974

- Gottfried, T. L. (1984). “Effects of consonant context on the perception of French vowels,” J. Phonetics 12, 91–114.

- Han, M. S. (1962). Japanese Phonology: An Analysis Based upon Sound Spectrograms (Kenkyusha, Tokyo).

- Hillenbrand, J., Getty, L. A., Clark, M. J., and Wheeler, K. (1995). “Acoustic characteristics of American English vowels,” J. Acoust. Soc. Am. 97, 3099–3111. doi:10.1121/1.411872

- Hirata, Y., and Tsukada, K. (2004). “The effects of speaking rate and vowel length on formant movements in Japanese,” in Proceedings of the 2003 Texas Linguistics Society Conference, edited by A. Agwuele, W. Warren, and S.-H. Park (Cascadilla Proceedings Project, Somerville, MA), pp. 73–85.

- Homma, Y. (1981). “Durational relationships between Japanese stops and vowels,” J. Phonetics 9, 273–281.

- Homma, Y. (1992). Acoustic Phonetics in English and Japanese (Yamaguchi-shoten, Tokyo).

- Johnson, K., Flemming, E., and Wright, R. (1993). “The hyperspace effect: Phonetic targets are hyperarticulated,” Language 69, 505–528.

- Keating, P. A., and Huffman, M. K. (1984). “Vowel variation in Japanese,” Phonetica 41, 191–207.

- Klatt, D. H. (1976). “Linguistic uses of segmental duration in English: Acoustic and perceptual evidence,” J. Acoust. Soc. Am. 59, 1208–1221. doi:10.1121/1.380986

- Klecka, W. R. (1980). Discriminant Analysis (Sage, Newbury Park, CA).

- Labov, W., Ash, S., and Boberg, C. (2006). Atlas of North American English: Phonology and Phonetics (Mouton de Gruyter, Berlin).

- Ladefoged, P. (1993). A Course in Phonetics, 3rd ed. (Harcourt Brace, Orlando, FL).

- Nearey, T. M. (1989). “Static, dynamic, and relational properties in vowel perception,” J. Acoust. Soc. Am. 85, 2088–2113. doi:10.1121/1.397861

- Nearey, T. M., and Assmann, P. F. (1986). “Modeling the role of inherent spectral change in vowel identification,” J. Acoust. Soc. Am. 80, 1297–1308. doi:10.1121/1.394433

- Peterson, G. E., and Lehiste, I. (1960). “Duration of syllable nuclei in English,” J. Acoust. Soc. Am. 32, 693–703. doi:10.1121/1.1908183

- Polka, L., and Bohn, O.-S. (2003). “Asymmetries in vowel perception,” Speech Commun. 41, 221–231. doi:10.1016/S0167-6393(02)00105-X

- Pruitt, J. S., Jenkins, J. J., and Strange, W. (2006). “Training the perception of Hindi dental and retroflex stops by native speakers of American English and Japanese,” J. Acoust. Soc. Am. 119, 1684–1696. doi:10.1121/1.2161427

- Rochet, B. L. (1995). “Perception and production of second-language speech sounds by adults,” in Speech Perception and Linguistic Experience: Issues in Cross-Language Research, edited by W. Strange (York Press, Timonium, MD), pp. 379–410.

- Schmidt, A. M. (1996). “Cross-language identification of consonants. Part 1. Korean perception of English,” J. Acoust. Soc. Am. 99, 3201–3221. doi:10.1121/1.414804

- Shibatani, M. (1990). The Languages of Japan (Cambridge University Press, New York).

- Stack, J. W., Strange, W., Jenkins, J. J., Clarke, III, W. D., and Trent, S. A. (2006). “Perceptual invariance of coarticulated vowels over variations in speaking style,” J. Acoust. Soc. Am. 119, 2394–2405. doi:10.1121/1.2171837

- Strange, W. (2007). “Cross-language phonetic similarity of vowels: Theoretical and methodological issues,” in Language Experience in Second Language Speech Learning: In Honor of James Emil Flege, edited by O.-S. Bohn and M. J. Munro (John Benjamins, Amsterdam), pp. 35–55.

- Strange, W., Akahane-Yamada, R., Kubo, R., Trent, S. A., and Nishi, K. (2001). “Effects of consonantal context on perceptual assimilation of American English vowels by Japanese listeners,” J. Acoust. Soc. Am. 109, 1691–1704. doi:10.1121/1.1353594

- Strange, W., Akahane-Yamada, R., Kubo, R., Trent, S. A., Nishi, K., and Jenkins, J. J. (1998). “Perceptual assimilation of American English vowels by Japanese listeners,” J. Phonetics 26, 311–344. doi:10.1006/jpho.1998.0078

- Strange, W., Bohn, O.-S., Nishi, K., and Trent, S. A. (2005). “Contextual variation in the acoustic and perceptual similarity of North German and American English vowels,” J. Acoust. Soc. Am. 118, 1751–1762.

- Strange, W., Bohn, O.-S., Trent, S. A., and Nishi, K. (2004). “Acoustic and perceptual similarity of North German and American English vowels,” J. Acoust. Soc. Am. 115, 1791–1807. doi:10.1121/1.1687832

- Strange, W., Jenkins, J. J., and Johnson, T. L. (1983). “Dynamic specification of coarticulated vowels,” J. Acoust. Soc. Am. 74, 695–705. doi:10.1121/1.389855

- Strange, W., Weber, A., Levy, E., Shafiro, V., Hisagi, M., and Nishi, K. (2007). “Acoustic variability of German, French, and American vowels: Phonetic context effects,” J. Acoust. Soc. Am. 122, 1111–1129. doi:10.1121/1.2749716

- Werker, J. F., and Tees, R. C. (1984). “Cross-language speech perception: Evidence for perceptual reorganization during the first year of life,” Infant Behav. Dev. 7, 49–63. doi:10.1016/S0163-6383(84)80022-3