For decades, concerns have arisen over the robustness of the clinical research enterprise and the erosion of the cadre of new and experienced clinical investigators (1–8). In 1996, the director of the National Institutes of Health (NIH) responded to these concerns by impaneling a group of experienced clinical investigators and teaching hospital administrators to recommend policy changes regarding clinical research (9). Subsequently, NIH implemented a number of the panel's recommendations, including increased support of clinical research training programs and the establishment of NIH-sponsored educational debt relief programs for clinical investigators (10,11). Re-engineering the clinical research enterprise is a major theme of the NIH Roadmap for Medical Research, launched in 2003 (12).

Despite these initiatives in support of clinical research, clinical investigators often perceive that clinical research grant applications may be disadvantaged in the NIH peer review process. Several reports have indicated that priority scores and funding rates are lower for clinical than for non-clinical applications (8, 13–15). Comparable differences between clinical and non-clinical applications were observed in applications reviewed in 1994 and 2004. Anecdotally, it has been suggested that these differences reflect inherent limitations of clinical studies, e.g., clinical studies are more difficult to control and it is difficult to determine causality from the results.

Based on an analysis of the relationship of review outcomes in 2004 to study section assignment and the professional backgrounds of study section members, we previously reported that the assignment of priority scores for clinical and non-clinical applications did not differ between reviewers with and without experience conducting clinical research (15). The less favorable review outcomes for clinical applications were also not accounted for by the "density" of clinical applications reviewed in a study section or by the greater requested costs of clinical research (15). Our preliminary observations, based on data from two funding cycles, suggested that human subject concerns contributed to the overall less favorable review outcomes for clinical applications (14).

We undertook an analysis to further evaluate potential explanations for the difference in peer review outcomes between clinical and non-clinical applications. Specifically, we focused on two factors: the submission rates and review outcomes of amended and competing renewal applications from new and established investigators, and the human subject protection concerns raised at the time of review.

METHODS

Data were derived from the Consolidated Grant Applications File maintained by the NIH Office of Extramural Research. The Center for Scientific Review (CSR) reviews approximately 70% of the applications submitted to NIH; the remainder are reviewed by the institutes and centers within NIH. The data set included R01 (standard research grant) applications reviewed by CSR in the 12 review rounds from October 2000 through May 2004.

Consistent with the definition proposed by an NIH Director's Panel on Clinical Research, an inclusive definition of clinical research was used. A research application is defined as clinical when the principal investigator (PI) indicates involvement of human subjects in the proposed research by checking "yes" in response to the human subjects query on page 1 of the grant application form. This definition of clinical research captures research on mechanisms of disease, therapeutic interventions, clinical trials, development of technologies, epidemiological and behavioral studies and outcomes, and health services research. Research involving the collection or study of publicly available or existing deidentified data, documents, records, pathological specimens, or diagnostic specimens was not considered clinical research (NIH Exemption 4 code).
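
This inclusive definition reduces to a simple classification rule. The following is a minimal sketch that assumes a hypothetical record layout: the page-1 human subjects answer is stored as a boolean, and exemption codes are stored as strings such as `"E4"`. These field names and code strings are for illustration only and do not reflect the actual format of the Consolidated Grant Applications File.

```python
from dataclasses import dataclass, field


@dataclass
class Application:
    # Hypothetical fields for illustration only.
    human_subjects_yes: bool                           # "yes" to the page-1 human subjects query
    exemption_codes: set = field(default_factory=set)  # e.g. {"E4"} (assumed encoding)


def is_clinical(app: Application) -> bool:
    """Inclusive definition: human subjects are involved, excluding studies of
    existing or public deidentified data or specimens (Exemption 4)."""
    return app.human_subjects_yes and "E4" not in app.exemption_codes


print(is_clinical(Application(True)))          # True
print(is_clinical(Application(True, {"E4"})))  # False
print(is_clinical(Application(False)))         # False
```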

The NIH defines human subject concerns as any potential or actual unacceptable risk(s) to human subjects, or inadequate protection against such risk(s), identified in any portion of the application. NIH staff and study section members use codes to identify concerns with human subject protections. Study section members are advised to factor human subject concerns into their assignment of a priority score.

Review outcomes were evaluated both in aggregate and separately for “new” and “experienced” investigators. This distinction is based on the individual’s having served, or not served, as PI on a Public Health Service-supported research project. Individuals classified as new investigators may have served as PI on a small grant (R03), an Academic Research Enhancement Award (R15), an exploratory development grant (R21), or certain mentored research career development awards (K01, K08, and K12).
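
The new/experienced distinction can be expressed the same way, as a predicate over an investigator's prior PI awards. The sketch below rests on two assumptions. First, prior awards are represented as a hypothetical list of NIH activity codes. Second, any PHS-supported award outside the mechanisms listed above is treated as a prior research project.

```python
# Mechanisms a "new" investigator may already have held as PI (from the text).
NEW_INVESTIGATOR_EXEMPT = frozenset({"R03", "R15", "R21", "K01", "K08", "K12"})


def is_new_investigator(prior_pi_awards: list) -> bool:
    """Return True if every prior PHS award held as PI is in the exempt set.

    `prior_pi_awards` is a hypothetical list of activity codes; any code
    outside the exempt set (e.g. "R01") is assumed to make the PI experienced.
    """
    return all(code in NEW_INVESTIGATOR_EXEMPT for code in prior_pi_awards)


print(is_new_investigator([]))              # True: no prior PI awards
print(is_new_investigator(["R03", "K08"]))  # True: only exempt mechanisms
print(is_new_investigator(["R21", "R01"]))  # False: a prior R01
```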

Applications reviewed by NIH receive a priority score, which reflects a scientific review group's evaluation of the scientific and technical merit of the application. Using increments of 0.1, individual members "vote" scores from 1.0 (highest merit) to 5.0 (lowest merit). If the study section unanimously agrees that an application is "non-competitive" (qualitatively, in the lower half of the applications it is reviewing), the application is not fully discussed at the meeting and does not receive a priority score. Scoring behavior varies among study sections. To allow comparisons across study sections and over time, R01 applications within each CSR study section are therefore also assigned a percentile rank, based on the priority scores given in the current and previous two review rounds. As with priority scores, the lower the numerical value of the percentile, the greater the relative merit of the application. Applications that do not receive a priority score are included within a study section's percentile rankings. This analysis focuses on the results of the scientific peer review, as reflected by the percentile rank.
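
The percentile procedure can be sketched as follows. This report does not give the exact NIH formula, so the sketch assumes the form `100 × (rank − 0.5) / N`. Under this assumption, tied scores share their average rank, and unscored (not discussed) applications count toward `N` but are not ranked. The function name and input layout are hypothetical.

```python
from typing import List, Optional, Sequence


def percentile_ranks(scores: Sequence[Optional[float]]) -> List[Optional[float]]:
    """Percentile rank for each application in one study section's pooled base.

    `scores` holds priority scores from the current and two previous rounds
    (lower = better); None marks an application that was not discussed.
    ASSUMED formula: 100 * (rank - 0.5) / N, where N counts every
    application (scored or not) and tied scores share their average rank.
    Unscored applications receive no percentile (None).
    """
    n = len(scores)
    scored = sorted(s for s in scores if s is not None)
    avg_rank = {}
    i = 0
    while i < len(scored):
        j = i
        while j < len(scored) and scored[j] == scored[i]:
            j += 1
        # The tied block occupies 1-based ranks i+1..j; use their mean.
        avg_rank[scored[i]] = (i + 1 + j) / 2
        i = j
    return [None if s is None else round(100 * (avg_rank[s] - 0.5) / n, 1)
            for s in scores]


# Hypothetical pooled base of five applications.
print(percentile_ranks([1.5, 2.0, 2.0, 3.1, None]))
# [10.0, 40.0, 40.0, 70.0, None]
```

Under the assumed formula, an unscored application enlarges `N` without taking a rank. Including such applications in the base therefore gives every scored application a somewhat lower (better) percentile.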

RESULTS

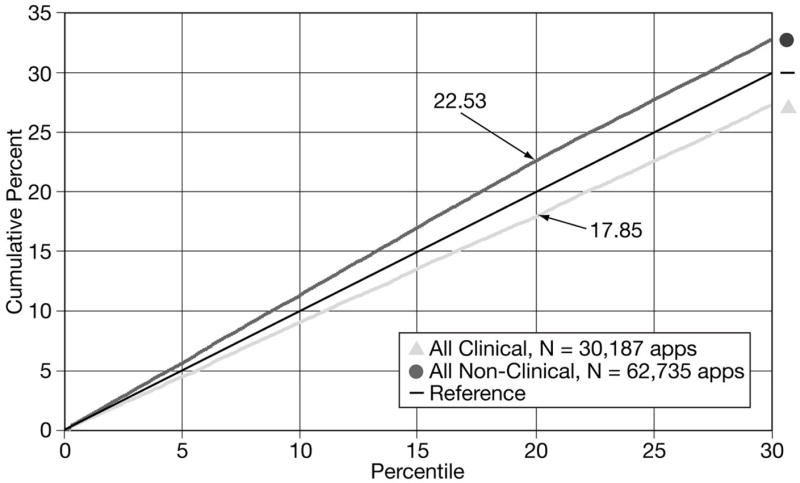

From October 2000 through May 2004, CSR reviewed 92,922 R01 applications; 62,735 (67.5%) were classified as non-clinical applications and 30,187 (32.5%) as clinical applications. Figure 1 plots the cumulative percent of clinical and non-clinical applications against percentile rank. Only applications with a percentile rank of 30.0 or better are displayed. Because percentile ranks are relative, in aggregate 20% of all applications receive a percentile rank of 20.0 or better, 30% receive 30.0 or better, and so on. This "reference" line is displayed in Figure 1 and subsequent figures. Groups of applications with better outcomes plot above the reference line, and groups with less favorable outcomes plot below it. There was a modest difference in outcomes for clinical versus non-clinical applications: 17.8% of all clinical R01 applications versus 22.5% of all non-clinical R01 applications received a percentile rank of 20.0 or better.

Figure 1.

Cumulative percents of clinical and non-clinical R01 grant applications scoring within the 30th percentile
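
Each point on a curve like those in Figure 1 is the share of a group whose percentile rank is at or better than a given cutoff, and the reference line is y = x. The sketch below assumes that each application already has a percentile rank. The sample values are hypothetical and not study data.

```python
from typing import Optional, Sequence


def cumulative_percent(percentiles: Sequence[Optional[float]], cutoff: float) -> float:
    """Percent of applications with a percentile rank at or better than `cutoff`.

    Unscored applications (None) stay in the denominator but never count
    as hits, mirroring their inclusion in the percentile base.
    """
    if not percentiles:
        raise ValueError("no applications")
    hits = sum(1 for p in percentiles if p is not None and p <= cutoff)
    return 100.0 * hits / len(percentiles)


def curve(percentiles, cutoffs=range(5, 31, 5)):
    """(cutoff, cumulative percent, distance above the y = x reference line)."""
    return [(c, cumulative_percent(percentiles, c),
             cumulative_percent(percentiles, c) - c) for c in cutoffs]


# Ten hypothetical applications (not study data); None = not discussed.
sample = [5.0, 12.0, 18.0, 25.0, 40.0, None, 60.0, 80.0, 90.0, 95.0]
for cutoff, pct, gap in curve(sample):
    print(f"{cutoff:>4}  {pct:5.1f}%  {gap:+5.1f}")
```

At a cutoff of 20, the values reported above for clinical (17.8%) and non-clinical (22.5%) applications sit 2.2 points below and 2.5 points above the reference line, respectively.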

Of all R01 clinical applications, 14.8% were noted by reviewers to have human subject concerns (Table 1). Initial applications (A0) from new investigators had the highest percent (19.2%) of applications with human subject concerns. Applications from experienced investigators, whether new (Type 1) applications or competing renewals (Type 2), also had substantial percents of human subject concerns, ranging from 9.4% to 15.6%. The percent of new investigator Type 1 applications with human subject concerns decreased with each resubmission (19.2%, 13.4%, and 10.9% for A0, A1, and A2, respectively).

Table 1.

Percent of R01 clinical applications

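| PI/application type | Submission | Total applications | Applications with human subject concerns (%) |
|---|---|---|---|
| New/T1 | A0 | 7,382 | 19.2 |
| New/T1 | A1 | 2,761 | 13.4 |
| New/T1 | A2 | 690 | 10.9 |
| Established/T1 | A0 | 8,446 | 15.6 |
| Established/T1 | A1 | 3,775 | 14.0 |
| Established/T1 | A2 | 1,086 | 14.1 |
| Established/T2 | A0 | 3,625 | 9.4 |
| Established/T2 | A1 | 1,825 | 10.4 |
| Established/T2 | A2 | 597 | 13.4 |
| Total | | 30,187 | 14.8 |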

Abbreviations: PI, principal investigator; A0, initial application; A1, first revision; A2, second revision of application; T1, new application; T2, competing renewal application
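
The subsets in Table 1 partition all clinical applications, so the overall 14.8% should equal the application-weighted average of the subset percentages. The following sketch is a simple consistency check that uses only the counts and percentages in Table 1. Because the percentages are rounded, the implied number of flagged applications is approximate.

```python
# Table 1: (PI/application type, submission, applications, % with concerns)
TABLE_1 = [
    ("New/T1", "A0", 7382, 19.2),
    ("New/T1", "A1", 2761, 13.4),
    ("New/T1", "A2", 690, 10.9),
    ("Established/T1", "A0", 8446, 15.6),
    ("Established/T1", "A1", 3775, 14.0),
    ("Established/T1", "A2", 1086, 14.1),
    ("Established/T2", "A0", 3625, 9.4),
    ("Established/T2", "A1", 1825, 10.4),
    ("Established/T2", "A2", 597, 13.4),
]


def pooled_percent(rows):
    """Total applications and the application-weighted percent with concerns."""
    total = sum(n for _, _, n, _ in rows)
    flagged = sum(n * pct / 100 for _, _, n, pct in rows)
    return total, 100 * flagged / total


total, pct = pooled_percent(TABLE_1)
print(total, round(pct, 1))  # 30187 14.8
```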

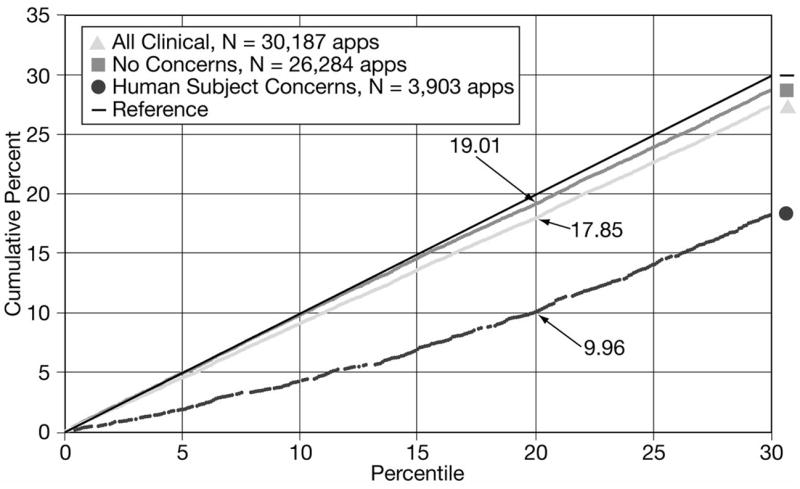

For the subset of clinical applications without human subject concerns, 19% had a percentile rank of 20.0 or better (Figure 2). In contrast, for the subset in which reviewers identified human subject concerns, only 10% received a percentile rank of 20.0 or better. Thus, approximately one-half of the observed difference in peer review outcomes for clinical versus non-clinical applications can be attributed to applicants failing to adequately address human subject concerns in their applications.

Figure 2.

Cumulative percents of clinical R01 grant applications with and without human subject concerns scoring within the 30th percentile

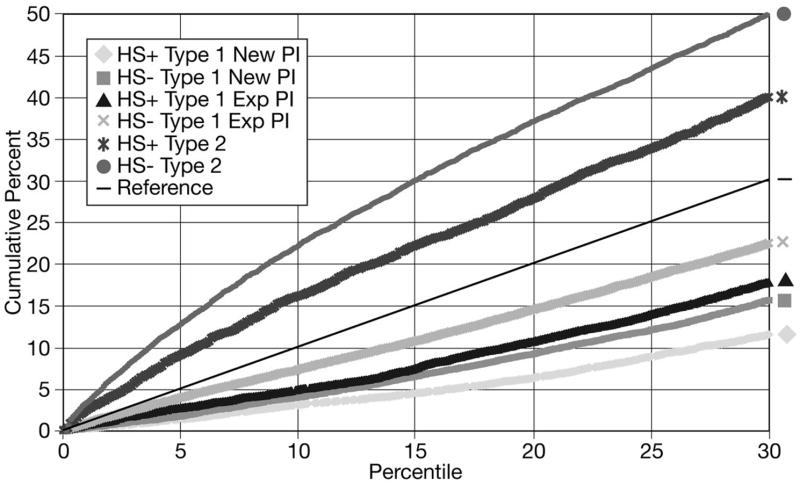

Overall, Type 1 applications, whether from new or established investigators, did not score as well as competing renewal (Type 2) applications (Figure 3). Initial Type 1 R01 applications from new investigators did not score as well as initial Type 1 applications from established investigators. This pattern persisted for the first (A1) and second (A2) resubmissions, although the differences narrowed on resubmission. Non-clinical R01 Type 1 and Type 2 applications from new and experienced investigators did better than clinical applications on the A0 submission. However, the differences between clinical and non-clinical applications for each category of investigator and application (new versus established and Type 1 v. Type 2) were reduced with the A1 application and disappeared with the A2.

Figure 3.

Cumulative percents of R01 grant applications by type (Type 1 [new] vs. Type 2 [competitive renewal], clinical [HS+]* vs. non-clinical [HS−]) and by principal investigator status (new vs. experienced) scoring within the 30th percentile

*HS+= involving human subjects; HS− = not involving human subjects

Table 2 compares submission rates for all clinical versus all non-clinical R01 applications by investigator category (new v. experienced), application type (Type 1 v. Type 2), and submission category (A0 v. A1 or A2 revisions). Overall, 35.9% of clinical applications were submitted by new investigators, compared with 29.5% of non-clinical applications. Additionally, investigators conducting clinical research were less likely to submit a competing renewal application than those conducting non-clinical research (20.0% v. 28.3% of applications). Resubmissions (A1 and A2) of competing renewal (Type 2) applications also represented a smaller percent of all clinical applications (8.0%) than of all non-clinical applications (10.6%). Thus, clinical investigators account for proportionally fewer competing renewal applications, both initial submissions and revisions.

Table 2.

Rate of submission of initial and revised applications.

| Application type | Investigator | Percent of clinical applications | Percent of non-clinical applications | Percent difference (relative to non-clinical) |
|---|---|---|---|---|
| Type 1 A0 | New | 24.5 | 20.0 | 22.5 |
| Type 1 A1 | New | 9.1 | 7.6 | 19.7 |
| Type 1 A2 | New | 2.3 | 1.9 | 21.1 |
| Type 1 A0 | Experienced | 28.0 | 27.4 | 2.2 |
| Type 1 A1 | Experienced | 12.5 | 11.4 | 9.6 |
| Type 1 A2 | Experienced | 3.6 | 3.4 | 5.9 |
| Type 2 A0 | Experienced | 12.0 | 17.7 | −32.2 |
| Type 2 A1 | Experienced | 6.0 | 7.9 | −24.1 |
| Type 2 A2 | Experienced | 2.0 | 2.7 | −25.9 |
| Total | | 100.0 | 100.0 | |

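Abbreviations: PI, principal investigator; A0, initial application; A1, first revision; A2, second revision of application; T1, new application; T2, competing renewal application. Percent difference is the difference between the clinical and non-clinical percentages, expressed relative to the non-clinical percentage.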
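
The "Percent difference" column in Table 2 is consistent with the difference between the clinical and non-clinical shares expressed relative to the non-clinical share: the sketch below reproduces all nine published values from the table. It also recomputes the group totals quoted in the text by summing rows. The row layout and helper names are illustrative.

```python
# Table 2: (type, submission, investigator, % clinical, % non-clinical, published % difference)
TABLE_2 = [
    ("Type 1", "A0", "New", 24.5, 20.0, 22.5),
    ("Type 1", "A1", "New", 9.1, 7.6, 19.7),
    ("Type 1", "A2", "New", 2.3, 1.9, 21.1),
    ("Type 1", "A0", "Experienced", 28.0, 27.4, 2.2),
    ("Type 1", "A1", "Experienced", 12.5, 11.4, 9.6),
    ("Type 1", "A2", "Experienced", 3.6, 3.4, 5.9),
    ("Type 2", "A0", "Experienced", 12.0, 17.7, -32.2),
    ("Type 2", "A1", "Experienced", 6.0, 7.9, -24.1),
    ("Type 2", "A2", "Experienced", 2.0, 2.7, -25.9),
]


def relative_difference(clinical: float, nonclinical: float) -> float:
    """Difference of the clinical share relative to the non-clinical share, in percent."""
    return 100 * (clinical - nonclinical) / nonclinical


def group_share(rows, predicate):
    """Summed clinical and non-clinical percentages over rows matching `predicate`."""
    matched = [r for r in rows if predicate(r)]
    return round(sum(r[3] for r in matched), 1), round(sum(r[4] for r in matched), 1)


for app_type, sub, inv, clin, nonclin, published in TABLE_2:
    diff = relative_difference(clin, nonclin)
    print(f"{app_type} {sub} {inv:<11} {diff:+6.1f} (table: {published:+.1f})")

print("new investigators:", group_share(TABLE_2, lambda r: r[2] == "New"))
print("competing renewals:", group_share(TABLE_2, lambda r: r[0] == "Type 2"))
print("Type 2 revisions:", group_share(TABLE_2, lambda r: r[0] == "Type 2" and r[1] != "A0"))
```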

Because different subsets of applications (for example, Type 1 v. Type 2, initial v. revised) have different peer review outcomes, differences in the proportion of applications within each subset affect the overall review outcome. Two values determine the aggregate percent of clinical and non-clinical applications scoring within the 20th percentile: the percent of applications in each of the nine subsets in Table 2 with a percentile rank of 20.0 or better, and the share of all applications that each subset represents. By modeling the impact of the differences in submission rates of the various types of applications, we estimate that the lower rate of submission of competing renewal (Type 2) clinical applications accounts for approximately one-half of the aggregate difference in peer review outcomes between clinical and non-clinical applications.
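
A short calculation illustrates this composition effect. The aggregate percent at or better than the 20th percentile is a weighted sum over the nine subsets: each subset's share of applications times that subset's success rate. The sketch below uses the Table 2 shares but HYPOTHETICAL subset success rates (15% for every Type 1 subset and 30% for every Type 2 subset), because this report does not tabulate subset-level rates. It is not a reconstruction of the authors' model.

```python
# Table 2 shares: subset -> (% of clinical applications, % of non-clinical applications)
SHARES = {
    "Type 1 A0 new": (24.5, 20.0),
    "Type 1 A1 new": (9.1, 7.6),
    "Type 1 A2 new": (2.3, 1.9),
    "Type 1 A0 exp": (28.0, 27.4),
    "Type 1 A1 exp": (12.5, 11.4),
    "Type 1 A2 exp": (3.6, 3.4),
    "Type 2 A0 exp": (12.0, 17.7),
    "Type 2 A1 exp": (6.0, 7.9),
    "Type 2 A2 exp": (2.0, 2.7),
}


def aggregate_rate(shares: dict, rates: dict) -> float:
    """Sum over subsets of (share of applications / 100) x (subset success rate, %)."""
    return sum(shares[k] / 100 * rates[k] for k in shares)


# HYPOTHETICAL subset success rates (% with percentile rank <= 20.0); NOT study data.
rates = {k: 30.0 if k.startswith("Type 2") else 15.0 for k in SHARES}

clinical_mix = {k: c for k, (c, _) in SHARES.items()}
nonclinical_mix = {k: nc for k, (_, nc) in SHARES.items()}

clinical = aggregate_rate(clinical_mix, rates)
nonclinical = aggregate_rate(nonclinical_mix, rates)
print(f"clinical mix: {clinical:.1f}%  non-clinical mix: {nonclinical:.1f}%")
```

Both groups have identical subset rates here, yet the clinical mix yields 18.0% and the non-clinical mix about 19.2%. A gap of roughly 1.2 points therefore arises from submission mix alone. A direct standardization approach separates the mix effect from differences in the subset rates themselves. It substitutes each group's observed subset rates and then swaps in the other group's shares.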

DISCUSSION

The present study, based on data from 12 review council rounds, confirms and extends preliminary observations that clinical grant applications, in aggregate, do not fare as well in peer review as non-clinical applications (7, 13–15). The current data suggest that nearly all of the difference in review outcomes for clinical and non-clinical applications is due to two separate factors. The first is the failure of 14.8% of clinical applications to adequately address the human subject protection requirements for research proposals. The second is a lower rate of submission of competing renewal (Type 2) applications by clinical investigators. Each of these two factors accounts for approximately half of the difference in review outcomes between clinical and non-clinical applications.

The increasing complexity of federal regulations governing the ethical conduct of clinical research is a challenge for the clinical investigator (16). Even some experienced, funded clinical researchers proposing continuation of their research have difficulty documenting adequate protections for human subjects. A citation for human subject concerns does not necessarily mean that the science is less meritorious. Human subject concerns raised at the time of review may reflect an inadequate explanation by the investigator in the protection of human subjects section of the application. Conceivably, the instructions for completing this section of the application may require further clarification. However, we cannot exclude the possibility that these applications are also weaker for reasons other than inadequate documentation of human subject protections. Nevertheless, there may be value in providing more rigorous training for both emerging and experienced clinical investigators in conducting research involving human subjects. The message to applicants is that failure to provide complete information about plans to protect human subjects may result in a less favorable priority score and could adversely affect the likelihood of funding.

Initial (A0) Type 2 applications made up a smaller share of clinical than of non-clinical submissions. Similarly, Dickler et al. recently reported that clinical researchers with only an MD degree are more likely than non-clinical researchers to leave the R01 grant applicant pool, although this was not the case for individuals with both MD and PhD degrees (8). Potential factors that may contribute to the attrition of clinical investigators include the total time required for clinical research training; a paucity of clinical research mentors; the slow pace of clinical research and its impact on academic promotion and subsequent competitiveness for renewal grant applications; the financial indebtedness of medical school graduates; and stable, attractive alternative career options (17). The clinical research enterprise would benefit from an informed, current understanding of the factors driving the lower submission rate of clinical research Type 2 applications.

Several limitations of this report should be noted. The observations are based on an inclusive and admittedly imperfect definition of clinical research. In the future, it would be useful to develop a more accurate mechanism for identifying and tracking review outcomes for specific types of clinical research. This analysis focuses exclusively on R01 applications reviewed by CSR. Applications for clinical research are also reviewed by other NIH institutes and centers, and clinical research is also supported by other funding mechanisms, including contracts. Finally, peer-review outcomes, not funding rates, are the focus of this analysis. However, percentile rank is not the only factor used by NIH’s institutes and centers in guiding funding decisions.

This analysis is not meant to deemphasize the importance of the composition of review panels and the assignment of applications to appropriate review groups. With the input of the external community, CSR monitors and evaluates the peer review process on an ongoing basis. Although peer review may be imperfect, it should not become the scapegoat for the organizational and cultural barriers to clinical research within medical schools and teaching hospitals, as identified by a recent Association of American Medical Colleges Task Force on Clinical Research (18).

Acknowledgments

We thank Charles Dumais and Lisa Klingensmith for their invaluable assistance in providing data for this manuscript.

Footnotes

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

References

1. Wyngaarden JB. The clinical investigator as an endangered species. N Engl J Med. 1979;301(23):1254–1259. doi: 10.1056/NEJM197912063012303.
2. Goldstein JL, Brown MS. The clinical investigator: Bewitched, bothered, and bewildered-but still beloved. J Clin Invest. 1997;99(12):2803–2812. doi: 10.1172/JCI119470.
3. Campbell EG, Weissman JS, Moy E, Blumenthal D. Status of clinical research in academic health centers: Views from the research leadership. JAMA. 2001;286(7):800–806. doi: 10.1001/jama.286.7.800.
4. Nathan DG. Educational debt relief for clinical investigators—a vote of confidence. N Engl J Med. 2002;346(5):372–374. doi: 10.1056/NEJM200201313460516.
5. Nathan DG. Careers in translational clinical research-historical perspectives, future challenges. JAMA. 2002;287(18):2424–2427. doi: 10.1001/jama.287.18.2424.
6. Ley TJ, Rosenberg LE. Removing career obstacles for young physician-scientists—loan-repayment programs. N Engl J Med. 2002;346(5):368–373. doi: 10.1056/NEJM200201313460515.
7. Sung NS, Crowley WF, Jr, Genel M, et al. Central challenges facing the national clinical research enterprise. JAMA. 2003;289(10):1278–1287. doi: 10.1001/jama.289.10.1278.
8. Dickler HB, Fang D, Heinig SJ, et al. New physician-investigators receiving National Institutes of Health research project grants: A historical perspective on the "endangered species." JAMA. 2007;297(22):2496–2501. doi: 10.1001/jama.297.22.2496.
9. Nathan DG. Clinical research: Perceptions, reality, and proposed solutions. JAMA. 1998;280(16):1427–1432. doi: 10.1001/jama.280.16.1427.
10. Nathan DG, Varmus HE. The National Institutes of Health and clinical research: A progress report. Nat Med. 2000;6(11):1201–1204. doi: 10.1038/81282.
11. Nathan DG, Wilson JD. Clinical research and the NIH-a report card. N Engl J Med. 2003;349(19):1860–1865. doi: 10.1056/NEJMsb035066.
12. Zerhouni E. Medicine. The NIH Roadmap. Science. 2003;302(5642):63–72. doi: 10.1126/science.1091867.
13. Williams GH, Wara DW, Carbone P. Funding for patient-oriented research: Critical strain on a fundamental linchpin. JAMA. 1997;278(3):227–231.
14. Kotchen TA, Lindquist T, Malik K, Ehrenfeld E. NIH Peer Review of Grant Applications for Clinical Research. JAMA. 2004;291(7):836–843. doi: 10.1001/jama.291.7.836.
15. Kotchen TA, Lindquist T, Miller Sostek A, et al. Outcomes of National Institutes of Health peer review of clinical grant applications. J Investig Med. 2006;54(1):13–19. doi: 10.2310/6650.2005.05026.
16. Mello MM, Studdert DM, Brennan TA. The rise of litigation in human subjects research. Ann Intern Med. 2003;139(1):40–45. doi: 10.7326/0003-4819-139-1-200307010-00011.
17. Wolf M. Clinical research career development: The individual perspective. Acad Med. 2002;77(11):1084–1088. doi: 10.1097/00001888-200211000-00004.
18. Association of American Medical Colleges. Promoting translational and clinical science: The critical role of medical schools and teaching hospitals. Report of the AAMC's Task Force II on Clinical Research; Washington, DC. 2006.