Abstract

Background

Self-assessment is increasingly being incorporated into competency evaluation in residency training. Little research has investigated the characteristics of residents’ learning objectives and action plans after self-assessment.

Objective

To explore the frequency and specificity of residents’ learning objectives and action plans after completing either a highly or minimally structured self-assessment.

Design

Internal Medicine residents (N = 90) were randomized to complete a highly or minimally structured self-assessment instrument based on the Accreditation Council for Graduate Medical Education Core Competencies. All residents then identified learning objectives and action plans.

Measurements

Learning objectives and action plans were analyzed for content. Differences in specificity and content related to form, gender, and training level were assessed.

Results

Seventy-six residents (84% response rate) identified 178 learning objectives. Objectives were general (79%), most often focused on medical knowledge (40%), and were not related to the type of form completed (p > 0.01). “Reading more” was the most common action plan.

Conclusions

Residents commonly identify general learning objectives focusing on medical knowledge regardless of the structure of the self-assessment form. Tools and processes that further facilitate self-assessment should be identified.

KEY WORDS: self-assessment, reflective practice, graduate medical education

Background

Currently, there is much interest in the skills of self-assessment across the medical education continuum.1–5 In theory, self-assessment can serve as a mechanism for identifying one’s strengths and weaknesses, and subsequently, identifying learning plans1 and goals to improve patient care.2 Reflective practice, which incorporates self-assessment, is a key component of professional development6 and is being integrated into the evaluation of competency in residency training.1,3,7 Little is known, however, about what trainees identify as their learning needs or their plans to address them. Understanding needs and plans is important for evaluation research and the development of tools to facilitate self-assessment.

The primary objective of this study was to investigate the frequency and specificity of resident-identified learning objectives and the associated action plans after completing either a highly structured (HS) or minimally structured (MS) self-assessment instrument based upon the Accreditation Council for Graduate Medical Education (ACGME) Core Competencies. Varying amounts of structure were incorporated into both self-assessment instruments to facilitate residents’ understanding of the complex ACGME competencies. A second objective was to determine whether residents’ learning objectives and action plans were affected by the type of self-assessment instrument completed. We hypothesized that residents completing the HS self-assessment form would identify more specific learning objectives and address more competencies than residents completing the MS form.

Methods

Study participants were postgraduate year (PGY)-1 (n = 47) and PGY-2 (n = 43) Internal Medicine residents completing their first and second years of residency at the Hospital of the University of Pennsylvania, a tertiary care hospital. In June 2006, residents attended a mandatory, PGY-specific educational retreat to review policy changes, responsibilities, and teaching strategies. At the beginning of each retreat, residents provided consent, were randomized, and were given approximately 15 minutes to complete a self-assessment packet consisting of either an HS or MS self-assessment instrument followed by a learning objectives and action plans form. The Institutional Review Board of the University of Pennsylvania approved the study; residents had the option of not participating.

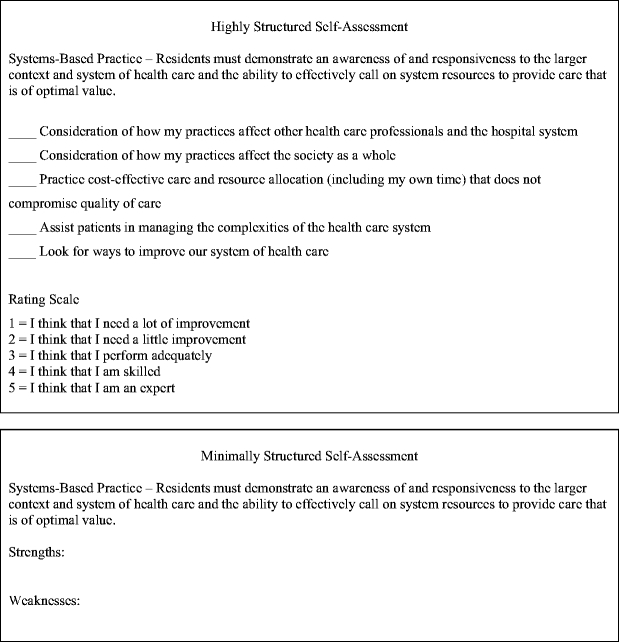

Both the HS and MS instruments were divided into 6 sections (one per competency) that each included the ACGME competency definition.7 For the HS instrument, each competency was subdivided into 5 to 12 specific components (42 total items) using the ACGME website descriptions as of February 2007.7 Residents rated themselves on each item using a 5-point Likert scale. For the MS instrument, residents were instructed to read each competency’s definition, reflect upon its meaning, and write down their strengths and weaknesses for that competency. Text Box 1 demonstrates the systems-based practice competency for the HS and MS instruments. All residents then completed an identical Learning Objectives and Action Plan form that asked, “Please list 3 specific learning objectives and how you plan to accomplish them.”

Text Box 1.

Example of HS and MS Self-assessment Question(s) for the ACGME Core Competency Systems-based Practice

Each self-assessment packet was prelabeled with a unique identifier to permit data recombination. Before data analysis, the HS and MS instruments were separated from the Learning Objectives form for blinded coding of the content. Two authors (KJC, JRK) independently categorized each learning objective into 1 of the 6 ACGME Core Competencies and also categorized each as “general” (broad and without detail, e.g., “improve patient interactions” or “increase knowledge base”) or “specific” (explicit and focused, e.g., “improve ABG interpretation” or “better outpatient clinic follow-up rates”). Action plans were analyzed for content. Raters were blinded to the type of self-assessment completed, and interrater agreement was determined before adjudication. The 2 raters then reviewed all assignments and adjudicated differences to reach agreement. Associations of content with type of self-assessment form, training level, and gender were assessed with chi-square analyses and Mann–Whitney tests. Data were analyzed using SPSS 15.0 for Windows.
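The interrater agreement step can be illustrated with a minimal sketch. It assumes that agreement was computed as simple percent agreement (the share of objectives that both raters coded identically before adjudication), which the text does not state explicitly. The function name and the five example codes are hypothetical and are not study data.

```python
from typing import Sequence


def percent_agreement(rater_a: Sequence[str], rater_b: Sequence[str]) -> float:
    """Return the percentage of items that two raters coded identically."""
    if len(rater_a) != len(rater_b):
        raise ValueError("Both raters must code the same number of items")
    if not rater_a:
        raise ValueError("At least one coded item is required")
    matches = sum(a == b for a, b in zip(rater_a, rater_b))
    return 100.0 * matches / len(rater_a)


# Hypothetical specificity codes for five learning objectives (not study data).
codes_rater_1 = ["general", "general", "specific", "general", "specific"]
codes_rater_2 = ["general", "specific", "specific", "general", "specific"]

agreement = percent_agreement(codes_rater_1, codes_rater_2)
print(f"Agreement before adjudication: {agreement:.0f}%")
```

Percent agreement does not correct for chance agreement; a chance-corrected statistic such as Cohen's kappa would be a different measure and is not implied here.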

Results

Seventy-six residents (41 PGY-1 and 35 PGY-2; 37 male, 34 female, and 5 with gender not reported) completed a self-assessment packet (response rate 84%). Thirty-nine residents (23 PGY-1, 16 PGY-2) completed the HS self-assessment and 37 residents (18 PGY-1, 19 PGY-2) completed the MS self-assessment. The remaining residents (6 PGY-1 and 8 PGY-2) either missed the beginning of the retreat, elected not to participate, or did not return their self-assessment.
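As a quick consistency check, the participation figures can be recomputed from the counts reported in the Methods and in the paragraph above. This is only an arithmetic sketch; the variable names are illustrative.

```python
# Counts reported in the text: residents eligible and residents who
# completed a packet, by training level.
eligible = {"PGY-1": 47, "PGY-2": 43}
completed = {"PGY-1": 41, "PGY-2": 35}

# Form allocation among completers, by training level.
hs_form = {"PGY-1": 23, "PGY-2": 16}
ms_form = {"PGY-1": 18, "PGY-2": 19}

total_eligible = sum(eligible.values())
total_completed = sum(completed.values())
response_rate = 100.0 * total_completed / total_eligible

# Residents who did not complete a packet, by training level.
non_responders = {level: eligible[level] - completed[level] for level in eligible}

print(f"Response rate: {total_completed}/{total_eligible} = {response_rate:.0f}%")
print(f"Non-responders: {non_responders}")
```

The form allocations also add up within each training level (23 + 18 = 41 PGY-1 and 16 + 19 = 35 PGY-2).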

A total of 178 learning objectives (HS = 89, MS = 89) were identified with a mean of 2.3 learning objectives per resident. Interrater agreement of learning objective category and specificity was 94% and 92%, respectively. Table 1 shows the percentage of learning objectives identified for each competency by the type of self-assessment completed. There were few differences between forms; objectives most frequently identified were for medical knowledge (40%) and patient care (27.3%). Objectives focusing on interpersonal and communication skills tended to be identified by residents completing the MS form (p = 0.04) whereas learning objectives focusing on systems-based practice were more often listed by residents completing the HS form (p = 0.06). Analyses of the percentage of residents who wrote at least 1 objective for each competency showed the same trends (73% medical knowledge, 45% patient care, 27% practice-based learning, 21% interpersonal and communication skills, 19% systems-based practice, and 11% professionalism).

Table 1.

Percent of Total Learning Objectives Identified by Core Competency

| | Patient care | Medical knowledge | Practice-based learning | Interpersonal and communication skills | Professionalism | Systems-based practice |
|---|---|---|---|---|---|---|
| All learning objectives | 27.3 | 40.0 | 11.8 | 10.0 | 4.2 | 7.3 |
| Learning objectives from HS form | 25.5 | 39.0 | 12.0 | 6.4 | 5.4 | 11.8 |
| Learning objectives from MS form | 25.0 | 42.2 | 12.6 | 13.6 | 3.3 | 3.3 |
| p value* | .98 | .61 | .89 | .04 | .47 | .06 |

*p value was based on the Mann–Whitney U test.
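For readers unfamiliar with the test named in the footnote, the following is a minimal sketch of how a Mann–Whitney U statistic is computed for two independent groups. It assumes the comparison was made on a per-resident value such as the percentage of each resident's objectives falling in one competency; the text does not spell out the unit of analysis. The data are hypothetical, the function names are illustrative, and the sketch stops at the U statistic; a statistics package (the authors used SPSS) would be needed for the p value.

```python
from typing import List, Sequence, Tuple


def average_ranks(values: Sequence[float]) -> List[float]:
    """Rank values from 1 to n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        # Extend the run while the next sorted value ties with the current one.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        mean_rank = (start + end) / 2 + 1  # positions are 0-based, ranks 1-based
        for k in range(start, end + 1):
            ranks[order[k]] = mean_rank
        start = end + 1
    return ranks


def mann_whitney_u(group_a: Sequence[float], group_b: Sequence[float]) -> Tuple[float, float]:
    """Return (U_a, U_b) computed from pooled average ranks."""
    n_a, n_b = len(group_a), len(group_b)
    ranks = average_ranks(list(group_a) + list(group_b))
    rank_sum_a = sum(ranks[:n_a])
    u_a = rank_sum_a - n_a * (n_a + 1) / 2
    u_b = n_a * n_b - u_a
    return u_a, u_b


# Hypothetical per-resident percentages of objectives in one competency.
hs_percent = [0.0, 0.0, 33.3, 50.0]
ms_percent = [0.0, 33.3, 66.7, 100.0]

u_hs, u_ms = mann_whitney_u(hs_percent, ms_percent)
print(f"U (HS) = {u_hs}, U (MS) = {u_ms}")
```

A rank-based test is a reasonable choice for this kind of data because per-resident percentages built from at most 3 objectives take only a few distinct values and are far from normally distributed.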

Learning objectives were general rather than specific (79% vs 21% of objectives, respectively), and there was no significant difference in specificity based on the type of self-assessment completed (p > 0.01). Training level and gender were not significantly related to learning objectives competency categorization or specificity (all ps > 0.01).

Analysis of the action plans revealed 10 different strategies. Interrater agreement of action plan categorization was 90%. Reading was the most commonly identified strategy (37% of all strategies identified) followed by time management (15%), finding opportunities to address perceived deficits (e.g., review x-rays with a radiologist; 12%), and teaching (11%). The 6 additional strategies identified each comprised <10% of action plans. Training level, gender, and type of form completed were not significantly related to action plan content (all ps > 0.01).

Discussion

Practicing physicians are required to participate in self-assessment programs as part of maintenance of certification,8 and the ACGME has supported the concept of reflective practice through its ongoing development of a competency-based learning portfolio for use in residency accreditation.9 An important step in developing these skills is being able to identify and reflect upon one’s strengths and weaknesses to develop attainable, specific learning objectives with associated action plans. This study is unique in that it provides insight into what residents identify as their most important learning objectives and their action plans to address those objectives after performing self-assessment.

Irrespective of the degree of structure in the self-assessment instrument, our residents identified general learning objectives largely focusing on medical knowledge and patient care, with a relative paucity of learning objectives identified in the other competencies. Perhaps this should be expected, as training programs have focused on teaching and evaluating patient care and knowledge longer than other competencies such as systems-based practice, which are relatively unfamiliar to trainees and faculty. As graduate medical education shifts toward the evaluation of all 6 interrelated competency domains, it will be interesting to see if other competencies take a more prominent role. We also noted that most objectives lacked specificity, possibly because residents had no formal introduction to self-assessment or to the characteristics of good learning objectives. As when identifying objectives during curriculum development, specificity and detail are important characteristics of high-quality learning objectives.10 Communicating this concept to residents will be important.

There are several limitations in this single-institution preliminary study. First, the self-assessment instruments were created for this study as no widely accepted self-assessment instrument using the core competency framework was available. Hence, there are no comparative or psychometric data to aid interpretation. Moreover, the HS instrument asked residents to pass summary judgments of their performance using a Likert scale in multiple competency domains. Summary judgments of performance have been shown in prior studies to be inaccurate,4 and debate continues about the value of self-assessment given its inaccuracy when compared to objective external assessments.4 Our study, however, was not designed to assess the accuracy of self-assessment. Ratings were used as a reference point for residents to develop their learning objectives and action plans. As residents were given only 15 minutes to complete the self-assessment packet, it is unclear if inadequate time for reflection caused them to preferentially identify learning objectives in more familiar areas. The optimal time for reflection and self-assessment should be addressed in future studies. Because the instruments asked residents to list up to 3 learning objectives in any of the 6 competencies, it is unclear if the number, and therefore the diversity, of the identified learning objectives was limited by these defined instructions. Finally, residents performed the self-assessment without the use of feedback. Ideally, objective data would be used in conjunction with iterative feedback, possibly through a mentor or advisor, to guide trainees when performing self-assessment.5

Future studies might include reflection on individual or group data and should be done on a continuous basis with ongoing formative feedback, for example using a structured learning portfolio.1,3 Validating the accuracy of residents’ self-assessments, either singularly or through a learning portfolio, will be an ongoing challenge. Finally, additional work is needed to determine whether form structure, accompanied by guidance, can promote identification of diverse, specific learning objectives and action plans.

Acknowledgments

No external funding was granted for the completion or submission of the research presented in this manuscript.

Conflict of Interest

None disclosed.

Footnotes

Some of the contents of this manuscript were presented in an oral abstract presentation at the Society of General Internal Medicine 30th Annual Meeting held in Toronto, Ontario, Canada on 28 April 2007.

Dr. Caverzagie was affiliated with the Philadelphia Veteran’s Administration Hospital at the time this study was performed.

References

- 1. Carraccio C, Englander R. Evaluating competence using a portfolio: a literature review and web-based application to the ACGME competencies. Teach Learn Med. 2004;16:381–7.

- 2. Eva KW, Regehr G. Self-assessment in the health professions: a reformulation and research agenda. Acad Med. 2005;80(10 suppl):S46–54.

- 3. Holmboe ES, Rodak W, Mills G, McFarlane MJ, Schultz HJ. Outcomes-based evaluation in resident education: creating systems and structured portfolios. Am J Med. 2006;119:708–14.

- 4. Davis DA, Mazmanian PE, Fordis M, Van Harrison R, Thorpe K, Perrier L. Accuracy of physician self-assessment compared with observed measures of competence: a systematic review. JAMA. 2006;296:1094–102.

- 5. Duffy FD, Holmboe ES. Self-assessment in lifelong learning and improving performance in practice: physician know thyself. JAMA. 2006;296:1137–9.

- 6. Schon D. Educating the reflective practitioner. San Francisco: Jossey-Bass; 1987.

- 7. Accreditation Council for Graduate Medical Education, Outcome Project, General Competencies Version 1.3. Available at http://www.acgme.org/outcome/comp/GeneralCompetenciesStandards21307.pdf. Accessed 25 February 2008.

- 8. American Board of Medical Specialties, Maintenance of certification. Available at http://www.abms.org/About_Board_Certification/MOC.aspx. Accessed 25 February 2008.

- 9. Accreditation Council for Graduate Medical Education, The ACGME Learning Portfolio. Available at http://www.acgme.org/acWebsite/portfolio/learn_cbpac.asp. Accessed 25 February 2008.

- 10. Kern DE, Thomas PA, Howard DM, Bass EB. Curriculum development for medical education: a six-step approach. Baltimore: Johns Hopkins; 1998.