Summary

BACKGROUND

Increased clinical demands and decreased available time accentuate the need for efficient learning in postgraduate medical training. Adapting Web-based learning (WBL) to learners’ prior knowledge may improve efficiency.

OBJECTIVE

We hypothesized that time spent learning would be shorter and test scores not adversely affected for residents who used a WBL intervention that adapted to prior knowledge.

DESIGN

Randomized, crossover trial.

SETTING

Academic internal medicine residency program continuity clinic.

PARTICIPANTS

122 internal medicine residents.

INTERVENTIONS

Four WBL modules on ambulatory medicine were developed in standard and adaptive formats. The adaptive format allowed learners who correctly answered case-based questions to skip the corresponding content.

MEASUREMENTS AND MAIN RESULTS

The measurements were knowledge posttest, time spent on modules, and format preference. One hundred twenty-two residents completed at least 1 module, and 111 completed all 4. Knowledge scores were similar between the adaptive format (mean ± standard error of the mean, 76.2 ± 0.9) and standard (77.2 ± 0.9, 95% confidence interval [CI] for difference −3.0 to 1.0, P = .34). However, time spent was lower for the adaptive format (29.3 minutes [CI 26.0 to 33.0] per module) than for the standard (35.6 [31.6 to 40.3]), an 18% decrease in time (CI 9 to 26%, P = .0003). Seventy-two of 96 respondents (75%) preferred the adaptive format.

CONCLUSIONS

Adapting WBL to learners’ prior knowledge can reduce learning time without adversely affecting knowledge scores, suggesting greater learning efficiency. In an era where reduced duty hours and growing clinical demands on trainees and faculty limit the time available for learning, such efficiencies will be increasingly important. For clinical trial registration, see NCT00466453 at http://www.clinicaltrials.gov (http://www.clinicaltrials.gov/ct/show/NCT00466453?order=1).

KEY WORDS: medical education, Web-based learning, clinical medicine, computer-assisted instruction, adaptation, problem solving

Introduction

Increased clinical demands and decreased available time have accentuated the need for efficient learning in postgraduate medical training.1,2 Authors have speculated that Web-based learning (WBL)—using Internet-based computer programs to facilitate learning3—is more efficient than traditional instructional formats.4 Although evidence supports this contention for some WBL interventions,5–7 other studies have found that WBL takes time similar to8 or greater than9 other media and that different WBL formats require different amounts of time.10,11 Whereas it seems unlikely that WBL is inherently more efficient than other instructional media, it does have the potential to facilitate efficient learning. Numerous studies have demonstrated the efficacy of WBL in various settings,5,7,8,12–14 yet research is needed to investigate how to enhance WBL’s effectiveness and efficiency.15–17

Adapting instruction to an individual learner’s baseline or prior knowledge has been proposed to improve WBL effectiveness and efficiency.18,19 Systems adapting to prior knowledge might allow learners to skip content they have already mastered or advise remediation for knowledge deficiencies. However, adaptation could potentially lead to decreased learning (if needed information is inadvertently skipped) or increased time (if excess remediation is prescribed). Studies in nonmedical education suggest that adaptation to an individual’s prior knowledge improves achievement, particularly for low-knowledge learners, and decreases time required to learn material.20–23 Although several adaptive systems have been described for medical training,24–29 the adaptive component has rarely been carefully evaluated in comparison with a similar, nonadaptive system. One model adapting to group (rather than individual) knowledge level found time savings; however, the data showed higher knowledge gains in the nonadaptive group that approached but did not reach statistical significance.26 Another adaptive model found significantly decreased time required, but this evaluation focused on adaptive testing rather than adaptive instruction.27 Given these limited studies and inconclusive results, further research regarding the efficiency of adaptive WBL is needed.

We hypothesized that a WBL intervention that adapted to the prior knowledge of Internal Medicine residents would take less time than a nonadaptive format and that knowledge test scores would not decrease, resulting in higher learning efficiency. To evaluate this hypothesis, we conducted a randomized, crossover trial. We did not calculate a learning efficiency ratio (knowledge score divided by time spent) because the value of learning is not constant across the range of scores: a very short time yielding a very low knowledge score would be undesirable, yet it could have the same efficiency ratio as a long time yielding a high knowledge score.
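The objection to a single efficiency ratio can be made concrete with a few lines of Python. The sketch below divides knowledge score by time for two hypothetical learners; the function name and the numbers are invented for illustration and are not study data.

```python
def efficiency_ratio(score_pct: float, minutes: float) -> float:
    """Knowledge score (percent correct) per minute of learning time (hypothetical metric)."""
    if minutes <= 0:
        raise ValueError("time spent must be positive")
    return score_pct / minutes


# Hypothetical learners (not study data): one rushes and scores poorly,
# the other spends more time and scores well.
rushed = efficiency_ratio(score_pct=20.0, minutes=5.0)
diligent = efficiency_ratio(score_pct=80.0, minutes=20.0)

# Both learners get the same ratio, although only the second outcome
# would be educationally acceptable.
print(rushed, diligent)
```

Because the ratio cannot distinguish these two cases, the study reports time and knowledge score separately rather than combining them.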

MATERIALS AND METHODS

Setting and Participants

This study took place in an academic Internal Medicine residency program during the 2005–2006 academic year. Residents have a weekly continuity clinic at 1 of 5 sites. WBL modules on ambulatory medicine are part of the regular continuity clinic curriculum. All 144 categorical residents in the Mayo Internal Medicine Residency Program in Rochester, MN, were invited to participate in the study. Our Institutional Review Board approved this study. All participants gave consent.

Interventions and Randomization

Since 2002, all categorical residents have been required to complete 4 evidence-based WBL modules on ambulatory medicine topics each year. For the present study, we updated the content of previously developed modules covering diabetes mellitus, hyperlipidemia, asthma, and depression. We also added case-based questions and feedback to enhance the instructional design based on our research showing benefits from such questions in WBL.10 For the purposes of the study intervention, these questions also served to assess baseline knowledge. We embedded questions in the module, rather than administering these as a separate pretest, to optimize their instructional efficacy.

Each revised module consisted of 17 to 21 case-based questions (patient scenarios followed by multiple-choice questions) with didactic information appearing as feedback to each question. The authors, in collaboration with at least 1 specialist in each content area, used an iterative process to develop questions specifically matching each didactic segment. Questions were added until all didactic information had at least 1 corresponding question.

In the standard format, feedback consisted of a 1-sentence explanation of the correct response followed by additional information (1 to 4 paragraphs of text, tables, illustrations, and hyperlinks to online resources) related to that question (“detailed information”). In the adaptive version, feedback began with the same brief explanation used in the standard format. However, learners who answered the question correctly were directed to advance to the next question rather than review the detailed information. These learners had the option of viewing the detailed information if desired (“Click here for more information”), but the default navigation (“Proceed to next page”) led them to the next question. Learners who answered the question incorrectly were presented with both the brief explanation and the detailed information (i.e., they did not have the option to skip this information). The clinical cases, questions, explanations, and didactic information were identical between the 2 formats; only the adaptive navigation changed. Responses were not recorded because of technical limitations of the software.
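The navigation rule above reduces to a single branch on format and answer correctness. The Python sketch below models it as a hypothetical function that lists the feedback elements a learner sees after one question; the function and element names are our own labels, not part of the module software.

```python
from typing import List


def screens_after_answer(answered_correctly: bool, adaptive: bool,
                         wants_more: bool = False) -> List[str]:
    """Feedback elements shown after one case-based question (hypothetical model).

    Every learner sees the brief explanation. The detailed information is
    required in the standard format and after an incorrect answer; in the
    adaptive format a correct answer makes it optional ("Click here for more
    information"), shown only if the learner opts in.
    """
    shown = ["brief explanation"]
    if not adaptive or not answered_correctly:
        shown.append("detailed information")  # required reading
    elif wants_more:
        shown.append("detailed information")  # optional, learner chose to view it
    shown.append("next question")
    return shown


print(screens_after_answer(answered_correctly=True, adaptive=True))
print(screens_after_answer(answered_correctly=False, adaptive=True))
```

Only the correct-answer, adaptive-format path skips the detailed information by default, which is the sole difference between the two formats.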

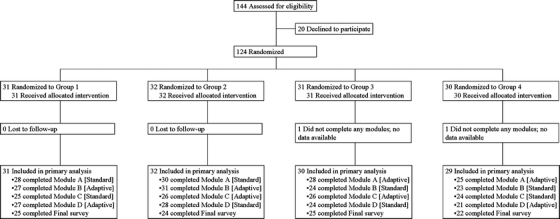

Modules were released at approximately 6-week intervals. Residents could complete modules at any time and in the order they chose. Study participants completed 2 modules using the standard format and 2 modules using the adaptive format. One author (DAC) randomly assigned participants (after enrollment) to 1 of 4 groups using MINIM (version 1.5, London Hospital Medical College, London), with stratification by postgraduate year and clinic site. Each group was assigned a different permutation of standard and adaptive formats (see Fig. 1). Participants were also randomly assigned, in a factorial design, to complete a series of optional practice cases (versus no cases) at the end of each module; the results of this intervention are reported separately.30
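Programs such as MINIM balance group allocation across stratification factors by minimization. The Python sketch below illustrates that general idea for the 2 factors named above (postgraduate year and clinic site). It is a simplified, hypothetical version written for illustration, not MINIM's actual algorithm, and the group labels stand in for the format permutations shown in Fig. 1.

```python
import random
from collections import Counter
from typing import Dict, List, Tuple

# Hypothetical placeholders for the 4 crossover groups (permutations not reproduced).
GROUPS = ["Group 1", "Group 2", "Group 3", "Group 4"]


def minimization_assign(participants: List[Tuple[int, str]],
                        seed: int = 0) -> Dict[int, str]:
    """Assign (postgraduate year, clinic site) participants by simple minimization.

    For each new participant, count previously assigned participants in each
    group who share the same postgraduate year plus those who share the same
    clinic site, then pick the group with the smallest total. Ties are broken
    at random.
    """
    rng = random.Random(seed)
    by_pgy = {g: Counter() for g in GROUPS}
    by_site = {g: Counter() for g in GROUPS}
    assignment: Dict[int, str] = {}
    for idx, (pgy, site) in enumerate(participants):
        scores = {g: by_pgy[g][pgy] + by_site[g][site] for g in GROUPS}
        best = min(scores.values())
        group = rng.choice([g for g in GROUPS if scores[g] == best])
        by_pgy[group][pgy] += 1
        by_site[group][site] += 1
        assignment[idx] = group
    return assignment


# 40 hypothetical first-year residents at one site are split evenly across groups.
demo = minimization_assign([(1, "Site A")] * 40, seed=42)
print(Counter(demo.values()))
```

Minimization keeps marginal totals balanced even with small strata, which simple randomization within 5 sites and 3 postgraduate years might not.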

Figure 1.

Participant flow. Module topics were: Module A—diabetes mellitus, Module B—hyperlipidemia, Module C—asthma, and Module D—depression.

Instruments and Outcomes

Knowledge was assessed using a posttest at the end of each module. A pretest was intentionally avoided to prevent alerting participants to the material being tested,31 because of the time required,26 and because our research using earlier versions of 3 of the 4 modules showed significant knowledge gains from pretest to posttest.7 A test blueprint was used to develop 69 case-based multiple-choice questions (15 to 19 per module) designed to assess application of knowledge.32 Questions from previous pretests7 were reviewed, in conjunction with test item statistics, and retained, revised, or removed as appropriate. New questions were developed as needed, and new or substantially revised questions were reviewed by experts and pilot tested with Internal Medicine faculty. Time spent on each module screen was logged by computer. Because screen times were highly skewed, intervals longer than the 95th percentile were set equal to the 95th percentile value. The times for all screens were then summed (“measured time”). At the end of each module, residents also provided a free-text estimate of time spent on that module (“self-reported time”).
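The capping step (often called upper winsorizing) can be sketched in Python as follows. The text does not say which percentile definition or pool of intervals was used, so this sketch assumes the cap is computed from a pooled list of screen intervals with the `"inclusive"` method of `statistics.quantiles`; the function names are hypothetical.

```python
import statistics
from typing import List


def percentile_95(pool: List[float]) -> float:
    """95th percentile of a pool of screen intervals (assumed 'inclusive' definition)."""
    # quantiles(n=20) returns 19 cut points; the last one is the 95th percentile.
    return statistics.quantiles(pool, n=20, method="inclusive")[-1]


def measured_time(learner_intervals: List[float], cap: float) -> float:
    """Sum one learner's screen intervals after capping each at `cap`."""
    return sum(min(t, cap) for t in learner_intervals)


# Hypothetical screen intervals in seconds; the 1000-s screen (e.g., an idle
# browser window) is pulled down to the cap before summing.
pool = [10, 20, 30, 40, 1000]
cap = percentile_95(pool)
print(cap, measured_time([30, 1000], cap))
```

Capping rather than deleting long intervals keeps every screen in the total while limiting the influence of time the learner probably did not spend on the module.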

Demographic information was ascertained using a baseline questionnaire. An end-of-course questionnaire after the fourth module asked participants to indicate their preferred format and compare the perceived effectiveness and efficiency of the formats using 6-point scales.

Knowledge posttests and baseline and end-of-course questionnaires were administered using WebCT. Residents were asked to treat tests as “closed book.”

Statistical Analysis

Knowledge scores (percent correct) and time spent (measured and self-reported) were compared between the 2 formats using mixed linear models accounting for repeated measurements on each participant and for differences among modules. To meet model assumptions, it was necessary to log transform time before analysis; point estimates and confidence intervals were back-transformed to the original scale (minutes) for reporting purposes. Additional adjustments were planned for group assignment, postgraduate year, gender, clinic site, and both assignment to and actual completion of the intervention in the factorial design. In sensitivity analyses, missing data were imputed using the mean value for participants using that format for that module.
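Back-transformation explains why the time difference is reported as a percentage. A mean of log times back-transforms to a geometric mean, and a difference of log means back-transforms to a ratio. The Python sketch below shows only this arithmetic, not the mixed model itself; the function names are hypothetical, and it uses the rounded per-module estimates from Results.

```python
import math
from typing import List


def geometric_mean(minutes: List[float]) -> float:
    """Mean on the log scale, back-transformed to minutes."""
    return math.exp(sum(math.log(t) for t in minutes) / len(minutes))


def percent_decrease(adaptive_min: float, standard_min: float) -> float:
    """Percent decrease implied by a difference on the log scale.

    log(a) - log(s) = log(a / s), so exp(difference) is the ratio a / s,
    and 100 * (1 - ratio) is the relative decrease.
    """
    log_diff = math.log(adaptive_min) - math.log(standard_min)
    return 100 * (1 - math.exp(log_diff))


# Rounded estimates reported in Results: 29.3 (adaptive) vs 35.6 (standard) minutes.
print(round(percent_decrease(29.3, 35.6), 1))  # about 17.7, consistent with the reported 18%
```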

Format preference was analyzed using the Wilcoxon signed rank test, testing the null hypothesis that there was no preference. A sensitivity analysis was conducted using the median of the preference scale for missing data. The Wilcoxon rank sum or Kruskal–Wallis test was used for comparisons among groups. Other questionnaire responses were analyzed similarly. Correlation between measured and self-reported time was calculated using Spearman’s rho.
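The two nonparametric statistics named above can be sketched with the Python standard library. The sketch computes the Wilcoxon signed-rank statistic against the scale midpoint of 3.5 with a normal approximation and the usual tie correction, and Spearman's rho as the Pearson correlation of average ranks. It is illustrative only: the study used SAS 9.1, which may compute p-values differently, and any ratings passed in are hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def average_ranks(values: Sequence[float]) -> List[float]:
    """Rank values 1..n, giving tied values the mean of the ranks they span."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        mean_rank = (start + end) / 2 + 1  # convert 0-based positions to 1-based ranks
        for k in range(start, end + 1):
            ranks[order[k]] = mean_rank
        start = end + 1
    return ranks


def wilcoxon_signed_rank(ratings: Sequence[float],
                         mu0: float = 3.5) -> Tuple[float, float, float]:
    """Return (W+, z, two-sided p) for H0: ratings are centered on mu0."""
    diffs = [r - mu0 for r in ratings if r != mu0]  # zero differences are dropped
    n = len(diffs)
    ranks = average_ranks([abs(d) for d in diffs])
    w_plus = sum(rk for rk, d in zip(ranks, diffs) if d > 0)
    mean_w = n * (n + 1) / 4
    tie_sizes = {}
    for d in diffs:
        tie_sizes[abs(d)] = tie_sizes.get(abs(d), 0) + 1
    # Variance of W+ under H0, reduced for tied absolute differences.
    var_w = n * (n + 1) * (2 * n + 1) / 24 - sum(t**3 - t for t in tie_sizes.values()) / 48
    z = (w_plus - mean_w) / math.sqrt(var_w)
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal-approximation p-value
    return w_plus, z, p


def spearman_rho(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman's rho: Pearson correlation of the average ranks of x and y."""
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = math.sqrt(sum((a - mx) ** 2 for a in rx))
    sy = math.sqrt(sum((b - my) ** 2 for b in ry))
    return cov / (sx * sy)
```

Testing against 3.5 rather than an integer means no integer rating on the 6-point scale produces a zero difference, so no responses are discarded.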

All participants were analyzed in the groups to which they were assigned, and all data available for each participant at each time point were included. We used a two-sided alpha level of 0.05. The planned sample size of 86 participants was expected to provide 90% power to detect a 7% difference in knowledge score. All analyses were performed using SAS 9.1 (SAS Institute, Cary, NC, USA).

RESULTS

Beginning in December 2005, 124 residents consented to participate and were randomized (see Fig. 1). One hundred twenty-two (98%) completed at least 1 module, 111 (90%) completed all 4 modules including knowledge posttests, and 96 (77%) completed the final survey on preferences. Demographics are summarized in Table 1. Cronbach’s alpha for posttest scores was 0.68. Last follow-up was on August 31, 2006.
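Cronbach's alpha, reported above for the posttest, compares the variance of individual items with the variance of total scores. The Python sketch below implements the standard formula on a respondents-by-items matrix; it is not the study's computation, and the function name and any score matrix passed to it are hypothetical.

```python
import statistics
from typing import List


def cronbach_alpha(item_scores: List[List[float]]) -> float:
    """Cronbach's alpha for a respondents-by-items score matrix.

    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total scores))
    Population variances are used; using sample variances consistently gives
    the same ratio, because the n / (n - 1) factors cancel.
    """
    k = len(item_scores[0])
    items = list(zip(*item_scores))  # transpose: one tuple of scores per item
    item_var_sum = sum(statistics.pvariance(col) for col in items)
    total_var = statistics.pvariance([sum(row) for row in item_scores])
    return k / (k - 1) * (1 - item_var_sum / total_var)
```

Values near 1 indicate that items rank respondents consistently; the posttest value of 0.68 reflects moderate internal consistency across the 69 items.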

Table 1.

Characteristics of study participants*

| Characteristic | Response | Group 1 (n = 31), N (%)† | Group 2 (n = 32), N (%)† | Group 3 (n = 31), N (%)† | Group 4 (n = 30), N (%)† |
|---|---|---|---|---|---|
| Gender | Female | 13 (42) | 8 (25) | 15 (48) | 9 (30) |
| Postgraduate year | 1 | 11 (35) | 11 (34) | 12 (38) | 12 (40) |
| | 2 | 11 (35) | 11 (34) | 10 (32) | 9 (30) |
| | 3 | 9 (29) | 10 (31) | 9 (29) | 9 (30) |
| Prior postgraduate medical training | None | 20 (87) | 13 (69) | 14 (78) | 19 (86) |
| | 1 year | 1 (4) | 1 (5) | 2 (11) | 1 (5) |
| | 2 or more years | 2 (9) | 5 (26) | 2 (11) | 2 (9) |
| Postresidency plans | General Internal Medicine | 3 (12) | 6 (25) | 2 (8) | 2 (9) |
| | Internal medicine subspecialty | 22 (88) | 18 (75) | 21 (84) | 20 (91) |
| | Non-IM or undecided | 0 (0) | 0 (0) | 2 (8) | 0 (0) |
| Prior experience with Web-based learning | None | 0 (0) | 1 (5) | 0 (0) | 3 (14) |
| | 1–2 courses | 3 (13) | 3 (16) | 4 (22) | 3 (14) |
| | 3–5 courses | 7 (30) | 7 (37) | 6 (33) | 8 (36) |
| | 6 or more courses | 13 (57) | 8 (42) | 8 (45) | 8 (36) |
| Comfort using the Internet | Uncomfortable | 2 (9) | 1 (5) | 5 (28) | 3 (14) |
| | Neutral | 1 (4) | 0 (0) | 1 (5) | 1 (4) |
| | Comfortable | 20 (87) | 18 (95) | 12 (67) | 18 (82) |

*A total of 124 residents participated. Each completed 2 modules using the adaptive format and 2 modules using the standard format in a crossover fashion, with each randomly assigned group using a different permutation of formats.

†Number of responses varies because information was obtained from different questionnaires and not all respondents answered all questions; percentages are calculated based on actual responses.

Learning Time Spent

After adjusting for score differences between modules, there was no difference in knowledge scores between those using the adaptive format (mean ± standard error of the mean, 76.2 ± 0.9) and those using the standard format (77.2 ± 0.9, 95% confidence interval [CI] for difference −3.0 to 1.0, P = .34). However, the average time to complete 1 module was lower for the adaptive format (29.3 minutes [CI 26.0 to 33.0] per module) than for the standard (35.6 [31.6 to 40.3]), which represents an 18% decrease in time (CI 9 to 26%, P = .0003). Adjustment for group assignment, postgraduate year, gender, clinic site, and assigned and actual use of the optional practice cases did not change these results. Self-reported time was also less for the adaptive format (33.4 minutes [CI 30.5 to 36.5] per module) compared to the standard (36.8 [33.7 to 40.2], P = .0001). Results did not appreciably change in sensitivity analyses. Correlation between measured and self-reported time was statistically significant (rho = 0.56, P < .0001).

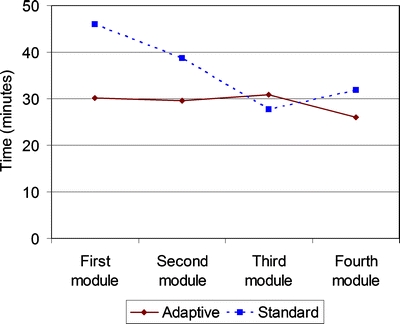

One hundred and two residents (84%) completed the diabetes module first, 15 (12%) started with hyperlipidemia, and 5 (4%) with asthma. There was no difference in measured time to complete modules whether residents started with an adaptive module or standard (P = .11). The 4 modules took a similar amount of time to complete (P = .30), but the time difference between the 2 formats varied depending on the order in which they were completed (interaction P = .012). The first, second, and fourth modules were shorter in the adaptive format, whereas the third module took slightly longer (see Fig. 2). The overall difference between formats remained significant (P = .0003). Analyses with self-reported time revealed a similar pattern, except that the interaction between format and order was not significant (P = .062). Module order had no effect on knowledge scores (P = .45, interaction with format P = .35).

Figure 2.

Time to complete module and module sequence. Residents completed modules in any sequence they chose. Shown here is the average time required to finish modules according to the completion order. The adaptive format required, on average, 29.3 minutes per module; the standard format required 35.6 minutes per module.

Knowledge scores increased slightly with longer time (2.2 points gained per 10 minutes, P < .0001); there was no interaction between time and instructional format (P = .91).

Format Preference

Seventy-two of 96 respondents (75%) preferred the adaptive format (mean rating 2.4 ± 0.2 [scale 1 = strongly prefer adaptive, 6 = strongly prefer standard], P < .0001 compared to scale median [3.5]). When missing data were set to the scale median (3.5) in a sensitivity analysis, the mean rating was 2.7 ± 0.1 (P < .0001 compared to the median). Learners also felt that the adaptive format was more efficient (2.1 ± 0.1, P < .0001) and more effective (2.9 ± 0.2, P = .0002), where 1 = adaptive format much more efficient/effective and 6 = standard format much more efficient/effective. Third-year residents rated the adaptive format higher in terms of effectiveness (2.3 ± 0.2) than did the second-year (3.1 ± 0.3) and first-year residents (3.2 ± 0.3, P = .045). Similar differences were observed for format preference (third-year 1.8 ± 0.2, second-year 2.7 ± 0.3, first-year 2.6 ± 0.3), but this did not reach statistical significance (P = .10). There was no difference in preference between those using the adaptive format first (2.2 ± 0.2) and those using standard format first (2.7 ± 0.3, P = .12).

There was an association between knowledge scores and format preference, with those preferring the standard format having higher knowledge scores (79.5 ± 1.7) than those preferring adaptive (75.4 ± 1.0, P = .044). However, those preferring the adaptive format took less time (27.7 minutes [CI 24.2 to 31.7] per module) than those who preferred standard (46.2 [36.5 to 58.4], P < .0001). There was no interaction with format (P = .26), and the adaptive format remained significantly shorter (P = .0003). These findings did not vary across postgraduate years (P = .52).

DISCUSSION

A WBL intervention on ambulatory medicine that adapted to residents’ prior knowledge decreased time spent on instruction by 18% compared to a nonadaptive intervention. Knowledge scores were unaffected, suggesting that adaptive WBL can facilitate higher learning efficiency. Three fourths of respondents preferred the adaptive format and felt it was more efficient; these effects were more notable for residents at later stages of training. Although the time savings was modest for a single module, the adaptive format would have saved over 25 minutes per resident if used for all 4 modules and, for our 144 categorical residents, would have cumulatively saved 60 hours. In an era where reduced duty hours and growing clinical demands on trainees and faculty limit the time available for learning, such efficiencies will be increasingly important not only in residency training but across the continuum of medical education.
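The cumulative savings quoted above follow from the per-module means in Results. The short Python check below reproduces that arithmetic; it assumes, as the paragraph does, that every categorical resident would complete all 4 modules.

```python
# Mean measured time per module (minutes), as reported in Results.
STANDARD_MIN = 35.6
ADAPTIVE_MIN = 29.3
MODULES_PER_YEAR = 4
CATEGORICAL_RESIDENTS = 144  # assumes every categorical resident completes all modules

saved_per_module = STANDARD_MIN - ADAPTIVE_MIN                          # 6.3 minutes
saved_per_resident = saved_per_module * MODULES_PER_YEAR                # 25.2 minutes
saved_total_hours = saved_per_resident * CATEGORICAL_RESIDENTS / 60     # about 60.5 hours

print(round(saved_per_module, 1), round(saved_per_resident, 1), round(saved_total_hours, 1))
```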

To our knowledge, this is the first study in medical education to document advantages of a WBL intervention adapting to individual learners’ prior knowledge in comparison to a nonadaptive system. A study using group-average knowledge test scores to adaptively include or omit modules found nonsignificantly lower scores for the adaptive group,26 consistent with the common-sense concern that class-level adaptations may neglect the learning needs of individuals. Others have compared adaptive systems with paper,28 face-to-face,24 or no-intervention33 controls, but these comparisons do not clarify the utility of adaptive versus nonadaptive designs. Whereas our adaptive model lacks the technical sophistication of many intelligent systems,29,34,35 our results support the concept of adaptive WBL in general and characterize a model that can be understood and implemented by a broad range of educators.

The difference in time between the adaptive and standard formats was not constant across the 4 modules; in fact, the standard format was slightly shorter than the adaptive format for the module completed third. Because most residents completed the asthma module third, this likely reflects, in part, variation between modules (i.e., the asthma module may differ from the others). Residents might have become more comfortable using the modules with practice and hence required less time; however, there was no significant difference in time overall between the 4 modules. We believe the most likely explanation is that once residents were exposed to the adaptive format, they learned to use this format even when given the standard format (for example, by not actually studying the “required” detailed information if they responded correctly to the question). Thus, the time savings from the adaptive format might have been even greater if the standard group had not been exposed to the adaptive format. The overall effect of the format on time remained significant even after adjustment for this interaction.

Learners who preferred the standard format had higher test scores but also took longer (46.2 versus 27.7 minutes per module for those preferring the adaptive format). This finding warrants further study. However, we note that learners who preferred the standard format could use the adaptive format much like the standard one by viewing the optional material as desired.

Concordant with previous arguments,12,15,16,36 we believe that WBL is not inherently more (or less) effective or efficient than other instructional media such as paper or face-to-face educational interventions. However, WBL can facilitate instructional methods that would be difficult in other settings, which may make it superior for specific contexts and instructional objectives.3,17,37 For example, the adaptability of the WBL intervention in this study would not be possible in a lecture format and would be cumbersome to duplicate with paper instruction.

Accurate learner assessment is critical to any adaptive system. This information can come from various sources, including the training level, previous assessments or standardized tests, self-assessments of knowledge, goals or preferences, knowledge pretests, clinical practice data, or monitoring how learners navigate a WBL course. One study found that a pretest added 30 minutes to the training session,26 and such an investment could negate any time savings from adaptive instruction. Thus, our model integrated the assessment with the instructional method (case-based scenario with self-assessment questions). Additionally, each assessment question must be carefully matched to its corresponding content, and even then, a correct answer could result from guessing or incorrect reasoning. We addressed this problem by allowing learners the option to review content even if they answered correctly. Others have addressed this by developing models of the learner through iterative assessment24,35 and/or by using multiple questions to assess a single domain.26,27,29,35

This study has limitations. The absence of a pretest or no-intervention arm leaves open the possibility that no learning occurred during this intervention. However, this seems unlikely in light of the 12% improvement in test scores observed in a study using an earlier version of these modules.7 The setting of a single Internal Medicine training program limits generalizability to different contexts, learners, or topics. The time savings is modest, yet it compares favorably with other studies of efficiency in WBL5,7 and cumulatively could be educationally significant. We cannot verify that all recorded time was actually spent working on the module, nor is self-reported time likely to be accurate in all cases. However, analyses using computer-measured and self-reported time reached identical conclusions. We do not have reliable information on residents’ location while completing modules. Finally, suboptimal completion of the end-of-course evaluation impairs the interpretation of preference results, but sensitivity analyses showed similar findings.

The results of this study warrant confirmation before widespread implementation. Future research should continue to explore systems for adaptive WBL, including comparisons of different models for assessment and adaptation.19,38,39 As noted in a recent editorial, “Both evaluations in carefully controlled laboratory settings and field evaluations are necessary.”40 Because comparisons with alternate media (such as paper or face-to-face teaching) are invariably confounded,16 research will be most meaningful when comparing 1 WBL format against another.

In summary, adapting WBL to learners’ prior knowledge can enhance learning efficiency, saving time without an adverse effect on scores. Although not a solution to all educational challenges, WBL offers flexibility that may prove advantageous in many learning settings.

ACKNOWLEDGMENTS

We thank V. Shane Pankratz, Ph.D., for assistance with statistical analyses, and K. Sorensen for assistance in developing the modules. An abstract based on this work was presented at the 2007 meeting of the Association for Medical Education in Europe in Trondheim, Norway. The funding source was the Mayo Education Innovation Program.

Conflicts of Interest Statement: None disclosed.

References

- 1. Goitein L, Shanafelt TD, Wipf JE, Slatore CG, Back AL. The effects of work-hour limitations on resident well-being, patient care, and education in an internal medicine residency program. Arch Intern Med. 2005;165:2601–6.

- 2. Ryan J. Unintended consequences: the Accreditation Council for Graduate Medical Education work-hour rules in practice. Ann Intern Med. 2005;143:82–3.

- 3. McKimm J, Jollie C, Cantillon P. ABC of learning and teaching in medicine: Web based learning. BMJ. 2003;326:870–3.

- 4. Clark D. Psychological myths in e-learning. Med Teach. 2002;24:598–604.

- 5. Bell DS, Fonarow GC, Hays RD, Mangione CM. Self-study from web-based and printed guideline materials: a randomized, controlled trial among resident physicians. Ann Intern Med. 2000;132:938–46.

- 6. Spickard A III, Alrajeh N, Cordray D, Gigante J. Learning about screening using an online or live lecture: does it matter? J Gen Intern Med. 2002;17:540–5.

- 7. Cook DA, Dupras DM, Thompson WG, Pankratz VS. Web-based learning in residents’ continuity clinics: a randomized, controlled trial. Acad Med. 2005;80:90–7.

- 8. Fordis M, King JE, Ballantyne CM, et al. Comparison of the instructional efficacy of Internet-based CME with live interactive CME workshops: a randomized controlled trial. JAMA. 2005;294:1043–51.

- 9. Grundman J, Wigton R, Nickol D. A controlled trial of an interactive, Web-based virtual reality program for teaching physical diagnosis skills to medical students. Acad Med. 2000;75(10 Suppl):S47–9.

- 10. Cook DA, Thompson WG, Thomas KG, Thomas M, Pankratz VS. Impact of self-assessment questions and learning styles in Web-based learning: a randomized, controlled, crossover trial. Acad Med. 2006;81:231–8.

- 11. Spickard A, Smithers J, Cordray D, Gigante J, Wofford JL. A randomised trial of an online lecture with and without audio. Med Educ. 2004;38:787–90.

- 12. Chumley-Jones HS, Dobbie A, Alford CL. Web-based learning: sound educational method or hype? A review of the evaluation literature. Acad Med. 2002;77(10 Suppl):S86–93.

- 13. Kemper KJ, Amata-Kynvi A, Sanghavi D, et al. Randomized trial of an Internet curriculum on herbs and other dietary supplements for health care professionals. Acad Med. 2002;77:882–9.

- 14. Kerfoot BP, Conlin P, Travison T, McMahon GT. Web-based education in systems-based practice: a randomized trial. Arch Intern Med. 2007;167:361–6.

- 15. Friedman C. The research we should be doing. Acad Med. 1994;69:455–7.

- 16. Cook DA. The research we still are not doing: an agenda for the study of computer-based learning. Acad Med. 2005;80:541–8.

- 17. AAMC. Effective Use of Educational Technology in Medical Education: Summary Report of the 2006 AAMC Colloquium on Educational Technology. Washington, DC: Association of American Medical Colleges; 2007.

- 18. Chen C, Czerwinski M, Macredie RD. Individual differences in virtual environments—introduction and overview. J Am Soc Inf Sci. 2000;51:499–507.

- 19. Brusilovsky P. Adaptive educational systems on the World-Wide-Web: a review of available technologies. In: Proceedings of the Fourth International Conference in Intelligent Tutoring Systems, San Antonio, TX, August, 1998.

- 20. Brusilovsky P, Pesin L. Adaptive navigation support in educational hypermedia: an evaluation of the ISIS-Tutor. J Comp Inform Tech. 1998;6:27–38.

- 21. Specht M, Kobsa A. Interaction of domain expertise and interface design in adaptive educational hypermedia. In: Workshop on Adaptive Systems and User Modeling on the World Wide Web—Eighth International World Wide Web Conference, Toronto, Canada, May, 1999.

- 22. Weibelzahl S, Weber G. Adapting to prior knowledge of learners. Paper presented at: Second International Conference on Adaptive Hypermedia and Adaptive Web Based Systems, Malaga, Spain, 2002.

- 23. Brusilovsky P. Adaptive navigation support in educational hypermedia: the role of student knowledge level and the case for meta-adaptation. Br J Educ Technol. 2003;34:487–97.

- 24. Eliot CR, Williams KA, Woolf BP. An intelligent learning environment for advanced cardiac life support. In: Proceedings of the AMIA Annual Fall Symposium; 1996:7–11.

- 25. Casebeer L, Strasser SM, Spettell CM, et al. Designing tailored Web-based instruction to improve practicing physicians’ preventive practices. J Med Internet Res. 2003;5(3):20.

- 26. Kohlmeier M, McConathy WJ, Cooksey Lindell K, Zeisel SH. Adapting the contents of computer-based instruction based on knowledge tests maintains effectiveness of nutrition education. Am J Clin Nutr. 2003;77(4 Suppl):1025–7.

- 27. Romero C, Ventura S, Gibaja EL, Hervas C, Romero F. Web-based adaptive training simulator system for cardiac life support. Artif Intell Med. 2006;38(1):67–78.

- 28. Woo CW, Evens MW, Freedman R, et al. An intelligent tutoring system that generates a natural language dialogue using dynamic multi-level planning. Artif Intell Med. 2006;38(1):25–46.

- 29. Kabanza F, Bisson G, Charneau A, Jang T-S. Implementing tutoring strategies into a patient simulator for clinical reasoning learning. Artif Intell Med. 2006;38(1):79–96.

- 30. Cook DA, Beckman TJ, Thomas KG, Thompson WG. Introducing resident physicians to complexity in ambulatory medicine. Med Educ. (In press).

- 31. Fraenkel J, Wallen NE. How to Design and Evaluate Research in Education. New York, NY: McGraw-Hill; 2003.

- 32. Case SM, Swanson DB. Constructing Written Test Questions for the Basic and Clinical Sciences. 3rd ed. Philadelphia, PA: National Board of Medical Examiners; 2001.

- 33. Allison JJ, Kiefe CI, Wall T, et al. Multicomponent Internet continuing medical education to promote chlamydia screening. Am J Prev Med. 2005;28:285–90.

- 34. Day RS. Challenges of biological realism and validation in simulation-based medical education. Artif Intell Med. 2006;38(1):47–66.

- 35. Suebnukarn S, Haddawy P. Modeling individual and collaborative problem-solving in medical problem-based learning. User Model User-Adapt Interact. 2006;16:211–48.

- 36. Clark RE. Confounding in educational computing research. J Educ Comput Res. 1985;1:137–48.

- 37. MacKenzie JD, Greenes RA. The World Wide Web: redefining medical education. JAMA. 1997;278:1785–6.

- 38. De Bra P. Pros and cons of adaptive hypermedia in Web-based education. Cyberpsychol Behav. 2000;3:71–7.

- 39. Park OC, Lee J. Adaptive instructional systems. In: Jonassen DH, ed. Handbook of Research on Educational Communications and Technology. 2nd ed. Mahwah, NJ: Lawrence Erlbaum; 2004:651–84.

- 40. Crowley, Gryzbicki D. Intelligent medical training systems. Artif Intell Med. 2006;38(1):1–4.