Abstract

Recent controversies in medical research and the increasing reliance on randomized clinical trials to inform evidence-based practice have prompted coordinated efforts to standardize the reporting of trials and to register information about them, in the interest of consistency and transparency. This article describes the Consolidated Standards of Reporting Trials guidelines (D. G. Altman et al., 2001) and trial registration and discusses their implications for clinical and experimental research in psychopharmacology.

Keywords: clinical trials, registration, evidence, research methods

Randomized clinical trials (RCTs) are considered the premier methodological design in science, as they are logical extensions of experimental methods that represent the “closest science has come to a means for demonstrating causality” (Haaga & Stiles, 2000, p. 14). Well-designed RCTs, if properly executed, minimize experimenter (and clinician) bias by randomly assigning participants to groups that are expected to be similar with respect to both measured and unmeasured factors and that differ only in their exposure to an intervention; the outcomes of these groups are then compared statistically. Collectively, data from RCTs are considered essential in health intervention research (Jenicek, 2003; Sackett, 1989). Consequently, RCTs are very influential in the development of evidence-based practice and of clinical practice guidelines, which ideally consist of the “accepted evidence of clinical efficacy or effectiveness” (Davidson et al., 2003, p. 161).

The value placed on RCTs has increased in recent years, even though methodologists and critics have found considerable variation in the ways RCTs are reported in the peer-reviewed literature; these inconsistencies frustrate efforts to make clear-cut recommendations for clinical practice based on empirical evidence. In addition, recent public controversies concerning the available evidence from RCTs of popular medications have increased the demand for systematic standards in the reporting of evidence and for greater public transparency in the way RCTs are planned, conducted, and reported. These pressures have led to the current practice of registering clinical trials in a publicly accessible database and to the development and adoption of standards for reporting RCTs in peer-reviewed outlets.

Inconsistencies and Controversies in the Evidentiary Base

Despite the understandable preeminence of the RCT as a gold standard in science, unfortunate realities of the scholarly enterprise, and of the entities that invest in scientific endeavors, have undermined the confidence placed in the evidence available from RCTs. Academicians have long appreciated the “file drawer” problem that plagues their research: Intervention studies that do not produce significant results are difficult to publish in peer-reviewed journals. Editors and reviewers, the gatekeepers of the peer-review process, are typically more receptive to studies that demonstrate significant results and are disinclined to publish results suggesting that an innovative, novel intervention may be no better than (or even inferior to) a more familiar intervention (standard care) or no care at all (DeAngelis et al., 2004). Although this problem is often addressed in psychological research (and controlled for in most meta-analytic studies), the absence of such null findings from the extant literature can undermine the confidence placed in the published evidence used to determine and recommend best practices.

Moreover, the selective reporting and withholding of data from research sponsored by entities that have a pecuniary interest in how the data are used erode scientific integrity and the public trust (Committee on Clinical Trials Registries, 2006). During the legal proceedings brought against GlaxoSmithKline over increased suicide risk among children prescribed paroxetine (Paxil), internal memos from 1998 indicated that data implicating such risks had been suppressed (Rennie, 2004). In addition, data from RCTs that found no effects for the drug were withheld, a decision the company deemed commercially prudent (Kondro, 2004). The withheld material included unpublished reports requested by researchers who were conducting a systematic review of the available data to develop practice guidelines (Whittington et al., 2004).

In 2004, the International Committee of Medical Journal Editors (ICMJE) published a statement in many prestigious medical journals advocating the voluntary public registration of RCTs (DeAngelis et al., 2004). In this statement, the committee acknowledged the vested interests, academic biases, and market forces (including insurance coverage policies and reimbursement issues) that influence the selective reporting of RCTs. The statement grew out of an ongoing collaboration among medical journal editors, methodologists, statisticians, and epidemiologists who recognized the inconsistencies and flaws across RCTs in the literature. These collaborative efforts had already produced a common list of items that, if reported consistently across RCTs, would improve the quality of the evidence they yield (Altman et al., 2001). In the wake of the public relations fiasco surrounding the GlaxoSmithKline litigation (and similar problems with Merck and the suppressed evidence of adverse side effects associated with Vioxx), the registration of clinical trials for public review and record keeping received support from policymakers, academicians, and private industry (Rennie, 2004). The ICMJE member journals agreed to require the registration of any RCT submitted for review and possible publication (DeAngelis et al., 2004). To improve the quality and consistency of reporting of the essential features of RCTs, the ICMJE also endorsed the Consolidated Standards of Reporting Trials (CONSORT; Altman et al., 2001).

The CONSORT Guidelines

The CONSORT guidelines specify details that should be explicated in every RCT submitted for publication in many medical journals. Although most psychology journals do not require public registration or compliance with CONSORT standards as a prerequisite for publication, several notable outlets require that CONSORT standards be addressed as a condition of publication (e.g., Health Psychology, Journal of Consulting and Clinical Psychology), and others recommend elements of CONSORT (and registration) to assist the review process (e.g., Journal of Pediatric Psychology, Rehabilitation Psychology). Interdisciplinary journals that are actively supported by psychologists have been more receptive to the CONSORT guidelines, and some (e.g., Annals of Behavioral Medicine) have added items pertinent to psychological interventions (Davidson et al., 2003).

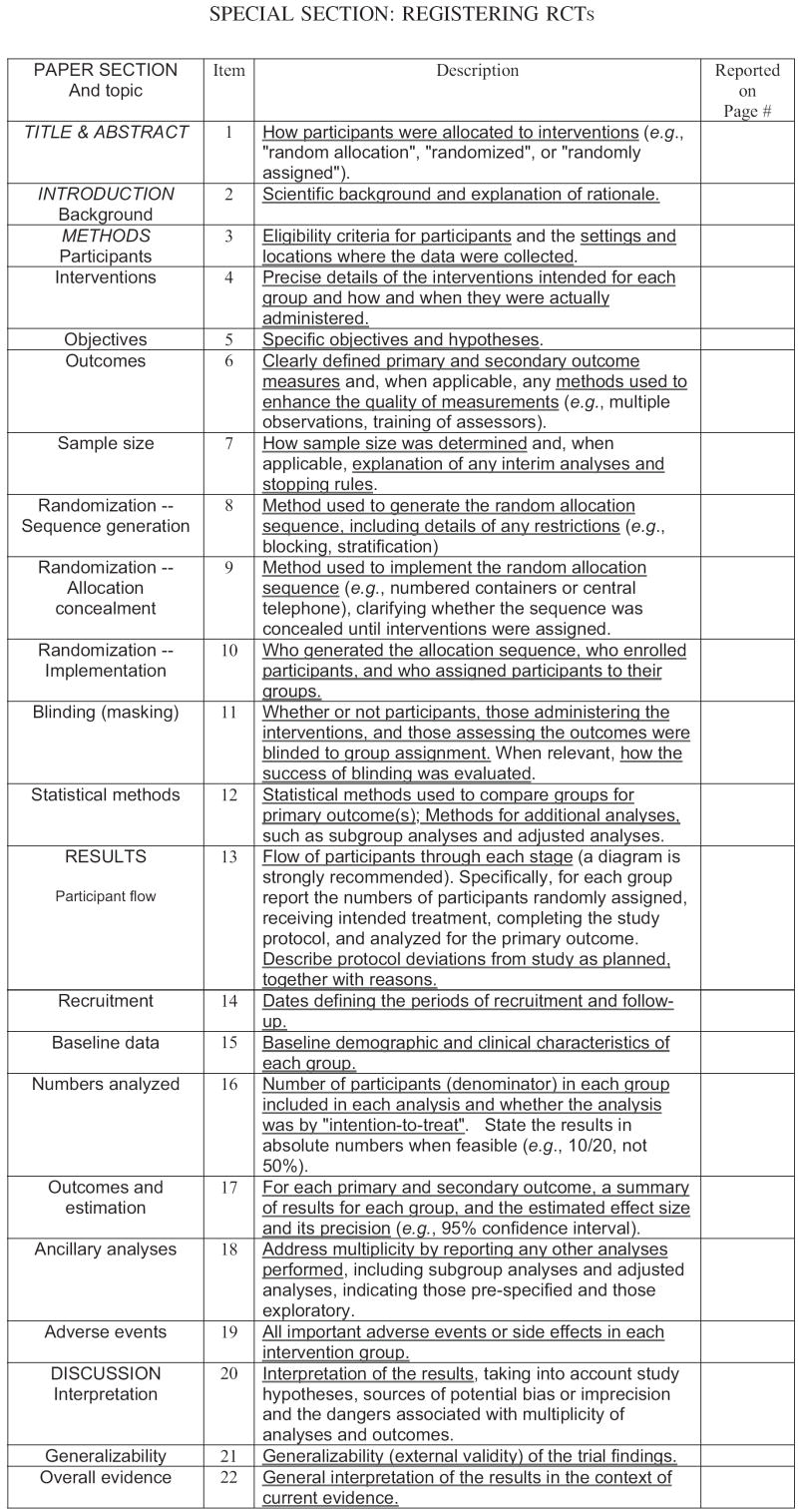

Figure 1 displays the 22 checklist items that should be reported and documented upon submission for peer review (available at http://www.consort-statement.org). The checklist specifies where each item should appear within the manuscript; notably, most items parallel the basic sections of a manuscript (background, methods, results, discussion). The website provides explanations for each item to facilitate accurate reporting (see also Altman et al., 2001; Davidson et al., 2003). Most of the standards pertain to the internal validity of the study. The word “randomized” is required in both the title and the abstract to improve indexing and retrieval of the study in literature searches. Journal editors may require authors to submit a completed checklist with their manuscript, which is then evaluated by the peer reviewers who critique the submission (Kaplan, Trudeau, & Davidson, 2004).

Figure 1.

The CONSORT E-Checklist of items to include when reporting a randomized trial (available at http://www.consort-statement.org).

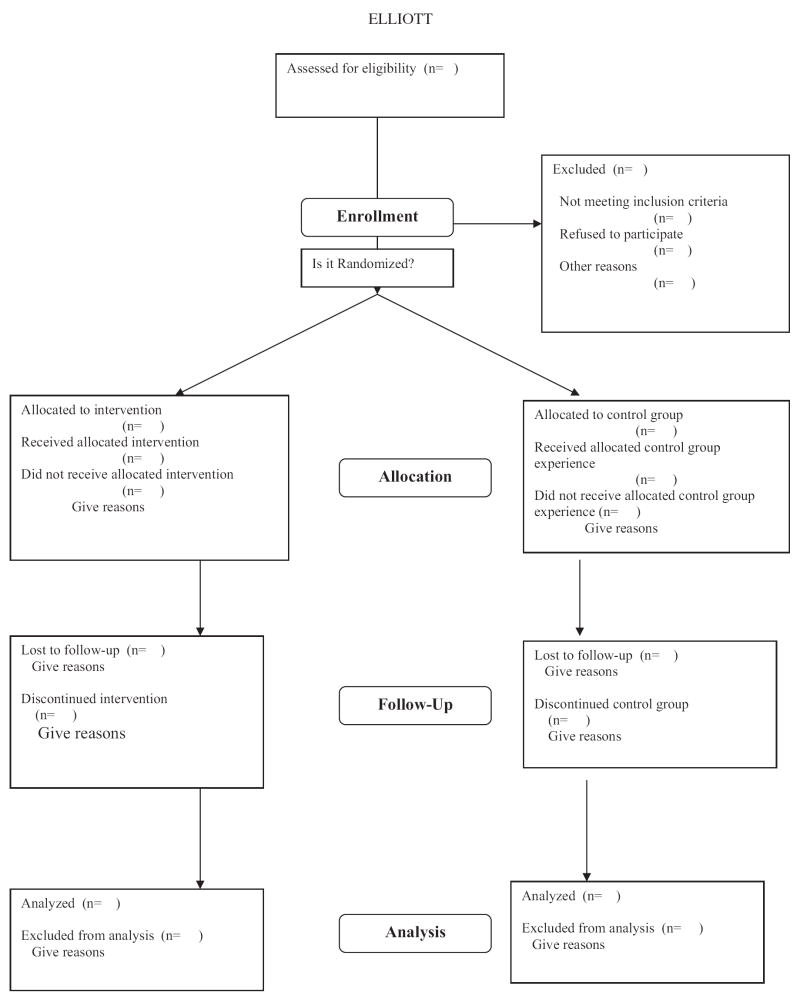

Figure 2 demonstrates an elegant and concise method for graphically depicting the process of determining eligibility, recording reasons for exclusion and for refusal to participate, assigning participants in a two-group design, and tracking attrition in each group over the duration of a study. This information is critical for appreciating the representativeness of a sample and for detecting possible inequities in the attrition rates of the treatment and control groups. The flow diagram is recommended as an efficient way to convey this information in a manuscript: When reported in the text (or in tables), this information can be dense, and comparisons between groups may be difficult to appreciate.

Figure 2.

Flowchart adapted from the CONSORT E-Flowchart (which is available at http://www.consort-statement.org).
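The bookkeeping behind a flow diagram such as Figure 2 can be made concrete. The following Python sketch is a hypothetical illustration, not part of CONSORT or of any CONSORT tool: the class names, fields, and counts are invented for demonstration. It records how many people were assessed, excluded (by reason), allocated to each arm, and lost during follow-up; checks that the allocated totals reconcile with the number randomized; and reports the difference in attrition rates between arms, the kind of inequity the diagram is meant to expose.

```python
from dataclasses import dataclass, field


@dataclass
class ArmFlow:
    """Counts for one arm of a two-group trial (hypothetical structure)."""
    name: str
    allocated: int
    lost_to_follow_up: int = 0
    discontinued: int = 0

    def analyzed(self) -> int:
        # Participants remaining for analysis after attrition.
        return self.allocated - self.lost_to_follow_up - self.discontinued

    def attrition_rate(self) -> float:
        # Share of allocated participants who did not complete the study.
        return (self.lost_to_follow_up + self.discontinued) / self.allocated


@dataclass
class TrialFlow:
    """Enrollment and allocation counts for a flow diagram (hypothetical)."""
    assessed_for_eligibility: int
    excluded: dict[str, int] = field(default_factory=dict)  # reason -> count
    arms: list[ArmFlow] = field(default_factory=list)

    def randomized(self) -> int:
        return self.assessed_for_eligibility - sum(self.excluded.values())

    def check(self) -> None:
        # Every randomized participant should appear in exactly one arm.
        allocated = sum(arm.allocated for arm in self.arms)
        if allocated != self.randomized():
            raise ValueError(
                f"{self.randomized()} randomized but {allocated} allocated"
            )

    def attrition_gap(self) -> float:
        # Largest difference in attrition rates between any two arms.
        rates = [arm.attrition_rate() for arm in self.arms]
        return max(rates) - min(rates)


if __name__ == "__main__":
    # Invented counts, for illustration only.
    flow = TrialFlow(
        assessed_for_eligibility=200,
        excluded={"did not meet inclusion criteria": 60,
                  "declined to participate": 40},
        arms=[
            ArmFlow("treatment", allocated=50, lost_to_follow_up=5, discontinued=3),
            ArmFlow("control", allocated=50, lost_to_follow_up=2, discontinued=1),
        ],
    )
    flow.check()
    for arm in flow.arms:
        print(arm.name, arm.analyzed(), round(arm.attrition_rate(), 2))
    print("attrition gap:", round(flow.attrition_gap(), 2))
```

With these invented counts, 42 treatment and 47 control participants remain for analysis, and attrition differs by 10 percentage points (16% versus 6%), a gap that a reader can see at a glance in the diagram but could easily miss in running text.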

Subsequent research has found that the overall quality and accuracy of RCT reports have improved substantially in journals that have adopted the CONSORT guidelines (Bennett, 2005; Kane, Wang, & Garrard, 2007; Moher, Jones, & Lepage, 2001), although some data suggest that the ICMJE journals have not rigorously adhered to the CONSORT standards (Mills, Wu, Gagnier, & Devereaux, 2005).

Registering Clinical Trials

The ICMJE argued that a public registry of current clinical trials is imperative for full transparency and public confidence in the process, as it guards against selective reporting (DeAngelis et al., 2005). Several registries have been developed (including some in the private sector). The ICMJE did not endorse a specific registry, but it stipulated that an acceptable registry must meet several important criteria: It should be accessible to the public, free of charge to registrants, open to all prospective registrants, and managed by a nonprofit organization, and it should have a means of verifying the validity of the registered information (DeAngelis et al., 2004, p. 1364). The National Library of Medicine (within the National Institutes of Health) operates the only registry to date that meets these criteria, at www.clinicaltrials.gov. All of the journals that belong to the ICMJE expect a clinical trial to be properly registered in order to be published in a member journal (for a complete listing of these journals, see http://www.icmje.org). In 2005, the ICMJE announced that its editors would consider unregistered trials on an individual basis if the author(s) had a compelling rationale that could “convince the editor” of their reasons; this practice was to be reviewed by the ICMJE in 2007 (DeAngelis et al., 2005, p. 146).

Registration requires researchers to record key features of their study for perusal in the electronic database. The clinical trials registry currently requires the following information (DeAngelis et al., 2005):

The funding source(s)

Secondary source(s) of funding

Responsible contact person for the trial

Research contact person (for scientific inquiries)

The title of the study

The official scientific title of the study (to include the name of the intervention, the condition under investigation, and the major outcomes)

Research ethics review information

Condition under investigation (e.g., asthma, depression)

Intervention(s) (to include a description and duration of the intervention and of the treatment group and the control group; for commercially available drugs and other products the generic name may be entered, but for other drugs or products a company serial number may be used)

Key inclusion and exclusion criteria

Study type (including details about randomization, double-blind or single-blind, types of controls, and type of assignment)

Anticipated trial start date

Target sample size

Recruitment status

Primary outcome (to include the times at which this will be assessed in the study)

Key secondary outcomes (which may be under investigation, as detailed in the approved study protocol)

When a study is registered, it is assigned a unique trial number (which can be cited in subsequent reports and manuscripts), and the registration date is recorded. If a sponsoring federal agency is listed, this information is validated by the agency. Recently, the ICMJE recommended that the registry make the results of all trials public, either through links to published reports or through other mechanisms that disseminate information via public clearinghouses (DeAngelis et al., 2005).
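A registry record of this kind is essentially a fixed set of required fields. As a minimal sketch, assuming nothing about how any actual registry stores its data, the Python dataclass below mirrors the items listed above (the field names are hypothetical paraphrases, not the schema of ClinicalTrials.gov or of any other registry) and flags items that were left blank before submission.

```python
from dataclasses import dataclass, fields
from datetime import date
from typing import Optional


@dataclass
class RegistryEntry:
    # Hypothetical field names paraphrasing the registry items listed above;
    # this is not the data schema of any actual registry.
    funding_sources: str
    secondary_funding_sources: str
    responsible_contact: str
    research_contact: str
    public_title: str
    scientific_title: str
    ethics_review: str
    condition: str
    interventions: str
    eligibility_criteria: str
    study_type: str
    anticipated_start: Optional[date]
    target_sample_size: Optional[int]
    recruitment_status: str
    primary_outcome: str
    key_secondary_outcomes: str


def missing_items(entry: RegistryEntry) -> list[str]:
    """Return the names of items left blank (None or an empty string)."""
    missing = []
    for f in fields(entry):
        value = getattr(entry, f.name)
        if value is None or (isinstance(value, str) and not value.strip()):
            missing.append(f.name)
    return missing


if __name__ == "__main__":
    # Hypothetical draft entry in which every item but two has been filled in.
    draft = {f.name: "to be completed" for f in fields(RegistryEntry)}
    draft["anticipated_start"] = date(2007, 9, 1)
    draft["target_sample_size"] = None
    draft["key_secondary_outcomes"] = ""
    print(missing_items(RegistryEntry(**draft)))
```

Run on the hypothetical draft, the check reports `target_sample_size` and `key_secondary_outcomes` as incomplete. A real registry would also validate the content of each field (for example, that the scientific title names the intervention, condition, and major outcomes), which this sketch does not attempt.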

The ICMJE has also broadened the range of trials that should be registered. Initially, the dialogue concerned the registration of “medical” interventions that may affect a “health outcome.” However, the ICMJE definition of a “clinical trial” encompasses any study that randomly assigns participants to an intervention group and a control group, and the “intervention” may consist of drugs, behavioral treatments, medical devices, or surgical procedures (DeAngelis et al., 2005). Given these parameters, well-designed studies of psychological interventions clearly fall within the definition of clinical trials that should be registered if the researchers are committed to public disclosure and advocacy or wish to publish in any of the ICMJE member journals.

Benefits of CONSORT and Trial Registration

Proponents of CONSORT have consistently maintained that the guidelines are a work in progress and thus open to revision. The Society of Behavioral Medicine recommended additional items for contributors to its journal (Annals of Behavioral Medicine) so that concerns unique to psychological interventions (and their external validity) would be addressed (Davidson et al., 2003), including the following:

Specify the training of the treatment providers.

Describe the supervision of these providers.

Describe the treatment allegiance (preferences) of the providers.

Describe the integrity of the treatment and the monitoring of the treatment and adherence.

Treatment fidelity, implementation, and adherence issues have also been addressed in the public health literature (Mayo-Wilson, 2007). Representatives of the CONSORT Group have recommended improved and more detailed reporting of negative side effects, or “harms” (Ioannidis et al., 2004); only one item in the current CONSORT guidelines addresses safety. An epidemiological study detected increasing problems with participant attrition in studies that adhered to the CONSORT guidelines and urged greater detail in reporting the reasons for attrition (Kane et al., 2007).

Although psychological outlets have not widely adopted the CONSORT guidelines, there is a sense of accomplishment among those that have (McGrath, Stinson, & Davidson, 2003). These proponents find CONSORT applicable to psychosocial intervention research, and they cite benefits such as increased accuracy and improved methodological design that will eventually enhance the evidence base (Chambless & Ollendick, 2001; McGrath et al., 2003).

Indeed, a cursory review of the few RCTs published in Experimental and Clinical Psychopharmacology suggests that these reports could have been substantially improved by the use of the CONSORT guidelines. In the study of a therapeutic workplace intervention for individuals in methadone maintenance clinics (Knealing, Wong, Diemer, Hampton, & Silverman, 2006), for example, there was a considerable drop-off from the number of individuals screened (N = 544) to the number who qualified for the study and were invited to participate (n = 89), the number who qualified and were invited to sign the main consent form (n = 50), and the number who eventually signed the consent form (n = 47). Although the report stated why 374 individuals did not qualify for participation, it did not give the exact reasons why the others did not qualify. The CONSORT diagram would have required greater specificity in reporting who was excluded from participation and why, and it would have presented these details economically.
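The drop-off in this example is easy to lose track of in prose, which is exactly what a flow diagram prevents. The short Python sketch below tallies the losses between the four stages using only the counts quoted above; the stage labels are paraphrases, and the last step rests on an assumption made purely for illustration, namely that the 374 individuals whose ineligibility was explained all belong to the first step (screened but not qualified).

```python
# Counts quoted in the text for Knealing et al. (2006); labels are paraphrased.
STAGES: list[tuple[str, int]] = [
    ("screened", 544),
    ("qualified and invited to participate", 89),
    ("invited to sign the main consent form", 50),
    ("signed the consent form", 47),
]

# Number of individuals whose reasons for not qualifying were reported.
REASONS_REPORTED = 374


def stage_losses(stages: list[tuple[str, int]]) -> list[tuple[str, str, int]]:
    """Return (from_stage, to_stage, number_not_carried_forward) for each step."""
    return [
        (prev_name, name, prev_n - n)
        for (prev_name, prev_n), (name, n) in zip(stages, stages[1:])
    ]


if __name__ == "__main__":
    losses = stage_losses(STAGES)
    for src, dst, lost in losses:
        print(f"{src} -> {dst}: {lost}")

    # Assumption (illustrative): all 374 explained cases fall in the first step.
    first_step_loss = losses[0][2]
    print("first-step exclusions without a reported reason:",
          first_step_loss - REASONS_REPORTED)
```

The tally shows 455, 39, and 3 individuals not carried forward at the three steps. Under the stated assumption, 81 of the 455 people screened out at the first step would lack a documented reason; a CONSORT diagram would have made each of these numbers, and the reasons behind them, explicit.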

Issues and Concerns

However, this study also illustrates some of the difficulties encountered in applying the CONSORT guidelines and, more specifically, in strictly adhering to the expectations of a trial registry. As a practical matter, researchers should become familiar with the CONSORT guidelines before data collection begins, to ensure that these items are addressed in the study protocol; this information may be difficult, if not impossible, to collect in a study that is already in progress or completed. To be included in the clinical trials registry, a study must be registered before participant enrollment begins (DeAngelis et al., 2004).
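The registration requirement reduces to a date comparison. The following Python sketch is illustrative only: the function names are hypothetical, and it assumes that registering on the same day as the first enrollment satisfies the rule, a boundary case that individual journals may treat differently.

```python
from datetime import date


def registered_prospectively(registration: date, first_enrollment: date) -> bool:
    """True if the trial was registered no later than the first enrollment.

    Assumption: same-day registration is treated as prospective.
    """
    return registration <= first_enrollment


def days_registered_late(registration: date, first_enrollment: date) -> int:
    """Days by which registration trailed the first enrollment (0 if on time)."""
    return max(0, (registration - first_enrollment).days)


if __name__ == "__main__":
    # Hypothetical dates for two trials.
    print(registered_prospectively(date(2006, 1, 10), date(2006, 2, 1)))
    print(days_registered_late(date(2006, 3, 1), date(2006, 2, 1)))
```

In the hypothetical example, the first trial was registered in time, whereas the second was registered 28 days after enrollment began and, under the rule described above, would not qualify.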

If the researchers in that study had adhered to the CONSORT standards, we might have a better idea of how representative the sample was of the larger population at these clinics. But, in light of the relatively small number of individuals who did participate, it is unclear how advance registration might have affected this report. Some ICMJE journals require strict adherence to the methods and analytic plans stated at registration; some require, upon submission of the manuscript for review, documentation of the original study design and analyses and of any deviations from the planned analyses. The registry’s stated intention is that this information be available to the general public upon request. Moreover, secondary analyses (particularly by individual sites participating in a multisite clinical trial) are strongly discouraged because they are considered misleading, although an oversight board representing the participating sites may grant permission for them (Ehringhaus & Korn, 2006).

This rigid adherence to the ground rules of the trial registry has been criticized for overregulating research and for discouraging and inhibiting scientific inquiry (Horton, 2006, p. 1633). These issues are particularly salient in the study of interventions for individuals who live with health conditions, such as substance abuse, chronic disease, and disability, that are influenced by a multitude of contextual, social, and personal factors (Tucker & Roth, 2006). In their thought-provoking essay, Tucker and Roth (2006) call for greater recognition of the ways in which reliance on RCTs as the primary source of evidence limits creativity, ignores the realities of the multiply determined problems associated with chronic health conditions, and neglects the potential of modern, sophisticated analytic tools that can simultaneously examine intervention effects within the context of powerful, mitigating contextual and personal factors. Taken literally, then, registry rules would discourage, if not thwart, secondary analyses that test a contemporary psychological theory by means of a more complex model than the one originally hypothesized and registered. Strict adherence to RCT registration may very well diminish the role of psychological theory in these studies.

In the Knealing et al. (2006) study, we can appreciate the clinical and contextual conditions that impinged upon the recruitment of eligible (and consenting) participants. The relatively small number who eventually agreed to participate also reflects a real-life concern: Eligible participants who volunteer for a study, and agree to be randomized into a treatment or a control group, may have unique, unmeasured characteristics that are not representative of the larger, more heterogeneous population of interest (Tucker & Roth, 2006). Furthermore, if researchers are bound to adhere rigidly to the original designs and analytic plans entered a priori into the registry, research on understudied, low-incidence problems will likely suffer. For example, spinal cord injury is a relatively low-incidence, high-cost disability. Despite long-standing recognition of the problem of depression among persons with spinal cord injuries and the routine use of antidepressant medications, the extant literature contains no RCTs of their effectiveness in this population. Nevertheless, clinical practice guidelines give antidepressant therapy the highest possible grade of evidence and recommend it as the preferred treatment option (Elliott & Kennedy, 2004). Other topical issues, such as the use of propranolol to treat agitation among persons with traumatic brain injuries, have been difficult to study because of problems in recruiting eligible participants who can reasonably commit to the protocol for an extended period (Meythaler, Elliott, Chen, & Novack, 2006). In general, RCTs favor time-limited, discrete interventions for high-incidence problems that are relatively unencumbered by the contextual, personal, and social factors that can influence attrition, so that a large number of data points will be available for the original analytic plans (Tucker & Roth, 2006).

Recommendations

Ideally, RCTs serve an honored role in psychological science as the most elegant technique for testing cause-and-effect models and for gaining insight into mechanisms of change. These features are essential for refining theoretical explanations of behavior. Yet the current demand for standardizing and registering RCTs may inadvertently diminish the value of theory-driven research in favor of outcomes-based research that informs practice; in this framework, the concern for testing and refining theory is secondary to the need to inform clinical practice. Certainly, theory-driven research will occur in this context, but it is noteworthy that the standards place a premium on methodological details and express little interest in theoretical models (which may be difficult to explain to the lay public and may be of little interest to the prescribing clinician).

It is also somewhat disconcerting to witness the ascendancy of the RCT as the primary evidentiary basis for practice at the expense of other methodologies. We have known for some time that psychological interventions are generally supported across methodologies (Wampold, 2001), and a meta-analysis of methodologically diverse studies of medical treatments found similar effect sizes for experimental and observational studies (Concato, Shah, & Horwitz, 2000). In fact, these authors explicitly warned that it is erroneous to assume that designs other than RCTs produce misleading results: This false assumption misinforms clinicians and patients, misdirects the training of students and health care professionals, and discourages the use of alternative techniques to the detriment of the science. Tucker and Roth (2006) persuasively argue for evidentiary pluralism in understanding the empirical base for practice and stress the ongoing need for effectiveness studies that embrace clinical realities and the contextual and personal factors that are notoriously excluded from clinical trials, which are typically of relatively brief duration and conducted under fairly artificial conditions.

In light of the evidence supporting the use of CONSORT guidelines, it seems prudent for researchers to use the guidelines in an a priori fashion in developing research protocols and for monitoring recruitment, attrition, and data management. The guidelines may be especially helpful in training students to be aware of methodological details that are essential in accurate reporting (Bennett, 2005). The CONSORT E-Flowchart is particularly informative; it efficiently conveys vital details to a reader. Experimental and Clinical Psychopharmacology is encouraged to require the CONSORT E-Flowchart in studies that report RCTs. The use of the CONSORT E-Checklist could be required or left to the editor’s discretion for review purposes.

Trial registration has not been required by any leading psychological journal (as of this writing). For any research project that features an RCT, the primary issue may be more practical than scholarly: Does the study have the potential to merit publication in any of the ICMJE member journals? If so, it is imperative that the trial be registered before the study commences. Funding agencies, too, may expect registration, so the intention to register should be explicitly stated in grant proposals. If deviations do occur over the life of the project (or if secondary analytic strategies are implemented), the resulting studies may be submitted to non-ICMJE outlets for consideration.

A related issue that plagues many psychological journals concerns the visibility and availability of published research to a larger audience. The ICMJE member journals are readily available in various electronic databases (e.g., MEDLINE, PubMed) because they are indexed in Index Medicus. Several journals published by the American Psychological Association are included in this index (e.g., Journal of Consulting and Clinical Psychology, Health Psychology); inclusion ensures greater visibility and exposure to a larger international audience. Experimental and Clinical Psychopharmacology should consider applying for admission to Index Medicus, as this would increase the likelihood that well-designed RCTs appearing in the journal would be retrieved in searches and included in subsequent reviews of literature germane to evidence-based practice.

A larger, more global problem concerns the decreasing recognition of the value of converging evidence across methodological designs. To a certain degree, proponents have addressed this problem by offering standards, parallel to CONSORT, for quasi-experimental designs: The Transparent Reporting of Evaluations with Nonrandomized Designs (TREND) guidelines were developed to standardize the features that should be reported in such studies (available at http://www.trend-statement.org/asp/trend.asp). In general, it behooves the profession to revisit the value of evidentiary pluralism and to consider ways to promote an expanded view of science that recognizes the need for well-executed studies of low-incidence problems that might otherwise be neglected. Researchers should also reconsider the value of RCTs in clinical areas in which it may be difficult to operationalize a control group experience, in part because a “routine standard of care” may not exist or a reasonable no-treatment comparison group cannot be formed. In these cases, placing greater value on quasi-experimental designs, program evaluation (“effectiveness”) studies, and advanced single-case designs may be warranted.

Acknowledgments

This article was supported in part by National Institute on Disability and Rehabilitation Research Grants H133N5009 and H133B980016A, National Institute of Child Health and Human Development Grant T32 HD07420, and Centers for Disease Control and Prevention–National Center for Injury Prevention and Control Grant R49/CE000191 to Timothy R. Elliott. Its contents are solely the responsibility of the author and do not necessarily represent the official views of the funding agencies.

References

- Altman DG, Schulz KF, Moher D, Egger M, Davidoff R, Elbourne D, et al. The revised CONSORT statement for reporting randomized trials: Explanation and elaboration. Annals of Internal Medicine. 2001;134:663–694. doi: 10.7326/0003-4819-134-8-200104170-00012.

- Bennett JA. The consolidated standards of reporting trials (CONSORT): Guidelines for reporting randomized trials. Nursing Research. 2005;54:128–132. doi: 10.1097/00006199-200503000-00007.

- Chambless DL, Ollendick TH. Empirically-supported psychological interventions: Controversies and evidence. Annual Review of Psychology. 2001;52:685–716. doi: 10.1146/annurev.psych.52.1.685.

- Committee on Clinical Trials Registries. Developing a national registry of pharmacologic and biologic clinical trials: Workshop report. Washington, DC: National Academies Press; 2006.

- Concato J, Shah N, Horwitz R. Randomized, controlled trials, observational studies, and the hierarchy of research designs. New England Journal of Medicine. 2000;342:1887–1892. doi: 10.1056/NEJM200006223422507.

- Davidson KW, Goldstein M, Kaplan R, Kaufmann P, Knatterud G, Orleans T, et al. Evidence-based medicine: What is it and how do we achieve it? Annals of Behavioral Medicine. 2003;26:161–171. doi: 10.1207/S15324796ABM2603_01.

- DeAngelis C, Drazen J, Frizelle F, Haug C, Hoey J, Horton R, et al. Clinical trial registration: A statement from the International Committee of Medical Journal Editors. Journal of the American Medical Association. 2004;292:1363–1364. doi: 10.1001/jama.292.11.1363.

- DeAngelis C, Drazen J, Frizelle F, Haug C, Hoey J, Horton R, et al. Is this clinical trial fully registered? A statement from the International Committee of Medical Journal Editors. Annals of Internal Medicine. 2005;143:146–148. doi: 10.7326/0003-4819-143-2-200507190-00016.

- Ehringhaus S, Korn D. Principles for protecting integrity in the conduct and reporting of clinical trials. Washington, DC: Association of American Medical Colleges; 2006 Jan.

- Elliott T, Kennedy P. Treatment of depression following spinal cord injury: An evidence-based review. Rehabilitation Psychology. 2004;49:134–139.

- Haaga DA, Stiles W. Randomized clinical trials in psychotherapy research: Methodology, design, and evaluation. In: Snyder CR, Ingram RE, editors. Handbook of psychological change. New York: John Wiley & Sons; 2000. pp. 14–39.

- Horton R. Trial registers: Protecting patients, advancing trust. Lancet. 2006;367:1633–1635. doi: 10.1016/S0140-6736(06)68709-6.

- Ioannidis J, Evans S, Gøtzsche P, O’Neill R, Altman D, Schulz K, Moher D. Better reporting of harms in randomized trials: An extension of the CONSORT statement. Annals of Internal Medicine. 2004;141:781–788. doi: 10.7326/0003-4819-141-10-200411160-00009.

- Jenicek M. Foundations of evidence-based medicine. New York: Parthenon Publishing Group; 2003.

- Kane RL, Wang J, Garrard J. Reporting in randomized clinical trials improved after adoption of the CONSORT statement. Journal of Clinical Epidemiology. 2007;60:241–249. doi: 10.1016/j.jclinepi.2006.06.016.

- Kaplan R, Trudeau K, Davidson K. New policy on reports of randomized clinical trials. Annals of Behavioral Medicine. 2004;27:81. doi: 10.1207/s15324796abm2702_1.

- Knealing T, Wong C, Diemer K, Hampton J, Silverman K. A randomized controlled trial of the therapeutic work-place for community methadone patients: A partial failure to engage. Experimental and Clinical Psychopharmacology. 2006;14:350–360. doi: 10.1037/1064-1297.14.3.350.

- Kondro W. Drug company experts advised staff to withhold data about SSRI use in children. Canadian Medical Association Journal. 2004;170:783. doi: 10.1503/cmaj.1040213.

- Mayo-Wilson E. Reporting implementation in randomized trials: Proposed additions to the consolidated standards of reporting trials statement. American Journal of Public Health. 2007;97:11–14. doi: 10.2105/AJPH.2006.094169.

- McGrath PJ, Stinson J, Davidson K. Commentary: The Journal of Pediatric Psychology should adopt the CONSORT statement as a way of improving the evidence base in pediatric psychology. Journal of Pediatric Psychology. 2003;28:169–171. doi: 10.1093/jpepsy/jsg002.

- Meythaler J, Elliott T, Chen Y, Novack T. Treatment of agitation with propranolol. Journal of Head Trauma Rehabilitation. 2006;21:416.

- Mills EJ, Wu P, Gagnier J, Devereaux P. The quality of randomized trial reporting in leading medical journals since the revised CONSORT statement. Contemporary Clinical Trials. 2005;26:480–487. doi: 10.1016/j.cct.2005.02.008.

- Moher D, Jones A, Lepage L. Use of the CONSORT statement and quality of reports of randomized trials: A comparative before-and-after evaluation. Journal of the American Medical Association. 2001;285:1992–1995. doi: 10.1001/jama.285.15.1992.

- Rennie D. Trial registration: A great idea switches from ignored to irresistible. Journal of the American Medical Association. 2004;292:1359–1362. doi: 10.1001/jama.292.11.1359.

- Sackett DL. Rules of evidence and clinical recommendations on the use of antithrombotic agents. Chest. 1989;95:2S–4S.

- Tucker JA, Roth D. Extending the evidence hierarchy to enhance evidence-based practice for substance abuse disorders. Addiction. 2006;101:918–932. doi: 10.1111/j.1360-0443.2006.01396.x.

- Wampold BE. The great psychotherapy debate: Models, methods, and findings. Mahwah, NJ: Lawrence Erlbaum Associates, Inc; 2001.

- Whittington CJ, Kendall T, Fonagy P, Cottrell D, Cotgrove A, Boddington E. Selective serotonin reuptake inhibitors in childhood depression: Systematic review of published versus unpublished data. Lancet. 2004;363:1341–1345. doi: 10.1016/S0140-6736(04)16043-1.