Abstract

How we decide whether a course of action is worth undertaking is largely unknown. Recently, neuroscientists have been turning to ecological approaches to address this issue, examining how animals evaluate the costs and benefits of different options. We present here evidence from rodents and monkeys that demonstrates the degree to which they take into account work and energetic requirements when deciding what responses to make. These calculations appear to be critically mediated by the anterior cingulate cortex (ACC) and mesolimbic dopamine (DA) pathways. Damage to either biases animals towards options that are easily obtained but yield relatively smaller reward, rather than alternatives that require more work but result in greater reward. The evaluation of such decisions appears to be carried out in systems independent of those involved in delay discounting. We suggest that top-down signals from ACC to nucleus accumbens (NAc) and/or midbrain DA cells may be vital for overcoming effort-related response costs.

Keywords: Anterior cingulate cortex, Dopamine, Cost-benefit decision making, Rat, Monkey, Effort

1. Introduction

One of the central questions in the behavioral sciences is how animals decide what is worth doing. Importantly, the most advantageous behavior is seldom constant; instead, it depends on a number of situational variables and on the internal state of the animal (Stephens & Krebs, 1986). Until recently, however, neuroscientists have tended to investigate the selection of behavior in situations that offer a limited range of responses and a single well-defined outcome. Frequently, there is no scope for considering whether current motivation might play a part in performance, rendering one course of action appropriate in one state and another in a different one. While this kind of research has allowed scientists to make important progress in determining the neural mechanisms of selection and evaluation of goal-oriented actions (Miller & Cohen, 2001; Schall & Thompson, 1999), such an approach may not faithfully model the typical scenario faced by animals in real-world circumstances. In such circumstances there are multiple potential courses of action to choose between, each with its own set of costs for achieving particular consequences.

On the vast majority of occasions, our survival is not critically dependent on the decisions we make. On a day-to-day basis, while our choices may have far-reaching consequences, for most people in rich countries they tend to be confined to issues of immediate concern, such as what we should eat today, rather than what we need to do in order to gather sufficient sustenance to last through the night. It is therefore perhaps unsurprising that psychology and neuroscience have tended to concentrate on characterizing how behavioral acts relate to proximal rewards. They have tried to delineate the neural mechanisms linking sensory processes with responses and the selection of voluntary actions, with little consideration for what long-term goals an animal might possess. Nevertheless, suppose we start from the relatively uncontroversial position that the brain is the result of its evolutionary history and that this history has been driven by natural selection. It then seems likely that many aspects of overt behavior are also the products of selective, evolutionary processes (Kacelnik, 1997). This approach of analyzing behavior in terms of its costs and benefits to an individual’s overall fitness has been pioneered by behavioral ecologists over the past fifty years, building on the principles of Niko Tinbergen (Stephens & Krebs, 1986; Tinbergen, 1951). Their work has generally focused purely at the behavioral level, even actively avoiding questions of mechanism (with the exception of Tinbergen himself). Nonetheless, this methodology is increasingly being courted by neuroscientists as a novel way of approaching the link between brain and behavior (Glimcher, 2002; Platt, 2002).

In this paper, we will focus on one particular type of cost-benefit decision making: deciding whether it is worth expending additional work to receive a larger reward. In the first section, we will consider evidence that animals do weigh up the amount of effort or persistent responding required by the available options when deciding which course of action to take. We will then discuss findings from a cost-benefit T-maze paradigm. These findings indicate a vital role for the anterior cingulate cortex (ACC) and the mesolimbic dopamine (DA) systems in allowing animals, when conditions are favorable, to overcome effort-related response costs to obtain greater rewards (Salamone, Cousins, & Bucher, 1994; Walton, Bannerman, & Rushworth, 2002).

2. Behavioral analyses of effort-related decision making

In many natural circumstances, animals have to assess the value of putting in extra work to obtain greater benefit. One of the main issues that normative models of animal decision making try to predict is how animals should allocate energetic expenditure and time in an optimal manner, assuming that factors such as predation, nutrition or variance are excluded. In terms of foraging, this may involve deciding whether it is worth traveling further to reach a better source of food. It may also involve choosing between a high- or low-energy mode of travel, such as flying or walking, depending on the value of the expected reward (Bautista, Tinbergen, & Kacelnik, 2001; Janson, 1998; Stevens, Rosati, Ross, & Hauser, 2005). Obtaining empirical data on how animals choose between such options is essential for studying the neural basis of how animals overcome effort-based constraints. There are obvious differences between species in their foraging modes and evolutionary histories. Nevertheless, there is a consistent trend of work-based or spatial discounting: animals require greater quantities of food, or more palatable food rewards, to offset the attraction of an easily obtained alternative (e.g., Cousins & Salamone, 1994; Stevens et al., 2005).

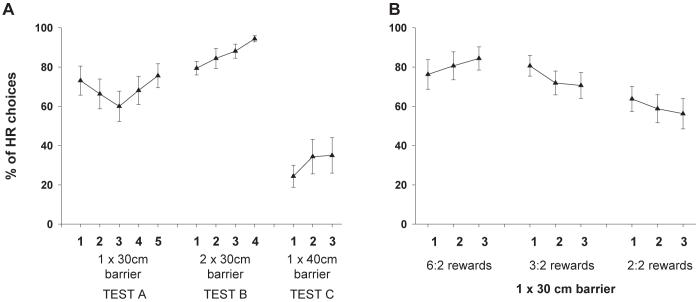

2.1. T-maze studies in rats

Using a version of a T-maze task originally devised by Salamone and colleagues (Salamone et al., 1994), we investigated the willingness of rats to choose between two courses of action that differed in their respective energetic demands and consequent reward sizes (Walton, Croxson, Rushworth, & Bannerman, 2005). One goal arm of the T-maze was designated the high cost / high reward (HR) arm and the other the low cost / low reward (LR) arm. Their spatial locations were kept constant throughout testing, though they were counterbalanced across rats. The cost of obtaining the larger quantity of reward was manipulated by requiring rats selecting the HR option to scale a wire-mesh barrier to reach the food. During the initial stages of this experiment, the HR and LR arms always contained 4 and 2 food pellets, respectively. As can be observed in Figure 1a, the rats altered their pattern of choices depending on the relative amount of work required to obtain reward in the two arms. Initially, the animals tended to prefer to put in more work by climbing over a 30 cm barrier to obtain the high reward (Test A: HR = 30 cm barrier vs LR = no barrier). This preference was significantly strengthened when the cost of selecting either arm was equated by placing identical 30 cm barriers in both the HR and LR arms (Test B: HR = 30 cm barrier vs LR = 30 cm barrier): all the animals now chose the HR option on every trial [main effect of test session between last 3 days of Test A and first 3 days of Test B: F(1, 15) = 9.35, p < 0.01]. Conversely, when the difference in the energetic demands associated with each option was increased (Test C: HR = 40 cm barrier vs LR = no barrier), this preference reversed, with the majority of animals now opting for the LR arm [main effect of test session between last 3 days of Test A and Test C: F(1, 15) = 21.35, p < 0.01].

Figure 1.

Performance of rats on a T-maze cost-benefit task choosing between high cost / high reward (HR) and low cost / low reward (LR) alternatives (adapted from Walton et al., 2005). (A) In Test A, there was a 30 cm barrier in the HR arm while the LR arm was unobstructed; in Test B, an identical 30 cm barrier was present in both goal arms; in Test C, the LR arm was again unobstructed but the HR arm now contained a 40 cm barrier. In all three tests, there were 4 food pellets in the HR arm and 2 in the LR arm. (B) In each condition, rats chose between climbing a 30 cm barrier in the HR arm or selecting the unobstructed LR arm. However, the reward in the HR arm varied across tests, with the ratio between the HR and LR decreasing from 6:2 to 3:2 and finally to 2:2.

Similar changes in preference were also observed when the reward ratio in the two goal arms was manipulated, with fewer HR arm choices as the reward size available in the HR arm decreased [main effect of session: F(2, 30) = 10.36, p < 0.01] (Figure 1b). It is noticeable, however, that even when the reward sizes were equally small in the two arms, there was still an overall preference for climbing the 30 cm barrier to reach the food. The present data cannot establish the cause of this preference. It may reflect perseveration in choosing this particular option after extensive testing on the task. Alternatively, it may reflect an alteration in the perceived value of each arm, either as a result of the greater reward previously associated with the HR arm or through a change in the value of rewards obtained at greater effort. Previous studies have demonstrated that animals may attribute a higher value to one of two stimuli associated with identical amounts of food if they previously had to work hard to obtain the reward associated with that stimulus (Clement, Feltus, Kaiser, & Zentall, 2000; Kacelnik & Marsh, 2002).

2.2. Operant studies in rats

While the T-maze task provides qualitative data about how animals make decisions when evaluating work costs in relation to expected outcomes, it is hard to translate these findings into even semi-quantitative predictions about what energetic currency they might be using. Rats are able to surmount the barrier to obtain the larger quantity of food with little delay compared with the time it takes them to reach the smaller reward in the unobstructed arm. However, the exact difference in energetic expenditure between choosing the HR and the LR arm is unclear. One way of overcoming this difficulty and obtaining quantitative data is to run comparable experiments in operant boxes. In this situation, instead of having to choose whether to climb over a barrier, animals decide between two options that differ in the number of lever presses required to earn reward. Lever pressing necessarily introduces a time component into the choices. Even so, there are several reasons to believe that this type of work-based decision making is still dissociable from delay-based tests of impulsive choice (Bradshaw & Szabadi, 1992; Cardinal, Robbins, & Everitt, 2000; Mazur, 1987; Rawlins, Feldon, & Butt, 1985; Richards, Mitchell, de Wit, & Seiden, 1997). First, behavioral studies have demonstrated that patterns of choices fail to correlate across the two types of decision. Common marmosets, for example, discount temporal delays less steeply than cotton-top tamarins, and hence choose to wait longer for a large reward, but they also appear less willing to travel large distances. Marmosets are, therefore, more inclined than tamarins to select the option that takes more time to reach a large reward, but only if it does not require increased energetic expenditure (Stevens et al., 2005). Second, there is preliminary evidence for dissociations in both the neural structures and the neurotransmitters involved in assessing the two types of cost (see Sections 3.1 and 3.2) (Cardinal, Pennicott, Sugathapala, Robbins, & Everitt, 2001; Denk et al., 2005; Walton, Bannerman, Alterescu, & Rushworth, 2003).

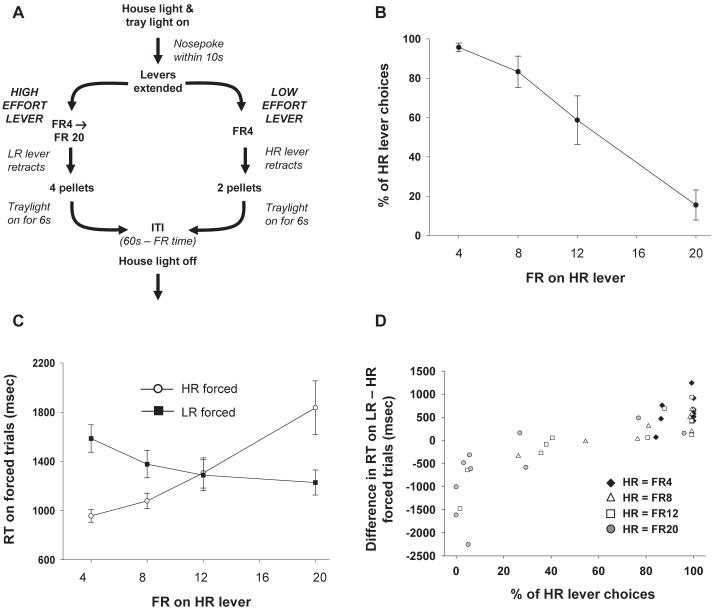

Methods

As in the T-maze paradigm, one lever was designated the HR option and led to 4 food pellets. The other was designated the LR option and resulted in 2 food pellets. Initially, both the HR and LR levers were set on a fixed ratio schedule of four presses (FR4). Ten rats each received 48 trials per session. These included both choice trials (with both levers available) and forced trials (only one of the two levers extended), with 2 forced trials (1 for each lever) for every 4 choice trials. This compelled animals to sample each option on at least 1/6 of trials and allowed reaction times to be compared when they were required to select a particular option. In order to minimize the effects of switching between responses and of uncertainty about the FR requirement on either lever, the work required to gain the HR was kept constant within a set of 3 testing sessions and was increased only across sets (FR8, FR12, and FR20). The LR remained fixed at FR4 throughout.
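The trial composition described above can be illustrated with a minimal Python sketch. It assumes that the 4 choice and 2 forced trials are shuffled within consecutive 6-trial blocks; the source does not say how trials were ordered. The function name `build_session` and the trial labels are hypothetical.

```python
import random


def build_session(n_trials=48, seed=None):
    """Build a hypothetical session of choice and forced trials.

    Each consecutive 6-trial block holds 4 choice trials and 2 forced
    trials (one per lever); shuffling within the block is an assumption,
    not the published procedure.
    """
    if n_trials % 6 != 0:
        raise ValueError("n_trials must be a multiple of 6")
    rng = random.Random(seed)
    session = []
    for _ in range(n_trials // 6):
        block = ["choice"] * 4 + ["forced_HR", "forced_LR"]
        rng.shuffle(block)
        session.extend(block)
    return session


session = build_session(seed=1)
n = len(session)
print(n, session.count("choice"), session.count("forced_HR"), session.count("forced_LR"))
print("minimum share of trials on each lever:", session.count("forced_HR") / n)
```

Whatever order the shuffle produces, a 48-trial session contains 32 choice and 16 forced trials. Each lever is therefore sampled on at least 8/48 = 1/6 of trials, matching the figure given in the text.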

A schematic of the operant paradigm is shown in Figure 2a. The start of a trial was signaled by the illumination of the house and tray lights. In order to activate the levers, rats were required to make a nose-poke in the central food tray within 10 s of the lights turning on. On choice trials, selection of one lever caused the other lever to retract immediately, meaning that all decisions were categorical and could not be changed midway through a course of action. A time-out period of 60 s followed any failure to respond within 10 s, whether with a nose-poke at the start of the trial or on a lever once the levers were extended. During the time-out, all lights were extinguished and the levers retracted. Once a single response was made, the lever remained extended until completion of the FR requirement. The selected lever then retracted and food was delivered to the central food tray, which was illuminated for 6 s. At no point during training or subsequent testing did a rat fail to finish an FR requirement. The intertrial interval (ITI) was 60 s minus the time it took rats to complete the selected FR schedule (with a minimum ITI of 30 s), so that the rate of trials was not influenced by the animals’ choices.
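The ITI rule can be written as a one-line function. The sketch below uses hypothetical function and parameter names. It shows that FR completion time plus ITI is held at 60 s only while the FR schedule takes 30 s or less to complete. It covers only that part of the trial cycle, not the nose-poke or reward periods.

```python
def intertrial_interval(fr_completion_s, base_s=60.0, min_iti_s=30.0):
    """ITI in seconds: the 60 s base minus the time spent completing the
    FR schedule, but never shorter than the 30 s floor."""
    if fr_completion_s < 0:
        raise ValueError("completion time cannot be negative")
    return max(base_s - fr_completion_s, min_iti_s)


for completion in (2.0, 10.0, 30.0, 45.0):
    iti = intertrial_interval(completion)
    print(f"FR completed in {completion} s -> ITI {iti} s, FR + ITI = {completion + iti} s")
```

With the 30 s floor, a hypothetical 45 s completion time would stretch FR + ITI to 75 s. Equalizing the trial rate across choices therefore relies on FR schedules being completed quickly, which the text's FR4 to FR20 range presumably allowed.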

Figure 2.

(A) Schematic of the operant work-based decision making paradigm. (B) Average percentage of HR lever choices as a function of the FR schedule assigned to the HR lever (each averaged across the three sessions at each FR). The LR was fixed at FR4 for 2 rewards. (C) Latency to make an initial response on a forced trial, when only one of the two levers was presented, as a function of the FR schedule on the HR lever. (D) Correlation between the percentage of HR lever choices on each FR schedule and the difference between RTs on forced HR and LR trials for each individual animal. Each rat is represented by an individual symbol at each FR schedule.

Results and Discussion

As in the T-maze paradigm, animals switched their preference away from the HR lever as the work required to gain the reward increased [F(3, 27) = 25.37, p < 0.01] (Figure 2b). There was a near-linear, monotonic relationship between the FR requirement on the HR lever and the percentage of trials on which it was chosen. The point of indifference, where the rats as a group chose the HR and LR on 50% of trials, lay at approximately FR13 on the HR lever when extrapolated from the available data.
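One way such an indifference point might be estimated is to fit a straight line to percentage of HR choices against FR and solve for the 50% crossing. The paper does not state its fitting method, so the ordinary least-squares fit below is an assumption. The input values are made up purely to exercise the function and are not the published data.

```python
def indifference_fr(fr_schedules, pct_hr, criterion=50.0):
    """Fit pct_hr = intercept + slope * FR by ordinary least squares and
    return the FR at which the fitted line crosses `criterion` percent."""
    n = len(fr_schedules)
    if n < 2 or n != len(pct_hr):
        raise ValueError("need at least two paired observations")
    mean_x = sum(fr_schedules) / n
    mean_y = sum(pct_hr) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(fr_schedules, pct_hr))
    sxx = sum((x - mean_x) ** 2 for x in fr_schedules)
    if sxx == 0:
        raise ValueError("FR values must not all be equal")
    slope = sxy / sxx
    if slope == 0:
        raise ValueError("flat fit: the line never crosses the criterion")
    intercept = mean_y - slope * mean_x
    return (criterion - intercept) / slope


# Made-up group means (NOT the published data): %HR choices at each HR schedule.
fr = [4, 8, 12, 20]
pct = [80.0, 60.0, 40.0, 0.0]
print(indifference_fr(fr, pct))  # 10.0 for these illustrative values
```

A linear fit is reasonable only because the text describes the relationship as near-linear. A logistic fit would be a common alternative if choice proportions saturated near 0% or 100%.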

Interestingly, this pattern of choices was also closely mirrored by response latencies on forced trials, even though only a single option was available on those trials. In blocks where the HR was preferred on the majority of choice trials, reaction times (RTs) on HR forced trials were faster than those on LR forced trials. The converse was true when the LR was selected on choice trials (Figure 2c). This relationship between the two measures was also borne out in the choice performance and response latencies of individual animals (Pearson correlation coefficient: r = 0.85, 0.93, & 0.74 for HR = FR8, FR12, & FR20 respectively, all p < 0.05) (Figure 2d). This indicates that the response to a particular situation is influenced not merely by the immediately available alternatives (which, on forced trials, are nonexistent) but also by the context of options presented on previous trials.
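For readers who want to reproduce this kind of per-animal analysis, here is a minimal Python sketch of the Pearson correlation between choice preference and forced-trial RT difference. It assumes the RT difference is coded as RT on LR forced trials minus RT on HR forced trials, so that a positive value means faster responding to the HR lever; the source does not specify the sign convention. The per-rat numbers are invented for illustration only.

```python
import math


def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length sequences."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


# Hypothetical per-rat values for one FR schedule (not the published data):
# percentage of HR choices, and RT_LR - RT_HR on forced trials in seconds.
pct_hr_choices = [20.0, 45.0, 60.0, 85.0, 95.0]
rt_difference = [-0.40, -0.05, 0.10, 0.35, 0.50]
print(round(pearson_r(pct_hr_choices, rt_difference), 3))
```

On Python 3.10 or later, `statistics.correlation` computes the same coefficient. Neither gives a p-value, which would require the t distribution with n - 2 degrees of freedom.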

2.3. Operant studies in monkeys

The behavioral choice in the cost-benefit task used with rats is restricted, consisting of two possible actions (high or low effort) and two known outcomes (high or low reward). While this simplicity is advantageous in constraining the problem, it fails to provide a complete sense of how animals weigh up costs and benefits. Primates can learn and retain multiple pieces of information with relative ease. It is therefore possible to test them on a range of different choices by systematically varying the amount of effort and the size of the reward. To the best of our knowledge, few studies to date have systematically investigated the effect of different amounts of work and reward sizes on choice behavior in non-human primates.

Methods

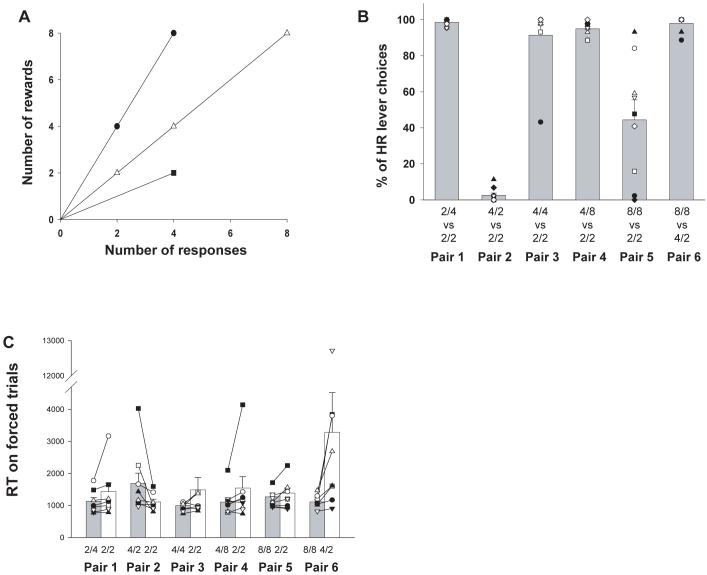

To examine this issue, 9 male rhesus macaque monkeys (Macaca mulatta) were presented with a series of two-alternative choices on the left and right of a touchscreen monitor. They could either select a stimulus that required more responses to obtain a larger reward or one that entailed less effort and resulted in fewer food pellets. Animals were tested using a range of different stimuli. Each stimulus was associated with a particular number of responses required before reward was delivered and a particular quantity of food pellets received for completing the course of action. Some of the stimuli differed in their rates of gain (i.e., the response cost, in responses per reinforcer, could be 2, 1 or 0.5). Others had identical rates of gain but differed in the absolute amounts of work and reward (Figure 3a). Each stimulus was a unique color clipart image measuring 6 cm by 6 cm. Performance was indexed by both behavioral preference and response times.
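The work/reward labels used here, such as "2/4" for 2 presses and 4 rewards, map directly onto a response cost in responses per reward. The minimal Python sketch below computes that cost and reports which stimulus a pure rate-maximizing rule would favor. The function names are hypothetical. The pairs listed are the combinations named later in the text, not a reconstruction of the testing order.

```python
from fractions import Fraction


def response_cost(label):
    """Responses per reward for a 'presses/rewards' label such as '2/4'."""
    presses, rewards = (int(part) for part in label.split("/"))
    return Fraction(presses, rewards)


def rate_prediction(hr, lr):
    """Which stimulus a pure rate-of-gain maximizer would pick."""
    cost_hr, cost_lr = response_cost(hr), response_cost(lr)
    if cost_hr < cost_lr:
        return "HR"
    if cost_hr > cost_lr:
        return "LR"
    return "equal rate"


pairs = [("2/4", "2/2"), ("4/2", "2/2"), ("4/4", "2/2"),
         ("4/8", "2/2"), ("8/8", "2/2"), ("8/8", "4/2")]
for hr, lr in pairs:
    print(f"{hr} vs {lr}: cost {response_cost(hr)} vs {response_cost(lr)} -> {rate_prediction(hr, lr)}")
```

For 4/4 vs 2/2 and 8/8 vs 2/2, this rule returns "equal rate". It therefore cannot by itself account for the consistent preference for the HR in the first case or the between-animal variability in the second.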

Figure 3.

(A) Work/reward ratio for each of the different stimuli taught to the monkeys in the operant work-based decision making paradigm. A cost/benefit stimulus of, for example, “2/4” indicates that the monkey needed to make 2 presses to receive 4 rewards. (B) Percentage of HR stimulus selections (the upper work/reward ratio in the label under each bar) for the 6 pairs of stimuli tested. To aid presentation, the sequence of stimuli has been rearranged relative to the actual order of testing, which was Pairs 1, 2, 4, 5, 3, and 6. The data for some stimulus pairs tested without the 2/2 stimulus (e.g., 8/8 vs 4/4) have been omitted for ease of comprehension. (C) Latency to make an initial response on a forced trial for each stimulus pair, when only one of the two possible stimuli was displayed on the touchscreen.

Initially, monkeys were trained on the work/reward value of each rewarded stimulus by running a discrimination test of each stimulus against an unrewarded single-response stimulus (which changed for each new rewarded stimulus). Selecting a rewarded stimulus caused a 350 Hz response tone to be presented for 250 ms and both stimuli to be extinguished. The chosen stimulus then reappeared 500 ms later at a random location on the screen. This sequence (stimulus touched, response tone, stimulus extinguished, stimulus reappearing at a new location) was repeated up to the number of responses assigned to that stimulus. The final response resulted in delivery of the reward together with a second, reward tone (500 Hz for 250 ms). After 50 trials of correctly choosing the rewarded stimulus with a particular set of stimuli, the monkeys were moved on to a new pair. The association between each discrete stimulus and its work/reward ratio was kept constant throughout the study. During all types of testing, animals were always presented with the same pair of stimuli throughout a session. In total, animals were taught ten pairs of stimuli, though only seven were subsequently used during the cost/benefit decision making test phase.
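The response sequence within a single trial can be written out as an event list. The minimal Python sketch below assumes that the final response produces only the reward tone and not the 350 Hz response tone; the text does not say which. It also treats screen locations as a hypothetical 3 x 3 grid. The event names and the `trial_events` function are illustrative inventions, not the task software.

```python
import random


def trial_events(n_responses, n_pellets, locations, seed=None):
    """List the events of one completed trial on a stimulus that requires
    `n_responses` touches and pays `n_pellets` pellets (hypothetical encoding)."""
    if n_responses < 1:
        raise ValueError("a stimulus needs at least one response")
    rng = random.Random(seed)
    events = []
    for i in range(1, n_responses + 1):
        events.append(("touch", i))
        if i < n_responses:
            # Intermediate response: 350 Hz tone for 250 ms, stimuli off,
            # then the chosen stimulus returns 500 ms later at a random location.
            events.append(("tone", 350, 250))
            events.append(("stimuli_off",))
            events.append(("delay_ms", 500))
            events.append(("stimulus_on", rng.choice(locations)))
        else:
            # Final response (assumed): pellets plus a 500 Hz, 250 ms reward tone.
            events.append(("reward", n_pellets))
            events.append(("tone", 500, 250))
    return events


# Hypothetical 3 x 3 grid of screen positions, in arbitrary units.
grid = [(col, row) for col in range(3) for row in range(3)]
for event in trial_events(n_responses=4, n_pellets=2, locations=grid, seed=0):
    print(event)
```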

Once they had learned all of the rewarded stimuli, the animals commenced the test phase of the task. This was very similar to the training phase, except that the decision was now between two rewarded stimuli that varied in their associated response requirement and reward size. The stimulus with the greater reward size was termed the high reward stimulus (HR) and the other the low reward stimulus (LR). One pair had equal rewards: 4 presses for 2 rewards (4/2) versus 2 presses for 2 rewards (2/2). There, the 2/2 was termed the LR for consistency with the other choice pairs, in which the 2/2 stimulus was also the LR. Two of the choice pairs varied in only one dimension. Either both stimuli entailed the same work cost but one resulted in twice the reward of the other, or both had the same reward outcome but one required twice the work of the other. These pairs hence had a “correct” optimal answer based on a simple comparison of the work cost or reward magnitude of the two stimuli. The stimuli in the other pairs, however, differed in both work and reward size and therefore required the costs and benefits to be integrated to guide action choice. Selection of a particular stimulus caused the alternative to disappear immediately, thus committing the animals to a particular course of action.

Before receiving choice trials with a particular pair of stimuli, monkeys were required to complete 40 consecutive forced trials in which only the HR or the LR was presented in isolation (20 for each stimulus in a pair, with the side of presentation varied pseudo-randomly). As with the rat operant testing, sessions of choice trials also contained forced trials. Choice testing always commenced with 4 successive forced trials. Thereafter, there were 2 forced trials (1 HR, 1 LR, presented pseudo-randomly on the left or right) for every 4 choice trials. Owing to the different reward sizes, the number of trials per day was varied so that the monkeys would not receive more than ∼120 rewards if they chose the HR throughout. The monkeys were tested with a particular pair of stimuli for a minimum of 44 choice trials and until behavior stabilized (meaning that performance was within ±10% for at least 4 out of 5 consecutive sets of 11 choice trials). If their pattern of responses was variable, testing continued until they had completed 99 choice trials.
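The stopping rule can be sketched in Python, but only under explicit assumptions, because the criterion leaves some details open. The sketch assumes that "within ±10%" means within 10 percentage points of the mean %HR across the sets being examined. It also reads the 44-trial figure as a minimum, so at 44 trials (4 sets) all 4 available sets must agree. Both readings, and the function names, are hypothetical.

```python
def is_stable(pct_hr_by_set, window=5, required=4, tolerance=10.0):
    """Hypothetical stability check on per-set %HR choices (11 choice trials per set).

    Among the most recent `window` sets (or all sets, if fewer are available),
    at least `required` must lie within `tolerance` percentage points of
    those sets' mean."""
    recent = pct_hr_by_set[-window:]
    if len(recent) < required:
        return False
    mean = sum(recent) / len(recent)
    n_within = sum(1 for p in recent if abs(p - mean) <= tolerance)
    return n_within >= required


def should_stop(pct_hr_by_set, trials_per_set=11, min_trials=44, max_trials=99):
    """Stop once stable after at least `min_trials`, or at `max_trials` regardless."""
    n_trials = len(pct_hr_by_set) * trials_per_set
    if n_trials >= max_trials:
        return True
    return n_trials >= min_trials and is_stable(pct_hr_by_set)


print(should_stop([80.0, 82.0, 78.0, 81.0]))        # True: 44 trials, all 4 sets agree
print(should_stop([50.0, 80.0, 82.0, 78.0]))        # False: only 3 of 4 sets agree
print(should_stop([50.0, 80.0, 82.0, 78.0, 81.0]))  # True: 4 of the last 5 sets agree
```

Because 99 choice trials is exactly 9 sets of 11, the hard cap falls on a set boundary.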

Results and Discussion

When there was a correct answer (choice pairs 1 and 2), all the monkeys chose the option that either led to higher reward or required less work on almost all choice trials (Figure 3b). The same 2/2 stimulus was rejected when paired against a 2/4 stimulus but selected when paired against a 4/2 stimulus. This indicates that all animals were selecting their options based on a comparison between the work/reward ratios of each pair of stimuli rather than by learning fixed stimulus preferences.

The other choice pairs (pairs 3-6) required an assessment of whether the increased size of reward associated with the HR stimulus was worth the extra work required to obtain it. As can be observed in Figure 3b, for all but one choice pair (pair 5), performance was extremely reliable across animals. All of them chose the HR stimulus over the 2/2 one, regardless of whether it had the same rate of gain as the LR (both 1 response/reward) or a better one. The one exception was when the HR required 8 responses for 8 rewards, where there was a large degree of variability across monkeys. Interestingly, however, there was still a high degree of consistency between choices within the same animal. The monkey least willing to choose this stimulus was also the least likely to choose the HR in all 3 other cost-benefit choices (4/4 vs 2/2; 4/8 vs 2/2; 8/8 vs 4/2). Moreover, the reluctance of some animals to choose the 8/8 stimulus when paired with the 2/2 one was not categorical, because all of them selected this option when the alternative had half the rate of gain (4/2) (pair 6).

As with the rat operant testing, there was a good correspondence between choice behavior and the median latency to respond to the individually presented cost/benefit stimulus on forced trials. The stimulus consistently selected on choice trials (pairs 1-4, 6) was responded to faster than the non-preferred stimulus on forced trials (two-tailed paired t-tests between log-transformed RTs on the 1st HR and 1st LR response: p < 0.05 for choice pairs 1, 2 and 6; p < 0.06 for pairs 3 and 4) (Figure 3c). This indicates that these initial latencies were affected by the context of the “invisible alternative”, that is, the stimulus that would normally be presented with it on choice trials but was absent on forced trials. Animals also responded on average faster to the 2/2 stimulus when it was the preferred stimulus (pair 2) than when it was ignored in choice trials (pair 1). This again suggests that the monkeys were assessing each decision on the basis of an evaluation of the costs and benefits of both options, though this difference failed to reach significance (p > 0.1). Pair 5 was the one pair in which patterns of choice behavior varied between animals. For this pair, there was a degree of correlation between choice performance and the difference in RT between the 1st response on HR and LR forced trials (Pearson correlation coefficient: r = 0.66, p = 0.051), though this was mainly driven by two outlying animals. Clearly, further testing will be required to assess how closely response latencies match choice performance, in order to see whether the two measures provide similar information about the animals’ preferences.
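The RT comparison above is a paired t-test on log-transformed latencies, with one HR/LR pair per monkey. A minimal stdlib-only Python sketch of the test statistic follows. The RT values are invented for illustration, and the function name is hypothetical. If SciPy is available, `scipy.stats.ttest_rel` applied to the two log-transformed arrays should give the same statistic together with a two-tailed p-value.

```python
import math


def paired_t_log_rt(rt_hr, rt_lr):
    """Paired t statistic on log-transformed RTs (one HR/LR pair per animal).

    Returns (t, degrees_of_freedom); the two-tailed p-value would then be
    read from a t distribution with that many degrees of freedom."""
    if len(rt_hr) != len(rt_lr) or len(rt_hr) < 2:
        raise ValueError("need at least two paired observations")
    diffs = [math.log(h) - math.log(l) for h, l in zip(rt_hr, rt_lr)]
    n = len(diffs)
    mean_d = sum(diffs) / n
    var_d = sum((d - mean_d) ** 2 for d in diffs) / (n - 1)
    if var_d == 0:
        raise ValueError("differences have zero variance")
    return mean_d / math.sqrt(var_d / n), n - 1


# Hypothetical median first-response RTs in seconds for nine monkeys (made up).
hr = [0.61, 0.55, 0.70, 0.58, 0.66, 0.52, 0.63, 0.59, 0.68]
lr = [0.74, 0.69, 0.78, 0.71, 0.80, 0.60, 0.77, 0.66, 0.81]
t, df = paired_t_log_rt(hr, lr)
print(f"t({df}) = {t:.2f}")
```

With this sign convention, a negative t means faster responses to the HR stimulus. The log transform is the usual way of reducing the right skew typical of RT distributions before a t-test.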

2.4. Summary of Behavioral Experiments

Taken together, these results indicate that monkeys, like rats, can make optimal decisions between options that differ in their work and reward quotients. Within the range of stimuli presented to them, they always maximized their rate of reward gain, as calculated by the number of responses per reward. This implies that they were selecting their responses on the basis of both the costs and benefits of each course of action. Both species, with slightly differing consistency, expressed their choice preferences in two ways. They did so overtly, through their responses on choice trials, and covertly, through their latency to respond when only one of the two options was available. The findings from the three behavioral tests support the validity of these types of paradigm for assessing effort-related decision making. They also provide several measures of performance for future studies manipulating or recording from the underlying neural mechanisms.

3. Neural basis of effort-related decision making

It has frequently been observed that the basic machinery of motivation favors behavior that results in more easily obtained and immediate reward (Monterosso & Ainslie, 1999). It is therefore interesting to ask how the brain allows animals to overcome effort constraints in situations where the high-work option leads to a greater quantity of reward or a greater return in terms of fitness or utility currencies. This issue is also of great importance for neuropsychiatric disorders such as depression, in which anergia and apathy are cardinal features. To date, almost all studies of this question have been performed in rodents.

3.1. DA and effort-related decision making

Mesocorticolimbic DA pathways have long been implicated in the modulation of goal-directed behavior (Wise & Rompre, 1989). There is a large body of evidence indicating a role for the DA pathway from the ventral tegmental area (VTA) to the nucleus accumbens (NAc) in enabling animals to work to obtain reinforcement and to maintain the value of conditioned incentive stimuli through periodic reinforcement. Numerous studies, using either systemic or targeted administration of DA antagonists or selective DA depletions of NAc, have demonstrated changes in the motivation of animals to pursue reward and the manner in which they allocate their responses (Berridge & Robinson, 1998; Ikemoto & Panksepp, 1999; Salamone & Correa, 2002).

Of particular relevance to the present question is a series of elegant studies by Salamone and colleagues. These examined how DA in the NAc is involved in allowing animals to overcome work-related response costs in the presence of a less demanding alternative. The studies worked from a hypothesis originally propounded by Neill and Justice (1981) and used the T-maze barrier task described in Section 2.1. They demonstrated that DA depletions of the NAc biased rats away from scaling a large barrier in the HR arm and towards choosing the unobstructed LR arm (Salamone et al., 1994). DA has also been associated with more fundamental aspects of food-directed motor function and motivation (Evenden & Robbins, 1984; Horvitz & Ettenberg, 1988; Liao & Fowler, 1990; Ungerstedt, 1971). However, it is unlikely that these played a central role in the change in the allocation of responses. When the LR arm contained no food, DA-depleted rats continued to climb the barrier to obtain food in the HR arm, albeit with a longer latency than before surgery (Cousins, Atherton, Turner, & Salamone, 1996). Comparable shifts in choice behavior away from work-related alternatives have also been shown using an operant paradigm. In this paradigm, animals had to decide whether to make lever presses on particular FR schedules to obtain palatable food rewards or to eat freely available lab chow (Salamone et al., 1991).

To date, there is little evidence as to how exactly DA facilitates overcoming effort-related response costs or what the temporal dynamics of its involvement are. Neurophysiological studies and computational modeling have suggested that phasic activity changes in dopaminergic VTA neurons could encode a reward prediction error signal. Such a signal could be used by neural structures implementing reinforcement learning-type algorithms to guide reward seeking and choice (Doya, 2002; McClure, Daw, & Montague, 2003; Montague & Berns, 2002; Schultz, 2002). Phillips et al. (2003) used fast-scan cyclic voltammetry combined with electrical stimulation of the VTA in a paradigm with a single response option. They recently demonstrated that DA appears both to trigger the initiation of instrumental behavior to obtain cocaine and to signal receipt of the drug. Similarly, rapid increases in DA have also been observed following an eliciting stimulus in an intermittent access task in which rats were required to press a lever for sucrose reward (Roitman et al., 2003). A recent model has also suggested that the vigor of a response may be set by tonic DA levels (Niv, Daw, & Dayan, 2005). However, a direct role for phasic and tonic DA release in guiding action selection between multiple alternatives is still far from clear.
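The notion of a reward prediction error can be made concrete with a generic delta-rule (Rescorla-Wagner-style) value update. On each trial the error is delta = r - V, and the value estimate moves by alpha times delta. The minimal Python sketch below is a textbook illustration, not the model of any of the studies cited above. The learning rate alpha = 0.2 and the fixed 4-pellet reward are arbitrary assumptions.

```python
def delta_rule(rewards, alpha=0.2, v0=0.0):
    """Track a value estimate V over trials with a delta-rule update.

    On each trial the prediction error is delta = r - V, and V moves a
    fraction `alpha` of the way towards the obtained reward."""
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must lie in (0, 1]")
    v = v0
    values, errors = [], []
    for r in rewards:
        delta = r - v          # reward prediction error on this trial
        v = v + alpha * delta  # value update scaled by the learning rate
        errors.append(delta)
        values.append(v)
    return values, errors


# Twenty trials on which the same option always yields 4 pellets.
values, errors = delta_rule([4.0] * 20)
print(f"first error {errors[0]:.2f}, last error {errors[-1]:.3f}, final V {values[-1]:.3f}")
```

With a constant reward, the error shrinks geometrically as the outcome becomes predicted (V after n trials is 4(1 - 0.8^n) here). This is the qualitative signature attributed to phasic DA responses. Nothing in this sketch represents effort costs, which is precisely the gap the text identifies.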

3.2. How specific is the role of DA to effort-related decision making?

The evidence for a vital role for mesolimbic DA in overcoming work constraints to achieve greater rewards is compelling. However, it is also clear that the role of DA is not confined to effort-related cost-benefit decisions that require an evaluation of rewarded alternatives. Numerous studies have demonstrated suppressed responding when animals are faced with any increase in energetic demands. This occurs even when the only alternative is not to engage in the task and therefore not be rewarded (e.g., Aberman & Salamone, 1999; Caine & Koob, 1994; Ikemoto & Panksepp, 1996). Moreover, there is some evidence that systemic DA depletions fundamentally alter the manner in which animals make cost-benefit choices. Rats injected systemically with haloperidol, a D2 receptor antagonist, were tested on the T-maze barrier task with identical large barriers in both the HR and LR arms. They showed a small but significant shift away from choosing the HR arm, even though selecting the LR arm entailed the same cost as the HR arm for only half the reward (Denk et al., 2005). One possibility is that such antipsychotic drugs, as well as affecting activational aspects of motivation, may also influence the reinforcing properties of stimuli (Mobini, Chiang, Ho, Bradshaw, & Szabadi, 2000; Wise & Rompre, 1989). Ho and colleagues have used quantitative techniques to model the parameters of impulsive choice (Ho, Mobini, Chiang, Bradshaw, & Szabadi, 1999). Similar techniques may in future be useful for identifying how DA depletions individually affect the processing of reward magnitude and work requirements.

The role of mesocorticolimbic DA in decision making is also unlikely to be restricted to choices that require an assessment of work-related response costs. While targeted DA depletions of the NAc equivalent to those used by Salamone and colleagues have no effect on the choices of rats faced with one option yielding a large reward delayed by a number of seconds and another yielding an immediately available but smaller quantity of reward (Winstanley, Theobald, Dalley, & Robbins, 2005), systemic DA depletions have been shown to increase impulsive decisions (Denk et al., 2005; Wade, de Wit, & Richards, 2000). Conversely, administration of the indirect DA agonist d-amphetamine can increase the amount of time animals are willing to wait for the large reward (Cardinal et al., 2000; Richards, Sabol, & de Wit, 1999). However, the exact function of DA in choices between courses of action with different delays between response and outcome is far from clear. The effects of DA manipulations differ depending on whether a cue signals the delay between a response and its associated reward, and on whether the choice is between a large delayed and a small immediate reward or between two rewards of different sizes delayed by different lengths of time (Cardinal et al., 2000; Evenden & Ryan, 1996; Kheramin et al., 2004).
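Delay-based choices of this kind are commonly summarized with the hyperbolic discount function V = A / (1 + kD), where A is reward amount, D is delay and k indexes how steeply value falls with delay (Mazur, 1987). The sketch below uses a hypothetical choice (1 food unit now versus 4 units after 10 s) to show how a higher k flips preference from the large delayed reward to the small immediate one. The amounts, delay and k values are assumptions for illustration only, not estimates from the studies cited.

```python
def hyperbolic_value(amount: float, delay_s: float, k: float) -> float:
    """Hyperbolically discounted value: V = A / (1 + k * D)."""
    return amount / (1.0 + k * delay_s)


def prefers_large_delayed(small: float, large: float, delay_s: float, k: float) -> bool:
    """True if a large reward after delay_s is valued above a small immediate reward."""
    return hyperbolic_value(large, delay_s, k) > hyperbolic_value(small, 0.0, k)


def indifference_k(small: float, large: float, delay_s: float) -> float:
    """Discount rate at which both options are valued equally.

    Solves large / (1 + k * D) == small for k.
    """
    return (large / small - 1.0) / delay_s


# Hypothetical choice: 1 food unit now vs 4 units after a 10 s delay.
print("indifference k:", indifference_k(1.0, 4.0, 10.0))
for k in (0.1, 0.2, 0.5):
    print(f"k={k}: prefers large delayed reward? {prefers_large_delayed(1.0, 4.0, 10.0, k)}")
```

Here the indifference point is k = 0.3: shallower discounting favors waiting, steeper discounting favors the immediate option. In this framing, a manipulation that increases impulsive choice would appear as a higher fitted k, although, as noted above, whether DA manipulations act this way depends on the paradigm.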

While mesocorticolimbic DA may not play an exclusive role in work-related decision making, there is evidence for a degree of neuromodulatory specificity in how behavioral costs are incorporated into decisions. Rats given the serotonin synthesis blocker para-chlorophenylalanine methyl ester (pCPA) made as many HR choices in the T-maze barrier task as control animals. The same animals, however, were more likely to choose a small immediate reward in a delay-based version of the T-maze task, suggesting a possible dissociation between serotonin and DA, with serotonin crucial only for decisions involving delay (Denk et al., 2005).

3.3. Medial frontal cortex (MFC) and effort-related decision making

Several lines of evidence indicate that parts of the MFC might be important in motivating cost-benefit decisions. Both human neuroimaging and single-unit recording in monkeys and rodents have shown that activity in this region is modulated by the anticipation of different reward sizes and is involved in linking chosen responses with their outcomes (Knutson, Taylor, Kaufman, Peterson, & Glover, 2005; Matsumoto, Suzuki, & Tanaka, 2003; Pratt & Mizumori, 2001; Procyk, Tanaka, & Joseph, 2000; Walton, Devlin, & Rushworth, 2004). In rodents, MFC lesions reduce food hoarding, a species-typical behavior in which animals choose to work to collect food and store it in a single location instead of eating it where it is found (de Brabander, de Bruin, & van Eden, 1991; Lacroix, Broersen, Weiner, & Feldon, 1998). There is also clinical evidence from human patients with damage to orbital and medial prefrontal cortex suggesting that this region may be involved in persistence, the ability to persevere with a pattern of responding in the absence of reinforcement (Barrash, Tranel, & Anderson, 2000). Finally, a small population of cells in the sulcal part of monkey ACC has been reported to increase its firing as an animal progresses through a fixed schedule of trials towards a final reward (Gusnard et al., 2003; Shidara & Richmond, 2002).

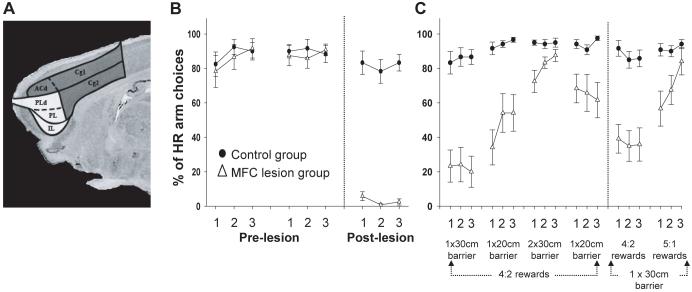

To investigate directly the role of rat MFC in effort-related decision making, we compared the choice performance of rats on the T-maze barrier task before and after lesions to this region (Figure 4a; Walton et al., 2002).¹ As discussed in Section 2.1, animals on this task typically choose to put in more work for a larger quantity of food. However, following excitotoxic lesions of MFC, behavior reversed completely, with the lesioned rats always selecting the response involving less work and smaller reward (Figure 4b). This was not caused by insensitivity to costs and benefits, however, since changing the energetic calculation, either by putting an identical barrier in the LR arm or by decreasing the size of the barrier in the HR arm, significantly increased the tendency of the MFC group to switch back to climbing the barrier in the HR arm to gain the larger reward. Similarly, increasing the reward ratio between the two arms from 4:2 to 5:1 also resulted in all animals with MFC lesions returning to the HR option (Figure 4c). Taken together, these results imply that the change in choice behavior following the lesion was not caused by a gross motor or spatial memory impairment or by a fundamental inability to process reward quantities, but instead represented a change in the animals’ decision criterion, making them less willing to overcome the work constraints to gain the larger quantity of food.
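One way to picture an altered decision criterion, as opposed to insensitivity to costs and benefits, is a single effort-discounting rule that both groups share but in which effort is weighted differently. In the sketch below, the subtractive cost function, the two effort weights and the 15 cm "smaller barrier" are hypothetical choices, not parameters estimated from Walton et al. (2002). The point is only qualitative: raising the effort weight flips the baseline choice to LR, yet equating the barriers, shrinking the HR barrier or moving to a 5:1 reward ratio restores HR choices under the same rule.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Arm:
    reward: float      # food units in the arm (hypothetical)
    barrier_cm: float  # barrier height to climb (0 = vacant arm)


def net_value(arm: Arm, effort_weight: float) -> float:
    """Subtractive effort discounting: reward minus a per-cm effort cost."""
    return arm.reward - effort_weight * arm.barrier_cm


def choose(hr: Arm, lr: Arm, effort_weight: float) -> str:
    """Deterministically pick the arm with higher net value ('HR' wins ties)."""
    return "HR" if net_value(hr, effort_weight) >= net_value(lr, effort_weight) else "LR"


CONTROL_W = 0.02  # hypothetical: effort weighs little
LESION_W = 0.10   # hypothetical: same rule, higher effort weight

conditions = {
    "baseline 4:2, barrier in HR only": (Arm(4, 30), Arm(2, 0)),
    "barrier in both arms": (Arm(4, 30), Arm(2, 30)),
    "smaller HR barrier": (Arm(4, 15), Arm(2, 0)),
    "reward ratio 5:1": (Arm(5, 30), Arm(1, 0)),
}

for name, (hr, lr) in conditions.items():
    print(f"{name:34s} control={choose(hr, lr, CONTROL_W)} lesion={choose(hr, lr, LESION_W)}")
```

A deterministic rule is used for clarity; a probabilistic (for example, softmax) choice rule would turn the all-or-none switches into graded shifts in the proportion of HR choices.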

Figure 4.

(A) Representation of the targeted MFC (all shaded areas) and ACC regions (dark gray shading only). In both experiments reported here, lesions were complete and restricted to the targeted cortical regions as intended (see Walton et al., 2002, 2003 for detailed histology). (B) Performance of groups of control and MFC-lesioned rats on a T-maze cost-benefit task before and after excitotoxic lesions. Animals chose between climbing a 30 cm barrier to obtain a larger reward (HR) and obtaining a smaller quantity of food (LR) from the vacant arm. (C) Postoperative choice behavior of control and MFC-lesioned rats in response to changes in the effort required in each goal arm (as denoted by the size of the barriers) or in the reward ratio between the HR and LR arms.

With both manipulations, the switch in the lesioned animals from LR to HR choices, or the reverse, did not occur immediately but instead seemed to happen across several trials. It seems unlikely that this reflected a complete lack of knowledge in the MFC-lesioned animals of the costs and benefits of each choice, as both the effort and reward manipulations did alter this group’s behavior in the expected manner. Instead, this persistence may reflect the learning-related or perseveration deficits observed in several previous studies of the effects of MFC lesions (Dias & Aggleton, 2000; Gabriel, Kubota, Sparenborg, Straube, & Vogt, 1991; Seamans, Floresco, & Phillips, 1995). However, it may also simply reflect a general tendency for animals to be influenced by the previous cost/benefit context: inspection of Figures 1a and 1b shows that even normal rats sometimes take a number of trials to respond to a change in either the barrier size or the reward ratio in the two goal arms.
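A gradual switch of this kind is what one would expect from an incremental learner. The hypothetical sketch below uses a greedy agent that updates only the value of the arm it chooses with a delta rule; after an identical barrier is added to the LR arm, the number of LR choices it makes before switching depends on its learning rate `alpha`. The net values (1 for HR; 2 for LR before the change and -1 after it) and the learning rates are illustrative assumptions, not fits to the data in Figures 1 or 4, and a low learning rate is only one of several ways the perseveration account above could be formalized.

```python
from typing import Optional


def trials_until_switch(q_hr: float, q_lr: float, new_lr_outcome: float,
                        alpha: float, max_trials: int = 100) -> Optional[int]:
    """Number of LR choices a greedy learner makes before switching to HR.

    q_hr, q_lr: learned net values of the two arms before the change.
    new_lr_outcome: net outcome now obtained in the LR arm.
    Only the chosen arm is updated, so q_hr stays fixed while LR is chosen.
    Returns None if no switch occurs within max_trials.
    """
    for trial in range(max_trials):
        if q_hr >= q_lr:  # greedy choice; ties go to HR
            return trial
        q_lr += alpha * (new_lr_outcome - q_lr)  # delta-rule update of the LR value
    return None


# Hypothetical values: HR net value 1; LR worth 2 before and -1 after an
# identical barrier is added to the LR arm.
for alpha in (0.5, 0.1, 0.05):
    print(f"alpha={alpha}: LR choices before switching = "
          f"{trials_until_switch(1.0, 2.0, -1.0, alpha)}")
```

With these numbers the learner makes 1, 4 and 8 LR choices before switching as alpha falls from 0.5 to 0.05, so a slower switch alone does not distinguish a lesion-induced deficit from ordinary carry-over of the previous cost/benefit context.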

In a subsequent series of experiments, this change in effort-related decision making was localized to the ACC within rat MFC (including pregenual ACd and supracallosal Cg1 and Cg2: Figure 4a, dark gray regions) (Walton et al., 2003). It has been argued that there are similarities between the rodent Cg1/Cg2 regions (incorporating ACd) and areas 24a and b of the primate brain (Vogt & Peters, 1981). In an identical work-based T-maze task, rats with damage to this region switched away from HR choices to a degree comparable to that seen in the MFC group discussed above, and also returned to choosing the HR arm when the effort cost of the two options was equated by adding an identical 30 cm barrier to the LR arm (further reward and/or effort manipulations comparable to those used in the above MFC study were not attempted with these animals). Nonetheless, they were able to learn a reward-related response rule (matching-to-sample) at a similar rate to control animals. By contrast, rats with lesions to an underlying part of MFC, prelimbic cortex, which, like ACC, receives a dense DA projection and sends similar subcortical efferents to the NAc, were just as willing to climb the barrier as the control group, but were impaired at using recently acquired information to guide response selection in the matching-to-sample task.

3.4. How specific is the role of ACC to effort-related decision making?

Interestingly, while ACC lesions bias animals away from choosing to put in work to gain greater reward when there is an alternative rewarded option, they appear to have little effect on performance when there is only a single possible response, even if a large amount of work is required to obtain the food. Schweimer and Hauber (2005), for example, replicated the change in choice behavior on the work-based T-maze task but found identical break points in lesioned and control rats on a progressive ratio test, in which the number of lever presses required for each reward started at one (FR1) and increased by five after every three rewarded trials. It remains for further research to assess whether similar results would be obtained if both types of experiment were run in the same apparatus (i.e., with both tasks operant or both T-maze based).
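The progressive ratio schedule described above can be written out explicitly. The sketch below generates the press requirement for each successive reward (1, 1, 1, 6, 6, 6, 11, ...) and computes a break point under a deliberately crude, hypothetical stopping rule: the animal quits as soon as a requirement exceeds a fixed maximum. In practice break points are usually defined by a period without responding, so `max_presses_per_ratio` is an illustrative stand-in, not a parameter from Schweimer and Hauber (2005).

```python
from itertools import islice
from typing import Iterator


def pr_requirements(start: int = 1, step: int = 5, rewards_per_step: int = 3) -> Iterator[int]:
    """Yield the lever-press requirement for each successive reward.

    Starts at FR`start` and adds `step` presses after every
    `rewards_per_step` rewarded trials: 1, 1, 1, 6, 6, 6, 11, ...
    """
    reward_index = 0
    while True:
        yield start + step * (reward_index // rewards_per_step)
        reward_index += 1


def break_point(max_presses_per_ratio: int) -> int:
    """Last ratio completed by a hypothetical animal that stops as soon as a
    requirement exceeds max_presses_per_ratio (0 if none is completed)."""
    last_completed = 0
    for requirement in pr_requirements():  # infinite, but requirements keep rising
        if requirement > max_presses_per_ratio:
            return last_completed
        last_completed = requirement
    return last_completed  # not reached; keeps the return type explicit


print(list(islice(pr_requirements(), 10)))
print(break_point(20))
```

Because the requirement only steps up every third reward, the break point moves in increments of five presses, which limits how finely a schedule like this can resolve group differences.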

In contrast to the findings with DA, the role of the ACC also appears to be somewhat specific to work-based decision making rather than extending to all choices requiring an evaluation of the costs and benefits of each action. When rats with excitotoxic lesions of ACC were tested on a task requiring them to decide between an immediately available small reward and a larger but delayed reward, their delay discounting was similar to that of control animals (Cardinal et al., 2001). However, there were also important differences between this study and the T-maze barrier studies, such as the testing protocol and the reward sizes, so firm conclusions must be withheld until future experiments are run using more comparable paradigms.

The notion of a crucial, and possibly distinct, role for the ACC in overcoming effort-related costs is, at first glance, something of a departure from the prevailing theories of ACC function that have arisen mainly from human electrophysiological and neuroimaging studies, namely that it is involved either in monitoring for response conflict or in detecting when an error has been committed (Botvinick, Cohen, & Carter, 2004; Ridderinkhof, Ullsperger, Crone, & Nieuwenhuis, 2004). While all the T-maze experiments discussed above involve competing responses and would naturally evoke an assessment of the value of each choice, it is not immediately clear how a strict version of either account could explain the bias away from investing effort, particularly if the ACC does play a specific role only when effort-related costs need to be considered. One possibility is that species and anatomical differences drive these discrepancies. However, the results from the decision-making experiments are consistent with a more general role for the ACC in evaluating whether or not a response is worth choosing (Rushworth, Walton, Kennerley, & Bannerman, 2004). The particular function of the ACC in deciding to invest effort may be linked to its connexions with autonomic regions such as the hypothalamus and periaqueductal grey (An, Bandler, Ongur, & Price, 1998; Floyd, Price, Ferry, Keay, & Bandler, 2000, 2001; Ongur, An, & Price, 1998), which could allow the ACC to use representations of internal bodily states to assess and motivate the need to work hard in a particular context (Critchley et al., 2003). Future studies will need to compare these theories directly using more comparable paradigms and techniques.

3.5. Interactions between ACC and subcortical DA

One anatomical characteristic of both rodent and primate ACC is that, as well as being highly connected with other frontal and limbic regions, it has reciprocal connexions with several nuclei containing the cell bodies of the neuromodulatory systems. Of particular interest to the issue of work-related decision making is the fact that the ACC is not only heavily innervated by afferents from the VTA and substantia nigra (Berger, 1992; Gaspar, Berger, Febvret, Vigny, & Henry, 1989; Lindvall, Bjorklund, & Divac, 1978), but also sends glutamatergic projections back to these midbrain regions and to the NAc (Chiba, Kayahara, & Nakano, 2001; Sesack, Deutch, Roth, & Bunney, 1989; Uylings & van Eden, 1990). Stimulation of this pathway has been shown to induce burst activity in midbrain DA neurons (Gariano & Groves, 1988; Tong, Overton, & Clark, 1996).

To investigate whether the DA projection to the ACC was involved in allowing animals to put in extra work to receive greater rewards, animals with selective dopamine-depleting 6-hydroxydopamine (6-OHDA) lesions of the ACC were again tested on the T-maze barrier task (Walton et al., 2005). Surprisingly, this manipulation produced no change in work-based decisions, with the ACC DA-depleted animals choosing to scale the barrier to reach the large reward as often as sham-operated controls. These rats were also just as likely as the control group to switch their behavior when the reward or barrier sizes associated with either option were altered, suggesting no obvious impairment in updating cost-benefit representations.

Given the profound effects on choice behavior of both excitotoxic ACC cell-body lesions and NAc DA depletions, this result is initially perplexing. However, in the context of the projection patterns of the ACC, it raises the possibility that this region exerts an important top-down, modulatory influence over the mesolimbic DA system. In situations where the animal judges that it is worth persisting with a particular course of action to achieve greater reward rather than opting for a more easily available but less attractive reward, such a pathway could provide an activating signal, biasing the animal towards a high-effort alternative.

4. Conclusions

Over the last few years, there has been increasing interest among those concerned with the neural basis of behavior in questions that have traditionally been addressed only by behavioral ecologists. While much progress has been made in delineating the circuits involved in different types of cost-benefit decision making, much remains to be resolved. The evidence presented in this review demonstrates that animals do weigh up work constraints against the relative reward quantities of the available options when deciding which response to make. However, exactly how effort or work is calculated remains to be discovered. Salamone and colleagues have shown comparable effects of DA-depleting NAc lesions on both the T-maze barrier task, in which the HR option has little or no temporal cost, and an operant analogue requiring persistent responding over several seconds. By contrast, an equivalent targeted DA depletion produced no deficit on a delay-discounting paradigm (Winstanley et al., 2005). Together, these findings suggest that the amount of time a course of action takes may be assessed separately from the amount of effort that needs to be expended (see also Denk et al., 2005). There is additionally some recent evidence from studies of rats with 6-OHDA NAc lesions that putting in work in the form of persistent responding over a period of time is qualitatively different from applying extra force to a discrete response (Ishiwari, Weber, Mingote, Correa, & Salamone, 2004).

One interpretation of this result is that effort costs may not be evaluated simply in terms of an animal’s energetic output in joules per second. Furthermore, a behavioral study in starlings has indicated that, when choosing whether to walk or to fly to rewards, the birds appeared to use the foraging mode that maximized the net rate of gain over time (Bautista, Tinbergen, & Kacelnik, 2001). It will be interesting to determine whether rodents use a comparable method to integrate rewards and effort and, if so, whether ACC lesions and/or DA depletions affect this calculation. Finally, work itself may be considered on more than one dimension: it may be necessary to expend effort both in traveling to reach a source of food and in handling and consuming it once obtained (Aoki & Matsushima, 2005; Stephens & Krebs, 1986), and either or both of these may be evaluated by the ACC and mesolimbic DA pathways.
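The difference between costing effort as energetic output and costing it in a rate currency can be shown with a toy calculation. The sketch below compares two hypothetical foraging modes: a slow, cheap "walk" and a fast, expensive "fly". All numbers are invented for illustration and are not taken from the starling study. Net rate of gain, (gain − cost) / time, favors flying because it saves time, whereas a rule that minimized power output, or one that maximized net gain per joule spent (shown as a contrasting currency), would favor walking.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ForagingMode:
    name: str
    gain_j: float   # energy obtained per food item (J), hypothetical
    time_s: float   # time taken to obtain the item (s), hypothetical
    power_w: float  # metabolic cost while foraging (J/s), hypothetical

    @property
    def cost_j(self) -> float:
        """Total energy spent obtaining one item."""
        return self.power_w * self.time_s

    def net_rate(self) -> float:
        """Net rate of energy gain: (gain - cost) / time, in J/s."""
        return (self.gain_j - self.cost_j) / self.time_s

    def efficiency(self) -> float:
        """Contrasting currency: net gain per unit of energy spent."""
        return (self.gain_j - self.cost_j) / self.cost_j


walk = ForagingMode("walk", gain_j=100.0, time_s=10.0, power_w=1.0)
fly = ForagingMode("fly", gain_j=100.0, time_s=4.0, power_w=5.0)

best_by_rate = max((walk, fly), key=ForagingMode.net_rate)
best_by_efficiency = max((walk, fly), key=ForagingMode.efficiency)
print("net rate favors:", best_by_rate.name)
print("efficiency favors:", best_by_efficiency.name)
```

Walking here yields 9 J/s against 20 J/s for flying, even though flying costs five times more energy per second, which illustrates why an effort cost need not be evaluated as raw output.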

Similarly, while there is increasing evidence implicating the ACC and mesolimbic DA system, particularly at the level of the NAc, in enabling an animal to overcome effort constraints to obtain an optimal outcome, exactly how this circuit produces such behavior remains unknown. One possibility is that the ACC provides the NAc with an excitatory signal indicating choice preference, based on the effort required and the reward expected for each available option. This signal could, in turn, be biased by DA, so that when the reward is of greater incentive value, the top-down signal from the ACC would be augmented. In addition to being influenced by activity in the superior colliculus and other frontal cortical areas (Dommett et al., 2005; Murase, Grenhoff, Chouvet, Gonon, & Svensson, 1993; Taber & Fibiger, 1995), DA neurons receive afferents from the ACC that may act as a feed-forward loop, so that when the animal decides it is worth putting in extra work to obtain a greater reward, signals from the ACC would cause additional DA to be released. Future experiments will need to examine the interactions between the ACC, NAc, VTA and other candidate structures, such as the amygdala and hippocampus, using a variety of interference and recording techniques to gain a richer understanding of how animals decide on the value of work.

Acknowledgements

This work was funded by the MRC and a Wellcome Trust Prize Studentship and Fellowship to MEW. Additional support came from the Royal Society (MFSR) and Wellcome Trust (DB). Parts of the research have been reported previously in Walton et al. (2002, 2003, 2005) and at SFN (Walton et al., 2004. Soc. Neurosci Abstr, 29, 211.6).

Footnotes

Rat MFC is taken here to include infralimbic, prelimbic and pre- and perigenual cingulate cortices extending back as far as bregma. Although the identification of exact homologues of primate prefrontal regions in the rat brain is controversial, there is good anatomical and behavioral evidence to suggest that these areas are similar to medial frontal cortical regions in macaque monkeys (Ongur & Price, 2000; Preuss, 1995).

References

- Aberman JE, Salamone JD. Nucleus accumbens dopamine depletions make rats more sensitive to high ratio requirements but do not impair primary food reinforcements. Neuroscience. 1999;92(2):545–552. doi: 10.1016/s0306-4522(99)00004-4.

- An X, Bandler R, Ongur D, Price JL. Prefrontal cortical projections to longitudinal columns in the midbrain periaqueductal gray in macaque monkeys. Journal of Comparative Neurology. 1998;401(4):455–479.

- Aoki N, Matsushima T. Labor investment in consumption: Neural correlates of anticipated food rewards and their roles on subjective evaluation in the avian arcopallium. Society for Neuroscience Abstracts. 2005;75:22.

- Barrash J, Tranel D, Anderson SW. Acquired personality disturbances associated with bilateral damage to the ventromedial prefrontal region. Developmental Neuropsychology. 2000;18(3):355–381. doi: 10.1207/S1532694205Barrash.

- Bautista LM, Tinbergen J, Kacelnik A. To walk or to fly? How birds choose among foraging modes. Proceedings of the National Academy of Sciences of the United States of America. 2001;98(3):1089–1094. doi: 10.1073/pnas.98.3.1089.

- Berger B. Dopaminergic innervation of the frontal cerebral cortex. Evolutionary trends and functional implications. Advances in Neurology. 1992;57:525–544.

- Berridge KC, Robinson TE. What is the role of dopamine in reward: hedonic impact, reward learning, or incentive salience? Brain Research. Brain Research Reviews. 1998;28(3):309–369. doi: 10.1016/s0165-0173(98)00019-8.

- Botvinick MM, Cohen JD, Carter CS. Conflict monitoring and anterior cingulate cortex: an update. Trends in Cognitive Sciences. 2004;8(12):539–546. doi: 10.1016/j.tics.2004.10.003.

- Bradshaw CM, Szabadi E. Choice between delayed reinforcers in a discrete-trials schedule: the effect of deprivation level. Quarterly Journal of Experimental Psychology. B, Comparative and physiological psychology. 1992;44(1):1–6. doi: 10.1080/02724999208250599.

- Caine SB, Koob GF. Effects of mesolimbic dopamine depletion on responding maintained by cocaine and food. Journal of the Experimental Analysis of Behavior. 1994;61(2):213–221. doi: 10.1901/jeab.1994.61-213.

- Cardinal RN, Pennicott DR, Sugathapala CL, Robbins TW, Everitt BJ. Impulsive choice induced in rats by lesions of the nucleus accumbens core. Science. 2001;292(5526):2499–2501. doi: 10.1126/science.1060818.

- Cardinal RN, Robbins TW, Everitt BJ. The effects of d-amphetamine, chlordiazepoxide, alpha-flupenthixol and behavioural manipulations on choice of signalled and unsignalled delayed reinforcement in rats. Psychopharmacology (Berl) 2000;152(4):362–375. doi: 10.1007/s002130000536.

- Chiba T, Kayahara T, Nakano K. Efferent projections of infralimbic and prelimbic areas of the medial prefrontal cortex in the Japanese monkey, Macaca fuscata. Brain Research. 2001;888(1):83–101. doi: 10.1016/s0006-8993(00)03013-4.

- Clement TS, Feltus JR, Kaiser DH, Zentall TR. “Work ethic” in pigeons: reward value is directly related to the effort or time required to obtain the reward. Psychonomic Bulletin & Review. 2000;7(1):100–106. doi: 10.3758/bf03210727.

- Cousins MS, Atherton A, Turner L, Salamone JD. Nucleus accumbens dopamine depletions alter relative response allocation in a T-maze cost/benefit task. Behavioural Brain Research. 1996;74(1-2):189–197. doi: 10.1016/0166-4328(95)00151-4.

- Cousins MS, Salamone JD. Nucleus accumbens dopamine depletions in rats affect relative response allocation in a novel cost/benefit procedure. Pharmacology, Biochemistry, and Behavior. 1994;49(1):85–91. doi: 10.1016/0091-3057(94)90460-x.

- Critchley HD, Mathias CJ, Josephs O, O’Doherty J, Zanini S, Dewar BK, et al. Human cingulate cortex and autonomic control: converging neuroimaging and clinical evidence. Brain. 2003;126(Pt 10):2139–2152. doi: 10.1093/brain/awg216.

- de Brabander JM, de Bruin JP, van Eden CG. Comparison of the effects of neonatal and adult medial prefrontal cortex lesions on food hoarding and spatial delayed alternation. Behavioural Brain Research. 1991;42(1):67–75. doi: 10.1016/s0166-4328(05)80041-5.

- Denk F, Walton ME, Jennings KA, Sharp T, Rushworth MF, Bannerman DM. Differential involvement of serotonin and dopamine systems in cost-benefit decisions about delay or effort. Psychopharmacology (Berl) 2005;179(3):587–596. doi: 10.1007/s00213-004-2059-4.

- Dias R, Aggleton JP. Effects of selective excitotoxic prefrontal lesions on acquisition of nonmatching- and matching-to-place in the T-maze in the rat: differential involvement of the prelimbic-infralimbic and anterior cingulate cortices in providing behavioural flexibility. European Journal of Neuroscience. 2000;12(12):4457–4466. doi: 10.1046/j.0953-816x.2000.01323.x.

- Dommett E, Coizet V, Blaha CD, Martindale J, Lefebvre V, Walton N, et al. How visual stimuli activate dopaminergic neurons at short latency. Science. 2005;307(5714):1476–1479. doi: 10.1126/science.1107026.

- Doya K. Metalearning and neuromodulation. Neural Networks. 2002;15(4-6):495–506. doi: 10.1016/s0893-6080(02)00044-8.

- Evenden JL, Robbins TW. Effects of unilateral 6-hydroxydopamine lesions of the caudate-putamen on skilled forepaw use in the rat. Behavioural Brain Research. 1984;14(1):61–68. doi: 10.1016/0166-4328(84)90020-2.

- Evenden JL, Ryan CN. The pharmacology of impulsive behaviour in rats: the effects of drugs on response choice with varying delays of reinforcement. Psychopharmacology (Berl) 1996;128(2):161–170. doi: 10.1007/s002130050121.

- Floyd NS, Price JL, Ferry AT, Keay KA, Bandler R. Orbitomedial prefrontal cortical projections to distinct longitudinal columns of the periaqueductal gray in the rat. Journal of Comparative Neurology. 2000;422(4):556–578. doi: 10.1002/1096-9861(20000710)422:4<556::aid-cne6>3.0.co;2-u.

- Floyd NS, Price JL, Ferry AT, Keay KA, Bandler R. Orbitomedial prefrontal cortical projections to hypothalamus in the rat. Journal of Comparative Neurology. 2001;432(3):307–328. doi: 10.1002/cne.1105.

- Gabriel M, Kubota Y, Sparenborg S, Straube K, Vogt BA. Effects of cingulate cortical lesions on avoidance learning and training-induced unit activity in rabbits. Experimental Brain Research. 1991;86(3):585–600. doi: 10.1007/BF00230532.

- Gariano RF, Groves PM. Burst firing induced in midbrain dopamine neurons by stimulation of the medial prefrontal and anterior cingulate cortices. Brain Research. 1988;462(1):194–198. doi: 10.1016/0006-8993(88)90606-3.

- Gaspar P, Berger B, Febvret A, Vigny A, Henry JP. Catecholamine innervation of the human cerebral cortex as revealed by comparative immunohistochemistry of tyrosine hydroxylase and dopamine-beta-hydroxylase. Journal of Comparative Neurology. 1989;279(2):249–271. doi: 10.1002/cne.902790208.

- Glimcher P. Decisions, decisions, decisions: choosing a biological science of choice. Neuron. 2002;36(2):323–332. doi: 10.1016/s0896-6273(02)00962-5.

- Gusnard DA, Ollinger JM, Shulman GL, Cloninger CR, Price JL, Van Essen DC, et al. Persistence and brain circuitry. Proceedings of the National Academy of Sciences of the United States of America. 2003;100(6):3479–3484. doi: 10.1073/pnas.0538050100.

- Ho MY, Mobini S, Chiang TJ, Bradshaw CM, Szabadi E. Theory and method in the quantitative analysis of “impulsive choice” behaviour: implications for psychopharmacology. Psychopharmacology (Berl) 1999;146(4):362–372. doi: 10.1007/pl00005482.

- Horvitz JC, Ettenberg A. Haloperidol blocks the response-reinstating effects of food reward: a methodology for separating neuroleptic effects on reinforcement and motor processes. Pharmacology, Biochemistry, and Behavior. 1988;31(4):861–865. doi: 10.1016/0091-3057(88)90396-6.

- Ikemoto S, Panksepp J. Dissociations between appetitive and consummatory responses by pharmacological manipulations of reward-relevant brain regions. Behavioral Neuroscience. 1996;110(2):331–345. doi: 10.1037//0735-7044.110.2.331.

- Ikemoto S, Panksepp J. The role of nucleus accumbens dopamine in motivated behavior: a unifying interpretation with special reference to reward-seeking. Brain Research. Brain Research Reviews. 1999;31(1):6–41. doi: 10.1016/s0165-0173(99)00023-5.

- Ishiwari K, Weber SM, Mingote S, Correa M, Salamone JD. Accumbens dopamine and the regulation of effort in food-seeking behavior: modulation of work output by different ratio or force requirements. Behavioural Brain Research. 2004;151(1-2):83–91. doi: 10.1016/j.bbr.2003.08.007.

- Janson CH. Experimental evidence for spatial memory in foraging wild capuchin monkeys, Cebus apella. Animal Behaviour. 1998;55(5):1229–1243. doi: 10.1006/anbe.1997.0688.

- Kacelnik A. Normative and descriptive models of decision making: time discounting and risk sensitivity. Ciba Foundation Symposium. 1997;208:51–67; discussion 67–70. doi: 10.1002/9780470515372.ch5.

- Kacelnik A, Marsh B. Cost can increase preference in starlings. Animal Behaviour. 2002;63(2):245–250.

- Kheramin S, Body S, Ho MY, Velazquez-Martinez DN, Bradshaw CM, Szabadi E, et al. Effects of orbital prefrontal cortex dopamine depletion on inter-temporal choice: a quantitative analysis. Psychopharmacology (Berl) 2004;175(2):206–214. doi: 10.1007/s00213-004-1813-y.

- Knutson B, Taylor J, Kaufman M, Peterson R, Glover G. Distributed neural representation of expected value. Journal of Neuroscience. 2005;25(19):4806–4812. doi: 10.1523/JNEUROSCI.0642-05.2005.

- Lacroix L, Broersen LM, Weiner I, Feldon J. The effects of excitotoxic lesion of the medial prefrontal cortex on latent inhibition, prepulse inhibition, food hoarding, elevated plus maze, active avoidance and locomotor activity in the rat. Neuroscience. 1998;84(2):431–442. doi: 10.1016/s0306-4522(97)00521-6.

- Liao RM, Fowler SC. Haloperidol produces within-session increments in operant response duration in rats. Pharmacology, Biochemistry, and Behavior. 1990;36(1):191–201. doi: 10.1016/0091-3057(90)90150-g.

- Lindvall O, Bjorklund A, Divac I. Organization of catecholamine neurons projecting to the frontal cortex in the rat. Brain Research. 1978;142(1):1–24. doi: 10.1016/0006-8993(78)90173-7.

- Matsumoto K, Suzuki W, Tanaka K. Neuronal correlates of goal-based motor selection in the prefrontal cortex. Science. 2003;301(5630):229–232. doi: 10.1126/science.1084204.

- Mazur JE. An adjusting procedure for studying delayed reinforcement. In: Commons ML, Mazur JE, Nevin JA, Rachlin H, editors. Quantitative analyses of behavior. Vol. 5. Hillsdale, NJ: Lawrence Erlbaum Associates, Inc.; 1987. pp. 55–73.

- McClure SM, Daw ND, Montague PR. A computational substrate for incentive salience. Trends in Neurosciences. 2003;26(8):423–428. doi: 10.1016/s0166-2236(03)00177-2.

- Miller EK, Cohen JD. An integrative theory of prefrontal cortex function. Annual Review of Neuroscience. 2001;24:167–202. doi: 10.1146/annurev.neuro.24.1.167.

- Mobini S, Chiang TJ, Ho MY, Bradshaw CM, Szabadi E. Comparison of the effects of clozapine, haloperidol, chlorpromazine and d-amphetamine on performance on a time-constrained progressive ratio schedule and on locomotor behaviour in the rat. Psychopharmacology (Berl) 2000;152(1):47–54. doi: 10.1007/s002130000486.

- Montague PR, Berns GS. Neural economics and the biological substrates of valuation. Neuron. 2002;36(2):265–284. doi: 10.1016/s0896-6273(02)00974-1.

- Monterosso J, Ainslie G. Beyond discounting: possible experimental models of impulse control. Psychopharmacology (Berl) 1999;146(4):339–347. doi: 10.1007/pl00005480.

- Murase S, Grenhoff J, Chouvet G, Gonon FG, Svensson TH. Prefrontal cortex regulates burst firing and transmitter release in rat mesolimbic dopamine neurons studied in vivo. Neuroscience Letters. 1993;157(1):53–56. doi: 10.1016/0304-3940(93)90641-w.

- Neill DB, Justice JB. An hypothesis for a behavioral function of dopaminergic transmission in nucleus accumbens. In: Chronister RB, Defrance JF, editors. The Neurobiology of the Nucleus Accumbens. Brunswick, Canada: Hue Institute; 1981. pp. 343–350.

- Niv Y, Daw ND, Dayan P. How fast to work: Response vigor, motivation and tonic dopamine. In: Weiss Y, Schölkopf B, Platt J, editors. Advances in Neural Information Processing Systems. Vol. 18. Cambridge, MA: MIT Press; 2005. pp. 1019–1026.

- Ongur D, An X, Price JL. Prefrontal cortical projections to the hypothalamus in macaque monkeys. Journal of Comparative Neurology. 1998;401(4):480–505.

- Ongur D, Price JL. The organization of networks within the orbital and medial prefrontal cortex of rats, monkeys and humans. Cerebral Cortex. 2000;10(3):206–219. doi: 10.1093/cercor/10.3.206.

- Phillips PE, Stuber GD, Heien ML, Wightman RM, Carelli RM. Subsecond dopamine release promotes cocaine seeking. Nature. 2003;422(6932):614–618. doi: 10.1038/nature01476.

- Platt ML. Neural correlates of decisions. Current Opinion in Neurobiology. 2002;12(2):141–148. doi: 10.1016/s0959-4388(02)00302-1.

- Pratt WE, Mizumori SJ. Neurons in rat medial prefrontal cortex show anticipatory rate changes to predictable differential rewards in a spatial memory task. Behavioural Brain Research. 2001;123(2):165–183. doi: 10.1016/s0166-4328(01)00204-2.

- Preuss TM. Do rats have prefrontal cortex? The Rose-Woolsey-Akert program reconsidered. Journal of Cognitive Neuroscience. 1995;7(1):1–24. doi: 10.1162/jocn.1995.7.1.1.

- Procyk E, Tanaka YL, Joseph JP. Anterior cingulate activity during routine and non-routine sequential behaviors in macaques. Nature Neuroscience. 2000;3(5):502–508. doi: 10.1038/74880.

- Rawlins JN, Feldon J, Butt S. The effects of delaying reward on choice preference in rats with hippocampal or selective septal lesions. Behavioural Brain Research. 1985;15(3):191–203. doi: 10.1016/0166-4328(85)90174-3.

- Richards JB, Mitchell SH, de Wit H, Seiden LS. Determination of discount functions in rats with an adjusting-amount procedure. Journal of the Experimental Analysis of Behavior. 1997;67(3):353–366. doi: 10.1901/jeab.1997.67-353.

- Richards JB, Sabol KE, de Wit H. Effects of methamphetamine on the adjusting amount procedure, a model of impulsive behavior in rats. Psychopharmacology (Berl). 1999;146(4):432–439. doi: 10.1007/pl00005488.

- Ridderinkhof KR, Ullsperger M, Crone EA, Nieuwenhuis S. The role of the medial frontal cortex in cognitive control. Science. 2004;306(5695):443–447. doi: 10.1126/science.1100301.

- Rushworth MF, Walton ME, Kennerley SW, Bannerman DM. Action sets and decisions in the medial frontal cortex. Trends in Cognitive Sciences. 2004;8(9):410–417. doi: 10.1016/j.tics.2004.07.009.

- Salamone JD, Correa M. Motivational views of reinforcement: implications for understanding the behavioral functions of nucleus accumbens dopamine. Behavioural Brain Research. 2002;137(1-2):3–25. doi: 10.1016/s0166-4328(02)00282-6.

- Salamone JD, Cousins MS, Bucher S. Anhedonia or anergia? Effects of haloperidol and nucleus accumbens dopamine depletion on instrumental response selection in a T-maze cost/benefit procedure. Behavioural Brain Research. 1994;65(2):221–229. doi: 10.1016/0166-4328(94)90108-2.

- Salamone JD, Steinpreis RE, McCullough LD, Smith P, Grebel D, Mahan K. Haloperidol and nucleus accumbens dopamine depletion suppress lever pressing for food but increase free food consumption in a novel food choice procedure. Psychopharmacology (Berl). 1991;104(4):515–521. doi: 10.1007/BF02245659.

- Schall JD, Thompson KG. Neural selection and control of visually guided eye movements. Annual Review of Neuroscience. 1999;22:241–259. doi: 10.1146/annurev.neuro.22.1.241.

- Schultz W. Getting formal with dopamine and reward. Neuron. 2002;36(2):241–263. doi: 10.1016/s0896-6273(02)00967-4.

- Schweimer J, Hauber W. Involvement of the rat anterior cingulate cortex in control of instrumental responses guided by reward expectancy. Learning and Memory. 2005;12(3):334–342. doi: 10.1101/lm.90605.

- Seamans JK, Floresco SB, Phillips AG. Functional differences between the prelimbic and anterior cingulate regions of the rat prefrontal cortex. Behavioral Neuroscience. 1995;109(6):1063–1073. doi: 10.1037//0735-7044.109.6.1063.

- Sesack SR, Deutch AY, Roth RH, Bunney BS. Topographical organization of the efferent projections of the medial prefrontal cortex in the rat: an anterograde tract-tracing study with Phaseolus vulgaris leucoagglutinin. Journal of Comparative Neurology. 1989;290(2):213–242. doi: 10.1002/cne.902900205.

- Shidara M, Richmond BJ. Anterior cingulate: single neuronal signals related to degree of reward expectancy. Science. 2002;296(5573):1709–1711. doi: 10.1126/science.1069504.

- Stephens DW, Krebs JR. Foraging Theory. Princeton: Princeton University Press; 1986.

- Stevens JR, Rosati AG, Ross KR, Hauser MD. Will travel for food: spatial discounting in two new world monkeys. Current Biology. 2005;15(20):1855–1860. doi: 10.1016/j.cub.2005.09.016.

- Taber MT, Fibiger HC. Electrical stimulation of the prefrontal cortex increases dopamine release in the nucleus accumbens of the rat: modulation by metabotropic glutamate receptors. Journal of Neuroscience. 1995;15(5 Pt 2):3896–3904. doi: 10.1523/JNEUROSCI.15-05-03896.1995.

- Tinbergen N. The study of instinct. New York, NY, US: Clarendon Press / Oxford University Press; 1951.

- Tong ZY, Overton PG, Clark D. Stimulation of the prefrontal cortex in the rat induces patterns of activity in midbrain dopaminergic neurons which resemble natural burst events. Synapse. 1996;22(3):195–208. doi: 10.1002/(SICI)1098-2396(199603)22:3<195::AID-SYN1>3.0.CO;2-7.

- Ungerstedt U. Adipsia and aphagia after 6-hydroxydopamine induced degeneration of the nigro-striatal dopamine system. Acta Physiologica Scandinavica. Supplementum. 1971;367:95–122. doi: 10.1111/j.1365-201x.1971.tb11001.x.

- Uylings HB, van Eden CG. Qualitative and quantitative comparison of the prefrontal cortex in rat and in primates, including humans. Progress in Brain Research. 1990;85:31–62. doi: 10.1016/s0079-6123(08)62675-8.

- Vogt BA, Peters A. Form and distribution of neurons in rat cingulate cortex: areas 32, 24, and 29. Journal of Comparative Neurology. 1981;195(4):603–625. doi: 10.1002/cne.901950406.

- Wade TR, de Wit H, Richards JB. Effects of dopaminergic drugs on delayed reward as a measure of impulsive behavior in rats. Psychopharmacology (Berl). 2000;150(1):90–101. doi: 10.1007/s002130000402.

- Walton ME, Bannerman DM, Alterescu K, Rushworth MF. Functional specialization within medial frontal cortex of the anterior cingulate for evaluating effort-related decisions. Journal of Neuroscience. 2003;23(16):6475–6479. doi: 10.1523/JNEUROSCI.23-16-06475.2003.

- Walton ME, Bannerman DM, Rushworth MF. The role of rat medial frontal cortex in effort-based decision making. Journal of Neuroscience. 2002;22(24):10996–11003. doi: 10.1523/JNEUROSCI.22-24-10996.2002.

- Walton ME, Croxson PL, Rushworth MF, Bannerman DM. The mesocortical dopamine projection to anterior cingulate cortex plays no role in guiding effort-related decisions. Behavioral Neuroscience. 2005;119(1):323–328. doi: 10.1037/0735-7044.119.1.323.

- Walton ME, Devlin JT, Rushworth MF. Interactions between decision making and performance monitoring within prefrontal cortex. Nature Neuroscience. 2004;7(11):1259–1265. doi: 10.1038/nn1339.

- Winstanley CA, Theobald DE, Dalley JW, Robbins TW. Interactions between serotonin and dopamine in the control of impulsive choice in rats: therapeutic implications for impulse control disorders. Neuropsychopharmacology. 2005;30(4):669–682. doi: 10.1038/sj.npp.1300610.

- Wise RA, Rompre PP. Brain dopamine and reward. Annual Review of Psychology. 1989;40:191–225. doi: 10.1146/annurev.ps.40.020189.001203.