Abstract

Many clinics and payers are beginning programs to collect and interpret outcomes related to quality of care and provider performance (ie, benchmarking). Outcomes assessment is commonly done using observational research designs, which makes it important for those involved in these endeavors to appreciate the underlying challenges and limitations of these designs. This perspective article discusses the advantages and limitations of using observational research to evaluate quality of care and provider performance in order to inform clinicians, researchers, administrators, and policy makers who want to use data to guide practice and policy or to critically appraise observational studies and benchmarking efforts. Threats to internal validity, including potential confounding, patient selection bias, and missing data, are discussed along with statistical methods commonly used to address these limitations. An example is given from a recent study comparing physical therapy clinic performance in terms of patient outcomes and service utilization with and without the use of these methods. The authors demonstrate that crude differences in clinic outcomes and service utilization tend to be inflated compared with differences that are statistically adjusted for selected threats to internal validity. The authors conclude that quality-of-care measurement and ranking procedures that do not use similar methods may produce misleading findings.

Benchmarking and other quality assessment and reporting efforts often rely on the results of observational research designs. Over the past decade, there has been a proliferation of efforts to benchmark providers against national averages, as well as external quality assessment initiatives spearheaded by third-party payers that use clinical databases to evaluate, publicly report, or reward provider performance.1–10 Measuring health care quality (ie, outcomes assessment) and comparing providers’ performance on those measures (ie, provider profiling) are important strategies for making providers accountable for the care they provide.11 Although provider profiling is a relatively new area of research and effort in medical care generally, quality indicators for profiling providers have been developed in long-term care,5,6 managed care,7 and hospital inpatient and outpatient clinic settings.8

Several health care quality indicators are based on measurement of a patient's performance or self-reported performance of functional tasks. For example, several of the nursing home quality indicators used by the Centers for Medicare and Medicaid Services are based on performance measurement data available in the Minimum Data Set assessments.6 As another example, the Health Outcomes Survey conducted by the Centers for Medicare and Medicaid Services uses the Medical Outcomes Study 36-Item Short-Form Health Survey (SF-36), a patient self-assessment tool, to assess health outcomes of Medicare beneficiaries in managed care settings and to measure performance of health plans.7

Provider profiles can provide practitioners with meaningful performance information, which can be used to help improve service quality and efficiency. Provider profiles can be used by purchasers to stimulate providers to reduce undesirable variation in services, control costs, and ensure that patients and payers are getting the best value for their money.1 Provider profiles, when publicly reported, also can aid consumer and purchaser decision making.12

Many strategies to evaluate quality of care or profile provider performance rely on analysis of observational data collected in administrative or outcomes databases. One advantage of observational research is that many such studies use pre-existing data sets, making them much less costly to implement than an experimental design. Furthermore, studies using observational data, whether collected prospectively or analyzed retrospectively, can examine questions that would not be feasible or ethical to address with other research designs.

Although the use of observational study designs may be the most feasible approach to evaluating quality and comparing provider performance, these designs have limitations and require analytical approaches to strengthen the internal validity of the study. Threats to internal validity in these study designs include potential confounding and patient selection bias caused by nonrandom assignment of patients to providers,13 lack of independence of patients within providers,14 and missing data.

Many physical therapy providers are beginning programs to collect and interpret their own outcomes,15,16 and there is a strong likelihood that payers will expand efforts to redesign reimbursement policy based on provider performance.2 Thus, it is critically important for those involved in these endeavors, such as clinicians, researchers, administrators, and policy makers, to appreciate some of the underlying challenges and methodological issues related to outcomes assessment. Accordingly, the purposes of this perspective article are (1) to discuss the advantages and limitations of using observational research to measure health care quality and evaluate provider performance and (2) to inform clinicians, researchers, administrators, and policy makers who want to use evidence to guide practice and policy or critically appraise observational studies. In this perspective article, we discuss key limitations of observational research designs and highlight statistical methods to address these limitations. We concentrate on managing potential confounding by patient demographic variables, patient selection bias, and missing data. Finally, we use an example from a recent research study comparing physical therapy clinic performance in terms of patient outcomes and service utilization with and without the use of these methods.

Potential Confounding

Administrative data collected from routine practice often are used in observational studies. These data are quite different from data collected in a study using an experimental design where patients are randomly assigned to treatment groups. With observational designs, there is no design mechanism to evenly distribute or control patients’ characteristics between groups.17 Thus, there is a strong potential for confounding of results because of differences in patient groups.

Confounding occurs if there are extraneous, or uncontrolled, variables associated with both the independent and dependent variables. Because a confounding variable, or confounder, can itself cause, prevent, or modify the outcome of interest, it can produce an apparent association between the independent and dependent variables when none exists, or it can mask a relationship that does exist. Observational studies compare pre-existing groups that may differ not only on the interventions they receive but also on many factors that could influence response to treatment. Thus, if an observational study shows a difference between 2 pre-existing groups, that difference may be due to the fact that they received different types of interventions, or it may be due to differences in the characteristics of the groups.

Therefore, in an observational design, the researcher needs to establish group comparability through control of confounders during the data analysis phase to minimize potential confounding.18 Observational research designs should not use crude (ie, unadjusted) estimates of relationships between independent and dependent variables because crude estimates do not account for potential confounders. Instead, observational research designs should use statistical methods to make the groups more mathematically equivalent on baseline characteristics that may influence response to treatment. These methods often include variables that are potential confounders as covariates (ie, control variables that are added as independent variables) in the analyses.
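The contrast between crude and covariate-adjusted estimates can be illustrated with a small Python sketch. The patient records below are hypothetical: discharge scores depend only on a severity flag (a stand-in confounder), and clinic B simply sees more severe patients. The crude comparison suggests that clinic B performs worse, whereas adding severity as a covariate in an ordinary least squares model removes the apparent clinic effect. The helper names (`solve`, `ols`, `mean`) are illustrative and not part of any package.

```python
# Crude versus covariate-adjusted clinic comparison (hypothetical toy data).


def solve(a, b):
    """Solve a @ x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                for c in range(col, n + 1):
                    m[r][c] -= factor * m[col][c]
    return [m[i][n] / m[i][i] for i in range(n)]


def ols(rows, y):
    """Ordinary least squares coefficients from the normal equations X'X b = X'y."""
    k = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * yi for r, yi in zip(rows, y)) for i in range(k)]
    return solve(xtx, xty)


def mean(values):
    return sum(values) / len(values)


# (clinic, severe, discharge score): clinic 0 = A, clinic 1 = B; severe is the confounder.
patients = (
    [(0, 0, 70.0)] * 8 + [(0, 1, 60.0)] * 2    # clinic A: mostly mild cases
    + [(1, 0, 70.0)] * 2 + [(1, 1, 60.0)] * 8  # clinic B: mostly severe cases
)

# Crude (unadjusted) difference in mean discharge score, clinic B minus clinic A.
crude_difference = (mean([s for c, _, s in patients if c == 1])
                    - mean([s for c, _, s in patients if c == 0]))

# Adjusted model: score = b0 + b1 * clinic + b2 * severe, so b1 is the clinic
# effect with severity held constant.
design = [[1.0, c, sev] for c, sev, _ in patients]
coef = ols(design, [s for _, _, s in patients])

print(f"crude difference:    {crude_difference:.1f}")
print(f"adjusted difference: {coef[1]:.1f}")
```

These toy data contain no noise, so the adjusted clinic coefficient is essentially zero; with real data, adjustment can remove confounding only by variables that were actually measured.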

In contrast, in a randomized controlled trial, patients are randomly assigned to treatment and control groups. Randomized controlled trials are the gold standard of research designs and provide the highest level of evidence on the effect of an intervention because the randomization process, if successful, creates comparability of patient characteristics as patients are randomly assigned to intervention and control groups during the design phase of study. If randomization works, the groups formed by random assignment differ only on the type of intervention they receive.

In observational studies, where patients are not randomly assigned to specific groups, we can attempt to control the influence of confounders by statistically adjusting for patient characteristics.17,19 Multivariable statistical analyses are used because the complexity and diversity of patients, also called the “case mix,” vary across clinicians and institutions, and because patient outcomes are associated with the type and severity of impairments as well as other factors. For example, improvement in patients undergoing rehabilitation can be attributed to many variables, including, but not limited to, age, symptom acuity, surgical history, and comorbid conditions.20–22 These risk variables must be controlled before the outcomes of treatment can be interpreted meaningfully.23 Iezzoni23 has written extensively about risk-adjustment methods for rehabilitation; these methods help explain variation in outcomes and service utilization related to patient factors so that the remaining differences in outcomes can be attributed to the care delivered. Without appropriate adjustment, outcomes cannot be interpreted in a meaningful manner or attributed to therapeutic interventions.16,22–25 Some authors24 have shown that comparisons between health care providers have been compromised by inadequate assessment of case mix.

Risk-adjustment methods typically use statistical models, which provide a way to evaluate the relationship between a dependent variable and one or more independent, or explanatory, variables. In linear modeling, the dependent variable is expressed as a linear function of the explanatory variables plus a random error term, which represents measurement error or other random variation not accounted for by the model. The regression coefficient for an independent variable represents the expected change in the dependent variable per unit change in that independent variable, holding the other variables in the model constant. The works of Jette and colleagues,21,25,26 Freburger,27 and Horn et al,28 among others, provide examples of observational research in the physical therapy literature that have used statistical modeling as a risk-adjustment technique for physical therapy data.
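In standard notation (the symbols are ours, not the authors'), a linear model for patient $i$ with $p$ explanatory variables can be written as follows; the normal-error assumption is the usual textbook one and is stated here only for concreteness.

```latex
y_i = \beta_0 + \beta_1 x_{1i} + \beta_2 x_{2i} + \cdots + \beta_p x_{pi} + \varepsilon_i,
\qquad \varepsilon_i \sim N(0, \sigma^2)

\beta_j = \frac{\partial\, E[\,y_i \mid x_{1i}, \ldots, x_{pi}\,]}{\partial x_{ji}}
\quad \text{(change in the expected outcome per unit change in } x_{ji}\text{, other covariates held fixed)}
```

Risk adjustment enters by including the confounders among the $x_{ji}$, so that the coefficient on a provider indicator is estimated with case mix held constant.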

There are a number of other techniques that can be used to control for group differences in observational studies, including creating comparable groups by matching subjects. Matching techniques may be relatively simple, such as that used by Hart and Dobrzykowski,29 who matched each member of the comparison group to a member of the group treated by orthopedic clinical specialists based on basic demographic characteristics. Matching also can involve more sophisticated approaches, such as the use of propensity scores (the estimated probability of being in the intervention group, given measured characteristics) to create comparison groups of individuals with similar probabilities of being in the intervention group or in the control group.30
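Greedy nearest-neighbor matching on the propensity score can be sketched in a few lines of Python. The sketch assumes propensity scores have already been estimated (typically with a logistic regression of group membership on baseline covariates); the scores, patient identifiers, and the 0.05 caliper are hypothetical, and greedy 1:1 matching without replacement is only one of several matching strategies.

```python
def greedy_match(treated, controls, caliper=0.05):
    """Greedy 1:1 nearest-neighbor matching on the propensity score, without replacement.

    `treated` and `controls` map patient ids to estimated propensity scores.
    Returns (treated_id, control_id) pairs whose scores differ by at most `caliper`.
    """
    available = dict(controls)
    pairs = []
    # Process treated patients from highest to lowest score (one common ordering).
    for t_id, t_score in sorted(treated.items(), key=lambda item: -item[1]):
        if not available:
            break
        c_id = min(available, key=lambda cid: abs(available[cid] - t_score))
        if abs(available[c_id] - t_score) <= caliper:
            pairs.append((t_id, c_id))
            del available[c_id]  # each control is used at most once
    return pairs


treated = {"t1": 0.80, "t2": 0.55, "t3": 0.30}             # hypothetical scores
controls = {"c1": 0.78, "c2": 0.52, "c3": 0.10, "c4": 0.57}
pairs = greedy_match(treated, controls)
print(pairs)  # t3 has no control within the caliper and stays unmatched
```

Outcomes would then be compared within the matched pairs; unmatched patients (here, t3) are excluded, which is one of the trade-offs of matching.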

Regardless of best efforts to adjust for differences in case mix, the most serious limitation of observational research is that researchers can control only for potential confounders that have been measured. There also may be unmeasured confounders over which providers have little or no control, such as adherence related to motivation or socioeconomic status (eg, having the money to purchase needed equipment or the transportation or time off work to attend therapy). When observational data are analyzed retrospectively, the researcher may have little control over the choice of data elements, so important covariates may not have been measured. For example, most outcome data sets used for physical therapy do not include information that would allow classification of patients according to movement signs and symptoms or specific diagnosis thought to affect outcome.31 Patient classification is only one example of a potential confounder; no risk-adjustment approach can control for every factor affecting outcomes of care.14 The reality is that there are always unobserved or unmeasured confounders.

Selection Bias

Patient selection bias is a distortion of results due to the way subjects are selected for inclusion in the study population. Patient randomization to clinics or therapists is uncommon in studies evaluating clinic or therapist performance17; rather, patients choose the clinics or therapists that care for them. Selection bias may result when providers offer a different mix of specialty services and, therefore, attract different types of patients. If a facility is known for a chronic pain program, for example, that clinic would be expected to have a larger population of patients with chronic pain. Thus, patients in one clinic may differ, for a variety of reasons, from patients treated in another clinic. Taking educational achievement as an example, we can hypothesize that low levels of education might be associated with poorer literacy and difficulty understanding and adhering to treatment recommendations. Thus, lower education may be associated with worse treatment outcomes, and, if so, a provider whose patients were less educated might have, on average, worse treatment outcomes than a provider whose patients were more educated.

Furthermore, patients who go to the same clinic likely share certain characteristics. This phenomenon is called “clustering.” For example, patients might cluster in clinics because they share similar primary sources of referral (eg, a specific group of primary care physicians, surgeons, neurologists, or other specialists) or because the clinic is located in a geographic area whose residents share specific demographic characteristics, such as age, ethnicity, or socioeconomic status.

In addition, patients seen by the same therapist or provider may be clustered. Like patient clustering in clinics, therapist-level clustering in rehabilitation can occur for a variety of reasons. In some facilities, patients are assigned to certain therapists based on their clinical expertise, resulting in groups of patients who have similar characteristics (eg, patients with chronic pain, patients who are pregnant) within a therapist's practice. Provider-level clustering also might occur in rehabilitation because of therapist behavior, which often is individualized and consistent across patients and may or may not be similar to that of other therapists within the same clinic. Thus, variation in patient outcomes and service utilization might result from individual therapist practice patterns and behaviors. If any of these factors are associated with outcomes, they could distort comparisons of provider outcomes.

Furthermore, outcomes of clustered patients generally are not independently distributed. Failure to account for this dependence can bias estimates of the regression coefficients and generally causes their standard errors to be underestimated,32 which may lead to incorrect conclusions about statistical significance. Greenfield et al19 demonstrated that comparisons of physician groups may be inaccurate if adjustment for both patient case mix and provider-level clustering is not performed before comparing quality measures of physician groups. Thus, statistical analyses of patient outcomes and service utilization by providers should control for clustering of patients within providers.
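One standard way to quantify why ignoring clustering understates standard errors is Kish's design effect for an estimated mean with clusters of roughly equal size. Here $\bar{m}$ is the average cluster size and $\rho$ is the intraclass correlation, the share of total outcome variance lying between clusters ($\tau^2$ between, $\sigma^2$ within). The value $\rho = 0.05$ in the worked line is hypothetical; $\bar{m}$ uses the patient and therapist counts reported for the case example below, treating therapists as if they had equal caseloads.

```latex
\rho = \frac{\tau^2}{\tau^2 + \sigma^2},
\qquad
\mathrm{DEFF} = 1 + (\bar{m} - 1)\,\rho,
\qquad
\mathrm{SE}_{\text{clustered}} \approx \sqrt{\mathrm{DEFF}}\;\mathrm{SE}_{\text{naive}}

\bar{m} = \frac{16{,}281 \text{ patients}}{1{,}058 \text{ therapists}} \approx 15.4,
\quad \rho = 0.05
\;\Rightarrow\;
\mathrm{DEFF} \approx 1 + 14.4 \times 0.05 = 1.72,
\quad \sqrt{1.72} \approx 1.31
```

Under these assumptions, a naive standard error would be roughly 24% too small (1/1.31 ≈ 0.76), even with a modest intraclass correlation.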

Control for clustering is relatively new to observational research in the physical therapy literature. However, Jewell and Riddle33 recently used a robust variance estimation method in their multiple logistic regression models to predict clinically meaningful improvement for patients with sciatica. Robust statistical methods are methods that are not unduly affected by departures from statistical assumptions, such as lack of independence of observations.
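The idea behind robust (sandwich) variance estimation for clustered data can be sketched for the simplest case, a linear regression with one predictor. This is not the logistic model Jewell and Riddle used; it is a simplified illustration on hypothetical data in which the predictor is constant within each cluster and the clusters differ systematically. The cluster-robust estimator shown is the basic CR0 form, without the small-sample corrections that statistical packages usually apply.

```python
import math
from collections import defaultdict


def ols_with_cluster_se(x, y, cluster):
    """Fit y = a + b*x; return b with its naive and cluster-robust (CR0) standard errors."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    xc = [xi - x_bar for xi in x]  # centering makes X'X diagonal, isolating the slope
    sxx = sum(v * v for v in xc)
    b = sum(v * (yi - y_bar) for v, yi in zip(xc, y)) / sxx
    a = y_bar - b * x_bar
    resid = [yi - a - b * xi for xi, yi in zip(x, y)]

    # Naive SE: assumes independent, equal-variance errors.
    s2 = sum(e * e for e in resid) / (n - 2)
    naive_se = math.sqrt(s2 / sxx)

    # Sandwich "meat": sum score contributions within each cluster, then square.
    score = defaultdict(float)
    for v, e, g in zip(xc, resid, cluster):
        score[g] += v * e
    robust_se = math.sqrt(sum(s * s for s in score.values()) / sxx ** 2)
    return b, naive_se, robust_se


# Hypothetical data: 4 therapists (clusters) with 3 patients each.
x = [0, 0, 0, 0, 0, 0, 1, 1, 1, 1, 1, 1]
y = [10, 11, 12, 4, 5, 6, 12, 13, 14, 6, 7, 8]
cluster = ["A"] * 3 + ["B"] * 3 + ["C"] * 3 + ["D"] * 3

slope, naive_se, robust_se = ols_with_cluster_se(x, y, cluster)
print(f"slope={slope:.2f}  naive SE={naive_se:.2f}  cluster-robust SE={robust_se:.2f}")
```

Because residuals within each cluster share a sign, the cluster-robust standard error here is about 1.5 times the naive one; treating the 12 patients as independent would overstate the precision of the slope.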

Another approach to control for clustering, especially when a data set has a hierarchical structure, is to use hierarchical linear models (or multilevel statistical models) to separate the within- and between-cluster variability and estimate cluster-specific parameters.14,34,35 Hierarchical linear models incorporate individual, patient-level information while accounting for the dependence of observations within higher-level groupings (ie, clustering). Resnik et al36 used hierarchical linear models, similar to those described in detail in the case example below, in research on the impact of state regulation of physical therapy services.
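The separation of within- and between-cluster variability can be illustrated with the simplest variance decomposition behind a 2-level random-intercept model: the one-way analysis-of-variance (method-of-moments) estimator for balanced clusters. Hierarchical linear model software typically estimates these components by (restricted) maximum likelihood instead and handles unbalanced clusters and covariates; the therapist labels and scores below are hypothetical.

```python
def variance_components(groups):
    """ANOVA estimates of between-cluster (tau^2) and within-cluster (sigma^2) variance.

    `groups` maps a cluster label to a list of outcomes; all lists must have equal length.
    """
    sizes = {len(v) for v in groups.values()}
    if len(sizes) != 1:
        raise ValueError("this simple estimator assumes balanced clusters")
    m = sizes.pop()
    k = len(groups)
    means = {g: sum(v) / m for g, v in groups.items()}
    grand = sum(means.values()) / k
    msb = m * sum((mu - grand) ** 2 for mu in means.values()) / (k - 1)
    msw = sum((y - means[g]) ** 2 for g, v in groups.items() for y in v) / (k * (m - 1))
    tau2 = max(0.0, (msb - msw) / m)  # truncate negative estimates at zero
    return tau2, msw


# Hypothetical discharge scores for the patients of four therapists.
scores = {
    "therapist_A": [10.0, 11.0, 12.0],
    "therapist_B": [4.0, 5.0, 6.0],
    "therapist_C": [12.0, 13.0, 14.0],
    "therapist_D": [6.0, 7.0, 8.0],
}
tau2, sigma2 = variance_components(scores)
icc = tau2 / (tau2 + sigma2)  # share of total variance lying between therapists
print(f"tau^2={tau2:.2f}  sigma^2={sigma2:.2f}  ICC={icc:.3f}")
```

A large intraclass correlation, as in this deliberately extreme example, signals that patients of the same therapist are far from independent.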

Missing Data

Another problem that can bias the results of observational research is missing data. In observational studies examining outcomes, discharge observations are commonly missing because patients are lost to follow-up.37 At other times, specific items or variables are missing because respondents leave items blank or refuse to answer certain questions. The concern is that, if cases with missing values differ in analytically important ways from cases with complete values, the results could be biased and estimates of effect distorted. Historically, physical therapy authors have attempted to estimate the bias introduced by missing outcome data by comparing characteristics of subjects with and without missing data. Missing data also can be handled analytically. Cases with missing independent variables often are dropped from the analysis.21,25 If dropping cases with missing data would result in the loss of too many observations, researchers may instead create dummy variables to identify missing observations38 or impute missing values using mean or median values.30
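The 2 ad hoc approaches mentioned above, a missing-data indicator (dummy) variable and mean or median imputation, can be sketched together. The variable and values are hypothetical. Both approaches are simple but can bias estimates and understate uncertainty, which is one motivation for the methods described next.

```python
import statistics


def impute_with_indicator(values, center=statistics.mean):
    """Fill missing entries (None) with a summary of the observed values.

    Returns the filled list and a 0/1 indicator marking which entries were missing;
    the indicator can be entered as an additional covariate in the analysis.
    """
    observed = [v for v in values if v is not None]
    fill = center(observed)
    filled = [fill if v is None else v for v in values]
    missing = [1 if v is None else 0 for v in values]
    return filled, missing


ages = [40, None, 60, 50, None]  # hypothetical baseline covariate with gaps
filled_mean, missing_flag = impute_with_indicator(ages)
filled_median, _ = impute_with_indicator([30, None, 80, 50], center=statistics.median)
print(filled_mean, missing_flag, filled_median)
```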

Besides these ad hoc methods, there are a variety of other analytical approaches to handling missing data, including imputation based on regression results, multiple imputation, inverse probability weighting, and likelihood-based methods.39 Inverse probability weighting is the approach demonstrated in the research example we present below. It gives subjects different weights depending on their likelihood of being included in the sample of complete data, which is analogous to using survey weights, where subjects who are more likely to be selected into the study are given less weight in the analysis. Although detailed discussion of each technique for handling missing data is beyond the scope of this article, it is critical that researchers evaluate the impact of missing data in their analysis and, if necessary, take steps to minimize bias related to missing data or acknowledge that, in some cases, excessive missing data may fundamentally invalidate a study's findings.
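For a simple mean outcome, the weighting idea can be written as follows, where $R_i = 1$ if patient $i$ has complete data and $\hat{\pi}_i$ is that patient's estimated probability of having complete data given baseline covariates (notation ours). The numbers in the second line are a hypothetical illustration.

```latex
\hat{\mu}_{\mathrm{IPW}} = \frac{\sum_{i} R_i \, y_i / \hat{\pi}_i}{\sum_{i} R_i / \hat{\pi}_i},
\qquad
w_i = \frac{1}{\hat{\pi}_i}

\hat{\pi}_i = 0.80 \;\Rightarrow\; w_i = 1.25,
\qquad
\hat{\pi}_i = 0.25 \;\Rightarrow\; w_i = 4
```

A complete case from a group that usually has missing follow-up thus stands in for more of the similar patients whose data are missing.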

Application of Statistical Methods to Enhance Validity in an Observational Study

In this section, we demonstrate the application of specific statistical methods to address methodological challenges in observational research, using an analysis of data from the Focus on Therapeutic Outcomes, Inc (FOTO) database as an example. A detailed description of the study design and findings is reported in our companion article in this issue.40 We then assess the impact of these methods by comparing our results (ie, our estimates of physical therapy clinic performance in terms of patient outcomes) with and without them.

Data Source

We conducted a secondary analysis of previously collected data from the Focus on Therapeutic Outcomes, Inc (FOTO) database.* Our analytical sample was selected from a larger data set of clinics (N=358) that participated in the FOTO database in 2000–2001 and treated patients with a variety of syndromes. Clinics were eligible for inclusion in our study if there was at least one physical therapist on staff, clinician and facility registration forms had been completed, the clinic had entered intake data for at least 40 patients with a low back pain syndrome (LBPS), and follow-up data were available for at least two thirds of the clinic's patients with LBPS. Our final study sample consisted of 114 outpatient clinics with 1,058 therapists who treated 16,281 patients with LBPS.

Outcome Measurement: Overall Health Status Measure Scores at Discharge

The patient outcome measure we used was the FOTO overall health status measure (OHS), a measure of health-related quality of life derived from the SF-36 that assesses both mental and physical dimensions of health. Internal consistency of items in OHS constructs with 2 or more items has been reported (α=.57–.91).41,42 Test-retest reliability of data obtained with the OHS has been reported to be good (intraclass correlation coefficient [2,1]=.92).42 Responsiveness in the treatment of patients with LBPS (effect size=0.83) and the validity of the OHS for discriminating expert therapists from average therapists also have been reported.43

Methods for Risk Adjustment and Control for Selection Bias

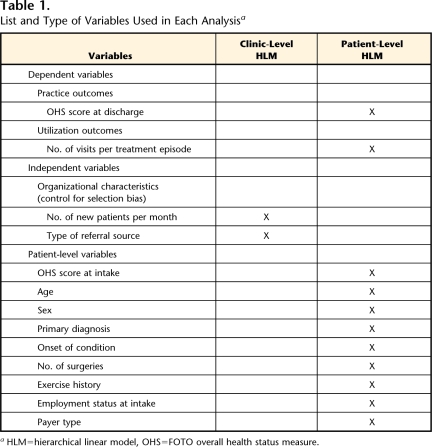

We used statistical risk-adjustment, also known as case-mix–adjustment, techniques to control effects of confounding variables seen in patient populations.23,44 Because we expected that improvement in patients undergoing rehabilitation could be confounded by many variables, including—but not limited to—patient demographic and financial variables,36,43,45 we considered the following patient-level variables in our analyses (Tab. 1).

Table 1.

List and Type of Variables Used in Each Analysis(a)

HLM=hierarchical linear model, OHS=FOTO overall health status measure.

The OHS scores at discharge and number of visits per treatment episode were analyzed using 2 separate 3-level hierarchical linear models (HLMs).36 We believed that multilevel models were most appropriate for this analysis, given the hierarchically structured data, where patients are clustered within therapists and therapists are clustered within practice or clinic and where the primary goal was to compare clusters (ie, clinics).

At level 1, the patients’ discharge OHS scores were assumed to be normally distributed and were modeled as a linear function of patient-level covariates (ie, the variables considered potential confounders). Thus, all measured potential confounders were added to the model as covariates at level 1. The level-1 intercept was, in turn, modeled at the therapist level. Because we did not include any therapist-level covariates, the level-2 model consisted of an intercept term, representing the mean therapist effect within a clinic, plus a random error term representing the therapist-specific effect within that clinic. This technique enables the effect of each therapist to be estimated separately. The mean therapist effect within each clinic was assumed to be related to characteristics at the clinic level. Because the reason a patient receives treatment in one clinic rather than another might be related to expected outcomes, we added 2 sets of clinic-level variables, volume of new patients per month and the proportion of patients referred by each physician type (see below for description of variables), in a further attempt to control for selection bias.46 Thus, at level 3, the level-2 intercept terms (the mean therapist effect within each clinic) were modeled using the clinic-level covariates and a random clinic error term. Table 1 shows all of the variables in the multilevel model.
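One way to write the 3-level model for discharge OHS is sketched below, for patient $i$ seen by therapist $j$ in clinic $k$. The notation is ours, and it assumes that only the intercept varies across therapists and clinics (patient-level slopes fixed) and that the random effects are normally distributed; $a_{pijk}$ are the patient-level covariates, $\text{Vol}_k$ is the monthly volume of new patients, and $\text{Ref}_{qk}$ is the proportion of patients referred by physician type $q$.

```latex
\text{Level 1 (patient):}\quad
Y_{ijk} = \pi_{0jk} + \sum_{p=1}^{P} \pi_{p}\, a_{pijk} + e_{ijk},
\qquad e_{ijk} \sim N(0, \sigma^2)

\text{Level 2 (therapist):}\quad
\pi_{0jk} = \beta_{00k} + r_{0jk},
\qquad r_{0jk} \sim N(0, \tau_{\pi})

\text{Level 3 (clinic):}\quad
\beta_{00k} = \gamma_{000} + \gamma_{001}\,\text{Vol}_k + \sum_{q=1}^{Q} \gamma_{00(q+1)}\,\text{Ref}_{qk} + u_{00k},
\qquad u_{00k} \sim N(0, \tau_{\beta})
```

The same structure would apply to the model for number of visits per treatment episode, with that outcome in place of $Y_{ijk}$.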

Methods to Address Bias From Missing Data

Approximately 34% of follow-up data were missing. Assuming that the data were missing at random,47 we used the previously mentioned technique of inverse probability weighting to control for patient selection bias due to missing follow-up data.48 Inverse probability weighting was accomplished in 2 steps. In step 1, we fit a logistic regression model in which the dependent variable took the value of 1 if the observation was complete and 0 if it was missing, with all patient baseline variables included as covariates. In step 2, we used the inverse of each patient's predicted probability of having complete data as that patient's weight.48 Thus, among patients with complete data, those whose baseline characteristics made complete data unlikely were given more weight in estimating the effect model than were those who were likely to have complete data.
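The 2-step procedure can be sketched in Python for a single binary baseline covariate. Everything here is a hypothetical simplification: the data are invented, the logistic model has one covariate rather than all patient baseline variables, Newton-Raphson is written out by hand instead of calling a statistical package, and the weights are applied to a simple mean rather than to the hierarchical model.

```python
import math


def fit_logistic(x, r, iters=50):
    """Fit P(r = 1 | x) = 1 / (1 + exp(-(b0 + b1 * x))) by Newton-Raphson."""
    b0 = b1 = 0.0
    for _ in range(iters):
        g0 = g1 = h00 = h01 = h11 = 0.0  # score vector and information matrix
        for xi, ri in zip(x, r):
            p = 1.0 / (1.0 + math.exp(-(b0 + b1 * xi)))
            w = p * (1.0 - p)
            g0 += ri - p
            g1 += (ri - p) * xi
            h00 += w
            h01 += w * xi
            h11 += w * xi * xi
        det = h00 * h11 - h01 * h01
        # Newton update: beta <- beta + I^{-1} g, using the 2x2 inverse in closed form.
        b0 += (h11 * g0 - h01 * g1) / det
        b1 += (h00 * g1 - h01 * g0) / det
    return b0, b1


def predicted_prob(b0, b1, xi):
    return 1.0 / (1.0 + math.exp(-(b0 + b1 * xi)))


# Hypothetical patients: x = 1 for acute onset, r = 1 if follow-up is complete,
# y = discharge score (None when missing).
x = [1] * 10 + [0] * 10
r = [1] * 8 + [0] * 2 + [1] * 4 + [0] * 6
y = [70.0] * 8 + [None] * 2 + [50.0] * 4 + [None] * 6

# Step 1: model the probability of having complete data from baseline covariates.
b0, b1 = fit_logistic(x, r)

# Step 2: weight each complete case by the inverse of its predicted probability.
weights = [1.0 / predicted_prob(b0, b1, xi) for xi, ri in zip(x, r) if ri == 1]
observed = [yi for yi, ri in zip(y, r) if ri == 1]

complete_case = sum(observed) / len(observed)
ipw = sum(w * yi for w, yi in zip(weights, observed)) / sum(weights)
print(f"complete-case mean: {complete_case:.2f}  IPW mean: {ipw:.2f}")
```

The complete-case mean (about 63.3) over-represents the acute-onset group, whose follow-up is more often complete; the weighted mean is 60, the value obtained if each group's missing patients had the same average score as its observed patients, which is what the missing-at-random assumption implies here.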

Classifying Clinics Into Performance Groups: Profiling

To determine the mean patient outcome for each clinic, we fitted the 3-level model and then aggregated the patient residual scores within each clinic to form a clinic-specific residual score for patient outcomes. Residual scores are the differences between actual discharge scores and the scores predicted by the model. The rationale for using residual scores to estimate provider performance is that residuals represent the portion of the outcome not explained by the models, which could potentially be explained by factors other than patient characteristics, including the type of treatment given or some other aspect of service delivery. Thus, we consider residual scores to be “risk-adjusted” outcomes. The use of residuals to estimate provider performance is a method described by experts on provider profiling and risk adjustment and has been used in previous studies of nursing homes and hospitals and in a study that classified expert and average physical therapists.43,45,49,50
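Aggregating patient residuals into clinic-level scores amounts to averaging observed minus predicted values within each clinic, as in the Python sketch below. The records are hypothetical, and the predicted values are assumed to come from the covariate (fixed) part of the fitted model.

```python
from collections import defaultdict


def clinic_residual_scores(records):
    """Mean residual (observed minus predicted discharge score) for each clinic.

    `records` is an iterable of (clinic_id, observed, predicted) tuples.
    """
    totals = defaultdict(float)
    counts = defaultdict(int)
    for clinic, observed, predicted in records:
        totals[clinic] += observed - predicted  # patient-level residual
        counts[clinic] += 1
    return {clinic: totals[clinic] / counts[clinic] for clinic in totals}


records = [
    ("A", 72.0, 68.0), ("A", 60.0, 62.0),  # hypothetical patients of clinic A
    ("B", 55.0, 58.0), ("B", 65.0, 66.0),  # hypothetical patients of clinic B
]
residual_scores = clinic_residual_scores(records)
print(residual_scores)
```

A positive clinic score means its patients did better, on average, than their characteristics predicted; a negative score means they did worse.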

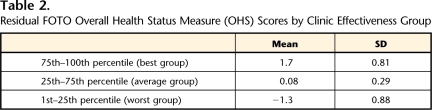

We used the clinic-specific aggregated residual OHS score to classify clinics into 3 effectiveness groups based on the ranking of the residual scores. We considered clinics in the 76th–100th percentiles of residual scores to be the “best” effectiveness group, those in the 26th–75th percentiles to be the middle group, and those in the 1st–25th percentiles to be the “worst” group. Thus, clinics whose patients’ observed scores exceeded expectations had more positive residual scores and fell in the upper percentiles, whereas clinics whose patients’ scores did not meet expectations had more negative residual scores and fell in the lower percentiles. The mean, standard deviation, and range of residual scores for each group are shown in Table 2.
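The percentile-based grouping can be sketched as follows. The clinic residual scores are hypothetical, and the sketch uses one simple percentile definition (rank divided by the number of clinics) without special handling of ties; other percentile conventions could shift clinics near the 25th and 75th cut points.

```python
def effectiveness_groups(residual_by_clinic):
    """Assign clinics to worst (<=25th), middle (26th-75th), or best (>=76th) percentile groups."""
    ranked = sorted(residual_by_clinic, key=residual_by_clinic.get)  # lowest residual first
    n = len(ranked)
    groups = {}
    for rank, clinic in enumerate(ranked, start=1):
        percentile = 100.0 * rank / n
        if percentile <= 25.0:
            groups[clinic] = "worst"
        elif percentile <= 75.0:
            groups[clinic] = "middle"
        else:
            groups[clinic] = "best"
    return groups


residuals = {"c1": -3.1, "c2": 0.4, "c3": 2.2, "c4": -0.8,   # hypothetical clinic
             "c5": 1.5, "c6": -1.9, "c7": 0.0, "c8": 3.0}    # residual scores
groups = effectiveness_groups(residuals)
print(groups)
```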

Table 2.

Residual FOTO Overall Health Status Measure (OHS) Scores by Clinic Effectiveness Group

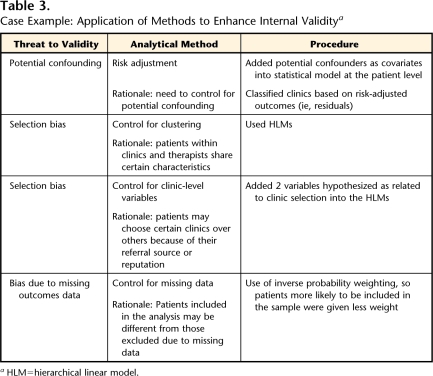

In order to examine the impact of using statistical techniques to adjust for potential confounding and selection bias, we compared differences in unadjusted, predicted, and risk-adjusted residual scores among clinic groups. A synopsis and rationale for all methods used in the analysis are shown in Table 3.

Table 3.

Case Example: Application of Methods to Enhance Internal Validity(a)

HLM=hierarchical linear model.

Synopsis of Results

We found many patient-level factors to be associated with lower (ie, worse) discharge OHS scores (results available upon request), including greater age; lower OHS intake scores; onset of condition other than acute; greater number of surgeries; a history of not exercising regularly; employment status of employed but not working, receiving disability benefits, unemployed, or retired; payer type of litigation, Medicaid, self-pay, or worker's compensation; and primary diagnosis of spinal stenosis. At the clinic level, the proportions of referrals from all physician types except occupational medicine physicians had significant effects on discharge score, and a higher proportion of referrals was associated with better discharge scores.

Patient-level factors that were associated with more visits (results available upon request) included: female sex; greater age; higher OHS intake scores; diagnoses of herniated disk, pain, deformity, or “other”; onset of condition other than acute; greater number of surgeries; a history of not exercising regularly; and any employment status other than working full-time or modified duty. Patient-level factors associated with fewer visits (ie, more efficient utilization) included payer source of Medicare, self-pay, workers’ compensation, and health maintenance organization or preferred provider organization.

Clinic-level variables associated with discharge OHS were proportions of referrals from primary care, orthopedic surgery, and neurologists. No clinic-level variables were associated with number of visits per treatment episode.

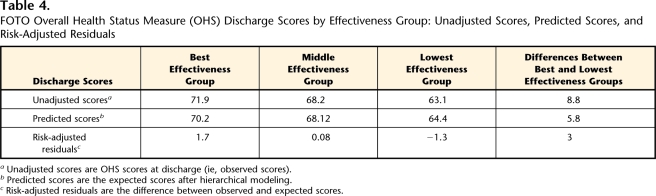

Impact of Applying Statistical Methods to Enhance Validity

Unadjusted OHS discharge scores were 71.9 (SD=18.6) for the best clinic group, 68.2 (SD=19.4) for the middle clinic group, and 63.1 (SD=20.9) for the worst clinic group. The difference between unadjusted mean scores of the highest and lowest quartiles of clinics was 8.8 points. On average, patients in the upper quartile improved almost 20 OHS points during therapy, and patients in the lower quartile improved an average of 11.2 OHS points. The predicted OHS scores (after modeling) for each of the groups are shown in Table 4. The data show that outcomes within clinics were largely what would have been expected, given their case mix. The models predicted a mean difference of 5.8 points between the highest and lowest quartiles of clinics. The mean difference in residual scores (ie, the difference between observed [unadjusted] and predicted values) between the best and worst groups was only 3 points beyond what was expected from the case-mix adjustment.
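Because each residual is an observed score minus a predicted score, the gap in mean residuals between the best and worst groups equals the gap in observed means minus the gap in predicted means. Using the figures reported above:

```latex
\underbrace{71.9 - 63.1}_{\text{unadjusted gap}} = 8.8,
\qquad
\underbrace{8.8}_{\text{unadjusted gap}} - \underbrace{5.8}_{\text{predicted from case mix}} = \underbrace{3.0}_{\text{risk-adjusted residual gap}}
```

Roughly two thirds of the crude gap (5.8/8.8 ≈ 0.66) is therefore accounted for by case mix.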

Table 4.

FOTO Overall Health Status Measure (OHS) Discharge Scores by Effectiveness Group: Unadjusted Scores, Predicted Scores, and Risk-Adjusted Residuals

(a) Unadjusted scores are OHS scores at discharge (ie, observed scores).

(b) Predicted scores are the expected scores after hierarchical modeling.

(c) Risk-adjusted residuals are the difference between observed and expected scores.

Discussion

In this perspective article, we identified several threats to internal validity of observational outcomes assessments. We further demonstrated the impact of statistically controlling these validity threats when assessing provider performance and explained the rationale behind the use of each technique. Consistent with prior research,21,36,43,51 our risk-adjustment models identified numerous patient-level factors associated with functional health outcomes in patients with LBPS: age, OHS intake scores, onset of condition, number of surgeries, a history of exercise, employment status, receipt of disability benefits or payer source, and primary diagnostic category. Thus, when using self-reported physical function data as an outcome in observational studies of patients with LBPS, we recommend that statistical techniques be used to control for potential confounding due to these patient-level variables.

We demonstrated 2 methods to control for selection bias. First, we used an HLM to account for clustering of patients by therapists and clinics. Second, we used 2 clinic-level variables that we hypothesized would be related to patient selection: volume (ie, number of new patients in the clinic, as a measure of reputation) and the proportion of patients referred by each physician type. Using these variables, we demonstrated that patients treated in clinics that have a higher proportion of referrals from primary care physicians and orthopedic surgeons tend to report superior discharge outcomes compared with patients treated in clinics with higher proportions of patients referred by neurologists, physiatrists, and occupational medicine physicians. Our analyses support the contention that there is potential patient selection bias related to the type of physician referring patients to specific clinics. This hypothesis appears logical given that generalist and specialist physicians typically serve different types of patient populations and that physicians within a specialty tend to manage their patients in a similar fashion.

Implications and Conclusions

Many facilities are beginning programs to collect and interpret outcomes. Thus, it is critically important for physical therapists to appreciate the methodological challenges that underlie outcomes assessment. In this perspective article, we discussed methods to enhance the validity of outcomes assessment using observational data and provided an analytical example to demonstrate the importance of these techniques. We demonstrated that crude differences in clinic outcomes were reduced after statistically adjusting for patient characteristics, selection bias, and clustering of patients within providers. The implication of our analyses is that quality measurement and ranking systems that do not use similar methods are likely to produce misleading findings.

Although it is generally accepted that the methods used to obtain a general estimate of provider performance for internal quality improvement can be less rigorous than those used to develop quality profiles that might be publicly reported, we believe that some effort should be made to adjust for the case mix of the population before comparing provider outcomes. Otherwise, the information gained through outcomes analysis will not be a true gauge of provider performance and may lead to invalid conclusions. Furthermore, when assessments of provider performance are tied to public reporting or financial incentives and are based on unadjusted outcomes, they may penalize providers who treat the sickest patients, because these patients may show less improvement or require more visits per treatment episode.52 Physical therapists, policy makers, and researchers should be aware of the threats to internal validity in these types of study designs.
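
For internal quality improvement, where the paragraph above allows less rigorous methods, one simple form of case-mix adjustment is indirect standardization. Each clinic's observed utilization is compared with the utilization expected from its patients' case mix. This is our illustration rather than a method used in the study. The severity strata, the visit counts, and the function names `crude_mean_visits` and `observed_to_expected` are hypothetical.

```python
from collections import defaultdict


def crude_mean_visits(records):
    """Unadjusted mean visits per patient for each clinic."""
    totals = defaultdict(lambda: [0, 0])  # clinic -> [visit sum, patient count]
    for clinic, _, visits in records:
        totals[clinic][0] += visits
        totals[clinic][1] += 1
    return {c: total / n for c, (total, n) in totals.items()}


def observed_to_expected(records):
    """Indirectly standardized observed/expected (O/E) visit ratio per clinic.

    records: list of (clinic_id, severity_stratum, visits) tuples.
    A patient's expected visits equal the mean visits of all patients in the
    same stratum, pooled across clinics. O/E > 1 means more visits than the
    clinic's case mix predicts; O/E < 1 means fewer.
    """
    strata = defaultdict(lambda: [0, 0])  # stratum -> [visit sum, patient count]
    for _, stratum, visits in records:
        strata[stratum][0] += visits
        strata[stratum][1] += 1
    reference = {s: total / n for s, (total, n) in strata.items()}

    observed = defaultdict(float)
    expected = defaultdict(float)
    for clinic, stratum, visits in records:
        observed[clinic] += visits
        expected[clinic] += reference[stratum]
    return {c: observed[c] / expected[c] for c in observed}


# Hypothetical data: clinic A treats mostly severe cases, clinic B mostly mild.
visit_records = [
    ("A", "severe", 12), ("A", "severe", 12), ("A", "severe", 12),
    ("A", "severe", 12), ("A", "mild", 6),
    ("B", "mild", 6), ("B", "mild", 6), ("B", "mild", 6),
    ("B", "mild", 6), ("B", "severe", 12),
]
print(crude_mean_visits(visit_records))     # A: 10.8, B: 7.2
print(observed_to_expected(visit_records))  # A: 1.0, B: 1.0
```

On crude means, clinic A appears to use 50% more visits per patient than clinic B. An unadjusted comparison of this kind could penalize a clinic for treating sicker patients. After standardization, both clinics use exactly as many visits as their case mix predicts.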

Dr Resnik, Dr Liu, and Dr Hart provided concept/idea/project design. All authors provided writing. Dr Resnik, Dr Liu, and Dr Mor provided data analysis. Dr Resnik provided project management and fund procurement. Dr Hart provided data collection and consultation (including review of manuscript before submission). The authors acknowledge the invaluable assistance of Sharon-Lise T Normand, PhD, who served as statistical consultant.

Funding was provided by the National Institute of Child Health and Human Development (grant 1R03HD051475-01).

A poster presentation of this work was given at the Combined Sections Meeting of the American Physical Therapy Association; February 4–8, 2004; Nashville, Tennessee.

Dr Hart is an employee of and investor in Focus On Therapeutic Outcomes, Inc, the database management company that manages the data analyzed in this study.

Focus On Therapeutic Outcomes, Inc, PO Box 11444, Knoxville, TN 37939-1444.

References

1. Goldfield N, Burford R, Averill R, et al. Pay for performance: an excellent idea that simply needs implementation. Qual Manag Health Care. 2005;14:31–44.

2. Institute of Medicine. Crossing the Quality Chasm: A New Health System for the 21st Century. Washington, DC: National Academy Press; 2002.

3. PricewaterhouseCoopers. Pay for Performance's Small Steps of Progress. Available at: http://www.pwc.com/healthcare. Accessed August 10, 2005.

4. Bjorkgren M, Fries B, Hakkinen U, Brommels M. Case-mix adjustment and efficiency measurement. Scand J Public Health. 2004;32:464–471.

5. Mor V, Berg K, Angelelli J, et al. The quality of quality measurement in US nursing homes. Gerontologist. 2003;43(Spec No. 2):37–46.

6. Zimmerman DR. Improving nursing home quality of care through outcomes data: the MDS quality indicators. Int J Geriatr Psychiatry. 2003;18:250–257.

7. Cooper JK, Kohlmann T, Michael JA, et al. Health outcomes: new quality measure for Medicare. Int J Qual Health Care. 2001;13:9–16.

8. Johantgen M, Elixhauser A, Bali JK, et al. Quality indicators using hospital discharge data: state and national applications. Jt Comm J Qual Improv. 1998;24:88–105.

9. US Dept of Health and Human Services. Medicare Physician Group Practice Demonstration. Available at: http://www.cms.hhs.gov/researchers/demos/fact_sheet_mdpg_lh.pdf. Accessed August 29, 2005.

10. Farin E, Follert P, Gerdes N, et al. Quality assessment in rehabilitation centres: the indicator system “Quality Profile.” Disabil Rehabil. 2004;26:1096–1104.

11. Jencks SF. The government's role in hospital accountability for quality of care. Jt Comm J Qual Improv. 1994;20:364–369.

12. Brook RH, McGlynn EA, Shekelle PG. Defining and measuring quality of care: a perspective from US researchers. Int J Qual Health Care. 2000;12:281–295.

13. Landon BE, Normand SL, Blumenthal D, Daley J. Physician clinical performance assessment: prospects and barriers. JAMA. 2003;290:1183–1189.

14. Normand S, Glickman M, Gatsonis C. Statistical methods for profiling providers of medical care: issues and applications. Journal of the American Statistical Association. 1997;92:803–814.

15. Hunter S, Zollinger B. Collecting and reporting patient-reported clinical outcomes: a work in progress. Health Policy Resource. 2003;3(3):9–10.

16. Dobrzykowski EA. The methodology of outcomes measurement. Journal of Rehabilitation Outcomes Measurement. 1997;1:8–17.

17. Iezzoni LI. Risk adjusting rehabilitation outcomes: an overview of methodologic issues. Am J Phys Med Rehabil. 2004;83:316–326.

18. Hennekens CH, Buring JE, Mayrent SL. Epidemiology in Medicine. Boston, MA: Little, Brown and Co Inc; 1987.

19. Greenfield S, Kaplan SH, Kahn R, et al. Profiling care provided by different groups of physicians: effects of patient case-mix (bias) and physician-level clustering on quality assessment results. Ann Intern Med. 2002;136:111–121.

20. Greenfield S, Apolone G, McNeil BJ, Cleary PD. The importance of co-existent disease in the occurrence of postoperative complications and one-year recovery in patients undergoing total hip replacement: comorbidity and outcomes after hip replacement. Med Care. 1993;31:141–154.

21. Jette DU, Jette AM. Physical therapy and health outcomes in patients with spinal impairments. Phys Ther. 1996;76:930–945.

22. Di Fabio RP, Boissonnault W. Physical therapy and health-related outcomes for patients with common orthopaedic diagnoses. J Orthop Sports Phys Ther. 1998;27:219–230.

23. Iezzoni LI, ed. Risk Adjustment for Measuring Health Care Outcomes. Chicago, IL: Health Administration Press; 2003.

24. Greenfield S, Sullivan L, Silliman RA, et al. Principles and practice of case mix adjustment: applications to end-stage renal disease. Am J Kidney Dis. 1994;24:298–307.

25. Jette DU, Jette AM. Physical therapy and health outcomes in patients with knee impairments. Phys Ther. 1996;76:1178–1187.

26. Jette DU, Warren RL, Wirtalla C. The relation between therapy intensity and outcomes of rehabilitation in skilled nursing facilities. Arch Phys Med Rehabil. 2005;86:373–379.

27. Freburger JK. An analysis of the relationship between the utilization of physical therapy services and outcomes of care for patients after total hip arthroplasty. Phys Ther. 2000;80:448–458.

28. Horn SD, DeJong G, Smout RJ, et al. Stroke rehabilitation patients, practice, and outcomes: is earlier and more aggressive therapy better? Arch Phys Med Rehabil. 2005;86(12 suppl 2):S101–S114.

29. Hart DL, Dobrzykowski EA. Influence of orthopaedic clinical specialist certification on clinical outcomes. J Orthop Sports Phys Ther. 2000;30:183–193.

30. Freburger JK, Carey TS, Holmes GM. Effectiveness of physical therapy for the management of chronic spine disorders: a propensity score approach. Phys Ther. 2006;86:381–394.

31. Swinkels ICS, van den Ende CHM, de Bakker D, et al. Clinical databases in physical therapy. Physiother Theory Pract. 2007;23:153–167.

32. Goldstein H. Multilevel Statistical Models. New York, NY: Edward Arnold Wiley; 1995.

33. Jewell DV, Riddle DL. Interventions that increase or decrease the likelihood of a meaningful improvement in physical health in patients with sciatica. Phys Ther. 2005;85:1139–1150.

34. Burgess JF Jr, Christiansen CL, Michalak SE, Morris CN. Medical profiling: improving standards and risk adjustments using hierarchical models. J Health Econ. 2000;19:291–309.

35. Zou KH, Normand SL. On determination of sample size in hierarchical binomial models. Stat Med. 2001;20:2163–2182.

36. Resnik L, Feng Z, Hart DL. State regulation and the delivery of physical therapy services. Health Serv Res. 2006;41(4 Pt 1):1296–1316.

37. Hart DL, Connolly J. Pay-for-Performance for Physical Therapy and Occupational Therapy: Medicare Part B Services. Grant #18-P-93066/9-01. Washington, DC: US Dept of Health & Human Services, Centers for Medicare & Medicaid Services; 2006.

38. Freburger JK, Carey TS, Holmes GM. Physician referrals to physical therapists for the treatment of spine disorders. Spine. 2005;5:530–541.

39. Little RJA, Rubin DB, eds. Statistical Analysis With Missing Data. New York, NY: John Wiley & Sons Inc; 2002.

40. Resnik L, Liu D, Mor V, Hart DL. Predictors of physical therapy clinic performance in the treatment of patients with low back pain syndromes. Phys Ther. 2008;88:989–1011.

41. Hart DL, Wright BD. Development of an index of physical functional health status in rehabilitation. Arch Phys Med Rehabil. 2002;83:655–665.

42. Hart DL. Test-retest reliability of an abbreviated self-report overall health status measure. J Orthop Sports Phys Ther. 2003;33:734–744.

43. Resnik L, Hart DL. Using clinical outcomes to identify expert physical therapists. Phys Ther. 2003;83:990–1002.

44. Iezzoni LI. An introduction to risk adjustment. Am J Med Qual. 1996;11:S8–S11.

45. Resnik L, Hart DL. Influence of advanced orthopaedic certification on clinical outcomes of patients with low back pain. Journal of Manual & Manipulative Therapy. 2004;12:32–41.

46. Rosenbaum PR, Rubin DB. The central role of the propensity score in observational studies for causal effects. Biometrika. 1983;70:41–55.

47. Amato AL, Dobrzykowski EA, Nance T. The effect of timely onset of rehabilitation on outcomes in outpatient orthopedic practice: a preliminary report. Journal of Rehabilitation Outcomes Measurement. 1997;1(3):32–38.

48. Robins JM, Hernan MA, Brumback B. Marginal structural models and causal inference in epidemiology. Epidemiology. 2000;11:550–560.

49. Ash AS, Schwartz M, Pekoz E. Comparing outcomes across providers. In: Iezzoni LI, ed. Risk Adjustment for Measuring Health Care Outcomes. Chicago, IL: Health Administration Press; 2003:297–333.

50. Castle NG. Providing outcomes information to nursing homes: can it improve quality of care? Gerontologist. 2003;43:483–492.

51. Jette AM, Delitto A. Physical therapy treatment choices for musculoskeletal impairments. Phys Ther. 1997;77:145–154.

52. Casalino LP, Elster A, Eisenberg A, et al. Will pay-for-performance and quality reporting affect health care disparities? Health Aff (Millwood). 2007;26:405–414.