Abstract

Purpose

This study examined children's word learning in limited and extended high-frequency bandwidth conditions. These conditions represent typical listening environments for children with hearing loss (HL) and children with normal hearing (NH), respectively.

Method

Thirty-six children with NH and 14 children with moderate-to-severe HL served as participants. All of the children were between 8 and 10 years of age and were assigned to either the limited or the extended bandwidth condition. Five nonsense words were paired with 5 novel pictures. Word learning was assessed in a single-session, multi-trial learning paradigm lasting approximately 15 minutes. Learning rate was defined as the number of exposures necessary to achieve 70% correct performance.

Results

Analysis of variance revealed a significant main effect for bandwidth but not for group. A bandwidth × group interaction was also not observed. In this short-term learning paradigm, the children in both groups required three times as many exposures to learn each new word in the limited bandwidth condition compared to the extended bandwidth condition.

Conclusion

These results suggest that children with HL may benefit from extended high-frequency amplification when learning new words and for other long-term auditory processes.

It is estimated that by the time a child graduates from high school, he or she will have acquired an understanding of more than 60,000 words. To achieve a vocabulary of this size the child must learn multiple words per day throughout childhood [See Bloom (2000) for a review]. The immediate benefits of a well-developed vocabulary allow a child to learn to read comprehensively, write meaningfully, and speak effectively. The long-term benefits are apparent in the child's ability to communicate, socialize, and achieve academically and vocationally. Unfortunately, vocabulary development in children with hearing loss (HL) is often delayed and appears to be related to the child's degree of hearing loss (Blamey et al., 2001; Boothroyd & Boothroyd-Turner 2002; Davis et al., 1986; Lederberg, Prezbindowski, & Spencer 2000; Pittman et al., 2005). Although children with language impairment (LI) have similarly underdeveloped vocabularies, the underlying cause may differ from that of children with HL. Specifically, while children with LI may employ inefficient word learning strategies, children with HL may have difficulty learning new words due to a degraded auditory signal.

These two groups also experience difficulty with phonological processing which may be related to their ability to learn new words (Briscoe, Bishop, & Norbury 2001; Hansson et al., 2004). Briscoe et al. (2001) compared the phonological, language, and literacy skills of 5- to 10-year-old children with HL to those of children with normal hearing (NH) and to children with LI. Their intent was to characterize the language skills of these children and to determine the impact of phonological processing difficulties on those skills. Their results revealed a significant relation between phonological processing and language in children with LI but not in children with HL. Specifically, the children with LI performed at levels significantly below that of their age- and vocabulary-matched peers on all but one measure (nonverbal reasoning). The children with HL, on the other hand, performed as well as the typically developing children on measures of nonverbal reasoning, receptive vocabulary, grammar, working memory, literacy, and digit span. The only exception was for children with greater hearing losses who were found to have poorer phonological skills than the normally hearing, typically developing children. When the language and literacy skills of these children were evaluated, the only significant effect of phonological impairment appeared to involve vocabulary knowledge. The authors concluded that poor phonological skills in children with HL are not necessarily associated with comprehensive language impairment but may impact word learning.

To better understand the association between phonological processing and word learning, Gilbertson and Kamhi (1995) examined word learning in 20 children with HL between the ages of 7 and 10 years relative to children with NH who were matched for receptive language. They theorized that word learning in children with HL is related more to their ability to encode, store, and retrieve phonemic information than to the level of residual hearing. Four nonsense words differing in phonemic content and syllable length (tam, jaften, gadakik, and shabiffidy) were presented orally to each child and paired with a specific novel object. The child's ability to recognize, produce, and retain each word was measured. In addition, the child's performance on a number of phonological processing tasks (i.e., word repetition, rapid labeling) was measured. The children with HL wore their personal amplification devices (e.g., hearing aids, FM systems) during testing. The results revealed no significant differences between the groups for any of the phonological processing tasks or for recognition of the nonsense words. However, the children with HL required significantly more repetitions of the nonsense words “jaften” and “shabiffidy” to produce them accurately. Further, analyses revealed a bimodal distribution in which half of the children with HL required significantly more repetitions of the nonsense words than the children with NH, whereas half did not. From this, the authors concluded that some children with HL may have significant language impairment that may be obscured and possibly complicated by the presence of hearing loss.

However, studies like those described above often do not control for the quality of the signal received by the children with HL. Instead, they rely on the child's personal amplification devices (hearing aids) or FM systems to provide an adequate speech signal during testing. Because phonological tasks like those used in the Briscoe et al. (2001) and Gilbertson and Kamhi (1995) studies depend heavily on the child's ability to perceive the full acoustic content of the speech signal, it is possible that limitations in the children's personal hearing aids contributed to the phonological problems demonstrated by these children. For example, the children with HL in the Gilbertson and Kamhi (1995) study may have required more exposures to the nonsense words “jaften” and “shabiffidy” because hearing aids do not amplify the high-frequency fricatives well. Instead, the children may have required repeated attempts to produce these nonsense words as they searched for the correct acoustic phonetic content.

The effective bandwidth of most commercially available hearing aids is approximately 0.3 to 5 kHz which is comparable to the bandwidth of a home telephone. It is often the case that proper names or unfamiliar terms must be spelled out over the telephone due to the ambiguity imposed by the restricted bandwidth. Also, the letters of an unfamiliar name or term are often associated with common names to avoid confusion with other letters having the same acoustic characteristics (e.g., T as in Tom, F as in Frank). Adult listeners appear to tolerate well the reduced spectral information provided by the telephone (and hearing aids) probably because very little information is new to them. Children, on the other hand, are bombarded with new information throughout childhood and into adolescence. Therefore, an ambiguous signal provided by hearing aids may reduce their ability to perceive speech as accurately as adults or their normal-hearing peers.

Several studies in children confirm the value of high-frequency information for perception, production, and clarity of speech. Stelmachowicz and colleagues studied perception of the phoneme /s/ as a function of bandwidth (Stelmachowicz et al., 2001; Stelmachowicz et al., 2002). They reported systematic improvements in the performance of children with NH and children with HL as the bandwidth of the signal increased up to the 9-kHz bandwidth limit of the test protocol. In a later study, these investigators reported a distinct delay in the production of fricatives in a group of young children with HL who were aided within the first year of life compared to their normal-hearing peers (Stelmachowicz et al., 2004b). Because the delay was restricted to the fricative class only, the authors theorized that the children received an ambiguous representation of fricatives via the limited bandwidth of their hearing aids. That is, the limited bandwidth made fricatives difficult to distinguish from one another. This notion is supported by the results of Kortekaas and Stelmachowicz (2000) who reported that 5- to 10-year-old children with NH required a wider bandwidth than adults to rate the phoneme /s/ as being clear. The results of these studies suggest that, unlike adults, children benefit from extended frequency bandwidths regardless of hearing status.

Although the immediate effect of a reduced bandwidth appears to be limited to the perception and production of certain phonemes (fricatives), it is important to recall that speech perception and production are only part of a child's communication development. Over the long term, children use these skills to increase their knowledge and understanding of the world. That is, while adults use their hearing to continue to communicate, children use their hearing to learn to communicate. Therefore, the value of an extended high-frequency bandwidth may be equally important for long-term auditory processes, like word learning, that promote the development of communication.

Word Learning

Experimental paradigms for word learning typically include a period in which the child is introduced to new words through direct labeling or indirectly in the form of a story or reference. Lederberg (2000) distinguishes these two types of word learning paradigms as either rapid word-learning (i.e., fast mapping) in which the child is given an explicit reference for the new word, or novel mapping (i.e., quick incidental learning) in which the child is expected to make the connection between the new word and the unfamiliar object without assistance. In either paradigm, the words may be real, but unknown to the child, or nonsense words with a specific phonemic content. After a pre-determined number of exposures, the child is tested to determine the degree to which he/she was able to learn the new words under the conditions imposed in the experiment. Although these paradigms may not represent exactly the process by which children learn words in their natural environment, they provide valuable information about factors that may affect word learning. To date, several factors have been identified and include age, receptive vocabulary, working memory, hearing level, number of exposures, phonemic awareness, phonotactic probability¹, and others (Gilbertson & Kamhi 1995; Hansson et al., 2004; Lederberg, Prezbindowski, & Spencer 2000; Oetting, Rice, & Swank 1995; Pittman et al., 2005; Rice et al., 1994; Rice, Buhr, & Nemeth 1990; Stelmachowicz et al., 2004a). Although all of these factors are accounted for in the present study, the effects of phonemic awareness, current receptive vocabulary, and hearing level are particularly relevant.

Storkel (2001; 2003) demonstrated the importance of the acoustic phonetic content of novel words when she investigated word learning in 3- to 6-year-old children. She carefully controlled the phonemic content of the novel words (nouns and verbs) so that the influence of phonotactic probability could be determined. The results suggested that the children's ability to learn words was influenced more by the acoustic-phonetic content of the word than by the grammatical function of the word. In addition, word learning was greater for words containing more commonly occurring phonemes. Because the acoustic-phonetic content of a word has a strong effect on learning, it is possible that a reduced bandwidth may affect the perception of phonemes and therefore learning.

Stelmachowicz et al. (2004a) examined word learning in children with NH and children with HL as a function of presentation level. The children were between the ages of 6 and 9 years. They reported a positive relation between presentation level and word learning in both groups. That is, significantly more words were learned at higher presentation levels. Pittman et al. (2005) examined word learning in children with NH and children with HL as a function of stimulus bandwidth. In that study, the children were between the ages of 5 and 14 years. The bandwidth of the stimuli approximated the frequency response of a typical ear-level hearing aid appropriate for children (4 kHz) as well as a wider bandwidth that more closely approximated the range of normal human hearing (9 kHz). The children were exposed to 8 CVCVC novel words a total of 6 times each in a single session before their retention was tested. Each novel word contained three unique phonemes in the same vowel context. Stelmachowicz, Lewis, Choi and Hoover (2007) conducted a similar study of word learning in noise. Their participants were 7- to 14-year-old children with NH and with HL. They also presented 6 repetitions of 8 novel words embedded in an animated story; however, the 8 CVC words were composed of unique consonants and vowels and filtered to produce bandwidths of 5 and 10 kHz.

The results of both studies showed no significant difference in word learning between the limited and extended bandwidth conditions. However, because the novel words in each study contained unique phonemes, the benefits of an extended high-frequency bandwidth may have been reduced in that perception of only one phoneme was necessary to identify any one word. The finite number of exposures to each word may have limited the evaluation of learning as well. A longitudinal, rather than a cross-sectional, approach may better inform our understanding of word learning and be more sensitive to the effects of some amplification characteristics. That is, more may be learned by determining the number of exposures necessary to learn a new word in a short period of time rather than evaluating performance after a predetermined number of exposures. This may be particularly informative for language processes that develop over extended periods of time and for subtle amplification characteristics that may have significant cumulative effects.

In the present study, the acoustic phonetic content of the stimuli and the presentation parameters were carefully controlled to avoid variations in amplification that might arise from using the children's personal hearing aids. Also, a modified word-learning paradigm was used to determine the number of exposures required to learn a new word in a short period of time (15 minutes). The purpose of the present study was to determine the rate of word learning in children with NH and children with HL for words having frequency bandwidths that approximate typical hearing aids and that of normal human hearing. Rate of word learning was defined as the number of exposures necessary to achieve 70% correct performance in the multi-trial paradigm. It was hypothesized that word learning would be significantly affected by the acoustic parameters of the physical signal in both groups of children.

Method

Participants

Thirty-six children with NH and 14 children with sensorineural hearing loss served as participants. All children were between 8 and 10 years of age (NH mean: 9.6 years, SD: 0.9 months; HL mean: 9.3 years, SD: 0.9 months). Seven (50%) of the children with HL were boys as were 20 (56%) of the children with NH. A clinical audiometer was used to confirm the hearing status of the children with NH. Thresholds were ≤15 dB HL at octave frequencies between 0.25 and 4 kHz, ≤25 dB HL at 8 kHz, and ≤40 dB HL at 12 kHz. Table 1 shows the age, gender, hearing thresholds for the left and right ears, age at identification (Age ID), age at amplification (Aided), years of hearing aid use (HA use), and standardized vocabulary score (PPVT Std) for each child with hearing loss. The thresholds of the children with HL were measured using custom laboratory software (described below) and expressed in dB SPL. All of the children were oral and placed in mainstreamed classrooms with their age-matched peers. Each child wore hearing aids bilaterally with the exception of 2 children who were not aided.

Table 1.

Age, gender, hearing thresholds (in dB SPL) for the right and left ears, age at identification (Age ID), age at amplification (Aided), years of hearing aid use (HA use), and PPVT standard score for each child with hearing loss.

| Child # | Age (Y:M) | M/F | Ear | 0.25 kHz | 0.5 kHz | 1 kHz | 2 kHz | 4 kHz | 8 kHz | 9 kHz | 10 kHz | Age ID (Yrs) | Aided (Yrs) | HA use (Y:M) | PPVT Std |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Limited Bandwidth Condition | | | | | | | | | | | | | | | |
| 1 | 9:0 | M | R | 73 | 65 | 77 | 83 | 73 | 92 | 80 | 83 | 2 | 2 | 7:0 | 98 |
| | | | L | 72 | 68 | 78 | 75 | 70 | 97 | 83 | 93 | | | | |
| 2 | 10:7 | M | R | 87 | 76 | 70 | 68 | 61 | 98 | 82 | 88 | 3 | 4 | 6:7 | 89 |
| | | | L | 98 | 92 | 70 | 73 | 73 | 103 | 93 | 118 | | | | |
| 3 | 8:1 | F | R | 83 | 75 | 79 | 87 | 78 | 98 | 63 | 84 | 2 | 3 | 5:1 | 95 |
| | | | L | 87 | 58 | 97 | 89 | 61 | 67 | 58 | 72 | | | | |
| 4 | 8:8 | F | R | 51 | 31 | 26 | 80 | 102 | 125 | 125 | 125 | 5 | 6 | 2:8 | 80 |
| | | | L | 58 | 28 | 13 | 13 | 83 | 113 | 118 | 109 | | | | |
| 5 | 9:11 | F | R | 33 | 25 | 43 | 73 | 88 | 113 | 102 | 123 | 5 | 6 | 3:11 | 113 |
| | | | L | 23 | 27 | 44 | 63 | 98 | 110 | 94 | 99 | | | | |
| 6 | 8:2 | M | R | 53 | 52 | 45 | 83 | 68 | 73 | 78 | 58 | 0 | 1 | 7:2 | 113 |
| | | | L | 50 | 53 | 68 | 78 | 73 | 83 | 73 | 68 | | | | |
| 7 | 8:2 | M | R | 47 | 38 | 38 | 43 | 28 | 63 | 78 | 78 | 0 | 2 | 6:2 | 102 |
| | | | L | 48 | 33 | 48 | 42 | 33 | 63 | 73 | 72 | | | | |
| Mean | 9:1 | | R | 61 | 52 | 54 | 74 | 71 | 95 | 87 | 91 | 2 | 3:5 | 5:5 | 99 |
| | | | L | 62 | 51 | 60 | 62 | 70 | 91 | 85 | 90 | | | | |
| Extended Bandwidth Condition | | | | | | | | | | | | | | | |
| 8 | 10:1 | F | R | 27 | 13 | 13 | 18 | 63 | 33 | 63 | 73 | 3 | - | - | 108 |
| | | | L | 28 | 13 | 13 | 63 | 48 | 58 | 78 | 83 | | | | |
| 9 | 9:8 | M | R | 69 | 98 | 97 | 72 | 68 | 46 | 48 | 53 | 0 | 2 | 7:8 | 84 |
| | | | L | 61 | 72 | 68 | 86 | 76 | 67 | 36 | 43 | | | | |
| 10 | 8:1 | F | R | 48 | 41 | 51 | 58 | 48 | 53 | 48 | 48 | 3 | 3 | 5:1 | 64 |
| | | | L | 47 | 43 | 48 | 58 | 48 | 53 | 56 | 58 | | | | |
| 11 | 9:6 | F | R | 68 | 68 | 78 | 75 | 53 | 63 | 78 | 93 | 2 | 2 | 7:6 | 116 |
| | | | L | 63 | 67 | 73 | 85 | 55 | 68 | 73 | 73 | | | | |
| 12 | 10:5 | M | R | 30 | 28 | 28 | 63 | 58 | 63 | 58 | 55 | 5 | 5 | 5:5 | 109 |
| | | | L | 38 | 33 | 43 | 60 | 68 | 83 | 72 | 68 | | | | |
| 13 | 9:11 | F | R | 47 | 33 | 38 | 48 | 48 | 58 | 58 | 63 | - | - | - | 115 |
| | | | L | 38 | 33 | 38 | 48 | 52 | 58 | 58 | 62 | | | | |
| 14 | 9:10 | M | R | 103 | 93 | 93 | 88 | 68 | 65 | 58 | 72 | 3 | 3 | 6:10 | 68 |
| | | | L | 108 | 93 | 95 | 88 | 78 | 73 | 83 | 83 | | | | |
| Mean | 9:7 | | R | 56 | 53 | 57 | 60 | 58 | 54 | 59 | 65 | 3 | 3 | 6:9 | 95 |
| | | | L | 55 | 51 | 54 | 70 | 61 | 66 | 65 | 67 | | | | |

Stimuli

Five CaCəC nonsense words were created and paired with five pictures of nonsense toys. The words were /saθnəd/, /daztəl/, /fasnəʃ/, /stamən/, and /hamtəl/. The vowels /a/ and /ə/ occurred in the first and second syllables of each word, respectively. Also, three repetitions of /t/, /s/, and /n/, two repetitions of /d/, /m/, and /l/, and one repetition of /ʃ/, /f/, /θ/, /z/, and /h/ were distributed across the 5 words. This distribution approximates the frequency with which these phonemes occur in spoken American English (Denes, 1963). Also, many of the consonant phonemes occurred in more than one word so that each child would be required to recognize and learn a combination of phonemes rather than relying on the intelligibility of just one of several unique phonemes in each word. Table 2 lists the orthographic representation of each word as well as the phonetic transcription and the phonotactic probability. Phonotactic probability was calculated using the procedure suggested by Vitevitch and Luce (2004). The first number listed (positional segment frequency) represents the likelihood of the phonemes occurring in their positions within the nonsense word as they may in real English words. The second number (biphone frequency) represents the likelihood that each pair of adjacent phonemes also occurs in English words. Higher values indicate that the phonemes occur frequently together or in a particular position. By design, these words were equated as closely as possible for phonotactic probability so that word learning would be independent of these effects.

Table 2.

Orthographic and phonetic transcriptions of the five novel words. Phonotactic probabilities (positional segment and biphone frequencies) are also provided.

| Word | Phonetic Transcription | Phonotactic probability: Positional segment | Phonotactic probability: Biphone |
|---|---|---|---|
| Sothnud | saθnəd | 1.3347 | 1.0081 |
| Doztul | daztəl | 1.3425 | 1.0146 |
| Fosnush | fasnəʃ | 1.3345 | 1.0073 |
| Stomun | stamən | 1.3445 | 1.0455 |
| Homtul | hamtəl | 1.3594 | 1.0212 |
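The consonant distribution described for the stimuli can be checked directly against the transcriptions in Table 2. The following is a minimal sketch, not part of the study's software; it assumes each character of the transcription corresponds to one phoneme and treats /a/ and /ə/ as the only vowels.

```python
from collections import Counter

# Phonetic transcriptions of the five novel words, as listed in Table 2.
WORDS = ["saθnəd", "daztəl", "fasnəʃ", "stamən", "hamtəl"]

# Assumption for this sketch: every character is one phoneme, and the
# only vowels in the stimulus set are /a/ and /ə/.
VOWELS = {"a", "ə"}


def consonant_counts(words):
    """Count how often each consonant symbol occurs across the word list."""
    return Counter(ch for word in words for ch in word if ch not in VOWELS)


counts = consonant_counts(WORDS)
for phoneme, n in counts.most_common():
    print(f"/{phoneme}/ occurs {n} time(s)")
```

Because most consonants appear in more than one word, no single phoneme is enough to identify a word, which is the property the stimulus design relies on.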

Prior to testing, the nonsense words were presented orally to a class of 16 undergraduate students who were instructed to write an English word that sounded most like the nonsense word. The written responses were then tallied to determine if a substantial number of the students associated any of the nonsense words with the same English word. No consistent responses were given for 4 of the 5 nonsense words but half (8) of the students wrote the word “stamen” (the pollen-bearing part of a flower) for the word /stamən/. Coincidentally, the word “stamen” was an item in the vocabulary test administered to each child as part of the study protocol. Only 4 children recruited for the present study had vocabularies sufficient to reach that level of the test and only 1 responded correctly. Therefore, the English word “stamen” was considered to be beyond the vocabularies of most of the children in the study (8- to 10-year-olds) and likely had little effect on their ability to learn the word /stamən/.

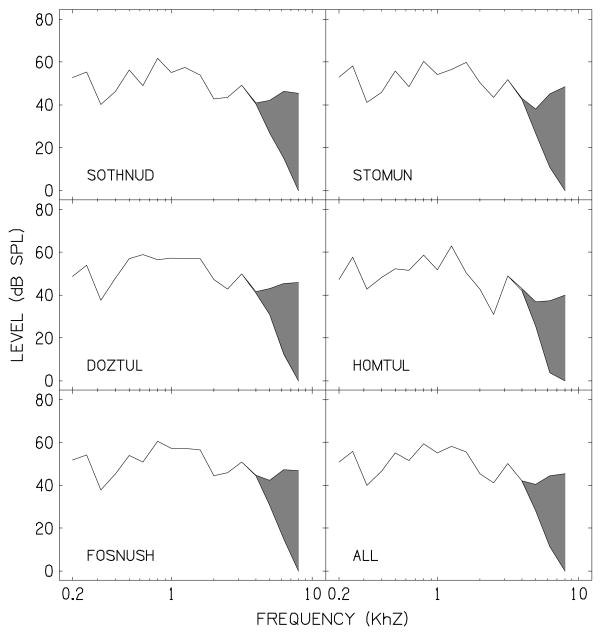

The words were recorded by a female talker with a typical American English dialect using a sampling rate of 22.05 kHz and a microphone with a flat frequency response to 10 kHz (AKG, C535EB). As in typical conversational English, the stress was placed on the initial syllable. The words were digitally isolated from the original recording using Adobe Audition (V1.5) and saved as separate wave files. The words were then low-pass filtered to create two stimulus conditions. In the first stimulus condition, the words were low-pass filtered at 4 kHz (with a rejection rate of 60 dB/octave) which approximates the bandwidth of currently available hearing aids appropriate for children (ear-level devices). For the second stimulus condition, the words were low-pass filtered at 9 kHz (with a rejection rate of 60 dB/octave) which approximates the frequency range of normal human hearing. Figure 1 shows the 1/3-octave band spectrum levels of each word as well as the average spectrum of all the words as presented to the children with NH. The shaded area in each panel represents the additional high-frequency amplitude provided in the extended bandwidth condition.

Figure 1.

Third-octave spectrum levels for each word as well as the combined long-term spectrum (lower right panel) as presented to the children with NH.
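The relation between the two low-pass conditions and the 22.05-kHz sampling rate can be illustrated with a little arithmetic. The sketch below is an idealized straight-line approximation of a 60 dB/octave rejection slope; it is not the filter implemented in Adobe Audition, and the function names are hypothetical.

```python
import math

SAMPLE_RATE_HZ = 22050.0          # sampling rate reported for the recordings
SLOPE_DB_PER_OCTAVE = 60.0        # rejection rate reported for both filters
CUTOFFS_HZ = {"limited": 4000.0, "extended": 9000.0}


def nyquist(sample_rate_hz):
    """Highest frequency representable at the given sampling rate."""
    return sample_rate_hz / 2.0


def idealized_attenuation_db(freq_hz, cutoff_hz, slope=SLOPE_DB_PER_OCTAVE):
    """Straight-line approximation: 0 dB in the passband, then `slope` dB
    of attenuation for every octave above the cutoff frequency."""
    if freq_hz <= cutoff_hz:
        return 0.0
    return slope * math.log2(freq_hz / cutoff_hz)


for condition, cutoff in CUTOFFS_HZ.items():
    # Both cutoffs must lie below the Nyquist frequency of the recording.
    assert cutoff < nyquist(SAMPLE_RATE_HZ)
    atten = idealized_attenuation_db(8000.0, cutoff)
    print(f"{condition}: cutoff {cutoff:.0f} Hz, "
          f"approx. {atten:.1f} dB attenuation at 8 kHz")
```

Under this approximation, energy at 8 kHz is attenuated by about 60 dB in the limited condition and left intact in the extended condition, which corresponds to the shaded high-frequency region in Figure 1.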

All stimuli were routed binaurally through earphones having a flat frequency response to ≥10 kHz (Sennheiser, 25D). For the children with NH, the stimuli were presented at 65 dB SPL which is consistent with average conversational speech. To accommodate the specific hearing losses of each child with HL, the stimuli were frequency shaped according to the target gain parameters provided by the Desired Sensation Level (DSL) v4.1 fitting algorithm (Seewald et al., 1997). DSL does not provide targets for frequencies >6 kHz so the targets at higher frequencies were estimated by comparing the level of the long-term average speech spectrum at adjacent frequencies and then providing similar amplification up to 15 dB of sensation at 9 kHz. Because the hearing thresholds and experimental results were obtained using the same equipment and calibration parameters, audibility of the stimuli was easily determined by referencing both to a 6-cm³ coupler. Although real-ear dB SPL is ideal, standing waves in the ear canal make it difficult to determine the exact presentation levels at frequencies >4 kHz (Gilman & Dirks 1986). Therefore, threshold and stimulus measures were referenced to a 6-cm³ coupler to obtain relative estimates of sensation for each child.

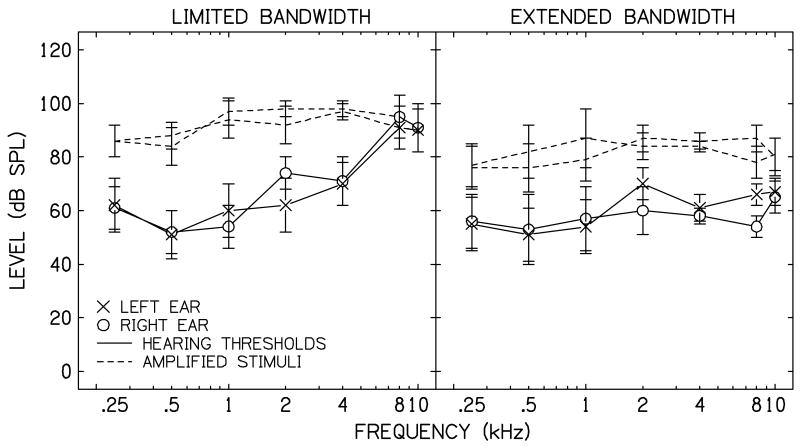

Figure 2 shows the average hearing thresholds (±1 SE) for the right and left ears for the children with HL in the limited and extended bandwidth conditions (left and right panels, respectively). The average presentation levels of the stimuli are also shown. Note that the hearing losses of the children in the limited bandwidth condition are more severe in the high frequencies than those of the children in the extended bandwidth condition. Although group assignment was random for most of the children with HL, those children with greater high-frequency losses at 9 kHz were placed in the limited bandwidth group because adequate sensation of the stimuli would have exceeded the output limits imposed in this study.

Figure 2.

Average presentation levels (in dB SPL) as a function of frequency (kHz) for the children with HL in the limited and extended bandwidth conditions (left and right panels, respectively). Average hearing thresholds for the right and left ears are also provided. Error bars represent ±1 SE. All levels were referenced to a 6-cm³ coupler.

Procedure

Receptive Vocabulary

Prior to testing, the Peabody Picture Vocabulary Test III [PPVT-III, Form B (Dunn & Dunn 2006)] was administered to determine each child's current receptive vocabulary. For this and the following test, the children with HL wore their personal hearing aids and were tested in a quiet room. The results of the PPVT were used to equate the receptive vocabularies of the children assigned to each listening condition (described below) because previous research suggests that word learning is related to the size of a child's current vocabulary. In this way, the influence of receptive vocabulary on word learning could be controlled a priori so that the effects of stimulus condition might be more apparent.

Working Memory

The Rey Auditory-Verbal Learning Test (AVLT) was also administered to assess verbal memory and retention (Lezak, Howieson, & Loring 2004; Taylor 1959; van den Burg & Kingma 1999). The test requires the child to learn and retain a list of familiar words read aloud. This test has been used extensively with both children and adults to determine the effects on working memory of various disorders such as traumatic brain injury and degenerative diseases (e.g., multiple sclerosis, Alzheimer's, Huntington's). A list of 15 monosyllabic words familiar to children is read 5 times to the child at a rate of one word per second. After each repetition of the list, the child's task is to recall as many words as possible in no particular order and in no predetermined period of time. The number of words recalled on each of the five repetitions of the list is then tallied and compared to normative values for typical children (van den Burg & Kingma 1999). Although these data may be analyzed in a number of ways (e.g., total words acquired, interference, number of repetitions, retention after delay), for purposes of this study, only the total number of words retained after each of the 5 recitations was calculated. The results of this test were used to identify any apparent differences between the children in the two stimulus conditions so that the effects of verbal learning and retention could be accounted for statistically.

Word-Learning

The children with NH and the children with HL were each divided into two equal subgroups. Each subgroup learned the five words in the limited or the extended bandwidth condition, but not in both. Each child participated in two word-learning tasks: a familiarization task and a learning task. In the familiarization task, the child was briefly exposed to the pictures of the toys and their names. A picture of a novel toy was displayed on a computer screen while the child heard, “This is a ____. Can you say_____?” The examiner then listened to the child's production to confirm that he/she produced the word correctly before moving on to the next picture. The familiarization task consisted of three repetitions of each word (two aural presentations and one production by the child). This task was similar to fast mapping in that the child was provided several directed exposures to the words and their associated toys before beginning the learning task.

In the learning task, the child played a simple computer game to learn the names of the five toys. Prior to implementing the task, each child was given the following instructions by the examiner, “You will play a computer game to see how quickly you can learn the names of the toys. In the game you will see the pictures of the five toys on the computer screen. Then you'll hear a woman tell you a toy name. Select the toy that you think has that name. If you're right, the game will play. If you're not right, nothing will happen. At first you may not get very many right, but keep trying. As you play the game, you'll get better and better.” This task was similar to novel mapping in that the child was expected to connect the novel word to the correct novel object.

Custom laboratory software was used to randomly select a word, process it according to the frequency-shaping parameters calculated for each child, provide feedback in the form of a simple video game (e.g., dot-to-dot, puzzle), record the trial-by-trial data, and display real-time data analysis for the examiner to monitor. Figure 3 shows the interactive response screen used by the children for this task. Pictures of the five toys were displayed as response buttons on the left side of the computer screen. For each correct response, the video game on the right side of the screen was advanced incrementally (e.g., the next line was drawn in the dot-to-dot game). The video game did not advance for incorrect selections. The learning task consisted of a total of 150 trials (30 repetitions of each word presented randomly) and took approximately 15 minutes to complete.

Figure 3.

Interactive response screen used by the children to select a toy after the presentation of each novel word. Feedback was provided via several video games. In this example, the dot-to-dot game was incrementally advanced after each correct response.

In the event one toy-name association was more salient than the others or if one toy was simply more appealing, the name associated with each toy was rotated systematically across children. That is, the toy-name association differed from child to child. This reduced the effects of picture or word preference by distributing the effect across children. All testing was conducted in a sound-treated booth in the presence of an examiner who evaluated the child's production of the words, encouraged consistent attention to each task, and answered any questions the child had.
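The trial structure and name rotation described above can be sketched as follows. This is an illustration only, not the custom laboratory software: the cyclic-shift rotation, the shuffled fixed-length trial list, the toy labels, and the function names are all assumptions, since the text specifies only that names were "rotated systematically" and that each word was presented 30 times in random order.

```python
import random
from collections import Counter

# Orthographic forms of the five novel words (Table 2).
WORDS = ["sothnud", "doztul", "fosnush", "stomun", "homtul"]

# Hypothetical labels for the five novel toy pictures.
TOYS = ["toy_1", "toy_2", "toy_3", "toy_4", "toy_5"]


def rotated_pairing(child_index, words=WORDS, toys=TOYS):
    """Assign names to toys with a cyclic shift that depends on the child.

    Assumed rotation scheme: child 0 gets the baseline pairing, child 1
    gets every name shifted by one toy, and so on.
    """
    shift = child_index % len(words)
    rotated = words[shift:] + words[:shift]
    return dict(zip(toys, rotated))


def trial_order(words=WORDS, repetitions=30, seed=None):
    """Return a randomized list in which each word occurs `repetitions` times."""
    rng = random.Random(seed)
    trials = [word for word in words for _ in range(repetitions)]
    rng.shuffle(trials)
    return trials


pairing = rotated_pairing(child_index=3)
trials = trial_order(seed=3)
print(pairing)
print(len(trials), Counter(trials))
```

With five words and 30 repetitions each, the list contains the 150 trials reported for the learning task, and a cyclic shift yields five distinct toy-name pairings before the pattern repeats.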

Results

Preliminary Tests

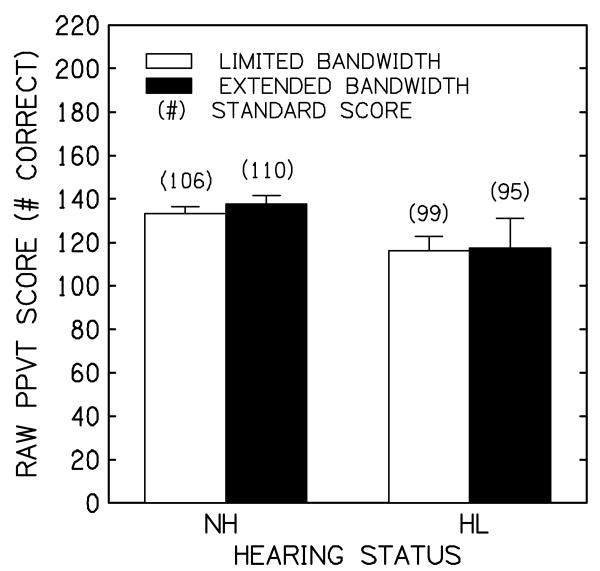

Figure 4 shows the average PPVT raw scores (±1 SE) as a function of group (NH and HL). The children enrolled in the limited and extended bandwidth conditions are indicated by open and filled bars, respectively. The average standard scores for each group and condition are also indicated in parentheses above each bar. The raw scores were subjected to a univariate analysis of variance (ANOVA) with hearing status (NH, HL) and bandwidth (limited, extended) as between-subjects factors. A significant main effect was revealed for hearing status, F(1, 46) = 9.1, p < .01, but not for bandwidth, F(1, 46) = 0.2, p = 0.63. There was no hearing status × bandwidth interaction, F(1, 46) = 0.07, p = 0.79. These results indicate that across groups the children with NH had significantly higher receptive vocabularies but within each group the children assigned to each bandwidth condition possessed similar vocabularies. Therefore, any difference between the word-learning rates of the children in each stimulus condition was not due to differences in receptive vocabulary.

Figure 4. Average raw scores for the PPVT as a function of hearing status (normal hearing and hearing loss). Error bars represent ±1 SE. The children enrolled in the limited and extended bandwidth conditions are indicated by open and filled bars, respectively. Standard scores are shown in parentheses.

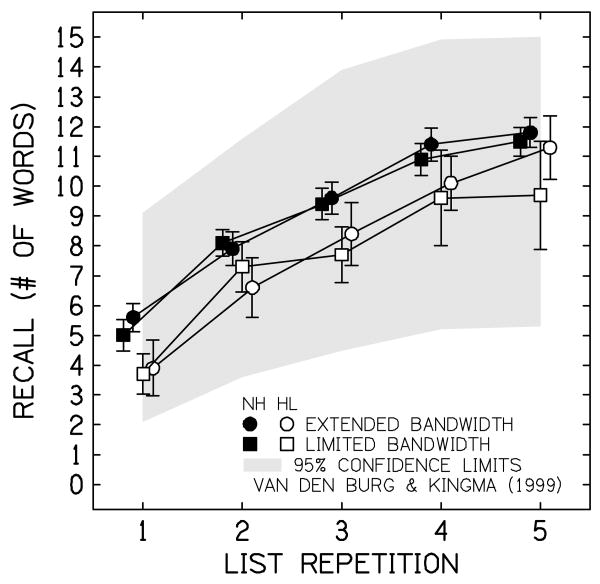

Figure 5 shows the mean AVLT scores (±1 SE) as a function of list repetition for the children with NH (filled symbols) and the children with HL (open symbols). The children enrolled in the extended and limited bandwidth conditions are indicated by circles and squares, respectively. The symbols have been jittered slightly to expose the overlapping error bars. The shaded area represents the 95% confidence intervals for typical 8- to 10-year-old children (van den Burg & Kingma, 1999). Recall that the AVLT is a measure of verbal memory and retention of familiar words. The average performance of each group suggests a systematic increase in the number of words retained with each repetition of the list. The children with HL, however, retained consistently fewer words across repetitions than the children with NH. Even so, the children assigned to the two stimulus conditions within each group demonstrated similar learning functions, suggesting that any difference between the word-learning rates across stimulus conditions was not due to differences in verbal memory and retention.

Figure 5. Average recall for the AVLT as a function of list repetition. Error bars represent ±1 SE. Children with NH and children with HL are represented by filled and open symbols, respectively. The children enrolled in the limited and extended bandwidth conditions are indicated by squares and circles, respectively. The shaded area represents the 95% confidence interval for typical 8- to 10-year-old children (van den Burg & Kingma, 1999).

Word learning

To characterize word learning, the data from the learning task were used to create growth functions for each of the 4 groups (2 hearing status × 2 bandwidth). The most efficient way to do this was to fit learning curves to the data and base any inferences on the parameter that characterized the curve. In this case, an exponential growth function was chosen, and the time constant of that function was used to indicate the number of exposures necessary to acquire new words. The exponential growth function is:

$$P_L = 1 - e^{-n/c} \tag{1}$$

where PL, the probability of learning, ranges from 0 to 1; e is 2.718…; n is the number of the trial block; and c is the time constant of the process. When the number of trial blocks equals the time constant (n = c), learning is almost 2/3 complete (PL = 0.63), and when n = 3c, learning is 95% complete.
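The two benchmark values quoted above can be checked numerically. The sketch below assumes that Equation 1 has the standard exponential growth form PL = 1 − e^(−n/c), which is the form consistent with those values; the function name and the example time constant are illustrative only.

```python
import math

def p_learned(n, c):
    """Probability of having learned after n trial blocks, time constant c."""
    return 1.0 - math.exp(-n / c)

c = 4.0  # arbitrary illustrative time constant
print(round(p_learned(c, c), 3))      # 0.632 when n = c
print(round(p_learned(3 * c, c), 3))  # 0.95 when n = 3c
```

Because only the ratio n/c enters the function, the same two values are obtained for any choice of c.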

In this experiment, a child who could make no discrimination at all would be correct just 1 time out of 5 by chance. Therefore, the floor of the performance was not 0% correct, but 20% correct. Thus, the model of learning, Equation 1, was corrected for chance to give a model of performance that could be used to map the data. The standard correction for guessing was used: the probability of being correct (PC) equals the probability of having learned (PL), plus the probability of not yet having learned (1-PL), times the probability of getting the answer by chance (PCh):

$$P_C = P_L + (1 - P_L)\,P_{Ch} \tag{2}$$

Putting this together with the learning model provided the predicted rate of improvement in performance:

$$P_C = 1 - (1 - P_{Ch})\,e^{-n/c} = 1 - 0.8\,e^{-n/c} \tag{3}$$

where PC is the probability of a correct answer, and, as before, n is the number of the trial block and c is the time constant for the learning process. With this formula, PC = 0.2 when n = 0 with the curve growing from that raised floor in a smooth fashion to 1.0. When n = c, performance is approximately 70% (70.57%) correct.
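The chance-corrected model can be verified against the values stated above. This sketch applies the correction for guessing as described in the text, with PCh = 1/5, to an exponential learning function of the assumed form PL = 1 − e^(−n/c); the function name and the example time constant are illustrative.

```python
import math

P_CHANCE = 1 / 5  # five response alternatives

def p_correct(n, c, p_chance=P_CHANCE):
    """Probability of a correct response after n trial blocks, corrected for guessing."""
    p_learned = 1.0 - math.exp(-n / c)
    return p_learned + (1.0 - p_learned) * p_chance

c = 4.0  # arbitrary illustrative time constant
print(round(p_correct(0, c), 4))  # 0.2, the chance floor
print(round(p_correct(c, c), 4))  # 0.7057, about 70% correct when n = c
```

This is why the 70% criterion serves as a direct estimate of the time constant: the number of trial blocks needed to reach roughly 70% correct is c itself.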

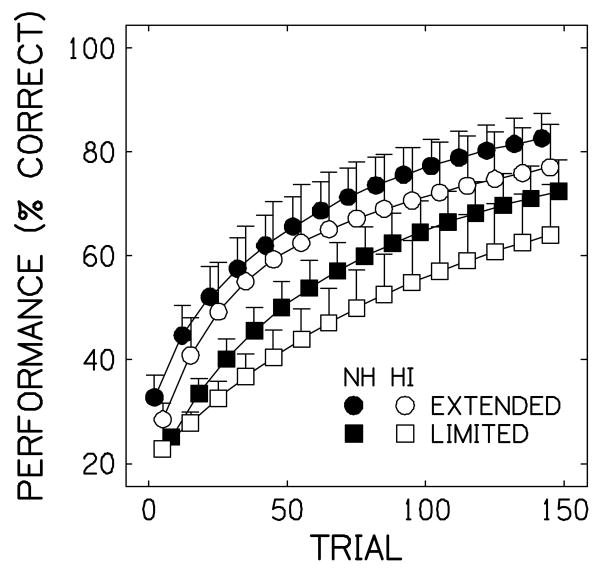

The advantage of using Equation 3 to model the data was that all data points in the learning process contributed to the determination of the time constant. Since the speed of learning—the key dependent variable—is simply 1/c, fitting Equation 3 to the data was the best way to address the experimental question. This was accomplished by adjusting estimates of c to minimize the sum of squared deviations between the data and the points predicted. Figure 6 shows the learning functions (+1 SE) for each group and bandwidth condition averaged across children. Hearing status is indicated by filled and unfilled symbols whereas bandwidth is indicated by circles and squares. The symbols have been jittered to expose the overlapping error bars. The slopes of the learning functions were greater for the children in the extended bandwidth condition as were the slopes for the children with NH. That is, the performance of the children in the extended bandwidth condition exceeded that of the children in the limited bandwidth condition as did the performance of the children with NH relative to the children with HL. These results suggest that the extended bandwidth condition allowed both groups of children to learn the words more rapidly than in the limited bandwidth condition.

Figure 6. Averaged learning functions (+1 SE) for the novel words as a function of trial number. Children with NH and children with HL are represented by filled and open symbols, respectively. The children enrolled in the limited and extended bandwidth conditions are indicated by squares and circles, respectively.
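The least-squares estimation of the time constant described above can be sketched as follows. The optimizer used in the study is not stated; a plain grid search over candidate values of c is assumed here for transparency. The model form (1 − 0.8e^(−n/c)), the function names, the search range, and the synthetic noise-free data are all assumptions for illustration and are not study data.

```python
import math

def p_correct(n, c, p_chance=0.2):
    """Chance-corrected exponential learning model."""
    return 1.0 - (1.0 - p_chance) * math.exp(-n / c)

def sum_squared_error(c, blocks, observed):
    """Sum of squared deviations between observed and predicted proportions correct."""
    return sum((obs - p_correct(n, c)) ** 2 for n, obs in zip(blocks, observed))

def fit_time_constant(blocks, observed):
    """Grid search over c from 0.10 to 100.00 in steps of 0.01."""
    candidates = [i / 100 for i in range(10, 10001)]
    return min(candidates, key=lambda c: sum_squared_error(c, blocks, observed))

# Synthetic data generated from the model with c = 5 (illustration only).
blocks = list(range(1, 16))
observed = [p_correct(n, 5.0) for n in blocks]

c_hat = fit_time_constant(blocks, observed)
print(c_hat)  # recovers a value at or very near 5.0
```

With real, noisy data a continuous optimizer would normally replace the grid, but the quantity being minimized is the same.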

To determine whether the acoustic characteristics of the input affected the number of exposures necessary for the children to learn new words, the number of exposures necessary to reach a performance of 70% was estimated for each child using the harmonic mean of the blocked trials. The harmonic mean, rather than the arithmetic or geometric mean, was used because it is appropriate for calculating the average of rates. It more accurately describes the number of trials required for learning and it tends to compensate for extreme outliers. The learning rates were then log transformed and subjected to a univariate ANOVA with hearing status (NH, HL) and bandwidth (limited, extended) as between-subjects factors. A significant main effect of bandwidth condition was revealed, F(1, 46) = 5.8, p = .02, but not an effect of hearing status, F(1, 46) = 1.8, p = .19. There was also no hearing status × bandwidth interaction, F(1, 46) = 0.6, p = .44. These results indicate that on average, the children required fewer exposures to learn the words in the extended bandwidth condition regardless of hearing status.
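The resistance of the harmonic mean to extreme values can be seen in a small example. The numbers below are hypothetical and chosen only to include one outlier; exactly how the harmonic mean was applied to the blocked trials is not detailed in the text, so this illustrates the general property rather than the study's computation.

```python
from statistics import harmonic_mean, mean

# Hypothetical exposures-to-criterion values (not study data); one extreme outlier.
exposures = [4, 5, 5, 6, 30]

arithmetic = mean(exposures)
harmonic = harmonic_mean(exposures)

print(arithmetic)           # pulled upward by the outlier
print(round(harmonic, 2))   # stays close to the typical values
```

The harmonic mean is the reciprocal of the mean of the reciprocals, so it averages the rates (1/exposures) and then converts back to exposures, which is why a single very slow case has limited influence.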

Two additional analyses were conducted to address directly the primary question of interest: did the word-learning rate of each group increase in the extended bandwidth condition? A one-tailed t-test was used to compare the learning rates of the children with NH in each bandwidth condition. The results revealed a significant difference between the bandwidth conditions, t(34) = 1.8, p = .04, d = .75, and suggest that these children required significantly fewer exposures to learn the new words in the extended bandwidth condition. The effect size indicates that, on average, the rate of word learning by the children in the extended bandwidth condition exceeded that of the children in the limited bandwidth condition by 0.75 standard deviations. An additional one-tailed t-test was conducted to compare the learning rates of the children with HL as a function of bandwidth. No significant difference between the learning rates in each bandwidth condition was revealed, t(12) = 1.4, p = .10. Although the learning functions for these children suggest a large effect of bandwidth (see Figure 6), the lack of significance is likely due to the smaller number of children in this group.
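For readers unfamiliar with the effect size d, the sketch below shows one common definition, the difference between two group means divided by the pooled standard deviation (Cohen's d). The text does not state which formula was used, so this definition is an assumption, and the two small samples are hypothetical values chosen for easy arithmetic.

```python
import math
from statistics import mean, variance

def cohens_d(group_a, group_b):
    """Mean difference divided by the pooled standard deviation."""
    na, nb = len(group_a), len(group_b)
    pooled_var = ((na - 1) * variance(group_a) + (nb - 1) * variance(group_b)) / (na + nb - 2)
    return (mean(group_a) - mean(group_b)) / math.sqrt(pooled_var)

# Hypothetical values (not study data): means 4 and 3, pooled SD 2.
group_a = [2, 4, 6]
group_b = [1, 3, 5]
d = cohens_d(group_a, group_b)
print(d)  # 0.5, i.e., the means differ by half a pooled standard deviation
```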

Number of Exposures Required for Learning

On average, the children with NH required 20 trials to achieve the criterion performance level (70%) in the extended bandwidth condition whereas 72 trials were necessary in the limited bandwidth condition. For the children with HL, 43 trials were necessary to achieve the criterion performance in the extended bandwidth condition whereas 121 trials were necessary in the limited bandwidth condition. For easier interpretation, the learning rate for a single word in these conditions was also calculated using the same criterion performance (70%). On average, the children with NH required 5 trials to learn each word in the extended bandwidth condition whereas 16 trials were required in the limited bandwidth condition. The children with HL required 10 trials to learn each word in the extended bandwidth condition whereas 27 trials were necessary in the limited bandwidth condition. The results suggest that, compared to the children with NH, the children with HL required approximately twice the number of exposures to learn each word. Also, although the group effect was not statistically significant, the children with NH and with HL required 3 times as many trials to learn the new words in the limited bandwidth condition.
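The ratios behind the "approximately twice" and "3 times" statements can be computed directly from the trial counts reported above. The sketch uses only those reported totals; the dictionary layout and variable names are illustrative.

```python
# Total trials to reach the 70% criterion, as reported in the text.
trials_to_criterion = {
    ("NH", "extended"): 20,
    ("NH", "limited"): 72,
    ("HL", "extended"): 43,
    ("HL", "limited"): 121,
}

# Limited relative to extended bandwidth, within each group.
bandwidth_ratio = {
    group: trials_to_criterion[(group, "limited")] / trials_to_criterion[(group, "extended")]
    for group in ("NH", "HL")
}

# HL relative to NH, within each bandwidth condition.
group_ratio = {
    bw: trials_to_criterion[("HL", bw)] / trials_to_criterion[("NH", bw)]
    for bw in ("extended", "limited")
}

print({k: round(v, 2) for k, v in bandwidth_ratio.items()})
print({k: round(v, 2) for k, v in group_ratio.items()})
```

The bandwidth ratios fall on either side of 3 (about 3.6 for NH and 2.8 for HL), and the group ratios fall on either side of 2 (about 2.2 in the extended and 1.7 in the limited condition), so both summary statements are rounded averages rather than exact multiples.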

Discussion

The purpose of the present study was to determine the short-term word-learning rate of children as a function of their hearing status and the bandwidth of the speech signal. It was hypothesized that word learning would be significantly affected by the acoustic parameters of the physical signal. The principal result from this study suggests that, regardless of hearing status, the children learned words significantly faster (fewer exposures were necessary) when they were provided with a speech signal that encompassed a bandwidth similar to that of normal hearing. Conversely, the children learned words more slowly (more exposures were necessary) when they were provided with a limited speech signal.

These results are consistent with the results of studies showing that children with HL possess significantly smaller vocabularies than children with NH and may explain, in part, why they are unable to learn words as well as their normally-hearing peers (Briscoe, Bishop, & Norbury 2001; Gilbertson & Kamhi 1995; Hansson et al., 2004). That is, the amplified signal provided to children with HL may be insufficient to promote optimal word learning. These results also suggest that the phonological difficulties demonstrated by children with HL may be associated with the somewhat ambiguous signal they receive through their hearing aids. Because word learning requires a child to perceive the subtle acoustic elements of the word to distinguish it from other similar words, they may require more exposures to overcome the ambiguous signal they receive. That ambiguity also may be responsible, in part, for the poorer performance demonstrated by children with HL on phonological processing tasks (Briscoe, Bishop, & Norbury 2001; Hansson et al., 2004) and would suggest that some speech and language measures are sensitive to variations in the integrity of the acoustic signal. For example, a common test of phonological processing requires that the child repeat aloud nonsense words that increase in syllable length. It is not difficult to imagine that a child receiving a limited acoustic signal would find it difficult to repeat the word, much like trying to perceive an unfamiliar name or term over the telephone.

Recall that the qualitative difference between the limited and extended bandwidth conditions is quite subtle, particularly when perceiving familiar speech. The results of this study suggest that the qualitative contributions of high-frequency energy to speech perception may be quantitative over the long term for children who are listening to and learning unfamiliar speech. In addition to the subtle effects of high-frequency amplification, it is possible that other amplification characteristics (e.g., frequency compression, noise reduction, directional microphones) also may interact with word learning in children with HL. To date, no studies are available regarding the effect of these amplification characteristics on word learning. The consistent difference, and in some studies the increasing difference, between the receptive vocabularies of children with HL and age-matched children with NH (Blamey et al., 2001; Pittman et al., 2005) suggests an urgent need to determine those amplification parameters that provide a comprehensive acoustic signal so that word learning may be optimized in children with HL.

Limitations of the Current Study

It is important to recognize the limitations of the short-term paradigm used in the present study as well as the results of that paradigm. First, the controlled conditions necessary to examine an acoustic effect (e.g., sound treated room, high fidelity earphones) limit the generalization of the results because the test conditions are far from most naturalistic learning environments. It is possible that the benefits of an extended bandwidth may be enhanced or reduced by such things as speech reading, contextual cues, background noise, and distance from the talker. Second, and most important, the number of exposures necessary to learn the words in this short-term paradigm should not be interpreted as the number of exposures that a child may require over a more extended period of time or under different circumstances. Instead, they should be interpreted in relative terms and in the context of the acoustic parameters under examination.

Finally, it is important to recall that children with HL are a highly heterogeneous population. In addition to the factors that contribute to the variability of all children (e.g., age, IQ), children with HL also vary in terms of the etiology of the hearing loss, the degree and configuration of the hearing loss, the age at which they were identified and provided with intervention, the hearing aid make, model, and features prescribed to them, their mode of communication, the consistency with which they use their hearing aids or attend intervention programs, and the support they receive from their parents and other family members. Not only do these factors make it difficult to recruit a homogeneous group of children for research, but they also make the application of the results to this population more questionable. It is likely that each child with HL requires a unique intervention plan to optimize their auditory processing. However, because some direction regarding the best course of action is helpful, children with NH are also examined (as was done in the present study) to confirm the benefits of a particular form of intervention in the absence of the factors that can confound the performance of children with HL.

Future Directions for Research

The results of the present study suggest that subtle variations in the acoustic signal may affect long-term auditory processes in children. Although extending the bandwidth of a signal is perceived as an increase in sound quality (Munro & Lutman 2005; Versfeld, Festen, & Houtgast 1999), that increased quality appears to have a quantitative effect in children who use their hearing primarily to learn. More research is needed to determine the extent to which other seemingly subtle properties of the amplified signal affect long-term auditory processes in children. For example, noise-reduction algorithms may have negligible effects on speech recognition thresholds (Alcantara et al., 2003) but may have a significant cumulative effect on long-term processes like word learning (Marcoux et al., 2006). Likewise, frequency transposition may not improve speech perception significantly in the short term (McDermott et al., 1999; McDermott & Dean 2000; McDermott & Knight 2001) but may improve word learning over time. The learning paradigm employed in the present study may be a useful tool for tapping into the word-learning process to evaluate the effect of these and other amplification characteristics.

Acknowledgments

The author would like to thank Christina Sergi for her help with data collection and manuscript preparation, Peter Killeen for his help with data analyses, and Mary Pat Moeller for her comments on earlier versions of this manuscript.

Footnotes

Phonotactic probability is the likelihood of certain phoneme sequences occurring in a particular order within a given language (Vitevitch, Luce, Charles-Luce, & Kemmerer, 1997; Vitevitch, Luce, Pisoni, & Auer, 1999).

Reference List

- Alcantara JL, Moore BC, Kuhnel V, Launer S. Evaluation of the noise reduction system in a commercial digital hearing aid. International Journal of Audiology. 2003;42:34–42. doi: 10.3109/14992020309056083.

- Blamey PJ, Sarant JZ, Paatsch LE, Barry JG, Bow CP, Wales RJ, Wright M, Psarros C, Rattigan K, Tooher R. Relationships among speech perception, production, language, hearing loss, and age in children with impaired hearing. Journal of Speech, Language, and Hearing Research. 2001;44:264–285. doi: 10.1044/1092-4388(2001/022).

- Bloom P. First words. How Children Learn the Meanings of Words. 2000:1–23. doi: 10.1017/s0140525x01000139.

- Boothroyd A, Boothroyd-Turner D. Postimplantation audition and educational attainment in children with prelingually acquired profound deafness. Annals of Otology, Rhinology, and Laryngology. 2002;189:79–84. doi: 10.1177/00034894021110s517.

- Briscoe J, Bishop DV, Norbury CF. Phonological processing, language, and literacy: a comparison of children with mild-to-moderate sensorineural hearing loss and those with specific language impairment. Journal of Child Psychology and Psychiatry and Allied Disciplines. 2001;42:329–340.

- Davis JM, Elfenbein J, Schum R, Bentler RA. Effects of mild and moderate hearing impairments on language, educational, and psychosocial behavior of children. Journal of Speech and Hearing Disorders. 1986;51:53–62. doi: 10.1044/jshd.5101.53.

- Denes PB. On the statistics of spoken English. Journal of the Acoustical Society of America. 1963;35:892–905.

- Dunn LM, Dunn LM. Peabody Picture Vocabulary Test III. Circle Pines, MN: American Guidance Services, Inc.; 2006.

- Gilbertson M, Kamhi AG. Novel word learning in children with hearing impairment. Journal of Speech and Hearing Research. 1995;38:630–642. doi: 10.1044/jshr.3803.630.

- Gilman S, Dirks DD. Acoustics of ear canal measurement of eardrum SPL in simulators. Journal of the Acoustical Society of America. 1986;80:783–793. doi: 10.1121/1.393953.

- Hansson K, Forsberg J, Lofqvist A, Maki-Torkko E, Sahlen B. Working memory and novel word learning in children with hearing impairment and children with specific language impairment. International Journal of Language and Communication Disorders. 2004;39:401–422. doi: 10.1080/13682820410001669887.

- Kortekaas RW, Stelmachowicz PG. Bandwidth effects on children's perception of the inflectional morpheme /s/: acoustical measurements, auditory detection, and clarity rating. Journal of Speech, Language, and Hearing Research. 2000;43:645–660. doi: 10.1044/jslhr.4303.645.

- Lederberg AR, Prezbindowski AK, Spencer PE. Word-learning skills of deaf preschoolers: the development of novel mapping and rapid word-learning strategies. Child Development. 2000;71:1571–1585. doi: 10.1111/1467-8624.00249.

- Lezak MD, Howieson DB, Loring DW. Neuropsychological Assessment. New York, NY: Oxford University Press; 2004.

- Marcoux AM, Yathiraj A, Cote I, Logan J. The effect of a hearing aid noise reduction algorithm on the acquisition of novel speech contrasts. International Journal of Audiology. 2006;45:707–714. doi: 10.1080/14992020600944416.

- McDermott HJ, Dean MR. Speech perception with steeply sloping hearing loss: effects of frequency transposition. British Journal of Audiology. 2000;34:353–361. doi: 10.3109/03005364000000151.

- McDermott HJ, Dorkos VP, Dean MR, Ching TY. Improvements in speech perception with use of the AVR TranSonic frequency-transposing hearing aid. Journal of Speech, Language and Hearing Research. 1999;42:1323–1335. doi: 10.1044/jslhr.4206.1323.

- McDermott HJ, Knight MR. Preliminary results with the AVR ImpaCt frequency-transposing hearing aid. Journal of the American Academy of Audiology. 2001;12:121–127.

- Munro KJ, Lutman ME. Sound quality judgments of new hearing instrument users over a 24-week post-fitting period. International Journal of Audiology. 2005;44:92–101. doi: 10.1080/14992020500031090.

- Oetting JB, Rice ML, Swank LK. Quick Incidental Learning (QUIL) of words by school-age children with and without SLI. Journal of Speech and Hearing Research. 1995;38:434–445. doi: 10.1044/jshr.3802.434.

- Pittman AL, Lewis DE, Hoover BM, Stelmachowicz PG. Rapid word-learning in normal-hearing and hearing-impaired children: effects of age, receptive vocabulary, and high-frequency amplification. Ear and Hearing. 2005;26:619–629. doi: 10.1097/01.aud.0000189921.34322.68.

- Rice ML, Buhr JC, Nemeth M. Fast mapping word-learning abilities of language-delayed preschoolers. Journal of Speech and Hearing Disorders. 1990;55:33–42. doi: 10.1044/jshd.5501.33.

- Rice ML, Oetting JB, Marquis J, Bode J, Pae S. Frequency of input effects on word comprehension of children with specific language impairment. Journal of Speech and Hearing Research. 1994;37:106–122. doi: 10.1044/jshr.3701.106.

- Seewald RC, Cornelisse LE, Ramji KV, Sinclair ST, Moodie KS, Jamieson DG. DSL v4.1 for Windows: A software implementation of the Desired Sensation Level (DSL[i/o]) Method for fitting linear gain and wide-dynamic-range compression hearing instruments. User's manual. London, ON: The University of Western Ontario Hearing Health Care Research Unit; 1997.

- Stelmachowicz PG, Pittman AL, Hoover BM, Lewis DE. Effect of stimulus bandwidth on the perception of /s/ in normal- and hearing-impaired children and adults. Journal of the Acoustical Society of America. 2001;110:2183–2190. doi: 10.1121/1.1400757.

- Stelmachowicz PG, Pittman AL, Hoover BM, Lewis DE. Aided perception of /s/ and /z/ by hearing-impaired children. Ear and Hearing. 2002;23:316–324. doi: 10.1097/00003446-200208000-00007.

- Stelmachowicz PG, Pittman AL, Hoover BM, Lewis DE. Novel-word learning in children with normal hearing and hearing loss. Ear and Hearing. 2004a;25:47–56. doi: 10.1097/01.AUD.0000111258.98509.DE.

- Stelmachowicz PG, Pittman AL, Hoover BM, Lewis DE, Moeller MP. The importance of high-frequency audibility in the speech and language development of children with hearing loss. Archives of Otolaryngology - Head and Neck Surgery. 2004b;130:556–562. doi: 10.1001/archotol.130.5.556.

- Stelmachowicz PG, Lewis DE, Choi S, Hoover B. Effect of stimulus bandwidth on auditory skills in normal-hearing and hearing-impaired children. Ear and Hearing. 2007;28:483–494. doi: 10.1097/AUD.0b013e31806dc265.

- Storkel HL. Learning new words: phonotactic probability in language development. Journal of Speech, Language, and Hearing Research. 2001;44:1321–1337. doi: 10.1044/1092-4388(2001/103).

- Storkel HL. Learning new words II: Phonotactic probability in verb learning. Journal of Speech, Language, and Hearing Research. 2003;46:1312–1323. doi: 10.1044/1092-4388(2003/102).

- Taylor EM. Psychological Appraisal of Children with Cerebral Defects. Cambridge, MA: Harvard University Press; 1959.

- van den Burg W, Kingma A. Performance of 225 Dutch school children on Rey's Auditory Verbal Learning Test (AVLT): parallel test-retest reliabilities with an interval of 3 months and normative data. Archives of Clinical Neuropsychology. 1999;14:545–559. doi: 10.1016/s0887-6177(98)00042-0.

- Versfeld NJ, Festen JM, Houtgast T. Preference judgments of artificial processed and hearing-aid transduced speech. Journal of the Acoustical Society of America. 1999;106:1566–1578. doi: 10.1121/1.428035.

- Vitevitch MS, Luce PA. A web-based interface to calculate phonotactic probability for words and nonwords in English. Behavior Research Methods, Instruments, and Computers. 2004;36:481–487. doi: 10.3758/bf03195594.

- Vitevitch MS, Luce PA, Charles-Luce J, Kemmerer D. Phonotactics and syllable stress: implications for the processing of spoken nonsense words. Language and Speech. 1997;40(Pt 1):47–62.

- Vitevitch MS, Luce PA, Pisoni DB, Auer ET. Phonotactics, neighborhood activation, and lexical access for spoken words. Brain and Language. 1999;68:306–311. doi: 10.1006/brln.1999.2116.