Abstract

One of the major lessons of memory research has been that human memory is fallible, imprecise, and subject to interference. Thus, although observers can remember thousands of images, it is widely assumed that these memories lack detail. Contrary to this assumption, here we show that long-term memory is capable of storing a massive number of objects with details from the image. Participants viewed pictures of 2,500 objects over the course of 5.5 h. Afterward, they were shown pairs of images and indicated which of the two they had seen. The previously viewed item could be paired with either an object from a novel category, an object of the same basic-level category, or the same object in a different state or pose. Performance in each of these conditions was remarkably high (92%, 88%, and 87%, respectively), suggesting that participants successfully maintained detailed representations of thousands of images. These results have implications for cognitive models, in which capacity limitations impose a primary computational constraint (e.g., models of object recognition), and pose a challenge to neural models of memory storage and retrieval, which must be able to account for such a large and detailed storage capacity.

Keywords: object recognition, gist, fidelity

We have all had the experience of watching a movie trailer and having the overwhelming feeling that we can see much more than we could possibly report later. This subjective experience is consistent with research on human memory, which suggests that as information passes from sensory memory to short-term memory and to long-term memory, the amount of perceptual detail stored decreases. For example, within a few hundred milliseconds of perceiving an image, sensory memory confers a truly photographic experience, enabling you to report any of the image details (1). Seconds later, short-term memory enables you to report only sparse details from the image (2). Days later, you might be able to report only the gist of what you had seen (3).

Whereas long-term memory is generally believed to lack detail, it is well established that long-term memory can store a massive number of items. Landmark studies in the 1970s demonstrated that after viewing 10,000 scenes for a few seconds each, people could determine which of two images had been seen with 83% accuracy (4). This level of performance indicates the existence of a large storage capacity for images.

However, remembering the gist of an image (e.g., “I saw a picture of a wedding not a beach”) requires the storage of much less information than remembering the gist and specific details (e.g., “I saw that specific wedding picture”). Thus, to truly estimate the information capacity of long-term memory, it is necessary to determine both the quantity of items that can be remembered and the fidelity (amount of detail) with which each item is remembered. This point highlights an important limitation of large-scale memory studies (4–6): the level of detail required to succeed at the memory tests was not systematically examined. In these studies, the stimuli were images taken from magazines, and the foil items used in the two-alternative forced-choice tests were random images drawn from the same set (4). Thus, the foil items were typically quite different from the studied images, making it impossible to conclude whether the memories for each item in these previous experiments consisted of only the “gist” or category of the image, or whether they contained specific details about the images. Therefore, it remains unclear exactly how much visual information can be stored in human long-term memory.

There are reasons for thinking that the memories for each item in these large-scale experiments might have consisted of only the gist or category of the image. For example, a well-known body of research has shown that human observers often fail to notice significant changes in visual scenes, for instance, if their conversation partner is switched to another person, or if large background objects suddenly disappear (7, 8). These “change blindness” studies suggest that the amount of information we remember about each item may be quite low (8). In addition, it has been elegantly demonstrated that the details of visual memories can easily be interfered with by experimenter suggestion, a matter of serious concern for eyewitness testimony, as well as another indication that visual memories might be very sparse (9). Taken together, these results have led many to infer that the representations used to remember the thousands of images from the experiments of Shepard (5) and Standing (4) were in fact quite sparse, with few or no details about the images except for their basic-level categories (8, 10–12).

However, recent work has also suggested that visual long-term memory representations can be more detailed than previously believed. Long-term memory for objects in scenes can contain more information than only the gist of the object (13–16). For instance, Hollingworth (13) showed that, when requiring memory for a hundred or more objects, observers remain significantly above chance at remembering which exemplar of an object they have seen (e.g., “did you see this power drill or that one?”) even after seeing up to 400 objects in between studying the object and being tested on it. This result suggests that memory is capable of storing fairly detailed visual representations of objects over long time periods (e.g., longer than working memory).

The current study was designed to estimate the information capacity of visual long-term memory by simultaneously pushing the system in terms of both the quantity and the fidelity of the representations that must be stored. First, we used isolated objects that were not embedded in scenes, to control the conceptual content of the stimulus set more systematically and to exclude contextual cues that may have contributed to memory performance in previous experiments. In addition, we used very subtle visual discriminations to probe the fidelity of the visual representations. Last, we had people remember several thousand objects. Combined, these manipulations enable us to estimate a new bound on the capacity of memory to store visual information.

Results

Observers were presented with pictures of 2,500 real-world objects for 3 s each. The experiment instructions and displays were designed to optimize the encoding of object information into memory. First, observers were informed that they should try to remember all of the details of the items (17). Second, objects from mostly distinct basic-level categories were chosen to minimize conceptual interference (18). Last, memory was tested with a two-alternative forced-choice test, in which a studied item was paired with a foil and the task was to choose the studied item, allowing for recognition memory rather than recall memory (as in ref. 4).

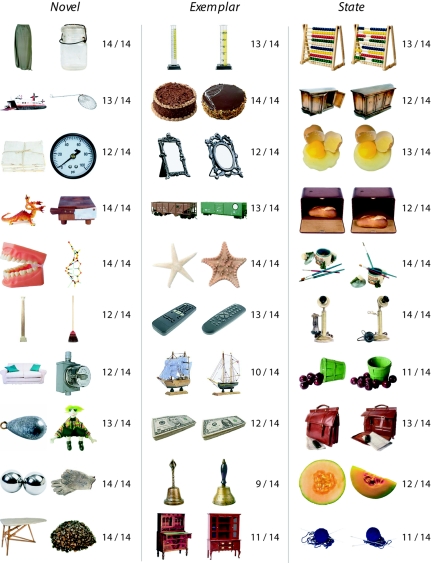

We varied the similarity of the studied item and the foil item in three ways (Fig. 1). In the novel condition, the old item was paired with a new item that was categorically distinct from all of the previously studied objects. In this case, remembering the category of the object, even without remembering the visual details of the object, would be sufficient to choose the appropriate item. In the exemplar condition, the old item was paired with a physically similar new object from the same basic-level category. In this condition, remembering only the basic-level category of the object would result in chance performance. Last, in the state condition, the old item was paired with a new item that was exactly the same object, but appeared in a different state or pose. In this condition, memory for the category of the object, or even for the identity of the object, would be insufficient to select the old item from the pair. Thus, memory for specific details from the image is required to select the appropriate object in both the exemplar and state conditions. Critically, observers did not know during the study session which items of the 2,500 would be tested afterward, nor what they would be tested against. Thus, any strategic encoding of a specific detail that would distinguish between the item and the foil was not possible. To perform well on average in both the exemplar and the state conditions, observers would have to encode many specific details from each object.

Fig. 1.

Example test pairs presented during the two-alternative forced-choice task for all three conditions (novel, exemplar, and state). The number of observers reporting the correct item is shown for each of the depicted pairs. The experimental stimuli are available from the authors.

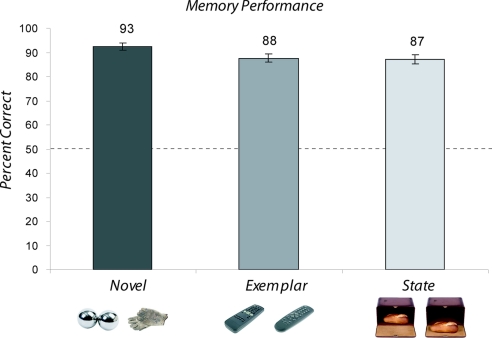

Performance was remarkably high in all three of the test conditions in the two-alternative forced-choice test (Fig. 2). As anticipated based on previous research (4), performance was high in the novel condition, with participants correctly reporting the old item on 92.5% (SEM, 1.6%) of the trials. Surprisingly, performance was also exceptionally high in both conditions that required memory for details from the images: on average, participants responded correctly on 87.6% (SEM, 1.8%) of exemplar trials, and 87.2% (SEM, 1.8%) of the state trials. A one-way repeated measures ANOVA revealed a significant effect of condition, F(2,26) = 11.3, P < 0.001. Planned pairwise t tests show that performance in the novel condition was significantly more accurate than in the exemplar and state conditions [novel vs. exemplar: t(13) = 3.4, P < 0.01; novel vs. state: t(13) = 4.3, P < 0.01; and exemplar vs. state, n.s. P > 0.10]. However, reaction times were slowest in the state condition, intermediate in the exemplar condition, and fastest in the novel condition [M = 2.58 s, 2.42 s, 2.28 s, respectively; novel vs. exemplar: t(13) = 1.81, P = 0.09; novel vs. state: t(13) = 4.05, P = 0.001; and exemplar vs. state: t(13) = 2.71, P = 0.02], consistent with the idea that the novel, exemplar, and state conditions required increasing detail. Participant reports afterward indicated that they were usually explicitly aware of which item they had seen, as they expressed confidence in their performance and volunteered information about the details that enabled them to pick the correct items.
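As a quick consistency check, the degrees of freedom reported above follow from the design: 14 participants (see Materials and Methods) and three within-subject conditions. The Python sketch below reproduces only that arithmetic. The variable names are illustrative, and the F and t values themselves cannot be recomputed without the raw data.

```python
# Degrees of freedom for a one-way repeated-measures design.
n_participants = 14  # reported in Materials and Methods
n_conditions = 3     # novel, exemplar, state

df_condition = n_conditions - 1                       # numerator df of the F test
df_error = (n_conditions - 1) * (n_participants - 1)  # denominator df of the F test
df_paired_t = n_participants - 1                      # df of each paired t test

print(f"F({df_condition},{df_error}), t({df_paired_t})")  # prints "F(2,26), t(13)"
```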

Fig. 2.

Memory performance for each of the three test conditions (novel, exemplar, and state) is shown above. Error bars represent SEM. The dashed line indicates chance performance.

During the presentation of the 2,500 objects, participants monitored for any repeat images. Unbeknownst to the participants, these repeats occurred anywhere from 1 to 1,024 images previously in the sequence (on average, one in eight images was a repeat). This task ensured that participants were actively attending to the stream of images as they were presented and provided an online estimate of memory storage capacity over the course of the entire study session.

Performance on the repeat-detection task also demonstrated remarkable memory. Participants rarely false-alarmed (1.3%; SEM, ± 1%), and were highly accurate in reporting actual repeats (96% overall; SEM, ± 1%). Accuracy was near ceiling for repeat images with up to 63 intervening items, and declined gradually for detecting repeat items with more intervening items (supporting information (SI) Text and Fig. S1). Even in the longest condition of 1,023 intervening items (i.e., items that were initially presented ≈2 h previously), the repeats were detected ≈80% of the time. The correlation between the performance of the observers at the repeat-detection task and their performance in the forced choice was high (r = 0.81). The error rate as a function of number of intervening items fits well with a standard power law of forgetting (r² = 0.98). The repeat-detection task also shows that this high-capacity memory arises not only in two-alternative forced-choice tasks, but also in ongoing old/new recognition tasks, although the repeat-detection task did not probe for more detailed representations beyond the category level. Together with the memory test, these results indicate a massive-capacity memory system, in terms of both the quantity and fidelity of the visual information that can be remembered.
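For readers who wish to fit a power law of forgetting to their own repeat-detection data, one common approach is ordinary least squares on log-transformed values. The Python sketch below illustrates that approach only. The function name `fit_power_law` is hypothetical, the input values are synthetic and noise-free (they are not the data from this experiment), and the exact fitting procedure behind the reported fit is not specified in the main text, so this should not be read as a reproduction of it.

```python
import math

def fit_power_law(lags, error_rates):
    """Fit error = a * lag**b by least squares in log-log space.

    Returns (a, b, r2), where r2 is computed on the log-transformed values.
    """
    xs = [math.log(lag) for lag in lags]
    ys = [math.log(err) for err in error_rates]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    b = sxy / sxx                      # slope = power-law exponent
    a = math.exp(mean_y - b * mean_x)  # intercept = scale factor
    r2 = sxy ** 2 / (sxx * syy)        # squared correlation in log space
    return a, b, r2

# Synthetic placeholder values generated from an exact power law
# (a = 0.01, b = 0.4); these are NOT measurements from the study.
lags = [2 ** k for k in range(11)]  # 1-back, 2-back, ..., 1,024-back
synthetic_errors = [0.01 * lag ** 0.4 for lag in lags]

a, b, r2 = fit_power_law(lags, synthetic_errors)
```

Because the lag is expressed as n-back (1 to 1,024) rather than as the number of intervening items (0 to 1,023), the logarithm is defined for every condition.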

Discussion

We found that observers could successfully remember details about thousands of images after only a single viewing. What do these data say about the information capacity of visual long-term memory? It is known from previous research that people can remember large numbers of pictures (4–6) but it has often been assumed that they were storing only the gist of these images (8, 10–12). Whereas some evidence suggested observers are capable of remembering details about a few hundred objects over long time periods (13), to our knowledge, no experiment had previously demonstrated accurate memory at the exemplar or state level on such a large scale. The present results demonstrate visual memory is a massive store that is not exhausted by a set of 2,500 detailed representations of objects. Importantly, these data cannot reveal the format of these representations, and should not be taken to suggest that observers have a photographic-like memory (19). Further work is required to understand how the details from the images are encoded and stored in visual long-term memory.

The Information Capacity of Memory.

Memory capacity cannot solely be characterized by the number of items stored: a proper capacity estimate takes into account the number of items remembered and multiplies this by the amount of information per item. In the present experiment we show that the information remembered per item is much higher than previously believed, as observers can correctly choose among visually similar foils. Therefore, any estimate of long-term memory capacity will be significantly increased by the present data. Ideally, we could quantify this increase, for example, by using information-theoretic bits, in terms of the actual visual code used to represent the objects. Unfortunately, one must know how the brain encodes visual information into memory to truly quantify capacity in this way.

However, Landauer (20) provided an alternate method for quantifying the capacity of memory by calculating the number of bits required to correctly make a decision about which items have been seen and which have not (21). Rather than assign images codes based on visual similarity, this model assigns each image a random code regardless of its visual appearance. Memory errors happen when two images are assigned the same code. In this model the optimal code length is computed from the total number of items to remember and the percentage correct achieved on a two-alternative forced-choice task (see SI Text). Importantly, this model does not take into account the content of the remembered items: the same code length would be obtained if people remembered 80% of 100 natural scenes or 80% of 100 colored letters. In other words, the bits in the model refer to content-independent memory addresses, and not estimated codes used by the visual system.
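The content independence of such a random-code model can be made concrete with a small Python sketch. The collision rule used here is our simplifying assumption and is not taken from the SI Text: a foil is confusable when its random code matches any stored code, and the observer then guesses. The function name `two_afc_accuracy` is hypothetical.

```python
def two_afc_accuracy(n_items: int, bits: float) -> float:
    """Expected 2AFC accuracy under an ASSUMED random-code collision model."""
    n_codes = 2.0 ** bits
    # Probability that the foil's random code matches at least one stored code.
    p_collision = 1.0 - (1.0 - 1.0 / n_codes) ** n_items
    # On a collision both items appear "old", so the observer guesses (50% correct).
    return 1.0 - 0.5 * p_collision

# Inputs are only the number of items and the code length; image content never enters.
accuracy = two_afc_accuracy(n_items=2500, bits=13.8)
print(round(accuracy, 2))  # prints 0.92
```

Under this assumed rule the function depends only on the number of stored items and the code length, which is the sense in which the bits refer to memory addresses rather than to visual codes.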

Given the 93% performance in the novel condition, the optimal code would require 13.8 bits per item, which is comparable with estimates of 10–14 bits needed for previous large-scale experiments (20). To expand on the model of Landauer, we assume a hierarchical model of memory where we first specify the category and the additional bits of information specify the exemplar and state of the item in that category (see SI Text). To match 88% performance in the exemplar condition, 2.0 additional bits are required for each item. Similarly, 2.0 additional bits are required to achieve 87% correct in the state condition. Thus, we increase the estimated code length from 13.8 to 17.8 bits per item. This raises the lower bound on our estimate of the representational capacity of long-term memory by an order of magnitude, from ≈14,000 (2^13.8) to ≈228,000 (2^17.8) unique codes. This number does not tell us the true visual information capacity of the system. However, this model is a formal way of demonstrating how quickly any estimate of memory capacity grows if we increase the size of the representation of each individual object in memory.
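The arithmetic behind these code counts is straightforward to verify. The Python sketch below takes the bit values stated above as given (it does not derive them from the accuracy data) and converts code length to the number of unique codes; the variable names are illustrative.

```python
bits_novel = 13.8     # code length for the novel condition, as stated above
extra_exemplar = 2.0  # additional bits for exemplar-level memory
extra_state = 2.0     # additional bits for state-level memory

bits_total = bits_novel + extra_exemplar + extra_state  # 17.8 bits per item

codes_before = 2 ** bits_novel  # roughly 14,000 unique codes
codes_after = 2 ** bits_total   # roughly 228,000 unique codes
growth = codes_after / codes_before  # 2**4 = 16, i.e., about an order of magnitude

print(round(codes_before), round(codes_after), round(growth))
```

Each added bit doubles the number of distinguishable codes, so the 4.0 additional bits multiply the count by 16.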

Why examine the capacity of people to remember visual information? One reason is that the evolution of more complicated cognition and behavioral repertoires involved the gradual enlargement of the long-term memory capacities of the brain (22). In particular, there are reasons to believe the capacity of our memory systems to store perceptual information may be a critical factor in abstract reasoning (23, 24). It has been argued, for example, that abstract conceptual knowledge that appears amodal and abstracted from actual experience (25) may in fact be grounded in perceptual knowledge (e.g., perceptual symbol systems; see ref. 26). Under this view, abstract conceptual properties are created on the fly by mental simulations on perceptual knowledge. This view suggests an adaptive significance for the ability to encode a large amount of information in memory: storing large amounts of perceptual information allows abstraction based on all available information, rather than requiring a decision about what information might be necessary at some later point in time (24, 27).

Organization of Memory.

All 2,500 items in our study stream were categorically distinct and thus had different high level, conceptual representations. Long-term memory is often seen as organized by conceptual similarity (e.g., in spreading activation models; see refs. 28 and 29). Thus, the conceptual distinctiveness of the objects may have reduced interference between them and helped support the remarkable memory performance we observed (18). In addition, recent work has suggested that the representation of the perceptual features of an object may often differ depending on the category the object is drawn from (30). Taken together, these ideas suggest an important role for categories and concepts in the storage of the visual details of objects, an important area of future research.

Another possible distinction in the organization of memory is between memory for objects, memory for collections of objects, and memory for scenes. Whereas some work has shown that it is possible to remember details from scenes drawn from the same category (15), future work is required to examine massive and detailed memory for complex scenes.

Familiarity versus Recollection.

The literature on long-term memory frequently distinguishes between two types of recognition memory: familiarity, the sense that you have seen something before; and recollection, specific knowledge of where you have seen it (31). However, there remains controversy over the extent to which these types of memory can be dissociated, and the extent to which forced-choice judgments tap into familiarity more than recollection or vice versa (32). In addition, whereas some have argued that perceptual information is often more associated with familiarity and conceptual information is associated more with recollection (31), this view also remains disputed (32, 33). Thus, the relative extent to which the observers' choices in the current two-alternative forced-choice tests were based on familiarity versus recollection is unclear. Given the perceptual nature of the details required to select the studied item, it is likely that familiarity has a major role, and that recollection aids recognition performance on the subset of trials in which observers were explicitly aware of the details that were most helpful to their decision (a potentially large subset of trials, based on self-reports). Importantly, however, whether observers were depending on recollection or familiarity alone, the stored representation still requires enough detail to distinguish it from the foil at test. Our main conclusion is the same whether the memory is subserved by familiarity or recollection: observers encoded and retained many specific details about each object.

Constraints on Models of Object Recognition and Categorization.

Long-term memory capacity imposes a constraint on high-level cognitive functions and on neural models of such functions. For example, approaches to object recognition often vary by either relying on brute force online processing or a massive parallel memory (34, 35). The present data lend credence to object-recognition approaches that require massive storage of multiple object viewpoints and exemplars (36–39). Similarly, in the domain of categorization, a popular class of models, so-called exemplar models (40), have suggested that human categorization can best be modeled by positing storage of each exemplar that is viewed in a category. The present results demonstrate the feasibility of models requiring such large memory capacities.

In the domain of neural models, the present results imply that visual processing stages in the brain do not, by necessity, discard visual details. Current models of visual perception posit a hierarchy of processing stages that reach more and more abstract representations in higher-level cortical areas (35, 41). Thus, to maintain featural details, long-term memory representations of objects might be stored throughout the entire hierarchy of the visual processing stream, including early visual areas, possibly retrieved on demand by means of a feedback process (41, 42). Indeed, imagery processes, a form of representation retrieval, have been shown to activate both high-level visual cortical areas and primary visual cortex (43). In addition, functional MRI studies have indicated that a relatively mid-level visual area, the right fusiform gyrus, responds more when observers are encoding objects for which they will later remember the specific exemplar, compared with objects for which they will later remember only the gist (44). Understanding the neural substrates underlying this massive and detailed storage of visual information is an important goal for future research and will inform the study of visual object recognition and categorization.

Conclusion

The information capacity of human memory has an important role in cognitive and neural models of memory, recognition, and categorization, because models of these processes implicitly or explicitly make claims about the level of detail stored in memory. Detailed representations allow more computational flexibility because they enable processing at task-relevant levels of abstraction (24, 27), but these computational advantages trade off against the costs of additional storage. Therefore, establishing the bounds on the information capacity of human memory is critical to understanding the computational constraints on visual and cognitive tasks.

The upper bound on the size of visual long-term memory has not been reached, even with previous attempts to push the quantity of items (4), or the attempt of the present study to push both the quantity and fidelity. Here, we raise only the lower bound of what is possible, by showing that visual long-term memory representations can contain not only gist information but also details sufficient to discriminate between exemplars and states. We think that examining the fidelity of memory representations is an important addition to existing frameworks of visual long-term memory capacity. Whereas in everyday life we may often fail to encode the details of objects or scenes (7, 8, 17), our results suggest that under conditions where we attempt to encode such details, we are capable of succeeding.

Materials and Methods

Participants.

Fourteen adults (aged 20–35) gave informed consent and participated in the experiment. All of the participants were tested simultaneously, by using computer workstations that were closely matched for monitor size and viewing distance.

Stimuli.

Stimuli were gathered using both a commercially available database (Hemera Photo-Objects, Vol. I and II) and internet searches with Google Image Search. Overall, 2,600 categorically distinct images were gathered for the main database, plus 200 paired exemplar images and 200 paired state images drawn from categories not represented in the main database. The experimental stimuli are available from the authors. Once these images had been gathered, 200 were selected at random from the 2,600 objects to serve in the novel test condition. Thus, all participants were tested with the same 300 pairs of novel, exemplar, and state images. However, the item seen during the study session and the item used as the foil at test were randomized across participants.

Study Blocks.

The experiment was broken up into 10 study blocks of ≈20 min each, followed by a 30-min testing session. Between blocks, participants were given a 5-min break and were not allowed to discuss any of the images they had seen. During a block, ≈300 images were shown, with 2,896 images shown overall: 2,500 new and 396 repeated images. Each image (subtending 7.5 by 7.5° of visual angle) was presented for 3 s, followed by an 800-ms fixation cross.
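The block structure can be checked from the presentation parameters. The Python sketch below assumes that images were split evenly across blocks and that each trial lasted exactly the image duration plus the fixation interval; both are simplifications on our part, and the variable names are illustrative.

```python
n_new, n_repeat, n_blocks = 2500, 396, 10
image_s, fixation_s = 3.0, 0.8  # seconds per image and per fixation cross

n_total = n_new + n_repeat               # 2,896 images shown overall
images_per_block = n_total / n_blocks    # about 290 images per block
block_minutes = images_per_block * (image_s + fixation_s) / 60  # about 18 min

print(n_total, round(images_per_block), round(block_minutes, 1))
```

The result, roughly 290 images and 18 min per block, is in line with the approximate figures of 300 images and 20 min given above.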

Repeat-Detection Task.

To maintain attention and to probe online memory capacity, participants performed a repeat-detection task during the 10 study blocks. Repeated images were inserted into the stream such that there were between 0 and 1,023 intervening items, and participants were told to press the space bar whenever an image repeated at any point in the study period. They were not informed of the structure of the repeat conditions. Participants were given feedback only when they responded, with the fixation cross turning red if they had incorrectly pressed the space bar (false alarm) or green if they had correctly detected a repeat (hit), and were given no feedback for misses or correct rejections.

Overall, 56 images were repeated immediately (1-back), 52 were repeated with 1 intervening item (2-back), 48 were repeated with 3 intervening items (4-back), 44 were repeated with 7 intervening items (8-back), and so forth, down to 16 repeated with 1,023 intervening items (1,024-back). Repeat items were inserted into the stream uniformly, with the constraint that all of the lengths of n-backs (1-back, 2-back, 4-back, and so on up to 1,024-back) had to occur equally in the first half of the experiment and the second half. This design ensured that fatigue would not differentially affect images that were repeated from further back in the stream. Due to the complexity of generating a properly counterbalanced set of repeats, all participants had repeated images appear at the same places within the stream. However, each participant saw a different order of the 2,500 objects, and the specific images repeated in the n-back conditions were also different across participants. Images that would later be tested in one of the three memory conditions were never repeated during the study period.
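The repeat schedule can be checked against the 396 repeated images reported under Study Blocks. The Python sketch below assumes that the number of repeats falls by 4 with each doubling of the lag, which is our reading of "and so forth" and is consistent with the four counts and the final count given above; the variable names are illustrative.

```python
# n-back lags double from 1-back to 1,024-back: 11 conditions in total.
lags = [2 ** k for k in range(11)]

# ASSUMPTION: counts decrease linearly by 4 per condition (56, 52, 48, 44, ..., 16).
repeats_per_lag = [56 - 4 * k for k in range(11)]

# Intervening items are one fewer than the n-back lag (0, 1, 3, 7, ..., 1,023).
intervening = [lag - 1 for lag in lags]

total_repeats = sum(repeats_per_lag)
print(total_repeats)  # prints 396
```

Under this assumption the schedule sums to exactly 396, matching the number of repeated images in the study stream.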

Forced-Choice Tests.

Following a 10-min break after the study period, we probed the fidelity with which objects were remembered. Two items were presented on the screen, one previously seen old item, and one new foil item. Observers reported which item they had seen before in a two-alternative forced-choice task.

Participants were allowed to proceed at their own pace and were told to emphasize accuracy, not speed, in making their judgments. The 300 test trials were presented in a random order for each participant, with the three types of test trials (novel, exemplar, and state) interleaved. The images that would later be tested were distributed uniformly throughout the study period.

Acknowledgments.

We thank P. Cavanagh, M. Chun, M. Greene, A. Hollingworth, G. Kreiman, K. Nakayama, T. Poggio, M. Potter, R. Rensink, A. Schachner, T. Thompson, A. Torralba, and J. Wolfe for helpful conversation and comments on the manuscript. This work was partly funded by National Institutes of Health Training Grant T32-MH020007 (to T.F.B.), a National Defense Science and Engineering Graduate Fellowship (T.K.), National Research Service Award Fellowship F32-EY016982 (to G.A.A.), and National Science Foundation (NSF) Career Award IIS-0546262 and NSF Grant IIS-0705677 (to A.O.).

Footnotes

The authors declare no conflict of interest.

This article is a PNAS Direct Submission.

This article contains supporting information online at www.pnas.org/cgi/content/full/0803390105/DCSupplemental.

References

- 1.Sperling G. The information available in brief visual presentations. Psychol Monogr. 1960;74:1–29. [Google Scholar]

- 2.Phillips WA. On the distinction between sensory storage and short-term visual memory. Percept Psychophys. 1974;16:283–290. [Google Scholar]

- 3.Brainerd CJ, Reyna VF. The Science of False Memory. New York: Oxford Univ Press; 2005. [Google Scholar]

- 4.Standing L. Learning 10,000 pictures. Q J Exp Psychol. 1973;25:207–222. doi: 10.1080/14640747308400340. [DOI] [PubMed] [Google Scholar]

- 5.Shepard RN. Recognition memory for words, sentences, and pictures. J Verb Learn Verb Behav. 1967;6:156–163. [Google Scholar]

- 6.Standing L, Conezio J, Haber RN. Perception and memory for pictures: Single-trial learning of 2500 visual stimuli. Psychon Sci. 1970;19:73–74. [Google Scholar]

- 7.Rensink RA, O'Regan JK, Clark JJ. To see or not to see: The need for attention to perceive changes in scenes. Psychol Sci. 1997;8:368–373. [Google Scholar]

- 8.Simons DJ, Levin DT. Change blindness. Trends Cogn Sci. 1997;1:261–267. doi: 10.1016/S1364-6613(97)01080-2. [DOI] [PubMed] [Google Scholar]

- 9.Loftus EF. Our changeable memories: Legal and practical implications. Nat Rev Neurosci. 2003;4:231–234. doi: 10.1038/nrn1054. [DOI] [PubMed] [Google Scholar]

- 10.Chun MM. In: Cognitive Vision. Psychology of Learning and Motivation: Advances in Research and Theory. Irwin D, Ross BH, editors. Vol 42. San Diego, CA: Academic; 2003. pp. 79–108. [Google Scholar]

- 11.Wolfe JM. Visual Memory: What do you know about what you saw? Curr Biol. 1998;8:R303–R304. doi: 10.1016/s0960-9822(98)70192-7. [DOI] [PubMed] [Google Scholar]

- 12.O'Regan JK, Noë A. A sensorimotor account of vision and visual consciousness. Behav Brain Sci. 2001;24:939–1011. doi: 10.1017/s0140525x01000115. [DOI] [PubMed] [Google Scholar]

- 13.Hollingworth A. Constructing visual representations of natural scenes: The roles of short- and long-term visual memory. J Exp Psychol Hum Percept Perform. 2004;30:519–537. doi: 10.1037/0096-1523.30.3.519. [DOI] [PubMed] [Google Scholar]

- 14.Tatler BW, Melcher D. Pictures in mind: Initial encoding of object properties varies with the realism of the scene stimulus. Perception. 2007;36:1715–1729. doi: 10.1068/p5592. [DOI] [PubMed] [Google Scholar]

- 15.Vogt S, Magnussen S. Long-term memory for 400 pictures on a common theme. Exp Psycol. 2007;54:298–303. doi: 10.1027/1618-3169.54.4.298. [DOI] [PubMed] [Google Scholar]

- 16. Castelhano M, Henderson J. Incidental visual memory for objects in scenes. Vis Cog. 2005;12:1017–1040.

- 17. Marmie WR, Healy AF. Memory for common objects: Brief intentional study is sufficient to overcome poor recall of US coin features. Appl Cognit Psychol. 2004;18:445–453.

- 18. Koutstaal W, Schacter DL. Gist-based false recognition of pictures in older and younger adults. J Mem Lang. 1997;37:555–583.

- 19. Intraub H, Hoffman JE. Remembering scenes that were never seen: Reading and visual memory. Am J Psychol. 1992;105:101–114.

- 20. Landauer TK. How much do people remember? Some estimates of the quantity of learned information in long-term memory. Cognit Sci. 1986;10:477–493.

- 21. Dudai Y. How big is human memory, or on being just useful enough. Learn Mem. 1997;3:341–365. doi: 10.1101/lm.3.5.341.

- 22. Fagot J, Cook RG. Evidence for large long-term memory capacities in baboons and pigeons and its implications for learning and the evolution of cognition. Proc Natl Acad Sci USA. 2006;103:17564–17567. doi: 10.1073/pnas.0605184103.

- 23. Fuster JM. More than working memory rides on long-term memory. Behav Brain Sci. 2003;26:737. doi: 10.1017/s0140525x03000165.

- 24. Palmeri TJ, Tarr MJ. In: Visual Memory. Luck SJ, Hollingworth A, editors. New York: Oxford Univ Press; 2008. pp. 163–207.

- 25. Fodor JA. The Language of Thought. Cambridge, MA: Harvard Univ Press; 1975.

- 26. Barsalou LW. Perceptual symbol systems. Behav Brain Sci. 1999;22:577–660. doi: 10.1017/s0140525x99002149.

- 27. Barsalou LW. In: Content and Process Specificity in the Effects of Prior Experiences. Srull TK, Wyer RS Jr, editors. Hillsdale, NJ: Erlbaum; 1990. pp. 61–88.

- 28. Collins AM, Loftus EF. A spreading activation theory of semantic processing. Psychol Rev. 1975;82:407–428.

- 29. Anderson JR. Retrieval of information from long-term memory. Science. 1983;220:25–30. doi: 10.1126/science.6828877.

- 30. Sigala N, Gabbiani F, Logothetis NK. Visual categorization and object representation in monkeys and humans. J Cog Neuro. 2002;14:187–198. doi: 10.1162/089892902317236830.

- 31. Mandler G. Recognizing: The judgment of previous occurrence. Psychol Rev. 1980;87:252–271.

- 32. Yonelinas AP. The nature of recollection and familiarity: A review of 30 years of research. J Mem Lang. 2002;46:441–517.

- 33. Jacoby LL. A process dissociation framework: Separating automatic from intentional uses of memory. J Mem Lang. 1991;30:513–541.

- 34. Tarr MJ, Bulthoff HH. Image-based object recognition in man, monkey and machine. Cognition. 1998;67:1–20. doi: 10.1016/s0010-0277(98)00026-2.

- 35. Riesenhuber M, Poggio T. Hierarchical models of object recognition in cortex. Nat Neurosci. 1999;2:1019–1025. doi: 10.1038/14819.

- 36. Logothetis NK, Pauls J, Poggio T. Shape representation in the inferior temporal cortex of monkeys. Curr Biol. 1995;5:552–563. doi: 10.1016/s0960-9822(95)00108-4.

- 37. Tarr MJ, Williams P, Hayward WG, Gauthier I. Three-dimensional object recognition is viewpoint dependent. Nat Neurosci. 1998;1:275–277. doi: 10.1038/1089.

- 38. Bulthoff HH, Edelman S. Psychological support for a two-dimensional view interpolation theory of object recognition. Proc Natl Acad Sci USA. 1992;89:60–64. doi: 10.1073/pnas.89.1.60.

- 39. Torralba A, Fergus R, Freeman W. 80 million tiny images: A large dataset for non-parametric object and scene recognition. IEEE Trans PAMI. In press. doi: 10.1109/TPAMI.2008.128.

- 40. Nosofsky RM. Attention, similarity, and the identification-categorization relationship. J Exp Psychol Gen. 1986;115:39–61. doi: 10.1037//0096-3445.115.1.39.

- 41. Ahissar M, Hochstein S. The reverse hierarchy theory of visual perceptual learning. Trends Cogn Sci. 2004;8:457–464. doi: 10.1016/j.tics.2004.08.011.

- 42. Wheeler ME, Petersen SE, Buckner RL. Memory's echo: Vivid remembering reactivates sensory-specific cortex. Proc Natl Acad Sci USA. 2000;97:11125–11129. doi: 10.1073/pnas.97.20.11125.

- 43. Kosslyn SM, et al. The role of area 17 in visual imagery: Convergent evidence from PET and rTMS. Science. 1999;284:167–170. doi: 10.1126/science.284.5411.167.

- 44. Garoff RJ, Slotnick SD, Schacter DL. The neural origins of specific and general memory: The role of fusiform cortex. Neuropsychologia. 2005;43:847–859. doi: 10.1016/j.neuropsychologia.2004.09.014.
