Abstract

Reward-modulated spike-timing-dependent plasticity (STDP) has recently emerged as a candidate for a learning rule that could explain how behaviorally relevant adaptive changes in complex networks of spiking neurons could be achieved in a self-organizing manner through local synaptic plasticity. However, the capabilities and limitations of this learning rule could so far only be tested through computer simulations. This article provides tools for an analytic treatment of reward-modulated STDP, which allows us to predict under which conditions reward-modulated STDP will achieve a desired learning effect. These analytical results imply that neurons can learn through reward-modulated STDP to classify not only spatial but also temporal firing patterns of presynaptic neurons. They also can learn to respond to specific presynaptic firing patterns with particular spike patterns. Finally, the resulting learning theory predicts that even difficult credit-assignment problems, where it is very hard to tell which synaptic weights should be modified in order to increase the global reward for the system, can be solved in a self-organizing manner through reward-modulated STDP. This yields an explanation for a fundamental experimental result on biofeedback in monkeys by Fetz and Baker. In this experiment monkeys were rewarded for increasing the firing rate of a particular neuron in the cortex and were able to solve this extremely difficult credit assignment problem. Our model for this experiment relies on a combination of reward-modulated STDP with variable spontaneous firing activity. Hence it also provides a possible functional explanation for trial-to-trial variability, which is characteristic for cortical networks of neurons but has no analogue in currently existing artificial computing systems. 
In addition, our model demonstrates that reward-modulated STDP can be applied to all synapses in a large recurrent neural network without endangering the stability of the network dynamics.

Author Summary

A major open problem in computational neuroscience is to explain learning, i.e., behaviorally relevant modifications in the central nervous system, on the basis of experimental data on synaptic plasticity. Spike-timing-dependent plasticity (STDP) is a rule for changes in the strength of an individual synapse that is supported by experimental data from a variety of species. However, it is not clear how this synaptic plasticity rule can produce meaningful modifications in networks of neurons. Meaningful changes in the structure of networks of neurons may occur only if one takes into account that consolidation of synaptic plasticity requires a third signal, such as changes in the concentration of a neuromodulator (that might, for example, be related to rewards or expected rewards). We provide in this article an analytical foundation for such reward-modulated versions of STDP that predicts when this type of synaptic plasticity can produce functionally relevant changes in networks of neurons. In particular, we show that seemingly inexplicable experimental data on biofeedback, where a monkey learnt to increase the firing rate of an arbitrarily chosen neuron in the motor cortex, can be explained on the basis of this new learning theory.

Introduction

Numerous experimental studies (see [1] for a review; [2] discusses more recent in-vivo results) have shown that the efficacy of synapses changes as a function of the time difference Δt = tpost−tpre between the firing times tpre and tpost of the pre- and postsynaptic neurons. This effect is called spike-timing-dependent plasticity (STDP). But a major puzzle for understanding learning in biological organisms is the relationship between experimentally well-established rules for STDP on the microscopic level, and adaptive changes of the behavior of biological organisms on the macroscopic level. Neuromodulatory systems, which send diffuse signals related to reinforcements (rewards) and behavioral state to several large networks of neurons in the brain, have been identified as likely intermediaries that relate these two levels of plasticity. It is well-known that the consolidation of changes of synaptic weights in response to pre- and postsynaptic neuronal activity requires the presence of such third signals [3],[4]. In particular, it has been demonstrated that dopamine (which is behaviorally related to novelty and reward prediction [5]) gates plasticity at corticostriatal synapses [6],[7] and within the cortex [8]. It has also been shown that acetylcholine gates synaptic plasticity in the cortex (see for example [9] and [10]; [11] contains a nice review of the literature).

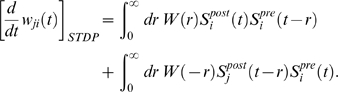

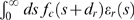

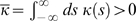

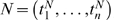

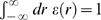

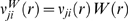

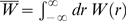

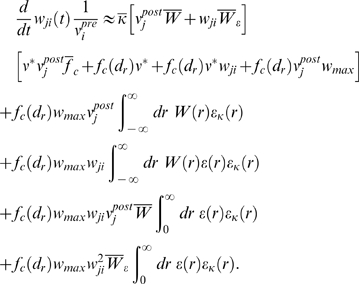

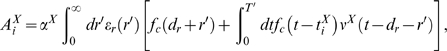

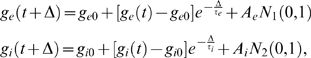

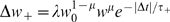

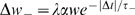

Corresponding spike-based rules for synaptic plasticity of the form

$$\frac{d}{dt} w_{ji}(t) = c_{ji}(t)\, d(t) \tag{1}$$

have been proposed in [12] and [13] (see Figure 1 for an illustration of this learning rule), where $w_{ji}$ is the weight of a synapse from neuron i to neuron j, $c_{ji}(t)$ is an eligibility trace of this synapse which collects weight changes proposed by STDP, and $d(t) = h(t) - \bar{h}$ results from a neuromodulatory signal $h(t)$ with mean value $\bar{h}$. It was shown in [12] that a number of interesting learning tasks in large networks of neurons can be accomplished with the simple rule in Equation 1. It has recently been shown that quite similar learning rules for spiking neurons arise when one applies the general framework of distributed reinforcement learning from [14] to networks of spiking neurons [13],[15], or if one maximizes the likelihood of postsynaptic firing at desired firing times [16]. However, no analytical tools have been available that make it possible to predict for which learning tasks, and under which parameter settings, reward-modulated STDP will be successful. This article provides such analytical tools, and demonstrates their applicability and significance through a variety of computer simulations. In particular, we identify conditions under which neurons can learn through reward-modulated STDP to classify temporal presynaptic firing patterns, and to respond with particular spike patterns.

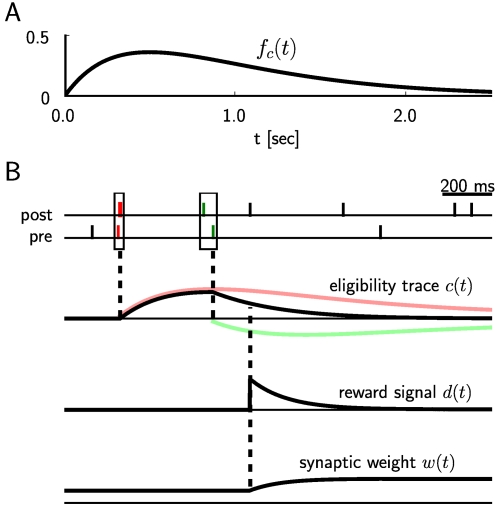

Figure 1. Scheme of reward-modulated STDP according to Equations 1–4.

(A) Eligibility function fc(t), which scales the contribution of a pre/post spike pair (with the second spike at time 0) to the eligibility trace c(t) at time t. (B) Contribution of a pre-before-post spike pair (in red) and a post-before-pre spike pair (in green) to the eligibility trace c(t) (in black), which is the sum of the red and green curves. According to Equation 1 the change of the synaptic weight w is proportional to the product of c(t) with a reward signal d(t).

We also provide a model for the remarkable operant conditioning experiments of [17] (see also [18],[19]). In the simpler of these experiments, the spiking activity of single neurons (in area 4 of the precentral gyrus of monkey cortex) was recorded, and the deviation of the current firing rate of an arbitrarily selected neuron from its average firing rate was made visible to the monkey through the displacement of an illuminated meter arm, whose rightward position corresponded to the threshold for the feeder discharge. The monkey received food rewards for increasing (or in alternating trials for decreasing) the firing rate of this neuron. The monkeys learnt quite reliably (within a few minutes) to change the firing rate of this neuron in the currently rewarded direction. Adjacent neurons tended to change their firing rate in the same direction, but differential changes of directions of firing rates of pairs of neurons are also reported in [17] (when these differential changes were rewarded). For example, it was shown in Figure 9 of [17] (see also Figure 1 in [19]) that pairs of neurons that were separated by no more than a few hundred microns could be independently trained to increase or decrease their firing rates. Obviously the existence of learning mechanisms in the brain which are able to solve this extremely difficult credit assignment problem provides an important clue for understanding the organization of learning in the brain. We examine in this article analytically under what conditions reward-modulated STDP is able to solve such learning problems. We test the correctness of analytically derived predictions through computer simulations of biologically quite realistic recurrently connected networks of neurons, where an increase of the firing rate of one arbitrarily selected neuron within a network of 4000 neurons is reinforced through rewards (which are sent to all 142813 synapses between excitatory neurons in this recurrent network).
We also provide a model for the more complex operant conditioning experiments of [17] by showing that pairs of neurons can be differentially trained through reward-modulated STDP, where one neuron is rewarded for increasing its firing rate, and simultaneously another neuron is rewarded for decreasing its firing rate. More precisely, we increased the reward signal d(t) which is transmitted to all synapses between excitatory neurons in the network whenever the first neuron fired, and decreased this reward signal whenever the second neuron fired (the resulting composed reward corresponds to the displacement of the meter arm that was shown to the monkey in these more complex operant conditioning experiments).

Our theory and computer simulations also show that reward-modulated STDP can be applied to all synapses within a large network of neurons for long time periods, without endangering the stability of the network. In particular, this synaptic plasticity rule keeps the network within the asynchronous irregular firing regime, which had been described in [20] as a dynamic regime that resembles spontaneous activity in the cortex. Another interesting aspect of learning with reward-modulated STDP is that it requires spontaneous firing and trial-to-trial variability within the networks of neurons where learning takes place. Hence our learning theory for this synaptic plasticity rule provides a foundation for a functional explanation of these characteristic features of cortical networks of neurons that are undesirable from the perspective of most computational theories.

Results

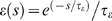

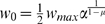

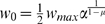

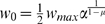

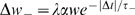

We first give a precise definition of the learning rule in Equation 1 for reward-modulated STDP. The standard rule for STDP, which specifies the change W(Δt) of the synaptic weight of an excitatory synapse in dependence on the time difference Δt = tpost−tpre between the firing times tpre and tpost of the pre- and postsynaptic neuron, is based on numerous experimental data (see [1]). It is commonly modeled by a so-called learning curve of the form

$$W(\Delta t) = \begin{cases} A_+ \, e^{-\Delta t/\tau_+}, & \text{if } \Delta t > 0 \\ -A_- \, e^{\Delta t/\tau_-}, & \text{if } \Delta t \le 0 \end{cases} \tag{2}$$

where the positive constants $A_+$ and $A_-$ scale the strength of potentiation and depression respectively, and $\tau_+$ and $\tau_-$ are positive time constants defining the width of the positive and negative learning window. The resulting weight change at time t of synapse ji for a presynaptic spike train $S_i$ and a postsynaptic spike train $S_j$ is usually modeled [21] by the instantaneous application of this learning rule to all spike pairings with the second spike at time t

$$\frac{d}{dt} w_{ji}(t) = \int_0^\infty dr\, W(r)\, S_j(t)\, S_i(t-r) + \int_0^\infty dr\, W(-r)\, S_j(t-r)\, S_i(t) \tag{3}$$

The spike train of a neuron i which fires action potentials at times $t_i^{(1)}, t_i^{(2)}, t_i^{(3)}, \ldots$ is formalized here by a sum of Dirac delta functions $S_i(t) = \sum_n \delta(t - t_i^{(n)})$.
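The learning curve of Equation 2 and the pair-based update of Equation 3 can be sketched in a few lines of Python. This is only an illustration: the numerical parameter values and the function names are assumptions made here, not values taken from this article.

```python
import math

# Hypothetical parameter values, chosen only for illustration.
A_PLUS, A_MINUS = 0.01, 0.0105      # potentiation / depression amplitudes
TAU_PLUS, TAU_MINUS = 0.020, 0.020  # window time constants in seconds


def stdp_window(delta_t):
    """Learning curve W(delta_t) of Equation 2, with delta_t = t_post - t_pre."""
    if delta_t > 0:
        return A_PLUS * math.exp(-delta_t / TAU_PLUS)
    return -A_MINUS * math.exp(delta_t / TAU_MINUS)


def additive_stdp_change(pre_spikes, post_spikes):
    """Total weight change when W is applied to every pre/post spike pair.

    Integrating Equation 3 over time for delta-shaped spike trains reduces
    to this double sum over spike times.
    """
    return sum(stdp_window(t_post - t_pre)
               for t_pre in pre_spikes
               for t_post in post_spikes)
```

A pre-before-post pair such as `additive_stdp_change([0.0], [0.01])` gives a positive change, and the reversed order gives a negative one.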

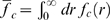

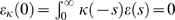

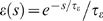

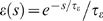

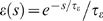

The model analyzed in this article is based on the assumption that positive and negative weight changes suggested by STDP for all pairs of pre- and postsynaptic spikes at synapse ji (according to the two integrals in Equation 3) are collected in an eligibility trace $c_{ji}(t)$ at the site of the synapse. The contribution to $c_{ji}(t)$ of all spike pairings with the second spike at time $t-s$ is modeled for $s>0$ by a function $f_c(s)$ (see Figure 1A); the time scale of the eligibility trace is assumed in this article to be on the order of seconds. Hence the value of the eligibility trace of synapse ji at time t is given by

$$c_{ji}(t) = \int_0^\infty ds\, f_c(s) \left[ \int_0^\infty dr\, W(r)\, S_j(t-s)\, S_i(t-s-r) + \int_0^\infty dr\, W(-r)\, S_j(t-s-r)\, S_i(t-s) \right] \tag{4}$$

see Figure 1B.
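For spike trains given as lists of spike times, the eligibility trace of Equation 4 becomes a sum over spike pairs. The sketch below assumes an alpha-shaped eligibility function with a time constant of 0.4 s and illustrative STDP parameters; these shapes and values, like the function names, are assumptions for the example and are not specified in this section.

```python
import math

TAU_E = 0.4  # hypothetical eligibility time constant in seconds


def eligibility_kernel(s):
    """Assumed alpha-shaped f_c(s): zero for s <= 0, peak value 1 at s = TAU_E."""
    if s <= 0:
        return 0.0
    return (s / TAU_E) * math.exp(1.0 - s / TAU_E)


def stdp_window(delta_t, a_plus=0.01, a_minus=0.0105, tau=0.02):
    """Learning curve W(delta_t) with illustrative parameters."""
    if delta_t > 0:
        return a_plus * math.exp(-delta_t / tau)
    return -a_minus * math.exp(delta_t / tau)


def eligibility_trace(t, pre_spikes, post_spikes):
    """c_ji(t): each spike pair adds W(t_post - t_pre) * f_c(t - t_second)."""
    c = 0.0
    for t_pre in pre_spikes:
        for t_post in post_spikes:
            t_second = max(t_pre, t_post)  # time of the later spike of the pair
            c += stdp_window(t_post - t_pre) * eligibility_kernel(t - t_second)
    return c
```

With this kernel, a single pre-before-post pair contributes most strongly `TAU_E` seconds after the postsynaptic spike and contributes nothing before the pair has occurred.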

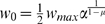

The actual weight change  at time t for reward-modulated STDP is the

product

cij(t)·d(t)

of the eligibility trace with the reward signal

d(t) as defined by Equation 1. Since this simple

model can in principle lead to unbounded growth of weights, we assume that weights

are clipped at the lower boundary value 0 and an upper boundary

wmax.

at time t for reward-modulated STDP is the

product

cij(t)·d(t)

of the eligibility trace with the reward signal

d(t) as defined by Equation 1. Since this simple

model can in principle lead to unbounded growth of weights, we assume that weights

are clipped at the lower boundary value 0 and an upper boundary

wmax.
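In a time-stepped simulation, the clipped weight dynamics could look like the following sketch. The forward-Euler discretization and the function name are assumptions made here; the text only specifies the continuous rule of Equation 1 and the clipping bounds.

```python
def update_weight(w, c, d, dt, w_max):
    """One Euler step of dw/dt = c(t) * d(t), followed by clipping to [0, w_max].

    w: current weight, c: eligibility trace value, d: reward signal value,
    dt: step size in seconds (an assumption of this discretized sketch).
    """
    w_new = w + c * d * dt
    return min(max(w_new, 0.0), w_max)
```

For example, `update_weight(0.9, 5.0, 1.0, 0.1, 1.0)` would overshoot to 1.4 without clipping and is held at the upper bound `w_max = 1.0`.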

The network dynamics of a simulated recurrent network of spiking neurons where all connections between excitatory neurons are subject to STDP is quite sensitive to the particular STDP-rule that is used. Therefore we have carried out our network simulations not only with the additive STDP-rule in Equation 3, whose effect can be analyzed theoretically, but also with the more complex rule proposed in [22] (which was fitted to experimental data from hippocampal neurons in culture [23]), where the magnitude of the weight change depends on the current value of the weight. An implementation of this STDP-rule (with the parameters proposed in [22]) produced in our network simulations of the biofeedback experiment (computer simulation 1) as well as for learning pattern classification (computer simulation 4) qualitatively the same result as the rule in Equation 3.

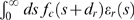

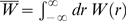

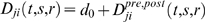

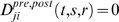

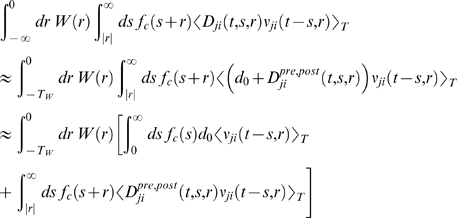

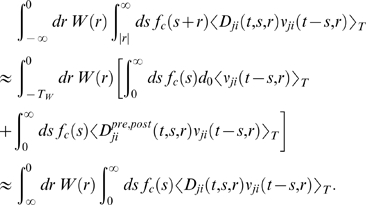

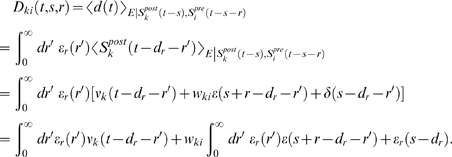

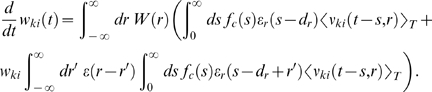

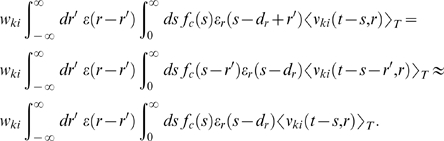

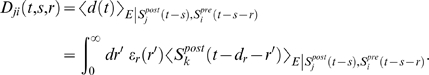

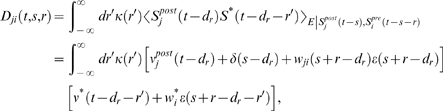

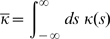

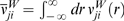

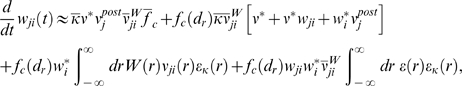

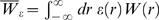

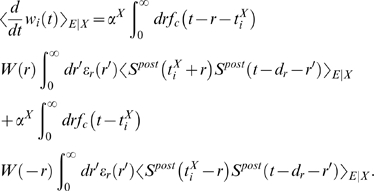

Theoretical Analysis of the Resulting Weight Changes

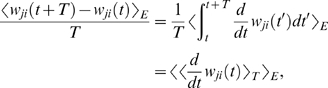

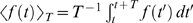

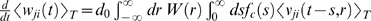

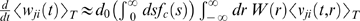

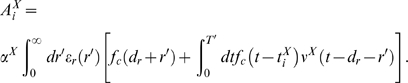

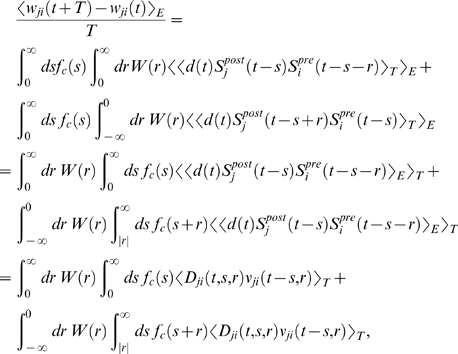

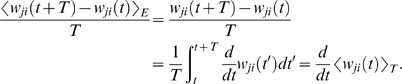

In this section, we derive a learning equation for reward-modulated STDP. This learning equation relates the change of a synaptic weight wji over some sufficiently long time interval T to statistical properties of the joint distribution of the reward signal d(t) and pre- and postsynaptic firing times, under the assumption that the weight and correlations between pre- and postsynaptic spike times are slowly varying in time. We treat spike times as well as the reward signal d(t) as stochastic variables. This mathematical framework allows us to derive the expected weight change over some time interval T (see [21]), with the expectation taken over realizations of the stochastic input- and output spike trains as well as stochastic realizations of the reward signal, denoted by the ensemble average 〈·〉E

|

(5) |

where we used the abbreviation  . If synaptic plasticity is sufficiently slow, synaptic weights

integrate a large number of small changes. In this case, the weight

wji can be approximated by its average

〈wji〉E (it is “self-averaging”, see [21]). We can thus

drop the expectation on the left hand side of Equation 5 and write it as

. If synaptic plasticity is sufficiently slow, synaptic weights

integrate a large number of small changes. In this case, the weight

wji can be approximated by its average

〈wji〉E (it is “self-averaging”, see [21]). We can thus

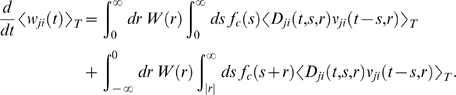

drop the expectation on the left hand side of Equation 5 and write it as  . Using Equation 1, this yields (see Methods)

. Using Equation 1, this yields (see Methods)

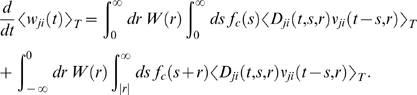

|

(6) |

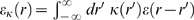

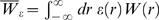

This formula contains the reward correlation for synapse ji

| (7) |

which is the average reward at time t given a presynaptic spike at time t−s−r and a postsynaptic spike at time t−s. The joint firing rate νji(t,r) = 〈Sj(t)Si(t−r)〉E describes correlations between spike timings of neurons j and i, i.e., it is the probability density for the event that neuron i fires an action potential at time t−r and neuron j fires an action potential at time t. For synapses subject to reward-modulated STDP, changes in efficacy are obviously driven by co-occurrences of spike pairings and rewards within the time scale of the eligibility trace. Equation 6 clarifies how the expected weight change depends on how the correlations between the pre- and postsynaptic neurons correlate with the reward signal.

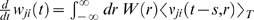

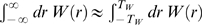

If one assumes for simplicity that the impact of a spike pair on the eligibility trace is always triggered by the postsynaptic spike, one gets a simpler equation (see Methods)

$$\frac{d}{dt}\langle w_{ji}(t)\rangle_T = \int_{-\infty}^{\infty} dr\, W(r) \int_0^\infty ds\, f_c(s)\, \langle D_{ji}(t,s,r)\, \nu_{ji}(t-s,r)\rangle_T \tag{8}$$

The assumption introduces a small error for post-before-pre spike pairs, because for a reward signal that arrives at some time $d_r$ after the pairing, the weight update will be proportional to $f_c(d_r)$ instead of $f_c(d_r + r)$. The approximation is justified if the temporal average is performed on a much longer time scale than the time scale of the learning window, the effect of each pre-post spike pair on the reward signal is delayed by an amount greater than the time scale of the learning window, and $f_c$ changes slowly compared to the time scale of the learning window (see Methods for details). For the analyses presented in this article, the simplified Equation 8 is a good approximation for the learning dynamics. Equation 8 is a generalized version of the STDP learning equation $\frac{d}{dt} w_{ji}(t) = \int_{-\infty}^{\infty} dr\, W(r)\, \nu_{ji}(t,r)$ in [21] that includes the impact of the reward correlation weighted by the eligibility function.

To see the relation between standard STDP and reward-modulated STDP, consider a constant reward signal $d(t) = d_0$. Then the reward correlation is also constant and given by $D(t,s,r) = d_0$. We recover the standard STDP learning equation scaled by $d_0$ if the eligibility function is an instantaneous delta-pulse $f_c(s) = \delta(s)$. Furthermore, if the statistics of the reward signal $d(t)$ are time-independent and independent of the pre- and postsynaptic spike statistics of some synapse ji, then the reward correlation is given by $D_{ji}(t,s,r) = \langle d(t)\rangle_E = d_0$ for some constant $d_0$. Then, the weight change for synapse ji is $\frac{d}{dt}\langle w_{ji}(t)\rangle_T = d_0 \int_{-\infty}^{\infty} dr\, W(r) \int_0^\infty ds\, f_c(s)\, \langle \nu_{ji}(t-s,r)\rangle_T$. The temporal average of the joint firing rate $\langle \nu_{ji}(t-s,r)\rangle_T$ is thus filtered by the eligibility trace. We assumed in the preceding analysis that the temporal average is taken over some long time interval T. If the time scale of the eligibility trace is much smaller than this time interval T, then the weight change is approximately $\frac{d}{dt}\langle w_{ji}(t)\rangle_T \approx d_0 \left( \int_0^\infty ds\, f_c(s) \right) \int_{-\infty}^{\infty} dr\, W(r)\, \langle \nu_{ji}(t,r)\rangle_T$, and the weight $w_{ji}$ will change according to standard STDP scaled by a constant proportional to the mean reward and the integral over the eligibility function. In the remainder of this article, we will always use the smooth time-averaged weight change $\frac{d}{dt}\langle w_{ji}(t)\rangle_T$, but for brevity, we will drop the angular brackets and simply write $\frac{d}{dt} w_{ji}(t)$.

The learning Equation 8 provides the mathematical basis for our following analyses. It allows us to determine synaptic weight changes if we can describe a learning situation in terms of reward correlations and correlations between pre- and postsynaptic spikes.
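As a numerical illustration of Equation 8, consider the special case of a constant reward $d_0$ together with the additional simplifying assumption (made only for this example) that pre- and postsynaptic spikes are uncorrelated with stationary rates, so that the joint firing rate factorizes into the product of the two rates. The drift then reduces to $d_0$ times the area under $f_c$, times the product of the rates, times the area under $W$. The sketch below checks this against the closed form for an assumed alpha-shaped $f_c$; all parameter values and names are hypothetical.

```python
import math

A_PLUS, A_MINUS = 0.01, 0.0105    # hypothetical STDP amplitudes
TAU_PLUS, TAU_MINUS = 0.02, 0.02  # hypothetical window time constants (s)
TAU_E = 0.4                       # hypothetical eligibility time constant (s)


def stdp_window(r):
    """Learning curve W(r) of Equation 2."""
    if r > 0:
        return A_PLUS * math.exp(-r / TAU_PLUS)
    return -A_MINUS * math.exp(r / TAU_MINUS)


def eligibility_kernel(s):
    """Assumed alpha-shaped f_c(s)."""
    return (s / TAU_E) * math.exp(1.0 - s / TAU_E) if s > 0 else 0.0


def midpoint_integral(f, a, b, n):
    """Midpoint-rule approximation of the integral of f over [a, b]."""
    h = (b - a) / n
    return h * sum(f(a + (k + 0.5) * h) for k in range(n))


def mean_drift_constant_reward(d0, rate_pre, rate_post):
    """Equation 8 with D = d0 and nu_ji(t, r) = rate_post * rate_pre."""
    window_area = midpoint_integral(stdp_window, -0.5, 0.5, 100_000)
    kernel_area = midpoint_integral(eligibility_kernel, 0.0, 20.0, 20_000)
    return d0 * kernel_area * rate_post * rate_pre * window_area


def closed_form_drift(d0, rate_pre, rate_post):
    """Same quantity from the analytic areas e*TAU_E and A+ tau+ - A- tau-."""
    window_area = A_PLUS * TAU_PLUS - A_MINUS * TAU_MINUS
    return d0 * math.e * TAU_E * rate_post * rate_pre * window_area
```

With these illustrative parameters the area under $W$ is slightly negative, so a positive constant reward produces a slow net depression of synapses with uncorrelated pre- and postsynaptic activity.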

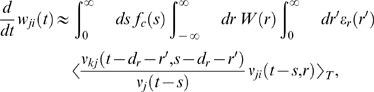

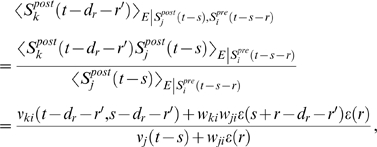

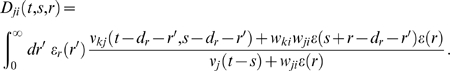

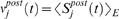

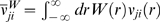

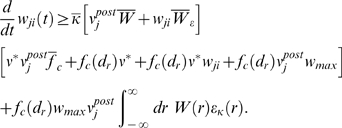

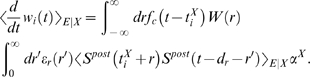

Application to Models for Biofeedback Experiments

We now apply the preceding analysis to the biofeedback experiments of [17] that were described in the introduction. These experiments pose the challenge to explain how learning mechanisms in the brain can detect and exploit correlations between rewards and the firing activity of one or a few neurons within a large recurrent network of neurons (the credit assignment problem), without changing the overall function or dynamics of the circuit.

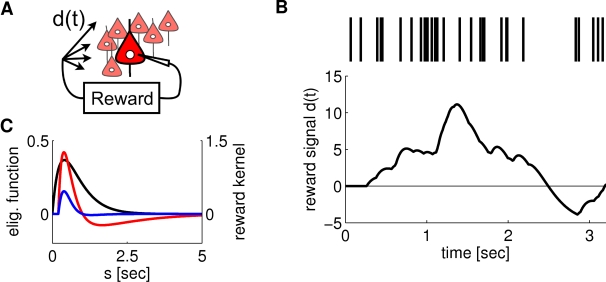

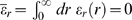

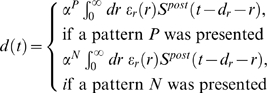

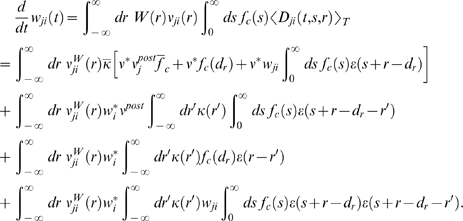

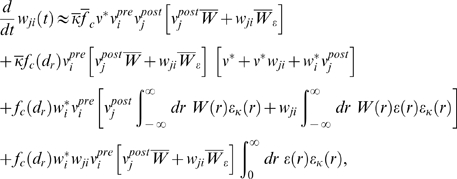

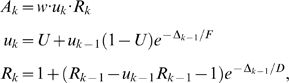

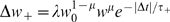

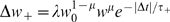

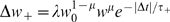

We show that this phenomenon can in principle be explained by reward-modulated STDP. In order to do that, we define a model for the experiment which allows us to formulate an equation for the reward signal d(t). This enables us to calculate synaptic weight changes for this particular scenario. As a model, we consider a recurrent neural circuit where the spiking activity of one neuron k is recorded by the experimenter (experiments where two neurons are recorded and reinforced were also reported in [17]; we tested this case in computer simulations, see Figure 2, but did not treat it explicitly in our theoretical analysis). We assume that in the monkey brain a reward signal d(t) is produced which depends on the visual feedback (through an illuminated meter, whose pointer deflection was dependent on the current firing rate of the randomly selected neuron k) as well as previously received liquid rewards, and that this signal d(t) is delivered to all synapses in large areas of the brain. We can formalize this scenario by defining a reward signal which depends on the spike rate of the arbitrarily selected neuron k (see Figure 3A and 3B). More precisely, a reward pulse of shape εr(r) (the reward kernel) is produced with some delay dr every time the neuron k produces an action potential

$$d(t) = \int_0^\infty dr\, S_k(t - d_r - r)\, \varepsilon_r(r) \tag{9}$$

Note that $d(t) = h(t) - \bar{h}$ is defined in Equation 1 as a signal with zero mean. In order to satisfy this constraint, we assume that the reward kernel $\varepsilon_r$ has zero mass, i.e., $\int_0^\infty dr\, \varepsilon_r(r) = 0$. For the analysis, we use the linear Poisson neuron model described in Methods. The mean weight change for synapses to the reinforced neuron k is then approximately (see Methods)

$$\frac{d}{dt} w_{ki}(t) \approx \int_0^\infty ds\, f_c(s + d_r)\, \varepsilon_r(s) \int_{-\infty}^{\infty} dr\, W(r)\, \langle \nu_{ki}(t - d_r - s, r)\rangle_T \tag{10}$$
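For a spike train given as a list of spike times, the reward signal of Equation 9 is a sum of delayed copies of the reward kernel. The sketch below uses the 200 ms delay shown in Figure 3C; the kernel shape (a fast positive alpha function minus a slower alpha function of equal area, which gives zero mass) and all names are assumptions made for illustration.

```python
import math

D_R = 0.2  # reward delay in seconds (the kernel in Figure 3C is delayed by 200 ms)


def alpha(s, tau):
    """Alpha function with peak value 1 at s = tau and area e * tau."""
    return (s / tau) * math.exp(1.0 - s / tau) if s > 0 else 0.0


def reward_kernel(r):
    """Assumed eps_r: positive bump minus a slower negative tail of equal area."""
    return alpha(r, 0.2) - 0.2 * alpha(r, 1.0)


def reward_signal(t, spikes_k):
    """d(t) of Equation 9 for the spike times of the reinforced neuron k."""
    return sum(reward_kernel(t - D_R - t_k) for t_k in spikes_k)


def kernel_mass(horizon=30.0, n=30_000):
    """Midpoint-rule estimate of the integral of eps_r, which should vanish."""
    h = horizon / n
    return h * sum(reward_kernel((k + 0.5) * h) for k in range(n))
```

A single spike at time 0 produces no reward before the delay has elapsed, a positive pulse shortly after it, and a weak negative tail later, so that the signal has zero mean as required for $d(t)$.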

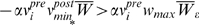

This equation describes STDP with a learning rate proportional to  . The outcome of the learning session will strongly depend on

this integral and thus on the form of the reward kernel

εr. In order to reinforce high firing

rates of the reinforced neuron we have chosen a reward kernel with a positive

bump in the first few hundred milliseconds, and a long negative tail afterwards.

Figure 3C shows the

functions fc and

εr that were used in our computer model, as

well as the product of these two functions. One sees that the integral over the

product is positive and according to Equation 10 the synapses to the reinforced

neuron are subject to STDP. This does not guarantee an increase of the firing

rate of the reinforced neuron. Instead, the changes of neuronal firing will

depend on the statistics of the inputs. In particular, the weights of synapses

to neuron k will not increase if that neuron does not fire

spontaneously. For uncorrelated Poisson input spike trains of equal rate, the

firing rate of a neuron trained by STDP stabilizes at some value which depends

on the input rate (see [24],[25]). However, in

comparison to the low spontaneous firing rates observed in the biofeedback

experiment [17], the stable firing rate under STDP can be much

higher, allowing for a significant rate increase. It was shown in [17] that

also low firing rates of a single neuron can be reinforced. In order to model

this, we have chosen a reward kernel with a negative bump in the first few

hundred milliseconds, and a long positive tail afterwards, i.e. we inverted the

kernel used above to obtain a negative integral

. The outcome of the learning session will strongly depend on

this integral and thus on the form of the reward kernel

εr. In order to reinforce high firing

rates of the reinforced neuron we have chosen a reward kernel with a positive

bump in the first few hundred milliseconds, and a long negative tail afterwards.

Figure 3C shows the

functions fc and

εr that were used in our computer model, as

well as the product of these two functions. One sees that the integral over the

product is positive and according to Equation 10 the synapses to the reinforced

neuron are subject to STDP. This does not guarantee an increase of the firing

rate of the reinforced neuron. Instead, the changes of neuronal firing will

depend on the statistics of the inputs. In particular, the weights of synapses

to neuron k will not increase if that neuron does not fire

spontaneously. For uncorrelated Poisson input spike trains of equal rate, the

firing rate of a neuron trained by STDP stabilizes at some value which depends

on the input rate (see [24],[25]). However, in

comparison to the low spontaneous firing rates observed in the biofeedback

experiment [17], the stable firing rate under STDP can be much

higher, allowing for a significant rate increase. It was shown in [17] that

also low firing rates of a single neuron can be reinforced. In order to model

this, we have chosen a reward kernel with a negative bump in the first few

hundred milliseconds, and a long positive tail afterwards, i.e. we inverted the

kernel used above to obtain a negative integral  . According to Equation 10 this leads to anti-STDP where not

only inputs to the reinforced neuron which have low correlations with the output

are depressed (because of the negative integral of the learning window), but

also those which are causally correlated with the output. This leads to a quick

firing rate decrease at the reinforced neuron.

. According to Equation 10 this leads to anti-STDP where not

only inputs to the reinforced neuron which have low correlations with the output

are depressed (because of the negative integral of the learning window), but

also those which are causally correlated with the output. This leads to a quick

firing rate decrease at the reinforced neuron.
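The sign of the learning-rate factor in Equation 10, the integral of $f_c(s+d_r)\,\varepsilon_r(s)$, can be checked numerically. The following sketch assumes alpha-shaped kernels with hypothetical time constants (only the 200 ms delay is taken from Figure 3C) and shows that inverting the reward kernel flips the sign of the factor.

```python
import math

TAU_E = 0.4  # hypothetical eligibility time constant (s)
D_R = 0.2    # reward delay (s), as in Figure 3C


def alpha(s, tau):
    """Alpha function with peak value 1 at s = tau."""
    return (s / tau) * math.exp(1.0 - s / tau) if s > 0 else 0.0


def eligibility_kernel(s):
    """Assumed alpha-shaped f_c(s)."""
    return alpha(s, TAU_E)


def reward_kernel(s, sign=1.0):
    """Assumed zero-mass eps_r; sign=-1.0 gives the inverted kernel."""
    return sign * (alpha(s, 0.2) - 0.2 * alpha(s, 1.0))


def learning_rate_factor(sign=1.0, horizon=20.0, n=20_000):
    """Midpoint-rule estimate of the integral of f_c(s + d_r) * eps_r(s)."""
    h = horizon / n
    total = 0.0
    for k in range(n):
        s = (k + 0.5) * h
        total += eligibility_kernel(s + D_R) * reward_kernel(s, sign)
    return h * total
```

Because the assumed $f_c$ decays faster than the negative tail of the kernel, the early positive bump dominates and `learning_rate_factor(1.0)` is positive; `learning_rate_factor(-1.0)` is its exact negative, corresponding to the anti-STDP case.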

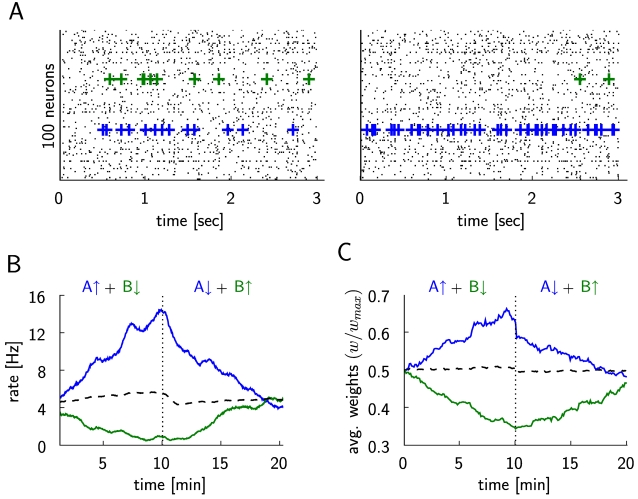

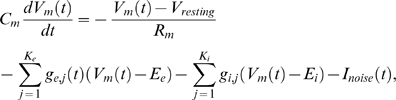

Figure 2. Differential reinforcement of two neurons (within a simulated network of 4000 neurons, the two rewarded neurons are denoted as A and B), corresponding to the experimental results shown in Figure 9 of [17] and Figure 1 of [19].

(A) The spike response of 100 randomly chosen neurons at the beginning of the simulation (20 sec–23 sec, left plot), and in the middle of the simulation just before the switching of the reward policy (597 sec–600 sec, right plot). The firing times of the first reinforced neuron A are marked by blue crosses and those of the second reinforced neuron B are marked by green crosses. (B) The dashed vertical line marks the switch of the reinforcements at t = 10 min. The firing rate of neuron A (blue line) increases while it is positively reinforced in the first half of the simulation and decreases in the second half when its spiking is negatively reinforced. The firing rate of neuron B (green line) decreases during the negative reinforcement in the first half and increases during the positive reinforcement in the second half of the simulation. The average firing rate of 20 other randomly chosen neurons (dashed line) remains unchanged. (C) Evolution of the average weight of excitatory synapses to the rewarded neurons A and B (blue and green lines, respectively), and of the average weight of 1744 randomly chosen excitatory synapses to other neurons in the circuit (dashed line).

Figure 3. Setup of the model for the experiment by Fetz and Baker [17].

(A) Schema of the model: The activity of a single neuron in the circuit determines the amount of reward delivered to all synapses between excitatory neurons in the circuit. (B) The reward signal d(t) in response to a spike train (shown at the top) of the arbitrarily selected neuron (which was selected from a recurrently connected circuit consisting of 4000 neurons). The level of the reward signal d(t) follows the firing rate of the spike train. (C) The eligibility function fc(s) (black curve, left axis), the reward kernel εr(s) delayed by 200 ms (red curve, right axis), and the product of these two functions (blue curve, right axis) as used in our computer experiment. The integral of fc(s+dr)εr(s) is positive, as required according to Equation 10 in order to achieve a positive learning rate for the synapses to the selected neuron.

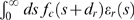

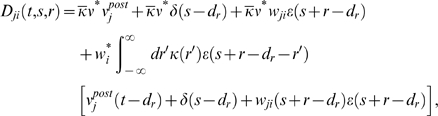

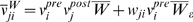

The mean weight change of synapses to non-reinforced neurons j≠k is given by

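$$\frac{d}{dt} w_{ji}(t) \approx \int_0^\infty ds\, f_c(s) \int_0^\infty dr'\, \varepsilon_r(r') \int_{-\infty}^{\infty} dr\, W(r) \left\langle \nu_{ji}(t-s,r)\, \frac{\nu_{kj}(t-d_r-r',\, s-d_r-r')}{\nu_j(t-s)} \right\rangle_T \tag{11}$$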

where νj(t) = 〈Sj(t)〉E is the instantaneous firing rate of neuron j at time t. This equation indicates that a non-reinforced neuron is trained by STDP with a learning rate proportional to its correlation with the reinforced neuron given by νkj(t−dr−r′,s−dr−r′)/νj(t−s). In fact, it was noted in [17] that neurons near the reinforced neuron tended to change their firing rate in the same direction. This observation might be explained by putative correlations of the recorded neuron with nearby neurons. On the other hand, if a neuron j is uncorrelated with the reinforced neuron k, we can decompose the joint firing rate into νkj(t−dr−r′,s−dr−r′) = νk(t−dr−r′)νj(t−s). In this case, the learning rate for synapse ji is approximately zero (see Methods). This ensures that most neurons in the circuit keep a constant firing rate, in spite of continuous weight changes according to reward-modulated STDP.
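The step from an uncorrelated neuron to a vanishing learning rate can be sketched as follows. The sketch assumes, as a simplification made here (the full argument is in Methods), that the firing rate $\nu_k$ of the reinforced neuron varies slowly over the duration of the reward kernel, and it uses the zero-mass property of $\varepsilon_r$:

```latex
\int_0^\infty dr'\, \varepsilon_r(r')\,
  \frac{\nu_k(t-d_r-r')\,\nu_j(t-s)}{\nu_j(t-s)}
= \int_0^\infty dr'\, \varepsilon_r(r')\, \nu_k(t-d_r-r')
\approx \nu_k \int_0^\infty dr'\, \varepsilon_r(r') = 0
```

The factor that multiplies the STDP term for synapse ji therefore vanishes, up to fluctuations of $\nu_k$ on the time scale of the reward kernel.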

Altogether we see that the weights of synapses to the reinforced neuron k can only change if there is spontaneous activity in the network, so that in particular neuron k itself fires spontaneously. On the other hand, the spontaneous network activity should not consist of repeating large-scale spatio-temporal firing patterns, since that would entail correlations between the firing of neuron k and other neurons j, and would lead to similar changes of synapses to these other neurons j. Apart from these requirements on the spontaneous network activity, the preceding theoretical results predict that stability of the circuit is preserved, while the neuron which is causally related to the reward signal is trained by STDP, if the integral of fc(s+dr)εr(s) is positive.
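The sign condition on the integral of fc(s+dr)εr(s) can be checked numerically for a given pair of functions. The following is a minimal sketch, assuming an alpha-shaped eligibility function and a zero-integral reward kernel built from two alpha functions. The function names, the shapes, and all parameter values except the 200 ms delay are illustrative assumptions, not values taken from the Methods.

```python
import math

def alpha(s, tau):
    """Alpha function (s/tau)*exp(1 - s/tau) for s >= 0 (peak value 1 at s = tau), else 0."""
    return (s / tau) * math.exp(1.0 - s / tau) if s >= 0.0 else 0.0

def eligibility(s, tau_e=0.4):
    """Assumed eligibility function f_c(s); tau_e (seconds) is a hypothetical value."""
    return alpha(s, tau_e)

def reward_kernel(s, a_pos=1.0, tau_pos=0.2, a_neg=0.2, tau_neg=1.0):
    """Assumed reward kernel eps_r(s): fast positive lobe minus slow negative lobe.
    Its integral vanishes because a_pos * tau_pos == a_neg * tau_neg."""
    return a_pos * alpha(s, tau_pos) - a_neg * alpha(s, tau_neg)

def integrate(f, upper=20.0, step=1e-3):
    """Left Riemann sum of f over [0, upper)."""
    n = int(round(upper / step))
    return step * sum(f(i * step) for i in range(n))

d_r = 0.2  # reward delay in seconds (200 ms, as in Figure 3C)

kernel_integral = integrate(reward_kernel)
learning_factor = integrate(lambda s: eligibility(s + d_r) * reward_kernel(s))

print(f"integral of eps_r(s):              {kernel_integral:.2e}")
print(f"integral of f_c(s + d_r)*eps_r(s): {learning_factor:.4f}")
```

With these assumed shapes the kernel itself integrates to approximately zero, while its product with the shifted eligibility function has a positive integral, because the eligibility function is large during the positive lobe of the kernel and has largely decayed during the negative lobe.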

Computer Simulation 1: Model for Biofeedback Experiment

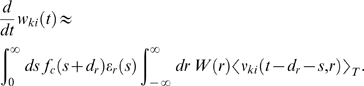

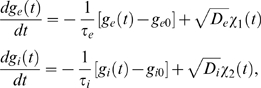

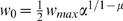

We tested these theoretical predictions through computer simulations of a generic cortical microcircuit receiving a reward signal which depends on the firing of one arbitrarily chosen neuron k from the circuit (reinforced neuron). The circuit was composed of 4000 LIF neurons, with 3200 being excitatory and 800 inhibitory, interconnected randomly by 228954 conductance based synapses with short term dynamics (All computer simulations were also carried out as a control with static current based synapses, see Methods and Suppl.). In addition to the explicitly modeled synaptic connections, conductance noise (generated by an Ornstein-Uhlenbeck process) was injected into each neuron according to data from [26], in order to model synaptic background activity of neocortical neurons in-vivo (More precisely, for 50% of the excitatory neurons the amplitude of the noise injection was reduced to 20%, and instead their connection probabilities from other excitatory neurons were chosen to be larger, see Methods and Figure S1 and Figure S2 for details. The reinforced neuron had to be chosen from the latter population, since reward-modulated STDP does not work properly if the postsynaptic neuron fires too often because of directly injected noise). This background noise elicited spontaneous firing in the circuit at about 4.6 Hz. Reward-modulated STDP was applied continuously to all synapses which had excitatory presynaptic and postsynaptic neurons, and all these synapses received the same reward signal. The reward signal was modeled according to Equation 9. Figure 3C shows one reward pulse caused by a single postsynaptic spike at time t = 0 with the parameters used in the experiment. For several postsynaptic spikes, the amplitude of the reward signal follows the firing rate of the reinforced neuron, see Figure 3B.
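To illustrate how spikes of the reinforced neuron translate into a reward signal, the sketch below sums one delayed kernel per spike. It assumes that Equation 9 has the form d(t) = Σ εr(t − dr − t_spike), summed over the spikes of the reinforced neuron. The kernel shape, its parameters, and the spike times are hypothetical; only the 200 ms delay is taken from Figure 3C.

```python
import math

def alpha(s, tau):
    """Alpha function, zero for s < 0, peak value 1 at s = tau."""
    return (s / tau) * math.exp(1.0 - s / tau) if s >= 0.0 else 0.0

def reward_kernel(s):
    """Hypothetical zero-integral kernel eps_r(s): fast positive lobe, slow negative lobe."""
    return 1.0 * alpha(s, 0.2) - 0.2 * alpha(s, 1.0)

def reward_signal(t, spike_times, d_r=0.2):
    """d(t) as a sum of kernels, one per spike of the reinforced neuron, delayed by d_r."""
    return sum(reward_kernel(t - d_r - t_spike) for t_spike in spike_times)

single_spike = [0.0]
burst = [0.00, 0.02, 0.04, 0.06, 0.08]   # five spikes at 50 Hz

grid = [i * 0.01 for i in range(300)]    # 0 .. 3 s in 10 ms steps
peak_single = max(reward_signal(t, single_spike) for t in grid)
peak_burst = max(reward_signal(t, burst) for t in grid)
print(f"peak reward, single spike: {peak_single:.3f}")
print(f"peak reward, burst:        {peak_burst:.3f}")
```

In this sketch no reward arrives before the delay has elapsed, and a short burst produces a larger reward peak than a single spike, which is the sense in which the amplitude of d(t) follows the firing rate.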

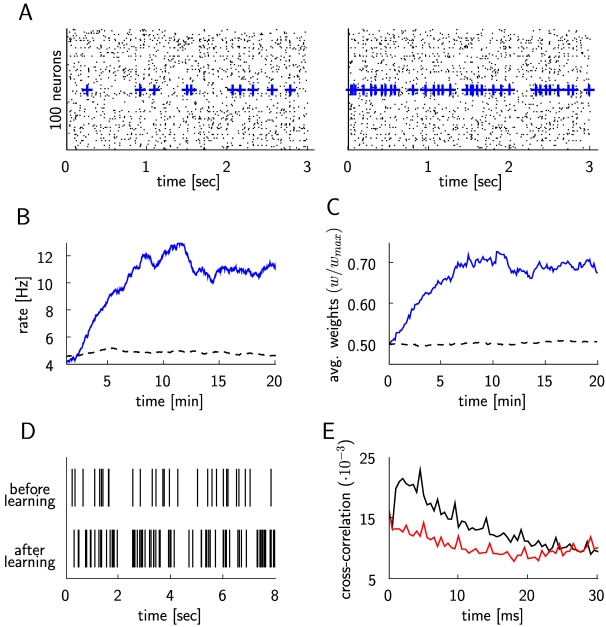

This model was simulated for 20 minutes of biological time. Figure 4A, 4B, and 4D show that the firing rate of the reinforced neuron increases within a few minutes (like in the experiment of [17]), while the firing rates of the other neurons remain largely unchanged. The increase of weights to the reinforced neuron shown in Figure 4C can be explained by the correlations between its presynaptic and postsynaptic spikes shown in panel E. This panel shows that pre-before-post spike pairings (black curve) are in general more frequent than post-before-pre spike pairings. The reinforced neuron increases its rate from around 4 Hz to 12 Hz, which is comparable to the measured firing rates in [15] before and after learning.

Figure 4. Simulation of the experiment by Fetz and Baker [17] for the case where an arbitrarily selected neuron triggers global rewards when it increases its firing rate.

(A) Spike response of 100 randomly chosen neurons within the recurrent network of 4000 neurons at the beginning of the simulation (20 sec–23 sec, left plot), and at the end of the simulation (the last 3 seconds, right plot). The firing times of the reinforced neuron are marked by blue crosses. (B) The firing rate of the positively rewarded neuron (blue line) increases, while the average firing rate of 20 other randomly chosen neurons (dashed line) remains unchanged. (C) Evolution of the average weight of excitatory synapses to the reinforced neuron (blue line), and of the average weight of 1663 randomly chosen excitatory synapses to other neurons in the circuit (dashed line). (D) Spike trains of the reinforced neuron before and after learning. (E) Histogram of the time-differences between presynaptic and postsynaptic spikes (bin size 0.5 ms), averaged over all excitatory synapses to the reinforced neuron. The black curve represents the histogram values for positive time differences (when the presynaptic spike precedes the postsynaptic spike), and the red curve represents the histogram for negative time differences.
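A histogram of the kind shown in panel E can be computed from lists of presynaptic and postsynaptic spike times. The following sketch is one possible way to do this, not the analysis code used for the figure. The 0.5 ms bin size is taken from the caption, whereas the function name, the 50 ms window, and the example spike times are assumptions.

```python
def pairing_histogram(pre_times_ms, post_times_ms, window_ms=50.0, bin_ms=0.5):
    """Count pre/post spike pairs by |t_post - t_pre|, separately for each ordering."""
    n_bins = int(round(window_ms / bin_ms))
    pre_before_post = [0] * n_bins   # positive time differences
    post_before_pre = [0] * n_bins   # negative time differences
    for t_post in post_times_ms:
        for t_pre in pre_times_ms:
            dt = t_post - t_pre
            if 0.0 < dt < window_ms:
                pre_before_post[int(dt / bin_ms)] += 1
            elif -window_ms < dt < 0.0:
                post_before_pre[int(-dt / bin_ms)] += 1
    return pre_before_post, post_before_pre

# Toy example with two presynaptic and two postsynaptic spikes (times in ms).
pre = [10.0, 30.0]
post = [12.2, 29.0]
pos_hist, neg_hist = pairing_histogram(pre, post)
print(sum(pos_hist), "pre-before-post pairs,", sum(neg_hist), "post-before-pre pairs")
```

For a figure like panel E, the two returned lists would additionally be averaged over all excitatory synapses to the reinforced neuron.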

In Figure 9 of [17] and Figure 1 of [19] the results of another experiment were reported where the activity of two adjacent neurons was recorded, and high firing rates of the first neuron and low firing rates of the second neuron were reinforced simultaneously. This kind of differential reinforcement resulted in an increase and a decrease of the firing rates of the two neurons, respectively. We implemented this type of reinforcement by letting the reward signal in our model depend on the spikes of two randomly chosen neurons (we refer to these neurons as neuron A and neuron B), i.e. $d(t) = d_A^{+}(t) + d_B^{-}(t)$, where $d_A^{+}(t)$ is the component that positively rewards spikes of neuron A, and $d_B^{-}(t)$ negatively rewards spikes of neuron B. Both parts of the reward signal, $d_A^{+}(t)$ and $d_B^{-}(t)$, were defined as in Equation 9 for the corresponding neuron. For $d_A^{+}(t)$ we used the reward kernel εr as defined in Equation 29, whereas for $d_B^{-}(t)$ we used $\varepsilon_r^{-} = -\varepsilon_r$ (note that the integral over $\varepsilon_r^{-}$ is still zero). At the middle of the simulation (simulation time t = 10 min), we changed the direction of the reinforcements by negatively rewarding the firing of neuron A and positively rewarding the firing of neuron B (i.e., $d(t) = d_A^{-}(t) + d_B^{+}(t)$). The results are summarized in Figure 2. With a reward signal modeled in this way, we were able to independently increase and decrease the firing rates of the two neurons according to the reinforcements, while the firing rates of the other neurons remained unchanged. Changing the type of reinforcement during the simulation from positive to negative for neuron A and from negative to positive for neuron B resulted in a corresponding shift in their firing rate change in the direction of the reinforcement.
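The differential reward signal with a reversal at 10 minutes can be sketched as a sum of two signed components, one per neuron. The code below is an illustration under several assumptions: the kernel is a hypothetical zero-integral kernel rather than the one of Equation 29, the delay of 0.2 s is illustrative, and the sign of each reward pulse is determined by the time of the spike that caused it (the text does not specify how pulses that straddle the reversal are treated).

```python
import math

def alpha(s, tau):
    """Alpha function, zero for s < 0, peak value 1 at s = tau."""
    return (s / tau) * math.exp(1.0 - s / tau) if s >= 0.0 else 0.0

def eps_r(s):
    """Hypothetical zero-integral reward kernel (not the kernel of Equation 29)."""
    return alpha(s, 0.2) - 0.2 * alpha(s, 1.0)

T_SWITCH = 600.0  # reversal of the reinforcement at t = 10 min, in seconds

def sign_for(neuron, t_spike):
    """+1 if spikes of this neuron are rewarded at time t_spike, -1 if punished."""
    rewarded = "A" if t_spike < T_SWITCH else "B"
    return 1.0 if neuron == rewarded else -1.0

def reward(t, spikes_a, spikes_b, d_r=0.2):
    """d(t) as the sum of a component for neuron A and a component for neuron B."""
    d_a = sum(sign_for("A", ts) * eps_r(t - d_r - ts) for ts in spikes_a)
    d_b = sum(sign_for("B", ts) * eps_r(t - d_r - ts) for ts in spikes_b)
    return d_a + d_b

print(reward(100.4, [100.0], []))   # spike of A before the reversal
print(reward(700.4, [700.0], []))   # spike of A after the reversal
```

Negating the kernel for the punished neuron leaves its integral at zero, so both components share the zero-integral property of εr.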

The dynamics of a network where STDP is applied to all synapses between excitatory neurons is quite sensitive to the specific choice of the STDP-rule. The preceding theoretical analysis (see Equations 10 and 11) predicts that reward-modulated STDP affects in the long run only those excitatory synapses where the firing of the postsynaptic neuron is correlated with the reward signal. In other words: the reward signal gates the effect of STDP in a recurrent network, and thereby can keep the network within a given dynamic regime. This prediction is confirmed qualitatively by the two panels of Figure 4A, which show that even after all excitatory synapses in the recurrent network have been subject to 20 minutes (in simulated biological time) of reward-modulated STDP, the network stays within the asynchronous irregular firing regime. It is also confirmed quantitatively through Figure 5. These figures show results for the simple additive version of STDP (according to Equation 3). Very similar results (see Figure S3 and Figure S4) arise from an application of the more complex STDP-rule proposed in [22] where the weight-change depends on the current weight value.

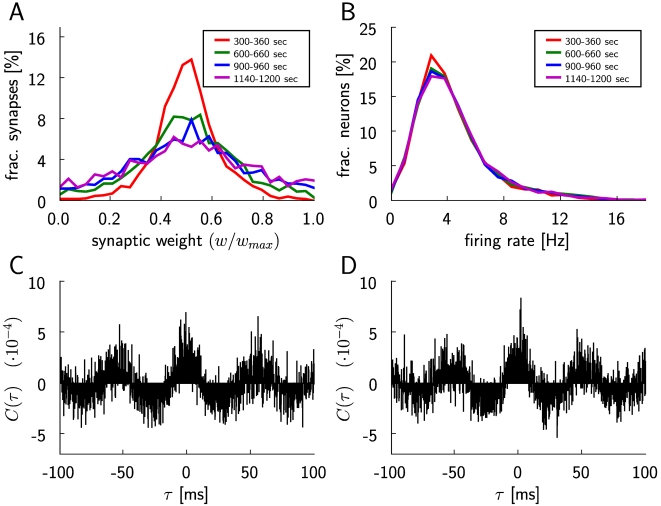

Figure 5. Evolution of the dynamics of a recurrent network of 4000 LIF neurons during application of reward-modulated STDP.

(A) Distribution of the synaptic weights of excitatory synapses to 50 randomly chosen non-reinforced neurons, plotted for 4 different periods of simulated biological time during the simulation. The weights are averaged over 10 samples within these periods. The colors of the curves and the corresponding intervals are as follows: red (300–360 sec), green (600–660 sec), blue (900–960 sec), magenta (1140–1200 sec). (B) The distribution of average firing rates of the non-reinforced excitatory neurons in the circuit, plotted for the same time periods as in (A). The colors of the curves are the same as in (A). The distribution of the firing rates of the neurons in the circuit remains unchanged during the simulation, which covers 20 minutes of biological time. (C) Cross-correlogram of the spiking activity in the circuit, averaged over 200 pairs of non-reinforced neurons and over 60 s, with a bin size of 0.2 ms, for the period between 300 and 360 seconds of simulated biological time. It is calculated as the cross-covariance divided by the square root of the product of variances. (D) As in (C), but between seconds 1140 and 1200. (Separate plots of (B), (C), and (D) for two types of excitatory neurons that received different amounts of noise currents are given in Figure S1 and Figure S2.)
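The normalization described for panels (C) and (D), cross-covariance divided by the square root of the product of variances, can be written down directly for two binned spike trains. The sketch below is a plain-Python illustration for a single pair of neurons; the function name, the toy spike counts, and the use of the biased (divide by n) estimator are assumptions.

```python
import math

def cross_correlogram(x, y, max_lag):
    """Normalized cross-covariance of two equally long binned spike trains.

    Returns a dict mapping lag (in bins) to
    cov(x[t], y[t + lag]) / sqrt(var(x) * var(y)).
    """
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    var_x = sum((a - mean_x) ** 2 for a in x) / n
    var_y = sum((b - mean_y) ** 2 for b in y) / n
    norm = math.sqrt(var_x * var_y)
    correlogram = {}
    for lag in range(-max_lag, max_lag + 1):
        cov = sum((x[t] - mean_x) * (y[t + lag] - mean_y)
                  for t in range(n) if 0 <= t + lag < n) / n
        correlogram[lag] = cov / norm
    return correlogram

# Toy example: y is x delayed by two bins, so the correlogram peaks at lag 2.
x = [0, 1, 0, 0, 1, 1, 0, 0, 0, 1, 0, 0]
y = [0, 0] + x[:-2]
cc = cross_correlogram(x, y, max_lag=4)
best_lag = max(cc, key=cc.get)
print("peak at lag", best_lag)
```

For a figure like panels (C) and (D), such correlograms would be averaged over many pairs of non-reinforced neurons.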

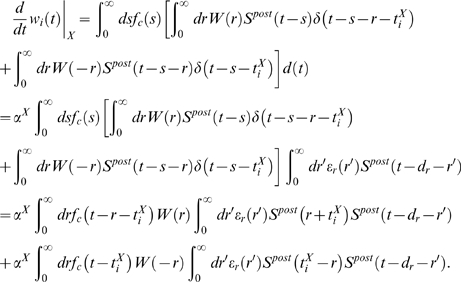

Rewarding Spike-Times

The preceding model for the biofeedback experiment of Fetz and Baker focused on learning of firing rates. In order to explore the capabilities and limitations of reward-modulated STDP in contexts where the temporal structure of spike trains matters, we investigated another reinforcement learning scenario where a neuron should learn to respond with particular temporal spike patterns. We first apply analytical methods to derive conditions under which a neuron subject to reward-modulated STDP can achieve this.

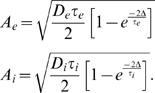

In this model, the reward signal d(t) is given in dependence on how well the output spike train $S_j^{\text{post}}$ of a neuron j matches some rather arbitrary spike train S* (which might for example represent spike output from some other brain structure during a developmental phase). S* is produced by a neuron μ* that receives the same n input spike trains S1,…,Sn as the trained neuron j, with some arbitrarily chosen weights $w_1^*,\dots,w_n^*$, $w_i^* \in \{0, w_{\max}\}$. But in addition the neuron μ* receives n′−n further spike trains Sn+1,…,Sn′ with weights $w_{n+1}^*,\dots,w_{n'}^*$. The setup is illustrated in Figure 6A. It provides a generic reinforcement learning scenario, when a quite arbitrary (and not perfectly realizable) spike output is reinforced, but simultaneously the performance of the learner can be evaluated clearly according to how well its weights wj1,…,wjn match those of the neuron μ* for those n input spike trains which both of them have in common. The reward d(t) at time t depends in this task on both the timing of action potentials of the trained neuron and spike times in the target spike train S*:

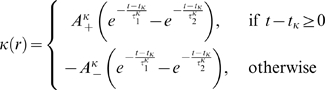

| (12) |

where the function κ(r) with $\bar{\kappa} = \int_{-\infty}^{\infty} \kappa(r)\,dr > 0$ describes how the reward signal depends on the time difference r between a postsynaptic spike and a target spike, and dr>0 is the delay of the reward.
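As an illustration of how such a reward could be evaluated for concrete spike trains, the sketch below assumes that Equation 12 sums κ(r) over all pairs of a postsynaptic spike and a target spike, with r = t_post − t_target, and that the resulting reward arrives dr after the postsynaptic spike. The kernel is a toy function with the sign structure described for Figure 6B. Its shape, the delay of 10 ms, and all names are hypothetical.

```python
import math

T_KAPPA = -6.6  # zero crossing of the kernel in ms (value quoted for Figure 6B)

def kappa(r, tau=20.0):
    """Toy reward kernel: positive for r > T_KAPPA, negative for r < T_KAPPA (r in ms)."""
    x = r - T_KAPPA
    if x >= 0.0:
        return math.exp(-x / tau) - math.exp(-x)        # positive lobe
    return -0.9 * (math.exp(x / tau) - math.exp(x))     # negative lobe

def reward_events(post_spikes, target_spikes, d_r=10.0):
    """Return one (delivery_time, amount) pair per postsynaptic spike."""
    events = []
    for t_post in post_spikes:
        amount = sum(kappa(t_post - t_target) for t_target in target_spikes)
        events.append((t_post + d_r, amount))
    return events

target = [100.0]
events = reward_events(post_spikes=[80.0, 105.0], target_spikes=target)
for delivery_time, amount in events:
    print(f"reward {amount:+.3f} delivered at t = {delivery_time} ms")
```

In this toy example the spike 20 ms before the target spike is punished, whereas the spike 5 ms after the target spike is rewarded.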

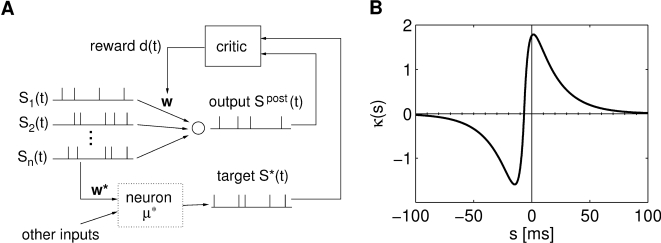

Figure 6. Setup for reinforcement learning of spike times.

(A) Architecture. The trained neuron receives n input spike trains. The neuron μ* receives the same inputs plus additional inputs not accessible to the trained neuron. The reward is determined by the timing differences between the action potentials of the trained neuron and the neuron μ*. (B) A reward kernel with optimal offset from the origin of tκ = −6.6 ms. The optimal offset for this kernel was calculated with respect to the parameters from computer simulation 1 in Table 1. Reward is positive if the neuron spikes around the target spike or somewhat later, and negative if the neuron spikes much too early.

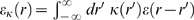

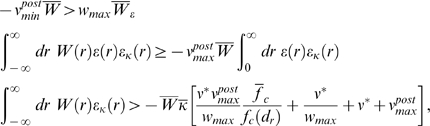

Our theoretical analysis (see Methods) predicts that under the assumption of constant-rate uncorrelated Poisson input statistics this reinforcement learning task can be solved by reward-modulated STDP for arbitrary initial weights if three constraints are fulfilled:

| (13) |

| (14) |

| (15) |

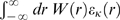

The following parameters occur in these equations:

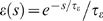

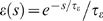

ν* is the output rate of neuron μ*, $\nu_{\min}^{\text{post}}$ is the minimal output rate, $\nu_{\max}^{\text{post}}$ is the maximal output rate of the trained neuron, $\bar{f}_c$ is the integral over the eligibility trace, $\bar{W}$ is the integral over the STDP learning curve (see Equation 2), εκ is the convolution of the reward kernel with the shape of the postsynaptic potential (PSP) ε(s), and $\bar{W}_\varepsilon$ is the integral over the PSP weighted by the learning window.

If these inequalities are fulfilled and input rates are larger than zero, then the weight vector of the trained neuron converges on average from any initial weight vector to w* (i.e., it mimics the weight distribution of neuron μ* for those n inputs which both have in common). To get an intuitive understanding of these inequalities, we first examine the idea behind Constraint 13. This constraint assures that weights of synapses i with $w_i^* = 0$ decay to zero in expectation. First note that input spikes from a spike train Si with $w_i^* = 0$ have no influence on the target spike train S*. In the linear Poisson neuron model, this leads to weight changes similar to STDP which can be described by two terms. First, all synapses are subject to depression stemming from the negative part of the learning curve W and random pre-post spike pairs. This weight change is bounded from below by  for some positive constant α. On the other hand, the positive influence of input spikes on postsynaptic firing leads to potentiation of the synapse bounded from above by  . Hence the weight decays to zero if  , leading to Inequality 13. For synapses i with $w_i^* = w_{\max}$, there is an additional drive, since each presynaptic spike increases the probability of a closely following spike in the target spike train S*. Therefore, the probability of a delayed reward signal after a presynaptic spike is larger. This additional drive leads to positive weight changes if Inequalities 14 and 15 are fulfilled (see Methods).

Note that also for the learning of spike times spontaneous spikes (which might be regarded as “noise”) are important, since they may lead to reward signals that can be exploited by the learning rule. It is obvious that in reward-modulated STDP, a silent neuron cannot recover from its silent state, since there will be no spikes which can drive STDP. But in addition, Condition 13 shows that in this learning scenario, the minimal output rate $\nu_{\min}^{\text{post}}$ (which increases with increasing noise) has to be larger than some positive constant, such that depression is strong enough to weaken synapses if needed. On the other hand, if the noise is too strong also synapses i with wi = wmax will be depressed and may not converge correctly. This can happen when the increased noise leads to a maximal postsynaptic rate $\nu_{\max}^{\text{post}}$ such that Constraints 14 and 15 are not satisfied anymore.

Conditions 13–15 also reveal how parameters of the model influence the applicability of this setup. For example, the eligibility trace enters the equations only in the form of its integral and its value at the reward delay in Equation 15. In fact, the exact shape of the eligibility trace is not important. The important property of an ideal eligibility trace is that it is high at the reward delay and low at other times as expressed by the fraction in Condition 15. Interestingly, the formulas also show that one has quite some freedom in choosing the form of the STDP window, as long as the reward kernel εκ is adjusted accordingly. For example, instead of a standard STDP learning window W with W(r)≥0 for r>0 and W(r)≤0 for r<0 and a corresponding reward kernel κ, one can use a reversed learning window W′ defined by W′(r)≡W(−r) and a reward kernel κ′ such that εκ ′(r) = εκ(−r). If Condition 15 is satisfied for W and κ, then it is also satisfied for W′ and κ′ (and in most cases also Condition 14 will be satisfied). This reflects the fact that in reward modulated STDP the learning window defines the weight changes in combination with the reward signal.

For a given STDP learning window, the analysis reveals what reward kernels κ are suitable for this learning setup. From Condition 15, we can deduce that the integral over κ should be small (but positive), whereas the integral over $W(r)\,\varepsilon_\kappa(r)$ should be large. Hence, for a standard STDP learning window W with W(r)≥0 for r>0 and W(r)≤0 for r<0, the convolution εκ(r) of the reward kernel with the PSP should be positive for r>0 and negative for r<0. In the computer simulation we used a simple kernel depicted in Figure 6B, which satisfies the aforementioned constraints. It consists of two double-exponential functions, one positive and one negative, with a zero crossing at some offset tκ from the origin. The optimal offset tκ is always negative and in the order of several milliseconds for usual PSP-shapes ε. We conclude that for successful learning in this scenario, a positive reward should be produced if the neuron spikes around the target spike or somewhat later, and a negative reward should be produced if the neuron spikes much too early.
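A kernel with the qualitative shape described here can be sketched as follows. The code assumes that each lobe is a difference of two exponentials that vanishes at the zero crossing tκ. The amplitudes 3.34 and −3.12 and the time constant of 20 ms are the values listed for example 1 in Table 1, and tκ = −6.6 ms is the offset quoted for Figure 6B. How these parameters enter the actual kernel is an assumption, and the rise time constant of 1 ms is hypothetical.

```python
import math

def double_exp(x, tau_rise, tau_decay):
    """exp(-x/tau_decay) - exp(-x/tau_rise); zero at x = 0, positive for x > 0."""
    return math.exp(-x / tau_decay) - math.exp(-x / tau_rise)

def kappa(r, t_kappa=-6.6, a_pos=3.34, a_neg=-3.12, tau_rise=1.0, tau_decay=20.0):
    """Reward kernel sketch (r in ms): positive lobe for r > t_kappa, negative lobe below."""
    x = r - t_kappa
    if x >= 0.0:
        return a_pos * double_exp(x, tau_rise, tau_decay)
    return a_neg * double_exp(-x, tau_rise, tau_decay)

# Numerical integral of the kernel over [-400 ms, 400 ms) with a 0.01 ms step.
step = 0.01
kappa_integral = step * sum(kappa(-400.0 + i * step) for i in range(80000))
print(f"integral of kappa: {kappa_integral:.2f}")
```

With these assumed parameters the integral of the kernel is positive but small compared to the area of either lobe, in line with the requirement that the integral over κ be small but positive.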

Computer Simulation 2: Learning Spike Times

In order to explore this learning scenario in a biologically more realistic setting, we trained a LIF neuron with conductance based synapses exhibiting short term facilitation and depression. The trained neuron and the neuron μ* which produced the target spike train S* both received inputs from 100 input neurons emitting spikes from a constant rate Poisson process of 15 Hz. The synapses to the trained neuron were subject to reward-modulated STDP. The weights of neuron μ* were set to  for 0≤i<50 and  for 50≤i<100. In order to simulate a non-realizable target response, neuron μ* received 10 additional synaptic inputs (with weights set to wmax/2). During the simulations we observed a firing rate of 18.2 Hz for the trained neuron, and 25.2 Hz for the neuron μ*. The simulations were run for 2 hours of simulated biological time.
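The input to both neurons, 100 independent Poisson spike trains with a constant rate of 15 Hz, can be generated by drawing exponentially distributed inter-spike intervals. The following sketch uses only the Python standard library; the function name, the seed, and the duration of 20 s are arbitrary choices for illustration.

```python
import random

def poisson_spike_train(rate_hz, duration_s, rng):
    """Spike times of a homogeneous Poisson process on [0, duration_s)."""
    spikes = []
    t = rng.expovariate(rate_hz)          # first inter-spike interval
    while t < duration_s:
        spikes.append(t)
        t += rng.expovariate(rate_hz)     # next exponentially distributed interval
    return spikes

rng = random.Random(0)                    # fixed seed for reproducibility
n_inputs, rate_hz, duration_s = 100, 15.0, 20.0
inputs = [poisson_spike_train(rate_hz, duration_s, rng) for _ in range(n_inputs)]

mean_rate = sum(len(train) for train in inputs) / (n_inputs * duration_s)
print(f"empirical mean input rate: {mean_rate:.2f} Hz")
```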

We performed 5 repetitions of the experiment, each time with different randomly generated inputs and different initial weight values for the trained neuron. In each of the 5 runs, the average synaptic weights of synapses with $w_i^* = w_{\max}$ and $w_i^* = 0$ approached their target values, as shown in Figure 7A. In order to test how closely the trained neuron reproduces the target spike train S* after learning, we performed additional simulations where the same spike input was applied to the trained neuron before and after the learning. Then we compared the output of the trained neuron before and after learning with the output S* of neuron μ*. Figure 7B shows that the trained neuron approximates the part of S* which is accessible to it quite well. Figure 7C–F provide more detailed analyses of the evolution of weights during learning. The computer simulations confirmed the theoretical prediction that the neuron can learn well through reward-modulated STDP only if a certain level of noise is injected into the neuron (see preceding discussion and Figure S6).

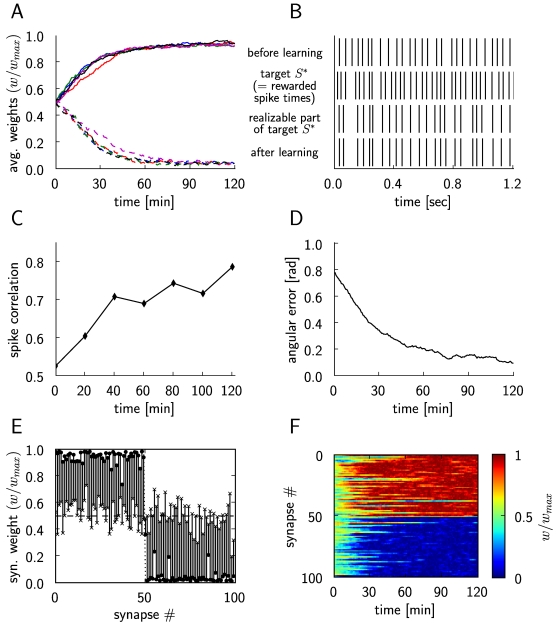

Figure 7. Results for reinforcement learning of exact spike times through reward-modulated STDP.

(A) Synaptic weight changes of the trained LIF neuron, for 5 different runs of the experiment. The curves show the average of the synaptic weights that should converge to  (dashed lines), and the average of the synaptic weights that should converge to  (solid lines) with different colors for each simulation run. (B) Comparison of the output of the trained neuron before (top trace) and after learning (bottom trace). The same input spike trains and the same noise inputs were used before and after training for 2 hours. The second trace from above shows those spike times S* which are rewarded, the third trace shows the realizable part of S* (i.e. those spikes which the trained neuron could potentially learn to reproduce, since the neuron μ* produces them without its 10 extra spike inputs). The close match between the third and fourth trace shows that the trained neuron performs very well. (C) Evolution of the spike correlation between the spike train of the trained neuron and the realizable part of the target spike train S*. (D) The angle between the weight vector w of the trained neuron and the weight vector w* of the neuron μ* during the simulation, in radians. (E) Synaptic weights at the beginning of the simulation are marked with ×, and at the end of the simulation with •, for each plastic synapse of the trained neuron. (F) Evolution of the synaptic weights w/wmax during the simulation (we had chosen  for i<50,  for i≥50).
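The angle between two weight vectors reported in panel (D) follows from the normalized dot product. The helper below is a minimal sketch of that computation; the function name and the example vectors are illustrative.

```python
import math

def angle_between(w, w_star):
    """Angle in radians between two weight vectors of equal length."""
    dot = sum(a * b for a, b in zip(w, w_star))
    norm = math.sqrt(sum(a * a for a in w)) * math.sqrt(sum(b * b for b in w_star))
    cosine = max(-1.0, min(1.0, dot / norm))   # guard against rounding outside [-1, 1]
    return math.acos(cosine)

print(angle_between([1.0, 0.0], [0.0, 1.0]))   # orthogonal vectors
print(angle_between([0.5, 0.5], [1.0, 1.0]))   # parallel vectors
```

An angle close to zero indicates that the learned weight vector points in the same direction as the target weight vector, independently of the overall scale of the weights.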

Both the theoretical results and these computer simulations demonstrate that a neuron can learn quite well through reward-modulated STDP to respond with specific spike patterns.

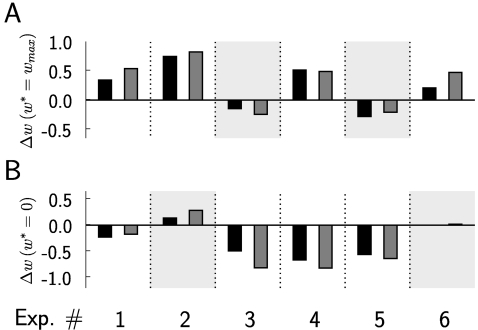

Computer Simulation 3: Testing the Analytically Derived Conditions

Equations 13–15 predict under which relationships between the parameters involved the learning of particular spike responses through reward-modulated STDP will be successful. We have tested these predictions by selecting 6 arbitrary settings of these parameters, which are listed in Table 1. In 4 cases (marked by light gray shading in Figure 8) these conditions were not met (either for the learning of weights with target value wmax, or for the learning of weights with target value 0). Figure 8 shows that the derived learning result is not achieved in exactly these 4 cases. On the other hand, the theoretically predicted weight changes (black bars) match the actual weight changes (gray bars) that occur for the chosen simulation times (listed in the last column of Table 1) remarkably well in all cases.

Table 1. Parameter values used for computer simulation 3 (see Figure 8).

| Ex. | $\tau_\varepsilon$ [ms] | $w_{\max}$ | $\nu_{\min}^{\text{post}}$ [Hz] | $A_+ \cdot 10^6$ | $A_-/A_+$ | $\tau_+$ [ms] | $A_+^\kappa$, $A_-^\kappa$ | $\tau_2^\kappa$ [ms] | $t_{\text{sim}}$ [h] |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| 1 | 10 | 0.012 | 10 | 16.62 | 1.05 | 20 | 3.34, −3.12 | 20 | 5 |
| 2 | 7 | 0.020 | 5 | 11.08 | 1.02 | 15 | 4.58, −4.17 | 16 | 10 |
| 3 | 20 | 0.010 | 6 | 5.54 | 1.10 | 25 | 1.50, −1.39 | 40 | 19 |
| 4 | 7 | 0.020 | 5 | 11.08 | 1.07 | 25 | 4.67, −4.17 | 16 | 13 |
| 5 | 10 | 0.015 | 6 | 20.77 | 1.10 | 25 | 3.75, −3.12 | 20 | 2 |
| 6 | 25 | 0.005 | 3 | 13.85 | 1.01 | 25 | 3.34, −3.12 | 20 | 18 |

Figure 8. Test of the validity of the analytically derived conditions 13–15 on the relationship between parameters for successful learning with reward-modulated STDP.

Predicted average weight changes (black bars) calculated from Equation 22 match in sign and magnitude the actual average weight changes (gray bars) in computer simulations, for 6 different experiments with different parameter settings (see Table 1). (A) Weight changes for synapses with  . (B) Weight changes for synapses with  . Four cases where constraints 13–15 are not fulfilled are shaded in light gray. In all of these four cases the weights move into the opposite direction, i.e., a direction that decreases rewards.

Pattern Discrimination with Reward-Modulated STDP

We examine here the question whether a neuron can learn through reward-modulated STDP to discriminate between two spike patterns P and N of its presynaptic neurons, by responding with more spikes to pattern P than to pattern N. Our analysis is based on the assumption that there exist internal rewards d(t) that could guide such pattern discrimination. This reward based learning architecture is biologically more plausible than an architecture with a supervisor which provides for each input pattern a target output and thereby directly produces the desired firing behavior of the neuron (since the question becomes then how the supervisor has learnt to produce the desired spike outputs).

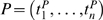

We consider a neuron that receives input from n presynaptic neurons. A pattern X consists of n spike trains, each of time length T, one for each presynaptic neuron. There are two patterns, P and N, which are presented in alternation to the neuron, with some reset time between presentations. For notational simplicity, we assume that each of the n presynaptic spike trains consists of exactly one spike. Hence, each pattern can be defined by a list of spike times: $P = (t_1^P, \ldots, t_n^P)$, $N = (t_1^N, \ldots, t_n^N)$, where $t_i^X$ is the time when presynaptic neuron i spikes for pattern X∈{P,N}. A generalization to the easier case of learning to discriminate spatio-temporal presynaptic firing patterns (where some presynaptic neurons produce different numbers of spikes in different patterns) is straightforward; however, the main characteristics of the learning dynamics are more accessible in this conceptually simpler setup. It had already been shown in [12] that neurons can learn through reward-modulated STDP to discriminate between different spatial presynaptic firing patterns. But in the light of the analysis of [27] it is still open whether neurons can learn with simple forms of reward-modulated STDP, such as the one considered in this article, to discriminate temporal presynaptic firing patterns.

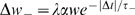

We assume that the reward signal d(t) rewards—after some delay dr—action potentials of the trained neuron if pattern P was presented, and punishes action potentials of the neuron if pattern N was presented. More precisely, we assume that

(16)

with some reward kernel εr and constants αN<0<αP. The goal of this learning task is to produce many output spikes for pattern P, and few or no spikes for pattern N.
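The following Python sketch illustrates one reading of this reward signal: every postsynaptic spike contributes, after the delay, a copy of the reward kernel scaled by a positive constant for pattern P and a negative constant for pattern N. The kernel shape (an alpha function), the delay value, the time step, and the names `reward_kernel` and `reward_signal` are illustrative assumptions and are not taken from the article.

```python
import numpy as np

def reward_kernel(r, tau=0.2):
    """Assumed alpha-shaped kernel: zero for r <= 0, non-negative for r > 0."""
    rp = np.clip(r, 0.0, None)
    return (rp / tau) * np.exp(1.0 - rp / tau)

def reward_signal(post_spike_times, pattern, t_grid,
                  alpha_p=1.0, alpha_n=-1.0, delay=0.5):
    """Sum of delayed kernels, one per postsynaptic spike.

    The sign of the scaling constant depends on the presented pattern:
    alpha_p > 0 rewards spikes for pattern P, alpha_n < 0 punishes them for N.
    """
    alpha = alpha_p if pattern == "P" else alpha_n
    d = np.zeros_like(t_grid, dtype=float)
    for t_spike in post_spike_times:
        d += alpha * reward_kernel(t_grid - t_spike - delay)
    return d

# Hypothetical example: two postsynaptic spikes (times in seconds).
t_grid = np.arange(0.0, 3.0, 0.001)
post_spikes = [0.10, 0.30]
d_p = reward_signal(post_spikes, "P", t_grid)  # non-negative everywhere
d_n = reward_signal(post_spikes, "N", t_grid)  # non-positive everywhere
print(d_p.max(), d_n.min())
```

With these assumed settings the same output spikes produce a reward trace of opposite sign depending only on which pattern was presented, which is the property the analysis relies on.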

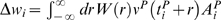

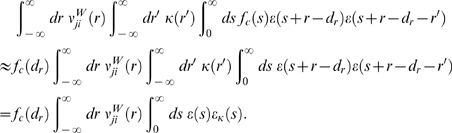

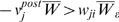

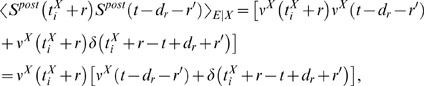

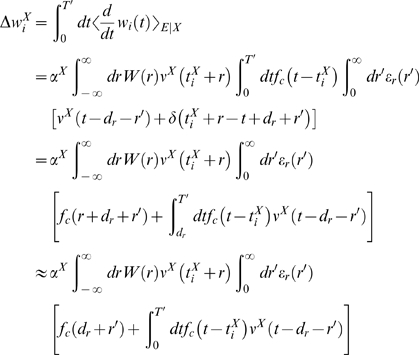

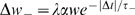

The main result of our analysis is an estimate of the expected weight change of synapse i of the trained neuron for the presentation of pattern P, followed after a sufficiently long time T′ by a presentation of pattern N

where $\langle \cdot \rangle_{E|X}$ is the expectation over the ensemble given that pattern X was presented. This weight change can be estimated as (see Methods)

(17)

where νX(t) is the postsynaptic rate at time t for pattern X, and the pattern-specific constants, one for each X∈{P,N}, are given by

(18)

As we will see shortly, an interesting learning effect is achieved if the constant for pattern P is positive and the constant for pattern N is negative. Since fc(r) is non-negative, a natural way to achieve this is to choose a positive reward kernel, εr(r)≥0 for r>0 and εr(r) = 0 for r<0 (also, fc(r) and εr(r) must not be identical to zero for all r).

We use Equation 17 to provide insight on when and how the classification of temporal spike patterns can be learnt with reward-modulated STDP. Assume for the moment that  . We first note that no synaptic plasticity rule can make the time integral over the membrane potential of the trained neuron larger, after training, for input pattern P than for input pattern N. The reason is that each presynaptic neuron emits the same number of spikes in both patterns (namely one spike). This simple fact implies that it is impossible to train a linear Poisson neuron (with any learning method) to respond to pattern P with more spikes than to pattern N. But Equation 17 implies that reward-modulated STDP increases the variance of the membrane potential for pattern P, and reduces the variance for pattern N. This can be seen as follows. Because of the specific form of the STDP learning curve W(r), which is positive for (small) positive r, negative for (small) negative r, and zero for large r, the positive constant for pattern P has a potentiating effect on synapse i if the postsynaptic rate for pattern P is larger (because of a higher membrane potential) shortly after the presynaptic spike at this synapse i than before that spike. This tends to further increase the membrane potential after that spike. On the other hand, since the constant for pattern N is negative, the same situation for pattern N has a depressing effect on synapse i, which counteracts the increased membrane potential after the presynaptic spike. Dually, if the postsynaptic rate shortly after the presynaptic spike at synapse i is lower than shortly before that spike, the effect on synapse i is depressing for pattern P. This leads to a further decrease of the membrane potential after that spike. In the same situation for pattern N, the effect is potentiating, again counteracting the variation of the membrane potential. The total effect on the postsynaptic membrane potential is that the fluctuations for pattern P are increased, while the membrane potential for pattern N is flattened.

For the LIF neuron model, and most reasonable other non-linear spiking neuron models, as well as for biological neurons in-vivo and in-vitro [28]–[30], larger fluctuations of the membrane potential lead to more action potentials. As a result, reward-modulated STDP tends to increase the number of spikes for pattern P for these neuron models, while it tends to decrease the number of spikes for pattern N, thereby enabling a discrimination of these purely temporal presynaptic spike patterns.
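The argument about the time integral and the variance of the membrane potential can be checked numerically with a small sketch. It assumes a purely linear membrane model (a weighted sum of exponential postsynaptic potentials, no threshold or reset), an arbitrary time constant, and a hand-picked weight vector that favors synapses whose pattern-P spikes are clustered in time. None of these choices come from the article, and the weights are not the result of learning; they only show that one weight vector yields nearly identical integrals but different variances for the two patterns.

```python
import numpy as np

def membrane_trace(spike_times, weights, t_grid, tau=0.02):
    """Linear sum of exponential PSPs, one per presynaptic spike (illustrative)."""
    u = np.zeros_like(t_grid)
    for t_i, w_i in zip(spike_times, weights):
        r = t_grid - t_i
        # PSP is zero before the spike and decays exponentially afterwards.
        u += w_i * np.where(r >= 0, np.exp(-np.clip(r, 0.0, None) / tau), 0.0)
    return u

rng = np.random.default_rng(0)
n, T, dt = 200, 0.5, 1e-4              # channels, pattern length (s), time step (s)
t_grid = np.arange(0.0, 2 * T, dt)     # long enough to include all PSP tails
times_p = rng.uniform(0.0, T, n)       # one spike per channel, pattern P
times_n = rng.uniform(0.0, T, n)       # one spike per channel, pattern N

# Hand-picked weights: strong for synapses whose P-spike falls in a 50 ms window.
weights = np.where((times_p > 0.20) & (times_p < 0.25), 2.0, 0.1)

u_p = membrane_trace(times_p, weights, t_grid)
u_n = membrane_trace(times_n, weights, t_grid)

integral_p, integral_n = u_p.sum() * dt, u_n.sum() * dt
var_p, var_n = u_p.var(), u_n.var()
print("integrals:", integral_p, integral_n)
print("variances:", var_p, var_n)
```

Because every synapse contributes exactly one postsynaptic potential in both patterns, the two integrals agree up to discretization error for any weight vector, while the variance depends on how the strongly weighted spikes are arranged in time.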

Computer Simulation 4: Learning Pattern Classification

We tested these theoretical predictions through computer simulations of a LIF neuron with conductance-based synapses exhibiting short-term depression and facilitation. Both patterns, P and N, had 200 input channels, with 1 spike per channel (hence this is the extreme case where all information lies in the timing of presynaptic spikes). The spike times were drawn from a uniform distribution over a time interval of 500 ms, which was the duration of the patterns. We performed 1000 training trials where the patterns P and N were presented to the neuron in alternation. To introduce exploration for this reinforcement learning task, the neuron was injected with 20% of the Ornstein-Uhlenbeck process conductance noise (see Methods for further details).
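As a minimal sketch of how such input patterns and the alternating presentation schedule could be generated, consider the following Python snippet. The function name `make_pattern`, the random seed, and the choice to start the alternation with pattern P are assumptions made for illustration; the neuron model, the synapses, and the noise process are not included.

```python
import numpy as np

def make_pattern(rng, n_channels=200, duration_ms=500.0):
    """One spike time per input channel, drawn uniformly from [0, duration_ms)."""
    return rng.uniform(0.0, duration_ms, size=n_channels)

rng = np.random.default_rng(1)
pattern_p = make_pattern(rng)  # spike times (ms) for pattern P
pattern_n = make_pattern(rng)  # spike times (ms) for pattern N

# 1000 training trials with P and N presented in alternation
# (starting with P is an assumption).
n_trials = 1000
schedule = ["P" if k % 2 == 0 else "N" for k in range(n_trials)]
print(pattern_p[:3], schedule[:4])
```

Since both patterns have exactly one spike on each channel, they differ only in spike timing, which is what makes the task purely temporal.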

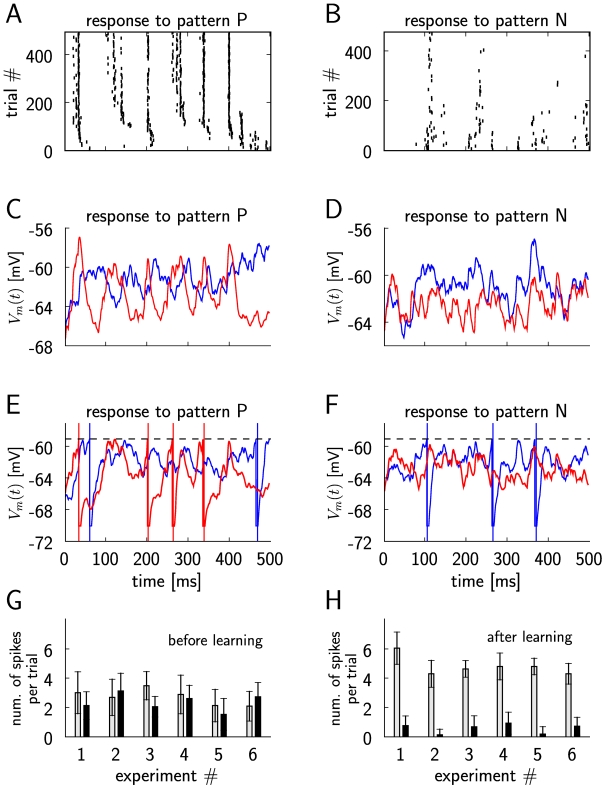

The theoretical analysis predicted that, after learning, the membrane potential will have a higher variance for pattern P and a lower variance for pattern N. When the firing of the neuron in our LIF simulation was switched off (by setting the firing threshold potential too high to be reached), we could observe the membrane potential fluctuations undisturbed by the reset mechanism after each spike (see Figure 9C and 9D). The variance of the membrane potential did in fact increase for pattern P, from 2.49 (mV)² to 5.43 (mV)² (Figure 9C), and decrease for pattern N, from 2.34 (mV)² to 1.33 (mV)² (Figure 9D). The corresponding plots with the firing threshold included are given in panels E and F, showing an increased number of spikes of the LIF neuron for pattern P, and a decreased number of spikes for pattern N. Furthermore, as Figure 9A and 9B show, the increased variance of the membrane potential for the positively reinforced pattern P led to a stable temporal firing pattern in response to pattern P.

Figure 9. Training a LIF neuron to classify purely temporal presynaptic firing patterns: a positive reward is given for firing of the neuron in response to a temporal presynaptic firing pattern P, and a negative reward for firing in response to another temporal pattern N.

(A) The spike response of the neuron for individual trials, during 500 training trials when pattern P is presented. Only the spikes from every 4th trial are plotted. (B) As in (A), but in response to pattern N. (C) The membrane potential Vm(t) of the neuron during a trial where pattern P is presented, before (blue curve) and after training (red curve), with the firing threshold removed. The variance of the membrane potential increases during learning, as predicted by the theory. (D) As in (C), but for pattern N. The variance of the membrane potential for pattern N decreases during learning, as predicted by the theory. (E) The membrane potential Vm(t) of the neuron (including action potentials) during a trial where pattern P is presented, before (blue curve) and after training (red curve). The number of spikes increases. (F) As in (E), but for trials where pattern N is given as input. The number of spikes decreases. (G) Average number of output spikes per trial before learning, in response to pattern P (gray bars) and pattern N (black bars), for 6 experiments with different randomly generated patterns P and N, and different random initial synaptic weights of the neuron. (H) As in (G), for the same experiments, but after learning. The average number of spikes per trial increases after training for pattern P, and decreases for pattern N.

We repeated the experiment 6 times, each time with different randomly generated patterns P and N, and different random initial synaptic weights of the neuron. The results in Figure 9G and 9H show that the learning of temporal pattern discrimination through reward-modulated STDP does not depend on the temporal patterns that are chosen, nor on the initial values of synaptic weights.

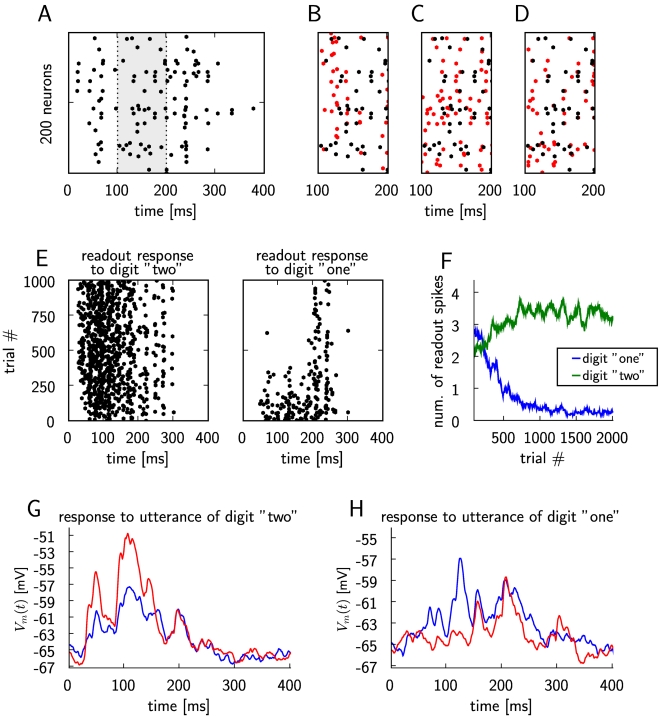

Computer Simulation 5: Training a Readout Neuron with Reward-Modulated STDP To Recognize Isolated Spoken Digits

A longstanding open problem is how a biologically realistic neuron model can be trained in a biologically plausible manner to extract information from a generic cortical microcircuit. Previous work [31]–[35] has shown that quite a bit of salient information about recent and past inputs to the microcircuit can be extracted by a non-spiking linear readout neuron (i.e., a perceptron) that is trained by linear regression or margin maximization methods. Here we examine to what extent a LIF readout neuron with conductance-based synapses (subject to biologically realistic short-term synaptic plasticity) can learn through reward-modulated STDP to extract, from the response of a simulated cortical microcircuit consisting of 540 LIF neurons (see Figure 10A), the information about which spoken digit (transformed into spike trains by a standard cochlea model) is injected into the circuit. In comparison with the preceding task in Simulation 4, this task is easier because the presynaptic firing patterns that need to be discriminated differ in temporal and spatial aspects (see Figure 10B; Figures S10 and S11 show the spike trains that were injected into the circuit). On the other hand, this task is more difficult, because the circuit response (which creates the presynaptic firing pattern for the readout neuron) also differs significantly for two utterances of the same digit (Figure 10C), and even for two trials of the same utterance (Figure 10D), because of the intrinsic noise in the circuit (which was modeled according to [26] to reflect in-vivo conditions during cortical UP-states). The results shown in Figure 10E–H demonstrate that this learning experiment was nevertheless successful. However, we were not able to achieve speaker-independent word recognition in this way, which had been achieved in [31] with a linear readout. Hence further work will be needed in order to clarify whether biologically more realistic models for readout neurons can be trained through reinforcement learning to reach the classification capabilities of perceptrons that are trained through supervised learning.

Figure 10. A LIF neuron is trained through reward-modulated STDP to discriminate as a “readout neuron” responses of generic cortical microcircuits to utterances of different spoken digits.