Abstract

Previous research suggests that both sublexical and lexical representations are involved in spoken word recognition. The current experiment examined when each type of representation is used to process real English words. The same set of words varying in phonotactic probability/neighbourhood density was presented in three versions of a same-different matching task, which differed in their filler items: (1) mostly real words, (2) equal numbers of words and nonsense words and (3) mostly nonsense words. Lexical representations were used to process the words in version 1, whereas sublexical representations were used to process the words in version 3. In version 2, individual variation was observed in the form of distinct sublexical and lexical biases. Implications for the processing of spoken words are discussed.

Keywords: lexical representations, sublexical representations, word recognition, language processing

Introduction

Previous research has shown that neighbourhood density and phonotactic probability influence the speed and accuracy of spoken word recognition (Luce and Pisoni, 1998; Vitevitch and Luce, 1998; 1999). Neighbourhood density refers to the number of words that resemble a given word; these similar words are called neighbours. One way to estimate the number of neighbours a word has is to add, subtract, or substitute a single phoneme in that word (Landauer and Streeter, 1973; see also Luce and Pisoni, 1998). For example, adding, subtracting, or substituting a single phoneme in the word cat yields as neighbours the words scat, at, hat, kit and cab (NB, cat has other neighbours, but only a few were listed for illustration). Words that have many neighbours are said to have a dense neighbourhood, whereas words that have few neighbours are said to have a sparse neighbourhood. Luce and Pisoni (1998) demonstrated in a variety of tasks that words with sparse neighbourhoods were responded to more quickly and accurately than words with dense neighbourhoods. Similar results have been observed in many different listener populations, including normal hearing adults (Luce and Pisoni, 1998), elderly adults (Sommers, 1996; Sommers and Danielson, 1999) and adults with cochlear implants (Kirk, Pisoni and Miyamoto, 1997). The influence of neighbourhood density has also been found in a corpus of naturalistically collected perceptual errors known as ‘slips of the ear’ (Vitevitch, in press).

Phonotactic probability refers to the frequency with which phonological segments and sequences of phonological segments occur in words in the English language (Jusczyk, Luce and Charles-Luce, 1994). Certain segments, such as word-initial /s/, and sequences of segments, such as /sʌ/, are more common than other segments, such as word-initial /j/, and sequences of segments, such as /ji/. Vitevitch, Luce, Charles-Luce and Kemmerer (1997; see also Vitevitch, Pisoni, Kirk, Hay-McCutcheon, and Yount, 2002) found that adult listeners rated nonsense words that contained common segments and sequences of segments (i.e., nonwords with high phonotactic probability) as more like words in English than nonsense words that contained less common segments and sequences of segments (i.e., nonwords with low phonotactic probability). Vitevitch et al. (1997) also found that listeners repeated nonwords with high phonotactic probability more quickly than nonwords with low phonotactic probability, suggesting that adults were sensitive to phonotactic information and may use it in the processing of spoken words.

Interestingly, neighbourhood density and phonotactic probability are positively correlated (Vitevitch, Luce, Pisoni and Auer, 1999). Words with dense neighbourhoods tend to be composed of segments and sequences of segments that are common in English, whereas words with sparse neighbourhoods tend to be composed of segments and sequences of segments that are less common in English. Vitevitch and Luce (1998) showed that words with low phonotactic probability/sparse neighbourhoods were repeated more quickly than words with high phonotactic probability/dense neighbourhoods. In contrast, nonwords with high phonotactic probability/dense neighbourhoods were repeated more quickly than nonwords with low phonotactic probability/sparse neighbourhoods. This presents an intriguing contradiction: the same correlated properties speeded the processing of nonwords but slowed the processing of words.

To resolve this apparent contradiction, Vitevitch and Luce (1998; 1999; see also Pitt and Samuel, 1995) hypothesized that lexical and sublexical representations may be used to process spoken words. Lexical representations correspond to whole word forms, whereas sublexical representations correspond to parts of words, such as phonological segments (i.e., phonemes) or sequences of segments (i.e., biphones). When lexical representations are used to process spoken stimuli, effects of neighbourhood density are observed. When sublexical representations are used to process spoken stimuli, effects of phonotactic probability are observed. Vitevitch and Luce (1998, 1999) tested this hypothesis in a series of experiments with words and nonwords varying in phonotactic probability/neighbourhood density in several tasks.

Auditory shadowing and same-or-different matching are tasks that do not require lexical representations to be accessed for accurate performance. In these tasks, Vitevitch and Luce (1998, 1999) found that high probability/dense nonwords (such as /sʌv/) were responded to more quickly than low probability/sparse nonwords (such as /jʌʃ/), as observed previously in Vitevitch et al. (1997). For real words in the same tasks, high probability/dense words (such as kick) were responded to more slowly than low probability/sparse words (such as fish), as previously observed in Luce and Pisoni (1998). Note that only words or only nonwords were presented to listeners in each of these tasks, so participants could rely on whichever type of representation would let them perform the task optimally. In the case of nonwords, sublexical representations enabled optimal performance. In the case of real words, lexical representations enabled optimal performance.

When the same nonwords and words were presented together in a lexical decision task (a task that requires lexical access for accurate performance), a different pattern of results was found. High probability/dense nonwords were now responded to more slowly than low probability/sparse nonwords. Deciding whether a stimulus was a real English word or a nonsense word led lexical representations to influence the processing of the nonword stimuli. As in the auditory shadowing and same-or-different matching tasks, the high probability/dense words were again responded to more slowly than the low probability/sparse words. These experiments manipulated phonotactic probability/neighbourhood density and lexicality (by presenting only words or only nonwords) across tasks. Taken together, they supported the hypothesis that there are two levels of representation with different processes operating on them. Specifically, when lexical representations were used to process input, effects of neighbourhood density were observed. When sublexical representations were used to process input, effects of phonotactic probability were observed.

The present experiment was conducted to further examine when sublexical and lexical representations might be used to process a set of real, highly familiar English words. Previous work manipulated lexicality (by presenting only words or only nonwords) and varied the experimental task (auditory shadowing versus lexical decision). Here, by contrast, words and nonwords were presented together in the same task (i.e., a same-or-different matching task), and the proportion of word pairs to nonword pairs was manipulated. The aim was to bias listeners towards using either sublexical or lexical representations to process the highly familiar real words. One group of listeners heard more word pairs than nonword pairs, a second group heard equal numbers of word pairs and nonword pairs, and a third group heard more nonword pairs than word pairs.

It was predicted that when mostly words were heard, lexical representations would be used to process the highly familiar real words, resulting in an effect of neighbourhood density. That is, low probability/sparse words would be responded to more quickly than high probability/dense words. When mostly nonwords were heard, it was predicted that sublexical representations would be used to process the highly familiar real words. Nonwords have no lexical representations, and sublexical representations are adequate for accurate performance in this task. Relying on lexical representations for the few real words that would occur would therefore be an inefficient strategy. Thus, sublexical representations would be the most efficient representation to use for both real words and nonwords, resulting in an effect of phonotactic probability for the real words. That is, low probability/sparse words would be responded to more slowly than high probability/dense words.

Perhaps the most interesting case is the condition containing equal numbers of words and nonwords. Several possible outcomes were predicted for this condition. One prediction is that all of the listeners may simply pick one type of representation to process all the stimuli they heard. This situation would be analogous to the processing done by listeners in the lexical decision task of Vitevitch and Luce (1999), in which both words and nonwords produced neighbourhood density effects. If listeners in the present experiment selected lexical representations to process all the stimuli they heard in the same-or-different matching task, we should observe a neighbourhood density effect for the set of highly familiar real words. In contrast, if sublexical representations dominated processing, we might observe a phonotactic probability effect for the set of highly familiar real words.

Another possibility is that one subset of listeners may use sublexical representations to process the set of highly familiar real words, while another subset may use lexical representations. When the data are examined overall, the effects of phonotactic probability in one subset would cancel the effects of neighbourhood density in the other. The result would be no omnibus difference between the highly familiar real words varying in phonotactic probability and neighbourhood density. However, by closely examining the patterns of individual response latencies (Fox, 1984), two separate subgroups should emerge with statistically significant (and opposing) response patterns.

A final possibility is that each participant may switch between lexical and sublexical representations on a trial by trial or random basis. When the data are aggregated and examined in an overall statistical analysis, no difference between the highly familiar real words varying in phonotactic probability and neighbourhood density would again be observed. Furthermore, examining the patterns of individual response latencies (Fox, 1984) would fail to reveal two distinct subgroups.

Although the last two outcomes both predict a null result (i.e., no overall difference in the set of real words) when listeners hear equal numbers of words and nonwords, it is possible to distinguish the two potential processing strategies from each other. If a subset of participants is using sublexical representations to process the real words, while another subset of participants is using lexical representations to process the real words, then two distinct groups should be observable in the data. However, if participants are randomly switching between sublexical and lexical representations on a trial by trial basis, then there should be no distinct ‘subsets’ observable in the data.

Method

Participants

Seventy-eight native English speakers from the pool of Introductory Psychology students participated in partial fulfilment of a course requirement. Twenty-six participants were in each of the three conditions described below. None of the participants reported a history of speech or hearing problems, and all were right-handed.

Materials

One hundred and twenty words and 120 nonwords from Vitevitch and Luce (1999) served as filler items. These filler items made up the SAME and DIFFERENT stimulus pairs in each of the three listening conditions, with the number of word and nonword pairs adjusted for each condition. The critical stimuli, examined in all three conditions, were twenty real words varying in phonotactic probability and neighbourhood density (a subset of the words used in Vitevitch and Luce, 1999). Half of the words were high probability/dense and half were low probability/sparse. These words appeared only in SAME pairs.

Phonotactic probabilities

As in Vitevitch and Luce (1998, 1999), two measures were used to determine phonotactic probability: (1) positional segment frequency (i.e., how often a particular segment occurs in a particular position in a word) and (2) biphone frequency (i.e., segment-to-segment co-occurrence probability). These metrics were based on log frequency-weighted counts of words in an on-line version of Webster’s (1967) Pocket Dictionary, which contains approximately 20,000 computer-readable phonemic transcriptions. The same metrics have been used in other studies examining the influence of phonotactic probability on the processing of spoken language (e.g., Jusczyk, Luce and Charles-Luce, 1994; Mattys and Jusczyk, 2001; Storkel, 2001).

Words that were classified as high-probability patterns consisted of segments with high segment positional probabilities. For example, in the high probability word, /kæp/ (‘cap’), the consonant /k/ is relatively frequent in initial position, the vowel /æ/ is relatively frequent in the medial position, and the consonant /p/ is relatively frequent in the final position. In addition, a high probability phonotactic pattern consisted of biphones with high probability initial consonant-vowel and vowel-final consonant sequences (e.g., /k/ followed by /æ/ and /æ/ followed by /p/ in the word, /kæp/). Words that were classified as low-probability patterns consisted of segments with low segment positional probabilities and low biphone probabilities. The average segment and biphone probabilities were 0.2030 and 0.0140 for the high probability/dense words and 0.1350 and 0.0060 for the low probability/sparse words. The differences between the average segment probabilities (F(1, 18) = 58.49, p<0.001) and the average biphone probabilities (F(1, 18)=20.48, p<0.001) were both statistically significant. The difference in the magnitudes of the segment and biphone probabilities reflects the fact that there are many more biphones than segments.
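The two phonotactic measures can be illustrated with a short computation. The sketch below is not the procedure used to build the stimulus set; it rests on several assumptions. The toy lexicon and its frequencies are hypothetical, standing in for the roughly 20,000 dictionary transcriptions. Each word is weighted by log10(frequency + 1), whereas the text says only "log frequency-weighted". Each probability is taken as the weighted count of words with a given segment (or biphone) in a given position, divided by the weighted count of all words with any segment (or biphone) in that position. Finally, summing across positions to obtain one value per word is also an assumption.

```python
import math
from collections import defaultdict

# Hypothetical toy lexicon: phonemic transcription -> raw word frequency (invented values).
LEXICON = {
    ("k", "æ", "p"): 27,   # cap
    ("k", "æ", "t"): 23,   # cat
    ("k", "ʌ", "t"): 60,   # cut
    ("b", "æ", "k"): 90,   # back
    ("f", "ɪ", "ʃ"): 35,   # fish
    ("d", "ɔ", "g"): 75,   # dog
}


def weight(freq):
    """Log frequency weight; the +1 (an assumption) keeps frequency-1 words from weighing zero."""
    return math.log10(freq + 1)


def positional_tables(lexicon):
    """Weighted counts of segments and biphones by position, plus per-position totals."""
    seg, seg_total = defaultdict(float), defaultdict(float)
    bi, bi_total = defaultdict(float), defaultdict(float)
    for word, freq in lexicon.items():
        w = weight(freq)
        for i, s in enumerate(word):
            seg[(i, s)] += w
            seg_total[i] += w
        for i in range(len(word) - 1):
            bi[(i, word[i:i + 2])] += w
            bi_total[i] += w
    return seg, seg_total, bi, bi_total


def phonotactic_probability(word, lexicon):
    """Return (summed positional segment probability, summed biphone probability)."""
    seg, seg_total, bi, bi_total = positional_tables(lexicon)
    seg_sum = sum(seg.get((i, s), 0.0) / seg_total[i] for i, s in enumerate(word))
    bi_sum = sum(bi.get((i, word[i:i + 2]), 0.0) / bi_total[i]
                 for i in range(len(word) - 1))
    return seg_sum, bi_sum


if __name__ == "__main__":
    for w in [("k", "æ", "p"), ("f", "ɪ", "ʃ")]:
        s, b = phonotactic_probability(w, LEXICON)
        print("".join(w), round(s, 3), round(b, 3))
```

In this toy lexicon, cap (/kæp/) shares its initial /k/ and medial /æ/ with several other entries, so both of its sums exceed those of fish (/fɪʃ/). This mirrors the high- versus low-probability contrast described above. The absolute values are far larger than those reported here only because the toy lexicon is tiny.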

Similarity neighbourhoods

Phonological similarity neighbourhoods were computed for each stimulus by comparing its phonemic transcription to all other transcriptions in the lexicon (see Luce and Pisoni, 1998). A neighbour was defined as any transcription that could be converted to the transcription of the stimulus word by a one-phoneme substitution, deletion, or addition in any position. The mean neighbourhood density values for the high probability/dense and low probability/sparse words were 25 and 17, respectively (F (1, 18) = 11.05, p<.01).
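The one-phoneme neighbour definition can be sketched as a simple comparison of transcriptions. This minimal illustration assumes that transcriptions are stored as tuples of phoneme symbols. The toy lexicon is hypothetical and reuses the cat example from the Introduction, whereas the actual density counts were computed against the full on-line dictionary.

```python
def is_neighbour(a, b):
    """True if b can be formed from a by one phoneme substitution, deletion, or addition."""
    if a == b:
        return False  # a word is not its own neighbour
    if len(a) == len(b):
        # Substitution: exactly one position differs.
        return sum(x != y for x, y in zip(a, b)) == 1
    if abs(len(a) - len(b)) != 1:
        return False
    longer, shorter = (a, b) if len(a) > len(b) else (b, a)
    # Addition/deletion: removing one phoneme from the longer form yields the shorter one.
    return any(longer[:i] + longer[i + 1:] == shorter for i in range(len(longer)))


def neighbourhood(word, lexicon):
    """All lexicon entries that are one-phoneme neighbours of word."""
    return [w for w in lexicon if is_neighbour(word, w)]


# Hypothetical toy lexicon (phonemic transcriptions as tuples).
TOY_LEXICON = [
    ("k", "æ", "t"),       # cat
    ("s", "k", "æ", "t"),  # scat (addition)
    ("æ", "t"),            # at (deletion)
    ("h", "æ", "t"),       # hat (substitution)
    ("k", "ɪ", "t"),       # kit (substitution)
    ("k", "æ", "b"),       # cab (substitution)
    ("s", "k", "æ", "b"),  # scab: two changes away from cat, so not a neighbour
    ("f", "ɪ", "ʃ"),       # fish: not a neighbour
]

cat = ("k", "æ", "t")
density = len(neighbourhood(cat, TOY_LEXICON))  # neighbourhood density of cat in this toy lexicon
```

The count returned for a word is its neighbourhood density in the given lexicon. Counts of this kind, computed over the full lexicon, underlie the mean densities reported above.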

Word frequency

Frequency of occurrence (Kučera and Francis, 1967) was matched across the two word conditions. Average log word frequency was 1.52 for the high probability/dense words and 1.57 for the low probability/sparse words (F<1).

Durations

The durations of the stimuli in the two phonotactic/density conditions did not differ significantly. The high probability/dense words had a mean duration of 603 ms and the low probability/sparse words had a mean duration of 611 ms (F (1, 18) < 1). All stimuli were low-pass filtered at 4.8 kHz and digitized at a sampling rate of 10 kHz using a 12-bit analogue-to-digital converter. Stimuli were edited into individual files and stored on computer disk.

Procedure

Each participant was seated in a booth equipped with a computer terminal, a pair of Beyerdynamic DT-100 headphones, and a two-button response box interfaced to a dedicated timing board in the computer. The left-hand button on the response box was labelled DIFFERENT, and the right-hand button (i.e., the button operated by the participants’ dominant hand) was labelled SAME. A 200 MHz Gateway 2000 Pentium computer controlled the presentation of stimuli and the collection of responses.

A trial proceeded as follows. A prompt (the word ‘READY’) appeared on the computer screen for 500 ms to indicate the beginning of a trial. Participants were then presented with two spoken stimuli over the headphones at 70 dB SPL, with an inter-stimulus interval of 50 ms. Reaction times were measured from the onset of the second stimulus in the pair to the button press. If no response was made within the maximum reaction time of 3 s, the computer automatically recorded an incorrect response and presented the next trial. Participants were told that they would hear pairs of words and nonwords, and were instructed to respond as quickly and as accurately as possible. SAME responses were made with the dominant hand. Non-matching stimuli were created by pairing stimulus items from the same lexical and phonotactic categories. For the DIFFERENT pairs, items with the same initial phoneme and (when possible) the same vowel were paired.
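The timing and scoring rules of a trial can be summarised in a short function. Only three rules in this sketch come from the procedure described above: the 50 ms inter-stimulus interval, reaction time measured from the onset of the second stimulus, and the 3 s response limit. Everything else is hypothetical. The sketch assumes millisecond timestamps from a trial clock starting at the onset of the first stimulus, and it assumes the interval runs from the offset of the first stimulus to the onset of the second. The function names are illustrative only.

```python
from typing import Optional, Tuple

ISI_MS = 50          # inter-stimulus interval
DEADLINE_MS = 3000   # maximum reaction time


def second_onset(first_onset_ms: float, first_duration_ms: float) -> float:
    """Onset of the second stimulus: offset of the first stimulus plus the ISI (assumed definition)."""
    return first_onset_ms + first_duration_ms + ISI_MS


def score_trial(onset2_ms: float, press_ms: Optional[float],
                response: Optional[str], answer: str) -> Tuple[bool, Optional[float]]:
    """Return (correct, reaction time in ms from onset of the second stimulus).

    A missing press, or one later than the deadline, is scored as incorrect
    and no reaction time is retained.
    """
    if press_ms is None or press_ms - onset2_ms > DEADLINE_MS:
        return False, None
    rt = press_ms - onset2_ms
    return response == answer, rt


# Example: a 603 ms first stimulus, then a correct SAME press at 1639 ms on the trial clock.
onset2 = second_onset(0, 603)                     # 653
print(score_trial(onset2, 1639, "SAME", "SAME"))  # (True, 986)
```

Only correct responses with a retained reaction time would enter the reaction time analyses reported below.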

Every participant received a total of 160 trials. Participants were not informed which condition (more word pairs, more nonword pairs, or equal numbers of word and nonword pairs) they would be presented with. In every condition, half of the trials consisted of two identical stimuli (SAME trials) and half consisted of two different stimuli (DIFFERENT trials). In the first condition, 120 of the stimulus pairs were words and 40 were nonwords. In the second condition, 80 pairs were words and 80 were nonwords. In the third condition, 40 pairs were words and 120 were nonwords. In every condition the same 20 word pairs were the words of interest; the rest of the stimulus pairs served as filler items. The 20 words of interest always constituted SAME trials.

Results

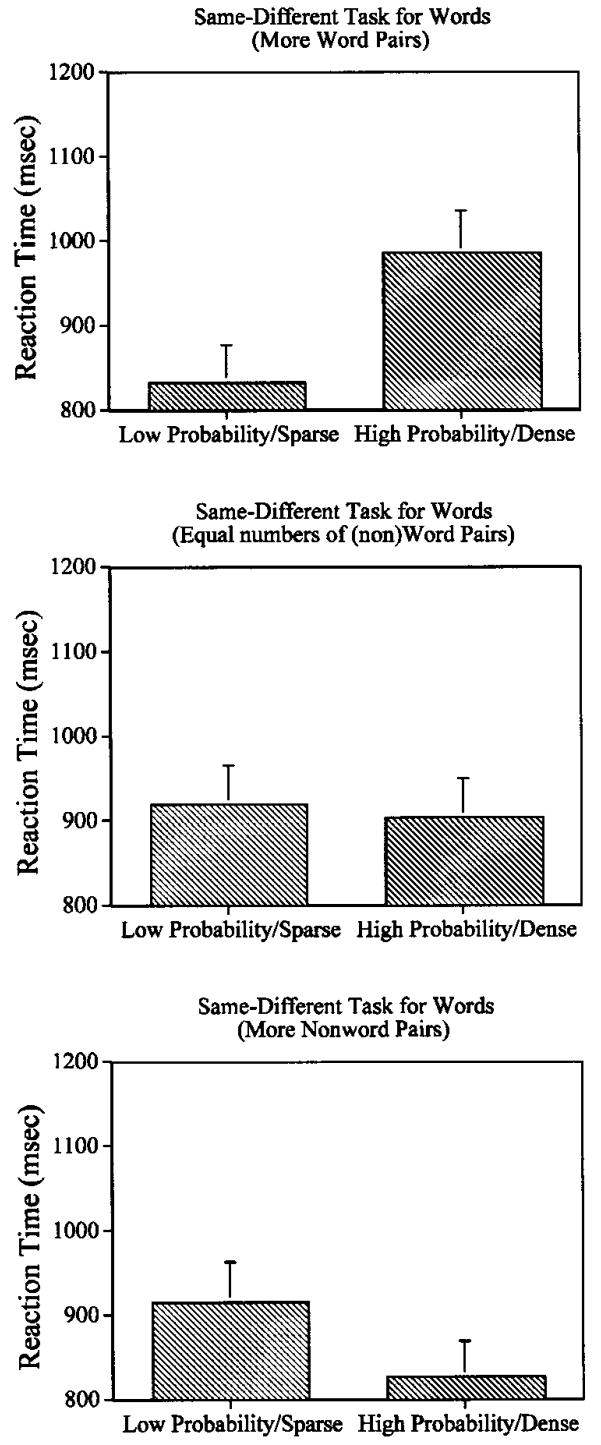

Figure 1 shows the mean reaction times in milliseconds for correct SAME responses to the 20 words of interest in each of the three conditions.

Figure 1.

The mean reaction times for correct SAME responses to the 20 words of interest. The top panel shows the results for the condition containing more word than nonword pairs. The middle panel shows the results for the condition containing equal numbers of word and nonword pairs. The bottom panel shows the results for the condition containing more nonword than word pairs.

Mixed 2 (Probability/Density; within participants) × 3 (Proportion of nonwords; between participants) ANOVAs were performed on reaction times and on percentages correct. No significant effects were obtained for accuracy (all Fs <1), suggesting that the reaction time results reported below do not reflect a trade-off between speed and accuracy.

For the reaction times, the main effects of Probability/Density and Proportion of nonwords were not significant (both Fs < 1). There was, however, a significant interaction of Probability/Density and Proportion of nonwords (F (2, 75) = 11.69, p<0.001). Planned comparisons examined each condition in turn:

- More word than nonword pairs: high probability/dense words (mean = 986 ms) were responded to more slowly than low probability/sparse words (mean = 834 ms; F (1, 25) = 8.61, p<0.01).
- Equal numbers of word and nonword pairs: there was no significant difference between high (mean = 904 ms) and low (mean = 919 ms) probability/density words (F<1).
- More nonword than word pairs: high probability/dense words (mean = 827 ms) were responded to more quickly than low probability/sparse words (mean = 916 ms; F (1, 25) = 12.12, p<0.01).
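Each planned comparison involves a single two-level within-participant factor, so its F ratio equals the square of a paired t statistic, with degrees of freedom (1, n - 1). The sketch below assumes that each comparison was computed on the 26 participants of one condition alone, as the reported F (1, 25) values suggest. The per-participant means in the example are invented.

```python
import math
from statistics import mean, stdev


def within_two_level_F(cond_a, cond_b):
    """F(1, n - 1) for a two-level within-participant factor, computed as a squared paired t.

    cond_a and cond_b hold one mean reaction time per participant, in the same participant order.
    """
    if len(cond_a) != len(cond_b) or len(cond_a) < 2:
        raise ValueError("need paired data from at least two participants")
    diffs = [a - b for a, b in zip(cond_a, cond_b)]
    n = len(diffs)
    t = mean(diffs) / (stdev(diffs) / math.sqrt(n))  # paired t statistic
    return t * t, (1, n - 1)


# Invented per-participant mean RTs (ms) for three participants.
high_dense = [910, 1020, 980]
low_sparse = [870, 960, 960]
F, df = within_two_level_F(high_dense, low_sparse)  # differences 40, 60, 20 give F = 12 on (1, 2)
```

The sketch shows only why the error degrees of freedom are n - 1. The full mixed ANOVA that produced the interaction term is not reproduced here.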

Additional analysis of the equal proportion of words and nonwords condition

The statistically non-significant difference observed for the real words in the condition with equal numbers of words and nonwords could have been produced by either of two processing strategies. One possibility is that participants switched between sublexical and lexical representations on a trial by trial basis, so that the effects of phonotactic probability and neighbourhood density offset each other within each participant. Alternatively, the non-significant result could have come from two different groups of participants, one responding on the basis of lexical representations and the other on the basis of sublexical representations. Their opposing effects would have obscured any significant differences when the groups were combined and analysed together.

To examine which processing strategy (trial by trial switching versus two subsets of participants) might have produced the observed results, the participants were partitioned into two groups. One group responded more quickly to the high probability/dense words, and the other responded more quickly to the low probability/sparse words (see Fox, 1984, for a similar method of partitioning responses). The responses to the real words were then reanalysed separately for each group as a function of phonotactic probability/neighbourhood density. If participants switched between sublexical and lexical representations on a trial by trial basis, then there should still be no statistically significant difference between the two types of real words in either group. However, suppose there were a distinct group of ‘lexical processors’ and a distinct group of ‘sublexical processors’. Then we should observe a statistically significant effect of neighbourhood density for the lexical processors and a statistically significant effect of phonotactic probability for the sublexical processors.
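The partitioning step amounts to classifying each participant by the sign of their reaction time difference between the two word types. In this sketch the participant identifiers and mean reaction times are invented. Keeping exact ties in a separate list is an assumption, since the text does not say how ties would have been handled.

```python
def partition_by_bias(participant_means):
    """Split participants by which word type they answered faster.

    participant_means maps a participant id to (mean RT for high probability/dense words,
    mean RT for low probability/sparse words), both in ms.
    """
    sublexical, lexical, tied = [], [], []
    for pid, (rt_high, rt_low) in participant_means.items():
        if rt_high < rt_low:
            sublexical.append(pid)  # faster to high probability/dense: phonotactic pattern
        elif rt_low < rt_high:
            lexical.append(pid)     # faster to low probability/sparse: neighbourhood density pattern
        else:
            tied.append(pid)        # assumption: ties are set aside
    return sublexical, lexical, tied


example = {"p01": (870, 965), "p02": (950, 830), "p03": (880, 980), "p04": (900, 900)}
sub, lex, ties = partition_by_bias(example)
```

Each resulting group would then be reanalysed on its own, as described above.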

Sixteen participants responded more quickly to the words with high phonotactic probability/dense neighbourhoods than to the words with low phonotactic probability/sparse neighbourhoods; these participants were identified as having a bias to use sublexical representations. Ten participants responded more quickly to the words with low phonotactic probability/sparse neighbourhoods than to the words with high phonotactic probability/dense neighbourhoods; these participants were identified as having a bias to use lexical representations. A chi-square analysis showed that the two groups did not differ significantly in size (χ² = 1.38, n.s.).
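The reported χ² can be reproduced as a goodness-of-fit test against equal expected group sizes (13 and 13) without a continuity correction. Reading the analysis this way is an assumption, although it yields the reported value. With one degree of freedom, the .05 critical value is 3.841, so a split of 16 versus 10 is not significant.

```python
def chi_square_equal_expected(observed):
    """Pearson chi-square goodness of fit against equal expected counts (no continuity correction)."""
    expected = sum(observed) / len(observed)
    return sum((o - expected) ** 2 / expected for o in observed)


CRITICAL_DF1_05 = 3.841  # chi-square critical value, df = 1, alpha = .05

chi2 = chi_square_equal_expected([16, 10])  # sublexical-bias vs lexical-bias group sizes
print(round(chi2, 2), chi2 > CRITICAL_DF1_05)  # 1.38 False
```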

A mixed 2 (Probability/Density; within participants) × 2 (Response bias; between participants) ANOVA showed several interesting results. First, there were no significant main effects (both Fs<1). This suggests that there was no difference in overall response times between the two types of participants (i.e., the two types of response bias). It also suggests no difference in the speed with which participants responded to the two types of real words. There was, however, a significant interaction between participants’ response bias and the two types of real words (F (1, 24) = 62.82, p<0.001). For the participants who had a sublexical response bias, real words with low phonotactic probability/sparse neighbourhoods were responded to more slowly (972 ms) than real words with high phonotactic probability/dense neighbourhoods (877 ms; F (1, 15) = 29.48, p<0.001). For the participants who had a lexical response bias, real words with low phonotactic probability/sparse neighbourhoods were responded to more quickly (833 ms) than real words with high phonotactic probability/dense neighbourhoods (948 ms; F (1, 9) = 39.45, p<0.001). This significant interaction suggests that the overall non-significant difference between the two types of words was not due to each participant switching between sublexical and lexical representations on a trial by trial basis. Rather, it suggests that participants adopted either a lexical or a sublexical response bias to process the real words they heard in the same-or-different task. When these two opposing groups were combined, the overall result was a non-significant difference between the two types of words.

One possible explanation for why a particular processing bias might have emerged in this condition concerns the order of presentation. Participants may have heard a disproportionate number of one stimulus type in the first half of the experiment, leading them to process subsequently presented stimuli in the same way as the initially presented stimuli. In other words, participants who heard more nonword than word pairs in the first half of the experiment may be those in the sublexical bias group. Likewise, participants who heard more word than nonword pairs in the first half may be those in the lexical bias group. To examine this possibility, the number of word and nonword pairs with low phonotactic probability/sparse neighbourhoods and with high phonotactic probability/dense neighbourhoods presented in the first and second halves of the experiment was counted for each participant in each group (sublexical versus lexical bias).
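The check described here amounts to tallying, for each participant’s presentation order, how many pairs of each type fell in the first and second halves of the session. The sketch assumes a hypothetical list of trial labels in presentation order, each a (lexicality, probability/density) pair. The label strings and the short eight-trial order are illustrative only.

```python
from collections import Counter


def counts_by_half(trial_labels):
    """Count trial types in the first and second halves of one participant's session."""
    half = len(trial_labels) // 2
    return Counter(trial_labels[:half]), Counter(trial_labels[half:])


# Hypothetical presentation order for one participant (a real session had 160 trials).
order = [("word", "high"), ("nonword", "low"), ("nonword", "high"), ("word", "low"),
         ("word", "high"), ("word", "low"), ("nonword", "high"), ("nonword", "low")]
first, second = counts_by_half(order)
```

The per-participant counts for each half would then serve as the dependent measure in the group comparison reported in the next paragraph.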

A mixed ANOVA revealed no significant differences in the number of words and nonwords varying in phonotactic probability/neighbourhood density presented in the two halves of the experiment, in either group of listeners (all Fs (1, 50) < 1). This analysis casts doubt on an extrinsic explanation for the processing biases observed in the present experiment. That is, participants in the sublexical group did not simply hear more nonword pairs at the beginning of the experiment, and participants in the lexical group did not simply hear more word pairs at the beginning of the experiment. Rather, the processing biases observed in the present experiment were most likely due to characteristics intrinsic to the listeners.

Discussion

The present experiment examined the way that the same set of highly familiar, real English words was processed in three different conditions that varied the ratio of words and nonwords in a same-different matching task. Taken together, the results from the three conditions of this experiment support the hypothesis that sublexical and lexical representations are used to process spoken words (Pitt and Samuel, 1995; Vitevitch and Luce, 1998, 1999). Specifically, when mostly words were heard, participants used lexical representations to process the real words, as evidenced by the neighbourhood density effect in that condition. That is, words with high phonotactic probability/dense neighbourhoods were responded to more slowly than words with low phonotactic probability/sparse neighbourhoods (Luce and Pisoni, 1998). This result suggests that under normal processing situations (i.e., conditions in which we hear words in our native language) we primarily rely on lexical representations to recognize spoken words. Indeed, competition among lexical representations is perhaps one of the few commonalities among current models of spoken word recognition (e.g., Luce and Pisoni, 1998; McClelland and Elman, 1986; Norris, McQueen and Cutler, 1998).

In contrast, when mostly nonwords were heard, participants used sublexical representations to process the real words, as evidenced by the phonotactic probability effect in that condition. Words with high phonotactic probability/dense neighbourhoods were responded to more quickly than words with low phonotactic probability/sparse neighbourhoods (Vitevitch and Luce, 1998, 1999). To the best of my knowledge, this is the first demonstration in which the same set of real words showed an effect of both neighbourhood density and phonotactic probability, albeit in different conditions (cf. Experiment 2 of Vitevitch and Luce, 1999). This finding suggests that, under circumstances in which we hear mostly nonwords, sublexical representations may be used to process spoken input.

Although listening to nonwords sounds like an improbable situation in daily life, such circumstances arise more often than one might imagine. Consider, for example, the case of learning a new word. When you first encounter a novel sequence of sounds, there is no lexical representation to access. Therefore, sublexical representations may be used to retain that sequence of sounds until semantic information can be associated with it and a holistic lexical representation can be created. Storkel (2001, 2002; see also Storkel and Rogers, 2000) has found that children do indeed use sublexical representations to help them acquire novel words. Although word learning is typically associated with children, adults also acquire novel lexical items, suggesting that adults may also use sublexical representations to learn new words. Now consider the process of segmenting words from fluent speech. Essentially, the listener is presented with a long stream of nonsense that must be partitioned into meaningful units (i.e., words). Evidence from infants (Mattys and Jusczyk, 2001) and adults (Gaygen, 1999; McQueen, 1998) suggests that sublexical representations are used to extract meaningful words from the ‘nonsense’ of fluent speech. Thus, sublexical representations may be used to extract meaning from ambient nonsense more often than we might initially think.

The results of the present experiment further showed that, in the condition containing equal numbers of words and nonwords, subsets of participants used either sublexical or lexical representations to process the real words. Finding distinct subsets (i.e., sublexical and lexical processors) suggests that listeners did not randomly switch between sublexical and lexical representations on a trial by trial basis. It further suggests that individual differences may play a role in the processing of spoken words. For example, individuals with larger vocabularies (expressive, receptive, or both) may have more efficient lexical processes. They may therefore be more likely than individuals with smaller vocabularies and less efficient lexical processes to rely on lexical representations in ambiguous processing environments. Differences in short-term memory capacity may also have influenced how much listeners relied on sublexical versus lexical representations (e.g., Gathercole, Frankish, Pickering and Peaker, 1999; Gathercole, Service, Hitch, Adams and Martin, 1999); unfortunately, such measures were not collected in the present investigation. Further research is needed to examine the complex relationships among short-term memory capacity, vocabulary size, (sub)lexical biases in language processing, and word learning ability.

Intrinsic (sub)lexical biases may also influence outcome measures in hearing impaired listeners who use cochlear implants. For example, Vitevitch et al. (2002) assessed word recognition ability with the Northwestern University Auditory Test No. 6 (NU-6; Tillman and Carhart, 1966). They found that cochlear implant users with good word recognition ability used both lexical and sublexical representations in optimal ways in a variety of tasks, much like the normal hearing listeners in Vitevitch and Luce (1999). In contrast, cochlear implant users with poor word recognition ability (as assessed by their NU-6 scores) did not use sublexical and lexical representations in an optimal manner. The results of the present study demonstrate that it is possible to identify specific cognitive processing biases used by normal hearing listeners to recognize spoken words. The small sample size in Vitevitch et al. (2002) precluded the additional analyses that might have identified such biases in their two groups of cochlear implant users. Future research that distinguishes when specific processing biases yield optimal performance may lead to the development of effective interventions for hearing impaired listeners who do not receive maximal benefit from a cochlear implant.

Finally, the present study demonstrated the utility of using classic chronometric techniques (Posner, 1978) in studies of psycholinguistic phenomena. These and other techniques that measure online processing may prove useful in investigating the nature of processing and representational systems in many clinical populations (Tompkins, 1998).

Acknowledgments

This research was supported in part by training grant DC00012 (while the author was a postdoctoral research associate at Indiana University) and research grant DC04259 from the National Institute on Deafness and Other Communication Disorders, National Institutes of Health. I would also like to thank David B. Pisoni for his guidance, assistance and generosity while I was at Indiana University, and Holly Storkel for many helpful discussions. A portion of these findings was presented at the 138th Meeting of the Acoustical Society of America, Columbus, OH, November 1999.

Appendix

| Words with high phonotactic probability/neighbourhood density | Words with low phonotactic probability/neighbourhood density |
|---|---|
| back | bomb |
| bar | dog |
| coat | dumb |
| cap | fall |
| coat | feed |
| cot | home |
| cut | hop |
| deer | jack |
| fan | nap |
| fair | lock |

References

- Fox RA. Effect of lexical status on phonetic categorization. Journal of Experimental Psychology: Human Perception and Performance. 1984;10:526–540. doi: 10.1037/0096-1523.10.4.526.

- Gathercole SE, Frankish CR, Pickering SJ, Peaker S. Phonotactic influences on short-term memory. Journal of Experimental Psychology: Learning, Memory, and Cognition. 1999;25:84–95. doi: 10.1037/0278-7393.25.1.84.

- Gathercole SE, Service E, Hitch GJ, Adams A, Martin AJ. Phonological short-term memory and vocabulary development: further evidence on the nature of the relationship. Applied Cognitive Psychology. 1999;13:65–77.

- Gaygen DE. Effects of phonotactic probability on the recognition of words in continuous speech. Unpublished doctoral dissertation, University at Buffalo. Buffalo, NY; 1999.

- Jusczyk PW, Luce PA, Charles-Luce J. Infants’ sensitivity to phonotactic patterns in the native language. Journal of Memory and Language. 1994;33:630–645.

- Kirk KI, Pisoni DB, Miyamoto RC. Effects of stimulus variability on speech perception in listeners with hearing impairment. Journal of Speech, Language, and Hearing Research. 1997;40:1395–1405. doi: 10.1044/jslhr.4006.1395.

- Kucera H, Francis WN. Computational Analysis of Present-Day American English. Providence, RI: Brown University Press; 1967.

- Landauer TK, Streeter LA. Structural differences between common and rare words: Failure of equivalence assumptions for theories of word recognition. Journal of Verbal Learning and Verbal Behavior. 1973;12:119–131.

- Luce PA, Pisoni DB. Recognizing spoken words: the neighbourhood activation model. Ear and Hearing. 1998;19:1–36. doi: 10.1097/00003446-199802000-00001.

- Mattys SL, Jusczyk PW. Phonotactic cues for segmentation of fluent speech by infants. Cognition. 2001;78:91–121. doi: 10.1016/s0010-0277(00)00109-8.

- McClelland JL, Elman JL. The TRACE model of speech perception. Cognitive Psychology. 1986;18:1–86. doi: 10.1016/0010-0285(86)90015-0.

- McQueen JM. Segmentation of continuous speech using phonotactics. Journal of Memory and Language. 1998;39:21–46.

- Norris D, McQueen JM, Cutler A. Merging information in speech recognition: Feedback is never necessary. Behavioral and Brain Sciences. 1998;23:299–370. doi: 10.1017/s0140525x00003241.

- Pitt MA, Samuel AG. Lexical and sublexical feedback in auditory word recognition. Cognitive Psychology. 1995;29:149–188. doi: 10.1006/cogp.1995.1014.

- Posner MI. Chronometric Explorations of Mind. Hillsdale, NJ: Lawrence Erlbaum Associates; 1978. pp. 1–26.

- Sommers MS. The structural organization of the mental lexicon and its contribution to age-related declines in spoken-word recognition. Psychology and Aging. 1996;11:333–341. doi: 10.1037/0882-7974.11.2.333.

- Sommers MS, Danielson SM. Inhibitory processes and spoken word recognition in young and older adults: the interaction of lexical competition and semantic context. Psychology and Aging. 1999;14:458–472. doi: 10.1037/0882-7974.14.3.458.

- Storkel HL. Learning new words: phonotactic probability in language development. Journal of Speech, Language, and Hearing Research. 2001;44:1321–1337. doi: 10.1044/1092-4388(2001/103).

- Storkel HL. Restructuring similarity neighbourhoods in the developing mental lexicon. Journal of Child Language. 2002;29:251–274. doi: 10.1017/s0305000902005032.

- Storkel HL, Rogers MA. The effect of probabilistic phonotactics on lexical acquisition. Clinical Linguistics and Phonetics. 2000;14:407–425.

- Tillman TW, Carhart R. An expanded test for speech discrimination utilizing CNC monosyllabic words: Northwestern University Auditory Test No. 6. Technical Report No. SAM-TR-66-55. Brooks Air Force Base, TX: USAF School of Aerospace Medicine; 1966.

- Tompkins CA. Special forum on online measures of comprehension: implications for speech-language pathologists. American Journal of Speech-Language Pathology. 1998;7:48.

- Vitevitch MS. Naturalistic and experimental analyses of word frequency and neighbourhood density effects in slips of the ear. Language and Speech. In press;45(4). doi: 10.1177/00238309020450040501.

- Vitevitch MS, Luce PA. When words compete: levels of processing in spoken word perception. Psychological Science. 1998;9:325–329.

- Vitevitch MS, Luce PA. Probabilistic phonotactics and spoken word recognition. Journal of Memory and Language. 1999;40:374–408.

- Vitevitch MS, Luce PA, Charles-Luce J, Kemmerer D. Phonotactics and syllable stress: implications for the processing of spoken nonsense words. Language and Speech. 1997;40:47–62. doi: 10.1177/002383099704000103.

- Vitevitch MS, Luce PA, Pisoni DB, Auer ET. Phonotactics, neighbourhood activation and lexical access for spoken words. Brain and Language. 1999;68:306–311. doi: 10.1006/brln.1999.2116.

- Vitevitch MS, Pisoni DB, Kirk KI, Hay-McCutcheon M, Yount S. Effects of phonotactic probabilities on the processing of spoken words and nonwords by adults with cochlear implants who were postlingually deafened. Volta Review. 2002;102:283–302.