Abstract

Humans produce hand movements to manipulate objects, but also make hand movements to convey socially relevant information to one another. The mirror neuron system (MNS) is activated during the observation and execution of actions. Previous neuroimaging experiments have identified the inferior parietal lobule (IPL) and frontal operculum as parts of the human MNS. Although experiments have suggested that object-directed hand movements drive the MNS, it is not clear whether communicative hand gestures that do not involve an object are effective stimuli for the MNS. Furthermore, it is unknown whether there is differential activation in the MNS for communicative hand gestures and object-directed hand movements. Here we report the results of a functional magnetic resonance imaging (fMRI) experiment in which participants viewed, imitated and produced communicative hand gestures and object-directed hand movements. The observation and execution of both types of hand movements activated the MNS to a similar degree. These results demonstrate that the MNS is involved in the observation and execution of both communicative hand gestures and object-directed hand movements.

Keywords: action, fMRI, mirror neurons, non-verbal communication, STS

INTRODUCTION

Hand gestures are one of the principal means for conveying socially relevant information to another person (Hobson, 1993; Parr et al., 2005). Understanding of both communicative hand gestures and object-directed hand movements may be mediated by a common or overlapping representation for perception and action (Bandura, 1977; Prinz, 1992). The discovery of mirror neurons, neurons that respond during both the observation and execution of an action, suggested a neural basis for the link between perception and action. Mirror neurons are found in ventral premotor cortex (area F5) and inferior parietal lobule (IPL) of the macaque monkey (di Pellegrino et al., 1992; Gallese et al., 1996; Fogassi et al., 2005). Cytoarchitectonic maps suggest that the human homologue for area F5 is the frontal operculum (Petrides and Pandya, 1994). Functional magnetic resonance imaging (fMRI) and positron emission tomography (PET) studies suggest that the IPL and frontal operculum form a human mirror neuron system (Iacoboni et al., 1999; Decety et al., 2002; Leslie et al., 2004). Previous neurophysiological and neuroimaging studies have indicated that the STS is involved in the perception of biological motion and, more broadly, in social communication (Perrett et al., 1985; Allison et al., 2000; Hoffman and Haxby, 2000; Puce and Perrett, 2003). There are direct anatomical connections between the STS and the IPL and between the IPL and F5, but not between the STS and area F5 (Rizzolatti et al., 2001). Thus, the STS along with the mirror neuron system form a network of areas that plays a central role in action understanding (Bruce et al., 1981; Rizzolatti and Craighero, 2004).

Mirror neurons in the monkey have been described as firing in association with goal-directed actions made with an object (Rizzolatti and Craighero, 2004). Recently, communicative mouth mirror neurons were discovered in area F5 of the monkey that fire in association with the observation and execution of mouth movements that are used in communication and are not object-directed, such as lip smacking (Ferrari et al., 2003). There have been no reports of communicative hand mirror neurons in the monkey that respond to the observation and execution of hand movements that do not involve an object. Several neuroimaging studies have reported significant activation in the frontal operculum during the observation and execution of facial expressions (Carr et al., 2003; Dapretto et al., 2006). Our previous study (Montgomery et al., 2003) found significant activation for both facial expressions and communicative hand gestures. To our knowledge, there has been no direct comparison of the activations in the human MNS elicited by object-directed hand movements and communicative hand gestures.

To test whether there is differential activation in the MNS for communicative hand gestures and object-directed hand movements, we measured local hemodynamic responses with fMRI while participants viewed, imitated and produced communicative hand gestures and object-directed hand movements. We predicted that the MNS would respond significantly in association with both types of hand movements.

MATERIALS AND METHODS

Participants

Fourteen healthy male participants between 20 and 25 years of age (mean = 22 years) participated in the study. They gave informed consent for participation in the study, which was approved by the Institutional Review Panel for Human Subjects of the Princeton University Research Board. The participants were paid for their participation. All participants were right-handed and had normal or corrected-to-normal vision.

Stimuli

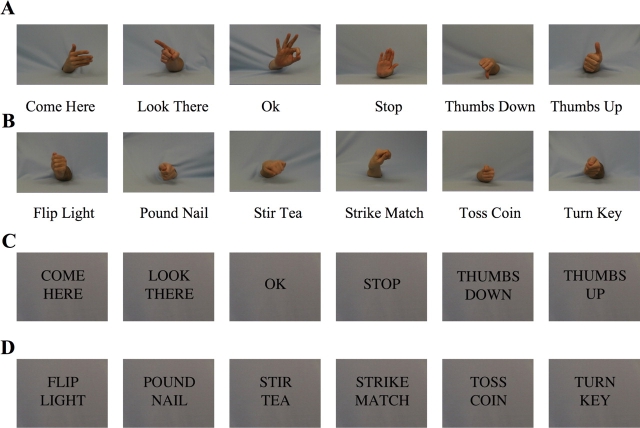

Stimuli were video clips of communicative hand gestures (come here, look there, okay sign, stop, thumbs down and thumbs up), object-directed hand movements (flip light, pound nail, stir tea, strike match, toss coin and turn key), and word stimuli that described the communicative or object-directed hand movements (Figure 1). The video stimuli were from six people (three females) making the gestures with their right hand and only the hand and forearm were visible in the video clips. The videos of object-directed hand movements were mimed actions of the object-directed hand movements and did not include the object. The word stimuli were presented as black text on a gray background. In total, there were 36 communicative hand gesture stimuli, 36 object-directed hand movement stimuli and 12 word stimuli (six to describe the communicative hand gestures and six to describe the object-directed hand movements).

Fig. 1.

Communicative hand gesture and object-directed hand movement stimuli. (A) Examples of communicative hand gesture stimuli (come here, look there, okay, stop, thumbs down and thumbs up). (B) Examples of object-directed hand movement stimuli (flip light, pound nail, stir tea, strike match, toss coin and turn key). (C) The six word stimuli that describe the communicative hand gestures. (D) The six word stimuli that describe the object-directed hand movements.

Stimuli were produced using a Canon XL1s 3CCD digital video camera and were edited using iMovie (Apple Computer, California) and Final Cut Pro (Apple Computer, California). Sixteen runs, eight runs of communicative hand gestures and eight runs of object-directed hand movements, were produced as DVD chapters and burned to a DVD. The DVD was used to present the stimuli to the participants in high resolution via an Epson 7250 LCD projector, projected onto a rear projection screen in the scanner bore. The participants viewed the images via a small mirror placed above their eyes.

Experimental design

For each participant, we obtained 16 time series, eight for communicative hand gestures and eight for object-directed hand movements. During each time series there were three conditions: passive viewing, imitation and production. In the passive viewing condition, participants viewed the video clips of communicative hand gestures or object-directed hand movements. During the imitation condition, participants imitated the communicative hand gesture or object-directed hand movement that they saw in the observed video clip. Finally, during the production condition, participants saw a word or phrase describing the communicative hand gestures or object-directed hand movements and produced the action described. These three conditions made it possible to distinguish perception alone, perception and action and action without perception. Every time series had three blocks with one block for each condition. The blocks were 62 s in duration and began with a 2 s cue that indicated the condition type, followed by six items. Each item consisted of a 2 s stimulus followed by an 8 s pause. Each time series began and ended with a 10 s period of a gray screen with a black fixation cross in the center. Each time series had a duration of 3 min and 26 s. Both the order of blocks and of the time series were counterbalanced and pseudo-randomized across participants. Due to a technical problem, only 12 time series were analyzed for one of the participants.
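The timing figures above can be cross-checked with a few lines of arithmetic. The sketch below uses only durations stated in the text plus the 2 s repetition time reported under data acquisition; the variable names are illustrative and not part of the original protocol.

```python
# Timing values taken from the text; variable names are illustrative.
CUE_S = 2              # cue at the start of each block
ITEMS_PER_BLOCK = 6
STIMULUS_S = 2
PAUSE_S = 8
BLOCKS_PER_SERIES = 3  # view, imitate, produce
FIXATION_S = 10        # gray screen at the start and again at the end
TR_S = 2               # repetition time reported in the acquisition section

block_s = CUE_S + ITEMS_PER_BLOCK * (STIMULUS_S + PAUSE_S)
series_s = 2 * FIXATION_S + BLOCKS_PER_SERIES * block_s
minutes, seconds = divmod(series_s, 60)
volumes = series_s // TR_S

print(f"block: {block_s} s")
print(f"time series: {series_s} s = {minutes} min {seconds} s")
print(f"volumes at TR = {TR_S} s: {volumes}")
```

The result agrees with the stated block length of 62 s, the run length of 3 min 26 s, and the 103 EPI volumes acquired per time series.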

During two of the three conditions, the participants made hand movements during fMRI scanning, which can introduce movement-related artifacts in the images. Motion produces an immediate magnetic field artifact leading to signal changes, whereas the blood oxygenation level dependent (BOLD) signal is delayed and peaks ∼4–6 s after stimulus presentation (Friston, 1994; Birn et al., 1999). To distinguish BOLD MR signal changes from movement-related artifacts, each stimulus was followed by an 8 s pause when the participant did not move. Consequently, the fast signal changes linked with brief movements could be distinguished from the slow hemodynamic responses related to brain activity.
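The separation between a fast movement artifact and a delayed hemodynamic response can be illustrated with a small simulation of a single 10 s item. This is a sketch under stated assumptions, not the authors' analysis code: the gamma-variate form t^b·exp(−t/c) and the parameter values b = 8.6 and c = 0.547 are a commonly used parameterization chosen here for illustration, and the 0.1 s sampling step is arbitrary.

```python
import math

DT = 0.1                    # sampling step in seconds (illustrative)
B, C = 8.6, 0.547           # assumed gamma-variate parameters, not from the paper
MOVE_S, ITEM_S = 2.0, 10.0  # 2 s stimulus plus 8 s pause, as in the text

n = int(round(ITEM_S / DT))
n_move = int(round(MOVE_S / DT))
times = [i * DT for i in range(n)]

# Unconvolved square wave: 1 while the hand is moving, 0 during the pause.
boxcar = [1.0 if i < n_move else 0.0 for i in range(n)]

# Gamma-variate impulse response h(t) = t**B * exp(-t / C).
hrf = [t ** B * math.exp(-t / C) for t in times]

# Discrete convolution of the square wave with the impulse response.
bold = [sum(boxcar[j] * hrf[i - j] for j in range(i + 1)) * DT for i in range(n)]

peak_index = max(range(n), key=lambda i: bold[i])
peak_time = times[peak_index]

# Modeled BOLD amplitude at the last moving sample, relative to its peak.
ratio = bold[n_move - 1] / bold[peak_index]

print(f"modeled BOLD response peaks at about {peak_time:.1f} s")
print(f"relative amplitude at the end of the movement: {ratio:.3f}")
```

Under these assumptions the modeled hemodynamic response is still close to zero while the hand is moving and peaks several seconds into the pause, which is why an artifact time-locked to the movement and the BOLD response occupy largely different parts of each item.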

Participants were trained before the scanning session to familiarize them with the conditions and stimuli. During the scanning session, the hands of the participants were videotaped to monitor performance accuracy.

Data acquisition and analyses

MRI scanning was performed using a 3T head scanner (Allegra, Siemens, Erlangen, Germany) with a standard birdcage head coil. Functional images were taken with a gradient echo echoplanar imaging (EPI) sequence (TR = 2000 ms, TE = 30 ms, FoV = 192 mm, flip angle = 80°; 64 × 64 matrix). Thirty-two contiguous, axial slices that covered most of the brain were used (thickness = 3 mm; gap = 1 mm; in-plane resolution = 3 × 3 mm²). For each time series, a total of 103 EPI volume images were acquired. A high-resolution anatomical scan of the whole brain (T1-MPRAGE, 256 × 256 matrix, TR = 2500 ms, TE = 4.3 ms, flip angle = 8°) was acquired in the same session for anatomical localization and spatial normalization.

Data were analyzed using AFNI (Cox, 1996). Prior to statistical analysis, images were motion corrected to the fifth volume of the first EPI time series and smoothed with a 6-mm FWHM 3D Gaussian filter. The first four images of each time series were excluded from analysis. Images were analyzed using voxelwise multiple regression with square wave functions reflecting each condition (communicative hand gestures: view, imitate, do; object-directed hand movements: view, imitate, do) that were convolved with a Gamma function model of the hemodynamic response to reflect the time course of the BOLD signal. In addition, unconvolved square wave functions for each condition were included as regressors of non-interest to account for brief movement-artifacts associated with hand movements. See supplementary Figure 1 for an illustration of the predicted non-overlapping signal changes that were modeled by these condition and movement regressors. Additional regressors of non-interest were used to factor out variance due to overall motion of the participant between time series, as well as regressors accounting for mean, linear and quadratic trends within time series. Thus, the multiple regression analysis models included six regressors of interest, six regressors to account for signal changes due to the execution of hand movements, a regressor for the condition cue, six regressors for head movement (roll, pitch, yaw, x, y and z) and 48 regressors that accounted for mean, linear and quadratic trends. The multiple regression model results identified the areas that were activated for each condition compared to baseline, which was defined as the rest periods when the participants were viewing a blank screen. The beta coefficients for each regressor of interest were normalized to the mean baseline response, which was found by calculating the mean activity for the baseline periods between each condition, and converted to percent signal change maps. 
The percent signal change maps for each individual participant were converted into Talairach space for group analysis (Talairach and Tournoux, 1988). A random-effects analysis of variance (ANOVA) was performed to obtain group results. Regions that were activated significantly by the perception and production of actions were identified based on the response during imitation, using a threshold of P < 0.001 (uncorrected) and a cluster size of 540 mm³ (Tables 1 and 2). To examine activity during observation or execution alone, we tested the significance of the response during the view and do conditions in the peak voxel for the imitate condition.
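As a bookkeeping aid, the regressors listed for the multiple regression model can be tallied as follows. The grouping and the reading of the 48 trend regressors as three polynomial terms for each of 16 time series follow the description above; the `percent_signal_change` helper is a hypothetical illustration of one common way to normalize a beta coefficient to a baseline mean, not the authors' exact procedure.

```python
RUNS = 16  # time series per participant, as stated in the experimental design

# Regressor groups described in the text.
regressors = {
    "conditions of interest (2 movement types x view/imitate/do)": 2 * 3,
    "unconvolved hand-movement regressors": 2 * 3,
    "condition cue": 1,
    "head motion (roll, pitch, yaw, x, y, z)": 6,
    "mean, linear and quadratic trends per time series": 3 * RUNS,
}
total = sum(regressors.values())

for name, count in regressors.items():
    print(f"{count:3d}  {name}")
print(f"{total:3d}  total")


def percent_signal_change(beta: float, baseline_mean: float) -> float:
    """Express a beta coefficient as a percentage of the mean baseline signal.

    Hypothetical helper: assumes beta and baseline share the same raw units.
    """
    if baseline_mean == 0:
        raise ValueError("baseline mean must be non-zero")
    return 100.0 * beta / baseline_mean
```

For example, a beta of 5 raw signal units against a baseline mean of 1000 would correspond to a 0.5% signal change under this convention.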

Table 1.

Coordinates and statistics for activation evoked during viewing, imitating and producing communicative hand gestures as compared to rest

| Brain region | Talairach coordinates | T value for view | T value for imitate | T value for do |
|---|---|---|---|---|
| Primary motor cortex, BA 4 | LH: −34, −20, 58 | 4.65*** | 7.55*** | 5.93*** |
| Primary somatosensory cortex, BA 3 | LH: −38, −33, 52 | 4.68*** | 8.21*** | 7.25*** |
| Premotor cortex, BA 6 | RH: 53, 0, 42 | 2.56* | 4.53*** | 3.68** |
| | LH: −49, 4, 41 | 4.03** | 5.16*** | 4.04** |
| Frontal operculum, BA 44 | RH: 54, 11, 15 | 3.84** | 6.35*** | 6.92*** |
| | LH: −53, −10, 16 | 3.94** | 6.45*** | 6.91*** |
| Inferior parietal lobe, BA 40 | RH: 58, −32, 39 | 3.57** | 6.07*** | 6.23*** |
| | LH: −53, −48, 38 | 4.39*** | 6.77*** | 6.67*** |
| Superior temporal sulcus, BA 22 | RH: 46, −49, 7 | 3.66** | 5.04*** | 4.27*** |
| | LH: −52, −51, 9 | 3.01** | 4.10** | 4.06** |
| Middle occipital gyrus, BA 19/37 (EBA) | RH: 48, −61, 3 | 4.10** | 5.57*** | n.s. |
| | LH: −52, −65, 3 | 3.85** | 5.96*** | 3.19** |
| Insula | RH: 38, 3, 4 | 2.30* | 6.63*** | 4.51*** |
| | LH: −36, −4, −5 | 2.30* | 5.86*** | 6.02*** |
| Early visual cortex, BA 17/18 | RH: 4, −90, 2 | 6.03*** | 4.94*** | 3.83** |
| | LH: −2, −89, −1 | 4.26*** | 4.33*** | 3.23** |
| Cerebellum | RH: 39, −52, −25 | n.s. | 4.31*** | 5.54*** |
| | LH: −24, −51, −25 | n.s. | 3.20** | 5.34*** |

*P < 0.05, **P < 0.01, ***P < 0.001.

BA: Brodmann area; RH: right hemisphere; LH: left hemisphere; n.s.: not significant.

Table 2.

Coordinates and statistics for activation evoked during viewing, imitating and producing object-directed hand movements as compared to rest

| Brain region | Talairach coordinates | T value for view | T value for imitate | T value for do |
|---|---|---|---|---|
| Primary motor cortex, BA 4 | LH: −34, −20, 56 | 4.72*** | 7.61*** | 6.09*** |
| Primary somatosensory cortex, BA 3 | LH: −38, −33, 52 | 4.80** | 8.18*** | 7.07*** |
| Premotor cortex, BA 6 | RH: 54, 0, 41 | 3.87** | 5.31*** | 5.23*** |
| | LH: −49, 3, 41 | 3.90** | 6.45*** | 5.70*** |
| Frontal operculum, BA 44 | RH: 53, 11, 15 | 3.03* | 6.47*** | 6.11*** |
| | LH: −53, −12, 14 | 4.06** | 7.20*** | 6.79*** |
| Inferior parietal lobe, BA 40 | RH: 54, −32, 39 | 3.98** | 6.57*** | 6.10*** |
| | LH: −52, −45, 38 | 4.25*** | 6.84*** | 6.48*** |
| Superior temporal sulcus, BA 22 | RH: 46, −47, 8 | 3.58** | 5.27*** | 3.40** |
| | LH: −54, −51, 9 | 3.16** | 4.40*** | 3.01** |
| Middle occipital gyrus, BA 19/37 (EBA) | RH: 47, −61, 5 | 5.57*** | 6.51*** | n.s. |
| | LH: −51, −65, 3 | 5.68*** | 6.74*** | 2.86* |
| Insula | RH: 38, 2, 3 | 2.54* | 6.82*** | 4.75*** |
| | LH: −34, −6, −1 | 2.30* | 6.23*** | 6.91*** |
| Early visual cortex, BA 17/18 | RH: 5, −86, 11 | 4.78*** | 5.20*** | 4.17** |
| | LH: −5, −94, 13 | 4.16*** | 5.29*** | 4.04** |
| Cerebellum | RH: 37, −52, −24 | n.s. | 6.70*** | 5.30*** |
| | LH: −24, −52, −23 | n.s. | 5.69*** | 5.26*** |

*P < 0.05, **P < 0.01, ***P < 0.001.

BA: Brodmann area; RH: right hemisphere; LH: left hemisphere; n.s.: not significant.

For the analyses of time series data, anatomically defined volumes of interest (VOI) were drawn on high-resolution structural images to identify the three areas for which we had specific hypotheses: the STS, IPL and frontal operculum. The VOI for the STS extended from 60 to 10 mm posterior to the anterior commissure in Talairach brain atlas coordinates (Talairach and Tournoux, 1988). The VOI for the IPL extended from 60 to 24 mm posterior to the anterior commissure and included the intraparietal sulcus and supramarginal gyrus. The VOI for the frontal operculum extended from 8 to 32 mm anterior to the anterior commissure and included the pars opercularis and pars triangularis. Voxels within these VOIs that were significantly responsive to any of the experimental conditions, as determined by an omnibus general linear test (P < 0.0001), were identified in each individual.

Mean signals for all activated voxels within a given VOI were computed by averaging across the condition blocks of detrended, raw time signals. The average number of activated voxels (1 mm³) was 95 in the bilateral STS, 123 in the bilateral IPL and 402 in the bilateral frontal operculum. Selected contrasts were evaluated with paired t-tests.
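For readers unfamiliar with the paired contrast used on the VOI signals, the sketch below computes a paired t statistic from per-participant mean responses using only the Python standard library. The function name and the example numbers are hypothetical and chosen purely for illustration; they are not data from this study.

```python
import math
from statistics import mean, stdev


def paired_t(a, b):
    """Return (t, degrees of freedom) for paired samples a and b."""
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("need two equally long samples with at least 2 pairs")
    diffs = [x - y for x, y in zip(a, b)]
    sd = stdev(diffs)  # sample standard deviation of the differences
    if sd == 0:
        raise ValueError("all paired differences are identical")
    n = len(diffs)
    return mean(diffs) / (sd / math.sqrt(n)), n - 1


# Hypothetical per-participant percent signal change values (not study data).
gestures = [0.42, 0.35, 0.51, 0.38, 0.47]
movements = [0.30, 0.33, 0.40, 0.36, 0.39]

t_value, df = paired_t(gestures, movements)
print(f"t({df}) = {t_value:.2f}")
```

Each participant contributes one difference score, so the test has n − 1 degrees of freedom, where n is the number of participants entering the contrast.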

RESULTS

We found significant activations in the STS, IPL and frontal operculum in all conditions (Figure 2, Tables 1 and 2).

Fig. 2.

Significant activity in the mirror neuron system during the observation, imitation and production of communicative hand gestures (A) and object-directed hand movements (B) as compared to baseline activity (P < 0.001, uncorrected for multiple comparisons, and a cluster size of 540 mm³). Group data (n = 14) from a random-effects ANOVA have been overlaid on a single participant's high-resolution anatomical scan.

STS activity

We found significant differences between the responses in the STS to communicative hand gestures and object-directed hand movements based on the analysis of time courses of individual responses [F(1,89) = 5.34, P = 0.02] (Figure 3). Pairwise comparisons revealed a significantly larger response during production of communicative hand gestures (P < 0.001). There was not a significant effect of task for communicative hand gestures [F(2,29) = 0.05, P = 0.95], but there was a significant effect of task for object-directed movements [F(2,29) = 8.04, P = 0.0008]. Pairwise comparisons showed that for object-directed hand movements, there was a significantly greater response for imitating as compared to viewing and producing (P < 0.01). There was no significant difference between viewing and producing. The volumes of activated voxels in the right and left STS were not significantly different.

Fig. 3.

Time course analyses from the STS and mirror neuron system. (A) Time course results for the STS. There was a significant main effect for stimulus type (P = 0.02) and significantly greater activity in the production task for communicative hand gestures as compared to object-directed hand movements (P < 0.001). (B) Time course results for the IPL. There was no significant main effect for stimulus type (P = 0.78). (C) Time course results for the frontal operculum. There was no significant main effect for stimulus type (P = 0.10).

IPL activity

We did not find significant differences between the responses in the IPL to communicative hand gestures and object-directed hand movements based on the analysis of time courses of individual responses [F(1,89) = 0.08, P = 0.78] (Figure 3). There was a significant effect of task for both communicative hand gestures [F(2,29) = 4.32, P = 0.02] and object-directed hand movements [F(2,29) = 7.6, P = 0.001]. For both communicative hand gestures and object-directed hand movements, there was a significantly greater response for imitating and producing than for viewing (P < 0.001), but imitating and producing were not significantly different from one another. The regional analysis revealed a left-hemisphere advantage with a larger volume of activated voxels (252 vs 150) in the left IPL as compared to the right (P < 0.02).

Frontal operculum activity

We did not find significant differences between the responses in the frontal operculum to communicative hand gestures and object-directed hand movements based on the analysis of time courses of individual responses [F(1,89) = 2.66, P = 0.10] (Figure 3). There was a significant effect of task for both communicative hand gestures [F(2,29) = 4.4, P = 0.02] and for object-directed hand movements [F(2,29) = 5.4, P = 0.007]. For both communicative hand gestures and object-directed hand movements, there was a significantly greater response for imitating and producing than for viewing (P < 0.001), but imitating and producing were not significantly different from one another. The volumes of activated voxels in the right and left frontal operculum were not significantly different.

DISCUSSION

We investigated whether communicative hand gestures and object-directed hand movements activate the STS and the MNS. We found significant activation in the STS and MNS for the observation, imitation and production of both object-directed hand movements and communicative hand gestures. Interestingly, activation in the MNS did not differ significantly between communicative hand gestures and object-directed hand movements, suggesting that communicative hand gestures activate the MNS in a manner similar to object-directed hand movements.

There was a significant response in the STS during observation, imitation and production of communicative hand gestures and object-directed hand movements. This finding is consistent with previous neurophysiological and neuroimaging studies that have found the STS to be involved with the perception of biological movements (Perrett et al., 1985; Allison et al., 2000; Jellema et al., 2000; Puce and Perrett, 2003). We found significant activation in the STS during production of hand gestures without perception. This finding raises the question of whether the STS is involved only in the visual perception of action or in both the perception and execution of action. To our knowledge, there are no reports from single unit recordings in monkey cortex of STS neurons that respond to an action when the monkey cannot see the action that is being produced. Previous neuroimaging studies have found that the STS responds to the imagery of biological motion (Grossman and Blake, 2001), but the activation to imagery is weaker than the response to observation (Ishai et al., 2000; O’Craven and Kanwisher, 2000). In our experiment, however, the response during production was stronger than the response during observation, which suggests that imagery by itself is an unlikely explanation for this activity. Another possible explanation is that STS activity could be modulated by the MNS, which is connected to the STS (Rizzolatti et al., 2001). We found greater activation in the STS while imitating actions as compared to viewing actions, suggesting augmentation by feedback derived from motor-related activity. Previous findings (Iacoboni et al., 2001; Keysers and Perrett, 2004; Gazzola et al., 2006; Montgomery et al., 2003) have suggested that STS activity during action production is due to feedback from the MNS, imagery or a combination of the two.

We found a significant difference in the STS between communicative hand gestures and object-directed hand movements. This significant difference only existed during the production condition. In our previous study (Montgomery et al., 2003), which investigated activity in the STS and MNS for facial expressions and communicative hand gestures, we found that the activation during action production was equal to the activation during imitation in the STS. In the current study, we found equal activation during the production and imitation conditions for communicative hand gestures, consistent with our previous finding (Montgomery et al., 2003). In contrast, activation in the production condition for object-directed hand movements was less than the activation during the imitation condition in the STS. The finding of increased activation in the STS for the production of communicative hand gestures compared to object-directed hand movements might reflect the role of the STS in social communication (Allison et al., 2000; Haxby et al., 2000; Puce and Perrett, 2003).

There was significant bilateral IPL activity during the observation, imitation and production of communicative hand gestures and object-directed hand movements. The significant bilateral IPL response during observation and execution is consistent with previous imitation neuroimaging studies (Iacoboni et al., 1999; Decety et al., 2002; Buccino et al., 2004). We found greater activation in the left IPL than in the right IPL, which is consistent with the patient literature that suggests left hemisphere damage is linked to hand and finger imitation deficits (Goldenberg, 1999; Goldenberg and Hermsdorfer, 2002) along with neuroimaging studies suggesting that gesture imitation is left-lateralized (Muhlua et al., 2005; Montgomery et al., 2003). Furthermore, a recent neuroimaging study investigating imitation found that IPL activity was bilateral, but was stronger on the side contralateral to the response hand (Aziz-Zadeh et al., 2006). Since in our experiment participants made gestures with their right hand, stronger activation in the left IPL is consistent with these previous findings.

We did not find a significant difference in activation in bilateral IPL for communicative hand gestures and object-directed hand movements suggesting that the IPL is involved with the observation and execution of both object-directed and communicative hand movements. Previous single-cell findings have found mirror neurons in inferior parietal lobe of the monkey that respond to object-directed actions such as grasping a piece of food to eat (Fogassi et al., 2005). To date, there are no reports of communicative mirror neurons in the IPL that fire during the observation and execution of actions that are not object-directed. Our results suggest that the IPL is involved with hand gestures that are used in nonverbal communication that do not include an object.

We found significant responses in bilateral frontal operculum, including pars opercularis, during the viewing, imitation and production of communicative hand gestures and object-directed hand movements, in agreement with previous findings (Gallese et al., 1996; Iacoboni et al., 1999). We did not find that activity in the frontal operculum was lateralized, as it was in the IPL. The lack of laterality in the frontal operculum may be due to the perspective of the stimulus. Our study used stimuli that were from the third person perspective, not the first person perspective. Previous neuroimaging studies have found that the observation and imitation of first person perspective stimuli resulted in significantly more activation in the inferior frontal gyrus as compared to third person perspective stimuli (Jackson et al., 2006). As suggested by Aziz-Zadeh and colleagues (2006), the question of whether the perspective of the stimulus results in laterality differences in activation of the MNS should be explored in future studies.

We did not find a significant difference in activation in the frontal operculum for communicative hand gestures and object-directed hand movements, suggesting that the frontal operculum responds to the observation and execution of both communicative and object-directed hand movements. In the monkey, there are communicative mouth mirror neurons in area F5 that fire in response to the observation and execution of mouth actions that are not object-directed, such as lip smacking and teeth-chatter (Ferrari et al., 2003). In the human, there have been several neuroimaging reports of significant activation in the frontal operculum during the observation and execution of communicative facial actions, such as facial expressions (Carr et al., 2003; Dapretto et al., 2006), and one neuroimaging report of significant activation in response to the observation and execution of communicative hand gestures (Montgomery et al., 2003). Our result of equivalent activity in the frontal operculum for communicative hand gestures and object-directed hand movements suggests that the frontal operculum is activated during the observation and execution of communicative hand gestures as well as communicative facial gestures.

Outside of the MNS, the neural representation of communicative hand gestures and object-directed hand movements did differ. We found significant differences in activation between communicative hand gestures and object-directed hand movements with stronger activity for object-directed hand movements in areas associated with motor behavior (cerebellum, putamen and premotor cortex) and stronger activity for communicative hand gestures in areas associated with social cognition (anterior STS, temporal pole and medial prefrontal cortex). These differences suggest that the object-directed actions evoked stronger activity in areas associated with motor skills (Toni et al., 1998; Doyon et al., 2002), whereas the communicative gestures evoked stronger activity in areas associated with social cognition, theory of mind and person knowledge (Frith and Frith, 2003; Amodio and Frith, 2006). A more detailed analysis of these results and a discussion of their significance is the subject of a separate report (Isenberg et al., 2004).

In the present study object-directed hand movements did not include the object, but were mimed actions of the movements. Thus, there is the possibility that the participants in the study treated the object-directed hand movements as communicative hand gestures. The verbal labels that we presented in the production condition, which was included in the training session, and participant comments during debriefing, however, suggested that participants did not misunderstand the meaning of these hand movements as communicative gestures, such as an instruction. We used stimuli that depicted object-directed hand movements so that any difference between the neural activity evoked by the two types of movements could not be attributed to the perception of the objects in one condition that were not present in the other. Whether the engagement of the STS and MNS is modulated by the perception of action-related objects should be explored in future experiments.

In this study, we found significant activation in the STS and the MNS during the observation, imitation and execution of communicative hand gestures and object-directed hand movements supporting the hypothesis that the STS and the MNS form a network of areas that are involved in action understanding. We found comparable activation in the MNS for communicative hand gestures and object-directed hand movements. This suggests that the human MNS responds to hand gestures that are utilized to convey social non-verbal communication that does not involve an object, as well as object-directed hand movements.

There have been reports that the human MNS is involved in communicative mouth actions and hand gestures (Carr et al., 2003; Leslie et al., 2004; Dapretto et al., 2006; Montgomery et al., 2003), but to our knowledge, this is the first study that directly compares activation for communicative and object-directed actions. This finding of similar activation in the mirror neuron system associated with communicative hand gestures and object-directed hand movements in the human is surprising in light of the mirror neuron studies in the monkey, which have reported that mirror neurons respond mainly to object-directed actions (Gallese et al., 1996; Ferrari et al., 2003). There is only one report (Ferrari et al., 2003) that found a significant mirror neuron response for an action that did not involve an object in the monkey. They found communicative mouth mirror neurons in the monkey that responded significantly during the observation and execution of communicative mouth actions like lip smacking. In contrast to the present study, only 15% of the mouth mirror neurons responded to communicative mouth actions as compared to 85% of mouth mirror neurons responding to object-directed mouth actions. The communication and language abilities of humans are greater than those of macaque monkeys, and our results suggest that the human MNS is more involved in understanding communicative actions than is the monkey MNS. Thus, our finding of similar activation in the MNS for communicative hand gestures and object-directed hand movements supports the hypothesis that the human mirror neuron system, as compared to that of the monkey, has evolved to play a greater role in understanding the communicative intent associated with gestures, in addition to its role in understanding the utilitarian intent associated with object-directed actions.

SUPPLEMENTARY DATA

Supplementary Data can be found at SCAN online.

Acknowledgments

We thank A.D. Engell, M.I. Gobbini, S. Kastner and M.A. Pinsk for valuable discussions, and R. Lipke, I. Neuberger and M. Roche for help with stimuli production and data analysis. This work was supported by Princeton University, a National Institute of Neurological Disorders and Stroke (NINDS) K award to N.I., a National Alliance for Autism Research (NAAR) pre-doctoral fellowship to K.J.M., and an American Association of University Women (AAUW) dissertation fellowship to K.J.M.

Footnotes

Conflict of Interest

None declared.

REFERENCES

- Allison T, Puce A, McCarthy G. Social perception from visual cues: role of the STS region. Trends in Cognitive Sciences. 2000;4:267–78. doi: 10.1016/s1364-6613(00)01501-1.

- Amodio DM, Frith CD. Meeting of minds: the medial frontal cortex and social cognition. Nature Reviews Neuroscience. 2006;7:268–77. doi: 10.1038/nrn1884.

- Aziz-Zadeh L, Koski L, Zaidel E, Mazziotta J, Iacoboni M. Lateralization of the human mirror neuron system. Journal of Neuroscience. 2006;26:2964–70. doi: 10.1523/JNEUROSCI.2921-05.2006.

- Bandura A. Social Learning Theory. Englewood Cliffs, NJ: Prentice Hall; 1977.

- Birn RM, Bandettini PA, Cox RW, Shaker R. Event-related fMRI of tasks involving brief motion. Human Brain Mapping. 1999;7:106–14. doi: 10.1002/(SICI)1097-0193(1999)7:2<106::AID-HBM4>3.0.CO;2-O.

- Buccino G, Vogt S, Ritzl A, et al. Neural circuits underlying imitation learning of hand actions: an event-related fMRI study. Neuron. 2004;42:323–34. doi: 10.1016/s0896-6273(04)00181-3.

- Bruce C, Desimone R, Gross CG. Visual properties of neurons in a polysensory area in superior temporal sulcus of the macaque. Journal of Neurophysiology. 1981;46:369–84. doi: 10.1152/jn.1981.46.2.369.

- Carr L, Iacoboni M, Dubeau MC, Mazziotta J, Lenzi GL. Neural mechanisms of empathy in humans: a relay from neural systems for imitation to limbic areas. Proceedings of the National Academy of Sciences. 2003;100:5497–502. doi: 10.1073/pnas.0935845100.

- Cox RW. AFNI: software for analysis and visualization of functional magnetic resonance neuroimages. Computers and Biomedical Research. 1996;29:162–73. doi: 10.1006/cbmr.1996.0014.

- Dapretto M, Davies MS, Pfeifer JH, et al. Understanding emotions in others: mirror neuron dysfunction in children with autism spectrum disorders. Nature Neuroscience. 2006;9:28–30. doi: 10.1038/nn1611.

- Decety J, Chaminade T, Grezes J, Meltzoff AN. A PET exploration of the neural mechanisms involved in reciprocal imitation. NeuroImage. 2002;15:265–72. doi: 10.1006/nimg.2001.0938.

- di Pellegrino G, Fadiga L, Fogassi L, Gallese V, Rizzolatti G. Understanding motor events: a neurophysiological study. Experimental Brain Research. 1992;91:176–80. doi: 10.1007/BF00230027.

- Doyon J, Song AW, Karni A, Lalonde F, Adams MM, Ungerleider LG. Experience-dependent changes in cerebellar contributions to motor sequence learning. Proceedings of the National Academy of Sciences. 2002;99:1017–22. doi: 10.1073/pnas.022615199.

- Ferrari PF, Gallese V, Rizzolatti G, Fogassi L. Mirror neurons responding to the observation of ingestive and communicative mouth actions in the monkey ventral premotor cortex. European Journal of Neuroscience. 2003;17:1703–14. doi: 10.1046/j.1460-9568.2003.02601.x.

- Fogassi L, Ferrari PF, Gesierich B, Rozzi S, Chersi F, Rizzolatti G. Parietal lobe: from action organization to intention understanding. Science. 2005;308:662–7. doi: 10.1126/science.1106138.

- Friston KJ, Jezzard P, Turner R. Analysis for functional MRI time-series. Human Brain Mapping. 1994;1:153–71.

- Frith U, Frith CD. Development and neurophysiology of mentalizing. Philosophical Transactions of the Royal Society of London. Series B, Biological Sciences. 2003;358:459–73. doi: 10.1098/rstb.2002.1218.

- Gallese V, Fadiga L, Fogassi L, Rizzolatti G. Action recognition in the premotor cortex. Brain. 1996;119:593–609. doi: 10.1093/brain/119.2.593.

- Gazzola V, Aziz-Zadeh L, Keysers C. Empathy and the somatotopic auditory mirror system in humans. Current Biology. 2006;16:1824–9. doi: 10.1016/j.cub.2006.07.072.

- Goldenberg G. Matching and imitation of hand and finger postures in patients with damage in the left or right hemisphere. Neuropsychologia. 1999;37:559–66. doi: 10.1016/s0028-3932(98)00111-0.

- Goldenberg G, Hermsdorfer J. Imitation, apraxia, and hemisphere dominance. In: Meltzoff AN, Prinz W, editors. The Imitative Mind: Development, Evolution, and Brain Bases. Cambridge: Cambridge University Press; 2002. pp. 331–46.

- Grossman ED, Blake R. Brain activity evoked by inverted and imagined biological motion. Vision Research. 2001;41:1475–82. doi: 10.1016/s0042-6989(00)00317-5.

- Haxby JV, Hoffman EA, Gobbini MI. The distributed human neural system for face perception. Trends in Cognitive Sciences. 2000;4:223–33. doi: 10.1016/s1364-6613(00)01482-0.

- Hobson P. Understanding persons: the role of affect. In: Baron-Cohen S, Tager-Flusberg H, Cohen DJ, editors. Understanding Other Minds: Perspectives from Autism. Oxford: Oxford University Press; 1993. pp. 204–27.

- Hoffman EA, Haxby JV. Distinct representations of eye gaze and identity in the distributed human neural system for face perception. Nature Neuroscience. 2000;3:80–4. doi: 10.1038/71152.

- Iacoboni M, Koski LM, Brass M, et al. Reafferent copies of imitated actions in the right superior temporal cortex. Proceedings of the National Academy of Sciences. 2001;98:13995–9. doi: 10.1073/pnas.241474598.

- Iacoboni M, Woods RP, Brass M, Bekkering H, Mazziotta JC, Rizzolatti G. Cortical mechanisms of human imitation. Science. 1999;286:2526–8. doi: 10.1126/science.286.5449.2526.

- Montgomery KJ, Gobbini MI, Haxby JV. Imitation, production and viewing of social communication: an fMRI study. Society for Neuroscience Abstracts. 2003 128.10.

- Ishai A, Ungerleider LG, Haxby JV. Distributed neural systems for the generation of visual images. Neuron. 2000;28:979–90. doi: 10.1016/s0896-6273(00)00168-9.

- Jackson PL, Meltzoff AN, Decety J. Neural circuits involved in imitation and perspective-taking. NeuroImage. 2006;31:429–39. doi: 10.1016/j.neuroimage.2005.11.026.

- Jellema T, Baker CI, Wicker B, Perrett DI. Neural representation for the perception of the intentionality of hand actions. Brain and Cognition. 2000;44:280–302. doi: 10.1006/brcg.2000.1231.

- Keysers C, Perrett DI. Demystifying social cognition: a Hebbian perspective. Trends in Cognitive Sciences. 2004;8:501–7. doi: 10.1016/j.tics.2004.09.005.

- Leslie KR, Johnson-Frey SH, Grafton ST. Functional imaging of face and hand imitation: towards a motor theory of empathy. NeuroImage. 2004;21:601–7. doi: 10.1016/j.neuroimage.2003.09.038.

- Muhlau M, Hermsdorfer J, Goldenberg G, et al. Left inferior parietal dominance in gesture imitation: an fMRI study. Neuropsychologia. 2005;43:1086–98. doi: 10.1016/j.neuropsychologia.2004.10.004.

- O'Craven KM, Kanwisher N. Mental imagery of faces and places activates corresponding stimulus-specific brain regions. Journal of Cognitive Neuroscience. 2000;12:1013–23. doi: 10.1162/08989290051137549.

- Parr LA, Waller BM, Fugate J. Emotional communication in primates: implications for neurobiology. Current Opinion in Neurobiology. 2005;15:716–20. doi: 10.1016/j.conb.2005.10.017.

- Perrett DI, Smith PA, Potter DD, et al. Visual cells in the temporal cortex sensitive to face view and gaze direction. Proceedings of the Royal Society of London Series B. 1985;223:293–317. doi: 10.1098/rspb.1985.0003.

- Petrides M, Pandya DN. Comparative architectonic analysis of the human and the macaque frontal cortex. In: Boller F, Grafman J, editors. Handbook of Neuropsychology. Elsevier Science B.V.; 1994. pp. 17–58.

- Prinz W. Why don't we perceive our brain states? European Journal of Cognitive Psychology. 1992;4:1–20.

- Puce A, Perrett D. Electrophysiology and brain imaging of biological motion. Philosophical Transactions of the Royal Society of London. Series B, Biological Sciences. 2003;358:435–45. doi: 10.1098/rstb.2002.1221.

- Rizzolatti G, Craighero L. The mirror-neuron system. Annual Review of Neuroscience. 2004;27:169–92. doi: 10.1146/annurev.neuro.27.070203.144230.

- Rizzolatti G, Fogassi L, Gallese V. Neurophysiological mechanisms underlying the understanding and imitation of action. Nature Reviews Neuroscience. 2001;2:661–70. doi: 10.1038/35090060.

- Talairach J, Tournoux P. Co-Planar Stereotaxic Atlas of the Human Brain. New York: Thieme Medical; 1988.

- Toni I, Krams M, Turner R, Passingham RE. The time course of changes during motor sequence learning: a whole-brain fMRI study. NeuroImage. 1998;8:50–61. doi: 10.1006/nimg.1998.0349.