Abstract

There is a growing consensus that implementation of evidence-based intervention and treatment models holds promise to improve the quality of services in child public service systems such as mental health, juvenile justice, and child welfare. Recent policy initiatives to integrate such research-based services into public service systems have created pressure to expand knowledge about implementation methods. Experimental strategies are needed to test multi-level models of implementation in real world contexts. In this article, the initial phase of a randomized trial that tests two methods of implementing Multidimensional Treatment Foster Care (an evidence-based intervention that crosses child public service systems) in 40 non-early adopting California counties is described. Results are presented that support the feasibility of using a randomized design to rigorously test contrasting implementation models and engaging system leaders to participate in the trial.

Keywords: Implementation, Randomized design, Community development team, Multidimensional treatment foster care

A number of rigorous randomized trials have shown that theoretically based, developmentally and culturally sensitive interventions can produce positive outcomes for children and adolescents with mental health and behavioral problems (e.g., Olds et al. 2003; Henggeler et al. 1998). As the number and variety of well-validated interventions increases, the pressure also has increased from a broad range of stakeholders (e.g., scientific and practice institutes, state legislatures, public interest legal challenges) to incorporate evidence-based practices (EBP) into publicly funded child welfare, mental health, and juvenile justice systems (NIMH 2001, 2004). Despite the increasing availability of, and demand for, well-validated interventions, it is estimated that 90% of public systems do not deliver treatments or services that are evidence-based (Rones and Hoagwood 2000). As was noted by the President’s New Freedom Commission on Mental Health (2003), these delays in the implementation of rigorously tested and effective programs in practice settings are simply too long. Though the integration of evidence-based practices into existing systems holds promise to improve the quality of care for children and their families, it is too often the case that treatments and services based on rigorous clinical research languish for years and are not integrated into routine practice (IOM 2001).

If only about 10% of child-serving public agencies are early adopters of evidence-based programs, a passive dissemination approach to implementation will almost assuredly lead to long delays in bringing such programs to practice. Thus, it is important to identify the contexts and circumstances in non-early adopting systems that could be modified to increase their motivation, willingness, and ability to adopt, implement, and sustain such models. The current article describes a theory-driven randomized trial designed to evaluate two methods of implementation of an evidence-based treatment into publicly funded child service systems in non-early adopting counties throughout the State of California. The overarching aim of this study is to add to the understanding of “what it takes” to engage, motivate, and support counties to make the decision to adopt, conduct, and sustain a research-based practice model, and to examine the role of specific factors thought to influence uptake, implementation, and sustainability.

The current article describes the results from the initial phase of the study, including an overview of the study design procedures for randomizing counties to study conditions and timeframes, statistical power considerations, methods for recruiting participation from county leadership, and reactions to randomization from county leadership. In addition, the feasibility of using a randomized design to study a multi-level implementation project is discussed in terms of acceptability and the ability to adapt the design to real world demands without compromising design integrity. Background information on the study is provided, including information on the conceptual model and how intervention effects are expected to operate on moderators and mediators. The logic behind using a randomized design is discussed and results on initial participation rates are provided.

Overview of the Multidimensional Treatment Foster Care Model

The EBP model offered to counties in the current study is Multidimensional Treatment Foster Care (MTFC). MTFC was developed in 1983 as a community-based alternative to incarceration and placing youth in residential/group care. Since then, randomized trials testing the efficacy of MTFC have been conducted with boys and girls referred for chronic delinquency, children and adolescents leaving the state mental hospital, and youth in foster care at risk for placement disruptions. Outcomes have demonstrated MTFC’s success in decreasing serious behavioral problems in youth, including rates of re-arrest and institutionalization (Chamberlain et al. 2007; Chamberlain and Reid 1991). The success of these studies led to MTFC’s designation as a cost-effective alternative to institutional and residential care (Aos et al. 1999). MTFC was selected as a model program by The Blueprints for Violence Prevention Programs (Office of Juvenile Justice and Delinquency Prevention [OJJDP]), as a National Exemplary Safe, Disciplined, and Drug-Free Schools model program (US Department of Education), and as an Exemplary I program for Strengthening America’s Families by the Center for Substance Abuse Prevention and OJJDP (Chamberlain 1998); it was also highlighted in two US Surgeon General’s reports (U.S. Department of Health and Human Services 2000a, b). Given the strong evidence base for MTFC and the need to provide community-based alternatives to group home placement in California that had been established in previous reports and studies (Marsenich 2002), this treatment model seemed to be a good fit for community need. In addition, a large-scale implementation of MTFC provided the opportunity to systematically examine the processes of adoption, implementation and sustainability at multiple levels: with system leaders, practitioners, youth, and families.

Overview of Study Conditions

Two methods of implementation are contrasted in the study: Standard implementation of MTFC that engages counties individually (IND) and Community Development Teams (CDT), where small groups of counties engage in peer-to-peer networking with technical assistance from local consultants. Participating counties in both conditions receive funds to train staff to implement MTFC and receive ongoing consultation for 1 year, which is sufficient time for them to become well-versed in using the MTFC model.

Standard services (IND)

Counties in this condition use the protocols developed by TFC Consultants, Inc. (TFCC), an agency established in 2002 to disseminate MTFC. TFCC assists communities in developing MTFC programs and in implementing the treatment model with adherence to key elements that have been shown to relate to positive outcomes in the research trials. TFCC has assisted over 65 sites using standard protocols for staff training, ongoing consultation, and site evaluation. Once sites meet performance criteria they are certified as MTFC providers. These same strategies are used in the IND condition in the current study.

Community Development Teams (CDT)

This condition involves the assembly of small groups of counties (from 4 to 8) who are all interested in dealing with a common issue or implementing a given practice or strategy. They are provided with support and technical assistance on local issues (e.g., funding). The CDT model was developed by the California Institute for Mental Health (CiMH) in 1993 to encourage county efforts through the provision of technical assistance and support on key issues and to encourage counties to collaborate on projects and programs that would improve their mental health services. The first CDTs focused on assisting counties with common issues such as dealing with unique challenges faced by rural counties. In 2003, the CDT model was used to encourage and assist 10 counties in implementing MTFC. This was the first time CDTs were used to promote adoption of an evidence-based model. Since then, CDTs have been used with several additional evidence-based approaches (e.g., The Incredible Years, Aggression Replacement Training). The CDTs involve regular group meetings (i.e., six in this study) and telephone contacts. CDTs are operated by a pair of local consultants who work with county teams in collaboration with the model developer (in this case, TFCC). In the current study, counties in the CDT condition receive the training and consultation from TFCC that is typical for the standard IND implementation services plus the CDT services. Both conditions are described in greater detail in the Method section, including a description of the seven core processes that are used in the CDT.

The Logic for Using a Randomized Design

In order to make fair comparisons across these two alternative models of implementation, a randomized design was used at the county level, with individual counties randomized to either the IND or CDT condition. To date, most studies in this field have examined a single implementation model without random assignment of large service units such as counties, health care providers, or agencies. In studies using non-randomized designs, it is very difficult to disentangle how community factors, including readiness, might relate to the level of implementation, the problems or barriers encountered, and program sustainability. In the current study, the design includes all counties in the State of California that send more than six youth per year to group home placements and that are not early adopters of MTFC. Counties sending fewer than six youth to group care were excluded because their need for MTFC placements was low and it was thought that implementing MTFC would not be feasible for them from a cost standpoint. Early adopters had all previously received CDT assistance in implementing MTFC and therefore could not legitimately be randomly assigned to the non-CDT condition. Also, these counties had already implemented MTFC (or attempted to implement it), so the study aims related to “what it takes to implement” were not relevant for them. Randomization of counties occurred at two levels: (1) to either the IND or CDT condition and (2) to a time frame for beginning the implementation process (the randomization process at each of these levels is described further in the Method section). This type of direct head-to-head testing of two alternative, realistic models is relatively novel in mental health services research as well as in many areas of physical health and public policy.
However, as the field moves toward the adoption of well-specified models, it is anticipated that similar studies will, out of necessity, follow this type of design to evaluate the relatively complex and embedded factors that drive successful program implementation. Namely, does a peer-to-peer support model with ongoing technical support (i.e., the CDT) increase the adoption, implementation, and sustainability of an empirically based program across a broad range of community contexts (e.g., rural and urban), and do these increases ultimately lead to detectable benefits for youth and families?

Conceptual Model Underlying the Study Design

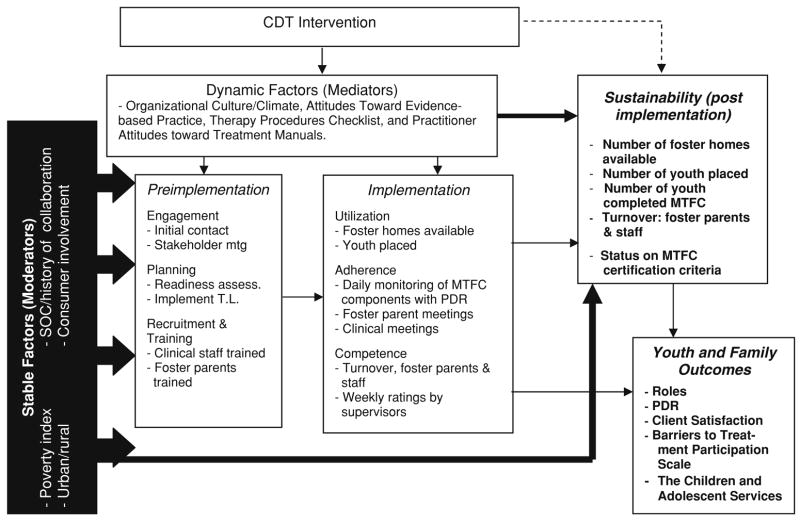

The current study is based on a conceptual model that is derived from both the empirical and theoretical literature that specifies hypothesized mechanisms of change (i.e., mediators and moderators) related to the CDT intervention. The model is shown in Fig. 1. It is hypothesized that participation in the CDT will have positive effects on the decision of counties to adopt, as well as on their ability to implement and sustain MTFC, to serve more youth, and ultimately to improve youth and family outcomes. The effect of the CDT is expected to operate, or be mediated, through a set of dynamic factors that affect key individuals at various organizational levels in the counties, including county system leaders, agency directors, and practitioners (including treatment staff and foster parents). The study examines the following dynamic factors hypothesized to mediate positive outcomes: organizational culture and climate (Glisson 1992), system and practitioner attitudes toward evidence-based practices (Aarons 2004), and adherence to competing treatment models or philosophies (Judge et al. 1999). These dynamic factors are expected to influence how well MTFC is accepted and integrated into implementing agencies. The mediation hypothesis is that these dynamic factors will change with exposure to the CDT, and that these changes will mediate the effects of the CDT on outcomes realized by the counties.

Fig. 1.

Conceptual model of the moderators and mediators of intervention

However, it is also hypothesized that, regardless of study condition (i.e., IND or CDT), counties with positive scores on these dynamic factors (as measured by a set of standardized instruments) will progress more rapidly and successfully through the stages of implementation than counties with low scores (e.g., poor climate, negative attitudes toward evidence-based interventions, prominent competing treatment philosophies). Successful counties will be defined according to how they progress through the stages of implementation (shown in Table 1 and described in the Method section).

Table 1.

Stages of MTFC implementation

| Stage | Description | Indicators |
|---|---|---|
| 1 | County considers adopting MTFC: Engagement | Contact logs of calls; Ratings of interest, motivation; Signed consent; System leader questionnaires (attitudes, organizational culture/climate, demographics) |
| 2 | Stakeholders meeting | Meeting attendance; Participant feedback; Staff impressions of engagement; Feasibility questionnaire |
| 3 | Readiness process | Log of readiness topics covered (funding, costs, staffing, timeline, foster parent recruitment, referral criteria & process); Rating of interagency coordination/cooperation |
| 4 | Implementation plan | Date of agency selection; Identification of implementation barriers; Written implementation plan |
| 5 | MTFC team hired and foster parents recruited | Number of foster parents recruited; Staff hiring dates; Practitioner questionnaires (attitudes, organizational culture/climate, therapy procedures); Practitioner demographics |
| 6 | Clinical staff training and foster parent certification | Training log; Trainer impressions; Participant feedback; State/county certification of foster parents |
| 7 | Foster parents trained and WebPDR installed | FP training log; WebPDR training completed; Foster parent video role play; Trainer/participant impressions |
| 8 | Youth placed | PDR data; Point and level charts; Foster parent rating of stress; School cards; Youth and family outcomes (discharge living status, successful/unsuccessful completions, barriers to service, satisfaction) |
| 9 | Model fidelity and adherence | Coding of FP meetings; Coding of clinical meetings; Site consultants’ weekly call data and ratings of competence & adherence; Site visit logs and reports |
| 10 | Site certified | Meets certification criteria; # foster homes available; Foster parent and staff turnover rates |

Rather than randomizing individual youth and families to different treatment conditions as is typical in treatment efficacy and effectiveness trials, randomization occurred at the county level in this implementation study. The primary outcome in this trial is the time it takes until a county begins placement of youth in foster care being delivered under an MTFC model. Because randomization occurs at the county level, the primary outcome will be analyzed at that level (i.e., the county) and therefore statistical power is a critical issue.

Detailed power calculations were carried out under a range of realistic conditions to test the effect of implementation condition on the time until an MTFC placement occurs. The test statistic was based on the hazard coefficient in the Cox proportional hazards model. This is a standard model for comparing the effects of covariates on a time-to-event outcome (Cox 1972), and it is designed to handle situations such as this one, where some counties are expected never to implement MTFC and therefore have right-censored implementation times. Through simulation studies, statistical power was calculated for various alternative rates of completion, taking into account variation across the three cohorts and the intraclass correlation induced by peer-to-peer networking in the CDT condition (no within-cohort correlation is expected in the IND condition). With the sample of 40 counties, there is sufficient statistical power to detect a moderate effect using standard survival analysis modeling.
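To illustrate how right-censored counties (those that never place a youth during follow-up) still contribute information to the hazard coefficient, the Python below is a minimal sketch of a Cox proportional hazards fit with a single binary covariate (CDT = 1, IND = 0), obtained by Newton-Raphson on the partial likelihood with the Breslow approximation for tied times. It is not the authors' analysis code: the function name `fit_cox_binary` and the example times are hypothetical, and a real analysis would use an established survival package and county-level covariates.

```python
import math
from typing import Sequence


def fit_cox_binary(times: Sequence[float], events: Sequence[int],
                   group: Sequence[int], max_iter: int = 50,
                   tol: float = 1e-10) -> float:
    """Newton-Raphson estimate of the log hazard ratio for one 0/1 covariate.

    times:  time to first MTFC placement, or to end of follow-up if none
    events: 1 if a placement was observed, 0 if the time is right-censored
    group:  1 for a CDT county, 0 for an IND county
    Tied event times use the Breslow approximation (each tied event is
    scored against the same full risk set).
    """
    beta = 0.0
    for _ in range(max_iter):
        score, information = 0.0, 0.0
        for t_i, d_i, x_i in zip(times, events, group):
            if not d_i:
                continue  # censored counties enter only through risk sets
            # Risk set: counties that have neither placed a youth nor been
            # censored before t_i.
            s0 = s1 = 0.0
            for t_j, x_j in zip(times, group):
                if t_j >= t_i:
                    weight = math.exp(beta * x_j)
                    s0 += weight
                    s1 += x_j * weight
            p = s1 / s0  # hazard-weighted share of CDT counties at risk
            score += x_i - p
            information += p * (1.0 - p)  # equals S2/S0 - p**2 because x is 0/1
        if information == 0.0:
            raise ValueError("no risk set mixes CDT and IND counties")
        step = score / information
        beta += step
        if abs(step) < tol:
            break
    return beta


# Hypothetical months to first placement (not study data).
times = [2, 4, 7, 15, 5, 9, 14, 18]
events = [1, 1, 1, 0, 1, 1, 0, 0]  # 0 = no placement by end of follow-up
group = [1, 1, 1, 1, 0, 0, 0, 0]   # first four CDT, last four IND
beta = fit_cox_binary(times, events, group)
print(f"log hazard ratio {beta:.2f}, hazard ratio {math.exp(beta):.2f}")
```

A positive estimate means CDT counties reached a first placement at a higher rate (that is, sooner) than IND counties; swapping the group labels simply flips its sign.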

Simple chi-square tests comparing the proportions of counties that place a foster child by 18 months are less powerful but easier to interpret. For example, if 60% of the CDT counties successfully place a youth in MTFC by 18 months (which matches previous experience), compared with 20% in the IND condition, and the intraclass correlation (ICC) in the CDT condition is minimal, the statistical power for a standard two-sided test is 80%. On the other hand, if the ICC is large (e.g., 0.3), the same statistical power is achieved only if 80% of the CDT counties complete placements by 18 months. Higher statistical power can be achieved by including county-level covariates in the proportional hazards model.
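The proportion comparison above can be roughly approximated with the textbook normal-approximation power formula for a two-sided test of two proportions, inflating the variance of the CDT arm by the design effect 1 + (m − 1) × ICC to reflect peer networking within CDT teams. This is a sketch, not the authors' simulation: it assumes 20 counties per condition, uses a hypothetical CDT team size of seven (within the 4 to 8 counties per team described earlier), and ignores cohort-to-cohort variation, so its figures will not match every number reported in the text.

```python
from statistics import NormalDist


def approx_power(p_cdt: float, p_ind: float, n_cdt: int, n_ind: int,
                 icc: float = 0.0, team_size: int = 1,
                 alpha: float = 0.05) -> float:
    """Normal-approximation power of a two-sided test of two proportions.

    Clustering among CDT counties is handled with the design effect
    1 + (team_size - 1) * icc; IND counties are treated as independent.
    """
    design_effect = 1.0 + (team_size - 1) * icc
    n_cdt_eff = n_cdt / design_effect  # effective number of independent CDT counties
    se = (p_cdt * (1 - p_cdt) / n_cdt_eff + p_ind * (1 - p_ind) / n_ind) ** 0.5
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)
    z = abs(p_cdt - p_ind) / se
    # Probability that the test statistic falls in either rejection region
    return NormalDist().cdf(z - z_crit) + NormalDist().cdf(-z - z_crit)


# Assumed 20 counties per condition; team_size=7 is a hypothetical CDT team size.
print(round(approx_power(0.6, 0.2, 20, 20), 2))
print(round(approx_power(0.6, 0.2, 20, 20, icc=0.3, team_size=7), 2))
```

With no clustering the first call gives roughly 0.81, close to the 80% quoted above; with an ICC of 0.3 and teams of seven the same 60% versus 20% contrast drops to roughly 0.50, which illustrates why a large ICC requires a larger CDT effect.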

This multi-level study also allows us to examine impacts on the agency and family levels (Brown et al. 2008). Because all families in the current study will be exposed to MTFC and no attempt was made to balance or randomly assign families to the IND or CDT condition, this naturally limits the conclusions that can be drawn about the impact on youth outcomes. Nevertheless, in successful counties (as measured by the stages of implementation) more youth are predicted to complete their MTFC programs and move to less-restrictive placements in aftercare than in the non-successful implementation counties. It is expected that compared to counties that require extensive time to implement MTFC, those that demonstrate success will have MTFC programs that will be sustained over time, have higher levels of consumer satisfaction with MTFC, lower rates of youth problem behavior at discharge, and will have parents and youth who experience relatively fewer barriers to receiving services.

As illustrated on the left side of Fig. 1, a set of background contextual factors have been identified and are hypothesized to be stable (i.e., relatively fixed) over time and to moderate study outcomes. These factors include the following: the county’s level of poverty, whether they operate services primarily within rural or urban settings, the county’s history of consumer involvement and advocacy, and the county’s previous history of cross-agency collaboration on children’s mental health issues (Fox et al. 1999).

For counties in both conditions, these fixed factors are expected to influence both the probability of successful MTFC adoption and their successful progression through the stages of implementation. It is hypothesized that, in the CDT condition, these fixed background contextual factors will have less influence on adoption, implementation, and sustainability than in the IND condition. This is because the CDT is expected to address the overall motivation and support for the counties to proceed as well as to deal with the unique barriers that they encounter. This is expected to be due both to the processes that occur within the CDTs and to the longstanding positive relationships between the counties and the California Institute for Mental Health (which is implementing the CDT). Thus, overall, the CDT is expected to improve adoption, implementation, and sustainability by reducing the salience of the barriers related to these fixed factors.

Method

Sample

California has 58 counties. Of these, 18 counties were excluded from the study at the outset based on our exclusion criteria: 9 had implemented MTFC previously (i.e., early adopters), 8 sent fewer than 6 youth per year to group or residential placement centers (the prevention of which is a key outcome targeted by MTFC), and 1 was involved in a class action lawsuit that precluded their participation. The 40 remaining counties were targeted for recruitment into the study.

Design and Timeframe

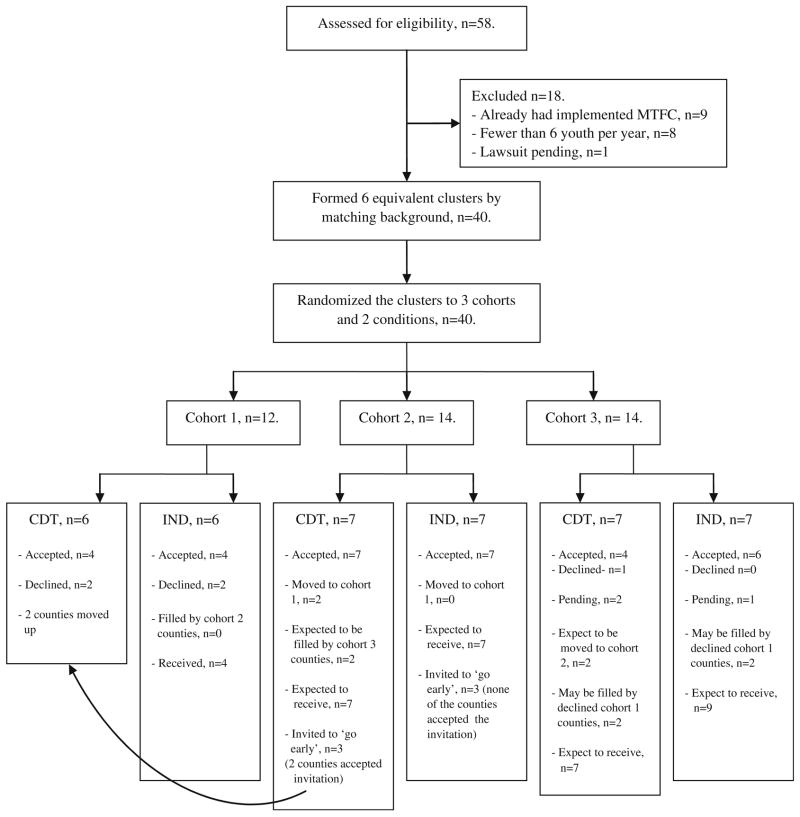

Counties were matched on background factors (e.g., population, rural/urban status, poverty, and Early and Periodic Screening, Diagnostic, and Treatment [EPSDT] utilization rates) and then divided into six equivalent clusters: two with six counties and four with seven counties. Each of these six comparable clusters was randomly assigned to one of three time cohorts (n = 12, 14, and 14, respectively), which dictated when training toward implementation would be offered. Random assignment of counties to three timeframes made it possible to manage capacity (i.e., it was logistically impossible to implement in all counties at the same time). Within cohorts, counties were then randomized to the IND or CDT condition. Enrollment for each cohort has been scheduled at yearly intervals at the cohort (rather than county) level, beginning in January of 2007, 2008, and 2009. This design thus allows for three replications of the IND and CDT interventions. In addition, counties assigned to later cohorts serve as a wait-list condition, and because the design relies on replicates of counties in the three cohorts, cohort differences in outcomes could point to training differences across time. Thus, there is a built-in check against drift in training: the time it takes counties to place foster youth can be compared across the three cohorts. The CONSORT diagram for the study design, which describes enrollment, consent, and declination, is shown in Fig. 2.
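The two-level randomization described above can be sketched as follows. The sketch assumes the six matched clusters have already been formed (the matching step itself is not modeled), deals the shuffled clusters out two per cohort, and then splits each cohort's counties at random between IND and CDT. The function name, county identifiers, and seed are hypothetical. Because cluster-to-cohort assignment is fully random here, a cohort can end up with 12, 13, or 14 counties; the study's realized cohort sizes were 12, 14, and 14.

```python
import random
from typing import Dict, List, Optional, Tuple


def randomize_counties(clusters: List[List[str]], n_cohorts: int = 3,
                       seed: Optional[int] = None) -> Dict[str, Tuple[int, str]]:
    """Two-level randomization: clusters to cohorts, then counties to conditions.

    Returns {county: (cohort number, "IND" or "CDT")}.
    """
    if len(clusters) % n_cohorts:
        raise ValueError("clusters must divide evenly among cohorts")
    rng = random.Random(seed)
    shuffled = list(clusters)
    rng.shuffle(shuffled)  # level 1: random order of the matched clusters
    per_cohort = len(shuffled) // n_cohorts
    plan: Dict[str, Tuple[int, str]] = {}
    for cohort in range(n_cohorts):
        members = [county
                   for cluster in shuffled[cohort * per_cohort:(cohort + 1) * per_cohort]
                   for county in cluster]
        rng.shuffle(members)  # level 2: random split within the cohort
        half = len(members) // 2
        for rank, county in enumerate(members):
            plan[county] = (cohort + 1, "CDT" if rank < half else "IND")
    return plan


# Hypothetical matched clusters with the sizes given in the text (2 x 6, 4 x 7).
sizes = [6, 6, 7, 7, 7, 7]
ids = iter(f"county_{k:02d}" for k in range(1, sum(sizes) + 1))
clusters = [[next(ids) for _ in range(size)] for size in sizes]
plan = randomize_counties(clusters, seed=2007)
```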

Fig. 2.

Consort diagram of study participation by cohort and condition

Design Adaptation

The sequencing of cohorts allowed for the management of study resources and training needs while providing counties time to arrange for their commitments. However, some counties were unable or unwilling to participate at their randomly chosen time. To address this issue, the design protocol was adapted to allow for an additional step to help maximize the efficiency of study resources while remaining sensitive to real-world county limitations. That is, procedures were created whereby the “vacancy” left by such a county was filled by a county in the succeeding cohort that was assigned to the same IND or CDT condition as the vacated slot. To fill these vacancies while maintaining the original intervention assignment, counties within each condition in Cohorts 2 and 3 were randomly permuted to determine the order in which they would be offered the opportunity to move up in timing (e.g., IND and CDT counties in Cohort 2 were randomly ordered in 2007, Cohort 1’s timeframe). This permutation process was conducted by the research staff and the outcome of the process was not revealed to the field staff until counties reported their decision to accept or decline the opportunity to “go early.” This precaution was taken to ensure that field staff did not influence a county’s decision to change their timing cohort. A vacancy created by non-participation of a Cohort 1 county then was offered to the next county in Cohort 2 that was in the same intervention condition. This provided the Cohort 2 counties with the opportunity to “go early” if there were vacancies, but did not affect intervention assignment. 
This procedure allowed counties to have some flexibility in their randomly assigned time slot without distorting the balance between the IND and CDT groups, and it allowed the research project to maximize the number of counties in the implementation phase of the study at any one time (i.e., to capitalize on study resources and to limit the fiscal constraints inherent in externally funded, time-limited research). Thus, because the counties’ decisions to modify their randomly assigned time to begin the implementation process did not depend on whether they were assigned to IND or CDT, this adaptation to the design continues to maintain equivalent comparison groups.
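The vacancy procedure can be sketched in two steps: the next cohort's counties are randomly ordered separately within IND and within CDT, and a vacated slot is offered down the queue for the same condition until a county accepts. County names and function names are hypothetical, and removing a county from the queue once it has been offered the slot is an assumption of this sketch rather than a rule stated in the text.

```python
import random
from typing import Callable, Dict, List, Optional


def permute_within_condition(next_cohort: Dict[str, str],
                             seed: Optional[int] = None) -> Dict[str, List[str]]:
    """Randomly order next-cohort counties separately within each condition.

    next_cohort maps county -> assigned condition ("IND" or "CDT").
    """
    rng = random.Random(seed)
    queues: Dict[str, List[str]] = {}
    for county, condition in sorted(next_cohort.items()):
        queues.setdefault(condition, []).append(county)
    for queue in queues.values():
        rng.shuffle(queue)
    return queues


def fill_vacancy(condition: str, queues: Dict[str, List[str]],
                 accepts: Callable[[str], bool]) -> Optional[str]:
    """Offer a vacated slot to same-condition counties in permuted order.

    Returns the county that agrees to "go early", or None. Counties are
    removed from the queue once offered (an assumption of this sketch).
    """
    queue = queues.get(condition, [])
    while queue:
        county = queue.pop(0)
        if accepts(county):
            return county  # condition assignment is unchanged; only timing moves
    return None


# Hypothetical Cohort 2 counties; a Cohort 1 CDT county has withdrawn.
cohort_2 = {"county_A": "IND", "county_B": "IND", "county_C": "CDT", "county_D": "CDT"}
queues = permute_within_condition(cohort_2, seed=1)
moved = fill_vacancy("CDT", queues, accepts=lambda county: True)
```

Because the queues are keyed by condition, a county that moves up keeps its original IND or CDT assignment, so only its timing changes. Holding the permuted queues with research staff until a county reports its decision mirrors the precaution of keeping field staff unaware of the order.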

Implementation Conditions

Individualized (IND) condition

The standard condition includes participation in the regular MTFC clinical training and consultation package, which is designed to reflect the “usual” way in which organizations adopt and implement an evidence-based practice. Typically, interested and motivated representatives from those organizations work with the model developer (or the organization responsible for disseminating the practice) to receive the consultation services package required to implement the practice. In the case of MTFC, this includes the following ten steps:

1. A county (or other entity) contacts TFCC and is sent information on the MTFC model and related research.

2. A stakeholder meeting is conducted at the local site.

3. The site participates in a readiness process that includes preparing for funding, staffing, referrals, and foster parent recruitment.

4. An implementation plan and timeline are developed, and potential barriers are identified.

5. The MTFC treatment team is hired and foster parent recruitment begins.

6. The treatment team is trained in Oregon and the foster parents are certified by their state or county.

7. Foster parents are trained on site and the web-based quality assurance system is installed at the site.

8. The first youth are placed.

9. The site participates in ongoing consultation to achieve model fidelity and adherence.

10. The site meets performance standards and is certified to provide MTFC services.

During ongoing consultation (stage 9), which typically lasts 12 months, weekly videotapes of foster parent and clinical meetings are sent from the site and are reviewed prior to weekly telephone consultation calls with the TFCC site consultant; and TFCC conducts two 3-day, on-site booster trainings.

Community Development Team

In the CDT condition, the implementation process described above is augmented by seven core processes designed to facilitate the successful adoption, implementation, and sustainability of the MTFC model. The core processes (detailed in Sosna and Marsenich 2006) are designed to promote motivation, engagement, commitment, persistence, and competence that lead to the development of organizational structures, policies, and procedures that support model-adherent and sustainable programs. The core processes include the following:

The need-benefit analysis involves sharing empirical information about the relative benefits of the evidence-based practice that is intended to help overcome risk hesitancy and promote the adoption and ongoing commitment to implementing with adherence.

The planning process is designed to assist sites in overcoming implementation barriers and to promote execution of a model-adherent version of the practice.

Monitoring and support motivate persistence with implementation of the site’s program and provide technical assistance in addressing emergent barriers.

Fidelity focus emphasizes the importance of fidelity and frames programmatic and administrative recommendations in the context of promoting fidelity.

Technical investigation and problem solving clarifies actual (versus perceived) implementation barriers, and develops potential solutions to actual barriers.

Procedural skills development enhances the organizational, management, and personnel skills needed to develop and execute the implementation plan.

Peer-to-Peer exchange and support promotes engagement, commitment, and learning by a group of sites, which encourages cross-fertilization of ideas.

Recruitment

County leaders from the mental health, child welfare, and juvenile justice systems were recruited to participate because studies have shown that successful incorporation of research-based interventions requires changes in existing policies, procedures, and practices (Olds et al. 2003; Schoenwald et al. 2004). Upon notification of study funding, the CiMH investigators emailed and then sent a letter to the system leaders in all eligible counties, notifying them that the grant was underway and inviting them to participate in the study. One week later, a second email and letter were sent to these leaders by the study’s Principal Investigator to notify them of the cohort and condition to which they had been randomly assigned.

Recruitment of system leaders from all 40 counties began in January 2007. Because of the wait-list nature of the design, an attempt was made to recruit all 40 counties at baseline. Recruitment of county leaders was conducted jointly by the research project recruiter and CiMH and was identical for all cohorts. If the CiMH investigators had a working relationship with the system leaders in a given county, they made a “pre-call” to inform their contact that the research recruiter would be calling them. In addition, a letter was sent to all system leaders in all counties telling them to expect a call from the recruiter. During the recruitment call, the project recruiter followed a standardized recruitment script inviting the system leader to participate. At this time it was explained that agreement to participate in the study did not indicate agreement to implement MTFC; rather, agreement indicated a willingness to learn more about the MTFC model and to complete a baseline assessment. Those system leaders who agreed to participate were asked to sign the IRB-approved consent form and fax the signature to the project recruiter.

Measures

Stages of implementation

A measure of the 10 stages of implementation of MTFC was developed to track the progress of counties (shown in Table 1). As illustrated there, multiple indicators are used to measure both progression through each stage and the quality of participation of the individuals involved at each stage. Stages 1–4 track the site’s decision to adopt or not adopt MTFC, its readiness, and the adequacy of its planning to implement. In stages 5–7, recruitment and training of the MTFC treatment staff (i.e., program supervisor, family therapist, individual therapist, foster parent trainer/recruiter, and behavioral skills trainer) and foster parents are measured. Stages 8–9 focus on fidelity monitoring and how sites use those data to improve adherence. Stage 10 evaluates the county’s functioning in the domains required for certification as an independent MTFC program.
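One way to record progress through the ten stages in Table 1, and to turn it into the right-censored time-to-placement outcome that serves as the primary outcome, is sketched below. The class, method, and field names are hypothetical, as is the choice of clock start (a per-county start date, for example the cohort's start); the sketch also does not enforce that stages are completed in order.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import Dict, Tuple

PLACEMENT_STAGE = 8  # Table 1, stage 8: "Youth placed"


@dataclass
class CountyProgress:
    county: str
    start: date  # hypothetical clock start, e.g., the cohort's start date
    stage_dates: Dict[int, date] = field(default_factory=dict)

    def record(self, stage: int, completed: date) -> None:
        """Store the date on which a stage was reached."""
        if not 1 <= stage <= 10:
            raise ValueError("Table 1 defines stages 1 to 10")
        self.stage_dates[stage] = completed

    def highest_stage(self) -> int:
        """Highest stage reached so far (0 if none recorded)."""
        return max(self.stage_dates, default=0)

    def time_to_placement(self, end_of_follow_up: date) -> Tuple[int, int]:
        """(days, event): event is 1 if stage 8 was reached, else 0 (right-censored)."""
        placed = self.stage_dates.get(PLACEMENT_STAGE)
        if placed is not None:
            return (placed - self.start).days, 1
        return (end_of_follow_up - self.start).days, 0


# Hypothetical counties: one reaches placement, one stalls at stage 3.
a = CountyProgress("county_A", date(2007, 1, 1))
for stage, day in [(1, date(2007, 1, 20)), (4, date(2007, 6, 1)), (8, date(2008, 2, 15))]:
    a.record(stage, day)
b = CountyProgress("county_B", date(2007, 1, 1))
b.record(3, date(2007, 9, 1))
end = date(2008, 12, 31)
```

The resulting (days, event) pairs are the form of data a proportional hazards model takes, with counties that never reach stage 8 entering as censored observations.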

Contact logs

As noted previously, initial contacts were made with system leaders to recruit them into the project and each of these contacts was logged. All subsequent correspondence between system leaders and study staff (including research staff, CiMH, and TFCC staff) was tracked and maintained in an electronic contact log developed for the study. The contact log was completed by the study staff member who was involved in each of the communications and included: (a) the county with whom contact was made; (b) type of contact (i.e., telephone, email, in person, letter, fax); and (c) nature of the contact (i.e., related to recruitment, assessment, timing issues, implementation). The written records of these contacts were then reviewed by an independent coder, not otherwise involved in the study, who verified the accuracy of each coding decision made by the staff member who entered the contact.
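The contact log fields (a) to (c), and the recruitment statistics reported in the Results (days and number of contacts from the first recruitment call to signed consent), can be sketched as below. The record layout and category labels follow the text, but the rules that the clock starts at the first logged recruitment telephone call and that every recruitment-coded contact up to the consent date is counted are assumptions of this sketch, and the data are invented for illustration.

```python
from dataclasses import dataclass
from datetime import date
from statistics import mean, median
from typing import Dict, List

CONTACT_TYPES = {"telephone", "email", "in person", "letter", "fax"}
CONTACT_NATURES = {"recruitment", "assessment", "timing issues", "implementation"}


@dataclass(frozen=True)
class Contact:
    county: str        # (a) county contacted
    when: date
    contact_type: str  # (b) telephone, email, in person, letter, or fax
    nature: str        # (c) recruitment, assessment, timing issues, implementation

    def __post_init__(self) -> None:
        if self.contact_type not in CONTACT_TYPES:
            raise ValueError(f"unknown contact type: {self.contact_type}")
        if self.nature not in CONTACT_NATURES:
            raise ValueError(f"unknown contact nature: {self.nature}")


def recruitment_summary(log: List[Contact],
                        consent_dates: Dict[str, date]) -> Dict[str, float]:
    """Mean/median days and mean contacts from first recruitment call to consent."""
    days, counts = [], []
    for county, consented in consent_dates.items():
        recruitment = [c for c in log
                       if c.county == county and c.nature == "recruitment"
                       and c.when <= consented]
        calls = [c.when for c in recruitment if c.contact_type == "telephone"]
        if not calls:
            continue  # no logged recruitment call, so the clock never started
        days.append((consented - min(calls)).days)
        counts.append(len(recruitment))
    return {"mean_days": mean(days), "median_days": median(days),
            "mean_contacts": mean(counts)}


# Invented example log for two consenting counties.
log = [
    Contact("county_A", date(2007, 1, 10), "telephone", "recruitment"),
    Contact("county_A", date(2007, 1, 12), "email", "recruitment"),
    Contact("county_A", date(2007, 1, 20), "telephone", "recruitment"),
    Contact("county_B", date(2007, 1, 15), "telephone", "recruitment"),
    Contact("county_B", date(2007, 3, 1), "email", "assessment"),
]
consent = {"county_A": date(2007, 1, 20), "county_B": date(2007, 1, 15)}
summary = recruitment_summary(log, consent)
```

In this example county_A consents 10 days after its first call (three recruitment contacts) and county_B consents on the day of its first call (one contact), so the summary reports a mean and median of 5 days and a mean of 2 contacts. Note that `statistics.mean` raises an error if no consenting county has a logged call.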

Results

As noted previously, the goal of the current article is to provide a description of the design and recruitment strategies utilized to engage counties in a large scale implementation study for “non-early adopters” of an evidence-based practice. Thus, the results presented relate to the efforts made to accomplish these “set-up” goals: (a) reaction of counties to the random assignment to cohort and condition; (b) the degree of success in recruiting during Year 1 of the study including attempts to recruit all eligible counties in all three cohorts; and (c) assessment of whether any changes in the design thus far would affect our ability to assess impact. This includes attempts to engage counties in Cohort 1 to consider adopting MTFC and attempts to fill vacancies in Cohort 1 with Cohort 2 counties who were given an opportunity to “go early.”

Reaction of Counties to Random Assignment

Several steps were taken prior to the initiation of the study to notify and inform counties that an attempt was being made to obtain funding to conduct a study of implementation that would involve random assignment of counties to timeframes and differing methods of implementation/transport of MTFC. These included a number of presentations about the research on MTFC at state- and county-level conferences by the study P.I. during the 5 years that preceded the study. In addition, the P.I. from CiMH had longstanding collaborative relationships with many of the county leaders. At the time the study was submitted for peer review, letters of support were obtained from 38 of the 40 eligible counties. Thus, by the time that the study was funded, the concept of random assignment was familiar to most individuals in county leadership positions.

As discussed, there were two levels of random assignment: to condition (IND or CDT) and to cohort (the timeframe in which to begin implementation). None of the 40 counties targeted for recruitment objected to or questioned its assignment to condition, and assignment to IND or CDT did not appear to cause concern or problems for any county. Cohort assignment was more controversial. At the time of recruitment, 9 (23%) of the 40 counties expressed problems or concerns with their assigned timeframe, and one of these counties declined to participate altogether. In addition, five of the Cohort 1 counties that consented to participate have delayed their implementation start-up activities (although they remain within the Cohort 1 timeframe). Thus, a total of 14 (35%) counties have had problems with their assigned timeframe, suggesting that the scheduled timeline may be difficult to maintain. The IND and CDT conditions did not differ on this variable.
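The percentages above can be checked with a few lines of arithmetic. This sketch assumes, as the wording “in addition” indicates, that the five delayed Cohort 1 counties are distinct from the nine that raised concerns at recruitment. It uses `decimal` with half-up rounding because Python’s built-in `round()` rounds halves to even (so `round(22.5)` returns 22), whereas 9/40 = 22.5% is reported as 23%.

```python
from decimal import ROUND_HALF_UP, Decimal


def pct(count: int, total: int) -> Decimal:
    """Whole-number percentage with halves rounded up (22.5 -> 23)."""
    value = Decimal(count) * 100 / Decimal(total)
    return value.quantize(Decimal("1"), rounding=ROUND_HALF_UP)


ELIGIBLE = 40
concerns_at_recruitment = 9   # includes the one county that declined
delayed_start_up = 5          # consenting Cohort 1 counties, assumed distinct from the 9
total_timing_problems = concerns_at_recruitment + delayed_start_up

print(pct(concerns_at_recruitment, ELIGIBLE))                 # 23
print(total_timing_problems, pct(total_timing_problems, ELIGIBLE))  # 14 35
```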

Recruitment of Counties to Participate in the Overall Study

At the end of the first year of the study, the recruitment status of each county was defined as: (a) recruited; (b) declined; or (c) pending. During the first year, 32 of the 40 eligible counties (80%) were recruited to participate in the study. Recruited counties had at least one system leader from child welfare, juvenile probation, and/or mental health who agreed to consider adopting MTFC and consented to participate in the research study (number of consenting leaders per county: M = 4.72; range, 3–9). For consenting counties, an average of 19.88 days (range, 0–79; median = 10) elapsed between the first recruitment call and the signing of a consent to participate by at least one county leader. This length of time did not differ between study conditions (p = ns). An average of 5.41 contacts was required to obtain this written consent (range, 1–15). Most of these contacts were made by phone (78%) or email (16%). Of note, eight counties (20% of the 40 eligible counties) consented at the time of the first contact. In an additional three counties, participation is still pending; they have neither consented nor declined to participate. For these pending counties, an average of four recruitment contacts has been made (range, 3–5). Thus far, five counties have declined to participate, four within Cohort 1 (IND = 2; CDT = 2) and one within Cohort 3 (CDT). Declining counties had an average of 13.8 contacts (range, 9–22). Reasons for declining included staffing shortages, new leadership, and system reorganization. One county noted concern about the cost of the MTFC program. The declining and pending counties will be re-contacted during 2008 to see whether their circumstances have changed to permit their participation.
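The recruitment figures above form a complete partition of the 40 eligible counties, which the short tally below makes explicit. All counts come from the text; the only inference is about denominators: the eight first-contact consents correspond to 20% only when all 40 eligible counties are the base (they are 25% of the 32 recruited counties).

```python
ELIGIBLE_COUNTIES = 40

# Recruitment status at the end of Year 1, as reported in the text.
status = {"recruited": 32, "declined": 5, "pending": 3}

# Each eligible county falls into exactly one status category.
assert sum(status.values()) == ELIGIBLE_COUNTIES

shares = {name: 100 * n / ELIGIBLE_COUNTIES for name, n in status.items()}
print(shares)  # {'recruited': 80.0, 'declined': 12.5, 'pending': 7.5}

# Counties that consented at the first contact, under two possible denominators.
first_contact = 8
share_of_eligible = 100 * first_contact / ELIGIBLE_COUNTIES     # 20.0 (the reported figure)
share_of_recruited = 100 * first_contact / status["recruited"]  # 25.0
```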

Although the counties’ decisions to participate in the study did not differ significantly by cohort (χ²(2) = 5.48, p = ns), an interesting pattern emerged in consent status at the end of Year 1. All counties in Cohort 2 agreed to participate (n = 14; 100%). The smallest percentage agreed in Cohort 1 (n = 8; 67%), where every county not participating declined. Cohort 3 showed an intermediate level of recruitment (n = 10; 71%): of the four counties not recruited, three remain pending (i.e., no firm declinations) and one declined. Details of these cohort differences are illustrated in the CONSORT diagram (Fig. 2).
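The cohort comparison above can be reproduced as a Pearson chi-square test on a 3 × 2 table of cohort by consent status. This sketch rests on one assumption not stated in the text: that pending and declining counties were pooled as “not recruited.” With that coding the recomputed statistic matches the reported χ²(2) = 5.48, and because the chi-square survival function for two degrees of freedom is exactly exp(-x/2), the p value (about .065) can be computed without a statistics library. Several expected counts fall below 5 (2.4 and 2.8), so the large-sample approximation is rough here and an exact test would be a sensible check.

```python
import math

# Consent status at the end of Year 1 by originally assigned cohort (from the text).
# Columns: recruited, not recruited (declined or pending; pooling is an assumption).
observed = [
    [8, 4],   # Cohort 1: 8 of 12 recruited, 4 declined
    [14, 0],  # Cohort 2: all 14 recruited
    [10, 4],  # Cohort 3: 10 of 14 recruited, 3 pending + 1 declined
]


def pearson_chi_square(table):
    """Pearson chi-square statistic and degrees of freedom for an r x c table."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, obs in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            stat += (obs - expected) ** 2 / expected
    df = (len(table) - 1) * (len(table[0]) - 1)
    return stat, df


stat, df = pearson_chi_square(observed)
# For df = 2 the chi-square upper-tail probability is exactly exp(-x / 2).
p_value = math.exp(-stat / 2) if df == 2 else float("nan")
print(f"chi2({df}) = {stat:.2f}, p = {p_value:.3f}")  # chi2(2) = 5.48, p = 0.065
```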

Filling Vacancies

As noted previously, four of the counties in Cohort 1, evenly distributed between the IND and CDT conditions, declined to participate in the study. Thus, as described in the Method section, counties in Cohort 2 were randomly ordered within their study condition and invited to “go early.” Three counties in each of the IND and CDT conditions (six total) were contacted and asked whether they would like to move to the Cohort 1 timeline. No county in the IND condition chose to move up its implementation timeline. Within the CDT condition, both vacancies were filled with Cohort 2 counties that chose to “go early.”
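The “go early” procedure can be read as a simple algorithm: randomly order the Cohort 2 counties within a condition, then invite them in that order until the vacancies are filled. The sketch below is one plausible sequential reading under stated assumptions. The `max_invites` cap (mirroring the three counties contacted per condition), the county labels, the pool size of seven, and which invitee answered how are all hypothetical; only the counts (three contacted per condition, none moved in IND, two moved in CDT) come from the text.

```python
import random


def fill_vacancies(candidates, vacancies, accepts, max_invites=None, seed=None):
    """Invite same-condition candidates in random order until slots are filled.

    candidates:  labels of Cohort 2 counties in one study condition
    vacancies:   open Cohort 1 slots in that condition
    accepts:     callable(county) -> bool standing in for the county's decision
    max_invites: optional cap on how many counties are contacted (hypothetical)
    Returns (invited, moved_up).
    """
    order = list(candidates)
    random.Random(seed).shuffle(order)  # random ordering within the condition
    invited, moved_up = [], []
    for county in order:
        if len(moved_up) >= vacancies:
            break
        if max_invites is not None and len(invited) >= max_invites:
            break
        invited.append(county)
        if accepts(county):
            moved_up.append(county)
    return invited, moved_up


# Made-up pools; which county answered how is invented, only the counts match the text.
ind_pool = [f"IND county {i}" for i in range(1, 8)]
cdt_pool = [f"CDT county {i}" for i in range(1, 8)]

ind_invited, ind_moved = fill_vacancies(
    ind_pool, 2, accepts=lambda county: False, max_invites=3, seed=7)

cdt_answers = iter([False, True, True])  # hypothetical: first invitee declines
cdt_invited, cdt_moved = fill_vacancies(
    cdt_pool, 2, accepts=lambda county: next(cdt_answers), max_invites=3, seed=7)

print(len(ind_invited), len(ind_moved), len(cdt_invited), len(cdt_moved))  # 3 0 3 2
```

Randomizing the invitation order within each condition keeps the choice of which county is offered an early start out of the research team’s hands, which preserves the randomized character of the cohort assignment.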

Engagement of Cohort 1 Counties to Consider Implementing MTFC

For counties that were either randomly assigned or moved up to Cohort 1, efforts to move toward implementation of MTFC are currently under way. At the time of consent, the study recruiter rated the consenting county leaders’ overall interest in participating in the project, as well as their overall enthusiasm about implementing MTFC in their communities. These ratings were based on the recruiter’s impressions of the county leaders over the course of the recruitment process (e.g., comments made and questions asked by each county leader). Each impression was rated on a scale of 1–10, with 10 indicating the highest degree of interest or enthusiasm. At the time of consent, the average rating of county leaders’ interest in participating in the project was 7.71 (range, 5–10), and the average rating of enthusiasm about implementing MTFC was 6.70 (range, 5–10). Neither interest nor enthusiasm ratings differed between conditions.

Discussion

The initial results described here attest to the feasibility of implementing a large-scale randomized trial involving up to 40 counties that were not early adopters of MTFC. The focus on non-early adopters differentiates the participants in this study from typical consumers of research-based interventions, who tend to be highly motivated and often well resourced. Implementation efforts that focus only on such early adopters could neglect the settings where the intervention is needed most, a conundrum that Rogers (1995) labeled the innovation/needs paradox.

It is noteworthy that none of the county leaders expressed disappointment about randomization to either implementation condition (IND or CDT). However, there were numerous questions and concerns about when the intervention would take place. The current article has described the initial procedures and methods that were adapted to allow for some changes in timing without compromising the study design. An early version of this type of randomized trial design, called a dynamic wait-listed design, has also been used to evaluate a suicide prevention program with schools as the unit of randomization (Brown et al. 2006). In that trial, the primary endpoint was the rate of referral of youth for life-threatening behavior (including suicide). Thirty-two eligible schools were randomly assigned to different times for initiating a training protocol for staff at each school. Five equivalent cohorts of schools were formed by stratifying on background factors (e.g., middle vs. high school, history of high or low referral rates). Just as in the current study, the multiple cohorts allowed a comparison of equivalent trained and not-yet-trained schools in each period. This increases statistical power over the traditional wait-listed design, and the use of multiple cohorts simplifies the logistical demands of training, just as it does in the current study. Most importantly, the design allows for a true randomized trial that provides a rigorous evaluation while also satisfying the school district’s requirement that all schools receive training by the end of the study.
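The core mechanics of a dynamic wait-listed design (stratified random assignment of units to start-time cohorts, followed by a trained versus not-yet-trained split in each period) can be sketched as follows. This is a hypothetical illustration, not the procedure of Brown et al. (2006): the strata, school labels, seed, and the round-robin dealing used to balance cohorts are all assumptions, and the convention that cohort k (0-based) starts training in period k + 1 is chosen for the example. The same logic would apply to counties and three cohorts in the current study.

```python
import random
from collections import Counter


def assign_cohorts(units_by_stratum, n_cohorts, seed=None):
    """Stratified random assignment of units (e.g., schools) to start-time cohorts.

    Units are shuffled within each stratum and dealt to cohorts in rotation.
    The rotation continues across strata, so cohort sizes differ by at most one
    and each stratum is spread as evenly as possible across cohorts.
    """
    rng = random.Random(seed)
    assignment = {}
    position = 0
    for stratum in sorted(units_by_stratum):
        units = list(units_by_stratum[stratum])
        rng.shuffle(units)  # random order within the stratum
        for unit in units:
            assignment[unit] = position % n_cohorts
            position += 1
    return assignment


def trained_split(assignment, period):
    """Cohort k (0-based) starts training in period k + 1.

    Returns (trained, not_yet_trained) unit lists for the given period.
    """
    trained = sorted(u for u, k in assignment.items() if k < period)
    waiting = sorted(u for u, k in assignment.items() if k >= period)
    return trained, waiting


# Hypothetical strata: school level x prior referral history, 8 schools each (32 total).
strata = {
    (level, history): [f"{level}-{history}-{i}" for i in range(1, 9)]
    for level in ("middle", "high")
    for history in ("high-referral", "low-referral")
}
assignment = assign_cohorts(strata, n_cohorts=5, seed=2006)
cohort_sizes = sorted(Counter(assignment.values()).values())  # [6, 6, 6, 7, 7]
trained, waiting = trained_split(assignment, period=2)        # 14 trained, 18 waiting
```

In every period before the last, trained and not-yet-trained units drawn from balanced cohorts can be compared; by the final period every unit has been trained, which is how the design meets a requirement that all sites eventually receive the intervention.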

Given the good initial level of participation in this study (i.e., 80% of counties consented to participate), early indications suggest that this design can be maintained across multiple cohorts. Further, the initial experience suggests that randomized designs of this type are feasible and can provide high-quality information about the effectiveness of specific strategies for improving implementation. For example, because counties were randomized to conditions, it will be possible to evaluate whether participation in the CDT offsets challenging community characteristics and/or improves factors such as the motivation to implement, system leaders’ and practitioners’ attitudes toward implementing an evidence-based practice, and the sustainability of that practice over time. If participation in the CDT increases adoption and sustainability, further studies would be warranted to examine which aspects of the CDT’s core processes account for its positive effects. Given the increasing frequency with which communities are considering the adoption of EBPs, it is anticipated that other opportunities will arise for similar studies with states, territories, and national governments that are interested in supporting the adoption of empirically based practices and programs.

Acknowledgments

The principal support for this research was provided by NIMH grant MH076158 and the Department of Health and Human Services Children’s Administration for Children and Families. Other support was provided by NIMH grant MH054257 and by NIDA grants DA017592, DA015208, DA2017202, and K23DA021603.

Footnotes

The first author is the Principal Investigator on the study, which was awarded to the Center for Research to Practice in Eugene, Oregon. Subcontracts were awarded to the California Institute for Mental Health, the University of South Florida, and TFC Consultants, Inc. Patricia Chamberlain, John Reid, and Gerard Bouwman are three of the four owners of TFC Consultants, Inc., the company implementing MTFC in this study.

One additional county involved in a class action lawsuit that precluded their participation was also excluded.

Although not directly relevant to the current article, it is worth noting that at the time of recruitment, system leaders were asked to complete a set of pre-implementation assessment measures. Baseline measures included the Organizational Culture Survey (Glisson and James 2002), an adapted version of the Organizational Readiness for Change (ORC; Lehman et al. 2002), an adapted version of the MST Personnel Data Inventory (Schoenwald 1998), the Attitudes toward Treatment Manuals Survey (Addis and Krasnow 2000), and the Evidence-based Practice Attitude Scale (Aarons 2004). System leaders in counties randomized to Cohorts 2 and 3 will be asked to repeat these measures once or twice, respectively (a wait-list feature of the design).

In some counties, more than one person from a given service sector consented to participate in the study (i.e., in the discussions about whether to implement MTFC and in completing the pre-implementation assessment battery).

References

- Aarons GA. Mental health provider attitudes toward adoption of evidence-based practice: The evidence-based practice attitude scale (EBPAS). Mental Health Services Research. 2004;6:61–74. doi: 10.1023/b:mhsr.0000024351.12294.65.

- Addis ME, Krasnow AD. A national survey of practicing psychologists’ attitudes toward psychotherapy treatment manuals. Journal of Consulting and Clinical Psychology. 2000;68(2):331–339. doi: 10.1037//0022-006x.68.2.331.

- Aos S, Phipps P, Barnoski R, Leib R. The comparative costs and benefits of programs to reduce crime: A review of national research findings with implications for Washington State. Olympia: Washington State Institute for Public Policy; 1999.

- Brown CH, Wang W, Kellam SG, Muthén BO, et al. Methods for testing theory and evaluating impact in randomized field trials: Intent-to-treat analyses for integrating the perspectives of person, place, and time. Drug and Alcohol Dependence. 2008. doi: 10.1016/j.drugalcdep.2007.11.013. In press.

- Brown CH, Wyman PA, Guo J, Peña J. Dynamic wait-listed designs for randomized trials: New designs for prevention of youth suicide. Clinical Trials. 2006;3:259–271. doi: 10.1191/1740774506cn152oa.

- Chamberlain P. Treatment foster care. Family strengthening series (OJJDP Bulletin No. NCJ 1734211). Washington, DC: U.S. Department of Justice; 1998.

- Chamberlain P, Reid JB. Using a specialized foster care treatment model for children and adolescents leaving the state mental hospital. Journal of Community Psychology. 1991;19:266–276.

- Chamberlain P, Leve LD, DeGarmo DS. Multidimensional treatment foster care for girls in the juvenile justice system: 2-year follow-up of a randomized clinical trial. Journal of Consulting and Clinical Psychology. 2007;75(1):187–193. doi: 10.1037/0022-006X.75.1.187.

- Cox DR. Regression models and life tables. Journal of the Royal Statistical Society Series B. 1972;34:187–220.

- Fox JC, Blank M, Berman J, Rovnyak VG. Mental disorders and help seeking in a rural impoverished population. International Journal of Psychiatry in Medicine. 1999;29:181–195. doi: 10.2190/Y4KA-8XYC-KQWH-DUXN.

- Glisson C. Structure and technology in human service organizations. In: Hasenfeld Y, editor. Human services as complex organizations. Beverly Hills: Sage; 1992. pp. 184–202.

- Glisson C, James LR. The cross-level effects of culture and climate in human service teams. Journal of Organizational Behavior. 2002;23:767–794.

- Henggeler SW, Schoenwald SK, Borduin CM, Rowland MD, Cunningham PB. Multisystemic treatment of antisocial behavior in children and adolescents. New York: Guilford Press; 1998.

- Institute of Medicine. Crossing the quality chasm: A new health system for the 21st century. Washington, DC: National Academy Press; 2001.

- Judge TA, Thoresen CJ, Pucik V, Welbourne TM. Managerial coping with organizational change: A dispositional perspective. Journal of Applied Psychology. 1999;84:107–122.

- Lehman WEK, Greener JM, Simpson DD. Assessing organizational readiness for change. Journal of Substance Abuse Treatment. 2002;22:197–209. doi: 10.1016/s0740-5472(02)00233-7.

- Marsenich L. Evidence-based practices in mental health services for foster youth. Sacramento: California Institute for Mental Health; 2002.

- National Institute of Mental Health. Treatment research in mental illness: Improving the nation’s public mental health care through NIMH funded interventions research. Washington, DC: National Advisory Mental Health Council’s Workgroup on Clinical Trials; 2004.

- National Institute of Mental Health. Blueprint for change: Research on child and adolescent mental health. Washington, DC: National Advisory Mental Health Council’s Workgroup on Child and Adolescent Mental Health Intervention Development and Deployment; 2001.

- Olds DL, Hill PL, O’Brien R, Racine D, Moritz P. Taking preventive intervention to scale: The nurse-family partnership. Cognitive and Behavioral Practice. 2003;10(4):278–290.

- Substance Abuse and Mental Health Services Administration. President’s new freedom commission on mental health (SMA 03–3832). Washington, DC: Author; 2003.

- Rogers EM. Diffusion of innovations. 4th ed. New York: The Free Press; 1995.

- Rones M, Hoagwood K. School-based mental health services: A research review. Clinical Child & Family Psychology Review. 2000;3:223–241. doi: 10.1023/a:1026425104386.

- Schoenwald SK. MST personnel data inventory. Charleston: Medical University of South Carolina, Family Services Research Center; 1998.

- Schoenwald SK, Sheidow AJ, Letourneau EJ. Toward effective quality assurance in evidence-based practice: Links between expert consultation, therapist fidelity, and child outcome. Journal of Clinical Child and Adolescent Psychology. 2004;33(1):94–104. doi: 10.1207/S15374424JCCP3301_10.

- Sosna T, Marsenich L. Community development team model: Supporting the model adherent implementation of programs and practices. Sacramento: California Institute for Mental Health; 2006.

- U.S. Department of Health and Human Services. Mental health: A report of the surgeon general (DHHS publication No. DSL 2000–0134-P, pp. 123–220). Children and mental health. Washington, DC: U.S. Government Printing Office; 2000a.

- U.S. Department of Health and Human Services. Mental health: A report of the surgeon general (DHHS publication No. DSL 2000–0134-P). Prevention of violence. Washington, DC: U.S. Government Printing Office; 2000b.