Abstract

Background

SentenceShaper® (SSR) is a computer program that is for speech what a word-processing program is for written text; it allows the user to record words and phrases, play them back, and manipulate them on-screen to build sentences and narratives. A recent study demonstrated that when listeners rated the informativeness of functional narratives produced by chronic aphasic speakers with and without the program, they gave higher ratings to the language produced with the aid of the program (Bartlett, Fink, Schwartz, & Linebarger, 2007). Bartlett et al. (2007) also compared unaided (spontaneous) narratives produced before and after the aided version of the narrative was obtained. In a subset of comparisons, the sample created afterwards was judged to be more informative; they called this “topic-specific carryover”.

Aims

(1) To determine whether the differences in informativeness that Bartlett et al.’s listeners perceived are also revealed by Correct Information Unit (CIU) analysis (Nicholas & Brookshire, 1993), a well-studied, objective method for measuring informativeness; and (2) to demonstrate the usefulness of CIU analysis for samples of this type.

Methods & Procedures

A modified version of the CIU analysis was applied to the speech samples obtained by Bartlett et al. (2007). They had asked five individuals with chronic aphasia to create functional narratives on two topics, under three conditions: Unaided (“U”), Aided (“SSR”), and Post-SSR Unaided (“Post-U”). Here, these samples were analysed for differences in % CIUs across conditions. Linear associations between listener judgements and CIU measures were evaluated with bivariate correlations and multiple regression analysis.

Outcomes & Results

(1) The aided effect was confirmed: samples produced with SentenceShaper had higher % CIUs, in most cases exceeding 90%. (2) There was little

Conclusions

That the percentage of CIUs was higher in SSR-aided samples than in unaided samples confirms the central finding in Bartlett et al. (2007), based on subjective judgements, and thus extends the evidence that aided effects from SentenceShaper are demonstrable across a range of measures, stimuli and participants (cf. Linebarger, Schwartz, Romania, Kohn, & Stephens, 2000). The data also attest to the effectiveness of the CIU analysis for quantifying differences in the informativeness of aphasic speech with and without SentenceShaper; and they support prior studies that have shown that CIU measures correlate with the informativeness ratings of unfamiliar listeners.

Keywords: Aphasia, SentenceShaper, AAC, Informativeness, Functional communication

SentenceShaper® is a computer program designed to support spoken language production in aphasia by minimising the temporal and working memory demands of real-time speech (Linebarger et al., 2000).1 2 It allows the user to record spoken fragments and to associate these saved fragments with visual icons that can be played back, combined, and integrated into larger structures. Thus in its core functionality, described more fully in earlier papers (e.g., Bartlett et al., 2007), SentenceShaper can be described as a word processing program for spoken language, allowing the user to review and modify initial “drafts” of spoken productions.

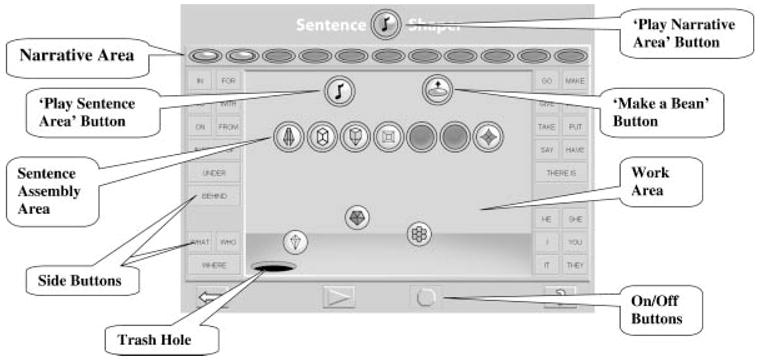

Figure 1 shows SentenceShaper’s main components. The user records words or phrases by touching the On/Off Buttons. Touching the “Off” button creates an icon (or shape) in the Work Area. The sound file associated with this shape can be replayed by touching it. Shapes can be dragged to the Trash Hole or the Sentence Assembly Area, where a sentence is “built” from left to right. Once the user has assembled her shapes, she can play all of them in sequence by touching the Play Sentence Area button. She can then rearrange the shapes or touch the Make a Bean button, which combines all the shapes into a single icon (a purple “bean”). All beans are displayed in the Narrative Assembly Area where they can be reordered, put in the Trash Hole, or dragged down to the Sentence Assembly Area for revision. In addition to replaying and arranging recorded fragments, the user has access to Side Buttons which, when clicked, play pre-recorded content (e.g., prepositions, pronouns, high-frequency verbs, and discourse cues) which can then be recorded in the user’s own voice and incorporated into a SentenceShaper production.

Figure 1.

SentenceShaper screen with major components labelled.

Prior research has shown that spoken narratives created with SentenceShaper can be superior to those produced without the aid of the program. The measured improvements in language with versus without the program are called “aided effects”. Linebarger et al. (2000) studied aided effects from SentenceShaper in six participants with chronic, nonfluent aphasia, who produced the same story-plot narratives with the program (aided) and without it (unaided). Application of the Quantitative Production Analysis (QPA) methodology (Saffran, Berndt, & Schwartz, 1989) revealed statistically significant aided effects on measures of length and grammatical structure, although not on measures of grammatical morphology.

In a recent study, Bartlett et al. (2007) confirmed the presence of aided effects using functional narratives as stimuli and listener-rated “informativeness” as the dependent measure. Unlike measures of length and grammatical structure, informativeness measures are typically used to quantify changes in how effectively the person communicates the content of a message. Informativeness can be measured objectively with published procedures such as Correct Information Unit (CIU) Analysis (Nicholas & Brookshire, 1993) or content unit analysis (Yorkston & Beukelman, 1980). Informativeness can also be measured subjectively, using listener judgements. Listener judgements have been used in several studies to assess the informativeness of connected discourse produced by people with expressive language deficits and to socially validate treatment-related change. The definition of informativeness in such studies has ranged from simply “amount of information conveyed” (Campbell & Dollaghan, 1992) to “amount of correct information” given about a topic (Jacobs, 2001) to “how accurately and completely” a discourse sample reflects a picture or procedure (Doyle, Tsironas, Goda, & Kalinyak, 1996). Similar to Doyle et al. (1996), Bartlett et al. (2007) defined informativeness as the “accuracy and completeness” of a functional narrative.

In Bartlett et al. (2007) functional narratives were elicited by items (hereafter, “topics”) from the Amsterdam-Nijmegen Everyday Language Test (ANELT) (Blomert, Kean, Koster, & Schokker, 1994), which asks participants to verbally respond to hypothetical daily life situations (see Method section for examples). Five participants with aphasia produced responses to two ANELT topics in three conditions: without SentenceShaper (“unaided” [U]), with SentenceShaper (“SSR”), and unaided after having used SentenceShaper to respond to the same topic (“Post-U”). Speech-language pathology graduate students who were unfamiliar with the participants and unaware of the three study conditions then rated, without the benefit of written transcripts, the informativeness of the 30 audiotaped samples (five participants, two topics, three conditions). The method for rating informativeness was Direct Magnitude Estimation (DME). Unlike fixed-interval rating scales, DME allows raters to assign numbers of their own choosing to express the ratio of stimulus magnitudes (in this case, the informativeness of one sample relative to the one that preceded it). These numbers are then normalised to a standard scale (here, 0–100) for data analysis. For detailed discussion of DME methods see, e.g., Stevens (1975), Doyle et al. (1996), and Bartlett et al. (2007).

In Bartlett et al. (2007), statistical analyses of the normalised DME ratings revealed significant aided effects for four of five participants, on one or both narratives. The grand average of the ratings was 47.5 (on a 100-point scale) for the U samples, versus 72.2 for SSR.

The significance of Bartlett et al.’s (2007) study is two-fold. First, it broadened the evidence for aided effects from story-plot narratives to functional narratives; second, it revealed that aided effects are demonstrable with listener judgements of informativeness, as well as laboratory measures of grammaticality and fluency. These findings constitute important steps towards evaluating SentenceShaper’s potential to help people with aphasia communicate in real-life situations.

In the listener judgement study, Bartlett et al. (2007) also presented evidence for “topic-specific carryover”, meaning that, for a given topic, the unaided sample produced one session after the aided production was judged more informative than the unaided sample produced one session before the aided production. Compared to the aided effect, the evidence for topic-specific carryover was weaker, showing statistical significance in just two of the five participants. For participants who show reliable topic-specific carryover, the possibility exists that they could use SentenceShaper effectively to practise for upcoming face-to-face interactions.

The current study applied a modified version of the Correct Information Unit analysis (Nicholas & Brookshire, 1993) to the same speech samples used in the listener judgement study (Bartlett et al., 2007) with the aim of validating the results and demonstrating the usefulness of the CIU analysis for data of this type. We chose CIU analysis because it has been shown to have high inter-rater scoring reliability and to be useful with a range of aphasic speech (Nicholas & Brookshire, 1993). Moreover, there is a small literature comparing CIU analysis and listener ratings (e.g., Doyle et al., 1996; Jacobs, 2001). The Doyle et al. (1996) study is noteworthy for having demonstrated strong correlations between CIU measures and DME judgements of informativeness (for % CIUs and informativeness ratings, r = .81).

In line with this, we predicted close correspondence between the DME-measured effects that Bartlett et al. (2007) reported and those that our modified CIU methods would reveal. Prediction 1 was that all or most participants would show an aided effect, indexed here by greater % CIUs in the SentenceShaper sample than in the comparable unaided sample (SSR > U). Prediction 2 was that at least a subset would show topic-specific carryover, here indexed by greater % CIUs in Post-U than U samples. Prediction 3 was that % CIUs and mean listener ratings would correlate.

METHOD

Data set analysed

Narratives analysed in this study were those collected for Bartlett et al. (2007) from five people diagnosed with mild to moderate non-fluent aphasia. Individual profiles are presented in Table 1. Although two participants (OT & DCN) were classified as Broca’s resolving to anomic on the basis of the Western Aphasia Battery (WAB; Kertesz, 1982), all five were non-fluent; their speech rate was below 87 words per minute, the 2 SD cut-off for controls (Rochon, Saffran, Berndt, & Schwartz, 2000). In addition, they had varying degrees of agrammatism, as illustrated in Table 1 by their Proportion of Words in Sentences (Control mean, .98, SD, .05; Rochon et al., 2000).

TABLE 1.

Profiles of the five participants

| Participant | Gender | Age | Educ. | MPO | WAB AQ | Classification | Wds. per minute^a | Prop. wds. in sentences^a |
|---|---|---|---|---|---|---|---|---|
| EC | F | 57 | 18 | 201 | 68.2 | B | 39.2 | .24 |
| MAI | F | 53 | 20 | 59 | 67.4 | B | 43.9 | .82 |
| DCN | M | 32 | 12 | 43 | 79.8 | B/A | 14.5 | .19 |
| MO | M | 62 | 14 | 162 | 70.0 | B | 45.0 | .70 |
| OT | F | 54 | 16 | 64 | 75.8 | B/A | 70.9 | .53 |
| Mn | | 51.6 | 16 | 105.8 | 72.2 | | | |
| SD | | 11.5 | 3.2 | 70.9 | 5.4 | | | |

Mn, mean; SD, standard deviation; M, male; F, female; MPO, months post onset; WAB, Western Aphasia Battery (Kertesz, 1982); AQ, Aphasia quotient from the WAB. B, Broca’s; B/A, Broca’s resolving to anomic; Results of testing performed 13 to 30 months prior to the experiment.

^a Words per minute and proportion words in sentences were calculated from the Cinderella narrative, as part of the Quantitative Production Analysis (Berndt, Wayland, Rochon, Saffran, & Schwartz, 2000).

The analysed speech samples represent participants’ responses to two ANELT situations. The Lost Glove situation was: “You are in the drugstore and this [present glove] is lying on the floor. You take it to the counter. What do you say?” The Broken Glasses situation was: “You’re now at the optician’s shop. You’ve brought these [broken glasses] in with you. I’m the salesperson. What do you say?”

Responses were elicited under three conditions, in the following order: without SentenceShaper (hereafter, “Unaided” or “U”); with SentenceShaper (“SSR”); and unaided after having used SentenceShaper to respond to the same topic (“Post-U”).3 In all, 30 samples (15 Glove and 15 Glasses) comprised the data set for the CIU analysis of the current study (5 participants ×2 topics ×3 conditions). Detailed explanation of training and stimulus-elicitation procedures can be found in Bartlett et al. (2007).

CIU procedures

A correct information unit (CIU) is “a word that is intelligible in context, accurate in relation to the picture(s) or topic, and relevant to and informative about the content of the picture(s) or the topic” (Nicholas & Brookshire, 1993, p. 348). Scoring requires two steps: (1) stripping the narrative of nonwords (e.g., “uh” and “um” or unintelligible neologisms), and (2) determining the CIU and Non-CIU counts from the remaining words, using the comprehensive guidelines included in the appendix of Nicholas and Brookshire (1993). Once scoring is complete, the counts for Words, CIUs, and Non-CIUs can be totalled and other measures can be derived, such as Percent CIUs (calculated by dividing the number of CIUs by the total number of Words and multiplying by 100).
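The Percent CIUs calculation described above can be sketched in a few lines of Python. This is only an illustration: the function name and the example counts are our own assumptions, and the sketch takes Words to be the sum of CIUs and Non-CIUs once nonwords have been stripped. The counts were chosen to be consistent with the word totals reported for DCN’s Glove samples in Table 2 (31 and 21 words), but the split into CIUs and Non-CIUs is our reading, not a value taken from the score sheets.

```python
def percent_cius(n_cius: int, n_non_cius: int) -> float:
    """Percent CIUs = (CIUs / total Words) * 100.

    Assumes nonwords have already been stripped, so that
    total Words = CIUs + Non-CIUs.
    """
    n_words = n_cius + n_non_cius
    if n_words == 0:
        raise ValueError("sample contains no scorable words")
    return 100.0 * n_cius / n_words


# Illustrative counts (assumed, see the note above).
unaided = percent_cius(n_cius=21, n_non_cius=10)  # 31 words in total
aided = percent_cius(n_cius=20, n_non_cius=1)     # 21 words in total

print(f"Unaided: {unaided:.1f}% CIUs")
print(f"Aided:   {aided:.1f}% CIUs")
```

Note that the two samples carry almost the same number of CIUs; the difference in % CIUs here comes almost entirely from the drop in Non-CIUs.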

The current study applied a modified version of Nicholas and Brookshire’s (1993) CIU scoring rules to the 30 experimental samples. The use of ANELT stimuli was the first departure from the published procedures. The second, more significant, difference was that our scorers derived the CIU count from transcribed samples without benefit of auditory tapes or prosodic markings. This was necessary because the prosody of speech recorded on SentenceShaper occasionally reflects the piecemeal construction of aided utterances; users recording a word or phrase that they intend to incorporate into a larger structure may nonetheless speak this word or phrase with the intonation appropriate to an isolated utterance. In contrast to the naïve raters in Bartlett et al. (2007), our CIU scorers were familiar with SentenceShaper recordings; for them, these subtle prosodic distinctions could have cued the experimental condition, thereby compromising blinded scoring. Other minor modifications to the published CIU scoring rules were necessitated by the design and stimuli in this study. These are described in the Appendix. In other respects, the scorers followed the guidelines set down in Nicholas and Brookshire (1993) to extract the various CIU measures. Our primary measure was % CIUs. Additional measures were No. CIUs and No. Non-CIUs.

CIU scorer reliability

The experimental samples were transcribed and timed from audiotapes by a member of the study team. These were scored by well-practised speech-language pathologists, who were blinded to the experimental conditions. For measurement of inter-rater reliability, two scorers independently coded 20% of the 30 experimental samples (N = 6; 3 per topic), and point-to-point agreement was calculated for Word/Nonword counts (Mn. = 100%) and CIU/Non-CIU counts. Agreement ranged from 81.8% to 100%; Mn. = 94.9%. One of these scorers went on to code the remaining 24 samples.
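The text does not spell out the agreement formula, so the following Python sketch assumes the usual point-to-point definition: the number of words on which both scorers assigned the same code, divided by the total number of words coded, times 100. The function name and the two code sequences are hypothetical toy data, not scores from the study.

```python
def point_to_point_agreement(codes_a, codes_b):
    """Percentage of items on which two scorers assigned the same code."""
    if len(codes_a) != len(codes_b):
        raise ValueError("both scorers must code the same number of words")
    if not codes_a:
        raise ValueError("nothing to compare")
    agreements = sum(a == b for a, b in zip(codes_a, codes_b))
    return 100.0 * agreements / len(codes_a)


# Hypothetical word-by-word codes for one short sample (toy data).
scorer_1 = ["CIU", "CIU", "NON", "CIU", "NON", "CIU",
            "CIU", "CIU", "NON", "CIU", "CIU"]
scorer_2 = ["CIU", "CIU", "NON", "CIU", "CIU", "CIU",
            "CIU", "CIU", "NON", "NON", "CIU"]

agreement = point_to_point_agreement(scorer_1, scorer_2)
print(f"Point-to-point agreement: {agreement:.1f}%")
```

In this toy case the scorers disagree on 2 of 11 words, which illustrates how a short sample with a couple of disagreements can fall near the lower end of the reported range.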

Data analysis

The size of the aided effect for each participant was measured by the difference in % CIUs in aided versus unaided samples (SSR-U). The topic-specific carryover effect was measured by the difference in % CIUs in Post-U versus U samples. A difference of 20% or more was considered evidence of an aided effect or topic-specific carryover. This arbitrary criterion was chosen based on evidence that the % CIU measure has excellent test–retest reliability (Nicholas & Brookshire, 1993) and that repeated production of a narrative by persons with aphasia does not lead to increases in this measure (i.e., no practice effects; Linebarger et al., 2007). Given this, the 20% change criterion was deemed appropriately conservative for defining an aided effect at the level of the individual.

Pearson Product Moment correlations, with one-tailed p-values, were used to assess the linear association between the mean informativeness rating (average of 12 raters) and % CIUs (as well as No. CIUs and No. Non-CIUs). These correlations were computed for Glove and Glasses combined (N = 30; 5 participants ×3 samples ×2 topics). In addition, multiple regression analyses were run to investigate whether No. CIUs and No. Non-CIUs independently predicted listener ratings. This was done to determine the extent to which increases in % CIUs were due to an increase in the number of informative words (No. CIUs) and/or a decrease in uninformative words (No. Non-CIUs). These analyses, too, were run collapsed across topics.

RESULTS

Aided effects

Table 2 presents the data from this study and, for comparison, Bartlett et al. (2007). On the left side of the table % CIU scores are shown, broken down by participant, topic and condition. Difference scores are also presented, with higher values indicating a larger aided effect. Looking down the columns for U and SSR conditions, it is evident that all participants had higher % CIU scores in the SSR condition. Indeed every participant but OT produced 90% or more CIUs in the SSR condition. In this aided condition, the mean for Glove was 94.8%, compared to 56.4% in U, for a mean aided effect of 38.4%. For Glasses, mean % CIU scores were 92.2% and 65.8% in SSR and U, respectively, for a mean aided effect of 26.4%. The size of the aided effect averaged across Glove and Glasses (not shown in table) exceeded 20% for all participants but OT.
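For readers who wish to check the per-participant averages that are not shown in the table, the short Python sketch below recomputes them from the % CIU values in Table 2. The variable and function names are ours, and the 20-point threshold is the criterion defined under Data analysis, read here as a difference in percentage points.

```python
# % CIUs from Table 2, as (U, SSR) pairs per participant and topic.
PCT_CIUS = {
    "EC":  {"Glove": (56, 100), "Glasses": (44, 93)},
    "MAI": {"Glove": (58, 92),  "Glasses": (85, 97)},
    "DCN": {"Glove": (68, 95),  "Glasses": (65, 93)},
    "MO":  {"Glove": (23, 97),  "Glasses": (57, 98)},
    "OT":  {"Glove": (77, 90),  "Glasses": (78, 80)},
}
CRITERION = 20  # minimum difference counted as an aided effect


def mean_aided_effect(by_topic):
    """Average of (SSR - U) across the topics of one participant."""
    diffs = [ssr - u for u, ssr in by_topic.values()]
    return sum(diffs) / len(diffs)


meets_criterion = []
for participant, by_topic in PCT_CIUS.items():
    effect = mean_aided_effect(by_topic)
    if effect >= CRITERION:
        meets_criterion.append(participant)
    print(f"{participant}: mean aided effect = {effect:.1f}")

print("Meets criterion:", ", ".join(meets_criterion))
```

Run on the tabled values, this gives averages of 46.5 (EC), 23.0 (MAI), 27.5 (DCN), 57.5 (MO), and 7.5 (OT).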

TABLE 2.

Percent CIUs, total words and difference scores

| Pt. | Topic | % CIUs: U | % CIUs: SSR | % CIUs: PU | % CIUs: Aided effect (SSR-U) | % CIUs: Topic-specific carryover (PU-U) | Listener ratings: U | Listener ratings: SSR | Listener ratings: PU | Listener ratings: Aided effect (SSR-U) | Listener ratings: Topic-specific carryover (PU-U) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| EC | Glove | 56 [25] | 100 [21] | 42 [43] | 44 | −14 | 43 (20) | 82 (19) | 32 (15) | 39 | −11 |
| MAI | Glove | 58 [33] | 92 [25] | 66 [44] | 34 | 8 | 56 (26) | 69 (20) | 47 (26) | 13 | −9 |
| DCN | Glove | 68 [31] | 95 [21] | 70 [33] | 27 | 2 | 52 (22) | 48 (22) | 45 (16) | −4 | −7 |
| MO | Glove | 23 [35] | 97 [33] | 50 [60] | 74 | 27 | 5 (5) | 64 (26) | 49 (29) | 59 | 44 |
| OT | Glove | 77 [39] | 90 [42] | 67 [60] | 13 | −10 | 62 (19) | 95 (6) | 55 (24) | 33 | −7 |
| Mn. | | 56.4 | 94.8 | 59.0 | 38.4 | 2.6 | 43.6 | 71.6 | 45.6 | 28.0 | 2.0 |
| SD | | 20.5 | 4.0 | 12.3 | 22.9 | 16.3 | 18.4 | 18.6 | 22.0 | 21.7 | 23.5 |
| EC | Glasses | 44 [39] | 93 [43] | 41 [83] | 49 | −3 | 13 (13) | 53 (25) | 41 (24) | 40 | 28 |
| MAI | Glasses | 85 [27] | 97 [25] | 74 [42] | 12 | −11 | 74 (19) | 92 (12) | 69 (21) | 18 | −5 |
| DCN | Glasses | 65 [54] | 93 [58] | 73 [63] | 28 | 8 | 83 (20) | 80 (21) | 71 (25) | −3 | −12 |
| MO | Glasses | 57 [44] | 98 [41] | 60 [35] | 41 | 3 | 23 (15) | 63 (20) | 55 (19) | 40 | 32 |
| OT | Glasses | 78 [49] | 80 [50] | 61 [51] | 2 | −17 | 64 (21) | 76 (18) | 68 (22) | 12 | 4 |
| Mn. | | 65.8 | 92.2 | 61.8 | 26.4 | −4.0 | 51.4 | 72.8 | 60.8 | 21.4 | 9.4 |
| SD | | 16.4 | 7.2 | 13.3 | 19.6 | 10.1 | 17.6 | 19.2 | 22.2 | 18.6 | 19.7 |

The left side of this table shows % CIUs and, in brackets, Total No. Words, for U, SSR, and PU conditions, broken down by participant and topics. Also shown are difference scores measuring the Aided Effect (SSR-U) and Topic-Specific Carryover (PU-U). For comparison purposes, the right side of the table shows the respective normalised DME ratings, reported in Bartlett et al. (2007). Individual entries for U, SSR, and PU conditions reflect the mean of 12 raters and, in parentheses, the standard deviation.

Topic-specific carryover

Examination of the difference in % CIUs between Post-U and U samples reveals unimpressive carryover effects. Mean carryover effect was 2.6% for Glove and −4.0% for Glasses. Only one participant, MO, showed a difference in % CIUs that exceeded 20% (27% on Glove).
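The carryover figures can be re-derived from the U and Post-U columns of Table 2. The Python sketch below is offered only as a check on the arithmetic; the names are ours, and the 20-point threshold is the criterion set out under Data analysis.

```python
# % CIUs from Table 2, as (U, Post-U) pairs per participant and topic.
U_AND_POST_U = {
    ("EC", "Glove"): (56, 42),   ("EC", "Glasses"): (44, 41),
    ("MAI", "Glove"): (58, 66),  ("MAI", "Glasses"): (85, 74),
    ("DCN", "Glove"): (68, 70),  ("DCN", "Glasses"): (65, 73),
    ("MO", "Glove"): (23, 50),   ("MO", "Glasses"): (57, 60),
    ("OT", "Glove"): (77, 67),   ("OT", "Glasses"): (78, 61),
}
CRITERION = 20  # minimum difference counted as carryover

# Carryover = Post-U minus U for each participant-by-topic sample pair.
carryover = {key: post_u - u for key, (u, post_u) in U_AND_POST_U.items()}


def topic_mean(topic):
    """Mean carryover across participants for one topic."""
    values = [diff for (_, t), diff in carryover.items() if t == topic]
    return sum(values) / len(values)


showing_carryover = [key for key, diff in carryover.items() if diff >= CRITERION]

print(f"Glove mean carryover:   {topic_mean('Glove'):.1f}")
print(f"Glasses mean carryover: {topic_mean('Glasses'):.1f}")
print("Pairs meeting criterion:", showing_carryover)
```

Only the MO Glove pair reaches the criterion, and the topic means come out at 2.6 and −4.0, matching the values reported above.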

Correlations

As predicted, % CIUs and listener ratings were statistically correlated (r = .746; p < .001). Raw counts for No. CIUs and No. Non-CIUs also correlated with listener ratings: for No. CIUs, r = .594; p < .001; for No. Non-CIUs, r = −.548; p = .001. The two count measures did not correlate with one another (r = −.144; p = .224). The fact that No. CIUs and No. Non-CIUs each correlate highly with listener ratings but not with each other implies that both measures may have contributed to the correlation of the derived measure, % CIUs, with listener ratings. The results from the multiple regression analyses confirmed this. The tested models had as the dependent variable mean listener rating, and as the independent variables No. CIUs and No. Non-CIUs. The resulting model had Adjusted R² = .54 (i.e., 54% of the variance explained), with each independent variable making an independent contribution to the model (No. CIUs: Beta = 1.10; p < .001; No. Non-CIUs: Beta = −.92; p = .001). Thus the suggestion is that listeners judged the samples based on the number of informative words and the amount of uninformative material that was present.
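The correlation between % CIUs and mean listener ratings can be approximated from the rounded entries in Table 2. The Python sketch below implements the Pearson product-moment formula directly, using only the standard library; the names are ours, and because the tabled values are rounded the result can only be expected to come close to the reported coefficient. The regression analysis is not reproduced, since the raw No. CIUs and No. Non-CIUs counts are not tabled.

```python
from math import sqrt

# Table 2 values, ordered by topic (Glove, then Glasses), then participant
# (EC, MAI, DCN, MO, OT), then condition (U, SSR, PU).
PCT_CIUS = [56, 100, 42, 58, 92, 66, 68, 95, 70, 23, 97, 50, 77, 90, 67,
            44, 93, 41, 85, 97, 74, 65, 93, 73, 57, 98, 60, 78, 80, 61]
RATINGS = [43, 82, 32, 56, 69, 47, 52, 48, 45, 5, 64, 49, 62, 95, 55,
           13, 53, 41, 74, 92, 69, 83, 80, 71, 23, 63, 55, 64, 76, 68]


def pearson_r(xs, ys):
    """Pearson product-moment correlation between two equal-length lists."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two lists of equal length (at least 2 items)")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / sqrt(sxx * syy)


r = pearson_r(PCT_CIUS, RATINGS)
print(f"N = {len(PCT_CIUS)}, r = {r:.3f}")
```

With the tabled values the coefficient comes out at roughly .75, in line with the reported r = .746.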

DISCUSSION

This study follows up an earlier one by Bartlett et al. (2007), which demonstrated aided effects from SentenceShaper on narratives with functional content (ANELT topics) using listener judgements of informativeness as the dependent variable. That study broadened the evidence for aided effects, which had previously been documented on story-plot narratives using laboratory measures of utterance length and grammatical structure (Linebarger et al., 2000). The current study replicates the aided effects in Bartlett et al. (2007), using CIU analysis. We found that participants showed enhanced informativeness in all samples produced with SentenceShaper, and the effects were greater than 20% for four of five participants. Establishing the presence of aided effects is the first step in deciding who may benefit from using SentenceShaper as an assistive device and/or as a therapy tool. In our study, the biggest effects were for those participants with the lowest % CIUs in the U condition (MO, EC). Yet there was nothing apparent in the language profile that set the person with the weakest aided effect (OT) apart from the rest. Further research is needed to determine the characteristics of people who are most likely to show aided effects with SentenceShaper. Together with prior evidence for aided effects from SentenceShaper, the present findings show that the enhancement of aphasic language when using SentenceShaper is demonstrable across a range of participants, stimuli, and measures.

Bartlett et al. (2007) also found some evidence for topic-specific carryover. In the current study, only one participant, on one topic, showed topic-specific carryover. This indicates that topic-specific carryover from a single SentenceShaper production is at best a weak effect. It would be important to determine whether repeated topic-specific practice on SentenceShaper would improve spontaneous communications on that topic.

Topic-specific carryover refers to gains in the spontaneous retelling of Topic x that are attributable to having previously produced Topic x with SentenceShaper. This should not be confused with “treatment effects”. Treatment effects refer to gains in the spontaneous retelling of Topic x that are attributable to having undergone a period of SentenceShaper treatment involving production of a variety of different topics, none of them x. A number of studies have reported treatment effects for some participants, using QPA methodology (Linebarger, McCall, & Berndt, 2004; Linebarger, McCall, Virata, & Berndt, 2007; Linebarger, Schwartz, & Kohn, 2001). Treatment effects have also been reported with respect to % CIUs in studies that performed that analysis (Linebarger et al., 2004, 2007).

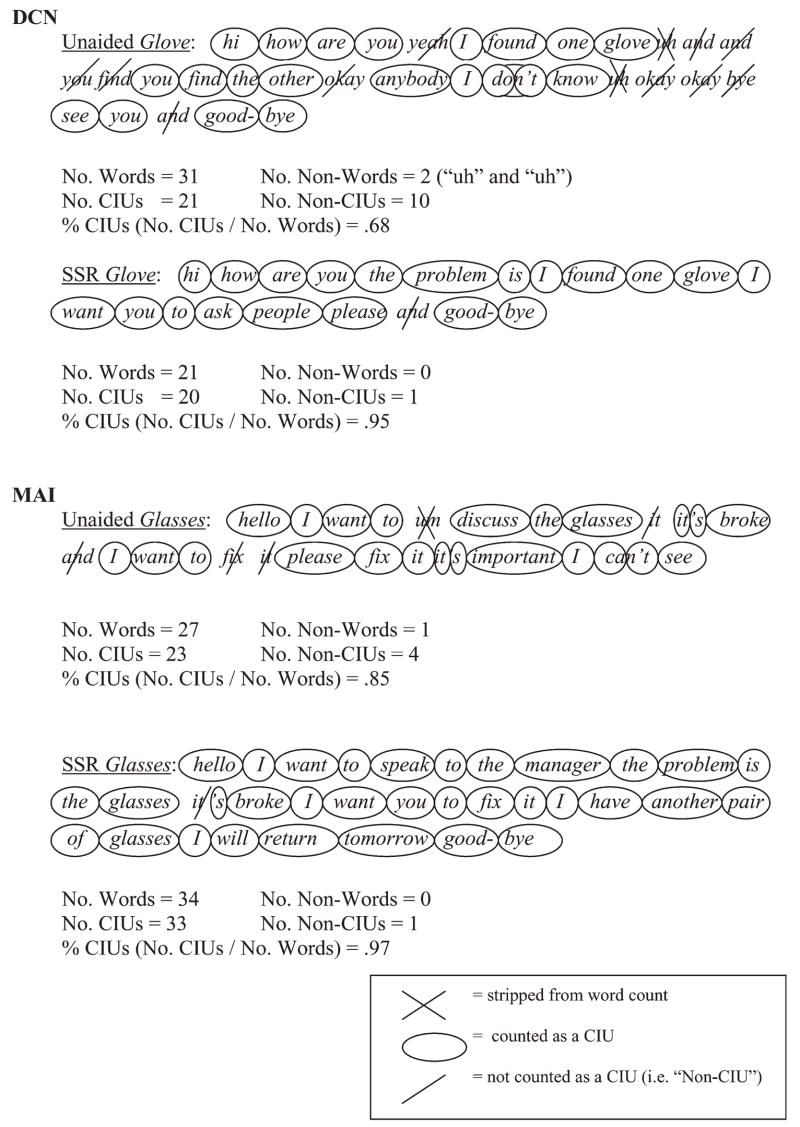

Our data also provide insight into the basis for listener judgements. Of particular interest was the finding from the regression analysis that No. CIUs and No. Non-CIUs can independently affect listener judgements of informativeness. Table 3 (top) illustrates this with aided and unaided samples from DCN. These samples contain essentially the same No. CIUs (21, 20 respectively), but the unaided sample contains 10 Non-CIUs while the aided sample contains only 1 Non-CIU. Based on the regression findings, such reduction in the number of irrelevant, incorrect words in a message contributed to listeners’ perception of the informativeness (accuracy and/or completeness) of aphasic speech.

TABLE 3.

Sample coded transcripts for U and SSR conditions


SentenceShaper, by design, incorporates several features that allow the user to decrease the number of irrelevant, repetitive, non-informative words (Non-CIUs). First, revisions and repetitions may be spoken but not recorded; second, recordings can be trashed; and third, recordings can be aborted with a mouse click. For this aided production, DCN undoubtedly succeeded in using one or more of SentenceShaper’s editing functions.

Gains in No. CIUs may also be attributed to features of SentenceShaper, such as the lexical support provided by the discourse cues and Side Buttons and the processing support provided by its replay feature, which allows the user to refresh his or her memory of previously recorded productions. This support may help to trigger new material through “Cloze responses” (Taylor, 1953) to replayed previous utterances. Additionally, by “turning off the clock” and supporting the creation of spoken utterances without the pressure of real-time speech, SentenceShaper may give the user more time and opportunity to produce appropriate material, richer in information. As seen at the bottom of Table 3, MAI seems to have used this support in her aided production of the Glasses topic, increasing her No. CIUs from 23 in the unaided sample to 33 in the aided sample. The No. Non-CIUs decreased slightly, from 4 to 1.

In conclusion, the data from this study validate the effectiveness of the CIU analysis for quantifying differences in the informativeness of aphasic speech with and without SentenceShaper; and they support prior studies in showing that results so obtained correlate with the judgements of unfamiliar listeners. The CIU analysis is shown here to be applicable to new types of stimuli (i.e., functional narratives) and robust to the scoring adjustments necessitated by the current procedures. Since CIU analysis is also more familiar to clinicians and may prove less time consuming than the collection and analysis of listener judgements, the validation of the CIU methodology reported here is of clinical as well as theoretical interest.

Acknowledgments

The authors gratefully acknowledge the thoughtful reviews given to an earlier draft of this paper by Beth Armstrong and two anonymous readers. We also wish to thank Michelene Kalinyak-Fliszar MA CCC/SLP and Roberta Brooks MA CCC/SLP for their assistance with the CIU scoring procedures, and Laura MacMullen BA for her help with later drafts. Support for this study was provided by a grant from the NIH (#1 R01 HD043991; P.I., M. Schwartz).

APPENDIX

Modified CIU scoring rules

Written transcripts only

Since some conditions differ systematically in prosody and may reveal information about the different conditions, scorers do not listen to the audio samples. Scorers receive transcripts that include text only (no utterance/phrase boundaries).

Repetition for emphasis

Although it may seem as though certain individuals are using repetition to emphasise a point, without having prosodic information it is not always clear that this is the case. As a result, any direct repetitions are omitted from the CIU count; the word is only counted as a CIU the last time it was spoken.

Alternatives/elaborations

Because participants are not simply retelling a story or describing a picture, but are role-playing a response to a daily life situation, scorers count as a CIU any word that is an alternative to or an elaboration of the story as long as the word would not mislead or confuse a listener.

Referencing (use of articles/determiners)

Because participants are allowed to hold and manipulate props when responding to the ANELT items (a glove in the Glove topic; a pair of broken glasses in the Glasses topic), nonspecific words such as “this” and “these” are acceptable alternatives for “glove” and “glasses” and are therefore included in the CIU count.

Discourse markers

If a speaker uses a discourse marker when speaking directly to the listener (as in role-playing), it is stripped from the CIU count, e.g., “I say excuse me someone lost a glove”.

Role-playing

Any instance of a speaker taking on multiple roles is included in the word count and considered for the CIU count, e.g., “how much cost fifty dollars American Express”: the speaker appears to take on the roles of both the customer and the salesperson (“How much [will it] cost?” “Fifty dollars.” “[Do you take] American Express?”).

Prepositions

Prepositions are scored leniently because transcripts do not include information about utterance boundaries, which makes it difficult to determine the intended meaning of a preposition and the phrase to which it is attached, e.g., “I will get the money for Mom” (it is not possible to determine whether “for” is part of the utterance “I will get the money for …” or a substitution error for “from” (“from Mom”)).

Footnotes

1. SentenceShaper uses methods and computer interfaces covered by U.S. Patent No. 6,068,485 (Linebarger & Romania, 2000) owned by Unisys Corporation and licensed to Psycholinguistic Technologies, Inc., which has released SentenceShaper as a commercial product (www.sentenceshaper.com). A conflict of interest arises because ML serves as Director of Psycholinguistic Technologies. Therefore, ML has not participated in testing or in scoring of raw data in the study reported here.

2. SentenceShaper is the latest version of a program whose earlier prototype went by the name “CS” for “Communication System” (for comparison see Linebarger et al., 2000).

3. To avoid confounding aided effects with practice benefits, participants completed two weeks of training, prior to elicitation of the analysed speech samples, in which they narrated the two experimental topics (Lost Glove and Broken Glasses) twice a week unaided. This ensured that each topic was well known, and well practised in the unaided mode, before any experimental samples were elicited. CIU measures were not calculated for these practice sessions.

References

- Bartlett MR, Fink RB, Schwartz MF, Linebarger M. Informativeness ratings of messages created on an AAC processing prosthesis. Aphasiology. 2007;21:475–498. doi: 10.1080/02687030601154167.

- Berndt R, Wayland S, Rochon E, Saffran E, Schwartz M. Quantitative production analysis. Hove, UK: Psychology Press Ltd; 2000.

- Blomert L, Kean ML, Koster C, Schokker J. Amsterdam-Nijmegen Everyday Language Test (ANELT): Construction, reliability & validity. Aphasiology. 1994;8:381–407.

- Campbell TF, Dollaghan C. A method for obtaining listener judgements of spontaneously produced language: Social validation through direct magnitude estimation. Topics in Language Disorders. 1992;12:42–55.

- Doyle PJ, Tsironas D, Goda AH, Kalinyak M. The relationship between objective measures and listeners’ judgements of the communicative informativeness of the connected discourse of adults with aphasia. American Journal of Speech-Language Pathology. 1996;5:53–60.

- Jacobs BJ. Social validity of changes in informativeness and efficiency of aphasic discourse following linguistic specific treatment (LST). Brain & Language. 2001;78:115–127. doi: 10.1006/brln.2001.2452.

- Kertesz A. Western aphasia battery. New York: Harcourt Brace Jovanovich, Inc; 1982.

- Linebarger MC, McCall D, Berndt RS. The role of processing support in the remediation of aphasic language production disorders. Cognitive Neuropsychology. 2004;21:267–282. doi: 10.1080/02643290342000537.

- Linebarger MC, McCall D, Virata T, Berndt RS. Widening the temporal window: Processing support in the treatment of aphasic language production. Brain and Language. 2007;100:53–68. doi: 10.1016/j.bandl.2006.09.001.

- Linebarger M, Romania J. System for synthesizing spoken messages. Washington, D.C.: U.S. Patent and Trademark Office; 2000. Unisys Corporation. U.S. Patent No. 6,068,485.

- Linebarger MC, Schwartz MF, Kohn SE. Computer-based training of language production: An exploratory study. Neuropsychological Rehabilitation. 2001;11:57–96.

- Linebarger MC, Schwartz MF, Romania JF, Kohn SE, Stephens DL. Grammatical encoding in aphasia: Evidence from a “processing prosthesis”. Brain and Language. 2000;75:416–427. doi: 10.1006/brln.2000.2378.

- Nicholas LE, Brookshire RH. A system for quantifying the informativeness and efficiency of the connected speech of adults with aphasia. Journal of Speech and Hearing Research. 1993;36:338–350. doi: 10.1044/jshr.3602.338.

- Rochon E, Saffran EM, Berndt RS, Schwartz MF. Quantitative analysis of aphasic sentence production: Further development & new data. Brain & Language. 2000;72:193–218. doi: 10.1006/brln.1999.2285.

- Saffran EM, Berndt RS, Schwartz MF. The quantitative analysis of agrammatic production: Procedure and data. Brain and Language. 1989;37:440–479. doi: 10.1016/0093-934x(89)90030-8.

- Stevens SS. Psychophysics: Introduction to its perceptual, neural and social prospects. New York: Wiley; 1975.

- Taylor WL. Cloze procedure: A new tool for measuring readability. Journalism Quarterly. 1953;30:415–433.

- Yorkston KM, Beukelman DR. An analysis of connected speech samples of aphasic and normal speakers. Journal of Speech and Hearing Disorders. 1980;45:27–36. doi: 10.1044/jshd.4501.27.