Abstract

Problem

Lack of comparable data on adverse outcomes in hospitalised surgical patients.

Design

A Plan‐Do‐Study‐Act (PDSA) cycle to implement and evaluate nationwide uniform reporting of adverse outcomes in surgical patients. The evaluation used the Reach, Efficacy, Adoption, Implementation and Maintenance (RE‐AIM) framework.

Setting

All 109 surgical departments in The Netherlands.

Key measures for improvement

Increase in the number of departments implementing the reporting system and exporting data to the national database.

Strategies for change

The intervention included (1) a coordinator who could mediate in case of problems; (2) participation of an opinion leader; (3) a predefined plan of action communicated to all departments (including feedback of results during implementation); (4) connection with existing hospital databases; (5) provision of software and a helpdesk; and (6) an instrument based on nationwide standards.

Effects of change

Implementation increased from 18% to 34% in 1.5 years. The main reason for not implementing the system was that the Information Computer Technology (ICT) department did not link data with the hospital information system (lack of time, finances, low priority). Only 5% of the departments exported data to the national database. Export of data was hindered mainly by slow implementation of the reporting system (so that departments did not have data to export) and by concerns regarding data quality and public availability of data from individual hospitals.

Lessons learned

Hospitals need incentives to realise implementation. Important factors are financial support, sufficient manpower, adequate ICT linkage of data, and clarity with respect to public availability of data.

Keywords: adverse outcomes, reporting, surgery

With growing patient awareness and newspaper stories about differences in mortality rates and adverse outcomes between hospitals, there is an increasing need for nationwide, uniform reporting of outcomes. Such a reporting system should enable healthcare professionals to study why hospitals differ in adverse outcomes, and whether these differences persist after adjustment for differences in patient mix and treatment mix between hospitals. A nationwide reporting system may also be used to study determinants of adverse outcomes and as a data source to inform patients before treatment. Hospitals with high rates of adverse outcomes may target these determinants to reduce the occurrence of adverse outcomes and thereby improve the quality of care. Uniformity is essential: nothing is gained by false positive signals of differences between hospitals that merely reflect differences in definitions or methods.

Outline of problem and context

Most surgical departments in Dutch hospitals register adverse outcomes,1 but often only on paper, and different hospitals use different definitions and classification methods. This makes it very difficult to compare outcomes between hospitals. To improve the current situation, a uniform nationwide reporting system of adverse outcomes is needed in which the same definitions are used, adverse outcomes are classified and coded in the same way, and the same information is collected on factors that may explain (part of) the differences in outcomes between hospitals.

The Association of Surgeons in The Netherlands aims to implement a uniform reporting system of adverse outcomes in routine surgical care in all hospitals, to establish a national database, and to study differences in adverse outcomes between hospitals. Integration into routine surgical care means that reporting is done by surgeons and surgical residents, in contrast with previous studies, which were usually based on retrospective record review by experts.2,3,4,5 Surgeons and residents in all hospitals therefore have to be convinced to change their current practice and to adopt this uniform reporting system.

This project is part of the adverse outcome programme of the Order of Medical Specialists,6 which aims to develop and implement uniform nationwide reporting of adverse outcomes by all national scientific associations of medical specialties in The Netherlands. The Association of Surgeons in The Netherlands was the first specialty association to develop software incorporating the uniform reporting system. Other specialties, or other countries aiming to implement nationwide uniform reporting of adverse outcomes, may benefit from the lessons learned in the present study.

The purpose of this paper is to evaluate the implementation of this nationwide reporting system, covering both local implementation in hospitals and export of data to the national database. Specialised paediatric surgical centres and private clinics were excluded. Together, the 109 surgical departments included had over 370 000 patient admissions in 2002.7

Assessment of problems and strategy for change

Approach

The Plan‐Do‐Study‐Act (PDSA) cycle was used.8 The different phases of this cycle were defined as:

Plan: to establish a national database of adverse outcomes in surgical patients in order to study differences between hospitals.

Do: to implement a nationwide uniform reporting system of adverse outcomes in all surgical departments in the Netherlands.

Study: to evaluate the local implementation of the reporting system in the hospitals and export of data to the national database, and to identify factors facilitating or hindering implementation.

Act: to determine which measures should be taken or recommended to further improve the approach.

Questionnaires were sent to all surgical departments on two occasions to assess (1) the extent to which the reporting system was implemented, (2) changes in the extent of implementation, (3) the need for (more) support with implementation, and (4) whether data were sent to the national database and, if not, why not. Based on this information, we identified facilitating and hindering factors that can be used to improve the implementation strategy. In addition, we show which information can be obtained from the national database and whether determinants of differences in adverse outcomes between hospitals can be identified.

Intervention

From a recent review9 we identified the following facilitating factors that were included in our implementation strategy:

1. Participation of an opinion leader (a surgeon), a process manager (coordinator) and experts. The coordinator could be contacted for (onsite) support and to mediate in case of problems with local implementation.

2. Use of a predefined plan of action communicated to all departments, including regular feedback on the results achieved during implementation (through letters and presentations at conferences) to show surgeons that it could be done.

3. Use of a nationwide definition of an adverse outcome10 agreed upon by all medical specialties, and clear procedures for reporting adverse outcomes in daily clinical practice and entering them in the software.

4. Use of a nationwide multidisciplinary approach to the classification of adverse outcomes. Adverse outcomes were classified on three dimensions: (a) pathology (such as bleeding, infection); (b) location, both region (such as abdomen, arm) and organ or organ system (such as lung, liver); and (c) external factors and other characteristics (such as medication). For each dimension, existing classification systems were followed as closely as possible, with adaptations where required by the specific nature of adverse outcomes and the framework used to analyse them. For example, the first dimension, pathology, was inspired by the International Classification of Diseases, 10th revision, whose general framework was adapted to the specific demands of adverse outcomes (as opposed to naturally occurring disease).

5. Provision of software including the classification described under (4), a facility allowing surgeons to export data to the national database themselves, and a helpdesk.

6. Connection with existing hospital databases to facilitate reporting. The linkage had to be created by the hospital's Information Computer Technology (ICT) department using standard procedures: standard linkages were available free of charge for the main types of hospital information system (covering about 75% of hospitals), so the ICT department only had to adjust them for local variations in the implementation of the hospital information system.

7. Flexibility in adapting (the software) to current practices, mostly with help from the coordinator.

In addition, to facilitate implementation, the coordinator actively approached departments to ask about problems encountered, followed by onsite visits if needed.
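The three‐dimensional classification is what makes reports from different hospitals comparable: every adverse outcome is coded on the same fields, so counts can be aggregated per dimension. The sketch below illustrates this as a simple data structure. It is a hypothetical illustration, not the actual software supplied to the departments; the class name, field names and free‐text values are assumptions, whereas the real system uses its own coded classification.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class AdverseOutcome:
    """One reported adverse outcome, coded on the three dimensions.

    Hypothetical structure: field names and values are illustrative only.
    """
    pathology: str                         # (a) e.g. "bleeding", "infection"
    region: str                            # (b) body region, e.g. "abdomen"
    organ: Optional[str] = None            # (b) organ or organ system, e.g. "liver"
    external_factor: Optional[str] = None  # (c) e.g. "medication"


def count_by(outcomes: Iterable[AdverseOutcome], dimension: str) -> Counter:
    """Count outcomes per value of one coded field, e.g. "pathology"."""
    return Counter(getattr(outcome, dimension) for outcome in outcomes)


# Hypothetical reports from one department, coded with the shared scheme
reports = [
    AdverseOutcome("bleeding", "abdomen", organ="liver"),
    AdverseOutcome("infection", "abdomen"),
    AdverseOutcome("infection", "thorax", organ="lung"),
    AdverseOutcome("bleeding", "arm", external_factor="medication"),
]

for dimension in ("pathology", "region", "external_factor"):
    print(dimension, dict(count_by(reports, dimension)))
```

Because every department codes on the same fields, the same `count_by` call gives comparable tallies whichever hospital the reports come from.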

Key measures of improvement

The Reach, Efficacy, Adoption, Implementation and Maintenance (RE‐AIM) framework,11,12 a well‐known framework for evaluating interventions, was used to evaluate both local implementation in hospitals and export of data to the national database. For each dimension of this framework we defined measures of success of the intervention (table 1). These measures correspond to questions in two questionnaires that were sent to the departments in 2002 and 2003. The following information was obtained for each surgical department:

Table 1 Application of RE‐AIM framework to study intervention.

| Measure for improvement |
|---|
| Reach: % of target population that participated in this intervention |
| % of surgical departments that received software |
| % of surgical departments reporting adverse outcomes in 2002 |
| Efficacy: success rate if implemented as in protocol |
| % of surgical departments sending in information to national database over period 2000–2 |
| % of surgical departments intending to send information to national database over the year 2003 |
| Adoption: % of settings that will adopt this intervention |
| % of surgical departments willing to implement reporting system |
| % of surgical departments unwilling because of own reporting system |
| Implementation: extent to which intervention is implemented |
| % of surgical departments with successful implementation of reporting system in 2003 |
| % of surgical departments without implementation in 2002 but with successful implementation in 2003 |
| % of surgical departments experiencing problems with implementation in 2003 |
| % of surgical departments with need for support beyond 2003 |
| Maintenance: extent to which a program is sustained over time |
| % of surgical departments with successful implementation in 2002 that maintained implementation in 2003 |
| % of surgical departments with successful implementation in 2002 that lost implementation in 2003 |
| Reasons for loss of implementation |

Whether adverse outcomes were reported (yes/no) and how these were registered (on paper/using the software supplied/own system/other).

Whether the supplied software was installed (yes/no) and reason for not installing (no priority of hospital or ICT department/lack of time but intends to do so in the future/problems with linkage to hospital information system/own system/other).

Whether a linkage with the hospital information system was achieved and, if not, which problems were encountered (no priority of hospital or ICT department/lack of time but intends to do so in the future/own system/other).

Whether the reporting system was fully implemented, defined as systematic reporting of adverse outcomes using the software linked to the hospital information system.

Whether data were exported to the national database and reasons for not exporting data (lack of time of surgeons/does not want to participate/reporting system not (fully) implemented/export too complicated/technical problems/other).

Departments citing the problems “no priority of hospital or ICT department”, “lack of time but intends to do so in the future” or “problems with linkage to hospital information system” were counted as potentially willing to adopt the intervention. Departments citing “own system” or “other” were counted as unwilling to adopt it.
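The grouping rule above is a simple lookup from questionnaire answer to adoption category. The sketch below writes it out so the rule can be applied consistently across departments. The reason strings are taken from the questionnaire; the function name, the use of `None` for a department reporting no obstacle, and counting "adopted" together with "potentially willing" as willing are assumptions made for illustration, not the authors' analysis code.

```python
from collections import Counter
from typing import Optional

# Reasons for not installing or linking the software, as listed in the
# questionnaire, grouped according to the rule stated in the text.
POTENTIALLY_WILLING = {
    "no priority of hospital or ICT department",
    "lack of time but intends to do so in the future",
    "problems with linkage to hospital information system",
}
UNWILLING = {"own system", "other"}


def adoption_category(reason: Optional[str]) -> str:
    """Map one department's questionnaire answer to an adoption category.

    Assumption: None means the department reported no obstacle, i.e. the
    software was installed and linked.
    """
    if reason is None:
        return "adopted"
    if reason in POTENTIALLY_WILLING:
        return "potentially willing"
    if reason in UNWILLING:
        return "unwilling"
    raise ValueError(f"unrecognised reason: {reason!r}")


# Hypothetical answers from five departments
answers = [
    None,
    "own system",
    "lack of time but intends to do so in the future",
    "other",
    None,
]
categories = Counter(adoption_category(a) for a in answers)

# Assumption: "willing" = already adopted + potentially willing
willing = categories["adopted"] + categories["potentially willing"]
print(dict(categories), "willing:", willing)
```

Raising an error on an unrecognised answer, rather than silently assigning it to a category, forces any new free‐text reason to be reviewed before it affects the adoption figures.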

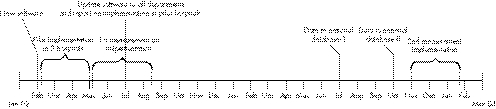

In case of non‐response or questions regarding the answers given, the written questionnaire was followed up by a telephone interview. These surveys were carried out in May–August 2002 and in November 2003–January 2004. In the second questionnaire we checked whether the information from the first questionnaire was still correct, and asked whether the departments needed additional support in implementing the system. Information was obtained from all hospitals for both questionnaires. Figure 1 graphically displays the timing of various steps in the implementation as well as the times of evaluation.

Figure 1 Implementation of surgical adverse outcome reporting system and evaluation over time in The Netherlands. February 2002: first version of new software distributed to all departments; February–May 2002: implementation of software with active support in three pilot hospitals; May–August 2002: first measurement on extent of implementation of adverse outcome reporting system and problems in all departments; July 2002: update of software incorporating lessons from pilot hospitals and report on implementation in pilot hospitals sent to all surgical departments.

Effects of change

Local implementation in hospitals

All 109 departments (47 in teaching hospitals and 62 in non‐teaching hospitals) were reached and received information about the intervention and the software (table 2). Most (n = 102, 93.6%) already reported adverse outcomes in 2002, but often only on paper (n = 40, 36.7%). Most surgical departments (n = 93, 85.3%) were willing to adopt the intervention. The main reason for unwillingness was that the department had its own adverse outcome reporting system (n = 11, 10.1%; table 2). Other reasons included that the reporting system was too complicated (n = 2, 1.8%).

Table 2 Evaluation of implementation of national reporting system of adverse outcomes in surgical patients using the RE‐AIM framework.

| Measure for improvement | No (%) |
|---|---|
| Reach: % of target population that participated in this intervention | |
| % of surgical departments that received software | 109 (100%) |
| % of surgical departments reporting adverse outcomes in 2002 | 102 (93.6%) |
| Efficacy: success rate if implemented as in protocol | |
| % of surgical departments sending in information to national database over period 2000–2 | 6 (5.5%) |
| % of surgical departments intending to send information to national database over the year 2003 | 22 (20.2%) |
| Adoption: % of settings that will adopt this intervention | |
| % of surgical departments willing to implement reporting system | 93 (85.3%) |
| % of surgical departments unwilling because of own reporting system | 11 (10.1%) |
| Implementation: extent to which intervention is implemented | |
| % of surgical departments with successful implementation of reporting system in 2003 | 37 (33.9%) |
| % of surgical departments without implementation in 2002 but with successful implementation in 2003 | 21 (19.3%) |
| % of surgical departments experiencing problems with implementation in 2003 | 50 (45.9%) |
| % of surgical departments with need for support beyond 2003 | 78 (71.6%) |
| Maintenance: extent to which a program is sustained over time | |
| % of surgical departments that maintained implementation in period 2002–3 | 16 (14.7%) |
| % of surgical departments that lost implementation in 2003 | 4 (3.7%) |
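All percentages in table 2 use the full set of 109 departments as the denominator, and several rows are linked: the departments implemented in 2002 either kept or lost the system, and the 2003 users are those who kept it plus newly implementing departments. The short check below recomputes the percentages and verifies these links. It is an illustrative sketch built only from the counts in the table and the text (20 departments in 2002, 37 in 2003); the dictionary keys are paraphrased row labels, not the authors' analysis code.

```python
N_DEPARTMENTS = 109  # all surgical departments in The Netherlands

# Department counts from table 2 (row labels paraphrased)
counts = {
    "received software": 109,
    "reported adverse outcomes in 2002": 102,
    "sent data to national database, 2000-2": 6,
    "intend to send data for 2003": 22,
    "willing to implement": 93,
    "unwilling because of own system": 11,
    "successful implementation in 2003": 37,
    "implemented in 2003 but not in 2002": 21,
    "problems with implementation in 2003": 50,
    "need support beyond 2003": 78,
    "maintained implementation 2002-3": 16,
    "lost implementation in 2003": 4,
}


def pct(n: int, total: int = N_DEPARTMENTS) -> str:
    """Share of all departments, rounded to one decimal place."""
    return f"{100 * n / total:.1f}%"


# The 20 departments implemented in 2002 either kept or lost the system
assert counts["maintained implementation 2002-3"] + counts["lost implementation in 2003"] == 20
# 2003 users = departments that kept the system + newly implementing ones
assert (counts["maintained implementation 2002-3"]
        + counts["implemented in 2003 but not in 2002"]
        == counts["successful implementation in 2003"])

for measure, n in counts.items():
    print(f"{measure}: {n} ({pct(n)})")
```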

In 2003, 37 of the 109 departments (33.9%) successfully implemented the national reporting system (table 2) compared with 20 departments (18.3%) in 2002. The increase was higher in teaching hospitals (from 23.4% to 44.7%) than in non‐teaching hospitals (from 14.5% to 25.8%; χ2 = 6.07, p = 0.014). In the study period of 1.5 years, 21 departments successfully implemented the reporting system in addition to those already using it in 2002. However, four departments lost their implementation.
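The subgroup percentages can be converted back into department counts, which confirms that they are consistent with the overall totals of 20 departments in 2002 and 37 in 2003. The sketch below does this using only the group sizes and percentages given in the text. It is an arithmetic check, not a reproduction of the χ2 test, whose exact specification is not stated; the function name is hypothetical.

```python
# Group sizes and published implementation percentages (2002, 2003)
groups = {
    "teaching": (47, 23.4, 44.7),
    "non-teaching": (62, 14.5, 25.8),
}


def count_from_pct(total: int, pct: float) -> int:
    """Recover the whole number of departments behind a rounded percentage."""
    n = round(total * pct / 100)
    # The recovered count must reproduce the published figure
    assert round(100 * n / total, 1) == pct, (total, pct, n)
    return n


implemented = {
    name: (count_from_pct(size, p2002), count_from_pct(size, p2003))
    for name, (size, p2002, p2003) in groups.items()
}
total_2002 = sum(n2002 for n2002, _ in implemented.values())
total_2003 = sum(n2003 for _, n2003 in implemented.values())
print(implemented, total_2002, total_2003)
```

Teaching hospitals thus went from 11 to 21 implementing departments and non‐teaching hospitals from 9 to 16, which sum to the overall figures.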

Of the 20 departments that had implemented the reporting system in 2002, 16 maintained it over the period of the study (table 2). The four departments that lost their implementation did so mostly because changes in the hospital information system meant that the linkage with the reporting system had to be re‐established. This is outside our control. All four intended to re‐implement the system in the near future, so their loss of implementation is expected to be temporary.

Problems with local implementation

Almost half of the surgical departments (n = 50, 45.9%) still had problems implementing the reporting system (table 2). The main reason, given by 42 of these departments (84%), was problems with the ICT department: either technical problems in establishing the linkage with the hospital information system or lack of time, priority or finances in the ICT department. The remaining eight departments said that their own lack of time was the main reason for not yet implementing the reporting system, but that they intended to do so.

Many departments wanted support beyond 2003 (n = 78, 71.6%; table 2), both departments that had already implemented the reporting system (25/37 departments, 67.6%) and departments that had not yet implemented it (53/72 departments, 73.6%), but for different reasons. Departments that had implemented the system mainly wanted support in integrating it into daily practice and in using it for quality improvement, whereas departments that had not mainly wanted help in explaining to the ICT department what was needed and in establishing the linkage with the hospital information system.

Export of data to national database

Figure 1 shows that data were exported at the end of the year rather than regularly throughout the year. Because the first measurement revealed problems with implementation, we departed from our original plan of regular data export, focused instead on supporting implementation, and postponed data export.

Only six departments (5.5%) had sent information to the national database during the period 2000–2 (table 2). The reasons given for not sending information to the national database were:

reporting system not implemented so no available data on this period (78 departments, 71.6%);

concerns regarding quality of data (10 departments, 9.2%);

export not possible from own reporting system (7 departments, 6.4%);

technical problems or export too complicated (4 departments, 3.7%);

lack of time (2 departments, 1.8%);

concerns about lack of clarity from the government regarding what will happen with the data, for example whether they will become public (2 departments, 1.8%).
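The reasons listed above account for exactly the 103 departments that did not export data, which suggests that each non‐exporting department gave one main reason. The sketch below checks that the reasons and the six exporting departments together cover all 109 departments, and expresses each reason as a share of all departments. The counts come from the list above; the one‐reason‐per‐department reading is an assumption.

```python
TOTAL_DEPARTMENTS = 109
EXPORTED = 6  # departments that sent data for 2000-2

# Reasons for not exporting, with department counts, as listed in the text
reasons = {
    "reporting system not implemented": 78,
    "concerns regarding quality of data": 10,
    "export not possible from own system": 7,
    "technical problems or export too complicated": 4,
    "lack of time": 2,
    "unclear whether data will become public": 2,
}

non_exporters = sum(reasons.values())
# Assumption: every non-exporting department gave exactly one main reason,
# so reasons plus exporters must account for all departments.
assert non_exporters + EXPORTED == TOTAL_DEPARTMENTS

for reason, n in reasons.items():
    share = 100 * n / TOTAL_DEPARTMENTS
    print(f"{reason}: {n} ({share:.1f}% of all departments)")
```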

Information in the national database from the six departments concerned 38 099 patient admissions, 3007 (7.9%) of which had one or more adverse outcomes. For operated patients, adverse outcomes occurred in 10.6% of admissions and death in 1.3%. These values lie in the lower part of the ranges of previous estimates in surgical patients in The Netherlands: 5–27% for adverse outcomes13,14,15,16 and 0.8–2.7% for deaths.13,15,16 Part of the explanation may be incomplete reporting, which is to be expected at the start of data collection with a routine reporting system.
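The figures above can be recomputed directly, and the operated‐patient rates can be placed within the previously published ranges. The sketch below does both. The "relative position within the range" is an illustrative measure introduced here (0 for the lowest published estimate, 1 for the highest), not one used by the authors; note also that the 7.9% refers to all admissions, whereas the 10.6% and 1.3% refer to operated patients only.

```python
# All admissions in the national database (six departments)
admissions = 38_099
admissions_with_adverse_outcome = 3_007
overall_rate = 100 * admissions_with_adverse_outcome / admissions

# Operated patients: observed rate vs. range of earlier Dutch estimates (%)
comparisons = {
    "adverse outcomes": {"observed": 10.6, "low": 5.0, "high": 27.0},
    "deaths": {"observed": 1.3, "low": 0.8, "high": 2.7},
}

# Illustrative measure: 0 = lowest published estimate, 1 = highest
positions = {
    name: (c["observed"] - c["low"]) / (c["high"] - c["low"])
    for name, c in comparisons.items()
}

print(f"overall adverse outcome rate: {overall_rate:.1f}% of admissions")
for name, position in positions.items():
    print(f"{name}: {position:.2f} of the way up the published range")
```

Both operated‐patient rates fall roughly a quarter of the way up their respective ranges.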

Lessons learned and next steps

Given the actual goal of this study, it was disappointing that only 5% of departments had exported data to the national database. Export was hindered mainly by slow implementation of the reporting system (so that departments did not yet have data to export) and by concerns about data quality and the public availability of data from individual hospitals. Implementation increased from 18% to 34% in 1.5 years. Problems with local implementation arose mostly because the ICT department did not link the software with the hospital information system (lack of time, finances or priority), which is outside the control of the surgical department. Some of the problems encountered, however, such as lack of time or concerns about data quality, are within the control of the surgical departments and can be addressed.

Information was not sent to the national database largely because many departments had no data for this period, as the reporting system had not yet been implemented. Even so, of the 21 departments that did have data, only six (28.6%) exported them. Another reason may be that this was the first time data export had been requested and departments still had to get used to the procedures (“it is probably complicated to do”). Departments may also have been uncertain how valid and reliable their data would be, and what kind of information would be retrieved from them.

A more fundamental issue underlying the delay in sending information is that there is no incentive to implement a reporting system of adverse outcomes other than surgeons' desire to improve the quality of care. There are, however, possible negative consequences, such as public release of the data without any guidance, so that they can easily be misinterpreted. For example, hospitals and surgeons treating many high risk patients are likely to have a higher percentage of adverse outcomes that is unrelated to the quality of care.

A similar intervention developed in US Veterans Affairs (VA) hospitals was shown to be more successful.17 Prompted by a 1986 congressional mandate, that study started in 1991 in 44 medical centres and from 1994 onwards was extended to all 132 VA medical centres performing surgery. Important differences from the present study include the annual level of funding (4 million dollars compared with 93 000 dollars for the present study) and the manpower dedicated to the study (88 permanent full time equivalent positions compared with one coordinator and doctors entering adverse outcome data in their own time). In addition, surgeons and residents in the present study had to be convinced to take part in a national, voluntary reporting system and to change their current practice of registering events, whereas the VA study took place in the controlled environment of VA hospitals following a congressional mandate.

Data from the Hospital Quality Alliance show the effect of a statutory intervention on the proportion of hospitals submitting data: 2626 hospitals (62.5% of the 4203 hospitals in the Hospital Quality Alliance database18) sent in data in the third quarter of 2003, before a statutory intervention in December 2003 required the Centers for Medicare and Medicaid Services to withhold 0.4% of the annual payment update from hospitals not reporting data. Following this financial incentive, the number of reporting hospitals increased to 4179 (99.4%) (personal communication, David Hunt). Although the Hospital Quality Alliance reports process measures rather than the outcome measures discussed in the present study, we expect the conclusions regarding the importance of incentives to be similar.

A strength of our approach is that, once the system is implemented, reporting is likely to be done by motivated surgeons, so that any findings regarding prevention of future adverse outcomes can be translated directly into daily practice.19 A weakness, however, may be that surgeons also have to implement the system themselves with very limited practical and financial support. The main reason given by surgical departments for not having implemented the reporting system was the difficulty of mobilising the ICT department to establish the necessary linkage with the hospital information system. There is little reason to assume that the linkage itself was complex, since:

free standard linkages were made available by the Order of Medical Specialists for approximately 75% of hospital information systems and only needed to be adjusted to local variations of that particular hospital information system;

other hospitals with the same information system proved that it could be done;

our experience shows that, once the ICT department was willing to collaborate, linkage was always established.

Key messages

Comparable data on surgical adverse outcomes in hospital inpatients are not available in The Netherlands.

A uniform reporting system is needed to find determinants for hospital‐based differences in adverse outcome probabilities which can be used to develop quality improvements and to adequately inform patients before surgery.

This study evaluated the implementation of a nationwide uniform reporting system in The Netherlands, comprising both local implementation in hospitals and export of data to the national database.

The major factor hindering local implementation was that the Information Computer Technology (ICT) department did not link data with the hospital information system (lack of time, finances, low priority).

Only 5% of the departments exported data to the national database. Export of data was hindered by slow implementation of the reporting system (so that departments did not have data to export) and by concerns regarding data quality and public availability of data from individual hospitals.

Hospitals need to be given incentives to facilitate implementation, either in financial terms or in manpower, as well as clarity with respect to public availability of data.

The project coordinator acted as an important intermediary between the ICT and surgical departments to overcome problems, but could not force ICT departments to devote time and priority to establishing the linkage, particularly if they cited other priorities set by hospital boards. Conversely, ICT problems reported by a department might in fact have masked unwillingness on the part of its surgeons. Whenever this reason was given, however, we asked for a contact person in the ICT department and checked whether they confirmed it, so that we could rule out this explanation. Nevertheless, if onsite visits are needed to facilitate contact with the ICT department and only one coordinator is available to conduct these visits and to provide other support, implementing the reporting system in over 100 surgical departments will take more than 1.5 years. Furthermore, problems in collaborating with ICT departments also affected maintenance of the reporting system, for example when changes in the hospital information system disrupted the linkage with the adverse outcome software.

To facilitate both local implementation of the reporting system in hospitals and export to the national database, hospitals need to be given incentives, either financial or in manpower, as well as clarity regarding the public availability of data. For example, only hospitals that have a reliable and validated adverse outcome reporting system according to existing standards, and that export data to the national database, should receive such (financial) compensation to support the continued reporting of adverse outcomes. It is encouraging that the adverse outcome rate in operated patients from hospitals that did export data to the national database (10.6%) was comparable to the rate found in the last 2 years of the study in US VA hospitals.

In the light of the results of the present study, the Dutch Health Care Inspectorate has taken the view that data from individual hospitals in the national database will not be made public; only aggregated data, in categories that are meaningful to patients, will be released. This clarity is likely to stimulate the export of adverse outcome data to the national database, which should in turn make the data more reliable, an important prerequisite for their use in patient education and in research on differences between hospitals.

The benefits of implementing this reporting system at the local level need to be emphasised, given the high cost of any adverse outcome compared with the cost of implementing the system. A hospital needs to know which types of adverse outcomes occur to be able to link them with surgical and surgically related procedures. This is a necessary prerequisite for starting initiatives to develop and implement interventions that prevent these adverse outcomes in the future.

Footnotes

The data were collected as part of a project funded by the Health Research and Development Council (grant number 16450001) and by the Association of Surgeons of The Netherlands. The development of the nationwide system including the software and related technical support is funded by the Order of Medical Specialists.

Conflict of interest: none.

References

1. Marang‐van de Mheen P J, Kievit J. Automated registration of adverse events in surgical patients in the Netherlands: the current status (in Dutch). Ned Tijdschr Geneeskd 2003;147:1273–1277.

2. Brennan T A, Leape L L, Laird N M, et al. Incidence of adverse events and negligence in hospitalized patients: results of the Harvard Medical Practice Study I. N Engl J Med 1991;324:370–376.

3. Gawande A A, Thomas E J, Zinner M J, et al. The incidence and nature of surgical adverse events in Colorado and Utah in 1992. Surgery 1999;126:66–75.

4. Kable A K, Gibberd R W, Spigelman A D. Adverse events in surgical patients in Australia. Int J Qual Health Care 2002;14:269–276.

5. Davis P, Lay‐Yee R, Briant R, et al. Adverse events in New Zealand public hospitals: principal findings from a national survey. Occasional Paper No 3. New Zealand: Ministry of Health, 2001.

6. www.orde.nl/Kwaliteit/Complicatieregistratie (in Dutch)

7. Prismant. Hospital statistics by specialty. Available at www.prismant.nl

8. Institute for Healthcare Improvement. www.ihi.org/IHI/Topics/Improvement/ImprovementMethods/HowToImprove

9. Fleuren M A H, Wiefferink C H, Paulussen T G W M. Obstructing and facilitating factors in the implementation of health care improvement in organisations (in Dutch). TNO PG Report Nr PG/VGZ 2002.203. Leiden, 2002.

10. Kievit J, Jeekel J, Sanders F B M. Adverse outcome registration and quality improvement (in Dutch). Med Contact 1999;54:1363–1365.

11. Glasgow R E, Vogt T M, Boles S M. Evaluating the public health impact of health promotion interventions: the RE‐AIM framework. Am J Public Health 1999;89:1322–1327.

12. Glasgow R E, McKay H G, Piette J D, et al. The RE‐AIM framework for evaluating interventions: what can it tell us about approaches to chronic illness management? Patient Educ Couns 2001;44:119–127.

13. Roukema J A, van der Werken C, Leenen L P H. Reporting postoperative adverse outcomes to improve results of surgery (in Dutch). Ned Tijdschr Geneeskd 1996;140:781–784.

14. Veen E J, Janssen‐Heijnen M L G, Leenen L P H, et al. The registration of complications in surgery: a learning curve. World J Surg 2005;29:402–409.

15. Veen M R, Lardenoye H P, Kastelein G W, et al. Recording and classification of complications in a surgical practice. Eur J Surg 1999;165:421–424.

16. Veltkamp S C. Complications in surgery. PhD Thesis, Utrecht, 2001.

17. Khuri S F, Daley J, Henderson W, et al. The Department of Veterans Affairs' NSQIP: the first national, validated, outcome‐based, risk‐adjusted, and peer‐controlled program for the measurement and enhancement of the quality of surgical care. Ann Surg 1998;228:491–507.

18. Jha A K, Li Z, Orav E J, et al. Care in US hospitals: the Hospital Quality Alliance Program. N Engl J Med 2005;353:265–274.

19. Stadlander M, Marang‐van de Mheen P J, Kievit J. Quality improvement by adverse outcome registration: feasible and necessary (in Dutch). Kwaliteit in Beeld 2003;5:16–17.