Abstract

Reconstruction algorithms for circular cone-beam (CB) scans have been extensively studied in the literature. Since insufficient data are measured, an exact reconstruction is impossible for such a geometry. If the reconstruction algorithm assumes zeros for the missing data, such as the standard FDK algorithm, a major type of resulting CB artifacts is the intensity drop along the axial direction. Many algorithms have been proposed to improve image quality when faced with this problem of data missing; however, development of an effective and computationally efficient algorithm remains a major challenge. In this work, we propose a novel method for estimating the unmeasured data and reducing the intensity drop artifacts. Each CB projection is analyzed in the Radon space via Grangeat's first derivative. Assuming the CB projection is taken from a parallel beam geometry, we extract those data that reside in the unmeasured region of the Radon space. These data are then used as in a parallel beam geometry to calculate a correction term, which is added together with Hu's correction term to the FDK result to form a final reconstruction. More approximations are then made on the calculation of the additional term, and the final formula is implemented very efficiently. The algorithm performance is evaluated using computer simulations on analytical phantoms. The reconstruction comparison with results using other existing algorithms shows that the proposed algorithm achieves a superior performance on the reduction of axial intensity drop artifacts with a high computation efficiency.

1. INTRODUCTION

Circular cone-beam (CB) scans are commonly used in X-ray CT. However, given Tuy's data sufficiency condition [1], the Radon space data of the scanned object cannot be completely measured in such an imaging geometry, and an exact reconstruction is possible only in the plane of the source trajectory (midplane). Many approximate reconstruction algorithms have been proposed in the literature. The FDK algorithm, developed by Feldkamp et al. [2], is by far the most popular mainly due to its structure of one-dimensional (1D) shift-invariant filtering and backprojection. Although originally derived as a heuristic extension of the exact fan-beam reconstruction, the FDK algorithm has been shown to be equivalent to an exact 3D reconstruction if the unmeasured Radon space data are assumed zeros [3, 4], except that a small correction term is also needed [5, 6]. Therefore, the FDK algorithm is exact for an object with uniform distribution in the longitudinal direction [2], whose Radon space data (the first derivative of the Radon transform) are exactly zeros in the unmeasured region of a circular trajectory. However, in general, zero is not a good approximation of the missing Radon space data, for the case when the scanned object is nonuniform and compactly supported, and has nonnegative attenuation coefficients. Consequently, the reconstructed images have CB artifacts, such as the well-known intensity drop in the axial direction [2, 5, 7–10]. The reconstruction can be improved by using an auxiliary trajectory in addition to the circular trajectory to measure the missing data [11–13]. In this work, we focus on using circular trajectories only, and develop an estimation-based method to reduce the intensity drop artifacts.

Reduction of artifacts in circular cone-beam CT (CBCT) can be achieved by estimating the unmeasured data using interpolation or extrapolation. Using Grangeat's formula [3], CB projection data can be analyzed as the first derivative of the Radon transform of the scanned object, and data estimation using interpolation can be performed in the Radon space to suppress CB artifacts [8]. However, this method requires three-dimensional (3D) data gridding, and hence it is computationally intensive; and in addition the backprojection structure is lost. Estimation methods of the Radon space data are also proposed in the space of reconstructed images, using multiple scans with different source-to-axis distances [7, 14, 15]. Zeng et al. developed improved algorithms to reduce the intensity drop using iterations [16]. These algorithms require either multiple reconstructions using different imaging geometric parameters or an iterative reconstruction that involves several computationally intense forward and backprojection steps. Other researchers have developed many improved algorithms in the framework of filtered-backprojection (FBP), without using Grangeat's framework [9, 10, 17, 18]. Since FBP has a compact structure, it is not easy to implement data interpolation/extrapolation in the Radon domain to further reduce the intensity drop artifacts.

Hu discovered that in a circular CB trajectory, the original object can be written as the summation of three terms [5]:

| (1) |

where f is the original object, is the FDK reconstruction, is Hu's correction term which represents the information contained in a circular CB scan but not utilized in the FDK reconstruction, and represents the information that is missing in the circular trajectory and cannot be reconstructed exactly. Hu proposed an algorithm that includes the first two measured terms, which shows reduced intensity drop in the reconstruction as compared to the FDK reconstruction [5]. Based on Grangeat's formula, Yang et al. proposed an algorithm that estimates the missing term and effectively reduces the intensity drop artifacts [19]. However, the formula of the estimated term takes the form of shift-variant filtering and backprojection, two steps that both require intense computation.

The work presented in this paper is also based on Grangeat's formula and Hu's theory. However, the derivation of estimation formula for is different from that of Yang, and the resulting implementation of the final formula is very efficient. We first analyze the CB projection data in the Radon domain via Grangeat's formula. Then, the unmeasured Radon space data are estimated from the CB projection by assuming that the projection is acquired in a parallel beam geometry. This approximation is equivalent to a data interpolation in the Radon domain. The estimated data are reconstructed as in the parallel beam geometry. More approximations are made to avoid the expensive steps of shift-variant filtering and backprojection in the calculation. The result is then added to the standard FDK reconstruction together with Hu's correction term to form the final reconstruction. Although the derivation assumes projections on a full scan, it can be readily extended to short-scan reconstructions using heuristic weighting schemes, such as Parker's weighting [20]. The performance of the proposed algorithm is verified using computer simulations on the 3D Shepp-Logan phantom, the FORBILD head phantom and the Defrise disc phantom. To fully evaluate the algorithm, the reconstructions are also compared with those using other existing algorithms.

The rest of this paper is organized as follows. Section 2 reviews the Radon transform and Grangeat's formula. The main algorithm is then derived and a reconstruction scheme is also proposed. Section 3 presents the results of computer simulations. Finally, Section 4 summarizes the paper.

2. METHOD

2.1. The system geometry

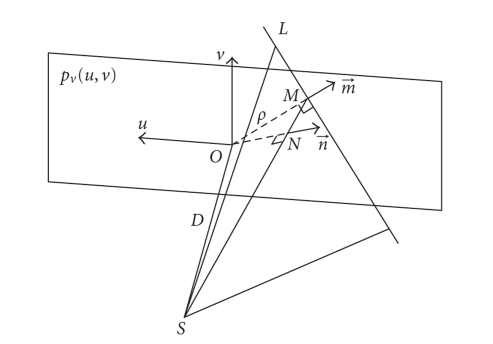

The system geometry is shown in Figure 1. In this paper, we use an equally spaced flat panel detector with a finite size. Algorithms when other types of detectors are used can be derived similarly. During data acquisition, the X-ray source S rotates about the z axis in the x-y plane, with a fixed distance D to the center of rotation O. Angle γ m is the full fan angle determined by the size of the detector and the focal spot to detector distance. We derive the algorithm assuming that the range of the view angle β is 360 degrees in a full-scan mode. The detector is placed perpendicular to for each projection. The object to be reconstructed is described by a compactly supported nonnegative function , where is the Cartesian coordinate. In the derivation, it is also assumed that there is no truncation of the projection data; this condition, however, will be relaxed based on the final formula.

Figure 1.

CB data acquisition geometry and coordinate system.

Denoting the distance from O to detector as D od, the relationship between p(u, v, β), the real projection image, and p v(u, v, β), the image on a virtual detector that is parallel to the real detector and passes through O, is as follows:

| (2) |

For simplicity, we use p v in the reconstruction hereafter, and the parameter β is dropped in the algorithm derivation if it is not used.

Main variables used in this paper are listed in Table 1 for clarity.

Table 1.

Variable glossary.

| D | Distance from the X-ray source to the rotation center |

| D od | Distance from the rotation center to the detector |

| f | Scanned object |

| Estimation of | |

| Reconstruction using the FDK algorithm | |

| Correction term in the Hu-FDK algorithm | |

| Missing information in the circular CB trajectory | |

| g 0 | Ramp filtering kernel |

| g h | Hilbert transform kernel |

| p | CB projection image |

| p F | Ramp-filtered image of p m |

| p h | Intermediate function defined in (17) |

| p m | Projection image reconstructed from partial data of S′ p p |

| p p | Parallel-beam projection image |

| p v | Projection image on the virtual detector (see Figure 1) |

| R f | Radon transform of an object f |

| S p | Weighted sinogram of a projection image p |

| w | Map function defined in (7) |

| α | Angular parameter of S p |

| β | CB projection angle |

| γ m | Full fan angle |

| ρ | Displacement parameter of S p |

2.2. The Radon inversion and Grangeat's formula

Reconstruction of the original object from its projections can be solved using the 3D Radon inverse formula [3, 21]. However, it is not efficient to directly use the Radon inversion on the X-ray projection data. To reduce the computational complexity, Grangeat established a fundamental relationship between the X-ray projection image and the first derivative of the Radon transform of the scanned object.

Figure 2 shows the geometric parameters of Grangeat's formula. One line L on the projection image p v can be specified by two parameters, its distance to the origin , and the vector in the image plane and perpendicular to L. The line L and the focal spot S determine a plane P, which can also be specified by two parameters, its normal vector and its displacement to the origin, . Define an intermediate function S p as a weighted sinogram of the 2D projection image on the virtual detector p v:

| (3) |

where is a vector in the plane of the projection image, and is the cosine weighting for an oblique incident angle.

Figure 2.

Illustration of the geometry of Grangeat's formula.

Denote R f as the Radon transform of the scanned object f, which is specified by a unit vector and a scalar ρ 0:

| (4) |

where P 0 is a 2D plane, with a normal vector and a displacement ρ 0 from the origin.

The relationship between the first derivative of S p and the first derivative of R f can be found:

| (5) |

where both of the first derivatives are with regard to the first parameter.

Based on Grangeat's formula (5), Figure 3 shows the data supports in the domain of R′ f for a circular CB trajectory. For simplicity, hereafter we refer to the domain of the first derivative of the Radon transform as the Radon domain, and the data in this domain as the Radon space data. For one CB projection, the surface of a sphere is measured in the Radon domain; as the projection rotates, the sphere rotates as well, and after a full scan, a torus of data are measured. It can be seen that not all of the Radon space is measured, and the missing data problem is clear. The diameter of the sphere of one projection is D, the distance from the focal spot to the center of rotation. As this distance increases, the region of missing data gets smaller. In a parallel beam geometry (with an infinite D), this sphere surface becomes a plane, and the missing data problem disappears.

Figure 3.

Data supports in the Radon domain.

This formalism provides a clearer understanding of the three terms in (1), which is consistent with Hu's original arguments [5]. The FDK reconstruction represents all the information inside the torus of measured data and partial information on the surface of the torus; Hu's correction term compensates for the unused data on the surface of the torus; the term represents the missing information outside the torus, and it is our goal to estimate these data.

2.3. The algorithm derivation

The missing data represented by the term result in CB artifacts in the reconstructed images. One solution to this problem is estimating this term using interpolation in the Radon domain. Inspired by the fact that a parallel beam geometry is free of the missing data problem, the missing data are estimated from measured CB projections, assuming that they are acquired in a parallel geometry. Then reconstruction is carried out using only these estimated data as though acquired in a parallel beam geometry, and the result is added to the standard FDK term and Hu's term as a correction to form a final reconstruction. Using this method, partial data on the spherical surface of one CB projection as shown in Figure 3 are filled in the region of missing data, and a data interpolation/extrapolation is carried out implicitly.

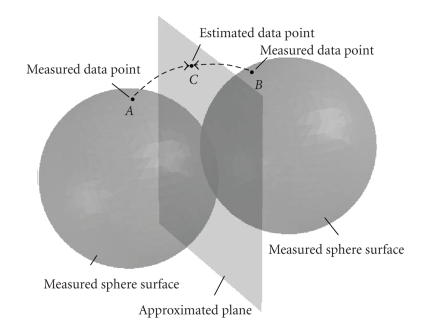

The concept of implicit data interpolation/extrapolation is illustrated in the Radon space in Figure 4. As similar to Figure 3, the sphere surfaces in the figure are measured using CB projections. If we assume the CB projections are acquired in a parallel beam geometry and reconstruct the object using a parallel beam geometry as well, the measured sphere surfaces become planes, that is, data points on the measured sphere surfaces are relocated onto the planes. Note that measured sphere surfaces associated with CB projections from the opposite projection angles are approximated as the same plane in the Radon space. As shown in Figure 4, data point C on the plane maps to two points A and B associated with two different CB projections. After the CB to parallel approximation, both data points A and B are “moved” to data point C; equivalently, the approximated data point C is a weighted summation of data points A and B. Therefore, this process can be considered as a linear interpolation of data point C from measured data points A and B.

Figure 4.

Illustration of the concept of implicit data interpolation/extrapolation. Data point C on the approximated plane is a weighted summation of measured data points A and B.

Using the idea of implicit interpolation/extrapolation, we can estimate Radon space data on a plane from two CB projections. As the projection angle changes, all the Radon space data can be estimated. However, we only need to estimate the data in the missing region of the Radon space in a CB geometry, and use them in the correction of the artifacts. Figure 5 is a 2D plot of the vertical plane in the Radon domain in Figure 3. If a parallel beam geometry is used in the data acquisition, the data of this plane can be measured completely using one projection from the direction of the normal of the plane. In a CB geometry, however, the measured data in the plane consists of two discs (shown as shaded regions), and the data outside are missing. If the CB projection from the direction of the normal of the plane is assumed to be a parallel projection, the measured data can be calculated in the whole plane; then only the data outside the shaded region are used in the reconstruction of the correction term . The data of R′ f in the missing region can be separated from the measured data by multiplying by w(s, t), a map function that is zero in the shaded region of Figure 5, and one outside. Denote p p as the projection image in a parallel beam geometry from the direction of the normal of the plane, and S p p as its sinogram. The relationship between R′ f and S′ p p can be found using Grangeat's formula, by letting D go to infinity in (5):

| (6) |

From Figure 2, it is also seen that the unit vector becomes identical to in a parallel geometry. Let or . For simplicity, the functions and are rewritten as S′ p p(ρ, α) and R′ f(ρ, α) in the polar coordinate system. Based on Figure 5, the map function w in the (ρ, α) coordinate system can be found as

| (7) |

Figure 5.

A vertical plane in the Radon domain.

Now the multiplication of w(ρ, α) on R′ f(ρ, α) can be done directly on S′ p p, according to (6):

| (8) |

We then approximate the parallel projection data S′ p p using the CB projection data S′ p. Mathematically, the following approximation is made:

| (9) |

Note that D goes to infinity in a parallel beam geometry, and the weight (ρ 2 + D 2)/D 2 equals one on the right-hand side. Equations (8) and (9) show that the missing Radon space data are approximated using the measured CB projection data.

We first derive the correction term that compensates for the missing data in terms of S′ p p, and then apply the approximation shown in (9). To calculate the correction term, reconstruction must be carried out using only partial projection data in the domain of S′ p p. This can be done by using a 2D reconstruction of the projection data, followed by a 3D parallel reconstruction. Note that these intermediate steps are used only in the derivation, and the derived final formula is implemented efficiently without these steps.

Since S p p is the sinogram of the projection image, we can compute the parallel projection image p p using FBP in a 2D parallel geometry:

| (10) |

| (11) |

where g 0(u) is the ramp-filter kernel and g h(u) = 1/π u is the Hilbert kernel. In (11), we use a Hilbert transform after a first derivative operation to substitute for the ramp-filtering, so that the calculation can be directly applied on S′ p p.

Denote p m as the projection image reconstructed from partial data of S′ p p. Using (11), we have

| (12) |

Denote as an estimate of the missing data in (1). We can compute the correction term using the 3D parallel reconstruction of p m. This computation can be done as slices of 2D parallel reconstructions. The FBP-based reconstruction is

| (13) |

where p F is the ramp-filtered parallel projection at view angle β:

| (14) |

| (15) |

Calculation of using (12), (13), and (15) can be simplified. Based on the two-step method developed by Noo et al. [22], 2D parallel reconstruction can be carried out using a derivative backprojection followed by a 1D Hilbert transform on the reconstructed image. Therefore, the Hilbert transform is taken out of the integral:

| (16) |

where sgn is the signum function.

Define the function p h as

| (17) |

Then, we have

| (18) |

Both first derivatives are with regard to the first parameter.

Insert (18) into (15), and the two Hilbert transforms become −1 since the Hilbert transform of a Hilbert transform of a function equals the negative of the function:

| (19) |

The problem is simplified to the calculation of p h′. Applying the approximation in (9), we have

| (20) |

| (21) |

The discontinuous positions of the function w correspond to the points on the surface of the torus of measured data in Figure 3, which are compensated for by Hu's correction term. Therefore, in (20), the derivatives on the discontinuities of w are not included.

Equation (21) has a structure of shift-variant filtering (due to the shift-variance of the multplication by the weighting function) and backprojection, therefore the implementation is not very efficient. Furthermore, since a weighted sinogram of each 2D projection image is needed in the calculation, it is required that no truncation is present in the projection. In particular, the method suffers from projection truncation in the longitudinal direction, so-called long object problem.

We simplify (21) using further approximations. Since the weighting of S′′ p is usually much larger than that of S′ p, that is, (ρ 2 + D 2)/D 2 versus 2ρ/D 2, the second term associated with S′ p is ignored. For a circular trajectory with a not very large cone angle, we have D ≫ ρ. The weighting function w is nonzero only when |cosα| < |ρ/D| , that is, in small neighborhoods of α at π/2 and 3π/2. In these neighborhoods, the following approximations can be made:

| (22) |

Now the calculation of p h′ can be simplified as

| (23) |

The correction term can be calculated by combining (13), (19), and (23).

Note that the approximate p h′ (or p F) is a function independent of parameter u. Backprojection of p F shown in (13) can be implemented efficiently by simply changing variable v in the projection space to z in the reconstruction space.

2.4. Practical reconstruction scheme

The final reconstruction is the summation of the FDK reconstruction , Hu's term , and the correction term :

| (24) |

The practical implementation of this formula is summarized below. The derivations of the FDK reconstruction and Hu's term can be found in [2, 5], respectively, and the formulae are presented here as a reference:

| (25) |

where the subscript β stands for the coordinate transformation of rotation about the z axis by β; and

| (26) |

is calculated by using (13), (19), and (23):

| (27) |

This estimation formula of the missing term in (1) is the main result of the paper. The equation shows a simple structure of calculation. Note that, since the second derivative operation is very sensitive to high-frequency errors and the intensity drop artifacts are mostly low-frequency signals in the longitudinal direction, filtering techniques are used to suppress the errors in the calculation. A practical implementation can be divided into the following steps.

Step 1. —

Take each projection p v(u, v, β), integrate along the direction of u.

Step 2. —

Take the second derivative with respect to variable v.

Step 3. —

Filter the 1D profile obtained from Step 2 using a median filter and a window filter.

Step 4. —

Integrate the processed 1D profile along the projection angle β.

Step 5. —

Change coordinate variable from the projection space to the reconstruction space (from v to z).

Step 6. —

Weight by .

Step 7. —

Replicate the 1D profile of z in the directions of x and y to generate a 3D volume.

As discussed earlier, Step 3 is important to suppress high-frequency misestimation and remove the streak artifacts that are otherwise present in . The median filter is able to remove high spikes caused by object boundaries, and the window filter is able to smooth out small fluctuations. In all the implementations presented in this paper, we used a median filter with a width of 10 pixels and a Hamming window filter. It is worth mentioning that since the filtering is applied only on to enforce low-frequency estimation, it will not affect the resolution of the reconstructed images obtained by the first two terms in (24).

In (27), we take the second derivative of the projection images along the vertical direction, and therefore the proposed algorithm can survive the long object problem. The calculation of (27) is also very efficient, since neither a shift-variant filtering step nor a backprojection step is used. This feature makes the proposed algorithm distinct from other existing algorithms, such as Yang's method [19]. As will be shown in the section of numerical results, in our implementations, the proposed method is typically 7-8 times more efficient than Yang's method. Note that the calculation of Hu's term (26) has the same FBP structure as the FDK reconstruction, and the cone-beam backprojection steps of these two calculations can be combined to reduce the computation cost. Since in FBP reconstructions, the backprojection step takes the majority of the computation time, the computation complexity of the proposed reconstruction (24) is close to that of the FDK reconstruction only.

3. NUMERICAL RESULTS

3.1. Simulation details

The algorithm performance was evaluated using computer simulations. Table 2 summarizes the system parameters used in the simulations. Three computer phantoms were used in this study. The first was the 3D Shepp-Logan phantom as defined in [23], which contains low-contrast objects. The second was the FORBILD head phantom (http://www.imp.uni-erlangen.de/forbild/). This phantom contains high-contrast objects, and therefore it results in more missing data in circular CBCT geometry. To further verify the algorithm, the Defrise disc phantom was also used. The Defrise phantom consists of seven ellipsoidal discs stacked in the z direction. Each disc has a uniform attenuation coefficient of 1 mm−1, and the ellipsoid has a diameter of 140 mm and a thickness of 14 mm, with a distance of 25 mm between discs. This phantom has strong high-frequency components in the z direction, and therefore has high values in its first derivative of the Radon transform in the region where the data are unmeasured in a circular CB scan. It represents the most challenging case of reconstruction using the circular CB data.

Table 2.

Simulation parameters.

| Source to detector distance (D + D od) | 700 mm |

| Source to axis distance (D) | 350 mm |

| Detector size | 512 × 512 |

| Detector element pitch | 0.781 mm |

| Full cone angle in the z direction | 32deg |

| Full fan angle in the x-y plane | 32deg |

| Projection number of a full scan | 800 |

| Reconstructed volume | 256 × 256 × 256 |

| Reconstructed voxel size | 0.781 mm |

Simulations were carried out on full-scan data. To test the stability of the algorithm, reconstructions on noisy data of the Shepp-Logan phantom were also investigated. In the simulation, we used 300 000 photons per ray, and the base material of the Shepp-Logan phantom is modeled as water at 80 keV, with a linear attenuation coefficient of 0.01837 mm−1.

To demonstrate the merit of the proposed algorithm, we compared the reconstructions using five different algorithms: the FDK algorithm [2], Hu's algorithm (only the first two terms of (24) are included) [5], the T-FDK algorithm [10], Yang's algorithm [19], and the proposed algorithm (24). All the five algorithms are in the category of analytical reconstruction algorithms for circular CBCT. As discussed earlier in the introduction section, the T-FDK algorithm was developed heuristically with a structure of shift-invariant FBP, and Yang's algorithm was based on interpolation in the Radon space, with a structure of shift-variant FBP.

3.2. Reconstruction results

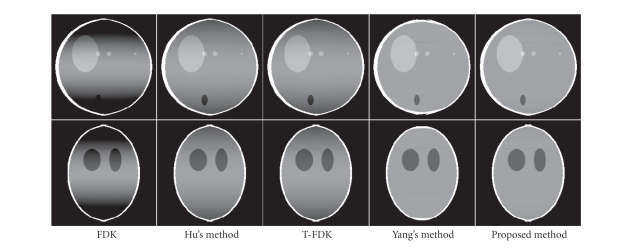

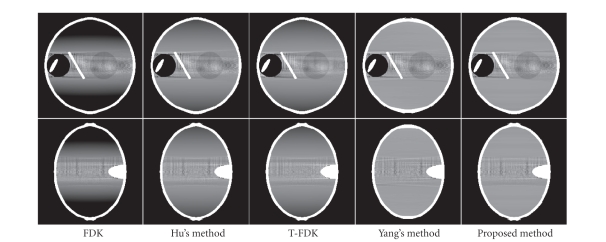

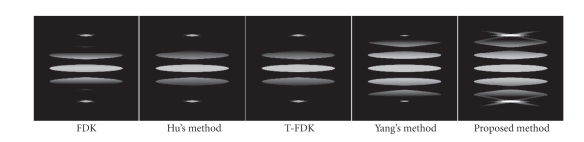

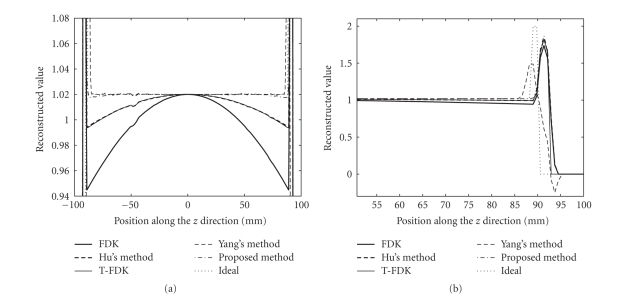

Figures 6, 9, and 10 show the reconstructed images on a full scan of the Shepp-Logan phantom, the FORBILD head phantom and the Defrise disc phantom, respectively. Comparisons of 1D vertical profiles of these images are also shown in Figures 8 and 11. The algorithm performances on the FORBILD head phantom are close to those on the Shepp-Logan phantom, and the 1D profile comparison of the reconstructions of the FORBILD head phantom is omitted here.

Figure 6.

Reconstructions of the Shepp-Logan phantom. Top row: x-z views; bottom row: y-z views. Display window: [0.98 1.05].

Figure 9.

Reconstructions of the FORBILD head phantom. Top row: x-z views; bottom row: y-z views. Display window: [1.02 1.08].

Figure 10.

Reconstructions of the Defrise phantom, x-z views. Display window: [0.7 1.1].

Figure 8.

1D central vertical profile comparison of Figure 6. (a) The reduction of the axial intensity drop. (b) The reconstructed object edge using different algorithms.

Figure 11.

1D central vertical profile comparison of Figure 10.

From the comparison, it is seen that the CB artifacts of axial intensity drop are apparent in the reconstructions using the FDK algorithm or Hu's algorithm, and these artifacts are effectively suppressed using the proposed method.

The performance of the T-FDK algorithm on the reduction of intensity drop artifacts is also inferior to that of the proposed algorithm, as shown in the comparison of reconstructions. It is worth mentioning that the T-FDK algorithm is slightly more efficient than the FDK algorithm [10], although it causes resolution loss [18]. Recall that the proposed algorithm requires computation close to that of the FDK algorithm. Therefore, the T-FDK algorithm is more efficient than the proposed algorithm. Note that the reconstruction results using Hu's algorithm and the T-FDK algorithm are very similar. This similarity holds under certain conditions, and readers can refer to [24] for detailed discussion.

Compared to Yang's algorithm, our proposed algorithm has an advantage of high computation efficiency. As discussed in Section 2.4, the correction term in our algorithm only involves integration and derivative operations, and it is computed very efficiently. Yang's correction term has a structure of shift-variant filtering and backprojection; both steps require intense computation. In our implementations, Yang's algorithm is typically 7-8 times slower than our proposed algorithm.

Both the proposed algorithm and Yang's algorithm achieve similar reduction of the intensity drop away from the object edge. Nonetheless, estimation of high-frequency data in Yang's algorithm causes relatively large errors. The resulting artifacts are around the object edges in the axial direction, typically streaks with negative magnitudes. This problem can be seen in Figure 8(b), and is more obvious in Figure 11, where data estimation is more challenging due to the high-frequency missing data along the axial direction. Our algorithm does not estimate the high-frequency missing data, and the negative streaks do not appear around the object edges.

Reconstructions on noisy projection data are shown in Figure 12 to demonstrate the stability of the proposed algorithm in the presence of noise. The algorithm performance is similar to that in previous comparisons. Based on the noise-free reconstructions as shown in Figure 6, the noise variances in the images are also measured. The noise in the image using the proposed algorithm remains in the same level as that in the image using the FDK algorithm or Hu's algorithm.

Figure 12.

Reconstructions of the Shepp-Logan phantom, using noisy projection data, x-z views. Display window: [0.98 1.05]. Based on the noise-free reconstructions as shown in Figure 6, from left to right, the noise variances in the images are measured as 5.872 × 10−5, 5.872 × 10−5, and 5.874 × 10−5.

4. CONCLUSIONS AND DISCUSSION

In this work, we propose an efficient estimation method to reduce the intensity drop in the CB reconstruction on circular scans. The algorithms are derived using data analysis in the Radon domain via Grangeat's formula. Assuming the CB projections are measured in a parallel beam geometry, we estimate the unmeasured data from the CB projections. These data are then reconstructed to form a correction term to improve the FDK reconstruction with Hu's term included. Equivalently, an implicit data interpolation/extrapolation is carried out in the Radon domain. It is interesting to note that Hu's term takes the first derivative of the projection data along the axial direction, while our correction term takes the second derivative. Although our algorithm is derived for circular CBCT on a full scan, it can be easily extended to short-scan reconstructions using weighting schemes, such as Parker's weighting.

The algorithm performances are evaluated using computer simulations on the 3D Shepp-Logan phantom, the FORBILD head phantom, and the Defrise disc phantom. The results show that the proposed method greatly suppresses the axial intensity drop in the FDK reconstructions and its performance improves on Hu's algorithm. Residual artifacts are mainly due to the high-frequency Radon space data missing in a circular CB geometry, which cannot be estimated accurately using interpolation or extrapolation in general. As demonstrated in the results of the Defrise disc phantom, relatively large reconstruction errors are expected around high intensity objects, such as bones in a clinical case, in the longitudinal direction.

Our algorithm also outperforms the T-FDK algorithm on the reduction of intensity drop artifacts. As compared to other existing algorithms, such as Yang's algorithm, that are based on interpolation in the Radon space, our algorithm has an advantage of high efficiency. The calculation of the correction term requires only simple integration, 1D derivative and multiplication operations, and the total computation of the proposed algorithm is close to that of the FDK algorithm. In our implementations, the proposed algorithm is 7-8 times faster than Yang's algorithm.

Figure 7.

Images of the second term (Hu's term) and the third term (the proposed correction term) of (24), using the projection data on the Shepp-Logan phantom. Top row: x-z views; bottom row: y-z views. Display window: [0 0.08].

ACKNOWLEDGMENTS

This project is supported in part by NIH R01 EB003524 and the Lucas Foundation.

References

- 1.Tuy HK. An inversion formula for cone-beam reconstruction. SIAM Journal on Applied Mathematics. 1983;43(3):546–552. [Google Scholar]

- 2.Feldkamp IA, Davis LC, Kress JW. Practical cone-beam algorithm. Journal of the Optical Society of America A. 1984;1(6):612–619. [Google Scholar]

- 3.Grangeat P. Mathematical Methods in Tomography. Vol. 1497. Berlin, Germany: Springer; 1991. Mathematical framework of cone-beam 3D reconstruction via the first derivativeof the Radon transform; pp. 66–97. Lecture Notes in Mathematics. [Google Scholar]

- 4.Defrise M, Clack R. Cone-beam reconstruction algorithm using shift-variant filtering and cone-beam backprojection. IEEE Transactions on Medical Imaging. 1994;13(1):186–195. doi: 10.1109/42.276157. [DOI] [PubMed] [Google Scholar]

- 5.Hu H. An improved cone-beam reconstruction algorithm for the circular orbit. Scanning. 1996;18:572–581. [Google Scholar]

- 6.Zhuang T-L, Nett BE, Tang X, Chen G-H. A cone-beam FBP reconstruction algorithm for short-scan and super-short-scan source trajectories. In: Developments in X-Ray Tomography IV, vol. 5535; August 2004; Denver, Colo, USA. pp. 566–576. Proceedings of SPIE. [Google Scholar]

- 7.Noo F, Heuscher DJ. Image reconstruction from cone-beam data on a circular short-scan. In: Medical Imaging 2002: Image Processing, vol. 4684; February 2002; San Diego, Calif, USA. pp. 50–59. Proceedings of SPIE. [Google Scholar]

- 8.Lee SW, Wang G. A Grangeat-type half-scan algorithm for cone-beam CT. Medical Physics. 2003;30(4):689–700. doi: 10.1118/1.1562941. [DOI] [PubMed] [Google Scholar]

- 9.Turbell H. Cone-beam reconstruction using filtered backprojection, Ph.D. thesis. Linkoping, Sweden: Department of Electrical Engineering, Linkopings Universitet; February 2001. [Google Scholar]

- 10.Grass M, Köhler Th, Proksa R. 3D cone-beam CT reconstruction for circular trajectories. Physics in Medicine and Biology. 2000;45(2):329–347. doi: 10.1088/0031-9155/45/2/306. [DOI] [PubMed] [Google Scholar]

- 11.Wang X, Ning R. A cone-beam reconstruction algorithm for circle-plus-arcdata-acquisition geometry. IEEE Transactions on Medical Imaging. 1999;18(9):815–824. doi: 10.1109/42.802759. [DOI] [PubMed] [Google Scholar]

- 12.Zeng GL, Gullberg GT. A cone-beam tomography algorithm for orthogonal circle-and-line orbit. Physics in Medicine and Biology. 1992;37(3):563–577. doi: 10.1088/0031-9155/37/3/005. [DOI] [PubMed] [Google Scholar]

- 13.Tang X, Ning R. A cone beam filtered backprojection (CB-FBP) reconstruction algorithm for a circle-plus-two-arc orbit. Medical Physics. 2001;28(6):1042–1055. doi: 10.1118/1.1376444. [DOI] [PubMed] [Google Scholar]

- 14.Kudo H, Saito T. Derivation and implementation of a cone-beam reconstruction algorithm for nonplanar orbits. IEEE Transactions on Medical Imaging. 1994;13(1):196–211. doi: 10.1109/42.276158. [DOI] [PubMed] [Google Scholar]

- 15.Zeng K, Chen Z, Zhang L, Wang G. A half-scan error reduction based algorithm for cone-beam CT. Journal of X-Ray Science and Technology. 2004;12(2):73–82. [Google Scholar]

- 16.Zeng K, Chen Z, Zhang L, Wang G. An error-reduction-based algorithm for cone-beam computed tomography. Medical Physics. 2004;31(12):3206–3212. doi: 10.1118/1.1809792. [DOI] [PubMed] [Google Scholar]

- 17.Tang X, Hsieh J, Hagiwara A, Nilsen R, Shaughnessy C, Drapkin E. Axial reconstruction algorithms for cone-beam volumetric CT. In: Proceedings of the Radiological Society of North America (RSNA '05); November-December 2005; Chicago, Ill, USA. [Google Scholar]

- 18.Yu L, Pan X, Pelizzari CA. Image reconstruction with a shift-variant filtration in circular cone-beam CT. International Journal of Imaging Systems and Technology. 2005;14(5):213–221. [Google Scholar]

- 19.Yang H, Li M, Koizumi K, Kudo H. FBP-type cone-beam reconstruction algorithm with Radon space interpolationcapabilities for axially truncated data from a circular orbit. Medical Imaging Techonology. 2006;24(3):201–208. [Google Scholar]

- 20.Parker DL. Optimal short scan convolution reconstruction for fanbeam CT. Medical Physics. 1982;9(2):254–257. doi: 10.1118/1.595078. [DOI] [PubMed] [Google Scholar]

- 21.Natterer F. The Mathematics of Computerized Tomography. New York, NY, USA: Wiley-Teubner; 1986. [Google Scholar]

- 22.Noo F, Clackdoyle R, Pack JD. A two-step Hilbert transform method for 2D image reconstruction. Physics in Medicine and Biology. 2004;49(17):3903–3923. doi: 10.1088/0031-9155/49/17/006. [DOI] [PubMed] [Google Scholar]

- 23.Kak AC, Slaney M. Principles of Computerized Tomography Imaging. Piscataway, NJ, USA: IEEE Press; 1987. [Google Scholar]

- 24.Zhu L, Starman J, Fahrig R. The relationship between the T-FDK algorithm and the Hu-FDK algorithm. In: Proceedings of the 9th International Meeting on Fully Three-Dimensional Image Reconstruction in Radiology and Nuclear Medicine; July 2007; Lindau, Germany. pp. 366–369. [Google Scholar]