Abstract

Emotionally expressive faces have been shown to modulate activation in visual cortex, including face-selective regions in the ventral temporal lobe. Here, we tested whether emotionally expressive bodies similarly modulate activation in body-selective regions. We show that dynamic displays of bodies with various emotional expressions, relative to neutral bodies, produce increased activation in two distinct body-selective visual areas, the extrastriate body area and the fusiform body area. Multi-voxel pattern analysis showed that the strength of this emotional modulation was related, on a voxel-by-voxel basis, to the degree of body selectivity, while there was no relation with the degree of selectivity for faces. Across subjects, amygdala responses to emotional bodies positively correlated with the modulation of body-selective areas. Together, these results suggest that emotional cues from body movements produce topographically selective influences on category-specific populations of neurons in visual cortex, and that these increases may implicate discrete modulatory projections from the amygdala.

Keywords: emotion, bodies, body selectivity, fusiform gyrus, EBA, FBA

INTRODUCTION

The ability to perceive and accurately interpret other people's emotions is central to social interaction. We gain information about others’ emotional states from multimodal cues, including postures and movements of the face and body.

One of the consistent findings from research on emotional face perception is an enhanced activation of face-selective visual areas such as the fusiform face area (FFA; Kanwisher et al., 1997) in response to faces expressing emotions, relative to emotionally neutral faces (Vuilleumier et al., 2001; Pessoa et al., 2002; Winston et al., 2003). This enhanced activation in FFA in response to emotional faces has been shown to correlate with activity in amygdala (Morris et al., 1998), and has been attributed to feedback modulatory influences from the amygdala, serving to prioritize visual processing of emotionally salient events (Vuilleumier et al., 2004; Vuilleumier, 2005).

Research on emotional body perception has similarly reported enhanced activation in visual cortex for emotional and sexually arousing bodies and body parts (Hadjikhani and de Gelder, 2003; de Gelder et al., 2004; Grosbras and Paus, 2006; Ponseti et al., 2006; Grezes et al., 2007). Such modulation by emotional bodies was consistently reported in the fusiform gyrus (de Gelder et al., 2004; Hadjikhani and de Gelder, 2003; Grosbras and Paus, 2006), and in lateral occipitotemporal cortex (Grosbras and Paus, 2006; Ponseti et al., 2006; Grezes et al., 2007). Interestingly, these general regions have also been shown to respond selectively to emotionally neutral images of bodies and body parts, compared to visually matched control images (Downing et al., 2001; Peelen and Downing, 2005b; Schwarzlose et al., 2005; Peelen et al., 2006). This raises the possibility that emotion signals from the body might modulate precisely those populations of neurons that code for the viewed stimulus category (Sugase et al., 1999), instead of providing a global boost to all visual processing in extrastriate visual cortex, or to all neurons within a given cortical region.

In the present study, we directly address this possibility by measuring activation to emotional and neutral bodies in functionally localized body-selective regions, one located in lateral occipitotemporal cortex: the extrastriate body area or EBA (Downing et al., 2001; Urgesi et al., 2004; Downing et al., 2007), and one located in the fusiform gyrus: the fusiform body area or FBA (Peelen and Downing, 2005b; Peelen et al., 2006; Schwarzlose et al., 2005). Body-selective FBA partly overlaps with face-selective FFA in fMRI studies using standard resolution (3 mm × 3 mm × 3 mm), but these regions can be dissociated when using high spatial resolution (Schwarzlose et al., 2005) or multi-voxel pattern analysis (Peelen et al., 2006). Here we used multi-voxel pattern analysis (Norman et al., 2006; Peelen et al., 2006; Downing et al., 2007; Peelen and Downing, 2007) to test whether voxel-by-voxel variations in emotional modulation within these regions correlate with voxel-by-voxel differences in body selectivity and/or face selectivity.

MATERIALS AND METHODS

Subjects

Eighteen healthy adult volunteers participated in the study (10 women, mean age 26 years, range 20–32). All were right-handed, with normal or corrected-to-normal vision and no history of neurological or psychiatric disease. Participants all gave informed consent according to ethics regulations.

Stimuli

We presented short movie clips of bodies expressing five basic emotions (anger, disgust, fear, happiness, sadness) and emotionally neutral gestures. For each condition (Anger, Disgust, Fear, Happiness, Neutral, Sadness), six full-light body movies performed by four actors (two males, two females) were taken from the set created and validated by Atkinson et al. (2004, 2007). All movies were presented on a black background. In brief (for full details see Atkinson et al., 2004, 2007), the actors wore uniform dark-grey, tight-fitting clothes and headwear so that all parts of their anatomy were covered. Facial features and expressions were not visible. Actors were instructed to express the emotions spontaneously. For the Neutral movies actors were instructed to perform non-emotional actions, such as walking on the spot (3/6), mimicking digging movements (2/6), and jumping gently (1/6).

To ensure that emotional movies did not contain more movement than the neutral movies, we calculated two separate measures of the amount of motion in each movie. For the first measure, we calculated the total frame-to-frame change in pixel intensity for each movie. As changes in pixel intensity between successive frames in our movies were mostly due to body movements, this number provides an indirect estimate of the total amount of movement in each video. Intensity changes (averaged across the six movies in each condition) were highest for the neutral movies: Anger = 181; Disgust = 91; Fear = 92; Happiness = 162; Sadness = 79; Neutral = 190. For the second motion measurement, we used the EyesWeb software's ‘Quantity of Motion (QoM)’ function (Camurri et al., 2003; http://www.infomus.dist.unige.it/). This converts the body image into a ‘Silhouette Motion Image (SMI)’, which carries information about variations in the shape and position of the body silhouette across a moving window of a few frames (we used four) throughout the length of the movie. A QoM value, equivalent to the number of pixels of the SMI, is computed for each movie frame that this window moves across. QoM is an estimate of the overall amount of detected motion, involving velocity and force, and, unlike the first measure, is independent of the actor's distance from the camera (especially important in the case of fearful movements, which typically involve the actor retreating and thus reducing in absolute size). Total QoM values were calculated for each movie and then averaged within each emotion category: Anger = 11.7; Disgust = 5.6; Fear = 9.9; Happiness = 18.1; Sadness = 5.7; Neutral = 14.4. This measure was positively correlated (r = 0.68, P < 0.001) with the first measure of body movement, indicating that the two measures track similar but not identical motion properties of the stimuli.
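
The two motion measures can be illustrated with a minimal NumPy sketch. It assumes that grayscale frames and binary body silhouettes are already available as arrays; the function names are hypothetical, and the rule used to build the Silhouette Motion Image (union of the silhouettes in the last four frames minus the current silhouette) is an assumption about EyesWeb's behaviour rather than a reproduction of its code. The optional normalization by silhouette area is likewise an assumed variant, showing one way a size-independent value could be obtained.

```python
import numpy as np


def pixel_change_total(frames):
    """Total absolute frame-to-frame change in pixel intensity for one movie.

    frames: array of shape (n_frames, height, width) holding grayscale intensities.
    """
    frames = np.asarray(frames, dtype=float)
    # Absolute difference between successive frames, summed over pixels and frame pairs
    return float(np.abs(np.diff(frames, axis=0)).sum())


def quantity_of_motion(silhouettes, window=4, normalize=False):
    """Per-frame quantity of motion (QoM) from binary body silhouettes.

    silhouettes: boolean array (n_frames, height, width), True where the body is.
    Assumed SMI rule (hypothetical re-implementation): union of the silhouettes in
    the last `window` frames, minus the silhouette of the current frame.
    """
    sil = np.asarray(silhouettes, dtype=bool)
    qom = []
    for t in range(window - 1, len(sil)):
        union = sil[t - window + 1 : t + 1].any(axis=0)
        smi = union & ~sil[t]          # pixels the body occupied recently but not now
        value = float(smi.sum())       # QoM as the pixel count of the SMI
        if normalize:
            # Optional scaling by the current silhouette area (assumed variant)
            value /= max(int(sil[t].sum()), 1)
        qom.append(value)
    return np.array(qom)
```

Summing the per-frame values returned by `quantity_of_motion` gives a per-movie total, which can then be averaged within each emotion category as in the text.
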
A one-way analysis of variance (ANOVA) across the six conditions revealed a significant effect of Emotion (F5,30 = 10.111, P < 0.001), but pairwise comparisons (Bonferroni corrected, α = 0.05) confirmed that none of the emotion conditions involved a larger quantity of motion than the neutral condition (significant differences: Neutral > Disgust and Sadness; Happiness > Sadness, Disgust, and Fear). Thus, neither of our measures revealed a systematic excess of movement in any of the emotion conditions compared to the neutral condition, indicating that any increase in brain activity as a function of emotion cannot simply be due to the amount of movement in the emotional (vs neutral) movies. For example movies, see Supplemental Movies 1–6.
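
The ANOVA on movie-level motion values can be sketched with NumPy alone. The sketch assumes one value per movie (six movies in each of six conditions, which gives the 5 and 30 degrees of freedom reported above) and takes the Bonferroni family to be all 15 pairwise comparisons among the six conditions; both are assumptions about the exact procedure, and the function names are hypothetical.

```python
from itertools import combinations

import numpy as np


def one_way_anova(groups):
    """F statistic and (df_between, df_within) for a one-way between-groups ANOVA."""
    groups = [np.asarray(g, dtype=float) for g in groups]
    k = len(groups)
    n_total = sum(g.size for g in groups)
    grand_mean = np.concatenate(groups).mean()
    # Between-condition and within-condition sums of squares
    ss_between = sum(g.size * (g.mean() - grand_mean) ** 2 for g in groups)
    ss_within = sum(((g - g.mean()) ** 2).sum() for g in groups)
    df_between, df_within = k - 1, n_total - k
    F = (ss_between / df_between) / (ss_within / df_within)
    return float(F), (df_between, df_within)


def bonferroni_alpha(alpha, n_conditions):
    """Per-comparison alpha when every pair of conditions is compared."""
    n_pairs = len(list(combinations(range(n_conditions), 2)))
    return alpha / n_pairs
```

With six conditions, `bonferroni_alpha(0.05, 6)` returns 0.05 / 15, i.e. roughly 0.0033 per pairwise test.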

Design and procedure

Main experiment

Participants performed six runs of the main experiment. Each run started and ended with a 10 s fixation period. Within each run, 36 trials of 8.5 s were presented in three blocks of 12 trials. These blocks were separated by 5 s fixation periods. The three blocks differed in the type of stimuli presented (emotional body movies, emotional face movies, or emotional sounds). The order of the blocks was counterbalanced across runs. In this article, we present only the results from the body blocks. Within these blocks, two different movies (of different actors) of each of the six emotion conditions (Anger, Disgust, Fear, Happiness, Neutral, Sadness) were presented (the same emotions were presented in the face and sound blocks). Trials were presented in random order. Each run lasted ∼336 s.

Each trial consisted of a 2 s fixation cross, followed by a 3 s movie clip, a 1 s blank screen and a 2.5 s response window. Subjects were asked to rate the emotion expressed in the movie on a 3-point scale. For example, for Anger, the response was cued by the following text display: ‘Angry? 1—a little, 2—quite, 3—very much’, where ‘1’, ‘2’, ‘3’ referred to three response buttons (from left to right) on a computer mouse held in the right hand. For half the subjects this response mapping was reversed (from right to left). For a comparable task in the Neutral condition, we asked how ‘lively’ the movie was. Due to technical problems, responses could not be recorded in one subject.
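
The timing parameters above are mutually consistent, as a few lines of arithmetic confirm. The sketch also derives the 4 s event duration used for the whole-brain GLM (movie plus blank screen). Variable names are illustrative.

```python
# Durations (in seconds) implied by the trial and run structure described above.
TRIAL_EVENTS = {"fixation": 2.0, "movie": 3.0, "blank": 1.0, "response": 2.5}

trial_duration = sum(TRIAL_EVENTS.values())  # 8.5 s per trial

N_BLOCKS, TRIALS_PER_BLOCK = 3, 12
run_duration = (
    10.0                                            # initial fixation
    + N_BLOCKS * TRIALS_PER_BLOCK * trial_duration  # 36 trials
    + (N_BLOCKS - 1) * 5.0                          # fixation between blocks
    + 10.0                                          # final fixation
)

# Event modeled in the GLM: movie onset to response-window onset
glm_event_duration = TRIAL_EVENTS["movie"] + TRIAL_EVENTS["blank"]

print(trial_duration, run_duration, glm_event_duration)  # 8.5 336.0 4.0
```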

Localizer

Participants were also scanned on two runs of an experiment previously shown to reliably localize body- and face-selective areas in visual cortex (Peelen and Downing, 2005a, b). Each run consisted of twenty-one 15 s blocks. Of these 21 blocks, five were fixation-only baseline conditions, occurring on blocks 1, 6, 11, 16 and 21. During the other 16 blocks, subjects were presented with pictures of faces, headless bodies, tools or scenes. Forty full-color exemplars of each category were used. Each image was presented for 300 ms, followed by a blank screen for 450 ms. Twice during each stimulus block, the same image was presented two times in succession. Participants were instructed to detect these immediate repetitions and report them with a button press (1-back task). The position of the image was jittered slightly on alternate presentations, in order to disrupt attempts to perform the 1-back task based on low-level visual transients. Each participant was tested with two different versions, counterbalancing for the order of stimulus category. In both versions, assignment of category to block was counterbalanced, so that the mean serial position in the scan of each condition was equated.
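
The text does not specify the category orders, only that the mean serial position of each condition was equated. One way to achieve this, shown here as an assumed scheme rather than the procedure actually used, is to order the four categories within each group of four stimulus blocks according to the rows of a balanced Latin square, so that every category occupies every within-group position exactly once. Function names are hypothetical.

```python
CATEGORIES = ("faces", "bodies", "tools", "scenes")


def balanced_latin_square(n):
    """Rows of a balanced Latin square for an even number of conditions n."""
    first, lo, hi = [0], 1, n - 1
    while len(first) < n:
        # Alternate 1, n-1, 2, n-2, ... to build the first row
        if len(first) % 2 == 1:
            first.append(lo)
            lo += 1
        else:
            first.append(hi)
            hi -= 1
    # Each later row shifts the first row by one condition
    return [[(c + i) % n for c in first] for i in range(n)]


def localizer_run(categories=CATEGORIES):
    """Block sequence for one run: fixation at blocks 1, 6, 11, 16 and 21."""
    blocks = ["fixation"]
    for row in balanced_latin_square(len(categories)):
        blocks.extend(categories[i] for i in row)
        blocks.append("fixation")
    return blocks
```

Because each category occupies within-group positions 1 to 4 once across the four groups, all categories end up with the same mean block index (10, counting from 0) in the 21-block, 315 s run.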

Data acquisition

Scanning was performed on a 3T Siemens Trio Tim MRI scanner at Geneva University Hospital, Center for Bio-Medical Imaging. For functional imaging, a single-shot T2*-weighted gradient-echo EPI sequence was used. Scanning parameters were: TR = 2490 ms, TE = 30 ms, 36 off-axial slices, in-plane voxel dimensions 1.8 mm × 1.8 mm, 3.6 mm slice thickness (no gap). Anatomical images were acquired using a T1-weighted sequence (TR/TE: 2200 ms/3.45 ms; slice thickness = 1 mm; in-plane resolution: 1 mm × 1 mm).

Preprocessing

Preprocessing and statistical analysis of MRI data were performed using BrainVoyager QX (Brain Innovation, Maastricht, The Netherlands). Functional data were motion corrected and slice-time corrected, and low-frequency drifts were removed with a temporal high-pass filter (cut-off 0.006 Hz). For region-of-interest analyses and multi-voxel pattern analyses, no spatial smoothing was applied. For whole-brain group-average analyses, the data were spatially smoothed with a Gaussian kernel (8 mm FWHM). Functional data were manually co-registered with 3D anatomical T1 scans (1 mm × 1 mm × 1 mm resolution). The 3D anatomical scans were transformed into Talairach space, and the parameters from this transformation were subsequently applied to the co-registered functional data.
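
BrainVoyager's own drift removal is not reproduced here. As a swapped-in illustration, the sketch removes slow drifts by regressing out discrete cosine components with frequencies at or below the 0.006 Hz cut-off (periods of roughly 167 s and longer), and converts the 8 mm FWHM smoothing kernel into a Gaussian standard deviation. Function names are hypothetical.

```python
import numpy as np


def dct_highpass(ts, tr, cutoff_hz=0.006):
    """Remove slow drifts by regressing out low-frequency DCT components.

    A DCT-based filter is assumed for illustration; the mean (k = 0 component)
    is left untouched because the removed cosines all sum to zero.
    """
    ts = np.asarray(ts, dtype=float)
    n = ts.shape[0]
    frame = np.arange(n)
    # DCT-II component k has frequency k / (2 * n * tr) Hz; keep those <= cutoff
    k_max = int(np.floor(cutoff_hz * 2 * n * tr))
    if k_max < 1:
        return ts.copy()
    basis = np.column_stack(
        [np.cos(np.pi * k * (2 * frame + 1) / (2 * n)) for k in range(1, k_max + 1)]
    )
    beta, *_ = np.linalg.lstsq(basis, ts, rcond=None)
    return ts - basis @ beta


def fwhm_to_sigma(fwhm_mm):
    """Gaussian standard deviation corresponding to a given FWHM."""
    return fwhm_mm / (2.0 * np.sqrt(2.0 * np.log(2.0)))
```

For an 8 mm FWHM kernel, `fwhm_to_sigma(8.0)` is about 3.4 mm.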

Whole-brain analyses

Whole-brain, random-effects group-average analyses were conducted on smoothed data from the main experiment. Events were defined as the 4 s period between the onset of the movie and the onset of the response window. These events were convolved with a standard model of the HRF (Boynton et al., 1996). A general linear model was created with one predictor for each condition of interest. Regressors of no interest were also included to account for differences in the mean MR signal across scans and subjects. Regressors were fitted to the MR time-series in each voxel and the resulting beta parameter estimates were used to estimate the magnitude of response to the experimental conditions. Contrasts were thresholded at P < 0.0005 or P < 0.001, uncorrected (see Results section). Only clusters > 50 mm³ are reported for these analyses.
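
The event modelling can be sketched as a 4 s boxcar per trial convolved with a gamma-function HRF of the form described by Boynton et al. (1996), followed by an ordinary least-squares fit. The HRF parameter values (n = 3, τ = 1.25 s, delay 2.5 s), the 0.1 s sampling grid and the function names are assumptions for illustration; BrainVoyager's implementation may differ in detail, and the sketch omits the scan- and subject-level regressors of no interest.

```python
import math

import numpy as np


def boynton_hrf(t, n=3, tau=1.25, delta=2.5):
    """Gamma-function HRF in the Boynton et al. (1996) form; parameters are assumed."""
    t = np.asarray(t, dtype=float) - delta
    h = np.zeros_like(t)
    pos = t > 0
    h[pos] = (t[pos] / tau) ** (n - 1) * np.exp(-t[pos] / tau) / (tau * math.factorial(n - 1))
    return h


def event_regressor(onsets, duration, n_vols, tr, dt=0.1):
    """Boxcar of `duration` s at each onset, convolved with the HRF, sampled once per TR."""
    n_hi = int(round(n_vols * tr / dt))
    t_hi = np.arange(n_hi) * dt
    box = np.zeros(n_hi)
    for onset in onsets:
        box[(t_hi >= onset) & (t_hi < onset + duration)] = 1.0
    hrf = boynton_hrf(np.arange(0, 30, dt))
    conv = np.convolve(box, hrf)[:n_hi] * dt
    idx = np.round(np.arange(n_vols) * tr / dt).astype(int)
    return conv[idx]


def fit_glm(y, X):
    """Ordinary least-squares beta estimates; a constant column is appended last."""
    X = np.column_stack([X, np.ones(len(y))])
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    return beta
```

In this sketch, one regressor per condition would be built with `event_regressor(onsets, 4.0, n_vols, 2.49)` and stacked as the columns passed to `fit_glm`.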

ROI analyses

In each subject, we defined four regions of interest (ROIs) from the localizer experiment. Left EBA, right EBA and right FBA were defined by contrasting body responses with tool responses, whereas right FFA was defined by contrasting face and tool responses. Analyses of the FFA and FBA were restricted to right-hemisphere ROIs, because these regions were often weaker or absent in the left hemisphere, as previously observed in other studies (Kanwisher et al., 1997; Peelen and Downing, 2005b). For each subject and each contrast, the most significantly activated voxel was first identified within a restricted part of cortex corresponding to previously reported locations (Peelen and Downing, 2005a, b). Each ROI was then defined as the set of contiguous voxels that were significantly activated (P < 0.005, uncorrected) within a 12 mm cube surrounding (and including) the peak voxel.
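
The ROI definition procedure can be sketched as a peak search followed by region growing. The sketch assumes a precomputed t threshold corresponding to P < 0.005, six-neighbour (face) connectivity, and a cube half-width given per axis in voxels, since a cube of fixed size in millimetres spans different numbers of voxels along the 1.8 mm in-plane and 3.6 mm slice axes if data are kept at the acquired resolution. `define_roi` is a hypothetical helper.

```python
from collections import deque

import numpy as np

NEIGHBOURS = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]


def define_roi(t_map, search_mask, t_thresh, half_width):
    """Boolean ROI mask: contiguous supra-threshold voxels around the peak.

    t_map: 3-D t-values for a localizer contrast (e.g. bodies vs tools).
    search_mask: boolean array restricting the peak search (anatomical prior).
    t_thresh: t-value assumed to correspond to the chosen P threshold.
    half_width: cube half-width in voxels (int, or one value per axis).
    """
    t_map = np.asarray(t_map, dtype=float)
    shape = t_map.shape
    hw = (half_width,) * 3 if np.isscalar(half_width) else tuple(half_width)
    # 1. Peak voxel within the restricted search region
    masked = np.where(search_mask, t_map, -np.inf)
    peak = tuple(int(i) for i in np.unravel_index(np.argmax(masked), shape))
    # 2. Cube around (and including) the peak, clipped to the volume
    cube = np.zeros(shape, dtype=bool)
    cube[tuple(slice(max(p - h, 0), p + h + 1) for p, h in zip(peak, hw))] = True
    eligible = cube & (t_map > t_thresh)
    # 3. Grow from the peak through face-adjacent eligible voxels
    roi = np.zeros(shape, dtype=bool)
    if not eligible[peak]:
        return roi
    roi[peak] = True
    queue = deque([peak])
    while queue:
        x, y, z = queue.popleft()
        for dx, dy, dz in NEIGHBOURS:
            nb = (x + dx, y + dy, z + dz)
            inside = all(0 <= c < s for c, s in zip(nb, shape))
            if inside and eligible[nb] and not roi[nb]:
                roi[nb] = True
                queue.append(nb)
    return roi
```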

We also defined ROIs covering the amygdala, based on previously reported coordinates (left amygdala: −18, −5, −9; right amygdala: 21, −5, −9; e.g. Pessoa et al., 2002). Each amygdala ROI consisted of a 3 mm × 3 mm × 3 mm cube centered on these coordinates and was identical for all subjects. We chose a small amygdala ROI because activation was relatively weak and variable in single-subject data, but consistently encompassed this region at lower thresholds.

Within each ROI in each subject, a further general linear model was then applied, modeling the response of the voxels in the ROI (in aggregate) to the conditions of the main experiment.

Voxelwise correlation analyses

For each ROI and subject, we measured the voxelwise pattern of activation to contrasts of interest in the localizer and the main experiment. This was accomplished by extracting a t-value for a given contrast at each voxel in the ROI. Selectivity estimates were extracted from the localizer (for bodies and faces), and emotional modulation estimates were extracted from the main experiment (for the five emotions). Body and face selectivity were computed by contrasting the response to each of these two categories with the response to tools. Emotional modulation was computed by contrasting each emotion category vs Neutral. To test whether the strength of emotional modulation was correlated with the degree of body and/or face selectivity, we then correlated the pattern of emotional modulation with the pattern of body and face selectivity on a voxel-by-voxel basis (similar to Peelen et al., 2006). For example, the correlation between body selectivity and anger selectivity was calculated as follows. First, we computed a t-value for each voxel in the ROI reflecting body selectivity (by contrasting bodies vs tools). Second, we computed a t-value for each voxel in the same ROI reflecting anger selectivity (by contrasting angry vs neutral bodies). These two sets of t-values were then correlated, resulting in a voxelwise correlation estimate between body and anger selectivity.
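
The per-subject step of this analysis reduces to a Pearson correlation across ROI voxels between two t-maps. A minimal sketch, assuming the t-maps have already been computed and share a voxel grid with the ROI mask; the function name is hypothetical.

```python
import numpy as np


def voxelwise_correlation(t_selectivity, t_modulation, roi_mask):
    """Pearson r, across the voxels of one ROI, between two t-maps.

    t_selectivity: t-values for a localizer contrast (e.g. bodies vs tools).
    t_modulation: t-values for a main-experiment contrast (e.g. angry vs neutral bodies).
    roi_mask: boolean mask selecting the ROI voxels (same shape as the maps).
    """
    a = np.asarray(t_selectivity, dtype=float)[roi_mask]
    b = np.asarray(t_modulation, dtype=float)[roi_mask]
    return float(np.corrcoef(a, b)[0, 1])
```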

These correlations were computed for each subject individually and were Fisher transformed. Correlations between body/face selectivity and the effective emotions (those that significantly modulated a given ROI, relative to Neutral) were averaged to increase power, resulting in one correlation between emotional modulation and body selectivity, and one correlation between emotional modulation and face selectivity, for each ROI. These mean correlations were then tested against zero across subjects with one-sample t-tests.
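
The group-level step can be sketched as follows: Fisher r-to-z transform each subject's correlations, average over the effective emotions, and run a one-sample t-test against zero across subjects. Back-transforming the mean z to r for reporting is an assumed convention; the text does not state how the reported mean r values were obtained. The function name is hypothetical.

```python
import numpy as np


def group_test(r_by_subject_and_emotion):
    """Fisher-transform, average over effective emotions, and t-test against zero.

    r_by_subject_and_emotion: array (n_subjects, n_effective_emotions) of voxelwise r.
    Returns (mean r, t statistic, degrees of freedom).
    """
    r = np.asarray(r_by_subject_and_emotion, dtype=float)
    z = np.arctanh(r)              # Fisher r-to-z
    z_subject = z.mean(axis=1)     # one value per subject
    n = z_subject.size
    t = z_subject.mean() / (z_subject.std(ddof=1) / np.sqrt(n))
    mean_r = float(np.tanh(z_subject.mean()))  # back-transformed (assumed convention)
    return mean_r, float(t), n - 1
```

With 18 subjects this yields 17 degrees of freedom, matching the t17 values reported in the Results.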

RESULTS

Behavioral results

The average rating of the five emotions expressed by bodies was 2.3, which corresponds to judgments of expressivity between ‘quite’ and ‘very much’, and suggests that the movies successfully conveyed emotions across conditions. Ratings per condition were as follows: Anger (2.2), Disgust (2.4), Fear (2.5), Happiness (2.3), Sadness (2.1) and Neutral (1.9). A one-way ANOVA revealed a significant main effect of Emotion (F5,12 = 5.0, P < 0.05). Paired-sample t-tests showed that all emotions were rated significantly higher than Neutral (all Ps < 0.05).

Whole-brain contrasts

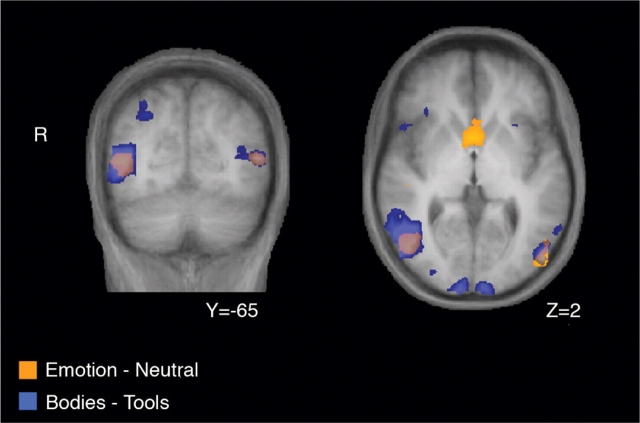

A whole-brain group-average contrast of all emotional vs neutral bodies (at P < 0.0005, uncorrected) revealed selective activation in bilateral occipitotemporal cortex, and in ventral striatum/caudate (Figure 1 and Table 1). The reverse contrast (neutral vs emotional bodies) activated bilateral parietal and right lateral temporal cortex (Table 1). Table 2 gives all activations for separate contrasts involving each emotion vs neutral. These whole-brain data are consistent with activation of visual areas in response to emotional body expressions, whose locations overlap with occipitotemporal regions previously shown to have body-selective activity (Downing et al., 2001; Peelen and Downing, 2005b).

Fig. 1.

Overlap between emotional modulation and EBA. Shown are the results from whole-brain group-average random-effects analyses contrasting emotional vs neutral bodies (orange) and bodies vs tools (blue). Emotional vs neutral bodies selectively activated EBA and ventral striatum. See Table 1 for coordinates and significance of these activations.

Table 1.

Regions activated by a whole-brain group-average random-effects analysis (P < 0.0005, uncorrected) contrasting all emotions vs neutral. Coordinates are in Talairach space.

| Region | x | y | z | t | Size (mm³) |
|---|---|---|---|---|---|
| Emotional > Neutral | | | | | |
| Left occipitotemporal | −47 | −76 | 2 | 5.23 | 130 |
| Right occipitotemporal | 45 | −67 | 6 | 4.84 | 235 |
| Ventral striatum/caudate | 1 | 8 | 5 | 4.75 | 97 |
| Neutral > Emotional | | | | | |
| Left posterior parietal | −41 | −65 | 52 | 5.18 | 137 |
| Right occipitotemporal | 61 | −42 | −11 | 5.45 | 667 |
| Right posterior parietal | 50 | −59 | 40 | 5.12 | 399 |
| Right superior parietal | 50 | −40 | 49 | 5.25 | 164 |

Table 2.

Regions activated by whole-brain group-average random-effects analyses (P < 0.001, uncorrected) contrasting each emotion vs neutral. Coordinates are in Talairach space.

| Region | x | y | z | t | Size (mm³) |
|---|---|---|---|---|---|
| Anger > Neutral | | | | | |
| Right fusiform | 43 | −36 | −21 | 4.90 | 96 |
| Right superior frontal | 42 | −8 | 51 | 4.58 | 104 |
| Disgust > Neutral | | | | | |
| Left occipitotemporal | −47 | −69 | 5 | 5.37 | 1867 |
| Left orbitofrontal | −10 | 43 | 6 | 4.36 | 59 |
| Right occipitotemporal | 46 | −65 | 6 | 6.08 | 2474 |
| Right lateral anterior temporal | 51 | 6 | −14 | 5.20 | 77 |
| Right medial temporal | 21 | 11 | −28 | 4.84 | 133 |
| Right sensori-motor | 5 | −36 | 67 | 5.16 | 154 |
| Fear > Neutral | | | | | |
| Left occipitotemporal | −40 | −70 | 14 | 4.86 | 1003 |
| Left precuneus | −10 | −46 | 46 | 4.60 | 208 |
| Right occipitotemporal | 42 | −69 | 11 | 4.74 | 809 |
| Right precuneus | −9 | −52 | 45 | 4.54 | 337 |
| Right superior frontal | 16 | −10 | 68 | 5.28 | 198 |
| Happiness > Neutral | | | | | |
| Left occipital | −25 | −89 | 5 | 4.31 | 95 |
| Left superior temporal | −51 | −36 | 12 | 5.01 | 1366 |
| Left cerebellum | −11 | −53 | −19 | 5.84 | 1496 |
| Ventral striatum/caudate | 5 | 8 | 4 | 5.71 | 2237 |
| Right brainstem | 11 | −14 | −26 | 4.23 | 72 |
| Right occipital | 32 | −85 | 4 | 4.61 | 255 |
| Right occipitotemporal | 44 | −65 | 2 | 5.34 | 1153 |
| Right fusiform | 32 | −30 | −26 | 5.27 | 152 |
| Right lateral anterior temporal | 54 | 6 | −11 | 4.83 | 261 |
| Right superior temporal | 47 | −29 | 3 | 4.81 | 535 |
| Right dorsomedial frontal | 5 | −12 | 53 | 5.52 | 839 |
| Right lateral frontal | 46 | −2 | 72 | 4.68 | 220 |
| Sadness > Neutral | | | | | |
| Left occipital | −39 | −84 | 12 | 5.79 | 2221 |
| Left ventromedial frontal | −1 | 19 | 0 | 4.15 | 50 |
| Right occipital | 24 | −95 | −12 | 5.61 | 264 |

Emotional modulation in EBA

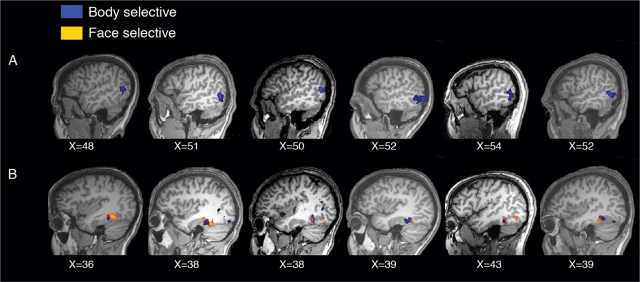

For each subject, we identified the left and right EBA as regions of interest (ROIs) by contrasting bodies vs tools in the localizer experiment (Figure 2A). Average peak Talairach coordinates were (x [s.d.], y [s.d.], z [s.d.]): left EBA (−47 [4], −71 [4], 3 [6]), right EBA (48 [5], −66 [6], 3 [6]), in agreement with previous fMRI reports (Downing et al., 2001).

Fig. 2.

(A) Right EBA in six individual subjects. (B) Right FBA and right FFA in six individual subjects.

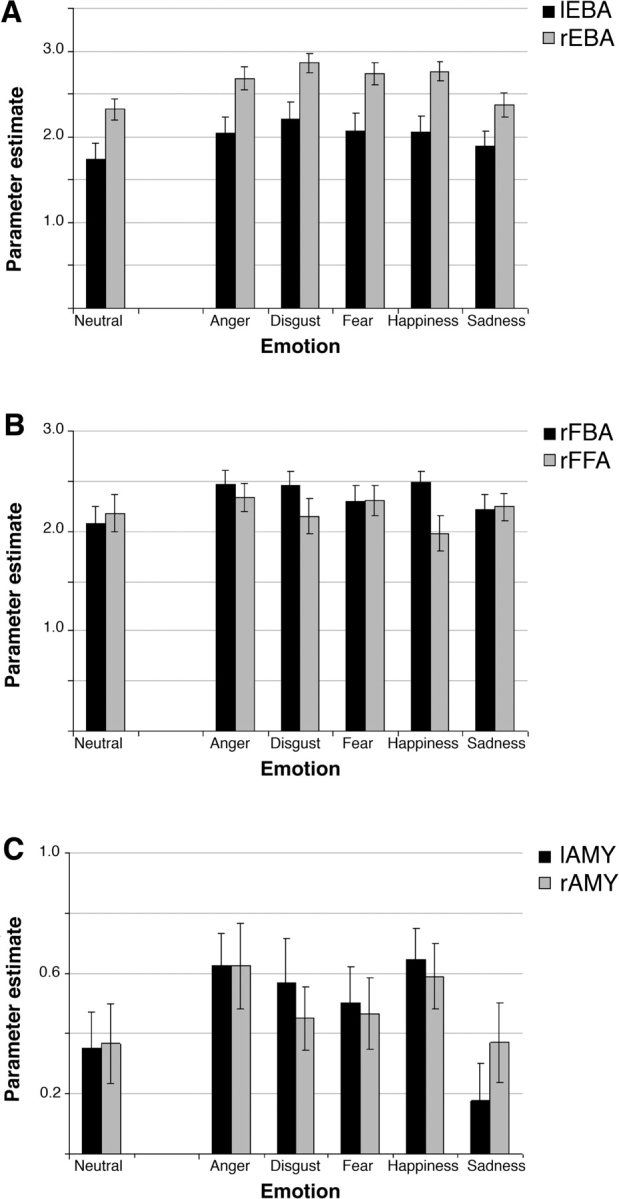

We then examined emotional increases in the functionally defined EBA of each participant across the different emotion expression conditions. For each individual and each ROI, we extracted the activation values for each of the six conditions in the main experiment (Figure 3A). For statistical analyses, the value of the neutral condition was subtracted from the value of each of the five emotion conditions, yielding relative estimates of emotional increase. A 2 (Hemisphere) × 5 (Emotion) ANOVA on these values revealed a significant main effect of Emotion (F4,14 = 8.0, P < 0.005), indicating that different emotions modulated EBA to different extents. There was no significant effect of Hemisphere on the size of emotional increase (F1,17 = 0.5, P = 0.5), and no significant interaction between Emotion and Hemisphere (F4,14 = 1.1, P = 0.4). The intercept was also significant (F1,17 = 38.0, P < 0.001), indicating that, averaged across all emotions, emotional bodies evoked stronger responses than neutral bodies, despite the differences between emotions. Given the absence of a difference between hemispheres for emotional increases, in subsequent analyses we averaged the values of left and right EBA, in order to compare each emotion separately with the response to neutral bodies. These comparisons confirmed that all emotions produced a significantly stronger response than Neutral (all Ps < 0.001), except Sadness (t17 = 1.3, P = 0.22).
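
The neutral-subtracted values and the intercept test can be sketched as below. For a design with only within-subject factors, the intercept F(1, n - 1) of the repeated-measures ANOVA equals the squared one-sample t statistic computed on each subject's mean increase across all cells. The array layout (subjects × hemispheres × conditions) and the function names are assumptions.

```python
import numpy as np


def emotional_increase(betas, neutral_index):
    """Subtract the neutral estimate from each emotion estimate.

    betas: array (n_subjects, n_hemispheres, n_conditions).
    Returns array (n_subjects, n_hemispheres, n_conditions - 1).
    """
    betas = np.asarray(betas, dtype=float)
    neutral = betas[..., neutral_index:neutral_index + 1]
    emotions = np.delete(betas, neutral_index, axis=-1)
    return emotions - neutral


def intercept_F(increase):
    """Test the grand mean of the increases against zero: F(1, n - 1) = t**2."""
    per_subject = increase.reshape(increase.shape[0], -1).mean(axis=1)
    n = per_subject.size
    t = per_subject.mean() / (per_subject.std(ddof=1) / np.sqrt(n))
    return float(t ** 2), (1, n - 1)
```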

Fig. 3.

Parameter estimates for emotional and neutral bodies in regions of interest. Error bars reflect standard error of the mean. (A) Left and right EBA, (B) right FBA and right FFA and (C) left and right amygdala.

Next, we examined the voxelwise degree of emotional responses in EBA as a function of body selectivity. As argued in the Introduction, the emotional effects observed in EBA could reflect either a global increase of activity in occipito-temporal cortex or instead reflect more specific modulation of body-selective neurons. In the latter case, we would expect a positive voxel-by-voxel correlation between body selectivity and emotional modulation. In other words, if emotional modulation is selectively related to body processing, we would expect voxels that are more strongly body selective (indicating a high percentage of body-selective neurons) also to show relatively strong emotional modulation. To test this, we computed for each subject and each ROI the voxel-by-voxel correlation between emotional effects in the main experiment (averaged across emotions that showed significant modulation: Anger, Disgust, Fear, Happiness) and body selectivity as determined from the localizer experiment. As a control, the same computations were performed for the relation between emotional effects and face selectivity.

The correlation between body selectivity and emotional modulation was significantly positive in both ROIs (lEBA: r = 0.10, t17 = 3.5, P < 0.005; rEBA: r = 0.16, t17 = 4.7, P < 0.001), indicating that voxels that were more strongly body selective were also more strongly modulated by the emotional expressions displayed by bodies. By contrast, emotional modulation of EBA voxels was not related to the degree of response to face stimuli (both Ps > 0.6). These data suggest that activation of occipitotemporal areas by emotional body expressions depends on their sensitivity to bodies, rather than on the general magnitude of their visual responses to any category.

Emotional modulation in fusiform gyrus

To examine emotional effects in the fusiform gyrus, we defined, for each subject, the right FFA and right FBA as two separate ROIs by contrasting faces vs tools (FFA) and bodies vs tools (FBA) in the localizer experiment (Figure 2B). Average peak Talairach coordinates for right FFA and right FBA were highly similar: FFA (38 [4], −46 [8], −18 [5]), FBA (39 [5], −44 [5], −16 [4]), which agrees well with previous reports and confirms a close anatomical proximity between these two cortical regions (Peelen and Downing, 2005b; Schwarzlose et al., 2005).

We then examined emotional responses in these fusiform regions for each participant, as done for the EBA above. For each individually defined ROI, we extracted the activation values for the six conditions in the main experiment (Figure 3B). The value of the neutral condition was subtracted from the value of each emotional condition, yielding relative estimates of emotional increase. A 2 (ROI) × 5 (Emotion) ANOVA on these values showed no significant interaction between Emotion and ROI (F4,14 = 2.5, P = 0.09). The main effect of Emotion was not significant (P > 0.3), providing no evidence that the five emotions modulated the ROIs to different extents. The main effect of ROI was also not significant (P > 0.3), providing no evidence for a difference in emotional modulation between FFA and FBA. The intercept (reflecting the general difference between emotional and neutral bodies) was significant (F1,17 = 7.6, P < 0.05), indicating again an overall enhancement for emotional relative to neutral bodies. Accordingly, separate pairwise contrasts for each emotion vs Neutral (averaged across ROIs) showed significant emotional increases for Anger, Disgust, and Happiness (all Ps < 0.05), although the effects of Fear and Sadness did not reach significance (both Ps > 0.1).

These findings confirm that body expressions activate fusiform cortex (Hadjikhani and de Gelder, 2003; de Gelder et al., 2004; Grosbras and Paus, 2006). However, the above results showed no significant difference between the emotional modulation of FFA and FBA. This could indicate that both face- and body-selective neurons are modulated by emotional body expressions. Alternatively, emotion could enhance processing only in body-selective neurons, with significant modulation in FFA being observed due to the strong overlap of this region with FBA (Peelen and Downing, 2005b).

To distinguish between these two possibilities, we tested whether the effect of emotional body expression in the fusiform gyrus was related, on a voxel-by-voxel basis, to body selectivity, to face selectivity, or to both (each defined relative to a third control category, here tools). Similar to the analysis in EBA described above, we computed for each subject the voxel-by-voxel correlation between the emotional modulation and the degree of response to body stimuli, and between emotional modulation and the degree of response to face stimuli. Again, we averaged the correlations between body or face selectivity and the emotions that showed significant modulation (Anger, Disgust, Happiness). To be unbiased with respect to the main effects of face and body selectivity in this region, we performed this analysis on voxels corresponding to the union of FFA and FBA. This combined region was indeed equally face selective (ratio to tools: 1.7) and body selective (ratio to tools: 1.7).
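
The union ROI and its selectivity ratios can be sketched as below. How the ratios were computed is not stated; the sketch assumes a ratio of mean parameter estimates (faces or bodies over tools) across the union voxels. Names are hypothetical.

```python
import numpy as np


def union_ratio(ffa_mask, fba_mask, beta_category, beta_tools):
    """Union of FFA and FBA masks, and mean(category) / mean(tools) within it."""
    union = np.asarray(ffa_mask, dtype=bool) | np.asarray(fba_mask, dtype=bool)
    category = np.asarray(beta_category, dtype=float)[union].mean()
    tools = np.asarray(beta_tools, dtype=float)[union].mean()
    return union, float(category / tools)
```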

Critically, this multi-voxel pattern analysis revealed that the amount of emotional increase to body expressions correlated significantly with the degree of body selectivity (r = 0.11, t17 = 3.4, P < 0.005), whereas there was no relation with the degree of response to faces (P = 0.3). This result indicates that the emotional modulation by body expressions in the fusiform gyrus is likely to involve a selective enhancement of body-related rather than face-related processing.

Emotional activation in amygdala

Finally, we examined whether emotional body expressions also modulated amygdala activity. Given the subthreshold amygdala response in the whole-brain group analysis, we defined a priori ROIs for each subject based on typical coordinates reported by previous studies (see Materials and Methods).

Figure 3C shows the response to emotional and neutral conditions in both the left and right amygdala. Again, the value for the neutral condition was subtracted from the value of each of the emotion conditions. A 2 (Hemisphere) × 5 (Emotion) ANOVA on these values revealed a significant main effect of Emotion (F4,14 = 4.9, P < 0.05), indicating that different emotions activated the amygdala to a different extent. There was no significant effect of Hemisphere (F1,17 = 0.1, P = 0.8), and no significant interaction between Emotion and Hemisphere (F4,14 = 1.5, P = 0.2). Contrasts of each emotion vs Neutral (averaged across hemispheres) showed a significant activation for Anger (P < 0.01) and Happiness (P < 0.05), but not for Disgust, Fear and Sadness (all Ps > 0.1).

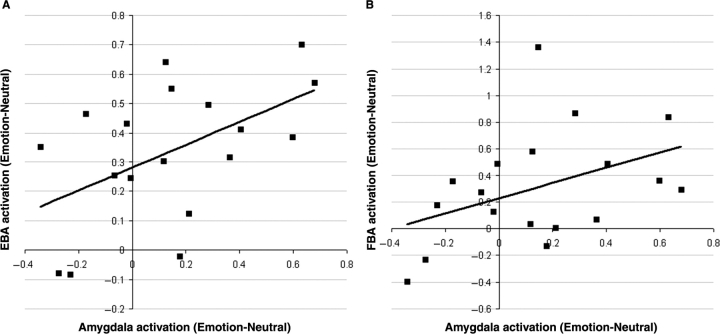

To test whether subjects with stronger emotional responses in amygdala also showed stronger modulation in visual areas, we correlated the response in the amygdala with the response in EBA, FBA and FFA. For each of these visual ROIs, we averaged the response to the five emotions (vs Neutral), and then correlated this value with the average emotional response in the amygdala (averaged across hemispheres). This analysis revealed that the magnitude of amygdala activation to emotional body expressions (relative to Neutral) correlated significantly (one-tailed) with the degree of emotional increases in both EBA (r = 0.52, P < 0.05; Figure 4A) and FBA (r = 0.42, P < 0.05; Figure 4B). By contrast, there was no significant correlation with the emotional increases in FFA (r = 0.20, P = 0.2). Thus, subjects with stronger amygdala activation to body expressions also showed a stronger modulation in body-selective, but not face-selective visual areas.
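
The across-subject test can be checked by converting each Pearson coefficient into a t statistic with n - 2 degrees of freedom and comparing it with the one-tailed critical value for 16 degrees of freedom (1.746, from standard t tables, assuming all 18 subjects entered the correlation). Function names are illustrative.

```python
import math


def pearson_r(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def r_to_t(r, n):
    """t statistic with n - 2 degrees of freedom for a Pearson correlation."""
    return r * math.sqrt(n - 2) / math.sqrt(1 - r ** 2)


# Critical value from standard t tables: df = 16 (n = 18), alpha = 0.05 one-tailed
T_CRIT_DF16_ONE_TAILED = 1.746
```

For example, the reported r = 0.52 (EBA) and r = 0.42 (FBA) give t(16) of about 2.44 and 1.85, both above 1.746, whereas r = 0.20 (FFA) gives about 0.82.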

Fig. 4.

(A) Correlation between emotional increases in amygdala and emotional increases in EBA, both averaged across emotions and hemispheres. Each point represents one subject. (B) Correlation between emotional increases in amygdala and emotional increases in FBA, both averaged across emotions and hemispheres. Each point represents one subject.

DISCUSSION

The present results show that functionally localized EBA and FBA were influenced by the emotional significance of body movements. Furthermore, by using a multi-voxel pattern analysis, we could show not only that activity in these two regions was higher for emotional than for neutral bodies, but also that such emotional increases correlated positively with the degree of body selectivity across voxels. No correlation was found between responses to body expressions and the degree of face selectivity (relative to tools) in any of these visual regions, arguing for a specific enhancement of body-selective neuronal populations as a function of the expressed body emotions. In particular, the positive voxelwise correlation in fusiform gyrus between emotional modulation by body expression and body selectivity, but not face selectivity, indicates that the observed modulation was exerted on the FBA (Downing et al., 2001; Peelen and Downing, 2005b) rather than on the overlapping FFA (Kanwisher et al., 1997). In addition, in both EBA and FBA, the magnitude of emotional modulation correlated across subjects with the concomitant response of the amygdala: subjects who showed stronger amygdala responses to emotional (vs neutral) body movies also showed stronger emotional modulation in body-selective visual areas for the same movies. By contrast, emotional increases in FFA were not significantly correlated with amygdala responses to emotional bodies.

Note that the increased response of body-selective regions to emotional body expressions cannot simply be explained by differences in the amount of low-level motion in the stimuli, as shown by two different frame-by-frame measures of movement (the amount of pixelwise change over time, and a more precise index of quantity of motion). In fact, the neutral condition contained at least as much bodily movement as some of the emotion conditions, such as anger or disgust, which nonetheless produced consistent increases in activation in EBA and FBA.
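The pixelwise motion control can be sketched as below. Frames are assumed to be equally sized grayscale images stored as nested lists; `changed_pixel_fraction` and its `threshold` are hypothetical simplifications and are not the quantity-of-motion index used in the study.

```python
import statistics


def frame_differences(frames):
    """Mean absolute pixelwise change between each pair of consecutive frames.

    frames: list of 2-D grayscale frames (lists of rows), all the same size.
    """
    diffs = []
    for prev, curr in zip(frames, frames[1:]):
        total = sum(abs(c - p)
                    for row_p, row_c in zip(prev, curr)
                    for p, c in zip(row_p, row_c))
        diffs.append(total / (len(curr) * len(curr[0])))
    return diffs


def changed_pixel_fraction(frames, threshold=10):
    """Fraction of pixels whose value changes by more than `threshold` (hypothetical)."""
    fractions = []
    for prev, curr in zip(frames, frames[1:]):
        changed = sum(abs(c - p) > threshold
                      for row_p, row_c in zip(prev, curr)
                      for p, c in zip(row_p, row_c))
        fractions.append(changed / (len(curr) * len(curr[0])))
    return fractions


def movie_motion(frames):
    """Summarize one movie by its average frame-to-frame change."""
    return statistics.fmean(frame_differences(frames))


def condition_motion(movies_by_condition):
    """Average movie motion per condition, e.g. {'Neutral': [...], 'Anger': [...]}."""
    return {cond: statistics.fmean(movie_motion(m) for m in movies)
            for cond, movies in movies_by_condition.items()}
```

Comparing `condition_motion` across Neutral and the emotion conditions gives the kind of check described above: if Neutral movies move at least as much as Anger or Disgust movies, motion alone cannot explain their larger responses.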

What is the source of this emotional modulation? Several lines of research suggest that modulatory influences of emotion may act directly on visual areas, presumably via feedback projections from the amygdala (Amaral et al., 2003; Vuilleumier, 2005). Such a mechanism would be consistent with our finding that emotional increases in body-selective visual areas correlated significantly with amygdala activation, as previously observed for emotional faces in the FFA (Morris et al., 1998). Although such a correlation does not by itself establish a causal influence of the amygdala on EBA and FBA (it could also reflect purely bottom-up effects or common input from a third brain area), previous findings on face processing have clearly demonstrated that intact amygdala function is necessary to produce not only increased fMRI responses of the FFA to emotional faces (Vuilleumier et al., 2004), but also enhanced attention to emotional stimuli in behavioral tasks (Anderson and Phelps, 2001; Akiyama et al., 2007). Specifically, a combined lesion/fMRI study (Vuilleumier et al., 2004) reported that patients with amygdala lesions show no emotional modulation of the FFA in response to fearful vs neutral faces, unlike healthy subjects (Vuilleumier et al., 2001), although amygdala lesions do not affect the FFA response to neutral faces or its modulation by selective attention (Vuilleumier et al., 2004). The present study thus extends previous work on emotional face processing by showing not only that similar effects arise for emotional bodies, but also, more generally, that the emotional value of visual stimuli may selectively boost the sensory areas involved in processing the stimulus category (Grandjean et al., 2005).

Accordingly, such modulatory effects of emotion on visual areas are likely to originate from different sources than the modulation of visual processing by selective attention, although their effects may be similar in several respects, for instance in facilitating perception or memory. While emotional orienting is presumably mediated by the amygdala (Akiyama et al., 2007; Vuilleumier, 2005), other forms of attention are known to involve a network of parietal and frontal regions (Kim et al., 1999; Nobre et al., 2004; Peelen et al., 2004; Grosbras et al., 2005; Cristescu et al., 2006). Clear dissociations between these two types of orienting have been observed. For example, patients with hemispatial neglect following parietal lesions have difficulty orienting attention to stimuli in their contralesional visual field, but still show an advantage for detecting emotional stimuli in the affected hemifield (Vuilleumier and Schwartz, 2001). The opposite pattern, intact attentional but impaired emotional effects, has also been reported after amygdala lesions (Vuilleumier et al., 2004).

Importantly, our findings extend recent imaging results showing increased visual activation to emotional body stimuli (Hadjikhani and de Gelder, 2003; de Gelder et al., 2004; Grosbras and Paus, 2006; Ponseti et al., 2006; Grezes et al., 2007) by revealing the category-selective nature of these emotional effects. In particular, previous studies interpreted fusiform increases to emotional bodies as arising in the face-selective FFA, reflecting functional ‘synergies’ from body- to face-processing modules in visual cortex. Our results instead suggest that fusiform responses to emotional body expressions may reflect a more specific activation of the overlapping FBA, implicating body-selective rather than face-selective neurons. Indeed, our voxel-by-voxel pattern analyses indicate that emotional activation by body expressions was directly related to the degree of response to body stimuli, but not to the degree of response to face stimuli (each relative to a common control category, i.e. tools), in both the fusiform (FBA) and occipito-temporal (EBA) regions. This result suggests that emotion signals may modulate precisely those neuronal populations that code for the viewed stimulus category, rather than providing a general boost to all visual processing in extrastriate cortex, or to all neurons within a given cortical region. These data are consistent with single-cell recordings (Sugase et al., 1999) suggesting that the same neuronal populations may encode different types of information about their preferred stimulus category, perhaps at different firing latencies: the same neurons can respond initially to global cues about the stimulus category (e.g. body, face or object) and later to finer cues about its identity or affective value (e.g. expression, familiarity). Such a pattern of activation would provide a plausible account of the correlation observed here, across voxels, between body selectivity and sensitivity to emotional body expressions.
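The population-coding account in the preceding paragraph can be illustrated with a toy simulation. It is purely hypothetical: each voxel is modeled as a random mixture of body-selective, face-selective and non-selective neurons, and emotion is assumed to act as a multiplicative gain on the body-selective neurons only. All firing rates, the gain and the noise level are invented for illustration.

```python
import random
import statistics

R_PREF, R_OTHER = 1.0, 0.2  # hypothetical rates for preferred / other categories
GAIN = 1.3                  # hypothetical emotional gain on body-selective neurons
NOISE_SD = 0.02             # hypothetical measurement noise per condition


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    mx, my = statistics.fmean(x), statistics.fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


def voxel_signal(b, f, body_rate, face_rate, rng):
    """Population-weighted mean rate of one voxel plus Gaussian noise."""
    rest = 1.0 - b - f  # non-selective neurons always fire at R_OTHER
    return b * body_rate + f * face_rate + rest * R_OTHER + rng.gauss(0.0, NOISE_SD)


def simulate(n_voxels=2000, seed=1):
    """Return r(body selectivity, emotional modulation) and r(face selectivity, modulation)."""
    rng = random.Random(seed)
    body_sel, face_sel, emo_mod = [], [], []
    for _ in range(n_voxels):
        b = rng.uniform(0.0, 0.5)  # fraction of body-selective neurons in this voxel
        f = rng.uniform(0.0, 0.5)  # fraction of face-selective neurons, independent of b
        bodies = voxel_signal(b, f, R_PREF, R_OTHER, rng)
        faces = voxel_signal(b, f, R_OTHER, R_PREF, rng)
        tools = voxel_signal(b, f, R_OTHER, R_OTHER, rng)
        neutral = voxel_signal(b, f, R_PREF, R_OTHER, rng)
        emotional = voxel_signal(b, f, R_PREF * GAIN, R_OTHER, rng)  # gain on body neurons only
        body_sel.append(bodies - tools)
        face_sel.append(faces - tools)
        emo_mod.append(emotional - neutral)
    return pearson_r(body_sel, emo_mod), pearson_r(face_sel, emo_mod)
```

Under these assumptions, `simulate()` yields a strong positive correlation for body selectivity and a correlation near zero for face selectivity. The toy model only shows that a category-specific gain is sufficient to produce this voxelwise pattern, not that it is the underlying mechanism.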

Finally, we found that not all emotions modulated visual processing to the same extent. The most effective emotions for both EBA and FBA were Anger, Disgust and Happiness. Fear significantly modulated EBA, but this modulation failed to reach significance in FBA. Sadness did not significantly modulate either region. The absence of strong fear-related modulation in the FBA is somewhat surprising given previous findings of increased fusiform responses to static images of fearful faces and bodies (Vuilleumier et al., 2001; Hadjikhani and de Gelder, 2003). Note, however, that these studies compared only one emotion (fear) with a neutral condition, which may have yielded greater statistical sensitivity to the effect of fear. The absence of modulation for Sadness accords with previous research on face perception showing no significant increase to sad relative to neutral expressions (Phillips et al., 1998; Lane et al., 1999). This lack of modulation by sad expressions might reflect the low arousal associated with sadness (Heilman and Watson, 1989; Lang et al., 1998, 1999), in keeping with the important role of arousal in driving amygdala responses (Anderson et al., 2003; Winston et al., 2005). On this account, emotional stimuli with higher arousal values should produce stronger feedback modulation of cortical areas.
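Per-emotion comparisons against Neutral, as summarized above, could be computed for each ROI as follows. This sketch substitutes an exact one-tailed sign-flip permutation test for the parametric test used in the study, assumes hypothetical per-subject dictionaries of condition estimates, and applies no correction for multiple comparisons.

```python
import itertools
import statistics

# The five emotion conditions, each tested against Neutral
EMOTIONS = ["Anger", "Disgust", "Fear", "Happiness", "Sadness"]


def sign_flip_p(diffs):
    """Exact one-tailed sign-flip p-value for mean(diffs) > 0.

    Under the null, each subject's (emotion - Neutral) difference is
    equally likely to be positive or negative, so all 2**n sign patterns
    are enumerated.
    """
    observed = statistics.fmean(diffs)
    hits = 0
    total = 0
    for signs in itertools.product((1, -1), repeat=len(diffs)):
        total += 1
        if statistics.fmean(s * d for s, d in zip(signs, diffs)) >= observed:
            hits += 1
    return hits / total


def emotion_effects(subject_betas, emotions=EMOTIONS, baseline="Neutral"):
    """Mean (emotion - baseline) difference and p-value for each emotion.

    subject_betas: list of per-subject {condition: beta} dicts for one ROI.
    """
    results = {}
    for e in emotions:
        diffs = [s[e] - s[baseline] for s in subject_betas]
        results[e] = (statistics.fmean(diffs), sign_flip_p(diffs))
    return results
```

Exact enumeration visits 2**n sign patterns, which is practical for small samples (roughly 15 subjects or fewer); larger samples would call for randomly drawn sign flips instead.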

To summarize, we found increased activation in body-selective visual areas for angry, disgusted, happy, and fearful bodies compared to neutral controls. The strength of this modulation was positively correlated with body selectivity across voxels, suggesting a specific modulation of body-selective neuronal populations rather than more diffuse cortical boosting. Furthermore, the positive correlation between amygdala activation and modulation of body-selective areas is consistent with the idea that the amygdala may be a critical source of the emotional signals inducing the observed visual modulation. These data shed new light on the neural pathways involved in the perception of body gestures and, more generally, on the influence of emotional signals on visual processing.

SUPPLEMENTARY DATA

Supplementary data are available at SCAN online.

Footnotes

Conflict of interest

None declared.

REFERENCES

- Akiyama T, Kato M, Muramatsu T, Umeda S, Saito F, Kashima H. Unilateral amygdala lesions hamper attentional orienting triggered by gaze direction. Cerebral Cortex. 2007;17:2593–600. doi: 10.1093/cercor/bhl166.

- Amaral DG, Behniea H, Kelly JL. Topographic organization of projections from the amygdala to the visual cortex in the macaque monkey. Neuroscience. 2003;118:1099–120. doi: 10.1016/s0306-4522(02)01001-1.

- Anderson AK, Christoff K, Stappen I, et al. Dissociated neural representations of intensity and valence in human olfaction. Nature Neuroscience. 2003;6:196–202. doi: 10.1038/nn1001.

- Anderson AK, Phelps EA. Lesions of the human amygdala impair enhanced perception of emotionally salient events. Nature. 2001;411:305–9. doi: 10.1038/35077083.

- Atkinson AP, Tunstall ML, Dittrich WH. Evidence for distinct contributions of form and motion information to the recognition of emotions from body gestures. Cognition. 2007;104:59–72. doi: 10.1016/j.cognition.2006.05.005.

- Atkinson AP, Dittrich WH, Gemmell AJ, Young AW. Emotion perception from dynamic and static body expressions in point-light and full-light displays. Perception. 2004;33:717–46. doi: 10.1068/p5096.

- Boynton GM, Engel SA, Glover GH, Heeger DJ. Linear systems analysis of functional magnetic resonance imaging in human V1. Journal of Neuroscience. 1996;16:4207–21. doi: 10.1523/JNEUROSCI.16-13-04207.1996.

- Camurri A, Lagerlof I, Volpe G. Recognizing emotion from dance movement: comparison of spectator recognition and automated techniques. International Journal of Human-Computer Studies. 2003;59:213–25.

- Cristescu TC, Devlin JT, Nobre AC. Orienting attention to semantic categories. Neuroimage. 2006;33:1178–87. doi: 10.1016/j.neuroimage.2006.08.017.

- de Gelder B, Snyder J, Greve D, Gerard G, Hadjikhani N. Fear fosters flight: a mechanism for fear contagion when perceiving emotion expressed by a whole body. Proceedings of the National Academy of Sciences of the United States of America. 2004;101:16701–6.

- Downing PE, Wiggett AJ, Peelen MV. Functional magnetic resonance imaging investigation of overlapping lateral occipitotemporal activations using multi-voxel pattern analysis. Journal of Neuroscience. 2007;27:226–33. doi: 10.1523/JNEUROSCI.3619-06.2007.

- Downing PE, Jiang Y, Shuman M, Kanwisher N. A cortical area selective for visual processing of the human body. Science. 2001;293:2470–3. doi: 10.1126/science.1063414.

- Grandjean D, Sander D, Pourtois G, et al. The voices of wrath: brain responses to angry prosody in meaningless speech. Nature Neuroscience. 2005;8:145–6. doi: 10.1038/nn1392.

- Grezes J, Pichon S, de Gelder B. Perceiving fear in dynamic body expressions. Neuroimage. 2007;35:959–67. doi: 10.1016/j.neuroimage.2006.11.030.

- Grosbras MH, Paus T. Brain networks involved in viewing angry hands or faces. Cerebral Cortex. 2006;16:1087–96. doi: 10.1093/cercor/bhj050.

- Grosbras MH, Laird AR, Paus T. Cortical regions involved in eye movements, shifts of attention, and gaze perception. Human Brain Mapping. 2005;25:140–54. doi: 10.1002/hbm.20145.

- Hadjikhani N, de Gelder B. Seeing fearful body expressions activates the fusiform cortex and amygdala. Current Biology. 2003;13:2201–5. doi: 10.1016/j.cub.2003.11.049.

- Heilman KM, Watson RT. Arousal and emotions. In: Boller F, Grafman J, editors. Handbook of Neuropsychology. Amsterdam: Elsevier; 1989. pp. 403–17.

- Kanwisher N, McDermott J, Chun MM. The fusiform face area: a module in human extrastriate cortex specialized for face perception. Journal of Neuroscience. 1997;17:4302–11. doi: 10.1523/JNEUROSCI.17-11-04302.1997.

- Kim YH, Gitelman DR, Nobre AC, Parrish TB, LaBar KS, Mesulam MM. The large-scale neural network for spatial attention displays multifunctional overlap but differential asymmetry. Neuroimage. 1999;9:269–77. doi: 10.1006/nimg.1999.0408.

- Lane RD, Chua PM, Dolan RJ. Common effects of emotional valence, arousal and attention on neural activation during visual processing of pictures. Neuropsychologia. 1999;37:989–97. doi: 10.1016/s0028-3932(99)00017-2.

- Lang PJ, Bradley MM, Cuthbert BN. Emotion, motivation, and anxiety: brain mechanisms and psychophysiology. Biological Psychiatry. 1998;44:1248–63. doi: 10.1016/s0006-3223(98)00275-3.

- Morris JS, Friston KJ, Buchel C, et al. A neuromodulatory role for the human amygdala in processing emotional facial expressions. Brain. 1998;121(Pt 1):47–57. doi: 10.1093/brain/121.1.47.

- Nobre AC, Coull JT, Maquet P, Frith CD, Vandenberghe R, Mesulam MM. Orienting attention to locations in perceptual versus mental representations. Journal of Cognitive Neuroscience. 2004;16:363–73. doi: 10.1162/089892904322926700.

- Norman KA, Polyn SM, Detre GJ, Haxby JV. Beyond mind-reading: multi-voxel pattern analysis of fMRI data. Trends in Cognitive Sciences. 2006;10:424–30. doi: 10.1016/j.tics.2006.07.005.

- Peelen MV, Downing PE. Within-subject reproducibility of category-specific visual activation with functional MRI. Human Brain Mapping. 2005a;25:402–8. doi: 10.1002/hbm.20116.

- Peelen MV, Downing PE. Selectivity for the human body in the fusiform gyrus. Journal of Neurophysiology. 2005b;93:603–8. doi: 10.1152/jn.00513.2004.

- Peelen MV, Downing PE. Using multi-voxel pattern analysis of fMRI data to interpret overlapping functional activations. Trends in Cognitive Sciences. 2007;11:4–5. doi: 10.1016/j.tics.2006.10.009.

- Peelen MV, Heslenfeld DJ, Theeuwes J. Endogenous and exogenous attention shifts are mediated by the same large-scale neural network. Neuroimage. 2004;22:822–30. doi: 10.1016/j.neuroimage.2004.01.044.

- Peelen MV, Wiggett AJ, Downing PE. Patterns of fMRI activity dissociate overlapping functional brain areas that respond to biological motion. Neuron. 2006;49:815–22. doi: 10.1016/j.neuron.2006.02.004.

- Pessoa L, McKenna M, Gutierrez E, Ungerleider LG. Neural processing of emotional faces requires attention. Proceedings of the National Academy of Sciences of the United States of America. 2002;99:11458–63.

- Phillips ML, Bullmore ET, Howard R, et al. Investigation of facial recognition memory and happy and sad facial expression perception: an fMRI study. Psychiatry Research. 1998;83:127–38. doi: 10.1016/s0925-4927(98)00036-5.

- Ponseti J, Bosinski HA, Wolff S, et al. A functional endophenotype for sexual orientation in humans. Neuroimage. 2006;33:825–33. doi: 10.1016/j.neuroimage.2006.08.002.

- Schwarzlose RF, Baker CI, Kanwisher N. Separate face and body selectivity on the fusiform gyrus. Journal of Neuroscience. 2005;25:11055–9. doi: 10.1523/JNEUROSCI.2621-05.2005.

- Sugase Y, Yamane S, Ueno S, Kawano K. Global and fine information coded by single neurons in the temporal visual cortex. Nature. 1999;400:869–73. doi: 10.1038/23703.

- Urgesi C, Berlucchi G, Aglioti SM. Magnetic stimulation of extrastriate body area impairs visual processing of nonfacial body parts. Current Biology. 2004;14:2130–4. doi: 10.1016/j.cub.2004.11.031.

- Vuilleumier P. How brains beware: neural mechanisms of emotional attention. Trends in Cognitive Sciences. 2005;9:585–94. doi: 10.1016/j.tics.2005.10.011.

- Vuilleumier P, Schwartz S. Emotional facial expressions capture attention. Neurology. 2001;56:153–8. doi: 10.1212/wnl.56.2.153.

- Vuilleumier P, Armony JL, Driver J, Dolan RJ. Effects of attention and emotion on face processing in the human brain: an event-related fMRI study. Neuron. 2001;30:829–41. doi: 10.1016/s0896-6273(01)00328-2.

- Vuilleumier P, Richardson MP, Armony JL, Driver J, Dolan RJ. Distant influences of amygdala lesion on visual cortical activation during emotional face processing. Nature Neuroscience. 2004;7:1271–8. doi: 10.1038/nn1341.

- Winston JS, Vuilleumier P, Dolan RJ. Effects of low-spatial frequency components of fearful faces on fusiform cortex activity. Current Biology. 2003;13:1824–9. doi: 10.1016/j.cub.2003.09.038.

- Winston JS, Gottfried JA, Kilner JM, Dolan RJ. Integrated neural representations of odor intensity and affective valence in human amygdala. Journal of Neuroscience. 2005;25:8903–7. doi: 10.1523/JNEUROSCI.1569-05.2005.