Abstract

In daily life, we perceive a person's facial reaction as part of the natural environment that surrounds it. Because most studies have investigated how facial expressions are recognized by using isolated faces, it is unclear what role the context plays. Although it has been observed that the N170 for facial expressions is modulated by the emotional context, it was not clear whether individuals use context information at this stage of processing to discriminate between facial expressions. The aim of the present study was to investigate how the early stages of face processing are affected by emotional scenes when explicit categorizations of fearful and happy facial expressions are made. Emotion effects were found for the N170, with larger amplitudes for faces in fearful scenes as compared to faces in happy and neutral scenes. Critically, N170 amplitudes were significantly increased for fearful faces in fearful scenes as compared to fearful faces in happy scenes, a difference that was also expressed in left occipito-temporal scalp topography. Our results show that the information provided by the facial expression is combined with the scene context during the early stages of face processing.

Keywords: context, face perception, emotion, event-related potentials, P1, N170

INTRODUCTION

The recognition of facial expressions has traditionally been studied by using isolated faces (Ekman, 1992; Adolphs, 2002). However, facial expressions can be rather ambiguous when viewed in isolation. This ambiguity may be resolved if the accompanying context is known (de Gelder et al., 2006; Barrett et al., 2007). In comparison to the study of object perception (Palmer, 1975; Bar, 2004; Davenport and Potter, 2004), only a few behavioral studies have investigated how contexts may influence face processing. Facial expressions of fear tended to be perceived more frequently as expressing anger when subjects had heard a story about an anger-provoking situation in advance (e.g. about people who were rejected in a restaurant) (Carroll and Russell, 1996). Facial expressions that were viewed in the context of emotional scenes were categorized faster in congruent visual scenes (e.g. faster recognition of a face conveying disgust in front of a garbage area) than in incongruent scenes (Righart and de Gelder, 2008). fMRI studies have also shown that facial expressions are interpreted differently depending on the context information that is available (Kim et al., 2004; Mobbs et al., 2006).

Behavioral and fMRI studies are not able to show at what stage of processing emotional contexts affect face recognition. This may relate to an early stage of encoding, but it may also relate to a later stage of semantic associations that are made between face and context. Because of the time-sensitivity of event-related potentials (ERPs), it is possible to investigate how contexts affect different stages of face processing. The N170 is an ERP component that has been related to face encoding (Bentin et al., 1996; George et al., 1996; Itier and Taylor, 2004). The N170 occurs at around 170 ms after stimulus onset and has a maximal negative peak at occipito-temporal sites.

Although some studies have observed that the N170 is insensitive to facial expressions (Eimer and Holmes, 2002; Holmes et al., 2003), other studies have shown that the N170 amplitude is modified by facial expressions of emotion (Batty and Taylor, 2003), especially for fearful expressions (Batty and Taylor, 2003; Stekelenburg and de Gelder, 2004; Caharel et al., 2005; Righart and de Gelder, 2006; Williams et al., 2006). This suggests that emotional information may affect early stages of face encoding.

Another ERP component, the P1, with a positive deflection occurring at occipital sites at around 100 ms after stimulus onset, has also been related to face processing. Most studies have centred on this component because of its relation to spatial attention and physical features (Hillyard and Anllo-Vento, 1998). However, recent studies have found that the P1 amplitude is larger for faces than for nonface objects (Itier and Taylor, 2004; Herrmann et al., 2005), and that facial expressions affect the P1 amplitude as well (Batty and Taylor, 2003; Eger et al., 2003). The P1 may reflect a stage of face detection, and precedes the N170 that may reflect a stage of configural processing (Itier and Taylor, 2004). This time-course is consistent with earlier suggestions that global processing of faces occurs at around 117 ms, while fine processing of facial identity and expressions may occur at around 165 ms (Sugase et al., 1999).

An important question is how emotional contexts in which faces are perceived affect the aforementioned P1 and N170 components. In our previous study it was found that the N170 amplitude was larger when the face appeared in a fearful scene, especially when the face expressed fear (Righart and de Gelder, 2006). This effect was not found for the P1, though effects have been observed on this component for scenes only (Smith et al., 2003; Carretie et al., 2004; Righart and de Gelder, 2006), and for facial expressions in interaction with bodily expressions (Meeren et al., 2005).

While previous studies have reported that the N170 elicited by facial expressions is modulated by the emotional context, it is not yet clear whether individuals use context information in this stage when the task requires them to attend to the face and to discriminate explicitly between facial expressions. We hypothesized that the N170 component will still be affected by emotion regardless of the changed task conditions (Caharel et al., 2005).

In addition, it was not clear from our previous study how facial expressions of fear are processed compared with other facial expressions (e.g. happiness) as a function of the emotional context. In the present study, we investigated whether explicit categorization of facial expressions (fear, happiness) affects the P1 and the N170 for faces. As the discrimination of fine expressions may be associated with later processing stages than the P1 (compare with Sugase et al., 1999), and as facial expressions of fear in particular affect the N170 amplitude, we hypothesized that the N170 amplitude would be larger for fearful faces in fearful scenes as compared to happy and neutral scenes.

MATERIALS AND METHODS

Subjects

Eighteen participants (12 male) ranging from 21 to 50 years participated in the experiment (13 right handed). One participant was removed because of visual problems (glaucoma). The remaining participants had normal or corrected-to-normal vision. None of them reported a history of neurological or psychiatric diseases. All participants had given informed consent and were paid €20 for participation. The study was performed in accordance with the ethical standards of the Declaration of Helsinki.

Stimuli

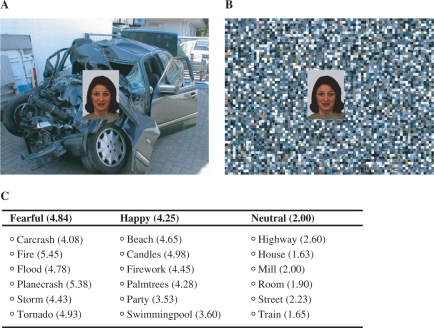

Stimuli consisted of faces that were centrally overlaid on pictures of natural scenes (Figure 1). Stimuli were color pictures (24 male and female) of facial expressions of fear and happiness from the Karolinska Directed Emotional Faces set (Lundqvist et al., 1998) and color pictures of natural scenes selected from the International Affective Picture System (IAPS) (Lang et al., 1999, p. 7560, 9622, 9911, 9920), which were complemented with images from the web so that each category contained an equal number of scenes. Contents were validated for emotions of fear, happiness or neutrality and arousal (seven-point scale, from 1 = unaffected to 7 = extremely aroused) by a different group of participants (N = 10) using a collection of 401 picture stimuli. In total, 24 scene stimuli were selected for each emotion category. For the final set that was used in the experiment, arousal ratings for fearful (4.84) and happy scenes (4.24) were significantly higher than for neutral scenes (2.00) (both P < 0.001), whereas fearful and happy scenes did not differ (P > 0.05). On average, the intended label for happy scenes was chosen for 75% of the trials, for fearful scenes for 64% of the trials and for neutral scenes for 87% of the trials.

Fig. 1.

Facial expressions of fear and happiness were combined with context scenes conveying fear, happiness or a neutral situation. (A) An example of a face-context compound showing a facial expression of fear and a car crash and (B) the same facial expression shown in a scrambled version of the car crash that was used as a control stimulus for the effects of color. (C) Six context categories of stimuli were selected, each category containing four different stimuli (i.e. in total 24 for each emotion) that were validated. Average arousal ratings of validation are shown in parentheses. Participants rated the stimuli on a scale from 1–7.

In order to control for low-level features (e.g. color), all scene pictures were scrambled by randomizing the position of pixels across the image (blocks of 8 × 8 pixels were randomized across the image measuring 768 × 572 pixels width and height), which makes the pictures meaningless. The resulting pictures were inspected carefully for residual features that could cue recognition.
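
The block scrambling described above can be illustrated with a minimal sketch. It assumes a grayscale image stored as a list of rows; the function name `scramble_blocks`, the fixed seed and the toy image are hypothetical. The source does not say how edge pixels were handled when the image height (572) is not a multiple of 8, so this sketch simply leaves any leftover edge rows or columns in place.

```python
import random

def scramble_blocks(image, block=8, seed=0):
    """Shuffle non-overlapping block x block tiles of a 2-D image (list of rows)."""
    height, width = len(image), len(image[0])
    rows, cols = height // block, width // block  # whole tiles only
    # Top-left corner of every whole tile.
    origins = [(r * block, c * block) for r in range(rows) for c in range(cols)]
    shuffled = origins[:]
    random.Random(seed).shuffle(shuffled)
    # Assumption: pixels outside the whole-tile grid stay where they are.
    out = [row[:] for row in image]
    for (dst_y, dst_x), (src_y, src_x) in zip(origins, shuffled):
        for dy in range(block):
            out[dst_y + dy][dst_x:dst_x + block] = image[src_y + dy][src_x:src_x + block]
    return out

# Toy 20 x 24 "image" whose pixel values are all distinct.
toy = [[y * 24 + x for x in range(24)] for y in range(20)]
scrambled = scramble_blocks(toy)
```

Because tiles are only moved, never altered, the scrambled picture keeps the same set of pixel values (and hence the same overall color content) as the intact one, which is the property the control condition relies on.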

The facial images measured 7.9 cm × 5.9 cm (height × width; 5.6° × 4.2° of visual angle) and the context images measured 24.5 cm × 32 cm (17.4° × 22.6°). Faces did not occlude critical information in the context picture.
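
As a quick check on these stimulus sizes, the visual angle can be computed from the physical size and the 80 cm viewing distance given in the procedure. This is a sketch using the standard formula 2·atan(size / (2·distance)); the function name `visual_angle_deg` is hypothetical, and the source does not state which formula or rounding the authors used.

```python
import math

def visual_angle_deg(size_cm, distance_cm):
    """Full visual angle (degrees) subtended by an object of size_cm at distance_cm."""
    return math.degrees(2 * math.atan(size_cm / (2 * distance_cm)))

DISTANCE_CM = 80  # monitor distance reported in the procedure

face_deg = [visual_angle_deg(s, DISTANCE_CM) for s in (7.9, 5.9)]
scene_deg = [visual_angle_deg(s, DISTANCE_CM) for s in (24.5, 32)]
```

Under these assumptions the computed angles agree with the reported values to within about 0.1°.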

Design and procedure

Participants were seated in a dimly illuminated, electrically shielded cabin with a monitor positioned at a distance of 80 cm. Participants were instructed and familiarized with the experiment in a practice session.

The experiment was run in 16 blocks, each containing 72 trials of face-context compounds: 8 blocks of faces with intact context scenes and 8 blocks of faces with scrambled scenes. Blocks presenting intact and scrambled images alternated (with order randomized across participants). Facial expressions (fear, happy) were paired with a scene of each category (fear, happy, neutral) to obtain a balanced factorial design. Categories of emotional scenes appeared randomly throughout each block, so that each condition was presented in each block. Altogether, intact and scrambled blocks amounted to 12 conditions of 96 trials each.
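
The trial counts in this design can be verified with a short sketch. The variable names are hypothetical, and the even split of trials over the six face-scene pairings within a block is an assumption; the source only states that each condition was presented in each block.

```python
from itertools import product

images = ("intact", "scrambled")
faces = ("fear", "happy")
scenes = ("fear", "happy", "neutral")

conditions = list(product(images, faces, scenes))  # 2 x 2 x 3 cells
total_trials = 16 * 72                             # blocks x trials per block
trials_per_condition = total_trials // len(conditions)

# Assumption: trials are split evenly over the face-scene pairings in a block.
pairings_per_block = len(faces) * len(scenes)
trials_per_pairing_per_block = 72 // pairings_per_block
```

With 8 blocks per image type, an even split of 12 trials per pairing per block reproduces the 96 trials per condition reported above.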

The face-context compounds were presented for 200 ms and were preceded by a fixation cross. Participants performed a two-alternative forced choice task in which they categorized the facial expression as happy or fearful. Responses were recorded from stimulus onset. No feedback was given. Participants were instructed to respond as accurately and as quickly as possible. Response buttons were counterbalanced across participants. The intertrial interval was randomized between 1200 and 1600 ms.

After the main experiment, participants received a validation task in which they judged the arousability of the scenes and categorized them by emotion. In contrast to the stimulus validation, arousal was measured on a five-point scale now because of response box limitations in the EEG experiment (five-point scale from 1 = calm to 5 = extremely arousing). Emotional categorization was measured by using three options (fear, happy, neutral).

EEG recording

EEG was recorded from 49 active Ag–AgCl electrodes (BioSemi Active2) mounted in an elastic cap referenced to an additional active electrode (Common Mode Sense). EEG was bandpass filtered (0.1–30 Hz, 24 dB/Octave). The sampling rate was 512 Hz. All electrodes were referenced offline to an average reference. Horizontal electrooculograms (hEOG) and vertical electrooculograms (vEOG) were recorded. The raw data were segmented into epochs from 200 ms before to 1000 ms after stimulus onset. The data were baseline corrected to the 200 ms pre-stimulus interval.
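
The segmentation and baseline correction can be sketched for a single channel as follows. This is a toy illustration, not the authors' pipeline: the helper names are hypothetical, and because 200 ms does not correspond to a whole number of samples at 512 Hz, the sketch rounds to the nearest sample (an assumption).

```python
SFREQ = 512                      # sampling rate in Hz, from the text
PRE_MS, POST_MS = 200, 1000      # epoch limits relative to stimulus onset

def ms_to_samples(ms, sfreq=SFREQ):
    """Convert a duration in ms to a sample count (rounded; an assumption)."""
    return round(ms * sfreq / 1000)

def cut_epoch(signal, onset, sfreq=SFREQ):
    """Return one baseline-corrected epoch around sample index `onset`."""
    n_pre, n_post = ms_to_samples(PRE_MS, sfreq), ms_to_samples(POST_MS, sfreq)
    epoch = signal[onset - n_pre: onset + n_post]
    baseline = sum(epoch[:n_pre]) / n_pre  # mean of the pre-stimulus interval
    return [v - baseline for v in epoch]

# Toy channel: constant 5 µV offset plus a 2 µV step starting at stimulus onset.
onset = 600
signal = [5.0 + (2.0 if i >= onset else 0.0) for i in range(1500)]
epoch = cut_epoch(signal, onset)
```

After correction the pre-stimulus samples sit at zero, so post-stimulus amplitudes are expressed relative to the pre-stimulus level rather than to an arbitrary offset.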

EEG was EOG corrected by using the algorithm of Gratton et al. (1983). Epochs exceeding 100 μV amplitude difference at any channel were removed from analyses. No differences were observed across conditions for facial expressions and emotional scenes. On average 87.5 trials (range across conditions: 86.8–88.5) were left for faces in intact scenes and 85.1 trials (84.4–85.4) were left for faces in scrambled scenes after removal of the artifacts. After removal of trials containing inaccurate responses or responses below 200 ms, ERPs were averaged for conditions of facial expressions of fear in intact fearful, happy and neutral scenes, and for happy facial expressions in the same context categories. Similarly, averages were computed for scrambled blocks, resulting in a total of 12 conditions.
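
The amplitude criterion for artifact rejection can be sketched as below. The sketch interprets the "100 μV amplitude difference" as the peak-to-peak range (maximum minus minimum) within an epoch on each channel; that interpretation, the function name `is_clean` and the toy data are assumptions.

```python
THRESHOLD_UV = 100.0  # rejection criterion from the text

def is_clean(epoch, threshold=THRESHOLD_UV):
    """epoch: list of channels, each a list of samples in µV."""
    # Assumption: "amplitude difference" means the peak-to-peak range per channel.
    return all(max(ch) - min(ch) <= threshold for ch in epoch)

epochs = [
    [[0.0, 10.0, -5.0], [2.0, 3.0, 1.0]],    # small deflections: kept
    [[0.0, 80.0, -40.0], [2.0, 3.0, 1.0]],   # 120 µV swing on channel 0: rejected
]
kept = [ep for ep in epochs if is_clean(ep)]
```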

Electrode selection for P1 and N170 analyses was based on previous studies. Based on inspection of the grand average ERPs, P1 and N170 peaks were detected within time windows of 60–140 ms and 100–220 ms, respectively. Peak latencies and amplitudes of the P1 were analyzed at occipital sites (O1/2) and occipito-temporal sites (PO3/4, PO7/8) as the maximal positive peak amplitude. The N170 was analyzed at occipito-temporal sites (P5/6, P7/8 and PO7/8) as the maximal negative peak amplitude.
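
Peak detection within these windows can be sketched as follows for one averaged waveform. The function name `find_peak`, the number of pre-stimulus samples and the toy waveform are hypothetical; the sketch simply takes the most positive (P1) or most negative (N170) sample inside the window, which may differ from the exact procedure used in the study.

```python
SFREQ = 512   # sampling rate in Hz, from the text
N_PRE = 102   # pre-stimulus samples (about 200 ms at 512 Hz; an assumption)

def find_peak(epoch, window_ms, polarity):
    """Return (latency_ms, amplitude) of the extreme sample inside window_ms."""
    start = N_PRE + round(window_ms[0] * SFREQ / 1000)
    stop = N_PRE + round(window_ms[1] * SFREQ / 1000)
    pick = max if polarity == "positive" else min
    idx = pick(range(start, stop + 1), key=lambda i: epoch[i])
    return (idx - N_PRE) * 1000 / SFREQ, epoch[idx]

# Toy waveform: a positive deflection near 100 ms and a negative one near 150 ms.
epoch = [0.0] * 614
epoch[N_PRE + 51] = 6.0
epoch[N_PRE + 77] = -4.0

p1 = find_peak(epoch, (60, 140), "positive")
n170 = find_peak(epoch, (100, 220), "negative")
```

The two windows overlap between 100 and 140 ms, but because the P1 is scored as a maximum and the N170 as a minimum, the same sample cannot be picked for both in a waveform like this one.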

Data analyses

Behavioral analyses were performed for error rate (percentage of incorrect responses) and RTs (average response times for correctly answered trials). RT data were inspected for outliers for each participant. RTs more than 2.5 SD from the mean of each condition were removed from analyses. Using these criteria, 2.6% of the trials were removed. Main and interaction effects were analyzed by using repeated measures ANOVA containing the factors Image (intact, scrambled), Facial expression (fear, happy) and Scene (fear, happy, neutral). Planned comparisons were performed (Howell, 2002) to test our specific hypothesis that fearful faces are recognized faster in fearful scenes (congruent) than in happy scenes (incongruent), and that happy faces are recognized faster in happy scenes (congruent) than in fearful scenes (incongruent) (α = 0.05, one-tailed t-test, directional hypotheses).
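
The outlier criterion for RTs can be sketched for the trials of one participant in one condition. The function name `trim_rts` and the toy data are hypothetical; the use of the sample standard deviation and a single trimming pass are assumptions, since the source does not specify either.

```python
from statistics import mean, stdev

def trim_rts(rts, n_sd=2.5):
    """Drop RTs lying more than n_sd standard deviations from the condition mean."""
    m, sd = mean(rts), stdev(rts)  # assumption: sample SD, single pass
    return [rt for rt in rts if abs(rt - m) <= n_sd * sd]

# Toy RTs (ms) for one participant in one condition, with one very slow response.
rts = [640, 655, 660, 665, 670, 675, 680, 690, 700, 1500]
trimmed = trim_rts(rts)
```

In this toy set only the 1500 ms response lies beyond the cutoff and is removed.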

P1 and N170 latencies and amplitudes were analyzed by using repeated measures ANOVA containing the within subject factors image (intact, scrambled), facial expression (fear, happy), scene (fear, happy, neutral), hemisphere (left, right) and electrode position. Emphasis was put on the analysis of the factors facial expression and scene. Because of the specific hypothesis, planned comparisons were performed between facial expressions as a function of the emotional context.

Scalp topographic analyses and differences across the topographies for emotional scenes were analyzed by using repeated measures ANOVA. Mean amplitudes around the N170/Vertex Positive Potential (VPP) (140–160 ms) were calculated and differences across topographies were tested by t-tests on each electrode site (Rousselet et al., 2004). Amplitudes were vector normalized according to the method employed by McCarthy and Wood (1985). P-values were corrected by Greenhouse–Geisser epsilon when appropriate. Statistics are indicated with original degrees of freedom (Picton et al., 2000).
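
The vector normalization step can be sketched as follows. It follows the common reading of the McCarthy and Wood (1985) vector method, in which the amplitudes of one condition are divided by the length of the amplitude vector across electrodes; the function name and the toy amplitudes are hypothetical.

```python
import math

def vector_normalize(amplitudes):
    """Scale one condition's amplitudes (one value per electrode) to unit length."""
    length = math.sqrt(sum(a * a for a in amplitudes))
    return [a / length for a in amplitudes]

# Two toy topographies with the same shape but different overall strength.
weak = [-1.0, -2.0, 2.0]
strong = [-2.0, -4.0, 4.0]

norm_weak = vector_normalize(weak)
norm_strong = vector_normalize(strong)
```

After normalization the two toy topographies are identical, which illustrates the purpose of the step: a condition × electrode interaction on normalized data reflects a difference in the shape of the scalp distribution rather than a difference in overall amplitude.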

RESULTS

Behavioral results

The average error rate across all conditions was below 5%. Because of this low rate, no planned comparisons were performed. The main effect for image was significant, F(1,16) = 4.08, P < 0.05, in that errors were slightly more frequent for faces in intact scenes (M = 4.4%) than in scrambled scenes (M = 4.0%).

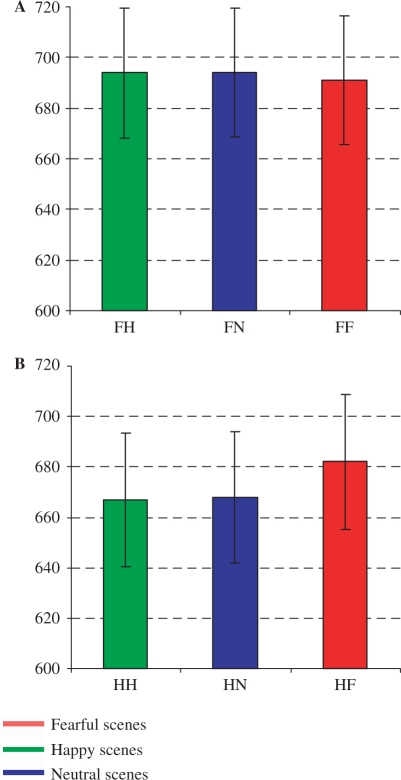

The analyses for RTs showed a main effect for facial expression, F(1,16) = 4.07, P < 0.05, as reflected in faster RTs to happy facial expressions (M = 665 ms) than to fearful facial expressions (M = 690 ms), which is shown in Figure 2. A three-way interaction was observed between facial expression, scene and image, F(2,15) = 3.89, P < 0.05. Planned comparisons showed that happy faces were recognized faster in intact happy (M = 667 ms) and neutral (M = 668 ms) scenes than in fearful scenes (M = 682 ms), t(16) = 2.54, P < 0.05 and t(16) = 2.16, P < 0.05, respectively. The differences were not significant between happy faces in scrambled versions of happy (M = 661 ms), neutral (M = 656 ms) and fearful scenes (M = 657 ms). Nor were the differences significant for fearful faces in intact fearful scenes (M = 691 ms), happy scenes (M = 694 ms) and neutral scenes (M = 694 ms).

Fig. 2.

Mean response-times (ms) for fearful (A) and happy faces (B) in fearful, happy and neutral scenes. Error bars are 1 Standard Error around the mean.

In the evaluation task after the main experiment, a main effect was found for arousal, F(2,32) = 59.41, P < 0.001, in that fearful (M = 3.81) and happy scenes (M = 3.45) were rated as being more arousing than the neutral scenes (M = 1.84), t(16) = 11.99, P < 0.001 and t(16) = 8.80, P < 0.001. No effects were found for the categorization task, F(2,32) = 2.22, P > 0.05. However, post-hoc t-tests showed that the ratings for fearful and happy categories differed significantly, t(16) = 2.15, P < 0.05. The intended label for happy scenes was chosen on 87% of the trials, for fearful scenes on 77% of the trials and for neutral scenes on 81% of the trials. Thus, these results are consistent with our previous ratings on arousal and category.

ERP data

P1 component

The ERPs of 14 participants showed a distinctive P1 deflection. No main effects were observed for facial expression and scene for P1 latency and amplitude, P > 0.05. A marginally significant three-way interaction was found between image, electrode position and scene, F(4,10) = 2.92, P = 0.08, which was explained by amplitudes that were smaller for faces in intact happy scenes (M = 6.18 μV) than in intact fearful (M = 6.97 μV) and intact neutral scenes (M = 6.91 μV), P < 0.01 and P < 0.05, respectively, but on electrode pair O1/2 only. None of the comparisons for scrambled scenes were significant.

N170 component

The ERPs of 15 participants showed a distinctive N170. Main effects were found for the N170 latency of facial expression, F(1,14) = 20.41, P < 0.001 and scene, F(2,13) = 15.47, P < 0.001, but the effects were explained by slight latency differences between happy faces (M = 148 ms) and fearful faces (M = 149 ms) and between neutral (M = 148 ms) and fearful scenes (M = 149 ms), and therefore these effects are not further discussed.

The analyses for the N170 amplitude showed a main effect for facial expression, F(1,14) = 6.36, P < 0.05, in that N170 amplitudes were more negative to facial expressions of happiness (M = −4.64 μV) than fear (M = −4.25 μV).

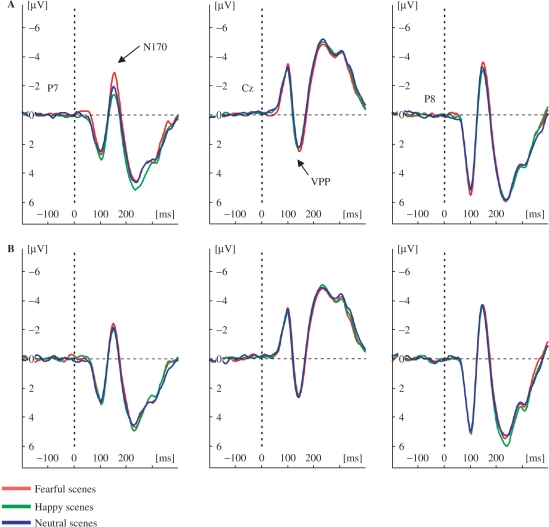

A three-way interaction was observed between image, electrode-position and scene, F(4,11) = 6.90, P < 0.05 (Figure 3). Amplitudes were more negative for faces in intact fearful (M = −4.51 μV) than faces in intact happy (M = −3.94 μV) and intact neutral scenes (M = −4.05 μV), P < 0.01, P < 0.05, respectively. These results were significant on electrodes P7/8 only. It is unlikely that these effects are based on low-level features. The differences between faces in scrambled fearful (M = −5.23 μV) and faces in scrambled happy scenes (M = −5.03 μV) obtained marginal significance (P = 0.06). The differences between faces in scrambled fearful scenes (M = −5.23 μV) and faces in scrambled neutral scenes (M = −5.26 μV) were not significant.

Fig. 3.

Grand-averaged ERPs of the N170/VPP components. N170/VPP for fearful (A) and happy faces (B) in fearful, happy and neutral scenes. The N170 is displayed for occipito-temporal electrode sites (P7/8) and the VPP is displayed for the vertex electrode (Cz). Negative amplitudes are plotted upwards.

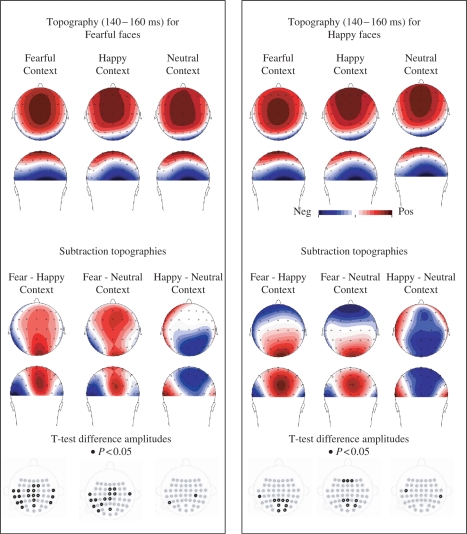

Planned comparisons were performed on N170 peak amplitudes for fearful and happy facial expressions separately as a function of scene. It was found that the N170 amplitudes were more negative for fearful facial expressions in fearful scenes than fearful facial expressions in happy scenes, for P7 (−4.18 μV; −3.19 μV), t(14) = 3.74, P < 0.01, P8 (−4.84 μV; −4.23 μV), t(14) = 2.59, P < 0.05 and compared to neutral scenes for P7 (−4.18 μV; −3.33 μV), t(14) = 3.06, P < 0.01 (Figure 3). For happy facial expressions, N170 amplitudes were more negative in fearful scenes than in happy scenes for P7 (−3.90 μV; −3.52 μV), t(14) = 2.61, P < 0.05, and than in neutral scenes for P7 (−3.90 μV; −3.39 μV), t(14) = 2.28, P < 0.05. The comparisons for other electrodes on the occipito-temporal scalp are shown in Figure 4. Importantly, the differences were obtained on all occipito-temporal electrodes on the left hemisphere for fearful faces in fearful scenes as compared to happy and neutral scenes, while this was not the case for happy faces. T-tests on all electrodes for the calculated mean amplitudes (140–160 ms) yielded results similar to the peak analyses (Figure 4).

Fig. 4.

Scalp topographies for faces in context based on a window (140–160 ms) around the peak for the N170. Upper panel left: scalp topographies of fearful faces in fearful, happy and neutral scenes show the typical central positivity of the VPP and the occipito-temporal negativities of the N170. Upper panel right: happy faces in fearful, happy and neutral scenes. Lower panel left: scalp topographies based on difference waves show the prominent left occipito-temporal N170 and central VPP response to faces in fearful scenes, but neither for fearful faces in happy or neutral scenes, nor for happy faces in any of the scenes (lower panel right). Black dots in the electrode map depict sites at which the difference is significant (t-test, P < 0.05, uncorrected).

Scalp topography analyses

Scalp topography analyses were performed to examine further whether the implied hemispheric differences for fearful facial expressions in fearful scenes were reflected in topographical differences. For the scalp topographic distribution, mean amplitudes were calculated around the N170 peak, which occurred at ∼150 ms. Figure 4 shows planned comparisons for mean amplitudes at 140–160 ms indicating that amplitudes for fearful faces were larger in fearful scenes than in happy and neutral scenes on left occipito-temporal electrodes. In addition, the central electrodes show a positivity (associated with the VPP, Joyce and Rossion, 2005) that is increased for fearful faces in fearful scenes as compared to happy and neutral scenes. The topographic interaction between a large range of symmetrically positioned occipito-temporal electrodes (T7/8; TP7/8; CP3/4; CP5/6; P7/8; P5/6; PO7/8; PO3/4; O1/2) and scene was tested for facial expressions of fear and happiness separately by repeated measures ANOVA.

A significant topography difference was found between fearful facial expressions in intact fearful scenes as compared to fearful facial expressions in intact happy scenes, F(19,304) = 4.10, P < 0.05, but it was not different from fearful facial expressions in neutral scenes, F(19,304) = 1.28, P = 0.29. The difference between fearful facial expressions in happy scenes and fearful facial expressions in neutral scenes was not significant either, F(19,304) = 1.61, P = 0.18. The comparisons across scrambled scenes were also nonsignificant. For happy facial expressions, no topography differences were found between faces that were presented in fearful scenes compared to happy, F(19,304) = 1.92, P = 0.13, and neutral scenes, F(19,304) = 0.51, P = 0.74, nor between happy and neutral scenes, F(19,304) = 0.93, P = 0.44.

DISCUSSION

We showed that affective information provided by natural scenes influences face processing. Larger N170 amplitudes were observed for faces in fearful scenes as compared to faces in happy and neutral scenes, and this increase was particularly pronounced for fearful faces on the left hemisphere electrodes. Taken together, our results indicate that information from task-irrelevant scenes is combined rapidly with the information from facial expressions, even when the task requires categorization of facial expressions. These results suggest that subjects use context information at this stage of processing when they discriminate between facial expressions. The emotional context may facilitate this categorization process by constraining perceptual choices (Barrett et al., 2007).

The behavioral data confirm earlier findings that context information may influence how facial expressions are recognized (Carroll and Russell, 1996; reviewed by Barrett et al., 2007). Categorization of happy facial expressions was significantly faster in happy than in fearful scenes, whereas the faster recognition of fearful facial expressions in fearful scenes compared to happy scenes did not reach significance. These behavioral data replicate our previous findings (Righart and de Gelder, 2008), but appear to oppose the results on the ERP data. The differences for scrambled scenes were not significant and therefore it is unlikely that low-level features explain these results. A novel finding in this study is that RTs for happy faces were also faster in neutral scenes than in fearful scenes. Future studies may investigate how recognition of facial expressions is affected by emotional scenes when individuals are not constrained by choosing between emotion labels (Barrett et al., 2007).

The behavioral data do not necessarily reflect the same brain processes as measured by ERPs. Behavioral results differ across the tasks that are employed, as it has been reported that response times are fastest to negative expressions if participants had to detect expressions (Öhman et al., 2001), but are slowest if participants had to discriminate among several expressions (Calder et al., 2000). The ERP data showed different patterns for the P1 component compared to the N170 component. The P1 amplitudes were larger to faces in fearful than happy scenes, which is consistent with literature that has shown P1 effects for emotional scenes without faces (Smith et al., 2003; Carretie et al., 2004; Righart and de Gelder, 2006), and may relate to attentional effects that have been reported for the P1 (Hillyard and Anllo-Vento, 1998). However, it should be noted that the results for faces in neutral scenes were not consistent with this interpretation, because the amplitudes for neutral scenes were similar to those for fearful scenes. We cannot exclude here that low-level features may have introduced this effect for neutral scenes (Allison et al., 1993). However, low-level features are unlikely to explain the main results for the N170, because these results replicate our previous data but now by using different stimulus sets (Righart and de Gelder, 2006).

In contrast to the P1, increased N170 amplitudes were observed on the left hemispheric electrode sites when fearful faces were accompanied by fearful scenes rather than happy or neutral scenes. As fearful scenes increased the N170 amplitude for fearful faces significantly, emotions may combine specifically for fear at this stage of encoding. Previous work has already shown that the N170 amplitude is modified by facial expressions (Batty and Taylor, 2003), especially for fearful facial expressions (Batty and Taylor, 2003; Stekelenburg and de Gelder, 2004; Williams et al., 2006), and that fearful scenes increase the N170 amplitude for fearful facial expressions even more (Righart and de Gelder, 2006). Although the N170 amplitude was increased for both fearful and happy faces in fearful as compared to happy and neutral scenes, which may relate to general effects of arousal, specific effects were found for fearful faces on the left hemisphere, as indicated by the scalp topographic analyses that showed significant differences on left occipito-temporal electrodes.

The left hemispheric effects may correspond to contextual effects that were observed before in an fMRI study (Kim et al., 2004). It was found that left fusiform gyrus activation to surprised faces was increased if preceded by a negative context (e.g. about losing money) as compared to a positive context (e.g. about winning money). We have shown that context effects also occur when context and face are presented simultaneously. From a psychological perspective this is important, because it shows that emotional information from the context and face can be combined simultaneously, and at an early stage of processing, which appears to diverge from the face recognition model by Bruce and Young (1986), in which facial expressions are extracted after a stage of structural encoding, and in which context information is incorporated at a relatively late semantic stage of processing.

The N170 may (in part) be generated by the fusiform gyrus (Pizzagalli et al., 2002). The N170 amplitude to faces has been related to the BOLD response in the fusiform gyrus (Iidaka et al., 2006). The time-course of the N170, and the generation of this component in the fusiform gyrus, is consistent with a feedback modulation from the amygdala. An intracranial study has found that the amygdala may respond differentially to facial expressions at around 120 ms (Halgren et al., 1994). Anatomical connections have been found between the amygdala and the fusiform gyrus (Aggleton et al., 1980), and in patients with amygdalar sclerosis it has been shown that the amygdala is critical in enhancing the fusiform gyrus response to facial expressions (Vuilleumier et al., 2004).

The input from the amygdala to early visual areas may importantly shape emotion perception (Halgren et al., 1994; LeDoux, 1996). Early enhanced visual responses to emotional stimuli may be crucial for rapid decision making and reactions to salient situations and to other persons' reactions to these situations.

Acknowledgments

We are grateful to Thessa Caus for assistance with the materials and EEG recordings.

REFERENCES

- Adolphs R. Recognizing emotion from facial expressions: psychological and neurological mechanisms. Behavioral and Cognitive Neuroscience Reviews. 2002;1:21–61. doi: 10.1177/1534582302001001003. [DOI] [PubMed] [Google Scholar]

- Allison T, Begleiter A, McCarthy G, Roessler E, Nobre AC, Spencer DD. Electrophysiological studies of color processing in human visual cortex. Electroencephalography and Clinical Neurophysiology. 1993;88:343–55. doi: 10.1016/0168-5597(93)90011-d. [DOI] [PubMed] [Google Scholar]

- Aggleton JP, Burton MJ, Passingham RE. Cortical and subcortical afferents to the amygdala of the rhesus monkey (Macaca Mulatta) Brain Research. 1980;190:347–68. doi: 10.1016/0006-8993(80)90279-6. [DOI] [PubMed] [Google Scholar]

- Bar M. Visual objects in context. Nature Reviews Neuroscience. 2004;5:617–29. doi: 10.1038/nrn1476. [DOI] [PubMed] [Google Scholar]

- Barrett LF, Lindquist KA, Gendron M. Language as context for the perception of emotion. Trends in Cognitive Science. 2007;11:327–32. doi: 10.1016/j.tics.2007.06.003. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Batty M, Taylor MJ. Early processing of the six basic facial emotional expressions. Cognitive Brain Research. 2003;17:613–20. doi: 10.1016/s0926-6410(03)00174-5.

- Bentin S, Allison T, Puce A, Perez E, McCarthy G. Electrophysiological studies of face perception in humans. Journal of Cognitive Neuroscience. 1996;8:551–65. doi: 10.1162/jocn.1996.8.6.551.

- Bruce V, Young A. Understanding face recognition. British Journal of Psychology. 1986;77:305–27. doi: 10.1111/j.2044-8295.1986.tb02199.x.

- Caharel S, Courtay N, Bernard C, Lalonde R, Rebai M. Familiarity and emotional expression influence an early stage of face processing: an electrophysiological study. Brain and Cognition. 2005;59:96–100. doi: 10.1016/j.bandc.2005.05.005.

- Calder AJ, Young AW, Keane J, Dean M. Configural information in facial expression perception. Journal of Experimental Psychology: Human Perception and Performance. 2000;26:527–51. doi: 10.1037//0096-1523.26.2.527.

- Carretie L, Hinojosa JA, Martin-Loeches M, Mercado F, Tapia M. Automatic attention to emotional stimuli: neural correlates. Human Brain Mapping. 2004;22:290–99. doi: 10.1002/hbm.20037.

- Carroll JM, Russell JA. Do facial expressions signal specific emotions? Judging emotion from the face in context. Journal of Personality and Social Psychology. 1996;70:205–18. doi: 10.1037//0022-3514.70.2.205.

- Davenport JL, Potter MC. Scene consistency in object and background perception. Psychological Science. 2004;15:559–64. doi: 10.1111/j.0956-7976.2004.00719.x.

- de Gelder B, Meeren HKM, Righart R, Van den Stock J, van de Riet WAC, Tamietto M. Chapter 3. Beyond the face: exploring rapid influences of context on face processing. Progress in Brain Research. 2006;155PB:37–48. doi: 10.1016/S0079-6123(06)55003-4.

- Eger E, Jedynak A, Iwaki T, Skrandies W. Rapid extraction of emotional expression: evidence from evoked potential fields during brief presentation of face stimuli. Neuropsychologia. 2003;41:808–17. doi: 10.1016/s0028-3932(02)00287-7.

- Eimer M, Holmes A. An ERP study on the time course of emotional face processing. Neuroreport. 2002;13:427–31. doi: 10.1097/00001756-200203250-00013.

- Ekman P. An argument for basic emotions. Cognition and Emotion. 1992;6:169–200.

- George N, Evans J, Fiori N, Davidoff J, Renault B. Brain events related to normal and moderately scrambled faces. Cognitive Brain Research. 1996;4:65–76. doi: 10.1016/0926-6410(95)00045-3.

- Gratton G, Coles MGH, Donchin E. A new method for off-line removal of ocular artifact. Electroencephalography and Clinical Neurophysiology. 1983;55:468–84. doi: 10.1016/0013-4694(83)90135-9.

- Halgren E, Baudena P, Heit G, Clarke M, Marinkovic K. Spatio-temporal stages in face and word processing. 1. Depth recorded potentials in the human occipital and parietal lobes. Journal of Physiology (Paris). 1994;88:1–50. doi: 10.1016/0928-4257(94)90092-2.

- Hansen CH, Hansen RD. Finding the face in the crowd: an anger superiority effect. Journal of Personality and Social Psychology. 1988;54:917–24. doi: 10.1037//0022-3514.54.6.917.

- Herrmann MJ, Ehlis AC, Ellgring H, Fallgatter AJ. Early stages (P100) of face perception in humans as measured with event-related potentials (ERPs). Journal of Neural Transmission. 2005;112:1073–81. doi: 10.1007/s00702-004-0250-8.

- Hillyard SA, Anllo-Vento L. Event-related brain potentials in the study of visual selective attention. Proceedings of the National Academy of Sciences of the United States of America. 1998;95:781–7. doi: 10.1073/pnas.95.3.781.

- Holmes A, Vuilleumier P, Eimer M. The processing of emotional facial expression is gated by spatial attention: evidence from event-related brain potentials. Cognitive Brain Research. 2003;16:174–84. doi: 10.1016/s0926-6410(02)00268-9.

- Howell DC. Statistical Methods for Psychology. 5th ed. Pacific Grove, CA, USA: Duxbury Thomson Learning; 2002.

- Iidaka T, Matsumoto A, Haneda K, Okada T, Sadato N. Hemodynamic and electrophysiological relationship involved in human face processing: evidence from a combined fMRI-ERP study. Brain and Cognition. 2006;60:176–86. doi: 10.1016/j.bandc.2005.11.004.

- Itier RJ, Taylor MJ. N170 or N1? Spatiotemporal differences between object and face processing using ERPs. Cerebral Cortex. 2004;14:132–42. doi: 10.1093/cercor/bhg111.

- Joyce C, Rossion B. The face-sensitive N170 and VPP components manifest the same brain processes: the effect of reference electrode site. Clinical Neurophysiology. 2005;116:2613–31. doi: 10.1016/j.clinph.2005.07.005.

- Kim H, Somerville LH, Johnstone T, et al. Contextual modulation of amygdala responsivity to surprised faces. Journal of Cognitive Neuroscience. 2004;16:1730–45. doi: 10.1162/0898929042947865.

- Lang PJ, Bradley MM, Cuthbert BN. International Affective Picture System (IAPS): Technical Manual and Affective Ratings. Gainesville: NIMH Center for the Study of Emotion and Attention: University of Florida; 1999.

- LeDoux JE. The Emotional Brain. New York: Simon & Schuster; 1996.

- Lundqvist D, Flykt A, Öhman A. The Karolinska Directed Emotional Faces-KDEF: CD-ROM. Stockholm: Department of Clinical Neuroscience, Psychology Section: Karolinska Institute; 1998.

- McCarthy G, Wood C. Scalp distributions of event-related potentials: an ambiguity associated with analysis of variance models. Electroencephalography and Clinical Neurophysiology. 1985;62:203–8. doi: 10.1016/0168-5597(85)90015-2.

- Meeren HK, van Heijnsbergen CC, de Gelder B. Rapid perceptual integration of facial expression and emotional body language. Proceedings of the National Academy of Sciences of the United States of America. 2005;102:16518–23. doi: 10.1073/pnas.0507650102.

- Mobbs D, Weiskopf N, Lau HC, Featherstone E, Dolan RJ, Frith CD. The Kuleshov effect: the influence of contextual framing on emotional attributions. Social Cognitive and Affective Neuroscience. 2006;1:95–106. doi: 10.1093/scan/nsl014.

- Öhman A, Lundqvist D, Esteves F. The face in the crowd revisited: a threat advantage with schematic stimuli. Journal of Personality and Social Psychology. 2001;80:381–96. doi: 10.1037/0022-3514.80.3.381.

- Palmer SE. The effect of contextual scenes on the identification of objects. Memory and Cognition. 1975;3:519–26. doi: 10.3758/BF03197524.

- Picton TW, Bentin S, Berg P, et al. Guidelines for using human event-related potentials to study cognition: recording standards and publication criteria. Psychophysiology. 2000;37:127–52.

- Pizzagalli DA, Lehmann D, Hendrick AM, Regard M, Pascual-Marqui RD, Davidson RJ. Affective judgments of faces modulate early activity (∼160 ms) within the fusiform gyri. NeuroImage. 2002;16:663–77. doi: 10.1006/nimg.2002.1126.

- Righart R, de Gelder B. Context influences early perceptual analysis of faces–an electrophysiological study. Cerebral Cortex. 2006;16:1249–57. doi: 10.1093/cercor/bhj066.

- Righart R, de Gelder B. Recognition of facial expressions is influenced by emotional scene gist. Cognitive, Affective and Behavioral Neuroscience. 2008;8:264–72. doi: 10.3758/cabn.8.3.264.

- Rousselet GA, Mace MJ, Fabre-Thorpe M. Spatiotemporal analyses of the N170 for human faces, animal faces and objects in natural scenes. Neuroreport. 2004;15:2607–11. doi: 10.1097/00001756-200412030-00009.

- Smith NK, Cacioppo JT, Larsen JT, Chartrand TL. May I have your attention, please: electrocortical responses to positive and negative stimuli. Neuropsychologia. 2003;41:171–83. doi: 10.1016/s0028-3932(02)00147-1.

- Stekelenburg JJ, de Gelder B. The neural correlates of perceiving human bodies: an ERP study on the body-inversion effect. Neuroreport. 2004;15:777–80. doi: 10.1097/00001756-200404090-00007.

- Sugase Y, Yamane S, Ueno S, Kawano K. Global and fine information coded by single neurons in the temporal visual cortex. Nature. 1999;400:869–73. doi: 10.1038/23703.

- Vuilleumier P, Richardson MP, Armony JL, Driver J, Dolan RJ. Distant influences of amygdala lesions on visual cortical activation during emotional face processing. Nature Neuroscience. 2004;7:1271–8. doi: 10.1038/nn1341.

- Williams LM, Palmer D, Liddell BJ, Song L, Gordon E. The ‘when’ and ‘where’ of perceiving signals of threat versus non-threat. NeuroImage. 2006;31:458–67. doi: 10.1016/j.neuroimage.2005.12.009.