Abstract

In many domains of cognitive processing there is strong support for bottom-up priority and delayed top-down (contextual) integration. We ask whether this applies to supra-lexical context that could potentially constrain lexical access. Previous findings of early context integration in word recognition have typically used constraints that can be linked to pair-wise conceptual relations between words. Using an artificial lexicon, we found immediate integration of syntactic expectations based on pragmatic constraints linked to syntactic categories rather than words: phonologically similar “nouns” and “adjectives” did not compete when a combination of syntactic and visual information strongly predicted form class. These results suggest that predictive context is integrated continuously, and that previous findings supporting delayed context integration stem from weak contexts rather than delayed integration.

Spoken word recognition is remarkably efficient. In under a second, “The doctor treated the sick patient,” is easily understood, despite the flow of 24 phonemes, selection of six words from a vocabulary of thousands, and resolution of syntactic ambiguity at the words sick and patient, each of which could be an adjective or a noun. The efficiency of spoken word recognition depends in part on rapid integration of multiple sources of information. In addition to the incoming signal, the preceding linguistic context is a rich source of potential constraints.

One influential theoretical approach posits that contextual constraints serve primarily to evaluate candidate hypotheses generated from the bottom-up match between speech and potential lexical candidates. This “access-selection” framework predicts that, with the possible exception of lexical priming, contextual constraints are not immediately integrated with bottom-up input (Marslen-Wilson, 1987, 1990). Rather, there is a lag during which lexical activation depends solely on goodness of fit to the input. On the alternative view of “continuous integration,” contextual constraints affect the earliest moments of processing, with lexical activation jointly determined by all available bottom-up and top-down constraints (e.g., Gaskell & Marslen-Wilson, 2002).

Dahan and Tanenhaus (2004) reported support for continuous integration using the visual world eye tracking paradigm (Tanenhaus, Spivey-Knowlton, Eberhard & Sedivy, 1995). They examined verb-based thematic constraints, and found that semantic relatedness between verbs and subsequent nominal arguments prevented fixations to context-inappropriate phonological competitors of nouns. Many other studies support continuous integration with methods ranging from cross-modal priming (Tabossi, Colombo & Job, 1987; Tabossi & Zardon, 1993) to event-related potentials (Connolly & Phillips, 1994; Delong, Urbach, & Kutas, 2005; van Berkum, Zwitserlood, Hagoort, & Brown, 2003; van den Brink, Brown & Hagoort, 2001; Van Petten, Coulson, Rubin, Plante & Parks, 1999). However, constraints in these studies can be linked to pairwise conceptual relatedness of words (e.g., verbs and nouns in Dahan & Tanenhaus). Therefore, such results might arise from pre-established semantic relatedness and could be explained by semantic priming. A constraint tied to more abstract syntactic features might be integrated at a different time scale than a constraint mediated by semantic priming.

Indeed, there is minimal evidence for immediate effects of syntactic constraints. For example, syntactic contexts do not eliminate initial priming of an inappropriate sense of a cross-category homophone ambiguity, such as “rose” (Tanenhaus, Leiman, & Seidenberg, 1979), though priming is limited to context-appropriate senses after a short delay, consistent with access-selection (see also Swinney, 1979). However, form-class expectations have limited predictive power, and stronger context might result in detectably earlier effects (Tanenhaus & Lucas, 1987; Tanenhaus, Dell & Carlson, 1987). Shillcock and Bard (1993) observed that closed-class words might provide strong predictive constraints because the class has few members. They examined whether items like /wʊd/ in a closed-class context (“John said that he didn't want to do the job, but his brother would”) prime associates of open-class homophones. They found priming for “timber” immediately after the offset of /wʊd/ in the open-class context (“John said he didn't want to do the job with his brother's wood”), but not in the closed-class context. This result held when they probed halfway through /wʊd/, suggesting that the closed-class context influences the earliest moments of lexical access.

Tanenhaus et al. (1979) and Shillcock and Bard (1993) are both consistent with the continuous integration prediction that top-down information sources have detectable early impact when they are sufficiently predictive. Such a reliability-based model of information integration is functionally equivalent to Bayesian inference (Knill & Richards, 1996), where strength of prior probabilities determines constraint impact. On this view, even though constraints are continuously integrated, weak constraints may appear to be integrated late because their effects are difficult to detect until the bottom-up response is strong (cf. Dahan, Magnuson, & Tanenhaus, 2001). However, given the specificity of the Shillcock and Bard materials, tests of generalization are clearly desirable.
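The reliability-based account above can be illustrated with a small numerical sketch. The code below is not a model from the study; it simply applies Bayes' rule to two cohort candidates, and every probability in it is a hypothetical value chosen for illustration. It shows that when bottom-up evidence is equal, a weak prior barely separates the candidates while a strong prior nearly eliminates the unexpected one.

```python
def posterior(likelihood, prior):
    """Combine bottom-up likelihoods with contextual priors via Bayes' rule."""
    unnormalized = {w: likelihood[w] * prior[w] for w in likelihood}
    total = sum(unnormalized.values())
    return {w: v / total for w, v in unnormalized.items()}


# Hypothetical bottom-up evidence after hearing the onset "pur-":
# both cohort candidates match the input equally well.
likelihood = {"purse": 0.5, "purple": 0.5}

# Hypothetical contextual priors (illustrative values, not estimates).
weak_prior = {"purse": 0.55, "purple": 0.45}    # weak form-class expectation
strong_prior = {"purse": 0.99, "purple": 0.01}  # strongly predictive context

weak = posterior(likelihood, weak_prior)
strong = posterior(likelihood, strong_prior)

print("weak context:  ", weak)
print("strong context:", strong)
```

Under these assumed values, the weak context leaves both candidates close to equally active, which could look like delayed integration in a behavioral measure, whereas the strong context suppresses the unexpected candidate from the outset.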

What might constitute a strong form-class expectation more general than the closed/open class distinction? If a listener hears the word onset “pur—”, access-selection predicts equivalent initial activation of purse and purple (ignoring differences in length, frequency, etc.). If instead the listener hears “click on the pur—” in the context of a display containing a purse, book, cup and shoe, and the cup happens to be purple, conversational convention (Grice's [1975] maxim of quantity) could lead the listener to expect the word at this position to be a bare noun; an adjective is not required for unambiguous reference. But if the display contained blue and green purses and purple and yellow cups, “purple cup” would be much more likely. However, it would be virtually impossible to assemble a reasonable number of items from English well matched within and between form class on frequency, neighborhood, length, semantic features, etc., making this an excellent question to address with an artificial lexicon.

Magnuson, Tanenhaus, Aslin, and Dahan (2003) demonstrated that artificial lexicons show central signature effects of real-time spoken word recognition, including frequency, cohort and rhyme competition, and effects of frequency-weighted neighborhood density. The paradigm has subsequently been applied to a variety of issues in spoken word recognition and lexical learning (Creel, Aslin & Tanenhaus, 2006, 2008; Creel, Tanenhaus & Aslin, 2006; Shatzman & McQueen, 2006) and syntactic processing (Wonnacott, Newport & Tanenhaus, 2008).

In the current study, participants learned nouns (names of shapes) and adjectives (textures). Instructions used English context and word order (e.g., “click on the /pibᴧ/ [adj] /tedu/ [noun]”). The lexicon (see Table 1) contained phonemic cohorts from different syntactic categories (e.g., /pibo/ was a noun and /pibᴧ/ was an adjective) or the same category (e.g., another noun was /pibe/).

Table 1.

Artificial lexical items.

| Noun (shape) | Adjective (texture) |
|---|---|
| pibo | pibᴧ |
| pibe | |
| bupo | bupᴧ |
| bupe | |
| tedu | tedi |
| tedε | |
| dotε | doti |
| dotu | |
| kagæ | kagai |
| kagʊ | |
| gawkʊ | gawkæ |
| gawkai | |

We created conditions where visual context provided strong syntactic expectations, but without the pair-wise conceptual relatedness present in previous studies. We constructed displays where adjectives were required (e.g., identical shapes with different textures) or infelicitous (e.g., unique shapes, making the adjective superfluous), thus controlling the minimally sufficient set of words for unambiguous reference. We tracked eye movements as participants followed spoken instructions to select items in the display. Instructions were always of the form “click on the NOUN” (all shapes different, adjective unnecessary) or “click on the ADJECTIVE NOUN” (identical shapes present with different textures, requiring the adjective for unambiguous reference). If syntactic expectations in conjunction with pragmatic constraints embodied in the visual display can constrain initial lexical access, we should observe competition effects only between cohorts from the same syntactic form-class at the offset of “the”. Thus, this study tests whether the Shillcock and Bard (1993) findings generalize to novel materials and a finer-grained measure, whether perceivers can constrain lexical access based on an abstract expectation generated by the number of nominal types in a display, and therefore whether and how early context not correlated with pairwise lexical semantic relations can mediate bottom-up processing.

Experiment

We extended the artificial lexicon paradigm to include words for adjectives (names for textures) and nouns (shapes). We exploited the naturalistic visual aspects of the paradigm to establish strong pragmatic form-class expectations. By making contingencies perfectly predictive, we were able to test whether, in an ideal case, lexical access would be restricted to candidates from the expected form-class.

Methods

Participants

Fourteen native speakers of English who reported normal or corrected-to-normal vision and normal hearing were paid to participate.

Stimuli

There were 18 bisyllabic nonsense words (9 nouns, referring to shapes, and 9 adjectives referring to textures; see Table 1) designed to be distinctive but possible English words. Only three items had English neighbors: tedu, tedi, and doti had 1, 2, and 3 neighbors, respectively, with summed log frequencies 4.1 or less (Magnuson et al., 2003, reported virtually no interaction between artificial and native lexicons, even when artificial items were constructed to be maximally similar to real words).

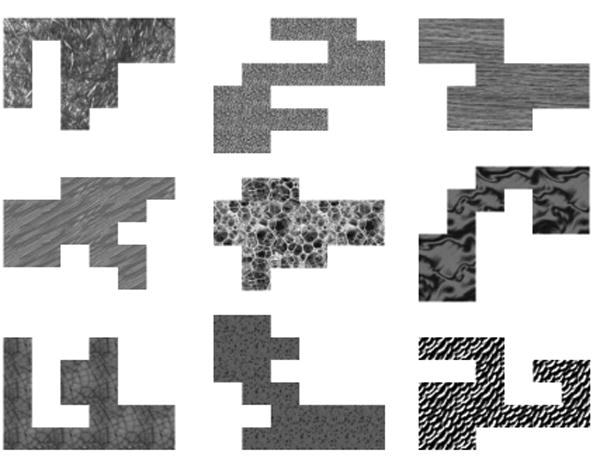

The auditory stimuli (16 bit, 22.025 kHz) were produced by a male native speaker of English in a sentence context (“Click on the /bupe tedu/”). The visual materials included 9 unfamiliar shapes, generated by randomly filling 18 contiguous cells of a 6 × 6 grid, and 9 distinctive textures. Figure 1 shows the shapes, with a different texture applied to each. Names were randomly mapped to shapes and textures for each participant.
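The shape-generation step can be sketched as follows. This is one possible procedure, not necessarily the one used to create the stimuli: it assumes that "contiguous" means edge-adjacent (4-connected) cells and grows a shape one cell at a time from a random starting cell. The function names `random_shape` and `is_contiguous` are hypothetical.

```python
import random

NEIGHBORS = ((1, 0), (-1, 0), (0, 1), (0, -1))


def random_shape(n_cells=18, size=6, rng=None):
    """Grow a random set of n_cells edge-connected cells on a size x size grid."""
    rng = rng or random.Random()
    filled = {(rng.randrange(size), rng.randrange(size))}
    while len(filled) < n_cells:
        # Collect empty cells that share an edge with the current shape.
        frontier = set()
        for r, c in filled:
            for dr, dc in NEIGHBORS:
                nr, nc = r + dr, c + dc
                if 0 <= nr < size and 0 <= nc < size and (nr, nc) not in filled:
                    frontier.add((nr, nc))
        filled.add(rng.choice(sorted(frontier)))
    return filled


def is_contiguous(cells):
    """Check that every cell is reachable from any other via shared edges."""
    cells = set(cells)
    stack = [next(iter(cells))]
    seen = set(stack)
    while stack:
        r, c = stack.pop()
        for dr, dc in NEIGHBORS:
            nxt = (r + dr, c + dc)
            if nxt in cells and nxt not in seen:
                seen.add(nxt)
                stack.append(nxt)
    return seen == cells


shape = random_shape(rng=random.Random(0))
for row in range(6):
    print("".join("#" if (row, col) in shape else "." for col in range(6)))
```

Growing from a frontier guarantees connectivity by construction; a rejection approach (fill 18 random cells, keep only connected results) would also work but would discard most samples.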

Figure 1.

Visual materials. The nine “nouns” referred to the shapes; the nine “adjectives” referred to the textures that could be applied to the shapes. Any texture could be applied to any shape, and name-picture mappings were randomized for every participant.

Procedure

Participants were trained and tested on two consecutive days in 90 to 120 minute sessions. On day 1, participants were trained first on nouns in a two-alternative forced choice (2AFC) task. On each trial, two shapes appeared (both solid black) and the participant heard an instruction (e.g., “click on the bupo”). Auditory stimuli were presented binaurally through headphones. When the participant clicked on an item, one item disappeared, leaving the correct one, and the name was repeated. Items were not presented on consecutive trials, and were pseudo-randomly ordered such that every item was presented 8 times every 72 trials. There were 144 2AFC trials, after which noun training continued with 144 4AFC trials. Throughout, all shapes appeared equally often as distractors.
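One way to produce a trial order with these properties is sketched below. It is an illustrative procedure under stated assumptions, not the authors' script: it assumes nine target items repeated equally often (eight repetitions each fills a 72-trial cycle exactly) and treats "not presented on consecutive trials" as applying to targets. The function name `order_block` is hypothetical.

```python
import random


def order_block(items, reps, rng=None, max_tries=1000):
    """Return a random target order with `reps` of each item and no immediate repeats."""
    rng = rng or random.Random()
    n_trials = len(items) * reps
    for _ in range(max_tries):
        remaining = {item: reps for item in items}
        order = []
        while len(order) < n_trials:
            # Any item with repetitions left, except the previous target.
            options = [i for i, n in remaining.items()
                       if n > 0 and (not order or i != order[-1])]
            if not options:
                break  # dead end: only the previous target is left, so retry
            # Weight by remaining count to keep the counts balanced.
            weights = [remaining[i] for i in options]
            pick = rng.choices(options, weights=weights, k=1)[0]
            order.append(pick)
            remaining[pick] -= 1
        else:
            return order
    raise RuntimeError("no valid order found")


# Placeholder labels standing in for the nine shape names.
nouns = ["noun%d" % i for i in range(1, 10)]
block = order_block(nouns, reps=8, rng=random.Random(1))
print(len(block), block[:6])
```

Weighting choices by remaining count makes dead ends rare, and the retry loop handles the few that occur.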

Adjective training began with two exemplars of the same shape with different textures, and an instruction such as “click on the bupe pibo”. Since participants were familiar with the shape names, it was clear that the new words referred to textures. Each adjective was randomly paired with eight different nouns in each block. After 144 2AFC trials, there were 144 4AFC trials, with four exemplars of the same shape with different textures. These were followed by 144 more 4AFC trials, but with two exemplars each of two identical shapes, each with a different texture (requiring participants to recognize both the adjective and noun).

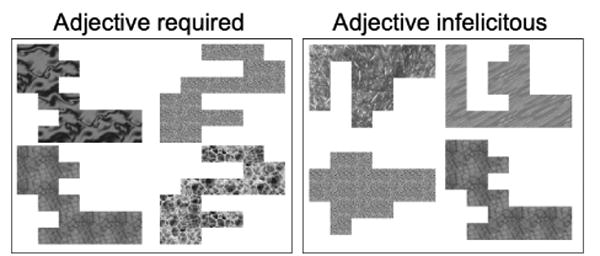

The final training phase involved two types of 4AFC trials. On some trials, four different shapes appeared. On others, two pairs of shapes appeared. On every trial, each shape had a different texture. On 2-type trials (two pairs of identical shapes; left panel of Figure 2), an adjective was required (and specified) for unambiguous reference (e.g., “click on the bupe pibo”). On 4-type trials (four different shapes; right panel of Figure 2), adjectives were not required – each item could be identified unambiguously by shape name, and only the noun was specified (e.g., “click on the pibo”). Using the adjective would violate Grice's (1975) maxim of quantity (do not over-specify), which interlocutors tend to observe in natural conversation.

Figure 2.

Example displays illustrating the pragmatic constraints imposed by number of nominal types in the display. On the left, an adjective is required for unambiguous reference. On the right, an adjective (rather than a bare noun) violates the Maxim of Quantity.

Each adjective was presented eight times per block, paired each time with a different, randomly selected noun. Each noun was presented as a target eight times in the 4-type trials. Participants completed one block of 144 trials of this mixed training on Day 1. On Day 2, the training consisted of four more mixed blocks.

Each day ended with a 4AFC test without feedback. We tracked participants' eye movements using an Eye-Link system. There were six test conditions. In the noun/no cohorts condition, there were four different shapes, and no shape or texture name was a competitor of the target noun. The purpose of this condition (and the adjective/no cohorts condition described below) was to reduce the proportion of trials including cohort items. There were two critical noun conditions that included cohorts. In the noun/noun cohort condition, there were four shapes, and one was a cohort to the target (e.g., /pibe/ given the target /pibo/), but no shape had the target's adjective cohort texture (e.g., the /pibᴧ/ texture). In the noun/adjective cohort condition, four different shapes were displayed. Noun cohorts were not displayed, but an adjective cohort was (e.g., /pibᴧ tedu/). In these conditions, the instruction referred only to the noun (e.g., “click on the pibo”).

In the other three conditions, two exemplars of two different shapes were displayed, requiring an adjective for unambiguous reference. In the adjective/no cohort condition, none of the distractor textures were cohorts of the target, and neither were any of the nouns. In the adjective/adjective cohort condition, one of the non-target textures was a cohort to the target (e.g., /tedε bupo/ given the target /tedi dotu/), and no noun cohorts of the target were displayed. In the adjective/noun cohort condition, none of the distractors had cohort textures, but a noun cohort was displayed (e.g., /bupe tedu/ given the target /tedi dotu/).

Each adjective and noun appeared nine times as the initial or only item in the target noun phrase in the test. In cohort conditions, nouns and adjectives either had one competitor in each form class, or two in the opposite form class. Nouns with noun cohorts appeared in six noun/no cohort trials, two noun/noun cohort trials, and one noun/adjective cohort trial. Nouns with two adjective cohorts appeared in seven noun/no cohort trials, no noun/noun cohort trials, and two noun/adjective cohort trials. The same pattern was used with adjective conditions (yielding 162 test trials).
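The trial counts in this design can be checked with simple arithmetic. The sketch below only restates the numbers given above; the dictionary keys are descriptive labels introduced here for illustration.

```python
# Test trials per word, by the word's cohort structure and trial condition.
design = {
    "one cohort in each form class": {
        "no cohort": 6, "same-class cohort": 2, "other-class cohort": 1,
    },
    "two cohorts in the other form class": {
        "no cohort": 7, "same-class cohort": 0, "other-class cohort": 2,
    },
}

N_WORDS = 18  # 9 nouns + 9 adjectives

# Each word should be the first (or only) word of the target phrase 9 times.
per_word = {kind: sum(counts.values()) for kind, counts in design.items()}
total_trials = N_WORDS * 9

print(per_word)
print(total_trials)
```

Both word types sum to nine trials per word, and 18 words at nine trials each gives the 162 test trials reported.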

Predictions

A non-linguistic characteristic of the displays provides a potential constraint on lexical access: 2-type displays (two instances of each of two shapes, each with different textures) predict an adjective following “the”, while 4-type displays (four distinct shapes) predict a noun following “the.” The access-selection framework predicts that this information should not initially constrain lexical access (early competition among all bottom-up matches irrespective of form class). Continuous integration predicts constraint on the earliest moments of lexical access (constraining competition to members of the expected form class).
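The two predictions can be made concrete with a deliberately idealized sketch. It treats lexical access as an all-or-none candidate set, which neither framework literally claims; it is meant only to show which competitors each account expects at word onset. The mini-lexicon uses items mentioned in the text, and the function names are hypothetical.

```python
# Mini-lexicon built from items mentioned in the text.
LEXICON = {
    "pibo": "noun",
    "pibe": "noun",
    "pibᴧ": "adjective",
    "tedu": "noun",
    "tedi": "adjective",
}


def expected_class(display_shapes):
    """4-type displays (all shapes distinct) predict a noun; 2-type, an adjective."""
    all_distinct = len(set(display_shapes)) == len(display_shapes)
    return "noun" if all_distinct else "adjective"


def candidates(onset, display_shapes, model):
    """Words expected to compete, given the word onset and the display."""
    matches = {w for w in LEXICON if w.startswith(onset)}
    if model == "access-selection":
        return matches  # context does not filter initial activation
    if model == "continuous-integration":
        form_class = expected_class(display_shapes)
        return {w for w in matches if LEXICON[w] == form_class}
    raise ValueError("unknown model: %s" % model)


four_type = ["pibo", "tedu", "dotu", "bupo"]  # four distinct shapes
two_type = ["pibo", "pibo", "tedu", "tedu"]   # two pairs of identical shapes

print(candidates("pib", four_type, "access-selection"))
print(candidates("pib", four_type, "continuous-integration"))
print(candidates("pib", two_type, "continuous-integration"))
```

In this idealization, cross-class cohorts compete only under access-selection, which is the contrast the critical test conditions are designed to detect.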

Results

Training

Accuracy at key points during training and testing is detailed in Table 2. Data from two participants who performed at less than 90% correct on the test on day 2 were excluded.

Table 2.

Accuracy by session. Tests are shown in bold.

| Type | First block | Last block |
|---|---|---|
| 2 noun | .70 | .96 |
| 4 noun | .93 | .97 |
| 4 adjectives, 1 noun | .88 | .96 |
| 4 adjectives, 2 nouns | .97 | .98 |
| Mixed, day 1 | .96 | .96 |
| **Test, day 1** | **.98** | |
| Mixed, day 2 | .96 | .96 |
| **Test, day 2** | **.98** | |

Test

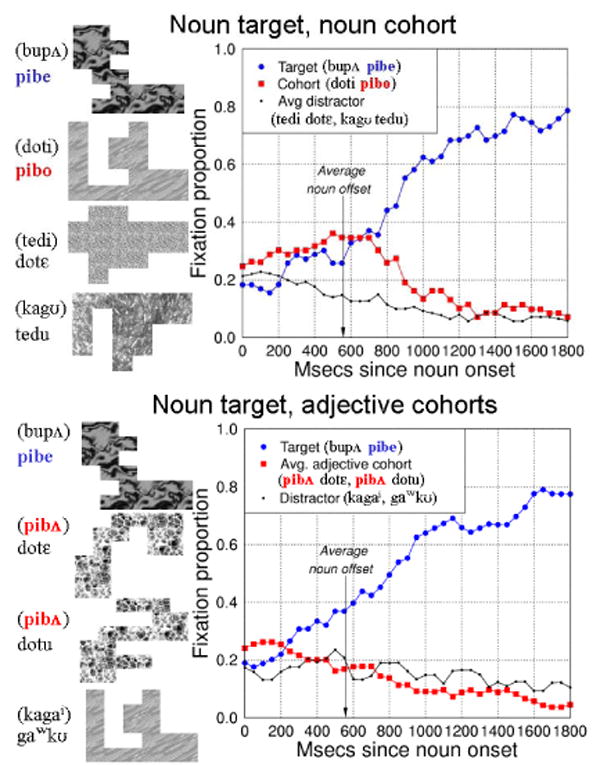

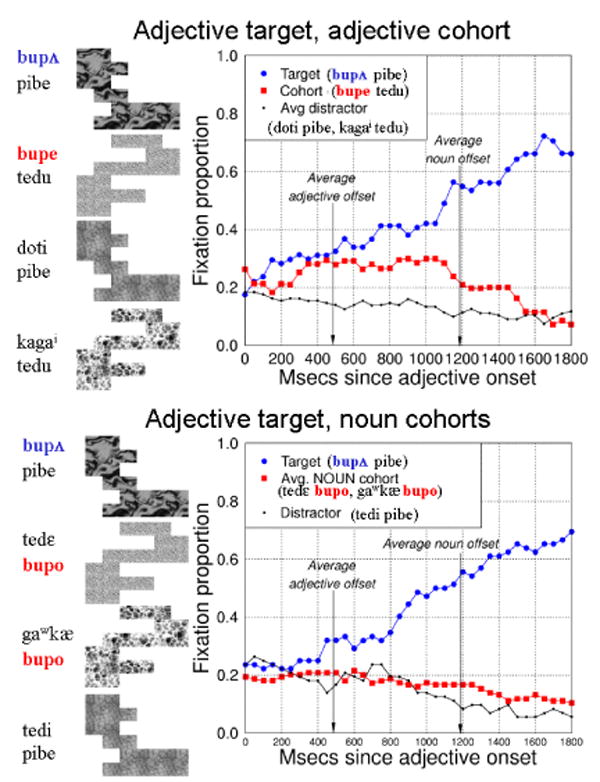

Results from the day 2 test are shown in Figures 3 (critical noun conditions) and 4 (critical adjective conditions). For the adjective/noun cohort condition to require an adjective, two exemplars of each of two different shapes had to be displayed. To make the noun/adjective cohort condition comparable, two items were displayed with textures that were cohorts of the noun target.

Figure 3.

Fixation proportions over time to noun targets and noun cohorts (top panel) and adjective cohorts (bottom panel). Example images to the left of each panel illustrate the trial structure. In experimental displays, images were arranged around a central fixation cross and were not presented with text labels.

Strong, early cohort effects are apparent in the upper panels of Figures 3 and 4 (within-form class competitor conditions) but not in the lower panels (between-form class conditions). To assess whether cohort fixations differed from unrelated distractors in each condition, we conducted an ANOVA on the day 2 test with the following design: 2 (noun or adjective target) × 2 (cohort relevance [same class as target or not]) × 2 (early or late analysis window) × 2 (cohort or mean distractor proportion). The analysis window began 200 ms after target onset (approximately the earliest that signal-driven changes in fixations could emerge, given 150-200 ms to plan and launch saccades; Fischer, 1992; Saslow, 1967) and ended 1000 ms later (by which point cohort fixations were in steep decline for all conditions). Since the crucial question is whether there was early cohort competition, we divided that window in half to yield “early” and “late” windows (see Footnote 1). The key finding is an interaction of relevance and item that stems from greater cohort than distractor fixations only in the relevant cohort conditions (Table 3). This pattern holds in planned comparisons restricted to the early analysis window. That is, cohorts were fixated more than distractors only in the relevant cohort conditions (when the cohort and target are of the same form class). The fact that this pattern holds even for the early window suggests that context constrains lexical activation immediately.
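The windowing step can be sketched as follows. The fixation proportions below are toy values invented for illustration, not data from the study, and the 100 ms sampling interval is likewise an assumption; only the window boundaries (200-700 ms and 700-1200 ms after target onset) come from the analysis described above.

```python
EARLY = (200, 700)   # ms after target onset
LATE = (700, 1200)


def window_mean(times_ms, proportions, window):
    """Mean fixation proportion for samples with start <= time < end."""
    start, end = window
    values = [p for t, p in zip(times_ms, proportions) if start <= t < end]
    return sum(values) / len(values)


# Toy time course sampled every 100 ms (hypothetical values).
times = list(range(0, 1400, 100))
cohort = [0.0, 0.0, 0.1, 0.2, 0.3, 0.3, 0.2, 0.2, 0.1, 0.1, 0.1, 0.0, 0.0, 0.0]
distractor = [0.0, 0.0, 0.1, 0.1, 0.1, 0.1, 0.1, 0.1, 0.1, 0.1, 0.0, 0.0, 0.0, 0.0]

for label, window in (("early", EARLY), ("late", LATE)):
    print(label,
          round(window_mean(times, cohort, window), 3),
          round(window_mean(times, distractor, window), 3))
```

Per-participant (or per-item) window means of this kind would then serve as the dependent measure in the ANOVA, with cohort versus mean distractor proportion as one factor.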

Figure 4.

Fixation proportions over time to adjective targets and adjective cohorts (top panel) and noun cohorts (bottom panel). Example images to the left of each panel illustrate the trial structure. In the experimental displays, images were arranged around a central fixation cross and were not presented with text labels.

Table 3.

Analysis of day 2 test. ANOVAs assessing noun vs. adjective targets (N.S. tests not included), early vs. late analysis windows (200-700 and 700-1200 ms post-target onset), and cohort relevance (relevant = same form class as target) by item type (cohort vs. mean distractor proportions). Crucially, there are more looks to cohorts than distractors, and an interaction of item and relevance (the effect of target type was not significant, nor were any other interactions). Planned comparisons (below) reveal that the interaction stems from greater proportions for cohorts only in the relevant conditions.

Additional planned comparisons show this pattern holds for the early window.

| Effect | Levels (A vs. B) | Mean A | Mean B | F1(1, 11) | p | ω² | F2(1, 10) | p | ω² |
|---|---|---|---|---|---|---|---|---|---|
| **Full analysis** | | | | | | | | | |
| Window | Early vs. Late | .20 | .17 | 21.7 | .001 | .462 | 11.5 | .007 | .535 |
| Relevance | Relevant vs. Irrelevant | .22 | .17 | 17.0 | .002 | .400 | 7.9 | .018 | .441 |
| Item | Cohort vs. Distractor | .24 | .17 | 7.3 | .021 | .210 | 5.7 | .038 | .362 |
| Relevance × Item | | | | 5.8 | .034 | .342 | 8.8 | .014 | .468 |
| **Planned comparisons** | | | | | | | | | |
| Item at Relevant | Cohort vs. Distractor | .26 | .15 | 11.0 | .007 | .294 | 11.1 | .008 | .527 |
| Item at Irrelevant | Cohort vs. Distractor | .17 | .16 | < 1.0 | | | 1.2 | .281 | .072 |
| Item at Relevant, Early window only | Cohort vs. Distractor | .29 | .16 | 10.3 | .008 | .279 | 7.7 | .020 | .434 |
| Item at Irrelevant, Early window only | Cohort vs. Distractor | .19 | .17 | < 1.0 | | | < 1.0 | | |

Discussion

Using an artificial lexicon paradigm to precisely control within- and between-form-class similarity for words referring to shapes and textures, we found strong support for early interaction between acoustic-phonetic information for lexical activation and form-class expectations derived from visually-based pragmatic constraints. In contrast to classic results supporting primacy of bottom-up information over syntactic expectations, sufficiently strong form-class expectations immediately constrained lexical access. In concert with many findings showing a range of impacts of semantic context (e.g., Duffy, Morris, & Rayner, 1988), our results support the continuous integration hypothesis (Dahan & Tanenhaus, 2004; MacDonald, Pearlmutter, & Seidenberg, 1994). On this view, it is possible to observe extreme possibilities along an early-late impact continuum with endpoints that resemble selective access to context-appropriate items (early) and exhaustive access (late). Note as well that immediate effects of context (in the current results and Dahan & Tanenhaus) entail anticipatory impact; the apparent complete absence of cross-class cohort competition requires that the system is prepared for the expected form class at the onset of the target phrase (else we should detect at least transient cross-class cohort competition; see Footnote 2).

Our results add to the understanding of context integration in two ways. First, they show that lexical activation of items in the display depends not just on static perceptual properties, but also on expectations that emerge based on an unfolding utterance and visual context (cf. Altmann & Kamide, 2007). Second, given the similarity of the time course effects, the complex constraint at work here likely operates via mechanisms similar to those of the arguably simpler conceptual and scene-specific constraints in previous work (such as scene constraints on syntactic processing [Knoeferle, Habets, Crocker, & Muente, in press; Tanenhaus et al., 1995], and effects of affordances of tools and objects [Chambers, Tanenhaus, & Magnuson, 2004; Hanna & Tanenhaus, 2003]; see Footnote 3).

Although it will be important to examine whether our results generalize to natural language materials and more probabilistic contexts, the current results provide an important starting point for further explorations of the time course of context integration using artificial materials, and provide an empirical example of immediate integration of bottom-up information with top-down form-class expectations.

Acknowledgments

We thank Dan Mirman and Greg Zelinsky for helpful discussions. This study was supported by NSF SBR-9729095 and NIH DC-005071 to MKT and RNA, and an NSF Graduate Research Fellowship and a Grant-in-Aid of Research from the National Academy of Sciences through Sigma Xi to JSM. Preparation of the manuscript was supported by NIH grants DC-005765 to JSM, and HD-001994 and HD-40353 to Haskins Laboratories.

Footnotes

1. We explored other window sizes and separate analyses of noun and adjective conditions. We were unable to find parameters that did not converge with this analysis.

2. “Preparedness” could result from a bias towards expected class or against the unexpected class. See Mirman, McClelland, Holt, and Magnuson (2008) for simulations of multiple neurophysiologically plausible attentional mechanisms that could influence lexical attention.

3. We cannot rule out the possibility that fixations index a decision process that integrates context and (potentially exhaustive) lexical activation in a cascaded manner and subsequently limits visual attention. We find this unlikely, given the overwhelming absence of cross-class competition here, evidence that fixations reflect lexical activation in the absence of bottom-up support (e.g., semantic effects: Huettig & Altmann, 2005; Yee & Sedivy, 2006), and converging evidence using priming (Shillcock & Bard, 1993).

Contributor Information

James S. Magnuson, Department of Psychology, University of Connecticut, and Haskins Laboratories, New Haven, CT

Michael K. Tanenhaus, Department of Brain and Cognitive Sciences, University of Rochester

Richard N. Aslin, Department of Brain and Cognitive Sciences, University of Rochester

References

- Allopenna PD, Magnuson JS, Tanenhaus MK. Tracking the time course of spoken word recognition using eye movements: Evidence for continuous mapping models. Journal of Memory and Language. 1998;38:419–439.

- Altmann G, Kamide Y. The real-time mediation of visual attention by language and world knowledge: Linking anticipatory (and other) eye movements to linguistic processing. Journal of Memory and Language. 2007;57:502–518.

- Chambers CG, Tanenhaus MK, Magnuson JS. Actions and affordances in syntactic ambiguity resolution. Journal of Experimental Psychology: Learning, Memory, & Cognition. 2004;30:687–696. doi: 10.1037/0278-7393.30.3.687.

- Connolly JF, Phillips NA. Event-related potential components reflect phonological and semantic processing of the terminal words of spoken sentences. Journal of Cognitive Neuroscience. 1994;6:256–266. doi: 10.1162/jocn.1994.6.3.256.

- Cooper RM. The control of eye fixation by the meaning of spoken language: A new methodology for the real-time investigation of speech perception, memory, and language processing. Cognitive Psychology. 1974;6(1):84–107.

- Creel SC, Aslin RN, Tanenhaus MK. Acquiring an artificial lexicon: Segment type and order information in early lexical entries. Journal of Memory and Language. 2006;54:1–19.

- Creel SC, Aslin RN, Tanenhaus MK. Heeding the voice of experience: The role of talker variation in lexical access. Cognition. 2008;106:633–664. doi: 10.1016/j.cognition.2007.03.013.

- Creel SC, Tanenhaus MK, Aslin RN. Consequences of lexical stress on learning an artificial lexicon. Journal of Experimental Psychology: Learning, Memory, and Cognition. 2006;32:15–32. doi: 10.1037/0278-7393.32.1.15.

- Dahan D, Magnuson JS, Tanenhaus MK. Time course of frequency effects in spoken-word recognition: Evidence from eye movements. Cognitive Psychology. 2001;42:317–367. doi: 10.1006/cogp.2001.0750.

- Dahan D, Magnuson JS, Tanenhaus MK, Hogan EM. Tracking the time course of subcategorical mismatches: Evidence for lexical competition. Language and Cognitive Processes. 2001;16(5/6):507–534.

- Dahan D, Tanenhaus MK. Continuous mapping from sound to meaning in spoken-language comprehension: Immediate effects of verb-based thematic constraints. Journal of Experimental Psychology: Learning, Memory, and Cognition. 2004;30:498–513. doi: 10.1037/0278-7393.30.2.498.

- Dahan D, Tanenhaus MK. Looking at the rope when looking for the snake: Conceptually mediated eye movements during spoken-word recognition. Psychonomic Bulletin & Review. 2005;12:453–459. doi: 10.3758/bf03193787.

- Delong K, Urbach T, Kutas M. Probabilistic word pre-activation during language comprehension inferred from electrical brain activity. Nature Neuroscience. 2005;8:1117–1121. doi: 10.1038/nn1504.

- Duffy SA, Morris RK, Rayner K. Lexical ambiguity and fixation times in reading. Journal of Memory and Language. 1988;27:429–446.

- Fischer B. Saccadic reaction time: Implications for reading, dyslexia and visual cognition. In: Rayner K, editor. Eye movements and visual cognition: Scene perception and reading. Springer-Verlag; New York: 1992. pp. 31–45.

- Gaskell MG, Marslen-Wilson WD. Representation and competition in the perception of spoken words. Cognitive Psychology. 2002;45:220–266. doi: 10.1016/s0010-0285(02)00003-8.

- Grice HP. Logic and conversation. In: Cole P, Morgan JL, editors. Syntax and Semantics, Vol 3, Speech Acts. New York: Academic Press; 1975. pp. 41–58.

- Hanna JE, Tanenhaus MK. Pragmatic effects on reference resolution in a collaborative task: evidence from eye movements. Cognitive Science. 2003;28:105–115.

- Huettig F, Altmann GTM. Word meaning and control of eye fixation: Semantic competitor effects and the visual world paradigm. Cognition. 2005;96:B23–B32. doi: 10.1016/j.cognition.2004.10.003.

- Huettig F, Altmann GTM. Visual-shape competition during language-mediated attention is based on lexical input and not modulated by contextual appropriateness. Visual Cognition. 2007;15:985–1018.

- Knill DC, Richards W. Perception as Bayesian Inference. Cambridge University Press; New York, NY: 1996.

- Knoeferle P, Habets B, Crocker MW, Muente TF. Visual scenes trigger immediate syntactic reanalysis: evidence from ERPs during spoken comprehension. Cerebral Cortex. doi: 10.1093/cercor/bhm121. in press.

- MacDonald MC, Pearlmutter NJ, Seidenberg MS. Lexical nature of syntactic ambiguity resolution. Psychological Review. 1994;101(4):676–703. doi: 10.1037/0033-295x.101.4.676.

- Magnuson JS, Dixon JA, Tanenhaus MK, Aslin RN. The dynamics of lexical competition during spoken word recognition. Cognitive Science. 2007;31:1–24. doi: 10.1080/03640210709336987.

- Magnuson JS, Tanenhaus MK, Aslin RN, Dahan D. The microstructure of spoken word recognition: Studies with artificial lexicons. Journal of Experimental Psychology: General. 2003;132(2):202–227. doi: 10.1037/0096-3445.132.2.202.

- Marslen-Wilson WD. Functional parallelism in spoken word-recognition. Cognition. 1987;25:71–102. doi: 10.1016/0010-0277(87)90005-9.

- Marslen-Wilson WD. Activation, competition, and frequency in lexical access. In: Altmann GTM, editor. Cognitive Models of Speech Processing: Psycholinguistics and Computational Perspectives. Cambridge, MA: MIT Press; 1990. pp. 148–172.

- Mirman D, McClelland JL, Holt LL, Magnuson JS. Effects of attention on the strength of lexical influences on speech perception: Behavioral experiments and computational mechanisms. Cognitive Science. 2008;32:398–417. doi: 10.1080/03640210701864063.

- Saslow MG. Latency for saccadic eye movement. Journal of the Optical Society of America. 1967;57:1030–1033. doi: 10.1364/josa.57.001030.

- Shatzman KB, McQueen JM. Prosodic knowledge affects the recognition of newly-acquired words. Psychological Science. 2006;17:372–377. doi: 10.1111/j.1467-9280.2006.01714.x.

- Shillcock RC, Bard EG. Modularity and the processing of closed class words. In: Altmann GTM, Shillcock RC, editors. Cognitive models of speech processing. The Second Sperlonga Meeting. Erlbaum; 1993. pp. 163–185.

- Swinney DA. Lexical access during sentence comprehension: (Re)consideration of context effects. Journal of Verbal Learning and Verbal Behavior. 1979;18:645–659.

- Tabossi P, Colombo L, Job R. Accessing lexical ambiguity: Effects of context and dominance. Psychological Research. 1987;49:161–167.

- Tabossi P, Zardon F. Processing ambiguous words in context. Journal of Memory and Language. 1993;32:359–372.

- Tanenhaus MK, Dell GS, Carlson G. Context effects and lexical processing: A connectionist approach to modularity. In: Garfield JL, editor. Modularity in Knowledge Representation and Natural-Language Processing. Cambridge, MA: MIT Press; 1987. pp. 83–108.

- Tanenhaus MK, Leiman JM, Seidenberg MS. Evidence for multiple stages in the processing of ambiguous words in syntactic contexts. Journal of Verbal Learning and Verbal Behavior. 1979;18:427–440.

- Tanenhaus MK, Lucas MM. Context effects in lexical processing. Cognition. 1987;25:213–234. doi: 10.1016/0010-0277(87)90010-2.

- Tanenhaus MK, Spivey-Knowlton M, Eberhard K, Sedivy JC. Integration of visual and linguistic information in spoken-language comprehension. Science. 1995;268:1632–1634. doi: 10.1126/science.7777863.

- van Berkum JJA, Zwitserlood P, Hagoort P, Brown C. When and how do listeners relate a sentence to the wider discourse? Evidence from the N400 effect. Cognitive Brain Research. 2003;17:701–718. doi: 10.1016/s0926-6410(03)00196-4.

- van den Brink D, Brown C, Hagoort P. Electrophysiological evidence for early contextual influences during spoken-word recognition: N200 versus N400 effects. Journal of Cognitive Neuroscience. 2001;13:967–985. doi: 10.1162/089892901753165872.

- Van Petten C, Coulson S, Rubin S, Plante E, Parks M. Time course of word identification and semantic integration in spoken language. Journal of Experimental Psychology: Learning, Memory, and Cognition. 1999;25:394–417. doi: 10.1037//0278-7393.25.2.394.

- Wonnacott E, Newport EL, Tanenhaus MK. Acquiring and processing verb argument structure: distributional learning in a miniature language. Cognitive Psychology. 2008;56:165–209. doi: 10.1016/j.cogpsych.2007.04.002.

- Yee E, Sedivy J. Eye movements to pictures reveal transient semantic activation during spoken word recognition. Journal of Experimental Psychology: Learning, Memory, and Cognition. 2006;32:1–14. doi: 10.1037/0278-7393.32.1.1.