Abstract

Objective:

To improve understanding of the information-seeking behaviors of public health professionals, the authors conducted this randomized controlled trial involving sixty participants to determine whether library and informatics training, with an emphasis on PubMed searching skills, increased the frequency and sophistication of participants' practice-related questions.

Methods:

The intervention group (n = 34) received evidence-based public health library and informatics training first, whereas the control group (n = 26) received identical training two weeks later. The frequency and sophistication of the questions generated by both intervention and control groups during the interim two-week period served as the basis for comparison.

Results:

The intervention group reported almost 1.8 times as many questions per participant as the control group (1.24 vs. 0.69 questions per participant); however, this difference did not reach statistical significance. The intervention group overall produced more sophisticated (foreground) questions than the control group (18 vs. 9); however, this difference also did not reach statistical significance.

Conclusion:

The training provided in the current study seemed to prompt public health practitioners to identify and articulate questions more often. Training appears to create the necessary precondition for increased information-seeking behavior among public health professionals.

Highlights

Library and informatics training was associated with a trend toward a higher volume of questions formulated by public health practitioner trainees compared with those formulated by members of a control group.

Training resulted in a trend toward increased sophistication in questions formulated by the trainees after training compared to members of the control group.

This study supports Wilson's theory and model of information seeking and verifies previous empirical research studies on information-seeking behavior.

Implications

Library and informatics training may lead to increased question formulation among trainees. This question formulation step serves as a theoretical precondition for information-seeking behavior.

Results lend validation to health sciences librarians' efforts during the past decade to provide related training for public health practitioners.

The randomized controlled trial design of delaying an intervention in a control group might have wide applicability in library and informatics training.

Background

Public Health Information Needs

The Institute of Medicine's 1988 report, The Future of Public Health, prompted a number of major initiatives to improve public health in the United States. This report noted that the then-current public health system was “incapable … of applying fully current scientific knowledge” and lacked a “scientifically sound knowledge base” [1]. In subsequent years, public health informatics would play a pivotal role in both building and using this critical knowledge base throughout US and international public health systems, thereby enabling public health practitioners to find appropriate high-quality information [2–4].

Progress has occurred, although much work remains. The information needs and information-seeking behaviors of public health practitioners still have not been researched extensively [5–7]. Revere and colleagues conducted a comprehensive literature review to ascertain the information needs of public health practitioners and discovered “few formal studies of the information-seeking behaviors of public health professionals” [8]. Nonetheless, there have been several noteworthy studies on the information needs of the US public health workforce. Some exploratory studies have suggested the need for greater awareness of and training in accessing public health information resources [9–12]. Efforts have been launched by the Centers for Disease Control and Prevention and the National Library of Medicine to address hypothesized information needs, both to develop and to provide access to information resources for public health practitioners [13–15].

Unfortunately, the few rigorous research studies that exist have been conducted outside the United States and have had limited applicability inside the United States. For example, Forsetlund and colleagues attempted to understand the information needs of Norwegian public health physicians [16–19], but their work has only tangential relevance for the United States because public health roles and contexts in the United States and Norway have vast differences. These projects have increased understanding of information behavior among public health practitioners tremendously but also have led to revived calls for rigorous, integrative research in the United States [8].

Understanding the information needs of public health practitioners poses an inherently complex set of research problems. The contemporary public health workforce in the United States consists of a variety of professionals, including nurses, health educators, disease prevention specialists, epidemiologists, physicians, program directors, and nutritionists. The roles of these professionals in public health contexts tend to differ from their roles outside public health [8].

Moreover, the broad field of informatics, which is concerned with information-seeking behavior, does not have a unifying theory. Instead, a set of mostly mechanistic or conceptual models currently informs hypothesis testing in information behavior studies [20–22].

Theories of Information-Seeking Behavior

The frequency and volume of questions articulated by a public health practitioner, or any other health professional, pertain to theories of information behavior. The major theories of information behavior have been well summarized by authors including Case [20] and Wilson [21,22]. Case and Wilson both note that models of information seeking share a common characteristic: an individual's recognition of an information need serves as the prerequisite for all subsequent information-seeking behavior. If one does not articulate an information need, then one will not engage in information-seeking behavior [23]. Most models depict this activation of information seeking as prompted by a feeling of uncertainty or a gap in one's current knowledge of a subject, particularly if the missing information has become a priority in one's mind. In this regard, Kuhlthau observes that “[i]nformation searching is traditionally portrayed as a systematic, orderly procedure rather than the uncertain, confusing process users commonly experience” [24].

The concept of “need” in these theoretical models has been difficult to apply in research studies because researchers have had limited success in inferring the full array of possible motivations from either overt behavior or ethnographic participant-observation studies [22]. Participant-observation studies also run the risk of introducing bias, because the observer can interfere with the observed subjects' experience of information need. In other words, the observing researcher might participate in the study context to such an extent that the researcher introduces a perceived need into the mind of a subject who otherwise might not have perceived that need [25].

Wilson's Theoretical Model

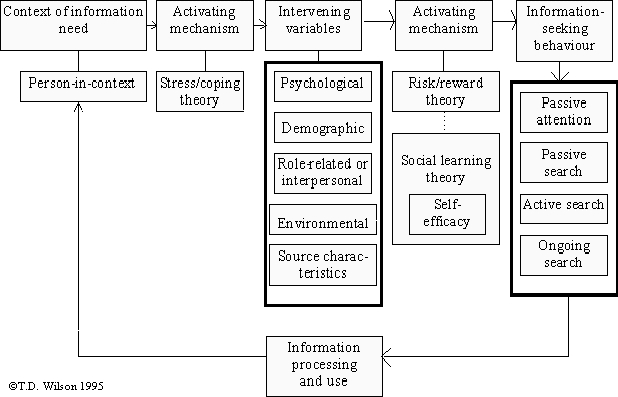

The present study relates most closely to Wilson's information-seeking model (Figure 1), which holds that an individual's sense of self-efficacy plays a major role in determining whether one engages in information-seeking behavior [21]. Ease of access appears to be a second important variable in whether one decides to engage in information seeking [22]. Other empirically validated factors relevant to this study include “possessing the necessary expertise, resources, and time” [26]. The training intervention described in the current study could be interpreted as providing the “expertise” and the “resources” in Wilson's model.

Figure 1.

Wilson's information behavior model. Permission granted to reprint by TD Wilson, Jun 5, 2006.

Wilson's framework focuses on a person at the moment an information need occurs. A gap in knowledge generates stress in the person that activates the information need, mediated by intervening variables such as environmental supports or constraints and an actual activating mechanism [21,22]. The empirical research literature underlying the models described above offers additional findings that inform the present study. Covell and colleagues' landmark study of physicians' information-seeking behavior, for example, led to the hypothesis that training in formulating questions, so that answers can be found effectively, would increase information-seeking activity [27].

Number of Questions to Expect

How often do questions actually arise in professional practice? When designing this study, the authors wondered what volume of questions to expect from the subjects in the study. Several empirical studies have been conducted in study populations including physicians and nurses [27–32]. However, these results offered no direct information about the frequency of questions that might be expected from public health practitioners. The authors reasoned that they should not assume that information seeking occurs identically across disciplines [33]. Thus, investigators had no a priori expectations for the volume of questions to be generated by the subjects of the current study.

Study Hypotheses

This article reports on a combined research and training project intended to produce rigorous research on the information-seeking behavior of the public health workforce. This randomized controlled trial tested two hypotheses:

Evidence-based public health library and informatics training increases the frequency of practice-related questions that public health professionals articulate.

Evidence-based public health library and informatics training increases the sophistication of practice-related questions that public health professionals articulate, as measured by the categorizations of background versus foreground [34] types of questions, discussed further below.

Methods

Study Population

The authors secured approval from the University of New Mexico Health Sciences Center Human Research Review Committee (institutional review board) prior to commencing the focus group and randomized controlled trial phases of this study (approval number: 05-215). Only New Mexico Department of Health (DoH) professionals were eligible to enroll in this study. The investigators defined public health “professionals” broadly, as in a prior study [35], to include administrators, disease prevention specialists, epidemiologists, health educators, nurses, nutritionists, physicians, program directors, and social workers. The investigators included in this study only those DoH professionals who signed an institutional review board consent form, returned at least one survey (Appendix) during a six-week period, and participated in a three-hour evidence-based public health (EBPH) library and informatics course designed for public health practitioners. All participants had agreed in advance to receive their training on either of 2 possible training dates, which were scheduled exactly 2 weeks apart. Participants completing the training and 3 surveys were eligible to receive a $20.00 gift card as an added incentive. The investigators repeated this paired intervention-and-control training pattern 5 times, involving a total of 75 participants at 4 sites around New Mexico (Albuquerque, Las Cruces, Roswell, and Santa Fe), from August 2005 through February 2006. Table 1 tracks the initial enrollees, trainees, and those who completed the study. The 75 participants represented only about half of the 130 participants that DoH administrators had originally expected and planned for.

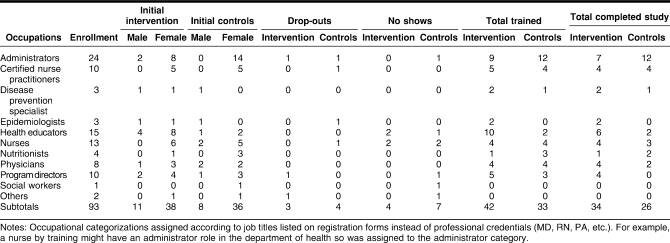

Table 1.

Enrollment to completion: demographic data for all study participants

Intervention and Control Groups

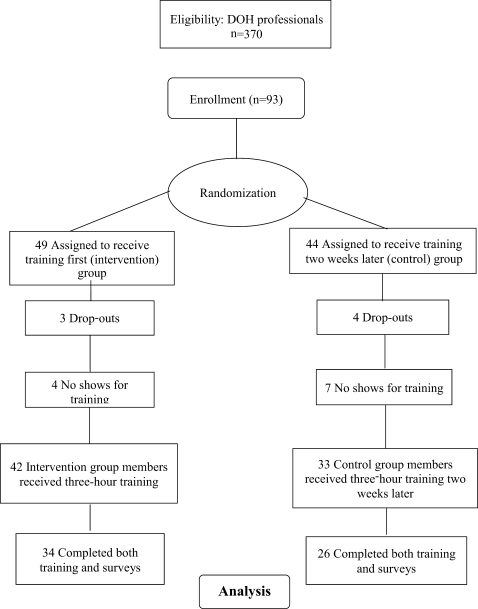

Once the investigators had enrolled all participants, they randomized each participant into either an intervention group or a control group using a web-based randomizing service [36]. The randomization produced two evenly sized groups, each consisting of a mix of professional groups without any observable clusters of the same professions in either the intervention group or the control group. Figure 2 depicts the sequence of training between the groups, whereas Figure 3 provides a sequential overview of the enrollment and participation processes for this experimental research methodology.
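The article identifies the randomizing service only by citation [36], so the following is an illustration rather than a description of that service: a shuffle-and-split allocation using Python's standard `random` module, which yields two groups whose sizes differ by at most one. The `randomize` function and the participant IDs are hypothetical.

```python
import random


def randomize(participant_ids, seed=None):
    """Shuffle enrolled IDs and split them into intervention and control groups.

    Hypothetical stand-in for a web-based randomizing service: shuffle-and-split
    keeps the two group sizes within one of each other.
    """
    rng = random.Random(seed)          # seeded generator so the allocation can be audited
    ids = list(participant_ids)
    rng.shuffle(ids)
    half = (len(ids) + 1) // 2         # intervention gets the extra member when the count is odd
    return {"intervention": ids[:half], "control": ids[half:]}


if __name__ == "__main__":
    enrolled = [f"P{n:02d}" for n in range(1, 16)]   # 15 hypothetical enrollees at one site
    groups = randomize(enrolled, seed=2005)
    print(len(groups["intervention"]), len(groups["control"]))  # 8 7
```

Flipping an independent coin for each participant is a common alternative; it is equally unbiased but does not guarantee equal group sizes.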

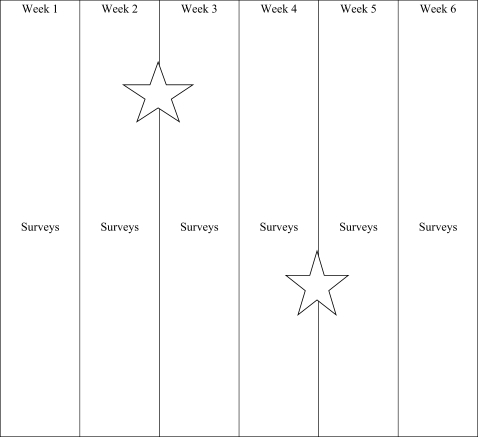

Figure 2.

Study period for each site.

Stars indicate training dates. The star at the end of week 2 marks the intervention group's training date, and the star at the end of week 4 marks the control group's training date.

Figure 3.

Randomized controlled trial flow chart

Once the training period began, enrollees who arrived for training on a date for which they were not scheduled were still trained but were not included in this experimental study. Anyone who attended the training without having enrolled beforehand was also trained, space permitting, but was likewise excluded from this study.
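The inclusion rules stated in the Study Population section and in the paragraph above can be collected into a single predicate. This is a minimal sketch; the `Enrollee` fields and function names are hypothetical labels for the criteria the article lists (signed consent, advance enrollment, training on the assigned date, at least one returned survey), and "completing 3 surveys" for the gift card is read here as "at least three".

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class Enrollee:
    # Hypothetical fields mirroring the inclusion criteria described in the Methods.
    signed_consent: bool
    enrolled_in_advance: bool
    assigned_training: date              # the date set by randomization
    attended_training: Optional[date]    # None if the person never attended
    surveys_returned: int


def included_in_trial(p: Enrollee) -> bool:
    """True only if the enrollee meets every inclusion rule for the analysis."""
    return (
        p.signed_consent
        and p.enrolled_in_advance
        and p.attended_training == p.assigned_training  # wrong-date attendees are excluded
        and p.surveys_returned >= 1
    )


def eligible_for_gift_card(p: Enrollee) -> bool:
    """Incentive rule, read here as: attended the training and returned at least 3 surveys."""
    return p.attended_training is not None and p.surveys_returned >= 3
```

Keeping the analysis rule and the incentive rule as separate functions reflects the article's distinction: a person could be trained (or even earn the gift card) without being counted in the trial.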

Intervention

The intervention group received its three-hour EBPH training session exactly two weeks before the control group's identical three-hour session. The investigators made the paired training sessions as identical as possible by giving the same opening remarks, adhering to an identical class schedule, and using exactly the same training materials. The trainer even told the same jokes and wore identical clothing during both paired sessions. Therefore, to the best of the investigators' ability to equalize the training sessions [37], only the timing of the training differed between the intervention and control sessions. The three-hour session [38] covered basic EBPH principles [39–41], such as definitions and types of EBPH questions; levels of evidence [42]; evaluation of information and statistics websites relevant to public health; PubMed training tailored to public health practitioners' needs [43,44]; and free, peer-reviewed, web-based journals.

Jenicek's definition of EBPH served as the standard for describing EBPH in both project publicity and training materials. Jenicek defined EBPH as the “conscientious, explicit, and judicious use of current best evidence in making decisions about the care of communities and populations in the domain of health protection, disease prevention, health maintenance and improvement (health promotion) … the process of systematically finding, appraising, and using contemporaneous research findings as the basis for decisions in public health” [39]. The first author designed these training sessions based on the findings of the aforementioned training reports [9,10], to the extent that these reports offered concrete details. The first author also drew on four years' experience teaching in the University of New Mexico Master of Public Health (MPH) Program, with minor modifications suggested by two separate focus groups [45]. The approach to these training sessions largely overlapped with the public health information needs identified by O'Carroll and colleagues [13], Lynch [46], and, to a limited extent, Lee and colleagues [10]. Since the completion of this project, LaPelle and colleagues have also described a similar, overlapping approach [47].

The two-week period during which the intervention group had been trained and the control group had not yet been trained served as the focus of data collection. Each question was labeled with its submitter's group (intervention or control), and the two groups' questions were then compared for frequency and sophistication.

Survey

This study sought to collect all questions generated by individuals in either the intervention group or the control group. During the six-week study period, all participants, regardless of group status, were emailed a brief reminder along with a survey (Appendix) that took approximately two minutes to complete. These reminders were emailed three times per week on Mondays, Wednesdays, and Fridays. The brief surveys asked participants to record any work-related questions that had occurred to them recently. The specific survey wording stated, “Describe in a single sentence, if possible, a question related to performing your daily job duties on the attached survey form.” Participants were asked whether or not they attempted to answer the question, with prompts to indicate their experiences. Although instructed to submit only one question per survey form, some respondents included more than one question per form. All participants were encouraged at the time of enrollment in the study to submit as many questions as had occurred to them.
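The reminder schedule described above (three emails per week, on Mondays, Wednesdays, and Fridays, over the six-week study period) can be sketched with Python's standard `datetime` module. The start date and function names are hypothetical; each reminder is tagged with its study week so that the weeks 3–4 window between the two training dates (Figure 2) can be selected directly.

```python
from datetime import date, timedelta

# Monday, Wednesday, Friday in Python's date.weekday() numbering (Monday == 0).
REMINDER_WEEKDAYS = {0, 2, 4}


def reminder_schedule(start_monday, weeks=6):
    """Return (study_week, send_date) pairs for every Mon/Wed/Fri in the study period."""
    if start_monday.weekday() != 0:
        raise ValueError("the study period is assumed to start on a Monday")
    schedule = []
    for offset in range(weeks * 7):
        day = start_monday + timedelta(days=offset)
        if day.weekday() in REMINDER_WEEKDAYS:
            schedule.append((offset // 7 + 1, day))   # study weeks are numbered from 1
    return schedule


def comparison_window(schedule, weeks=(3, 4)):
    """Reminder dates that fall between the intervention and control training dates."""
    return [day for week, day in schedule if week in weeks]


schedule = reminder_schedule(date(2024, 1, 1))   # hypothetical start date (a Monday)
print(len(schedule), comparison_window(schedule)[0])   # 18 2024-01-15
```

Six weeks at three reminders per week gives 18 reminders per participant, 6 of which fall in the comparison window.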

To minimize any Hawthorne effect, in which observation itself changes participants' behavior [48], the authors included a two-week survey period before any training session so that all participants could become comfortable with the thrice-weekly survey routine. Randomization was then intended to distribute any lingering Hawthorne effect evenly between the intervention and control groups.

Similar randomized controlled trials have reported the need to reduce contamination between control and intervention groups [49]. For example, contamination might involve members of the intervention and control group discussing their training experiences with one another. The authors took precautions such as sending emails to members of the control and intervention groups separately so participants were only aware of other members of their own groups through addressee notations. Many training participants were dispersed geographically or traveled extensively, thereby further reducing the likelihood of contamination.

Data Analysis

The second author (Carr), blinded to participant group status, coded and entered all survey responses, including surveys that did not contain questions, into an Excel spreadsheet. Participants' names were removed to protect their privacy during the final data analyses. For each question, the spreadsheet recorded the group designation of the submitting participant, the date of the question, and the text of the question.
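A minimal sketch of the coded data and of the per-participant rate reported in the Results, assuming one row per submitted question (or one row for a survey with no question) carrying the fields the spreadsheet recorded, with a study-week value derived from the date. The `CodedResponse` type and `questions_per_participant` function are hypothetical names. The denominator is the full group size, so participants who submitted no questions still count.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class CodedResponse:
    # Hypothetical row layout: one row per question, or one row for a survey with no question.
    group: str         # "intervention" or "control"
    study_week: int    # derived from the submission date
    text: str          # question text with the participant's name removed ("" if none)


def questions_per_participant(rows, group_sizes, window=(3, 4)):
    """Questions submitted during the comparison window divided by full group size.

    Dividing by the whole group, not by respondents, keeps participants who
    submitted no questions in the denominator.
    """
    counts = Counter(
        r.group for r in rows if r.study_week in window and r.text.strip()
    )
    return {group: counts[group] / n for group, n in group_sizes.items()}
```

With the reported group sizes of 34 and 26, for example, about 42 and 18 questions in weeks 3–4 would yield the reported rates of 1.24 and 0.69; those totals are back-calculated from the rates, not quoted from the article.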

The study also employed Richardson's and Mulrow's classification system for questions as a measure of their relative sophistication. Background questions were defined as arising when one has “little familiarity” with a subject, so that textbooks can often supply the answer, and foreground questions as arising for individuals familiar with a subject and therefore often answerable using journal articles [34]. The investigators operationalized a background question as any question that a member of the reference staff could answer using reference collection resources. Two of the investigators (Carr and Eldredge) evaluated whether each question was background or foreground; they initially disagreed on fewer than five questions and discussed these until they reached full consensus.
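The double-coding step can be sketched as follows, assuming each coder's labels are stored in a dictionary keyed by a hypothetical question ID. The function names are illustrative; `compare_coders` lists the questions on which the two coders disagree, so they can be discussed to consensus, and reports raw percent agreement. The article reports agreement only as disagreement on fewer than five questions and does not state that any agreement statistic was computed.

```python
def compare_coders(coder_a, coder_b):
    """Compare two coders' background/foreground labels for the same questions.

    Returns raw percent agreement and the sorted IDs needing a consensus discussion.
    """
    if coder_a.keys() != coder_b.keys():
        raise ValueError("both coders must label the same set of questions")
    disagreements = sorted(q for q in coder_a if coder_a[q] != coder_b[q])
    agreement = 1 - len(disagreements) / len(coder_a)
    return agreement, disagreements


def resolve(coder_a, consensus):
    """Final labels: coder A's labels, overridden by the labels agreed in discussion."""
    return {**coder_a, **consensus}
```

Because every disagreement is resolved by discussion, the final labels do not depend on which coder's dictionary is passed to `resolve` first.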

Two-tailed t-test analyses were applied to assess differences in the frequency of questions and their levels of sophistication as categorized by either background or foreground types.
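The article does not say whether the pooled-variance (Student) or unequal-variance (Welch) form of the two-tailed t-test was used. The sketch below assumes Welch's form and uses only the Python standard library: the t-distribution CDF is computed by numerical integration after the substitution x = sqrt(df)·tan(θ), and the critical value by bisection. It returns the difference in means, its two-tailed p-value, and the 95% confidence interval, the quantity reported in the Results. In practice a statistics package would normally be used instead, for example `scipy.stats.ttest_ind(a, b, equal_var=False)`.

```python
import math
from statistics import mean, variance


def t_cdf(x, df, steps=2000):
    """CDF of Student's t distribution with df degrees of freedom (df >= 1).

    Substituting x = sqrt(df) * tan(theta) turns the density into
    C * cos(theta) ** (df - 1) on the bounded interval [0, atan(|x| / sqrt(df))],
    which Simpson's rule (with an even number of steps) integrates accurately.
    """
    c = math.exp(math.lgamma((df + 1) / 2) - math.lgamma(df / 2)) / math.sqrt(math.pi)
    upper = math.atan(abs(x) / math.sqrt(df))
    h = upper / steps
    total = 1.0 + math.cos(upper) ** (df - 1)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * math.cos(i * h) ** (df - 1)
    half_area = c * total * h / 3           # P(0 < T < |x|)
    return 0.5 + half_area if x >= 0 else 0.5 - half_area


def t_quantile(p, df):
    """Critical value t with P(T <= t) = p for 0.5 < p < 1, found by bisection."""
    lo, hi = 0.0, 1000.0
    for _ in range(60):
        mid = (lo + hi) / 2
        if t_cdf(mid, df) < p:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


def welch_t_test(a, b, level=0.95):
    """Two-tailed Welch t-test and confidence interval for mean(a) - mean(b)."""
    va, vb = variance(a) / len(a), variance(b) / len(b)   # squared standard errors
    se = math.sqrt(va + vb)
    if se == 0:
        raise ValueError("both samples have zero variance")
    diff = mean(a) - mean(b)
    # Welch-Satterthwaite approximation to the degrees of freedom
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    t_stat = diff / se
    p_value = 2 * (1 - t_cdf(abs(t_stat), df))
    margin = t_quantile(1 - (1 - level) / 2, df) * se
    return {"diff": diff, "t": t_stat, "df": df, "p": p_value,
            "ci": (diff - margin, diff + margin)}
```

Applied to per-participant question counts for the two groups, the interval is centered on the observed difference in means, and p < 0.05 holds exactly when the interval excludes zero, which is the decision rule used in the Results.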

Results

Table 1 describes the distribution of participants, by either intervention or control group, across occupational and gender categories.

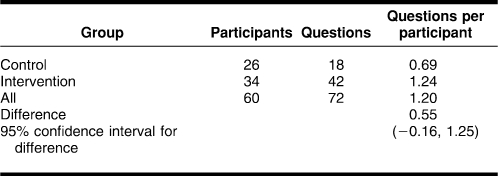

Hypothesis One: Number of Reported Questions

Table 2 shows the number of questions reported by the intervention and control groups during weeks 3 and 4 of the 6-week study. Intervention group members, who had been trained, articulated almost twice as many questions per participant as members of the still-untrained control group during weeks 3 and 4 (1.24 vs. 0.69 questions). The 95% confidence interval for the difference in the mean number of questions per participant (−0.16, 1.25), which is centered on the observed difference of about 0.55, did not exclude zero, indicating that the difference did not reach significance at the 0.05 level.

Table 2.

Questions reported by intervention and control groups and ratios of questions to study participants during weeks 3–4

Hypothesis Two: Background Versus Foreground Categorizations

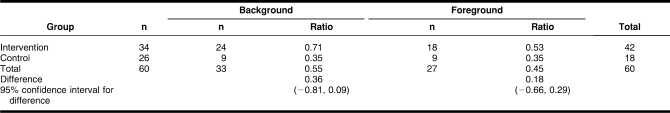

Across both groups combined, participants generated more background than foreground questions. Control group members generated a higher proportion of foreground questions than intervention group members (50.0% vs. 42.9%). As shown in Table 3, however, intervention group members generated more questions overall, so the absolute number of foreground questions was higher in the intervention group. The intervention group reported a mean of 0.53 foreground questions per participant, compared with 0.35 foreground questions per control group participant. The 95% confidence intervals for the differences in the mean number of questions of each type did not exclude zero, so these differences were not statistically significant.

Table 3.

Number of background and foreground questions and ratio of questions to number of persons in group during weeks 3–4
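The percentages and per-participant rates reported for Hypothesis Two can be reproduced with a few lines of arithmetic. This sketch takes the group sizes (34 and 26) and foreground counts (18 and 9) from the Abstract; the total question counts (42 and 18) are an assumption, back-calculated from the reported rates of 1.24 and 0.69 questions per participant rather than quoted from Table 3.

```python
GROUP_SIZE = {"intervention": 34, "control": 26}
FOREGROUND = {"intervention": 18, "control": 9}
# Assumption: totals back-calculated from the reported 1.24 and 0.69 questions per participant.
TOTAL_QUESTIONS = {"intervention": 42, "control": 18}


def summarize(group):
    """Per-group rates and shares for weeks 3-4, rounded as in the text."""
    n, fg, total = GROUP_SIZE[group], FOREGROUND[group], TOTAL_QUESTIONS[group]
    return {
        "questions_per_participant": round(total / n, 2),
        "foreground_share_pct": round(100 * fg / total, 1),
        "foreground_per_participant": round(fg / n, 2),
        "background_per_participant": round((total - fg) / n, 2),
    }


for g in GROUP_SIZE:
    print(g, summarize(g))
```

Under that assumption, the output matches the text (42.9% vs. 50.0% foreground; 0.53 vs. 0.35 foreground questions per participant) and shows why a lower foreground proportion can coexist with a higher foreground count: the intervention group simply asked more questions of both types.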

Reported Information-Seeking Behavior

This study did not set out to test whether members of the trained intervention group would be more likely than members of the untrained control group to pursue answers to their questions. Nonetheless, the investigators were interested to find that 80% of the intervention group members' surveys (n = 28/35) reported an attempt to answer the question, compared with only 50% of the untrained control group members' surveys (n = 8/16) during weeks 3–4.

Discussion

EBPH training appeared to increase the number of questions reported by trained public health practitioners. The results were also consistent with the hypothesis that trained public health practitioners would pose more sophisticated questions, as measured by an increase in foreground questions. However, neither formal hypothesis test reached statistical significance, likely owing in part to the small sample sizes.

The training intervention in this study was associated with an increased rate of question formulation reported by participants. This question formulation stage is a theoretical precondition for information seeking. In Wilson's model, depicted in Figure 1, training can provide this activating mechanism, so the current results offer support for Wilson's model. The observed trends toward increased question frequency and sophistication further suggest validation of broadscale collaborative training initiatives involving diverse members of the public health workforce over the past decade [50–53], because training apparently leads to an essential prerequisite of information-seeking behavior. Yet these results need further validation through scientific replication [54–58], by launching similar experimental studies designed to clarify which types of training designs are most effective and how training can be structured to prompt more sophisticated subsequent questions [59]. Future investigators may employ a prospective cohort study to help predict the effect of training on participants' anticipated desire to pursue answers to their questions. Brettle's systematic review also notes unresolved research questions regarding the comparative efficacy of training regimens and the applicability of various approaches in different situations [60].

There are several other plausible explanations for this study's inability to confirm, at a statistically significant level, the authors' second hypothesis regarding the increasing sophistication of participant questions. A single three-hour training session may have been an insufficient catalyst to increase the proportion of more sophisticated foreground questions. The distinction between the two question types may also be more continuous than categorical, which introduces vagueness near the boundary between background and foreground questions and thus a measurement problem. Finally, participants may not have been drawn from the professions more likely to have foreground than background questions. Future research could investigate whether such tendencies or clusters of information needs exist among various professional groups in the public health community.

Study Limitations and the Need for Replication

A steady rate of attrition occurred among prospective enrollees during the weeks leading up to either training date, mostly because potential participants were deployed or reassigned in response to Hurricanes Katrina and Rita in 2005. The authors conservatively estimated that at least 30–40 potential participants could not commit in advance because of these storms and did not enroll. Some enrollees had to drop out of the study as their training date approached, because of these events or because of work reassignments, as some DoH employees were assigned to the Gulf States. A transition from a client-based to a web-based email system at the DoH during the July-to-August 2005 enrollment period, and email server problems during the January-to-February 2006 enrollment period, appeared to hamper overall recruitment. The final number of 60 participating practitioners therefore fell short of the 130 expected participants.

The “Methods” section of this article describes how the study design attempted to minimize any Hawthorne effect. Participants might instead have experienced the opposite phenomenon of “survey fatigue” due to the six-week survey period. Increasing the $20.00 incentive might help minimize survey fatigue [61,62], provided the incentive is downplayed during initial enrollment for the reasons explained in the “Quantity of Questions” section below.

As noted above, one threat to validity overshadowed this study. Most of the recruitment and participation for this experiment occurred during a unique period when the New Mexico DoH was deploying and reassigning personnel in response to the public health disaster caused by Hurricane Katrina, further aggravated by the threat of Hurricane Rita. The authors repeatedly observed that many participants were generally distracted by these events and their temporary job reassignments. Thus, the results of this study could have been affected by a variation of the threat to validity known as “history” [63–65]. Replication of this study under different circumstances could overcome this potential threat to external validity. Replication would also facilitate future meta-analyses.

Quantity of Questions

This study demonstrated that a training intervention was associated with a trend toward an increased number of questions articulated by public health practitioners. At the outset, the authors were uncertain how many questions individual public health practitioners might generate during the observation period. The present study did not attempt to establish such a number, although it suggested the need for further research to gauge how many questions public health practitioners might articulate. Prior research on nurses' and physicians' questions revealed a wide range in the number of articulated questions [9, 10, 43–50]. The present study suggested, however, that public health practitioners posed far fewer questions than physicians.

The present study monetarily rewarded participants who submitted questions via the repeated surveys in addition to completing the training. Meta-analyses and randomized controlled trials suggest that monetary incentives increase overall survey response rates [66–69], and monetary incentives additionally tend to increase survey participation across contexts [70]. Another study determined that issuing monetary rewards is ethical [71]. Researchers might amplify these results in similar studies by offering higher monetary rewards, with the expectation that participants will submit more questions, to determine whether public health practitioners articulate more questions overall than the present study revealed. Anecdotally, the authors observed that a subset of perhaps fifteen prospective participants seemed motivated primarily by the gift cards, in exchange for submitting the minimum three questions required, rather than by more altruistic reasons. Thus, future studies that increase the value of the gift cards might need to guard against overpromoting them by emphasizing the training and survey participation aspects.

Translational Research

The international biomedical community generally refers to the migration of experimental research findings into professional practice as “translational research.” Type I translational research applies laboratory findings from basic medical sciences research to clinical practice. Type II translational research ensures that the best clinical evidence reaches the community, thereby benefiting the public [72–74]. Translational processes ideally transfer evidence-based research findings into community-level practice quickly. While this process can be slow and sometimes iterative, translational research ultimately seeks to benefit the public [75,76]. The current experimental study can provide a template for conducting a distinct form of translational research in the health sciences librarianship profession, because it links a theory to a rigorous empirical research project, which in turn is linked to a training experience anticipated to benefit all of the participants. In the process, the experiment validates the training experience, providing evidence that training can serve as a catalyst for future information-seeking behavior. This observed behavior change might also promote the long-term practice of lifelong learning and the drive to stay current in one's profession through information seeking, thereby benefiting the public.

The specific type of randomized controlled trial design described in this article, training the intervention group followed by identical training for the control group after a delay, has great potential for adaptation not only for outreach training studies, but also for curriculum-based library and informatics instruction. These latter contexts also might provide greater control of the incentive systems for academic learners.

Conclusion

This randomized controlled trial found a trend toward increased articulation of questions among public health practitioners following training, compared with personnel who had not yet received such training, lending support to the nationwide effort to train the public health workforce. The study also revealed a trend supporting the second hypothesis, which predicted that a training event would stimulate participants to pose more sophisticated foreground questions instead of background questions. This randomized controlled trial also demonstrated that members of the health sciences librarianship profession could adapt this basic design, delaying an intervention for a control group, to test similar hypotheses. On a broader scale, this study supported Wilson's theory and model of information seeking and verified previous empirical research studies on information-seeking behavior, while also reinforcing health sciences librarians' existing focus on providing training to aid this group's information seeking.

Acknowledgments

The authors gratefully acknowledge the New Mexico Department of Health's assistance in implementing this project, including the regional directors or their designees Karen Armitage, Debra Belyeu, Eugene Marciniak, and Margy Wienbar, for their leadership and support; the Department of Health professionals who participated in this study; and Holly Buchanan, AHIP, Janis Teal, AHIP, and Cathy Brandenburg at the University of New Mexico Health Sciences Library and Informatics Center for their support.

Appendix

Survey Email

Greetings:

As part of the research component of our public health informatics training program, please complete the five-minute survey below or in the attachment to this email. Describe in a single sentence, if possible, a question related to performing your daily job duties on the attached survey form. Then, check the appropriate boxes that follow. You are welcome to use either the attachment or the email text below—whichever is more convenient.

Either email jeldredge@salud.unm.edu or fax (505.272.5350) this survey to Jon Eldredge.

Thank you. Your responses will help us improve future public health informatics training.

Name:

Date:

Briefly state your question: _________________

Did you attempt to find the answer? □ Yes □ No

If no, why not?

□ Not quite sure how to formulate my question

□ Not enough time

□ Not important enough

□ No idea where to find the answer

□ No access to needed resources

□ Lack of skills to find needed information

□ Other: (explain) _________________________

If yes, did you find an answer? □ Yes □ No

Comments: _____

If you did not have any questions for this survey, please check this box □ and return this survey. Please return promptly to Jon Eldredge at jeldredge@salud.unm.edu or via fax 505.272.5350.

Footnotes

Funded by the National Library of Medicine under National Library of Medicine contract N01-LM-1-3515 with the Houston Academy of Medicine-Texas Medical Center Library.

This article has been approved for the Medical Library Association's Independent Reading Program <http://www.mlanet.org/education/irp/>.

Contributor Information

Jonathan D. Eldredge, jeldredge@salud.unm.edu, Associate Professor and Library Knowledge Consultant.

Richard Carr, rcarr@salud.unm.edu, Lecturer III and Reference and User Support Services Coordinator; Health Sciences Library and Informatics Center, University of New Mexico, MSC09 5100, 1 University of New Mexico, Albuquerque, NM 87131-0001.

David Broudy, David.Broudy1@state.nm.us, Epidemiologist, State of New Mexico, Department of Health, 1111 Stanford Drive Northeast, Albuquerque, NM 87106.

Ronald E. Voorhees, voorhees@operamail.com, former Chief Medical Officer, State of New Mexico, Department of Health, 1190 St. Francis Drive, Santa Fe, NM 87502.

References

- 1. Institute of Medicine. The future of public health. Washington, DC: National Academy Press; 1988. pp. 19, 32.

- 2. Lasker R.D. The quintessential role of information in public health. In: Data needs in an era of health reform: proceedings of the 25th Public Health Conference on Records and Statistics; Jul 17–19, 1995; Washington, DC. pp. 3–10.

- 3. Friede A., Blum H.L., McDonald M. Public health informatics: how information-age technology can strengthen public health. Annu Rev Public Health. 1995;16:239–52. doi: 10.1146/annurev.pu.16.050195.001323.

- 4. Momen H. Equitable access to scientific and technical information for health. Bull World Health Organ. 2003;81(10):700.

- 5. Humphreys B.L. Meeting information needs in health policy and public health: priorities for the National Library of Medicine and the National Network of Libraries of Medicine. J Urban Health. 1998 Dec;75(4):878–83. doi: 10.1007/BF02344515.

- 6. Rambo N. Information resources for public health practice. J Urban Health. 1998 Dec;75(4):807–25. doi: 10.1007/BF02344510.

- 7. Rambo N., Dunham P. Information needs and uses of the public health workforce—Washington, 1997–1998. MMWR Morb Mortal Wkly Rep. 2000 Feb 18;49(6):118–20.

- 8. Revere D., Turner A.M., Madhavan A., Rambo N., Bugni P.F., Kimball A.M., Fuller S.S. Understanding the information needs of public health practitioners: a literature review to inform design of an interactive digital knowledge management system. J Biomed Inform. 2007 Aug;40(4):410–21. doi: 10.1016/j.jbi.2006.12.008.

- 9. Rambo N., Zenan J.S., Alpi K.M., Burroughs C.M., Cahn M.A., Rankin J. Public Health Outreach Forum: lessons learned [special report]. Bull Med Libr Assoc. 2001 Oct;89(4):403–6.

- 10. Lee P., Giuse N.B., Sathe N.A. Benchmarking information needs and use in the Tennessee public health community. J Med Libr Assoc. 2003 Jul;91(3):322–36.

- 11. Wallis L.C. Information-seeking behavior of faculty in one school of public health. J Med Libr Assoc. 2006 Oct;94(4):442–6.

- 12. McKnight M., Peet M. Health care providers' information seeking: recent research. Med Ref Serv Q. 2000 Summer;19(2):27–50. doi: 10.1300/J115v19n02_03.

- 13. O'Carroll P.W., Cahn M.A., Auston I., Selden C.R. Information needs in public health and health policy: results of recent studies. J Urban Health. 1998 Dec;75(4):785–93. doi: 10.1007/BF02344508.

- 14. Baker E.L., Porter J. Practicing management and leadership: creating the Information Network for Public Health Professionals. J Public Health Manag Pract. 2005 Sep;11(5):469–73. doi: 10.1097/00124784-200509000-00018.

- 15. Zenan J.S., Rambo N., Burroughs C.M., Alpi K.M., Cahn M.A., Rankin J. Public Health Outreach Forum: report [special report]. Bull Med Libr Assoc. 2001 Oct;89(4):400–3.

- 16. Forsetlund L., Bjørndal A. The potential for research-based information in public health: identifying unrecognized information needs. BMC Public Health. 2001 Jan 30.

- 17. Forsetlund L., Bradley P., Forsen L., Nordheim L., Jamtvedt G., Bjørndal A. Randomized controlled trial of a theoretically grounded tailored intervention to diffuse evidence-based public health practice. BMC Med Educ. 2003 Mar 13.

- 18. Forsetlund L., Bjørndal A. Identifying barriers to the use of research faced by public health physicians in Norway and developing an intervention to reduce them. J Health Serv Res Policy. 2002 Jan;7(1):10–8. doi: 10.1258/1355819021927629.

- 19. Forsetlund L., Talseth K.O., Bradley P., Nordheim L., Bjørndal A. Many a slip between a cup and a lip: process evaluation of a program to promote and support evidence-based public health practice. Eval Rev. 2003 Apr;27(2):179–209. doi: 10.1177/0193841X02250528.

- 20. Case D.O. Looking for information: a survey of research on information seeking, needs, and behavior. Boston, MA: Academic Press; 2002. pp. 75–7, 113–40.

- 21. Wilson T.D. Models of information behavior research. J Doc. 1999 Jun;55(3):249–70.

- 22. Wilson T.D. Information behavior: an interdisciplinary perspective. Inf Process Manage. 1997 Jul;33(4):551–72.

- 23. Dervin B. Libraries reaching out with health information to vulnerable populations: guidance from research on information seeking and use. J Med Libr Assoc. 2005 Oct;93(4 suppl):S74–80.

- 24. Kuhlthau C.C. A principle of uncertainty for information seeking. J Doc. 1993 Dec;49(4):339–55.

- 25. Gorman P.N. Information needs of physicians. J Am Soc Inf Sci. 1995 Dec;46(10):729–36.

- 26. Gorman P.N., Ash J., Wykoff L. Can primary care physicians' questions be answered using the medical journal literature? Bull Med Libr Assoc. 1994 Apr;82(2):140–6.

- 27. Covell D.G., Uman G.C., Manning P.R. Information needs in office practice: are they being met? Ann Intern Med. 1985 Oct;103(4):596–9. doi: 10.7326/0003-4819-103-4-596.

- 28. McKnight M. The information seeking of on-duty critical care nurses: evidence from participant observation and in-context interviews. J Med Libr Assoc. 2006 Apr;94(2):145–51.

- 29. Rasch R.F.R., Cogdill K.W. Nurse practitioners' information needs and information seeking: implications for practice and education. Holist Nurs Pract. 1999 Jul;13(4):90–7. doi: 10.1097/00004650-199907000-00013.

- 30. Ely J.W., Burch R.J., Vinson D.C. The information needs of family physicians: case-specific clinical questions. J Fam Pract. 1992 Sep;35(3):265–9.

- 31. Gorman P.N., Helfand M. Information seeking in primary care: how physicians choose which clinical questions to pursue and which to leave unanswered. Med Decis Making. 1995 Apr;15(2):113–9. doi: 10.1177/0272989X9501500203.

- 32. Osheroff J.A., Forsythe D.E., Buchanan B.G., Bankowitz R.A., Blumenfeld B.H., Miller R.A. Physicians' information needs: analysis of questions posed during clinical teaching. Ann Intern Med. 1991 Apr 1;114(7):576–81. doi: 10.7326/0003-4819-114-7-576.

- 33. Skelton B. Scientists and social scientists as information users: a comparison of results of science user studies with the investigation into information requirement of the social sciences. J Libr. 1973 Apr;5(2):139–56.

- 34. Richardson W.S., Mulrow C.D. Lifelong learning and evidence-based medicine for primary care. In: Noble J., ed. Textbook of primary care medicine. St. Louis, MO: Mosby; 2001. pp. 2–9.

- 35. Walton L.J., Hasson S., Ross F.V., Martin E.R. Outreach to public health professionals: lessons learned from a collaborative Iowa public health project. Bull Med Libr Assoc. 2000 Apr;88(2):165–71.

- 36. Urbaniak G.C., Plous S. Research randomizer [Internet]. Middletown, CT: Social Psychology Network; 2008 [cited 28 Feb 2008]. <http://www.randomizer.org>.

- 37. Eldredge J.D. The randomized controlled trial design: unrecognized opportunities for health sciences librarianship. Health Info Libr J. 2003 Jun;20(suppl 1):34–44. doi: 10.1046/j.1365-2532.20.s1.7.x.

- 38. Lasker R.D. Strategies for addressing priority information problems in health policy and public health. J Urban Health. 1998 Dec;75(4):888–95. doi: 10.1007/BF02344517.

- 39. Jenicek M. Epidemiology, evidenced-based medicine, and evidence-based public health. J Epidemiol. 1997 Dec;7(4):187–97. doi: 10.2188/jea.7.187.

- 40. Brownson R.C., Gurney J.G., Land G.H. Evidence-based decision making in public health. J Public Health Manag Pract. 1999 Sep;5(5):86–97. doi: 10.1097/00124784-199909000-00012.

- 41. Gray J.A.M. Evidence-based public health. In: Trinder L., Reynolds S., eds. Evidence-based practice: a critical appraisal. Oxford, UK: Blackwell Science; 2000. pp. 89–110.

- 42. Nutbeam D. Achieving ‘best practice’ in health promotion: improving the fit between research and practice. Health Educ Res. 1996 Sep;11(3):317–26. doi: 10.1093/her/11.3.317.

- 43. Alpi K.M. Expert searching in public health. J Med Libr Assoc. 2005 Jan;93(1):97–103.

- 44. Adily A., Westbrook J.I., Coiera E.W., Ward J.E. Use of on-line evidence databases by Australian public health practitioners. Med Inform Internet Med. 2004 Jun;29(2):127–36. doi: 10.1080/14639230410001723437.

- 45. Mullaly-Quijas P., Ward D.H., Woelfl N. Using focus groups to discover health professionals' information needs: a regional marketing study. Bull Med Libr Assoc. 1994 Jul;82(3):305–11.

- 46. Lynch C. The retrieval problem for health policy and public health: knowledge bases and search engines. J Urban Health. 1998 Dec;75(4):794–806. doi: 10.1007/BF02344509.

- 47. LaPelle N.R., Luckmann R., Simpson E.H., Martin E.R. Identifying strategies to improve access to credible and relevant information for public health professionals: a qualitative study. BMC Public Health. 2006;6:89. doi: 10.1186/1471-2458-6-89.

- 48. Roethlisberger F.J., Dickson W.J. Management and the worker: an account of a research program conducted by the Western Electric Company, Hawthorne Works, Chicago. Cambridge, MA: Harvard University Press; 1939. pp. 194–9, 227.

- 49. Rosenberg W.M.C., Deeks J., Lusher A., Snowball R., Dooley G., Sackett D. Improving searching skills and evidence retrieval. J R Coll Physicians Lond. 1998 Nov;32(6):557–63.

- 50. Cogdill K.W. Introduction: public health information outreach. J Med Libr Assoc. 2007 Jul;95(3):290–2. doi: 10.3163/1536-5050.95.3.290.

- 51. Humphreys B.L. Building better connections: the National Library of Medicine and public health. J Med Libr Assoc. 2007 Jul;95(3):293–300. doi: 10.3163/1536-5050.95.3.293.

- 52. Cahn M.A., Auston I., Selden C.R., Cogdill K., Baker S., Cavanaugh D., Elliott S., Foster A.J., Leep C.J., Perez D.J., Pomietto B.R. The Partners in Information Access for the Public Health Workforce: a collaboration to improve and protect the public's health, 1995–2006. J Med Libr Assoc. 2007 Jul;95(3):301–9. doi: 10.3163/1536-5050.95.3.301.

- 53. Cogdill K.W., Ruffin A.B., Stavri P.Z. The National Network of Libraries of Medicine's outreach to the public health workforce: 2001–2006. J Med Libr Assoc. 2007 Jul;95(3):310–5. doi: 10.3163/1536-5050.95.3.310.

- 54. Plutchak T.S. Building a body of evidence [editorial]. J Med Libr Assoc. 2005 Apr;93(2):193–5.

- 55. Butz W.P., Torrey B.B. Some frontiers in social science. Science. 2006 Jun 30;312(5782):1898–900. doi: 10.1126/science.1130121.

- 56. Klein J.G., Brown G.T., Lysyk M. Replication research: a purposeful occupation worth repeating. Can J Occup Ther. 2000 Jun;67(3):155–61. doi: 10.1177/000841740006700310.

- 57. Rosenthal R. Replication in behavioral research. J Soc Behav Pers. 1990;5(4):1–30.

- 58. Schneider B. Building a scientific community: the need for replication. Teach Coll Rec. 2004 Jul;106(7):1471–83.

- 59. Smith C.A., Ganschow P.S., Reilly B.M., Evans A.T., McNutt R.A., Osei A., Saquib M., Surabhi S., Yadav S. Teaching residents evidence-based medicine skills: a controlled trial of effectiveness and assessment of durability. J Gen Intern Med. 2000;15(10):710–5. doi: 10.1046/j.1525-1497.2000.91026.x.

- 60. Brettle A. Information skills training: a systematic review of the literature. Health Info Libr J. 2003;20(suppl 1):3–9. doi: 10.1046/j.1365-2532.20.s1.3.x.

- 61. Fink A. How to conduct surveys. 3rd ed. Thousand Oaks, CA: Sage Publications; 2006. p. 57.

- 62. Bourque L.B., Fielder E.P. How to conduct self-administered and mail surveys. Thousand Oaks, CA: Sage Publications; 1995.

- 63. Miller D.C., Salkind N.J. Handbook of research design and social measurement. 6th ed. Thousand Oaks, CA: Sage Publications; 2002. pp. 49–51.

- 64. Neuman W.L. Social research methods: qualitative and quantitative approaches. 6th ed. Boston, MA: Pearson Education; 2006. pp. 259–68.

- 65. Shadish W.R., Cook T.D., Campbell D.T. Experimental and quasi-experimental designs for generalized causal inference. Boston, MA: Houghton Mifflin Company; 2002. pp. 54–6.

- 66. Singer E., Van Hoewyk J., Gebler N., Raghunathan T., McGonagle. The effect of incentives on response rates in interviewer-mediated surveys. J Off Stat. 1999;15(2):217–30.

- 67. Church A.H. Estimating the effect of incentives on mail survey response rates: a meta-analysis. Public Opin Q. 1993 Spring;57(1):62–79.

- 68. Whiteman M.K., Langenberg P., Kjerulff K., McCarter R., Flaws J.A. A randomized trial of incentives to improve response rates to a mailed women's health questionnaire. J Womens Health. 2003;12(8):821–8. doi: 10.1089/154099903322447783.

- 69. Roberts L.M., Wilson S., Roalfe A., Bridge P. A randomized controlled trial to determine the effect on response of including a lottery incentive in health surveys. BMC Health Serv Res. 2004;4:30. doi: 10.1186/1472-6963-4-30.

- 70. Groves R.M., Singer E., Corning A. Leverage-saliency theory of survey participation: description and an illustration. Public Opin Q. 2000 Fall;64(3):299–308. doi: 10.1086/317990.

- 71. Singer E., Bossarte R.M. Incentives for survey participation: when are they coercive? Am J Prev Med. 2006 Nov;31(5):411–8. doi: 10.1016/j.amepre.2006.07.013.

- 72. Sussman S., Valente T.W., Rohrbach L.A., Skara S., Pentz M.A. Translation in the health professions: converting science into action. Eval Health Prof. 2006 Mar;29(1):7–32. doi: 10.1177/0163278705284441.

- 73. Rohrbach L.A., Grana R., Sussman S., Valente T.W. Type II translation: transporting prevention interventions from research to real-world settings. Eval Health Prof. 2006 Sep;29(3):302–33. doi: 10.1177/0163278706290408.

- 74. Colón-Ramos U., Lindsay A.C., Monge-Rojas R., Greaney M.L., Campos H., Peterson K.E. Translating research into action: a case study on trans fatty acid research and nutrition policy in Costa Rica. Health Policy Plan. 2007 Nov;22(6):363–74. doi: 10.1093/heapol/czm030.

- 75. Ginexi E.M., Hilton T.F. What's next for translation research? Eval Health Prof. 2006 Sep;29(3):334–47. doi: 10.1177/0163278706290409.

- 76. Simons-Morton B.G., Winston F.K. Translational research in child and adolescent transportation safety. Eval Health Prof. 2006 Mar;29(1):33–64. doi: 10.1177/0163278705284442.