Abstract

Objectives:

The authors developed a tool to assess the quality of search filters designed to retrieve records for studies with specific research designs (e.g., diagnostic studies).

Methods:

The UK InterTASC Information Specialists' Sub-Group (ISSG), a group of experienced health care information specialists, reviewed the literature to evaluate existing search filter appraisal tools and determined that these tools were inadequate for their needs. The group held consensus meetings to develop a new filter appraisal tool consisting of a search filter appraisal checklist and a structured abstract. ISSG members tested the final checklist using three published search filters.

Results:

The detailed ISSG Search Filter Appraisal Checklist captures relevance criteria and methods used to develop and test search filters. The checklist includes categorical and descriptive responses and is accompanied by a structured abstract that provides a summary of key quality features of a filter.

Discussion:

The checklist is a comprehensive appraisal tool that can assist health sciences librarians and others in choosing search filters. The checklist records filter design methods and search performance measures, such as sensitivity and precision. It can also aid filter developers by identifying the core methods information that should be reported so that a filter's suitability can be assessed. The generalizability of the checklist to filters other than methodological search filters remains to be explored.

Highlights

A growing number of search filters designed to identify research conducted using specific research methods are being published.

Users may need help to identify and select filters.

The authors developed a structured tool to extract the key methods and performance data from reports describing search filters.

Implications

The UK InterTASC Information Specialists' Sub-Group (ISSG) Search Filter Appraisal Checklist can assist with the practice of evidence-based librarianship.

The tool assesses the methods, reliability, and generalizability of search filters, and completed appraisals are available on the ISSG search filter website.

Introduction

Search filters are developed to improve the efficiency and effectiveness of searching and are typically created by identifying and combining search terms to retrieve records with a common feature [1]. Filters can be expert informed, research based, or a combination [1]. Information about the methods of filter development, along with the results of testing, is important to enable potential users to judge whether the filter is relevant and reliable [1,2].

Over the last two decades, research methods have been increasingly used to develop and test search filters, to make them more robust and reliable [3–6]. Research-based search filters are included in bibliographic databases such as PubMed (Clinical Queries function), and others have been developed to assist with international study identification exercises for databases such as CENTRAL and the Database of Abstracts of Reviews of Effects (DARE) [5–9]. Search filters are proliferating as librarians and researchers try to identify records of studies with specific designs (e.g., randomized controlled trials) to support evidence-based health care [5,7–9]. For example, at least eight search filters are available for retrieving diagnostic test accuracy studies from MEDLINE [10]. Even experienced health sciences librarians may find it challenging to select appropriate filters and to advise researchers about which, if any, to use for a particular search query.

In evidence-based health care, many critical appraisal tools have been developed to assess the quality and relevance of research reports [11–13]. The UK InterTASC Information Specialists' Sub-Group (ISSG), which supports the research groups providing technology assessments to the National Institute for Health and Clinical Excellence in the United Kingdom, identified the need for such a tool to help its members select from the search filters on its website [14]. This paper describes the ISSG's process for developing a tool to appraise search filters that would help its members, health sciences librarians, and others choose the most relevant filter for their needs.

Methods

The ISSG Search Filter Appraisal Checklist was developed using consensus methods over three meetings of the ISSG during 2006 and 2007. As experienced health care information specialists, ISSG members felt that they had the relevant skills to develop such a tool: they had experience in publishing search filters, testing search filter performance, practicing critical appraisal, and developing checklists and structured abstracts.

Assessment of Existing Tools

Before the first meeting, the ISSG members searched the MEDLINE and Library and Information Science Abstracts databases and their own personal reference collections to identify existing tools. The searches included the following terms (with the asterisk representing truncation):

(“search filter*” OR “search hedge*” OR “search strateg*”) AND (appraisal OR checklist* OR tool* OR assessment*)
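To illustrate how the asterisk truncation and the Boolean grouping in this search string behave, the following Python sketch evaluates the same query against a short piece of text such as a title. It is a simplified, hypothetical model: real interfaces to MEDLINE and Library and Information Science Abstracts apply their own truncation, phrase-searching, and field rules, and the helper names used here are illustrative only.

```python
from __future__ import annotations

import re

# Concept groups from the search string above; '*' marks right-hand truncation.
FILTER_TERMS = ["search filter*", "search hedge*", "search strateg*"]
TOOL_TERMS = ["appraisal", "checklist*", "tool*", "assessment*"]


def term_to_regex(term: str) -> re.Pattern[str]:
    """Turn a term such as 'search strateg*' into a case-insensitive regex.

    A trailing '*' matches zero or more further word characters; the words of
    a phrase must appear consecutively, separated by whitespace.
    """
    parts = []
    for word in term.split():
        if word.endswith("*"):
            parts.append(re.escape(word[:-1]) + r"\w*")
        else:
            parts.append(re.escape(word) + r"\b")
    return re.compile(r"\b" + r"\s+".join(parts), re.IGNORECASE)


def matches_any(text: str, terms: list[str]) -> bool:
    """OR within a concept group: true if any term occurs in the text."""
    return any(term_to_regex(term).search(text) for term in terms)


def matches_query(text: str) -> bool:
    """AND between the two concept groups."""
    return matches_any(text, FILTER_TERMS) and matches_any(text, TOOL_TERMS)


print(matches_query("A checklist for appraising search filters"))  # both concepts present
print(matches_query("Search strategies for diagnostic studies"))   # no tool concept
print(matches_query("Tools for assessing search hedges"))
```

Truncation only extends the stem as written, so in this model "assessing" is not matched by assessment*, and "appraising" is not matched by the untruncated term appraisal; the third title is retrieved only because tool* matches "Tools".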

Consensus Meetings

The team held a series of meetings in person with follow-up conversations through email to discuss existing filter appraisal tools, draft and test checklists to promote discussion of key elements, and determine the final form of the checklist. The group also debated the need for an accompanying summary or abstract to complement the filter checklist and provide an overview of the methods used to develop a filter.

As noted, the group drafted a pilot checklist to begin discussion of tool specifications. The team tested the pilot checklist against a recent filter developed by Zhang et al., which had a detailed methods description [15], and then developed a revised checklist informed by members' critiques of the pilot tool. At a second meeting, the group tested the revised checklist against three filters that used different methods of filter design [16–18]. Two of the filters were from published articles with detailed methods sections [16,18], and one was published on a website that reported little about its development [17]. During the meeting, ISSG members discussed the usability, clarity, practicality, and reproducibility of the draft checklist and of two abstract or summary formats (Figures 1 and 2).

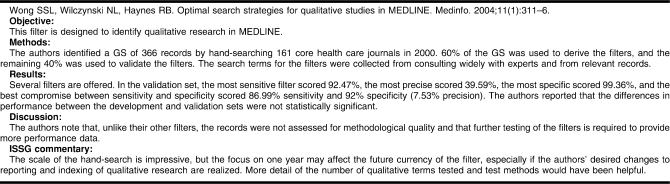

Figure 1.

Example brief abstract format (describing filters published by Wong et al.) tabled and rejected by the UK InterTASC Information Specialists' Sub-Group (ISSG)

Figure 2.

Example structured abstract format (describing filters published by Wong et al.) tabled and accepted by the ISSG

Results

Review of Existing Search Filter Appraisal Tools

One checklist was identified by the searches: Jenkins's search filter appraisal checklist [1]. The ISSG felt that Jenkins's checklist was helpful but not entirely suitable because it focused on determining in general terms whether filter design methods were reported rather than on collecting filter design details. The tool also offered few opportunities to extract data describing a filter's performance. For example, Jenkins's checklist asked “Do the authors report clearly how the filter performance was tested?” but did not ask what performance testing was undertaken or prompt the assessor to report performance data. Jenkins's checklist also asked some highly technical questions that might be difficult for some assessors to answer; for example, “Does the gold standard have sufficient power to allow statistically significant results?” Some of the questions, such as “Are the methods of search term derivation clearly described, and are they reasonable and likely to be effective?”, were difficult to answer because they combined several elements. ISSG members agreed that Jenkins's checklist was a helpful prompt; however, a more detailed checklist was required.

Pilot Checklist Development and Tool Specifications

Based on information gained through assessing the existing filter tool and participants' prior knowledge of searching, the ISSG drafted a pilot checklist and a brief summary. The checklist and the summary template were tested on a search filter by Zhang et al. [15], and the group discussed their strengths and weaknesses and identified the key concepts the checklist should cover:

the focus and scope of a filter: limitations, generalizability, and obsolescence;

the quality of the methods used to develop the filter: specifically, how gold standards of relevant records (sets of publications relevant to a topic, identified through hand-searching publications or other methods, that may be used to identify search terms and/or test the performance of a filter) were identified, how search terms were identified, how the strategy was developed, and how the filter performance was tested on the test gold standard (internal validity) and on separate validation gold standards (external validity).
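The performance measures that assessors record, such as sensitivity and precision, can be computed by comparing a filter's retrievals with a gold standard. The following Python sketch is a hypothetical illustration, not the method of any particular filter developer. It assumes that the retrieved records and the gold standard are drawn from the same pool of hand-searched records, and it also reports number needed to read (1/precision), the measure named in the title of one of the appraised filter studies [16]. Running the same function on the test gold standard and on a separate validation gold standard mirrors the internal and external validity distinction above; all record identifiers are invented.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class FilterPerformance:
    sensitivity: float            # proportion of gold-standard records retrieved
    precision: float              # proportion of retrieved records that are relevant
    number_needed_to_read: float  # records screened per relevant record (1 / precision)


def evaluate_filter(retrieved: set[str], gold_standard: set[str]) -> FilterPerformance:
    """Compare the records a filter retrieves with a gold standard of relevant records.

    Assumes both sets hold record identifiers from the same hand-searched pool.
    """
    if not gold_standard or not retrieved:
        raise ValueError("both the retrieved set and the gold standard must be non-empty")
    relevant_retrieved = len(retrieved & gold_standard)
    sensitivity = relevant_retrieved / len(gold_standard)
    precision = relevant_retrieved / len(retrieved)
    nnr = 1 / precision if precision > 0 else float("inf")
    return FilterPerformance(sensitivity, precision, nnr)


# Hypothetical record IDs, invented for illustration only.
test_gold = {"t1", "t2", "t3", "t4"}
validation_gold = {"v1", "v2", "v3", "v4", "v5"}
retrieved_from_test_pool = {"t1", "t2", "t3", "x1", "x2", "x3"}
retrieved_from_validation_pool = {"v1", "v2", "v3", "y1", "y2", "y3", "y4", "y5"}

internal = evaluate_filter(retrieved_from_test_pool, test_gold)              # internal validity
external = evaluate_filter(retrieved_from_validation_pool, validation_gold)  # external validity
print(internal)
print(external)
```

In this invented example the filter has a sensitivity of 0.75 and a precision of 0.5 on the test gold standard, but only 0.6 and 0.375 on the validation gold standard. A drop of this kind is the sort of information that separate external validation is intended to reveal.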

The group agreed that a checklist should contain both categorical and descriptive information. A checklist should avoid numerical quality scores, both for individual elements and for the overall tool, because of known difficulties in assigning scores to individual dimensions of a tool and in interpreting a final combined score [19]. ISSG members also felt that the problem of reporting adequacy should be addressed by wording comments to make clear that the quality of a search filter's design can only be assessed from the (sometimes limited) information provided in the filter creators' reports.

Additionally, the group felt that the format of a checklist should be flexible to cope with the variety of search filter design methods. The stages of search filter design and purpose should also be broken down into focused questions, and the checklist should include data extracted from the publication describing the filter. The members agreed that the ISSG checklist should allow for narrative comment.

The ISSG also addressed the need for a summary statement to complement the checklist. Two alternative templates were developed: a one hundred–word summary and a longer, structured abstract (Figures 1 and 2). The structured abstract template was designed to describe the filter objective, the methods used to develop the filter, key validation data, any reported limitations of the filter design, and additional comments, as appropriate.

Using these design principles, the group drafted a revised checklist and abstract template.

Refinement of the Checklist Tool

As described in the “Methods” section, the ISSG tested the revised checklist against three published filters [16–18] with varied design methods and noted several improvements. The revised checklist captured relevance information more effectively, and members felt that the revision addressed the issue that assessors can assess only what a filter's authors report. The tool achieved this not only by recording what was reported about the design, but also by including prompts reflecting issues of design quality. These prompts should alert assessors to consider whether (unreported) alternative approaches might have been more suitable.

The ISSG felt that the revised checklist was flexible enough to capture the growing variety of methods reported in search filter design. It could capture information about multiple gold standards and validation testing activity. It also allowed an assessor to report performance comparisons against other filters, which strengthened the information available for deciding between filters. The checklist, however, still required work to capture information on how strategies were derived from the selected search terms.

ISSG members also chose between the summary and abstract formats to accompany the checklist portion of the filter appraisal tool. A structured abstract was agreed to be more helpful than a one hundred–word summary because it captured the filter objective, the main methods used to develop the filter, any key validation data, and any major limitations to the filter design. An abstract also provided space to summarize the strengths and weaknesses of the filter design. ISSG members agreed that the abstract was suitable for quick assessment of relevance, with the checklist offering the essential detail required for informed decision making.

Following discussions, the checklist was revised again and underwent a final round of feedback. The ISSG agreed on the final ISSG Search Filter Appraisal Checklist and structured abstract format at a third meeting in April 2007. The final ISSG Search Filter Appraisal Checklist is shown in Table 1. Examples of completed checklists are published on the ISSG website [20], and an example structured abstract is shown in Figure 2.

Table 1.

UK InterTASC Information Specialists' Sub-Group (ISSG) Search Filter Appraisal Checklist

Discussion

The ISSG Search Filter Appraisal Checklist is being used by ISSG members to appraise published search filters. Checklists are completed by an information professional, checked by an independent assessor, and edited by the website editor for consistency. Copies of checklists will be sent to the original authors of the filters, and feedback will be published. Completed checklists are published on the ISSG website [14].

The ISSG Search Filter Appraisal Checklist is designed to be comprehensive. Its structure follows the life cycle of search filter development, from gold standard identification, search term selection, strategy development, testing, and validation through to comparison with other filters. It may take time to complete but should provide clearer insight into the quality and suitability of a filter. The checklist is not exclusionary: it does not “reject” search filters that have been designed informally or have not been tested or validated. It does, however, allow librarians and others to differentiate easily between evidence-based, validated filters and those of a less rigorous design.

Future Research

There is scope to evaluate the performance of the checklist, using independent assessors and a range of filters. Evaluations could assess ease of use, clarity, comprehensiveness, and consistency. Since the checklist was finalized, the Canadian Agency for Drugs and Technologies in Health's (CADTH's) critical appraisal and ranking tool for search filters has been developed. The CADTH tool is less detailed than the ISSG checklist and incorporates a score [21], and a formal comparison of the two tools is a topic for further research.

In addition, the ISSG checklist focuses on search filters designed to retrieve studies with specific research methods (such as systematic reviews) or study type focus (such as diagnostic tests). Some of the checklist's elements are likely to be applicable to search filters in other areas. Health sciences librarians may wish to explore the applicability of the checklist beyond methods search filters.

Conclusions

Health sciences librarians trying to decide between search filters now have several tools. They can use the ISSG website to find appraisals of filters in the form of structured abstracts and checklists. The abstract offers a rapid assessment of relevance, and the checklist offers more detailed information to assist with deciding whether a filter is useful. Alternatively, librarians can complete the blank checklist themselves to assess a filter of interest. The website and checklist are also resources that librarians can recommend to relevant inquirers.

Critical appraisal checklists serve several purposes. The clear breakdown of reported methods in the ISSG Search Filter Appraisal Checklist is designed in the hope, shared with designers of other critical appraisal tools, that it will encourage filter authors, many of whom are librarians, to report detailed methods [22]. By highlighting the methods that should be reported to help readers assess the quality and relevance of a filter, librarians can also help authors achieve more transparent research reporting.

Conflict of Interest Statement

Andrew Booth, Cynthia Fraser, Julie Glanville, Su Golder, and Carol Lefebvre have published search filters.

Acknowledgments

The work of InterTASC members, including the ISSG, is funded through the UK National Institute for Health Research Health Technology Assessment Programme. The views and opinions expressed herein are those of the authors and do not necessarily reflect those of the programme. We acknowledge Janette Boynton and Louise Foster of Quality Improvement Scotland and Anne Eisinga of the UK Cochrane Centre for systematic searches of the literature undertaken to identify filters for inclusion on the ISSG website. We are grateful for comments received from Mike Clarke, director of the UK Cochrane Centre.

Contributor Information

Julie Glanville, Project Director–Information Services, York Health Economics Consortium, Level 2, Market Square, University of York, York, YO10 5NH, United Kingdom jmg1@york.ac.uk.

Sue Bayliss, Information Specialist, Aggressive Research Intelligence Facility/West Midlands Health Technology Assessment Collaboration, Department of Public Health and Epidemiology, University of Birmingham, Edgbaston, B15 2TT, United Kingdom s.bayliss@bham.ac.uk.

Andrew Booth, Director of Information Resources and Reader in Evidence Based Information Practice, School of Health and Related Research (ScHARR), University of Sheffield, Regent Court, 30 Regent Street, Sheffield, S1 4DA, United Kingdom A.Booth@sheffield.ac.uk.

Yenal Dundar, Doctor, Department of Psychiatry, North Devon District Hospital, Raleigh Park, Barnstaple, EX31 4JB, United Kingdom yenal@liverpool.ac.uk.

Hasina Fernandes, Information Specialist, National Institute for Health and Clinical Excellence, MidCity Place, 71 High Holborn, London, WC1V 6NA, United Kingdom Hasina.Fernandes@nice.org.uk.

Nigel David Fleeman, Research Fellow, Liverpool Reviews and Implementation Group, School of Population, Community and Behavioural Sciences, University of Liverpool, Sherrington Buildings, Ashton Street, Liverpool, L69 3GE, United Kingdom Nigel.Fleeman@liverpool.ac.uk.

Louise Foster, Health Information Scientist, National Health Service Quality Improvement Scotland, Delta House, 50 West Nile Street, Glasgow, G1 2NP, United Kingdom louisefoster@nhs.net.

Cynthia Fraser, Information Officer, Health Services Research Unit, University of Aberdeen, Health Sciences Building, Foresterhill, Aberdeen, AB25 2ZD, United Kingdom c.fraser@abdn.ac.uk.

Anne Fry-Smith, Lead Information Specialist, West Midlands Health Technology Assessment Collaboration, University of Birmingham, Edgbaston, Birmingham, B15 2TT, United Kingdom A.S.Fry-Smith@bham.ac.uk.

Su Golder, Information Officer, Centre for Reviews and Dissemination, University of York, York, YO10 5DD, United Kingdom spg3@york.ac.uk.

Carol Lefebvre, Senior Information Specialist, UK Cochrane Centre, National Institute for Health Research, Summertown Pavilion, Middle Way, Oxford, OX2 7LG, United Kingdom CLefebvre@cochrane.co.uk.

Caroline Miller, Information Specialist, National Institute for Health and Clinical Excellence, MidCity Place, 71 High Holborn, London, WC1V 6NA, United Kingdom Caroline.Miller@nice.org.uk.

Suzy Paisley, Research Fellow, ScHARR, University of Sheffield, 30 Regent Street, Sheffield, S1 4DA, United Kingdom s.paisley@sheffield.ac.uk.

Liz Payne, Independent Information Specialist, Salisbury, United Kingdom eapayne@go.com.

Alison Price, Information Scientist, Wessex Institute for Health Research and Development, Mailpoint 728, Boldrewood, University of Southampton, Southampton, SO16 7PX, United Kingdom A.M.Price@soton.ac.uk.

Karen Welch, Information Scientist, Wessex Institute for Health Research and Development, Mailpoint 728, Boldrewood, University of Southampton, Southampton, SO16 7PX, United Kingdom; on behalf of the InterTASC Information Specialists' Sub-Group K.Welch@soton.ac.uk.

References

1. Jenkins M. Evaluation of methodological search filters—a review. Health Info Libr J. 2004 Sep;21(3):148–63. doi: 10.1111/j.1471-1842.2004.00511.x.

2. Leeflang M.M., Scholten R.J., Rutjes A.W., Reitsma J.B., Bossuyt P.M. Use of methodological search filters to identify diagnostic accuracy studies can lead to the omission of relevant studies. J Clin Epidemiol. 2006 Mar;59(3):234–40. doi: 10.1016/j.jclinepi.2005.07.014.

3. Haynes R.B., Wilczynski N., McKibbon K.A., Walker C.J., Sinclair J.C. Developing optimal search strategies for detecting clinically sound studies in MEDLINE. J Am Med Inform Assoc. 1994 Nov;1(6):447–58. doi: 10.1136/jamia.1994.95153434.

4. Boynton J., Glanville J., McDaid D., Lefebvre C. Identifying systematic reviews in MEDLINE: developing an objective approach to search strategy design. J Inf Sci. 1998 Jun;24(3):137–54.

5. White V.J., Glanville J., Lefebvre C., Sheldon T.A. A statistical approach to designing search filters to find systematic reviews: objectivity enhances accuracy. J Inf Sci. 2001 Jun;27:357–70.

6. Wilczynski N.L., Morgan D., Haynes R.B. The Hedges Team. An overview of the design and methods for retrieving high-quality studies for clinical care. BMC Med Inform Decis Mak. 2005 Jun;5:20. doi: 10.1186/1472-6947-5-20.

7. Glanville J.M., Lefebvre C., Miles J.N.V., Camosso-Stefinovic J. How to identify randomized controlled trials in MEDLINE: ten years on. J Med Libr Assoc. 2006 Apr;94(2):130–6.

8. National Library of Medicine. PubMed Clinical Queries [Internet]. Bethesda, MD: The Library; 2007 [cited 1 Jul 2008]. <http://www.ncbi.nlm.nih.gov/entrez/query/static/clinical.shtml>.

9. Lefebvre C., Manheimer E., Glanville J. Chapter 6: searching for studies. In: Higgins J.P., Green S., editors. Cochrane handbook for systematic reviews of interventions [Internet]. Version 5.0.0. The Cochrane Collaboration; 2008 [rev. Feb 2008; cited 1 Jul 2008]. <http://www.cochrane-handbook.org>.

10. InterTASC Information Specialists' Sub-Group. Search filter resource: diagnostic studies [Internet]. York, UK: The Sub-Group; 2008 [rev. 1 Jul 2008; cited 1 Jul 2008]. <http://www.york.ac.uk/inst/crd/intertasc/diag.htm>.

11. Moher D., Cook D.J., Eastwood S., Olkin I., Rennie D., Stroup D.F. Improving the quality of reports of meta-analyses of randomised controlled trials: the QUOROM statement. Quality of Reporting of Meta-analyses. Lancet. 1999 Nov 27;354(9193):1896–900. doi: 10.1016/s0140-6736(99)04149-5.

12. The CONSORT Group. The CONSORT statement [Internet]. The Group; 2001 [rev. 22 Oct 2007; cited 1 Jul 2008]. <http://www.consort-statement.org/index.aspxo1011>.

13. Whiting P., Rutjes A.W.S., Reitsma J.B., Bossuyt P.M.M., Kleijnen J. The development of QUADAS: a tool for the quality assessment of studies of diagnostic accuracy included in systematic reviews. BMC Med Res Methodol. 2003 Nov.

14. InterTASC Information Specialists' Sub-Group. Search filter resource [Internet]. York, UK: The Sub-Group; 2008 [rev. 13 May 2008; cited 20 May 2008]. <http://www.york.ac.uk/inst/crd/intertasc/>.

15. Zhang L., Ajiferuke I., Sampson M. Optimizing search strategies to identify randomized controlled trials in MEDLINE. BMC Med Res Methodol. 2006.

16. Bachmann L.M., Coray R., Estermann P., Ter Riet G. Identifying diagnostic studies in MEDLINE: reducing the number needed to read. J Am Med Inform Assoc. 2002 Nov;9(6):653–8. doi: 10.1197/jamia.M1124.

17. Scottish Intercollegiate Guidelines Network. Search filters: economic studies [Internet]. Edinburgh, UK: The Network; 2006 [rev. 14 Mar 2008; cited 1 Jul 2008]. <http://www.sign.ac.uk/methodology/filters.html#econ>.

18. Wong S.S.L., Wilczynski N.L., Haynes R.B. Developing optimal search strategies for detecting clinically relevant qualitative studies in MEDLINE. Medinfo. 2004;11(1):311–4.

19. Whiting P., Harbord R., Kleijnen J. No role for quality scores in systematic reviews of diagnostic accuracy studies. BMC Med Res Methodol. 2005.

20. InterTASC Information Specialists' Sub-Group. Search filter resource: qualitative research [Internet]. York, UK: The Sub-Group; 2008 [rev. 11 Mar 2008; cited 1 Jul 2008]. <http://www.york.ac.uk/inst/crd/intertasc/qualitat.htm>.

21. Bak G. CADTH CAI and ranking tool for search filters. Workshop paper presented at: Health Technology Assessment International; 2007; Barcelona, Spain.

22. The CONSORT Group. Welcome to the CONSORT statement website [Internet]. The Group; 2007 [cited 1 Jul 2008]. <http://www.consort-statement.org>.