Abstract

The two halves of the brain are believed to play different roles in emotional processing, but the specific contribution of each hemisphere continues to be debated. The right-hemisphere hypothesis suggests that the right cerebrum is dominant for processing all emotions regardless of affective valence, whereas the valence-specific hypothesis posits that the left hemisphere is specialized for processing positive affect while the right hemisphere is specialized for negative affect. Here, healthy participants completed two split visual-field facial affect perception tasks during functional magnetic resonance imaging, one presenting chimeric happy faces (i.e. half happy/half neutral) and the other presenting identical sad chimera (i.e. half sad/half neutral), each masked immediately by a neutral face. Results suggest that the posterior right hemisphere is generically activated during non-conscious emotional face perception regardless of affective valence, although greater activation is produced by negative facial cues. The posterior left hemisphere was generally less activated by emotional faces, but also appeared to recruit bilateral anterior brain regions in a valence-specific manner. Findings suggest simultaneous operation of aspects of both hypotheses, indicating that these two rival theories may not actually be in opposition, but may instead reflect different facets of a complex distributed emotion processing system.

Keywords: FMRI, neuroimaging, faces, emotion, affect

For nearly three decades, the field of affective neuroscience has debated the question of how the brain is organized to process emotions, with considerable emphasis placed on the lateralization of these processes between the two halves of the brain. For the behavioral expression of emotion, evidence suggests that the anterior regions of the brain are organized asymmetrically, with the left cerebral hemisphere specialized for processing positive or approach-related emotions and the right hemisphere specialized for processing negative or withdrawal-related emotions (Davidson, 1992, 1995). For the perception of emotional stimuli, however, the evidence for lateralization has been less consistent (Rodway et al., 2003). Two major theories of cerebral lateralization of emotional perception have been proposed: (i) the Right-Hemisphere Hypothesis (RHH), which posits that the right half of the brain is specialized for processing all emotions, regardless of affective valence (Borod et al., 1998), and (ii) the Valence-Specific Hypothesis (VSH), which asserts that each half of the brain is specialized for processing particular classes of emotion, with the left cerebral hemisphere specialized for processing positive emotions and the right hemisphere specialized for processing negative emotions (Ahern and Schwartz, 1979; Wedding and Stalans, 1985; Adolphs et al., 2001). While the RHH has received the most consistent support (Rodway et al., 2003), it has been difficult to reconcile this theory with a number of compelling reports suggesting a valence-specific organization of emotional perception (Natale et al., 1983; Canli et al., 1998; Rodway et al., 2003).

Since the late 1970s, numerous studies have demonstrated support for one or the other of these two rival hypotheses (Ley and Bryden, 1979; Reuter-Lorenz and Davidson, 1981; Natale et al., 1983; Reuter-Lorenz et al., 1983; McLaren and Bryson, 1987; Rodway et al., 2003). Support for each hypothesis comes from a common body of research with patients suffering from brain damage localized to a single hemisphere and studies examining visual field perceptual biases in healthy normal individuals. In some studies, patients with lesions to the right hemisphere have greater impairment in the perception of emotional faces, regardless of the valence of the expressed emotion, relative to patients with comparable lesions to the left hemisphere, providing support for the RHH (Adolphs et al., 1996; Borod et al., 1998; Adolphs et al., 2000). Other studies, however, find that unilateral brain damage to the left hemisphere impairs the perception of positive emotions while comparable right hemisphere lesions impair perception of negative emotions—evidence that generally supports the VSH (Borod et al., 1986; Mandal et al., 1991).

Compelling evidence for each of these hypotheses comes also from perceptual studies of healthy normal individuals. Many of these investigations have relied on experimental designs that capitalize on the inherent divided and crossed nature of the visual system, which projects information from one half of the visual perceptual field directly to the opposing hemisphere during the initial stages of sensory and perceptual processing (Levy et al., 1983; Wedding and Stalans, 1985; Hugdahl et al., 1989). A particularly intriguing paradigm for studying lateralized emotional perception has involved the use of facial expression stimuli that are artificially designed to project a different emotion to each half of the brain simultaneously (Levy et al., 1972). These ‘chimeric’ faces are composite expressions that are artificially created by splicing together opposing halves from two photographs of the same person expressing different emotions, such that one side of the face displays an emotional expression (e.g. sadness) while the other half is emotionally neutral. Studies using these and related techniques suggest that most healthy normal right-handed individuals show a clear perceptual bias toward emotional information falling into the left visual hemifield (i.e. projected initially to the right cerebral hemisphere) (Levy et al., 1983; McLaren and Bryson, 1987; Moreno et al., 1990; Hugdahl et al., 1993). Although a left visual hemifield (i.e. right-hemisphere) bias is often found for emotional perception in general, some studies of normal healthy volunteers have shown a valence-specific effect suggesting that negative emotions are recognized more readily within the left visual field (LVF) (i.e. right hemisphere), while positive emotions are recognized more effectively in the right visual field (RVF) (i.e. left hemisphere) (Reuter-Lorenz and Davidson, 1981; Natale et al., 1983; Reuter-Lorenz et al., 1983; Jansari et al., 2000; Rodway et al., 2003). 
Despite more than two decades of behavioral, cognitive neuropsychological and lesion studies testing these two competing hypotheses, there is still no clear consensus regarding which, if either, of these positions is correct.

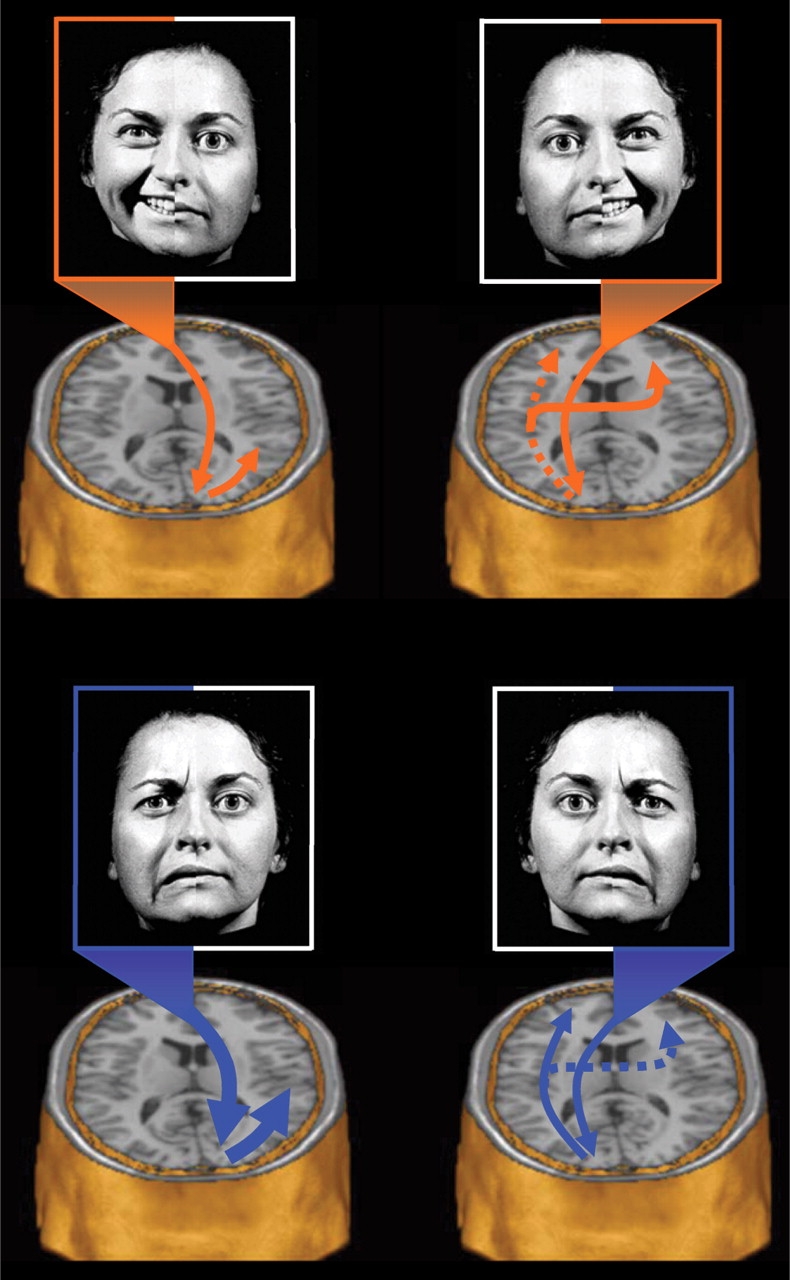

To clarify this issue, we used Blood Oxygen Level Dependent (BOLD) functional magnetic resonance imaging (fMRI) to examine lateralized brain activity in a sample of healthy women as they viewed a series of chimeric faces expressing either happiness or sadness on one half of the face and a neutral expression on the other half (Figure 1). Using a technique known as backward stimulus masking (Esteves and Ohman, 1993; Soares and Ohman, 1993; Whalen et al., 1998), the chimeric facial expressions were presented so rapidly as to be generally imperceptible to conscious awareness and masked immediately by a neutral face from the same poser. Here we show that when the neurobiological substrates underlying the perception of emotional faces are studied using functional neuroimaging, brain regions consistent with both the RHH and VSH appear to be activated, suggesting that these two putatively rival hypotheses may not necessarily be in opposition after all.

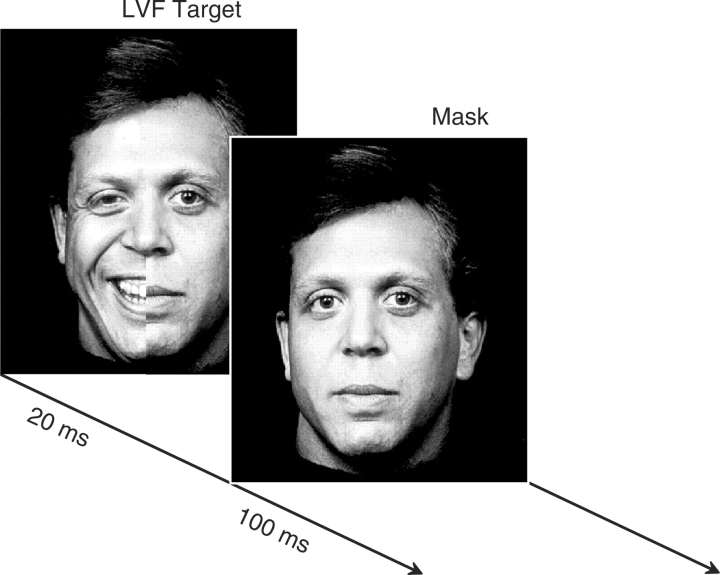

Fig. 1.

Masked chimeric face stimuli. Each trial consisted of two stimuli presented in rapid succession: (i) a ‘target’ chimeric face depicting an emotional expression on one half and a neutral expression from the same poser on the other half, presented for 20 ms, and (ii) a ‘mask’ face consisting of a photograph of the same poser expressing a neutral emotion, which immediately replaced the target and was presented for 100 ms. There was an equal number of presentations of left-sided and right-sided stimuli. On each trial, participants indicated whether the poser was male or female. Trials were separated by a 3 s interstimulus interval.

METHODS

Subjects

Twelve right-handed healthy adult females volunteered to undergo fMRI while completing a facial affect perception task. Participants ranged in age from 21 to 28 years (M = 23.7, s.d. = 2.1) and were recruited from the local community of Belmont, MA, and from the staff of McLean Hospital. Volunteers were without significant history of psychiatric or neurologic illness and were required to have normal vision or vision corrected to normal with contact lenses. None of the participants had any previous exposure to the experimental stimuli and all were completely naïve to the fact that the study involved backward masking of stimuli or unconscious affect perception. Written informed consent was obtained from all volunteers and all were provided with a small monetary compensation. This study was approved by the human use review committee at McLean Hospital.

Unilateral masked affect stimulation tasks

Participants completed two masked facial affect perception tasks, the order of which was counterbalanced across subjects. Because of the long duration of each scan and to reduce the possibility of contrast effects and arousal differences between the two affective valences within the same run, the happy and sad conditions were collected as separate runs. The two tasks were virtually identical except for the primary emotion displayed by the target faces (i.e. happiness vs sadness). Each task lasted 5 min and was divided into 25 alternating epochs, each of 12 s duration. The task alternated repeatedly among the following experimental conditions throughout the 5 min: (i) crosshair fixation, (ii) fully presented affective faces masked by a full neutral face, and (iii) unilaterally presented chimeric faces (i.e. half the face expressing an emotion and half the face neutral) masked by a full neutral face (Figure 1). Half of these chimeric faces displayed the emotion on the left side and half displayed the emotion on the right. Data for the masked full face presentations have been reported elsewhere (Killgore and Yurgelun-Todd, 2004) and will not be discussed in this article. The stimulus faces were obtained from the Neuropsychiatry Section of the University of Pennsylvania Medical Center (Erwin et al., 1992) and modified for use in the present study. The stimuli comprised 24 black-and-white photographs of facial expressions posed by four male and four female actors simulating each of three different emotional states (i.e. happy, sad, neutral). During each trial, an emotional target face was presented for 20 ms and was followed immediately by a 100 ms mask consisting of a neutral emotion photograph of the same poser (Figure 1). All trials were separated by a 3 s inter-stimulus interval.
Because many emotional expressions tend to be displayed asymmetrically on the face (Borod et al., 1997), we attempted to control for any possible effects on perception resulting from facial morphology by presenting each target face at two separate times during the task, once in its normal orientation and a second time as a mirror-reversed image. The masked affect task was programmed in Psyscope 1.2.5 (Macwhinney et al., 1997) on a Power Macintosh G3 computer and presented from an LCD projector to a screen placed near the end of the scanning table and viewed via a mirror built into the head coil. Volunteers were not informed about the unconscious presentations of the stimuli prior to the study and were told only that they would view a series of facial photographs and make a judgment about the gender of the person in the photograph by pressing a button on a keypad. Button presses were always made with the dominant (right) hand.
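The construction of a chimeric target and its mirror-reversed counterpart can be illustrated with a minimal sketch. It assumes that each photograph is a grayscale image stored as a list of pixel rows; the names `make_chimera` and `mirror` are hypothetical and are not part of the Psyscope task used in the study.

```python
from typing import List

# Assumed representation: a grayscale image as rows of pixel values.
Image = List[List[int]]


def make_chimera(emotional: Image, neutral: Image, emotion_side: str) -> Image:
    """Splice half of an emotional face onto the opposite half of a neutral face.

    emotion_side is 'left' or 'right' from the viewer's perspective, so
    'left' places the emotional half in the left visual field.
    """
    if emotion_side not in ("left", "right"):
        raise ValueError("emotion_side must be 'left' or 'right'")
    chimera = []
    for emo_row, neu_row in zip(emotional, neutral):
        mid = len(emo_row) // 2
        if emotion_side == "left":
            chimera.append(emo_row[:mid] + neu_row[mid:])
        else:
            chimera.append(neu_row[:mid] + emo_row[mid:])
    return chimera


def mirror(image: Image) -> Image:
    """Return the left-right mirror-reversed image."""
    return [row[::-1] for row in image]


# Toy 2 x 4 "photographs": 1 marks emotional pixels, 0 marks neutral pixels.
happy = [[1, 1, 1, 1], [1, 1, 1, 1]]
neutral = [[0, 0, 0, 0], [0, 0, 0, 0]]

left_chimera = make_chimera(happy, neutral, "left")
print(left_chimera)          # emotional half on the viewer's left
print(mirror(left_chimera))  # mirror reversal moves the emotion to the right
```

With real photographs the mirror-reversed left chimera is not pixel-identical to a right chimera built from the other half of the face, which is why presenting both orientations helps control for asymmetries in facial morphology.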

Following the fMRI scan, participants were tested for recognition of the masked affect expressions. The participants were shown all 24 expressions to which they had been exposed and were asked to identify whether or not they had seen each expression. As described previously (Killgore and Yurgelun-Todd, 2004), these participants correctly identified the neutral masked faces with ease, scoring significantly above chance (i.e. 82% correct; t[11] = 4.76, P < 0.001), whereas the masked sad (16% correct; t[11] = −5.25, P < 0.001) and happy (9% correct; t[11] = −11.66, P < 0.001) face expressions were recognized significantly below chance expectations. The fact that subjects scored below chance on the affective stimuli but not the neutral masks suggests that they clearly did not recall having seen the affective faces but were attending to the stimuli. This suggests that the masking procedure was successful at preventing conscious awareness of the masked stimuli.
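The comparison against chance reported above is a one-sample t-test with 11 degrees of freedom. The following is a minimal sketch of that computation, not the authors' analysis code. The scores are hypothetical placeholders rather than study data, and the chance level of 0.5 is an assumption, since the text does not state the value used.

```python
import math
import statistics


def one_sample_t(scores, chance):
    """Return (t, df) for a one-sample t-test of mean(scores) against chance."""
    n = len(scores)
    if n < 2:
        raise ValueError("need at least two scores")
    mean = statistics.mean(scores)
    sd = statistics.stdev(scores)  # sample SD (n - 1 denominator)
    t = (mean - chance) / (sd / math.sqrt(n))
    return t, n - 1


# HYPOTHETICAL proportions correct for four participants (not study data).
hypothetical_scores = [0.6, 0.7, 0.8, 0.9]
t, df = one_sample_t(hypothetical_scores, chance=0.5)  # chance level is assumed
print(f"t[{df}] = {t:.2f}")
```

A negative t, as reported for the masked sad and happy faces, indicates recognition below the chance level rather than above it.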

Neuroimaging methods

Functional MRI data were acquired on a 1.5 Tesla GE LX MRI scanner equipped with a quadrature RF head coil. Head motion was minimized with foam padding and a tape strap across the forehead. To reduce non-steady-state effects, three dummy images were taken initially and discarded prior to analysis. Echoplanar images were collected using a standard acquisition sequence (TR = 3 s, TE = 40 ms, flip angle = 90°) across 20 coronal slices (7 mm, 1 mm gap). Images were obtained with a 20 cm field of view and a 64 × 64 acquisition matrix, yielding a voxel size of 3.125 × 7 × 3.125 mm (i.e. 3.125 mm in-plane with 7 mm slices). For this study, two scanning runs were performed per subject (one for each affective valence). During each run, 100 scans were collected over 300 s. At the outset of each scanning session, matched T1-weighted high-resolution images were also obtained.
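The reported acquisition and task parameters can be cross-checked with simple arithmetic. The sketch below uses only values stated in the text; the variable names are illustrative.

```python
# Acquisition and task parameters as reported in the text.
fov_mm = 200.0    # 20 cm field of view
matrix = 64       # 64 x 64 acquisition matrix
tr_s = 3.0        # repetition time (s)
n_scans = 100     # scans per run
n_epochs = 25     # epochs per task
epoch_s = 12.0    # epoch duration (s)

in_plane_mm = fov_mm / matrix      # voxel edge length within the slice plane
run_s = n_scans * tr_s             # total run duration
scans_per_epoch = epoch_s / tr_s   # volumes acquired per epoch

# The scan-based and epoch-based descriptions give the same run length.
assert run_s == n_epochs * epoch_s
print(in_plane_mm, run_s, scans_per_epoch)  # 3.125 300.0 4.0
```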

Image processing

Prior to statistical analysis, the echoplanar images were realigned and motion corrected using standard procedures and algorithms in SPM99 (Friston et al., 1995a, 1995b). The data were spatially normalized into three-dimensional stereotaxic space, spatially smoothed using an isotropic Gaussian filter [full width half maximum (FWHM) = 10 mm], and resliced to 2 × 2 × 2 mm.
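For readers more used to thinking of a Gaussian kernel in terms of its standard deviation, the 10 mm FWHM can be converted with the standard relation FWHM = 2·sqrt(2·ln 2)·σ. The sketch below is illustrative; expressing σ in units of the 2 mm resliced voxels is an added convenience and not something reported in the text.

```python
import math


def fwhm_to_sigma(fwhm):
    """Convert a Gaussian full width at half maximum to its standard deviation."""
    return fwhm / (2.0 * math.sqrt(2.0 * math.log(2.0)))


sigma_mm = fwhm_to_sigma(10.0)  # smoothing kernel reported in the text
sigma_vox = sigma_mm / 2.0      # expressed in 2 mm voxel units (illustrative)
print(round(sigma_mm, 3), round(sigma_vox, 3))
```

A 10 mm FWHM therefore corresponds to a standard deviation of roughly 4.25 mm.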

The data were analyzed according to a standard two-stage random-effects approach (Penny et al., 2003). At the fixed-effects stage of analysis, individual design matrices were created for the masked happy and the masked sad conditions for each subject. These matrices included all four presentation conditions (i.e. fixation, full face presentations, left-sided affective chimeric presentations and right-sided affective chimeric presentations) for the happy and sad affect conditions. Employing an event-related design, each condition was modeled using the general linear model (Friston et al., 1995a, 1995b), with 28 fixation crosshair presentations, 24 full face presentations, 24 left-sided affective chimera and 24 right-sided affective chimera, all presented at pseudo-random intervals. A high-pass filter was used to remove low-frequency confounds by applying the SPM99 default value of twice the longest interval between two occurrences of the most frequently occurring stimulus condition (i.e. 78 s). Low-pass filtering based on the hemodynamic response function was also applied. This first-level analysis produced four fixed-effects contrast images for each participant, including global brain activation due to: (i) masked happy left-chimera, (ii) masked happy right-chimera, (iii) masked sad left-chimera and (iv) masked sad right-chimera.

The four individual fixed-effects contrast images for each subject were subsequently entered into a series of random-effects parametric statistical analysis procedures in SPM99 (Penny et al., 2003). Three sets of random-effects analyses tested the significance of: (i) global activity during masked unilateral presentations of each affect (one-sample t-tests of each lateralized affective presentation against baseline activity), (ii) activity unique to each visual field presentation for each affect (i.e. paired-sample t-tests between lateralized presentations within each affect), and (iii) activity unique to each affect within a particular unilateral visual field presentation (i.e. paired-sample t-tests between affects within each visual field). All analyses were constrained to include only the cortical and subcortical tissue within the two cerebral hemispheres (i.e. excluding cerebellum and brainstem, which were not fully imaged in all subjects) by applying the standard TD cerebrum mask implemented within the Wake Forest University (WFU) PickAtlas Utility (Maldjian et al., 2003). The following parameters were used for the three analysis sets: (i) For the global analysis of each unilateral presentation (i.e. one-sample t-tests), the height threshold was set to P < 0.001 (uncorrected), with the extent threshold set to 20 contiguous voxels. (ii) For the direct comparisons between visual fields within each affect (i.e. paired t-tests), the height threshold was set to P < 0.005 (uncorrected), with the extent threshold set to 20 contiguous voxels. (iii) Similarly, for the direct comparisons between happy and sad affects within each visual field (i.e. paired t-tests), the height threshold was set to P < 0.005 (uncorrected), with the extent threshold set to 20 contiguous voxels. To facilitate visualization, SPM activation maps are presented as ‘glass brain’ maximum intensity projections (MIP) in the standardized coordinate space of the Montreal Neurological Institute (MNI).
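Because the tables report peak z-scores, it can help to know the z-values that correspond to the two uncorrected height thresholds. The sketch below assumes one-tailed tests, as is usual for SPM t-contrasts; the helper name `p_to_z` is hypothetical.

```python
from statistics import NormalDist


def p_to_z(p_one_tailed):
    """Z-score whose upper-tail probability equals p_one_tailed."""
    return NormalDist().inv_cdf(1.0 - p_one_tailed)


for p in (0.001, 0.005):
    print(p, round(p_to_z(p), 2))
```

Under this assumption the thresholds correspond to z of approximately 3.09 and 2.58, respectively, and every peak z-score listed in Tables 1 to 3 lies above the value for its analysis.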

RESULTS

General analysis of unilateral stimulation

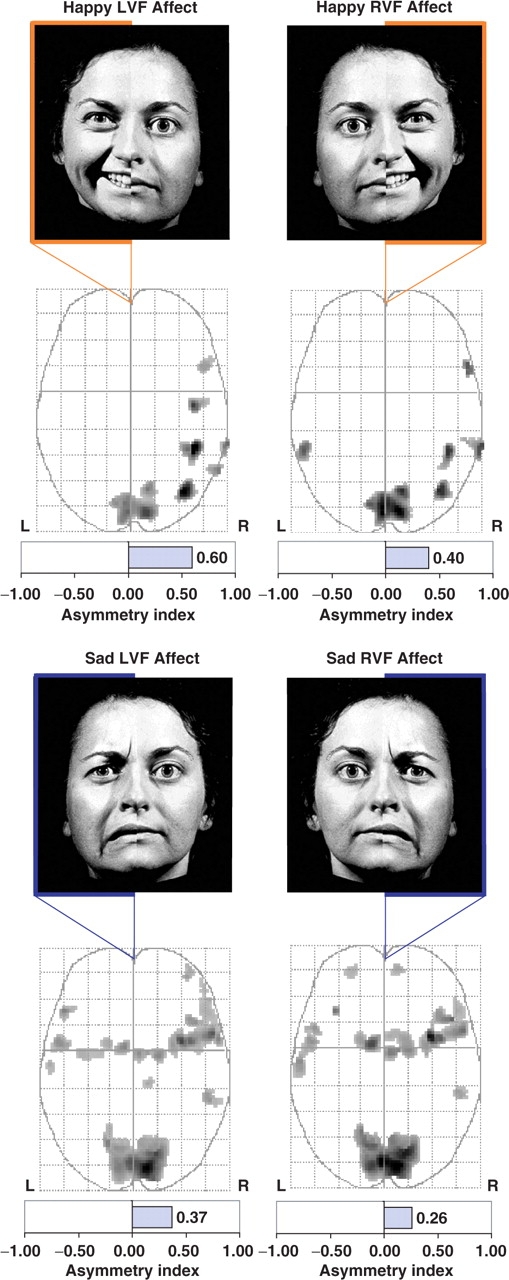

The individual contrast maps from the four unilateral presentation conditions (i.e. happy LVF, happy RVF, sad LVF and sad RVF) were first subjected to a series of one-sample random effects t-tests against the null hypothesis of no activation. Table 1 presents the local maxima, number of active voxels, and peak z-score for each of the significant clusters from that analysis. As evident in Figure 2, all four conditions produced significant activation, particularly within the right hemisphere. To quantify the lateralized pattern of activation, an Asymmetry Index (AI) was calculated to indicate the directional ratio of suprathreshold voxels in the left and right hemisphere temporal lobe search regions [i.e. (Right − Left)/(Right + Left)] for each unilateral affective valence condition. The AI can range from −1.0 to +1.0, with greater negative values indicating relatively more voxels activated in the left hemisphere, whereas greater positive values indicate relatively more active voxels on the right (Detre et al., 1998; Killgore et al., 1999). As shown at the bottom of each illustration in Figure 2, the asymmetry indexes were consistently in the positive direction (i.e. happy LVF AI = +0.60; happy RVF AI = +0.40; sad LVF AI = +0.37; sad RVF AI = +0.26), indicating a general asymmetry of activation favoring the right hemisphere for all four conditions regardless of lateralized presentation or affective valence condition.
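Written out explicitly, with R and L denoting the numbers of suprathreshold voxels in the right and left search regions (symbols introduced here for illustration only), the index is:

```latex
\mathrm{AI} = \frac{R - L}{R + L}, \qquad -1 \le \mathrm{AI} \le +1
```

For example, R = L gives AI = 0, whereas L = 0 with R > 0 gives AI = +1 (complete rightward lateralization).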

Table 1.

Significant clusters of activation (one-sample t-test) for happy and sad chimeric faces according to the visual field of affect presentation

| Regions of activation | Active voxels | x | y | z | z-score |
|---|---|---|---|---|---|
| Unilateral masked happiness | | | | | |
| LVF | | | | | |
| R. Inferior parietal lobule | 108 | 44 | −42 | 50 | 4.28 |
| R. Middle occipital gyrus | 97 | 38 | −72 | 16 | 4.23 |
| R. Pre-central gyrus | 69 | 44 | −10 | 36 | 3.93 |
| R. Calcarine cortex | 609 | 10 | −86 | 4 | 3.89 |
| R. Middle temporal gyrus | 32 | 68 | −38 | 4 | 3.69 |
| R. Fusiform gyrus | 34 | 40 | −42 | −24 | 3.69 |
| R. Inferior temporal gyrus | 35 | 62 | −56 | −10 | 3.59 |
| R. Inferior frontal gyrus (operculum) | 46 | 52 | 16 | 26 | 3.37 |
| RVF | | | | | |
| R. Calcarine cortex | 800 | 10 | −84 | 4 | 4.09 |
| R. Middle occipital gyrus | 63 | 38 | −70 | 16 | 3.85 |
| R. Inferior parietal lobule | 70 | 44 | −42 | 50 | 3.81 |
| R. Middle temporal gyrus | 87 | 68 | −38 | 2 | 3.73 |
| R. Inferior frontal (trigone) | 21 | 58 | 20 | 6 | 3.67 |
| L. Middle temporal gyrus | 71 | −58 | −42 | −10 | 3.66 |
| Unilateral masked sadness | | | | | |
| LVF | | | | | |
| R. Calcarine cortex | 2356 | 10 | −84 | 4 | 5.12 |
| R. Middle frontal gyrus | 737 | 34 | 6 | 48 | 4.55 |
| L. Inferior frontal (trigone) | 41 | −50 | 28 | 8 | 3.99 |
| L. Supplementary motor area | 64 | −6 | 2 | 56 | 3.95 |
| R. Superior temporal pole | 27 | 60 | 8 | −8 | 3.94 |
| R. Globus pallidus | 68 | 18 | −4 | 8 | 3.89 |
| R. Supplementary motor area | 44 | 4 | −2 | 64 | 3.84 |
| L. Pre-central gyrus | 84 | −52 | 4 | 30 | 3.82 |
| R. Superior temporal pole | 25 | 44 | 20 | −20 | 3.79 |
| R. Middle temporal gyrus | 31 | 54 | −36 | −14 | 3.75 |
| R. Superior temporal pole | 83 | 28 | 4 | −24 | 3.67 |
| R. Middle frontal gyrus | 23 | 38 | 52 | 8 | 3.64 |
| R. Thalamus | 34 | 10 | −24 | 12 | 3.49 |
| L. Putamen | 31 | −18 | 6 | 10 | 3.47 |
| L. Superior temporal gyrus | 25 | −60 | −10 | 4 | 3.46 |
| L. Middle temporal gyrus | 75 | −38 | −4 | −28 | 3.41 |
| RVF | | | | | |
| R. Calcarine cortex | 2283 | 10 | −84 | 2 | 4.81 |
| R. Middle frontal gyrus | 208 | 32 | 8 | 46 | 4.61 |
| L. Supplementary motor area | 100 | −10 | 2 | 54 | 4.24 |
| R. Superior temporal pole | 135 | 48 | 20 | −22 | 4.06 |
| R. Inferior frontal (operculum) | 232 | 56 | 12 | 30 | 3.96 |
| L. Inferior orbital frontal | 20 | −36 | 26 | −18 | 3.93 |
| R. Supplementary motor area | 66 | 4 | −4 | 64 | 3.86 |
| R. Globus pallidus | 172 | 18 | −2 | 6 | 3.76 |
| L. Superior temporal gyrus | 132 | −60 | −10 | 4 | 3.71 |
| L. Pre-central gyrus | 65 | −52 | 4 | 30 | 3.64 |
| L. Inferior frontal (operculum) | 33 | −50 | 14 | 8 | 3.62 |
| L. Putamen | 39 | −16 | 6 | 8 | 3.52 |
| R. Middle temporal gyrus | 20 | 52 | −32 | −16 | 3.50 |
| R. Middle orbital frontal gyrus | 32 | 10 | 56 | −2 | 4.79 |
| L. Superior orbital frontal gyrus | 26 | −22 | 54 | −6 | 3.31 |

Note: L, left hemisphere; R, right hemisphere; LVF, left visual field; RVF, right visual field. Atlas coordinates are from the MNI standard atlas, such that x reflects the distance (mm) to the right or left of midline, y reflects the distance anterior or posterior to the anterior commissure and z reflects the distance superior or inferior to the horizontal plane through the AC-PC line.

Fig. 2.

One sample t-tests for chimeric stimuli. Brain activity associated with each unilateral affective stimulation condition was tested relative to resting baseline using a one sample t-test (P < 0.001, uncorrected). The top row shows happy chimera and the bottom row shows sad chimera. Relative to resting baseline, brain activity was greater in the right hemisphere during all four affective hemiface conditions regardless of affective valence (i.e. happy vs sad) or visual field of presentation [i.e. Left Visual Field (LVF) vs Right Visual Field (RVF)]. Maximum intensity projections (MIPs) in the axial plane show similar activity in the primary visual cortex and greater right hemisphere activity for all four conditions.

Visual field contrasts

The unique contribution of each lateralized presentation relative to the activation produced by its mirror image projecting the affective expression to the opposing visual field was also evaluated. By directly contrasting the activity produced during the LVF affective chimeric face presentations with that produced by the identical mirror-image right affective chimera, we isolated the activity that was unique to each lateralized field of presentation, while eliminating voxels activated in common between both lateralized conditions. This was accomplished by paired t-tests between the two lateralized presentations separately for each affective valence condition.

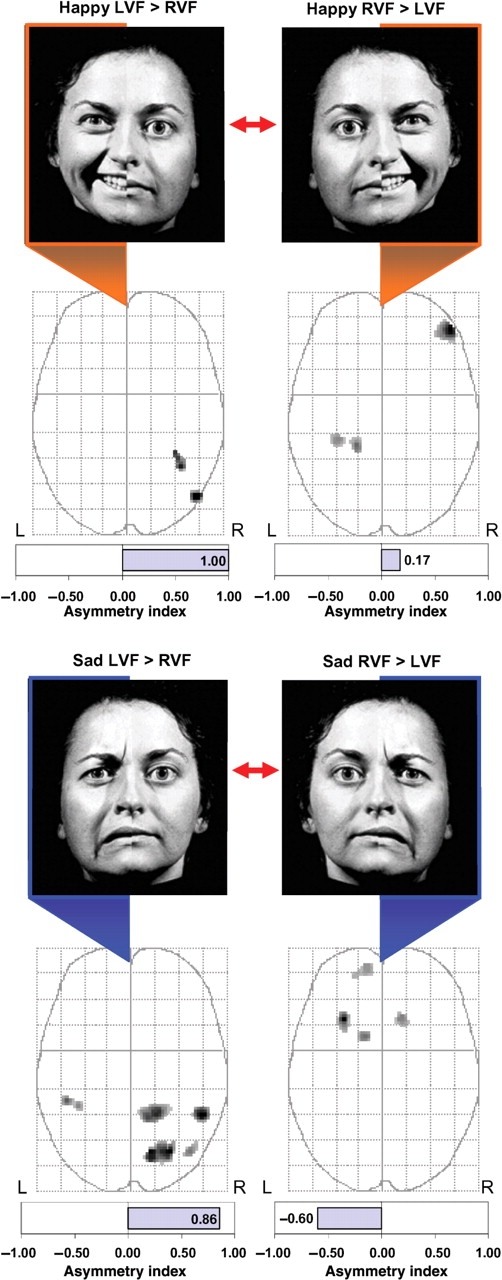

Happy affect

LVF > RVF

As seen in Figure 3, for happy chimeric faces, unilateral LVF presentations (i.e. LVF–RVF contrast) yielded significantly greater activity within two brain regions, both of which were within the posterior aspect of the right hemisphere (middle temporal and fusiform gyri), leading to a total rightward lateralization of suprathreshold activity (AI = +1.00).

Fig. 3.

Comparisons between lateralized stimulus conditions. Brain activity associated with unilateral LVF presentations was compared directly to activity associated with unilateral RVF presentations using paired t-tests. Regions showing significant differences (P < 0.005, uncorrected) between the two lateralized presentation conditions are displayed on the MIPs in the axial plane. Happy hemifaces presented to the LVF produced significantly greater activity in the posterior right hemisphere (fusiform gyrus and middle temporal gyrus) than when presented to the RVF (top left). In contrast, happy hemifaces presented to the RVF produced greater activity in the right lateral orbitofrontal cortex and left fusiform/parahippocampal gyrus (top right). For sad hemifaces, LVF presentations produced greater activity predominantly in the posterior right hemisphere compared to similar RVF presentations (bottom left). In contrast, RVF presentations produced greater activity in the left orbitofrontal cortex and basal ganglia when compared to identical LVF presentations (bottom right).

RVF > LVF

In contrast to the LVF presentations, unilateral presentations to the RVF (i.e. RVF–LVF contrast) led to separate major regions of activation, with two small clusters of activity located in the parahippocampal gyrus and fusiform gyrus of the left hemisphere and one large cluster of activation in the right orbitofrontal cortex (Figure 3 and Table 2). Overall, the number of activated voxels slightly favored the right hemisphere (AI = +0.17).

Table 2.

Significant clusters of activation during direct comparison of left vs right-lateralized presentations (paired t-test) for Happy and Sad chimeric faces

| Regions of activation | Active voxels | x | y | z | z-score |
|---|---|---|---|---|---|
| Unilateral masked happiness | | | | | |
| LVF > RVF | | | | | |
| R. Middle temporal gyrus | 41 | 50 | −72 | 2 | 3.11 |
| R. Fusiform gyrus | 26 | 38 | −52 | −20 | 3.02 |
| RVF > LVF | | | | | |
| R. Inferior orbital frontal gyrus | 174 | 48 | 46 | −10 | 4.22 |
| L. Parahippocampal gyrus | 71 | −18 | −36 | −12 | 3.48 |
| L. Fusiform gyrus | 52 | −32 | −32 | −14 | 3.16 |
| Unilateral masked sadness | | | | | |
| LVF > RVF | | | | | |
| R. Middle temporal gyrus | 76 | 50 | −46 | 12 | 3.46 |
| R. Lingual gyrus | 69 | 28 | −72 | 2 | 3.44 |
| R. Precuneus | 231 | 14 | −72 | 42 | 3.40 |
| R. Superior parietal lobule | 111 | 18 | −46 | 70 | 3.27 |
| L. Supramarginal gyrus | 40 | −46 | −36 | 32 | 3.07 |
| R. Middle occipital gyrus | 25 | 42 | −72 | 30 | 2.93 |
| RVF > LVF | | | | | |
| L. Inferior orbital frontal gyrus | 93 | −28 | 22 | −16 | 3.88 |
| L. Caudate | 34 | −12 | 10 | 12 | 3.20 |
| R. Caudate | 42 | 14 | 24 | −8 | 3.08 |
| L. Middle orbital frontal gyrus | 40 | −10 | 58 | −4 | 2.94 |

Note: L, left hemisphere; R, right hemisphere; LVF, left visual field; RVF, right visual field. Atlas coordinates are from the MNI standard atlas, such that x reflects the distance (mm) to the right or left of midline, y reflects the distance anterior or posterior to the anterior commissure and z reflects the distance superior or inferior to the horizontal plane through the AC-PC line. P < 0.005 (uncorrected), k = 20 voxels.

Sad affect

LVF > RVF

For sad chimeric faces, unilateral LVF presentations (i.e. LVF–RVF contrast) produced strongly right-lateralized activity (AI = +0.86) that was exclusively restricted to the posterior brain regions, including the temporal lobes, parietal lobes and visual processing regions of the lingual gyrus, precuneus and occipital cortex.

RVF > LVF

In contrast, RVF presentations (i.e. RVF–LVF contrast) of sad chimera led to an exclusively anterior pattern of activation that was predominantly lateralized to the left hemisphere (AI = −0.60; Figure 3 and Table 2), including the middle and inferior orbital frontal gyri and head of both caudate nuclei.
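As an illustrative check, the asymmetry indexes reported for the four visual field contrasts can be recomputed from the cluster sizes in Table 2. The sketch below assumes that each cluster is assigned to a hemisphere by its L/R label and that the AI is taken over the summed cluster voxels; this is a simplification that happens to reproduce the values in the text, and is not necessarily the authors' exact procedure. The function name `asymmetry_index` is hypothetical.

```python
def asymmetry_index(right_voxels, left_voxels):
    """(R - L) / (R + L): +1 is fully right-lateralized, -1 fully left."""
    total = right_voxels + left_voxels
    if total == 0:
        raise ValueError("no suprathreshold voxels in either hemisphere")
    return (right_voxels - left_voxels) / total


# Cluster sizes (active voxels) from Table 2, grouped by hemisphere label.
table2 = {
    "happy LVF>RVF": {"R": [41, 26], "L": []},
    "happy RVF>LVF": {"R": [174], "L": [71, 52]},
    "sad LVF>RVF": {"R": [76, 69, 231, 111, 25], "L": [40]},
    "sad RVF>LVF": {"R": [42], "L": [93, 34, 40]},
}

for contrast, clusters in table2.items():
    ai = asymmetry_index(sum(clusters["R"]), sum(clusters["L"]))
    print(f"{contrast}: AI = {ai:+.2f}")
```

Run as written, this yields +1.00, +0.17, +0.86 and -0.60, matching the values given in the text for the happy and sad visual field contrasts.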

Valence specific contrasts

The cerebral responses unique to each affect were also examined separately for each lateralized presentation. This was accomplished via paired t-tests between the happy and sad chimeric stimuli separately for each lateralized presentation. Coordinates of local maxima for these analyses are presented in Table 3.

Table 3.

Significant clusters of activation during direct comparison of Happy vs Sad chimeric faces (paired t-test) separately for left-lateralized and right-lateralized affective presentations.

| Regions of activation | Active voxels | x | y | z | z-score |
|---|---|---|---|---|---|
| Left visual field affect | | | | | |
| Happy > sad | | | | | |
| — | — | — | — | — | — |
| Sad > happy | | | | | |
| R. Insula | 270 | 42 | 6 | −16 | 3.97 |
| L. Insula | 105 | −40 | 14 | −6 | 3.59 |
| R. Anterior cingulate gyrus | 124 | 8 | 30 | 18 | 3.49 |
| L. Middle occipital gyrus | 71 | −20 | −86 | 18 | 3.32 |
| L. Fusiform gyrus | 140 | −36 | −6 | −28 | 3.30 |
| R. Lingual gyrus | 69 | 10 | −60 | −4 | 3.29 |
| R. Posterior cingulate gyrus | 25 | 10 | −44 | 14 | 3.21 |
| L. Middle frontal gyrus | 49 | −34 | 48 | 0 | 3.15 |
| R. Post-central gyrus | 33 | 28 | −34 | 56 | 3.09 |
| R. Inferior temporal gyrus | 31 | 46 | −18 | −22 | 3.08 |
| L. Anterior cingulate gyrus | 23 | −2 | 32 | 16 | 3.00 |
| L. Supramarginal gyrus | 53 | −62 | −32 | 36 | 2.98 |
| R. Middle cingulate gyrus | 22 | 20 | −6 | 44 | 2.97 |
| R. Middle frontal gyrus | 32 | 44 | 8 | 40 | 2.78 |
| Right visual field affect | | | | | |
| Happy > sad | | | | | |
| L. Middle temporal gyrus | 110 | −52 | −38 | −10 | 3.20 |
| R. Middle temporal gyrus | 29 | 48 | −74 | 16 | 2.95 |
| Sad > happy | | | | | |
| R. Insula | 199 | 42 | 8 | −16 | 3.66 |
| L. Insula | 94 | −40 | 14 | −6 | 3.45 |
| L. Superior frontal gyrus | 60 | −30 | 48 | 0 | 3.23 |
| R. Middle frontal gyrus | 28 | 32 | 8 | 52 | 3.23 |
| R. Lingual gyrus | 27 | 10 | −60 | −4 | 3.22 |
| L. Fusiform gyrus | 77 | −32 | −2 | −40 | 3.14 |
| R. Inferior orbital frontal gyrus | 24 | 48 | 28 | −6 | 2.88 |

Note: L, left hemisphere; R, right hemisphere; LVF, left visual field; RVF, right visual field. Atlas coordinates are from the MNI standard atlas, such that x reflects the distance (mm) to the right or left of midline, y reflects the distance anterior or posterior to the anterior commissure and z reflects the distance superior or inferior to the horizontal plane through the AC-PC line. P < 0.005 (uncorrected), k = 20 voxels.

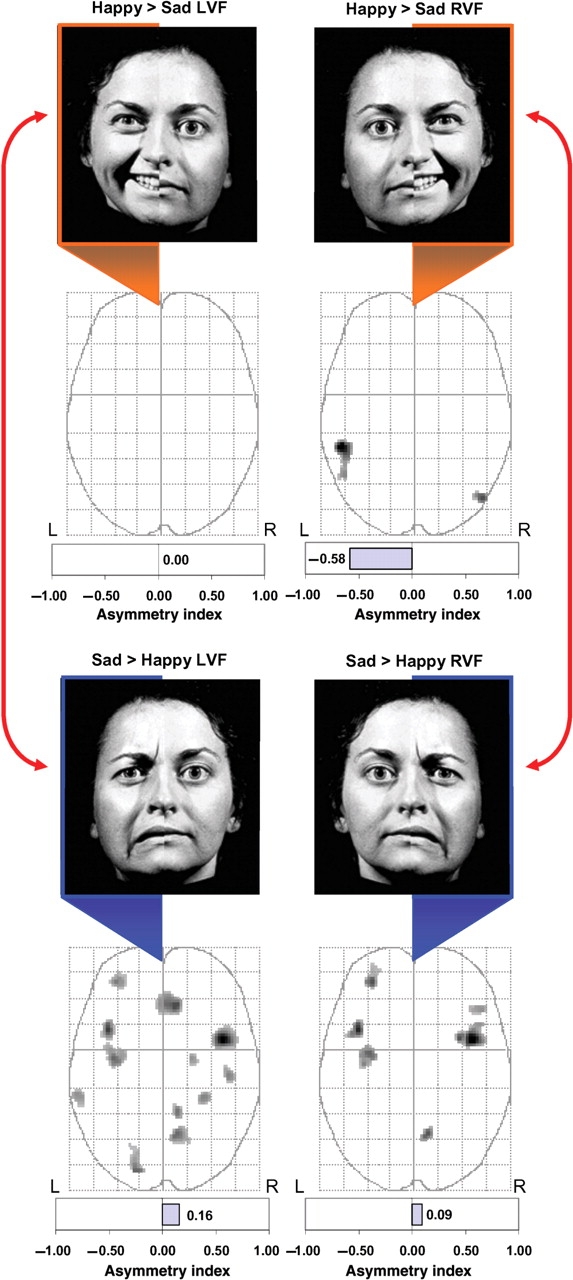

LVF

Happy > sad

Figure 4 shows that for unilateral LVF presentations, there were no regions where happy affect was associated with greater activation than sad affect (i.e. happy – sad contrast).

Fig. 4.

Comparisons between valence conditions. Brain activity associated with happy vs sad stimuli restricted to a single visual field was compared using paired t-tests (P < 0.005, uncorrected) and displayed on MIPs in the axial plane. For LVF stimuli, there were no regions where unilateral happy hemifaces produced greater activation than unilateral sad hemifaces. In contrast, sad hemifaces presented to the LVF produced significantly greater activity across a distributed bilateral network of affective brain regions when compared with the activity produced by matched happy hemifaces. For RVF stimuli, happy hemifaces produced significantly greater activity in bilateral middle temporal gyri, whereas sad hemifaces restricted to the RVF produced significantly greater activation within several distributed affect regions of both hemispheres when compared to comparable happy hemifaces.

Sad > happy

In contrast, sad affect in the LVF produced significantly greater bilateral activity (AI = +0.16) than similar presentations of left-sided unilateral happy affect (Figure 4). Most prominently, sad affect was associated with greater bilateral activation within the insular cortex, as well as a distributed network of regions including the anterior cingulate gyrus, frontal cortex, temporal cortex and visual object processing regions (Table 3).

RVF

Happy > sad

For unilateral RVF presentations, happy affect (i.e. happy – sad contrast) was associated with significant left-lateralized activation in posterior brain regions (AI = −0.58) when compared to sad affect. As shown in Figure 4, this pattern included activation of the middle temporal gyrus in both hemispheres, particularly on the left.

Sad > happy

Consistent with the aforementioned findings for the LVF, the RVF contrast of unilateral sad vs happy faces produced significant activity across a distributed network including the insular and frontal cortex, as well as posterior visual object processing regions. This pattern was generally bilateral (AI = +0.09), with only slightly more activated voxels in the right relative to the left hemisphere (Figure 4).
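The asymmetry index (AI) values reported in this section follow a sign convention in which positive values indicate more activated voxels in the right hemisphere and negative values indicate more in the left. The following is a minimal sketch of how such an index could be computed, assuming the common normalized-difference form AI = (R − L)/(R + L) over suprathreshold voxel counts. The exact formula used in this study is not restated here, so this form, the function name `asymmetry_index` and the voxel counts are illustrative assumptions rather than the study's actual procedure or data.

```python
def asymmetry_index(right_voxels: int, left_voxels: int) -> float:
    """Normalized right-minus-left difference in activated voxel counts.

    Assumed form: AI = (R - L) / (R + L).
    Ranges from -1.0 (all voxels on the left) to +1.0 (all on the right).
    """
    if right_voxels < 0 or left_voxels < 0:
        raise ValueError("voxel counts must be non-negative")
    total = right_voxels + left_voxels
    if total == 0:
        # No suprathreshold voxels in either hemisphere: index is undefined.
        raise ValueError("AI is undefined when no voxels are active")
    return (right_voxels - left_voxels) / total


# Hypothetical voxel counts, chosen only to illustrate the sign convention.
print(asymmetry_index(60, 40))  # positive: right-lateralized
print(asymmetry_index(25, 75))  # negative: left-lateralized
```

Under this assumed form, values near zero (such as the +0.09 reported for the RVF sad > happy contrast) correspond to nearly equal voxel counts in the two hemispheres, whereas larger absolute values (such as −0.58) indicate that most activated voxels fall within one hemisphere.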

DISCUSSION

Utilizing a classical neuropsychological face perception paradigm during fMRI, we show that two long-competing theories of lateralized emotion processing, the RHH and the VSH (Demaree et al., 2005), may not provide mutually exclusive accounts of the lateralization of affective perception. It appears, instead, that the underlying neural processes specified by these two hypotheses may operate concurrently as interrelated components of a bi-hemispheric distributed emotion processing system. First, the present data show a consistent pattern of greater right-lateralized activation for all affective tasks, regardless of valence or visual field of stimulation (Figure 2), providing general support for the RHH. Second, when specific visual fields and affective valence conditions were statistically contrasted, unique patterns of lateralized activation were associated with each combination of stimuli, providing support for the RHH in some instances and for the VSH in others.

Left visual field presentations

When emotional face stimuli, regardless of valence, were presented unilaterally to the LVF (i.e. direct projection of emotion to the right hemisphere), there was consistently greater task-related activation within the posterior right hemisphere compared to identical lateralized presentations of affect to the RVF (i.e. initial left hemisphere projections). These findings are consistent with the RHH, which postulates that the right hemisphere would be activated by either happy or sad stimuli because of its presumed dominance for processing emotion (Borod et al., 1998). A second set of contrasts, however, added clarification to these findings by demonstrating a valence-specific component as well. For example, when restricted to the LVF, sad emotional expressions produced significantly greater activity distributed throughout both hemispheres relative to matched happy chimera in the same visual field. In fact, for unilateral LVF presentations, there were no brain regions where happy chimeric expressions produced greater activity than matched sad chimera. This finding modifies the previously mentioned data supporting the RHH, suggesting that the magnitude and extent of activation produced by LVF presentations was modulated by the affective valence of the stimulus. Moreover, the greater responsiveness to LVF presentations of sad relative to happy faces is consistent with predictions of the VSH, which suggests that the right hemisphere is particularly specialized for processing negative affect (Ahern and Schwartz, 1979; Wedding and Stalans, 1985; Adolphs et al., 2001). Thus, for LVF stimuli, the right hemisphere was significantly more activated than the left for both affects (i.e. consistent with the RHH), but was particularly responsive to sad relative to happy stimuli (i.e. consistent with the VSH).

Right visual field presentations

Compared to LVF affective presentations, RVF chimera (i.e. direct projection to the left hemisphere) were associated with predominantly anterior cerebral activation, including orbitofrontal cortex and ventral striatum. Furthermore, the asymmetry of anterior activation differed according to the valence of the expression, with happy expressions in the RVF associated with right-lateralized anterior activity and sad expressions associated with left-lateralized anterior activity (i.e. consistent with the VSH). A second set of contrasts, directly comparing happy and sad affects during RVF stimulation, showed further that right-lateralized happy chimera produced significantly greater activity in the left middle temporal gyrus relative to matched lateralized sad expressions, suggesting that this region may be particularly important in processing positive facial affect. Right-lateralized sad expressions, on the other hand, produced greater bilateral activity distributed among a number of affect processing regions, such as the insula, frontal cortex, fusiform gyrus and lingual gyrus, than right-lateralized happy expressions, consistent with other accounts of distributed neural processing of faces (Vuilleumier and Pourtois, 2007).

A tentative working model

Based on these findings, we postulate two interrelated systems in operation during affective face processing. First, there appears to be a dominant posterior right hemisphere system that is specialized for emotional perception in general, regardless of valence, but which is particularly well-suited for processing the subtleties of negatively valenced facial affect. We found that the right hemisphere was more extensively activated than the left by a masked affect task, regardless of valence or visual field of input, suggesting that affective information in general is transferred to the right hemisphere in a manner consistent with the callosal relay hypothesis of Zaidel (1983, 1985, 1986; Zaidel et al., 1988, 1991). When considered in this light, these findings provide functional imaging support for the RHH (Levy et al., 1983; McLaren and Bryson, 1987; Moreno et al., 1990; Hugdahl et al., 1993; Adolphs et al., 1996; Borod et al., 1998; Adolphs et al., 2000). Second, there also appears to be a non-dominant posterior left hemisphere system that has only limited emotional processing capabilities, necessitating downstream elaboration by anterior cortical and subcortical regions. This downstream processing appears to involve valence-specific lateralized activation of orbitofrontal cortex and ventral striatum. During RVF presentations, negatively valenced stimuli appear to activate left anterior regions, while positively valenced stimuli appear to activate right anterior regions (Figure 5).

Fig. 5.

Proposed model of regional interactions. The present findings were used to outline a tentative model whereby the posterior right hemisphere is dominant for processing all facial affective stimuli regardless of valence, but is also particularly specialized for processing negative affective stimuli. In contrast, the posterior left hemisphere is postulated to be relatively less effective at processing affective stimuli in general and must, therefore, rely on downstream processing within the prefrontal cortex bilaterally to evaluate the significance of the affective stimulus. This prefrontal system appears to recruit the left and right anterior regions in a valence-specific manner. Top left: Happy affective stimuli in the LVF are initially projected to the right hemisphere primary visual cortex and then intra-hemispherically to nearby posterior temporal and fusiform regions for further analysis. Top right: In contrast, happy stimuli in the RVF are first projected to the primary visual cortex of the left hemisphere. Because the left hemisphere is relatively less specialized for processing facial affect, such information is sent downstream for further elaborative processing. Happy affect in this hemisphere appears to activate left fusiform and left middle temporal gyri and is further projected bilaterally to the prefrontal cortices for elaboration and comparison, leading to valence-specific activation of the right prefrontal cortex. Bottom left: Sad affective stimuli from the LVF would be directly projected to the primary visual cortex of the right hemisphere. Because of the superiority of the right hemisphere for processing affect, and negative affect in particular, very little transfer distance would be required for valence-specific elaboration. Consequently, negative facial affect cues in the LVF would be expected to be more rapidly and efficiently processed than any other affect/visual field combination. Bottom right: In contrast, sad affective stimuli in the RVF would be particularly disadvantaged, as they would be sent to the non-affect dominant left hemisphere. Due to the relative non-specialization of the left hemisphere for processing affect, the information would be subsequently relayed to the anterior regions for further elaboration and comparison. Because there exist many more categories of negative emotion than positive, the processing of negative affect in the left hemisphere is likely to be particularly inefficient. Globally, this model predicts that LVF presentations should generally be superior to RVF presentations, regardless of valence, but further suggests that RVF presentations of happy expressions will typically result in superior processing than identical presentations of sad expressions.

Interestingly, the pattern of prefrontal activation yielded by RVF affective stimuli was lateralized in a manner opposite from the traditional ‘left = positive/approach’ and ‘right = negative/withdrawal’ pattern suggested in some models (Davidson et al., 1990; Davidson, 1992). It is possible that the prefrontal cortices are engaged bilaterally in the downstream processing of the information (Vuilleumier and Pourtois, 2007), but that the lateralized pattern of activation presently observed represents greater neural inefficiency or increased regional effort invested toward processing affective information by the half of the prefrontal cortex that is less specialized for that particular emotion. Furthermore, assuming that the left hemisphere is in fact poorer at processing facial displays of emotion relative to the right, it is likely to have greater difficulty discriminating among the complexities of the wide range of negative emotional expressions, of which there exist at least four or five basic categories (e.g. anger, sadness, disgust, fear and perhaps contempt) (Ekman, 1992, 2004). In contrast, positive emotional expressions may be comparatively less demanding to identify, because positive emotions can roughly be subsumed under a single broad category of ‘happiness’, the most easily identified of all emotions (Kirouac and Dore, 1985; Esteves and Ohman, 1993; Hugdahl et al., 1993). Consequently, positive expressions in the RVF and projected to the emotionally non-dominant hemisphere may be identified more easily than negative expressions, due to the limited processing necessary for recognizing the general category of positive emotions. 
Of note, this pattern is actually more consistent with a direct access model of hemispheric processing (Zaidel, 1983, 1985, 1986; Zaidel et al., 1988, 1991), which posits that affective information may be processed to some extent by either hemisphere, but that it will be most effectively processed when projected directly to the hemisphere most specialized for affective processing. Thus, not only could the present model account for the findings of the RHH (Levy et al., 1983; McLaren and Bryson, 1987; Moreno et al., 1990; Hugdahl et al., 1993; Adolphs et al., 1996; Borod et al., 1998; Adolphs et al., 2000), but it may also account for some of the behavioral findings supporting the VSH as well (Reuter-Lorenz and Davidson, 1981; Natale et al., 1983; Reuter-Lorenz et al., 1983; Jansari et al., 2000; Rodway et al., 2003; Lee et al., 2004; Pourtois et al., 2005). Assuming this model of brain activity relates to behavioral performance, one might expect to find support for the RHH under most circumstances when lateralized affective presentations are compared across hemispheres (e.g. LVF sad vs RVF sad), but may find support for the VSH on some occasions when valenced stimuli are compared within hemispheric presentations (e.g. RVF happy vs RVF sad).

The use of a backward masking paradigm in this study ensured that stimuli were projected initially to one visual field or the other, because stimulus presentation times were shorter than the time needed to move the eyes toward the lateralized affective target stimulus. This strength also serves as a limitation, as the findings cannot be validly generalized to presentations that are consciously recognized. Some evidence also suggests that the lateralization of emotional perception may be affected by individual difference variables such as gender (Harrison et al., 1990; Killgore and Gangestad, 1999), handedness (Rodway et al., 2003), or even current mood state (Mikhailova et al., 1996; Killgore and Cupp, 2002; Compton et al., 2003). Such variables were held constant or not assessed in the present study and cannot, therefore, be addressed here. Functional imaging studies that examine such factors will undoubtedly further our understanding of this complex system. It also needs to be emphasized that, although the present findings were discussed in terms of possible stages of temporal and spatial processing (e.g. Vuilleumier and Pourtois, 2007), the temporal resolution of fMRI is extremely limited. Future investigations would benefit from the use of alternative technologies such as magnetoencephalography (MEG), which can provide considerably more refined resolution of cerebral responses over brief time periods.

At least two major assumptions were also critical to our interpretation of the data. First, we generally interpreted the increased BOLD activation as evidence of greater cognitive and neural processing. This may not necessarily be the case, as there is some evidence that suggests that greater activation may represent less efficient processing or greater cognitive effort due to task difficulty (Neubauer et al., 2005). This concern cannot be answered by the present findings and will require further research. Further, the present results rely heavily upon the ‘subtraction hypothesis’, which assumes that brain activity during one cognitive state can be validly differentiated from that of another state via simple subtraction of the two conditions. Here we contrasted mirror-image chimeric faces, assuming that the neutral components would cancel out. The validity of such methods is still a matter of ongoing discussion (Sartori and Umilta, 2000; Hautzel et al., 2003), and future research may attempt to employ alternative methods to the chimeric presentations used here, such as full face presentations to each hemifield.

Overall, these functional neuroimaging findings suggest a complex lateralized emotional perception system that encompasses processes subsumed by both the RHH and VSH. Future research may be advanced by focusing on understanding the functional interrelationships among the components of this distributed system rather than continuing to debate the relative merits of two hypotheses that appear to be addressing separate but interrelated components of the same system. By applying functional neuroimaging to a long-standing debate within affective neuroscience, it is now possible to see how two apparently contradictory hypotheses may, in fact, both be correct, once we have the capacity to step back and visualize the system as a whole.

Acknowledgments

This work was supported by a grant from the National Institute of Child Health and Human Development (NICHD) to W. D. K., PhD and D. A. Y.-T., PhD: NIH grant number 1 R03 HD41542-01, and MH 069840 to Deborah A. Yurgelun-Todd, PhD.

Footnotes

Conflict of Interest

None declared.

References

- Adolphs R, Damasio H, Tranel D, Cooper G, Damasio AR. A role for somatosensory cortices in the visual recognition of emotion as revealed by three-dimensional lesion mapping. Journal of Neuroscience. 2000;20:2683–90. doi: 10.1523/JNEUROSCI.20-07-02683.2000.

- Adolphs R, Damasio H, Tranel D, Damasio AR. Cortical systems for the recognition of emotion in facial expressions. Journal of Neuroscience. 1996;16:7678–87. doi: 10.1523/JNEUROSCI.16-23-07678.1996.

- Adolphs R, Jansari A, Tranel D. Hemispheric perception of emotional valence from facial expressions. Neuropsychology. 2001;15:516–24.

- Ahern GL, Schwartz GE. Differential lateralization for positive vs negative emotion. Neuropsychologia. 1979;17:693–8. doi: 10.1016/0028-3932(79)90045-9.

- Borod JC, Cicero BA, Obler LK, et al. Right hemisphere emotional perception: evidence across multiple channels. Neuropsychology. 1998;12:446–58. doi: 10.1037//0894-4105.12.3.446.

- Borod JC, Haywood CS, Koff E. Neuropsychological aspects of facial asymmetry during emotional expression: a review of the normal adult literature. Neuropsychology Review. 1997;7:41–60. doi: 10.1007/BF02876972.

- Borod JC, Koff E, Perlman Lorch M, Nicholas M. The expression and perception of facial emotion in brain-damaged patients. Neuropsychologia. 1986;24:169–80. doi: 10.1016/0028-3932(86)90050-3.

- Canli T, Desmond JE, Zhao Z, Glover G, Gabrieli JD. Hemispheric asymmetry for emotional stimuli detected with fMRI. Neuroreport. 1998;9:3233–9. doi: 10.1097/00001756-199810050-00019.

- Compton RJ, Fisher LR, Koenig LM, McKeown R, Munoz K. Relationship between coping styles and perceptual asymmetry. Journal of Personality and Social Psychology. 2003;84:1069–78. doi: 10.1037/0022-3514.84.5.1069.

- Davidson RJ. Anterior cerebral asymmetry and the nature of emotion. Brain & Cognition. 1992;20:125–51. doi: 10.1016/0278-2626(92)90065-t.

- Davidson RJ. Brain Asymmetry. Cambridge, MA: MIT Press; 1995. Cerebral asymmetry, emotion, and affective style. In: Davidson R.J., Hugdahl, K, editors; pp. 361–87.

- Davidson RJ, Ekman P, Saron CD, Senulis JA, Friesen WV. Approach-withdrawal and cerebral asymmetry: emotional expression and brain physiology. I. Journal of Personality and Social Psychology. 1990;58:330–41.

- Demaree HA, Everhart DE, Youngstrom EA, Harrison DW. Brain lateralization of emotional processing: historical roots and a future incorporating ‘dominance’. Behavioural and Cognitive Neuroscience Review. 2005;4:3–20. doi: 10.1177/1534582305276837.

- Detre JA, Maccotta L, King D, et al. Functional MRI lateralization of memory in temporal lobe epilepsy. Neurology. 1998;50:926–32. doi: 10.1212/wnl.50.4.926.

- Ekman P. Are there basic emotions? Psychological Review. 1992;99:550–3. doi: 10.1037/0033-295x.99.3.550.

- Ekman P. Emotions Revealed: Recognizing Faces and Feelings to Improve Communication and Emotional Life. New York: Henry Holt and Company; 2004.

- Erwin RJ, Gur RC, Gur RE, Skolnick B, Mawhinney-Hee M, Smailis J. Facial emotion discrimination: I. Task construction and behavioral findings in normal subjects. Psychiatry Research. 1992;42:231–40. doi: 10.1016/0165-1781(92)90115-j.

- Esteves F, Ohman A. Masking the face: recognition of emotional facial expressions as a function of the parameters of backward masking. Scandinavian Journal of Psychology. 1993;34:1–18. doi: 10.1111/j.1467-9450.1993.tb01096.x.

- Friston K, Ashburner J, Poline J, Frith C, Heather J, Frackowiak R. Spatial registration and normalization of images. Human Brain Mapping. 1995a;2:165–89.

- Friston K, Holmes A, Worsley K, Poline J, Frith C, Frackowiak R. Statistical parametric maps in functional imaging: a general approach. Human Brain Mapping. 1995b;5:189–201.

- Harrison DW, Gorelczenko PM, Cook J. Sex differences in the functional asymmetry for facial affect perception. International Journal of Neuroscience. 1990;52:11–16. doi: 10.3109/00207459008994238.

- Hautzel H, Mottaghy FM, Schmidt D, Muller HW, Krause BJ. Neurocognition and PET. Strategies for data analysis in activation studies on working memory. Nuklearmedizin. 2003;42:197–209.

- Hugdahl K, Iversen PM, Johnsen BH. Laterality for facial expressions: does the sex of the subject interact with the sex of the stimulus face? Cortex. 1993;29:325–31. doi: 10.1016/s0010-9452(13)80185-2.

- Hugdahl K, Iversen PM, Ness HM, Flaten MA. Hemispheric differences in recognition of facial expressions: a VHF-study of negative, positive, and neutral emotions. International Journal of Neuroscience. 1989;45:205–13. doi: 10.3109/00207458908986233.

- Jansari A, Tranel D, Adolphs R. A valence-specific lateral bias for discriminating emotional facial expressions in free field. Cognition and Emotion. 2000;14:341–53.

- Killgore WDS, Cupp DW. Mood and sex of participant in perception of happy faces. Perceptual & Motor Skills. 2002;95:279–88. doi: 10.2466/pms.2002.95.1.279.

- Killgore WDS, Gangestad SW. Sex differences in asymmetrically perceiving the intensity of facial expressions. Perceptual & Motor Skills. 1999;89:311–4. doi: 10.2466/pms.1999.89.1.311.

- Killgore WDS, Glosser G, Casasanto DJ, French JA, Alsop DC, Detre JA. Functional MRI and the Wada test provide complementary information for predicting post-operative seizure control. Seizure. 1999;8:450–5. doi: 10.1053/seiz.1999.0339.

- Killgore WDS, Yurgelun-Todd DA. Activation of the amygdala and anterior cingulate during nonconscious processing of sad versus happy faces. Neuroimage. 2004;21:1215–23. doi: 10.1016/j.neuroimage.2003.12.033.

- Kirouac G, Dore FY. Accuracy of the judgment of facial expression of emotions as a function of sex and level of education. Journal of Nonverbal Behavior. 1985;9:3–7.

- Lee GP, Meador KJ, Loring DW, et al. Neural substrates of emotion as revealed by functional magnetic resonance imaging. Cognitive and Behavioral Neurology. 2004;17:9–17. doi: 10.1097/00146965-200403000-00002.

- Levy J, Heller W, Banich MT, Burton LA. Asymmetry of perception in free viewing of chimeric faces. Brain & Cognition. 1983;2:404–19. doi: 10.1016/0278-2626(83)90021-0.

- Levy J, Trevarthen C, Sperry RW. Reception of bilateral chimeric figures following hemispheric deconnexion. Brain. 1972;95:61–78. doi: 10.1093/brain/95.1.61.

- Ley RG, Bryden MP. Hemispheric differences in processing emotions and faces. Brain and Language. 1979;7:127–38. doi: 10.1016/0093-934x(79)90010-5.

- Macwhinney B, Cohen J, Provost J. The PsyScope experiment-building system. Spatial Vision. 1997;11:99–101. doi: 10.1163/156856897x00113.

- Maldjian JA, Laurienti PJ, Kraft RA, Burdette JH. An automated method for neuroanatomic and cytoarchitectonic atlas-based interrogation of fMRI data sets. Neuroimage. 2003;19:1233–9. doi: 10.1016/s1053-8119(03)00169-1.

- Mandal MK, Tandon SC, Asthana HS. Right brain damage impairs recognition of negative emotions. Cortex. 1991;27:247–53. doi: 10.1016/s0010-9452(13)80129-3.

- McLaren J, Bryson SE. Hemispheric asymmetries in the perception of emotional and neutral faces. Cortex. 1987;23:645–54. doi: 10.1016/s0010-9452(87)80054-0.

- Mikhailova ES, Vladimirova TV, Iznak AF, Tsusulkovskaya EJ, Sushko NV. Abnormal recognition of facial expression of emotions in depressed patients with major depression disorder and schizotypal personality disorder. Biological Psychiatry. 1996;40:697–705. doi: 10.1016/0006-3223(96)00032-7.

- Moreno CR, Borod JC, Welkowitz J, Alpert M. Lateralization for the expression and perception of facial emotion as a function of age. Neuropsychologia. 1990;28:199–209. doi: 10.1016/0028-3932(90)90101-s.

- Natale M, Gur RE, Gur RC. Hemispheric asymmetries in processing emotional expressions. Neuropsychologia. 1983;21:555–65. doi: 10.1016/0028-3932(83)90011-8.

- Neubauer AC, Grabner RH, Fink A, Neuper C. Intelligence and neural efficiency: further evidence of the influence of task content and sex on the brain-IQ relationship. Brain Research: Cognitive Brain Research. 2005;25:217–25. doi: 10.1016/j.cogbrainres.2005.05.011.

- Penny WD, Holmes AP, Friston KJ. Human Brain Function. 2nd. San Diego: Academic Press; 2003. Random effects analysis. In: Frackowiak, R.S.J., Friston, K.J., Frith, C., et al; pp. 843–50.

- Pourtois G, de Gelder B, Bol A, Crommelinck M. Perception of facial expressions and voices and of their combination in the human brain. Cortex. 2005;41:49–59. doi: 10.1016/s0010-9452(08)70177-1.

- Reuter-Lorenz P, Davidson RJ. Differential contributions of the two cerebral hemispheres to the perception of happy and sad faces. Neuropsychologia. 1981;19:609–13. doi: 10.1016/0028-3932(81)90030-0.

- Reuter-Lorenz PA, Givis RP, Moscovitch M. Hemispheric specialization and the perception of emotion: evidence from right-handers and from inverted and non-inverted left-handers. Neuropsychologia. 1983;21:687–92. doi: 10.1016/0028-3932(83)90068-4.

- Rodway P, Wright L, Hardie S. The valence-specific laterality effect in free viewing conditions: the influence of sex, handedness, and response bias. Brain & Cognition. 2003;53:452–63. doi: 10.1016/s0278-2626(03)00217-3.

- Sartori G, Umilta C. How to avoid the fallacies of cognitive subtraction in brain imaging. Brain and Language. 2000;74:191–212. doi: 10.1006/brln.2000.2334.

- Soares JJ, Ohman A. Backward masking and skin conductance responses after conditioning to nonfeared but fear-relevant stimuli in fearful subjects. Psychophysiology. 1993;30:460–6. doi: 10.1111/j.1469-8986.1993.tb02069.x.

- Vuilleumier P, Pourtois G. Distributed and interactive brain mechanisms during emotion face perception: evidence from functional neuroimaging. Neuropsychologia. 2007;45:174–94. doi: 10.1016/j.neuropsychologia.2006.06.003.

- Wedding D, Stalans L. Hemispheric differences in the perception of positive and negative faces. International Journal of Neuroscience. 1985;27:277–81. doi: 10.3109/00207458509149773.

- Whalen PJ, Rauch SL, Etcoff NL, McInerney SC, Lee MB, Jenike MA. Masked presentations of emotional facial expressions modulate amygdala activity without explicit knowledge. Journal of Neuroscience. 1998;18:411–8. doi: 10.1523/JNEUROSCI.18-01-00411.1998.

- Zaidel E. Cerebral Hemisphere Asymmetry. New York: Praeger Publishers; 1983. Disconnection syndrome as a model for laterality effects in the normal brain. In: Hellige, J., editor.

- Zaidel E. The Dual Brain: Hemispheric Specialization in Humans. New York: Guilford; 1985. Language in the right hemisphere. In: Zaidel, E., editor.

- Zaidel E. Two Hemispheres–One Brain: Functions of the Corpus Callosum. New York: A. R. Liss; 1986. Callosal dynamics and right hemisphere language. In: Jasper, H., editor.

- Zaidel E, Clarke JM, Suyenobu B. Neurobiological Foundations of Higher Cognitive Function. New York: Guilford; 1991. Hemispheric independence: a paradigm case for cognitive neuroscience. In: Wechsler, A., editor.

- Zaidel E, White H, Sakurai E, Banks W. Right Hemisphere Contributions to Lexical Semantics. New York: Springer; 1988. Hemispheric locus of lexical congruity effects: neuropsychological reinterpretation of psycholinguistic results. In: Chiarello, C., editor.