Abstract

The influence of integrated goal representations on multilevel coordination stability was investigated in a task that required finger tapping in antiphase with metronomic tone sequences (inter-agent coordination) while alternating between the two hands (intra-personal coordination). The maximum rate at which musicians could perform this task was measured when taps did or did not trigger feedback tones. Tones produced by the two hands (very low, low, medium, high, very high) could be the same as, or different from, one another and the (medium-pitched) metronome tones. The benefits of feedback tones were greatest when they were close in pitch to the metronome and the left hand triggered low tones while the right hand triggered high tones. Thus, multilevel coordination was facilitated by tones that were easy to integrate with, but perceptually distinct from, the metronome, and by compatibility of movement patterns and feedback pitches.

Keywords: Motor Coordination, Auditory Feedback, Perceptual Motor Processes, Finger Tapping

Skilled human activity often involves precise temporal coordination at multiple levels. For example, in musical ensembles, performers coordinate their own body parts when manipulating instruments in order to produce sounds that are coordinated with the sounds of other performers. The intricate interlocking of instrumental parts in Balinese Gamelan music (especially in the kotekan style of playing) illustrates superbly how adept humans can become at such multilevel coordination. Here, and in more common activities such as dancing to music, there are two primary levels of coordination: (1) intra-personal – between an individual’s own body parts (e.g., two hands or feet), and (2) inter-agent – between one’s own actions and externally controlled events (e.g., another’s actions or the effects thereof).1

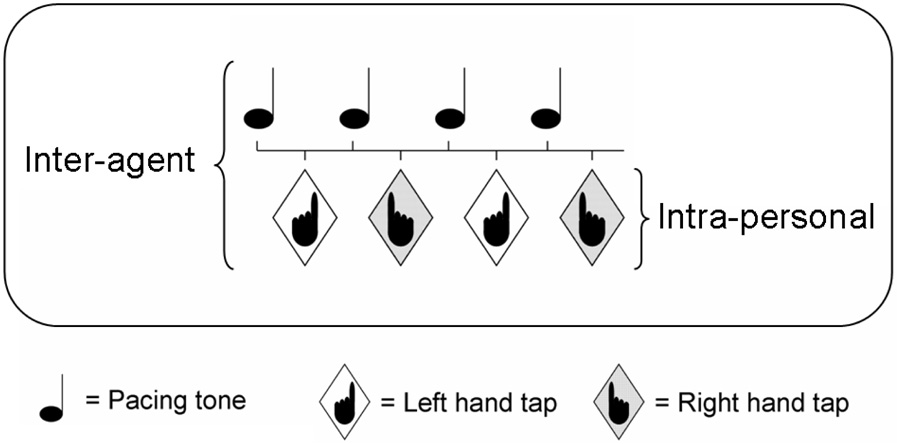

Keller and Repp (2004) investigated simultaneous intra-personal and inter-agent coordination using a task that requires the left and right hands to move in alternation (intra-personal coordination) to produce finger taps at the midpoint between the tones of an auditory metronome (inter-agent coordination) (see Figure 1). Thus, antiphase coordination is required at both levels. This coordination mode, dubbed Alternating Bimanual Syncopation (ABS), proved to be relatively challenging. The variability of tap timing was far greater for ABS than for any of the other coordination modes that were examined, including unimanual syncopation where taps are made (with either the left or right hand) after every metronome tone, unimanual ‘skip’ syncopation where taps are made after every other tone, bimanual synchronization with alternating hands, unimanual synchronization (tapping with every tone), and unimanual skip synchronization (tapping with every other tone). The relative difficulty of ABS was more pronounced at moderately fast movement rates than at comfortable rates.

Figure 1.

Schematic illustration of the intra-personal and inter-agent components of alternating bimanual syncopation.

Borrowing from the dynamical systems approach to movement coordination, Keller and Repp (2004) concluded that the difficulty with ABS derives from competition between multiple attractors in the dynamical landscape wherein simultaneous intra-personal and inter-agent coupling takes place. According to this view, each of the two coupling collectives, intra-personal (i.e., hand-hand, or action-action) and inter-agent (metronome-hand, or perception-action), harbours an antiphase attractor and an in-phase attractor. Note that in the case of the inter-agent collective, antiphase coordination is syncopation and in-phase coordination is synchronization. It is known from other work that antiphase attractors are weaker than in-phase attractors, and that perception–action coupling is weaker than action–action coupling (e.g., Byblow, Chua, & Goodman, 1995; Chua & Weeks, 1997; Haken, Kelso, & Bunz, 1985; Schmidt, Bienvenu, Fitzpatrick, & Amazeen, 1998). Thus, there are asymmetries both within and between the two collectives involved in ABS.

The asymmetries within collectives compromise the stability of ABS to the extent that antiphase movements are drawn towards the in-phase mode. Attraction to the in-phase mode is stronger at fast rates than at comfortable rates because perception-action and action-action coupling grow weaker with increasing movement frequency, and the effects of this weakening on stability are greater for antiphase than for in-phase coordination (see Kelso, 1995; Schmidt et al., 1998; Turvey, 1990). At critically fast rates, transitions from the antiphase mode to the in-phase mode are often observed in intra-personal coordination and in inter-agent coordination (e.g., Kelso, 1984; Fraisse & Ehrlich, 1955; Kelso, DelColle, & Schöner, 1990; Schmidt et al., 1998). Such phase transitions are usually preceded by increased variability in movement timing (Kelso, Scholz, & Schöner, 1986; Schmidt, Carello, & Turvey, 1990). In ABS, the potential for transitions from antiphase to in-phase coordination may be relatively high because attraction takes place at the intra-personal and inter-agent levels simultaneously. Although phase transitions can be avoided by the intention to maintain double antiphase relations—at least by musically trained individuals tapping at the moderate rates tested by Keller and Repp (2004)—it may be effortful to do so. The high movement timing variability for ABS observed by Keller and Repp (2004) suggests that their musician participants were teetering on the edge of the boundary between antiphase and in-phase coordination.

The second type of asymmetry that characterises ABS, namely that between the intra-personal and inter-agent collectives, may compromise stability by influencing the allocation of attentional resources (Keller & Repp, 2004). ABS can be viewed as a dual task wherein both components (intra-personal and inter-agent antiphase coordination) require attention (see Mayville, Jantzen, Fuchs, Steinberg, & Kelso, 2002; Temprado & Laurent, 2004; Temprado, Zanone, Monno, & Laurent, 1999). The intra-personal (alternating hands) component of ABS may divert attentional resources from the relatively demanding task of maintaining antiphase coordination with the metronome. Inter-agent coupling may consequently weaken, making coordination accuracy at this level more variable from cycle to cycle. Such attentional constraints may apply in real-world instances of simultaneous intra-personal and inter-agent coupling when, for example, an instrumentalist falls out of sync with the rest of the ensemble due to excessive attention to fingering, or dancers lose the music (or their partners) due to excessive attention to their own footwork.

The current study investigated how multilevel coordination stability is influenced by the way in which the goals of the task are represented by the actor. The main aim of our experiment was to test whether coordination stability during ABS is affected by the degree to which the inter-agent and intra-personal components of ABS can be integrated into a unified task-goal representation. This was manipulated through the use of supplementary auditory feedback in the form of tones triggered by taps.

Previous work on intra-personal coordination has shown that bimanual coordination stability is influenced by the degree to which tones triggered by separate hands can be integrated into a single perceptual stream. For example, in a study of 2:3 polyrhythm production (i.e., repeatedly making two isochronous taps with one hand in the time that it takes to make three taps with the other hand), Summers, Todd, and Kim (1993) found that timing was more accurate when the pitch separation between tones produced by each hand was narrow than when it was wide. Given that sequences of tones close in pitch are more likely to be perceived as a single stream than sequences of tones separated by large pitch differences (see Bregman, 1990), the results of Summers et al. suggest that bimanual coordination is more stable when the two hands are controlled with reference to integrated rather than independent movement goals (also see Klapp, Hill, Tyler, Martin, Jagacinski, & Jones, 1985; Jagacinski, Marshburn, Klapp, & Jones, 1988; Summers, 2002). This phenomenon generalizes across tasks and modalities, as benefits of integrated goal representations have also been observed in studies addressing the influence of transformed visual feedback on the stability of intra-personal coordination (e.g., Bogaerts, Buekers, Zaal, & Swinnen, 2003; Mechsner, Kerzel, Knoblich, & Prinz, 2001) and inter-agent coordination (e.g., Roerdink, Peper, & Beek, 2005).

In the current study, it was hypothesized that the addition of supplementary auditory feedback during ABS would simplify the perceptual goals of the task if the feedback tones and pacing signal tones can be integrated into a single Gestalt. We assumed that such integration would encourage the actor to adopt a common (auditory) perceptual locus of control across intra-personal and inter-agent levels of coordination, and that this perceptual locus of control would stabilise performance by reducing the impact of coupling constraints that implicate the motor system, and/or by discouraging the disproportionate allocation of attention to the alternating hands. Thus, integrated goal representations may redress the asymmetries both within and between the intra-personal and inter-agent collectives involved in ABS.

A strong version of the integrated goal representation hypothesis predicts that multilevel coordination stability should be greatest when feedback tones and pacing tones are indistinguishable, because under such conditions a single perceptual stream is guaranteed. However, the benefits of having a simple perceptual goal may in this case be tempered by structural incongruence between the auditory goal representation (consisting of a series of identical elements) and the motor program that drives movement (consisting of a series of disparate elements: left-hand taps, right-hand taps, and, presumably, intervening ‘rests’ that coincide with pacing signal events2). Research addressing the production of musical sequences has shown that timing accuracy can be affected by whether or not structural relations between movements and their auditory effects are compatible (e.g., Keller & Koch, 2006; Pfordresher, 2003, 2005). Such findings suggest that, during ABS, the benefits of integrated goal representations may enter into a trade-off with factors such as the degree of structural congruence between goal representations and their associated movement patterns.

To test the above hypotheses, coordination stability during ABS was compared across conditions that varied in terms of (1) whether or not finger taps triggered tones (i.e., presence of supplementary auditory feedback), and (2) the relationship between tone pitches produced by the two hands and the pacing sequence. It was assumed that integrated goal representations would be encouraged to the extent that feedback tones and pacing sequence tones are perceived as a single auditory stream, and that such perceptual organisation would arise when the tones are close in terms of pitch height. To gauge the effects of these feedback manipulations, coordination stability was indexed using an objective measure of the fastest rate at which ABS could be performed accurately. This ‘rate threshold’ measure, determined using a simple adaptive staircase procedure, yields information about the critical rate at which participants’ intentions are no longer effective in preventing transitions from antiphase to in-phase coordination (or total loss of coordination).

If integrated goal representations facilitate coordination stability, then ABS thresholds should indicate faster critical rates when the pitch separation between feedback tones and pacing tones is small than when it is large. Whether such benefits extend to the case where feedback and pacing tones are identical was a question of interest. The perception of a single stream (and, by implication, a perfectly integrated goal) is unavoidable under such circumstances. However, this situation is characterised by structural incongruence between the auditory goal representation (a sequence of uniform elements) and the required movement pattern (a ‘left-rest-right-rest’ varying sequence).

We examined the role of structural congruence by varying the assignment of low and high pitches to the two hands in the conditions where feedback tones were distinctive from pacing tones. Feedback pitches could either be the same or different across hands. Structural congruence was assumed to be highest when a different pitch is associated with each hand, because in this case the auditory goal representation and the movement pattern both consist of a set of three distinct elements. Structural congruence was manipulated further in the conditions where the two hands were associated with different pitches by varying the assignment of low and high tones to the left and right hands. Congruence was assumed to be higher when the left hand produced low tones and the right hand produced high tones (which is compatible with the key-to-tone mapping on a piano keyboard) than with the reverse (incompatible) arrangement (see Lidji, Kolinsky, Lochy, Karnas, & Morais, 2007; Repp & Knoblich, 2007a; Rusconi, Kwan, Giordano, Umiltá, & Butterworth, 2006).

METHODS

Participants

The 16 participants included author BHR in addition to six women and nine men. All apart from BHR were paid in return for their efforts. Eight participants (including BHR) were tested in New Haven, Connecticut, and eight were tested in Leipzig, Germany. Ages ranged from 20 to 33 years, except for BHR, who was a ripe 58 years at the time. All had played musical instruments for six or more years. The New Haven participants were self-declared right handers and the Leipzig participants were right handed according to the Edinburgh inventory (Oldfield, 1971).

Materials

Auditory sequences consisting of isochronous series of up to 38 identical moderately high-pitched digital piano tones (C6, 1046.5 Hz, with duration 80 ms plus damped decay) were used as pacing signals. In New Haven, the tone sequences were generated on a Roland RD-250s digital piano under control of a program written in MAX (a graphical programming environment for music and multimedia) running on a Macintosh Quadra 660AV computer. In Leipzig, sequences were produced by the QuickTime Music Synthesizer on a Macintosh G5 computer running MAX. The piano tones sounded very similar on both systems. Sequence rate was set according to the threshold measurement procedure described below.

Procedure

Participants sat in front of the computers, listened to the sequences over Sennheiser HD540 II headphones (in New Haven) or Sennheiser HD270 headphones (in Leipzig) at a comfortable intensity, and tapped on a Roland SPD-6 electronic percussion pad—which was held on the lap—at both test sites. The touch-sensitive rubber surface of the SPD-6 is divided into six segments, arranged in two rows of three. Left-hand taps were made on the top left segment and right-hand taps were made on the top right segment. The sensitivity of the pad was set to the manual (as opposed to drumstick) mode. Participants tapped with their index fingers, with the left hand leading. Some participants rested their hands on the pad and tapped by moving only the index fingers; others tapped ‘from above’ by moving the wrist and/or elbow joints of the free arms. In all conditions, the impact of the finger on the pad provided some auditory feedback (a thud), in proportion to the tapping force.

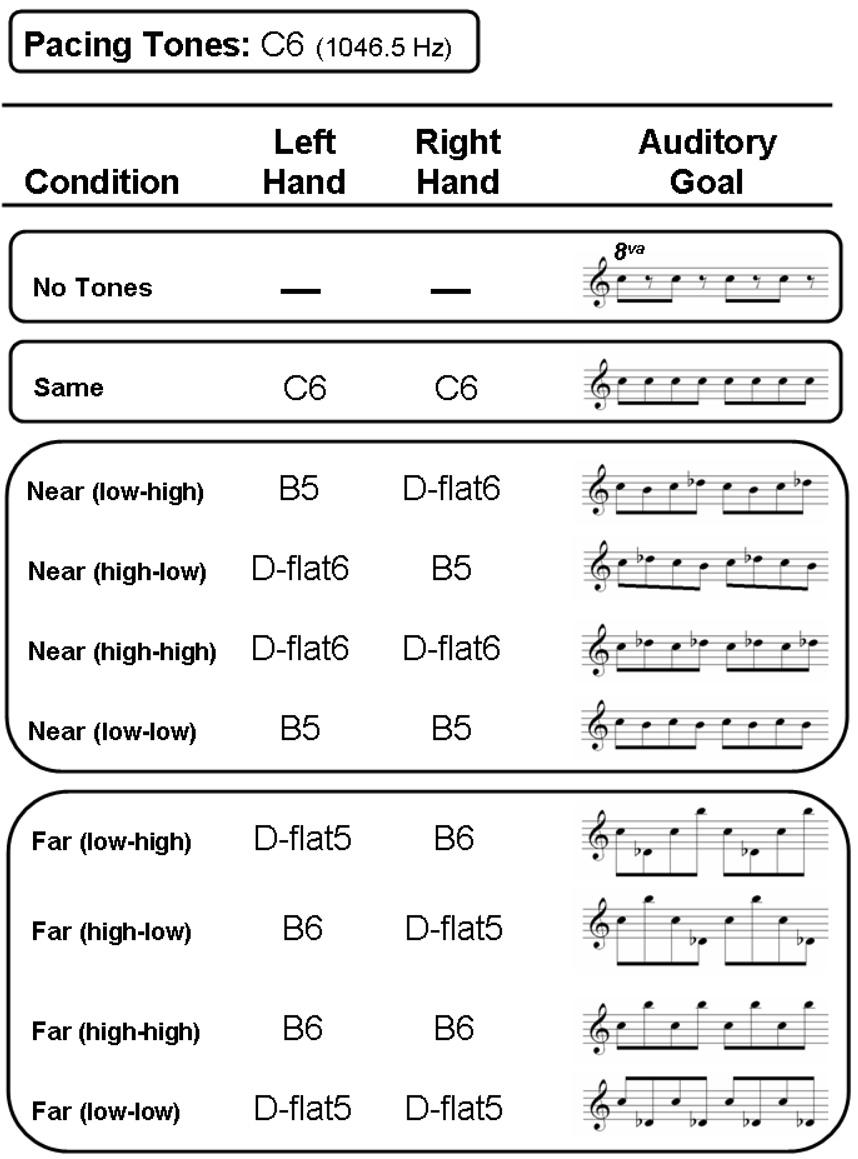

Participants’ ABS thresholds were estimated in 10 experimental conditions. Supplementary auditory feedback was provided in nine out of these ten conditions (see Figure 2). The sole condition without such feedback is referred to as the ‘No Tones’ condition. In the conditions with supplementary auditory feedback, transmission delays in the experimental apparatus at both sites caused tones to occur around 30 ms after finger impacts on the percussion pad. These delays were not expected to have marked effects on the results because musicians are generally accustomed to time lags between movements and sounds on their instruments due to mechanical (e.g., key action) and acoustical (e.g., rise time) considerations.

Figure 2.

The 10 feedback conditions used in the experiment. Tone pitches (e.g., C6) associated with the metronomic pacing sequence and the left and right hands are specified according to the note numbering system in which C4 is middle C on the piano. The auditory goal in each condition is shown in musical notation on the right (only pacing tones are shown for the ‘No Tones’ condition). Note: 8va indicates that tones sound one octave higher than notated, which applies to all conditions.

The nine supplementary auditory feedback conditions can be grouped as follows. First, in a condition referred to as ‘Same’, the pitches produced by the two hands and the pacing sequence were identical (C6). Next, in four conditions referred to collectively as ‘Near’ conditions, feedback tones were close in pitch to the pacing tones. In two Near conditions, labelled ‘Near (low-low)’ and ‘Near (high-high)’, the left and right hands produced identical pitches, which were one semitone lower or higher than the pacing tones. In the two remaining Near conditions, the left and right hands produced different pitches, also one semitone lower or higher than the pacing tones. In the ‘Near (low-high)’ condition, the left hand triggered low tones while the right hand triggered high tones (which was assumed to be a compatible mapping), while in the ‘Near (high-low)’ condition, these contingencies were reversed (and assumed to be incompatible). Finally, there were four ‘Far’ conditions in which the mappings of feedback to hand were similar to those employed in the Near conditions, except that feedback tones were now 11 semitones lower or higher than the pacing tones.
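As a rough illustration of the pitch separations involved, the sketch below converts semitone offsets from the C6 pacing tone (1046.5 Hz) into frequencies. It assumes twelve-tone equal temperament, which is an assumption about the synthesizers rather than something stated in the text. The names `offset_to_hz` and `OFFSETS` are illustrative only, and the exact feedback pitches are those specified in Figure 2.

```python
PACING_HZ = 1046.5  # C6 pacing tone, as given in Materials


def offset_to_hz(semitones, reference_hz=PACING_HZ):
    """Frequency of a tone `semitones` away from the reference.

    Assumes equal temperament: each semitone scales frequency by 2**(1/12).
    """
    return reference_hz * 2 ** (semitones / 12)


# Semitone offsets relative to the pacing tone for each group of conditions.
OFFSETS = {"Same": (0,), "Near": (-1, +1), "Far": (-11, +11)}

for label, offsets in OFFSETS.items():
    freqs = ", ".join(f"{offset_to_hz(s):.1f} Hz" for s in offsets)
    print(f"{label}: {freqs}")
```

Under this assumption, the Near tones lie within about 6% of the pacing frequency, whereas the Far tones lie one semitone short of an octave away in either direction.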

Participants’ ABS thresholds were estimated twice for each of the 10 conditions using an adaptive staircase procedure. Each set of 10 estimates was taken in a separate test session, typically separated by one week. During each session, each condition was presented once in a separate experimental block lasting approximately 8–10 minutes.

In a warm-up exercise conducted at the start of each session, the participant was required to estimate his or her ABS threshold without supplementary auditory feedback (as in the No Tones condition) by tapping along with, and adjusting the presentation rate of, a continuous sequence of isochronous piano tones identical to those described above. The participant indicated that he or she was ready to begin the warm-up exercise by pressing the spacebar on the computer keyboard, resulting in the presentation of the sequence after a brief delay. Initially the inter-onset interval (IOI) was set to 800 ms. Sequence rate could subsequently be adjusted by pressing the ‘up’ and ‘down’ arrow keys on the computer keyboard. Pressing the ‘up’ key shortened the IOI by a constant 20 ms, resulting in a rate increase, while pressing the ‘down’ key lengthened the IOI by 20 ms, resulting in a rate decrease. The participant was instructed to increase the rate of the sequence until he or she could no longer tap along in antiphase, and then to decrease the rate gradually until antiphase tapping was just manageable. Clicking a virtual button on the computer screen then brought on the formal threshold measurement procedure, after the participant’s estimated threshold had been recorded by MAX.

The formal threshold measurement procedure consisted of blocks of trials in which the rate of the sequence was adjusted from trial to trial according to an algorithm implemented in MAX. As mentioned above, there was one block of trials per feedback condition in each of the two test sessions. For each block, sequence rate was initially set to the participant’s own threshold estimate from the warm-up exercise. (All participants proved to be conservative in their threshold estimates, and were able to tap faster during formal threshold measurement.) In each trial, the participant’s task was to begin tapping after the third tone of the sequence, and to attempt to maintain an antiphase relationship between taps and tones until the sequence ended. Instructions specified that the urge to make transitions from antiphase to in-phase should be resisted, much as a musician would be required to do in order to perform an off-beat passage correctly. MAX evaluated the correctness of tap timing online by measuring the timing of each tap relative to the midpoint between sequence tones. The timing of an individual tap was considered to be correct if the time interval between the tap and the midpoint between tones was shorter than the time intervals between the tap and the initial tone, and the tap and the final tone, of the current IOI (i.e., within the 25–75% region of each IOI; 50% representing the midpoint). Tap timing was not measured for the initial 12 taps or the final tap of a trial. Performance was judged to be correct only in trials in which the remaining 24 taps met the timing criterion. Trials were aborted once an error was detected in order to shorten the duration of the task.
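The timing criterion described above can be sketched as follows. This is a minimal illustration, not the MAX implementation: the function names and the representation of taps and tones as millisecond timestamps are assumptions made for the example.

```python
def tap_is_correct(tap_ms, tone_ms, ioi_ms):
    """True if the tap is closer to the midpoint of the current IOI
    than to either of the two tones that bound it."""
    midpoint = tone_ms + ioi_ms / 2
    next_tone = tone_ms + ioi_ms
    d_mid = abs(tap_ms - midpoint)
    return d_mid < abs(tap_ms - tone_ms) and d_mid < abs(tap_ms - next_tone)


def trial_is_correct(tap_times_ms, tone_times_ms, ioi_ms):
    """True only if every scored tap meets the criterion.

    Taps and the tones that open their IOIs are paired in order;
    unscored taps are assumed to have been dropped by the caller.
    """
    return all(
        tap_is_correct(tap, tone, ioi_ms)
        for tap, tone in zip(tap_times_ms, tone_times_ms)
    )
```

With a 300 ms IOI starting at time 0, a tap at 150 ms is accepted, while a tap at exactly 75 ms is equidistant from the tone and the midpoint and is therefore rejected under the strict comparison.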

The algorithm that determined presentation rate for each trial started with the rule that, if performance on the first trial is correct, then the rate of the sequence should be increased by 4% in the next trial. (The resulting IOI was rounded to the nearest millisecond.) This rule was reapplied until an incorrect trial was registered, in which case sequence rate remained the same for the next trial. Following three consecutive incorrect trials, sequence rate was reduced by 4%. Then, as long as performance remained correct, sequence rate was increased by 1% from trial to trial until the occurrence of another set of three consecutive incorrect trials. At this point the block terminated and the lowest correct IOI value achieved by the participant was recorded by MAX as his or her threshold. At the conclusion of each trial within a block, a message appeared in a window on the computer screen informing the participant whether sequence rate would be faster, the same, or slower in the next trial. The participant progressed from block to block by closing the current message window, and then opening a new window by selecting an item from a dropdown menu on the computer screen. (The participant had been given a sheet specifying the order in which he or she should run through the blocks.) Ten blocks—one for each condition—were completed in this fashion during each test session, yielding 10 ABS threshold values per participant per session. Condition order in the first session was counterbalanced across participants according to a 10 × 10 Latin square. The condition order encountered by individual participants was reversed in the second session.
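The staircase rule can be sketched roughly as below. Several details are assumptions rather than facts from the text: a 4% (or 1%) rate increase is implemented here as multiplying the IOI by 0.96 (or 0.99), a rate reduction as multiplying by 1.04, and rate increases are assumed to resume after a failed trial is repeated successfully. The callback `trial_is_correct` and the simulated participant are hypothetical stand-ins for real performance.

```python
def run_staircase(start_ioi_ms, trial_is_correct, max_trials=200):
    """Return the shortest IOI (ms) performed correctly within one block."""
    ioi = start_ioi_ms
    step = 0.04      # coarse phase: 4% steps
    phase = 1
    misses = 0       # consecutive incorrect trials at the current IOI
    best = None
    for _ in range(max_trials):
        if trial_is_correct(ioi):
            misses = 0
            best = ioi if best is None else min(best, ioi)
            ioi = round(ioi * (1 - step))   # faster on the next trial
        else:
            misses += 1                     # same IOI is repeated
            if misses == 3:
                if phase == 2:
                    break                   # second run of three errors ends the block
                ioi = round(ioi * 1.04)     # back off by 4%, then use 1% steps
                phase, step, misses = 2, 0.01, 0
    return best


# Hypothetical participant who succeeds at any IOI of 250 ms or longer.
threshold = run_staircase(400, lambda ioi: ioi >= 250)
print(threshold)
```

A deterministic participant is used only to make the logic easy to follow; real performance near threshold is variable, which is why three consecutive errors are required before the rate changes or the block ends.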

RESULTS

Each participant’s ABS threshold estimates for each feedback condition from the first and second test sessions are shown in Table 1 and Table 2, respectively. In a preliminary analysis, these estimates were entered into a (2 × 10) × 2 analysis of variance (ANOVA) with the within-participant variables session and feedback condition, and the between-participants variable test site (New Haven vs. Leipzig).3 Two of the Leipzig participants were excluded from this analysis because they failed to save their data correctly during the first session. This preliminary ANOVA revealed significant main effects of session, F(1, 12) = 21.83, p = .001, and feedback condition, F(9, 108) = 6.38, p < .01. The effect of session indicated general improvement between sessions: Average thresholds decreased from 319 ms (SD = 67 ms) in the first session to 277 ms (SD = 56 ms) in the second session (for participants who saved their data in both sessions).4 The interaction between session and feedback condition was far from statistical significance, F(9, 108) = .63, p = .56. Likewise, the main effect of test site and all interactions involving this between-participants variable were not significant, ps > .2, indicating that the slight differences in equipment at the two sites did not affect performance reliably.

Table 1.

Individual participants’ ABS thresholds (in ms) for the 10 feedback conditions from the first test session. Data from participants L1 and L3 are missing because they were not saved due to human error. The letter prefixing each participant number indicates the test site: ‘N’ = New Haven; ‘L’ = Leipzig. For the feedback condition labels, ‘Lo’ = Low and ‘Hi’ = High.

| Participant | No Tones | Same | Near Lo-Hi | Near Hi-Lo | Near Hi-Hi | Near Lo-Lo | Far Lo-Hi | Far Hi-Lo | Far Hi-Hi | Far Lo-Lo |
|---|---|---|---|---|---|---|---|---|---|---|
| N1 | 350 | 248 | 277 | 324 | 409 | 289 | 285 | 308 | 309 | 300 |
| N2 | 278 | 289 | 302 | 351 | 277 | 324 | 259 | 311 | 324 | 308 |
| N3 | 300 | 379 | 337 | 353 | 318 | 376 | 324 | 303 | 324 | 312 |
| N4 | 300 | 312 | 247 | 238 | 305 | 269 | 252 | 311 | 312 | 297 |
| N5 | 350 | 391 | 398 | 350 | 375 | 401 | 324 | 388 | 402 | 379 |
| N6 | 283 | 352 | 284 | 373 | 358 | 359 | 331 | 359 | 316 | 373 |
| N7 | 404 | 288 | 310 | 323 | 266 | 299 | 349 | 320 | 349 | 374 |
| N8 | 330 | 334 | 379 | 285 | 391 | 315 | 308 | 297 | 347 | 369 |
| L1 | - | - | - | - | - | - | - | - | - | - |
| L2 | 304 | 221 | 169 | 213 | 192 | 152 | 221 | 235 | 227 | 194 |
| L3 | - | - | - | - | - | - | - | - | - | - |
| L4 | 302 | 267 | 269 | 273 | 253 | 247 | 289 | 267 | 269 | 336 |
| L5 | 324 | 341 | 298 | 314 | 300 | 312 | 279 | 324 | 318 | 374 |
| L6 | 239 | 239 | 168 | 190 | 164 | 175 | 193 | 175 | 176 | 258 |
| L7 | 672 | 386 | 310 | 319 | 287 | 335 | 406 | 682 | 711 | 447 |
| L8 | 431 | 393 | 431 | 443 | 435 | 390 | 349 | 399 | 388 | 363 |
| Average | 348 | 317 | 299 | 311 | 309 | 303 | 298 | 334 | 341 | 335 |
| SD | 106 | 60 | 75 | 67 | 79 | 74 | 56 | 115 | 122 | 62 |

Table 2.

Individual participants’ ABS thresholds (in ms) for the 10 feedback conditions from the second test session. Abbreviations are the same as in Table 1.

| Participant | No Tones | Same | Near Lo-Hi | Near Hi-Lo | Near Hi-Hi | Near Lo-Lo | Far Lo-Hi | Far Hi-Lo | Far Hi-Hi | Far Lo-Lo |
|---|---|---|---|---|---|---|---|---|---|---|
| N1 | 374 | 244 | 194 | 225 | 199 | 240 | 274 | 304 | 262 | 335 |
| N2 | 239 | 282 | 188 | 183 | 260 | 213 | 284 | 239 | 239 | 255 |
| N3 | 314 | 290 | 290 | 264 | 299 | 248 | 314 | 290 | 316 | 248 |
| N4 | 306 | 253 | 243 | 231 | 225 | 243 | 234 | 253 | 253 | 253 |
| N5 | 379 | 348 | 337 | 397 | 379 | 394 | 375 | 347 | 354 | 344 |
| N6 | 328 | 352 | 260 | 339 | 355 | 370 | 260 | 292 | 316 | 370 |
| N7 | 356 | 255 | 185 | 184 | 174 | 215 | 254 | 288 | 342 | 219 |
| N8 | 339 | 351 | 309 | 334 | 305 | 324 | 347 | 321 | 324 | 334 |
| L1 | 260 | 292 | 215 | 211 | 235 | 232 | 232 | 223 | 292 | 274 |
| L2 | 328 | 200 | 185 | 209 | 243 | 169 | 209 | 213 | 251 | 217 |
| L3 | 509 | 490 | 510 | 553 | 552 | 575 | 570 | 606 | 622 | 530 |
| L4 | 267 | 210 | 210 | 220 | 227 | 241 | 242 | 214 | 227 | 269 |
| L5 | 315 | 275 | 210 | 236 | 275 | 232 | 285 | 245 | 271 | 271 |
| L6 | 193 | 172 | 140 | 134 | 147 | 160 | 147 | 179 | 209 | 245 |
| L7 | 324 | 354 | 293 | 382 | 315 | 297 | 360 | 341 | 295 | 401 |
| L8 | 390 | 349 | 308 | 314 | 313 | 346 | 343 | 353 | 329 | 435 |
| Average | 326 | 295 | 255 | 276 | 281 | 281 | 296 | 294 | 306 | 313 |
| SD | 72 | 78 | 88 | 105 | 96 | 103 | 95 | 98 | 95 | 87 |

Further analyses addressing the effects of feedback condition were conducted (without the test site variable) on data from the second session only. This decision was motivated not only by the fact that we had data from all participants for this session, but also because ABS is a challenging task that—not unlike, say, juggling—can be performed confidently only after considerable practice. An omnibus ANOVA revealed that the main effect of feedback condition was statistically significant for the second session dataset, F(9, 135) = 6.10, p < .001.

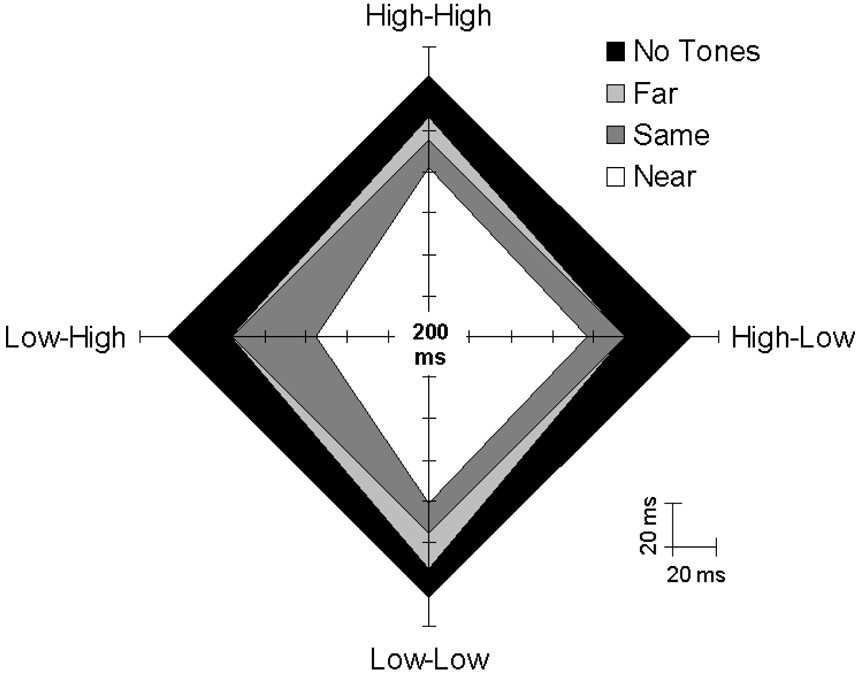

To assist in visualizing the data, average ABS thresholds from the second session are shown on a radar plot in Figure 3. Each of the four branches of the plot spans an IOI range from 200 to 340 ms. The labels attached to the branches refer to the different feedback-to-hand mappings from the Near and Far conditions (e.g., ‘Low-High’ refers to conditions where the left hand produced low-pitched tones and the right hand produced high tones). Points on the branches indicating ABS threshold values for the Near and Far conditions are joined by separate sets of lines. For the sake of comparison, threshold values for the No Tones and Same conditions are also joined by separate lines (note that the branch labels are not relevant in these cases, and that the same values are intersected on each branch). Each set of lines forms a diamond-like quadrilateral plane whose surface area and shape vary as a function of the related threshold values in such a way that smaller areas indicate lower ABS thresholds.

Figure 3.

Average ABS thresholds (in ms) for the 10 different feedback conditions. The labels at the end of each radar plot branch refer to the relative pitches of feedback tones (low or high) assigned to the left and right hands in the Near and Far conditions (with the pitch associated with the left hand listed first). These labels are not relevant in the case of data from the No Tones and Same conditions. The range of each branch spans 200–340 ms in 20 ms steps. See the text in the Results section for a more detailed description of this figure.

As hypothesized, ABS thresholds were generally lower when auditory feedback was present than when it was absent, as indicated by the fact that the No Tones condition covers the largest area in Figure 3. The reliability of this result was confirmed by a planned contrast that compared thresholds in the No Tones condition with thresholds from the Same, Near, and Far conditions combined (i.e., averaged), F(1, 15) = 9.58, p < .01. Further sets of planned comparisons—based on the hypothetical possibilities raised in the introduction—addressed how the benefits of auditory feedback were affected (1) by the degree to which integrated goal representations were encouraged by the various pitch relations and (2) by the degree of structural congruence between goal representations and movement patterns.

In support of the hypothesis that integrated goal representations facilitate coordination stability, thresholds were lower on average in the Near than the Far conditions, F(1, 15) = 15.55, p = .001. However, as can be seen in Figure 3, the benefits of integrated goal representations did not extend further to the Same condition. In fact, thresholds were higher in the Same condition than in the (averaged) Near conditions, F(1, 15) = 6.04, p < .05. In the former (identical tones) case, the benefits of highly integrated goal representations may be offset by costs associated with structural incongruence between these representations and the related movement patterns.

Further evidence that structural congruence plays a role in coordination stability can be seen in the irregular shape associated with the Near conditions in Figure 3. Note the slight elongation on the vertical dimension and the more obvious compression on the left side. The vertical elongation indicates that thresholds were higher, on average, when the left and right hands produced the same pitch [Near (Low-Low) & Near (High-High)] than when the two hands produced different pitches [Near (Low-High) & Near (High-Low)], F(1, 15) = 8.09, p = .01. The left-side compression indicates that thresholds were lower for the feedback-to-hand mapping that was compatible with the left-to-right spatial arrangement of tone pitches on a piano keyboard [Near (Low-High)] than for the reverse, incompatible mapping [Near (High-Low)], F(1, 15) = 6.61, p < .05. These results suggest that, when the pitch separation between feedback and pacing tones was small, structural congruence between auditory goal representations and movement patterns facilitated coordination.

A similar analysis of data from the Far conditions did not yield significant effects, though there was a tendency for thresholds to be lower when the two hands produced different pitches than when they produced the same pitch, F(1, 15) = 4.30, p = .06. These results suggest that structural congruence was not particularly influential when integrated goal representations were discouraged by the large pitch separation between feedback and pacing tones.

DISCUSSION

Simultaneous intra-personal and inter-agent coordination was investigated in a challenging task that required alternating bimanual syncopation (ABS), i.e., finger tapping in antiphase with metronomic tone sequences while alternating between the two hands. Auditory feedback (in the form of tones triggered by taps) was manipulated to test how the stability of ABS is affected by the degree to which the intra-personal and inter-agent components of the task can be integrated into a unified task-goal representation. Coordination stability was indexed by thresholds representing the fastest rates at which musically trained participants could perform the ABS task. Feedback tones improved performance, and this benefit was most pronounced when the tones were close in pitch to the pacing tones and the left hand triggered low tones while the right hand triggered high tones. Thus, multilevel coordination stability was facilitated by distal action effects that were readily integrable (but distinctive) and structurally congruent with the movement pattern.

Integrated Task-Goal Representations

Integrated task-goal representations may—up to a point (see below)—facilitate multilevel coordination stability by allowing a common (auditory perceptual) locus of control to be used for intra-personal and inter-agent levels of coordination. We assume that, under such a strategy, action is guided by the intention to produce a particular sequence of tones (i.e., the conscious goal is specified in the auditory domain), rather than to produce a sequence of finger movements that interleaves the external pacing tones (i.e., a goal spanning the auditory and motor domains). We speculate that this strategy could potentially serve to redress the asymmetries that arise both within and between the two coupling collectives involved in ABS (described briefly in the Introduction and in more detail by Keller & Repp, 2004).

The use of an auditory locus of control (in other words, a ‘disembodied’ control structure) could reduce the asymmetry between the strength of antiphase and in-phase coupling within the inter-agent collective to the extent that disembodied control lessens the impact of coupling constraints that implicate the motor system (see Mechsner, 2004). As a consequence, antiphase inter-agent coordination would be stable over a wider range of rates. A large body of research has shown that challenging intra-personal coordination tasks are performed more successfully when the actor focuses on the distal effects of his or her actions rather than on the body movements that bring about these effects (for a review, see Wulf & Prinz, 2001). The current findings suggest that such a strategy may also be beneficial when the difficulty lies not in intra-personal coordination itself (alternating the hands is not, on its own, a challenging task), but rather in the demands of simultaneous intra-personal and inter-agent coordination.

The phenomenon known as ‘anchoring’ (Beek, 1989; Byblow, Carson, & Goodman, 1994; Fink, Foo, Jirsa, & Kelso, 2000; Roerdink, Ophoff, Peper, & Beek, 2008) provides a specific mechanism that may facilitate coordination stability especially during ABS with auditory feedback. Anchoring occurs when cyclic movements (e.g., juggling) are timed with reference to a discrete point within each cycle. Such anchor points, which often coincide with salient movement transitions, can be viewed as “intentional attractors” (Beek, 1989, p. 183) that provide task-specific information that assists in reducing kinematic variability around movement transition points as well as stabilizing entire movement cycles (see Roerdink et al., 2008). The information that is available at anchor points thus varies according to the task, and, moreover, it can be associated both with self-generated events (e.g., ball release in juggling; Beek, 1989) and with externally controlled events (e.g., the tones of an auditory pacing signal; Fink et al., 2000). When auditory feedback is present during ABS, the feedback tones triggered by taps may serve as self-generated anchoring events. Anchors linked to extrinsic acoustic information may facilitate coordination stability by enabling the actor to assess more precisely any deviations from the temporal goals because auditory event timing is perceived more accurately than visual event timing and more rapidly than tactile event timing (see Aschersleben, 2002; Grondin, 1993; Kolers & Brewster, 1985; Repp & Penel, 2002). The differences in ABS thresholds that we observed with different types of auditory feedback suggest that this facilitation varies with the type of information available at anchor points: Some anchors may be more secure than others.

Integrated task-goal representations may also serve to balance the asymmetry between coupling strength in the intra-personal and the inter-agent collective (which may be especially large during ABS to the extent that intra-personal coupling weakens inter-agent coupling by diverting attentional resources). We speculate that the use of an auditory locus of control may encourage attention to be allocated evenly to the individual’s actions (or, more specifically, their auditory effects) and the pacing signal. Our finding that performance was clearly better when the pitch separation between feedback tones and pacing tones was small than when it was large suggests that such allocation may be affected by the degree to which auditory information from separate sources can be integrated into a single Gestalt (cf. Klapp et al., 1985; Jagacinski et al., 1988; Summers et al., 1993). Readily integrable information could lead to an overall reduction in attentional demands by allowing the dual task (maintaining two levels of antiphase coordination) to be conceptualized as a simpler single task (producing a coherent sequence of pitches). Converging evidence that the complexity of task-goal representations influences coordination stability was found in a recent study that examined the temporal coupling of vocal responses with spatially symmetrical and asymmetrical bimanual movements (Spencer, Semjen, Yang, & Ivry, 2006).

Importantly, however, integrated goal representations stabilised ABS only up to a point in our study. Performance was poorer when pacing and feedback tones were identical (hence perfectly integrable) than when feedback tones were close, but different, in pitch to the pacing tones. Our findings suggest that this may have been the case because multilevel coordination stability was affected by the degree of structural congruence between auditory goal representations and their associated movement patterns.

Structural Congruence

Two types of structural congruence can be distinguished, one related to the concept of ‘set-level compatibility’ and the other to ‘element-level compatibility’ from the stimulus-response compatibility literature (Kornblum, 1992; Kornblum, Hasbroucq, & Osman, 1990).

In the case of ABS, set-level compatibility refers to the degree to which properties of the set of elements that comprise the auditory goal match properties of the set of elements comprising the movement pattern. In the current conception, the number of distinct elements in each set is a property that determines set-level compatibility.5 This form of compatibility can account for why ABS was more stable when the left and right hands produced different pitches than when they produced the same pitch (when feedback tones were close in pitch to the pacing tones). Set-level compatibility is high to the extent that movement patterns and auditory goals have the same number of distinct elements. The set of features for the ABS movement pattern includes three elements (left-hand taps, right-hand taps, and rests). When the two hands produce different pitches, the auditory goal set (left-hand pitch, right-hand pitch, and pacing tone pitch) includes the same number of elements as the movement pattern set, whereas there is one fewer element in the auditory goal set than in the movement pattern set when the hands produce the same pitch. This difference in number of elements across sets precludes the formation of unique associations (i.e., one-to-one mappings) between individual movement pattern elements and auditory goal elements. As a consequence, the efficiency of processes based on associative learning, such as the serial-chaining and chunking involved in sequential action (see Greenwald, 1970; Keele, Ivry, Mayr, Hazeltine, & Heuer, 2003; Stöcker & Hoffmann, 2004; Ziessler, 1998), could decrease.
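The element-counting argument can be made concrete with a short sketch. It assumes, as in the present conception, that set-level compatibility depends only on the number of distinct elements in each set. The function name `set_level_compatible` and the pitch labels are hypothetical placeholders introduced for illustration.

```python
def set_level_compatible(movement_elements, auditory_elements):
    """True when both sets contain the same number of distinct elements,
    so that a one-to-one mapping between them is possible."""
    return len(set(movement_elements)) == len(set(auditory_elements))


# The three elements of the ABS movement pattern named in the text.
MOVEMENT = ["left-hand tap", "right-hand tap", "rest"]

# Auditory goal sets, listed as (left-hand pitch, right-hand pitch, pacing tone pitch).
# The pitch labels are placeholders, not the actual stimulus values.
different_pitches = ["low", "high", "medium"]
same_pitch = ["low", "low", "medium"]
identical = ["medium", "medium", "medium"]

for label, goal in [
    ("hands differ", different_pitches),
    ("hands same", same_pitch),
    ("all identical", identical),
]:
    print(label, len(set(goal)), set_level_compatible(MOVEMENT, goal))
# hands differ 3 True
# hands same 2 False
# all identical 1 False
```

On this simple count, only the case in which the two hands trigger different pitches allows each movement element to be paired with its own auditory element.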

Set-level compatibility is lowest when feedback tones and pacing tones are identical in pitch. Such identity may blur distinctions both within and between events at the intra-personal and inter-agent levels, making it more difficult for the actor to keep track of their location in sequential pattern structure than when feedback and pacing tones are distinctive. It is also conceivable that the benefits of integrated goal representations are counteracted in the case of identical feedback and pacing tones due to confusion in the attribution of agency, specifically, the process of distinguishing the effects of one’s own actions from those of another agent (see Repp & Knoblich, 2007b).

Element-level compatibility refers to how the elements are mapped from one set to the other. In sequential tasks such as ABS, element-level compatibility is determined by the mapping of transitional relations between elements; for example, whether a left-to-right transition in the movement pattern is associated with a low-to-high or a high-to-low transition in the auditory goal. Auditory goals and movement patterns can be compatible at the element level only if they are compatible at the set level. Element-level compatibility can account for why—in conditions where the hands produced different tones that were close in pitch to the pacing tones—ABS was more stable when the feedback-to-hand mapping was compatible than when it was incompatible with the mapping found on the piano.
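A minimal sketch of this transitional mapping is given below. It assumes that hand positions and pitch heights can each be coded on a simple ordinal scale; the names `HAND_POSITION`, `PITCH_HEIGHT`, and `element_level_compatible` are hypothetical and serve only to illustrate the idea.

```python
# Ordinal codes: hands from left to right, pitches from low to high
# (the same ordering as the keys on a piano keyboard).
HAND_POSITION = {"left": 0, "right": 1}
PITCH_HEIGHT = {"low": 0, "high": 1}


def element_level_compatible(left_pitch, right_pitch):
    """Compare the direction of the hand transition with the direction of the pitch transition.

    Returns None when both hands trigger the same pitch: without set-level
    compatibility there is no pitch transition to map onto the hand transition.
    """
    if left_pitch == right_pitch:
        return None
    hand_step = HAND_POSITION["right"] - HAND_POSITION["left"]         # left-to-right movement
    pitch_step = PITCH_HEIGHT[right_pitch] - PITCH_HEIGHT[left_pitch]  # accompanying pitch change
    return hand_step * pitch_step > 0  # True when both transitions run in the same direction


for left, right in [("low", "high"), ("high", "low"), ("low", "low")]:
    print(f"Near ({left}-{right}):", element_level_compatible(left, right))
# Near (low-high): True
# Near (high-low): False
# Near (low-low): None
```

The `None` result reflects the dependency described above: element-level compatibility is only defined when the sets are already compatible at the set level.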

Related effects of structural congruence on timing accuracy have been observed in studies that required auditory sequences to be produced by unimanual finger movements (e.g., Keller & Koch, 2006; Pfordresher, 2003, 2005). The focus of these earlier studies was on sequential planning, and the sequences contained patterns of transitions that were complex relative to the repeating left-right pattern in ABS. The current results are therefore interesting insofar as they suggest that the structural congruence between movement patterns and auditory goals can continue to play a role when sequential planning demands are relatively low. This may be due to the influence of structural congruence on online motor control processes in tasks where action coordination is challenging. One possibility is that the anchoring process mentioned earlier is most effective at promoting global stability when structural congruence is high. More generally, perception and action may be more tightly coupled—allowing more stable global coordination—when movement patterns and the ‘melodic’ contours of auditory goals are represented by a common set of transitional relations than when representations of two distinctive sets of transitional relations are simultaneously active (for related discussions, see Hoffmann, Stöcker, & Kunde, 2004; Hommel, Müsseler, Aschersleben, & Prinz, 2001; Klapp, Porter-Graham, & Hoifjeld, 1991; Koch, Keller, & Prinz, 2004; Pfordresher, 2006). It may be the case that the concurrent simulation of movements and auditory goals by internal models (see Wolpert & Ghahramani, 2000) runs more smoothly when they have a common transitional structure.

Finally, structural congruence between auditory goals and movement patterns may facilitate multilevel coordination by encouraging the use of hierarchical timing control structures such as metric frameworks. Metric frameworks are cognitive/motor schemas that comprise hierarchically arranged levels of pulsation, with pulses at the ‘beat level’ nested within those at the ‘bar level’ in simple integer ratios such as 2:1 (duple meter), 3:1 (triple), or 4:1 (quadruple) (see London, 2004). Auditory stimuli characterised by regular patterns of durations and pitches can induce metric frameworks in a listener by encouraging the perception of periodic accents (e.g., Hannon, Snyder, Eerola, & Krumhansl, 2004; Jones & Pfordresher, 1997; Povel & Essens, 1985). Multiple timekeepers/oscillations in the listener’s central nervous system may become coupled both internally with one another and externally with the multiple periodicities suggested by such accent structures (e.g., Large & Jones, 1999; Vorberg & Wing, 1996).

Numerous studies have shown that the use of meter-based hierarchical timing mechanisms promotes efficiency and accuracy in rhythm perception and production (e.g., Keller & Burnham, 2005; Povel & Essens, 1985). Of particular relevance to the current study, Keller and Repp (2005) found that musicians could counteract the tendency to fall into the in-phase mode during unimanual antiphase inter-agent coordination by engaging in regular phase resetting based on metric structure (which was induced either by physical accents in the pacing signal or by the instruction to imagine such accents when they were in fact absent). The regular pattern of pitch variation that results when the two hands produce different pitches during ABS may encourage the perception of periodic accents, and thereby lead to metric framework generation. This could stabilise performance by facilitating meter-based phase resetting (Keller & Repp, 2005) and/or by reducing variability due to the engagement of hierarchically coupled oscillators rather than a single isolated oscillator (see Patel, Iversen, Chen, & Repp, 2005). It should be noted that although these meter-based explanations may be able to account for the effects of set-level compatibility observed in the current study (i.e., greater stability when the two hands produced different pitches than when they produced the same pitch), they alone cannot account for the effect of element-level compatibility (i.e., greatest stability when the left hand produced low pitches and the right hand produced high pitches).
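The regularity of the pitch pattern can be illustrated with a brief sketch. It assumes an idealized ABS sequence in which pacing tones and feedback tones strictly alternate; the helper names `abs_tone_sequence` and `shortest_period` and the pitch labels are hypothetical. The sketch only measures how often the pitch pattern repeats; it makes no claim about how listeners actually perceive accents.

```python
def abs_tone_sequence(left_pitch, right_pitch, pacing_pitch="medium", cycles=4):
    """Interleave pacing tones with alternating left- and right-hand feedback tones."""
    sequence = []
    for _ in range(cycles):
        sequence += [pacing_pitch, left_pitch, pacing_pitch, right_pitch]
    return sequence


def shortest_period(sequence):
    """Smallest shift (in events) at which the sequence matches itself."""
    for period in range(1, len(sequence)):
        if all(sequence[i] == sequence[i + period] for i in range(len(sequence) - period)):
            return period
    return len(sequence)


print(shortest_period(abs_tone_sequence("low", "high")))       # 4: hands trigger different pitches
print(shortest_period(abs_tone_sequence("low", "low")))        # 2: hands trigger the same pitch
print(shortest_period(abs_tone_sequence("medium", "medium")))  # 1: feedback identical to pacing tones
```

When the hands trigger different pitches, the pattern repeats only every four events, that is, every two pacing tones. This added level of periodicity above the pacing-tone level is the kind of structure that could, in principle, support a duple metric framework.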

Conclusions

Integrated task-goal representations facilitate simultaneous intra-personal and inter-agent coordination most effectively when constraints related to the structural congruence between auditory goals and their associated movement patterns are not violated. Integrated representations and structural congruence may jointly stabilise multilevel coordination in various ways. The former may encourage the use of a common ‘disembodied’ locus of control, while the latter may promote efficient associative chaining mechanisms, tight perception-action coupling, and/or the recruitment of hierarchical timing mechanisms. The relationship between these potential explanations, and their relative importance, should be explored in future research.

Acknowledgements

We thank Kerstin Traeger, Nadine Seeger, and Frank Winkler for their help with running the experiment. We also thank Jeroen Smeets and two anonymous reviewers for their comments on a previous version of the manuscript. The research reported in this article was supported by the Max Planck Society and National Institutes of Health grants MH-51230 (awarded to Bruno Repp) and DC-03663 (awarded to Elliot Saltzman), as well as grant H01F-00729 from the Polish Ministry of Science and Higher Education.

Footnotes

1. The term ‘inter-agent’ is used rather than ‘inter-personal’ because many instances of coordination at this level, both in the laboratory (e.g., finger tapping in time with a metronome) and in the real world (synchronizing with computer-generated and/or recorded music), involve a human and a machine.

2. Given that movement-related brain areas have been implicated in covert synchronization with rhythmic stimuli (e.g., Grahn & Brett, 2007; Oullier, Jantzen, Steinberg, & Kelso, 2005; Schubotz, Friederici, & von Cramon, 2000), it is possible that actors imagine the movement of body parts other than the hands (e.g., vocal articulators) during these rests.

3. The criterion for statistical significance was set at α = .05 and the Greenhouse-Geisser correction was applied when the degrees of freedom numerator exceeded one (the reported p-values are corrected, but the reported degrees of freedom values are uncorrected) for all analyses reported in this article.

4. Average threshold estimates were positively correlated across the two sessions for these participants, r = .87, p < .01.

5. Note that set-level compatibility is determined by the overlap between the properties of a stimulus set and a response set in Kornblum’s (1992; Kornblum et al., 1990) models, while our discussion concerns the overlap between a set of responses (the movement pattern) and a mixed set of distal response effects and externally controlled stimuli (the auditory goals). Also note that it is arguable whether the number of elements within a set should be classed as a potential dimension on which the overlap between sets can be evaluated. Nevertheless, we consider our conception of set-level compatibility to have heuristic value in the interpretation of our results.

Contributor Information

Peter E. Keller, Max Planck Institute for Human Cognitive and Brain Sciences, Leipzig, Germany

Bruno H. Repp, Haskins Laboratories, New Haven, Connecticut

References

- Aschersleben G. Temporal control of movements in sensorimotor synchronization. Brain and Cognition. 2002;48:66–79. doi: 10.1006/brcg.2001.1304.

- Beek PJ. Juggling dynamics. Amsterdam: Free University Press; 1989.

- Bogaerts H, Buekers M, Zaal F, Swinnen SP. When visuo-motor incongruence aids motor performance: The effect of perceiving motion structures during transformed visual feedback on bimanual coordination. Behavioural Brain Research. 2003;138:45–57. doi: 10.1016/s0166-4328(02)00226-7.

- Bregman AS. Auditory scene analysis. Cambridge, MA: MIT Press; 1990.

- Byblow WD, Carson RG, Goodman D. Expressions of asymmetries and anchoring in bimanual coordination. Human Movement Science. 1994;13:3–28.

- Byblow WD, Chua R, Goodman D. Asymmetries in coupling dynamics of perception and action. Journal of Motor Behavior. 1995;27:123–137. doi: 10.1080/00222895.1995.9941705.

- Chua R, Weeks DJ. Dynamical explorations of compatibility in perception-action coupling. In: Hommel B, Prinz W, editors. Theoretical issues in stimulus-response compatibility. Amsterdam: Elsevier Science; 1997. pp. 373–398.

- Fink PW, Foo P, Jirsa VK, Kelso JAS. Local and global stabilization of coordination by sensory information. Experimental Brain Research. 2000;134:9–20. doi: 10.1007/s002210000439.

- Fraisse P, Ehrlich S. Note sur la possibilité de syncoper en fonction du tempo d’une cadence [Note on the possibility of syncopation as a function of sequence tempo]. L’Année Psychologique. 1955;55:61–65.

- Grahn JA, Brett M. Rhythm and beat perception in motor areas of the brain. Journal of Cognitive Neuroscience. 2007;19:893–906. doi: 10.1162/jocn.2007.19.5.893.

- Greenwald AG. Sensory feedback mechanisms in performance control: With special reference to the ideo-motor mechanism. Psychological Review. 1970;77:73–99. doi: 10.1037/h0028689.

- Grondin S. Duration discrimination of empty and filled intervals marked by auditory and visual signals. Perception & Psychophysics. 1993;54:383–394. doi: 10.3758/bf03205274.

- Haken H, Kelso JAS, Bunz H. A theoretical model of phase transitions in human hand movements. Biological Cybernetics. 1985;51:347–356. doi: 10.1007/BF00336922.

- Hannon EE, Snyder JS, Eerola T, Krumhansl CL. The role of melodic and temporal cues in perceiving musical meter. Journal of Experimental Psychology: Human Perception and Performance. 2004;30:956–974. doi: 10.1037/0096-1523.30.5.956.

- Hoffmann J, Stöcker C, Kunde W. Anticipatory control of actions. International Journal of Sport and Exercise Psychology. 2004;2:346–361.

- Hommel B, Müsseler J, Aschersleben G, Prinz W. The theory of event coding (TEC): A framework for perception and action. Behavioral & Brain Sciences. 2001;24:849–937. doi: 10.1017/s0140525x01000103.

- Jagacinski RJ, Marshburn E, Klapp ST, Jones MR. Tests of parallel versus integrated structure in polyrhythmic tapping. Journal of Motor Behavior. 1988;20:416–442. doi: 10.1080/00222895.1988.10735455.

- Jones MR, Pfordresher P. Tracking musical patterns using joint accent structure. Canadian Journal of Psychology. 1997;51:271–290.

- Keele S, Ivry R, Mayr U, Hazeltine E, Heuer H. The cognitive and neural architecture of sequence representation. Psychological Review. 2003;110:316–339. doi: 10.1037/0033-295x.110.2.316.

- Keller PE, Burnham DK. Musical meter in attention to multipart rhythm. Music Perception. 2005;22:629–661.

- Keller PE, Koch I. The planning and execution of short auditory sequences. Psychonomic Bulletin & Review. 2006;13:711–716. doi: 10.3758/bf03193985.

- Keller PE, Repp BH. When two limbs are weaker than one: Sensorimotor syncopation with alternating hands. Quarterly Journal of Experimental Psychology. 2004;57A:1085–1101. doi: 10.1080/02724980343000693.

- Keller PE, Repp BH. Staying offbeat: Sensorimotor syncopation with structured and unstructured auditory sequences. Psychological Research. 2005;69:292–309. doi: 10.1007/s00426-004-0182-9.

- Kelso JAS. Phase transitions and critical behavior in human bimanual coordination. American Journal of Physiology: Regulatory, Integrative, and Comparative Physiology. 1984;15:R1000–R1004. doi: 10.1152/ajpregu.1984.246.6.R1000.

- Kelso JAS. Dynamic patterns: The self-organization of brain and behavior. Cambridge, MA: MIT Press; 1995.

- Kelso JAS, DelColle JD, Schöner G. Action-perception as a pattern formation process. In: Jeannerod M, editor. Attention and performance XIII. Hillsdale, NJ: Erlbaum; 1990. pp. 139–169.

- Kelso JAS, Scholz JP, Schöner G. Nonequilibrium phase transitions in coordinated biological motion: Critical fluctuations. Physics Letters A. 1986;118:279–284.

- Klapp ST, Hill MD, Tyler JG, Martin ZE, Jagacinski RJ, Jones MR. On marching to two different drummers: Perceptual aspects of the difficulties. Journal of Experimental Psychology: Human Perception and Performance. 1985;11:814–827. doi: 10.1037//0096-1523.11.6.814.

- Klapp ST, Porter-Graham KA, Hoifjeld AR. The relation of perception and motor action: Ideomotor compatibility and interference in divided attention. Journal of Motor Behavior. 1991;23:155–162. doi: 10.1080/00222895.1991.10118359.

- Koch I, Keller PE, Prinz W. The ideomotor approach to action control: Implications for skilled performance. International Journal of Sport and Exercise Psychology. 2004;2:362–375.

- Kolers PA, Brewster JM. Rhythms and responses. Journal of Experimental Psychology: Human Perception and Performance. 1985;11:150–167. doi: 10.1037//0096-1523.11.2.150.

- Kornblum S. Dimensional relevance and dimensional overlap in stimulus-stimulus, and stimulus-response compatibility. In: Stelmach G, Requin J, editors. Tutorials in Motor Behavior II. Amsterdam: North-Holland; 1992. pp. 743–777.

- Kornblum S, Hasbroucq T, Osman A. Dimensional overlap: Cognitive basis for stimulus-response compatibility: A model and taxonomy. Psychological Review. 1990;97:253–270. doi: 10.1037/0033-295x.97.2.253.

- Large EW, Jones MR. The dynamics of attending: How we track time-varying events. Psychological Review. 1999;106:119–159.

- Lidji P, Kolinsky R, Lochy A, Karnas D, Morais J. Spatial associations for musical stimuli: A piano in the head? Journal of Experimental Psychology: Human Perception and Performance. 2007;33:1189–1207. doi: 10.1037/0096-1523.33.5.1189.

- London J. Hearing in time: Psychological aspects of musical meter. Oxford: Oxford University Press; 2004.

- Mayville JM, Jantzen KJ, Fuchs A, Steinberg FL, Kelso JAS. Cortical and subcortical networks underlying syncopated and synchronized coordination revealed using fMRI. Human Brain Mapping. 2002;17:214–229. doi: 10.1002/hbm.10065.

- Mechsner F. A perceptual-cognitive approach to bimanual coordination. In: Jirsa VK, Kelso JAS, editors. Coordination dynamics: issues and trends. Berlin: Springer-Verlag; 2004. pp. 177–195.

- Mechsner F, Kerzel D, Knoblich G, Prinz W. Perceptual basis of bimanual coordination. Nature. 2001;414:69–73. doi: 10.1038/35102060.

- Oldfield RC. The assessment and analysis of handedness: The Edinburgh inventory. Neuropsychologia. 1971;9:97–113. doi: 10.1016/0028-3932(71)90067-4.

- Oullier O, Jantzen KJ, Steinberg FL, Kelso JAS. Neural substrates of real and imagined sensorimotor coordination. Cerebral Cortex. 2005;15:975–985. doi: 10.1093/cercor/bhh198.

- Patel AD, Iversen JR, Chen Y, Repp BH. The influence of metricality and modality on synchronization with a beat. Experimental Brain Research. 2005;163:226–238. doi: 10.1007/s00221-004-2159-8.

- Pfordresher PQ. Auditory feedback in music performance: Evidence for a dissociation of sequencing and timing. Journal of Experimental Psychology: Human Perception and Performance. 2003;29:949–964. doi: 10.1037/0096-1523.29.5.949.

- Pfordresher PQ. Auditory feedback in music performance: The role of melodic structure and musical skill. Journal of Experimental Psychology: Human Perception and Performance. 2005;31:1331–1345. doi: 10.1037/0096-1523.31.6.1331.

- Pfordresher PQ. Coordination of perception and action in music performance. Advances in Cognitive Psychology. 2006;2:183–198.

- Povel DJ, Essens PJ. Perception of temporal patterns. Music Perception. 1985;2:411–441. doi: 10.3758/bf03207132.

- Repp BH, Knoblich G. Action can affect auditory perception. Psychological Science. 2007a;18:6–7. doi: 10.1111/j.1467-9280.2007.01839.x.

- Repp BH, Knoblich G. Toward a psychophysics of agency: Detecting gain and loss of control over auditory action effects. Journal of Experimental Psychology: Human Perception and Performance. 2007b;33:469–482. doi: 10.1037/0096-1523.33.2.469.

- Repp BH, Penel A. Auditory dominance in temporal processing: New evidence from synchronization with simultaneous visual and auditory sequences. Journal of Experimental Psychology: Human Perception and Performance. 2002;28:1085–1099.

- Roerdink M, Ophoff ED, Peper CE, Beek PJ. Visual and musculoskeletal underpinnings of anchoring in rhythmic visuo-motor tracking. Experimental Brain Research. 2008;184:143–156. doi: 10.1007/s00221-007-1085-y.

- Roerdink M, Peper CE, Beek PJ. Effects of correct and transformed visual feedback on rhythmic visuo-motor tracking: Tracking performance and visual search behavior. Human Movement Science. 2005;24:379–402. doi: 10.1016/j.humov.2005.06.007.

- Rusconi E, Kwan B, Giordano BL, Umiltà C, Butterworth B. Spatial representation of pitch height: the SMARC effect. Cognition. 2006;99:113–129. doi: 10.1016/j.cognition.2005.01.004.

- Schmidt RC, Bienvenu M, Fitzpatrick PA, Amazeen PG. A comparison of within- and between-person coordination: Coordination breakdowns and coupling strength. Journal of Experimental Psychology: Human Perception and Performance. 1998;24:884–900. doi: 10.1037//0096-1523.24.3.884.

- Schmidt RC, Carello C, Turvey MT. Phase transitions and critical fluctuations in the visual coordination of rhythmic movements between people. Journal of Experimental Psychology: Human Perception and Performance. 1990;16:227–247. doi: 10.1037//0096-1523.16.2.227.

- Schubotz RI, Friederici AD, von Cramon DY. Time perception and motor timing: a common cortical and subcortical basis revealed by event-related fMRI. NeuroImage. 2000;11:1–12. doi: 10.1006/nimg.1999.0514.

- Spencer RM, Semjen A, Yang S, Ivry RB. An event-based account of coordination stability. Psychonomic Bulletin & Review. 2006;13:702–710. doi: 10.3758/bf03193984.

- Stöcker C, Hoffmann J. The ideomotor principle and motor sequence acquisition: Tone effects facilitate movement chunking. Psychological Research. 2004;68:126–137. doi: 10.1007/s00426-003-0150-9.

- Summers JJ. Practice and training in bimanual coordination tasks: Strategies and constraints. Brain and Cognition. 2002;48:166–178. doi: 10.1006/brcg.2001.1311.

- Summers JJ, Todd JA, Kim YH. The influence of perceptual and motor factors on bimanual coordination in a polyrhythmic tapping task. Psychological Research. 1993;55:107–115. doi: 10.1007/BF00419641.

- Temprado J-J, Laurent M. Attentional load associated with performing and stabilizing a between-persons coordination of rhythmic limb movements. Acta Psychologica. 2004;115:1–16. doi: 10.1016/j.actpsy.2003.09.002.

- Temprado J-J, Zanone PG, Monno A, Laurent M. Attentional load associated with performing and stabilizing preferred bimanual patterns. Journal of Experimental Psychology: Human Perception and Performance. 1999;25:1579–1594.

- Turvey MT. Coordination. American Psychologist. 1990;45:938–953. doi: 10.1037//0003-066x.45.8.938.

- Vorberg D, Wing A. Modeling variability and dependence in timing. In: Heuer H, Keele SW, editors. Handbook of perception and action. vol. 2. London: Academic Press; 1996. pp. 181–262.

- Wolpert DM, Ghahramani Z. Computational principles of movement neuroscience. Nature Neuroscience. 2000;3:1212–1217. doi: 10.1038/81497.

- Wulf G, Prinz W. Directing attention to movement effects enhances learning: A review. Psychonomic Bulletin & Review. 2001;8:648–660. doi: 10.3758/bf03196201.

- Ziessler M. Response-effect learning as a major component of implicit serial learning. Journal of Experimental Psychology: Learning, Memory, and Cognition. 1998;24:962–978.