Abstract

Computer-assisted self-interview (CASI) questionnaires are being used with increased frequency to deliver surveys that previously were administered via self-administered paper-and-pencil questionnaires (SAQs). Although CASI may offer a number of advantages, an important consideration for researchers is the assessment modality's immediate and long-term costs. To facilitate researchers' choice between CASI and SAQ, this article provides theoretical cost models with specific parameters for comparing the costs for each assessment type. Utilizing these cost models, this study compared the cost effectiveness in a health behavior study in which both CASI (n = 100) and SAQ (n = 100) questionnaires were administered. Given the high initial costs, CASI was found to be less cost effective than SAQ for a single study. However, for studies with large sample sizes or when CASI software is to be used for multiple studies, CASI would be more cost effective and should be the assessment mode of choice.

In recent years, computer-assisted self-interview (CASI) software has grown in popularity as an alternative to paper-and-pencil self-administered questionnaires (SAQs) for use in collecting self-report data in psychological and behavioral research. CASI has been most widely adopted for projects involving assessment of sensitive behaviors or with stigmatized populations, including assessment of sexual risk behavior (e.g., Des Jarlais et al., 1999; Johnson et al., 2001; Kurth et al., 2004; Romer et al., 1997), psychiatric symptoms (e.g., Chinman, Young, Schell, Hassell, & Mintz, 2004; Epstein, Barker, & Kroutil, 2001), and substance use (e.g., Hallfors, Khatapoush, Kadushin, Watson, & Saxe, 2000; Turner et al., 1998; Webb, Zimet, Fortenberry, & Blythe, 1999). In addition, CASI has been used in experimental research to study cognitive functioning (e.g., Blais, Thompson, & Baranski, 2005; Günther, Schäfer, Holzner, & Kemmler, 2003) and the efficacy of computer-based instruction (e.g., Cumming & Elkins, 1996) and to conduct neuropsychological assessments (e.g., Davidson, Stevens, Goddard, Bilkey, & Bishara, 1987; White et al., 2003). Indeed, there is great potential for use of CASI to administer surveys assessing a range of self-report domains and for a multitude of experimental designs.

CASI presents individual questions visually to the participant on a computer screen. The respondent enters responses by using either a touch screen or a traditional keyboard. CASI software can also be programmed to present survey items, using digitally generated audio or recorded audio voice-overs. CASI digitally records participants' numeric responses, and the data are easily exported to most statistical software packages. Implementation of CASI requires either a desktop or a laptop computer and the purchase of CASI computer software. A number of CASI software packages are currently available on the market (e.g., Questionnaire Design Studio, BLAISE, and MediaLab). These software packages provide considerable flexibility in the design and presentation of questionnaires, and each offers unique features (for a review, see Batterham, Samreth, & Xue, 2005). CASI software programs often utilize user-friendly menus to program survey items, select question types, and add additional features.

CASI assessments afford a number of potential benefits over traditional paper-and-pencil SAQs. For complex surveys, computerized assessment reduces respondent burden through the use of automatic branching, range rules, and consistency checks (Erdman, Klein, & Greist, 1985; Metzger et al., 2000; Schroder, Carey, & Vanable, 2003; Turner et al., 1998). Relative to SAQ, CASI assessments may enhance the perception that information remains confidential, because individual responses are not easily viewed by research staff. As a result, CASI may also reduce participants' embarrassment and increase their willingness to disclose sensitive information, particularly for surveys that assess stigmatized or illicit behaviors (e.g., sexual behavior or illicit drug use; Erdman et al., 1985; Kurth, Spielberg, Rossini, & the U.A.W. Group, 2001; Paperny, Aono, Lehman, Hammar, & Risser, 1990). In low-literacy populations or for multi-lingual samples, the ability to incorporate audio prompts with CASI ameliorates literacy concerns that may affect data quality in alternate assessment modes, such as SAQ (Perlis, Des Jarlais, Friedman, Arasteh, & Turner, 2004; Schroder et al., 2003; Turner et al., 1998). In addition, CASI decreases the number of staff hours devoted to data entry and verification (Jennings, Lucenko, Malow, & Dévieux, 2002) and may improve data accuracy by reducing data entry errors and standardizing question administration (Metzger et al., 2000).

Although CASI offers a number of potential benefits to researchers and study participants, the cost of the CASI software and computer hardware needed to implement CASI may preclude its use in some research and clinical settings. Costs for the purchase of CASI software range from $625 to as high as $2,500 for a single license (depending on software sophistication and licensing specifics), and computer hardware purchases add additional expense. On the other hand, CASI-based data collection may yield considerable cost savings over time for some studies, because of increased administration efficiency, reduced duplication costs, and the elimination of staff time devoted to data entry. Although some have argued that CASI involves greater financial costs to administer, as compared with SAQ (Bloom, 1998), we are not aware of any studies that have systematically evaluated the relative costs associated with CASI versus SAQ. A comparative cost analysis would allow researchers to evaluate the difference in costs between these assessment types to determine the relative expenses for each approach with varying parameter considerations (Levin, 1983; Pearce, 1992). Such an analysis allows researchers to compare the initial fixed costs and the variable administrative costs associated with a given assessment type.

In the present study, we compared CASI and SAQ by analyzing the differences in fixed and variable costs associated with each assessment type, an approach known as comparative cost effectiveness analysis (e.g., Levin, 1983; Pearce, 1992). Because financial resources for conducting research are often scarce, this economic analysis provides valuable data for researchers who are considering allocating funds for CASI software and computer hardware. Using data gathered as part of a larger study on the impact of computerized assessments on the disclosure of health behaviors (Brown & Vanable, 2007), we first developed theoretical cost models with individual parameters for both SAQ and CASI. Using these cost models, we then compared CASI with SAQ as a method of collecting self-report data. Cost comparisons are presented first for this single study, which enrolled 200 participants. Next, the theoretical cost models are used to forecast the cost differential between CASI and SAQ when each of the following study parameters is altered: (1) the study's sample size is increased, and (2) CASI software and hardware are purchased for use with multiple studies. Given the high initial fixed costs associated with CASI, we predicted that CASI would be less cost effective than SAQ in the context of a single study. However, we hypothesized that CASI would be more cost effective than SAQ for studies involving larger sample sizes or when the software and computers are used for multiple studies.

METHOD

Theoretical Cost Effectiveness Models

In deciding between CASI and SAQ, a researcher weighs the costs and the benefits of the two survey methods. However, apart from financial costs, it is difficult to quantify the practical benefits (e.g., increased confidentiality) of one survey mode over another. Thus, for the present study, benefits between the two assessment modes were assumed to be held constant. On the basis of this assumption, our analytic approach examines the opportunity cost of using one method over the other, conditional on the benefits for both assessment types being equal (Levin, 1983; Pearce, 1992). As is shown in Equation 1, the opportunity cost, Z, of using CASI can be displayed as

$$
Z_{CASI} = TC_{CASI} - TC_{SAQ} \tag{1}
$$

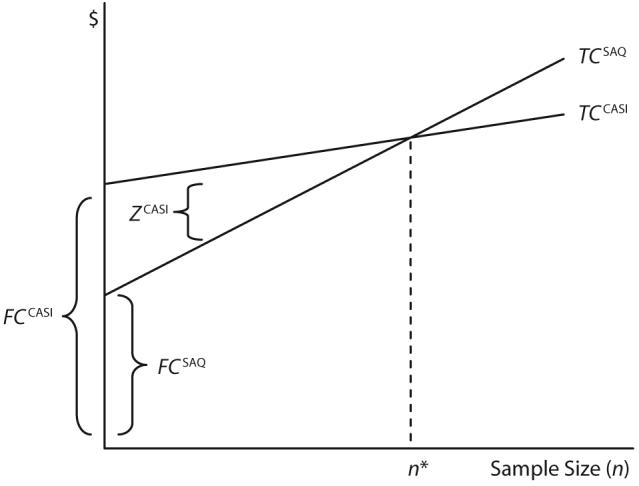

In this equation, the opportunity cost of using CASI (ZCASI) is equal to the total cost of using CASI (TCCASI) minus the total cost of using SAQ (TCSAQ). Thus, ZCASI indicates the total cost differential between the two assessment modalities. When ZCASI is greater than zero, this equation indicates that using SAQ would be the more cost-effective mode to implement in a study, relative to CASI. However, if, instead, ZCASI is less than zero, CASI would be the more cost-effective assessment type. When ZCASI is equal to zero, there is no cost difference between the survey methods. The relationship between ZCASI and the total cost of each survey method is displayed graphically in Figure 1. As is shown in Figure 1, n* represents the sample size at which the total costs between SAQ and CASI are equivalent.

Figure 1.

Theoretical total costs of self-administered questionnaires (SAQs) and computer-assisted self-interview (CASI) software and their relationship to ZCASI.

The total cost (TCSAQ, TCCASI) of a study can be decomposed into fixed and variable costs. Fixed costs (FC) are the one-time incurred expenses involved in conducting the study whenever the sample size is greater than zero (Colander, 2005). An example of a fixed cost for CASI is the purchase of a computer to administer the survey. In Figure 1, the fixed costs of SAQ and CASI are depicted as the y-intercept point. In contrast, variable costs (VC) vary directly as a function of key parameters, such as a study's sample size (Colander, 2005). An example of a variable cost for SAQ would be the copying expenses associated with duplicating each individual survey. Thus, variable costs determine the slope of the lines as shown in Figure 1.

Algebraic representations of the total costs, fixed costs, and variable costs for SAQ are as follows:

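$$
TC_{SAQ} = FC_{SAQ} + VC_{SAQ}, \tag{2}
$$

$$
FC_{SAQ} = W\left[P_{1}(q) + P_{2,SAQ}(q)\right] + I + S_{SAQ}, \tag{3}
$$

and

$$
VC_{SAQ} = W \cdot A_{SAQ}(q, n, t) + SP \cdot n + C \cdot n. \tag{4}
$$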

Fixed costs for SAQ, as shown in Equation 3, include the staff wages required to develop the survey and other indirect costs. The staff cost of labor hours (W) is the wage per hour for a researcher to develop the assessment battery. The wage is multiplied by the time taken to create the survey and draft questionnaire items [P1(q)] and the time taken to program the survey in a data entry software package [P2(q)], given the number of questions in the survey (q). Added to the wage costs are the total indirect costs (I) to administer the study. Indirect research costs include fees, such as room rental and electricity costs. Purchasing of data entry software (S) is also included in the SAQ fixed costs.

SAQ variable costs represented in Equation 4 include the amount paid to research assistants to administer the survey and costs associated with participant payments and survey duplication charges. The wage cost per hour for research assistants (W) is multiplied by the time taken to administer [A(q, n, t)], double enter, and check each survey for consistency for a given number of questions (q), number of study participants (n), and number of SAQ surveys that can be administered simultaneously (t). This quantity is added to the amount paid to the participants to compensate for their participation (SP), which is multiplied by the total number of participants (n). In addition, the price to copy each survey (C), multiplied by the number of participants (n), is added to the variable costs for SAQ.

The analogous equations for CASI total costs, fixed costs, and variable costs are

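$$
TC_{CASI} = FC_{CASI} + VC_{CASI}, \tag{5}
$$

$$
FC_{CASI} = W\left[P_{1}(q) + P_{2,CASI}(q)\right] + I + H_{CASI}(c) + S_{CASI}, \tag{6}
$$

and

$$
VC_{CASI} = W \cdot A_{CASI}(q, n, c) + SP \cdot n. \tag{7}
$$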

In addition to the SAQ fixed costs, CASI fixed costs (i.e., Equation 6) also include the cost to purchase c computers (HCASI) and the computer software (SCASI). CASI variable costs are the same as those for SAQ but do not include the copy charges needed to administer SAQ (C). In addition, note that the cost to administer each survey (ACASI) is now a function of the number of questions (q), sample size (n), and number of computers used (c). The administration times (A) for both SAQ and CASI are further described in Equations 9 and 10.

The major focus for this study is to measure Equation 1, which calculates the differences between the total costs of administering the two methods. In this sense, costs incurred regardless of the study assessment method are eliminated (e.g., participant payments, study's indirect costs). The equations above therefore can be reduced to

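$$
Z_{CASI} = W\left[P_{2,CASI}(q) - P_{2,SAQ}(q)\right] + H_{CASI}(c) + S_{CASI} - S_{SAQ} + W\left[A_{CASI}(q, n, c) - A_{SAQ}(q, n, t)\right] - C \cdot n. \tag{8}
$$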
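The cancellation behind Equation 8 can be written out as a short sketch. It assumes, as the text states, that the survey creation time P1(q), the indirect costs I, and the participant payments SP are identical for both modes; the subscripts on P2 that separate the SAQ and CASI programming times are notation assumed here for illustration.

$$
\begin{aligned}
Z_{CASI} &= \left(FC_{CASI} + VC_{CASI}\right) - \left(FC_{SAQ} + VC_{SAQ}\right) \\
&= \Big\{ W\left[P_{1}(q) + P_{2,CASI}(q)\right] + I + H_{CASI}(c) + S_{CASI} + W \cdot A_{CASI}(q, n, c) + SP \cdot n \Big\} \\
&\quad - \Big\{ W\left[P_{1}(q) + P_{2,SAQ}(q)\right] + I + S_{SAQ} + W \cdot A_{SAQ}(q, n, t) + SP \cdot n + C \cdot n \Big\}
\end{aligned}
$$

The terms W·P1(q), I, and SP·n appear in both braces and cancel, which leaves only the programming, hardware, software, administration, and copying terms of the reduced expression.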

If the functional forms for administering the survey are assumed to take the form

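$$
A_{SAQ}(q, n, t) = n\left[\frac{\gamma_{SAQ}(q)}{t} + \phi(q)\right] \tag{9}
$$

and

$$
A_{CASI}(q, n, c) = n\,\frac{\gamma_{CASI}(q)}{c}, \tag{10}
$$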

where γ is the amount of time it takes each participant to complete the survey, given q questions, and ϕ is the time necessary to double enter and check each survey for consistency. γ is divided by the number of SAQ surveys that can be administered at once (t), or for CASI, γ is divided by the number of computers simultaneously used (c). In addition, the cost per computer can be denoted as ψ, and the cost to copy each survey as σ, given the number of questions (q). Thus, the opportunity cost of CASI, as compared with that of SAQ, can be represented in Equation 11 below.

Methodology for Cost Effectiveness Analysis

The specific parameters utilized to perform this comparative cost effectiveness analysis are derived from data collected as a part of a survey study of health behaviors among college students. This study administered a questionnaire battery that assessed a range of health behaviors, including sexual risk behaviors, substance use, and attitudes of relevance to health behavior change (Brown & Vanable, 2007). In total, 27 separate measures made up the assessment battery, with a total of 514 individual questions (q). The time to create the survey and draft questionnaire items [P1(q)] was logged by the principal investigator. Identical surveys were administered via CASI (n = 100) and SAQ (n = 100) during individual assessments, with a sample of female college undergraduates. The participants were compensated $10 for their participation (SP).

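$$
Z_{CASI} = W\left[P_{2,CASI}(q) - P_{2,SAQ}(q)\right] + c\,\psi + S_{CASI} - S_{SAQ} + W n\left[\frac{\gamma_{CASI}(q)}{c} - \frac{\gamma_{SAQ}(q)}{t} - \phi(q)\right] - \sigma(q)\,n \tag{11}
$$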

For the SAQ version of the questionnaire, EpiData, a free software program available in the public domain, was used as the data entry platform (SSAQ; EpiData Software, 1999). The time to program EpiData, including the time to create variable names, variable labels, value labels, and appropriate range and skip rules, was logged [P2,SAQ(q)]. In addition, the questionnaire battery was copied for the SAQ administration [σ(q)]. To program CASI, the Questionnaire Design Studio was purchased and utilized (SCASI; Nova Research Company, 2004). The time necessary to program the CASI survey with all questions and features was recorded [P2,CASI(q)]. In order to run CASI and export the data, a software license and the Data Management Warehouse software program were also purchased (SCASI). For this study, the CASI was administered individually with one newly purchased desktop computer (ψ).

RESULTS

The results section will first detail the amounts for each of the individual cost parameters for SAQ and CASI in the survey study. Next, the opportunity costs for the health behavior survey study utilizing CASI and SAQ will be examined. Finally, projected costs will be calculated to compare the costs between SAQ and CASI when each of the following conditions is altered: (1) The sample size is increased, and (2) CASI is purchased for use with multiple studies.

Individual Cost Parameters

For modeling purposes, personnel costs for assisting with survey creation, software programming, and study administration were set at $15/h (W), or $0.25/min. The total time to create the survey and draft questionnaire items [P1(q)] to be used in both SAQ and CASI assessments was 25 h. The participants were compensated $10 for their participation (SP). For the SAQ version of the questionnaire, EpiData, a free software program, was used as the data entry platform (SSAQ). The time to program the data entry software for SAQ was 8 h [P2,SAQ(q)]. In addition, the questionnaire battery copies cost $120 for the SAQ administration [σ(q)], a cost of $1.20 per participant. The total survey administration time for the SAQ participants (n = 100) was 75.1 h, with an average administration time per participant of 45.1 min [γSAQ(q)]. In addition, the time to double enter SAQ data and conduct consistency checks was 33.5 h [ϕ(q)], an average of 20.1 min per participant. The software requirements for CASI, including the Questionnaire Design Studio, the CASI Data Collection Module, and the Data Warehouse Manager, totaled $1,300 (SCASI). The time necessary to program the CASI survey was 16.5 h [P2,CASI(q)]. One computer was purchased for this study at a cost of $1,300 (ψ). Survey administration time for CASI across all participants (n = 100) was equal to 75.9 h, with an average administration time per participant of 45.5 min [γCASI(q)]. The cost parameters for the health behavior study are summarized in Table 1.

Table 1.

Cost Parameters for Single Health Behavior Survey Study (nCASI = 100; nSAQ = 100)

| Parameter Description | Parameter Label | Time (min) | Cost ($) |
|---|---|---|---|
| Costs Associated With Both Assessment Modes | | | |
| Research staff wage per minute | W | – | 0.25 |
| Time to create survey | P1(q) | 1,500 | – |
| Payment per participant | SP | – | 10.00 |
| SAQ Costs | | | |
| SAQ data entry platform software | SSAQ | – | 0.00 |
| Program SAQ data entry platform | P2,SAQ(q) | 480 | – |
| SAQ copy charges per participant | σ(q) | – | 1.20 |
| SAQ administration time per participant | γSAQ(q) | 45.1 | – |
| SAQ data entry and verification per participant | ϕ(q) | 20.1 | – |
| CASI Costs | | | |
| Purchase of one computer | ψ | – | 1,300.00 |
| CASI software | SCASI | – | 1,300.00 |
| Program CASI software | P2,CASI(q) | 990 | – |
| CASI administration time per participant | γCASI(q) | 45.5 | – |

Opportunity Costs for CASI Versus SAQ for a Single Health Behavior Survey Study (N = 200)

Using the individual cost parameters, we compared the costs for administering the survey via CASI and SAQ by utilizing Equation 11 (see equation below). For illustrative purposes, the equation below includes the actual parameter values used to complete the calculations for the health behavior study we conducted. Solving for ZCASI, the opportunity cost was equal to $2,115. As was predicted, this result indicates that SAQ with a sample size of n = 100 and other defined study parameters was more cost effective, relative to CASI with a comparable sample size of n = 100.
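The substitution described above can be written out as follows. This is a reconstruction rather than the authors' original display: it assumes the form of Equation 11 given by the parameter definitions in the Method section, one computer (c = 1), and individual SAQ administration (t = 1), with times in minutes and the wage in dollars per minute from Table 1.

$$
\begin{aligned}
Z_{CASI} &= 0.25\,(990 - 480) + (1)(1{,}300) + 1{,}300 - 0 + 0.25\,(100)\left[\frac{45.5}{1} - \frac{45.1}{1} - 20.1\right] - 1.20\,(100) \\
&= 127.50 + 2{,}600 - 492.50 - 120 \\
&= 2{,}115
\end{aligned}
$$

The first two groups of terms are the extra fixed costs of CASI (additional programming time, the computer, and the software); the last two are the SAQ costs that CASI avoids (data entry time and copying), net of the slightly longer CASI administration time.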

Projected Costs for CASI Versus SAQ With Varying Cost Parameters

Using the estimates above, we can linearly extrapolate the impact of increasing sample size and other relevant study parameters on the relative costs associated with each assessment mode. In addition, the cost models can be utilized to determine the conditions under which CASI is more cost effective than SAQ. For illustrative purposes, we examined the impact of two hypothetical study scenarios, varying the cost parameters to compare the cost of utilizing SAQ with the cost for CASI.
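The linear extrapolation described here can be sketched in a few lines of Python. This is a minimal illustration, not the authors' code: the class and function names are hypothetical, the default values are taken from Table 1, and the cost differential follows the parameter definitions given for Equation 11, with one computer (c = 1) and individual SAQ administration (t = 1) assumed.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CostParams:
    """Cost parameters; defaults are the Table 1 values (minutes and dollars)."""
    wage_per_min: float = 0.25        # W
    program_saq_min: float = 480.0    # time to program the SAQ data entry platform
    program_casi_min: float = 990.0   # time to program the CASI survey
    software_saq: float = 0.0         # S_SAQ (EpiData is free)
    software_casi: float = 1300.0     # S_CASI
    computer_cost: float = 1300.0     # psi, cost per computer
    computers: int = 1                # c (assumed: one computer, as in the study)
    saq_at_once: int = 1              # t (assumed: individual administration)
    admin_saq_min: float = 45.1       # gamma_SAQ(q)
    admin_casi_min: float = 45.5      # gamma_CASI(q)
    entry_saq_min: float = 20.1       # phi(q), double entry and checking
    copy_cost: float = 1.20           # sigma(q), copying cost per participant


def opportunity_cost(n: int, p: CostParams = CostParams()) -> float:
    """Z_CASI = TC_CASI - TC_SAQ for n participants per mode (positive favors SAQ)."""
    # Extra fixed costs of CASI: programming time difference, hardware, software.
    fixed = (p.wage_per_min * (p.program_casi_min - p.program_saq_min)
             + p.computers * p.computer_cost
             + p.software_casi - p.software_saq)
    # Per-participant difference: staff time for administration and data entry,
    # minus the copying cost that only SAQ incurs.
    per_participant = (p.wage_per_min * (p.admin_casi_min / p.computers
                                         - p.admin_saq_min / p.saq_at_once
                                         - p.entry_saq_min)
                       - p.copy_cost)
    return fixed + per_participant * n


if __name__ == "__main__":
    for n in (100, 1000):
        print(f"n = {n:>4}: Z_CASI = {opportunity_cost(n):.2f}")
```

With the default parameters, the sketch gives Z_CASI = 2115.00 for n = 100, matching the single-study result, and a negative value for n = 1000, the direction expected for large samples. Other scenarios can be explored by constructing `CostParams` with different values, for example `CostParams(computers=2)`.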

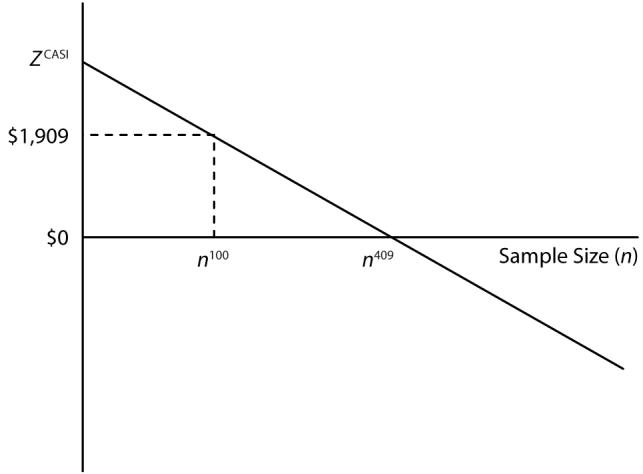

Variations in sample size

Using the comparative cost model shown in Equation 11, one can solve for the sample size (n) at which CASI is more cost effective, relative to SAQ, holding all other parameters of the conducted study constant. As is shown in Figure 1, as the sample size (n) of a study increases, the total cost differential between CASI and SAQ decreases until, eventually, CASI becomes the more cost-effective assessment option. Thus, solving Equation 11 for n, the results indicate that for studies with a sample size greater than n = 409, CASI would be more cost effective than SAQ. As is depicted in Figure 2, with study parameters including a sample size of n = 100, ZCASI equals $2,115; however, with a sample size greater than 409, ZCASI is negative, indicating that CASI would be more cost effective for studies that involved enrollment of more than 409 participants.

Figure 2.

Estimated opportunity cost (Z) of using computer-assisted self-interview software versus self-administered questionnaires as a function of sample size (n).

Use of CASI software across multiple studies

When CASI software is purchased for multiple research projects, the fixed costs of computers and CASI software are spread across studies. Using the parameters from the study conducted, we examined how many studies with similar parameters (e.g., sample size, survey length) would need to be conducted for CASI to be the most cost-effective assessment method. Using Equation 11, we kept all of the cost parameters as they were in the survey study and evaluated the number of studies required for ZCASI to be less than zero, using Equation 12 below. Thus, this simulates when the software and computer hardware are purchased to work with multiple studies. Solving for “studies,” CASI would be the most cost-effective survey method if used for at least six studies. By using CASI for several studies, the impact of the initial purchasing cost of the software is reduced, and CASI will, therefore, be the most cost-effective assessment type given higher variable costs to administer SAQ.
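The multiple-study scenario can be sketched the same way. The code below is an illustration under stated assumptions rather than the authors' calculation: only the computer and CASI software purchases are divided by the number of studies, programming time is incurred again in every study, each study enrolls 100 participants per mode, and the function names are hypothetical. All values come from Table 1.

```python
# Table 1 values (times in minutes, costs in dollars).
W = 0.25                                   # staff wage per minute
PROGRAM_SAQ, PROGRAM_CASI = 480, 990       # programming time per study
SOFTWARE_SAQ, SOFTWARE_CASI = 0.0, 1300.0  # software purchase costs
COMPUTER_COST, COMPUTERS = 1300.0, 1       # psi and c
ADMIN_SAQ, ADMIN_CASI, ENTRY_SAQ = 45.1, 45.5, 20.1
COPY_COST = 1.20                           # sigma(q) per participant
N = 100                                    # participants per mode in each study


def z_per_study(studies: int) -> float:
    """Per-study Z_CASI when hardware and CASI software are shared across studies.

    Assumption: only the computer and software purchases are spread across
    studies; programming and administration costs recur in each study.
    """
    shared = (COMPUTERS * COMPUTER_COST + SOFTWARE_CASI) / studies
    programming = W * (PROGRAM_CASI - PROGRAM_SAQ)
    variable = (W * N * (ADMIN_CASI / COMPUTERS - ADMIN_SAQ - ENTRY_SAQ)
                - COPY_COST * N)
    return programming + shared - SOFTWARE_SAQ + variable


def min_studies(limit: int = 100) -> int:
    """Smallest number of studies for which CASI is cheaper per study (Z < 0)."""
    for s in range(1, limit + 1):
        if z_per_study(s) < 0:
            return s
    raise ValueError("CASI never becomes cheaper within the search limit")


print(min_studies())  # 6
```

Under these assumptions the per-study differential is still positive with five studies and turns negative with six, in line with the result reported in the text.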

These two examples indicate that CASI can, indeed, be more cost effective than SAQ, depending on the study's parameters. When studies with large sample sizes (n > 409) are conducted, CASI will be a more cost-effective assessment modality. In addition, CASI software becomes more cost effective if it is to be purchased for multiple studies as part of a larger research program. Thus, one can see that the particular study parameters and long-term research needs will significantly affect the extent to which CASI is cost effective and should be considered before an assessment modality is selected.

DISCUSSION

Researchers and clinicians must often consider and justify the costs and benefits associated with administering an assessment battery. Some have suggested that CASI may be more costly to implement (e.g., Bloom, 1998), but to date, there have been no systematic investigations to compare the costs associated with administering CASI with those for SAQ. Cost effectiveness analysis allows the costs to be compared between the two alternatives, utilizing a comparative analysis of fixed and variable costs associated with each assessment type (Levin, 1983; Pearce, 1992). This approach allows the opportunity costs between two options to be evaluated, holding the benefits of each option constant, since benefits, such as ease of survey completion, are difficult to quantify (Pearce, 1992). This article has developed theoretical cost models detailing the cost parameters specific to each of the two assessment types. Cost models were then utilized to evaluate the cost effectiveness of CASI and SAQ in a study in which both versions of a health behavior survey were administered. Projected cost analyses also illustrated two conditions under which CASI would be more cost effective than SAQ.

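$$
Z_{CASI} = W\left[P_{2,CASI}(q) - P_{2,SAQ}(q)\right] + \frac{c\,\psi + S_{CASI}}{\text{studies}} - S_{SAQ} + W n\left[\frac{\gamma_{CASI}(q)}{c} - \frac{\gamma_{SAQ}(q)}{t} - \phi(q)\right] - \sigma(q)\,n \tag{12}
$$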

For the cost effectiveness analysis in this health behavior survey study, it was hypothesized that CASI would be less cost effective than SAQ, given the relatively small sample size and the fact that initial start-up costs associated with CASI were high. Indeed, the software costs and computer hardware purchase increased CASI's fixed costs to approximately six times that of SAQ. However, variable costs between CASI and SAQ were comparable, with CASI costing slightly less per participant to administer. Overall, the differences in costs between CASI and SAQ indicated that SAQ was the most cost-effective modality for use in this single study.

Cost data computed on the basis of our single survey study provide preliminary estimates that researchers can use when selecting an assessment format. For a single, relatively small study, the SAQ modality was the most cost effective. However, the projected cost models that manipulated specific study parameters suggest that in a variety of other research settings, CASI is a more economical choice. Data from these models indicate several conditions in which CASI is more cost effective than SAQ. Indeed, CASI will be particularly cost effective for studies with large sample sizes, since the staff costs of SAQ data entry are eliminated. In addition, when computer and CASI software purchases are to be used for multiple studies, CASI will be the most cost-effective assessment modality, because the impact of the high initial fixed costs is distributed across multiple investigations.

As is shown in the development of the theoretical cost models, there are a number of factors that affect the total cost associated with implementing a particular assessment type. This article has outlined the important cost considerations when an assessment modality is selected and is the first to describe a comparative cost effectiveness analysis between the assessment modes of SAQ and CASI. Researchers should utilize these cost models to evaluate the economic utility of selecting either CASI or SAQ for future research studies. As is shown in the projected cost examples, the effect of altering one or multiple parameters alters the opportunity costs associated with selecting the assessment mode. As a result, researchers should carefully consider all relevant cost parameters before deciding whether to adopt a computerized assessment approach or stay with paper-and-pencil surveys.

The models presented here highlight important cost parameters to consider when one decides between CASI and SAQ. Researchers seeking to apply these cost models to their respective research programs may do so by tailoring the model parameters to fit with local circumstances and needs. Most of the model parameters can be estimated with little difficulty. To estimate the relative costs associated with CASI and SAQ, consideration must be given to such factors as the research design and number of studies that will be conducted, sample size, the number of assessments to be administered per participant (e.g., if follow-up assessments are included in the design), costs for participant payments, and duplication costs. Researchers should also verify the number of computers and the software available in their organization and should consider the cost implications if computers or software must be purchased. Finally, consideration should be given to the staffing needs and associated costs of the particular project and to the number of surveys that can be administered on a given occasion. After determining these parameter values, researchers should approximate the amount of time needed to administer the instrument and should consider the time needed to perform data entry and verification. Administration and data entry time can be determined by considering survey length and complexity, by applying the values provided for the study presented in this article, or through piloting the measure as a paper-and-pencil survey. In addition, researchers should approximate the time required to program CASI software or a SAQ data entry platform; these values can be estimated on the basis of the complexity of the assessment instrument and past programming experience. After approximating these parameters, researchers can then utilize Equation 11 to compare the costs associated with each assessment type. The resulting value of ZCASI provides an approximation of the relative costs of using each assessment mode. From there, researchers should determine whether there are any critical benefits of either assessment mode to consider, relative to the costs.

The cost effectiveness analyses presented in this article measure the difference in costs associated with each assessment modality, holding the benefits constant. For researchers seeking guidance regarding the choice between CASI and SAQ, consideration of the benefits of each assessment modality, relative to the needs of a specific research project, is also essential. For example, CASI is more engaging and interactive, reduces survey completion difficulty, incorporates the use of audio for low-literacy samples, and decreases the amount of time required to perform data entry and verification (e.g., Metzger et al., 2000; Paperny et al., 1990; Perlis et al., 2004; Schroder et al., 2003; Turner et al., 1998). In addition, CASI allows for the implementation of more complex survey instruments with additional features, such as random administration of question presentation order, capturing response time latencies, and incorporation of digital media, among others.

Although there are a number of potential benefits associated with CASI's use, SAQ may be preferable in some research contexts. For example, an advantage of SAQ is the ability to conduct large, mass administrations of a survey. SAQ may also be more appropriate for studies involving participants who have little experience and familiarity with computers or for individuals who have less trust in computer technology. For researchers, problems with computer reliability and the potential for data loss are reduced when SAQ is used. Thus, SAQ may be preferable for use in mass-testing sessions in which large quantities of data are to be collected in a short period of time, for samples with little computer experience, or in order to eliminate concerns about potential data loss related to hardware failure. Although our article has examined the costs associated with each assessment modality's use, researchers should also consider the extent to which the benefits of a particular survey format outweigh the costs.

This article has developed theoretical cost models to facilitate researchers' decisions regarding the use of CASI versus SAQ. Findings from our cost effectiveness analysis suggest that for a single study with a small sample size, SAQ will be the most cost effective. However, findings provide clear evidence that, over time, CASI is more cost effective than SAQ, particularly for studies with large sample sizes or when the software is already available. Therefore, for large trials or when CASI will be used for many studies, CASI will be the most cost effective and should be the assessment mode of choice. Given the technological benefits associated with CASI and the fact that this assessment mode is more cost effective in the long term, it appears that initial start-up costs for CASI software and hardware are well worth the money. Assuming that a given laboratory can afford these up-front costs and that there are no study-specific reasons to prefer SAQ, it is recommended that researchers utilize CASI as their assessment mode of choice when conducting self-report assessments.

Acknowledgments

This work was supported in part by NIMH Grant U01MH066794-01A2 and by funds from a research development grant from the Department of Psychology, Syracuse University. The authors thank Laura Gaskins, Lindsey Ross, Lien Tran, and Nicole Weiss for their assistance with data collection.

REFERENCES

- Batterham P, Samreth B, Xue R. Assessing computer-assisted interviewing software. 2005. Retrieved June 1, 2006, from chipts.ucla.edu/tempmat/broadsheet/Assessment.pdf.

- Blais A, Thompson MM, Baranski JV. Individual differences in decision processing and confidence judgments in comparative judgment tasks: The role of cognitive styles. Personality & Individual Differences. 2005;38:1701–1713.

- Bloom DE. Technology, experimentation, and the quality of survey data. Science. 1998;280:847–848. doi: 10.1126/science.280.5365.847.

- Brown JL, Vanable PA. The effects of assessment mode and privacy level on self-reports of sexual risk behaviors and substance use among young women. Poster presented at the annual meeting of the Society of Behavioral Medicine; Washington, DC. Mar 2007.

- Chinman M, Young AS, Schell T, Hassell J, Mintz J. Computer-assisted self-assessment in persons with severe mental illness. Journal of Clinical Psychiatry. 2004;65:1343–1351. doi: 10.4088/jcp.v65n1008.

- Colander DC. The firm: Production and cost. In: Miller RL, editor. Understanding modern economics. 5th ed. McGraw-Hill; New York: 2005. pp. 89–112.

- Cumming JJ, Elkins J. Stability of strategy use for addition facts: A training study and implications for instruction. Journal of Cognitive Education. 1996;5:101–116.

- Davidson OR, Stevens DE, Goddard GV, Bilkey DK, Bishara SN. The performance of a sample of traumatic head-injured patients on some novel computer-assisted neuropsychological tests. Applied Psychology. 1987;36:329–342.

- Des Jarlais DC, Paone D, Milliken J, Turner CF, Miller H, Gribble J, et al. Audio-computer interviewing to measure risk behaviour for HIV among injecting drug users: A quasi-randomised trial. Lancet. 1999;353:1657–1661. doi: 10.1016/s0140-6736(98)07026-3.

- EpiData Software. EpiData entry [Computer software]. EpiData Association; Odense, Denmark: 1999.

- Epstein JF, Barker PR, Kroutil LA. Mode effects in self-reported mental health data. Public Opinion Quarterly. 2001;65:529–549.

- Erdman HP, Klein MH, Greist JH. Direct patient computer interviewing. Journal of Consulting & Clinical Psychology. 1985;53:760–773. doi: 10.1037//0022-006x.53.6.760.

- Günther VK, Schäfer P, Holzner BJ, Kemmler GW. Long-term improvements in cognitive performance through computer-assisted cognitive training: A pilot study in a residential home for older people. Aging & Mental Health. 2003;7:200–206. doi: 10.1080/1360786031000101175.

- Hallfors D, Khatapoush S, Kadushin C, Watson K, Saxe L. A comparison of paper vs. computer-assisted self interview for school alcohol, tobacco, and other drug surveys. Evaluation & Program Planning. 2000;23:149–155.

- Jennings TE, Lucenko BA, Malow RM, Dévieux JG. Audio-CASI vs. interview method of administration of an HIV/STD risk of exposure screening instrument for teenagers. International Journal of STD & AIDS. 2002;13:781–784. doi: 10.1258/095646202320753754. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Johnson AM, Copas AJ, Erens B, Mandalia S, Fenton K, Korovessis C, et al. Effect of computer-assisted self-interviews on reporting of sexual HIV risk behaviours in a general population sample: A methodological experiment. AIDS. 2001;15:111–115. doi: 10.1097/00002030-200101050-00016. [DOI] [PubMed] [Google Scholar]

- Kurth AE, Martin DP, Golden MR, Weiss NS, Heagerty PJ, Spielberg F, et al. A comparison between audio computer-assisted self-interviews and clinician interviews for obtaining the sexual history. Sexually Transmitted Diseases. 2004;31:719–726. doi: 10.1097/01.olq.0000145855.36181.13. [DOI] [PubMed] [Google Scholar]

- Kurth AE, Spielberg F, Rossini A, the U.A.W. Group STD/HIV risk: What should we measure, and how should we measure it? International Journal of STD & AIDS. 2001;12(Suppl 2):171. [Google Scholar]

- Levin HM. Cost-effectiveness: A primer. Sage; Beverly Hills, CA: 1983. [Google Scholar]

- Metzger DS, Koblin B, Turner C, Navaline H, Valenti F, Holte S, et al. Randomized controlled trial of audio computer-assisted self-interviewing: Utility and acceptability in longitudinal studies. American Journal of Epidemiology. 2000;152:99–106. doi: 10.1093/aje/152.2.99. [DOI] [PubMed] [Google Scholar]

- Nova Research Company . Questionnaire Design Studio (Version 2.4) [Computer software] Author; Bethesda, MD: 2004. [Google Scholar]

- Paperny DM, Aono JY, Lehman RM, Hammar SL, Risser J. Computer-assisted detection and intervention in adolescent high-risk health behaviors. Journal of Pediatrics. 1990;116:456–462. doi: 10.1016/s0022-3476(05)82844-6. [DOI] [PubMed] [Google Scholar]

- Pearce DW, editor. The MIT dictionary of modern economics. 4th ed. MIT Press; Cambridge, MA: 1992. [Google Scholar]

- Perlis TE, Des Jarlais DC, Friedman SR, Arasteh K, Turner CF. Audio-computerized self-interviewing versus face-to-face interviewing for research data collection at drug abuse treatment programs. Addiction. 2004;99:885–896. doi: 10.1111/j.1360-0443.2004.00740.x. [DOI] [PubMed] [Google Scholar]

- Romer D, Hornik R, Stanton B, Black M, Li X, Ricardo I, Feigelman S. “Talking” computers: A reliable and private method to conduct interviews on sensitive topics with children. Journal of Sex Research. 1997;34:3–9. [Google Scholar]

- Schroder KE, Carey MP, Vanable PA. Methodological challenges in research on sexual risk behavior: Accuracy of self-reports. Annals of Behavioral Medicine. 2003;26:104–123. doi: 10.1207/s15324796abm2602_03. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Turner CF, Ku L, Rogers SM, Lindberg LD, Pleck JH, Sonenstein FL. Adolescent sexual behavior, drug use, and violence: Increased reporting with computer survey technology. Science. 1998;280:867–873. doi: 10.1126/science.280.5365.867. [DOI] [PubMed] [Google Scholar]

- Webb PM, Zimet GD, Fortenberry JD, Blythe MJ. Comparability of a computer-assisted versus written method for collecting health behavior information from adolescent patients. Journal of Adolescent Health. 1999;24:383–388. doi: 10.1016/s1054-139x(99)00005-1. [DOI] [PubMed] [Google Scholar]

- White RF, James KE, Vasterling JJ, Letz R, Marans K, Delaney R, et al. Neuropsychological screening for cognitive impairment using computer-assisted tasks. Assessment. 2003;10:86–101. doi: 10.1177/1073191102250185. [DOI] [PubMed] [Google Scholar]