Abstract

The contribution of past experiences to concurrent resurgence was investigated in three experiments. In Experiment 1, resurgence was related to the length of reinforcement history as well as the reinforcement schedule that previously maintained responding. Specifically, more resurgence occurred when key pecks had been reinforced on a variable-interval 1-min schedule than a variable-interval 6-min schedule, but this effect may have been due either to the differential reinforcement rates or differential response rates under the two schedules. When reinforcement rates were similar (Experiment 2), there was more resurgence of high-rate than low-rate responding. When response rates were similar (Experiment 3), resurgence was not related systematically to prior reinforcement rates. Taken together, these three experimental tests of concurrent resurgence illustrate that prior response rates are better predictors of resurgence than are prior reinforcement rates.

Keywords: resurgence, behavioral history, response recovery, concurrent schedule, pigeons, key peck

The recurrence of previously reinforced responding when more recently reinforced responses are no longer effective, or resurgence (Epstein, 1985), typically is studied using a sequence of three conditions. In the first (hereafter, reinforcement) condition, one operant (A) is reinforced. In the second (hereafter, elimination) condition, A is extinguished and/or an alternative operant (B) is reinforced. In the third (hereafter, resurgence) condition, B no longer produces the same quantity or quality of reinforcers (e.g., extinction is introduced or the consequences for A are changed). Operationally, any increase in A during the resurgence condition relative to the elimination condition is labeled the resurgence of A.

Resurgence has been demonstrated using various types of responses, such as those that were respondently conditioned (Epstein & Skinner, 1980) or a product of self-generated rules (Dixon & Hayes, 1998), yet the controlling variables of resurgence remain largely unstudied. Several experimental analyses of resurgence have assessed effects of variables in the elimination and resurgence conditions (e.g., Doughty, da Silva, & Lattal, 2007; Hemmes, Brown, Jakubow, & de Vaca, 1997; Lieving & Lattal, 2003; Pacitti & Smith, 1977), but the contribution of variables in the reinforcement condition to resurgence has received relatively less attention. In two groups of rats, Carey (1951) assessed rats' bar-pressing under extinction following reinforcement of single and double responses. Only one bar press comprised the single response requirement, but two bar presses with an interresponse time (IRT) ≤ 0.25 s were required for each double response. Treatment of the rats in each group differed according to the order of the conditions. Single responses were reinforced for the rats in one group whereas double responses were reinforced for the rats in the other group and, after 24 sessions, the reinforcement requirements for the two groups were reversed and remained in effect for another 24 days. Extinction then was introduced for rats in both groups. Initially, the IRTs resembled those reinforced immediately prior to extinction; but thereafter, the initially reinforced IRTs increased in number (i.e., the prior IRTs resurged; see also Lindblom & Jenkins, 1981, Experiment 4). Reed and Morgan (2007) systematically replicated Carey's findings by examining resurgence in each of two components of a multiple schedule as a function of schedules in effect during the reinforcement condition. 
Across two experiments, rats were exposed to a multiple random-ratio (RR) random-interval (RI) schedule (Experiment 1) or a multiple differential-reinforcement-of-high-rate behavior (DRH) differential-reinforcement-of-low-rate behavior (DRL) schedule (Experiment 2) before fixed-interval (FI) schedules were introduced in each component. After FI responding was stable, extinction was introduced to test for resurgence. In both experiments, more resurgence occurred in the component previously correlated with the schedule that produced relatively higher rates of responding during the reinforcement condition. Carey's findings and Reed and Morgan's findings suggest that resurgence occurs as an orderly function of variables at work in remote conditions and are consistent with other investigations of remote behavioral history effects (e.g., Barrett, 1977; Barrett & Stanley, 1983; Doughty et al., 2005).

Beyond the general paucity of assessments of resurgence as a function of prior contingencies, investigations of resurgence within a single context have been restricted primarily to analyses of the resurgence of only one target response. For example, Leitenberg, Rawson, and Bath (1970) reinforced rats' responses on one lever (Lever A) before extinguishing that response while reinforcing responses on a second lever (Lever B). When Lever B responding was extinguished, resurgence of Lever A responding occurred. This procedure, like other examples of resurgence, allows an assessment of different amounts of resurgence by varying parameters of the resurgence sequence across organisms or across repeated measures within organisms, but does not allow the effects of more than one variable on resurgence to be assessed within a single context.

In the only experiment that has assessed the resurgence of concurrent responses, Epstein (1983), using a concurrent schedule, compared the resurgence of key pecking that was previously reinforced and key pecking that had not been reinforced. Two keys were operative throughout Epstein's experiment. In the reinforcement condition, pecking on one of the two keys (Response A) was reinforced while pecking on the second key (Response B) was not. In a subsequent elimination condition, a response inconsistent with key pecking (Response C; e.g., wing flapping) was reinforced. In the resurgence condition, Response C no longer was reinforced. Relatively more resurgence of Response A than Response B was observed. Epstein's test of relative responding suggested that the resurgence of a response is related to its history of reinforcement. Although Epstein's findings reveal the importance of reinforcement in producing resurgence, they did not allow assessment of resurgence as a function of differential non-zero amounts of reinforcement. Carey's (1951) and Reed and Morgan's (2007) studies, furthermore, assessed relative resurgence of a single response between subjects in two different groups or in two different contexts (the two components of a multiple schedule) rather than two responses in a single context.

The recurrence of two concurrently available responses, or relative resurgence, has not been assessed when each of the responses was reinforced differently. In the three experiments comprising the present investigation, concurrent schedules were used to compare the relative resurgence of two responses as a function of variables in the reinforcement condition. After demonstrating relative resurgence in the first experiment, the subsequent experiments attempted to isolate the contributions of response and reinforcer rates, respectively, to such resurgent responding.

Experiment 1

Although the most common definition of resurgence is that it is the recurrence of a previously reinforced response (e.g., Epstein, 1985; but see Cleland, Guerin, Foster, & Temple, 2001), only Epstein (1983) examined the importance of the response having been reinforced for resurgence. His findings, described in the Introduction, raise the question of whether any history of reinforcement (e.g., any rate or schedule of reinforcement) for a response is sufficient to produce resurgence of that response, or whether resurgence occurs differently if the history of reinforcement is different. Perhaps, as in other studies of response recovery (e.g., Franks & Lattal, 1976), the extent of resurgence is related to the reinforcement parameters previously correlated with the response. The purpose of Experiment 1 was to determine whether concurrent resurgence is an orderly function of the prior history of reinforcement of a response. This was addressed by examining, first, the effects of the presence or absence of a stable history of reinforcement and, then, the effects of two different (past) reinforcement schedules on the resurgence of two responses under extinction.

Method

Subjects

Each of 3 experimentally naive White Carneau pigeons was maintained at 80% of its free-feeding weight by food obtained during the experimental session and provided by the experimenter immediately following the session. Water and health grit were freely available in the home cage where a 12-hr light∶12-hr dark cycle was maintained.

Apparatus

A sound-attenuating operant conditioning chamber (31 cm wide, 30 cm long, and 38 cm high) containing a brushed aluminum three-key work panel was used. Each of the side keys (2 cm diameter) was 5 cm from the side wall of the chamber, 10 cm from the center key (2.5 cm diameter), center to center, and 25 cm from the floor. The two side keys could be transilluminated white and the center key could be transilluminated red. General illumination was provided by a white houselight that was centered between the sides of the work panel and located 2.5 cm from the ceiling. A rectangular aperture (6 cm wide by 6.5 cm high) was located on the midline of the panel, 8 cm from the floor. The aperture provided access to mixed grain when a hopper was raised. A 28-V DC clear bulb illuminated the aperture and all other lights were dark during 3-s presentations of the hopper for reinforcer deliveries. A ventilation fan, located in the back right corner of the rear wall, and white noise delivered through a speaker, located in the lower left corner of the work panel, masked extraneous noise. Programming and data recording were controlled by a computer in an adjacent room using MED-PC® software and hardware (MED Associates, Inc. & Tatham, 1991).

Procedure

Pecking each of the three keys was autoshaped (Brown & Jenkins, 1968) for one session during which, across a series of trials, one of the keys was transilluminated following a randomly selected 80-, 90-, or 100-s intertrial interval (ITI). After 6 s or a key peck, whichever occurred first, the key was darkened and the hopper was raised for 3 s. The session lasted until 60 reinforcers were delivered. Each pigeon next was exposed to two sessions in which pecking on the red center key was reinforced according to a fixed-ratio (FR) schedule; the side keys were lit, but pecking them had no programmed effect. The FR value increased after 10 reinforcers were delivered at each of the following values: FR 3, FR 5, FR 10, and FR 15. In all conditions of Experiment 1, a white houselight was on throughout each session, except during reinforcer deliveries. Sessions occurred 6 days per week, and each lasted for 45 min. The schedule in effect in each condition and the number of sessions for which each pigeon was exposed to that schedule are shown in Table 1.

Table 1.

The Schedule and Number of Sessions in Which Each Pigeon Was Exposed to Each Condition of Experiment 1.

| Experiment | Condition | Left Key | Center Key | Right Key | Pigeon 126 | Pigeon 536 | Pigeon 418 |
| --- | --- | --- | --- | --- | --- | --- | --- |
| Exp 1a | Elimination | Extinction | VI 1 min | Extinction | 41 | 25 | 35 |
| | Resurgence | Extinction | Extinction | Extinction | 30 | 14 | 13 |
| Exp 1b | Reinforcement | VI 1 min | OFF | VI 6 min | 60 | 60 | |
| | | VI 6 min | OFF | VI 1 min | | | 65 |
| | Elimination | Extinction | VI 3 min | Extinction | 24 | 20 | 30 |
| | Resurgence | Extinction | Extinction | Extinction | 10 | 10 | 10 |
| | Reinforcement | VI 6 min | OFF | VI 1 min | | | 60 |
| | Elimination | Extinction | VI 3 min | Extinction | | | 45 |
| | Resurgence | Extinction | Extinction | Extinction | | | 15 |

Experiment 1a

Experiment 1a did not contain a reinforcement condition because it was designed to assess responding in the resurgence condition when there was no consistent history of reinforcement for responding on the two side keys (i.e., when pecking the side keys had been reinforced only during a single autoshaping session). In the elimination condition, a VI 1-min schedule was in effect on the illuminated center key while extinction continued on the two side keys, which also were illuminated throughout the session. All VI schedules in this and the two subsequent experiments were constructed according to the constant probability distribution described by Fleshler and Hoffman (1962). A 3-s changeover delay (COD) was in effect on the center key. In this and the two subsequent experiments, peck–peck CODs (Shahan & Lattal, 1998) were used such that, in this case, the first peck on the center key following a peck on either side key began a delay of 3 s. If no other pecks occurred on the side keys during this delay, a peck on the center key could be reinforced according to the programmed schedule. This first (elimination) condition was in effect for a minimum of 20 sessions and until there were no increasing or decreasing trends in response rates during the last 6 sessions as determined by visual inspection. Then, in the resurgence condition, all three keys were illuminated and extinction was in effect on each key. This condition was in effect for a minimum of 10 sessions and until 3 consecutive sessions occurred in which there was less than one response per min.
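The VI construction cited above can be sketched in code. The following is a minimal illustration of the commonly cited Fleshler and Hoffman (1962) progression, written from the standard published formula rather than from the authors' MED-PC program. The number of intervals (12), the function name, and the shuffling step are assumptions made for the example; the source does not report them.

```python
import math
import random


def fleshler_hoffman_intervals(mean_s, n):
    """Return n intervals (in seconds) whose arithmetic mean equals mean_s.

    Uses the constant-probability progression usually attributed to
    Fleshler and Hoffman (1962):
        t_i = mean * [1 + ln(n) + (n - i) ln(n - i) - (n - i + 1) ln(n - i + 1)]
    """
    def x_ln_x(k):
        # By convention, 0 * ln(0) is taken to be 0.
        return k * math.log(k) if k > 0 else 0.0

    intervals = []
    for i in range(1, n + 1):
        value = 1 + math.log(n) + x_ln_x(n - i) - x_ln_x(n - i + 1)
        intervals.append(mean_s * value)
    return intervals


# Hypothetical usage: a VI 1-min (60-s) schedule built from 12 intervals.
# The interval count and the fixed seed are illustrative assumptions.
vi_60 = fleshler_hoffman_intervals(60.0, 12)
random.Random(0).shuffle(vi_60)  # present the intervals in an irregular order
```

The logarithmic terms telescope when summed, so the mean of the generated intervals equals the nominal schedule value regardless of how many intervals are used.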

Experiment 1b

In the reinforcement condition, the two side keys were illuminated and a concurrent VI 1-min VI 6-min schedule (Pigeons 126 and 536) or concurrent VI 6-min VI 1-min schedule (Pigeon 418) was in effect, with the first and second listed schedules on the left and right keys, respectively. The center key was dark. The 3-s COD was in effect on each of the side keys. Independent VI schedules operated on each side key such that a reinforcer could be available on both keys at the same time. This first (reinforcement) condition was in effect for a minimum of 30 sessions and until responding was stable in the last 6 sessions as determined by visual inspection. In the elimination condition, extinction was in effect on the two illuminated side keys, a VI 3-min schedule was in effect on the illuminated center key, and the 3-s COD was in effect on the center key. In the resurgence condition, all three keys were illuminated and extinction was in effect on each. The stability criteria for the elimination and resurgence conditions were the same as described for Experiment 1a. The sequence of conditions was repeated for Pigeon 418 because little resurgence was obtained during the first assessment.
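The peck–peck COD used on the two side keys can be illustrated with a short sketch. This is one possible reading of the contingency, not the authors' program: the first peck on a key after a peck on a different key starts a 3-s delay, and only pecks on that key at least 3 s later are eligible for reinforcement. Treating the first peck of a session as a changeover, and the function and variable names, are assumptions.

```python
def eligible_pecks(pecks, cod_s=3.0):
    """Flag which pecks could be reinforced under a peck-peck COD.

    pecks: list of (time_s, key) tuples sorted by time.
    Returns a list of booleans, one per peck.
    """
    eligible = []
    current_key = None
    cod_start = None
    for t, key in pecks:
        if key != current_key:
            # Changeover: the first peck on the new key starts the delay.
            current_key = key
            cod_start = t
        # A peck is eligible only once the delay has elapsed without a switch.
        eligible.append(t - cod_start >= cod_s)
    return eligible


# Made-up peck record for illustration only.
example = [(0.0, "left"), (1.0, "left"), (3.5, "left"),
           (4.0, "right"), (5.0, "left"), (8.0, "left")]
flags = eligible_pecks(example)
```

In this record the brief visit to the right key at 4.0 s restarts the delay on the left key, so the next eligible left-key peck is the one at 8.0 s.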

Results and Discussion

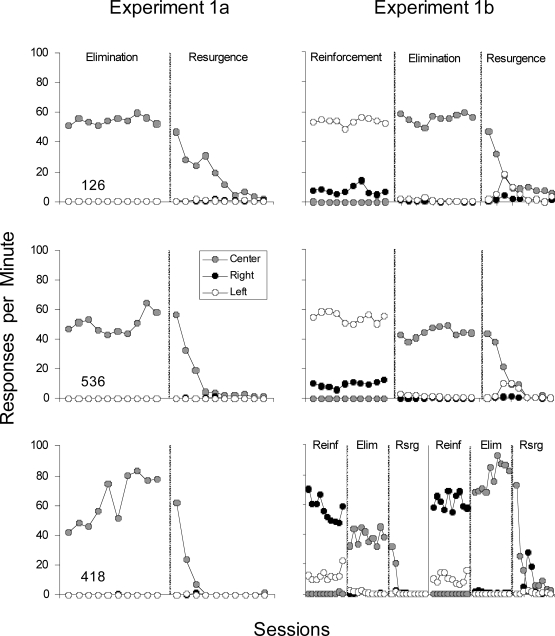

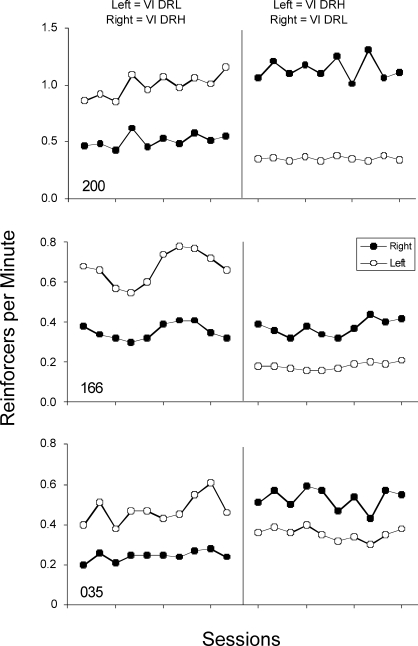

Response rates during each of the last 10 sessions of the elimination condition and each of the first 10 sessions of the resurgence condition of Experiment 1a are shown in the left panel of Figure 1. Response rates during each of the last 10 sessions of the reinforcement and elimination conditions and each of the first 10 sessions of the resurgence condition of Experiment 1b are shown in the right panel of Figure 1. Data from the replication also are shown for Pigeon 418 in Experiment 1b. Little responding occurred on the side keys in the resurgence condition of Experiment 1a, especially relative to the amount of responding that occurred on the side keys in the resurgence condition of Experiment 1b. That is, in Experiment 1b, when initial responding on either side key was reinforced until stable, response rates in the resurgence condition were greater than when, in Experiment 1a, side-key responding had only a brief reinforcement history.

Fig 1.

Responses per min on each key during each of the last 10 sessions of the reinforcement and elimination conditions and each of the first 10 sessions of the resurgence conditions of Experiments 1a and 1b.

Ideally, responding in the resurgence conditions of Experiments 1a and 1b could be compared more directly by analyzing resurgence as a proportion of baseline responding, but this analysis was not feasible given the near-zero (and often zero) response rates that occurred in the elimination condition of Experiment 1a. In any case, the extent to which each of the concurrent responses resurged was related to properties of the reinforcement condition. The absence of such a reinforcement condition in Experiment 1a precluded subsequent resurgence, but, because responding on the side keys was autoshaped prior to implementing the first (elimination) condition, there was a very brief history of reinforcement for responding on the side keys. This brief history of reinforcement provided by the autoshaping procedure alone was not sufficient to produce subsequent resurgence, however. The present finding, therefore, is consistent with Epstein's (1983) earlier report of no resurgence of a response that had never been reinforced systematically (i.e., the pigeons were naïve and no pretraining was conducted). These present findings in combination with those of Epstein suggest that some minimal duration of reinforcement history is necessary for resurgence.

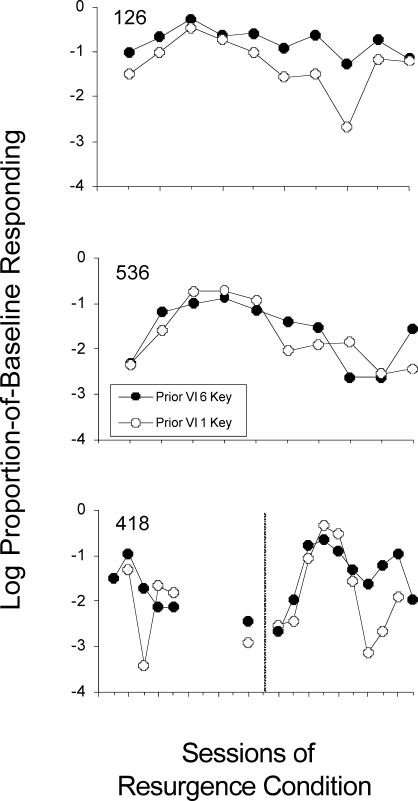

In addition to comparisons of resurgence when responding was or was not previously reinforced over an extended period, Experiment 1b also allowed comparisons of resurgence on two response keys previously correlated with different reinforcement schedules. More resurgence occurred on the key previously correlated with the VI 1-min schedule than on the key previously correlated with the VI 6-min schedule (see Figure 1). Even in the first resurgence condition for Pigeon 418, during which little resurgence occurred, more resurgence occurred on the key previously correlated with the VI 1-min schedule. A more direct analysis of the relation between resurgence and response rates during the reinforcement conditions is shown in Figure 2. The data points represent the logarithms (to the base 10) of response rates in each of the first 10 sessions of the resurgence conditions divided by the average response rates over the last 10 sessions of the reinforcement condition on each side key. Across pigeons there were no systematic differences in proportion-of-baseline resurgence on the side keys. Pigeon 126, however, responded proportionally more on the key previously correlated with the VI 6-min schedule during the resurgence condition as compared to the reinforcement condition. The overlapping lines for Pigeons 536 and 418 indicate that the amount of resurgence that occurred on each key was proportional to the amount of responding on each key during the reinforcement condition.

Fig 2.

Log proportion-of-baseline responding on each key during each session of the resurgence conditions of Experiment 1b. Data points are missing for sessions in which resurgence rates equaled zero, yielding undefined logarithmic values.
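The proportion-of-baseline measure plotted in Figure 2 can be expressed as a small calculation. The sketch below assumes the measure is the base-10 logarithm of each resurgence-session response rate divided by the mean response rate over the last 10 reinforcement sessions on the same key, as described in the text. The function name and the response rates are made up for illustration and are not data from the experiment.

```python
import math


def log_proportion_of_baseline(resurgence_rates, baseline_rates):
    """Return log10(resurgence rate / mean baseline rate) for each session.

    Sessions with a resurgence rate of zero yield None, because the
    logarithm of zero is undefined (these appear as missing data points).
    """
    baseline_mean = sum(baseline_rates) / len(baseline_rates)
    values = []
    for rate in resurgence_rates:
        if rate > 0:
            values.append(math.log10(rate / baseline_mean))
        else:
            values.append(None)
    return values


# Made-up response rates (responses per min), for illustration only.
baseline = [40.0, 42.0, 38.0, 41.0, 39.0, 40.0, 43.0, 37.0, 40.0, 40.0]
resurgence = [0.0, 4.0, 0.4]
result = log_proportion_of_baseline(resurgence, baseline)
```

On this scale, equal values on the two keys mean that each response resurged in proportion to its own baseline rate, which is what overlapping functions in the figure indicate.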

The differential amount of resurgence, in absolute terms, that occurred on the key previously correlated with a VI 1-min schedule of reinforcement and on the key previously correlated with a VI 6-min schedule of reinforcement could be a function of either the reinforcement differential per se, or the differential responding produced by such reinforcement. In other words, it is unknown whether the higher reinforcement rates, response rates, or the combination of the two variables under the VI 1-min schedule were responsible for greater resurgence observed on that key. The second and third experiments attempted to isolate the contributions of these two variables to resurgence.

Experiment 2

In Experiment 2, tandem schedules were used during the reinforcement condition to hold reinforcement rates constant while varying response rates on the side keys (see Lattal, 1989). The response-elimination technique also was changed in Experiment 2 from that used in Experiment 1. Instead of using a VI schedule to reinforce a third response while extinguishing responding on the side keys, as done in Experiment 1, responding was eliminated in Experiment 2 by introducing a differential-reinforcement-of-other-behavior (DRO) schedule. In prior studies, DRO schedules were used successfully during the elimination condition before testing resurgence (e.g., Lieving & Lattal, 2003). Using the DRO schedules rather than reinforcing responding on a third key has at least two advantages. First, it prevents any induction-like effects that may occur. That is, pecking a third operandum may alter pecking rates or patterns generated during the reinforcement condition that otherwise might occur in the resurgence condition. Second, the DRO contingency requires that responding associated with either side key be completely eliminated, because the absence of responding is necessary for reinforcement, prior to the introduction of extinction in the resurgence test.

Method

Subjects

Three male White Carneau pigeons, similar to those described for Experiment 1, were used. Each of the pigeons had been used in prior experiments involving key pecking for food.

Apparatus

The operant chamber used in Experiment 2 was similar to that used in Experiment 1, but differed as follows. It was 30 cm wide, 30 cm long, and 35 cm high. Each of the two side keys was 6 cm from the side wall and 6 cm from the center key (center to center). All three keys were located 23 cm from the floor. The houselight was located in the lower right corner of the work panel and access to grain was available through a 5-cm square aperture with its center located 5 cm from the floor and centered on the panel. A speaker was located 2 cm above the houselight. The general illumination, food deliveries, and white noise were the same as those described for Experiment 1.

Procedure

No pretraining was required. In all conditions of Experiment 2, each session began with a 5-min blackout in the chamber, after which the houselight remained on throughout the session, except during reinforcer deliveries. Sessions occurred 7 days per week. The schedule in effect during each condition and the number of sessions in each condition are shown in Table 2.

Table 2.

The Schedule and Number of Sessions in Which Each Pigeon Was Exposed to Each Condition of Experiment 2.

| Condition | Left Key | Right Key | Pigeon 200 | Pigeon 166 | Pigeon 035 |
| --- | --- | --- | --- | --- | --- |
| Reinforcement | tandem VI 27 s FR 5 | tandem VI 27 s DRL 3 s | 39 | 56 | 40 |
| Elimination | DRO 20 s | DRO 20 s | 15 | 15 | 15 |
| Resurgence | Extinction | Extinction | 12 | 12 | 12 |
| Reinforcement | tandem VI 27 s DRL 3 s | tandem VI 27 s FR 5 | 61 | 39 | 19 |
| Elimination | DRO 20 s | DRO 20 s | 22 | 14 | 15 |
| Resurgence | Extinction | Extinction | 12 | 13 | 12 |

In the reinforcement condition, a concurrent tandem VI 27-s FR 5 tandem VI 27-s DRL 3-s schedule was in effect on the two illuminated side keys (with the same 3-s COD described in Experiment 1). An independent VI schedule operated on each side key and, when the VI interreinforcer interval elapsed, a reinforcer was delivered following completion of the FR or DRL response requirement. (The VI schedules were generated as described in Experiment 1.) The DRL was programmed such that the first response after the VI elapsed initiated the DRL timer, which was reset by any additional responses, until at least 3 s elapsed before another response occurred. Therefore, two key pecks separated by at least 3 s were required after the VI elapsed for the reinforcer to be presented. Pecks to the dark center key had no programmed consequence. This condition was in effect for a minimum of 15 sessions and until response rates on the side keys were judged stable by visual inspection.
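The two terminal-link requirements can be sketched as follows. This is a simplified illustration under stated assumptions, not the authors' program: it handles one key at a time, ignores the COD, and takes the moment the VI elapses as an input. Function names and the example peck times are hypothetical.

```python
def tandem_vi_fr_reinforcer_time(peck_times, vi_elapsed_at, ratio=5):
    """Time of the peck that completes the FR requirement, or None."""
    # Only pecks made after the VI has elapsed count toward the ratio.
    counted = [t for t in sorted(peck_times) if t >= vi_elapsed_at]
    return counted[ratio - 1] if len(counted) >= ratio else None


def tandem_vi_drl_reinforcer_time(peck_times, vi_elapsed_at, drl_s=3.0):
    """Time of the peck that completes the DRL requirement, or None."""
    timer_start = None
    for t in sorted(peck_times):
        if t < vi_elapsed_at:
            continue  # pecks before the VI elapses do not start the timer
        if timer_start is not None and t - timer_start >= drl_s:
            return t  # two pecks separated by at least drl_s seconds
        timer_start = t  # first peck starts the timer; early pecks reset it
    return None


# Made-up peck times (s) with the VI elapsing at 10 s, for illustration only.
pecks = [9.0, 10.5, 11.0, 12.5, 16.0, 16.2, 16.4]
fr_time = tandem_vi_fr_reinforcer_time(pecks, 10.0)
drl_time = tandem_vi_drl_reinforcer_time(pecks, 10.0)
```

With identical VI values, the FR link rewards a run of pecks whereas the DRL link rewards pausing, which is how the arrangement separates response rate from reinforcement rate.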

In the elimination condition, a DRO 20-s schedule delivered reinforcers if no key pecking occurred on either illuminated side key for 20 s (i.e., each response on either side key postponed an otherwise scheduled reinforcer by 20 s). This condition was in effect for a minimum of 15 sessions and until 3 consecutive sessions occurred in which there was less than one response per minute on each key. Each session of the reinforcement and elimination conditions lasted until 60 reinforcers had been delivered. In the resurgence condition extinction was in effect while the two side keys were illuminated throughout the 30-min sessions. The resurgence condition was in effect for a minimum of 10 sessions and until 3 consecutive sessions occurred in which less than one response per minute occurred on the side keys.
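The DRO contingency can be illustrated with a brief sketch. It assumes a resetting timer that also restarts after each reinforcer and ignores the duration of hopper presentations; these details, like the function name, are assumptions rather than reported features of the procedure.

```python
def dro_reinforcer_times(peck_times, session_end, dro_s=20.0):
    """Return reinforcer delivery times under a resetting DRO schedule.

    peck_times: times (s) of pecks on either side key.
    Each peck postpones the next scheduled reinforcer to dro_s seconds
    after that peck.
    """
    pecks = sorted(peck_times)
    reinforcers = []
    i = 0
    next_due = dro_s
    while next_due <= session_end:
        if i < len(pecks) and pecks[i] < next_due:
            next_due = pecks[i] + dro_s  # a peck postpones the reinforcer
            i += 1
        else:
            reinforcers.append(next_due)  # dro_s seconds without a peck
            next_due += dro_s
    return reinforcers


# Made-up examples: no pecking, then two pecks in a 100-s period.
no_pecks = dro_reinforcer_times([], 60.0)
two_pecks = dro_reinforcer_times([5.0, 30.0], 100.0)
```

Under these assumptions, a bird that never pecks collects a reinforcer every 20 s (three per min), so obtained reinforcer rates near that ceiling imply very little pecking.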

After the first three conditions, each condition was repeated in the same order but with the left- and right-key reinforcement contingency assignments reversed. Contingencies arranged in the elimination and resurgence conditions, and the stability criteria used, were the same in the initial test and the replication.

Results and Discussion

The ratios of response rates and reinforcement rates during the reinforcement conditions of Experiment 2 are shown in Table 3. Reinforcer ratios were close to one (i.e., similar reinforcement rates were obtained on the two keys) during the reinforcement condition of all six resurgence tests. The large differences in response ratios from the initial test to the replication were dictated by the tandem schedule contingencies. For Pigeon 200, for example, when the tandem VI DRL schedule was in effect on the right key, ratios of right-to-left-key response rates varied from 0.12 to 0.24 (mean = 0.16) whereas ratios of right-to-left-key reinforcement rates varied from 0.77 to 1.73 (mean = 1.16) over the last 10 sessions of that condition. When the tandem VI DRL schedule was in effect on the left key, response ratios varied from 7.84 to 12.82 (mean = 9.91) while reinforcement ratios varied from 0.66 to 1.86 (mean = 1.11).

Table 3.

Ratios (Right-key/Left-key) of Responses per Minute and Reinforcers per Min for Each Pigeon in Each of the Last 10 Sessions of the Reinforcement Conditions in Experiment 2.

| Session | Pigeon 200: Responses | Pigeon 200: Reinforcers | Pigeon 166: Responses | Pigeon 166: Reinforcers | Pigeon 035: Responses | Pigeon 035: Reinforcers |
| --- | --- | --- | --- | --- | --- | --- |
| Initial Test | | | | | | |
| 1 | 0.12 | 1.00 | 0.41 | 1.64 | 0.32 | 1.71 |
| 2 | 0.15 | 1.40 | 0.24 | 0.95 | 0.45 | 1.73 |
| 3 | 0.12 | 0.77 | 0.31 | 1.30 | 0.32 | 1.00 |
| 4 | 0.17 | 0.93 | 0.35 | 1.29 | 0.14 | 0.82 |
| 5 | 0.12 | 1.21 | 0.19 | 0.66 | 0.47 | 1.38 |
| 6 | 0.14 | 0.93 | 0.33 | 1.06 | 0.37 | 0.95 |
| 7 | 0.24 | 1.40 | 0.27 | 1.00 | 0.29 | 1.22 |
| 8 | 0.20 | 0.93 | 0.26 | 1.22 | 0.32 | 1.00 |
| 9 | 0.14 | 1.73 | 0.46 | 1.88 | 0.20 | 1.07 |
| 10 | 0.18 | 1.30 | 0.21 | 1.23 | 0.21 | 0.54 |
| Mean | 0.16 | 1.16 | 0.30 | 1.22 | 0.31 | 1.14 |
| Replication | | | | | | |
| 1 | 12.82 | 1.86 | 16.67 | 3.31 | 5.41 | 1.40 |
| 2 | 11.26 | 1.00 | 14.51 | 0.71 | 5.41 | 0.62 |
| 3 | 8.08 | 0.66 | 10.48 | 1.50 | 8.22 | 1.22 |
| 4 | 10.29 | 1.29 | 5.25 | 0.93 | 8.38 | 1.50 |
| 5 | 8.75 | 0.88 | 5.11 | 0.66 | 8.94 | 1.51 |
| 6 | 8.28 | 0.77 | 3.52 | 1.00 | 6.36 | 0.77 |
| 7 | 7.84 | 0.88 | 5.36 | 1.15 | 8.95 | 0.72 |
| 8 | 8.54 | 0.88 | 6.94 | 1.49 | 4.96 | 0.62 |
| 9 | 10.59 | 1.00 | 3.19 | 1.06 | 7.10 | 1.72 |
| 10 | 12.69 | 1.85 | 4.51 | 0.93 | 7.13 | 0.76 |
| Mean | 9.91 | 1.11 | 7.55 | 1.27 | 7.09 | 1.08 |
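As a worked check on Table 3, the range and mean of the ratios for one pigeon can be recomputed directly from the tabled values. The sketch below uses the Pigeon 200 columns from the initial test; the helper name `summarize` is hypothetical, and the two-decimal rounding is assumed to match the table.

```python
# Right-key/left-key ratios for Pigeon 200 in the last 10 sessions of the
# initial reinforcement condition, transcribed from Table 3.
responses_200 = [0.12, 0.15, 0.12, 0.17, 0.12, 0.14, 0.24, 0.20, 0.14, 0.18]
reinforcers_200 = [1.00, 1.40, 0.77, 0.93, 1.21, 0.93, 1.40, 0.93, 1.73, 1.30]


def summarize(ratios):
    """Return (minimum, maximum, mean rounded to two decimals)."""
    mean = sum(ratios) / len(ratios)
    return min(ratios), max(ratios), round(mean, 2)


response_summary = summarize(responses_200)
reinforcer_summary = summarize(reinforcers_200)
```

The response ratios stay well below one while the reinforcer ratios scatter around one, which is the pattern the tandem schedules were arranged to produce.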

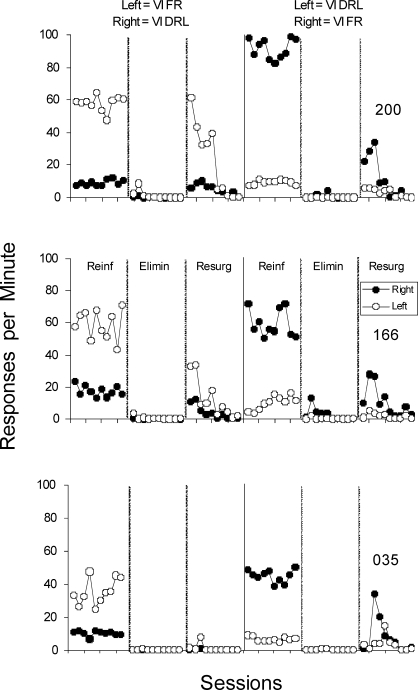

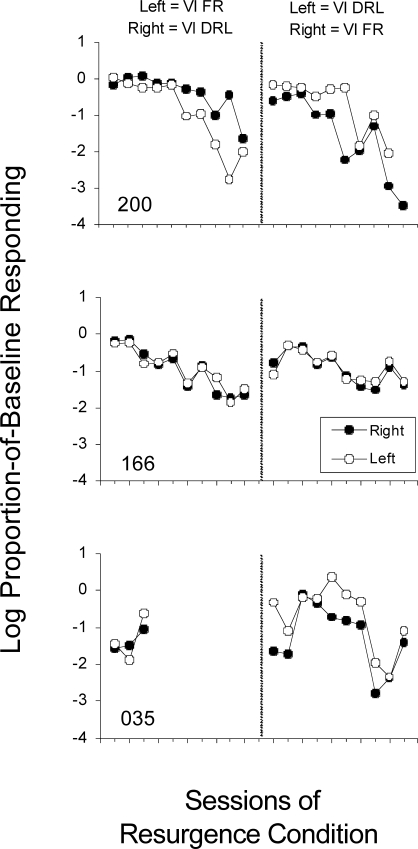

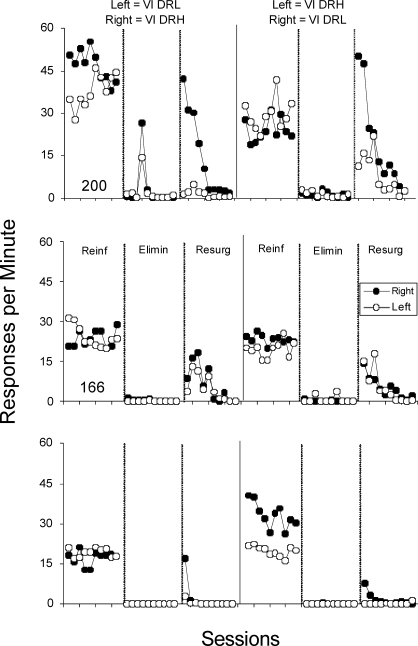

Average reinforcer rates (reinforcers per min) during the last 10 sessions under the DRO 20-s schedule were 2.87, 2.94, and 2.96 (during the first elimination condition) and 2.95, 2.72, and 2.82 (during the second elimination condition) for Pigeons 200, 166, and 035, respectively. Because a DRO 20-s schedule can deliver at most about three reinforcers per min, these obtained reinforcer rates are a measure, in addition to response rates, of the efficiency of responding under the DRO contingency, and they indicate that the schedule eliminated responding. The effects of the DRO schedule also are apparent in Figure 3, which shows the response rates on each key during each of the last 10 sessions of the reinforcement and elimination conditions and each of the first 10 sessions of the resurgence conditions. For all pigeons in every condition, more resurgence occurred on the key on which higher rates of responding had occurred in the reinforcement condition than on the key on which relatively lower rates of responding had occurred. This differential resurgence occurred despite similar reinforcement rates across these response alternatives during the reinforcement condition. A more direct analysis is shown in Figure 4. These values were determined as described for Figure 2 of Experiment 1b. For Pigeon 200, proportionally more resurgence during the last five sessions occurred on the key previously correlated with the DRL terminal link schedule than on the key previously correlated with the FR terminal link schedule. For the first five sessions of the resurgence condition for Pigeon 200 and all sessions shown for Pigeons 166 and 035, however, the functions overlap, indicating that the amount of resurgence on each key was proportional to the amount of responding on those keys during the reinforcement conditions.

Fig 3.

Responses per min on each key during each of the last 10 sessions of the reinforcement and elimination conditions and each of the first 10 sessions of the resurgence conditions of Experiment 2.

Fig 4.

Log proportion-of-baseline responding on each key during each session of the resurgence conditions of Experiment 2. Data points are missing for sessions in which resurgence rates equaled zero, yielding undefined logarithmic values.

The differential resurgence obtained in Experiment 2 was similar to that of Experiment 1b, although reinforcement rates in the reinforcement condition of Experiment 2 were similar for pecking on both keys. The response rates and proportion-of-baseline resurgence analyses support the conclusion that the amount of resurgence was related directly to the rate at which responding previously occurred under reinforcement (corroborating and extending the findings of Carey, 1951, and Reed & Morgan, 2007, using multiple schedules). Moreover, these data illustrate that what resurges is not a simple key peck, but rather the particular topography or pattern of key pecking that was reinforced previously. That is, when responding characterized by longer IRTs was reinforced during the reinforcement condition, the resurgence was characterized by longer IRTs.

Finally, Experiment 2 replicated prior resurgence effects obtained when DRO schedules were used to eliminate initial responding, rather than extinction on the two side keys combined with reinforcement of a third response, as in Experiment 1 and most previous investigations of resurgence. Thus, resurgence does not require the reinforcement of a specific, experimentally defined, alternative response during the elimination condition (see Doughty et al., 2007; Lieving & Lattal, 2003).

Experiment 3

Experiment 2 showed that different response rates produced differential resurgence following elimination of responding even when prior reinforcement rates were similar. In contrast to Experiment 2, reinforcement conditions in Experiment 3 were arranged to approximate equivalent response rates on each key while arranging contingencies to produce differential reinforcement rates on the keys.

Method

Subjects and Apparatus

The 3 pigeons from Experiment 2 and the apparatus from Experiment 1 were used.

Procedure

Pretraining was not required. In all conditions of Experiment 3, sessions occurred 7 days per week and each session began with a 5-min blackout. Thereafter, the houselight was on throughout the session, except during 3-s reinforcer deliveries. The schedule in effect during each condition and the number of sessions for which each pigeon was exposed to each schedule are shown in Table 4.

Table 4.

The Schedule and Number of Sessions in Which Each Pigeon Was Exposed to Each Condition of Experiment 3.

| Condition | Concurrent Schedule: Left Key | Concurrent Schedule: Right Key | Sessions: Pigeon 200 | Sessions: Pigeon 166 | Sessions: Pigeon 035 |
| --- | --- | --- | --- | --- | --- |
| Reinforcement | tandem VI 30 s DRL x s | tandem VI 30 s DRH y/3 s | 128 | 72 | 60 |
| Elimination | DRO 20 s | DRO 20 s | 16 | 24 | 15 |
| Resurgence | Extinction | Extinction | 22 | 15 | 15 |
| Reinforcement | tandem VI 30 s DRH y/3 s | tandem VI 30 s DRL x s | 34 | 80 | 100 |
| Elimination | DRO 20 s | DRO 20 s | 17 | 17 | 15 |
| Resurgence | Extinction | Extinction | 31 | 15 | 15 |

In the reinforcement condition, a concurrent tandem VI 30-s DRL x-s tandem VI 30-s DRH y/3-s schedule was in effect on the two side keys and each session lasted until 35 reinforcers were delivered. Pecks to the dark center key had no programmed consequence. A single VI schedule was used as the initial component of the tandem schedule on both keys such that a reinforcer was available on only one of the two keys at a time (an interdependent concurrent schedule, see Stubbs & Pliskoff, 1969). Thus, according to a preset ratio (as described below), the VI schedule arranged for the next terminal component of the tandem schedule to operate on one of the two keys, either DRL or DRH. The DRL schedule was programmed as described in Experiment 2 (i.e., IRT ≥ x s). The DRH schedule was arranged such that a programmed minimum number of responses (y) had to occur within a 3-s period before a reinforcer was delivered. That is, after the VI elapsed, a reinforcer was available upon completion of the required number of responses within a 3-s period. If the required number of responses did not occur, the 3-s period was reset and the reinforcer remained available until the required number of responses occurred within 3 s. A 3-s COD was in effect on each side key as described for Experiments 1 and 2.
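The two terminal-component requirements described above can be sketched in code. This is a minimal illustration only, not the control program used in the experiment (which is not shown here). The function names are hypothetical, and treating the DRH "3-s period" as consecutive, non-overlapping windows that begin when the VI elapses is an assumption.

```python
from typing import Dict, Optional, Sequence


def drl_met(previous_peck_s: float, peck_s: float, x_s: float) -> bool:
    """DRL x-s: the interresponse time (IRT) must be at least x seconds."""
    return (peck_s - previous_peck_s) >= x_s


def drh_reinforced_peck(peck_times_s: Sequence[float], vi_elapsed_s: float,
                        y: int, period_s: float = 3.0) -> Optional[float]:
    """Return the time of the peck that completes y pecks within one period.

    Assumption: periods are consecutive, non-overlapping 3-s windows that
    begin when the VI elapses; the count starts over in each new window.
    Returns None if the requirement is never met.
    """
    counts: Dict[int, int] = {}
    for t in sorted(peck_times_s):
        if t < vi_elapsed_s:
            continue  # pecks before the VI elapses do not count toward DRH
        window = int((t - vi_elapsed_s) // period_s)
        counts[window] = counts.get(window, 0) + 1
        if counts[window] >= y:
            return t
    return None


# Hypothetical peck times (s); the VI is assumed to elapse at t = 10 s.
example_pecks = [10.0, 10.5, 11.0, 14.0, 14.2, 14.4, 14.6]
example_time = drh_reinforced_peck(example_pecks, vi_elapsed_s=10.0, y=4)
```

With these made-up peck times and y = 4, only three pecks fall in the first 3-s window, so the requirement is first met by the fourth peck of the second window.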

The interdependent concurrent VI schedule was used and the values of the DRL and DRH schedules were varied in an attempt to maintain more or less equivalent response rates on the two keys while maintaining corresponding differential reinforcement rates. Because a single VI schedule was used on both response keys, the obtained reinforcer ratios could be controlled directly by the programmed reinforcer ratios. The programmed reinforcer ratios, which were held constant during the last 10 sessions of the reinforcement condition, are shown in the Appendix. Each ratio represents the number of reinforcers assigned to the right and left keys, respectively. If the reinforcer ratio was 4∶8, for example, 4 of the first 12 reinforcers would be assigned to the right key whereas 8 of the first 12 reinforcers would be assigned to the left key (and these assignments occurred in random order). After the first 12 reinforcers were obtained under this 4∶8 reinforcer ratio, the allocation process would be reset and the next 12 reinforcers would be assigned in the same fashion. Reinforcers always were arranged such that fewer reinforcers were obtained on the key correlated with the tandem VI DRH schedule than on the key correlated with the tandem VI DRL schedule. The DRH and DRL values were adjusted prior to each session at the experimenter's discretion based on response rates in the preceding session. The DRL value (s) and DRH value (number of responses required in the 3-s period) in effect during each of the last 10 sessions of the reinforcement condition for each pigeon are shown in the Appendix.
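The block-wise assignment of reinforcers to keys might be sketched as follows. This is an illustrative sketch under stated assumptions: the generator name `assign_reinforcers` and the use of a shuffled block are hypothetical choices, intended only to reproduce the described property that each block of 12 reinforcers under a 4∶8 ratio contains 4 right-key and 8 left-key assignments in random order.

```python
import random
from itertools import islice
from typing import Iterator, List, Optional


def assign_reinforcers(right: int, left: int,
                       seed: Optional[int] = None) -> Iterator[str]:
    """Yield the key ("right" or "left") assigned to each successive reinforcer.

    Each block of (right + left) reinforcers contains exactly `right`
    right-key and `left` left-key assignments in random order; the block
    is then rebuilt and reshuffled, as in the reset described in the text.
    """
    rng = random.Random(seed)
    while True:
        block: List[str] = ["right"] * right + ["left"] * left
        rng.shuffle(block)
        yield from block


# First two blocks under the 4:8 (right:left) ratio used as the example above.
first_two_blocks = list(islice(assign_reinforcers(4, 8, seed=0), 24))
```

Because the VI timer is shared, the obtained reinforcer ratio within each completed block equals the programmed ratio regardless of how the pigeon distributes its pecks.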

The reinforcement condition was in effect until responding was stable as determined by visual inspection. Initially, the ratio of response rates on each key was required to vary around 1.0 prior to introducing the elimination condition, but time constraints required that this criterion be relaxed in two cases. For Pigeon 200, during the initial test, the reinforcement condition was in effect for 128 sessions and response rates were similar for only the last 5 of those sessions before a condition change was implemented. This transient similarity in response rates on each key for Pigeon 200 occurred several times throughout the reinforcement condition, but never lasted the 6 sessions required to change conditions. Therefore, the elimination condition was introduced after similar response rates for 5 consecutive sessions. Similarly, during the replication, the elimination condition was introduced for Pigeon 035 after 100 sessions of exposure to the reinforcement condition. This latter case was the only one of six resurgence tests in Experiment 3 in which response rates across the two keys were more different than corresponding reinforcer rates.

A DRO 20-s schedule was introduced on both response keys in the elimination condition and each session lasted until 35 reinforcers were delivered. This condition was in effect for a minimum of 15 sessions and until there were 3 consecutive sessions during which less than one response per minute occurred. Thereafter, in each 35-min session of the resurgence condition, extinction operated on both keys. This resurgence condition was in effect for a minimum of 15 sessions and until there were 3 consecutive sessions during which less than one response per minute occurred on the side keys.
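The condition-change rule for the elimination and resurgence conditions (at least 15 sessions, ending with 3 consecutive sessions below one response per minute) can be expressed as a short check. The function name and the session rates in the example are hypothetical and serve only to illustrate the rule.

```python
from typing import Sequence


def condition_complete(rates_per_min: Sequence[float], min_sessions: int = 15,
                       run_length: int = 3, ceiling: float = 1.0) -> bool:
    """True once the minimum number of sessions has been run and each of the
    most recent `run_length` sessions had fewer than `ceiling` responses/min."""
    if len(rates_per_min) < min_sessions:
        return False
    return all(rate < ceiling for rate in rates_per_min[-run_length:])


# Made-up session-by-session response rates (responses per min).
example_rates = [5.0] * 12 + [0.5, 0.2, 0.0]
example_done = condition_complete(example_rates)
```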

Each condition of Experiment 3 was repeated, but with the contingencies in the reinforcement condition reversed on each key. The elimination and resurgence conditions and the stability criteria were the same in the initial test and the replication.

Results and Discussion

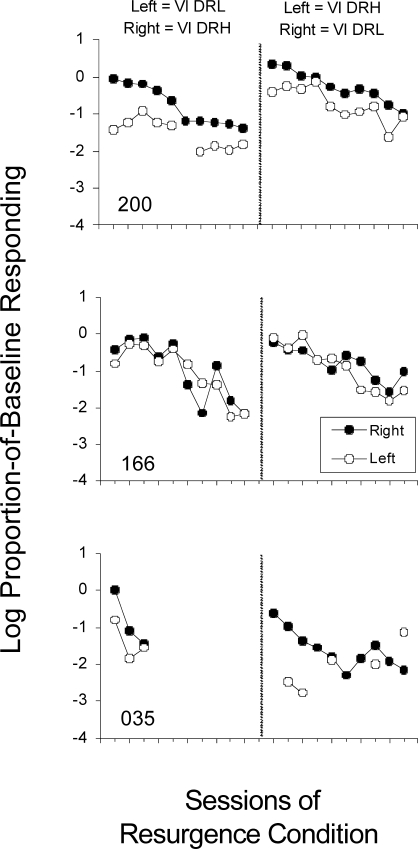

The ratios of response rates and reinforcement rates (right key/left key) for each of the last 10 sessions of the reinforcement conditions are shown in Table 5. Although response rates varied from session to session, the overall ratios of response rates on the keys were close to one (see the mean ratios in Table 5). One exception, noted earlier, occurred for Pigeon 035 during the replication when response rate ratios deviated further from 1.0 (mean = 1.67) than did reinforcement rate ratios (mean = 1.50). Reinforcement rates (shown in Figure 5) were consistently different between the keys, as dictated by the programmed contingencies. That is, during both reinforcement conditions, reinforcement under the tandem VI DRL schedule was consistently more frequent than that under the tandem VI DRH schedule. For Pigeon 200, for example, when the tandem VI DRH schedule operated on the right key, ratios of right-to-left key response rates varied from 0.89 to 1.72 (mean = 1.27) and ratios of right-to-left key reinforcer rates varied from 0.47 to 0.56 (mean = 0.51). When the tandem VI DRL schedule was in effect on the right key, ratios of right-to-left key response rates varied from 0.54 to 1.16 (mean = 0.84) and ratios of right-to-left key reinforcer rates varied from 2.79 to 3.97 (mean = 3.24). Overall, then, responding was not consistently higher on either of the two tandem schedules across both reinforcement conditions for any pigeon.

Table 5.

Ratios (Right-key/Left-key) of Responses per Min and Reinforcers per Min for Each Pigeon in Each of the Last 10 Sessions of the Reinforcement Conditions in Experiment 3.

| Session | Pigeon 200: Responses | Pigeon 200: Reinforcers | Pigeon 166: Responses | Pigeon 166: Reinforcers | Pigeon 035: Responses | Pigeon 035: Reinforcers |
| --- | --- | --- | --- | --- | --- | --- |
| Initial Test | | | | | | |
| 1 | 1.45 | 0.53 | 0.66 | 0.56 | 0.87 | 0.50 |
| 2 | 1.72 | 0.52 | 0.68 | 0.52 | 0.92 | 0.51 |
| 3 | 1.51 | 0.49 | 0.97 | 0.56 | 1.23 | 0.55 |
| 4 | 1.46 | 0.56 | 0.97 | 0.55 | 0.66 | 0.53 |
| 5 | 1.54 | 0.47 | 1.05 | 0.53 | 0.66 | 0.53 |
| 6 | 1.08 | 0.49 | 1.25 | 0.53 | 0.90 | 0.58 |
| 7 | 0.99 | 0.49 | 1.30 | 0.53 | 0.90 | 0.53 |
| 8 | 1.14 | 0.55 | 1.01 | 0.53 | 0.90 | 0.49 |
| 9 | 0.89 | 0.50 | 0.88 | 0.49 | 1.08 | 0.46 |
| 10 | 0.92 | 0.47 | 1.22 | 0.49 | 1.00 | 0.52 |
| Mean | 1.27 | 0.51 | 1.00 | 0.53 | 0.91 | 0.52 |
| Replication | | | | | | |
| 1 | 0.84 | 3.03 | 1.21 | 2.17 | 1.86 | 1.42 |
| 2 | 0.71 | 3.36 | 1.19 | 2.00 | 1.78 | 1.46 |
| 3 | 0.80 | 3.33 | 1.29 | 1.88 | 1.66 | 1.39 |
| 4 | 0.98 | 3.19 | 1.60 | 2.38 | 1.54 | 1.48 |
| 5 | 0.82 | 3.33 | 1.28 | 2.13 | 1.44 | 1.63 |
| 6 | 1.02 | 3.29 | 1.17 | 1.88 | 1.78 | 1.47 |
| 7 | 0.54 | 2.86 | 1.22 | 1.95 | 2.02 | 1.59 |
| 8 | 1.16 | 3.97 | 0.87 | 2.20 | 1.62 | 1.43 |
| 9 | 0.84 | 2.79 | 1.38 | 2.11 | 1.52 | 1.63 |
| 10 | 0.66 | 3.26 | 1.03 | 2.00 | 1.52 | 1.45 |
| Mean | 0.84 | 3.24 | 1.22 | 2.07 | 1.67 | 1.50 |
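As a worked check, the mean ratios reported for Pigeon 200 can be recomputed from the session values in Table 5. The sketch below simply averages the ten tabled ratios and rounds to two decimals. The variable names are illustrative, and averaging the session ratios (rather than, say, taking the ratio of mean rates) is an assumption about how the tabled means were obtained.

```python
from statistics import mean

# Right-key/left-key ratios for Pigeon 200, copied from Table 5.
initial_responses = [1.45, 1.72, 1.51, 1.46, 1.54, 1.08, 0.99, 1.14, 0.89, 0.92]
initial_reinforcers = [0.53, 0.52, 0.49, 0.56, 0.47, 0.49, 0.49, 0.55, 0.50, 0.47]
replication_responses = [0.84, 0.71, 0.80, 0.98, 0.82, 1.02, 0.54, 1.16, 0.84, 0.66]
replication_reinforcers = [3.03, 3.36, 3.33, 3.19, 3.33, 3.29, 2.86, 3.97, 2.79, 3.26]

# Mean of the ten session ratios, rounded to the two decimals used in Table 5.
pigeon_200_means = {
    "initial_responses": round(mean(initial_responses), 2),
    "initial_reinforcers": round(mean(initial_reinforcers), 2),
    "replication_responses": round(mean(replication_responses), 2),
    "replication_reinforcers": round(mean(replication_reinforcers), 2),
}
```

The recomputed values agree with the Mean rows of Table 5 (1.27, 0.51, 0.84, and 3.24).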

Fig 5.

Reinforcers per min on each key during each of the last 10 sessions of the reinforcement conditions of Experiment 3.

Average reinforcer rates during the last 10 sessions under the DRO 20-s schedule were 2.59, 2.83, and 3.00 (during the first elimination condition) and 2.64, 2.86, and 2.96 (during the second elimination condition) for Pigeons 200, 166, and 035, respectively. Figure 6 shows the response rates in each of the last 10 sessions of the reinforcement and elimination conditions and each of the first 10 sessions of the resurgence condition of Experiment 3. Although reinforcement rates were consistently different on the two keys during the reinforcement conditions and response rates were not systematically different, resurgence was not an orderly function of the reinforcement rates obtained during those conditions. For Pigeon 166 (middle panel of Figure 6), response rates during the reinforcement condition were similar, despite mean reinforcer ratios of 0.53 and 2.07 during the initial test and replication, respectively, and subsequent resurgence on the two keys was similar. For Pigeons 200 and 035, more resurgence occurred on the right key regardless of the schedules operating on the two keys during reinforcement conditions. During the initial test for Pigeon 200 and the replication for Pigeon 035, however, response rates during the reinforcement condition were higher on the right key than the left key (the operating contingencies did not adequately equate them) and very little resurgence occurred subsequently on the left key. (In fact, in the replication for Pigeon 035, the response rates during the reinforcement condition differed more than the obtained reinforcer rates during the reinforcement condition.) Thus, although more resurgence occurred on the right key in these tests, the data in Figure 7 show that the proportion-of-baseline resurgence was similar. As shown in Figure 6, the replication for Pigeon 200 and the initial test for Pigeon 035 are the only two cases, of the six tests, in which similar response rates in the reinforcement condition were followed by any differential response rates in the resurgence condition. It should be noted, however, that resurgence on the left key was higher during these tests when baseline response rates were similar than in the two previously mentioned tests (initial test for Pigeon 200 and replication for Pigeon 035) when baseline response rates on the right key were higher than baseline response rates on the left key (see Figure 6).

Fig 6.

Responses per min on each key during each of the last 10 sessions of the reinforcement and elimination conditions and each of the first 10 sessions of the resurgence conditions of Experiment 3.

Fig 7.

Log proportion-of-baseline responding on each key during each session of the resurgence conditions of Experiment 3. Data points are missing for sessions in which resurgence rates equaled zero, yielding undefined logarithmic values.
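The measure plotted in Figure 7 can be sketched as below. This is a hedged illustration: the function name is hypothetical, and both the base-10 logarithm and the use of a single baseline response rate from the reinforcement condition are assumptions, because the exact computation is not restated in this section. The sketch also shows why a session with zero responses yields no data point.

```python
import math
from typing import Optional


def log_proportion_of_baseline(resurgence_rate: float,
                               baseline_rate: float) -> Optional[float]:
    """Return log10(resurgence rate / baseline rate), or None when undefined.

    The logarithm of zero is undefined, so a session with no responses
    (or a zero baseline) produces no plottable value.
    """
    if resurgence_rate <= 0 or baseline_rate <= 0:
        return None
    return math.log10(resurgence_rate / baseline_rate)


# Hypothetical rates (responses per min), for illustration only.
example_value = log_proportion_of_baseline(5.0, 50.0)
example_missing = log_proportion_of_baseline(0.0, 50.0)
```

On this scale, two keys with very different absolute rates can show the same value whenever each resurges to the same fraction of its own baseline.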

From Experiment 3 it may be concluded that differential reinforcement rates do not necessitate differential resurgence rates. That is, resurgence on the two keys varied (and varied somewhat systematically with response rates during the reinforcement condition) despite consistently discrepant reinforcement rates on the two keys during the reinforcement condition. Taken with Experiment 2, resurgence on two concurrently available operanda appears better predicted by the rate at which responding previously occurred on those operanda rather than the rate at which that responding was reinforced.

General Discussion

The present experiments diverge from previous experimental analyses of resurgence in four ways. First, in Experiments 1 and 2, concurrent resurgence was shown to be a relative effect of environmental circumstances occurring in the reinforcement condition of the resurgence procedure. In other words, the length of reinforcement history and the reinforcement schedules used to maintain responding during the reinforcement condition were related systematically to resurgence of that responding. Second, a comparison of the results of Experiments 2 and 3 shows that the nature of resurgence was similar to the nature of responding in the reinforcement condition. Third, the results of all three experiments attest to the utility of a concurrent resurgence procedure, which allowed the assessment of differential resurgence by concurrent reinforcement phase manipulations, rather than by effecting these manipulations successively through either a reversal design or a multiple schedule where conditions alternate. (The particular disadvantage of the latter is that whichever condition is presented first may have a disproportionate effect relative to the condition that follows, thereby biasing the results and necessitating between-subjects comparisons.) Finally, the use of a DRO schedule in the elimination phase minimized the possibility of response induction from a third key peck in the elimination condition to the keys on which resurgence occurred under extinction. The results of these concurrent resurgence procedures thus contribute to the further understanding of both resurgence and behavioral history. They also serve as a conduit to broader issues related to the definition of resurgence and to the applications of the resurgence concept.

In the present experiments, as in previous investigations of resurgence, previously established responding, subsequently eliminated, reappeared when the reinforcement of alternative response(s) was discontinued. Resurgence occurred reliably during both the first and second resurgence tests of each experiment, replicating the earlier findings of Lieving and Lattal (2003) that resurgence is a repeatable effect not necessarily diminished by a second series of tests. In fact, the results of Experiment 3 showed reliable resurgence in pigeons that had as many as three prior resurgence tests. Moreover, these experiments replicated earlier studies of resurgence in which DRO schedules were used in the elimination condition prior to resurgence tests (e.g., Doughty et al., 2007).

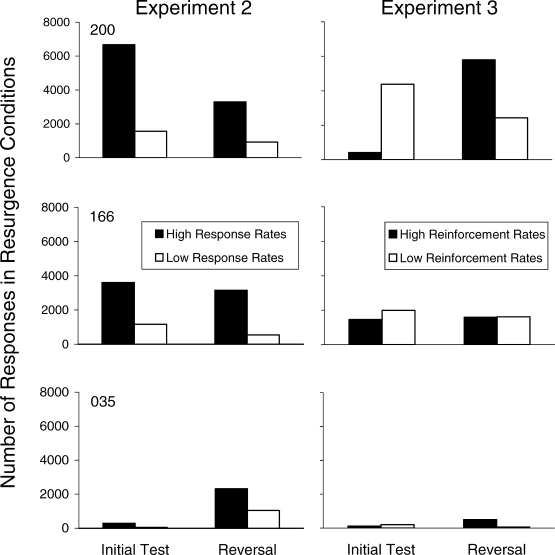

Although prior reinforcement until responding reached stability ensured resurgence in all conditions of each experiment, only a difference in prior response rates, and not prior reinforcement rates, was systematically related to rates of resurgence. These latter effects are summarized in Figure 8, which allows comparison of the resurgence of the high and low response-rate behavior (with relatively similar reinforcement rates) in Experiment 2, and of the high and low reinforcement-rate behavior (with relatively similar response rates) in Experiment 3. In Experiment 2 (left panel), in six of six tests of resurgence, two for each pigeon, resurgence occurred at relatively higher rates on the key on which responding occurred at relatively higher rates during the reinforcement condition. Similar data from the same pigeons in Experiment 3 (right panel) reveal a more idiosyncratic relation between prior reinforcement rate and resurgence. That is, differential reinforcement rates on the two keys during the reinforcement conditions, unlike the differential response rates of Experiment 2, were not related to consistent differences in resurgence on the two keys. The only case in which response rates during reinforcement conditions did not predict resurgence was in Experiment 3, but there response rates varied unsystematically across the initial reinforcement condition. Thus, the data in Figure 8, like the previous absolute response rates and proportion-of-baseline resurgence, show that baseline response rates better predicted resurgence rates than did baseline reinforcer rates.

Fig 8.

Total number of responses on each key during all sessions of the resurgence conditions (the initial test and reversal) of Experiment 2 (left panel) and Experiment 3 (right panel).

The results of Experiment 2 show that response rates alone, that is, without differential reinforcement rates, determine the degree of response resurgence. When, in Experiment 3, response rates varied unsystematically, resurgence also was unsystematic and was not related to the consistent differences in reinforcement rates associated with each operandum. This negative result with respect to the relation between resurgence and reinforcement rate, like any negative result, must not be over-extrapolated, as there may be some differences in reinforcement rates that might produce differential resurgence. Nonetheless, the results of the two experiments taken together do suggest, within the limits noted, that response rates are the more reliable predictor of resurgence. The results further suggest the importance of isolating these two variables, which typically are confounded in many experimental arrangements, when assessing the causes of resurgence. In line with these findings, the results of Carey (1951) and Reed and Morgan (2007) also implicate response rates during the reinforcement phase as a critical element in differential resurgence. Unlike the present experiments, however, neither Carey nor Reed and Morgan tested resurgence following reinforcement histories consisting of similar response rates but unequal reinforcement rates.

As noted in the Introduction, resurgence is an instance of residual or remote behavioral history effects whereby present responding under nominally identical conditions is affected by circumstances in effect prior to those identical conditions. For example, Barrett (1977) trained two groups of pigeons on FR or DRL schedules before training both groups to stable performance on VI schedules. When pigeons in the two groups were injected with different doses of d-amphetamine, different dose effect curves were obtained as a function of the original FR or DRL training, even though response rates on the VI schedule were similar for both groups of pigeons prior to the injections. In a similar way, the present results show how different experiences in an initial reinforcement condition followed by an identical elimination condition (either extinction in Experiment 1 or DRO in Experiments 2 and 3) give rise to differential effects of that prior differential history in the resurgence condition. By virtue of the concurrent-schedule arrangement, these effects are shown within individual subjects rather than across groups of subjects or in alternating (multiple-schedule) contexts.

Previous within-subject comparisons of the effects of an immediately preceding condition on subsequent responding under a common contingency have shown that differences in responding generated by exposure to FR or DRL schedules during an initial training (history-building) condition persist for some time following a change of both schedules to FI (Freeman & Lattal, 1992). By contrast, when response rates in two history-building conditions are similar but reinforcement rates between the two conditions differ, behavioral differences in the history-testing conditions are small and transient following a change of both schedules to an identical schedule (e.g., an FI schedule, Okouchi & Lattal, 2006). Thus, the present results from Experiments 2 and 3 showing that different response rates, but not different reinforcement rates in the history-building condition, were related to subsequent rates of resurgence (in the “history-testing” condition) affirm those obtained using a different paradigm to assess behavioral history effects.

Two final considerations with respect to resurgence as a type of behavioral history effect are whether resurgence should be measured in absolute or proportional terms and whether resurgence may be considered a test of response strength. These issues are brought to light by the results of Experiments 2 and 3. In Experiment 2, absolute resurgence on the two keys was different whereas in both Experiments 2 and 3 the proportional resurgence was not systematically different. In general tests of behavioral history, responding often is assessed in absolute terms (e.g., Freeman & Lattal, 1992). In tests of response strength, however, proportion-of-baseline measures often are used instead (e.g., Nevin & Grace, 1999; Nevin, Smith, & Roberts, 1987). The proportional measures are used to test how resistant a behavior is to change (e.g., response-independent food deliveries, extinction). In resurgence tests, it may be the case that both measures are useful to consider. In terms of response strength, in the present experiments, it may be the case that the strength of responding on each key of Experiments 2 and 3 was similar because the proportion-of-baseline resurgence was similar across the keys. The absolute amount of resurgence, however, was different on the two keys. It is likely the case that resurgence is a combined function of prior reinforcement rates (which determine the likelihood that behavior will recur) and prior response patterns (which determine the structural nature of the resurgence, e.g., short IRTs or long IRTs). What the present experiments do not address, and what perhaps warrants further analysis, is the mechanism by which higher response rates in the reinforcement condition are related to resurgence rates. On the one hand, it may be the case that higher response rates in the reinforcement condition are some indication that previously reinforced behavior is more resistant to change and, in this sense, stronger (see Lattal, 1989). On the other hand, the different absolute rates of resurgence could represent different types of operants (i.e., “peck slow” and “peck fast”) that recovered similarly due to their similar strengths.

The present results also bear on interpretations of resurgence as deferred extinction or variability induced by extinction (cf. Cleland et al., 2001). Eliminating responding during the elimination phase by using DRO schedules ensures the response is indeed eliminated and not simply transferred to the second operandum in the elimination component as was possible in several earlier studies of resurgence (e.g., Leitenberg et al., 1970; but cf. Lieving & Lattal, 2003). In such cases, Leitenberg and his colleagues have argued, resurgence may be simply a continuation of the nonextinguished class of responses from the reinforcement condition. But in the present Experiments 2 and 3, each pigeon continued to peck during the first session (and often during the second and third sessions) of exposure to the DRO schedule of the elimination condition (which consequently earned the pigeons fewer reinforcers). The fact that pecking occurred early on during DRO exposure and then decreased indicates that the pigeons contacted the extinction contingency in effect for responding on the keys. The two different contingencies implemented in the reinforcement condition of each experiment reported here preclude an interpretation of resurgence as extinction-induced variability. Were resurgence simply a variant of the latter, nondifferential responding would be expected in the resurgence condition, which was not the case in Experiments 1 and 2. The orderly properties of resurgence found here suggest that such responding is not simply variability, because otherwise there would be no systematic relation between contingencies in the reinforcement conditions and subsequent resurgence as there was in Experiments 1 and 2.

When considering the implications of the present findings, the conceptual and operational definitions of resurgence recede in importance. Understanding, in its own right, the extent to which reinforcement histories and the patterns of responding they generate influence subsequent response recurrence is important, regardless of the label attached to such a phenomenon. The present results have implications both for experimental control in the laboratory (e.g., Aguilera, 2000) and the maintenance of clinical interventions in applied settings (e.g., Lerman & Iwata, 1996). In basic research settings, responding that was reinforced in previous conditions, or possibly previous experiments, may resurge when recent contingencies have been changed such that they interact with the direct effects of current contingencies. The response resurgence may even go unnoticed in such cases because it may involve responses that typically are not recorded, such as hopper checking, or topographies of responses, such as IRTs. In applied settings, responding that was reinforced prior to the introduction of interventions may resurge when the contingencies arranged by the intervention are altered due to time constraints, caregivers' limited skills, or to changes in assignments of personnel to carry out an intervention. Problem behavior, such as self-injury or aggression, may resurge in a classroom, for example, if the teacher does not reinforce a mand that a child had learned during functional communication training (see Lieving, Hagopian, Long, & O'Connor, 2004).

Conversely, there are other situations where resurgence may facilitate performance rather than interfere with desired outcomes. In the laboratory, for example, resurgence of a previously reinforced response may help generate required baseline response rates or topographies more quickly than if the organism has no history in which such desired responding has been reinforced. Likewise, resurgence in applied settings may lead to desired skills in problem solving or creativity, as suggested by Epstein (1991). Thus, data generated by the experimental analysis of behavioral history in general, and resurgence in particular, might enhance the control of resurgence, either facilitating or minimizing its occurrence in situations like those described above.

Acknowledgments

Portions of these data were presented at the annual meetings of the Southeastern Association for Behavior Analysis, Chattanooga, TN, October 2000, and the Association for Behavior Analysis, New Orleans, May 2001. We thank Gregory Lieving and Adam Doughty for helpful comments on the project and Jeremy Styles, Darin Sellers, Christopher Eluk, Adam Doughty, and Jessica Milo for assistance in collecting data.

APPENDIX

Values of the Programmed Reinforcer Ratio, DRH Schedule, and DRL Schedule in Each of the Last 10 Sessions of the First Condition for Each Pigeon in Experiment 3

| Pigeon 200: Reinf Ratio | Pigeon 200: DRH Value (# resp) | Pigeon 200: DRL Value (s) | Pigeon 166: Reinf Ratio | Pigeon 166: DRH Value (# resp) | Pigeon 166: DRL Value (s) | Pigeon 035: Reinf Ratio | Pigeon 035: DRH Value (# resp) | Pigeon 035: DRL Value (s) |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| 2∶4 | 7 | 2.8 | 4∶8 | 8 | 4.0 | 2∶4 | 14 | 8.5 |
| 2∶4 | 6 | 2.0 | 4∶8 | 9 | 5.0 | 2∶4 | 14 | 9.0 |
| 2∶4 | 8 | 1.5 | 4∶8 | 11 | 5.3 | 2∶4 | 14 | 9.0 |
| 2∶4 | 5 | 1.5 | 4∶8 | 11 | 5.3 | 2∶4 | 14 | 9.0 |
| 2∶4 | 4 | 1.0 | 4∶8 | 11 | 5.3 | 2∶4 | 14 | 8.3 |
| 2∶4 | 4 | 0.5 | 4∶8 | 11 | 5.3 | 2∶4 | 13 | 8.6 |
| 2∶4 | 4 | 1.0 | 4∶8 | 10 | 5.5 | 2∶4 | 13 | 8.7 |
| 2∶4 | 4 | 1.0 | 4∶8 | 10 | 5.0 | 2∶4 | 14 | 9.0 |
| 2∶4 | 3 | 1.0 | 4∶8 | 10 | 5.0 | 2∶4 | 14 | 9.2 |
| 2∶4 | 4 | 1.5 | 4∶8 | 10 | 5.0 | 2∶4 | 14 | 9.3 |
| 7∶2 | 1 | 1.0 | 4∶2 | 16 | 7.6 | 3∶2 | 12 | 5.2 |
| 7∶2 | 1 | 1.0 | 4∶2 | 16 | 7.6 | 3∶2 | 13 | 5.0 |
| 7∶2 | 1 | 1.0 | 4∶2 | 16 | 7.6 | 3∶2 | 14 | 5.0 |
| 7∶2 | 1 | 1.0 | 4∶2 | 16 | 7.6 | 3∶2 | 14 | 5.2 |
| 7∶2 | 1 | 1.0 | 4∶2 | 16 | 7.8 | 3∶2 | 14 | 5.4 |
| 7∶2 | 1 | 1.0 | 4∶2 | 16 | 7.8 | 3∶2 | 14 | 5.6 |
| 7∶2 | 1 | 1.0 | 4∶2 | 16 | 7.8 | 3∶2 | 14 | 5.4 |
| 7∶2 | 1 | 1.0 | 4∶2 | 16 | 7.8 | 3∶2 | 14 | 5.4 |
| 7∶2 | 1 | 1.0 | 4∶2 | 16 | 7.8 | 3∶2 | 14 | 5.4 |
| 7∶2 | 1 | 1.0 | 4∶2 | 16 | 7.8 | 3∶2 | 14 | 5.4 |

References

- Aguilera C. Resurgence of inaccurately instructed behavior (Doctoral dissertation, West Virginia University, 2000) Dissertation Abstracts International. 2000;64:2900. [Google Scholar]

- Barrett J.E. Behavioral history as a determinant of the effects of d-amphetamine on punished behavior. Science. 1977;198:67–69. doi: 10.1126/science.408925. [DOI] [PubMed] [Google Scholar]

- Barrett J.E, Stanley J.A. Prior behavioral experience can reverse the effects of morphine. Psychopharmacology. 1983;81:107–110. doi: 10.1007/BF00429001. [DOI] [PubMed] [Google Scholar]

- Brown P.L, Jenkins H.M. Autoshaping of the pigeon's key-peck. Journal of the Experimental Analysis of Behavior. 1968;11:1–8. doi: 10.1901/jeab.1968.11-1. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Carey J.P. Reinstatement of previously learned responses under conditions of extinction: A study of “regression” [Abstract] American Psychologist. 1951;6:284. [Google Scholar]

- Cleland B.S, Guerin B, Foster T.M, Temple W. Resurgence. The Behavior Analyst. 2001;24:255–260. doi: 10.1007/BF03392035. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Dixon M.R, Hayes L.J. Effects of differing instructional histories on the resurgence of rule-following. Psychological Record. 1998;48:275–292. [Google Scholar]

- Doughty A.H, Cirino S, Mayfield K.H, da Silva S.P, Okouchi H, Lattal K.A. Effects of behavioral history on resistance to change. The Psychological Record. 2005;55:315–330. [Google Scholar]

- Doughty A.H, da Silva S.P, Lattal K.A. Differential resurgence and response elimination. Behavioural Processes. 2007;75:115–128. doi: 10.1016/j.beproc.2007.02.025. [DOI] [PubMed] [Google Scholar]

- Epstein R. Resurgence of previously reinforced behavior during extinction. Behavior Analysis Letters. 1983;3:391–397. [Google Scholar]

- Epstein R. Extinction-induced resurgence: Preliminary investigations and possible limitations. Psychological Record. 1985;35:143–153. [Google Scholar]

- Epstein R. Skinner, creativity, and the problem of spontaneous behavior. Psychological Science. 1991;2:362–370. [Google Scholar]

- Epstein R, Skinner B.F. Resurgence of responding during the cessation of response-independent reinforcement. Proceedings of the National Academy of Sciences USA. 1980;77:6251–6253. doi: 10.1073/pnas.77.10.6251. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Fleshler M, Hoffman H.S. A progression for generating variable-interval schedules. Journal of the Experimental Analysis of Behavior. 1962;5:529–530. doi: 10.1901/jeab.1962.5-529. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Franks G.J, Lattal K.A. Antecedent reinforcement schedule training and operant response reinstatement in rats. Animal Learning and Behavior. 1976;4:374–378. [Google Scholar]

- Freeman T.J, Lattal K.A. Stimulus control of behavioral history. Journal of the Experimental Analysis of Behavior. 1992;57:5–15. doi: 10.1901/jeab.1992.57-5. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hemmes N.S, Brown B.L, Jakubow J.J, de Vaca S.C. Determinants of response recovery in extinction following response elimination. Learning & Motivation. 1997;28:542–557. [Google Scholar]

- Lattal K.A. Contingencies on response rate and resistance to change. Learning and Motivation. 1989;20:191–203. [Google Scholar]

- Leitenberg H, Rawson R.A, Bath K. Reinforcement of competing behavior during extinction. Science. 1970;169:301–303. doi: 10.1126/science.169.3942.301. [DOI] [PubMed] [Google Scholar]

- Lerman D.C, Iwata B.A. Developing a technology for the use of operant extinction in clinical settings: An examination of basic and applied research. Journal of Applied Behavior Analysis. 1996;29:345–382. doi: 10.1901/jaba.1996.29-345. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Lieving G.A, Hagopian L.P, Long E.S, O'Connor J. Response-class hierarchies and resurgence of severe problem behavior. The Psychological Record. 2004;54:621–634. [Google Scholar]

- Lieving G.A, Lattal K.A. Recency, repeatability, and reinforcer retrenchment: An experimental analysis of resurgence. Journal of the Experimental Analysis of Behavior. 2003;80:217–233. doi: 10.1901/jeab.2003.80-217.

- Lindblom L.L, Jenkins H.M. Responses eliminated by noncontingent or negatively contingent reinforcement recover in extinction. Journal of Experimental Psychology: Animal Behavior Processes. 1981;7:175–190.

- MED Associates, Inc, Tatham T.A. MED-PC Medstate notation. East Fairfield, VT: MED Associates, Inc; 1991.

- Nevin J.A, Grace R.C. Does the context of reinforcement affect resistance to change? Journal of Experimental Psychology: Animal Behavior Processes. 1999;25:256–268. doi: 10.1037//0097-7403.25.2.256.

- Nevin J.A, Smith L.D, Roberts J. Does contingent reinforcement strengthen operant behavior? Journal of the Experimental Analysis of Behavior. 1987;48:17–33. doi: 10.1901/jeab.1987.48-17.

- Okouchi H, Lattal K.A. An analysis of reinforcement history effects. Journal of the Experimental Analysis of Behavior. 2006;86:31–42. doi: 10.1901/jeab.2006.75-05.

- Pacitti W.A, Smith N.F. A direct comparison of four methods for eliminating a response. Learning and Motivation. 1977;8:229–237.

- Reed P, Morgan T.A. Resurgence of behavior during extinction depends on previous rate of response. Learning & Behavior. 2007;35(2):106–114. doi: 10.3758/bf03193045.

- Shahan T.A, Lattal K.A. On the functions of the changeover delay. Journal of the Experimental Analysis of Behavior. 1998;69:141–160. doi: 10.1901/jeab.1998.69-141.

- Stubbs D.A, Pliskoff S.S. Concurrent responding with fixed relative rate of reinforcement. Journal of the Experimental Analysis of Behavior. 1969;12:887–895. doi: 10.1901/jeab.1969.12-887.