Abstract

A fast and efficient method for quantifying photoreceptor density in images obtained with an en-face flood-illuminated adaptive optics (AO) imaging system is described. To improve accuracy of cone counting, en-face images are analyzed over extended areas. This is achieved with two separate semiautomated algorithms: (1) a montaging algorithm that joins retinal images with overlapping common features without edge effects and (2) a cone density measurement algorithm that counts the individual cones in the montaged image. The accuracy of the cone density measurement algorithm is high, with >97% agreement for a simulated retinal image (of known density, with low contrast) and for AO images from normal eyes when compared with previously reported histological data. Our algorithms do not require spatial regularity in cone packing and are, therefore, useful for counting cones in diseased retinas, as demonstrated for eyes with Stargardt’s macular dystrophy and retinitis pigmentosa.

1. INTRODUCTION

The past ten years have seen the increasing application of adaptive optics (AO) to overcome the resolution limits imposed by the ocular aberrations in the human eye. First applied to a conventional fundus camera by Liang et al.,1 AO has been subsequently applied to confocal laser scanning ophthalmoscopes2–4 and most recently to optical coherence tomography.5–7

Such systems have enabled the classification of individual cone photoreceptors,8,9 measurement of the directionality of individual cones,10 study of the temporal11,12 and wavelength properties13 of cone reflectance, and characterization of the cone mosaics in forms of sex-linked dichromacy.14 Recently, Choi et al.15 and Wolfing et al.16 have shown cone loss in retinal dystrophies and correlations with retinal function. Martin and Roorda17 demonstrated the use of an AO–scanning laser ophthalmoscope (AO-SLO) for in vivo measurement of single leukocyte cell velocities.

The ability to image single cells in vivo has significant advantages over histological studies, which are limited by artifacts introduced during tissue preparation and allow the pathogenesis of a retinal disease to be studied only at a single point in time rather than at different stages. However, despite the major achievements in hardware and system integration, there has been relatively little development of software to postprocess AO retinal images and automate their analysis.

This paper attempts to address two of the image processing challenges associated with high-resolution retinal imaging. First, the field of view can be as small as a few degrees or less owing to the limited isoplanatic patch size of the eye18,19; hence, many images must be montaged together to obtain a better overall perspective. Second, cone densities20 can be as high as 324,000 cones/mm², so there is a need for automated photoreceptor counting for both research and clinical applications.

Several authors have generated composite retinal images14,16,17,21 and reported cone density measurements14–16,22 from AO images using flood fundus cameras and AO-SLOs, respectively. Many of these results rely on manual counting and on commercial graphics packages such as Photoshop (Adobe Systems, Mountain View, California) and ImageJ (National Institutes of Health, Bethesda, Maryland) for image montaging14,16 and cone density measurements,14,16,22 respectively. Achieving acceptable statistical accuracy in this way is both labor intensive and time consuming, and it is further complicated by low image contrast, noise, and variation in results among counting personnel.

Several automated counting methods23,24 (e.g., Hough-transform-based edge detection) have been applied to cell counting in general23 but not specifically to the retina. Other more sophisticated algorithms based on Euclidean distance map erosion followed by watershed segmentation25,26 have also been tried, but these tend to be computationally intensive and would give rise to multiple counts when presented with low-contrast cones.

This paper describes a computer-based system, written in MATLAB 7.0 (The Mathworks, Inc., Natick, Massachusetts), that allows accurate and semiautomatic montaging and counting of cones in AO retinal images. It utilizes histogram-based cone counting that is implemented after retinal image preprocessing and montaging. These two steps are required to enhance image quality and to make the counting more statistically accurate (a larger image area may be sampled in the montage). Experimental results on synthetic, normal, and diseased retinas demonstrate the efficiency of this computer-based system.

2. METHODS

To develop the algorithms and test their applicability, a synthetic cone mosaic was first generated. Once the algorithms were optimized, the routines were applied to AO images acquired with the University of California Davis AO flood-illumination fundus camera. For a detailed description of this system refer to Choi et al.15

AO images were obtained on three normal subjects (N1, N2, N3), one subject with Stargardt’s macular dystrophy (ST1), and one subject with retinitis pigmentosa (RP1). All the subjects were examined by a retinal specialist, and their details are summarized in Table 1. All subjects were dilated with a drop of 1% tropicamide and 2.5% phenylephrine, and the head was stabilized via the use of a dental-impression bite bar. During imaging, subjects were instructed to fixate on a target consisting of concentric circles, the radii of which corresponded to different retinal eccentricities. Images were then acquired at various retinal locations using an arc lamp with interference filters centered at 550±40 and 650±40 nm with an exposure time of 10 ms. The size of each retinal image was 1° in diameter owing to the current design of the AO system.15 The imaging camera (VersArray XP, Princeton Scientific Instruments, Monmouth Junction, New Jersey) was translated until the clearest focus was achieved at the photoreceptor layer. Trial lenses were used to correct the low-order aberrations, if required. Figure 1 shows AO images acquired with a 550 nm imaging wavelength over a 6.5 mm exit pupil from one of the normal subjects at three retinal locations: 2°, 4° temporal retina, and 7° nasal retina.

Table 1.

Summary of Subject Eye Conditions

| Subject | Age | Gender | Eye Tested | Refractive Error | Visual Acuity | Wavefront RMS Error after AO Correction (μm) |
|---|---|---|---|---|---|---|
| N1 | 22 | M | Left | −2.50 DS | 20/20 | 0.06 |
| N2 | 32 | M | Right | −2.75 DS | 20/15 | 0.06 |
| N3 | 20 | M | Left | +0.50 DS | 20/15 | 0.05 |
| ST1 | 29 | F | Left | −9.00/+2.25×58 | 20/150+ | 0.06 |
| RP1 | 12 | M | Right | −8.00/+3.00×95 | 20/50 | 0.06 |

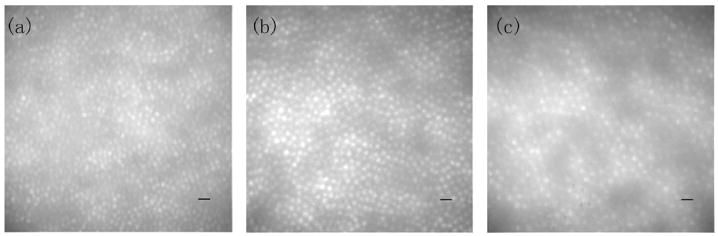

Fig. 1.

AO retinal images using a 550 nm imaging wavelength at three locations from one normal subject, N1: (a) 2° temporal retina, (b) 4° temporal retina, (c) 7° nasal retina. Scale bar corresponds to 10 μm.

A. Synthetic Cone Mosaic Generation

To demonstrate the accuracy of our cone density algorithm, a 512×512 pixel image representative of the cone mosaic at a 5° eccentricity was created. Each cone was sampled by approximately 15 pixels. The peak intensity (reflectance) of each cone was adjusted to a normally distributed random number between 0.5 and 1, the variance of which was measured from our normal retinal images with AO correction (and in agreement with a previous report11) as shown in Fig. 2(a). The image contrast was first decreased to 0.45 based on the scattering effect from the retina reported by Choi et al.13 After convolving every cone with a Gaussian function (FWHM of 4 μm indicative of cone photoreceptors from a deconvolved13 image at 5° retinal eccentricity) to simulate their waveguide nature, the image contrast was further reduced to 0.37 as shown in Fig. 2(b). The point-spread function (PSF) before AO correction [Strehl ratio of 0.018, root mean square (RMS) of 2.3 μm] was reconstructed by using Zernike coefficients measured from a typical subject (7 mm exit pupil, 650 nm light) as shown in Fig. 2(c). After convolving the image with the PSF and adding simulated photon, read, and dark noise27 typical of the imaging camera (VersArray 1024, Princeton Scientific Instruments, Monmouth Junction, New Jersey), the contrast of the final retinal image was further reduced to 0.18 as shown in Fig. 2(d). Our retinal images typically have higher contrast (i.e., greater than 0.28) owing to the AO correction.

Fig. 2.

(Color online) Cone density measurement on a simulated cone mosaic image. (a) Artificial cone mosaic image (peak intensity of each cone, 0.5–1). (b) Image (a) after adding background and Gaussian filtering (contrast=0.37). (c) PSF of a typical subject (Strehl ratio=0.018; RMS=2.3 μm; 650 nm wavelength; 7 mm pupil) before AO correction. (d) Image (b) after PSF filtering and addition of simulated noise (contrast=0.18). (e) Calculated cone mosaic by the connected component labeling algorithm. (f) Cone density measurement for image (d).

The accurate cone number, which is 706 in Fig. 2(a), was obtained by applying the two-dimensional connected components labeling algorithm. This technique scans the image pixel by pixel and allows those adjacent (connected) pixels sharing the same or similar intensities to be grouped together.28 Each pixel in each group was labeled with the same color, whereas different groups were assigned different colors for easier illustration as shown in Fig. 2(e). The cone counting result shown in Fig. 2(f) is presented in Subsection 3.B.1.
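The labeling step described above can be illustrated with a minimal sketch. It is not the authors' MATLAB routine: it assumes the image has already been reduced to a binary mask and uses 8-connectivity, and the names `label_components` and `toy` are hypothetical.

```python
from collections import deque


def label_components(mask):
    """Label 8-connected groups of truthy pixels.

    Returns (labels, count), where labels holds 0 for background and
    1..count for each connected group.
    """
    rows, cols = len(mask), len(mask[0])
    labels = [[0] * cols for _ in range(rows)]
    count = 0
    for r in range(rows):
        for c in range(cols):
            if not mask[r][c] or labels[r][c]:
                continue
            # Start a new group and flood outward from this seed pixel.
            count += 1
            labels[r][c] = count
            queue = deque([(r, c)])
            while queue:
                y, x = queue.popleft()
                for dy in (-1, 0, 1):
                    for dx in (-1, 0, 1):
                        ny, nx = y + dy, x + dx
                        if (0 <= ny < rows and 0 <= nx < cols
                                and mask[ny][nx] and not labels[ny][nx]):
                            labels[ny][nx] = count
                            queue.append((ny, nx))
    return labels, count


# Toy mask with three separate blobs standing in for three cones.
toy = [
    [1, 1, 0, 0, 0],
    [1, 1, 0, 0, 1],
    [0, 0, 0, 0, 1],
    [0, 1, 0, 0, 0],
]
labels, n_cones = label_components(toy)
print(n_cones)
```

In the noise-free mosaic of Fig. 2(a) the cones do not touch, so the number of labeled groups gives the reference cone count.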

B. Adaptive Optics Retinal Image Preprocessing

Cone images from the five subjects were compressed with Symlet wavelets,29 a process that increases the algorithm speed by a factor of more than 4 and has also proved highly efficient for feature extraction29 in that it retains 99.96% of the information in the original retinal image. Seven to ten 512×512 pixel images from the same retinal position were semiautomatically selected based on image contrast and on retinal overlap determined by cross correlation.30 An image contrast threshold of 0.28 was used in most cases to ensure that at least 60% of all the images were selected; for poor quality images, a lower contrast threshold of 0.22 was used. After software selection, the images were manually inspected to confirm that they had been selected correctly, because eye movements during imaging may distort an image without necessarily impairing the contrast used by the selection algorithm.

Cross correlation was calculated for all the selected images, with the position of the correlation peak providing the motion vector between the two images considered. The motion magnitude threshold of 80 pixels was set to guarantee that all the images for registration are from the same retinal location and that the overlapping area between images is at least 352×352 pixels, which is roughly 50% of the original image area (512×512 pixels). The threshold of 4×10⁵ for the magnitude of the un-normalized cross correlation was calculated from the worst-case displacement of 80 pixels to ensure that all the good images are included. By setting these empirical thresholds for the magnitude of the cross correlation and the eye motion, all the well-correlated images from the same retinal location were selected and averaged to optimize the contrast and improve the signal-to-noise ratio of the image.

In most cases, correction for eye rotation was unnecessary. However, when the cones appeared streaky and rotated, especially at the edge of an image, a program based on cross correlation calculated the rotation angle of a single image with respect to the reference image, and nearest-neighbor interpolation was then performed. The preprocessing time for two hundred 512×512 images was approximately 10 min on a personal computer with a 1.4 GHz Pentium 4 CPU and 768 Mbyte of RAM, excluding the user operating time of about 15 min. The user operating time consisted of reviewing the images selected by the program, deleting those images blurred by the subject’s eye movement, and operating the software.
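The frame-selection test based on the correlation peak can be sketched as follows. This is a brute-force illustration under stated assumptions rather than the authors' implementation: the function names `motion_vector` and `accept` are hypothetical, the search is exhaustive over a small shift range, and only the default thresholds (80 pixels and 4×10⁵) come from the text.

```python
import math


def motion_vector(ref, img, max_shift):
    """Return (dy, dx, peak): the shift of img relative to ref that maximizes
    the un-normalized cross correlation, found by exhaustive search."""
    rows, cols = len(ref), len(ref[0])
    best = (0, 0, float("-inf"))
    for dy in range(-max_shift, max_shift + 1):
        for dx in range(-max_shift, max_shift + 1):
            total = 0.0
            # Sum products only where the shifted frame overlaps the reference.
            for y in range(max(0, -dy), min(rows, rows - dy)):
                for x in range(max(0, -dx), min(cols, cols - dx)):
                    total += ref[y][x] * img[y + dy][x + dx]
            if total > best[2]:
                best = (dy, dx, total)
    return best


def accept(dy, dx, peak, max_motion=80, min_peak=4e5):
    """Keep a frame only if it moved little and correlates strongly."""
    return math.hypot(dy, dx) <= max_motion and peak >= min_peak


# Toy reference frame with three bright points, and a copy shifted
# down by 2 pixels and right by 1 pixel.
ref = [[0] * 8 for _ in range(8)]
ref[2][3], ref[5][1], ref[4][6] = 9, 7, 5
img = [[0] * 8 for _ in range(8)]
for y in range(6):
    for x in range(7):
        img[y + 2][x + 1] = ref[y][x]

dy, dx, peak = motion_vector(ref, img, max_shift=3)
print(dy, dx, peak)
```

For full 512×512 frames an FFT-based correlation would be far faster; the exhaustive loop is used here only to keep the logic visible.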

C. Retinal Image Montaging

A retinal image montage combines a number of averaged AO retinal images within a consistent coordinate system. In comparison with existing methods,31–33 the algorithm described here is automated, seamless (without edge artifacts), and covers a wide area. The variable weight montage method33 was combined with point-mapping registration,30 which registered every pair of averaged retinal images to guarantee perfect alignment. Point-mapping registration was used to locate common features in the reference image that also appear in the data image, and spatial mapping was determined based on these matching feature points. The method allows users to pick out feature points (e.g., the center of cones) manually to guarantee accurate matching between the reference and the data images. The variable-weight method33 was used instead of equal weight in order to reduce edge effects. When blending all the images to form a montage, a parabolic mask was used to put greater weight on pixels closer to the center of the overlapping area of the montaged image as described in Eq. (1):

| (1) |

where M(x,y) and Ii(x,y) are the pixel intensities of a point (x,y) in the montaged and single images, respectively; N is the number of single images; di is the distance from the point (x,y) to the nearest border of the image Ii if the point (x,y) is inside the single image Ii; otherwise, di is set to 0. The manual point-mapping method allows a small overlapping area (e.g., less than 100×100 pixels) between image frames, which helps to achieve a seamless montage. This blending removes many of the artifacts due to varying contrast and intensity. A larger area is obtained for cone density calculation, which provides more statistically accurate results.
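The blending idea can be sketched as a normalized weighted average over the frames that cover each pixel. The exact weighting function is an assumption here: the sketch uses the square of the distance to the nearest frame border (one reading of "parabolic mask"), adds 1 so that border pixels keep a nonzero weight, and takes the frame offsets as already known from registration. The names `border_distance`, `blend`, and `power` are hypothetical.

```python
def border_distance(y, x, top, left, height, width):
    """Distance in pixels from (y, x) to the nearest border of a frame placed
    at (top, left); returns 0 when the point lies outside the frame."""
    if not (top <= y < top + height and left <= x < left + width):
        return 0
    return min(y - top, top + height - 1 - y,
               x - left, left + width - 1 - x) + 1


def blend(frames, canvas_h, canvas_w, power=2):
    """Blend registered frames into one montage.

    frames is a list of (top, left, pixels). Each output pixel is the
    weighted mean of the frames covering it, with weight = distance ** power
    (assumed form). Pixels covered by no frame are left as None.
    """
    montage = [[None] * canvas_w for _ in range(canvas_h)]
    for y in range(canvas_h):
        for x in range(canvas_w):
            num = den = 0.0
            for top, left, pix in frames:
                d = border_distance(y, x, top, left, len(pix), len(pix[0]))
                if d:
                    w = d ** power
                    num += w * pix[y - top][x - left]
                    den += w
            if den:
                montage[y][x] = num / den
    return montage


# Two flat 4x4 frames of different brightness overlapping by two columns.
frame_a = [[10.0] * 4 for _ in range(4)]
frame_b = [[20.0] * 4 for _ in range(4)]
montage = blend([(0, 0, frame_a), (0, 2, frame_b)], canvas_h=4, canvas_w=6)
print(montage[1])
```

Across the overlap the output moves gradually from the brightness of one frame to that of the other, which is how this kind of weighting suppresses a visible seam.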

D. Cone Density Measurement Algorithm

Figure 3(a) shows the initial, averaged image Iorig from normal subject, N1 (550 nm imaging wavelength). It was first convolved with a Gaussian filter and then subtracted from the initial profile to yield the new image, I. The process is described by Eq. (2),

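$$I(x,y) = I_{\mathrm{orig}}(x,y) - I_{\mathrm{orig}}(x,y) \otimes \exp\!\left[-\frac{x^{2}+y^{2}}{2\sigma^{2}}\right] \qquad (2)$$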

where the exponential describes a Gaussian filter of width σ (i.e., the standard deviation) and (x,y) denote the coordinates of a pixel.31 For this work, the σ value was empirically chosen to equal one plus the retinal eccentricity, e.g., 3 for 2°, 5 for 4°, etc. Figures 3(b) and 3(c) show Gaussian-filtered and background-subtracted images, respectively. To aid visualization of the filtered images, the values of I were linearly scaled to increase brightness.
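The background-subtraction step can be sketched in a few lines. This is an illustration under assumptions, not the authors' code: the Gaussian kernel is normalized to unit sum, truncated at three standard deviations, applied separably with edge replication, and σ is taken in pixels. Only the rule σ = 1 + eccentricity comes from the text; the function names are hypothetical.

```python
import math


def gaussian_kernel(sigma):
    """1-D Gaussian weights truncated at 3 sigma and normalized to sum to 1."""
    radius = int(math.ceil(3 * sigma))
    k = [math.exp(-(i * i) / (2.0 * sigma * sigma))
         for i in range(-radius, radius + 1)]
    total = sum(k)
    return [v / total for v in k]


def clamp(v, size):
    """Replicate edge pixels by clamping an index into [0, size - 1]."""
    return min(max(v, 0), size - 1)


def blur(image, sigma):
    """Separable Gaussian blur: filter along rows, then along columns."""
    k = gaussian_kernel(sigma)
    r = len(k) // 2
    rows, cols = len(image), len(image[0])
    tmp = [[sum(k[j + r] * image[y][clamp(x + j, cols)]
                for j in range(-r, r + 1))
            for x in range(cols)] for y in range(rows)]
    return [[sum(k[j + r] * tmp[clamp(y + j, rows)][x]
                 for j in range(-r, r + 1))
             for x in range(cols)] for y in range(rows)]


def subtract_background(image, eccentricity_deg):
    """Original image minus its Gaussian-smoothed copy, sigma = 1 + eccentricity."""
    sigma = 1 + eccentricity_deg
    background = blur(image, sigma)
    return [[image[y][x] - background[y][x] for x in range(len(image[0]))]
            for y in range(len(image))]


# A flat background of 50 with one bright pixel standing in for a cone.
flat = [[50.0] * 15 for _ in range(15)]
spot = [row[:] for row in flat]
spot[7][7] = 150.0
result = subtract_background(spot, eccentricity_deg=2)
print(round(result[7][7], 1), round(result[0][0], 3))
```

The slowly varying background is removed while the narrow peak survives almost unchanged, which is what makes the subsequent intensity thresholding practical.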

Fig. 3.

(Color online) Flowchart of semiautomated cone density measurement procedure. (a) Cropped AO retinal image from subject N1 at 4° temporal retina (imaging wavelength=550 nm). (b) Image (a) after Gaussian filtering. (c) Image after background subtraction and linear scaling. (d) Cone counting result in the first intensity section 246–255. (e) Cone counting result in the second intensity section 236–245. (f) Cone counting result in the last intensity section 16–25. (g) Outcome of cone counting (all the cones are accurately identified with cone density of 25,164 cells/mm²).

Cones have a higher intensity than other parts of the retinal image, and the centers of the waveguide are normally brightest (peak intensity of cones), so one can identify them based on this characteristic. By determining the peak intensities of the dimmest and brightest cones, 16 and 255, respectively [from Fig. 3(c)], the intensity range of 16–255 in the image histogram was set. The intensity range was then divided into smaller sections in steps of ten to search for the pixels that are located in each section. The searching started from the highest intensity section to the lowest intensity section until all the sections were processed. Figure 3(d) shows the cone counting results from the highest intensity section corresponding to the range 246–255. Once a pixel is counted, all the pixels within the square defined by the smallest center-to-center cone spacing are color coded (with an arbitrary intensity) so that they will not be counted in subsequent processing. This step ensures that cones are counted only once. The cone counting results from the second highest intensity section (236–245) and the last intensity section (16–25) are shown in Figs. 3(e) and 3(f), respectively. Figure 3(g) shows the outcome of cone counting with all the cones highlighted. It is then a straightforward step to calculate the cone density. The program takes approximately 90 s to calculate the cone density for a 293×536 cropped montaged retinal image.

The users input a value for the intensity of the dimmest cone as a threshold parameter, so it is conceivable that counting results may vary between operators. However, since the image quality is greatly enhanced after background subtraction, users can easily determine whether the cones are underpicked or overpicked by direct comparison with the original retinal images. The threshold can therefore be adjusted accordingly until all the cones are picked. Hence, the final cone densities from different operators did not vary significantly.
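The section-by-section counting procedure can be sketched as below. It is a simplified illustration rather than the authors' MATLAB program: pixels within a section are visited in raster order, the exclusion square is centered on the counted pixel, and the parameters `spacing` (smallest center-to-center cone spacing, in pixels) and `um_per_pixel` (image scale) are hypothetical inputs that the text does not specify.

```python
def count_cones(image, dimmest, brightest=255, step=10, spacing=5):
    """Count cones by scanning intensity sections from brightest to dimmest.

    A pixel is counted if it falls in the current section and has not been
    blocked by an earlier count; counting it blocks a spacing x spacing
    square around it so that each cone is counted only once.
    """
    rows, cols = len(image), len(image[0])
    taken = [[False] * cols for _ in range(rows)]
    half = spacing // 2
    centers = []
    hi = brightest
    while hi >= dimmest:
        lo = max(hi - step + 1, dimmest)
        for y in range(rows):
            for x in range(cols):
                if taken[y][x] or not (lo <= image[y][x] <= hi):
                    continue
                centers.append((y, x))
                for yy in range(max(0, y - half), min(rows, y + half + 1)):
                    for xx in range(max(0, x - half), min(cols, x + half + 1)):
                        taken[yy][xx] = True
        hi -= step
    return centers


def density_per_mm2(n_cones, rows, cols, um_per_pixel):
    """Cone density in cells/mm^2 for a rows x cols window."""
    area_mm2 = rows * cols * (um_per_pixel / 1000.0) ** 2
    return n_cones / area_mm2


# Toy image: three cones of very different brightness plus one pixel
# below the dimmest-cone threshold of 16.
toy = [[0] * 9 for _ in range(9)]
toy[1][1], toy[1][2] = 250, 240   # bright cone and its shoulder
toy[1][7] = 120                   # mid-intensity cone
toy[7][4] = 20                    # dim cone in the last section (16-25)
toy[7][0] = 10                    # below threshold, ignored

centers = count_cones(toy, dimmest=16, spacing=3)
density = density_per_mm2(len(centers), 9, 9, um_per_pixel=1.0)
print(centers)
```

With `dimmest=16`, `brightest=255`, and `step=10`, the loop visits the same sections as in Fig. 3 (246–255, 236–245, down to 16–25). Raising or lowering `dimmest` corresponds to the operator adjustment described above.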

3. RESULTS

A. Retinal Image Montage

Even with good fixation, involuntary eye movements result in inevitable shifts in single-frame images, from which the overall montage would be created. Figure 4 shows a montage of retinal images from subject ST1 (taken at 650 nm imaging wavelength) and a corresponding cone density map. The montage is composed of four cropped 300×300 pixel images taken at 4° inferior retina. The longest side of the montage corresponds to approximately 450 μm on the retina. Although there was varying contrast among the constituent images, the montage image has a smooth and seamless appearance. The montage in Fig. 4 used the 1° field of view frames from the AO system; however, the algorithm can be applied in the same format to larger single frames.

Fig. 4.

(Color online) Retinal image montage and corresponding cone density measurement for the subject ST1. (a) Montage of four retinal images taken at 650 nm. (b) Cone density map of montaged image in (a) (cone density=5247 cells/mm²). Scale bar corresponds to 10 μm on the retina.

B. Cone Counting

1. Synthetic Image Results

The cone counting algorithm was applied to the synthetic image shown in Fig. 2(d), and the result is shown in Fig. 2(f). The algorithm identified 690 of the 706 cones (97.7%); the cones that were missed were those partially cropped at the edge of the image. Because noise was added to the image, the cones at the edge were blurred to the extent that they could not be segregated from the background. For comparison with the automated method, two observers performed manual counting on the same raw simulated image shown in Fig. 2(d). Their counts were 662 and 671, which correspond to 93.8% and 95.0% of the total cone number, respectively. Cones with very low contrast were missed by manual counting but were identified by the automated method.

2. Adaptive Optics Image Results

Cone densities were calculated for all five subjects then compared with the extrapolated values from Curcio’s histology data.20 The size of the cropped area (sampling window) in our image was 8–46 times larger than Curcio’s window size, depending on the retinal eccentricity (46 times larger for the areas within 1 mm from the foveal center and 8 times larger for more peripheral areas). To examine the cone density variance between Curcio’s and our sampling windows, the sampling window was divided into several subsections: Each subsection was the same size as Curcio’s sampling window for that particular retinal location. The difference in cone density between subsections and our whole sampling window for the normal images was less than 7%, which lies within one standard error (4%–8%; see Ref. 20) of Curcio’s mean density.
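The subsection comparison can be sketched as follows, assuming cone centers are available as pixel coordinates and that the window dimensions are exact multiples of the subsection size. The function names and the toy coordinates are hypothetical, and densities are expressed per pixel because only ratios matter here.

```python
def tile_densities(centers, height, width, tile):
    """Density (cones per pixel) in each tile x tile subsection.

    Assumes height and width are exact multiples of tile.
    """
    counts = {}
    for y, x in centers:
        key = (y // tile, x // tile)
        counts[key] = counts.get(key, 0) + 1
    return [counts.get((ty, tx), 0) / float(tile * tile)
            for ty in range(height // tile)
            for tx in range(width // tile)]


def max_relative_difference(centers, height, width, tile):
    """Largest fractional deviation of any subsection from the whole window."""
    whole = len(centers) / float(height * width)
    return max(abs(d - whole) / whole
               for d in tile_densities(centers, height, width, tile))


# Toy window of 8x8 pixels split into four 4x4 subsections.
centers = [(1, 1), (3, 3), (1, 5), (5, 1), (5, 5)]
worst = max_relative_difference(centers, height=8, width=8, tile=4)
print(worst)
```

In the toy case one subsection holds two cones and the others one each, so the deviation is large; for the normal retinal images the text reports a difference below 7%.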

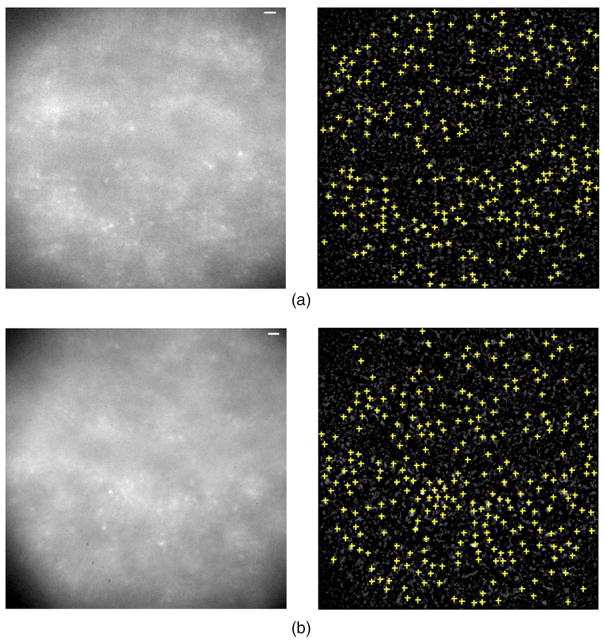

Normal image results

The retinal image and cone counting results from the first normal subject, N1, are shown in Fig. 3. Figure 5 shows the AO retinal images obtained at 650 nm from the two other normal subjects, N2 and N3, and their cone density maps. All the cones in the retinal images from the three normal subjects are identified. Table 2 summarizes the cone density measurements on three normal subjects using the algorithm as compared with Curcio’s histology data. Good agreement (over 98%) was found between the cone density measurements from our algorithm and Curcio’s histology data.

Fig. 5.

(Color online) Retinal images and cone density maps for two normal subjects, N2 and N3. (a) Cropped retinal image and cone density measurement for N2 at 2° temporal retina (cone density=42,389 cells/mm²). (b) Cropped retinal image and cone density measurement for N3 at 4° temporal 4° superior retina (cone density=20,151 cells/mm²). Images were taken with a 650 nm imaging wavelength. Scale bar corresponds to 10 μm on the retina.

Table 2.

Cone Density Measurements for the Three Normal Subjects, N1, N2, and N3: Comparison between Cone Counting Algorithm and Histology Data

| Subject | Retinal Location | Cone Density Measurement, D1 (cells/mm²) | Extrapolation from Curcio’s Data, D2 (cells/mm²) | Agreement, Aᵃ (%) |
|---|---|---|---|---|
| N1 | 4° TRᵇ | 25,164 | 25,000 | 99 |
| N2 | 2° TRᵇ | 42,389 | 43,000 | 99 |
| N3 | 4° T 4° SRᶜ | 20,151 | 20,000 | 99 |

ᵃ Agreement percentage A between D1, the cone density measured by our algorithm, and D2, the cone density from histology data.

ᵇ TR, temporal retina.

ᶜ TSR, temporal superior retina.
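The agreement values in Table 2 can be checked with a short calculation. The definition used below, A = (1 − |D1 − D2|/D2) × 100, is an assumption chosen because it reproduces the tabulated values; the function name `agreement` is hypothetical.

```python
def agreement(d1, d2):
    """Assumed definition: 100 * (1 - |D1 - D2| / D2)."""
    return 100.0 * (1.0 - abs(d1 - d2) / float(d2))


# (D1, D2) pairs taken from Table 2, in cells/mm^2.
table2 = {"N1": (25164, 25000), "N2": (42389, 43000), "N3": (20151, 20000)}
rounded = {s: int(round(agreement(d1, d2))) for s, (d1, d2) in table2.items()}
print(rounded)
```

Under this definition every subject is above 98% before rounding, consistent with the statement in the text.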

Diseased retinal image results

Figure 6 shows cone counting on AO retinal images obtained from RP1 at two different retinal locations, 4° temporal 4° inferior retina and 2° temporal 4° superior retina. Figure 4(b) shows cones identified with the algorithm on montaged, diseased retinal images from ST1 at 4° inferior retina. Table 3 summarizes the cone density measurements by the algorithm and a comparison with the extrapolated values from Curcio’s histology data.20 The percentage values provide an indication of relative cone density at each retinal location. These numbers provide a good quantitative index of cone loss in diseased retinas. Although there are numerous irregular features in these images, the algorithm has identified cones accurately.

Fig. 6.

(Color online) AO retinal images at two locations and the corresponding cone density calculations for subject RP1. (a) AO retinal image and cone density map at 2° temporal 4° superior retina (cone density=4779 cells/mm²). (b) AO retinal image and cone density map at 4° temporal 4° inferior retina (cone density=4012 cells/mm²). Images were taken at a 650 nm imaging wavelength. Scale bar corresponds to 10 μm on the retina.

Table 3.

Cone Density Measurements from the Adaptive Optics Retinal Images for Subjects ST1 and RP1

| Subject | Retinal Location | Cone Density Measurement (cells/mm²) | Extrapolation from Curcio’s Data (cells/mm²) | Relative Cone Density (%) |
|---|---|---|---|---|
| ST1 | 4° IRᵃ | 5247 | 25,000 | 21 |
| RP1 | 4° T 4° IRᵇ | 4779 | 17,500 | 27 |
| RP1 | 2° T 4° SRᶜ | 4012 | 26,500 | 15 |

ᵃ IR, inferior retina.

ᵇ TIR, temporal inferior retina.

ᶜ TSR, temporal superior retina.
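The relative cone densities in Table 3 follow from dividing each measured density by the corresponding extrapolated histological value. A minimal check, with the variable names chosen for illustration only:

```python
# (subject, location, measured, extrapolated) rows from Table 3, in cells/mm^2.
table3 = [
    ("ST1", "4 deg IR", 5247, 25000),
    ("RP1", "4 deg T 4 deg IR", 4779, 17500),
    ("RP1", "2 deg T 4 deg SR", 4012, 26500),
]

# Relative density as a percentage of the extrapolated normal value.
relative = [int(round(100.0 * measured / reference))
            for _, _, measured, reference in table3]
print(relative)
```

The result matches the last column of the table, so the percentages can be read directly as the fraction of the expected normal cone density that remains at each location.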

4. CONCLUSION AND DISCUSSION

A fast and reliable semiautomated technique is described for measuring the cone density of AO retinal images in both healthy and diseased human eyes, as well as combining single-image frames to create a larger seamless montage. Utilizing larger areas for counting may prove to be beneficial for obtaining more statistically accurate cone densities for an area of interest. Of course, for specific retinal loci (e.g., the foveal area with high cone density variance), smaller windows cropped from the montaged image, covering more uniform cone mosaics, may be desirable. One must be mindful that Curcio’s data are based on only eight human eyes, and the introduction of unknown artifacts is possible during histological preparation. Ideally, a subject’s cone density should be compared with a larger population of age-matched normal retinas acquired in an identical manner. Lacking such a database, Curcio’s histological data have been widely used and agree well with in vivo data obtained from the small number of normals in the literature.

When a retinal image with a dark edge or background (within the overlapping area) is included in a montage (the worst case for our algorithm), it can cause slight edge effects because of high variation in intensity. If there is an area where the view of cone photoreceptors is obscured, owing either to the presence of blood vessels or to other retinal changes such as a scar or druse, that area is automatically cropped out by setting a threshold in the image histogram to separate those objects from the whole image or is manually taken out by the user (when the intensities of these areas are similar to other areas) prior to the cone density measurement. In most cases, the size of these retinal structures has been much smaller than the size of the single AO frame, which is 1° in diameter. If the scar or the retinal structure is bigger than the size of the image, the image becomes completely out of focus owing to scattering from these structures. Blurred structures are unlikely to be mistaken for cones, as they take on a completely different appearance.

The results presented here provide a means of quantifying changes in in vivo cellular-level retinal images. Although the cone counting algorithms were tested on AO flood-illumination images, they are also applicable in the analysis of en-face images acquired by other in vivo retinal imaging modalities such as AO-OCT and AO-SLO.

Acknowledgments

We thank Susan Garcia and Adam White for assistance in imaging and Ravi Jonnal for valuable comments. This study was supported by the National Eye Institute (grant 014743).

Contributor Information

Bai Xue, Department of Ophthalmology and Vision Science, University of California Davis, Sacramento, California 95817, USA.

Stacey S. Choi, Department of Ophthalmology and Vision Science, University of California Davis, Sacramento, California 95817, USA.

Nathan Doble, Iris AO Inc., 2680 Bancroft Way, Berkeley, California 94704, USA.

John S. Werner, Department of Ophthalmology and Vision Science, University of California Davis, Sacramento, California 95817, USA.

References

- 1. Liang J, Williams DR, Miller DT. Supernormal vision and high-resolution retinal imaging through adaptive optics. J Opt Soc Am A. 1997;14:2884–2892. doi: 10.1364/josaa.14.002884.

- 2. Zhang Y, Poonja S, Roorda A. MEMS-based adaptive optics scanning laser ophthalmoscopy. Opt Lett. 2006;31:1268–1270. doi: 10.1364/ol.31.001268.

- 3. Roorda A, Romero-Borja F, Donnelly WJ, III, Queener H, Hebert TJ, Campbell MCW. Adaptive optics scanning laser ophthalmoscopy. Opt Express. 2002;10:405–412. doi: 10.1364/oe.10.000405.

- 4. Hammer DX, Ferguson RD, Bigelow CE, Iftimia NV, Ustun TE, Burns SA. Adaptive optics scanning laser ophthalmoscope for stabilized retinal imaging. Opt Express. 2006;14:3354–3367. doi: 10.1364/oe.14.003354.

- 5. Hermann B, Fernandez EJ, Unterhuber A, Sattmann H, Fercher AF, Drexler W, Prieto PM, Artal P. Adaptive-optics ultrahigh-resolution optical coherence tomography. Opt Lett. 2004;29:2142–2144. doi: 10.1364/ol.29.002142.

- 6. Zhang Y, Rha J, Jonnal RS, Miller DT. Adaptive optics spectral optical coherence tomography for imaging the living retina. Opt Express. 2005;13:4792–4811. doi: 10.1364/opex.13.004792.

- 7. Zawadzki RJ, Jones S, Olivier S, Zhao M, Bower B, Izatt J, Choi SS, Laut S, Werner JS. Adaptive-optics optical coherence tomography for high-resolution and high-speed 3D retinal in vivo imaging. Opt Express. 2005;13:8532–8546. doi: 10.1364/opex.13.008532.

- 8. Roorda A, Williams DR. The arrangement of the three cone classes in the living human eye. Nature. 1999;397:520–522. doi: 10.1038/17383.

- 9. Hofer H, Carroll J, Neitz M, Neitz J, Williams DR. Organization of the human trichromatic cone mosaic. J Neurosci. 2005;25:9669–9679. doi: 10.1523/JNEUROSCI.2414-05.2005.

- 10. Roorda A, Williams DR. Optical fiber properties of individual human cones. J Vision. 2002;2:404–412. doi: 10.1167/2.5.4.

- 11. Pallikaris A, Williams DR, Hofer H. The reflectance of single cones in the living human eye. Invest Ophthalmol Visual Sci. 2003;44:4580–4592. doi: 10.1167/iovs.03-0094.

- 12. Rha J, Jonnal RS, Thorn KE, Qu J, Zhang Y, Miller DT. Adaptive optics flood-illumination camera for high speed retinal imaging. Opt Express. 2006;14:4552–4569. doi: 10.1364/oe.14.004552.

- 13. Choi SS, Doble N, Lin J, Christou J, Williams DR. Effect of wavelength on in vivo images of the human cone mosaic. J Opt Soc Am A. 2005;22:2598–2605. doi: 10.1364/josaa.22.002598.

- 14. Carroll J, Neitz M, Hofer H, Neitz J, Williams DR. Functional photoreceptor loss revealed with adaptive optics, an alternate cause of color blindness. Proc Natl Acad Sci USA. 2004;101:8461–8466. doi: 10.1073/pnas.0401440101.

- 15. Choi SS, Doble N, Hardy J, Jones SS, Keltner J, Olivier SS, Werner JS. In-vivo imaging of the photoreceptor mosaic in retinal dystrophies and correlations with retinal function. Invest Ophthalmol Visual Sci. 2006;47:2080–2092. doi: 10.1167/iovs.05-0997.

- 16. Wolfing JI, Chung M, Carroll J, Roorda A, Williams DR. High-resolution retinal imaging of cone–rod dystrophy. Ophthalmology. 2006;113:1014–1019. doi: 10.1016/j.ophtha.2006.01.056.

- 17. Martin JA, Roorda A. Direct and noninvasive assessment of parafoveal capillary leukocyte velocity. Ophthalmology. 2005;112:2219–2224. doi: 10.1016/j.ophtha.2005.06.033.

- 18. Dubinin A, Cherezova T, Belyakov A, Kudryashov A. Human eye anisoplanatism: eye as a lamellar structure. Proc SPIE. 2006;6138:260–266.

- 19. Tarrant J, Roorda A. The extent of the isoplanatic patch of the human eye. Invest Ophthalmol Visual Sci. 2006 E-Abstract 1195/B60.

- 20. Curcio CA, Sloan KR, Kalina RE, Hendrickson AE. Human photoreceptor topography. J Comp Neurol. 1990;292:497–523. doi: 10.1002/cne.902920402.

- 21. Glanc M, Gendron E, Lacombe F, Lafaille D, Le Gargasson JF, Lena P. Towards wide-field retinal imaging with adaptive optics. Opt Commun. 2004;230:225–238.

- 22. Putnam NM, Hofer H, Doble N, Chen L, Carroll J, Williams DR. The locus of fixation and the foveal cone mosaic. J Vision. 2005;5:632–639. doi: 10.1167/5.7.3.

- 23. Barber PR, Vojnovic B, Kelly J, Mayes CR. Automatic counting of mammalian cell colonies. Phys Med Biol. 2001;46:63–76. doi: 10.1088/0031-9155/46/1/305.

- 24. Stokes MD, Deane GB. A new optical instrument for the study of bubbles at high void fractions within breaking waves. IEEE J Ocean Eng. 1999;24:300–311.

- 25. Danias J, Shen F, Goldblum D, Chen B. Cytoarchitecture of the retinal ganglion cells in the rat. Invest Ophthalmol Visual Sci. 2002;43:587–594.

- 26. Cosio FA, Flores JAM, Castaneda AP, Solano S, Tato P. Proceedings of 25th Annual International Conference of the IEEE EMBS. Vol. 1. IEEE; 2003. Automatic counting of immunocytochemically stained cells; pp. 17–21.

- 27. Specification Sheet for Princeton Instruments VersArray 1024, http://www.piacton.com/products/versarray/datasheets.aspx

- 28. Haralick RM, Shapiro LG. Computer and Robot Vision. Vol. 1. Addison-Wesley; 1992. Connected components labeling; pp. 28–48.

- 29. Liang H, Hartimo I. Proceedings of the IEEE-SP International Symposium on Time-Frequency and Time-Scale Analysis. IEEE; 1998. A feature extraction algorithm based on wavelet packet decomposition for heart sound signals; pp. 93–96.

- 30. Brown LG. A survey of image registration techniques. ACM Comput Surv. 1992;24:325–376.

- 31. Soliz P, Wilson M, Nemeth S, Nguyen P. Computer-aided methods for quantitative assessment of longitudinal changes in retinal images presenting with maculopathy. Proc SPIE. 2002;4681:159–170.

- 32. Can A, Stewart CV, Roysam B. A feature-based technique for joint, linear estimation of high-order image-to-mosaic transformations: application to mosaicing the curved human retina. IEEE Trans Pattern Anal Mach Intell. 2002;24:412–419.

- 33. Berger JW, Leventon ME, Hata N. Design considerations for a computer-vision-enabled ophthalmic augmented reality environment. Lect Notes Comput Sci. 1997;1205:399–408.