Abstract

Auditory word comprehension was assessed in a series of patients with acute left hemisphere stroke. Participants decided whether an auditorily presented word matched a picture. On different trials, words were presented with a matching picture, a semantic foil, or a phonemic foil. Across all levels of impairment, participants had significantly more trouble rejecting semantic foils than phonemic foils.

Introduction

Auditory comprehension deficits following left hemisphere brain injury tend to be relatively mild in comparison to deficits in speech production, an observation that has led to the view that speech production mechanisms are more strongly left hemisphere dominant than speech comprehension mechanisms (Bachman & Albert, 1988; Goodglass, 1993). Classically, auditory comprehension deficits in aphasia were thought to result from impairments in perceiving speech sounds (Luria, 1970; Wernicke, 1874/1969). However, a number of studies have now shown convincingly that word-level comprehension failures in aphasia are attributable at least as much, if not more, to post-phonemic breakdowns as to deficits at the phonemic level (Bachman & Albert, 1988; Baker, Blumstein, & Goodglass, 1981; Miceli, Gainotti, Caltagirone, & Masullo, 1980). For example, aphasic individuals tend to make more semantic than phonemic errors on a spoken word-to-picture matching task with semantic and phonemic foils (Baker et al., 1981; Miceli et al., 1980).¹ This has led to the hypothesis that phonological stages of speech recognition are bilaterally organized (Hickok & Poeppel, 2000, 2004, 2007).

One possible objection to this conclusion is that the evidence comes from cases of chronic aphasia. It is possible that phonemic-level mechanisms involved in speech recognition are in fact strongly left dominant, but that following left hemisphere damage, systems in the right hemisphere are recruited to perform phonemic-level analysis in a compensatory fashion. Compensatory plasticity could therefore explain the relative preservation of receptive phonemic processing abilities in chronic aphasia.

One way to address this possibility is to study the auditory comprehension abilities of acute stroke victims, i.e., following disruption of function but prior to the involvement of compensatory mechanisms. We report such a study here.

Methods

Participants

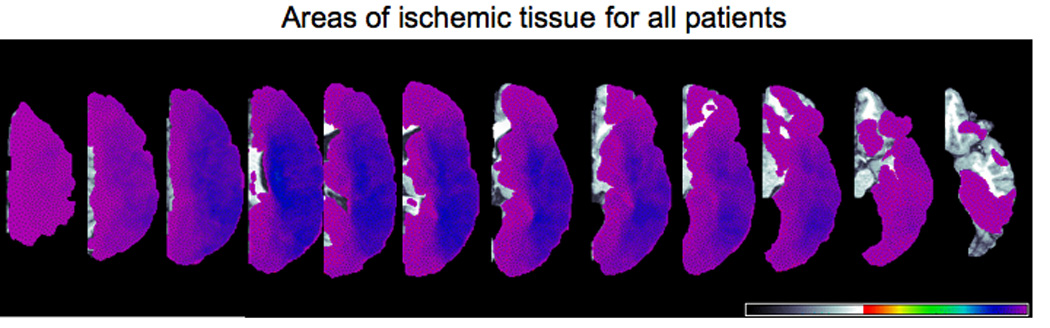

We studied a series of 289 patients within 24 hours of hospital admission for symptoms of acute left hemisphere ischemic stroke who were able to provide informed consent or to designate a family member to do so. The volume of ischemic tissue across patients ranged from 1.9 to 296 cubic centimeters, as measured with ImageJ software (Abramoff, Magalhães, & Ram, 2004). Figure 1 depicts all areas of infarct or severe hypoperfusion for all patients. Exclusion criteria were left-handedness (as reported by the patient or a close relative); history of previous neurological disease; history of uncorrected visual or hearing loss; lack of premorbid proficiency in English, per the patient or family; and reduced level of consciousness or sedation. All patients or a family member provided informed consent for the study, using methods and consent forms approved by the Johns Hopkins Institutional Review Board. This study was also approved by the UC Irvine Institutional Review Board.

Seventy-two percent of the patients showed deficits (scores more than 2 standard deviations below the mean for normal subjects, and below the score of any normal subject) on at least one test in a battery of lexical tests that included oral and written naming, oral reading of words and pseudowords, spelling to dictation of words and pseudowords, repetition of words and pseudowords, written word/picture verification, and spoken word/picture verification (see DeLeon et al., 2007, for details of the testing). The other 28% of the participants were likely not aphasic, although tests of agrammatic speech production or syntax comprehension were not systematically administered to all patients. Many of these participants with no documented deficits had lacunar strokes or infarcts outside of “language cortex” (e.g., limited to striate cortex). The mean age of the participants was 68.5 years (sd = 15.1); 53.2% were female. Mean education was 11.7 years (sd = 3.5).

Figure 1.

Distribution map of ischemic tissue (due to infarct or severe hypoperfusion) for all patients as determined by perfusion-weighted imaging. Axial slices of the left hemisphere are shown from dorsal (left) to ventral (right).

Procedures

A previous study indicated that word/picture verification is a more sensitive measure of single-word comprehension than multiple-choice word/picture matching (Breese & Hillis, 2004). We therefore used the former task. Participants heard a spoken word accompanied by a picture that was either a correct depiction of the word, a semantically related foil, or a phonemically related foil (for a complete list of stimuli, see Newhart et al., 2007). Participants were asked to judge whether the word and picture matched. The set of 17 items was presented three times, such that each item appeared once in each series of 17 trials; on each presentation, a given item was paired with a different picture (target, semantic foil, or phonemic foil), in random order across stimuli.
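The trial structure described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the procedure actually used: the item names are hypothetical placeholders, and we assume that "random order across stimuli" means each item's three pairings are randomly assigned to the three series and that trial order within each series is shuffled.

```python
import random

# The three picture types each item is paired with, once per series.
CONDITIONS = ("target", "semantic_foil", "phonemic_foil")

def build_series(items, seed=None):
    """Return three series of trials; each trial is an (item, condition) pair.

    Assumption (not stated in the source): each item's three pairings are
    randomly assigned to the three series, and trial order within each
    series is shuffled.
    """
    rng = random.Random(seed)
    # A random permutation of the three conditions for each item.
    assignment = {item: rng.sample(CONDITIONS, k=len(CONDITIONS)) for item in items}
    series = []
    for s in range(len(CONDITIONS)):
        trials = [(item, assignment[item][s]) for item in items]
        rng.shuffle(trials)  # randomize trial order within the series
        series.append(trials)
    return series

# Hypothetical placeholder names standing in for the 17 stimulus items.
items = [f"item_{i:02d}" for i in range(17)]
for trials in build_series(items, seed=1):
    print(len(trials), trials[0])
```

Under this design each subject contributes 17 target trials, 17 semantic-foil trials, and 17 phonemic-foil trials, which are the denominators for the hit and false alarm rates used in the analysis.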

Data analysis

Accurate performance on a word/picture verification task requires that the subject both correctly accept matches and correctly reject mismatches. A subject’s overall error rate on such a task will also be influenced by response bias: a subject who responds “match” on every trial, for example, accepts every target but also every foil. We therefore used signal detection methods to estimate each subject’s ability to discriminate targets (matching pictures) from non-targets (foil pictures), corrected for response bias. Specifically, using each subject’s proportion of hits (correct acceptances of a match) and false alarms (incorrect acceptances of a non-match), we calculated d-prime statistics separately for phonemic and semantic foils (i.e., using the false alarm rate for each type of foil separately). We also calculated the corresponding a-prime statistics, which provide an estimate of proportion correct corrected for response bias (Grier, 1971). Because a-prime values are more readily interpretable, we report primarily on analyses using a-prime values.
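The two sensitivity measures can be sketched as follows, using d' = z(H) − z(FA) and Grier's (1971) A' = 1/2 + (H − F)(1 + H − F) / (4H(1 − F)), where H is the hit rate and F the false alarm rate for one foil type. This is a minimal sketch, not the authors' code. Two assumptions are labeled: the source does not say how hit or false alarm rates of exactly 0 or 1 were handled for d', so the sketch clips them by 1/(2N) with N = 17 trials per condition; and the A' formula is implemented only for H ≥ F, because subjects with a false alarm rate above their hit rate were excluded from the analysis.

```python
from statistics import NormalDist

def d_prime(hit_rate: float, fa_rate: float, n_trials: int = 17) -> float:
    """d' = z(H) - z(FA).

    Assumption: rates of exactly 0 or 1 are clipped to 1/(2N) and
    1 - 1/(2N) so the z-scores stay finite; the source does not state
    which correction (if any) was used.
    """
    lo, hi = 1 / (2 * n_trials), 1 - 1 / (2 * n_trials)
    h = min(max(hit_rate, lo), hi)
    f = min(max(fa_rate, lo), hi)
    z = NormalDist().inv_cdf  # inverse of the standard normal CDF
    return z(h) - z(f)

def a_prime(hit_rate: float, fa_rate: float) -> float:
    """Grier's (1971) A' for hit_rate >= fa_rate; 0.5 is chance, 1.0 is perfect."""
    if hit_rate < fa_rate:
        raise ValueError("this sketch only covers hit_rate >= fa_rate")
    h, f = hit_rate, fa_rate
    if h == f:
        return 0.5  # no discrimination; also avoids 0/0 when h == f == 0
    return 0.5 + ((h - f) * (1 + h - f)) / (4 * h * (1 - f))

# Hypothetical subject: 16/17 hits, 3/17 semantic and 1/17 phonemic false alarms.
hit = 16 / 17
print(round(a_prime(hit, 3 / 17), 3), round(a_prime(hit, 1 / 17), 3))
print(round(d_prime(hit, 3 / 17), 2), round(d_prime(hit, 1 / 17), 2))
```

Computing each measure once per foil type, with the shared hit rate, yields the separate semantic and phonemic scores described above.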

Subjects were excluded from our analysis if (i) their performance on the task was perfect (n = 41), (ii) they had a false alarm rate of 1.0 for either semantic or phonemic foils (n = 8), or (iii) they had a negative d-prime value (FA rate > hit rate) for either the semantic or phonemic foils (n = 3). These exclusions resulted in a dataset of 237 subjects.
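The three exclusion criteria can be expressed as a small filter. This is a minimal sketch, assuming 17 trials per condition (each of the 17 items paired once with its target and once with each foil type); the `Subject` record and the reason strings are hypothetical names introduced only for illustration.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Subject:
    hits: int       # "match" responses on target trials
    sem_fas: int    # "match" responses on semantic-foil trials
    phon_fas: int   # "match" responses on phonemic-foil trials
    n: int = 17     # trials per condition (assumed, see lead-in)

def exclusion_reason(s: Subject) -> Optional[str]:
    """Return which criterion excludes the subject, or None if retained."""
    hit = s.hits / s.n
    fa_sem, fa_phon = s.sem_fas / s.n, s.phon_fas / s.n
    if hit == 1.0 and fa_sem == 0.0 and fa_phon == 0.0:
        return "perfect"           # criterion (i): perfect performance
    if fa_sem == 1.0 or fa_phon == 1.0:
        return "fa_rate_1"         # criterion (ii): false alarm rate of 1.0
    if fa_sem > hit or fa_phon > hit:
        return "negative_d_prime"  # criterion (iii): FA rate > hit rate
    return None

# Hypothetical subjects, not study data.
for s in [Subject(17, 0, 0), Subject(17, 17, 0), Subject(5, 8, 2), Subject(16, 3, 1)]:
    print(s, exclusion_reason(s))
```

Checking the criteria in the order listed means each excluded subject is counted under only one criterion.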

Results

As a group, performance was relatively good. The mean a-prime value for the entire sample based on semantic foils was 0.92 (sd = .098), and based on phonemic foils was 0.94 (sd = .069). Although small, the difference in performance for semantic (92% correct) versus phonemic (94% correct) foils was nonetheless highly reliable in a paired t-test, t(236) = 8.97, p < .0001.
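The paired t-test compares each subject's phonemic and semantic a-prime values. A minimal standard-library sketch of the statistic and its degrees of freedom follows; the input values are hypothetical, not study data. It does not compute a p-value, which requires the t distribution; `scipy.stats.ttest_rel` returns the same t together with a p-value, and recent SciPy versions also accept `alternative="greater"` for one-tailed tests.

```python
from math import sqrt
from statistics import mean, stdev

def paired_t(x, y):
    """Paired-samples t statistic and degrees of freedom for x[i] - y[i]."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length samples with at least two pairs")
    diffs = [a - b for a, b in zip(x, y)]
    n = len(diffs)
    # t = mean difference / standard error of the mean difference
    t = mean(diffs) / (stdev(diffs) / sqrt(n))
    return t, n - 1

# Hypothetical per-subject A' values (phonemic, semantic), not study data.
phonemic = [0.98, 0.95, 0.90, 0.97, 0.88]
semantic = [0.95, 0.93, 0.84, 0.96, 0.80]
t, df = paired_t(phonemic, semantic)
print(f"t({df}) = {t:.2f}")
```

With one pair per subject, the degrees of freedom are n − 1, which is why the full sample of 237 subjects yields t(236).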

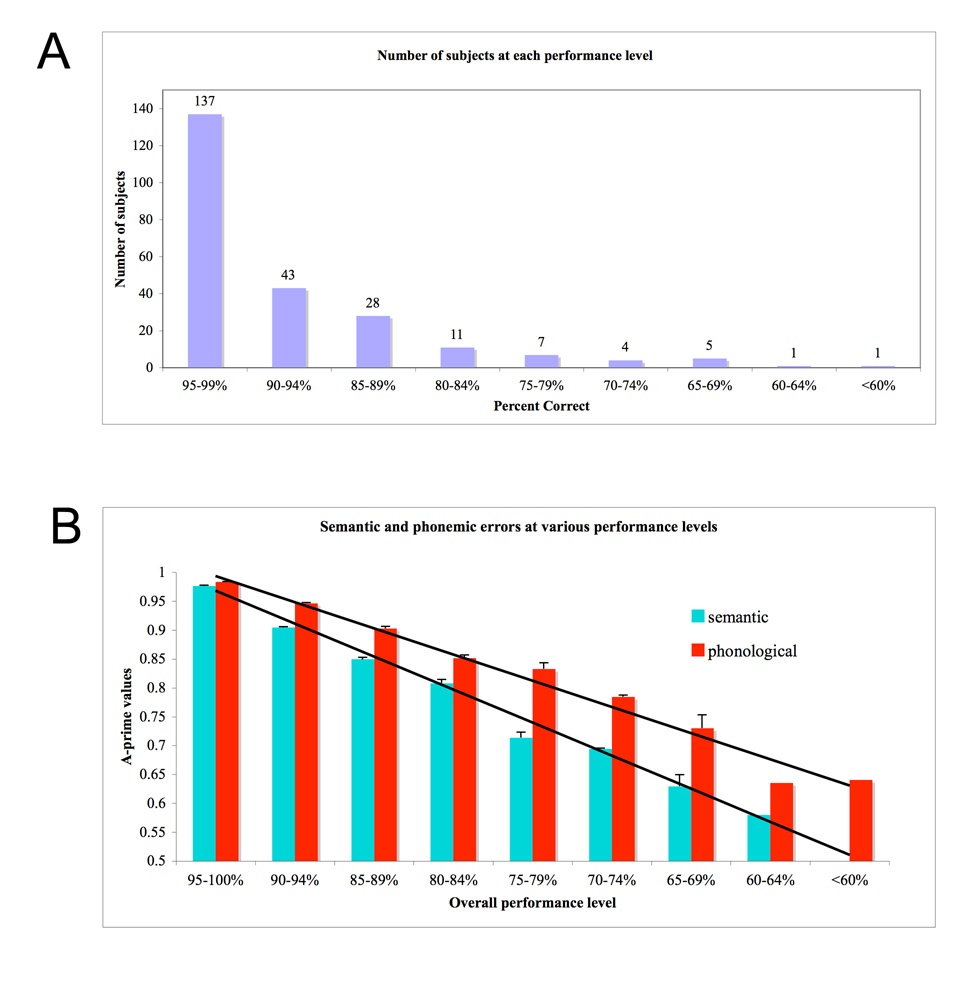

Overall group performance is not particularly revealing, however, because minimally impaired patients were included in the sample. To assess whether the degree of impairment in auditory word comprehension is associated with different patterns of impairment in semantic- versus phonemic-level processing, we partitioned our data into nine subgroups reflecting different degrees of impairment on the word/picture verification task. Overall severity was estimated using a-prime values calculated from the pooled semantic and phonemic foils, and subjects were binned into the following groups: .95–.99, .90–.94, .85–.89, .80–.84, .75–.79, .70–.74, .65–.69, .60–.64, and < .60 (note that .5 is chance). The distribution of subjects across these groups is shown in Figure 2a. We next calculated the mean semantic and phonemic a-prime values for subjects in each severity group; these data are presented in Figure 2b. The figure shows that subjects are better able to discriminate a target from a phonemic foil than from a semantic foil, and that this holds across all severity levels. Put another way, subjects make more semantic errors than phonemic errors regardless of severity level.

This difference was reliable at all levels of impairment (paired t-tests, 1-tailed; bottom three levels pooled due to low n at the tail of the distribution): .95–.99: t(136) = 3.82, p = .0001; .90–.94: t(42) = 8.78, p < .0001; .85–.89: t(27) = 4.67, p < .0001; .80–.84: t(10) = 1.92, p = .0435; .75–.79: t(6) = 3.39, p = .0055; < .75: t(10) = 2.78, p = .0095. All p-values remain at 0.05 or below even after a Bonferroni correction, with the exception of the .80–.84 group. Even in that group, however, the effect goes in the expected direction, and the severity groups both above and below it show the expected significant differences. The data therefore clearly demonstrate that semantic errors outnumber phonemic errors across degrees of impairment ranging from mild to severe.
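The severity binning and the per-bin summaries plotted in Figure 2 (subject counts and mean semantic and phonemic a-prime values) can be sketched as follows. This is an illustration under assumptions, not the authors' code: bins are treated as half-open intervals with lower edges .95, .90, and so on through .60 (so a value such as .947 falls in the .90–.94 bin), and the input tuples of pooled, semantic, and phonemic a-prime values are hypothetical.

```python
from collections import defaultdict
from statistics import mean

# Lower edges of the eight bounded severity bins; anything below .60 is "<0.60".
BIN_LOWER_EDGES = (0.95, 0.90, 0.85, 0.80, 0.75, 0.70, 0.65, 0.60)

def severity_bin(pooled_a_prime):
    """Map a pooled A' value to its severity-bin label (half-open bins, an assumption)."""
    for lo in BIN_LOWER_EDGES:
        if pooled_a_prime >= lo:
            return f"{lo:.2f}-{lo + 0.04:.2f}"
    return "<0.60"

def summarize_bins(subjects):
    """subjects: iterable of (pooled, semantic, phonemic) A' tuples.

    Returns {bin label: (n, mean semantic A', mean phonemic A')}.
    """
    groups = defaultdict(list)
    for pooled, sem, phon in subjects:
        groups[severity_bin(pooled)].append((sem, phon))
    return {
        label: (len(rows), mean(s for s, _ in rows), mean(p for _, p in rows))
        for label, rows in groups.items()
    }

# Hypothetical subjects, not data from the study.
example = [(0.97, 0.96, 0.98), (0.96, 0.94, 0.98), (0.55, 0.50, 0.62)]
print(summarize_bins(example))
```

Binning on the pooled score rather than on either foil type separately keeps the grouping variable from being defined by one of the two measures being compared.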

Figure 2.

Word comprehension performance in a sample of 237 acute left hemisphere stroke patients. A. Distribution of participants across the range of performance levels. B. Semantic versus phonemic discrimination scores (a-prime) across the range of performance levels.

Discussion

Our findings indicate that, even in acute stroke, single-word auditory comprehension deficits result more from semantic (access)-level impairments than from phonemic-level impairments. Breese and Hillis (2004) reached a similar conclusion using a different analysis approach. This result is consistent with the view that phonemic-level aspects of auditory word recognition are bilaterally organized, as unilateral disruption, even in acute stroke, does not appear to lead to profound deficits in phonemic processing in comprehension (Hickok & Poeppel, 2000, 2004, 2007). Even among the most severely impaired patients in our sample, those scoring less than 80% correct overall (the bottom 7% of our sample), performance is 10 percentage points higher for phonemic discrimination than for semantic discrimination (72% vs. 62% correct, respectively). Thus, while left hemisphere insult clearly can impair phonemic-level processes, even the most severely affected individuals perform well above chance, and clearly better than patients with word deafness, which is associated with bilateral lesions of the superior temporal lobe (Buchman, Garron, Trost-Cardamone, Wichter, & Schwartz, 1986). We suggest that the residual phonemic ability in both chronic and acute stroke reflects right hemisphere auditory word recognition processes.

Acknowledgments

The research reported in this paper was supported by NIH R01 DC05375 to AH and NIH R01 DC0361 to GH.

Footnotes


1. The tendency for such patients to make errors involving selection of semantically related items does not necessarily imply that their deficits involve conceptual-semantic representations. In fact, given the focal nature of the lesions, it seems much more likely that such deficits arise from semantic access mechanisms, for example, systems that mediate the relation between phonemic representations and widely distributed conceptual-semantic representations (Hickok & Poeppel, 2004, 2007).

References

- Abramoff MD, Magalhães PJ, Ram SJ. Image processing with ImageJ. Biophotonics International. 2004;11(7):36–42.

- Bachman DL, Albert ML. Auditory comprehension in aphasia. In: Boller F, Grafman J, editors. Handbook of neuropsychology. Vol. 1. New York: Elsevier; 1988. pp. 281–306.

- Baker E, Blumstein SE, Goodglass H. Interaction between phonological and semantic factors in auditory comprehension. Neuropsychologia. 1981;19:1–15. doi: 10.1016/0028-3932(81)90039-7.

- Breese EL, Hillis AE. Auditory comprehension: is multiple choice really good enough? Brain and Language. 2004;89(1):3–8. doi: 10.1016/S0093-934X(03)00412-7.

- Buchman AS, Garron DC, Trost-Cardamone JE, Wichter MD, Schwartz M. Word deafness: One hundred years later. Journal of Neurology, Neurosurgery, and Psychiatry. 1986;49:489–499. doi: 10.1136/jnnp.49.5.489.

- DeLeon J, Gottesman RF, Kleinman JT, Newhart M, Davis C, Heidler-Gary J, Lee A, Hillis AE. Neural regions essential for distinct cognitive processes underlying picture naming. Brain. 2007;130(Pt 5):1408–1422. doi: 10.1093/brain/awm011.

- Goodglass H. Understanding aphasia. San Diego: Academic Press; 1993.

- Grier JB. Nonparametric indexes for sensitivity and bias: Computing formulas. Psychological Bulletin. 1971;75:424–429. doi: 10.1037/h0031246.

- Hickok G, Poeppel D. Towards a functional neuroanatomy of speech perception. Trends in Cognitive Sciences. 2000;4:131–138. doi: 10.1016/s1364-6613(00)01463-7.

- Hickok G, Poeppel D. Dorsal and ventral streams: A framework for understanding aspects of the functional anatomy of language. Cognition. 2004;92:67–99. doi: 10.1016/j.cognition.2003.10.011.

- Hickok G, Poeppel D. The cortical organization of speech processing. Nature Reviews Neuroscience. 2007;8(5):393–402. doi: 10.1038/nrn2113.

- Luria AR. Traumatic aphasia. The Hague: Mouton; 1970.

- Miceli G, Gainotti G, Caltagirone C, Masullo C. Some aspects of phonological impairment in aphasia. Brain and Language. 1980;11:159–169. doi: 10.1016/0093-934x(80)90117-0.

- Newhart M, Ken L, Kleinman JT, Heidler-Gary J, Hillis AE. Neural networks essential for naming and word comprehension. Cognitive and Behavioral Neurology. 2007;20:25–30. doi: 10.1097/WNN.0b013e31802dc4a7.

- Wernicke C. The symptom complex of aphasia: A psychological study on an anatomical basis. In: Cohen RS, Wartofsky MW, editors. Boston studies in the philosophy of science. Dordrecht: D. Reidel Publishing Company; 1874/1969. pp. 34–97.