SUMMARY

Joint analysis of longitudinal measurements and survival data has received much attention in recent years. However, previous work has primarily focused on a single failure type for the event time. In this paper we consider joint modelling of repeated measurements and competing risks failure time data to allow for more than one distinct failure type in the survival endpoint which occurs frequently in clinical trials. Our model uses latent random variables and common covariates to link together the sub-models for the longitudinal measurements and competing risks failure time data, respectively. An EM-based algorithm is derived to obtain the parameter estimates, and a profile likelihood method is proposed to estimate their standard errors. Our method enables one to make joint inference on multiple outcomes which is often necessary in analyses of clinical trials. Furthermore, joint analysis has several advantages compared with separate analysis of either the longitudinal data or competing risks survival data. By modelling the event time, the analysis of longitudinal measurements is adjusted to allow for non-ignorable missing data due to informative dropout, which cannot be appropriately handled by the standard linear mixed effects models alone. In addition, the joint model utilizes information from both outcomes, and could be substantially more efficient than the separate analysis of the competing risk survival data as shown in our simulation study. The performance of our method is evaluated and compared with separate analyses using both simulated data and a clinical trial for the scleroderma lung disease.

Keywords: competing risks model, joint modelling, longitudinal data, mixed effects model, EM algorithm

1. INTRODUCTION

In clinical trials and other follow-up studies, it is common that a response variable (e.g. a biomarker) is repeatedly measured during follow-up and the occurrence of some key event, which could cause non-ignorable missing data for the biomarker, is also monitored. Often, the occurrence of the event is censored by some competing risks such as disease-related dropout. A typical example is the scleroderma lung study (SLS) [1] which has motivated this research. The SLS is a double-blinded, randomized controlled study on 162 patients, with 81 in each group. The goal of the SLS is to evaluate the effectiveness of oral cyclophosphamide (CYC) versus placebo in the treatment of active, symptomatic lung disease due to scleroderma. One outcome variable is forced vital capacity (FVC, as per cent predicted), a measure of lung function determined longitudinally at 3-month intervals. Another important measure is a clinical outcome variable—the time to treatment failure or death. Here a treatment failure occurs when %FVC of a patient in either group falls by ≥15 per cent after 6 months in the study. In addition, there are also considerable disease-related dropouts during the follow-up. Note that both death and dropout would cause non-ignorable missing data for the longitudinal measurements of %FVC.

Separate analysis for each of these endpoints has been studied extensively. For the time to event data, Cox’s [2] proportional hazards model is popular, while mixed effects models and the GEE method are widely used for longitudinal measurements [3–6]. However, joint analysis of both outcomes is often needed. This is the case for the SLS for two primary reasons. First, we are interested in evaluating the effects of CYC treatment on the two endpoints, %FVC and the time to treatment failure or death, simultaneously, since CYC is considered effective if it can either improve the %FVC of the patients in the study or lower the risk of treatment failure or death. Thus, it is necessary to build a more inclusive model which links the two aspects together. Secondly, the procedure of estimating the effects of CYC on the longitudinal outcome %FVC can be complicated by the disease-related dropout process or death, in which patients with worse scleroderma lung disease prognoses tend to withdraw from the study early or die, and hence are lost to follow up. Such non-ignorable missing data may lead to biased inferences if a separate analysis is performed on the longitudinal data using the mixed effects model or the GEE method [7, 8].

Joint modelling of the two different types of endpoints simultaneously has received considerable attention in recent years [9–20]. Tsiatis and Davidian provided a nice overview of joint models [21]. A joint model enables one to evaluate effects of factors of interest on both endpoints at the same time [9, 10], and also, it can be used to adjust inferences about longitudinal data for outcome-dependent missing values, of which the assumption of missing data mechanism can be non-ignorable non-response (NINR) [11–13]. In addition, we expect to gain more efficiency in statistical inferences with a joint model since information from both endpoints is utilized. A fourth advantage of joint modelling stems from scientific investigations such as AIDS studies, in which the interest is to characterize the relationship between CD4 count and the time to AIDS. One common procedure is that the true underlying trajectory of the CD4 count can be first modelled, and then be incorporated into a Cox model for the time to AIDS [14, 15], or into an accelerated failure time model in other applications if the proportional hazards assumption fails [16]. Non-likelihood-based approaches include the work of Robins and his colleagues [22–24] who used augmented inverse probability of censoring weighted estimating equations. The approach was supplemented by a sensitivity analysis for the parameter associated with longitudinal measurements in the non-response model.

However, previous joint models only deal with a single failure type with non-informative censorship for the time to event, and thus are not applicable to survival data with competing risks or informative censoring. In our SLS data, disease-related dropout should be taken into account along with treatment failure or death in a joint analysis with %FVC for two reasons. First, disease-related dropout is regarded as informative censoring for treatment failure or death, since it is recognized that both events are related to patient disease condition. Secondly, disease-related dropout generates non-ignorable missing values in %FVC. One possible way of handling such a complicated situation is to treat disease-related dropout as a competing risk for treatment failure or death.

This paper extends the work of Henderson et al. [9] by considering simultaneous analysis of longitudinal measurements and competing risks failure times to allow for more than one distinct failure type. Our model enables one to handle informative censoring by treating it as a competing risk. It also allows non-ignorable missingness after event times. We adopt a linear mixed effects sub-model for the longitudinal measurements and a mixture sub-model for competing risks survival data which is similar to that of Larson and Dinse [25] and Ng and McLachlan [26]. The mixture model for competing risks enables one to evaluate the effects of some factors on both the marginal probabilities of occurrence of the risks and the conditional cause-specific hazards. One major difference of our mixture model from their approach is that we introduce frailties which are linear in the random effects for the longitudinal measurements. Therefore, conditional on common covariates, the correlation between the two endpoints is characterized by latent random effects. An EM-based algorithm is derived to estimate the parameters in both sub-models, and inverse of the empirical Fisher information from the profile likelihood is used to approximate the variance–covariance matrix of the estimators. It is worth noting that the estimation procedure for joint analysis of longitudinal measurements and competing risks failure time data is more complicated than that for longitudinal measurements and survival data with a single failure type. With the two-step mixture sub-model for competing risks event times, we must introduce additional hidden variables to simplify the EM algorithm. Another commonly used model for competing risks is the cause-specific hazards proposed by Prentice et al. [27, 28]. Joint modelling of longitudinal data and competing risks using cause-specific hazards will be studied in a sequel paper.

This article is organized as follows. The model and the estimation procedure are described in Section 2. In Section 3, a real data application is illustrated by the SLS clinical trial. In Section 4, the performance of the joint model is examined by simulation studies, and the joint model is compared to separate analyses in terms of the bias, the empirical standard error, the estimated standard error, and the power of the tests for factors of interest. We also examined the estimates from the joint model with a single failure type in the presence of informative censoring. Some concluding remarks and possible future directions are given in Section 5.

2. MODEL AND ESTIMATION

2.1. The joint model

Suppose there are n subjects in the study. Let Yi(t) be the measurement of a response variable for subject i at time t, where i =1, 2, …, n. During follow-up, each subject may experience one of g distinct failure types or could be right censored. Let Ci =(Ti, Di) be the competing risks data on subject i, where Ti is the failure time or censoring time, and Di takes value from {0, 1, …, g}, with Di = 0 indicating a censored event and Di = k showing that subject i fails from the kth type of failure, where k = 1, …, g. Throughout, the censoring mechanism is assumed to be non-informative. As stated before, informative censoring can be treated as one of the g types of failures. We also assume that each subject in the study will eventually fail from one of the g possible failure types.

Our joint model for Y (t) and C consists of the following three components:

| (1) |

| (2) |

| (3) |

where we denote . Sub-model (1) is the linear mixed effects model for the longitudinal outcome Yi(t), and sub-model (2)–(3) specifies the distribution of the competing risks survival data, with πk being the marginal probability of subject i failing from risk k, and λk(t) the conditional cause-specific hazard for risk k, also referred to as the component hazard [25, 26], in which λ0k(t) is a completely unspecified baseline hazard function for risk k, where k = 1, …, g.

To be more specific, in sub-model (1), is a vector of covariates associated with the longitudinal trajectory Yi(t) and is allowed to change over time, β1 represents the fixed effects of U0i is a random intercept, U1i is subject-specific effects of a vector of covariates which may or may not be the same as and εi(t) ~ N(0, σ2) for all t ≥ 0 is the measurement error. We assume that and is independent of εi(t). We further assume that εi(t1) is independent of εi(t2) for any t1 ≠ t2. We write In sub-model (2)–(3), are vectors of covariates associated with the marginal probability and the conditional cause-specific hazards, respectively, and α and γ are the corresponding unknown coefficients. The sub-model incorporates random effects Wki and Vki, and the Vki's are usually referred to as frailties in regression models for survival data. It is reasonable to assume that Ui, Wki, and Vki for the same i are correlated. The simplest form is a linear one, Wki = θkᵀUi and Vki = νkᵀUi, with θk and νk being unknown parameters. We use this type of formulation in our joint model. Therefore, we need to rewrite as where Sub-model (2)–(3) is an extension of the mixture model for competing risks survival data described by Larson and Dinse [25] and Ng and McLachlan [26], which consists of a logistic model for the marginal distribution of failure types and a proportional hazards model for the conditional cause-specific hazard without any random effects. Finally, we assume that the longitudinal measurements are independent of the competing risks survival data, conditional on all the covariates and random effects. Throughout (1)–(3), we adopt the most generic notation for the covariates which are used in different parts of the model. In practice, the vectors may have components in common. In the SLS data example as illustrated in Section 3, the three vectors are identical, containing the baseline %FVC, the degree of fibrosis in the lung, the treatment CYC, and an interaction between fibrosis and CYC.
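As a reading aid, one specification consistent with the verbal description of sub-models (1)–(3) is sketched below. The covariate symbols, the use of risk g as the reference category in the logistic model, and the absence of explicit intercepts are assumptions made for illustration. Only the logistic form of the marginal probabilities, the proportional hazards form of the component hazards, and random effects that enter linearly are taken from the text.

```latex
\begin{align*}
Y_i(t) &= X_i^{(1)}(t)^{\top}\beta_1 + U_{0i} + \tilde X_i^{(1)}(t)^{\top} U_{1i} + \varepsilon_i(t), \\
\pi_{ki} &= P(D_i = k)
  = \frac{\exp\{X_i^{(2)\top}\alpha_k + W_{ki}\}}
         {1 + \sum_{l=1}^{g-1}\exp\{X_i^{(2)\top}\alpha_l + W_{li}\}},
  \qquad k = 1,\dots,g-1, \\
\lambda_k(t) &= \lambda_{0k}(t)\exp\{X_i^{(3)}(t)^{\top}\gamma_k + V_{ki}\},
  \qquad k = 1,\dots,g, \\
W_{ki} &= \theta_k^{\top}U_i, \qquad V_{ki} = \nu_k^{\top}U_i, \qquad
U_i = (U_{0i}, U_{1i}^{\top})^{\top}.
\end{align*}
```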

We have the following remarks on our joint model.

Remark 1

We note that the characteristics of the study subjects are represented by two aspects: the observable covariates X and the hidden subject-specific effects U. Both X and U are responsible for characterizing the sub-models and introducing dependence between Y and C, if there are common covariates in (1)–(3). The parameters θ and ν measure the latent association from the random effects.

Remark 2

Our joint model belongs to the class of random-effects selection models, which assume that the missing data in longitudinal measurements after dropout are non-ignorable [12]. This type of missing data cannot be correctly analysed using the usual linear mixed effects models, which are valid only when the data are missing at random (MAR) and some further assumptions are satisfied [29, 30]. Let Y(o) and Y(m) denote the observed and missing components, respectively, for the longitudinal response variable Y, and let M denote the missing data indicator vector for Y. According to Little and Rubin [29], missing completely at random (MCAR) occurs when f(M|Y,ϕ) = f(M|ϕ) for all Y and ϕ, where ϕ is an unknown parameter. Missing at random (MAR) occurs when f(M|Y,ϕ) = f(M|Y(o),ϕ). A missing mechanism is said to be ignorable if it is MCAR or MAR, and non-ignorable otherwise. In the study of longitudinal data with informative dropout, the time to dropout T has direct correspondence with M if there are no missing data before dropout. Under random-effects selection models, T is assumed to be independent of Y given random effects U. Therefore,

| (4) |

Each density f here should be indexed by some unknown parameters which have been left out for simplicity. The above equation indicates that the conditional distribution of T given Y depends on both Y(o) and Y(m), which indicates that the missing mechanism is non-ignorable. However, for the missing data in longitudinal measurements before dropout, we need to assume the missing mechanism is MAR. If the informative dropout is one of the competing risks in the follow-up, and it is related to both the longitudinal outcome and the time to event of interest, a competing risks framework should be considered. It is necessary to take into account the correlation between the informative dropout and the event of interest, and more importantly, to adjust the inference on longitudinal measurements for non-ignorable missing values after the informative dropout.
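The non-ignorability argument above can be written out in one line. The display below is a sketch of that reasoning under the stated conditional independence of T and Y given U, with parameter indices suppressed; it is offered as an illustration rather than a verbatim restatement of (4).

```latex
\[
f(T \mid Y) = \int f(T \mid U)\, f(U \mid Y)\, dU
            = \int f(T \mid U)\, f\bigl(U \mid Y^{(o)}, Y^{(m)}\bigr)\, dU .
\]
```

Because the conditional distribution of U depends on the unobserved Y(m) as well as on Y(o), so does the distribution of the dropout time given Y.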

2.2. Parameter estimation

Let Ψ = (β, Σ, σ2, α, θ, γ, ν, λ01(t), …, λ0g(t)) be the vector containing all the unknown parameters from (1) to (3). Suppose the longitudinal outcome Yi(t) is observed at time points tij for j = 1, …, ni, and is denoted as Yi = (Yi1, …, Yini). Note that the set (ti1, …, tini) can differ among subjects, due to different dropout times and the fact that some patients may miss one or more visits. We assume that the missing values in the longitudinal measurements caused by reasons other than occurrence of events are MAR. Recall that the competing risks endpoint on subject i is Ci = (Ti, Di). It is important to note that the joint distribution of (Y, C) is completely determined by f(Y|U), f(C|U), and f(U), which are specified in models (1)–(3). The full likelihood function for Ψ, conditional on the observed data (Yi, Ci) for i = 1, …, n and the covariates, is thus

| (5) |

where the second equality follows from the assumption that Y and C are independent conditional on all the covariates and random effects. In the above expression, for a censored subject with censoring time Ti, we have

| (6) |

Here, Sk(t) and fk(t) are the conditional cause-specific survival and density functions for risk k, respectively, derived from λk(t) in (3), and .
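Under a mixture formulation of this kind, the contribution of the competing risks data to the likelihood takes a standard form, sketched below as a reading aid. The subject index on π and the case notation are assumptions; the building blocks πk, fk, and Sk are those named in the text, and all quantities are conditional on Ui.

```latex
\[
f(C_i \mid U_i) =
\begin{cases}
\pi_{ki}\, f_k(T_i), & D_i = k \in \{1,\dots,g\}, \\[4pt]
\sum_{k=1}^{g} \pi_{ki}\, S_k(T_i), & D_i = 0 \ \text{(censored)},
\end{cases}
\qquad
S_k(t) = \exp\Bigl\{-\int_0^t \lambda_k(s)\, ds\Bigr\}, \quad
f_k(t) = \lambda_k(t)\, S_k(t).
\]
```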

To maximize the above likelihood, the cumulative baseline hazard functions are assumed to be step functions with jumps at observed event times. It is obvious that the observed-data likelihood is difficult to maximize in the presence of integration. We may treat random effects U as missing data and use the EM algorithm to maximize the conditional likelihood in which the functions of U are replaced by their expectations given the observed data. However, for censored subjects, the term becomes non-linear in the logarithm of the conditional likelihood. To further simplify the estimation procedure, we introduce a latent vector τi = (τi1, …, τig)T for subject i if censoring occurs, where τik is 1 or 0 indicating whether or not the ith subject would have eventually failed from cause k if the follow-up time is sufficiently long, where k = 1, …, g. This is similar to what Ng and McLachlan [26] postulated. The posterior distribution of τi given Yi, Ci, and Ui is multinomial with probability τik = 1 equal to

| (7) |

Given U and τ, the complete-data likelihood is

| (8) |

It is clear that the complete-data log-likelihood is linear in the components πk, fk, and Sk, so that the parameters α and θ can be well separated from γ and ν.
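The posterior probabilities of the latent cause indicators for a censored subject can be illustrated with a small calculation. The sketch below assumes constant component hazards and treats the marginal probabilities as given numbers (both are simplifications for illustration; the model leaves the baseline hazards unspecified and conditions on the random effects). The function name and the input values are hypothetical.

```python
import math

def posterior_cause_probs(pi, hazards, t):
    """Posterior probability that a subject censored at time t would
    eventually fail from each cause, under a mixture model.

    pi      -- marginal cause probabilities (sum to 1), hypothetical values
    hazards -- constant component hazards, one per cause (an assumption)
    t       -- censoring time
    """
    # Component survival S_k(t) = exp(-hazard_k * t) for a constant hazard.
    surv = [math.exp(-h * t) for h in hazards]
    # Bayes' rule: weight each cause by pi_k * S_k(t) and normalise.
    weights = [p * s for p, s in zip(pi, surv)]
    total = sum(weights)
    return [w / total for w in weights]

probs = posterior_cause_probs(pi=[0.4, 0.6], hazards=[0.1, 0.2], t=5.0)
print([round(p, 3) for p in probs])
```

Surviving to the censoring time is more likely under the cause with the lower hazard, so its posterior probability rises above its prior value of 0.4.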

The maximum likelihood estimate of Ψ is obtained via an EM-based algorithm, which involves iterations between an E-step and an M-step. In the E-step of the (m + 1)th iteration, we need to evaluate the expectation of the complete-data log-likelihood conditional on the observed data (Y,C) and the current parameter estimate, say Ψ(m). This is equivalent to calculating the expected values of all the functions of U and τ that appear in the complete-data log-likelihood. We write l(Ψ;U, τ)= log L(Ψ; Y,C,U, τ) with L as defined in (8), where we drop Y and C to simplify the notation. We have

| (9) |

The second equality holds because l(Ψ;U, τ) is a linear function of τ. Therefore, the only hidden variable in l(Ψ;U, Eτ|U,Y,C,Ψ(m) (τ)) is U, and computation of the conditional expectation of the complete-data log-likelihood is reduced to evaluating the expectation of all the functions of U, say, h(U) in l(Ψ;U, Eτ|U,Y,C,Ψ(m) (τ)) conditional on (Y,C,Ψ(m)), and we can write

| (10) |
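A conditional expectation of h(U) given the observed data can be computed as a ratio of two integrals against the random-effects density, by Bayes' rule. The sketch below illustrates this for a scalar normal random effect using a simple grid approximation. It is a toy example: the likelihood contains only a longitudinal part (in the joint model it would also include f(C|U)), and the data values, grid settings, and function names are assumptions.

```python
import math

def normal_pdf(x, mean, var):
    return math.exp(-(x - mean) ** 2 / (2 * var)) / math.sqrt(2 * math.pi * var)

def posterior_expectation(h, lik, prior_var, half_width=8.0, n_grid=4001):
    """E[h(U) | data] = int h(u) lik(u) f(u) du / int lik(u) f(u) du,
    approximated on an equally spaced grid for a scalar U ~ N(0, prior_var)."""
    sd = math.sqrt(prior_var)
    lo, hi = -half_width * sd, half_width * sd
    step = (hi - lo) / (n_grid - 1)
    num = den = 0.0
    for s in range(n_grid):
        u = lo + s * step
        w = lik(u) * normal_pdf(u, 0.0, prior_var)
        num += h(u) * w
        den += w
    return num / den  # the grid spacing cancels in the ratio

# Toy data: y_j = U + error, error ~ N(0, 0.25); U ~ N(0, 0.5).
y = [0.8, 1.1, 0.5]
sigma2, prior_var = 0.25, 0.5
lik = lambda u: math.prod(normal_pdf(v, u, sigma2) for v in y)

post_mean = posterior_expectation(lambda u: u, lik, prior_var)
# Closed form for this conjugate toy case, used only as a check.
closed_form = (sum(y) / sigma2) / (len(y) / sigma2 + 1 / prior_var)
print(post_mean, closed_form)
```

With vector-valued random effects, a grid becomes expensive and Gaussian quadrature or Monte Carlo integration would be the more usual choice.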

In the M-step, EU,τ|Y,C,Ψ(m) [l(Ψ;U, τ)] is maximized with respect to Ψ, so that we can update the estimate of Ψ to get Ψ(m+1). We write Q(Ψ; Ψ(m)) = EU,τ|Y,C,Ψ(m) [l(Ψ; U, τ)] and have

| (11) |

Recall that Ψ=(β,Σ, σ2, α, θ, γ, ν, λ01(t), …, λ0g(t)). In Appendix A we show that β, Σ, σ2, and the cumulative baseline hazard functions H0k(t) can be updated with closed forms (A5)–(A8), where H0k(t) is a step function with jumps at observed event times due to risk k, k = 1, …, g. No closed-form solutions exist for α, θ, γ, and ν, which need to be updated using a one-step Newton–Raphson algorithm in each iteration as given in (A13)–(A24). Then Ψ(m+1) will be the input for the E-step in the next iteration. The algorithm stops when the convergence criteria are satisfied. Technical details of the algorithm are deferred to Appendix A.

2.3. Standard error estimation

Louis [31] proposed a method for computing the observed information matrix together with the EM algorithm. However, this method is computationally unattractive and time-consuming for our model in which the non-parametric baseline hazards are involved. Here we develop a method similar to that of Lin et al. [32]. We split the parameter vector Ψ into two components, the parametric component Ω = (β,Σ, σ2, α, θ, γ, ν) and the collection of non-parametric baseline hazard functions Λ=(λ01(t), …, λ0g(t)). Since we are only interested in making inference on Ω, calculating the entire information matrix with the baseline functions is unnecessary. The variance–covariance matrix of Ω can be approximated by the inverse of the empirical Fisher information obtained from the profile likelihood where the baseline hazards functions have been profiled out. Let l(i) (Ω̂; Y, C) denote the observed score vector from the profile likelihood on the ith subject evaluated at Ω̂. The observed information matrix of Ω can be approximated by

| (12) |

Wald’s test can then be performed based on the estimated variance–covariance matrix (12).
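The idea of approximating the information matrix by a sum of outer products of per-subject score vectors can be illustrated on a toy model. The sketch below uses a normal sample with parameters (mu, s2) rather than the profile likelihood of the joint model, so it shows only the mechanics; the model, sample size, and function names are assumptions.

```python
import math
import random

def empirical_information(scores):
    """Sum of outer products of per-subject score vectors (2 parameters)."""
    info = [[0.0, 0.0], [0.0, 0.0]]
    for s in scores:
        for a in range(2):
            for b in range(2):
                info[a][b] += s[a] * s[b]
    return info

def invert_2x2(m):
    det = m[0][0] * m[1][1] - m[0][1] * m[1][0]
    return [[m[1][1] / det, -m[0][1] / det],
            [-m[1][0] / det, m[0][0] / det]]

def wald_p_value_2df(diff, cov):
    """Wald test of a 2-parameter null; diff = estimate - null value.
    The chi-square(2) upper tail probability is exp(-w / 2)."""
    prec = invert_2x2(cov)
    w = sum(diff[a] * prec[a][b] * diff[b] for a in range(2) for b in range(2))
    return math.exp(-w / 2)

# Toy model (an assumption for illustration): y ~ N(mu, s2).
random.seed(1)
y = [random.gauss(0.3, 2.0) for _ in range(2000)]
n = len(y)
mu = sum(y) / n
s2 = sum((v - mu) ** 2 for v in y) / n  # maximum likelihood estimates

# Per-subject score vectors evaluated at the MLE.
scores = [((v - mu) / s2, -0.5 / s2 + (v - mu) ** 2 / (2 * s2 ** 2)) for v in y]
cov = invert_2x2(empirical_information(scores))
se_mu = math.sqrt(cov[0][0])
print(se_mu, math.sqrt(s2 / n))  # the two standard errors should be close

# Wald test of the null (mu, s2) = (0.3, 4.0), the values used to simulate.
p_value = wald_p_value_2df((mu - 0.3, s2 - 4.0), cov)
print(p_value)
```

Only first derivatives of the log-likelihood are needed here, which is what makes the approach attractive when second derivatives are awkward to obtain.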

3. THE SCLERODERMA LUNG STUDY

The proposed joint model for longitudinal measurements and competing risks failure time data is illustrated with the SLS clinical trial. We are interested in evaluating if treatment CYC is effective on at least one of the two endpoints, that is, if the treatment can either improve the %FVC level of a patient or decrease the risk of treatment failure or death. Initially patients were only given a partial dose, and the dose was gradually increased to the target level within the first 6 months. The treatment was given for 12 months from the baseline and there was an additional year of follow-up. The primary analysis has been based on the first 12 months data. In addition to treatment failure or death, there are considerable dropouts during the follow-up. Specifically, as summarized in Table I, we have observed the following during the 24 months follow-up: (1) 16 treatment failures or deaths; (2) 32 informative dropouts due to adverse event (AE), serious adverse event (SAE), or worsening disease; (3) 10 non-informative dropouts with no evidence showing that the dropouts were related to the disease. For example, the patient may have moved out of the city before completion of the study. The informative dropout not only causes non-ignorable missing data in %FVC which indicates linear mixed effects models are not applicable, but also introduces informatively censored events for treatment failure or death. Therefore, we have to take into account this type of events when evaluating the treatment effects on %FVC outcome and the risk of treatment failure or death.

Table I. Three types of events in the scleroderma lung study.

| Category | CYC | Placebo | Total |
|---|---|---|---|
| Treatment failure or death | 6 | 10 | 16 |
| Informative dropout (AE, SAE, worsening disease) | 19 | 13 | 32 |
| Non-informative dropout (other reasons) | 4 | 6 | 10 |

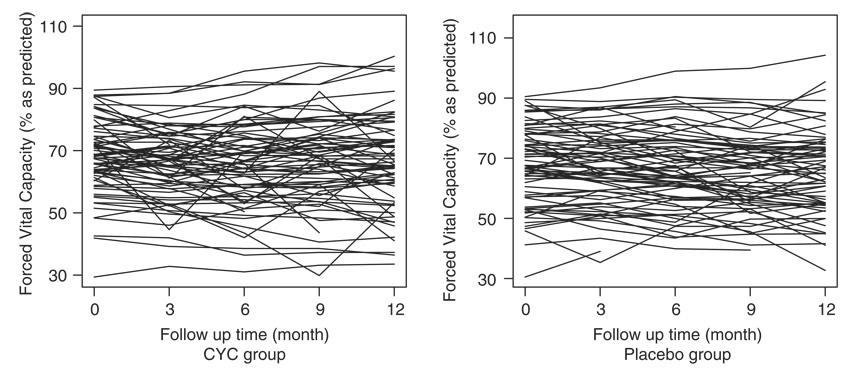

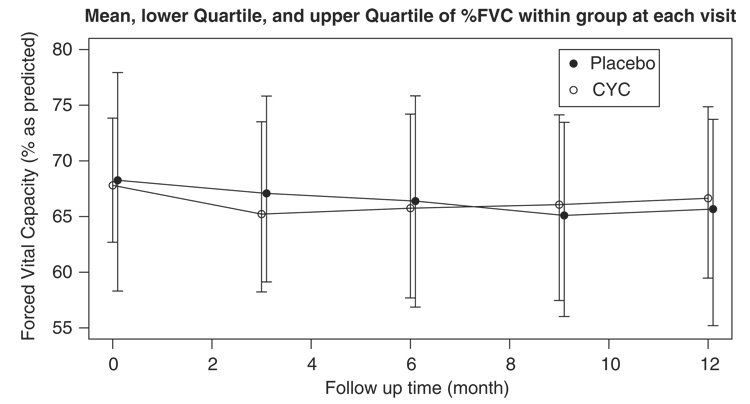

Figure 1 shows the longitudinal profile of %FVC over time for the CYC group versus the placebo group. We observe a large variation in the baseline %FVC. There is no apparent time trend in either group. Figure 2 shows the average, the lower quartile, and the upper quartile of %FVC within each group at months 0, 3, 6, 9, and 12. These quantities were calculated from the patients who had not dropped out by the given time. It is observed that the average %FVC initially decreases in the CYC group. One explanation may be that prior to month 6 patients were only given partial doses, not allowing sufficient time or dosing for the treatment to take effect. To account for this, we only used data from month 6 and thereafter in the subsequent analysis. Ten patients had follow-up of ≤3 months and were therefore not included in the analysis. The figure also shows that the CYC group tends to have a higher %FVC on average than the placebo group from month 9. However, no significant between-group difference is identified by the t-test at any visit (results not shown), and the figure indicates that the two groups have about the same interquartile ranges. We should note that both curves could be biased due to non-ignorable missing data in %FVC. Since patients with lower %FVC are more likely to drop out due to AE, SAE, worsening disease, or death, the observed curves for %FVC would be higher than the true population mean.

Figure 1. Longitudinal profile of %FVC for the CYC group and the placebo group.

Figure 2. The mean, the lower quartile, and the upper quartile of %FVC within each group during follow-up. At months 0, 3, 6, 9, and 12, the numbers at risk are 81, 72, 70, 68, and 70 for the CYC group, and 80, 72, 70, 65, and 65 for the placebo group.
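The upward bias of observed means under informative dropout can be reproduced in a small simulation. The sketch below is not based on the SLS data: it assumes a constant true mean, a standard normal random intercept, and a dropout hazard that decreases with the random intercept, so that subjects with low responses tend to leave early. All numerical settings are hypothetical.

```python
import math
import random

random.seed(2024)
n_subjects = 20000
visits = [0, 1, 2, 3, 4]
true_mean = 10.0  # population mean, constant over time

observed = {t: [] for t in visits}
for _ in range(n_subjects):
    u = random.gauss(0.0, 1.0)  # subject-specific random intercept
    # Dropout hazard decreases with u: low responders leave early.
    rate = 0.3 * math.exp(-1.0 * u)
    dropout_time = random.expovariate(rate)
    for t in visits:
        if dropout_time > t:  # still in the study at visit t
            observed[t].append(true_mean + u + random.gauss(0.0, 0.5))

for t in visits:
    mean_t = sum(observed[t]) / len(observed[t])
    print(t, len(observed[t]), round(mean_t, 2))
```

The mean among subjects still under observation drifts above the true value of 10 as follow-up proceeds, even though no individual trajectory changes over time.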

For illustrative purposes, we considered two confounding factors in our model when assessing CYC treatment effects: baseline %FVC and degree of fibrosis in the lung. These elements were stated for the primary analysis in the recorded manual of operations. No statistical selection procedure was used. We also considered the interaction between treatment CYC and fibrosis, which was indicated to be significant for %FVC measurements in preliminary analyses. Thus, we applied the joint model to the SLS data with three covariates: baseline %FVC, fibrosis, and the treatment group indicator, as well as the interaction between CYC and fibrosis. In the sub-model for %FVC, we fitted a linear mixed effects model with a random intercept and fixed effects for the three factors along with the interaction between CYC and fibrosis, and these terms were also considered in the mixture sub-model for the competing risks event times. Two competing risks, treatment failure or death and disease-related dropout, were analysed together with %FVC using the following joint model. In the sub-model for %FVC, we assume, for subject i at visit j

| (13) |

where FVC0i is the baseline %FVC, FIBi is the degree of fibrosis in the lung, CYCi is 1 or 0 as a group indicator for the CYC treatment or placebo, Ui is the random intercept with a mean zero normal distribution, and the εij ’s are the mutually independent normal measurement errors. The normality assumption seems to be reasonable for this data set by diagnostics of residuals. For the competing risks survival data, we formulate the probability of treatment failure or death by the following:

| (14) |

and the conditional cause-specific hazards for treatment failure or death (risk 1) and disease-related dropout (risk 2) are specified as

| (15) |

| (16) |

respectively.

The results of such a joint analysis are summarized in Table II, together with those from a linear random intercept model for the longitudinal outcome %FVC alone, and those from a mixture model for the competing risks failure time data. In the joint analysis, the estimated interaction between CYC treatment and fibrosis is 1.79 with p-value 0.006, which suggests that the treatment CYC is more effective for patients with a higher degree of fibrosis in the lung. However, the linear mixed effects model for %FVC alone failed to detect this effect. The overall effect of treatment CYC can be tested by jointly testing the two terms, the main effect and the interaction with fibrosis. The test gives p-values of 0.0010 and 0.13 in the joint model and the separate analysis, respectively. In the joint model the inference for the effect of CYC has been adjusted for the non-ignorable missing data in %FVC, whereas no such adjustment is made in the analysis of %FVC alone. Finally, no treatment effects are identified for the competing risks failure time data, either with the joint model or the separate analysis. Possible reasons include that the follow-up time is not long enough to detect any difference between the two groups and that the number of treatment failures or deaths is small.

Table II. Comparison of the joint model versus the separate analysis for %FVC measurements alone using 6–12 month SLS data.

| Parameter | Joint analysis: Estimate (SE) | Joint analysis: p-value | Separate analysis: Estimate (SE) | Separate analysis: p-value |
|---|---|---|---|---|
| Longitudinal outcome %FVC | | | | |
| Baseline %FVC (β1) | 0.96 (0.02) | <0.0001 | 0.96 (0.04) | <0.0001 |
| Fibrosis (β2) | −1.66 (0.50) | 0.001 | −1.69 (0.85) | 0.05 |
| CYC group (β3) | −2.26 (1.50) | 0.13 | −2.51 (2.43) | 0.30 |
| CYC × Fibrosis (β4) | 1.79 (0.66) | 0.006 | 1.83 (1.11) | 0.10 |
| Effect of CYC (H0: β3 = β4 = 0)* | | 0.001 | | 0.13 |
| Cause-specific probability (treatment failure or death) | | | | |
| Baseline %FVC (α1) | −0.10 (0.14) | 0.49 | −0.03 (0.13) | 0.83 |
| Fibrosis (α2) | −0.45 (1.47) | 0.76 | 0.30 (1.39) | 0.83 |
| CYC group (α3) | 0.91 (6.71) | 0.89 | −0.86 (7.56) | 0.91 |
| CYC × Fibrosis (α4) | 0.01 (2.82) | 1.00 | 0.32 (3.30) | 0.92 |
| Effect of CYC (H0: α3 = α4 = 0)* | | 0.93 | | 0.99 |
| Conditional cause-specific hazards (time to treatment failure or death) | | | | |
| Baseline %FVC (γ11) | 0.01 (0.06) | 0.85 | 0.04 (0.26) | 0.87 |
| Fibrosis (γ12) | 0.001 (0.88) | 1.00 | −0.46 (2.88) | 0.87 |
| CYC group (γ13) | −0.34 (3.30) | 0.92 | 2.72 (9.09) | 0.76 |
| CYC × Fibrosis (γ14) | −0.28 (1.52) | 0.85 | −1.49 (3.68) | 0.68 |
| Effect of CYC (H0: γ13 = γ14 = 0)* | | 0.64 | | 0.86 |
| Conditional cause-specific hazards (time to disease-related dropout) | | | | |
| Baseline %FVC (γ21) | −0.05 (0.05) | 0.25 | −0.01 (0.05) | 0.76 |
| Fibrosis (γ22) | 0.24 (0.75) | 0.75 | 0.45 (0.58) | 0.44 |
| CYC group (γ23) | 1.61 (3.03) | 0.60 | 1.06 (1.46) | 0.47 |
| CYC × Fibrosis (γ24) | −0.41 (1.27) | 0.75 | −0.36 (0.62) | 0.56 |
| Effect of CYC (H0: γ23 = γ24 = 0)* | | 0.75 | | 0.75 |

*The p-values are based on the Wald test with 2 degrees of freedom.
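The single-parameter p-values in Table II are consistent with two-sided Wald tests based on the reported estimates and standard errors. The sketch below recomputes a few of them from the rounded table entries, assuming a normal reference distribution (an assumption; the text does not state the reference distribution for single parameters). Small discrepancies from the published values are expected because the inputs are rounded.

```python
import math

def wald_p_value(estimate, se):
    """Two-sided p-value for H0: parameter = 0, normal approximation."""
    z = estimate / se
    return math.erfc(abs(z) / math.sqrt(2))

# (estimate, SE) pairs copied from the joint-analysis column of Table II.
rows = {
    "Fibrosis": (-1.66, 0.50),
    "CYC group": (-2.26, 1.50),
    "CYC x Fibrosis": (1.79, 0.66),
}
for name, (est, se) in rows.items():
    print(name, round(wald_p_value(est, se), 3))
```

The joint 2-degree-of-freedom tests marked with an asterisk cannot be recomputed this way, since they also require the covariance between the two estimates.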

4. SIMULATION STUDY

We examined the finite sample performance of our joint model and the proposed estimation procedure using simulated data. We primarily looked at three aspects: first, we investigated for the joint model the simulated coverage probability of the 95 per cent confidence intervals which were obtained based on the estimated standard errors using the method described in Section 2.3; second, the joint model was compared to the separate analysis of either the longitudinal data or the competing risks survival data in terms of the bias, the empirical standard error, the estimated standard error, and the power of the tests for the effects of some factors; third, we examined the parameter estimates from a joint model with a single failure type in the presence of informative censoring, and the results were compared to our joint model which treated the informative censoring as a competing risk.

The longitudinal measurements were simulated from the following random slope model:

| (17) |

where tij = 0, 0.5, 1, …, 5 represents the scheduled visit times and X2i ~ Bernoulli(0.5) acts as a treatment group indicator in randomized trials. The random intercept Ui ~ N(0, 0.5) was independent of the measurement error εij, which was distributed as N(0, 0.25). We simulated two competing risks for event times, say risk 1 and risk 2, with the marginal probability for risk 1 specified as

| (18) |

and the following conditional hazards for the two risks:

| (19) |

| (20) |

where X1i ~ N(2, 0.1). The baseline hazards were held constant at 0.1 and 0.2 for risk 1 and 2, respectively, so that the time to each risk forms an exponential distribution. The censoring time was set to an exponential random variable with mean 15, to obtain average censoring rate at about 17 per cent, the events of risk 1 at about 33 per cent, and the events of risk 2 at about 50 per cent. The longitudinal outcome was missing after the observed or censored event times. The proportions of subjects with 1, 2, 3, …, 11 measurements are, respectively, 0.28, 0.20, 0.13, 0.10, 0.07, 0.05, 0.04, 0.03, 0.02, 0.02, and 0.06. Table III shows the simulated coverage probability of the 95 per cent confidence intervals from such a joint model at two sample sizes, 200 and 500, with 500 Monte Carlo samples for each. In the table we write for the variance of the random intercept Ui. It is shown that the simulated coverage probability of confidence intervals is close to the nominal value 0.95.

Table III.

Simulated coverage probability of the 95 per cent confidence intervals for the joint model (each entry is calculated based on 500 Monte Carlo samples).

| Parameter | True value | 95 per cent CI coverage rate (N = 200) | 95 per cent CI coverage rate (N = 500) |
|---|---|---|---|
| β0 | 10 | 0.974 | 0.946 |
| β1 | 1 | 0.934 | 0.944 |
| β2 | −1.5 | 0.960 | 0.948 |
| σ2 | 0.25 | 0.958 | 0.932 |
| Var(Ui) | 0.5 | 0.926 | 0.954 |
| α10 | −0.5 | 0.948 | 0.934 |
| α11 | 0.2 | 0.946 | 0.934 |
| α12 | −0.5 | 0.956 | 0.956 |
| γ11 | 0.8 | 0.970 | 0.950 |
| γ12 | −0.5 | 0.940 | 0.948 |
| γ21 | 0.5 | 0.940 | 0.940 |
| γ22 | 0.5 | 0.934 | 0.946 |
| ν1 | 0.7 | 0.938 | 0.928 |
| ν2 | 0.5 | 0.954 | 0.944 |
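
When reading Table III, it helps to keep in mind how much of the spread around 0.95 can be attributed to simulation noise alone. The following is a minimal sketch, assuming the 500 Monte Carlo replicates are independent and the true coverage equals the nominal 0.95; the function names are illustrative and not part of the original analysis.

```python
import math

def coverage_mc_se(nominal, n_rep):
    """Monte Carlo standard error of an estimated coverage rate (binomial)."""
    return math.sqrt(nominal * (1.0 - nominal) / n_rep)

def noise_band(nominal, n_rep, z=1.96):
    """Approximate 95% range of a coverage estimate when true coverage is nominal."""
    half_width = z * coverage_mc_se(nominal, n_rep)
    return nominal - half_width, nominal + half_width

lower, upper = noise_band(0.95, 500)

# A few coverage rates copied from the N = 500 column of Table III.
for name, rate in [("beta0", 0.946), ("sigma2", 0.932), ("nu1", 0.928)]:
    inside = lower <= rate <= upper
    print(f"{name}: {rate:.3f} inside [{lower:.3f}, {upper:.3f}]? {inside}")
```

Under these assumptions the Monte Carlo standard error is just under 0.01, so coverage rates within roughly 0.02 of 0.95 are compatible with nominal coverage.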

Next we compared the joint model to the separate analysis of either endpoint on the following items: the bias of the point estimates, the empirical standard error, the estimated standard error, and the mean square error. The Monte Carlo samples were simulated from model (17)–(20) and analysed in two ways: the joint model as specified in (17)–(20), and the separate analysis of the two endpoints. The separate analysis was done by fitting a random slope linear model (17) for the longitudinal outcome and a mixture model for the competing risks failure time data with random effect Ũi as follows:

| (21) |

| (22) |

| (23) |

where Ũi was assumed to be a zero-mean Gaussian random variable with variance It is worth noting that for the separate competing risks model (21)–(23), there is no longer a coefficient θ1 for the random effect Ũi in (21) to ensure identifiability. It is easy to see that ν1 and ν2 are the parameters associated with Ui in the competing risk data component (19)–(20) of the joint model. In this simulation, θ1 was set to 1, and thus We used an approach similar to that proposed in Section 2.3 to compute the variance–covariance matrix for the parameters in (21)–(23). The simulation was based on 500 Monte Carlo samples, and two sample sizes were considered (n = 200 and 500; see Tables IV(A) and (B)). We label the empirical standard error as SE, the median of the estimated standard errors as Est. SE, and the mean square error as MSEJ and MSES for the joint model and the separate analysis, respectively.

Table IV.

Comparison of the bias, the standard error (SE), the estimated standard error (Est. SE), and the mean square error (MSE) between the joint model and the separate analysis (A) sample size=200 and (B) sample size=500.

| Parameter | True | Separate: Bias | Separate: SE | Separate: Est. SE | Joint: Bias | Joint: SE | Joint: Est. SE | MSES/MSEJ |
|---|---|---|---|---|---|---|---|---|
| (A) sample size = 200 | | | | | | | | |
| Longitudinal | | | | | | | | |
| Fixed effects | | | | | | | | |
| β0 | 10 | 0.011 | 0.039 | 0.040 | −0.0003 | 0.040 | 0.041 | 1.026 |
| β1 | 1 | −0.126 | 0.070 | 0.073 | −0.004 | 0.085 | 0.081 | 2.869 |
| β2 | −1.5 | 0.001 | 0.051 | 0.055 | −0.002 | 0.055 | 0.057 | 0.859 |
| Random effects | | | | | | | | |
| σ2 | 0.25 | 0.0002 | 0.014 | 0.015 | −0.0004 | 0.014 | 0.015 | 1.001 |
| Var(Ui) | 0.5 | −0.039 | 0.076 | 0.074 | −0.002 | 0.093 | 0.087 | 0.843 |
| Competing risks | | | | | | | | |
| Fixed effects | | | | | | | | |
| α10 | −0.5 | −0.159 | 1.281 | 6.698 | −0.264 | 1.322 | 1.230 | 0.917 |
| α11 | 0.2 | 0.065 | 0.629 | 2.948 | 0.115 | 0.650 | 0.596 | 0.918 |
| α12 | −0.5 | −0.037 | 0.381 | 2.830 | −0.042 | 0.375 | 0.366 | 1.029 |
| γ11 | 0.8 | −0.084 | 0.531 | 6.982 | 0.026 | 0.480 | 0.518 | 1.251 |
| γ12 | −0.5 | 0.037 | 0.360 | 6.504 | −0.081 | 0.352 | 0.320 | 1.004 |
| γ21 | 0.5 | <0.0001 | 0.474 | 2.798 | 0.043 | 0.426 | 0.416 | 1.226 |
| γ22 | 0.5 | −0.002 | 0.282 | 2.804 | 0.023 | 0.277 | 0.257 | 1.029 |
| Random effects | | | | | | | | |
| ν1 | 0.7 | −0.664 | 0.210 | 132.2 | 0.025 | 0.419 | 0.373 | 2.753 |
| ν2 | 0.5 | −0.440 | 0.223 | 55.33 | 0.029 | 0.301 | 0.288 | 2.661 |
| Variance of random effect | 0.5 | 0.093 | 0.076 | 36.75 | −0.002 | 0.093 | 0.087 | 1.667 |
| (B) sample size = 500 | | | | | | | | |
| Longitudinal | | | | | | | | |
| Fixed effects | | | | | | | | |
| β0 | 10 | 0.013 | 0.024 | 0.025 | 0.001 | 0.026 | 0.025 | 1.102 |
| β1 | 1 | −0.127 | 0.046 | 0.046 | −0.002 | 0.054 | 0.051 | 6.248 |
| β2 | −1.5 | −0.002 | 0.032 | 0.035 | −0.002 | 0.035 | 0.035 | 0.836 |
| Random effects | | | | | | | | |
| σ2 | 0.25 | 0.0001 | 0.010 | 0.009 | −0.0003 | 0.009 | 0.009 | 1.234 |
| Var(Ui) | 0.5 | −0.035 | 0.047 | 0.046 | 0.0004 | 0.055 | 0.054 | 1.135 |
| Competing risks | | | | | | | | |
| Fixed effects | | | | | | | | |
| α10 | −0.5 | −0.128 | 0.825 | 0.995 | −0.149 | 0.811 | 0.753 | 1.025 |
| α11 | 0.2 | 0.052 | 0.395 | 0.442 | 0.063 | 0.386 | 0.364 | 1.038 |
| α12 | −0.5 | −0.020 | 0.229 | 0.513 | −0.011 | 0.222 | 0.228 | 1.070 |
| γ11 | 0.8 | −0.083 | 0.363 | 1.231 | −0.0002 | 0.303 | 0.302 | 1.510 |
| γ12 | −0.5 | 0.023 | 0.248 | 1.901 | −0.031 | 0.196 | 0.190 | 1.575 |
| γ21 | 0.5 | 0.012 | 0.285 | 0.803 | 0.009 | 0.265 | 0.251 | 1.157 |
| γ22 | 0.5 | 0.018 | 0.181 | 1.210 | −0.002 | 0.170 | 0.159 | 1.145 |
| Random effects | | | | | | | | |
| ν1 | 0.7 | −0.541 | 0.368 | 31.34 | −0.013 | 0.224 | 0.210 | 8.503 |
| ν2 | 0.5 | −0.358 | 0.321 | 23.29 | 0.016 | 0.182 | 0.170 | 2.005 |
| Variance of random effect | 0.5 | 0.086 | 0.038 | 6.601 | 0.0004 | 0.055 | 0.054 | 6.926 |

Note: The separate analysis for longitudinal data used the linear mixed effects model (17), which is not able to handle non-ignorable missing data and results in considerable biases. The competing risks survival data were analysed using the mixture model (21)–(23). The joint analysis was based on the model (17)–(20).
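
The last column of Table IV can be checked from the other columns. The sketch below assumes the mean square error is computed as squared bias plus squared empirical standard error (the SE column); this decomposition is standard, but its use here is an inference from the tabulated values rather than a stated formula, and the function names are illustrative.

```python
def mse(bias, se):
    """Mean square error as squared bias plus variance (empirical SE squared)."""
    return bias ** 2 + se ** 2

def mse_ratio(sep_bias, sep_se, joint_bias, joint_se):
    """Ratio MSE_S / MSE_J; values above 1 favour the joint model."""
    return mse(sep_bias, sep_se) / mse(joint_bias, joint_se)

# Time trend beta1, values copied from Table IV(A) and IV(B).
ratio_200 = mse_ratio(-0.126, 0.070, -0.004, 0.085)
ratio_500 = mse_ratio(-0.127, 0.046, -0.002, 0.054)

print(f"beta1, n = 200: MSE_S / MSE_J = {ratio_200:.3f}")
print(f"beta1, n = 500: MSE_S / MSE_J = {ratio_500:.3f}")
```

For β1 this reproduces the tabulated ratios 2.869 and 6.248 up to rounding of the inputs, which shows that the gain for the time trend comes almost entirely from removing the bias of the separate analysis.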

The results can be summarized as follows. First, the time trend β1 of the longitudinal measurements is severely underestimated in the separate analysis for both sample sizes. The variance of the time trend is also negatively biased. This is a consequence of the informative dropout process: with positive coefficients ν1 and ν2, we observe a higher risk of dropout for those subjects whose longitudinal outcome increases faster than average over time. The resulting non-ignorable missing values after dropout cannot be accounted for by the linear mixed effects model alone, and thus biases in the estimated time trend and its variance are observed. However, we are able to obtain almost unbiased estimates for these quantities in the joint analysis, where the informative dropout process has been modelled together with the longitudinal measurements. Our results are consistent with the findings of Henderson et al. [9]. Second, the joint model provides much more accurate estimates for ν1 and ν2, the coefficients of the random effects in the hazards, than the mixture model alone, which produces even worse estimates when the sample size is moderate (say, 200). This finding indicates that we can improve the efficiency of frailty estimation in the survival endpoint by combining the information of the longitudinal outcome, if the latter is correlated with the frailty and the correlation is correctly modelled. This is analogous to frailty models for multivariate survival data, in which frailty measures association among multiple event times. The frailty is easier to estimate and identify in multivariate survival analysis than in univariate survival analysis for heterogeneity [33]. Third, in the separate analysis for the competing risks survival data, the standard errors are overestimated for all the parameters compared to the empirical ones, especially for ν1 and ν2. In contrast, we can obtain more accurate standard error estimates from the joint model. Overall, the joint model performs better than the separate analysis, with smaller MSE for most parameters. We note that for parameters α10, α11 and at the competing risks endpoint, the biases and/or variances are larger for the joint model than for the separate analysis at the current sample sizes (n = 200 and 500). However, in our simulation with n = 1000, smaller biases and variances are observed for the joint model compared to the separate analysis.

We did further simulations to compare the power of detecting treatment effects on the competing risks failure time data between the joint model and the separate analysis. Here we did not compare the power for the longitudinal measurements since in the separate analysis, the linear mixed effects model is not valid in the presence of non-ignorable missing values. For simplicity, the longitudinal measurements were simulated from a random intercept linear model

| (24) |

and the competing risks failure times were simulated from model (18)–(20). The binary covariate X2i acts as a treatment group indicator in randomized trials, so the test for treatment effects on competing risks has the following null and alternative hypotheses: H0: α12 = γ12 = γ22 = 0 versus H1: at least one of α12, γ12, and γ22 is non-zero.

Wald tests were performed on 200 Monte Carlo samples. The rejection rates under different sample sizes are summarized in Table V. There are four sets of coefficients for X2. Model I is the null model, where α12 = γ12 = γ22 = 0. Under Model I, the rejection rate in the joint model is close to the significance level of 0.05 when the sample size is large enough, but the analysis for competing risks data alone tends to be conservative. Models II, III, and IV are different alternatives, where the true values for α12, γ12, and γ22 are 0, 1.0, and −1.0 in Model II; 1.0, 1.0, and 0 in Model III; and 0.8, 0.8, and −0.8 in Model IV. The analysis for competing risks alone has much lower power than the joint model in Models II, III, and IV for almost all sample sizes. This is a consequence of the inflated variance estimates for competing risks failure time data in the separate analysis. We have observed similar results under model (17)–(20) (see Tables IV(A) and (B)).

Table V.

Comparison of the power of the tests for treatment effects on the competing risks failure time data based on 200 Monte Carlo samples.

| Sample size | Model I: Joint | Model I: Separate | Model II: Joint | Model II: Separate | Model III: Joint | Model III: Separate | Model IV: Joint | Model IV: Separate |
|---|---|---|---|---|---|---|---|---|
| 50 | 0.025 | <0.001 | 0.180 | 0.020 | 0.340 | 0.025 | 0.150 | 0.010 |
| 100 | 0.055 | <0.001 | 0.740 | 0.080 | 0.920 | 0.155 | 0.745 | 0.090 |
| 200 | 0.040 | 0.005 | 0.990 | 0.525 | 1.000 | 0.255 | 1.000 | 0.235 |
| 500 | 0.060 | 0.015 | 1.000 | 0.950 | 1.000 | 0.630 | 1.000 | 0.680 |
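
The link between inflated variance estimates and low power can be made concrete with a small sketch of a joint Wald test for the three treatment coefficients (α12, γ12, γ22). The estimates and covariance matrices below are hypothetical numbers chosen for illustration, not output from the simulations; the statistic is compared with 7.815, the 0.95 quantile of the chi-square distribution with 3 degrees of freedom.

```python
def solve(a, b):
    """Solve the linear system a x = b by Gaussian elimination with pivoting."""
    n = len(b)
    # Augmented matrix, copied so that the inputs are not modified.
    m = [list(map(float, row)) + [float(rhs)] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x

def wald_statistic(theta, cov):
    """W = theta' cov^{-1} theta for testing H0: theta = 0."""
    return sum(t * v for t, v in zip(theta, solve(cov, theta)))

CHI2_3DF_95 = 7.815  # 0.95 quantile of chi-square with 3 degrees of freedom

# Hypothetical estimates of (alpha12, gamma12, gamma22) and their covariance.
theta_hat = [0.8, 0.8, -0.8]
cov_accurate = [[0.09, 0.0, 0.0], [0.0, 0.09, 0.0], [0.0, 0.0, 0.09]]
# Same estimates, but every variance overestimated by a factor of 4.
cov_inflated = [[4 * v for v in row] for row in cov_accurate]

for label, cov in [("accurate", cov_accurate), ("inflated", cov_inflated)]:
    w = wald_statistic(theta_hat, cov)
    print(f"{label} variances: W = {w:.2f}, reject H0: {w > CHI2_3DF_95}")
```

With identical point estimates, scaling the estimated covariance by a constant divides the Wald statistic by the same constant, so overestimated standard errors translate directly into fewer rejections.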

Finally, we examined the behaviour of the joint analysis of longitudinal measurements and survival data with a single failure type in the presence of informative censoring. The Monte Carlo samples were generated from the joint model (17)–(20) and were analysed by the following joint model, in which risk 1 was the event of interest and failures due to risk 2 were incorrectly treated as non-informatively censored events:

| (25) |

| (26) |

Tables VI(A) and (B) summarize the results for sample sizes 200 and 500, respectively, both of which are based on 500 Monte Carlo samples. Considerable bias occurs for the parameter ν1 in the joint model with a single failure type, which treats the correlated competing risk as non-informative censoring. This results in very low confidence interval coverage probabilities, especially when the sample size increases to 500. No such bias is observed for the joint model in which the informatively censored events have been modelled together with risk 1 as failures due to a competing risk. In addition, we note that larger bias also occurs for the parameters γ11 and γ12 in the single failure type joint model, with the correlated competing risk treated as independent censoring, as the sample size goes up to 500.

Table VI.

Comparison of the bias, the standard error (SE), the estimated standard error (Est. SE), and the simulated coverage probability (CP) of the 95 per cent confidence intervals between a joint model with competing risks versus that with a single failure type where the other failure is treated as independent censoring (A) sample size=200 and (B) sample size=500.

| Parameter | True | Ignoring the competing risk: Bias | Ignoring the competing risk: SE | Ignoring the competing risk: Est. SE | Ignoring the competing risk: CP | Competing risks: Bias | Competing risks: SE | Competing risks: Est. SE | Competing risks: CP |
|---|---|---|---|---|---|---|---|---|---|
| (A) sample size = 200 | | | | | | | | | |
| Longitudinal | | | | | | | | | |
| Fixed effects | | | | | | | | | |
| β0 | 10 | 0.001 | 0.039 | 0.041 | 0.952 | −0.0003 | 0.040 | 0.041 | 0.974 |
| β1 | 1 | −0.002 | 0.079 | 0.073 | 0.928 | −0.004 | 0.085 | 0.081 | 0.934 |
| β2 | −1.5 | −0.004 | 0.053 | 0.056 | 0.968 | −0.002 | 0.055 | 0.057 | 0.960 |
| Random effects | | | | | | | | | |
| σ2 | 0.25 | −0.0005 | 0.014 | 0.015 | 0.962 | −0.0004 | 0.014 | 0.015 | 0.958 |
| Var(Ui) | 0.5 | 0.031 | 0.090 | 0.092 | 0.954 | −0.002 | 0.093 | 0.087 | 0.926 |
| Survival | | | | | | | | | |
| Fixed effects | | | | | | | | | |
| γ11 | 0.8 | 0.085 | 0.436 | 0.447 | 0.960 | 0.026 | 0.480 | 0.518 | 0.970 |
| γ12 | −0.5 | 0.068 | 0.265 | 0.282 | 0.958 | −0.081 | 0.352 | 0.320 | 0.940 |
| Random effects | | | | | | | | | |
| ν1 | 0.7 | 0.477 | 0.287 | 0.307 | 0.714 | 0.025 | 0.419 | 0.373 | 0.938 |
| (B) sample size = 500 | | | | | | | | | |
| Longitudinal | | | | | | | | | |
| Fixed effects | | | | | | | | | |
| β0 | 10 | 0.003 | 0.024 | 0.025 | 0.958 | 0.001 | 0.026 | 0.025 | 0.946 |
| β1 | 1 | −0.005 | 0.049 | 0.046 | 0.934 | −0.002 | 0.054 | 0.051 | 0.944 |
| β2 | −1.5 | −0.005 | 0.034 | 0.035 | 0.956 | −0.002 | 0.035 | 0.035 | 0.948 |
| Random effects | | | | | | | | | |
| σ2 | 0.25 | −0.001 | 0.009 | 0.009 | 0.956 | −0.0003 | 0.009 | 0.009 | 0.932 |
| Var(Ui) | 0.5 | 0.029 | 0.058 | 0.057 | 0.946 | 0.0004 | 0.055 | 0.054 | 0.954 |
| Survival | | | | | | | | | |
| Fixed effects | | | | | | | | | |
| γ11 | 0.8 | 0.092 | 0.269 | 0.265 | 0.924 | −0.0002 | 0.303 | 0.302 | 0.950 |
| γ12 | −0.5 | 0.079 | 0.165 | 0.169 | 0.944 | −0.031 | 0.196 | 0.190 | 0.948 |
| Random effects | | | | | | | | | |
| ν1 | 0.7 | 0.435 | 0.178 | 0.180 | 0.304 | −0.013 | 0.224 | 0.210 | 0.928 |
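
The drop in coverage for ν1 when the competing risk is ignored follows almost mechanically from the size of the bias relative to the standard error. The sketch below is a rough check under a normal approximation: it assumes the estimator is normally distributed around the true value plus the bias with the empirical SE, and it treats the estimated SE as a fixed number. Both are simplifying assumptions made here for illustration.

```python
import math

def normal_cdf(z):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

def approx_coverage(bias, emp_se, est_se, z=1.96):
    """Coverage of estimate +/- z * est_se when estimate ~ N(truth + bias, emp_se^2)."""
    upper = (z * est_se - bias) / emp_se
    lower = (-z * est_se - bias) / emp_se
    return normal_cdf(upper) - normal_cdf(lower)

# nu1 when the competing risk is ignored, values copied from Table VI.
cov_200 = approx_coverage(bias=0.477, emp_se=0.287, est_se=0.307)
cov_500 = approx_coverage(bias=0.435, emp_se=0.178, est_se=0.180)

print(f"n = 200: approximate coverage {cov_200:.3f} (simulated 0.714)")
print(f"n = 500: approximate coverage {cov_500:.3f} (simulated 0.304)")
```

The approximation gives values of about 0.67 and 0.32, in line with the simulated 0.714 and 0.304: the bias stays near 0.45 while the standard error shrinks with n, so the interval increasingly misses the true value.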

5. DISCUSSION

In clinical trials, it is very common that both longitudinal measurements and event times are recorded on the study subjects during follow-up. Previous methods for joint modelling of these two endpoints have focused on a single failure type for the event times with non-informative censoring, which may not be applicable in the presence of informative censoring or competing risks. In this article, we have proposed a joint model for longitudinal measurements and competing risks failure time data, which can be used to handle informative censoring by treating it as a competing risk for the event of interest. Our approach accommodates the mixed effects sub-model for the longitudinal outcome and the two-step mixture sub-model for competing risks. Besides the random effects U, we need to introduce an additional hidden variable τ to simplify the estimation procedure in the mixture sub-model. An EM-based algorithm has been developed to estimate the parameters of interest, and their variance–covariance matrix can be approximated by inverting the empirical Fisher information derived from the profile likelihood. Our joint model provides not only a framework for joint inference on the longitudinal outcome and time-to-event data with competing risks, but also a means to analyse a longitudinal outcome subject to non-ignorable missingness (discussed in Remark 2 of Section 2.1). In addition, our simulation studies show that the joint model improves the efficiency of frailty estimation in the survival endpoint and produces accurate estimates of the standard errors using the profile likelihood method. The joint model is also shown to have more power in statistical tests for the effects of factors at the survival endpoint. Finally, we point out that since we need to evaluate integrals of various functions of U in the E-step of each iteration, the computational burden increases dramatically with the dimension of the random effects U. This is a common issue in all likelihood-based approaches involving random effects.

Because we discretize the baseline hazards, the dimension of the parameter space increases with the sample size. It is a challenging task to establish the asymptotic properties of the estimators from such a complex semi-parametric model. Recently, Zeng and Cai [34] derived the asymptotic distributions of the maximum likelihood estimators from their joint model, in which they considered a single failure type for the time to event. A rigorous treatment of the asymptotic properties of the MLEs under our model with competing risks warrants future research.

Our method can be further extended to robust inference for joint analysis of longitudinal measurements and survival data to handle outlying observations in the longitudinal outcome. One possible approach is to use the idea of Richardson [35] by incorporating weight functions in maximum likelihood and restricted maximum likelihood estimating equations. In a sequel, we develop a robust procedure by replacing the normal distribution assumption for measurement errors with the t distribution to take into account longer-than-normal tails. Another issue is robustness to the normality assumption for the random effects. It has been noted by Song et al. [19] and Hsieh et al. [36] that the estimation procedure is robust to the normality assumption in some joint models of longitudinal and survival data with a single failure type. We have not investigated this issue in this paper, although we expect similar conclusions.

ACKNOWLEDGEMENTS

We would like to thank Dr Donald P. Tashkin in UCLA David Geffen School of Medicine for providing the Scleroderma Lung Study data. We also thank the referees and the associate editor for their valuable and constructive comments.

APPENDIX A: THE EM-BASED ALGORITHM

E-step

Because the complete-data log-likelihood is linear in τ, we can write EU,τ|Y,C,Ψ(m)[l(Ψ;U, τ)] = EU|Y,C,Ψ(m)[l(Ψ;U, Eτ|U,Y,C,Ψ(m)(τ))], where m indicates that the parameter estimates are from the mth iteration of the EM algorithm. In the E-step of the (m + 1)th iteration, for the censored subject i, we have

| (A1) |

which is a function of the hidden variable U. Therefore, we need to evaluate the expected values of all functions of U, say h(U), in l(Ψ;U, Eτ|U,Y,C,Ψ(m) (τ)), conditional on the observed data and the current parameter estimates. We have

| (A2) |

where we use the fact

| (A3) |

The distribution of U|Y,Ψ(m) is multivariate normal with an easily derived mean and variance covariance matrix, and

| (A4) |

for the ith subject. Note that our method requires integration with respect to U in (A2) and (A3). The integrals can be evaluated using numerical integration (quadrature) [37].
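
As an illustration of the kind of quadrature involved, the following minimal sketch approximates E[h(U)] for a scalar normal U with a 5-point Gauss–Hermite rule. It is a generic example, not the E-step itself: the conditional expectations in (A2) carry additional likelihood factors, and the choice of Gauss–Hermite quadrature, the number of nodes, and the function name `normal_expectation` are assumptions made here. The same nodes and weights can be generated with `numpy.polynomial.hermite.hermgauss(5)`.

```python
import math

# Nodes and weights of the 5-point Gauss-Hermite rule (weight function exp(-x^2)).
GH_NODES = [-2.0201828705, -0.9585724646, 0.0, 0.9585724646, 2.0201828705]
GH_WEIGHTS = [0.01995324206, 0.3936193232, 0.9453087205, 0.3936193232, 0.01995324206]

def normal_expectation(h, mean, var):
    """Approximate E[h(U)] for U ~ N(mean, var) by Gauss-Hermite quadrature."""
    sd = math.sqrt(var)
    # Change of variable u = mean + sqrt(2) * sd * x maps N(mean, var) to exp(-x^2).
    total = sum(w * h(mean + math.sqrt(2.0) * sd * x)
                for x, w in zip(GH_NODES, GH_WEIGHTS))
    return total / math.sqrt(math.pi)

# Example with the simulation values Var(U) = 0.5 and nu = 0.7.
second_moment = normal_expectation(lambda u: u ** 2, 0.0, 0.5)
frailty_term = normal_expectation(lambda u: math.exp(0.7 * u), 0.0, 0.5)

print(f"E[U^2]         ~ {second_moment:.6f}")
print(f"E[exp(0.7 U)]  ~ {frailty_term:.6f} (exact {math.exp(0.7 ** 2 * 0.5 / 2):.6f})")
```

For a q-dimensional U, a product rule of this kind needs a number of function evaluations that grows exponentially in q, which is one way to see why the cost of the E-step rises quickly with the dimension of the random effects.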

M-step

The vector of parameters Ψ is updated by maximizing Q(Ψ; Ψ(m)) = EU,τ|Y,C,Ψ(m) [l(Ψ;U, τ)]. In the formula below, we use E to stand for EU,τ|Y,C,Ψ(m). Closed-form estimates are available for β, Σ, σ2, and baseline hazards λ0k(t) for k = 1, …, g, whose cumulative function H0k (t) is shown below. We write vector and have

| (A5) |

| (A6) |

and

| (A7) |

In the calculation of the baseline hazards, the failure time observations are first ordered from the smallest to the largest. If there are qk distinct failure times due to the kth cause, then we write tk1 ≤ … ≤ tkqk for k = 1, …, g. The cumulative baseline hazard function for cause k is denoted by H0k(t). Let R(tkj) be the risk set at time tkj, and dkj be the number of failures due to cause k at time tkj. We have

| (A8) |

No closed-form solutions exist for α, θ, γ, and ν. They satisfy the following score equations, respectively

| (A9) |

for k =1, …, g − 1

| (A10) |

for k = 1, …, g − 1

| (A11) |

for k = 1, …, g, and

| (A12) |

for k = 1, …, g, where tk j and λ0k (tk j) are the same as in (A8). We do not need to solve the above equations, and the parameters α, θ, γ, and ν can be updated using the following one-step Newton–Raphson algorithm in each iteration. Letting we have

| (A13) |

for k =1, …, g − 1, where the matrix and the vector are

| (A14) |

| (A15) |

| (A16) |

for k = 1, …, g − 1, where the matrix and the vector are

| (A17) |

| (A18) |

| (A19) |

for k = 1, …, g, where the matrix and the vector are

| (A20) |

| (A21) |

and finally

| (A22) |

for k = 1, …, g, where the matrix and the vector are

| (A23) |

| (A24) |

It should be noted that in the M-step, some parameters are calculated conditional on the most recently updated values of other parameters. For example, we use β(m+1) instead of β(m) when updating σ2 in (A6), and α(m+1) instead of α(m) when obtaining θ(m+1) from (A10). This is similar for in (A11) and (A12), and γ(m+1) in (A12). This is a modified version of the EM algorithm called the expectation-conditional maximization (ECM) algorithm [38]. Because it is difficult to maximize the likelihood with respect to α, θ, γ, and ν simultaneously in our joint model formulation, the ECM algorithm simplifies the maximization by updating each block of parameters conditional on the current values of the others. In addition, the ECM algorithm has been shown to have the same convergence properties as the EM algorithm.
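
The idea of taking a single Newton–Raphson step per iteration, rather than solving a score equation exactly, can be sketched on a toy problem. The example below uses a one-parameter exponential hazard model, λ(t | x) = exp(γx) with the baseline hazard fixed at 1; this model, the data, and the function names are hypothetical and chosen only because the maximizer has a closed form to compare against. It is not the score equation (A11).

```python
import math

def score_and_hessian(gamma, x, t, d):
    """Score and Hessian of sum_i [d_i * gamma * x_i - t_i * exp(gamma * x_i)]."""
    score, hessian = 0.0, 0.0
    for xi, ti, di in zip(x, t, d):
        mu = ti * math.exp(gamma * xi)
        score += xi * (di - mu)
        hessian -= xi * xi * mu
    return score, hessian

def one_step_newton(gamma, x, t, d):
    """A single Newton-Raphson update: gamma - score / hessian."""
    score, hessian = score_and_hessian(gamma, x, t, d)
    return gamma - score / hessian

# Hypothetical data: binary covariate, follow-up times, event indicators.
x = [0, 0, 0, 1, 1, 1]
t = [2.0, 1.5, 3.0, 0.5, 1.0, 0.8]
d = [1, 0, 1, 1, 1, 1]

gamma = 0.0
for iteration in range(10):
    # In an EM/ECM loop, the E-step would be recomputed here before each update.
    gamma = one_step_newton(gamma, x, t, d)

# Closed-form maximizer for this toy model: log(events / exposure) in the x = 1 group.
closed_form = math.log(3 / 2.3)
print(f"after 10 one-step updates: {gamma:.6f}, closed form: {closed_form:.6f}")
```

Each pass does only one cheap update, and the sequence still converges to the root of the score equation; in an ECM scheme the surrounding E-step and the other conditional updates change between passes, so solving each score equation to convergence within an iteration would mostly be wasted effort.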

REFERENCES

1. Tashkin DP, et al. Cyclophosphamide versus placebo in scleroderma lung disease. The New England Journal of Medicine. 2006;354:2655–2666. doi: 10.1056/NEJMoa055120.
2. Cox DR. Regression models and life tables. Journal of the Royal Statistical Society, Series B. 1972;34:187–220.
3. Harville DA. Maximum likelihood approaches to variance component estimation and to related problems. Journal of the American Statistical Association. 1977;72:320–338.
4. Laird NM, Ware JH. Random-effects models for longitudinal data. Biometrics. 1982;38:963–974.
5. Liang KY, Zeger SL. Longitudinal data analysis using generalized linear models. Biometrika. 1986;73:13–22.
6. Zeger SL, Liang KY, Albert PS. Models for longitudinal data: a generalized estimating equation approach. Biometrics. 1988;44:1049–1060.
7. Saha C, Jones MP. Asymptotic bias in the linear mixed effects model under non-ignorable missing data mechanisms. Journal of the Royal Statistical Society, Series B. 2005;67:167–182.
8. Robins JM, Rotnitzky A, Zhao LP. Analysis of semiparametric regression models for repeated outcomes in the presence of missing data. Journal of the American Statistical Association. 1995;90:106–121.
9. Henderson R, Diggle P, Dobson A. Joint modeling of longitudinal measurements and event time data. Biostatistics. 2000;1(4):465–480. doi: 10.1093/biostatistics/1.4.465.
10. Zeng D, Cai J. Simultaneous modelling of survival and longitudinal data with an application to repeated quality of life measures. Lifetime Data Analysis. 2005;11:151–174. doi: 10.1007/s10985-004-0381-0.
11. Schluchter MD. Methods for the analysis of informatively censored longitudinal data. Statistics in Medicine. 1992;11:1861–1870. doi: 10.1002/sim.4780111408.
12. Hogan JW, Laird NM. Model-based approaches to analysing incomplete longitudinal and failure time data. Statistics in Medicine. 1997;16:259–272. doi: 10.1002/(sici)1097-0258(19970215)16:3<259::aid-sim484>3.0.co;2-s.
13. Hogan JW, Roy J, Korkontzelou C. Tutorial in biostatistics: handling drop-out in longitudinal studies. Statistics in Medicine. 2004;23:1455–1497. doi: 10.1002/sim.1728.
14. Wulfsohn MS, Tsiatis AA. A joint model for survival and longitudinal data measured with error. Biometrics. 1997;53:330–339.
15. Wang Y, Taylor JMG. Joint modeling longitudinal and event time data with application to acquired immunodeficiency syndrome. Journal of the American Statistical Association. 2001;96:895–905.
16. Tseng YK, Hsieh F, Wang JL. Joint modelling of accelerated failure time and longitudinal data. Biometrika. 2005;92:587–603.
17. DeGruttola V, Tu XM. Modeling progression of CD-4 lymphocyte count and its relationship to survival time. Biometrics. 1994;50:1003–1014.
18. Xu J, Zeger SL. Joint analysis of longitudinal data comprising repeated measures and times to events. Applied Statistics. 2001;50:375–387.
19. Song X, Davidian M, Tsiatis AA. A semiparametric likelihood approach to joint modeling of longitudinal and time-to-event data. Biometrics. 2002;58:742–753. doi: 10.1111/j.0006-341x.2002.00742.x.
20. Brown ER, Ibrahim JG. A Bayesian semiparametric joint hierarchical model for longitudinal and survival data. Biometrics. 2003;59:221–228. doi: 10.1111/1541-0420.00028.
21. Tsiatis AA, Davidian M. Joint modeling of longitudinal and time-to-event data: an overview. Statistica Sinica. 2004;14:809–834.
22. Rotnitzky A, Robins JM. Analysis of semiparametric regression models with non-ignorable non-response. Statistics in Medicine. 1997;16:81–102. doi: 10.1002/(sici)1097-0258(19970115)16:1<81::aid-sim473>3.0.co;2-0.
23. Rotnitzky A, Robins JM, Scharfstein DO. Semiparametric regression for repeated outcomes with non-ignorable non-response. Journal of the American Statistical Association. 1998;93:1321–1339.
24. Scharfstein DO, Rotnitzky A, Robins JM. Adjusting for nonignorable drop-out using semiparametric nonresponse models. Journal of the American Statistical Association. 1999;94:1096–1120.
25. Larson MG, Dinse GE. A mixture model for the regression analysis of competing risks data. Applied Statistics. 1985;34:201–211.
26. Ng SK, McLachlan GJ. An EM-based semi-parametric mixture model approach to the regression analysis of competing-risks data. Statistics in Medicine. 2003;22:1097–1111. doi: 10.1002/sim.1371.
27. Prentice RL, Breslow NE. Retrospective studies and failure time models. Biometrika. 1978;65:153–158.
28. Prentice RL, Kalbfleisch JD, Peterson AV, Flournoy N, Farewell VT, Breslow NE. The analysis of failure times in the presence of competing risks. Biometrics. 1978;34:541–554.
29. Little RJA, Rubin DB. Statistical Analysis with Missing Data. 2nd edn. Hoboken: Wiley; 2002.
30. Fitzmaurice G, Laird N, Ware J. Applied Longitudinal Analysis. Hoboken: Wiley; 2004.
31. Louis TA. Finding the observed information matrix when using the EM algorithm. Journal of the Royal Statistical Society, Series B. 1982;44:226–233.
32. Lin H, McCulloch CE, Rosenheck RA. Latent pattern mixture models for informative intermittent missing data in longitudinal studies. Biometrics. 2004;60:295–305. doi: 10.1111/j.0006-341X.2004.00173.x.
33. Hougaard P. Analysis of Multivariate Survival Data. New York: Springer; 2000.
34. Zeng D, Cai J. Asymptotic results for maximum likelihood estimators in joint analysis of repeated measurements and survival time. Annals of Statistics. 2005;33:2132–2163.
35. Richardson AM. Bounded influence estimation in the mixed linear model. Journal of the American Statistical Association. 1997;92:154–161.
36. Hsieh F, Tseng YK, Wang JL. Joint modelling of survival and longitudinal data: likelihood approach revisited. Biometrics. 2006. doi: 10.1111/j.1541-0420.2006.00570.x.
37. Press WH, Teukolsky SA, Vetterling WT, Flannery BP. Numerical Recipes in FORTRAN. The Art of Scientific Computing. 2nd edn. New York: Cambridge University Press; 1992.
38. Meng X, Rubin DB. Maximum likelihood estimation via the ECM algorithm: a general framework. Biometrika. 1993;80:267–278.