Abstract

Background

Jargon is a barrier to effective patient-physician communication, especially when health literacy is low or the topic is complicated. Jargon is addressed by medical schools and residency programs, but reducing jargon usage by the many physicians already in practice may require the population-scale methods used in Quality Improvement.

Objective

To assess the amount of jargon used and explained during discussions about prostate or breast cancer screening. Effective communication is recommended before screening for prostate or breast cancer because of the large number of false-positive results and the possible complications from evaluation or treatment.

Participants

Primary care internal medicine residents.

Measurements

Transcripts of 86 conversations between residents and standardized patients were abstracted using an explicit-criteria data dictionary. Time lag from jargon words to explanations was measured using “statements,” each of which contains one subject and one predicate.

Results

Duplicate abstraction revealed reliability κ = 0.92. The average number of unique jargon words per transcript was 19.6 (SD = 6.1); the total jargon count was 53.6 (SD = 27.2). There was an average of 4.5 jargon-explanations per transcript (SD = 2.3). The ratio of explained to total jargon was 0.15. When jargon was explained, the average time lag from the first usage to the explanation was 8.4 statements (SD = 13.4).

Conclusions

The large number of jargon words and low number of explanations suggest that many patients may not understand counseling about cancer screening tests. Educational programs and faculty development courses should continue to discourage jargon usage. The methods presented here may be useful for feedback and quality improvement efforts.

The interpersonal process is the vehicle by which technical care is implemented and on which its success depends...why is it so often ignored in assessments of the quality of care?...the criteria and standards that permit precise measurement of the attributes of the interpersonal process are not well developed or have not been sufficiently called upon to undertake the task.

Avedis Donabedian1

INTRODUCTION

Communication is said to be the “main ingredient” of medical care,2 but researchers have found communication problems across the health-care system. One of the most commonly mentioned is physicians’ use of jargon,2–7 which is defined as the “specialized language of a trade, profession, or similar group” that is “not likely to be easily understood by persons outside the profession”.8 In this paper we present a method designed to be suitable for quantifying clinicians’ jargon usage over a statewide population and use the method to describe internal medicine residents’ use of jargon during counseling about screening for prostate or breast cancer.

We chose to study communication about prostate and breast cancer screening because professional organizations recommend routine counseling about potential risks of screening.9–15 Counseling is recommended for prostate screening because there is no high-level evidence to suggest that prostate-specific antigen testing decreases morbidity or mortality.9–11,13 Counseling recommendations for breast cancer screening were instituted because of differences in the level of evidence for mammography in women >50 years of age versus women 40–49 years of age.12,15,16

This analysis is part of a larger effort to develop communication assessment tools that will be suitable for use on a population scale, such as an institution, health plan, or entire state.17–22 Population-scale efforts will be important because communication problems can occur anywhere. Medical schools and residency programs discourage jargon during training,3,4,6 but it is unclear how long the benefits of training persist. Faculty development programs help practicing physicians,23,24 but these programs require more time, effort, and money than many physicians seem willing or able to commit. To be successful on a population scale, new communication assessment methods will need to be quantitatively reliable, concrete enough for use by quality improvement professionals, and able to function on a lean budget. To meet these requirements in previous analyses of communication behaviors, we have adapted a “communication quality indicator” approach from Quality Improvement. We also adapted methods from corpus linguistics (the study of language as found in collections of text used in the real world) in order to quantify jargon usage beyond the ordinal scale approaches used in previous research.7,25

METHODS

Data Source

We used an explicit-criteria procedure to abstract transcripts of conversations between internal medicine residents and standardized patients who portrayed a person with a question about screening for prostate or breast cancer. Transcripts were made from tapes collected during four workshops in a Primary Care Internal Medicine residency program. The workshops were part of the educational curriculum. Residents were asked to give informed consent and were offered a chance to decline use of their tapes for research. Methods were approved by institutional review boards at Yale and the Medical College of Wisconsin.

Residents were taped in two standardized patient encounters before the didactic portion of the workshops. In one encounter, a 50-year-old man asked about prostate cancer screening; in the other encounter, a 43-year-old woman asked about breast cancer screening. The order of the two encounters was random for each resident. A fact sheet stated that the patient had no family history of cancer and had had an unremarkable physical exam the week before, so the resident would not feel obliged to do a physical or take an extended history. Patients began with a short speech patterned after the following example:

I’m sorry I’m back so soon after my physical, but I had to leave so quickly that I didn’t get a chance to ask a question. I recently saw an advertisement about prostate [or breast] cancer screening, but I wasn’t sure if it was for me. What do you think?

To standardize the counseling task, patients were coached to avoid asking leading questions and to minimize the appearance of anxiety or confusion. This strategy allowed us to focus on jargon usage rather than residents’ ability to respond to questions (an important skill, but not the subject of this study).

Tapes were transcribed verbatim and proofread for accuracy by a board-certified internist (MF and Dr. Jeffrey Stein). The proofreaders deleted personally identifying text about the residents to reduce abstractor bias. To provide a content-related unit of duration, we used a sentence-diagramming procedure to parse transcripts into individual “statements,” which were defined as having one explicit or implied subject and one predicate. The approach was selected to be consistent with theories and research from cognitive psychology about the mental demands of holding several unfamiliar concepts in mind at the same time.26–29 Statements were also simpler for abstractors than the widely used utterance approach, which also looks for individual concepts but additionally parses speech at points such as 1-second pauses, tonal changes, speaker emphases, and some conjunctions.30 Examples of parsed text are shown in Figures 1 and 2, with each statement beginning at a number in pointed brackets { } and ending with a double slash //. A single slash / or a quadruple slash //// is used to mark the middle or end of compound statements.

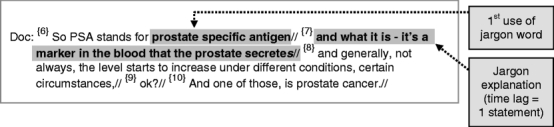

Figure 1.

Example of simple use of the sentence parsing method and explanatory time lag calculation.

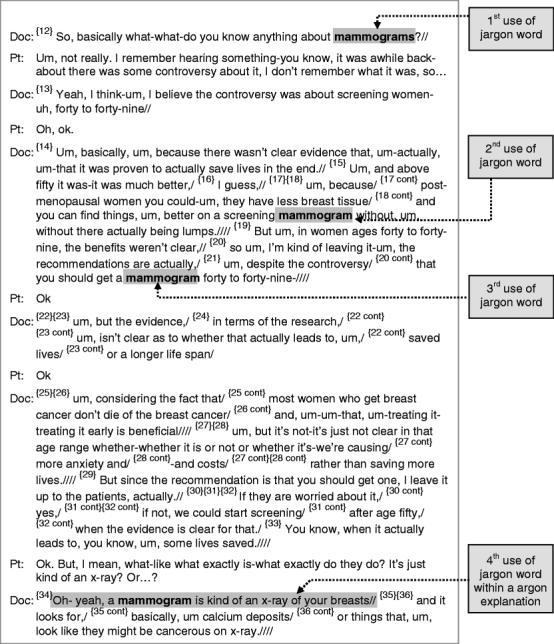

Figure 2.

Example of a more complicated use of the sentence parsing method, explanation ratio, and explanatory time lag calculation.

The final sample for analysis consisted of 86 transcripts (41 for prostate cancer screening and 45 for breast cancer screening).

Abstraction

Abstraction was facilitated by an explicit-criteria data dictionary that included jargon word lists and definitions to use with jargon explanations. Abstractors read the transcripts statement by statement and compared each word with the jargon lists. Transcripts were abstracted by two reviewers to assess reliability; one third were discussed further to ensure quality and consistency, following the suggestion by Feinstein.31

Jargon word lists were developed using an automated procedure adapted from corpus linguistics. In brief, the procedure used a carefully structured seven-step protocol. Steps 1 through 4 were done by a spreadsheet without the need for subjective judgment.

1. A corpus document was constructed by merging all transcript files.

2. Word frequency software (we used Textanz, Cro-Code, St. Petersburg, Russia) was used to extract a complete list of words, abbreviations, and two- or three-word combinations. Each of the words on the frequency list was converted to its lemma (root), and all words from the same lemma were grouped together.

3. Words and lemmas were cross-indexed to remove “common words,” i.e., those words that also appeared on the composite list of familiar words listed earlier.

4. The remaining words were cross-indexed against an electronic version of Stedman’s Medical Dictionary32 to identify words for the “highly specialized” list. All abbreviations were also listed as highly specialized with the exceptions of “U.S.” and “U.S.A.”

5. The remaining words were collaboratively examined by the authors for assignment to either the “uncommon” list of jargon words, containing words some people may not recognize, or to the “common-but-confusing” list containing words that are common in English, but in the transcripts were used for an uncommon concept.

6. Words that had been excluded in the third step were re-examined by the four authors to identify additional words for the common-but-confusing list.

7. For verification purposes, an amendment process allowed abstractors to propose corrections to the highly specialized, uncommon, and common-but-confusing word lists, provided that the word could be ratified by one other abstractor. When such a word was identified, an electronic search of previously abstracted transcripts was used to verify that the newly designated jargon word was not missed in previous abstractions.
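The automated portion of the protocol (the corpus, frequency list, common-word filter, and dictionary lookup) can be illustrated with a minimal Python sketch. This is not the spreadsheet and Textanz workflow used in the study; the function names, the crude suffix-stripping stand-in for lemmatization, and the tiny word sets passed in as `common_words` and `medical_dictionary` are all assumptions made for illustration.

```python
import re
from collections import Counter


def crude_lemma(word: str) -> str:
    """Hypothetical stand-in for lemmatization: strip a few common suffixes."""
    for suffix in ("ing", "ed", "s"):
        if word.endswith(suffix) and len(word) - len(suffix) >= 4:
            return word[: -len(suffix)]
    return word


def candidate_jargon(corpus: str, common_words: set, medical_dictionary: set):
    """Return (highly_specialized, needs_review) lemma counts for a merged corpus.

    corpus             -- all transcripts merged into one string
    common_words       -- assumed list of familiar lemmas to exclude
    medical_dictionary -- assumed set of lemmas found in a medical dictionary
    """
    # Build a frequency list of lemmas from the merged corpus.
    tokens = re.findall(r"[a-z]+(?:-[a-z]+)*", corpus.lower())
    counts = Counter(crude_lemma(token) for token in tokens)

    # Remove lemmas that appear on the common-word list.
    remaining = {lemma: n for lemma, n in counts.items() if lemma not in common_words}

    # Lemmas found in the medical dictionary go to the highly specialized list.
    highly_specialized = {l: n for l, n in remaining.items() if l in medical_dictionary}

    # Everything else is left for human review (uncommon vs. common-but-confusing).
    needs_review = {l: n for l, n in remaining.items() if l not in medical_dictionary}
    return highly_specialized, needs_review
```

In this sketch the words returned in `needs_review` correspond to those that would still require the collaborative judgment described in the later steps; the code makes no attempt to automate that judgment.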

Jargon Explanations

It was expected that the effectiveness of residents’ explanations would vary, so a trichotomous variable (definite/partial/absent) was developed. To be assigned a definite rating, the explanation statement had to refer to the jargon word directly and not use jargon itself. Explanations that used jargon were assigned a partial rating. For example in Figure 1 the explanation of “prostate-specific antigen” is rated as partial because it uses the word “marker” without an accompanying explanation. A partial rating could also be assigned for explanations that were not obviously linked to a specific word or for explanations that were questionable to the abstractors. Discrepancies between abstractors were automatically resolved by assignment to the partial explanations category.
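The discrepancy rule can be written as a short function. The sketch below is illustrative only; the function name `reconcile_ratings` and the string labels are assumptions, and it simply encodes the rule that any disagreement between two abstractors is recorded as a partial explanation.

```python
VALID_RATINGS = {"definite", "partial", "absent"}


def reconcile_ratings(rating_a: str, rating_b: str) -> str:
    """Combine two abstractors' ratings of the same explanation.

    Agreement keeps the shared rating; any discrepancy is assigned
    to the partial category, as described in the text.
    """
    if rating_a not in VALID_RATINGS or rating_b not in VALID_RATINGS:
        raise ValueError("ratings must be 'definite', 'partial', or 'absent'")
    return rating_a if rating_a == rating_b else "partial"
```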

Calculations and Analyses

The “explanation ratio” is a measure of the burden of unexplained jargon words over the entire transcript. This quantifying approach is grounded in cognitive psychology research suggesting that the mental effort necessary for puzzling over unexplained concepts inhibits comprehension of information that is subsequently encountered.26–29 The explanation ratio is calculated using the formula

$$\text{explanation ratio} = 1 - \frac{\text{unexplained jargon words}}{\text{total jargon words}}$$

where the numerator is equal to the number of jargon words that are never explained or that are more than two statements earlier than the explanation, plus 0.5 for each jargon word that only follows a partial explanation. For example, in Figure 2 the explanation ratio for “mammogram” is 1÷4, or 0.25.
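As a worked illustration, the sketch below computes the ratio under one reading of the description above: each usage of a jargon word is penalized 1 if it is never explained or precedes the explanation by more than two statements, 0.5 if only a partial explanation applies, and 0 otherwise, and the ratio is one minus the summed penalty divided by the number of usages. The function names and the statement positions in the usage note are hypothetical; the positions were chosen only to reproduce the 1÷4 value reported for “mammogram” and are not taken from Figure 2.

```python
PENALTY = {"explained": 0.0, "partial": 0.5, "unexplained": 1.0}


def classify_usage(use_idx: int, explanations, window: int = 2) -> str:
    """Classify one usage of a jargon word.

    explanations -- list of (statement_index, kind), kind is "definite" or "partial"
    window       -- assumed grace period: an explanation counts if it came before
                    the usage or no more than `window` statements after it
    """
    status = "unexplained"
    for exp_idx, kind in explanations:
        if exp_idx - use_idx <= window:
            if kind == "definite":
                return "explained"
            status = "partial"
    return status


def explanation_ratio(uses, explanations, window: int = 2) -> float:
    """Return 1 - (penalized unexplained usages / total usages) for one word."""
    if not uses:
        raise ValueError("the word must be used at least once")
    penalty = sum(PENALTY[classify_usage(u, explanations, window)] for u in uses)
    return 1.0 - penalty / len(uses)
```

For example, with hypothetical usages at statements 1, 5, 10, and 30 and a definite explanation at statement 23, `explanation_ratio([1, 5, 10, 30], [(23, "definite")])` gives 0.25, because only the last of the four usages falls after the explanation.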

The “explanatory time lag” was a measure of informational content (in statements) presented between the first usage of the jargon word and its first full or partial explanation. In Figure 1 the time lag for “prostate-specific antigen” is 1 statement, while in Figure 2 the time lag for the word “mammogram” is 22 statements.
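A minimal sketch of the time lag calculation follows, assuming that statements are numbered consecutively and that the lag is the difference between the statement number of the first explanation (full or partial) and that of the first usage. The function name and the example positions are hypothetical and are not taken from the figures.

```python
def explanatory_time_lag(uses, explanations):
    """Return the lag, in statements, from first usage to first explanation.

    uses         -- statement indices at which the jargon word is used
    explanations -- list of (statement_index, kind) for that word
    Returns None when the word is never used or never explained.
    """
    if not uses or not explanations:
        return None
    first_use = min(uses)
    first_explanation = min(idx for idx, _kind in explanations)
    return first_explanation - first_use
```

Under these assumptions, a word first used at statement 4 and partially explained at statement 5 has a lag of 1 statement, and a word first used at statement 1 and explained at statement 23 has a lag of 22 statements.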

The explanation ratio and explanatory time lag calculations were both done by a spreadsheet (Microsoft Excel, Redmond, WA) without subjective interpretation. Statistical analyses were performed using JMP software (SAS Institute, Cary, NC). One-way ANOVA and chi-squared tests were used as appropriate for variable type. Inter-abstractor reliability for jargon words was calculated using Cohen’s method.
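For readers unfamiliar with the reliability statistic, the sketch below shows the standard two-rater Cohen's kappa, which compares observed agreement with the agreement expected by chance. It is an illustration rather than the JMP procedure used in the study, and the assumption that each item is a word coded by both abstractors as jargon (1) or not jargon (0) is ours.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b) -> float:
    """Cohen's kappa for two raters who labeled the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("both raters must label the same non-empty set of items")
    n = len(labels_a)

    # Observed proportion of items on which the two raters agree.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n

    # Agreement expected by chance from each rater's marginal label frequencies.
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    expected = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)

    if expected == 1.0:
        # Both raters used a single identical label; kappa is undefined, report 1.
        return 1.0
    return (observed - expected) / (1.0 - expected)
```

As a small check, raters who code four words as `[1, 1, 0, 0]` and `[1, 1, 0, 1]` agree on 3 of 4 items against a chance agreement of 0.5, giving a kappa of 0.5.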

RESULTS

Participant characteristics are shown in Table 1. The interviews averaged 10.1 min (range, 2 to 21.9) and 146.4 statements per transcript. Inter-abstractor reliability was κ = 0.92. To evaluate the feasibility of our methods we tracked time and expenses, and project that quality improvement projects in the future will be achievable with a budget under $50 per clinician evaluated.

Table 1.

Participant Characteristics

| Characteristic | No. responding | (%) |
|---|---|---|
| Gender | | |
| Male | 20 | (42) |
| Female | 28 | (58) |
| Age* | | |
| 25–29 years | 26 | (55) |
| 30–37 years | 21 | (45) |
| Year in residency | | |
| 1st | 10 | (20.8) |
| 2nd | 19 | (39.6) |
| 3rd or 4th** | 19 | (39.6) |

*Forty-seven residents responded to the age question

**Fourth year residents were from the medicine-pediatrics program

Jargon Words

Abstraction and the seven-step protocol described above allowed us to populate the jargon word list with 350 unique jargon words across the 86 transcripts. The average number of unique jargon words per transcript was 19.6 (SD 6.1). Most words were used more than once, so the total jargon count averaged 53.6 words per transcript (SD 27.2). There were no significant differences in jargon count by resident gender or year in residency. The five most frequent jargon words for each type of cancer are listed in Table 2.

Table 2.

Most Commonly Used Jargon Words in Counseling

| Condition | Jargon word* | Instances | Percent of total for condition** |
|---|---|---|---|
| Breast cancer screening | Mammogram | 558 | 27.2 |
| | Screen | 245 | 12.0 |
| | Tissue | 117 | 5.7 |
| | Biopsy | 101 | 4.9 |
| | False positive | 55 | 2.7 |
| Prostate cancer screening | Prostate | 837 | 32.7 |
| | Screen | 246 | 9.6 |
| | Symptom | 109 | 4.3 |
| | Rectal | 109 | 4.3 |
| | Biopsy | 108 | 4.2 |

*Jargon words are lemmatized for this table (e.g., “Screen” includes screen, screened and screening)

**Total number of jargon words in all mammography transcripts = 2,050. Total number of jargon words in all prostate transcripts = 2,558

Jargon Explanations

All but one of the transcripts included at least one jargon explanation. There was an average of 4.5 explanations per transcript (SD 2.3). Prostate screening transcripts averaged more explanations per transcript than mammography transcripts (5.9 versus 3.2 respectively, p < .0001). The five most frequently explained jargon words for each type of cancer are listed in Table 3.

Table 3.

Most Commonly Explained Jargon Words in Counseling

| Condition | Jargon word* | Transcripts with explanation, n | (%) |
|---|---|---|---|
| Breast cancer screening | Mammogram | 27 | (60.0) |
| | False Positive | 16 | (35.6) |
| | Biopsy | 11 | (24.4) |
| | Baseline | 8 | (17.8) |
| | Screen | 5 | (11.1) |
| Prostate cancer screening | Prostate-specific antigen | 31 | (75.6) |
| | PSA | 28 | (68.3) |
| | Benign prostatic hypertrophy | 20 | (48.8) |
| | Biopsy | 11 | (26.8) |
| | Prostate (by itself) | 7 | (17.1) |

*Jargon words are lemmatized for this table (e.g., “Screen” includes screen, screened and screening)

The average explanation ratio was 0.15 (SD 0.11), meaning that the remaining 85% of jargon was either never explained in the transcript or was explained more than two statements after the word was used. The explanation ratio was slightly higher for prostate transcripts (0.18) than for breast cancer transcripts (0.12, p = 0.0125), but there were no significant differences by resident gender or year in residency.

Time Lag Between Jargon Words and Explanations

The average time lag was 8.4 statements (SD 13.4), meaning that a patient would have been exposed to eight concepts while puzzling over an unexplained word. The lag was greater in mammography (11.6) as compared with prostate transcripts (4.6 statements, p = 0.004). There were no significant differences by resident gender or year in residency.

Sensitivity Analysis Without “Prostate” and “Mammogram”

Two issues prompted us to do a post hoc sensitivity analysis after deleting instances of the word “prostate” and words beginning with “mammogra-,” with the exception of compound words such as “prostate-specific antigen.” The first was that these two words together accounted for almost a third of the total number of words (1,395 of the 4,608 or 30.3%) across the 86 transcripts. Second, many of the opening statements from the standardized patients included one of these words. We used a search routine to delete these words, so that the average total jargon count decreased to 37.3 words per transcript, the expected relative decrease of 30.3%. The number of transcripts without an explanation increased to 5 of 86 total transcripts (5.8% of total). The average number of explanations per transcript decreased 17% to 3.7. There was a 10% increase in the explanation ratio to 0.16. The average time lag from the first usage of the word to its explanation decreased by 0.08% (0.006 statements).

DISCUSSION

Jargon is frequently a barrier to effective communication, especially when discussing a complicated topic like the risks associated with screening. The two purposes of this paper were to describe residents’ use of jargon during counseling about prostate and breast cancer screening and to introduce a new method for quantifying jargon usage that can be used in population-based samples. In the analysis we found that jargon words were common, explanations were rare, and many explanations lagged well behind usage of the words that they were supposed to explain. The analysis was limited by use of a small sample of residents from a single program, which limits generalizability to other residency programs or clinicians in practice. Additional research should be conducted in more broadly representative samples of physicians before wider implementation of the method.

From the patient perspective, our approach may overestimate jargon burden because of the low explanation ratio of the word “prostate” and words beginning with “mammogra-” (Table 3). The high prevalence of these words is understandable since they were central to the topic of conversation, but the words’ low explanation ratio could have been affected by the standardized patient’s necessary use of the words to begin conversation. When these words were removed for the sensitivity analysis described in the results section, however, the jargon count was still high, and the explanation ratio was still quite small. In addition, the finding that 60% and 17% of residents chose to explain the words “mammogram” and “prostate,” respectively (Table 3), suggests that some residents still thought it was necessary to explain these words even though they had already been used by the patient.

Regardless of the size of the problem, we are concerned about the challenge that jargon presents for patients with limited health literacy or English skills, and for patients who are ethnically or culturally different from their clinicians.5 Patients with high health literacy may have sufficient understanding of words like “prostate” and therefore might not need an explanation, but clinicians should be cognizant that patients may use words that they do not entirely understand and consider taking a conservative approach of providing an explanation when there is doubt about likely understanding.3–6

Several research questions remain. The finding that most residents explained at least one jargon word suggests that they may already be aware that misunderstanding of certain words is possible; thus, studies should investigate whether clinicians tend to overestimate their patients’ vocabularies or if there is some other reason why jargon is often unexplained. A statewide study of counseling after newborn screening currently in progress will allow us to quantify the association between jargon usage and comprehension in that population.33

In the time since this manuscript was first submitted, two other research groups have developed methods to quantify jargon usage. In one study of primary care visits,34 Castro et al. operationalized jargon as either “clinical or technical terms with only one meaning listed in a medical dictionary (e.g., hemoglobin A1c)” or “clinical terms used in health care contexts with distinct meanings in lay contexts (e.g., your weight is stable)” (p. s86). In another study, Keselman et al. developed a “predictive familiarity model” based on input to a multiple regression formula from text frequencies found in Reuter’s news reports, queries to an Internet health search engine, queries to a general Internet search engine, and further input from 41 laypersons.35 The model was used to categorize 45 words as either “likely,” “somewhat likely,” or “unlikely” to be familiar to laypersons. This line of research is a positive development. Future research comparing the models, their generalizability, costs, and their relationship to care outcomes will advance the field further.

In our view, the most important area for future jargon research is the development of metrics that can be applied on a population-wide scale with the ultimate goal of reducing jargon usage and improving clinicians’ explanations. To be successful on these large scales, methods will need to be quantitatively reliable, concrete enough for use by quality improvement professionals with typical training, and able to function on a lean budget. We have adapted a methodological approach from Quality Improvement because of that field’s track record for improving other complex clinician behaviors over large populations.36 The main goal of this project was to extend our previous work17–22 into a method that will reliably and affordably quantify jargon usage and explanations for use in population-based quality improvement projects.

In summary, the high prevalence of jargon words and low prevalence of jargon explanations suggests that there may be problems with communication and patient comprehension during discussions about cancer screening. Our explicit-criteria method is concrete enough for use by quality improvement professionals and customizable for different clinical topics. Since it is not possible to draw conclusions about individuals based on population-based data, we advise clinicians to approach each patient as an individual, regardless of the patients’ apparent health literacy, and to be conservative about word choice, explanation, and assessment of understanding. Reduced use of jargon and improved explanations by individual clinicians or over entire populations are likely to result in increased communication effectiveness and patient participation in care.

Acknowledgments

Lindsay Deuster was supported by a training grant from the National Heart, Lung and Blood Institute (T35-HL72483-24). Dr. Farrell was supported in part by grant K01HL072530 from the National Heart, Lung, and Blood Institute. An earlier version of this paper was presented as a poster at the 2007 annual meeting of the Society of General Internal Medicine. The authors are grateful to Dr. Jeffrey Stein for assistance with educational workshops and transcript proofreading, and to Dr. Richard Frankel for very helpful critique and suggestions about the manuscript.

Conflict of Interest

None disclosed.

References

- 1. Donabedian A. The quality of care. How can it be assessed? JAMA. 1988;260(12):1743–8.

- 2. Roter DL, Hall JA, eds. Doctors Talking with Patients, Patients Talking with Doctors: Improving Communication in Medical Visits. Auburn House: Westport, Connecticut; 1992.

- 3. Coulehan JL, Block MR. The medical interview: mastering skills for clinical practice. 5th ed. Philadelphia: F.A. Davis Co.; 2006:409. xix.

- 4. Makoul G. Essential elements of communication in medical encounters: the Kalamazoo consensus statement. Acad Med. 2001;76(4):390–3.

- 5. Nielsen-Bohlman L, Panzer A, Kindig D, eds. Health Literacy: A Prescription to End Confusion. National Academies Press: Washington, DC; 2004.

- 6. Smith RC, Marshall-Dorsey AA, Osborn GG, et al. Evidence-based guidelines for teaching patient-centered interviewing. Patient Educ Couns. 2000;39(1):27–36.

- 7. Stillman PL, Sabers DL, Redfield DL. The use of paraprofessionals to teach interviewing skills. Pediatrics. 1976;57(5):769–74.

- 8. The American Heritage Dictionary of the English Language. Fourth ed. Boston, Massachusetts: Houghton Mifflin Company; 2006.

- 9. American Academy of Family Physicians. Summary of AAFP Recommendations for Clinical Preventive Services, Revision 6.4. 2007 [accessed 06/04/2008]; Available from: http://www.aafp.org/online/en/home/clinical/exam.html.

- 10. American College of Physicians. Screening for prostate cancer. Ann Intern Med. 1997;126(6):480–4.

- 11. American Urological Association. Prostate-specific antigen (PSA) best practice policy. Oncology. 2000;14(2):267–72, 277–8, 280.

- 12. Smith RA, Cokkinides V, Eyre HJ. Cancer screening in the United States, 2007: a review of current guidelines, practices, and prospects. CA Cancer J Clin. 2007;57(2):90–104.

- 13. U.S. Preventive Services Task Force. Screening for prostate cancer: recommendation and rationale. Ann Intern Med. 2002;137(11):915–6.

- 14. Veterans Health Administration, ed. VHA Handbook 1120.2 Health Promotion and Disease Prevention Program. Department of Veterans Affairs: Washington, DC; 1999.

- 15. Qaseem A, Snow V, Sherif K, Aronson M, Weiss KB, Owens DK. Screening mammography for women 40 to 49 years of age: a clinical practice guideline from the American College of Physicians. Ann Intern Med. 2007;146(7):511–5.

- 16. Ransohoff DF, Harris RP. Lessons from the mammography screening controversy: can we improve the debate? Ann Intern Med. 1997;127(11):1029–34.

- 17. Donovan J, Deuster L, Christopher SA, Farrell MH. Residents’ precautionary discussion of emotions during communication about cancer screening, poster at 2007 annual meeting of the Society for General Internal Medicine, Toronto, Ontario, Canada.

- 18. Donovan JJ, Farrell MH, Deuster L, Christopher SA. “Precautionary empathy” by child health providers after newborn screening. Poster at International Conference on Communication and Health Care, Charleston, SC; 2007.

- 19. Farrell MH, Deuster L, Donovan J, Christopher SA. Pediatric residents’ use of jargon during counseling about newborn genetic screening results. Pediatrics, in press, August 2008.

- 20. Farrell MH, Kuruvilla PE. Assessment of understanding: A quality indicator for communication after newborn genetic screening. Arch Pediatr Adolesc Med. 2008;2(3):199–204.

- 21. Farrell MH, La Pean A, Ladouceur L. Content of communication by pediatric residents after newborn genetic screening. Pediatrics. 2005;116(6):1492–8.

- 22. La Pean A, Farrell MH. Initially misleading communication of carrier results after newborn genetic screening. Pediatrics. 2005;116(6):1499–505.

- 23. Bylund CL, Brown RF, di Ciccone BL, Levin TT, Gueguen JA, Hill C, Kissane DW. Training faculty to facilitate communication skills training: Development and evaluation of a workshop. Patient Educ Couns. 2008;70(3):430–6.

- 24. Lang F, Everett K, McGowen R, Bennard B. Faculty development in communication skills instruction: insights from a longitudinal program with “real-time feedback”. Acad Med. 2000;75(12):1222–8.

- 25. Braddock CH, Edwards KA, Hasenberg NM, Laidley TL, Levinson W. Informed decision making in outpatient practice: time to get back to basics. JAMA. 1999;282(24):2313–20.

- 26. Graber DA. The Theoretical Base: Schema Theory. In: Graber DA, ed. Processing the News: How People Tame the Information Tide. New York: Longman; 1988:27–31.

- 27. Miller GA. The magical number seven, plus or minus two: some limits on our capacity for processing information. Psychol Rev. 1994;101(2):343–52.

- 28. Seel N. Mental Models in Learning Situations. Adv Psychol. 2006;138:85–110.

- 29. White JD, Carlston DE. Consequences of Schemata for Attention, Impressions, and Recall in Complex Social Interactions. J Pers Soc Psychol. 1983;45(3):538–49.

- 30. Roter D. Roter Interaction Analysis System Coding Manual. [accessed 06/04/2008]; Available from: http://www.rias.org/manual.

- 31. Feinstein A. Clinical Epidemiology: The Architecture of Clinical Research. Philadelphia: WB Saunders; 1985.

- 32. Stedman TL. Stedman’s medical dictionary. 28th ed. Philadelphia: Lippincott Williams & Wilkins; 2006. 1 v. (various pagings).

- 33. Farrell MH. R01 HL086691, Improvement of communication process and outcomes after newborn genetic screening. National Heart, Lung, and Blood Institute: Medical College of Wisconsin; 2008.

- 34. Castro CM, Wilson C, Wang F, Schillinger D. Babel babble: physicians’ use of unclarified medical jargon with patients. Am J Health Behav. 2007;31(Suppl 1):S85–S95.

- 35. Keselman A, Tse T, Crowell J, Browne A, Ngo L, Zeng Q. Assessing consumer health vocabulary familiarity: an exploratory study. J Med Internet Res. 2007;9(1):e5.

- 36. Jencks SF, Huff ED, Cuerdon T. Change in the Quality of Care Delivered to Medicare Beneficiaries, 1998–1999 to 2000–2001. JAMA. 2003;289(3):305–12.