Abstract

The methodology of objective assessment, which defines image quality in terms of the performance of specific observers on specific tasks of interest, is extended to temporal sequences of images with random point spread functions and applied to adaptive imaging in astronomy. The tasks considered include both detection and estimation, and the observers are the optimal linear discriminant (Hotelling observer) and the optimal linear estimator (Wiener estimator). A general theory of first- and second-order spatiotemporal statistics in adaptive optics is developed. It is shown that the covariance matrix can be rigorously decomposed into three terms representing the effects of measurement noise, the random point spread function, and the random nature of the astronomical scene. Figures of merit are developed, and computational methods are discussed.

Keywords: 110.0110, 110.3000, 110.4280, 010.0010, 010.7350

1. INTRODUCTION

Scientific and medical images are acquired for specific purposes, and the quality of an imaging system is ultimately determined by how well the images fulfill those purposes. In broad terms the purpose, or task, of the imaging system is to learn something about the object that produced the image. More specifically, the tasks of interest can be divided generically into classification and estimation. In a classification task, the goal is to label the object, or to say to which of two or more classes it belongs. Estimation tasks are concerned with extraction of numerical information from the images.

How well the task can be performed depends not only on the task and imaging system but also on the means by which the task is performed, or the observer. For classification tasks, the observer is often a human, such as a radiologist or photointerpreter, and some measure of classification accuracy can be used as a figure of merit for the combined performance of the imaging system and the observer. Alternatively, images can be classified by computer algorithms or mathematical models. It is possible in many cases to construct ideal observers that achieve the best possible performance on a given task with images from a given imaging system; performance of an ideal observer can be regarded as a figure of merit for the imaging system alone, since it does not depend on the capabilities of humans, ad hoc feature-extraction schemes, or other suboptimal classification methods.

Estimation tasks can also be performed by humans, but it is more common to use a computer algorithm to analyze the image and report numerical values for one or more parameters of interest. Again, estimation algorithms that are optimal in some statistical sense can be used to obtain figures of merit for the imaging system itself, but as with classification tasks, this metric will depend on the specific estimation task chosen.

This task-based approach to image quality, often called objective assessment, is now well established in radiological imaging, and in fact virtually mandatory in that field, but it is widely applicable to other areas of imaging as well. For a comprehensive review and discussion of both medical and nonmedical applications, see Barrett and Myers.1

In the first paper of this series,2 it was emphasized that task performance is inherently statistical and that calculation or measurement of objective performance has to account for all sources of image randomness, including the randomness of the objects themselves or the background on which they are superimposed. That paper examined a variety of estimation and classification tasks with both optimal and suboptimal observers, and it derived relationships between the objective figures of merit for estimation and classification tasks. An important conclusion of that paper is that not only the absolute level of image noise, but also its correlation structure, is important for both kinds of task. Image correlations can be introduced by the image detector or subsequent image processing or reconstruction, but they are also inherent in the objects being imaged.

The second paper in the series3 examined Fourier methods for quantifying task performance. Though familiar Fourier techniques are rigorously applicable only for linear, shift-invariant imaging systems with stationary noise, that paper considered a more general descriptor called the Fourier crosstalk matrix, which is applicable to any linear imaging system. The crosstalk matrix was related to the Fisher information matrix for estimation of Fourier coefficients and used to discuss classification and estimation tasks.

The third paper in the series4 looked specifically at classification tasks with the ideal observer. It developed the theory of the ideal observer and set the stage for practical computation of its performance in radiological imaging.1,5–7

The goal of the present paper is to show how the methodology of objective assessment of image quality can be applied to an important nonradiological imaging area, namely astronomical adaptive optics (AO). It should serve as a case study of how the various sources of randomness in a complex imaging system can be systematically enumerated and analyzed and how they affect task performance. In addition, this paper adds to the methodology of objective assessment in two respects: It considers the effect of a random system operator, and it analyzes task performance on sequences of correlated images.

Section 2 is a background section, containing little that is new but introducing the viewpoint and notation used in the remainder of the paper. In particular, the critical concept of multiply stochastic images is introduced and integrated into specific figures of merit for task performance.

Section 3 is a detailed statistical analysis of a generic AO system, and Section 4 applies the results of the analysis to task-based assessment of image quality. The goal of Section 5 is to show that the resulting figures of merit can actually be computed in practice. Section 6 summarizes the results and conclusions of the analysis.

2. BACKGROUND

A. Descriptions of Digital Imaging Systems

A digital imaging system is one that delivers a discrete set of data, $\{g_m, m=1,\ldots,M\}$, or equivalently an M × 1 data vector $\mathbf g$. For a single static image, M is the number of pixels in the image, but multiple image frames indexed by time, wavelength, or viewing angle can also be included in the data vector.

The object itself is not discrete, even though we often model it as such; instead, a real-world object is a function of some number of continuous variables. We shall write this function as $f(\mathbf r)$ with the understanding that the vector $\mathbf r$ includes all independent variables needed to describe the object, including time if the object is not static. In general, $\mathbf r$ has q components, where q=2 for a two-dimensional (2D) static object. When we do not wish to be specific about the independent variables, we shall denote the object as $\mathbf f$, with the boldface indicating a vector in a Hilbert space.1

The components of $\mathbf g$ are random variables because the object being viewed is randomly chosen from some ensemble of objects, because of measurement noise, and possibly because the imaging system itself is random. Object randomness is discussed in Subsection 2.B below, and consideration of random systems is postponed to Subsection 3.B. For now, we define an average data vector $\bar{\mathbf g}$, where the overbar indicates an ensemble average over the measurement noise for a given object and imaging system.

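A system is said to be linear if each component of $\bar{\mathbf g}$ is a linear functional of $\mathbf f$. The most general form of this linear functional is

$$\bar g_m = \int_\infty d^q r\; h_m(\mathbf r)\, f(\mathbf r), \qquad m = 1,\ldots,M, \tag{2.1}$$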

where the index ∞ indicates that the integral runs over the complete range of all q variables that make up r. In abstract operator form, Eq. (2.1) can also be written as

$$\bar{\mathbf g} = \mathcal H \mathbf f, \tag{2.2}$$

where the linear operator $\mathcal H$ is defined by the M integrals in Eq. (2.1). Since $\mathcal H$ maps a function of continuous variables to a discrete vector, it is referred to as a continuous-to-discrete, or CD, operator.1 The kernel $h_m(\mathbf r)$ in Eq. (2.1) is called the sensitivity function of the linear imaging system. It is also a point response function in the sense that $h_m(\mathbf r_0)$ is the mean response of the mth measurement when the object is a point, $\delta(\mathbf r - \mathbf r_0)$, but of course the integral in Eq. (2.1) is not a convolution.

Since the data vector has a finite dimension and the object is a vector in an infinite-dimensional Hilbert space, CD operators necessarily have null functions. The only components of $\mathbf f$ that can be captured by $\mathcal H$, even in the absence of noise, are linear combinations of the sensitivity functions.
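A minimal numerical sketch of Eq. (2.1) and of a null function may help fix ideas. It assumes a 1D object, a fine quadrature grid in place of the continuous variable, and Gaussian sensitivity functions; the grid, the pixel count, and the names `H`, `mean_data`, and `f_null` are illustrative choices, not quantities from the text.

```python
import numpy as np

# Fine grid standing in for the continuous variable r (1D for simplicity).
r = np.linspace(0.0, 1.0, 2001)
dr = r[1] - r[0]

# Hypothetical sensitivity functions h_m(r): M Gaussian pixel responses.
M = 8
centers = (np.arange(M) + 0.5) / M
H = np.exp(-0.5 * ((r[None, :] - centers[:, None]) / 0.05) ** 2)  # shape (M, len(r))

def mean_data(f):
    """Approximate g_bar_m = integral of h_m(r) f(r) dr by a Riemann sum."""
    return H @ f * dr

f = 1.0 + np.sin(2 * np.pi * r)   # an arbitrary object
g_bar = mean_data(f)

# Build a null function: take a test function and remove its projection
# onto the span of the sensitivity functions (least-squares residual).
test_fn = np.cos(40 * np.pi * r)
coeffs, *_ = np.linalg.lstsq(H.T, test_fn, rcond=None)
f_null = test_fn - H.T @ coeffs

g_null = mean_data(f_null)            # numerically zero for every pixel
g_shifted = mean_data(f + f_null)     # indistinguishable from g_bar
```

In this sketch `f_null` has substantial energy, yet adding it to the object leaves the mean data unchanged, which is the practical meaning of a null function.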

B. Random Objects and Doubly Stochastic Images

For a single object f, the conditional probability density function (PDF) of the image, denoted pr(g|f), describes the randomness of the measurement noise only. This PDF (or probability mass function in the case of discrete random variables) usually has a simple and well-understood form, for example a multivariate Gaussian for electronic readout noise or a Poisson in the case of photon-counting statistics.

To fully characterize random objects, we would need a PDF on f; if we had such a thing, we could write the final PDF on the data as

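$$\mathrm{pr}(\mathbf g) = \int d\mathbf f\; \mathrm{pr}(\mathbf g|\mathbf f)\, \mathrm{pr}(\mathbf f), \tag{2.3}$$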

where in principle the integral is over all parameters needed to specify the object. An alternative notation that means the same thing is

$$\mathrm{pr}(\mathbf g) = \big\langle \mathrm{pr}(\mathbf g|\mathbf f) \big\rangle_{\mathbf f}, \tag{2.4}$$

where the angle brackets denote an average over the quantities indicated by the subscript, in this case over an ensemble of objects.

There are many situations where the average in Eq. (2.4) can be performed analytically or approximated numerically without an explicit PDF for the object ensemble. Numerically, Monte Carlo sampling methods make it possible to do the averaging whenever we can simulate the objects, though the computational requirements are likely to be severe. Analytically, multivariate normal and log-normal models are tractable even when the dimensionality of the object description is very large, and there are mathematical models known as lumpy and clustered lumpy backgrounds8,9 that accurately represent tissue distributions encountered in medical imaging yet remain mathematically tractable even in the limit of an infinite-dimensional Hilbert space for the object. Also, there is a large literature on constructing lower-dimensional representations that capture the essential features of interesting objects by the use of wavelets10,11 or independent-components analysis.12,13

A survey of the state of the art in object statistics is given in Barrett and Myers,1 and some examples relevant to astronomy will be given in Section 4 and Appendix A.

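The conditional mean image $\bar{\mathbf g}(\mathbf f)$ is defined as the average of $\mathbf g$ with respect to pr(g|f). If we also average over random objects, the overall mean image, denoted $\bar{\bar{\mathbf g}}$, is given in component form by

$$\bar{\bar g}_m = \big\langle \bar g_m(\mathbf f) \big\rangle_{\mathbf f} = \big\langle \langle g_m \rangle_{\mathbf g|\mathbf f} \big\rangle_{\mathbf f}. \tag{2.5}$$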

For a linear imaging system,

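$$\bar{\bar{\mathbf g}} = \mathcal H\, \bar{\mathbf f}, \tag{2.6}$$

where $\bar{\mathbf f} = \langle \mathbf f \rangle_{\mathbf f}$ is the mean object.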

Conditional and overall covariance matrices can be defined similarly. The conditional covariance matrix, which describes the measurement noise, is given in component form as

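$$[\mathbf K_{\mathbf g|\mathbf f}]_{mm'} = \big\langle [g_m - \bar g_m(\mathbf f)]\,[g_{m'} - \bar g_{m'}(\mathbf f)] \big\rangle_{\mathbf g|\mathbf f}, \tag{2.7}$$

or in outer-product form as

$$\mathbf K_{\mathbf g|\mathbf f} = \big\langle [\mathbf g - \bar{\mathbf g}(\mathbf f)]\,[\mathbf g - \bar{\mathbf g}(\mathbf f)]^t \big\rangle_{\mathbf g|\mathbf f}. \tag{2.8}$$

For Poisson noise, $[\mathbf K_{\mathbf g|\mathbf f}]_{mm'} = \bar g_m(\mathbf f)\, \delta_{mm'}$.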

The overall covariance matrix is defined by

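$$\mathbf K_{\mathbf g} = \Big\langle \big\langle [\mathbf g - \bar{\bar{\mathbf g}}]\,[\mathbf g - \bar{\bar{\mathbf g}}]^t \big\rangle_{\mathbf g|\mathbf f} \Big\rangle_{\mathbf f}. \tag{2.9}$$

Now add and subtract $\bar{\mathbf g}(\mathbf f)$ in each factor:

$$\mathbf K_{\mathbf g} = \Big\langle \big\langle [\mathbf g - \bar{\mathbf g}(\mathbf f) + \bar{\mathbf g}(\mathbf f) - \bar{\bar{\mathbf g}}]\,[\mathbf g - \bar{\mathbf g}(\mathbf f) + \bar{\mathbf g}(\mathbf f) - \bar{\bar{\mathbf g}}]^t \big\rangle_{\mathbf g|\mathbf f} \Big\rangle_{\mathbf f} = \Big\langle \big\langle [\mathbf g - \bar{\mathbf g}(\mathbf f)]\,[\mathbf g - \bar{\mathbf g}(\mathbf f)]^t \big\rangle_{\mathbf g|\mathbf f} \Big\rangle_{\mathbf f} + \big\langle [\bar{\mathbf g}(\mathbf f) - \bar{\bar{\mathbf g}}]\,[\bar{\mathbf g}(\mathbf f) - \bar{\bar{\mathbf g}}]^t \big\rangle_{\mathbf f}. \tag{2.10}$$

Note that the cross term has vanished identically, since

$$\Big\langle \big\langle [\mathbf g - \bar{\mathbf g}(\mathbf f)]\,[\bar{\mathbf g}(\mathbf f) - \bar{\bar{\mathbf g}}]^t \big\rangle_{\mathbf g|\mathbf f} \Big\rangle_{\mathbf f} = \big\langle [\bar{\mathbf g}(\mathbf f) - \bar{\mathbf g}(\mathbf f)]\,[\bar{\mathbf g}(\mathbf f) - \bar{\bar{\mathbf g}}]^t \big\rangle_{\mathbf f} = 0. \tag{2.11}$$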

Thus, with no assumptions about independence of g and f, we can write

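$$\mathbf K_{\mathbf g} = \big\langle \mathbf K_{\mathbf g|\mathbf f} \big\rangle_{\mathbf f} + \mathbf K_{\bar{\mathbf g}}, \qquad \mathbf K_{\bar{\mathbf g}} \equiv \big\langle [\bar{\mathbf g}(\mathbf f) - \bar{\bar{\mathbf g}}]\,[\bar{\mathbf g}(\mathbf f) - \bar{\bar{\mathbf g}}]^t \big\rangle_{\mathbf f}, \tag{2.12}$$

where the first term describes the measurement noise and the second term arises from object variability. For most kinds of noise, including Poisson noise in photon-counting detectors and electronic readout noise in detector arrays, the noise term $\langle \mathbf K_{\mathbf g|\mathbf f} \rangle_{\mathbf f}$ (the conditional covariance averaged over objects) is diagonal.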

The second term in Eq. (2.12) is not diagonal. Recall that the object is a random process f(r) and hence described by an autocovariance function:

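$$k_f(\mathbf r, \mathbf r') = \big\langle [f(\mathbf r) - \bar f(\mathbf r)]\,[f(\mathbf r') - \bar f(\mathbf r')] \big\rangle_{\mathbf f}. \tag{2.13}$$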

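The autocovariance function can be regarded as the kernel of an integral operator $\mathcal K_f$, and for a linear imaging system, the second term in the decomposition can be written formally as

$$\mathbf K_{\bar{\mathbf g}} = \mathcal H\, \mathcal K_f\, \mathcal H^\dagger, \tag{2.14}$$

where $\mathcal H^\dagger$ is the adjoint1 of the operator $\mathcal H$.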
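The decomposition in Eq. (2.12) can be checked by simulation. The sketch below is a toy example under stated assumptions: the object is represented by three Gaussian-distributed coefficients, the system is a 4 × 3 matrix, and the noise is Poisson. The matrices `H` and `A`, the mean `f_mean`, and the sample size are all hypothetical values chosen only for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical discrete stand-in for a linear system: 4 pixels, 3 object coefficients.
H = np.array([[1.0, 0.5, 0.0],
              [0.5, 1.0, 0.5],
              [0.0, 0.5, 1.0],
              [0.3, 0.3, 0.3]])
f_mean = np.array([40.0, 50.0, 60.0])
A = np.array([[3.0, 0.0, 0.0],
              [1.0, 2.0, 0.0],
              [0.0, 1.0, 2.0]])
K_f = A @ A.T                       # object covariance

n = 200_000
f = f_mean + rng.standard_normal((n, 3)) @ A.T   # random objects with covariance K_f
g_bar = f @ H.T                                   # conditional mean images
g = rng.poisson(g_bar)                            # Poisson measurement noise

# Doubly stochastic covariance estimated from the samples.
K_g_sample = np.cov(g, rowvar=False)

# Two-term prediction: object-averaged Poisson noise term plus object term.
K_noise = np.diag(H @ f_mean)       # diagonal, equal to the overall mean image
K_object = H @ K_f @ H.T            # not diagonal
K_g_theory = K_noise + K_object
```

The noise term is diagonal while the object term is not, so the off-diagonal structure of `K_g_sample` comes entirely from object variability in this sketch.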

C. Tasks and Observers

This subsection provides a brief survey of key concepts from statistical decision theory. A more complete discussion can be found in many sources.1,14,15

1. Classification Tasks

In a classification task, the goal is to assign the object that produced an image to one of two or more classes. If the hypothesis that f belongs to the kth class is denoted Hk, then the probability law for the data when hypothesis Hk is true is pr(g|Hk). In terms of the PDFs discussed above,

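$$\mathrm{pr}(\mathbf g|H_k) = \big\langle \mathrm{pr}(\mathbf g|\mathbf f) \big\rangle_{\mathbf f|H_k} = \int d\mathbf f\; \mathrm{pr}(\mathbf g|\mathbf f)\, \mathrm{pr}(\mathbf f|H_k). \tag{2.15}$$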

When regarded as a function of Hk for a fixed (observed) g, pr(g|Hk) is referred to as the likelihood of the hypothesis for that data set.

A binary classification task is one where there are only two classes or hypotheses. In a signal-detection task, for example, the hypotheses are signal-absent and signal-present. If we assume that each image must be assigned without equivocation either to hypothesis H0 (e.g., signal-absent) or to H1, the decision on a binary task can be made in complete generality by computing some scalar test statistic t(g) from the data; the observer then decides on H1 if the test statistic is greater than a decision threshold and decides on H0 otherwise. The value of the threshold controls the trade-off between true positive decisions (correctly choosing H1) and false positive decisions (choosing H1 when H0 is true). In signal-detection problems, the true-positive fraction (TPF) is called the probability of detection, and the false-positive fraction (FPF) is called the false-alarm rate.

A plot of TPF versus FPF as the threshold is varied is called a receiver operating characteristic (ROC) curve. Meaningful figures of merit for binary classification include the TPF at a specified FPF (the Neyman–Pearson criterion), the area under the ROC curve (AUC), and certain detectability indices derived from the ROC curve. The probability of detection alone is not a meaningful metric since it can always be made large, even unity, simply by choosing a low threshold.
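As a small illustration of how an empirical ROC curve and its area can be obtained from samples of a test statistic, consider the following sketch. The function names `roc_points` and `auc_trapezoid` and the six sample values are hypothetical; the trapezoidal rule is one common choice for the area, not the only one.

```python
import numpy as np

def roc_points(t0, t1):
    """Sweep the decision threshold from high to low; return (FPF, TPF) arrays."""
    thresholds = np.sort(np.concatenate([t0, t1]))[::-1]
    fpf = np.array([np.mean(t0 > th) for th in thresholds])
    tpf = np.array([np.mean(t1 > th) for th in thresholds])
    # Add the trivial operating points (0, 0) and (1, 1).
    return np.r_[0.0, fpf, 1.0], np.r_[0.0, tpf, 1.0]

def auc_trapezoid(fpf, tpf):
    """Area under the ROC curve by the trapezoidal rule."""
    return float(np.sum(np.diff(fpf) * 0.5 * (tpf[1:] + tpf[:-1])))

# Test-statistic samples under signal-absent (t0) and signal-present (t1).
t0 = np.array([0.0, 1.0, 2.0])
t1 = np.array([1.5, 2.5, 3.5])

fpf, tpf = roc_points(t0, t1)
auc = auc_trapezoid(fpf, tpf)
```

For these samples, 8 of the 9 signal-present/signal-absent pairs are ordered correctly, and the trapezoidal area equals 8/9. The last operating point has TPF equal to 1 but also FPF equal to 1, which is why the probability of detection alone is uninformative.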

Another common figure of merit for binary classification tasks is the signal-to-noise ratio (SNR) on the test statistic. Not to be confused with the more common pixel SNR, the SNR for a specific test statistic t(g) is defined as

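$$\mathrm{SNR}_t^2 = \frac{\big[\langle t(\mathbf g)|H_1 \rangle - \langle t(\mathbf g)|H_0 \rangle\big]^2}{\tfrac12 \mathrm{Var}\{t(\mathbf g)|H_1\} + \tfrac12 \mathrm{Var}\{t(\mathbf g)|H_0\}}, \tag{2.16}$$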

where ⟨t(g)|Hk⟩ is the expected value of the test statistic when hypothesis Hk is true and Var{t(g)|Hk} is the corresponding variance. If the test statistic is normally distributed under both hypotheses, the AUC is uniquely determined by SNRt.
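The link between the SNR of a normally distributed test statistic and the AUC can be illustrated numerically. The sketch assumes the usual form of the SNR, with the average of the two variances in the denominator, and uses the standard result AUC = 1/2 + 1/2 erf(SNR_t/2) for normal statistics; the sample sizes, means, and function names are illustrative.

```python
import numpy as np
from math import erf

def snr_t(t0, t1):
    """SNR of a scalar test statistic from samples under H0 (t0) and H1 (t1)."""
    num = np.mean(t1) - np.mean(t0)
    den = np.sqrt(0.5 * np.var(t1, ddof=1) + 0.5 * np.var(t0, ddof=1))
    return num / den

def auc_pairwise(t0, t1):
    """Fraction of (H1, H0) sample pairs that are ordered correctly."""
    return float(np.mean(t1[:, None] > t0[None, :]))

rng = np.random.default_rng(1)
t0 = rng.normal(0.0, 1.0, 2000)    # test statistic, signal absent
t1 = rng.normal(1.5, 1.0, 2000)    # test statistic, signal present

snr = snr_t(t0, t1)
auc_from_snr = 0.5 + 0.5 * erf(snr / 2.0)   # valid for normal test statistics
auc_mc = auc_pairwise(t0, t1)
```

The two AUC values agree to within sampling error here; for non-normal test statistics the SNR and the AUC need not be related in this simple way.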

2. Optimal Observers for Binary Classification

The ideal observer on a binary task is defined variously as one that maximizes the AUC, maximizes the TPF at all specified FPFs, or minimizes a cost function defined in terms of TPF and FPF. By any of these criteria, the test statistic used by the ideal observer is the likelihood ratio $\Lambda(\mathbf g) = \mathrm{pr}(\mathbf g|H_1)/\mathrm{pr}(\mathbf g|H_0)$, so the ideal observer for a binary problem is one that calculates either the likelihood ratio or its logarithm $\lambda(\mathbf g) = \ln \Lambda(\mathbf g)$. There are several examples where this computation is feasible,5–7,16,17 but in many problems $\Lambda(\mathbf g)$ and $\lambda(\mathbf g)$ are complicated nonlinear functions of the data for which no closed form is possible, and in any case their computation requires knowledge of the data PDF under both hypotheses.

A more tractable alternative to the ideal observer is the ideal linear observer, often called the Hotelling observer1,2,18,19 in the literature on objective assessment of image quality. Linear observers compute linear discriminants, so the test statistic has the form $t(\mathbf g) = \mathbf w^t \mathbf g$, where $\mathbf w$ is an M × 1 vector called the template, and $\mathbf w^t \mathbf g$ denotes its scalar product with the M × 1 data vector. The Hotelling discriminant uses a template that maximizes a certain class separability measure,20 and if the classes are equally probable it also maximizes the SNR defined in Eq. (2.16). Linear test statistics are usually normally distributed by virtue of the central limit theorem, and in this case maximizing this SNR is equivalent to maximizing the AUC among linear observers. It can also be shown that the Hotelling test statistic is equal to the log-likelihood ratio if the raw data are normally distributed with the same covariance under both hypotheses, so the Hotelling observer is identical to the ideal observer in this case and thus maximizes the AUC among all observers, not just linear ones.

Computation of the Hotelling test statistic requires only the overall mean vectors and the covariance matrices of the data under the two hypotheses. The test statistic is given by

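$$t_{\mathrm{Hot}}(\mathbf g) = \mathbf w^t \mathbf g = [\bar{\bar{\mathbf g}}_1 - \bar{\bar{\mathbf g}}_0]^t\, \mathbf K_{\mathbf g}^{-1}\, \mathbf g, \tag{2.17}$$

where $\bar{\bar{\mathbf g}}_k$ is the overall mean data vector under hypothesis $H_k$ and $\mathbf K_{\mathbf g}$ is the average of the overall covariance matrices under the two hypotheses.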

The inverse of the average covariance matrix is related to the familiar signal-processing operation of prewhitening, and for this reason, the Hotelling observer is sometimes called a prewhitening matched filter; unless the noise is stationary, however, the prewhitening and matched filtering cannot be carried out in the Fourier domain.

The Hotelling discriminant (2.17) should not be confused with the Fisher discriminant. Basically the difference is that the Hotelling discriminant uses ensemble means and covariances and the Fisher discriminant uses sample means and covariances. In fact, the Fisher discriminant is almost never applicable to raw pixel values in images, since the dimension of the covariance matrix is M × M, where M is the number of pixels, and a sample covariance of this size would be invertible only if the number of sample images were greater than M − 1, which is very difficult to achieve. As we shall see in detail in Section 5, however, it is indeed possible to estimate and invert the ensemble covariance used by the Hotelling observer.

A figure of merit for the Hotelling observer is the Hotelling SNR, sometimes called the Hotelling trace; it is given by

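$$\mathrm{SNR}_{\mathrm{Hot}}^2 = [\bar{\bar{\mathbf g}}_1 - \bar{\bar{\mathbf g}}_0]^t\, \mathbf K_{\mathbf g}^{-1}\, [\bar{\bar{\mathbf g}}_1 - \bar{\bar{\mathbf g}}_0] = \mathrm{tr}\big\{ \mathbf K_{\mathbf g}^{-1}\, [\bar{\bar{\mathbf g}}_1 - \bar{\bar{\mathbf g}}_0]\,[\bar{\bar{\mathbf g}}_1 - \bar{\bar{\mathbf g}}_0]^t \big\}, \tag{2.18}$$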

where tr{·} denotes the trace (sum of the diagonal elements) of the matrix.
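The Hotelling template and SNR are straightforward to compute once the means and covariances are in hand. The following sketch assumes a 16-pixel toy problem with an exponentially correlated object term plus a diagonal noise term, and the same covariance under both hypotheses; `hotelling_template`, `template_snr2`, and all numerical values are hypothetical.

```python
import numpy as np

def hotelling_template(mean0, mean1, K0, K1):
    """Return the Hotelling template w and the Hotelling SNR^2."""
    delta = mean1 - mean0                 # mean difference image
    K_avg = 0.5 * (K0 + K1)               # average covariance
    w = np.linalg.solve(K_avg, delta)     # w = K^{-1} delta, without forming K^{-1}
    return w, float(delta @ w)

# Toy covariance: correlated object variability plus diagonal measurement noise.
M = 16
idx = np.arange(M)
K_object = 4.0 * np.exp(-np.abs(idx[:, None] - idx[None, :]) / 3.0)
K_noise = np.diag(np.full(M, 10.0))
K = K_object + K_noise

mean0 = np.full(M, 10.0)                  # signal-absent mean
signal = np.zeros(M)
signal[6:10] = 2.0
mean1 = mean0 + signal                    # signal-present mean

w, snr2_hot = hotelling_template(mean0, mean1, K, K)

def template_snr2(w_any):
    """SNR^2 of an arbitrary linear template for this toy problem."""
    return float((w_any @ signal) ** 2 / (w_any @ K @ w_any))

snr2_npw = template_snr2(signal)          # matched filter without prewhitening
```

In this sketch the non-prewhitening matched filter never exceeds the Hotelling SNR, which follows from the Cauchy–Schwarz inequality. Solving the linear system instead of inverting the covariance is a design choice that is usually better conditioned.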

Often the Hotelling observer is applied not to the raw data but to a data set of reduced dimensionality obtained by passing g through a set of linear filters; in this case it is referred to as the channelized Hotelling observer (CHO). The channels can be chosen to preserve the class separability or to construct an observer that accurately predicts the performance of human observers as measured by psychophysical studies. For a thorough review of the CHO and its many successful applications in medical imaging, see Barrett and Myers.1

3. Detection of Signals at Random Locations

When the signal location is random, the ideal decision strategy in Gaussian measurement noise is to subtract the mean background contribution at each pixel (assumed known), perform a prewhitening matched filter operation for each possible signal location, and exponentiate.21,22 The output of these operations is averaged over all possible locations of the signal to determine the ideal observer’s decision variable. A comparison with a threshold is then done to render a decision as to whether or not the signal is present in the scene. No location information is provided by this observer when the decision is made.

The Hotelling formalism allows signals to be random but runs into difficulty when the signal can be at a random location. If all locations in the field of view are equally probable, the mean difference image is a constant and the linear test statistic (2.17) conveys little information. In fact, no linear observer will perform well in this situation. Nevertheless, as we shall see, the Hotelling framework can still be quite useful in the presence of signal-location uncertainty.

If the only randomness in the signal is its location, it is natural to consider a linear detection strategy that applies a prewhitening matched filter to each of the possible signal locations. Typically, the location that gives the largest Hotelling test statistic is chosen as the tentative location of a signal, and that test statistic is compared with a threshold to decide between signal-present and signal-absent at that location. The operation of finding the maximum is nonlinear, so the overall operation is nonlinear.

If the inverse covariance is the same for each signal location, it can be precomputed and used for each location. Moreover, if the signal is large relative to a pixel, so that its image is approximately shift-invariant, there is no need to recompute the mean data vector for each possible location either. Then, for a signal with uniform location uncertainty, the ideal linear approach becomes one of scanning the prewhitening matched filter over the field of view, and the observer is referred to as a scanning Hotelling observer.23
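A scanning observer of this kind can be sketched in a few lines. The example assumes a 1D image, a known three-pixel signal profile, a known mean background, and a single covariance matrix that applies at every location; the names `signal_at` and `scan` and all numerical values are hypothetical, and location-dependent constant terms in the test statistic are ignored.

```python
import numpy as np

rng = np.random.default_rng(2)

M = 64
idx = np.arange(M)
# Assumed covariance: correlated background plus white measurement noise.
K = np.exp(-np.abs(idx[:, None] - idx[None, :]) / 4.0) + 0.5 * np.eye(M)
background = np.full(M, 20.0)             # known mean background
profile = np.array([2.0, 6.0, 2.0])       # signal profile, same at every location

def signal_at(loc):
    """Mean signal image when the signal starts at pixel index loc."""
    s = np.zeros(M)
    s[loc:loc + profile.size] = profile
    return s

locations = np.arange(M - profile.size + 1)
S = np.stack([signal_at(loc) for loc in locations])   # one row per candidate location
K_inv = np.linalg.inv(K)                  # precomputed once, reused for all locations

def scan(g):
    """Apply the prewhitening matched filter at every location; return the best one."""
    t = S @ (K_inv @ (g - background))
    best = int(np.argmax(t))               # the maximum makes the observer nonlinear
    return int(locations[best]), float(t[best])

# Simulate one signal-present image with correlated Gaussian fluctuations.
true_loc = 30
chol = np.linalg.cholesky(K)
g = background + signal_at(true_loc) + chol @ rng.standard_normal(M)
loc_hat, t_max = scan(g)
```

The value `t_max` would then be compared with a threshold to decide between signal-present and signal-absent, and `loc_hat` is the tentative location.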

When the image of the signal is location-dependent, the Hotelling framework can be further generalized to incorporate this information into the observer’s template at each signal location under test. This will be the case, for example, when the pixel size is large relative to the signal. Samson et al.16 investigated the problem of point-target detection when the image is comparable in size with a pixel and randomly located with respect to the pixel. Of course, other forms of signal randomness can be incorporated into the Hotelling formalism by the requisite adjustment in the expected data at each location.

There are several advantages to the Hotelling formalism over computation of the ideal observer’s test statistic in the location-uncertain task. The addition of a scanning mechanism to the Hotelling formalism yields a test statistic that is easily computed. Moreover, it was shown by Nolte and Jaarsma21 that the scanning Hotelling observer achieves a performance level that is nearly ideal in certain regimes, specifically ones in which the signal is equally likely at all locations and the noise variance is small. In addition, the scanning operation results in a determination of the signal’s location along with a test statistic for the detection task.

A useful way to characterize the performance on the joint detection–localization problem is with a localization ROC (LROC) curve,24 which is a plot of the probability of detection and correct localization versus the false-alarm rate; the figure of merit for this task is the area under the LROC curve. If only the probability of detection is of interest, area under the conventional ROC curve (AUC) can be used, even with the scanning strategy. In many cases the area under the LROC correlates well with the AUC for a signal at a fixed location as various system parameters are varied.25 For a discussion of observer strategies that maximize the area under the LROC curve, see Khurd and Gindi.26

4. Estimation Tasks

In a pure estimation task, an object of interest is known to be present, but we wish to determine numerical values for parameters that describe the object. We assemble these parameters into a vector $\boldsymbol\theta(\mathbf f)$, and the relevant likelihood is denoted $\mathrm{pr}(\mathbf g|\boldsymbol\theta)$. An estimate of $\boldsymbol\theta$ is denoted $\hat{\boldsymbol\theta}$. The bias and variance of $\hat{\boldsymbol\theta}$, often combined into a mean square error (MSE), are conventional figures of merit for the estimation task.

There is a well-known lower bound, called the Cramér–Rao bound, on the variance of any unbiased estimator.14,15 An unbiased estimator that achieves the bound is said to be efficient. An efficient estimator can be regarded as the ideal observer for an estimation problem, but in many problems no efficient estimator exists. A practical alternative is the maximum-likelihood (ML) estimator, which chooses the value of θ(f) that maximizes pr(g|θ) for the observed g. An ML estimator is efficient if an efficient estimator exists, and it is asymptotically efficient as more or better data are acquired.
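A simple case in which an efficient estimator exists is estimation of the amplitude of a known profile from Poisson data. The sketch below assumes the mean data are θ times a known profile `s`; the ML estimate is then the total counts divided by the sum of `s`, and the Cramér–Rao bound is θ divided by the sum of `s`. The profile, the true amplitude, and the sample size are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(5)

s = np.array([1.0, 4.0, 9.0, 4.0, 1.0])   # known signal profile (hypothetical)
theta_true = 20.0                         # amplitude to be estimated

def ml_estimate(g):
    """ML amplitude for g_m ~ Poisson(theta * s_m): total counts over total profile."""
    return g.sum(axis=-1) / s.sum()

# Fisher information is sum(s) / theta, so the bound is theta / sum(s).
crb = theta_true / s.sum()

# Monte Carlo check of bias and variance over many noise realizations.
g = rng.poisson(theta_true * s, size=(50_000, s.size))
estimates = ml_estimate(g)
bias = estimates.mean() - theta_true
variance = estimates.var(ddof=1)
```

In this toy problem the sample variance of the ML estimates matches the bound to within sampling error, and the bias is negligible.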

Another alternative is an ideal linear estimator, which computes a linear (or affine) functional of the data. A linear estimator is ideal if the bias is zero and the variance is as small as possible. Different forms of the ideal linear estimator use different degrees of prior information and different ways of computing the variance, but a useful one to highlight for this discussion is the generalized Wiener estimator. This estimator is unbiased in a global sense (the average of $\hat{\boldsymbol\theta}$ over all data $\mathbf g$ and over a prior distribution of $\boldsymbol\theta$ is equal to the prior mean $\bar{\boldsymbol\theta}$), and it minimizes the ensemble mean square error (EMSE) defined in the same global sense. For doubly stochastic data, this estimator is given by27

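$$\hat{\boldsymbol\theta}(\mathbf g) = \bar{\boldsymbol\theta} + \mathbf K_{\boldsymbol\theta,\mathbf g}\, \mathbf K_{\mathbf g}^{-1}\, [\mathbf g - \bar{\bar{\mathbf g}}], \tag{2.19}$$

where $\mathbf K_{\mathbf g}$ is the overall (doubly stochastic) covariance matrix of $\mathbf g$ and $\mathbf K_{\boldsymbol\theta,\mathbf g}$ is the cross-covariance of $\boldsymbol\theta$ and the data. The optimal EMSE that results from this estimator is given by

$$\mathrm{EMSE} = \mathrm{tr}\{\mathbf K_{\boldsymbol\theta}\} - \mathrm{tr}\big\{\mathbf K_{\boldsymbol\theta,\mathbf g}\, \mathbf K_{\mathbf g}^{-1}\, \mathbf K_{\boldsymbol\theta,\mathbf g}^t\big\}, \tag{2.20}$$

where $\mathbf K_{\boldsymbol\theta}$ is the prior covariance matrix of $\boldsymbol\theta$.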

The generalized Wiener estimator is the counterpart of the Hotelling observer in two respects: Both use prior knowledge of an ensemble of objects, and both form their output by a linear operation on prewhitened data [cf. Eqs. (2.17) and (2.19)]. For both, it is necessary to determine the overall data covariance and to be able to invert it.
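The generalized Wiener estimator and its EMSE can be illustrated with a linear Gaussian toy model. The sketch assumes data of the form g = Aθ + n with a Gaussian prior on θ and white Gaussian noise, for which the cross-covariance and data covariance have simple closed forms; the matrix `A`, the prior parameters, and the noise variance are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(3)

P, M = 2, 6
A = rng.standard_normal((M, P))           # hypothetical linear map from theta to mean data
theta_mean = np.array([1.0, -2.0])        # prior mean
K_theta = np.array([[1.0, 0.3],
                    [0.3, 0.5]])          # prior covariance
sigma2 = 0.4                              # white-noise variance

# Second-order statistics needed by the estimator.
K_tg = K_theta @ A.T                                  # cross-covariance of theta and g
K_g = A @ K_theta @ A.T + sigma2 * np.eye(M)          # overall data covariance
g_mean = A @ theta_mean                               # overall mean data
W = np.linalg.solve(K_g, K_tg.T).T                    # K_tg K_g^{-1} (K_g is symmetric)

def wiener(g):
    """Generalized Wiener estimate; g may be one vector or a batch of row vectors."""
    return theta_mean + (g - g_mean) @ W.T

emse_theory = np.trace(K_theta) - np.trace(W @ K_tg.T)

# Monte Carlo estimate of the ensemble mean square error.
n = 100_000
chol = np.linalg.cholesky(K_theta)
theta = theta_mean + rng.standard_normal((n, P)) @ chol.T
g = theta @ A.T + np.sqrt(sigma2) * rng.standard_normal((n, M))
emse_mc = float(np.mean(np.sum((wiener(g) - theta) ** 2, axis=1)))
```

The Monte Carlo EMSE agrees with the closed-form value to within sampling error, and both are smaller than the prior variance tr{K_θ}, which is the EMSE obtained by ignoring the data.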

3. STATISTICAL ANALYSIS OF ADAPTIVE OPTICS SYSTEMS

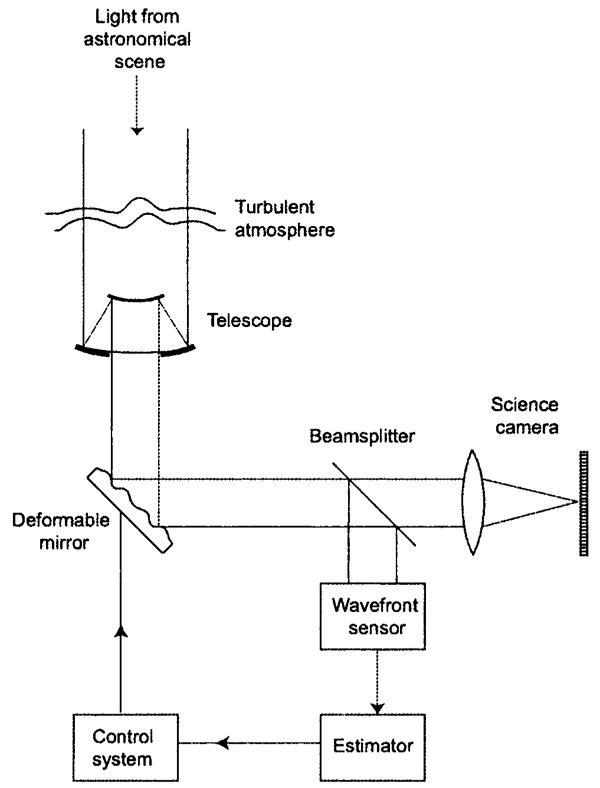

A generic AO system viewing an astronomical scene through a turbulent atmosphere is shown in Fig. 1. The astronomical scene consists of the object being studied (the science object), a reference object, which may consist of one or more natural or laser guide stars, and a background, defined as everything else in the field of view of the science camera. In some cases the reference object may be part of the science object, as when the task is to detect a faint companion around a known star, which then also functions as the guide star.

Fig. 1. Illustration of an adaptive optics system.

Light passing through the telescope is reflected from a deformable mirror before being relayed to the science camera, which records the final image (or images) of the scene. Part of the light emerging from the deformable mirror is diverted by a beam splitter to a wavefront sensor in order to acquire information about the distorted wavefront. An estimator converts the output of the wavefront sensor to estimates of wavefront parameters, and a control system converts these estimates into control signals to be applied to the deformable mirror. Ideally, the control signals would produce a mirror deformation equal and opposite to the wavefront distortions produced by the atmosphere, and an uncorrupted image would be passed on to the science camera.

The wavefront sensor and estimator are often treated as a single element in the literature; a wavefront sensor in that view is a subsystem that delivers estimates of parameters such as local wavefront tilts. We shall find it convenient, however, to separate these two boxes as in Fig. 1. The wavefront sensor box might, for example, include a lenslet array and an image detector in a Shack–Hartmann configuration, and the estimator box could include computation of image centroids to get the tilts for each lenslet aperture. One reason for showing the estimator box separately is that sophisticated ML methods can also be used for going from the detector output in the sensor to estimates of wavefront parameters.28 These methods are based on accurate statistical models, and they permit estimation of parameters other than simple tilts.

The control system uses the estimated wavefront parameters, sometimes for several consecutive frames of data, to derive the signals to be applied to the actuators in the deformable mirror. The control system is often referred to as a wavefront reconstructor since it is conceptualized as a two-step process, first reconstructing (estimating) the entire wavefront from tilts or other sensor data, then deriving the control signals from the reconstruction. As a black box, however, it just transforms the wavefront parameter estimates to control signals. Usually the transformation is implemented as a matrix multiplication.

Various random processes affect the statistics of the data from the science camera. The most obvious source of randomness is the photon or electronic noise associated with detection of the image by the science camera. The atmosphere would not be a source of randomness if the AO system were perfect, but it is not for several reasons. First, a deformable mirror with a finite number of actuators cannot exactly match a continuous wavefront even if the latter is known perfectly; second, the wavefront sensor itself measures only a finite number of parameters of the wavefront; and third, this measurement is degraded by photon or electronic noise in the sensor. Finally, there is always a temporal delay between measuring the wavefront and applying the correction. For all of these reasons, the corrected wavefront is imperfect and noisy, and the point spread function (PSF) in the main imaging path between the astronomical scene and the science camera is random.

Moreover, as discussed in Section 2, objects being imaged are themselves random. The astronomical scene will usually include some unknown background that has to be treated as a random process, and a laser guide star is random because of laser fluctuations and variable characteristics of the atmospheric layer from which the laser light is scattered. Even the science object can have random parameters; a faint companion, for example, can be at an unknown location and have unknown brightness.

The goal of this section is to analyze the statistical properties of this AO system without saying much about specific implementations and without making very many restrictive assumptions. Emphasis will be on determining the covariance properties of the images, since, as we saw in Subsection 2.C, several important figures of merit for task performance can be computed from covariance matrices without knowledge of the full PDF. The results from this section will be related to task performance in Section 4.

A. Notation and Assumptions

1. Science Data

Because our goal is to characterize the statistics of the data from the science camera, we begin by establishing the notation for those data. Suppose that a sequence of J discrete frames of data is acquired, and each frame consists of the outputs of M detector pixels. An individual measurement (one pixel in one frame) can be denoted $g_m^{(j)}$, where $j = 1,\ldots,J$ and $m = 1,\ldots,M$. The set $\{g_m^{(j)}, m=1,\ldots,M\}$ for fixed j is the M × 1 vector $\mathbf g^{(j)}$, and the set $\{\mathbf g^{(j)}, j=1,\ldots,J\}$ is the complete data set from the science camera, denoted $\mathbf G$.

The object being imaged is denoted as $f(\mathbf r,t)$, where $\mathbf r$ is a 2D vector of x–y coordinates in the telescope focal plane; angular coordinates of the astronomical object are found by dividing x and y by the focal length of the telescope.

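The relation between object and mean image is assumed to be linear as in Eq. (2.1). With the extra index for frame number, and with the generic vector of Eq. (2.1) now written explicitly as $(x,y,t)$ while $\mathbf r=(x,y)$, Eq. (2.1) becomes

$$\bar g_m^{(j)} = \int_\infty d^2 r \int_{-\infty}^{\infty} dt\; h_m^{(j)}(\mathbf r,t)\, f(\mathbf r,t), \tag{3.1}$$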

where the overbar in this case denotes an average with respect to the conditional PDF of the data given the object and the PSF. Note that linearity in this sense holds even if the PSF is derived from the object, since the average implied by the overbar is conditional on a specified PSF.

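Both the object $f(\mathbf r,t)$ and the kernel $h_m^{(j)}(\mathbf r,t)$ are spatiotemporal random processes. The kernel is related to the incoherent PSF of the main imaging path (atmosphere, telescope, deformable mirror, science camera) by

$$h_m^{(j)}(\mathbf r,t) = \begin{cases} \displaystyle\int_\infty d^2 r_d\; d_m(\mathbf r_d)\, p(\mathbf r_d,\mathbf r,t), & t_j \le t \le t_j+T, \\ 0, & \text{otherwise}, \end{cases} \tag{3.2}$$

where the jth frame extends from time $t_j$ to $t_j+T$, $d_m(\mathbf r_d)$ describes the response of the mth detector pixel, and $p(\mathbf r_d,\mathbf r,t)$ is the time-dependent incoherent PSF of the main path, with the variable $\mathbf r_d$ denoting position in the detector plane. Note that the PSF is not assumed to be shift-invariant (isoplanatic).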

With Eq. (3.2), the linear imaging relation in Eq. (3.1) can be written in detail as

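$$\bar g_m^{(j)} = \int_{t_j}^{t_j+T} dt \int_\infty d^2 r_d\; d_m(\mathbf r_d) \int_\infty d^2 r\; p(\mathbf r_d,\mathbf r,t)\, f(\mathbf r,t). \tag{3.3}$$

In words, the noiseless incoherent image of a particular object through a particular PSF is integrated over the frame time and the pixel area to get $\bar g_m^{(j)}$.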
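The integration over frame time and pixel area can be sketched numerically. The example below assumes one spatial dimension, a Gaussian PSF whose center jitters randomly from one time step to the next, boxcar pixel responses that tile the detector plane, and an object that is constant over the frame; every grid size and parameter value is an illustrative choice.

```python
import numpy as np

rng = np.random.default_rng(4)

# Grids: object coordinate r, detector-plane coordinate rd, time steps within one frame.
n_r, n_d, n_t = 50, 60, 20
r = np.linspace(-1.0, 1.0, n_r)
dr = r[1] - r[0]
rd = np.linspace(-1.5, 1.5, n_d)
drd = rd[1] - rd[0]
T = 0.01                                   # frame time
dt = T / n_t

# Random time-dependent PSF p(rd, r, t), stored with shape (n_t, n_d, n_r).
jitter = 0.05 * rng.standard_normal(n_t)   # residual tilt-like motion, one value per step
width = 0.1
p = np.exp(-0.5 * ((rd[None, :, None] - r[None, None, :]
                    - jitter[:, None, None]) / width) ** 2)
p /= p.sum(axis=1, keepdims=True) * drd    # unit integral over the detector plane

# Pixel response functions d_m(rd): M contiguous boxcar pixels.
M = 6
d = np.zeros((M, n_d))
for m in range(M):
    d[m, m * (n_d // M):(m + 1) * (n_d // M)] = 1.0

f = np.exp(-0.5 * (r / 0.2) ** 2)          # object, assumed constant over the frame

# Mean data for one frame: sum over time, detector plane, and object coordinate.
g_bar = np.einsum('md,tdr,r->m', d, p, f) * drd * dr * dt
```

Because the pixels tile the detector plane and the PSF is normalized, the total mean signal in this sketch equals the frame time multiplied by the integrated object, whatever the jitter realization.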

We shall assume that the object is a slowly varying function of time, essentially constant over one frame of the science camera, in which case Eq. (3.3) becomes

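$$\bar g_m^{(j)} = \int_\infty d^2 r\; h_m^{(j)}(\mathbf r)\, f^{(j)}(\mathbf r), \tag{3.4}$$

where $f^{(j)}(\mathbf r) = f(\mathbf r,t_j)$,

$$h_m^{(j)}(\mathbf r) = T \int_\infty d^2 r_d\; d_m(\mathbf r_d)\, p^{(j)}(\mathbf r_d,\mathbf r), \tag{3.5}$$

and

$$p^{(j)}(\mathbf r_d,\mathbf r) = \frac{1}{T} \int_{t_j}^{t_j+T} dt\; p(\mathbf r_d,\mathbf r,t). \tag{3.6}$$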

A useful abstract notation analogous to Eq. (2.2) is

| (3.7) |

where is a linear operator mapping the object sequence F, which is the set of all f(j)(r), to a sequence of digital images, with the jth image in the sequence determined by the kernel . The operator is random, since the PSF p(rd, r,t) and hence the kernel is random.

To summarize the notation for the main imaging path, the science camera produces an image sequence G, where (the average of G over only the measurement noise in the science camera) is related to the object F by a random operator , the properties of which are determined by the set P of random incoherent PSFs, each of which has been temporally averaged over a frame.
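The notation summarized above can be made concrete with a small numerical sketch. It assumes, purely for illustration, that the object is sampled on N points, so that the kernel for each frame becomes an M × N matrix. The names `kernels`, `obj`, and `mean_image_sequence`, the array sizes, and the count scale of 100 are hypothetical and are not part of the theory.

```python
import numpy as np

def mean_image_sequence(kernels, obj):
    """Noise-averaged data: gbar[j, m] = sum_n kernels[j, m, n] * obj[n]."""
    return np.einsum("jmn,n->jm", kernels, obj)

rng = np.random.default_rng(0)
J, M, N = 4, 6, 6                 # frames, detector pixels, object samples (toy sizes)

# Hypothetical random kernels: one nonnegative M x N matrix per frame, standing in
# for the frame-averaged random PSF integrated over each detector pixel.
kernels = rng.random((J, M, N))
obj = rng.random(N)               # time-independent object on a discrete grid (assumption)

gbar = mean_image_sequence(kernels, obj)   # shape (J, M): average over noise only
G = rng.poisson(100.0 * gbar)              # one Poisson-noise realization of the data
```

Each row of `gbar` plays the role of the conditional mean of one frame for a fixed PSF and object, and `G` is a single noisy realization of the full image sequence.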

2. Control Loop

The control loop comprises the wavefront sensor, estimator, control system, and deformable mirror. The detector on the wavefront sensor consists of L pixels, and it observes the wavefront for a time T’, not necessarily the same as the frame time for the science camera. After the kth frame, the detector on the wavefront sensor produces a set of signals, {vl(k), l=1, … ,L}, or equivalently an L × 1 data vector v(k); the whole set of v(k) is denoted V. The total time duration for wavefront sensing is the same as for data acquisition with the science camera, so KT’=JT.

The estimator uses the vector of sensor signals for one frame, v(k), and produces estimates of wavefront parameters for that frame, , which might, for example, be tilts over the subapertures in a Shack-Hartmann sensor. The control system takes estimates of wavefront parameters for previous frames, , and computes drive voltages to apply to the N actuators of the deformable mirror on the current frame; for reasons that will become clear, we denote these signals as or as the N × 1 vector

We assume that the control system is linear and that it makes use of the output of the estimator for the K0 frames preceding the current one. Thus its input-output relation can be written in matrix-vector form as

| (3.8) |

where is the control matrix for a lag of k′ frames. This matrix might be derived by considering some algorithm for wavefront reconstruction and then estimating from the reconstruction, but if these steps are linear, their effect can be included in the control matrix.
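A linear control law of the kind described here can be sketched as a sum over lags of a control matrix applied to the estimator output from an earlier frame. The sketch below is only one reading of that description. The function name `drive_signals`, the storage of the control matrices in a list indexed by lag, and the convention that frames before the start of the sequence contribute nothing are all assumptions made for illustration.

```python
import numpy as np

def drive_signals(control_mats, estimates, k):
    """Drive vector for frame k from the K0 preceding estimator outputs.

    control_mats[i] is the control matrix for a lag of i + 1 frames;
    estimates[k] is the vector of wavefront-parameter estimates for frame k.
    """
    K0 = len(control_mats)
    out = np.zeros(control_mats[0].shape[0])
    for lag in range(1, K0 + 1):
        if k - lag >= 0:                      # no data before the first frame
            out += control_mats[lag - 1] @ estimates[k - lag]
    return out

# Toy example with K0 = 2 lags, N = 3 actuators, and 3 estimated parameters.
control_mats = [np.eye(3), 2.0 * np.eye(3)]
estimates = [np.array([1.0, 0.0, 0.0]),
             np.array([0.0, 1.0, 0.0]),
             np.array([0.0, 0.0, 1.0])]
drive = drive_signals(control_mats, estimates, k=2)
```

In this toy case the drive vector for frame 2 is the lag-1 matrix applied to the frame-1 estimate plus the lag-2 matrix applied to the frame-0 estimate.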

3. Mirror and Atmosphere

The wavefront perturbation produced by the deformable mirror is assumed to be a linear combination of influence functions , where N is the number of actuators. If the deformable mirror is in a plane conjugate to the telescope pupil and the voltage is applied to the nth actuator during frame k of the control loop, then the effect of the mirror on the wavefront is represented as

| (3.9) |

where r’ denotes a point in the pupil.

To use the same representation for the mirror and the atmosphere, we expand the atmospheric wavefront as a sum of influence functions plus a residual. For a monochromatic point source that would image to point r0 in the image plane in the absence of aberrations, we express the actual wavefront in the pupil as

| (3.10) |

where the sum is the least-squares fit of by the set of influence functions, and the residual is the portion of the wavefront that cannot be corrected by the deformable mirror.
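The least-squares split of a wavefront into a correctable part and a residual can be illustrated numerically. The sketch assumes that the influence functions and the wavefront are sampled at a finite set of pupil points; the random matrices used here are hypothetical stand-ins, not a model of a real mirror or of atmospheric turbulence.

```python
import numpy as np

rng = np.random.default_rng(1)
n_pts, N = 200, 5                              # pupil samples, actuators (toy sizes)

# Columns are sampled influence functions (hypothetical random shapes).
influence = rng.standard_normal((n_pts, N))
wavefront = rng.standard_normal(n_pts)         # sampled atmospheric wavefront (toy)

# Least-squares coefficients of the wavefront on the influence functions.
alpha, *_ = np.linalg.lstsq(influence, wavefront, rcond=None)

correctable = influence @ alpha                # part the mirror can reproduce
residual = wavefront - correctable             # part the mirror cannot correct
```

By construction the residual is orthogonal to every sampled influence function, which is the discrete counterpart of saying that it cannot be reduced further by any choice of actuator signals.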

The corrected wavefront emerging from the mirror is thus given by

| (3.11) |

If αn(t;r0) is approximately constant over the frame period and well approximated by , then the wavefront is compensated as closely as it can be with the given mirror; hence the notation for the mirror drive voltages. Note, however, that the actual αn(t;r0) is a function of the continuous time variable while is a constant during one frame of the control loop.

4. Random Point Spread Functions

The relation of the PSF to the pupil function of the imaging system is well-known. For quasimonochromatic light of wavelength λ and a point object at r0 (in image-plane coordinates), we can define an effective pupil function by

| (3.12) |

where again r’ specifies location in the pupil, aap(r’) is a binary (0–1) function describing the clear aperture of the pupil, and is the phase distortion for an object at r0 (in image-plane coordinates).

The anisoplanatic coherent PSF is a scaled Fourier transform of the pupil function, given by

| (3.13) |

where f is the back focal length of the science camera. The incoherent PSF is proportional to the squared modulus of the coherent one, and the effective PSF for the jth frame is given from Eq. (3.6) as

| (3.14) |

where the constant C and the units of f(r0) are chosen so that is the mean number of photons detected by pixel m during frame j. If the atmosphere and deformable mirror could be modeled jointly as a thin phase screen in the pupil, would be independent of the object coordinate r0 and the system would be isoplanatic.
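The chain from pupil function to incoherent PSF can be sketched with a discrete Fourier transform. The sketch assumes an isoplanatic system, a circular aperture on a square grid, and white-noise residual phase, none of which is implied by the text; it also omits the scale factors, the temporal average over a frame, and the integration over detector pixels. The function name `incoherent_psf` and all numerical values are hypothetical.

```python
import numpy as np

def incoherent_psf(aperture, phase):
    """Squared modulus of the Fourier transform of the pupil function, unit sum."""
    pupil = aperture * np.exp(1j * phase)
    field = np.fft.fftshift(np.fft.fft2(np.fft.ifftshift(pupil)))
    psf = np.abs(field) ** 2
    return psf / psf.sum()

n = 64
y, x = np.indices((n, n)) - n // 2
aperture = ((x**2 + y**2) <= (n // 8) ** 2).astype(float)   # binary clear aperture

rng = np.random.default_rng(2)
phase = 0.3 * rng.standard_normal((n, n))    # toy residual phase in radians

psf_ideal = incoherent_psf(aperture, np.zeros((n, n)))
psf_aberr = incoherent_psf(aperture, phase)

# Peak ratio at equal total energy, used here as a simple Strehl-like number.
strehl = psf_aberr.max() / psf_ideal.max()
```

Because both PSFs carry the same total energy, the peak ratio is at most one and falls as the residual phase grows.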

For simplicity we drop the subscript on r0 in what follows. Moreover, the PSF p(j)(rd,r) will be denoted as p(j) for short, and the set of all p(j) for j=1, … ,J will be denoted by P.

5. Speckle

We can usually assume that the control loop works well enough that the corrected phase excursions are small, so that relation (3.13) can be approximated as

| (3.15) |

where . The form in relation (3.15) is general enough to describe weak atmospheric scintillation if is allowed to be complex.

The Fourier integral in relation (3.15) can be written as

| (3.16) |

where is a 2D spatial frequency (measured in cycles per unit length in the focal plane of the science camera), and are, respectively, the 2D Fourier transforms of and with respect to the pupil coordinate r’, and the asterisk denotes convolution.

From Eq. (3.14), the effective incoherent PSF for the jth frame is given to second order in by

| (3.17) |

where the arguments in the integrand have been omitted for clarity.

The randomness in this PSF stems from the three random processes evident in Eq. (3.11), namely the atmospheric coefficients αn(t;r), the control signals , and the uncorrectable part of the atmospheric turbulence, . The resulting PSF can be regarded as a speckle pattern produced by the weak residual phase variations across the pupil. The last two terms in Eq. (3.17) show that this speckle pattern is modulated or “pinned” by the Airy rings of the ideal PSF (proportional to Aap). Pinned speckle in AO has been studied by several authors,29–32 but usually in the context of univariate statistics such as variance and PDF at a single point. In Section 5 we shall see how to obtain the covariance properties needed for objective assessment of image quality with linear observers.

6. Random Objects

We have already denoted the temporal sequence of astronomical scenes as F, and it will also be useful to decompose an astronomical scene into science object, guide star, and background (everything else), so that

$$F = F_{\mathrm{sci}} + F_{\mathrm{gs}} + F_{\mathrm{bg}} \qquad (3.18)$$

The three components are random for different reasons and require different stochastic descriptions. If the task is detection of a faint star, the science object can be modeled as a point source of unknown location and brightness, so it is described fully by a three-dimensional PDF on these parameters. A natural guide star is at a known location and its brightness can be measured independently, so it is not random at all. A laser guide star is random because of variations in laser intensity and fluctuations in the distribution of atmospheric molecules being excited.

The background term could describe a complicated star field, modeled as a random point process,1 or it could refer to the thermal sky background in the far infrared, which bears a striking similarity to the lumpy backgrounds used to model medical images. Even if the background is spatially uniform, it has to be treated as a random process since the background brightness is unknown and possibly time-varying.

B. Triply Stochastic Averaging

In this subsection we generalize the doubly stochastic averaging process introduced in Subsection 2.B in two ways: We add a third source of randomness (the random PSF), and we consider a sequence of correlated images. We begin by developing a general formalism of nested averages over the three main sources of randomness, and then we apply it to calculation of the mean vectors and covariance matrices of the science-camera data. As we know from Section 2, these quantities are important determinants of image quality for both classification and estimation tasks.

1. Nested Probability Density Functions

Let T(G) denote an arbitrary (possibly vector-valued) function of the image sequence G. An overall average of this function is given formally by

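$$\overline{\overline{\overline{T(G)}}} = \int dF\,\mathrm{pr}(F)\int dP\,\mathrm{pr}(P|F)\int dG\,\mathrm{pr}(G|P,F)\,T(G) \qquad (3.19)$$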

Consider first the inner average, over G given P and F. Since the PSF and the object are fixed by the conditional PDF, the only remaining randomness in this average is the measurement noise of the science camera. Since different photons are detected in different frames and the frame time is far larger than any electronic correlation time, we can write

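$$\mathrm{pr}(G|P,F) = \prod_{j=1}^{J} \mathrm{pr}\big(\mathbf{g}^{(j)}\,\big|\,p^{(j)}, f^{(j)}\big) \qquad (3.20)$$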

Moreover, the measurement noise components in different detector pixels in the same frame are usually statistically independent (an exception sometimes occurs in detectors with built-in gain28), in which case

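$$\mathrm{pr}\big(\mathbf{g}^{(j)}\,\big|\,p^{(j)}, f^{(j)}\big) = \prod_{m=1}^{M} \mathrm{pr}\big(g_m^{(j)}\,\big|\,p^{(j)}, f^{(j)}\big) \qquad (3.21)$$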

Finally, for pure electronic noise (but not for Poisson noise), we can assume that , independent of the random PSF and the object. For Poisson noise, the statistics are determined by the mean, so .

With the object decomposition (3.18), the second average, over the random PSFs P given the object sequence F, really involves pr(P|Fsci,Fbg,Fgs); different circumstances will permit different assumptions about this density. The greatest simplification is when the background and science object make a negligible contribution to the output of the wavefront sensor and when the guide star is nonrandom; in that case, pr(P|F)=pr(P). An intermediate case is that where the randomness of the guide star cannot be neglected, and then pr(P|F)=pr(P|Fgs). Finally, if the wavefront data are derived from the science object itself, we have to use pr(P|F) without simplification. We shall carry along the two extremes, a general pr(P|F) and an independent model, pr(P|F)=pr(P), in what follows.

Even if we assume that P is independent of F, however, it is generally not correct to assume that the PSFs for different science-camera frames, p(j) and p(j′) with j≠j′, are independent; temporal correlations are present because of the atmospheric correlation time and because the control system uses outputs of the wavefront sensor for multiple previous sensor frames to determine the drive signals to the mirror on the current sensor frame.

The final average in Eq. (3.19) is over the object variability, and in principle it requires a huge-dimensional PDF pr(F), or even several such PDFs for different hypotheses if we consider a classification task. In practice, however, the decomposition (3.18) suggests several simpler stochastic descriptions. It will often be valid, for example, to assume that the science object, background, and guide star are statistically independent, so pr(F) =pr(Fsci)pr(Fbg)pr(Fgs), and further assumptions can be applied to each factor. If the science object is independent of time, for example, pr(Fsci) reduces to pr(fsci), where f denotes a single object rather than a sequence. Moreover, as discussed at the end of Subsection 3.B, pr(fsci) might be a low-dimensional PDF on a few parameters of scientific interest. The background PDF pr(Fbg) is more difficult in general, but the figures of merit discussed here require only the mean object and a spatiotemporal autocovariance function. The guide-star PDF pr(Fgs) is trivial for a nonrandom natural guide star but more complicated for a laser guide star.

2. Means

To see how triply stochastic averaging works in a relatively simple case, let T(G) be a single datum , the output of one detector pixel for one frame of data from the science camera. The statistics of depend on the incoherent PSF p(j) and noise realization for frame j, and the noise can depend on the object for that frame in the case of Poisson noise. The PSF for frame j can, however, depend on the object (especially the guide star) for previous frames. The overall (triple-bar) average of this datum can thus be written most generally as

| (3.22) |

If we average over detector noise alone, then the single-bar average is given in component form directly from our assumption of conditional linearity, Eq. (3.7), by

| (3.23) |

where the PSF and object for frames other than the jth are irrelevant for this conditional average, conditioned on PSF and object.

The next average is over the random PSFs P given F. Since averaging is a linear operation that can be interchanged with integration under broad conditions (loosely speaking, so long as all integrals converge), it follows that

| (3.24) |

where the average kernel is related to the average incoherent PSF by [cf. Eq. (3.5)]

| (3.25) |

If we assume that the PSF is temporally stationary and ergodic, the index j on and hence on can be omitted. On the other hand, though the notation does not show it, can depend on the object sequence F and in particular on the guide star over multiple frames.

The final average, over the object variability, yields

| (3.26) |

where the second form holds if p(j) is independent of F.

Each of these component averages is the mth component of a corresponding M × 1 average vector; for example, is the mth component of . We shall also use overbars on the whole set G in a similar fashion. For example, can be regarded as an MJ × 1 vector with the (m, j)th component given by .

3. Covariance Matrices

By analogy to Eq. (2.9), the overall covariance matrix of a triply stochastic image sequence is defined as

| (3.27) |

To be explicit, KG is an MJ × MJ matrix with components given by [cf. Eq. (2.7)]

| (3.28) |

Now, as in Eq. (2.10), add and subtract terms in each factor of Eq. (3.27):

| (3.29) |

Even without any assumptions of independence, the cross covariance vanishes identically, just as it did in Eq. (2.10). A similar argument shows that also vanishes, and we can write

| (3.30) |

where

| (3.31) |

| (3.32) |

| (3.33) |

Thus the overall covariance matrix for a triply stochastic image sequence can be rigorously decomposed into three terms representing, respectively, the contributions from measurement noise, from the random PSF, and from randomness in the object being imaged.
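The three-term decomposition can be checked exactly on a toy problem in which the object and the PSF each take only a few discrete values, so that every average is a finite sum. The sketch assumes a single frame, Poisson measurement noise, and a PSF distribution that depends on the object; the variable names and the particular probabilities are hypothetical. The overall covariance is computed independently from first and second moments and compared with the sum of the noise, PSF, and object terms.

```python
import numpy as np

rng = np.random.default_rng(3)
M, N = 3, 4                            # data length, object samples (toy sizes)
objs = rng.random((2, N)) + 0.5        # two possible objects
pF = np.array([0.3, 0.7])              # pr(F)
psfs = rng.random((3, M, N))           # three possible system matrices
pPgF = np.array([[0.2, 0.5, 0.3],      # pr(P|F), one row per object
                 [0.6, 0.1, 0.3]])

def gbar(a, b):
    """Mean data for object a and PSF b (average over noise only)."""
    return psfs[b] @ objs[a]

mean = np.zeros(M)                     # overall mean of the data
second = np.zeros((M, M))              # overall second-moment matrix
K_noise = np.zeros((M, M))
gbarbar = np.zeros((2, M))             # mean over noise and PSF, given the object
for a in range(2):
    for b in range(3):
        w = pF[a] * pPgF[a, b]
        g = gbar(a, b)
        mean += w * g
        # Poisson noise: E[g g^T | P, F] = diag(gbar) + gbar gbar^T
        second += w * (np.diag(g) + np.outer(g, g))
        K_noise += w * np.diag(g)
        gbarbar[a] += pPgF[a, b] * g
K_total = second - np.outer(mean, mean)

K_psf = np.zeros((M, M))
K_obj = np.zeros((M, M))
for a in range(2):
    for b in range(3):
        d = gbar(a, b) - gbarbar[a]
        K_psf += pF[a] * pPgF[a, b] * np.outer(d, d)
    d = gbarbar[a] - mean
    K_obj += pF[a] * np.outer(d, d)
```

The identity holds here without any assumption that the PSF is independent of the object, in line with the statement that the cross terms vanish identically.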

The first term, , comes from readout and Poisson noise, with at least the Poisson component averaged over P and F. With the noise modeled as in Eq. (3.21), we can write

| (3.34) |

The second term, , is the contribution from the random PSF, averaged over the object class. If the AO system worked perfectly, this term would vanish since the PSF would not be random. Also, if the integration time of the science camera goes to infinity and the atmospheric statistics are ergodic, so that infinite time averages are the same as ensemble averages, then again the PSF term vanishes. With a real system and a finite integration time, this term describes the residual speckle pattern from the uncorrected part of the random atmospheric phase. In the most general case, it is given in component form by

| (3.35) |

If P is independent of F, we obtain

| (3.36) |

One way to interpret Eq. (3.36) is to move the average over F outside the integral. The integral then represents the covariance of the sensitivity function as manifest in the data for a particular spatiotemporal object, and the result is averaged over objects.

The final term, , is the contribution from object randomness. In the general case, it is given by

| (3.37) |

If P is independent of F, we get

| (3.38) |

where is the spatiotemporal autocovariance function of the object, sampled at discrete time points:

| (3.39) |

The interpretation of Eq. (3.38) is that is the object autocovariance function mapped through the ensemble-average CD imaging system to the final image sequence from the science camera. Some useful analytic forms for the autocovariance function are given in Appendix A.

When P is independent of F, the object and PSF terms can usefully be combined. Adding Eqs. (3.36) and (3.38) and doing some algebra, we get

| (3.40) |

where

| (3.41) |

Now the PSF and object enter symmetrically into the overall covariance, reflecting the fact that we can do the averages over P and F in either order if they are independent. Note, however, that the autocovariance of the discretized PSF is more complicated than the object autocovariance since depends on a pixel index m in addition to the spatial variable r and the discretized time index j.

Various special cases of Eqs. (3.36), (3.38), and (3.40) can be given. If the object is independent of time, as it often is in astronomy, the superscripts j and j’ can be omitted on the object everywhere and on Kf. On the other hand, if the object is temporally stationary, then Kf depends on the frame indices only through the difference j−j’. Similarly, if the atmospheric statistics are temporally stationary, we can omit the superscript on the mean kernel and regard the average over P in Eq. (3.36) as a function of j−j’. To combine these cases, if the atmospheric statistics are temporally stationary and the object is either nonrandom, time-independent (but spatially random), or temporally stationary, then both the object and PSF terms depend only on j−j’.

An important practical situation is that when the image detector in the science camera does not introduce pixel-to-pixel correlations, the object is independent of time, the atmospheric statistics are temporally stationary, and the PSF is independent of the object; if all of these conditions are satisfied, the overall covariance can be written in component form as

| (3.42) |

4. TASK PERFORMANCE IN ASTRONOMICAL ADAPTIVE OPTICS

In this section we consider three important tasks that arise in astronomical imaging: detection of point objects on a random background, detection of faint companions such as exoplanets, and photometry. For each task, we briefly discuss how it is performed in current practice, and then we discuss statistically optimal approaches that make use of the formalism developed above. For each task, two distinct outcomes are obtained: expressions for task-based figures of merit for assessment of image quality and methods that might be useful for actually performing the tasks. Computational aspects are treated in Section 5.

A. Detection of Point Objects on a Random Background

The detection of point sources is an essential task in observational astronomy.33 Increasing sensitivity to point sources permits detection of fainter objects up to a given distance or the detection of objects of a given luminosity at larger distances. Applications inside the solar system include the early detection of near-earth asteroids, as well as Kuiper-belt and other trans-Neptunian objects. In stellar astronomy, it is of interest to detect free-floating brown dwarfs and planets, which may make up a substantial fraction of the missing dark matter. Point-source detection is also relevant to the detection of extragalactic objects such as quasars or active galactic nuclei, which are unresolved even with the largest available apertures.

Point-source detection is strongly influenced by the background. A spatially uniform diffuse background creates Poisson noise that interferes with the ability of any observer to perform the detection, and spatial inhomogeneities as in galactic cirrus or dense unresolved star fields can cause spurious peaks that lead to false alarms in the detection task. Even isolated nearby stars can cause false alarms if their PSFs overlap the site of a potential detection; the effect of the PSF is random because the luminosity and precise location of the interfering star are random (or at least unknown to the observer) and the PSF itself is random because of noise in the wavefront sensor and uncorrected atmospheric effects.

1. Current Practice

The standard imaging practice in observational astronomy consists of obtaining one or several images of the object of interest, together with other images that are needed for the image processing. These include dark images, which are obtained with the shutter closed, and flat fields, which are obtained with uniform illumination on the sky or of a screen inside the telescope dome. The dark images reveal structure in the detector readout noise and are subtracted from the object frames. The flat fields are used to determine the detection sensitivity across the field of view. The dark image is subtracted from the flat field, and the object images are divided by the result. Median filters may be applied to sequences of dark images and flat fields to obtain smoother estimates.
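The dark and flat-field correction described above can be written in a few lines. This is a minimal sketch of the standard recipe, not a description of any particular pipeline; the function name `calibrate` is hypothetical, and the normalization of the flat field to unit mean is an added assumption that only fixes the overall scale.

```python
import numpy as np

def calibrate(object_frames, dark_frames, flat_frames):
    """Subtract the median dark and divide by the dark-subtracted median flat."""
    dark = np.median(dark_frames, axis=0)
    flat = np.median(flat_frames, axis=0) - dark
    flat = flat / flat.mean()          # unit-mean normalization (assumption)
    return (object_frames - dark) / flat

# Toy data: a pixel-dependent gain, a constant dark level, and a uniform sky.
rng = np.random.default_rng(0)
gain = 1.0 + 0.1 * rng.random((4, 4))
dark_level = 5.0 * np.ones((4, 4))
darks = np.stack([dark_level] * 3)
flats = np.stack([dark_level + gain * 100.0] * 3)
objects = np.stack([dark_level + gain * 20.0] * 2)

calibrated = calibrate(objects, darks, flats)
```

For this noise-free toy input the calibrated frames are spatially constant, which shows that the pixel-to-pixel gain variations have been divided out.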

The mean and variance of the sky background are usually estimated before source detection is attempted. This information may be obtained either from an image or sequence of images of a source-free field or by median filtering the actual image of the object. Variations of the sky background over the image may be estimated by dividing the image into regions called tiles and estimating the sky background in each tile by median filtering.
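Tile-based estimation of the sky background can be sketched as follows. The sketch assumes that the image dimensions are integer multiples of the tile size, and the function name `tile_background` is hypothetical.

```python
import numpy as np

def tile_background(image, tile):
    """Median sky level in each tile; assumes image sides are multiples of tile."""
    n_rows, n_cols = image.shape[0] // tile, image.shape[1] // tile
    background = np.empty((n_rows, n_cols))
    for i in range(n_rows):
        for j in range(n_cols):
            block = image[i * tile:(i + 1) * tile, j * tile:(j + 1) * tile]
            background[i, j] = np.median(block)
    return background

# Toy image: uniform sky of level 10 with one bright source pixel.
image = np.full((8, 8), 10.0)
image[2, 3] = 500.0
background = tile_background(image, tile=4)
```

The median is insensitive to the single bright pixel, so the tile that contains the source still reports the sky level.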

For observation at near-infrared wavelengths (as is the case for most current AO systems), the sky background is strong and variable. For broadband observations at wavelengths shorter than 2 μm, an important component of the background is emission from hydroxyl radicals in the ionosphere, which varies with the passage of gravity waves.34 Longward of 2 μm, the background is dominated by thermal emission from the sky and telescope. In the far infrared, background due to thermal emission from galactic dust clouds has a fractal-like spatial structure.35

The thermal background from the telescope and sky is usually removed by chopping and nodding; chopping refers to rapidly interchanging the field of view on and off the object, usually by rocking the telescope secondary mirror at several hertz. However, the telescope background estimated from the off-object measurements will not be identical to the background at the object, so the telescope is moved periodically (nodded) so that the new off-object position corresponds to the previous on-object position. This process will introduce artifacts if there are objects present in the regions of interest or if the object is larger than the chop throw. Bertero et al.36 describe a Fourier-based algorithm to restore nodded and chopped images that can remove these artifacts.

After the noise in the image is estimated, objects are usually detected by searching for pixels that are higher than the background by some amount, say three standard deviations. Extended objects are then detected by finding connected pixels that are significantly higher than the noise.37 A more sophisticated approach involving wavelet transforms has been proposed38 but does not seem to be standard practice.

2. Spatiotemporal Hotelling Observer

Though the current practice in astronomy certainly recognizes the importance of background in point-source detection, little attention has been given to optimal detection algorithms that incorporate information about the spatial and temporal correlations of the background or knowledge of the statistics of the random PSF. The Hotelling observer provides a rigorous framework for doing so.

In contrast to the purely spatial Hotelling observer described in Subsection 2.C, however, the Hotelling observer for astronomy should be spatiotemporal. The raw data in most astronomical observations are a sequence of frames from a CCD camera or other electronic detector, but these frames are almost always summed, after various corrections as described above, to get a single image that is used for the science task. There is no reason in principle to believe that this summation preserves the information content of the data, defined in terms of ability of an ideal observer to perform the task. If the task will be performed by a human observer, however, a long sequence of individual frames is of little use, so some form of summation is required. In what follows we discuss the optimal spatiotemporal Hotelling observer applied to an image sequence and show how it can be used to provide a single summed image for direct observation, without loss of information. Suboptimal summation methods are also discussed for comparison.

By analogy to Eq. (2.17), the Hotelling test statistic for a triply stochastic image sequence is

| (4.1) |

Note that the template W is itself an image sequence.

An important special case is where the signal is weak, so that the spatiotemporal covariance matrix is the same under both hypotheses (signal-present and signal-absent) and given in general by Eq. (3.30). Since the noise term in the covariance matrix is diagonal, as shown by Eq. (3.34), the inverse needed to compute tHot(G) exists. Practical ways of finding (or avoiding) the inverse are discussed in Section 5; for now, we simply proceed as if the inverse were known.

The Hotelling test statistic for a weak spatiotemporal signal is

| (4.2) |

where is the mean signal at pixel m in frame j. The averaging implied by the double overbar here is over measurement noise and an ensemble of PSFs. Often we will want to consider the signal that we want to detect as random, and in those cases a third overbar can be added to accord with Eq. (4.1).

We see from Eq. (4.2) that the ideal linear detection strategy is to do a spatiotemporal prewhitening operation followed by a matched filter with the mean signal. The corresponding Hotelling detectability is given by [cf. Eq. (2.18)]

| (4.3) |

If we assume that the object to be detected is independent of time and that the PSF statistics are temporally stationary, the mean difference signal is independent of j, and we can write its value at the mth pixel simply as . The interpretation is that is the image of the signal object blurred by the long-term average of the partially corrected PSF and with measurement noise averaged out. Note that this signal can be random, so long as its ensemble mean is independent of time. In that case, we can rewrite Eq. (4.2) as

| (4.4) |

The set or the vector g(pw) represents a single frame of prewhitened data; after the spatiotemporal prewhitening, it is easy to form the Hotelling test statistic for many different signals that one might seek to detect. In fact, the single image g(pw) can also be presented to a human observer as an optimally preprocessed summary of the raw image sequence.
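One reading of the prewhitened frame is that the stacked data are multiplied by the inverse spatiotemporal covariance and the result is summed over frames. The sketch below checks that, for a frame-independent mean signal, the matched filter applied to this single frame gives the same test statistic as the full spatiotemporal template. The covariance used here is an arbitrary symmetric positive-definite matrix, and the names `g_pw`, `t_from_frame`, and `t_full` are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(4)
J, M = 3, 4                                    # frames and pixels (toy sizes)

# Hypothetical symmetric positive-definite MJ x MJ covariance, frame-major order.
A = rng.standard_normal((J * M, J * M))
K = A @ A.T + J * M * np.eye(J * M)

G = rng.standard_normal(J * M)                 # data stacked frame by frame
s = rng.random(M)                              # frame-independent mean signal

# Prewhiten the whole sequence, then sum over frames to get a single frame.
g_pw = np.linalg.solve(K, G).reshape(J, M).sum(axis=0)
t_from_frame = s @ g_pw

# Full spatiotemporal template: the same signal repeated in every frame.
t_full = np.tile(s, J) @ np.linalg.solve(K, G)
```

Once `g_pw` has been computed, test statistics for many candidate signals cost only one inner product each.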

An alternative strategy, routinely used in astronomy, is simply to sum the frames without the prewhitening step. The test statistic for such a nonprewhitening (NPW) observer is given by

| (4.5) |

The Hotelling and NPW observers are equivalent (their test statistics differ by an irrelevant constant factor) if and only if the data are independent and identically distributed both spatially and temporally. Correlations in either the pixel index m or the frame index j necessarily reduce the detection performance of the NPW observer relative to that of the Hotelling observer; such correlations can arise from either the PSF term or the object term in the data covariance.
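The performance gap between the two observers can be illustrated with standard expressions for the SNR of a linear template. For a template w, signal s, and covariance K, the squared SNR is (w·s)² / (w·Kw); the Hotelling template gives s·K⁻¹s and the NPW template w = s gives (s·s)² / (s·Ks). The toy covariances below are hypothetical and serve only to show one white and one correlated case.

```python
import numpy as np

def hotelling_snr2(K, s):
    """Hotelling detectability s^T K^{-1} s, using a solve instead of an inverse."""
    return float(s @ np.linalg.solve(K, s))

def npw_snr2(K, s):
    """Squared SNR of the nonprewhitening template w = s."""
    return float((s @ s) ** 2 / (s @ K @ s))

n = 5
idx = np.arange(n)
s = np.array([1.0, 2.0, 3.0, 4.0, 5.0])

K_white = 2.0 * np.eye(n)                                   # uncorrelated, equal variance
K_corr = 0.8 ** np.abs(idx[:, None] - idx[None, :]) + 0.5 * np.eye(n)

white = (hotelling_snr2(K_white, s), npw_snr2(K_white, s))
corr = (hotelling_snr2(K_corr, s), npw_snr2(K_corr, s))
```

The two numbers agree for the white covariance and the Hotelling value is larger for the correlated one, as the Cauchy-Schwarz inequality requires.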

3. Signal-Known-Exactly Detection on a Uniform Background

To illustrate the spatiotemporal Hotelling observer, consider the detection of a nonrandom point object on a sky background that is spatially constant over the field of view but can vary randomly with time over the duration of the observation. The autocovariance function for the object in this case is discussed in Appendix A.

The PSF term in the covariance can usually be neglected in this problem. To see this point, we assume that the background is spatially constant at the random time-varying value C(t) and rewrite Eq. (3.36) as

| (4.6) |

We note from Eq. (3.5), however, that is a non-random constant so long as is a constant, which it is whenever the underlying continuous PSF is isoplanatic. Thus, if the atmosphere can be modeled as a thin phase plate in the pupil, the PSF term in the data covariance for a spatially constant background vanishes. If there is substantial anisoplanatism, the PSF term is not identically zero, but it should be small since the image of a constant background should be nearly constant in any practical case.

The mean PSF is still important, however, since it determines the signal to be detected. For a time-independent point object of known luminosity at a known location [the so-called signal-known-exactly (SKE) task], the signal part of the object distribution is fs(r)=Asδ(r−rs). In general the corresponding mean signal in the data will depend on j through the mean kernel, but if the atmosphere is temporally stationary, the mean signal at the mth detector pixel is

$$\Delta\bar{\bar{g}}_m = A_s\,\bar{h}_m(\mathbf{r}_s) \qquad (4.7)$$

We also assume that all detector elements are identical, so that the variances of the electronic noise and the Poisson noise from the uniform background are independent of m. With Eqs. (3.42), (A10), and (3.38), the overall covariance matrix is

| (4.8) |

where $\eta_m = \int d^2r\,\bar{h}_m(\mathbf{r})$, which can be interpreted as the flat-field image; η is independent of m if the system is isoplanatic and the detector elements are identical.

It is shown in Subsection 5.B that the inverse covariance has the form

| (4.9) |

where Q(j,j’) is defined by Eq. (5.16). With Eqs. (4.2), (4.7), and (4.9), the Hotelling test statistic can be written as

| (4.10) |

where

| (4.11) |

The interpretation of Eq. (4.10) is that the data are first preprocessed by subtracting the estimate ηĈ(j) of the background in each frame and then passed through a matched filter. The background estimate is found, according to Eq. (4.11), by summing over all pixels in each frame and also doing a weighted sum over correlated frames, with the weighting specified by Q(j,j’). The resulting test statistic is optimal in terms of task performance; for detection of a nonrandom point object on a time-varying but spatially uniform background by a linear observer, the test statistic defined in Eq. (4.10) gives the largest Hotelling detectability and, to a good approximation, the largest area under the ROC curve.

From Eqs. (4.3), (4.7), and (4.9), the Hotelling detectability for this task is given by

| (4.12) |

The last line represents the reduction in detectability from having to estimate the background, even when that estimation is done optimally. It can be shown, however, that this term varies asymptotically as 1/M, where M is the number of pixels in a frame and hence, in this problem, the number of pixels that can be averaged to get an estimate of the background. Thus

| (4.13) |

which is exactly the expression that would be obtained if the background were nonrandom and known to the observer.

Several important conclusions can be drawn from relation (4.13). Obvious ones are that the detectability is larger for stronger sources, more frames, and less electronic noise. We see also that the detectability is proportional to the sum of the squares of the discretized mean PSF values; since this sum increases with the Strehl ratio of the system, it can be used to quantify the effect of uncorrected atmospheric blur on the flat-background SKE detectability.

Another consequence of the sum over m is that the detectability can be high even if no single pixel exceeds the noise level; detection by a Hotelling observer is determined by the noise in the test statistic tHot(G), and it is only the SNR of that quantity that matters, not the pixel SNR. The Hotelling SNR can be much better than the pixel SNR because of the optimal summation across pixels. Indeed, the human observer also does a very good job of summing over pixels, a fact that was known already to Albert Rose in 1950 and has been very well verified in the decades since then.39

Since data from multiple pixels are used by both human and Hotelling observers, it follows that there is no disadvantage in detection performance to using small detector pixels; if more pixels fit within the mean PSF, more of them are used in forming the test statistic and the performance cannot decrease (at least for pure Poisson noise). This contradicts the common view40 that oversampling is bad because it decreases the SNR; it decreases only the irrelevant single-pixel SNR. There might be engineering or economic arguments for using larger pixels, but they cannot be justified on grounds of detectability.
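The argument about pixel size can be illustrated under a simplifying assumption that is not taken from the expressions above: the pixels are uncorrelated, the variance in each pixel is the Poisson variance of a known background plus a read-noise variance, and the detectability is the sum over pixels of squared signal divided by variance. Splitting every pixel into two equal halves then halves the signal and the background per pixel but leaves the read noise per pixel unchanged. The function names and numerical values are hypothetical.

```python
import numpy as np

def detectability2(signal, background, read_var):
    """Sum over uncorrelated pixels of signal^2 / (background + read-noise variance)."""
    return float(np.sum(signal**2 / (background + read_var)))

def split_pixels(x):
    """Replace each pixel by two half-size pixels that share its value equally."""
    return np.repeat(x / 2.0, 2)

signal = np.array([1.0, 4.0, 9.0, 4.0, 1.0])   # mean signal counts per pixel
background = np.full(5, 50.0)                  # mean background counts per pixel

# Pure Poisson noise: coarse and fine sampling give the same detectability.
coarse_poisson = detectability2(signal, background, read_var=0.0)
fine_poisson = detectability2(split_pixels(signal), split_pixels(background), read_var=0.0)

# With read noise, every extra pixel adds variance and the fine sampling loses.
coarse_read = detectability2(signal, background, read_var=10.0)
fine_read = detectability2(split_pixels(signal), split_pixels(background), read_var=10.0)
```

The equal split is the least favorable case for small pixels; if the signal is distributed unequally between the two halves, the finer sampling can only help in the pure-Poisson case.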

4. Random, Nonuniform Backgrounds

SKE detection tasks with random, spatially nonuniform backgrounds (so-called lumpy backgrounds) have played an important role in developing realistic task-based figures of merit in medical imaging,1,6–9 and they should prove equally useful in astronomy. The important difference, however, is that the PSF term varies randomly with time in astronomy; therefore, as we shall see, the correlations are spatiotemporal even for a temporally constant background.

When the PSF is independent of the object, the PSF term in the data covariance is given by Eq. (3.36). An important special case is when the time-independent background is spatially stationary (or at least approximately so over the field of view), so that . By the same argument as that used in Eq. (4.6), the term proportional to in Eq. (3.36) vanishes identically if the continuous PSF is isoplanatic, and it should be small in most practical cases. If the PSF is also temporally stationary, Eq. (3.36) becomes

| (4.14) |

This expression is zero if the PSF is nonrandom (perfect AO system), and it is often small by the argument below Eq. (4.6) if the background is spatially uniform but of random level. More generally, spatiotemporal correlations result from an interaction of spatial background structure and a spatiotemporal PSF.

If we combine the object term with the PSF term as in Eq. (3.40) and use the noise term from Eq. (3.34), we get

| (4.15) |

We have dropped the indices on to be consistent with the assumptions of spatial and temporal stationarity, and we have dropped the index on the electronic noise variance σ² on the assumption that all detectors are identical.

In most practical applications in astronomy, the spatial correlation length of the background is large compared with the field of view of the telescope, and any particular realization of the background might be well described by a constant plus a linear variation in brightness. In that case, the integral in Eq. (4.15) can be evaluated if the means and variances of the constant and linear terms are known.

Once the integral is performed, the evaluation of the Hotelling test statistic and SNR requires a matrix inversion. Common practice in image analysis is to approximate covariance matrices as block-circulant matrices when they arise from digital representations of stationary random processes. This approximation, which is reasonable if the correlation length of the random process is small compared with the image size, permits diagonalization and inversion of the covariance by use of the discrete Fourier transform (DFT). Unfortunately, the circulant approximation would rarely be applicable in the present problem because the correlation length is usually long. In that case the matrix in Eq. (4.15) is block-Toeplitz rather than block-circulant, and the inversion can be performed with the help of methods discussed in Subsection 5.B. The method of preconditioned conjugate gradients, in which the circulant approximation to the Toeplitz matrix is used only in the preconditioner, may also be useful.41
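As an illustration of the preconditioned conjugate gradient idea, the following sketch solves K w = s for a one-dimensional symmetric Toeplitz covariance, using a circulant (Strang-type) approximation only in the preconditioner. The one-dimensional geometry, the exponential correlation model, the correlation length, and the Gaussian template are all assumptions made for the example; the block-Toeplitz spatiotemporal case of Eq. (4.15) is not implemented here, and the matrix is formed densely for clarity rather than speed.

```python
import numpy as np

def strang_eigenvalues(c):
    """Eigenvalues of a circulant approximation to the symmetric Toeplitz
    matrix whose first column is c (central diagonals wrapped around)."""
    n = len(c)
    k = np.arange(n)
    first_col = c[np.minimum(k, n - k)]
    return np.real(np.fft.fft(first_col))

def pcg(matvec, b, precond, tol=1e-10, max_iter=500):
    """Preconditioned conjugate gradients for a symmetric positive-definite system."""
    x = np.zeros_like(b)
    r = b - matvec(x)
    z = precond(r)
    p = z.copy()
    rz = r @ z
    for it in range(max_iter):
        Ap = matvec(p)
        alpha = rz / (p @ Ap)
        x += alpha * p
        r -= alpha * Ap
        if np.linalg.norm(r) <= tol * np.linalg.norm(b):
            return x, it + 1
        z = precond(r)
        rz_new = r @ z
        p = z + (rz_new / rz) * p
        rz = rz_new
    return x, max_iter

# Hypothetical problem: long correlation length compared with the data size.
n = 256
lags = np.arange(n)
corr_length = 200.0
noise_var = 1.0
c = np.exp(-lags / corr_length)
c[0] += noise_var                                   # uncorrelated noise on the diagonal
K = c[np.abs(lags[:, None] - lags[None, :])]        # dense Toeplitz covariance
template = np.exp(-0.5 * ((lags - n / 2) / 3.0) ** 2)

eig = strang_eigenvalues(c)
apply_precond = lambda r: np.real(np.fft.ifft(np.fft.fft(r) / eig))
w, n_iter = pcg(lambda v: K @ v, template, apply_precond)
snr2 = float(template @ w)                          # s^T K^{-1} s
print(n_iter, snr2)
```

For large problems the dense product `K @ v` would normally be replaced by an FFT-based Toeplitz multiplication, which is what makes the iterative approach attractive.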

However the inverse is performed, the resulting spatiotemporal prewhitening operation will, by definition, perform an optimal linear compensation for the background nonuniformity, consistent with the statistical information built into it. No other linear operation, such as local background estimation, can achieve better performance.

B. Detection of Faint Companions

Over 160 extrasolar planets have been detected in the decade since the detection of a planet orbiting the star 51 Peg.42 Most of these planets have been detected by spectroscopic monitoring of radial velocity variations of the parent stars. Some planets have also been detected by the observation of transits of the planet in front of the parent star43 and “anomalous” microlensing events.44

Recently, direct images of what appear to be substellar objects have been obtained by using the adaptive optics system on the Very Large Telescope. In both of these detections, the companion object was approximately 0.7 arcsec from the central star, which was about ten times the diffraction limit, and approximately 6 mag fainter than the central star (in the K band). The central star in the detection by Neuhauser et al.45 is a young T Tauri star, and the companion mass is not tightly constrained [1–42 Jupiter masses (MJup)]. This companion may therefore be a brown dwarf rather than an exoplanet. The detection of Chauvin et al.46 does seem to be an exoplanet, as they constrain the mass to 5±2 MJup (the boundary mass between brown dwarf and exoplanet is controversial but is in the region of 12 MJup). The central star in this detection is itself a brown dwarf, which greatly reduces the magnitude difference with the exoplanet. Direct detection of exoplanets nearer to the diffraction limit around main-sequence stars is much more difficult, as the ratio of the intensities will be ∼10⁹ at visible wavelengths and ∼10⁶ in the near infrared.

1. Current Practice

The limitation on direct detection of faint companions is noise from the central star, but it is speckle noise associated with the random PSF rather than photon noise that dominates.47,48 These speckles arise from uncorrected atmospheric aberrations and slowly varying telescope or instrumental aberrations. Considerable effort is being devoted to developing techniques that suppress the speckles by using coronagraphy and pupil masks.49

A promising approach to removing the speckles is simultaneous differential imaging (SDI). Images are acquired simultaneously in at least two adjacent passbands, in one of which the companion is expected to be dim or absent. Because the speckle structure should be practically identical in the two images, subtracting them should remove most of it, leaving the detection of any companions limited by photon noise. A suitable wavelength is 1.6 μm, which corresponds to the methane absorption band found only in cold atmospheres. A critical issue with this technique is the minimization of non-common-path errors between the different wavelength channels.50

2. Covariance Terms

As the discussion above indicates, the dominant covariance term limiting the detection of faint companions is likely to be the PSF term, since it is this term that describes the speckle pattern. We know from Eqs. (3.35) and (3.36) that the PSF term involves an average over random objects, where the object in this problem includes the companion (under the signal-present hypothesis), the host star, light from the host star scattered by a circumstellar dust cloud, and any other background that might be present. For the purpose of the PSF term, however, we can assume that the host star is far brighter than any other light source in the field of view. If we also assume that the host star is nonrandom, with a known luminosity and position, then no averaging over random objects is needed to construct the PSF term. To be specific, if the host star is described by the point object f*(r) = A* δ(r − r*), with A* and r* fixed and known to the observer, then the PSF term is given from Eq. (3.35) as

| (4.16) |

The noise term, though likely to be weak in this application, should be included for a complete theory. The noise is uncorrelated, as shown in Eq. (3.34), but nevertheless the form of the average PSF plays a role since the noise in the mth pixel includes Poisson fluctuations from light originating from the star and coupled into that pixel by the average PSF. In addition, there are noise contributions arising from other background light and from electronic noise. If the electronic noise variance and the mean number of detected background photons are independent of m and j, we get

| (4.17) |

The object term in the data covariance does not include the effects of the direct light from the host star, which is assumed to be nonrandom and known, but it does include light from random dust clouds and general sky background. The background considerations are the same as those in Subsection 4.A, and dust clouds can be included by simulation methods described in Subsection 5.A.

3. Hotelling Observer

For a specified position rc where a companion might or might not be present, the mean signal in the Hotelling formulas is given by , where is the mean brightness of possible companions. This mean brightness enters into the final expressions for detectability but is just an irrelevant constant in the template.

Of course rc is not known a priori, so the Hotelling test statistic can be evaluated for a range of possible locations and the maximum chosen as the final test statistic to be used for the detection decision. If other data suggest possible locations, then the search over locations can be constrained accordingly.

If the observations cover a sufficient time that significant movement of the companion might be expected, a fully spatiotemporal Hotelling observer can be constructed. For a particular assumed orbit, the function rc(t) will be known and the corresponding mean signal will be . For faint companions the covariance matrix is independent of the orbit chosen, so it is straightforward to compute the Hotelling test statistic for a set of possible orbits consistent with other data such as radial velocity measurements.

4. Simultaneous Differential Imaging

To adapt the Hotelling theory to SDI, we need one more index on the data to indicate the spectral band. We denote an observation at pixel m in frame j for band b as , where b=1,2 if there are just two spectral bands. The first step in processing SDI data is to form the difference image, with components given by

| (4.18) |

and the problem is to detect a companion from this new data set.

To simplify the analysis, we assume that the contributions to the data covariance from sky background, dust clouds, and any possible companion are negligible and that the brightness and position of the host are nonrandom and known to the observer. With these assumptions the object term in the covariance is zero.

The noise is assumed to be independent in the two bands, so the noise variances add. With no background, the same readout noise in all pixels of both detectors, and temporally stationary atmospheric statistics, Eq. (4.17) becomes

| (4.19) |

where is the mean sensitivity function and Ab* is the brightness of the host star for band b. One overbar on K has been deleted, since averaging over random objects is not needed.

The PSF term for the difference data is defined by

| (4.20) |

For a nonrandom point object and a wavelength-dependent PSF,

| (4.21) |

but the usual assumption in SDI is that the PSF is independent of wavelength. In that case, we find that

| (4.22) |

Comparing this result with Eq. (4.16), we see that the PSF term has the same form but is reduced in magnitude by the factor (A2* − A1*)²/A*².

The signal from the faint companion is also reduced. If we denote the object function for the companion in band b as fbs(r), then

| (4.23) |

Thus the noise variance is doubled in forming the difference image (compared with a single image with the same mean number of photons), the PSF term in the covariance is reduced by a potentially large factor, and the mean signal is also reduced. The signal and both terms in the covariance are reduced further by the need to use narrowband filters in SDI. The net gain or loss in detectability can be determined by comparing the Hotelling SNR² values for the two data sets G and δG and comparing both with the SNR² for data obtained over a broader spectral range.
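The comparison can be sketched on a toy model. Everything in the following example is an assumption introduced for illustration: a single frame of 64 pixels on a line, a Gaussian-shaped speckle correlation matrix, a speckle covariance that scales with the square of the host brightness, and hypothetical host and companion brightnesses in the two bands. It is not the covariance of Eqs. (4.19)–(4.23); it shows only how the two SNR² values would be computed and compared once those covariances are available.

```python
import numpy as np

def hotelling_snr2(signal, cov):
    """Hotelling SNR^2 = s^T K^{-1} s for mean signal s and covariance K."""
    return float(signal @ np.linalg.solve(cov, signal))

M = 64
pix = np.arange(M)
# Assumed speckle correlation structure (Gaussian, width 3 pixels).
speckle_corr = np.exp(-0.5 * ((pix[:, None] - pix[None, :]) / 3.0) ** 2)

noise_var = 1.0            # per-pixel noise variance in one band (hypothetical)
speckle_scale = 1e-4       # speckle variance per unit host brightness squared
A1, A2 = 1000.0, 990.0     # host brightness in bands 1 and 2 (hypothetical)
a1, a2 = 0.5, 3.0          # companion brightness in bands 1 and 2 (hypothetical)
profile = np.exp(-0.5 * ((pix - 40) / 1.5) ** 2)   # companion image shape

# Single-band data (band 2 only).
K_single = noise_var * np.eye(M) + speckle_scale * A2**2 * speckle_corr
s_single = a2 * profile

# Difference data: noise variances add, PSF term scales as (A2 - A1)^2,
# and the mean signal is the difference of the companion brightnesses.
K_diff = 2.0 * noise_var * np.eye(M) + speckle_scale * (A2 - A1) ** 2 * speckle_corr
s_diff = (a2 - a1) * profile

snr2_single = hotelling_snr2(s_single, K_single)
snr2_diff = hotelling_snr2(s_diff, K_diff)
print(snr2_single, snr2_diff)
```

Which of the two values is larger depends on the assumed speckle strength, the spectral contrast of the companion, and the noise level, which is why the comparison must be made case by case.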

C. Photometry

Astronomers are interested not only in detecting objects but also in determining the flux coming from them. By estimating flux, and in particular estimating the flux in different wavelength ranges (i.e., the color), they can determine physical properties (temperature, age, mass, etc.) of the object in question.

1. Current Practice

The estimation of flux from images, which is referred to as photometry, is usually carried out in one of two ways: aperture photometry or PSF fitting. In aperture photometry the flux in an area including the object is summed, and an estimate of the background is subtracted. The background estimate is obtained simply by summing the flux inside an aperture where no objects are believed to be present. The aperture used to estimate the background is usually an annulus around the object.
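A minimal sketch of aperture photometry as just described follows. The function name, the radii, and the synthetic noise-free image (a Gaussian star of total flux 1000 on a flat background of 10 per pixel) are hypothetical. The annulus mean is scaled by the number of aperture pixels before subtraction, which is an assumption about how the background sum is normalized.

```python
import numpy as np

def aperture_photometry(image, center, r_ap, r_in, r_out):
    """Sum the flux inside a circular aperture and subtract a background
    level estimated as the mean pixel value in a surrounding annulus."""
    yy, xx = np.indices(image.shape)
    r = np.hypot(yy - center[0], xx - center[1])
    aperture = r <= r_ap
    annulus = (r >= r_in) & (r <= r_out)
    background_per_pixel = image[annulus].mean()
    return float(image[aperture].sum() - aperture.sum() * background_per_pixel)

# Synthetic, noise-free test image (hypothetical values).
size, center = 41, (20, 20)
yy, xx = np.indices((size, size))
star = np.exp(-0.5 * ((yy - 20) ** 2 + (xx - 20) ** 2) / 2.0**2)
star *= 1000.0 / star.sum()          # normalize the star to a total flux of 1000
image = 10.0 + star

flux_est = aperture_photometry(image, center, r_ap=8.0, r_in=12.0, r_out=16.0)
print(flux_est)
```

The estimate falls slightly short of the true flux because a small part of the star lies outside the aperture. With a nonuniform background or a neighboring star in the annulus, the bias can be much larger.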

Aperture photometry will not work in crowded fields, and in this case it is usual to employ PSF fitting. In this approach a model of the objects in the field is fitted to the data. This requires accurate knowledge of the PSF and is complicated if the PSF varies over the field. Esslinger and Edmunds51 simulated crowded fields with PSFs from a real AO system and used a standard photometry package, DAOPHOT, to estimate stellar magnitudes by PSF fitting. They found that even when using the correct PSF in fitting, the rms error in magnitude determination was as high as 0.1 mag for densities lower than a few stars per square arcsecond, and they concluded that they cannot get good photometric precision in crowded fields. They also tested the photometry of simulated faint companions by means of deconvolution and found that deconvolution gave worse results than PSF fitting.

2. Spatiotemporal Wiener Estimator

Since aperture photometry makes questionable assumptions about the background, and PSF fitting breaks down in crowded star fields, it is reasonable to investigate linear methods like the Wiener estimator that incorporate statistical models of the background.

The Wiener estimator for a doubly stochastic spatial problem was given in Eq. (2.19), and the associated ensemble mean square error (EMSE) was given in Eq. (2.20). For estimation of a parameter θ(F) from triply stochastic spatiotemporal data, these equations generalize to

| (4.24) |

| (4.25) |
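For orientation, the structure of a linear Wiener estimator and its EMSE can be sketched on a toy problem. The sketch assumes the standard linear form, in which the estimate is the prior mean of θ plus the cross-covariance times the inverse data covariance applied to the mean-subtracted data. It also assumes a deliberately simple data model that is not the triply stochastic model of this paper: θ is the flux of a single source, the PSF is fixed and known, the frames are stacked into one vector, and the noise is white. All numerical values are hypothetical.

```python
import numpy as np

def wiener_estimate(g, g_mean, theta_mean, K_theta_g, K_g):
    """Linear Wiener estimate: theta_mean + K_theta_g K_g^{-1} (g - g_mean)."""
    return float(theta_mean + K_theta_g @ np.linalg.solve(K_g, g - g_mean))

def wiener_emse(theta_var, K_theta_g, K_g):
    """Ensemble mean-square error of the linear Wiener estimate."""
    return float(theta_var - K_theta_g @ np.linalg.solve(K_g, K_theta_g))

# Toy model (assumed): g = theta * h + n, with h a known PSF sampled on
# 16 pixels in each of 5 frames, stacked into a single data vector.
n_pix, n_frames = 16, 5
h_frame = np.exp(-0.5 * ((np.arange(n_pix) - 8) / 2.0) ** 2)
h = np.tile(h_frame, n_frames)

theta_mean, theta_var, noise_var = 100.0, 25.0, 4.0   # hypothetical prior and noise
g_mean = theta_mean * h                                # grand mean of the data
K_g = theta_var * np.outer(h, h) + noise_var * np.eye(h.size)
K_theta_g = theta_var * h                              # cross-covariance of theta and g

rng = np.random.default_rng(0)
theta_true = 108.0
g = theta_true * h + rng.normal(0.0, np.sqrt(noise_var), h.size)

theta_hat = wiener_estimate(g, g_mean, theta_mean, K_theta_g, K_g)
emse = wiener_emse(theta_var, K_theta_g, K_g)
print(theta_hat, emse)
```

In this toy model the EMSE has the closed form θ-variance times noise variance divided by (noise variance + θ-variance × h·h), which provides a check on the matrix computation. A random PSF and a random background would add further terms to K_g.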

Calculation of the grand mean and the two covariances KG and Kθ,G must now include the fact that θ is random. Since θ is a function of F, we can write

| (4.26) |

and the grand mean is

| (4.27) |