Abstract

It is presently unknown whether our response to affective vocalizations is specific to those generated by humans or more universal, triggered by emotionally matched vocalizations generated by other species. Here, we used functional magnetic resonance imaging in normal participants to measure cerebral activity during auditory stimulation with affectively valenced animal vocalizations, some familiar (cats) and others not (rhesus monkeys). Positively versus negatively valenced vocalizations from cats and monkeys elicited different cerebral responses despite the participants' inability to differentiate the valence of these animal vocalizations by overt behavioural responses. Moreover, the comparison with human non-speech affective vocalizations revealed a common response to the valence in orbitofrontal cortex, a key component of the limbic system. These findings suggest that the neural mechanisms involved in processing human affective vocalizations may be recruited by heterospecific affective vocalizations at an unconscious level, supporting claims of shared emotional systems across species.

Keywords: emotion, voice, conspecific vocalizations, functional magnetic resonance imaging, limbic system, auditory cortex

1. Introduction

The accurate perception of affective information in the human voice plays a critical role in human social interactions. In the presence of another individual, whether visual information is available or not, we use acoustic correlates of emotional and motivational states to modulate and predict social behaviour. An increasing number of neuroimaging studies suggest that processing vocal affective information involves brain regions different from those involved in speech perception (Sander & Scheich 2001; Fecteau et al. 2005b, 2007; Grandjean et al. 2005; Schirmer & Kotz 2006). What is unknown is the extent to which these regions are selectively tuned to the affective sounds of the human voice or more broadly tuned to the affective sounds from humans and other animals.

Ever since Darwin argued for a common set of underlying mechanisms based on his comparative studies of human and animal facial and vocal expression (Darwin 1872), there have been attempts to document the continuity of emotional expression in human and non-human animals. Morton (1977, 1982), for example, suggested a suite of motivational–structural rules to capture the affiliative, fearful and aggressive vocalizations of birds and mammals. In general, the vocalizations associated with fear or affiliation tend to be relatively high in frequency and tonal, whereas aggressive vocalizations tend to be low in frequency and noisy. More recently, Owren & Rendall (2001) have argued for a tight relationship between structure and function in the calls of non-human primates, and, in particular, that vocalizations such as alarm calls share a common morphology across species, suitably designed to induce a state of fear, which triggers escape. Yet efforts to empirically test the phylogenetic continuity of the structure–function relationship in the vocalizations have been hindered by an insufficiently precise specification of the acoustic parameters and the affective states to be compared across species (Scherer 1985).

Here, we directly test the continuity hypothesis by measuring human cerebral activity during stimulation with animal affective vocalizations. We asked whether the human brain would show different responses to the affective valence of animal vocalizations and, if so, whether some of this response would overlap with the one observed for human, species-specific vocalizations. Valence is a fundamental dimension of human emotional experience, differentiating positive versus negative emotions/situations (also approach/avoidance; see also Davidson et al. 2000), used both in ‘dimensional’ approaches to emotion (Russell 1980; Lang 1995) and in ‘categorical’ accounts of emotion proposing a small number of ‘basic emotions’, which agree on classifying these basic emotions into positive (e.g. happiness) versus negative (e.g. fear, disgust; Ekman & Friesen 1978). A growing number of human neuroimaging studies reveal cerebral activity related to valence that is dissociated from the response to another important dimension of emotion: arousal (e.g. Anderson et al. 2003; Anders et al. 2004; Winston et al. 2005; Lewis et al. 2007). Valence is also a dimension along which animal affective expressions can be relatively simply categorized, at least when the affective context of the vocalization is known. It thus constitutes a good candidate for a common affective dimension along which to compare the animal and human vocalizations.

We used sets of affective vocalizations from two non-human species, domestic cats (Felis catus) and rhesus monkeys (Macaca mulatta), that were classified into positive versus negative valence by the investigator who made the recordings (N.N. for the cats and M.D.H. for the monkeys) based on the affective context at the time of recording. Thus, a cat meow produced in a distress situation was placed in the ‘negative’ category, while a monkey harmonic arch produced upon discovering rare high-quality food was placed in the ‘positive’ category. Although cat sounds are to some extent familiar to most human adults, the rhesus monkey calls were not.

We scanned normal human volunteers while they performed an animal classification task (monkey/cat) on the vocalizations. We expected to observe differences in cerebral response related to the valence category of the animal calls. We also compared these cerebral responses with those elicited by human non-speech affective vocalizations, such as laughs and screams, in order to uncover possible commonalities in cerebral activation.

2. Material and methods

(a) Participants

Twelve right-handed normal subjects (age: 22±1 years; five females) with no history of neurological or psychiatric conditions participated in this study after giving written informed consent. The study was approved by the ethical committee from the Centre Hospitalier of Université de Montréal.

(b) Affective vocalizations

(i) Animal vocalizations

The animal vocalizations consisted of 36 rhesus vocalizations (recordings by M.D.H.) and 36 cat vocalizations (recordings by N.N.) classified into positive and negative valence based on the affective context of the recording. In both species, the 36 vocalizations consisted of 18 positive and 18 negative valence vocalizations. The cat vocalizations consisted of ‘meows’ or ‘miaows’. Calls recorded in food-related and affiliative contexts were assigned to the positive affect category, whereas calls recorded in agonistic and distress contexts were assigned to the negative category (Nicastro & Owren 2003). The rhesus monkey calls included girneys, harmonic arches and a warble, included in the positive category, and screams and gekkers, included in the negative category.

(ii) Human vocalizations

The human vocalizations (described elsewhere; Fecteau et al. 2005a, 2007) consisted of 48 non-linguistic exemplars with positive (n=24; laughs, sexual vocalizations) and negative valence (n=24; cries, fearful screams). Unlike the animal calls, the human vocalizations also included emotionally neutral vocalizations such as coughs or throat clearings, but those were not included in the statistical comparison with the animal vocalizations.

All stimuli were digitized at a sampling rate of 22 050 Hz and a 16 bit resolution, and were normalized by peak amplitude using Cool Edit Pro (Syntrillium Corporation). They ranged in duration from 300 ms (a positive cat meow) to 2092 ms (a negative cat meow).

(c) Acoustical analyses

Acoustical analyses were performed on each vocalization using Praat (http://www.praat.org) and in-house Matlab scripts (Mathworks, Inc.). For each vocalization, the following measures were obtained: average energy (RMS); duration (DUR); fundamental frequency of phonation (f0) in voiced frames, including median f0 (MEDf0), standard deviation of f0 (SDf0), minimum (MINf0) and maximum f0 (MAXf0); percentage of unvoiced frames (% UNV); and harmonic-to-noise ratio of the voice parts (HNR). Measures are listed in tables 1–4 of the electronic supplementary material for the animal positive, animal negative, human positive and human negative vocalizations, respectively. Table 1 provides a general summary.
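As an illustration of the simplest of these measures, the following minimal Python sketch computes peak normalization, average energy (RMS) and duration (DUR) for a waveform. It is not the authors' Praat or Matlab code: the function names and the synthetic 440 Hz test tone are assumptions introduced here, and the f0, % UNV and HNR measures, which require a pitch tracker such as Praat, are not covered.

```python
import math

SAMPLE_RATE = 22050  # Hz, the sampling rate reported for the stimuli


def peak_normalize(samples, peak=1.0):
    """Scale a waveform so that its largest absolute sample equals `peak`."""
    largest = max(abs(s) for s in samples)
    if largest == 0:
        return list(samples)  # silent input: nothing to scale
    return [s * peak / largest for s in samples]


def rms_energy(samples):
    """Root-mean-square amplitude of a waveform (arbitrary units)."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def duration_s(samples, sample_rate=SAMPLE_RATE):
    """Duration in seconds, derived from the number of samples."""
    return len(samples) / sample_rate


# Hypothetical stimulus (not from the study): a 0.3 s, 440 Hz tone
# at half amplitude, standing in for a recorded vocalization.
n_samples = round(0.3 * SAMPLE_RATE)
tone = [0.5 * math.sin(2 * math.pi * 440 * i / SAMPLE_RATE)
        for i in range(n_samples)]
normalized = peak_normalize(tone)

print(f"DUR = {duration_s(normalized):.3f} s")
print(f"RMS = {rms_energy(normalized):.3f}")
```

Because the stimuli were normalized by peak amplitude rather than by energy, RMS can still differ between vocalizations, which is why it remains a meaningful measure to report.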

Table 1.

General comparison of acoustic characteristics. (Average acoustic measures of the vocalizations used in the neuroimaging experiments. RMS, mean energy (arb. units); DUR, duration (seconds); MEDf0, median fundamental frequency of phonation (f0, Hz); SDf0, standard deviation of f0 (Hz); MINf0, minimum of f0 (Hz); MAXf0, maximum of f0 (Hz); % UNV, percentage of unvoiced frames; HNR, harmonic-to-noise ratio (dB).)

| | RMS | DUR<sup>a,b</sup> | MEDf0<sup>a,b</sup> | SDf0<sup>a</sup> | MINf0<sup>a,b</sup> | MAXf0<sup>a</sup> | % UNV<sup>c</sup> | HNR |
|---|---|---|---|---|---|---|---|---|
| animal positive | 0.235 | 0.713 | 726.5 | 339.7 | 369.7 | 1612.8 | 4.40 | 8.12 |
| s.d. | 0.053 | 0.394 | 475.2 | 485.4 | 218.4 | 1695.5 | 10.00 | 5.15 |
| animal negative | 0.212 | 0.983 | 1673.7 | 547.2 | 808.7 | 2940.0 | 21.99 | 10.19 |
| s.d. | 0.089 | 0.388 | 1347.7 | 573.3 | 777.2 | 2524.3 | 29.116 | 6.128 |
| human positive | 0.181 | 1.357 | 441.8 | 138.2 | 278.5 | 824.1 | 36.43 | 8.32 |
| s.d. | 0.056 | 0.481 | 390.6 | 218.2 | 310.3 | 795.5 | 23.00 | 4.80 |
| human negative | 0.216 | 1.485 | 594.3 | 107.8 | 337.9 | 793.7 | 16.48 | 10.12 |
| s.d. | 0.095 | 0.441 | 553.2 | 106.8 | 384.3 | 585.9 | 23.9 | 5.87 |

<sup>a</sup> Significant (p<0.05 Bonferroni corrected for multiple comparisons) main effect of animal/human.

<sup>b</sup> Significant main effect of positive/negative.

<sup>c</sup> Significant interaction between animal/human and positive/negative.

(d) Scanning procedure

The subjects were scanned while performing a monkey/cat classification on the animal vocalizations in one functional run, and a male/female gender classification on the human vocalizations in the second functional run. The subjects indicated their choice using two mouse buttons with their right hand (button order counterbalanced across the subjects). Reaction times were recorded using MCF (Digi Vox, Montreal, Canada). Data from three subjects who failed to respond on more than 10% of trials were excluded from the analysis. Stimuli were presented using an MR-compatible pneumatic system, with a 5.5 s average stimulus onset asynchrony, and a 25% proportion of null events used as a silent baseline. Each stimulus was presented twice in each run in a pseudo-random order.

Scanning was performed on a 1.5 T MRI system (Magnetom Vision, Siemens Electric, Erlangen, Germany) at the Centre Hospitalier of Université de Montréal. Functional scans were acquired with a single-shot echo-planar gradient-echo (EPI) pulse sequence (TR=2.6 s, TE=40 ms, flip angle=90°, FOV=215 mm, matrix=128×128). The 28 axial slices (resolution 3.75×3.75 mm in-plane, 5 mm thickness) in each volume were aligned with the AC–PC line, covering the whole brain. A total of 320 volumes were acquired (the first four volumes of each series were later discarded to allow for T1 saturation) in each functional run. After the functional scanning, T1-weighted anatomical images were obtained for each participant (1×1×1 mm resolution). Scanner noise was continuous throughout the experiment providing a constant background (baseline).

(e) Affective ratings

After scanning, the subjects were asked to rate each stimulus (human and animal vocalizations) on perceived affective valence (from extremely negative to extremely positive) using a visual analogue scale (ratings converted to a 0–100 integer). Ratings were obtained for 10 out of the 12 subjects for the animal vocalizations, and 11 out of the 12 subjects for the human vocalizations.

(f) fMRI analysis

Image processing and statistical analysis were performed using SPM2 (Wellcome Department of Cognitive Neurology; Friston et al. 1994; Worsley & Friston 1995). The imaging time series corresponding to each of the two runs (human and animal) was realigned to the first volume to correct for inter-scan movement. Time series were shifted using sinc interpolation to correct for the differences in slice acquisition times. The functional images were then spatially normalized to a standard stereotaxic space (Talairach & Tournoux 1988) based on a template provided by the Montreal Neurological Institute (Evans et al. 1994) to allow for group analysis. Finally, functional data were spatially smoothed with an 8 mm isotropic Gaussian kernel to compensate for residual inter-participant variability and to allow for the application of Gaussian random field theory in the statistical analysis (Friston et al. 1994). Each subject's structural scan was co-registered with the mean realigned functional image and normalized using the parameters determined for the functional images. A mean anatomical image was created from the participants' individual scans, onto which activation was overlaid for anatomical localization.

Data analysis was performed in a two-stage mixed-effects analysis (equivalent to a random-effects analysis) in which BOLD responses for each subject were first modelled using a synthetic haemodynamic function in the context of the fixed-effects general linear model. Subject-specific linear contrasts on the parameter estimates corresponding to the positive versus negative vocalizations (for both the human and animal runs) were then entered into a second-level multiple regression analysis (two variables: (positive–negative) humans and (positive–negative) animals) to perform between-subject analyses, resulting in a t statistic for each voxel. These t statistics (transformed to Z statistics) constitute a statistical parametric map (SPM). The SPMs were thresholded at p=0.001 uncorrected for multiple comparisons across the brain (i.e. a threshold of t>4.0 with d.f.=11). Effect size was extracted for each subject in the regions of significant change across the conditions using MarsBar (Brett et al. 2002).
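The logic of the second-level step can be sketched for a single voxel as follows. This is a simplified illustration, not the SPM2 procedure used in the study: it replaces the two-variable multiple regression with a plain one-sample t-test on one contrast, and the per-subject contrast values are hypothetical numbers introduced here. Only the threshold (t>4.0 with d.f.=11) is taken from the text.

```python
import math
import statistics

T_THRESHOLD = 4.0  # threshold reported in the text for p=0.001 uncorrected


def one_sample_t(values):
    """Return (t, d.f.) for a test of whether the mean of `values` is zero."""
    n = len(values)
    mean = statistics.mean(values)
    sem = statistics.stdev(values) / math.sqrt(n)  # standard error of the mean
    return mean / sem, n - 1


# Hypothetical first-level contrast estimates (positive minus negative)
# at one voxel, one value per subject. These are NOT data from the study.
contrast = [0.42, 0.31, 0.55, 0.12, 0.47, 0.38,
            0.29, 0.61, 0.35, 0.22, 0.44, 0.50]

t_value, dof = one_sample_t(contrast)
print(f"t({dof}) = {t_value:.2f}, suprathreshold: {t_value > T_THRESHOLD}")
```

Treating each subject's contrast estimate as a single observation is what makes the inference a random-effects one: the error term reflects variability between subjects rather than between scans.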

3. Results

(a) Acoustical comparisons

Statistical comparisons between acoustical measures for the different categories of vocalizations were performed using two-way ANOVAs with human/animal and positive/negative as factors (table 1, see also tables 1–4 of the electronic supplementary material). All measures except mean energy (RMS), % UNV and HNR were significantly different (p<0.005 uncorrected; p<0.05 with Bonferroni corrections) between the animal and human vocalizations, with generally shorter, higher-pitched animal vocalizations. Overall, the positive and negative vocalizations showed significant (p<0.008) differences for stimulus duration (DUR), slightly longer for the negative calls for both the human and animal vocalizations (table 1), and median f0 and minimum f0, both with higher values for the negative vocalizations. A significant interaction between the human/animal and positive/negative was only observed for the % UNV (p<0.05 with Bonferroni correction), highest for the animal negative and human positive vocalizations.

(b) Behavioural measures

During scanning, response accuracy (n=9) for the cat/monkey classification task was 66.7±4.5% for the positive animal vocalizations and 71.0±4.5% for the negative animal vocalizations. For the male/female task, the response accuracy was 77.8±3.5% for the positive human vocalizations and 70.4±4.9% for the negative human vocalizations. All classifications were, therefore, consistently above chance, indicating appropriate perception of auditory stimuli in the scanner environment. No significant effect of task or valence category or interaction between these factors was found on per cent correct scores (all F(1,8)<4.45, p>0.05). Reaction time data, by contrast, revealed a significant main effect of task (F(1,8)=29.6, p<0.001), with the animal classification task yielding faster reaction times than the gender classification task, but there was no significant effect of vocalization valence or interaction between task and valence (reaction time from stimulus onset: positive animal vocalizations, 1205±366 ms; negative animal vocalizations, 1239±396 ms; positive human vocalizations, 1773±478 ms; negative human vocalizations, 1776±574 ms).

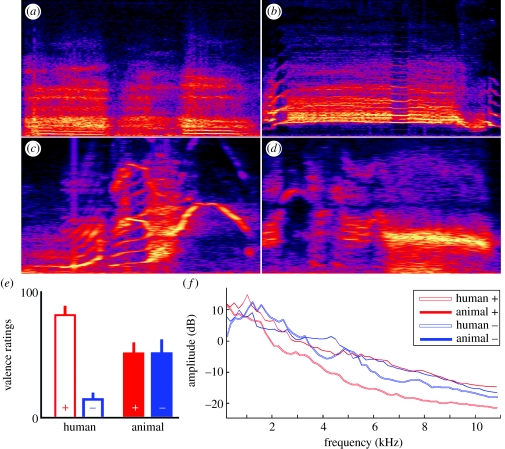

After scanning, participants rated both the human and animal vocalizations on perceived emotional valence. As expected, valence ratings were markedly different (t(10)=28.2, p<0.001) for the positive and negative human vocalizations (valence ratings 0–100 (mean±s.d.): human positive, 85.1±5.2; human negative, 14±3.6), replicating previous work (Fecteau et al. 2004). In contrast, valence ratings for the animal vocalizations were virtually identical for the positive versus negative categories (figure 1e; valence ratings 0–100 (mean±s.d.): monkey positive, 54.2±5.6; cat positive, 48.0±10.1; monkey negative, 53.7±7.8; cat negative, 49.0±13.1). A two-way repeated measures ANOVA with valence and animal species as factors showed no effect of valence (F(1,9)=0.026, p>0.8) and no interaction between valence and species (F(1,9)=0.566, p>0.4), indicating that the participants were unable to extract affective information from the unfamiliar rhesus or more familiar cat vocalizations. The only effect close to significance was a trend for a main effect of species (F(1,9)=3.83, p=0.08), indicating lower valence ratings for the cat than monkey vocalizations, irrespective of valence category.

Figure 1.

Human and animal affective vocalizations. (a–d) Spectrograms (0–11 025 Hz) of examples of stimuli from each of the four categories of vocalizations. (a) Human positive vocalization: sexual pleasure. (b) Human negative vocalization: scream of fear. (c) Animal positive vocalization: monkey harmonic arch. (d) Animal negative vocalization: monkey scream. (e) Ratings of perceived affective valence on a 0–100 visual analogue scale (0, extremely negative; 100, extremely positive). Note the absence of difference in perceived valence for the animal vocalizations. (f) Long-term average spectrum for the four sound categories.

(c) fMRI analysis

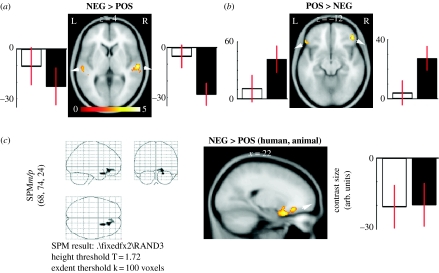

Joint analysis of the functional magnetic resonance imaging (fMRI) data from the human and animal runs revealed several activation differences between the positive and negative vocalizations (figure 2; table 2). Bilateral regions of the secondary auditory cortex, located lateral and posterior to Heschl's gyrus in the left and right hemispheres, were more active for the vocalizations included in the negative category (figure 2a). Note that this effect was largely driven by the animal vocalizations (black bars in figure 2). Conversely, the bilateral regions of lateral inferior prefrontal cortex, pars orbitalis (Brodmann area 47), showed the opposite pattern of response, with more activity for the positively than negatively valenced vocalizations (figure 2b). Again, this effect was largely driven by the animal vocalizations.

Figure 2.

Neuronal response to affective valence differences. Regions of significant (p<0.001 uncorrected) response difference to vocalization valence. (a) Bilateral regions of auditory cortex are more active for negative vocalizations. (b) Bilateral regions of lateral inferior prefrontal cortex respond more to positive versus negative vocalizations. Note that these two effects are largely driven by the animal vocalizations. (c) Unique region of significant (p<0.001 uncorrected) BOLD signal change to positive versus negative vocalizations in the conjunction analysis (response to human AND animal vocalizations). Open square, human vocalizations; filled square, animal vocalizations. This part of the right medial posterior OFC is more active for negative vocalizations. Note similar effect sizes for the human and animal vocalizations. NEG, negative; POS, positive.

Table 2.

Cerebral areas of differential response to positive versus negative vocalizations. (Peaks of significant (p<0.001 uncorrected) BOLD signal difference between positively and negatively valenced vocalizations are listed with approximate anatomical localization, coordinates in Talairach space and t-value of peak. *p<0.005 uncorrected in the conjunction analysis.)

| anatomical localization | Talairach x | Talairach y | Talairach z | t-value |
|---|---|---|---|---|
| joint analysis (human OR animal vocalizations) | | | | |
| positive>negative vocalizations | | | | |
| right inferior prefrontal cortex (BA 47) | 46 | 40 | −12 | 4.50 |
| | 50 | 32 | −8 | 4.30 |
| left inferior prefrontal cortex (BA 47) | −58 | 30 | −6 | 4.32 |
| cerebellum | 8 | −50 | −42 | 5.01 |
| | 6 | −44 | −44 | 4.43 |
| | −38 | −72 | −36 | 4.31 |
| occipital cortex | 6 | −88 | 20 | 4.15 |
| negative>positive vocalizations | | | | |
| right superior temporal gyrus (BA 22) | 60 | −18 | 4 | 5.70 |
| | 42 | −18 | −2 | 4.20 |
| right orbitofrontal cortex (BA 47/11) | 20 | 18 | −22 | 5.14 |
| | 18 | 32 | −12 | 4.24 |
| conjunction analysis (human AND animal vocalizations) | | | | |
| positive>negative vocalizations | | | | |
| no significant cluster | | | | |
| negative>positive vocalizations | | | | |
| right orbitofrontal cortex (BA 47/11) | 20 | 18 | −22 | 3.56* |
| | 18 | 34 | −10 | 2.53 |

(d) Conjunction analysis

Next, in order to uncover the commonalities in cerebral response to the human and animal vocalizations, we performed a conjunction analysis. This analysis identifies cerebral regions that are significantly activated in the positive–negative difference for both the human and animal vocalizations (Friston et al. 2005; Nichols et al. 2005). Only a single cerebral region, located in the right ventrolateral orbitofrontal cortex (OFC), in Brodmann area 11/47, showed a significant common activation related to valence differences (table 2; figure 2c): this region showed a greater response to the negative vocalizations for both the human and animal parts of the dataset.
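The idea behind such a conjunction can be sketched as a minimum-statistic test: a voxel counts as commonly activated only if its smaller t value across the two contrasts exceeds the threshold. The sketch below assumes this minimum-statistic form, which the text does not spell out; the function name, the t values and the threshold are all hypothetical and chosen only for illustration.

```python
def conjunction_min_t(t_human, t_animal, threshold):
    """Return indices of voxels whose smaller t value exceeds `threshold`.

    A voxel passes only if the effect is present in BOTH maps, which is
    the logic of a minimum-statistic conjunction.
    """
    return [
        i
        for i, (th, ta) in enumerate(zip(t_human, t_animal))
        if min(th, ta) > threshold
    ]


# Hypothetical t values (negative > positive contrast) at five voxels.
# These numbers are illustrative only and are not taken from the study.
t_human = [3.9, 1.2, 4.1, 0.3, 3.2]
t_animal = [3.6, 5.0, 0.8, 0.1, 3.4]

# Hypothetical threshold chosen for the illustration.
common = conjunction_min_t(t_human, t_animal, threshold=3.0)
print(common)
```

Voxels with a strong effect in only one map (the second and third in this example) are rejected, which is what distinguishes the conjunction (human AND animal) from the joint analysis (human OR animal).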

4. Discussion

This study was designed to test Darwin's emotional continuity hypothesis at the behavioural and neurophysiological levels, asking whether the mechanisms subserving our classification of valenced, non-speech human sounds generalize to comparably valenced animal sounds. The results indicate that under the specific test conditions, behavioural responses fail to reveal accurate discrimination of animal affective vocalizations, whereas cerebral blood flow data from the fMRI reveal successful discrimination, with patterns of activation that mirror those obtained for human affective vocalizations.

There are clearly potential complications with such a comparison. Although valence is a major dimension of affective expression in most theoretical and empirical studies of emotion (Ekman & Friesen 1978; Russell 1980; Lang 1995), a categorization in terms of valence is not always straightforward and should be made with caution in humans, let alone in animals. For example, human laughter can express mirth (positive) but also contempt (negative), depending on the context. For this reason, the set of human affective vocalizations for this study was selected based on prior affective ratings obtained in an independent population of judges on a large number of possible stimuli. We were careful to select prototypical exemplars with a significant difference in valence ratings for the positive versus negative categories (Fecteau et al. 2007). Classifying vocalizations based on valence is even more problematic for animal calls. As for humans, the animal vocalizations of a given category do not necessarily always convey the same affective valence. For example, ‘harmonic arches’ are produced by the monkeys upon discovery of high-quality/rare food, and so it is natural to associate a positive valence with these calls. However, such discovery can also be stressful, especially for a subordinate monkey in a large social group where there is intense aggressive competition and a serious risk of being punished for not sharing food (Hauser 1992). Accordingly, we were careful to avoid a general classification scheme, instead performing the valence classification based on individually recorded vocalizations given in a clearly identified context by an experimenter with years of experience studying the target animal (cf. §2). Thus, while we are aware of the complexity surrounding emotion labels and classification for human and animal vocalizations, we contend that a crude classification in the positive/negative categories constitutes a valid first approach for comparing affective processing of the human and animal vocalizations.

With these limitations in mind, we discuss further the two main findings to emerge from this study. First, there was a contrast between the lack of overt differentiation of the positive and negative animal vocalizations by the subjects (figure 1e), and the robust cerebral activation differences they showed in response to the two categories (figure 2). Second, an important component of the limbic system known to be involved in affective processing, the OFC, was activated similarly by valence differences in the human and animal vocalizations. These results suggest that the differences in cerebral response to the positive and negative animal vocalizations were not simply related to low-level acoustic differences, but also arose from higher-level affective processes also recruited by the human vocalizations. Together, these findings support the notion of evolutionary continuity in affective expression initially posited by Darwin.

(a) Lack of overt valence differentiation in the animal calls

One important feature of the present results is the lack of overt valence differentiation in the animal vocalizations by the human subjects. While the subjects gave much higher valence ratings to the positive than negative human vocalizations, as expected (figure 1e), they gave essentially similar valence ratings to the positive versus negative animal vocalizations. This indicates that within the constraints of the experiments, subjects were unable to extract information on the valence of the calling context from the vocalizations. This lack of valence differentiation was relatively unsurprising for the monkey calls, given subjects' lack of familiarity with these sounds. A different picture may have been expected for the cat calls, though. First, cats are much more present in the urban environment of the subjects, and although we unfortunately did not collect data on familiarity with cats, the subjects were likely to have been exposed to a much larger number of cat meows in their life than to rhesus calls. Second, domestic cats directly depend on humans for their survival, at least to a greater extent than the macaque colonies raised in semi-captivity on the Island of Cayo Santiago where the recordings were made. Thus, an effective transmission of affective valence in their vocalizations to humans would seem highly advantageous; the meows would be particularly suited to this purpose since they are mostly produced in cat-to-human contexts (Nicastro & Owren 2003). In their study of classification of cat meows by humans in five different call production contexts (food related, agonistic, affiliative, obstacle or distress), Nicastro & Owren (2003) observed a classification accuracy that was significantly above chance, although modestly so. Also, classification accuracy was better if subjects had lived with, interacted with or had a general affinity for cats (Nicastro & Owren 2003). The fact that the present results do not replicate these earlier findings could be explained either by our use of a somewhat more abstract, positive/negative classification task, or by the fact that the subjects' familiarity with cats had not been controlled.

The lack of overt valence differentiation of the animal calls can also be accounted for by the fact that classification of affective signals in terms of valence may not actually be very easy or natural to begin with. The subjects' success with the human stimuli might have been largely epiphenomenal, perhaps because they were influenced in their valence ratings by the fact that they recognized the category of vocalizations (e.g. recognizing a laugh as such, then attributing to the stimulus the positive valence of the prototypical laugh). Such a strategy would have been encouraged by the fact that we purposely chose unambiguous, prototypical human vocalizations. But it would not give good results for the animal calls that were either all of the same broad category (meows for cats) or from call categories unknown to the subjects (e.g. grunts, harmonic arches). This possibility should be tested in future studies using less prototypical human vocalizations or categorization tasks based on another affective dimension, e.g. arousal (the ‘activity dimension’ of emotion).

(b) Lack of brain–behaviour correlation

We did not observe overt valence differentiation of the animal calls by our subjects. This stands in contrast to the fMRI data that revealed robust differences in the patterns of activation elicited by the positive versus negative calls, mostly driven by the animal vocalizations (figure 2). Although this pattern of results (differences in brain activation not translated into an observable behavioural difference) may appear surprising, there is now compelling evidence that the relation between behavioural performance and cerebral activation is complex, variable across brain regions and by no means linear. The lack of a straightforward relation between behaviour and brain activity is illustrated in many recent papers (e.g. Grossman et al. 2002; Handy et al. 2003). Wilkinson & Halligan (2004), in particular, discuss the danger of excluding new potentially informative indices by demanding that all patterns of brain activation be paired with a demonstrable behavioural correlate.

Here, fMRI revealed significant differences in brain response to the positive and negative vocalizations. A nearly symmetrical portion of the secondary auditory cortex in the two hemispheres showed a significantly greater response to the negative than positive vocalizations (figure 2a; table 2). Similar activations have also been reported in the context of affective processing of the human voice (Sander & Scheich 2001; Grandjean et al. 2005). A straightforward interpretation of this auditory activation is that it reflects low-level acoustical differences between the vocalizations. Indeed, acoustical analyses revealed a number of significant structural differences related to vocalization valence (table 1): the negative vocalizations were characterized by longer calls, well suited to activate auditory cortex more, and by higher median and minimum f0 values. The magnitude of these acoustical differences between the positive and negative vocalizations was also greater for the animal than human vocalizations (difference negative–positive, from the means in table 1: DUR: animal 270 ms, human 128 ms; MINf0: animal 439 Hz, human 59.4 Hz; MEDf0: animal 947.2 Hz, human 152.5 Hz), which may explain why the effect sizes for the negative–positive contrast were of higher magnitude for the animal vocalizations. The greater activation of auditory cortex by the negative than positive vocalizations can also be related to the emotionally driven enhanced response of auditory cortex to negatively reinforced stimuli found in conditioning studies in both humans and rodents.
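The negative minus positive differences discussed above can be recomputed directly from the means in table 1, as in the short sketch below. The dictionary layout and function name are assumptions made for illustration; the numerical values are copied from table 1.

```python
# Mean acoustic measures copied from table 1 (DUR in seconds, f0 in Hz).
TABLE1_MEANS = {
    ("animal", "positive"): {"DUR": 0.713, "MEDf0": 726.5, "MINf0": 369.7},
    ("animal", "negative"): {"DUR": 0.983, "MEDf0": 1673.7, "MINf0": 808.7},
    ("human", "positive"): {"DUR": 1.357, "MEDf0": 441.8, "MINf0": 278.5},
    ("human", "negative"): {"DUR": 1.485, "MEDf0": 594.3, "MINf0": 337.9},
}


def negative_minus_positive(source, measure):
    """Difference between the negative and positive category means."""
    neg = TABLE1_MEANS[(source, "negative")][measure]
    pos = TABLE1_MEANS[(source, "positive")][measure]
    return neg - pos


for measure in ("DUR", "MINf0", "MEDf0"):
    for source in ("animal", "human"):
        diff = negative_minus_positive(source, measure)
        print(f"{measure:6s} {source:7s} {diff:8.3f}")
```

For all three measures the difference is larger for the animal than for the human vocalizations, which is the asymmetry the paragraph above relies on.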

The opposite pattern of response was observed in prefrontal cortex. Nearly symmetrical regions in the two hemispheres, located in the lateral part of inferior prefrontal cortex, pars orbitalis (Brodmann area 47), were more activated for the positively than negatively valenced vocalizations (figure 2b). Like the activations in auditory cortex, these lateral prefrontal activations could reflect processing of acoustical differences associated with valence, although perhaps at a higher level of integration. They may also correspond to the recruitment of a voice-sensitive auditory field in human prefrontal cortex (Fecteau et al. 2005b), possibly homologous to the vocalization-sensitive auditory fields recently identified in macaque prefrontal cortex (Romanski et al. 2005).

(c) Cross-specific activation of OFC

Our results reveal a differential pattern of neural activation in response to the positive versus negative animal vocalizations. Although we are not licensed to conclude that this result is uniquely mediated by a mechanism that computes affective valence, our conjunction analysis strengthens this interpretation. This analysis allowed us to uncover activations of lesser magnitude (a less conservative threshold was used) but present in response to both the human and animal vocalizations. Only one cerebral region showed statistically significant patterns of activation. Specifically, an extended portion of the right OFC showed activation common to the human and animal vocalizations, responding more to the negative than positive vocalizations regardless of their source, human or animal (figure 2c).
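For readers unfamiliar with conjunction analyses, one common formalization is the minimum statistic (cf. Nichols et al. 2005): a voxel counts as commonly activated only if the smaller of its two contrast statistics exceeds the threshold. The sketch below illustrates that logic only; the t values, the threshold and the function name are hypothetical, and it is not necessarily the exact procedure or threshold used in the present study.

```python
def minimum_statistic_conjunction(t_map_a, t_map_b, threshold):
    """Flag voxels where BOTH contrasts exceed the threshold.

    Taking the voxel-wise minimum of the two statistics and thresholding it
    is equivalent to requiring each statistic to pass on its own.
    """
    if len(t_map_a) != len(t_map_b):
        raise ValueError("both maps must cover the same voxels")
    min_stat = [min(a, b) for a, b in zip(t_map_a, t_map_b)]
    return [m > threshold for m in min_stat]


# Hypothetical t values for five voxels (illustration only, not study data).
t_human = [4.1, 0.8, 3.5, 2.9, 5.0]   # negative > positive, human vocalizations
t_animal = [3.6, 4.2, 1.1, 3.2, 0.4]  # negative > positive, animal vocalizations

common = minimum_statistic_conjunction(t_human, t_animal, threshold=2.5)
print(common)
```

In this toy example only the first and fourth voxels survive, because every other voxel fails the threshold in at least one of the two contrasts.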

The OFC is a key component of the limbic system involved in emotional control and decision making in relation to cognition and emotion (Rolls 1996; Bechara et al. 2000; Adolphs 2002). Its activation has been reported in previous neuroimaging studies of emotional processing in the auditory domain. Blood et al. (1999), for instance, measured the cerebral response to musical scales of increasing dissonance. They observed significant correlations between cerebral activity and consonance in a large part of the OFC, although in the opposite direction: OFC activity was greater for the most consonant pieces (Blood et al. 1999), unlike the greater response to the negative vocalizations observed here. The OFC activation in the context of voice processing is also consistent with reports of impaired voice expression identification in patients with ventral frontal lobe damage (Hornak et al. 1996).

Importantly, the common OFC response to human and animal vocalizations occurred despite the differences across species in the underlying acoustical morphology of these vocalizations (cf. figure 1f; table 1). Thus, OFC activation probably reflects a more refined, abstract representation of affective valence, less directly dependent on the fine structure of the stimuli than at the lower levels of cortical processing, and potentially also recruited by the stimuli from another species, or in another sensory modality (Lewis et al. 2007).

Interestingly, a recent study using positron emission tomography in awake macaques revealed a comparable, although slightly more medial, activation of the ventromedial prefrontal cortex when listening to negatively valenced vocalizations (screams) was compared with listening to positively valenced vocalizations (coos) or non-biological control sounds (Gil-da-Costa et al. 2004). This suggests that the response to the affective valence of vocalizations observed in the human brain may have a counterpart in another primate species, namely the rhesus macaque, whose calls were also used as stimuli here.

5. Conclusion

In summary, we present two significant findings. First, the human brain responds differently to animal vocalizations of positive or negative affective valence even when there is no evidence of conscious differentiation of calls as a function of valence. Although this dissociation between behavioural and neurophysiological measures is surprising, it is a feature of several neuroimaging studies of cognition that illustrates the complex relation between cerebral activation and behaviour (Wilkinson & Halligan 2004) and provides interesting insight into affective processing in the human brain. Second, at the level of OFC, the negatively valenced calls of animals are as likely to trigger brain activation as similarly valenced human sounds. Overall, this suggests an important degree of evolutionary continuity with respect to the underlying mechanisms. This continuity is not surprising since the physiology of vocal production is largely similar across mammals such as monkeys, cats and humans (Fitch & Hauser 1995), in particular in its affective aspects (Darwin 1872). Future studies should further explore both this continuity and the possibility of discontinuity, while furthering our understanding of the precise functional significance of the OFC activations observed here. It will be important, in particular, to study other dimensions of affective expression such as ‘arousal’ or ‘activity’, which would perhaps not show the same degree of asymmetry in the ratings between the human and animal vocalizations.

Acknowledgments

The study was approved by the ethical committee from the Centre Hospitalier of Université de Montréal.

We thank Jean-Maxime Leroux and Boualem Mensour for their assistance with fMRI scanning, Cyril Pernet for useful discussions and Drew Rendall for constructive comments on a previous version of the manuscript. The field research that led to the recording of the macaque vocalizations was supported by the National Center for Research Resources (NCRR; grant no. CM-5-P40RR003640-13), a component of the National Institutes of Health (NIH). Its contents are solely the responsibility of the authors and do not necessarily represent the official views of the NCRR or NIH. This work is also supported by the Canadian Institutes of Health Research (P.B., S.F. and J.L.A.), the Canada Research Chairs Program (J.L.A.), France Telecom (P.B.) and gifts from J. Epstein and S. Shuman (M.D.H.).

Supplementary Material

Table 1: acoustic characteristics of the animal positive vocalizations. Table 2: acoustic characteristics of the animal negative vocalizations. Table 3: acoustic characteristics of the human positive vocalizations. Table 4: acoustic characteristics of the human negative vocalizations.

References

- Adolphs R. Neural systems for recognizing emotion. Curr. Opin. Neurobiol. 2002;12:169–177. doi:10.1016/S0959-4388(02)00301-X

- Anders S, Lotze M, Erb M, Grodd W, Birbaumer N. Brain activity underlying emotional valence and arousal: a response-related fMRI study. Hum. Brain Mapp. 2004;23:200–209. doi:10.1002/hbm.20048

- Anderson A.K, Christoff K, Stappen I, Panitz D, Ghahremani D.G, Glover G, Gabrieli J.D, Sobel N. Dissociated neural representations of intensity and valence in human olfaction. Nat. Neurosci. 2003;6:196–202. doi:10.1038/nn1001

- Bechara A, Damasio H, Damasio A. Emotion, decision making and the orbitofrontal cortex. Cereb. Cortex. 2000;10:295–307. doi:10.1093/cercor/10.3.295

- Blood A.J, Zatorre R.J, Bermudez P, Evans A.C. Emotional responses to pleasant and unpleasant music correlate with activity in paralimbic brain regions. Nat. Neurosci. 1999;2:382–387. doi:10.1038/7299

- Brett M, Anton J.L, Valabregue R, Poline J.B. Region of interest analysis using an SPM toolbox. In: Proc. 8th HBM, Sendai, Japan, June 2002.

- Darwin C. The expression of the emotions in man and animals. John Murray; London, UK: 1872.

- Davidson R.J, Jackson D.C, Kalin N.H. Emotion, plasticity, context, and regulation: perspectives from affective neuroscience. Psychol. Bull. 2000;126:890–909. doi:10.1037/0033-2909.126.6.890

- Ekman P, Friesen W.V. Facial action coding. Consulting Psychologists Press, Inc; Mountain View, CA: 1978.

- Evans A.C, Kamber M, Collins D.L, MacDonald D. An MRI-based probabilistic atlas of neuroanatomy. In: Shorvon S.D, Fish D, Andermann F, Bydder G.M, Stefa H, editors. Magnetic resonance scanning and epilepsy. Plenum Press; New York, NY: 1994. pp. 263–274.

- Fecteau S, Armony J, Joanette Y, Belin P. Priming of non-speech vocalizations in male adults: the influence of the speaker's gender. Brain Cogn. 2004;55:300–302. doi:10.1016/j.bandc.2004.02.024

- Fecteau S, Armony J.L, Joanette Y, Belin P. Judgment of emotional nonlinguistic vocalizations: age-related differences. Appl. Neuropsychol. 2005a;12:40–48. doi:10.1207/s15324826an1201_7

- Fecteau S, Armony J.L, Joanette Y, Belin P. Sensitivity to voice in human prefrontal cortex. J. Neurophysiol. 2005b;94:2251–2254. doi:10.1152/jn.00329.2005

- Fecteau S, Belin P, Joanette Y, Armony J. Amygdala responses to nonlinguistic emotional vocalizations. Neuroimage. 2007;36:480–487. doi:10.1016/j.neuroimage.2007.02.043

- Fitch W.T, Hauser M.D. Vocal production in nonhuman primates: acoustics, physiology, and functional constraints on “honest” advertisement. Am. J. Primatol. 1995;37:191–219. doi:10.1002/ajp.1350370303

- Friston K.J, Jezzard P, Turner R. Analysis of functional MRI time-series. Hum. Brain Mapp. 1994;1:153–171. doi:10.1002/hbm.460010207

- Friston K.J, Penny W.D, Glaser D.E. Conjunction revisited. Neuroimage. 2005;25:661–667. doi:10.1016/j.neuroimage.2005.01.013

- Gil-da-Costa R, Braun A, Lopes M, Hauser M.D, Carson R.E, Herscovitch P, Martin A. Toward an evolutionary perspective on conceptual representation: species-specific calls activate visual and affective processing systems in the macaque. Proc. Natl Acad. Sci. USA. 2004;101:17 516–17 521. doi:10.1073/pnas.0408077101

- Grandjean D, Sander D, Pourtois G, Schwartz S, Seghier M.L, Scherer K.R, Vuilleumier P. The voices of wrath: brain responses to angry prosody in meaningless speech. Nat. Neurosci. 2005;8:145–146. doi:10.1038/nn1392

- Grossman M, Smith E.E, Koenig P, Glosser G, DeVita C, Moore P, McMillan C. The neural basis for categorization in semantic memory. Neuroimage. 2002;17:1549–1561. doi:10.1006/nimg.2002.1273

- Handy T.C, Grafton S.T, Shroff N.M, Ketay S, Gazzaniga M.S. Graspable objects grab attention when the potential for action is recognized. Nat. Neurosci. 2003;6:421–427. doi:10.1038/nn1031

- Hauser M.D. Costs of deception: cheaters are punished in rhesus monkeys (Macaca mulatta). Proc. Natl Acad. Sci. USA. 1992;89:12 137–12 139. doi:10.1073/pnas.89.24.12137

- Hornak J, Rolls E.T, Wade D. Face and voice expression identification in patients with emotional and behavioural changes following ventral frontal lobe damage. Neuropsychologia. 1996;34:247–261. doi:10.1016/0028-3932(95)00106-9

- Lang P.J. The emotion probe. Studies of motivation and attention. Am. Psychol. 1995;50:372–385. doi:10.1037/0003-066X.50.5.372

- Lewis P.A, Critchley H.D, Rotshtein P, Dolan R.J. Neural correlates of processing valence and arousal in affective words. Cereb. Cortex. 2007;17:742–748. doi:10.1093/cercor/bhk024

- Morton E.S. On the occurrence and significance of motivation-structural rules in some birds and mammal sounds. Am. Nat. 1977;111:855–869. doi:10.1086/283219

- Morton E.S. Grading, discreteness, redundancy, and motivational–structural rules. In: Kroodsma D, Miller E.H, editors. Acoustic communication in birds. vol. 1. Academic Press; New York, NY: 1982. pp. 183–212.

- Nicastro N, Owren M.J. Classification of domestic cat (Felis catus) vocalizations by naive and experienced human listeners. J. Comp. Psychol. 2003;117:44–52. doi:10.1037/0735-7036.117.1.44

- Nichols T, Brett M, Andersson J, Wager T, Poline J.B. Valid conjunction inference with the minimum statistic. Neuroimage. 2005;25:653–660. doi:10.1016/j.neuroimage.2004.12.005

- Owren M.J, Rendall D. Sound on the rebound: bringing form and function back to the forefront in understanding nonhuman primate vocal signaling. Evol. Anthropol. 2001;10:58–71. doi:10.1002/evan.1014

- Rolls E.T. The orbitofrontal cortex. Phil. Trans. R. Soc. B. 1996;351:1433–1444. doi:10.1098/rstb.1996.0128

- Romanski L.M, Averbeck B.B, Diltz M. Neural representation of vocalizations in the primate ventrolateral prefrontal cortex. J. Neurophysiol. 2005;93:734–747. doi:10.1152/jn.00675.2004

- Russell J.A. A circumplex model of affect. J. Pers. Soc. Psychol. 1980;39:1161–1178. doi:10.1037/h0077714

- Sander K, Scheich H. Auditory perception of laughing and crying activates human amygdala regardless of attentional state. Brain Res. Cogn. Brain Res. 2001;12:181–198. doi:10.1016/S0926-6410(01)00045-3

- Scherer K. Vocal affect signaling: a comparative approach. In: Rosenblatt J, Beer C, Busnel M.-C, Slater P.J.B, editors. Advances in the study of behavior. vol. 15. Academic Press; New York, NY: 1985. pp. 189–244.

- Schirmer A, Kotz S.A. Beyond the right hemisphere: brain mechanisms mediating vocal emotional processing. Trends Cogn. Sci. 2006;10:24–30. doi:10.1016/j.tics.2005.11.009

- Talairach J, Tournoux P. Co-planar stereotaxic atlas of the human brain. Thieme; New York, NY: 1988.

- Wilkinson D, Halligan P. The relevance of behavioural measures for functional-imaging studies of cognition. Nat. Rev. Neurosci. 2004;5:67–73. doi:10.1038/nrn1302

- Winston J.S, Gottfried J.A, Kilner J.M, Dolan R.J. Integrated neural representations of odor intensity and affective valence in human amygdala. J. Neurosci. 2005;25:8903–8907. doi:10.1523/JNEUROSCI.1569-05.2005

- Worsley K.J, Friston K.J. Analysis of fMRI time-series revisited-again. Neuroimage. 1995;2:173–181. doi:10.1006/nimg.1995.1023
