Abstract

People are especially efficient in processing certain visual stimuli such as human faces or good configurations. It has been suggested that topology and geometry play important roles in configural perception. Visual search is one area in which configurality seems to matter. When either of 2 target features leads to a correct response and the sequence includes trials in which either or both targets are present, the result is a redundant-target paradigm. It is common for such experiments to find faster performance with the double target than with either alone, something that is difficult to explain with ordinary serial models. This redundant-targets study uses figures that can be dissimilar in their topology and geometry and manipulates the stimulus set and the stimulus–response assignments. The authors found that the combination of higher order similarity (e.g., topological) among the features in the stimulus set and response assignment can effectively overpower or facilitate the redundant-target effect, depending on the exact nature of the former characteristics. Several reasonable models of redundant-targets performance are falsified. Parallel models with the potential for channel interactions are supported by the data.

Keywords: redundant-target effect, similarity, systems factorial technology, configural, topology

People detect more quickly, and more accurately, a left parenthesis, when presented within a certain context, “( ),” than when presented alone, “(” (Pomerantz, Sager, & Stoever, 1977; Pomerantz et al., 2003). Similarly, people seem to better identify a nose, within the context of a face, than when the nose is presented alone (Tanaka & Farah, 1993, 2003; Tanaka & Sengco, 1997).1 This kind of result is counterintuitive from certain basic perspectives, given that context adds more details to the display and therefore distracts attention and/or increases processing load as well as possibly masking the target.

Also, in many such studies context is controlled such that by itself it provides no information with respect to the required response. Nonetheless, people exhibit better performance in the presence of certain contexts than with other contexts or with less context. What is so special about these facilitating contexts? What is special about faces and good figures that aids in their processing? According to one view, highly symmetrical (i.e., good) or highly learned (faces) figures are processed as gestalts, that is, as unified wholes (Garner, 1974; Palmer, 1999). Another related view suggests that combining features in a felicitous way may create emergent features, such as symmetry or closure.

On another front, it has been found in scores of experiments that increasing redundancy of features, each of which carries sufficient information for a correct response, can dramatically increase speed or accuracy in responses (e.g., Garner, 1974; Raab, 1962), sometimes beyond what is expected on the basis of ordinary parallel processing (Miller, 1982; Townsend & Nozawa, 1995). The traditional statistic for testing superiority of the redundant stimuli relative to a single stimulus is called the redundant-target effect (RTE). The critical contrast for the RTE compares mean response time (RT) on the faster of the two single-target conditions versus mean RT on the redundant-target condition. We should note initially that in some studies, such as the present one, feature search could actually be carried out, for instance, by identifying the entire figure of which it is a part. Nonetheless, given that much of the redundant-target literature is couched in terms of search constructs, we continue with that terminology except in cases in which an alternative perspective is needed.

Some clarification may be needed when adopting visual-search terminology—specifically, the term feature—to this article. Throughout its history, the term feature has been clouded by its many scattered meanings and by the many near synonyms to which it is linked, including property, attribute, dimension, aspect, level or value, part, component, and element. All refer to a subset of a whole stimulus; they are building blocks that can be reassembled in the proper configuration into that whole. Whole stimuli can be described in any number of ways: For example, a triangle can be described as three suitably arranged line segments, as three vertices, as one vertex plus one line, as an infinite number of points, and so on. Accordingly, these could all be candidates for the basic parts from which a triangle emerges. Additionally, a triangle has global features, such as size, closure, and symmetry, that cannot be localized to any subset or region of the stimulus but must be determined from its entirety.

For complex stimuli such as faces, one can think of more features, perhaps an infinite number. One might claim, for example, that the product of eye width times nose length divided by the mouth-to-ear size ratio is a feature. Given an infinity of such features, it is helpful to distinguish between physical features of the stimulus (those that can be measured explicitly) and those features that happen to be encoded in human perception and so have psychological reality. It would be useful if the literature used different terms to distinguish these two, but for now that is rare. It would likewise be helpful if the literature more accurately distinguished between physical similarity (objective feature overlap, etc.) and perceived similarity, but that too is a task for the future.

In this article, we use the word feature to refer mainly to physical properties. It is in this sense that we characterize as features the diagonal lines and └ shapes from which we construct our triangles and arrows. In doing so, we do not intend to imply or presume that these are necessarily the features used in human vision. Instead, we engage in a “what if” game whereby if these were the operative features, then certain predictions follow. In many of our experiments, those predictions are violated, implying that the diagonals and └s are clearly not the features attended to, which in turn supports the notion that other features, such as topological ones, are used in some conditions. In fact, more generally, we suspect that the actual features used by people are likely adaptive in nature, depending on many aspects of the stimulus set and environment.

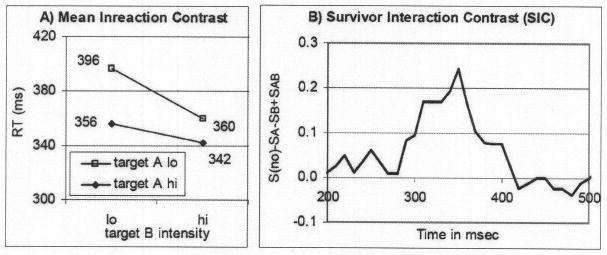

In this work, we hoped to learn more about configural perception and feature redundancy as well as their interplay by using the tools from systems factorial technology, a synthesis of RT methodologies from factorial approaches (e.g., Schweickert, 1978; Sternberg, 1969; Townsend & Ashby, 1982); redundant-targets designs (e.g., Egeth, 1966; Miller, 1982; Townsend & Nozawa, 1995), which are especially good with capacity assessment; and decisional stopping rules (e.g., Townsend & Colonius, 1997; Van Zandt & Townsend, 1993). Also, feature dependencies play an important theoretical role but are less directly addressed with our RT measures than with accuracy measures (see, e.g., Ashby & Townsend, 1986). Finally, Wenger and Townsend (2001) and Townsend and Wenger (2004) have proposed axioms concerning processing of configural objects that are expressed in terms of concepts and hypotheses from systems factorial technology and hence are testable using the latter's methodologies.

One of the major axioms, and the one emphasized here, concerns capacity interpreted in terms of speed of processing. The benchmark we use is based on measures of single features (dimensions, etc.) and how they should act when placed together to form a composite stimulus, if capacity is at the level we should expect if standard parallel processing is in operation (e.g., Townsend & Nozawa, 1995; Wenger & Townsend, 2000). A brief exposition of capacity and how we apply it here will be offered below. First, we need a bit more motivation from the relevant literature.

Pomerantz et al. (1977) provided a demonstration of a salutary effect of context that provides configural information. They showed that participants were faster in judging the direction of curvature of the left-hand member of a pair of parentheses such as “( ),” than they were in judging the curvature of the same member presented alone, “(.” This facilitation, referred to as the configural superiority effect, was even more apparent when they used a localization-of-oddity task (indicating the location of an odd target surrounded by distractors took 1,450 vs. 2,400 ms for the context and no-context conditions, respectively). Similar results were obtained with the same task and a different set of stimuli: Discriminating between orientations of diagonal lines with no context added took 1,884 ms. The addition of a context that provided no task relevant information (but contributed to closure or symmetry) reduced RTs to as little as 749 ms. Figure 1 illustrates the original, context, and composite (target plus context) displays of these experiments.

Figure 1.

An example of the stimuli used by Pomerantz et al. (1977) to produce a configural superiority effect. When participants had to localize the odd item in the original display (A), it took them 1,884 ms. When an informationless context (B) was superimposed on the original display, thus creating a new set (C), response times were reduced to as little as 749 ms.

In the aforementioned works of Pomerantz et al. (1977) and Tanaka and Farah (1993), the term context referred to the set of visual features (lines, face contours) that were presented on the display but were not the target features. The lexical definition of context, however, is broader: “the interrelated conditions in which something exists or occurs” (see http://www.merriam-webster.com/dictionary/context). Note that these conditions should not necessarily be salient to the participant and may include other components of the field of vision such as the computer monitor, participants' memory of past experience, and so on. Let us take stock of three types of context germane to our study:

Displayed context: the set of items that are presented on the screen with the target but are not targets. In visual search theories such items are often referred to as distractors (cf. Duncan & Humphreys, 1989; Treisman & Gelade, 1980; Wolfe, 1994). We, however, advise caution using the term distractor because it hints that performance may be hindered by the presence of the no-target item(s), whereas the phenomenon that we pursue in this article (i.e., configural superiority effect) is concerned with potentially beneficial effects of context. Clearly, the added lines that were used as context by Pomerantz et al. (1977) and appear in Figure 1 fall into the category of displayed context.

Nondisplayed context: items or features that are part of the stimulus set but are not currently presented. In a letter recognition task, when a person is asked to recognize an impoverished letter, say A, the rest of the alphabet letters, or a subset of the alphabet, may constitute the nondisplayed context. Many studies, including Townsend and Landon (1983) and more recently Rouder (2004), demonstrated how nondisplayed context factors, such as variations in set size, affect processing. More generally, from an information theory point of view, our perception of, say, a visual pattern is determined not only by the properties of the pattern but also by the set of implied alternatives—displayed and not displayed. For example, Garner (1970) argued that argued that “Good patterns have few alternatives” and that “the very best patterns are those which are unique, having no perceptual alternatives” (p. 42).

Response assignment: the assignment of stimuli to particular responses. The Simon effect (Simon, 1969) illustrates how the compatibility of responses to presented stimuli affects performance: RTs are faster when stimulus and response occur at the same location than when they do not, even if the stimulus location is irrelevant to the task. More recently, Grice, Canham, and Boroughs (1984, Experiments 3 and 4) provided evidence for the effect of stimulus–response compatibility in a redundant-target search task, much like the type of task we use in the current study (but with different stimuli). They presented target letters (H, S) either on the left- or on the right-hand side of a fixation point and showed that responses on single-target displays were faster (and consequently led to a larger RTE) when the target appeared on the same side as the required response. Response assignment, too, is well within the broader definition of the experimental context.

All three contextual factors play a role in determining RTs in cognitive tasks. Our goal is to arrange our contexts, given in terms of stimulus set and response instructions, so that the RTE and the configural superiority effect may either counter or facilitate each other. In implementing this strategy, we also vary what we call a higher order stimulus similarity based on emergent properties arising from features presented in the presence of other features. Some of Pomerantz et al.'s (1977, 2003) results, for instance, are interpreted by way of topological constructs, an approach supported in the research of Chen (2005; Chen et al., 2003). Our stimuli differ in their topological similarity. In our experiments we are not able to establish unequivocally that topological similarity alone is the critical factor: The important figural aspects also differ in terms of other geometric properties. Nonetheless, we present interstimulus measurements that definitely support the hypothesis that the operative similarity is of a more complex variety compared with the kind of similarity a simple pixel-by-pixel ideal observer would use. Context plays a vital role by varying similarity via the set of features composing stimulus patterns and by way of the stimulus–response assignments.

The configural superiority effect demonstrates how the presence of an irrelevant displayed context (irrelevant because the context by itself is not informative to the task at hand) facilitates the processing of another task-relevant source of information. The configural superiority effect, or any other effect induced by an irrelevant source of information, suggests that some sort of interaction occurs between the processing channels of the different information sources. Given Pomerantz et al.'s (1977) figures, the features seem to somehow integrate into a unified whole, a gestalt, which in turn makes the discrimination between the target and distractors more efficient. Wenger and Townsend (2001) provided a working definition for a configural (or gestalt-like) mode of processing in terms of capacity, architecture, and stopping rule. Systems factorial technology allows testing these properties of the cognitive system and consequently determining whether particular stimuli, such as the figures used by Pomerantz et al., are processed configurally.

Defining and Implementing the Capacity Function and Related Tests

Systems factorial technology is well suited to handle data acquired through a redundant-target search paradigm. The central manipulation involves comparison of RTs on trials in which a single-target stimulus (feature, item, dimensional value, etc.) is presented versus trials in which two (or more) target stimuli are presented. Participants receive instructions to respond affirmatively if any target appears and negatively only if no target appears. This basic paradigm can be alternatively called redundant target (because the response can be made if any target is perceived) or disjunctive target for the same reason.

Because of theoretical reasons discussed below (see especially the contributions of Miller and colleagues, starting in the 1970s— e.g., Miller, 1978), if performance on the redundant-target trials is sufficiently faster than that on single-target trials, strong conclusions may be drawn about processing structure. Even ordinary parallel processing, with targets engaging in a race, can lead to redundant-target superiority (see, e.g., Egeth, 1966; Raab, 1962). In fact, even somewhat limited capacity parallel race models can produce redundant-target superiority (Townsend & Nozawa, 1995; Townsend & Ashby, 1983, chap. 4).

However, something even stronger than ordinary parallel processing must be occurring if certain RT bounds are exceeded, as first suggested by Miller (1978; see also Colonius & Townsend, 1997; see caveats by Townsend & Nozawa, 1997). Miller called this special kind of processing coactivation in an operational fashion. Later, the concept received more rigorous mathematical treatment (e.g., Colonius & Townsend, 1997; Diederich & Colonius, 1991; Miller, 1982; Townsend & Nozawa, 1988, 1995). Townsend and Nozawa (1988, 1995) introduced the notion of capacity and showed that well-defined coactivation models always predicted super capacity—that is, higher speed than a standard parallel race model with independent channels predicts. These concepts are discussed below.

There have been two major types of implementations of this basic redundant-targets paradigm. In one variety, which we call targets and blanks, a stimulus dimension can either contain a target or be blank. For instance, Townsend and Nozawa (1988, 1995) presented a single dot in the left eye, a single dot in the right eye, dots in both eyes, or no dots in either eye. “Neither” trials were entirely blank and in a “single-target left” trial, there was only a blank on the right. In one of their conditions, the left and right targets could be binocularly fused but not so in the other. The participants received instructions to respond “yes” if either position contained a dot and “no” only if neither did. Still within this class, some studies favored a go/no-go paradigm, in which participants were instructed to respond “yes” if at least one target appears and to withhold response otherwise. For example, Miller (1982, Experiments 1 and 2) used a stimulus set made up of a visual signal (asterisk) and an auditory signal (780-Hz tone). Participants were instructed to respond as quickly as possible if either or both signals were presented but to withhold the response if neither signal appeared. Despite variations in the mode of responding on no-signal trials (respond “no” in the former example, withhold response in the latter), both designs fall into the same class because they share an important common feature: Namely, a particular location, or dimension, can take one of two values—a target signal or blank; it cannot contain a nontarget signal (distractor).

We call the second major variety of redundant-targets design targets and nontargets; this variety includes a distractor value when either target is absent. There appear to be many examples of this design in the literature. For instance, Egeth and Dagenbach (1991) used a visual search task in which two spatial locations could contain the letters X and O, with X defined as the target and O as the distractor. The participants were instructed to respond “yes” if either or both positions contained the target X (i.e., if the display is XO, OX, or XX). Otherwise (i.e., when OO is displayed), they had to respond “no.” Variations in the mode of responding on no-target trials may apply for this class as well. For example, Miller (1982, Experiment 3) used a go/no-go procedure in which participants were instructed to press a key when presented with either the letter X and/or the high pitch tone (targets) and to withhold response if presented with the letter O and a low tone (distractors).

Redundant-target paradigms can assist in quantifying critical processing mechanisms, for example, how capacity changes as workload is increased. Particularly in the first kind of design, in which on single-target trials there really is nothing in the alternative position (dimension, feature, etc.), the workload measure is especially meaningful. With this design, moving from a single target to two redundant targets, workload is increased because two channels or items are processed instead of one. Thus, the potential benefits of redundancy are pitted against the possible capacity (e.g., attention) draining aspects of having two entities rather than a single entity to handle.

In the second type of design (targets and nontargets), a finding of RTE, or stronger results as discussed below, could also be due to real capacity improvements in the redundant-target trials. For instance, suppose the features in a single-target pattern are processed in parallel and independently and that the redundant target is processed quickly as a good configuration, that is, coactively (Colonius & Townsend, 1997). In this case, an RTE will arise; in fact capacity will be judged as super, and an inference of coactivation or some other type of extraordinary processing can legitimately be made.

However, this targets and nontargets type of design allows other cogent interpretations. For instance, suppose that the single-target stimuli possess nontrivial similarity with the negative stimuli (i.e., the no-target stimuli, termed negative because they are mapped onto the “no” response). This is almost always the case. Then even if the redundant-target features are processed in a standard parallel fashion, RTE measures will indicate superiority over the single-target trials. Indeed, even super capacity may be the conclusion (see below for a more precise explanation of this concept). Yet, the culprit is the slowness of the single-target stimuli RTs, not the quickness of the redundant target RTs! We have to keep this important aspect in mind throughout our experiments, which are of the second (targets and nontargets) variety.

We now show how to measure capacity and test special important cases. Consider a redundant-target search task in which the stimulus set is created by factorially combining two dimensions (A and B) with two values each (target and no target). On a given trial, the two targets can be presented simultaneously (AB; a redundant-target trial), one at a time (A alone or B alone; a single-target trial), or not at all (no-target trial, including two distractors). Accuracy and RTs for the detection of the target(s) are collected.

For each of the four experimental conditions (redundant target, single-target A, single-target B, and no target), we calculate three functions: the probability density function, f(t), the cumulative distribution function, F(t) (or CDF), and the survivor function [S(t) = 1 − F(t)]. Whereas F(t) tells us the probability that a process is finished before or at time t, its complement, S(t), tells us the probability that the process is finished later than time t. Going one step further, the hazard function, h(t), gives the probability of finishing the task in the next instant of time, given that the task is not yet completed, and is formally specified as h(t) = t(t)/S(t).

Taking the amount of work that the system does at each moment, as assessed by h(t), and integrating it up to time t yields the integrated hazard function, H(t), which is a measure of the cumulative amount of work done up to that point of time. Townsend and Nozawa (1995) showed that H(t) = −ln[S(t)], such that H(t) can be derived from the empirical survivor function. Finally, by dividing the integrated hazard function of the redundant-target condition by the sum of the integrated hazard functions from the single-target conditions, we can estimate the capacity coefficient, C(t) = HAB(t)/[HA(t) + HB(t)].

This index measures capacity continuously at all times and is therefore a relatively fine-grained index of processing efficiency. The measure is founded on the basis of standard parallel processing, which assumes simultaneity of processing, the stochastic independence of individual channels, and each channel operating at the same efficiency regardless of how many other channels are activated (i.e., working). The latter assumption is called unlimited capacity. If the system has unlimited capacity, and if information from the two channels (A and B) is processed independently and in parallel, then the integrated hazard function for the double-target stimulus, HAB, equals the sum of the functions for the single-target stimuli A and B (HA + HB). If our observed C(t) is close to 1 for all reliable sample points, the system is said to be performing in an unlimited capacity. We cannot prove that the channels are independent just because C(t) = 1, but certainly the overall system efficiency is equivalent to the standard parallel model.

On the other hand, if presenting two signals simultaneously decreases the processing ability in each of the channels (relative to when only one signal is present), then the values of C(t) would be smaller than 1, indicating that the system has a limited capacity. A standard serial system would predict C(t) = 0.5 as would a (nonstandard) fixed-capacity parallel system, which assumes that a fixed amount of capacity is divided up among the working channels. Finally, if having two targets actually increases the efficiency of processing in each channel, then the values of C(t) would be greater than 1, indicating super capacity. Wenger and Townsend's (2001; also see Townsend & Nozawa, 1995) set of axioms on configural processing state that when true configural processing occurs in a facilitatory way, that is, C(t) > 1, measured capacity should be superior to standard parallel processing.

Whereas C(t) is a continuous measure of workload capacity, the Miller (1978, 1982) inequality establishes an upper bound of performance that Miller associated with ordinary parallel race processing. The Miller bound is formally written as FA, B(t) ≤ FA(t) + FB(t), where FA, B(t) is the CDF corresponding to the probability that a response occurred before time t given a double-target stimulus AB, and the CDFs FA(t) and FB(t) are, respectively, the probabilities of a response to occur before time t given that the stimulus contained either target A alone or target B alone. The inequality states that the probability that a response faster or equal to t is given to a redundant target cannot exceed the sum of probabilities for such responses given each target alone. Townsend and Nozawa (1995; see also Townsend & Wenger, 2004) showed that the presence of super capacity over a substantial interval implies a violation of Miller's inequality. Conversely, violation of the Miller bound implies super capacity, that is, C(t) > 1.

In the opposite direction from the Miller bound, Grice (e.g., Grice, Canham, & Gwynne, 1984) suggested a lower bound on performance. It is formally given by FA, B(t) ≥ MAX [FA(t), FB(t)], where FA, B(t), FA(t), and FB(t) are again the CDFs for the double-target, single-target A, and single-target B conditions. Violation of the Grice lower bound requires extremely limited capacity (Townsend & Nozawa, 1995; Townsend & Wenger, 2004).

Thus, if performance on redundant signals is worse than that of the faster of the two single signals, then the Grice inequality is violated, implying limited capacity of a rather strong degree. Note that when FA(t) = FB(t) = F(t), performance at the edge of the Grice bound occurs exactly when C(t) = 0.5, the prediction of the fixed capacity parallel model, or the standard serial models.

Recall that the RTE subtracts the mean of the redundant condition, say, RTA,B, from the mean of the fastest single-target condition, MIN(RTA, RTB). Failure to achieve an RTE, that is, MIN(RTA, RT B) is less than or equal to RTA,B, will occur only in the face of rather extraordinary limited capacity, much as with the Grice bound.

The facilitation of RTs in response to displays containing a good gestalt should be bigger than expected on the basis of the advantage given by standard parallel processing (i.e., simple unlimited capacity). In that case, we predict, C(t) values higher than 1 (i.e., super capacity) and, possibly, violations of Miller's inequality.

We used figures constructed of angles and oblique lines, which were shown by Pomerantz et al. (1977) to produce a noticeable configural superiority effect, as stimuli. Participants performed a redundant-target detection task, in which they had to detect the presence of features that were designated earlier as targets. If these features co act (or positively interact) to create a unified whole, then presenting two targets together should produce a marked RTE, even possibly superseding standard unlimited capacity parallel processing.

Similarity, Topology, and Architecture

Similarity

In their seminal article, Duncan and Humphreys (1989) suggested that the efficiency of visual search is determined by stimulus similarity. More specifically, they argued that “search efficiency decreases with (a) increasing similarity between targets and non-targets, and (b) decreasing similarity between non-targets themselves, the two interacting to scale one another's effect.” (p. 434). The similarity that we shall discuss in this article differs, however, in two major ways.

First, we focus on the similarity between an individually displayed item and other items that are in the stimulus set but are not concurrently presented (nondisplayed context), whereas Duncan and Humphreys's (1980) similarity refers to the resemblance between simultaneously presented items, targets and nontargets, that make up the displayed context. Second, we highlight higher order, possibly topological, similarity between members of the stimulus set, whereas in Duncan and Humphreys's study, topology did not play a significant role, as the targets and distractors (rotated letters L and T) fell within the same broad topological class. Recently, Chen (2005) provided a theoretical framework and compelling empirical evidence for the role of topology in visual perception. In the upcoming section we define topology and specify our predictions regarding the way it will affect performance.

In addition, similarity in regard to stimulus–response assignment will also be important. For instance, in a parallel race model, when presented with target-absent trials, one must exhaustively process information from all (two) channels. This, plus the time-honored finding that negative responses typically take longer than affirmative ones, predicts longest RTs on exhaustive processing, target-absent trials. However, in the event of an interaction of higher order similarity plus differential response assignment of similar stimuli to distinctive responses, we can predict a further interesting effect on the no-target trials. On the one hand, a gestalt nontarget might be perceptually processed rapidly, but if it is assigned to a different response from a similar stimulus, processing could be slowed down. The consequence of these countervailing forces could leave the “no” responses at an intermediate speed.

Although topology is not the only higher order similarity possibly active in this work, it has been sufficiently studied as to render it a viable concept. We discuss some of the evidence for its validity and pertinence to the present investigation.

The Topological Connection

Topology deals with properties of geometric figures that are not changed by homeomorphism, such as stretching or bending. Two objects are topologically equivalent if one can be deformed into the other without cutting it apart or gluing pieces of it together. We can bend a rubber ring, stretch it, rotate it, change its shape and orientation, yet its topological properties (such as connectivity and number of holes) remain invariant. A circle and a triangle are therefore topologically equivalent (both have one hole), whereas the digits 0 and 8 are not (one vs. two holes, respectively). By the same token, any two triangles are topologically equivalent, even if they are rotated in space such that one is facing left and the other is facing right (see the redundant- and no-target stimuli in Experiment 1).

Chen, Zhang, and Srinivasan (2003) provided evidence for the importance of topology in visual perception. They showed that honeybees rapidly learned to discriminate patterns that were topologically different (e.g., the shapes O and S) and successfully generalized this knowledge to other novel patterns. By contrast, discrimination of topologically equivalent patterns (e.g., a ring and a hollow square), carefully chosen to control for area, luminance, and spatial frequency, was learned more slowly and not as well. These results led them to argue that “although the global nature of topological properties makes their computation difficult, topology may be a fundamental component of the vocabulary by which visual systems represent and characterize objects” (Chen et al., 2003, p. 6884).

Chen (2005) went on to provide a comprehensive theory that highlights the role of topological properties in visual perception and argues that global topological perception precedes the perception of other properties. One of his many experiments showed that when the luminance, spatial frequency, and perimeter length (as well as other factors that are commonly considered in the study of visual perception) are controlled, two visual stimuli that are topologically different with respect to holes, such as an S-like figure and a ring, are more discriminable to humans in a near threshold same–different task than are other pairs of figures that are topologically equivalent, such as a disc and a square or a disc and an S-like shape.

Pomerantz (2003) supported the view that topological properties might be the effective primitives of perception by demonstrating how topological differences can contribute to the emergence of configural superiority effects. In one illustration he presented a panel of three identical black rings and a fourth, larger ring (that differed in diameter, but not in topology). Adding an identical context to all four rings by superimposing a black disc in the center of each ring changed the topological properties of the small rings: The disc completely covered the central space, such that the rings no longer had a hole inside. The disc did not fully cover, however, the central space of the larger ring, and so that fourth item was constructed of the concentric black disc, white ring, and black ring (from the center out). Detecting the odd item in the composite display (with context) was easier than in the original display (four rings, no context added), presumably because of the topological difference between the odd item and the three (completely black and with no holes) circles. The original display and the context and the composite (original plus context) displays are illustrated in Figure 2.

Figure 2.

Detecting the odd item in the composite display (C, with context) is easier than in the original display (A, four rings, no context added), presumably because of the topological difference—that is, number of holes—between the odd item and the three completely black discs (Pomerantz, 2003).

Given the seemingly fundamental role of topological properties in visual perception, we predict that topological (dis)similarity will be instrumental in determining the order of RTs for the different figures. In our case, topologically similar items would suffer relatively slow RTs if they are assigned to different responses, because discrimination between them would be difficult and require additional processing time. On the other hand, topologically similar items that are assigned to the same response (especially if they are topologically different from the other items that may be presented) would be processed quickly, as no discrimination is needed.

Architecture

Estimating capacity is only one leg of systems factorial technology. The other leg of the methodology typically enables us to uncover the architecture and stopping rule of the processing system by way of manipulating the saliency of each target. The architecture-identification facet, independent of the capacity assessment (and therefore not plagued by the usual ability of limited capacity parallel models to mimic ordinary serial processing), requires the assumption of selective influence by carefully chosen experimental variables. That is, two distinct experimental variables must distinctively affect separate underlying subsystem's processing times (see, e.g., Dzhafarov, 1997; Sternberg, 1969; Townsend, 1984). In the present experiments, salience of the features was manipulated in Experiments 2, 3, and 4. Unfortunately, selective influence was not satisfied, so the associated tests could not be carried out. This manipulation, as well as its theoretical implications, are explained in the discussion section of Experiment 2, where it was first implemented. The capacity measurements were implemented within and over the salience manipulations with no differences found in any of the five experiments. Therefore, we do not report results in the distinct factorial conditions. Finally, we present some potential implications of the failure of selective influence for perception of our present stimuli and an interesting modest side experiment we carried out on this problem.

Experiment 1

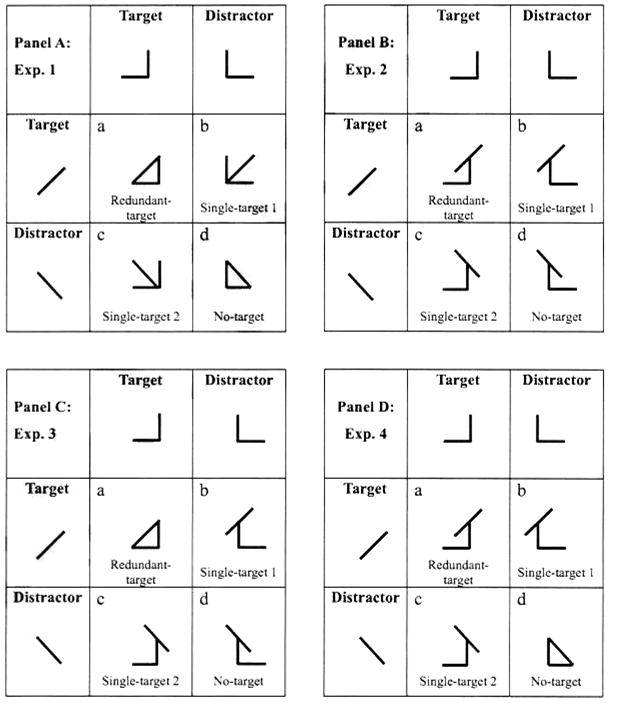

In Experiment 1, the two single-target stimuli were constructed such that they shared the same topology and were assigned to the same response (see Figure 3A, Quadrants b and c). The redundant-and no-target stimuli were topologically equivalent to each other (Quadrants a and d) but were assigned to different responses. Also, they can be considered good configurations, as they both possessed the topological property of closure. Comparing performance between redundant-target and single-target conditions would give us a sense of which of two factors, when combined, governs processing.

Figure 3.

The stimulus sets used in Experiments 1–4 (A–D, respectively). Each stimulus, in each of the experiments, was made up of a diagonal line (left–right) and an └ (normal–mirror image). For each component, one value was defined as a target and the other as a distractor, such that when combined factorially they produced redundant-target (a), single-target (b and c), and no-target (d) displays. Given that the task was target detection, the stimuli in Quadrants a, b, and c call for a “yes” response, whereas the stimulus in Quadrant d calls for a “no” response.

Fast responses on redundant-target trials emphasize the role of redundancy gain, whereas fast responses on single-target trials imply that similarity, possibly topological similarity, among set items is at least as important. Additionally, deciding between these two will help in the resolution of another fundamental issue: Dominance of the topological similarity implies holistic processing (because topological similarity among items is determined by their overall shape), whereas redundancy gain can be obtained if the stimuli are processed on a parallel, feature-by-feature basis. Of course, the stimuli in Figure 3 differ in more than just their topology. We address this issue in the Results and Discussion section of Experiment 1.

Method

Participants

Twenty-four undergraduate students from Indiana University participated in the study in exchange for partial course credit. All had normal or corrected-to-normal vision.

Stimuli and procedure

The stimulus set was based on the figures used by Pomerantz et al. (1977). We used the factorial combination of a diagonal line (either left, “\”, or right, “/”) and a right angle (open either to the right, └, or to the left). The stimuli used are shown in Figure 3A and were presented in black over a gray background. The viewing distance was approximately 50 cm from the center of the screen, such that stimuli subtended a visual angle of 1.5°. On each trial, a fixation cross appeared in the center of the screen for 500 ms, followed by a blank screen (also 500 ms). Then, a single stimulus was presented in one of four possible locations around the center (trial-to-trial spatial uncertainty prevented adaptation and attending to a particular location), until a response was made. Participants had to respond affirmatively, by pressing the right-side mouse key, if either of the features that were defined as targets appeared. Otherwise, if only distractors appeared, they were to produce a negative response by pressing the left key.

Response assignment was counterbalanced over participants. In the current experiment, “/” and the mirror image of └ were defined as targets. Therefore, when either or both appeared (see Figure 3A, Quadrants a, b, and c), participants had to respond “yes.” If only distractors appeared (see Figure 3A, Quadrant d), then the correct response was “no.”. Each participant performed in a practice session consisting of 32 trials, followed by four experimental blocks of 320 trials each (amounting to a total of 1,280 experimental trials). The stimuli were presented on a Dell 15-in. computer screen, with a 1024 × 768 resolution, controlled by an IBM compatible (Pentium 3) personal computer running DMDX software (Forster & Forster, 2003).

Results and Discussion

We excluded data from 3 participants who had an error rate of more than 10% (in fact they had much higher error rates of 24%, 46%, and 41%, which likely reflect nonconformity with the instructions). Error rate was low for all other participants and across the four conditions (3.1% errors across all participants, with only 2 participants averaging 7% each and all the others below 5%), and no RT–accuracy trade off was observed. Analysis of data was restricted to correct responses. Mean RTs for each participant and averaged across participants are presented in Table 1. RTs on single-target trials were fastest, on average (448.3 and 482.7 ms). RTs in the redundant- and the no-target conditions were slower, averaging 541.7 and 552.7 ms, respectively. This same ordering appeared for most participants.

Table 1. Mean Reaction Times (in Milliseconds) for the Redundant-, Single-, and No-Target Conditions of Experiment 1.

| Participant | Redundant target | Single target 1 | Single target 2 | No target | Fastest single target | RTE | t | df | P |

|---|---|---|---|---|---|---|---|---|---|

| 1 | 454 | 372 | 463 | 521 | 372 | −82 | −7.86 | 610 | |

| 2 | 448 | 427 | 478 | 515 | 427 | −21 | −2.07 | 634 | .039 |

| 3 | 414 | 338 | 345 | 401 | 338 | −76 | −11.50 | 565 | |

| 4 | 612 | 517 | 611 | 634 | 517 | −95 | −6.32 | 594 | |

| 5 | 669 | 575 | 596 | 645 | 575 | −94 | −5.04 | 592 | |

| 6 | 646 | 512 | 598 | 696 | 512 | −134 | −6.82 | 561 | |

| 7 | 468 | 422 | 445 | 522 | 422 | −46 | −3.50 | 604 | |

| 8 | 434 | 334 | 353 | 421 | 334 | −100 | −11.84 | 506 | |

| 9 | 491 | 416 | 421 | 456 | 416 | −75 | −6.75 | 573 | |

| 10 | 659 | 504 | 482 | 603 | 482 | −177 | −12.73 | 604 | |

| 11 | 509 | 405 | 393 | 489 | 393 | −116 | −8.73 | 624 | |

| 12 | 534 | 393 | 398 | 511 | 393 | −141 | −13.29 | 586 | |

| 13 | 643 | 555 | 629 | 644 | 555 | −88 | −5.24 | 576 | |

| 14 | 498 | 399 | 439 | 551 | 319 | −179 | −9.14 | 631 | |

| 15 | 488 | 429 | 418 | 515 | 418 | −70 | −7.42 | 629 | |

| 16 | 568 | 474 | 543 | 580 | 474 | −94 | −7.46 | 608 | |

| 17 | 527 | 452 | 465 | 463 | 452 | −75 | −2.80 | 585 | .005 |

| 18 | 539 | 432 | 464 | 524 | 432 | −107 | −8.45 | 571 | |

| 19 | 564 | 436 | 441 | 573 | 436 | −128 | −11.04 | 624 | |

| 20 | 463 | 368 | 441 | 583 | 368 | −95 | −1.76 | 609 | .079 |

| 21 | 748 | 655 | 714 | 760 | 655 | −93 | −4.79 | 596 | |

| M | 541.7 | 448.3 | 482.7 | 552.7 | 442.4 | −99.3 |

Note. Redundant-target effect (RTE) is calculated as the difference between mean reaction time on the fastest single-target condition versus mean reaction time in the redundant-target condition. For both averaged and individual data, responses on single-target trials were faster than responses on redundant-target trials (resulting in negative RTE).

Recall that the critical contrast for the RTE is made by comparing mean RT on the faster of the two single-target conditions to mean RT on the redundant-target condition. Strikingly, all 21 participants in this experiment had slower RTs in the redundant-target condition, thus exhibiting a negative RTE (t test values and significance levels are presented in Table 1). All participants except for 3 exhibited a highly significant negative RTE (p < .001), documenting relatively slow responses when two targets were present. Even these 3 exhibited a significant, or close to significant (.001 < p < .1), negative RTE, as illustrated in the left panel of Figure 4, where each bar corresponds to the RTE of an individual participant and all bars (i.e., all participants) are below zero.

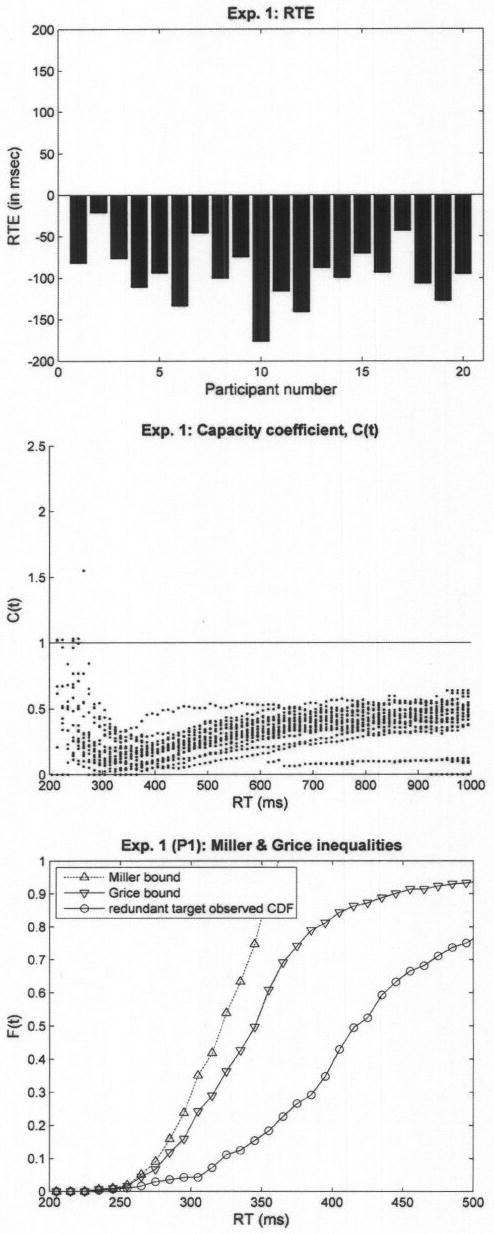

Figure 4.

Results of Experiment 1. The left panel presents the redundant-target effect (RTE) calculated individually, with each bar corresponding to a single participant. The middle panel presents C(t) values pooled across participants. The right panel presents the Miller and Grice bounds for a typical participant (P1). The uppermost dashed line is the calculated (not observed) cumulative distribution function (CDF) for the sum of the two single-target CDFs, which serves as Miller's bound; if the redundant-target CDF exceeds this line, then the Miller inequality is violated. Violations of the lower, Grice, bound occur if the redundant-target CDF goes below the CDF of the faster of the two single-target conditions. The Miller inequality was not violated at any point t, whereas the Grice inequality was violated across almost the whole time range.

Note that we analyze data at the individual level, not across participants (although we report group means in the table and in the results sections). Different individuals may be faster to respond on single-target 1 (see Figure 3) trials than on single-target 2 (see Figure 3) trials (e.g., Table 1, Participants 1 and 2), whereas others may be faster on single-target 2 trials (e.g., Table 1, Participants 10 and 11).

The participants responded faster on single-target trials likely because the two look-alike figures that contained only a single target feature were mapped into the same response and no discrimination had to be made between them. That is, when presented with an arrow-like shape, the participant could immediately provide a positive response (indicating the presence of a target), without the need to make a more difficult discrimination of which arrow was present. However, the two isosceles triangles that constitute the redundant- and the no-target displays belong to the same topological class yet call for different responses. To respond accurately, participants had to discriminate between these two closed figures, causing in turn slower responses.

The prediction from the literature of faster performance on redundant-target trials was clearly not confirmed. Paradoxically, this absence of the RTE may signal the effect of holistic processing. The results, and the reports of our participants, suggest that the features of diagonal line slope and └ orientation were almost meaningless by themselves and therefore also the concepts of “redundant-,” “single-,” and “no-target.” Rather than looking for these components, participants assigned the stimuli in Quadrants a–c of Figure 3A as wholes to the “yes” response and the stimulus in Quadrant d to the “no” response. From that moment on, no processing of the constituting features was necessary.

We further analyzed the data using the tools provided by Townsend and Nozawa (1995). The middle panel of Figure 4 shows the capacity coefficient, C(t), pooled across individual participants [we estimated C(t) for each individual, then plotted all values on the same scatter plot, so it is really a collection of individual data]. Recall that C(t) values lower than 1.0 imply a limited capacity in the sense of the comparison of double-to-single target features. Most C(t) values in the current study lie below 0.5, suggesting that the system had a severely limited capacity. Further support for the capacity limitation is evident in the Miller and Grice bounds: No violation of Miller's race inequality was observed, whereas the Grice bound was violated for almost all time bins (see Figure 4, right panel).

In summary, the results of Experiment 1 showed that processing was fastest for the single-target conditions and slower for the redundant-target (as well as for the no-target) condition. Hence, the well replicated redundancy gain was not observed.

Possible factors that determine the efficiency of processing are the topological similarity among items and the response assignment. When presented with the topologically equivalent arrow shapes of the single-target conditions, the participant could immediately provide a positive response (indicating the presence of a target), without the need to make the more difficult discrimination of which arrow had appeared. However, the two triangles that constitute the redundant- and the no-target displays belong to the same topological class yet call for different responses. To respond accurately, participants had to discriminate between the two closed figures, causing slower responses. Recall that C(t) measures relative efficiency of redundant-to-single target. In the present case, the latter were fast and the former were slow, thus exhibiting the extreme limitations in capacity. The redundant-and the no-target stimuli may differ from the single-target stimuli in more than one aspect. However, they clearly differ in their topological properties, making topology a sufficient account for the observed violation of the RTE.

Is it possible that some type of lower order similarity could account for our results in Experiment 1? We have conducted an ideal observer analysis (cf. Geisler, 2003) to show that a different type of similarity, based on a pixel-by-pixel overlap across stimuli, yields similarity ratings that fail to account for the observed order of RTs for the different experimental conditions, making topological similarity a strong contender. These models are simple in not using relational or topological structure and yet are nontrivial in that they form the basis of ideal observer predictions, which form a strong type of optimal processing.

We provide a brief description of our procedures here (a more detailed description of the ideal observer analysis and its results can be found in Appendix A, which is available in the online supplementary materials). In implementing the ideal observer approach, we presented an optimal Bayesian decider with a noisy image of one of the four stimuli. The decider then rates the degree of pixel-by-pixel similarity among stimuli and chooses the item from the stimulus set that best matches the input pattern (by maximizing the cross correlation). We repeated this procedure many times and across various levels of noise. The ideal observer ranked the redundant-target stimulus (see Figure 3A, Quadrant a) as being most similar to single target 1 (see Figure 3A, Quadrant b), then to single target 2 (see Figure 3A, Quadrant c), and as least similar to the no-target stimulus (see Figure 3A, Quadrant d). This outcome is straightforward and can be predicted by looking at the four stimuli and eyeballing the overlap between their line segments. Thus, a pixel-by-pixel (nontopological) similarity cannot explain why participants would respond faster on single-target trials compared with redundant-target trials.2

Experiment 1 thus indicates that the effects of higher order similarity, along with response assignments, between the displayed item and the other items that made up the stimulus set but were not simultaneously presented were stronger than the effect of target redundancy. We follow up on this inference in the next experiment.

In Experiment 2, we created stimuli that belong to the same topological class. The effect of higher order similarity should vanish, and instead we expected to find the ordering that is generally observed: faster responses to redundant-target trials (redundancy gain as a result of statistical facilitation), followed by single-target trials, with the no-target trials having the slowest RTs (the no-target display requires the observer to exhaustively search both components). In fact, if the stimuli are visually interpreted as separable and independent featural combinations, then responses to the double-target trials should be as fast as standard parallel processing, that is, C(t) = 1 (i.e., unlimited capacity) approximately. If the double-target stimulus is seen as a unique positive response gestalt then perhaps C(t) > 1, indicating super capacity. Or, even simply possessing both features completely distinct from the “no” stimulus might render super capacity as a result of lessened interference.

The stimuli are presented in Figure 3B. Note that none of the items are more closed or symmetrical than the others. Note also that the four stimuli are matched for other topological properties, such as the number of terminators and the types of intersections present. C(t) values are expected to be around 1.0, or slightly lower if capacity is moderately limited.

Experiment 2

Method

Participants

Four undergraduate students from Indiana University were paid to participate in the study. Twenty-four additional undergraduate students performed in exchange for partial course credit. All had normal or corrected-to-normal vision.

Stimuli and procedure

As in Experiment 1, we used the factorial combination of a diagonal line (either left, “\”, or right, “/”) and a right angle (facing either left or right), with “/” and the mirror image of └ defined as targets. When either target, or both, appeared (see Figure 3B, Quadrants a, b, and c), the participant had to respond “yes.” If only distractors appeared (see Figure 3B, Quadrant d), then the correct response was “no”.

The procedure followed closely that of Experiment 1, with one exception: To investigate the architecture of the system (serial, parallel, coactive), we manipulated the intensity of each component (oblique line, └) to be either high or low. To construct the stimuli in the high intensity condition, we used the same black line that was used for the stimuli in Experiment 1. To construct the low intensity stimuli, we reduced the figure-to-background contrast: Using Microsoft Painter, we set the hue, saturation, and luminance values of the stimuli to 160, 0, and 226, respectively. These values yielded a pale gray line that was hardly detectable over the grey background. We use the notation HH to indicate a stimulus with high saliency in both the first (oblique line) and the second (right angle) components. We use LL to indicate that both features of a stimulus are of low salience. Similarly, we use HL and LH to indicate stimuli with one highly salient feature and one low salient feature, respectively.

Results and Discussion

We excluded data from 2 participants who had an error rate of more than 10%. Error rate was low for all other participants and across the four conditions (3.6% errors across all participants, with only 3 averaging more than 5%), and no RT–accuracy trade off was observed. Analysis of data was restricted to correct responses only. On average, responses on redundant-target trials were fastest (575.5 ms). The two single-target conditions were slower, with 602.5 (single target 1) and 634.8 (single target 2) ms, and the no-target condition appears to be slowest, averaging 708.5 ms across all participants. RTE was calculated, for each individual, as the difference between mean RT on the faster of the two single-target conditions and mean RT on the redundant-target condition. The left panel of Figure 5 illustrates the observed RTEs from Experiment 2 as bar plots, where each bar corresponds to the RTE of an individual participant. Out of 26 participants, 17 exhibited positive RTEs, nine of which were statistically significant at p < .05. As opposed to Experiment 1, only 5 participants exhibited significant (p < .05) negative RTEs.

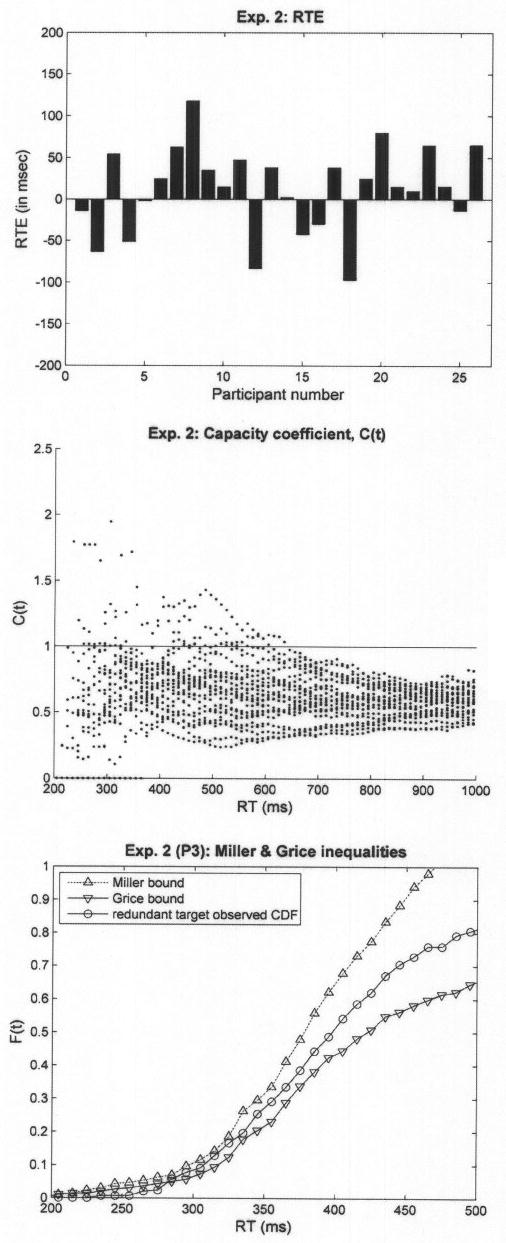

Figure 5.

Results of Experiment 2. The left panel presents individual redundant target effects (RTEs), where each bar corresponds to a single participant. The middle panel presents C(t) values pooled across participants. The left panel presents the Miller and Grice bounds for a typical participant (P3). The observed cumulative distribution function (CDF) for the redundant-target condition lies between the two bounds, suggesting that capacity was neither limited nor super.

The results of Experiment 2, when compared with those of Experiment 1, are noteworthy. As predicted, when topological similarity across the four experimental conditions was equated, the RT advantage of single-target trials that was observed in Experiment 1 vanished. Instead, for most participants, RTs were ordered in a fashion similar to that observed in many visual search studies, including ours (e.g., Townsend & Nozawa, 1995): RTs on redundant-target trials were the fastest, then the single-target trials, and finally the no-target trials.

The stimuli in this experiment were constructed as members of the same topological class such that no stimulus was more con figural than any other ( i.e., all four types of stimuli had more or less the same spatial relations between the oblique line and the angle; see Figure 3B). None of the stimuli had the property of closure, nor was any one of them more symmetrical than any other, so members of the “yes” stimulus set were topologically and geometrically like the “no” stimulus.

If processing is a standard parallel race (i.e., unlimited capacity, independent), then C(t) = 1 and a notable, but not huge, RTE will be found. If the similarity of the single-target stimulus patterns to the negative (no-target) patterns increases single-target RTs, then it could be discovered that C(t) > 1, indicating super capacity, depending on how fast the double-target condition is. Such a slowdown of the single-target performance could be at the featural or configural level, with the latter being compatible with Experiment 1 and our subsequent findings. Thus, a finding of super capacity might mean only that the single-target data are slowed in some way by the presence of the accompanying distractor feature, rather than that the redundant target is phenomenally efficient (cf. Grice, Canham, & Boroughs, 1984; Miller, 1991). On the other hand, if 0.5 < C(t) < 1, then speed is not as good as standard parallel racing (indicating moderate limited capacity) but is better than what the Grice bound or failure to find any RTE would allow.

The middle panel of Figure 5 shows the capacity coefficient, C(t), pooled across individual participants. Concomitantly for most participants, the CDF of the redundant-target condition fell within the bounds set by Miller's and Grice's inequalities. An example from the data of a typical participant (Participant 3) is provided in the right panel of Figure 5.

A few observers exhibited a modest tendency toward super capacity, but most of the data indicated moderately limited capacity with a solid cluster of points less than C(t) = 0.5, indicating severe capacity loss per channel. Hence, the fact that the massive bulk of the data lies substantially below C(t) = 1 suggests that decisive support for parallel, independent, unlimited capacity feature processing is untenable.

The hypothesis that a large decrement in single-target efficiency arising from similarity with the nontarget stimulus inevitably causes super capacity is also falsified. And the hypothesis that the double-target stimulus forms a better gestalt than the single-target patterns under these conditions is also disconfirmed. Below we interpret these findings in light of our entire corpus of results from all five experiments.

The analyses of Experiment 2 were performed on data pooled across different levels of stimulus saliency. Manipulating the saliency of the features changed the stimulus set. Each factorial condition (redundant-, single 1, single 2, and no-target) was subdivided into four new factorial combinations (HH, HL, LH, and LL). Thus, stimuli could have been black, half black–half gray, or all gray. Acknowledging the effect of other items in the stimulus set (formerly defined as nondisplayed context) on processing, we tested whether the same set of geometrical shapes—but with no manipulation of saliency—would yield the same results. In Appendix B (see the online supplementary materials), we report the results of an additional analysis and a new experiment (Experiment 2B) revealing that the additional manipulation of salience did not significantly affect performance.

In summary, the first two experiments are compatible with our general notions regarding the contextual importance of higher order similarity interacting with response assignment, although Experiment 2 by itself is perhaps open to certain feature processing interpretations. Experiments 3 and 4 further pursued this line of thought. The stimuli sets were almost identical to that of Experiment 2, with a single exception: Three of the figures remained topologically similar to each other, but the fourth item differed; its features were spatially arranged to create a closed form (triangle).

In Experiment 3 the closed form included the redundant-target stimulus and in Experiment 4 it included the no-target stimulus. Figures 3C and 3D present the stimuli that were used in Experiments 3 and 4, respectively. Thus, in these two experiments, we expect the topological (or higher order) dissimilarity of “no” and “yes” stimuli to facilitate performance. Items that are topologically different and assigned to distinct response classes should yield fast responses. So, in Experiment 3 (see Figure 3C), we predicted that RTs on redundant-target trials (Quadrant a) would be faster than on single-target trials (Quadrants b and c). All three displays were assigned to the same response (“yes”), but although the former is topologically unique, the stimuli in Quadrants b and c share the same topological properties as the no-target stimulus in Quadrant d, which is assigned to a different response (“no”). Participants can quickly respond to the stimulus in Quadrant a but need to make an extra distinction when presented with the stimuli in Quadrants b, c, or d, which should result in slower RTs. We not only predicted responses on redundant-target trials to be faster than on single-target trials but also expected the facilitation to be greater than that observed in Experiment 2 (where all displays belonged to same topological class). Operationally, we expect to discover C(t) values higher than 1.

In Experiment 4 (see Figure 3D), on the other hand, the closed form contained the no-target display, whereas all other displays (see Quadrants a, b, and c) shared a common topological structure. Here, the single targets are dissimilar from the “no” pattern as is the double-target stimulus. In that case, if the nontarget (“no”) stimulus is not interacting with processing of the positive stimuli, then comparing RTs on redundant-target trials versus single-target trials should yield results equivalent to those observed in Experiment 2 (because the same stimuli in Quadrants a, b, and c were used in both experiments; compare Figures 3B and 3D).

Of particular interest is the no-target stimulus in Experiment 4 (see Quadrant d of Figure 3D). It is topologically distinct from the others and is uniquely assigned to its (“no”) response. In lieu of other considerations, this alone should yield responses that are at least as fast as any other condition. However, suppose the information in the “no” stimuli is processed by way of our operationally defined features. Then, the “no” stimulus features must be processed exhaustively. If those features in “yes” stimuli are processed in a terminating fashion (i.e., “stop when sufficient target information is accrued for a correct response”), then the sheer topological similarity influence would be counteracted by the termination dynamic, causing “no” trials to take longer than the “yes” trials.

Experiments 3 and 4

Method

Participants

Six undergraduate students from Indiana University were paid to participate in Experiment 3, and 6 others in Experiment 4. An additional 24 undergraduate students performed in each of the experiments in exchange for partial course credit. All had normal or corrected-to-normal vision.

Stimuli and procedure

The stimuli and procedure followed closely those used in Experiment 2, with one exception: As in Experiment 2, we manipulated the presence versus absence of the line target, the angle target, and their saliency. However, as depicted in Figures 3C and 3D, three of the figures remained topologically similar to each other, whereas the features of the fourth item were spatially arranged to create a closed form (triangle). In Experiment 3 this closed form included the redundant-target stimulus and in Experiment 4 it included the no-target stimulus.

To create the desired sets of stimuli, we slightly lowered the vertical position of the diagonal for the stimulus that creates the triangle compared with its height for the other three stimuli. This may introduce a new source of information—the height of the diagonal—that is associated with whether a stimulus is a triangle and so may make it hard to ascribe the results to triangularity or any topological property. A partial solution was obtained by introducing trial-to-trial spatial uncertainty throughout all of our experimentation. Practically, this means that the displayed item could be presented in one of four possible locations around the center of the screen. Hence, participants could not predict the location of the next display, nor could they focus their attention or foveate that particular location. Consequently, they could not fixate on one feature in order to quickly estimate its vertical distance from the other feature.3

Results and Discussion

Two participants in Experiment 3 and 4 in Experiment 4 had error rates of more than 10% and were excluded from the analysis. Mean RTs, RTEs, and C(t) values for all other participants, both at the individual and at the group level, are pooled across the factorial conditions HH, HL, LH, and LL. In Experiment 3, mean RT on the redundant-target trials was by far the fastest (470.9 ms). The two single-target conditions generated substantially slower RTs (547.1 and 589.1 ms), and the no-target condition generated substantially slower RTs (670.4 ms). The redundancy effects in Experiment 3 are overwhelmingly positive and counter the results of Experiment 1. Out of 28 participants, 27 exhibited a positive RTE (as evinced by the positive bars in the upper left panel of Figure 6), with 23 of them showing a statistically significant effect at p < .05.

Figure 6.

Results of Experiments 3 and 4. The left panels present redundant-target effects (RTEs) calculated individually, with each bar corresponding to a single participant. The right panels presents C(t) values pooled across participants.

The upper right panel of Figure 6 illustrates the capacity coefficient values observed in Experiment 3. Responses on the topologically unique redundant-target displays were much faster than on the single-target displays. Indeed, C(t) values substantially exceed 1.0, indicating super capacity. In fact, C(t) was sufficiently greater than 1 so that Miller's inequality was violated for most participants.

The results from Experiment 4 were different from those of Experiment 3. First, RTs on redundant-target trials in Experiment 4 were only slightly faster (459.5 ms) than on single-target trials (473.1 and 483.3 ms). Individual RTEs for Experiments 4 are plotted at the lower left panel of Figure 6 and show the same trend as the averaged data. Furthermore, C(t) values were typically around 0.6–0.7 and hardly ever over 1 (see lower right panel). This finding mirrors that of the positive stimuli in Experiment 2, as both redundant- and single-target displays (i.e., “yes” response trials) belonged to the same topological class and were therefore processed roughly at the same speed.

Responses for the topologically distinct “no” item in Experiment 4 were the slowest (529.4 ms on average). That the “no” stimulus of Experiment 4 was, unlike that of Experiment 2, topologically distinct from the “yes” stimulus patterns failed to produce fast “no” responses. An exhaustive search of information in target-absent trials is sufficient to account for the slow RTs, and such an explanation is consonant with piecemeal feature processing. However, given our other results that are so supportive of massive effects of interpattern similarity, we doubt the latter explanation is the best one. Other causes could supplement or replace this dynamic, such as the general tendency of people (e.g., as suggested by Clark & Chase, 1972) to take longer to make virtually any type of negation, as opposed to affirmation, decision.

The findings from Experiments 1–4 support, in general, our claim that response latencies in a redundant-target search task, using Pomerantz et al.'s (1977) figures as stimuli, are predominantly determined by high order (possibly based upon topological properties) similarity. In these experiments, an oblique line and an angle were combined to create four possible stimuli in each of the experiments. By varying the spatial arrangement of the two features such that they would sometimes form topologically equivalent stimuli and sometimes form topologically distinctive stimuli, in conjunction with their response assignment, we were able to systematically manipulate the ordering of RTs observed for the redundant-, single-, and no-target conditions. Nonetheless, it appears that classical notions of the importance of stopping rule cannot entirely be ruled out since the “no” responses in Experiment 4, though highly dissimilar from the positive stimuli, took significantly more time to process than the latter.

Could the higher order similarity referred to be topological in nature, at least as a sufficient cause? Logic suggests that the answer should be positive, as topology is mainly concerned with holes (as in doughnuts or loops—not wholes—e.g., see Munkres, 1993), and these could have been evident in our complete figures (triangles), but never in their constituting features (simple lines).4

Experiments 1–4 engage search or identification processing involving a small potential alphabet. The question arises as to how observers might perform in the presence of a larger alphabet, indeed, with a set of potential stimuli that include the previous stimuli as subsets. How will, for instance, the inclusion of two triangles, one as a target and the other as a distractor, but amidst topologically distinct stimuli (nontriangles in the present study) also either as targets or distractors, affect processing efficiency? Will the positive triangle, as in Experiment 1, be extremely slow or act more as in Experiment 3, in a highly efficient fashion? How will the positive and negative stimuli fare with respect to one another in general? Experiment 5 examines these questions.

Another motivation for using a larger stimulus set in Experiment 5 was to examine capacity (as well as other measures: RTE, Miller's bound, etc.) when participants are encouraged to process features. In Experiments 1–4, the small number of possible stimuli allowed participants to bypass the processing of the features by memorizing whole figures and their corresponding responses. Garner (1978) referred to such possibility as configural interaction to indicate that in some cases the attributes used by the experimenter do not define the properties of the stimuli as processed by the participant. Rather, the stimuli become new entities, or configurations. For example, participants could easily assign the three target-present figures as wholes to the “yes” response and the fourth no-target figure to the “no” response. Although this could be regarded as a support for holistic processing, we should consider the possibility that in some cases stimulus sets may be too large to allow such a strategy. A more parsimonious approach would then be to look for either the line target or the angle target. Would participants still show evidence for holistic processing even when encouraged, almost forced (by the large number of target items), to process the constituting features? In Experiment 5, we tested this question by introducing a much larger stimulus set, which makes the memorizing of items as wholes much more difficult and therefore less likely.

Experiment 5

Method

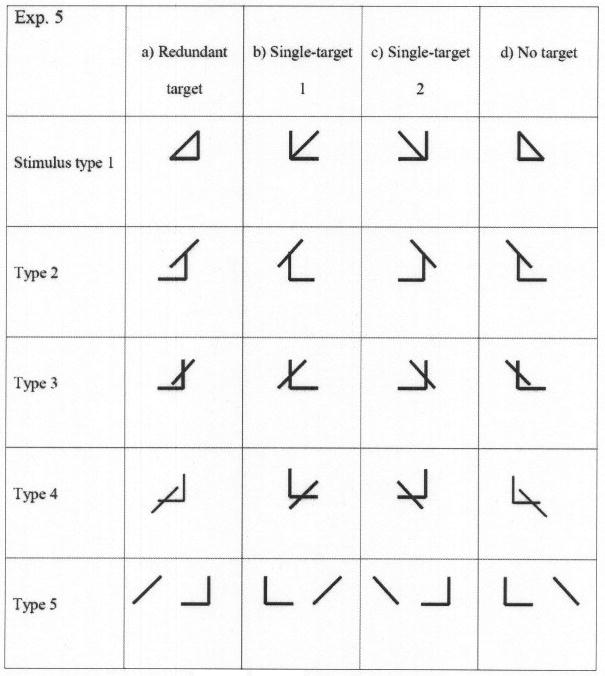

Four undergraduate students were paid to participate in Experiment 5. An additional 22 undergraduate students performed in exchange for partial course credit. All had normal or corrected-to-normal vision. As in the previous experiments, an oblique line (left–right) and an angle (left–right) were combined to form the stimuli, thus creating four experimental conditions (redundant target, single target 1, single target 2, and no target). However, as depicted in Figure 7, the line and angle could be arranged together in any of five ways, creating a total of 20 stimuli. The “/” and a rotated └ were again defined as the targets; when presented with either or both, the participants were to respond affirmatively by pressing the mouse key marked “yes.” Otherwise, they were to press the “no” key. Overall, 15 items contained at least one target feature and were assigned to the “yes” response, whereas the remaining 5 stimuli contained only distractor features.

Figure 7.

The stimulus set used in Experiment 5. As in the previous experiments, the redundant-target (a) and single-target (b and c) displays call for a “yes” response, whereas the no-target display (d) calls for a “no” response. The basic features of line and angle could be combined together in one of five spatial arrangements (stimulus types).

The design was similar to that of Experiment 1, with slightly fewer trials because of a longer instruction phase at the beginning of the session (the larger number of possible stimuli dictated more time for the introduction of stimulus examples). Each participant performed in a training session of 40 trials, followed by four experimental blocks of 240 trials each (a total of 960 trials that gives 240 trials per condition), with stimulus order randomized across and within blocks. The stimuli were evenly divided to five possible spatial arrangements (types), such that each of the four conditions had an equal number of 48 trials for any given spatial arrangement. We did not manipulate the saliency of the features, so stimuli were always presented in black over a gray background.

Results and Discussion

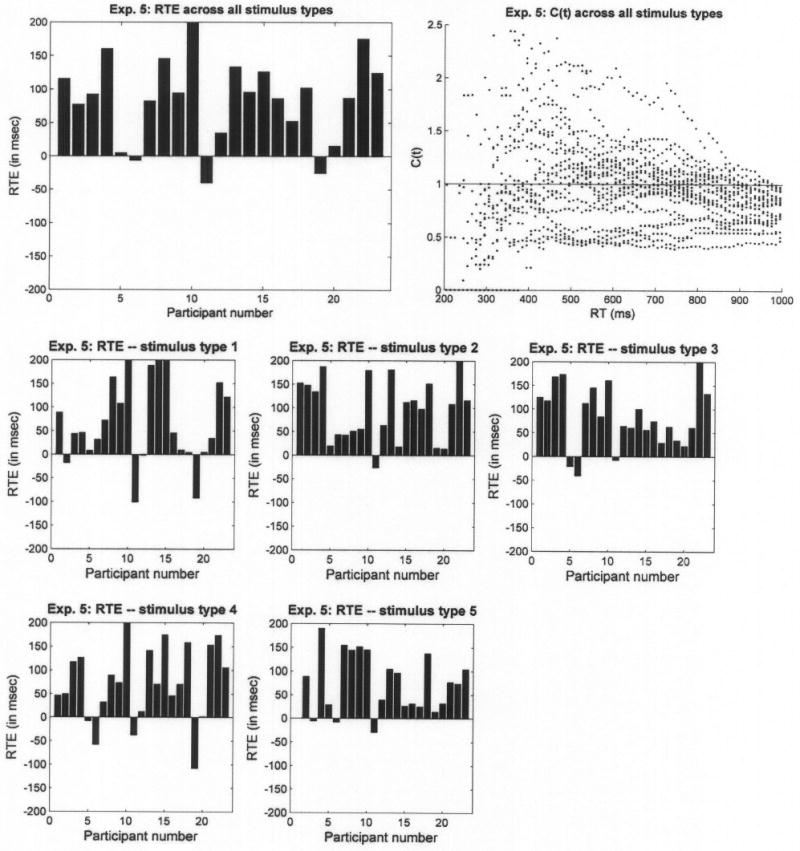

We excluded data from 3 participants who had an error rate of more than 10%. Most other participants demonstrated a notable RTE; when pooled across stimulus types, responses on redundant-target trials were much faster (577.4 ms on average) than on single-target trials (731.4 and 667.1 ms on average). The top two panels of Figure 8 show individual RTEs and capacity across all stimulus types. Positive RTEs, accompanied by C(t) values that exceed 1, were observed for almost all participants when data were pooled across stimulus types. Analysis of variance revealed that 17 out of the 23 participants exhibited highly significant RTE (p < .001), 1 (Participant 11) showed a negative RTE (p < .001), and the rest did not have statistically significant results. Also, there were massive indications of super capacity.

Figure 8.

Redundant-target effect (RTE) and capacity coefficient, C(t), for Experiment 5. The upper left panel presents individual RTEs, where each bar corresponds to a single participant. The upper right panel presents C(t) values pooled across participants. The lower panels present RTEs calculated separately for each stimulus type.

What is more interesting is that positive RTEs and super capacity were observed also when estimated separately for each of the stimulus types (bottom of Figure 8). Most startling is the pattern observed for Stimulus Type 1. These are the exact same stimuli that yielded a negative RTE and extremely low C(t) values in Experiment 1. However, when presented in Experiment 5 as part of a larger stimulus set (i.e., different nondisplayed context), they led to a positive RTE and super capacity for most participants.

On a minor but interesting note, the two single-target stimuli were not processed at the same rate. Responses to single target 2, where the single target was the angle, were faster than to single target 1, where the target was the oblique line. Several reasons may account for this result: The overall length of lines in the angle is larger than the oblique line, the angle possesses the extra feature of a vertex (angle) where the horizontal and vertical lines meet, and sensitivity to horizontal and vertical lines (angle) may be higher than to diagonals. In Experiments 1–4, on the other hand, single-target 1 trials were faster than single-target 2 trials. We cannot determine unequivocally what drives this difference, but one speculation is that with a small set size of four items, the items may be processed as wholes and the advantage of one feature (angle) over the other would not matter as much. Other factors, which we have not studied, may then determine the relative RTs of single-target 1 versus single-target 2 conditions.

Returning to the primary focus, the super capacity observed in Experiment 5 could result from averaging data across subconditions; participants may have processed certain types of stimuli holistically (for instance, the line and angle that were spatially arranged to create the closed figure of a triangle) but not others. Separate analyses of RTE for each stimulus type (bottom of Figure 8) revealed that this was not the case. In addition, we examined a finer grained capacity analysis, calculated for each stimulus type. For this purpose, we asked a new participant to perform in two sessions instead of just one, so that we would have enough trials (98) to establish stable CDFs for each of the five stimulus types in each of the four conditions (redundant target, single target 1, single target 2, no target). The results for each stimulus type were qualitatively similar: Regardless of whether the oblique line and the angle were aligned to create a triangle, crossing each other, or spatially separated, the participant repeatedly demonstrated super capacity, thus showing evidence in favor of holistic processing.