Abstract

We compared the contribution of featural information and second-order spatial-relations (spacing between features) in face processing. A fully factorial design had ‘features’ (eyes, mouth, nose) the same or different across two successive displays, while orthogonally the second-order spatial-relations between those features were the same or different. The range of such changes matched the possibilities within the population of natural face-images. Behaviorally we found that judging whether two successive faces depicted the same person was dominated by features, though second-order spatial-relations also contributed. This influence of spatial-relations correlated, for individual subjects, with their skill at recognition of faces (as famous, or as previously exposed) in separate behavioral tests. Using the same repetition-design in fMRI, we found feature-dependent effects in lateral occipital and right fusiform regions; plus spatial-relation effects in bilateral inferior occipital gyrus and right fusiform that correlated with individual differences in (separately measured) behavioral sensitivity to those changes. The results suggest that featural and second-order spatial-relation aspects of faces make distinct contributions to behavioral discrimination and recognition, with features contributing most to face discrimination and second-order spatial-relational aspects correlating best with recognition skills. Distinct neural responses to these aspects were found with fMRI, particularly when individual skills were taken into account for the impact of second-order spatial-relations.

Introduction

“Do I know you?” is a phrase that can herald an embarrassing social scenario which many of us have experienced, reflecting a failure to recognize the face of a person we have previously met, or occasional false ‘recognition’ of a stranger. Several lines of evidence indicate that correct recognition of previously seen faces may be a separable process from perceiving certain aspects of the currently seen face (Benton & Van Allen, 1972; Bruce & Young, 1986; de Gelder & Rouw, 2001); both in the normal brain, and in neurological cases where one or another aspect of face processing may be selectively damaged (Benton & Van Allen, 1972; De Renzi et al., 1991; Sergent & Signoret, 1992).

An abundant literature on face-processing makes a distinction between part-based or featural information, versus more holistic or configural processing (Carey & Diamond, 1977; Sergent, 1984; Tanaka & Farah, 1991; Tanaka & Farah, 1993; Tanaka & Sengco, 1997; Rossion et al., 2000; Collishaw & Hole, 2000; de Gelder & Rouw, 2000b; Sagiv & Bentin, 2001; Yovel & Duchaine, 2006). Features concern information about the individual parts within a face (e.g. eyes, nose), as distinct from information concerning relations between such features, which may be divided into several types (Maurer et al., 2002). We focus here on the roles of featural information versus second-order spatial-relations (i.e. spacing between features). We conducted behavioral studies and related neuroimaging experiments, seeking in particular to exploit any individual differences in face-processing skills; how these might relate to performance in different face tasks; and to brain activation under different conditions.

Featural versus configural processing for faces has been addressed by many prior studies, including behavioral (e.g. Carey & Diamond, 1977; Sergent, 1984; Tanaka & Farah, 1993; Collishaw & Hole, 2000), neuropsychological (Moscovitch et al., 1997; Barton et al., 2002; Yovel & Duchaine, 2006), electrophysiological (Perrett et al., 1992; McCarthy et al., 1999; Eimer, 2000; Sagiv & Bentin, 2001) and neuroimaging studies (Rossion et al., 2000; Lerner et al., 2001; Yovel & Kanwisher, 2004). One perspective has emphasized that face processing in general may rely on configural processing, more so for faces than for other classes of visual objects (Levine & Calvanio, 1989; Carey, 1992; Tanaka & Sengco, 1997; Farah et al., 1998; Saumier et al., 2001; Leder et al., 2001). Other authors have emphasized that both featural and configural information may contribute to face processing (Sergent, 1984; Perrett et al., 1992; Collishaw & Hole, 2000; Yovel & Duchaine, 2006; Jiang et al., 2006). This apparently accords with recent fMRI studies indicating that featural versus configural manipulations of faces may elicit distinct brain responses (Rossion et al., 2000; Lerner et al., 2001; Yovel & Kanwisher, 2004). Those studies contrasted featural and configural processing either by manipulating the task (Rossion et al., 2000); the stimuli (i.e. presenting features in canonical or non-canonical face configuration, Lerner et al., 2001); or both task and stimuli (Yovel & Kanwisher, 2004). However, the precise anatomical localization of putative featural versus configural face processing, as indicated by fMRI, appears somewhat inconsistent across existing studies. 
Featural processes have been attributed to bilateral lateral occipital cortices (LOC, Lerner et al., 2001; Yovel & Kanwisher, 2004), or left fusiform face area (FFA, Rossion et al., 2000); while configural processing has been attributed to bilateral fusiform gyrus (FFG, Lerner et al., 2001), or right FFA alone (Rossion et al., 2000) or to neither (Yovel & Kanwisher, 2004).

The operational definitions of featural versus configural processing often varied between such studies (e.g. configural corresponded to holistic in Rossion et al., 2000; to first-order relations in Lerner et al., 2001; to second-order spatial-relations in Yovel & Kanwisher, 2004); or were not always clearly stated (cf. Maurer et al., 2002). This might account for some of the differences in fMRI outcome. Here we defined featural versus configural processing in terms highlighted by Maurer and colleagues’ overview (Maurer et al., 2002). The eyes, nose and mouth were considered to provide facial ‘features’; while the second-order spatial-relations between these (i.e. spacing of the eyes, nose and mouth relative to each other and to a constant face outline) provided the ‘configural’ cues for our stimulus manipulations (see also Yovel & Kanwisher, 2004). Note that while there may also be other aspects of processing that might be considered configural, such as first-order relations or potentially holistic properties (see Maurer et al., 2002), we focused solely on second-order spatial-relations, both for operational clarity, and because these aspects are thought to be particularly important for face individuation and recognition (Sergent, 1984). Notably this was commented upon long ago by Lewis Carroll (Lewis, 1865), in the dialog between Humpty Dumpty and Alice; but has also been emphasized in much recent empirical work (e.g. Sergent, 1984; Carey, 1992; Rhodes et al., 1998).

The roles of featural and second-order spatial-relation information in faces are commonly tested by assessing participants’ ability to detect changes in either of these two aspects (when changing facial features, or changing the spatial-location of otherwise comparable features within a face; e.g. Le Grand et al., 2001; Barton et al., 2002; Maurer et al., 2002; Yovel & Kanwisher, 2004; Yovel & Duchaine, 2006). This raises the question of whether, or indeed how, to ‘equate’ such changes for comparison. In some studies, featural and second-order spatial-relation changes have been putatively equated, by carefully matching these changes for overall behavioral difficulty in normal observers (e.g. see Le Grand et al., 2001; Yovel & Kanwisher, 2004). While this approach can be experimentally elegant, it runs the potential risk of producing unnatural stimuli that may not reflect the usual roles or relative weights of featural versus second-order spatial-relations information within real faces. For instance, if features normally differ more between distinct real faces than second-order spatial-relations, then the latter cues might have to be ‘exaggerated’ to match for difficulty, which could then lead to over-estimates of their usual weight, or vice-versa. Accordingly, the approach we took here was to utilize the natural range of featural and spatial-relation differences, as observed in original (real) face photos.

Prior studies that equated difficulty for normal participants have suggested that prosopagnosic patients are more impaired in detecting changes in second-order spatial-relations than in features of faces (Barton et al., 2002; Barton et al., 2003); though featural processing may also be impaired in some of these patients (Yovel & Duchaine, 2006). This accords with the common idea in the face literature that spatial-relations in faces may be particularly critical for face recognition. Surprisingly, however, a recent fMRI study (Yovel & Kanwisher, 2004) did not observe increased responses for second-order spatial-relation processing in faces in ventral visual ‘fusiform face area’ (FFA). In that study, participants performed a one-back repetition-detection task, focusing either on the features or second-order spatial-relation aspects of faces, with the experiment seeking to match these two tasks for discrimination difficulty. The study reported an increase in response in ‘object-selective regions’ (lateral occipital cortex, or LOC, that included lateral and ventral occipital cortex) when attending to features compared with when attending to spatial-relation aspects (Yovel & Kanwisher, 2004). Note that this type of design, as in several previous fMRI studies (Rossion et al., 2000; Lerner et al., 2001; Yovel & Kanwisher, 2004), typically tests for featural or spatial-relation processing by contrasting them directly against each other. In such a design, any regions that may process both types of information in faces cannot readily be detected. In the present fMRI study, we used a paradigm that allowed us to test for featural and second-order spatial-relation processing independently, in a fully factorial 2 × 2 design.

We used a combination of behavioral and fMRI experiments. As noted above, one key aspect of our studies was that we allowed the featural and second-order spatial-relation cues to vary over the ‘natural range’ from the set of real face photos that we manipulated. Although this may not equate the resulting stimuli for experimental ‘difficulty’ (cf. Le Grand et al., 2001; Yovel & Kanwisher, 2004), it may accord better with actual variation among natural faces. We then measured the impact of featural and second-order spatial-relation information on each participant in a person-discrimination task (‘do two successive face images show the same or a different person?’), rather than equating this contribution experimentally. We also sought to take advantage of any individual differences in the contribution of the different types of information (thus any variation in face-processing skills) that may arise spontaneously, in the group of neurologically intact individuals whom we studied.

We used an immediate pair-repetition paradigm (cf Winston et al., 2004; Eger et al., 2004b; Rotshtein et al., 2005), manipulating whether all face features were the same or different; and independently whether the second-order spatial-relations between features were same or different, in a fully orthogonal 2 × 2 design (Fig. 1A). In a behavioral context, participants judged whether two successive faces in a pair represented the same or a different person (person-individuation, or equivalently person-discrimination task). In this way, we measured the impact of changing features and/or changing second-order spatial-relations on person discrimination judgments, plus any individual differences in the impact of features or of second-order spatial-relations upon this. We could thereby assess the relative contribution of each of these two cues to face processing when varying these over the ranges found in the natural set of faces used, for each participant.

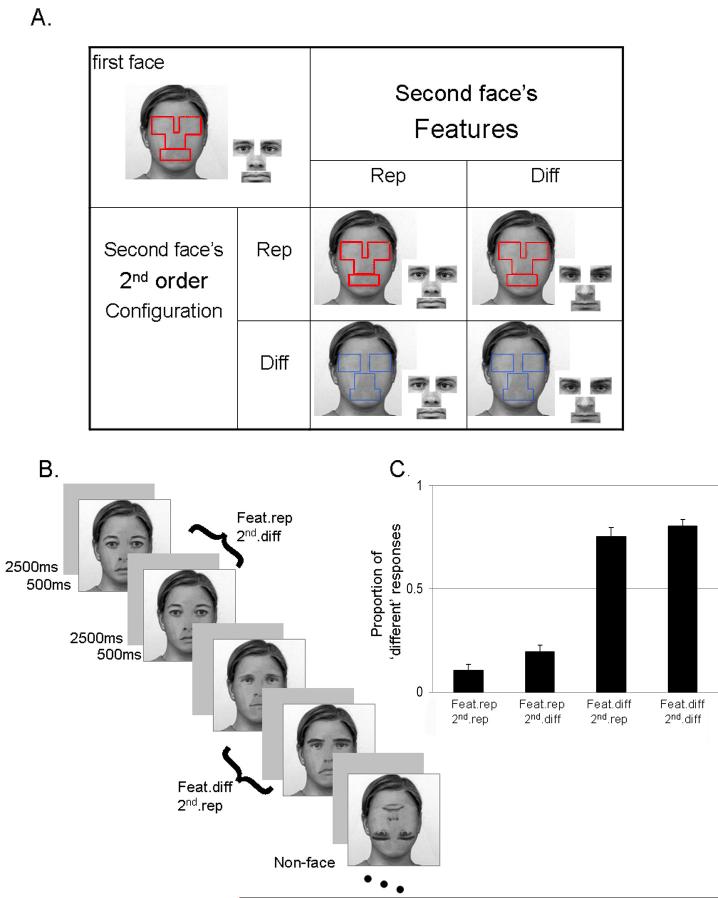

Figure 1. Person-discrimination experiment.

A. The 2 × 2 design had two orthogonal factors: feature change/repeat, and second-order spatial-relations change/repeat. Within a pair of faces, the second stimulus either repeated or differed from the first stimulus in its component features (eyes, nose, mouth), and/or independently in the spatial layout of these component features. At upper-left is an example of the visual elements used to generate a first face in a pair. The four cells in the 2 × 2 ‘table’ display examples of the possible second faces that could follow. The ‘raw materials’ that generated each stimulus are shown: the blank face with gender-nonspecific haircut and outer features served as the template, while the red or blue lines mark where each feature was located; on the right of each face are shown the four rectangles containing the featural information. The spatial locations for placing these features onto the template define the second-order configural spatial-relations. Red lines indicate the same second-order spatial-relations (i.e. configural spacing) as in the first face; blue lines indicate different second-order spatial-relations (taken from a different face in the original un-manipulated photos). B. Example sequence of stimuli as they appeared in the experiment. This sequence represents the fMRI experiment, in which a continuous stream of stimuli was presented and participants made a face/non-face decision. Note that the pair-structure depicted in (A) was thereby concealed within the ongoing stream, by random interleaving of conditions, and inclusion of filler ‘non-face’ stimuli. C. Results of Experiment 1, the person-discrimination experiment. The proportions of ‘different’ responses for the different types of pairs are presented, along with standard error of the mean.

We found that featural changes had a stronger influence than second-order spatial-relations cues on person-discrimination; but that second-order spatial-relations cues also contributed, and that the relative impact of each cue type on person-discrimination varied from one participant to another. Having thus indexed any individual differences among our neurologically intact participants, in the impact of features or second-order spatial-relations on person-discrimination, we next tested whether these individual differences correlated with performance on other face-processing tasks. Specifically, we conducted three entirely separate tests of face recognition. One tested for delayed explicit recognition after incidental exposure to previously unknown faces. The second tested for explicit recognition of famous faces. In both these cases we implemented objective signal-detection measures of face recognition skill. The third measure was a subjective self-rating of face recognition. Remarkably, we found that individual differences in objective and subjective face-recognition, measured on these separate tests, correlated with the impact of second-order spatial-relations cues, as measured in the separate person-discrimination task for each individual participant.

In an fMRI experiment we used a similar immediate repetition/non-repetition design as in the behavioral person-discrimination task, but now using a separate set of naive subjects, and without imposing explicit person-discrimination during scanning (to avoid possible contamination of fMRI results by behavioral performance and potential differences in “difficulty”). Our fMRI data showed that featural changes (versus repetition) influenced the response of lateral occipital sulcus and the right fusiform gyrus (FFG). Second-order spatial-relation changes had a distinct neural impact on the inferior occipital gyrus, and also on the right FFG, but this was revealed only by considering individual differences in the impact of these changes, as measured in the separate behavioral person-discrimination task.

Experiment 1: Person-discrimination task

Methods

Participants

Nineteen healthy volunteers (9 females; mean age 25 yrs, range 18-35 yrs) participated in behavioral Experiment 1 (and in Experiment 2, see below). One participant failed to use the correct response buttons during the person-discrimination task and so was excluded from analysis. Participants had normal or corrected visual acuity, and no current or past neurological or psychiatric history. Informed consent was obtained in accord with local ethics.

Stimuli

The initial stimulus set comprised 30 achromatic front-view faces with neutral expressions, taken from the Karolinska Directed Emotional Faces set (KDEF, Lundqvist & Litton, 1998). The stimuli were created using Photoshop 6.0. We first overlaid on each face an identical outer-contour that ensured faces only differed in their inner features. This outer-contour frame was created by combining outer features (hair, ears, neck etc.) from different faces of the same original KDEF set, and had a unisex appearance that was identical across all faces (see examples in Fig. 1). Four virtual rectangles (identical in size across faces) were manually placed on each face around the eyes (bordering the nose bridge and the eyebrow); around the nose (aligned with the starting point of the ‘valley’ between the nose and the cheek); and around the mouth (bordering the chin; see Fig. 1). The content of these rectangles was operationally defined as providing the facial features, while the spatial arrangement of these rectangles defined the second-order spatial-relation manipulation.

A blank face with skin texture and a constant outer-contour frame (see above) served as the template image (see Fig. 1A). The feature-rectangles were overlaid onto this template image to generate each new face stimulus. The spatial-relations between the feature-rectangles could match the spatial-relations within the original face for those features; or instead match the spatial-relations from a different face from the original natural set (see Fig. 1A). Examples of the final stimuli used are presented in Figure 1B. Three judges in a pilot study did not reliably distinguish between faces whose features and second-order spatial-relations originated from the same person and faces in which they originated from two different persons. Importantly, our second-order spatial-relations and featural manipulations were based on variation of these parameters within the face set we used (i.e. total of 30 faces), using the values found naturally within the original set.

To provide an estimate of the extent of the second-order spatial variation within the set of faces (disregarding local feature changes), we measured this variation between 5 landmark points within each face: right and left pupils; tip of the nose; the upper lip at the extremity of the ‘Cupid’s bow’; the left earlobe; and the mid-point of the hairline. Note that the locations of the latter two landmarks were fixed across subjects and therefore provide an objective measure of absolute differences between faces. We first calculated Euclidean distances between each of these landmarks and the left eye. Then we measured the differences in these distances between the face pairs from the experiment (see Procedure below) for equivalent features. These difference-scores were converted to percent change by dividing them by the fixed distance between the two earlobes (which was part of the outer-contour template and hence constant across all faces, see above). The percent-change provides a metric of perceptual change between pairs of faces in spatial-relations. On average, variations in second-order spatial-relations between the two pupils were 2.5% (± 1.8 s.d.); the left pupil and the tip of the nose 1.8% (± 1.8 s.d.); the left pupil and the upper lip 1.9% (± 1.5 s.d.); left pupil and left earlobe 2.3% (± 1.7 s.d.); left pupil and hairline 2.27% (± 0.63 s.d.). In terms of viewing angle during the behavioral session these changes were all less than 1 degree. On average the second-order spatial-relation changes between pairs of faces thus sum up to 13.8% of the face width (i.e. distance between the two earlobes).
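The percent-change metric described above can be sketched in a few lines. This is only an illustration of the arithmetic, not the authors' code: the landmark coordinates, the template width, and the function names are hypothetical, and taking the absolute value of the difference is an assumption (the text speaks only of "differences" in distances).

```python
import math

# Hypothetical (x, y) landmark coordinates in pixels for two faces of a pair.
# These values are invented for illustration and do not come from the study.
FACE_A = {"left_pupil": (100.0, 120.0), "right_pupil": (160.0, 120.0), "nose_tip": (130.0, 160.0)}
FACE_B = {"left_pupil": (100.0, 120.0), "right_pupil": (166.0, 120.0), "nose_tip": (130.0, 165.0)}

# Assumed earlobe-to-earlobe distance of the shared outer-contour template.
FACE_WIDTH = 200.0


def distance(p, q):
    """Euclidean distance between two (x, y) points."""
    return math.hypot(p[0] - q[0], p[1] - q[1])


def percent_change(face_1, face_2, landmark, reference="left_pupil", width=FACE_WIDTH):
    """Difference in reference-to-landmark distance between two faces,
    expressed as a percentage of the fixed face width."""
    d1 = distance(face_1[reference], face_1[landmark])
    d2 = distance(face_2[reference], face_2[landmark])
    # Absolute difference is an assumption; the sign is not meaningful here.
    return 100.0 * abs(d1 - d2) / width


changes = {name: percent_change(FACE_A, FACE_B, name) for name in ("right_pupil", "nose_tip")}
print(changes)
```

Normalizing by a distance that belongs to the constant template keeps the metric comparable across all face pairs, since the denominator never varies with the inner features.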

Procedure

We used an immediate pair-repetition paradigm, manipulating change or repetition across successive pairs of stimuli in a factorial 2 × 2 design, with the orthogonal factors of feature change (different or repeat) and second-order spatial-relation change (different or repeat). Thus, within each successive pair of faces, features or their second-order spatial-relations could repeat or differ, independently (Fig. 1A). Each condition included 15 different face pairs. Each pair was presented twice (giving a total of 30 pairs per condition). Stimulus size was 7.2 cm² and the visual angle was ∼4.12 degrees. Each pair trial started with an empty circle presented for 500 ms that cued the participant to get ready. Each face in a pair was then presented for 500 ms, with a 500 ms interval between them, and the trial ended with a 2000 ms fixation point, or after participants responded. Participants were instructed to judge whether the two successive faces appeared to depict the same person or two different persons. The task of person-discrimination was described in this subjective way, and with reference to people rather than images, with the aim of approximating natural face-individuation processes, rather than assessing the ability to detect any visual changes whatsoever between two successive retinal images. Participants were asked to respond as quickly and accurately as possible. Stimuli were presented and responses were collected using Cogent2000 software (http://www.vislab.ucl.ac.uk/Cogent).

Data analysis

Data were analyzed using Matlab 6.0 and SPSS 13.0. Results for the subjective person-discrimination task are presented (Fig. 1C) as the proportion of ‘different’ responses in the total number of pairs per condition (i.e. out of 30). For each participant we estimated the impact of a given type of change, by subtracting the proportion of ‘different’ responses for the repetition trials from those for the non-repetition trials, for each dimension separately. Thus for example, the impact of changing the features on person-discrimination was defined as featural different-minus-featural repeat (for proportions of ‘different’ responses). Note, this measure was based on subjective judgments by participants that pairs of faces depicted the same person or two different people, rather than a strictly objective measure of whether there had been any image change whatsoever. We chose this subjective measure because we were interested in investigating the conditions that affect the individuation process (i.e. what leads participants to decide that two faces depict two different individuals). As will be shown, this measure proved to be very revealing, correlating both with other more objective behavioral indices in separate experiments, and also with some of the later fMRI results. Correlations between the impact of feature and second-order spatial-relations changes were tested using non-parametric Spearman’s rho, and significance was tested using a two-tailed Student’s t distribution with [N-2 = 16] degrees of freedom.
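The impact scores and the correlation test described above can be sketched as follows. This is a hedged illustration rather than the authors' analysis script: the function names and example proportions are hypothetical, and collapsing across the other factor by simple averaging is an assumption (the text says only that each dimension was treated separately). The conversion from Spearman's rho to t uses the standard formula t = rho · sqrt((N − 2) / (1 − rho²)).

```python
import math


def impacts(p):
    """p maps (feature_changed, spacing_changed) -> proportion of 'different'
    responses. Returns (feature impact, spacing impact), each computed as
    change-minus-repeat, averaged over the other factor (an assumption)."""
    feature = (p[(True, False)] + p[(True, True)]) / 2 - (p[(False, False)] + p[(False, True)]) / 2
    spacing = (p[(False, True)] + p[(True, True)]) / 2 - (p[(False, False)] + p[(True, False)]) / 2
    return feature, spacing


def ranks(values):
    """1-based ranks; tied values share their mean rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            result[order[k]] = mean_rank
        i = j + 1
    return result


def spearman_rho(x, y):
    """Spearman's rho: Pearson correlation computed on the ranks."""
    rx, ry = ranks(x), ranks(y)
    n = len(x)
    mx, my = sum(rx) / n, sum(ry) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = math.sqrt(sum((a - mx) ** 2 for a in rx))
    sy = math.sqrt(sum((b - my) ** 2 for b in ry))
    return cov / (sx * sy)


def rho_to_t(rho, n):
    """t statistic on n - 2 degrees of freedom (undefined for |rho| == 1)."""
    return rho * math.sqrt((n - 2) / (1 - rho ** 2))


# Hypothetical proportions for one participant (invented for illustration).
example = {(False, False): 0.10, (False, True): 0.20, (True, False): 0.80, (True, True): 0.84}
feature_impact, spacing_impact = impacts(example)
print(feature_impact, spacing_impact)
print(rho_to_t(0.23, 18))
```

For instance, rho = 0.23 with N = 18 gives a t of roughly 0.95 on 16 degrees of freedom, in line with the value of about 0.94 reported in the Results for the correlation between the two impact scores.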

Results

We assessed the influence of the different conditions on the proportion of ‘different’ judgments in person-discrimination, using repeated measures ANOVA with two factors (featural change or repeat; and second-order spatial-relations change or repeat). We found that judgments were strongly affected by featural changes compared to featural repeats (F(1,17) = 407, P < 0.001; see Fig. 1C). Performance was also significantly affected by changes of second-order spatial-relations (F(1,17) = 19.9, P < 0.001), though less so. The interaction did not reach significance (F(1,17) = 2.55, P = 0.1). The impact of featural changes (increased proportion of ‘different’ responses being 0.65 ± 0.14 s.d. when features changed versus remained the same) was much larger than the corresponding impact of second-order spatial-relation changes (0.084 ± 0.08 s.d.; t(17) = 16.8, P < 0.001). Thus, featural changes had more impact overall in the present on-line person-discrimination task, although second-order spatial-relations also had some influence. There were no significant differences in reaction times between conditions (all Ps > 0.1).

We next tested whether the results were consistent across stimulus pairs, re-analyzing the data now using pairs as the random term with 15 levels (1 for each particular pair). For each condition, we calculated a chi-square statistic that tested whether some pairs tended to be judged significantly more frequently as ‘different’ (or ‘same’) than others. There were no significant differences between pairs (all χ²(14) < 23; Ps > 0.05).

As a first approach to possible individual differences, we tested whether the overall impact of featural change on particular participants’ judgments correlated with the overall impact of second-order spatial-relations changes. No such relation was found (Spearman’s rho = 0.23, t(16) = 0.94, P = 0.4). Although this lack of correlation could in principle reflect a lack of power, we think this is unlikely, since clear correlations with other behavioral measures were found for the present group of subjects (see below). Instead, we suggest that the lack of correlation might arise because detection of featural changes and of second-order spatial-relations changes in faces may reflect some separate skills and distinct neural processes, as further elaborated on below, and ultimately confirmed here with fMRI.

The greater impact of featural than spatial-relation changes overall in Experiment 1 (see Fig. 1C) at first glance seems to conflict with a common emphasis in the face literature on a critical role for second-order spatial-relations (and for configural processing more generally). For instance, it has been suggested that deficits in prosopagnosia may particularly implicate second-order configural spatial-relations, rather than processing of facial features (Barton et al., 2002). But note that prosopagnosia commonly presents as a failure to recognize faces, rather than to discriminate or individuate them per se (Benton & Van Allen, 1972). Our next behavioral experiments sought to determine whether individual differences among our group of neurologically intact individuals, in the impact of second-order spatial-relations, might be related to individual variation in their abilities at recognizing faces, more so than for the impact of features.

Experiment 2: Measures of participants’ face recognition skills

The ability to recognize faces was assessed by three independent behavioral measurements, implemented separately from Experiment 1 and using entirely different stimuli. Two of the new tests (Exps. 2a and 2b) provided objective measures of face recognition; while a third (Exp. 2c) comprised a subjective self-evaluation of face recognition skill in daily life. We related each of the measures from Experiments 2a-c, to the separately obtained data for the same participants from the person-discrimination task in Experiment 1, which had indexed the impact of featural changes and of second-order spatial-relational changes on person discrimination for each of these participants.

Methods

Participants

Participants were identical to those from Experiment 1 (see above).

Experiment 2a - Incidental learning of newly exposed faces

Stimuli

Forty faces with different identities (20 females) were taken from the Karolinska Directed Emotional Faces set (KDEF, Lundqvist et al., 1998). Note that the faces used in this experiment had different identities from those used in the person-discrimination experiment (Exp. 1), with no overlap in stimuli. Experiment 2a had two phases, an incidental study phase, followed ∼45 min later by a recognition test phase. In the study phase we used colored close-ups of happy facial expressions in three different viewpoints (frontal, left 3/4, right 3/4, Fig. 2A). For the test phase, images of the same identities were used, but now zoomed to an extreme close-up frontal view, achromatic and with neutral expressions rather than happy (Fig. 2B). This was done to ensure that recognition should not be based on low-level properties of the visual image, but rather on encoding of individual face properties.

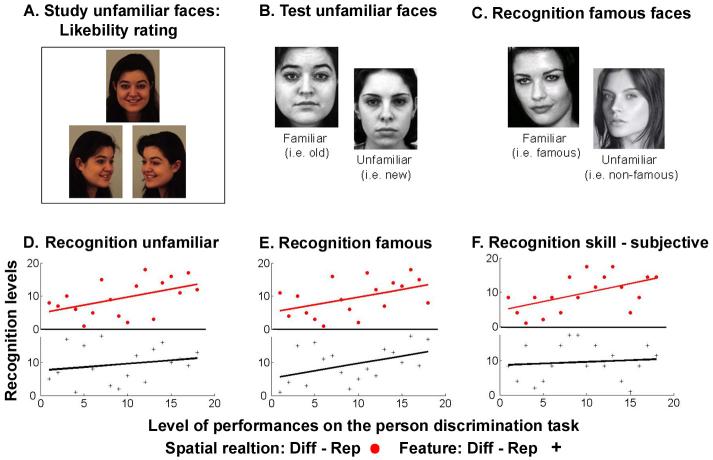

Figure 2. Face recognition measurements.

Examples of stimuli used for the face recognition tests. Two objective measures of face recognition ability were used: incidental learning of newly exposed faces (Exp. 2a) and recognition of famous faces (Exp. 2b). A. Example of the stimuli used in the study phase of the incidental learning task (Exp. 2a). Three different viewpoints of the same individual with a happy facial expression were presented simultaneously for 5 s. Participants were asked to rate the likeability of each individual. B. In the test phase of Experiment 2a, achromatic zoomed close-ups of the 20 individuals presented in the study phase were randomly interleaved with 20 new individuals. All faces had neutral expressions. Participants were asked to make a yes/no recognition judgment on each face, presented singly. C. Examples of stimuli that were used in the famous face recognition test. Note that the face images were chosen with care such that famous and non-famous faces should not differ in their compositions. D-F. Scatter plots of individual participants’ behavioral impact of featural changes on proportion of ‘different person’ responses (black crosses, bottom) and likewise for the impact of second-order relational changes (red circles, top), plotted against the three measures of face recognition: D, Experiment 2a, d’ of the incidental learning; E, Experiment 2b, d’ of the recognition of famous faces; and F, Experiment 2c, subjective rating of face recognition. Note that the scatter-plots describe the non-parametric correlations used, thus the values are rank orderings of the original values for each behavioral measure. The impact of second-order spatial-relations (red circles, top) correlated with all three measures of face recognition (all Ps < 0.05); while the impact of featural change (black crosses, bottom) correlated only with recognition of famous faces (Experiment 2b; P < 0.05). See main text of Results for more details.

Experimental procedure

The incidental study phase occurred at the beginning of the experimental session (before Exp 1). In this phase, we used a likeability rating task. Participants were presented with three different views of the same face with a happy expression for 5s and instructed to study the faces closely. After the faces disappeared they were prompted with a question that asked them to state how much they liked this person on a scale from 1-to-6: one being ‘I don’t like this person at all’ and six being ‘I like this person very much and would be happy to meet him/her’. The next identity appeared after participants gave their rating. 20 identities (10 females) counterbalanced across participants were chosen randomly for the study phase from the total 40 identities. The incidental-learning procedure and likeability-rating were chosen as we sought to approximate real-life encounters and to accord with the Warrington Face Recognition procedure (Warrington & James, 1967), which uses a similar exposure task during their incidental learning procedure. In between the initial incidental-study phase, and the later recognition test, subjects participated in other face-related tasks (in the following order: person-discrimination, Exp 1; recognition of famous faces, Exp. 2b and other face related experiments that are not reported here), but with no overlap in the stimuli used (with Exp 2a). Altogether more than 250 photographs of faces (all with different identities) intervened, with an average time separation of ∼45min between the incidental study phase and the test phase.

In the recognition-test phase, participants performed a familiarity judgment on 40 identities (of which 20 had been presented in the earlier study phase and 20 new identities). Faces were presented in random order for 750ms each, with an inter-trial interval up to 2000ms (depending on response time). Participants were explicitly instructed that these faces might have identities that had appeared earlier, and that if so the face would always be a different image of that person. They were instructed to work with their gut feeling of familiarity. Foil (new) and target (old, previously exposed during study-phase) were fully counterbalanced across participants.

Data analysis

Data were analyzed using signal detection theory. For each participant, d prime (d’) for sensitivity to previously seen faces, plus a decision criterion (β) or ‘response bias’, were estimated (Snodgrass and Corwin, 1988). Correct recognition of a previously seen face constituted a hit, while erroneously recognizing a novel face as familiar constituted a false alarm. Hit and false alarm rates were transformed to Z scores using the standard normal distribution. d’ was estimated as the difference between the standardized scores (Z) of the hit rates (H) and of the false alarm rates (FA):

$$d' = Z_H - Z_{FA}$$

The decision criterion (β) was estimated as the ratio of the densities of the hit and the false alarm rates, again transformed using the standard normal distribution, and calculated as:

$$\beta = \frac{f_0}{f_n}$$

where $f_0$ is the height of the normal distribution over $Z_H$ and $f_n$ is the height of the normal distribution over $Z_{FA}$ (Snodgrass and Corwin, 1988).
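As a rough illustration of the two estimates described above, the following Python sketch computes d’ and β from a hit rate and a false-alarm rate using only the standard library. The function name and the decision to reject rates of exactly 0 or 1 are assumptions made for this example, not a description of the original analysis; the text does not state how such extreme rates were handled when estimating d’.

```python
from statistics import NormalDist

# Standard normal distribution (mean 0, s.d. 1) used for the Z transform.
_STD_NORMAL = NormalDist()


def d_prime_and_beta(hit_rate, fa_rate):
    """Return (d', beta) for one participant.

    hit_rate: proportion of old faces judged familiar.
    fa_rate:  proportion of new faces judged familiar.
    Rates of exactly 0 or 1 have no finite Z score, so this sketch
    (an assumption of the example) simply refuses them.
    """
    if not (0.0 < hit_rate < 1.0 and 0.0 < fa_rate < 1.0):
        raise ValueError("rates must lie strictly between 0 and 1")
    z_h = _STD_NORMAL.inv_cdf(hit_rate)   # Z score of the hit rate
    z_fa = _STD_NORMAL.inv_cdf(fa_rate)   # Z score of the false-alarm rate
    d_prime = z_h - z_fa                  # sensitivity
    beta = _STD_NORMAL.pdf(z_h) / _STD_NORMAL.pdf(z_fa)  # ratio of densities
    return d_prime, beta
```

With symmetric rates such as 0.8 hits and 0.2 false alarms, the two densities are equal, so β is 1 (no bias) while d’ is positive. A false-alarm rate of 0 raises an error here, which mirrors why a criterion could not be reliably estimated for participants who made no false alarms.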

Correlations between behavioral measures (e.g. those from Experiment 1 and Experiments 2a,c) were calculated using non-parametric Spearman’s rho correlations in SPSS 14.0, with each participant contributing one score per behavioral measure. We used a non-parametric test for these subject-by-subject correlations to ensure that our results were not unduly influenced by outlier participants. Significance was tested with a one-tailed t-distribution with N − 2 = 16 degrees of freedom, as any increase in sensitivity to a particular facial property (i.e. impact of featural changes, or particularly of second-order spatial-relation changes, in Experiment 1) was expected to correlate positively with any estimates of face recognition skill (in Experiment 2). Two participants did not make any false alarms in Experiment 2a, precluding a reliable estimation of their decision criterion (β); they were therefore omitted from correlation tests that involved the β criterion.
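The link between a correlation coefficient and the t statistic with N − 2 degrees of freedom can be sketched as follows. This is the standard textbook conversion, t = rho · sqrt((N − 2) / (1 − rho²)); the function name is hypothetical, and the sketch is offered only as a way to check reported values, not as the code used by the statistics package.

```python
import math


def correlation_to_t(rho, n):
    """Convert a correlation coefficient into (t, degrees of freedom).

    rho: correlation coefficient (e.g. Spearman's rho), strictly inside (-1, 1).
    n:   number of participants contributing one score each.
    """
    if n < 3:
        raise ValueError("need at least three participants")
    if not -1.0 < rho < 1.0:
        raise ValueError("rho must lie strictly between -1 and 1")
    df = n - 2
    t = rho * math.sqrt(df / (1.0 - rho * rho))
    return t, df


# Example: rho = 0.795 with N = 18 participants.
t, df = correlation_to_t(0.795, 18)
print(round(t, 2), df)  # 5.24 16
```

The example values correspond to the d’ correlation between Experiments 2a and 2b reported in the Results (t16 = 5.24).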

Experiment 2b - recognition of famous faces

Stimuli

114 achromatic close-ups of famous people and 114 achromatic close-ups of unfamiliar faces were used. An effort was made to match the photo composition of the unfamiliar faces to that of the famous faces, by using photos taken from unknown models’ books, and of politicians from distant regions (Fig. 2C). This was done to ensure that recognition of famous faces could not be performed based on cues in the photo composition, but rather on familiarity with individual face properties. In a pilot study, 2 judges confirmed the anonymity of the unfamiliar faces and the familiarity of the famous faces in the context of young (20-40y) London/British culture.

Experimental procedure

Stimuli were presented in random order, each for 750ms with an ISI of 750-2000ms (depending on participant response time). Participants performed a yes-no familiarity judgment on the faces. They were explicitly instructed to work with their gut feeling of familiarity, rather than rely on any semantic contextual cues. They were also explicitly instructed that the photos of the unfamiliar faces might have a similar photo composition to the photos of the famous individuals, so they should not base their judgment on photo composition. Experiment 2b was run after the study phase of Experiment 2a and after Experiment 1.

Data Analysis

Data were analyzed using signal detection theory and then non-parametric correlations (i.e. Spearman’s rho) with the other behavioral measures, similar to Experiment 2a. Hits comprised correct recognition of famous faces, while incorrectly recognizing an unfamiliar face as familiar counted as a false alarm.

Experiment 2c: subjective self-ratings of face-recognition skill in daily life

Participants were requested to rate their face recognition skill in daily life on a scale of 1-to-10 at the beginning of the experimental session (before performing the study phase of Exp 2a), with one being ‘I cannot remember faces at all’ and ten being ‘I never forget a person’s face once I met her’. Subjects were explicitly instructed that this rating was not about their naming skills or their ability to retrieve semantic information regarding individuals, but just about their ability to recognize faces in daily life. Correlations with the other behavioral measures (Exps 1, 2a,b) were calculated using non-parametric Spearman’s rho and significance was tested with a one-tailed t-distribution.

Results

First, to test for any relations between the three recognition skill measures, we computed correlation analyses on individual scores in Experiments 2a, 2b and 2c. The two independent objective measures of face recognition skill (Exps 2a, b) were highly correlated. The d’ values and response-bias values estimated from recognition of exposed but previously unknown faces (incidental learning, Exp. 2a) were highly correlated with those estimated separately from the recognition of famous faces in Experiment 2b (for d’: Spearman’s rho (r) = 0.795, t16 = 5.24, P < 0.001; β: Spearman’s rho = 0.794, t16 = 5.22, P < 0.001). This suggests that some related mechanisms may underlie recognition of recently seen but previously unfamiliar faces, and of famous faces. This result is in agreement with other recent work (Goshen-Gottstein & Ganel, 2000). On the other hand, the subjective measure of face recognition skill (Exp 2c) did not correlate with the d’ objective measures of that skill (Exp 2a,b; all Spearman’s rho < 0.03, t16 < 0.12, P > 0.1). This suggests that objective measures may tap into different aspects of face recognition skill than subjective self-ratings.

More importantly, the impact of second-order spatial-relations on person discrimination judgments (as measured in Exp 1 for each individual), correlated with all three separate measures of face-recognition skill (Figs. 2D-F). The impact of second-order spatial-relation changes in Experiment 1 for individual participants correlated with their d’ for incidental face-recognition in the entirely separate task of Experiment 2a (Spearman’s rho = 0.45; t16 = 2.01; P < 0.05); and with their d’ for recognizing famous faces in Experiment 2b (Spearman’s rho = 0.436; t16 = 1.94; P < 0.05). Thus, the impact of second-order spatial-relational changes (in unknown faces that do not have to be remembered) in Experiment 1 was positively related to individuals’ abilities to recognize newly learned faces (after a delay of ∼45 minutes) and to recognize famous faces. It did not correlate with the response-bias measures for either of the objective recognition tasks (all P > 0.1). Finally, the impact of second-order spatial relational changes on particular participants in the person-discrimination task also correlated with their self-ratings of face-recognition ability in daily life (Spearman’s rho = 0.54; t16 = 2.56; P < 0.01). Thus, remarkably more than 20% of the variability between participants in their face recognition skills can be explained by the impact of second-order spatial-relations on their person-discrimination.
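The "proportion of variability explained" can be checked by squaring each reported coefficient. The short sketch below does this for the three correlations quoted above; treating a squared Spearman’s rho as shared variance is an approximation (it refers to variance in ranks), and the helper name is an assumption of this example.

```python
def shared_variance(rho):
    """Squared correlation, read here as an approximate share of variance."""
    return rho * rho


# Coefficients as reported in the text for the impact of second-order
# spatial-relations versus each recognition measure.
reported_rho = {
    "Exp 2a (incidental learning d')": 0.45,
    "Exp 2b (famous faces d')": 0.436,
    "Exp 2c (self-rating)": 0.54,
}

for label, rho in reported_rho.items():
    print(f"{label}: rho = {rho}, rho squared = {shared_variance(rho):.3f}")
```

On this reading the shared variance is close to 20% for the two objective measures and closer to 30% for the self-ratings.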

By contrast, individual differences in the impact of featural changes for the person-discrimination task of Experiment 1 did not correlate with the incidental learning task in Experiment 2a (Spearman’s rho = 0.2, t16 = 0.8, P = 0.2), nor with self-rating of recognition skill (Exp 2c: Spearman’s rho = 0.12, t16 = 0.48, P = 0.3). There was however some correlation with recognition of famous faces (Exp 2b: Spearman’s rho = 0.44; t16 = 1.96, P < 0.05), indicating that features might perhaps become important for recognizing over-learned faces. As with spatial-relations, the impact of featural changes did not correlate with response-bias measures for any recognition tests (all P > 0.1). The absence of correlations between impact of featural changes, and the other recognition measures, seems unlikely merely to reflect restriction-of-range on the individual feature scores, since variability on the impact scores was actually more widely spread for features (ranging from 0.36 to 0.83 of proportional change in ‘different’ responses when features changed, with 0.18 s.d.) than for the impact of second-order spatial-relations changes (ranging from -0.01 to 0.25 with 0.08 s.d.).

Experiment 3: fMRI experiment

The preceding behavioral experiments showed that features had a greater overall impact on person-discrimination than second-order spatial-relations, though the latter did have some impact (Exp 1). Moreover, individual differences in the impact of second-order spatial-relations (Exp 1) related to individual differences in objective and subjective aspects of face-recognition (Exp 2). We next sought to test with fMRI for brain activations due to changes of features; or to changes of second-order spatial-relations and more particularly (given the results of Exp 2) any fMRI effects related to individual differences in the impact of second-order spatial-relations. Most previous fMRI studies of featural versus spatial-relation processing of faces have not considered individual differences among neurologically intact participants (though see Gauthier et al., 1999 who manipulated training, rather than looking at spontaneous individual differences as here, and did not focus on featural and spatial-relation processing, unlike here).

The design used for our fMRI study (n = 14) was analogous to the design for Experiment 1. We again manipulated whether operationally-defined face-features changed or not and orthogonally whether second-order spatial-relations changed or not, across two successive face stimuli. This was implemented in a fully factorial 2 × 2 immediate-repetition design. In this way we could test for any repetition effects on fMRI (cf. Henson et al., 2000; Kourtzi & Kanwisher, 2000; Avidan et al., 2002; Henson & Rugg, 2003; Winston et al., 2004; Eger et al., 2004a; Rotshtein et al., 2005; Grill-Spector et al., 2006) related to either repeated features or second-order spatial-relations. Importantly, the factorial design enabled us to test effects of featural and second-order spatial-relation changes independently and thus to delineate any regions that may be sensitive to only one type of information or both types. This design differs from other recent fMRI studies testing featural versus more configural processing, where typically the two putative processes were compared to each other directly (e.g. Rossion et al., 2000; Yovel & Kanwisher, 2004), rather than orthogonally as here.
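The independence of the two main effects in a 2 × 2 factorial design can be illustrated with contrast weights. In the sketch below the ordering of the four conditions and all names are assumptions made for the example; the point is only that the feature, second-order, and interaction contrasts are mutually orthogonal, so each can be tested without being confounded by the others.

```python
# Hypothetical condition order: (feature status, second-order status),
# where "rep" means repeated and "diff" means changed across the pair.
CONDITIONS = [("rep", "rep"), ("rep", "diff"), ("diff", "rep"), ("diff", "diff")]


def _sign(level):
    """+1 for a change, -1 for a repetition."""
    return 1 if level == "diff" else -1


# Main effect of feature change (change minus repeat).
FEATURE_CHANGE = [_sign(feat) for feat, _ in CONDITIONS]
# Main effect of second-order spatial-relation change (change minus repeat).
SECOND_ORDER_CHANGE = [_sign(second) for _, second in CONDITIONS]
# Interaction: element-wise product of the two main-effect contrasts.
INTERACTION = [a * b for a, b in zip(FEATURE_CHANGE, SECOND_ORDER_CHANGE)]


def apply_contrast(weights, estimates):
    """Weighted sum of per-condition estimates (e.g. parameter estimates)."""
    return sum(w * e for w, e in zip(weights, estimates))


# Orthogonality check: every pair of contrasts has a zero dot product.
assert apply_contrast(FEATURE_CHANGE, SECOND_ORDER_CHANGE) == 0
assert apply_contrast(FEATURE_CHANGE, INTERACTION) == 0
assert apply_contrast(SECOND_ORDER_CHANGE, INTERACTION) == 0
```

For instance, a voxel whose response rises only when features change would yield a non-zero value for `FEATURE_CHANGE` and zero for the other two contrasts.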

A further key aspect of our fMRI study was consideration of individual differences in the impact of second-order spatial-relations (or featural information), as separately assessed behaviorally. The behavioral measures were implemented after scanning, using an analogous behavioral person-discrimination task as in Experiment 1, plus subjective rating of recognition skill as in Experiment 2c.

Methods

Participants

14 healthy volunteers participated in the fMRI experiment (9 females, mean age 29, range 19-51y). All participants had normal or corrected visual acuity, and no concurrent or past neurological or psychiatric history. Informed consent was obtained in accord with local ethics.

Experimental procedure

An immediate pair-repetition paradigm was used (see also Kourtzi & Kanwisher, 2000; Winston et al., 2004; Rotshtein et al., 2005), with four types of successive pair being possible among an ongoing stream of stimuli. An identical set of stimuli was used as in Experiment 1, though the image size (here, 12.6 cm²) and view angle (here, 3.4 degrees) of the stimuli differed; the second-order spatial-relation changes remained on average less than 1 degree of visual angle. The 2 × 2 design was analogous to that used in the person-discrimination behavioral experiment (Exp 1), with one factor of featural change or repetition, and a second orthogonal factor of second-order spatial-relation change or repetition (Fig. 1A). For efficiency (and so as to ‘disguise’ the pair-repetition structure of the fMRI experiment, and thereby minimize attention and strategic confounds), faces were now presented in a continuous stream, with each face appearing for 500ms, separated by an ISI of 2500ms containing only a central fixation point (Fig. 1B). Half of the stimuli were non-faces. These were generated by inverting the inner feature-rectangles of the faces. Participants were instructed to maintain fixation and to indicate for each stimulus whether it was a face or ‘non-face’, using the right middle or index finger (counterbalanced between subjects). Note that we did not impose person-discrimination judgments during scanning, as we sought to avoid the fMRI results from potentially being confounded by performance or ‘task difficulty’. A further ∼30% null events of 3s length with a fixation point were added to jitter the events and allow the signal to saturate, hence optimizing the estimated response (Friston et al., 1999). The order of trials was pseudo-randomized to maximize the separation in time between repeating faces with the same features or with the same second-order spatial-relations. Each pair was presented three times in three different consecutive fMRI sessions.
Each fMRI session was divided into two epochs, separated by a 5s break in which participants got feedback regarding their reaction times and accuracy in discriminating non-faces from faces. 13 of the fMRI participants performed the person-discrimination experiment (as in Exp 1) immediately after scanning. The procedure was identical except that the image size was larger (19.5 cm²) and correspondingly the viewing angle wider (∼21.3 degrees). Note as well that for the second cohort, as opposed to the first, the stimulus set was familiar, as participants had seen each face at least 3 times before performing the person-discrimination task. 13 participants also gave a subjective rating of their face-recognition skill in daily life (as in Exp 2c) after the experimental session ended.

Scanning

A Siemens 1.5T Sonata system (Siemens, Erlangen, Germany) was used to acquire Blood Oxygenation Level Dependent (BOLD) gradient echo planar images (EPI). Images were reconstructed using Trajectory Based Reconstruction (TBR), to minimize ghosting and distortion effects in the images (Josephs et al., 2000). Thirty-two oblique axial slices (2 mm thick with 1.5 mm gap) were acquired with 64 × 64 pixels and in-plane resolution of 3 × 3 mm², 90° flip angle, 30ms echo time (TE) and 2880ms repetition time (TR). To reduce susceptibility artifacts in anterior and posterior temporal cortices, slices were tilted 30 degrees anteriorly (Deichmann et al., 2003). Subsequent to the functional scans, a T1-weighted structural image (1 × 1 × 1 mm resolution) was acquired for co-registration and for anatomical localization of the functional results.

Data Analysis

Whole-brain voxel-based analyses were performed with SPM2 (www.fil.ion.ucl.ac.uk/spm). EPI volumes were realigned and unwarped to correct for artifacts due to head movements (Andersson et al., 2001; Ashburner & Friston, 2003a). The time-series for each voxel was realigned temporally to the acquisition of the middle slice. The EPI images were normalized to a standard EPI template, corresponding to the MNI reference brain, and resampled to 3 × 3 × 3 mm³ voxels (Ashburner & Friston, 2003b). The normalized images were smoothed with an isotropic 9 mm full-width-at-half-maximum (FWHM) Gaussian kernel, in accord with the SPM approach.

Statistical analysis in SPM2 uses summary statistics with two levels (Kiebel & Holmes, 2003; Penny et al., 2003). At the first level, single-subject fMRI responses were modeled by a design matrix with regressors for each condition, depicting the onset of the second face in a pair. The onset regressors were convolved with the canonical HRF (Friston et al., 2003). Effects of no interest were also modeled, including regressors for the onsets of feedback events, the onset of the first face in each pair regardless of the subsequent stimulus, the scanning sessions, and harmonics that capture low-frequency changes of the signal to account for biological and scanner noise (equivalent to highpass filtering the data at 1/128Hz). Note that in this design the critical conditions differed only in respect to how the second face related to the first face in a pair, with the latter held constant, and the former counterbalanced across subjects (Fig. 1A). Accordingly, only the critical SPM results for the four regressors relating to the second face in each successive pair are reported. Linear contrasts pertaining to the main effects, interactions and simple effects were calculated for each subject. To allow inferences at the population level, a second-level random-effects analysis was performed, where subjects were treated as random variables. Here, images resulting from contrasts calculated for each subject were entered into a new analysis and tested for significance using a one-sample t-test. Figure 3 presents the resulting SPM Student’s t-maps thresholded at P < 0.005 uncorrected. We present only results that were consistent across subjects (i.e. in the random-effects group analysis).
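To make the idea of an HRF-convolved onset regressor concrete, the sketch below builds one in plain Python. It uses a commonly cited double-gamma response (a gamma density with shape 6 minus one sixth of a gamma density with shape 16, both with unit scale) as a stand-in for the canonical HRF. These parameters, the function names, and the once-per-scan sampling are all assumptions of the example; the exact shape, scaling, and time resolution used inside SPM2 may differ.

```python
import math


def gamma_pdf(t, shape):
    """Gamma density with unit scale; defined as zero for t <= 0."""
    if t <= 0:
        return 0.0
    return t ** (shape - 1) * math.exp(-t) / math.gamma(shape)


def canonical_hrf(t):
    """Assumed double-gamma response: early peak minus a late undershoot."""
    return gamma_pdf(t, 6.0) - gamma_pdf(t, 16.0) / 6.0


def event_regressor(onsets, n_scans, tr):
    """Predicted response for brief events, sampled once per scan.

    onsets:  event onset times in seconds (e.g. second face of each pair).
    n_scans: number of volumes in the session.
    tr:      repetition time in seconds.
    Summing one time-shifted HRF per onset is equivalent to convolving a
    train of delta functions at the onsets with the HRF.
    """
    return [
        sum(canonical_hrf(scan * tr - onset) for onset in onsets)
        for scan in range(n_scans)
    ]


# Hypothetical usage: two events, 12 s apart, sampled every 3 s.
regressor = event_regressor([0.0, 12.0], n_scans=12, tr=3.0)
```

One such regressor per condition forms a column of the first-level design matrix, and the fitted weight on each column is the parameter estimate that enters the contrasts.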

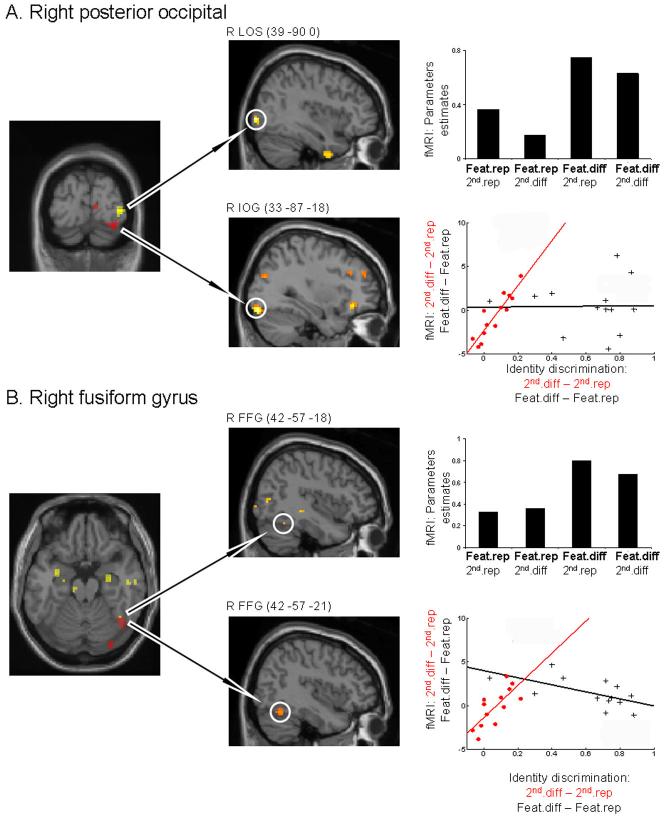

Figure 3. fMRI experiment results.

On the left, two overlaid SPM maps (yellow and red) are presented on a T1 template image of a single subject. Yellow indicates areas showing significant main effects of featural changes; red indicates areas showing significant correlations of brain responses to second-order spatial-relational change with the impact of such changes on individual participants’ behavioral person-discriminations, in a separate behavioral study (see main text). In the middle column, the corresponding SPMs are overlaid on a sagittal T1 template image, depicting each effect separately. For presentation purposes all SPM maps are thresholded at P < 0.005. In the right columns, parameter estimates taken from the maxima (MNI coordinates given in central column) are plotted for each of the four experimental conditions; and scatter plots are shown for the effects of second-order spatial-relation changes on fMRI activity (coordinates above) against the behavioral impact of such changes on person-discrimination (in red circles). For comparison, responses in relation to the behavioral impact of featural change on the fMRI response to featural change (no correlation found) are also plotted for the same maxima (black pluses). A. Separate featural and second-order spatial-relation effects in posterior occipital cortices (LOS, IOG, respectively). B. Convergence of featural and second-order spatial-relation effects (latter related to individual differences in behavioral impact of relational changes) in right FFG. Key to acronyms: 2nd.diff, changes of second-order relations; 2nd.rep, repetition of second-order relations; Feat − feature.

Correlations of behavioral outcomes (e.g. impact of second-order spatial-relations and of features on person-discrimination performed outside the scanner, calculated for individual participants just as for Exp 1) with BOLD response were tested using linear correlation (Pearson) analysis across subjects as implemented in SPM2, between the behavioral measures and the contrast images that measure effects of the same type of information within faces. Specifically, given the above behavioral results (Exp 2), we tested for any correlation between the behavioral impact of second-order spatial-relations and the size of the fMRI effect for second-order spatial-relations change minus repeat, for each individual participant. An analogous procedure was used to test for any correlations between the individual behavioral impact of feature changes and the fMRI effect of feature changes minus repeat; and between the subjective reports of face recognition skill and brain responses to changes in features or in second-order spatial-relations. The correlation scatter plots (Fig. 3) plot the parameter estimates for each participant, taken from the maxima within occipito-temporal cortex that showed a brain-behavior correlation, against the behavioral impact of second-order spatial-relations. For comparison, and to test for subthreshold effects, the parameter estimates for the feature change from the same maxima (as above) are plotted against the behavioral impact of featural change for each participant. Significance was tested using two-tailed t-tests with N-2 degrees of freedom.

Analyses of the behavioral experiments (the person-discrimination task and the subjective rating of face recognition skill) were implemented with the same statistical tests as in Experiments 1 and 2c.

Functional localizer of face-preferential voxels

To assess whether any of the fMRI effects observed in the main 2 × 2 experiment overlapped with voxels that may show preferential activation to faces versus other classes of objects, we implemented a separate functional localizer scan for 13 of our 14 participants. Stimuli for this localizer included 20 achromatic close-up photos of unfamiliar faces (Henson et al., 2003), cropped with an ellipse to exclude outer-contours, plus 20 achromatic photos of houses cropped with an ellipse to match the same outline frame as the faces. In addition, scrambled versions of the faces were generated, initially in a checkerboard grid of 13 × 10 which was then cropped by the same ellipse-outlines as the faces. Stimuli for the functional localizer were presented in a blocked-design, with 20 stimuli from the same category in each block (either faces, houses, or scrambled faces). The blocks of 20s duration were separated by a 10s fixation point presented on a grey background. Each stimulus was presented for 500ms, with an inter-stimulus interval of 1000ms during which a fixation cross on a grey background was presented. The participants’ task during the localizer blocks was simply to detect any immediate repetition, by a button press, which occurred ∼15% of the time, equally distributed across all blocked conditions. Each block was repeated 2-3 times.

The localizer fMRI data were acquired using an identical MR sequence and protocol as above and were pre-processed in the same manner. For each subject, face-preferential voxels were then delineated using the contrast [faces − (houses + scrambled faces)]. A second-level analysis then tested for consistent localisation of face-preferential voxels across subjects using a one-sample t-test. The outcome of this comparison was used as an inclusive mask (with the conventional threshold for masking of P < 0.05 uncorrected) within SPM2, to test whether any of the fMRI effects reported in the main experiment (i.e. changes of features and/or of second-order spatial-relations within faces) overlapped with face preferential voxels.

Results

Participants in the fMRI experiment performed the person-discrimination task (as in Exp 1) and gave subjective self-ratings of face recognition skill (as in Exp 2c) after the fMRI session. The results of these two new behavioral datasets replicated the results reported above in Experiments 1 and 2c. The new results for person-discrimination did not differ from those of Experiment 1 when tested using a mixed-design ANOVA with cohort as a between-subject factor and change/repeat of features or of second-order spatial-relations as within-subject factors (all interactions with the cohort factor: F1,29 < 1, P > 0.3). We next describe the results of the analysis of only the second cohort. As in Experiment 1, participants were more likely to judge two faces as reflecting two different people when features changed compared to when they repeated (F1,12 = 80.2, P < 0.001); and likewise, albeit to a lesser extent, when second-order spatial-relations changed compared to repeated (F1,12 = 7.1, P < 0.05). As in Experiment 1, the impact of feature changes on proportion of ‘different’ responses (0.61 ± 0.2 s.d.) was much larger than the impact of second-order spatial-relation changes (0.065 ± 0.08 s.d.; t12 = 7.85, P < 0.001) and these two impacts did not correlate (Spearman’s rho = -0.088, t11 = 2.88, P = 0.3). Here changes in spatial-relations led to more frequent reports of a different person when features repeated than when features changed (F1,12 = 7.8, P < 0.05).

Importantly, the relation between person-discrimination and subjective self-ratings of recognition skill in the new behavioral dataset also replicated Experiment 2c. Once again only the impact of second-order spatial-relation changes related to participants’ face recognition skill (Spearman’s rho = 0.674, t10 = 2.88, P < 0.05), not the impact of feature change (Spearman’s rho = -0.004, t10 = 0.293, P = 0.91). Taken together the new behavioral results from the scanned participants replicate the findings for the earlier behavioral tests. Person-discrimination judgments were more influenced by featural than by second-order spatial-relation changes, though the latter also contributed significantly. The impact of second-order spatial-relations (on proportion of ‘different’ response) for individual participants again correlated with self-rated face-recognition skill, but the individual impact of feature changes did not.

During scanning, participants had to monitor each stimulus to determine whether it was a face or a non-face (see Methods). This monitoring task was implemented to avoid confounding repetition-related effects with response requirements (Henson et al., 2002). Mean accuracy in discriminating faces from non-faces was 90%, and this was not affected by whether the preceding face in the stream had the same or different features, or the same or different second-order spatial-relations (all P’s > 0.1). Furthermore, participants’ performance on the face/non-face task was not related to the behavioral impact of features or of second-order spatial-relations measured above (all P’s > 0.1). Mean latency to respond to the face stimuli was 505ms, and this was unaffected by our experimental conditions (all P’s > 0.2). This confirms that any effects measured by the fMRI cannot be attributed to differences in task difficulty between the different conditions, thereby making attentional or strategic differences between conditions unlikely also, given the constant face/non-face task with equivalent performance.

Given findings from previous neuroimaging studies on face processing, and in particular on putative configural and featural aspects (Rossion et al., 2000; Lerner et al., 2001; Yovel & Kanwisher, 2004), we focus primarily on fMRI effects found within occipito-temporal cortices. But for completeness, Table 1 lists all regions anywhere in the brain surviving P < 0.001 uncorrected that involved more than 10 contiguous voxels. There was no reliable interaction between changes to features and second-order relations in any brain area. Thus we report and discuss main effects and correlations only.

Table 1. Consistent fMRI results across subjects.

| Anatomical location | H | Z-stat | MNI x | MNI y | MNI z | |
|---|---|---|---|---|---|---|
| A. Main effect of feature change: Feat.diff - Feat.rep | | | | | | |
| LOS | R | 4.15 | 39 | -90 | 0 | |
| | | 3.07 | 45 | -75 | 9 | ☺ |
| | L | 2.76£ | -42 | -60 | 3 | ☺ |
| FFG | R | 2.63£ | 42 | -54 | -21 | ☺ |
| Amyg | R | 3.22 | 30 | -9 | -21 | ☺ |
| | L | 3.47 | -30 | -6 | -27 | ☺ |
| B. Main effect of second-order configural relations change: 2nd.diff > 2nd.rep | | | | | | |
| IPS | R | 4.15 | 48 | -39 | 36 | |
| C. Correlation of behaviour and fMRI, second-order configural relations: | | | | | | |
| IOG | R | 4.31 | 33 | -87 | -18 | |
| | L | 3.85 | -45 | -75 | -12 | |
| | | 2.80£ | -45 | -81 | -6 | ☺ |
| FFG | R | 2.93£ | 42 | -57 | -21 | |
| | | 2.66£ | 45 | -54 | -21 | ☺ |
| IFG | L | 4.54 | -33 | 36 | 24 | |
| SFG | R | 4.11 | 18 | 63 | 12 | |
| | L | 3.47 | -18 | 18 | 42 | |
| CG | | 3.57 | -3 | -9 | 36 | |
| IFG/insula | R | 3.75 | 33 | 33 | -12 | |
| Putamen | L | 3.39 | -24 | -3 | 9 | |

Abbreviations: LOS, lateral occipital sulcus; FFG, posterior fusiform gyrus; OFC, orbital frontal cortex; aSTS, anterior superior temporal sulcus; MTS, middle temporal sulcus; Amyg, amygdala; CG, cingulate gyrus; aHipp, anterior hippocampus; IPS, intraparietal sulcus; IOG, inferior occipital gyrus; IFG, inferior frontal gyrus; SFG, superior frontal gyrus; H, hemisphere; L, left; R, right. ☺ peaks that overlap with face-preferential voxels across subjects (tested using an inclusive mask at P < 0.05, uncorrected). Statistical significance of peak activation, two-tailed tests: all Z > 2.98, P ≤ 0.002, aside from £ P < 0.008.

fMRI effects due to featural change were assessed by contrasting conditions in which the features repeated versus changed, irrespective of second-order spatial-relation conditions (i.e. main effect of featural change minus featural repetition). Bilateral lateral occipital sulcus (LOS) and the right FFG (Fig. 3 and Table 1A) showed relatively higher activation when features changed versus repeated within successive pairs of face stimuli in the stream. Clusters within bilateral LOS and the right FFG overlapped with face preferential voxels as identified by the separate functional localizer, suggesting these regions may have more involvement in processing faces than other object types (Table 1A and Methods).

Possible fMRI effects due to second-order spatial-relation changes (initially disregarding individual differences in the impact of such change) were assessed by comparing conditions in which second-order spatial-relations changed versus repeated, irrespective of featural conditions (i.e. main effect of spatial-relation changes minus spatial-relation repetition). Only the right intraparietal sulcus (Table 1B), outside of occipito-temporal visual cortex, showed significant increases in response when second-order spatial-relations changed compared to repeating. This activation might potentially be related to the role of right parietal cortex in more global or configural processing (cf. Robertson et al., 1988; Fink et al., 1996), as changes of second-order spatial-relations may affect global processing more than changes of featural information in faces (Farah et al., 1998). However, given our a-priori interests in occipito-temporal cortex, we do not speculate further on this.

When disregarding individual differences, there were no reliable fMRI effects for changes of second-order spatial-relations in occipito-temporal cortex. But when considering individual differences in the impact of second-order relational changes (which had been found to be crucial in the preceding behavioral experiments), some clear fMRI results were found. We analyzed the fMRI data to assess differential effects that depended on the inter-participant impact of second-order relational changes, as assessed (for n = 13) in the behavioral session of the person-discrimination task after scanning. Specifically, we tested for correlations between the individual impacts of second-order spatial-relation changes on behavioral person-discrimination (outside the scanner) and brain responses to such stimulus changes, for each individual, inside the scanner during the monitoring (face/non-face) task. We also tested any analogous brain-behavioral relations for the impact of featural changes. Reliable brain-behavior correlations were found only for the second-order spatial-relation changes. The individual behavioral impact of second-order spatial-relation change (measured subsequent to scanning) correlated positively with increased activity due to second-order spatial-relation changes (minus repeats) in bilateral IOG and in right FFG (see Fig. 3 and Table 1C). Note that these neural effects critically depended on the impact of second-order spatial-relations changes on each participant, as assessed with a separate behavioral measure, since there was no overall main effect for second-order relations changes in these regions when disregarding individual differences (see above).

Moreover, by using the separate functional localizer data to delineate face-preferential voxels, we show that part of the left IOG and the right FFG clusters overlapped with face-preferential voxels (Table 1C), indicating that these regions may be engaged more by faces than by other types of objects. No brain region showed a featural-change effect that correlated with individual differences in the (separately measured) behavioral impact of feature changes.

Turning to subjective self-ratings of face-recognition skill, remarkably we found that these also positively correlated with the effect of second-order spatial relational change for each participant, in left IOG ([-42 -84 -3]: Pearson r = 0.76, t11 = 3.89, P = 0.002). This raises the intriguing possibility that recruitment of these regions for encoding of changes in second-order relations may be associated with improved face recognition skill.

Recall that in the behavioral results, subjective ratings of recognition skill did not correlate with the impact of featural change. Likewise, there was no correlation of such subjective ratings with the subject-by-subject fMRI impact of featural changes, unlike the positive relationship found for second-order spatial-relation changes in the IOG.

Taken together, these fMRI data suggest that, in posterior occipital regions, featural and second-order relational aspects of faces may be processed separately (Fig. 3), albeit with an important role for individual differences in sensitivity to second-order spatial-relation changes. Responses of right LOS were affected by a change of features (t13 = 6.18, P < 0.001) but not by second-order spatial-relations changes (t13 = -1.4, P = 0.2), and were unrelated to the impact of second-order spatial-relations changes for particular individuals (Pearson r = 0.05, t11 = 0.16, P = 0.8). Conversely, bilateral IOG effects of second-order relational changes were reliably correlated (see above) with separately measured behavioral impact of relational change for each individual subject. Yet IOG was unaffected by featural change overall (right IOG: t13 = 0.5, P = 0.3; left IOG: t13 = 0.7, P = 0.2), and showed no reliable positive correlation with the individual behavioral impact of features (right IOG: t13 = 0.074, left IOG: t13 = -0.5, see Fig. 3). It may be noteworthy that the functionally dissociated brain responses for featural or second-order spatial-relation changes were observed in relatively posterior regions of occipital cortex. Previous studies have reported face-preferential voxels in posterior occipital cortices (Kanwisher et al., 1997; Levy et al., 2001; Hasson et al., 2003), as also observed here. One concern relates to the possibility that our effects were driven solely by low-level visual changes between the face pairs. However, we note that both the second-order spatial-relations and featural changes were small in terms of degrees of viewing angle (< 1 degree), and that the affected regions fell primarily anterior to retinotopic visual areas, rather than arising in the very earliest regions such as the calcarine sulcus. Hence it seems unlikely that these effects reflect retinotopic factors per se.

The right FFG (peak MNI: 42 -54 -21) showed two critical effects. It was affected by featural change (t13 = 3.1, P < 0.005), but also showed a second-order spatial-relations influence that was related to the individual impact of relational changes on individuals’ person-discrimination behavior (Pearson r = 0.7, t11 = 3.25, P < 0.005). These two results indicate that, unlike more posterior regions, the right FFG may be involved in both featural and (individually varying) second-order relational processing, with these two types of information potentially converging here. Note also that the right FFG cluster overlapped with face-preferential voxels.
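As a reading aid for the correlations reported above, a Pearson r computed over n participants corresponds to a t statistic with n - 2 degrees of freedom via the standard relation t = r * sqrt(n - 2) / sqrt(1 - r^2). The sketch below applies this relation with n = 13 (the behavioral sample, hence t11). The function name and region labels are ours, introduced only for illustration, and because the reported r values are rounded, the recomputed t values can be expected to match the reported ones only approximately.

```python
from math import sqrt


def t_from_r(r, n):
    """Convert a Pearson r over n observations to t with n - 2 degrees of freedom."""
    if n < 3:
        raise ValueError("need at least 3 observations")
    if not -1.0 < r < 1.0:
        raise ValueError("r must lie strictly between -1 and 1")
    return r * sqrt(n - 2) / sqrt(1.0 - r * r)


# Rounded r values as reported in the text; n = 13 gives 11 degrees of freedom.
reported = [
    ("left IOG vs. self-rated skill", 0.76),
    ("right FFG vs. behavioral impact", 0.70),
    ("right LOS vs. behavioral impact", 0.05),
]
for label, r in reported:
    print(f"{label}: r = {r:.2f}, t(11) = {t_from_r(r, 13):.2f}")
```

The recomputed values come out close to the reported t11 of 3.89, 3.25 and 0.16, with small discrepancies attributable to rounding of r.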

General Discussion

In a series of behavioral experiments, and an fMRI experiment, we studied the role and impact of featural face information (eyes, nose and mouth regions) and of second-order spatial-relations among these same features (Maurer et al., 2002). Unlike recent studies (Le Grand et al., 2001; Yovel & Kanwisher, 2004) that sought to ‘match’ the discrimination difficulty of featural and second-order spatial information using artificial ranges, we took the alternative approach of manipulating our stimuli according to the ranges within the different photos of real natural faces. Hence, variability in eyes, nose and mouth features, and likewise the variability in the second-order spatial-relations of these features, matched the variability of the original real faces in these operationally-defined aspects.

In an on-line person-discrimination task (Exp 1), we found that changes in the features had a bigger overall influence on the proportion of ‘different-person’ responses; but that second-order spatial-relations changes also had some impact, with these influences varying from one participant to another. Experiments 2a-c applied three very different measures of face-recognition ability to participants from Experiment 1. Experiment 2a assessed incidental learning of new faces, as tested by yes-no recognition after delays of ∼ 45 minutes (and more than 250 intervening face stimuli). Experiment 2b assessed familiarity judgments for famous faces intermingled with unknown faces. Experiment 2c obtained ratings of participants’ estimates of their own face-recognition skill in daily life. Thus, whereas Experiments 2a-b used objective measures of recognition sensitivity (d’), Experiment 2c provided a purely subjective self-rating.

Remarkably, we found that the behavioral impact of second-order spatial relational changes on person-discrimination in Experiment 1, for each participant who also underwent Experiment 2, correlated positively with the three separate measures of face-recognition (see Figs. 2D-F). This provides an entirely new line of evidence, from individual differences among neurologically intact participants, for a role of second-order relational processing in face-recognition skills. The impact of second-order spatial-relations on each participant’s person-discriminations in Experiment 1 did not correlate with the impact of featural changes. Moreover, the latter showed no relation to incidental learning of new faces, nor to subjective ratings of face-recognition skill, although it did show some correlation with familiarity judgments for famous faces (indicating that high impact of features may become an advantage for over-learned individuals, in addition to second-order spatial-relations).

Turning to our fMRI data (Exp 3), participants now had solely to monitor the face stimuli (for non-face deviants), while we varied whether features and/or second-order spatial-relations repeated or changed across successive face stimuli in an ongoing stream. Featural change (versus repetition) led to reliable activation in bilateral LOS and in right FFG, with part of these clusters overlapping with face-preferential voxels, as determined from a separate functional localizer. These results may accord with recent reports suggesting that facial features are processed in lateral occipital cortex (LOC, Lerner et al., 2001; Yovel & Kanwisher, 2004). Note that LOC is a functionally defined region, which typically includes the lateral and ventral banks of the occipital cortex (Malach et al., 2002). LOC is hypothesized to be a complex of several sub-regions with sub-parts preferentially responsive to faces (Malach et al., 2002). Our results suggest that LOC subdivisions may reflect processing demands, namely featural versus second-order spatial-relations, and not just stimulus category (Malach et al., 2002). Our data suggest that these two processing types overlapped for some clusters with face-preferential voxels, but may not always be specific to faces (as shown by Lerner et al., 2001; Yovel & Kanwisher, 2004).

In accord with a recent study that reported no overall changes in activation for second-order spatial-relations versus featural properties (Yovel & Kanwisher, 2004), here we likewise found no overall effect of second-order spatial relational change versus repetition, when ignoring individual differences. However, when taking into account individual differences in the impact of second-order spatial-relations changes on person-discrimination (as assessed in the separate behavioral session), we found that bilateral IOG (and also right FFG) showed activations for second-order spatial-relations change that correlated with the individual participants’ behavioral impact of such changes. This outcome suggests that neurologically intact individuals vary in the extent to which they rely on second-order spatial-relations information from faces when discriminating people. Moreover, here we found that this variation can be reflected in their brain responses when monitoring faces. Furthermore, we also found rather remarkably that this fMRI effect correlated with self-ratings of face-recognition skill in daily life, for the IOG.

The present study points to the importance of considering individual differences in face processing among the normal population. We found that the behavioral impact of second-order relational changes varied between individuals in a way that related to three separate measures of face-recognition skill (incidental learning of new faces; familiarity judgments for famous faces; and self-ratings of face-recognition skill in daily life). Moreover, variation in the behavioral impact of second-order relational changes also related to brain activations for such changes, in bilateral IOG, and in right FFG. It may be worth noting that within these peaks of correlation, some participants showed increased activation when spatial-relations repeated compared to when they changed (i.e. an inverse of the usual repetition-decrease phenomenon). Increased fMRI responses following face repetitions in occipito-temporal cortex have been observed in some other studies (e.g. Henson & Rugg, 2003; George et al., 1999). Furthermore, it has been suggested that stimulus repetition may lead to fMRI increases or decreases, depending on expertise and initial processing difficulty (Kourtzi et al., 2005). Decreases following stimulus repetition may occur if processing is easy (e.g., with salient stimuli); while increases may occur if the task is difficult and performance is initially poor (Kourtzi et al., 2005). This perspective may fit nicely with our results, as here repetition of second-order spatial-relations led to a localized fMRI increase for those subjects that were relatively poor at processing spatial-relational changes within faces (as shown by the small impact of such changes on their person-discrimination), unlike subjects who were relatively good at processing this stimulus dimension (as shown by the larger impact for them). It may be interesting to test in future research whether supervised learning could invert the fMRI repetition effect of second-order spatial-relations within faces for the less ‘expert’ participants.

Our results may relate to previous findings emphasizing the role of second-order spatial relational processing (more than featural processing) in face-processing deficits such as prosopagnosia (Farah et al., 1995; de Gelder & Rouw, 2000a; Saumier et al., 2001; Barton et al., 2003; Joubert et al., 2003). Although the classic literature on prosopagnosia dealt with neurological patients, there has been increasing interest in the possibility that prosopagnosic-like deficits may exist within the otherwise ‘normal’ population, reflecting a developmental disorder (Barton et al., 2003; Behrmann & Avidan, 2005; Duchaine & Nakayama, 2006). None of our present participants reported face deficits in daily life; and none was within an abnormal range for recognizing famous or recently exposed faces (see Exp. 2). Nevertheless, our results indicate that processing of second-order spatial-relations, and face recognition, may be related skills that vary within the ‘normal’ population. Indeed, none of the most critical findings here (i.e. the correlations between different behavioral measures; or between a behavioral measure and an fMRI outcome) could have been obtained without considering individual differences. Identifying the causes of these individual differences (which might be genetic, experience-dependent, or both) requires future research. This may also address whether congenital or developmental prosopagnosics simply fall at one extreme end of a face-processing-skill continuum; or instead differ qualitatively from normal variation in sensitivity to second-order spatial-relations (see Behrmann & Avidan, 2005; Duchaine & Nakayama, 2006).

Individual differences in the impact of featural changes, rather than second-order spatial-relations, did not correlate with any other measure here, with the exception of familiarity judgments for famous faces. It may be that features do eventually become recognizable in over-learned faces. This accords with anecdotal reports that prosopagnosic patients occasionally recognize faces by relying on distinctive facial features (Bentin et al., 1999). Other prosopagnosic patients may show deficits in featural and not just second-order relational processing (Yovel & Duchaine, 2006). The in-principle sufficiency of well-learned featural processing for recognition of highly familiar faces accords with evidence from machine learning, where successful algorithms often rely on featural rather than second-order spatial-relation information (Hancock et al., 1998). Recognition performance with such approaches typically depends closely on the number of face exemplars the system has received for a particular individual during training (Burton et al., 2005). However, we found here that inter-participant differences in the impact of second-order relations showed stronger links to recognition performance (and to brain activity in IOG and FFG) than did individual differences in the impact of featural changes.