Abstract

The pretest–posttest study is commonplace in numerous applications. Typically, subjects are randomized to two treatments, and response is measured at baseline, prior to intervention with the randomized treatment (pretest), and at a prespecified follow-up time (posttest). Interest focuses on the effect of treatments on the change between mean baseline and follow-up response. Missing posttest response for some subjects is routine, and disregarding missing cases can lead to invalid inference. Despite the popularity of this design, a consensus on an appropriate analysis when no data are missing, let alone for taking into account missing follow-up, does not exist. Under a semiparametric perspective on the pretest–posttest model, in which limited distributional assumptions on pretest or posttest response are made, we show how the theory of Robins, Rotnitzky and Zhao may be used to characterize a class of consistent treatment effect estimators and to identify the efficient estimator in the class. We then describe how the theoretical results translate into practice. The development not only shows how a unified framework for inference in this setting emerges from the Robins, Rotnitzky and Zhao theory, but also provides a review and demonstration of the key aspects of this theory in a familiar context. The results are also relevant to the problem of comparing two treatment means with adjustment for baseline covariates.

Keywords: Analysis of covariance, covariate adjustment, influence function, inverse probability weighting, missing at random

1. INTRODUCTION

1.1 Background and Motivation

The so-called pretest–posttest trial arises in a host of applications. Subjects are randomized to one of two interventions, denoted here by “control” and “treatment,” and the response is recorded at baseline, prior to intervention (pretest response), and again after a prespecified follow-up period (posttest response). We use the terms “baseline/pretest” and “follow-up/posttest” interchangeably. The effect of interest is usually stated as “difference in change of (mean) response from baseline to follow-up between treatment and control.”

For instance, in studies of HIV disease, a common objective is to determine whether the change in measures of immunologic status such as CD4 cell count from baseline to some subsequent time following initiation of antiretroviral therapy differs across treatments. Depressed CD4 counts indicate impairment of the immune system, so larger positive changes are thought to reflect more effective treatment. To exemplify this situation, we consider data from 2139 patients from AIDS Clinical Trials Group (ACTG) protocol 175 (Hammer et al., 1996), a study that randomized patients to four antiretroviral regimens in equal proportions. The findings of ACTG 175 indicated that zidovudine (ZDV) monotherapy was inferior to the other three therapies [ZDV+didanosine (ddI), ZDV+zalcitabine (ddC), ddI alone], which showed no differences on the basis of the primary study endpoint of progression to AIDS or death. Accordingly, we consider two groups: subjects who received ZDV alone (control) and those who received any of the other three therapies (treatment). As is routine in HIV clinical studies, measures such as CD4 count were collected on all participants periodically throughout, and interest also focused on secondary questions regarding changes in immunologic and virologic status. An important secondary endpoint was change in CD4 count from baseline to 96±5 weeks.

To formalize this situation, let Y1 and Y2 denote baseline and follow-up response (e.g., baseline and 96±5 week CD4 count) and let Z = 0 or 1 indicate assignment to control or treatment, respectively. Because, under proper randomization, pretest mean response should not differ by intervention, it is reasonable to assume that E(Y1|Z = 0) = E(Y1|Z = 1) = E(Y1) = µ1. Letting E(Y2|Z) = µ2 + βZ, the desired effect may then be expressed as

| (1) |

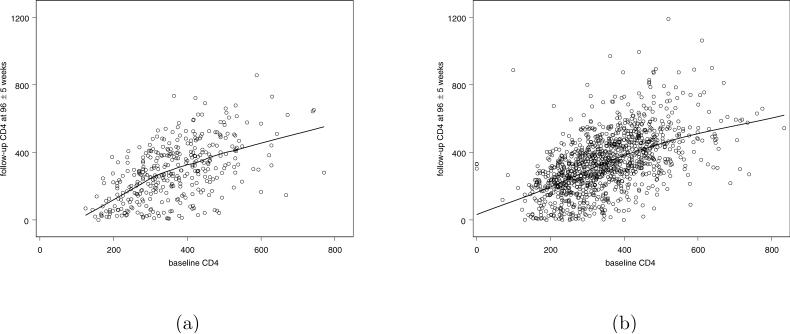

and interest focuses on the parameter β. A number of ways to make inference on β have been proposed. Because the question is usually posed in terms of difference in change from baseline, analysis is often based on the “paired t test” estimator for β found by taking the difference of the sample averages of (Y2 − Y1) in each group. The second expression in (1) involves only posttest treatment means, suggesting estimating β in the spirit of the two-sample t test by the difference of Y2 sample means for each treatment (ignoring baseline responses altogether). However, if baseline response is correlated with change in response or posttest response itself, intuition suggests taking this into account. For continuous response, this has led many researchers to advocate the use of analysis of covariance (ANCOVA) techniques, in which one estimates β directly by fitting the linear model E(Y2|Y1, Z) = α0 + α1Y1 + βZ. A variation is to include an interaction term involving Y1Z; here, β is estimated as the coefficient of (Z − Z̄) in the regression of Y2 − Ȳ2 on (Y1 − Ȳ1), (Z − Z̄) and (Y1 − Ȳ1)(Z − Z̄), where the overbars denote overall sample averages. Singer and Andrade (1997) mentioned a “generalized estimating equation” (GEE) approach (see also Koch, Tangen, Jung and Amara, 1998), where (Y1,Y2)^T is viewed as a multivariate response vector with mean (µ1,µ2 + βZ)^T and standard GEE methods are used to make inference on β. Yang and Tsiatis (2001) and Leon, Tsiatis and Davidian (2003) provided further details on all of these methods. The two-sample t test approach implicitly assumes pre- and posttest responses are uncorrelated, which may be unrealistic, while the paired t test and ANCOVA evidently assume linear dependence of Y1 and Y2, which may not hold in practice; for example, Figure 1 shows baseline and follow-up CD4 counts in ACTG 175 and suggests a mild curvilinear relationship between them in each group.
Numerous authors (e.g., Brogan and Kutner, 1980; Crager, 1987; Laird, 1983; Stanek, 1988; Stein, 1989; Follmann, 1991; Yang and Tsiatis, 2001) have studied these “popular” procedures under various assumptions, yet no general consensus has emerged regarding a preferred approach, providing little guidance for practice.
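As a rough illustration of the popular estimators described above, the following Python sketch computes the two-sample, paired and ANCOVA (no interaction) estimates on simulated data. The data-generating model (linear in Y1, true β = 2, randomization probability 0.5), the sample size and all function names are assumptions made purely for illustration; nothing here is taken from ACTG 175.

```python
import random

def mean(xs):
    return sum(xs) / len(xs)

def two_sample(y2, z):
    """Difference of posttest sample means; baseline is ignored."""
    trt = [y for y, zi in zip(y2, z) if zi == 1]
    ctl = [y for y, zi in zip(y2, z) if zi == 0]
    return mean(trt) - mean(ctl)

def paired(y1, y2, z):
    """Difference between groups of the mean change Y2 - Y1."""
    return two_sample([b - a for a, b in zip(y1, y2)], z)

def ancova(y1, y2, z):
    """Coefficient of Z in the least-squares fit of Y2 on (1, Y1, Z)."""
    m1, m2, mz = mean(y1), mean(y2), mean(z)
    a = [v - m1 for v in y1]          # centered baseline
    b = [v - mz for v in z]           # centered treatment indicator
    r = [v - m2 for v in y2]          # centered posttest response
    saa = sum(u * u for u in a)
    sbb = sum(u * u for u in b)
    sab = sum(u * v for u, v in zip(a, b))
    sar = sum(u * v for u, v in zip(a, r))
    sbr = sum(u * v for u, v in zip(b, r))
    # Solve the 2x2 normal equations for the coefficient of Z.
    return (saa * sbr - sab * sar) / (saa * sbb - sab * sab)

def simulate(n=4000, beta=2.0, delta=0.5, seed=1):
    """Hypothetical trial: Y2 = 1 + 0.8*Y1 + beta*Z + noise."""
    rng = random.Random(seed)
    y1 = [rng.gauss(0, 1) for _ in range(n)]
    z = [1 if rng.random() < delta else 0 for _ in range(n)]
    y2 = [1 + 0.8 * a + beta * zi + rng.gauss(0, 1) for a, zi in zip(y1, z)]
    return y1, y2, z

y1, y2, z = simulate()
estimates = {
    "two-sample": two_sample(y2, z),
    "paired": paired(y1, y2, z),
    "ANCOVA": ancova(y1, y2, z),
}
for name, est in estimates.items():
    print(f"{name:>10}: {est:.3f}")
```

With full data and proper randomization, all three estimates should land near the assumed true value; they differ in precision rather than in what they estimate.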

Fig. 1.

CD4 counts after 96±5 weeks versus baseline CD4 counts for complete cases for (a) ZDV alone and (b) the combination of ZDV+ddI, ZDV+ddC or ddI alone, ACTG 175. Solid lines were obtained using the Splus function loess() (Cleveland, Grosse and Shyu, 1993).

A further complication facing the data analyst, particularly in lengthy studies, is that of missing follow-up response Y2 for some subjects. In the ACTG 175 data, for example, although baseline CD4 (Y1) is available for all 2139 participants, 37% are missing CD4 count at 96±5 weeks (Y2) due to dropout from the study. A common approach in this situation is to undertake a complete-case analysis, applying one of the above techniques only to the data from subjects for whom both the pre- and posttest responses are observed. In the GEE method, one may in fact include data from all subjects by defining the “multivariate response” for those with missing Y2 to be simply Y1, with mean µ1. However, as is well known, for all of these approaches, unless the data are missing completely at random (Rubin, 1976), which implies that missingness is not associated with any observed or unobserved subject characteristics, these strategies may yield biased inference on β.

Often, baseline demographic and physiologic characteristics X1, say, are collected on each participant. Moreover, during the intervening period from baseline to follow-up, additional covariate information X2, say, including intermediate measures of the response, may be obtained. In ACTG 175, at baseline, CD4 count (Y1) and covariates (X1), including weight; age; indicators of intravenous drug use, HIV symptoms, prior experience with antiretroviral therapy, hemophilia, sexual preference, gender and race; CD8 count (another measure of immune status); and Karnofsky score (an index that reflects a subject's ability to perform activities of daily living), were recorded for each participant. In addition, CD4 and CD8 counts and treatment status (on/off assigned treatment) were recorded intermittently between baseline and 96±5 weeks (X2). Missingness at follow-up is often associated with baseline response and baseline and intermediate covariates, and this relationship may be differential by intervention. For example, HIV-infected patients who are worse off at baseline as suggested by low baseline CD4 count may be more likely to drop out, particularly if they receive the less effective treatment. Moreover, HIV-infected patients may base a decision to drop out on post-baseline intermediate measures of immunologic or virologic status (e.g., CD4 counts), which themselves may reflect the effectiveness of their assigned therapy. Here, the assumption that follow-up is missing at random (MAR; Rubin, 1976), associated only with these observable quantities and not the missing response, may be reasonable.

If one is willing to adopt the MAR assumption, methods that take appropriate account of the missingness should be used to ensure valid inference. A standard approach to missing data problems is maximum likelihood, which in the pretest–posttest setting with Y2 MAR involves full (parametric) specification of the joint distribution of V = (X1,Y1,X2,Y2,Z). Alternatively, adaptation of popular estimators such as ANCOVA to handle MAR Y2 on a case-by-case basis may be possible. Maximum likelihood techniques are known to suffer potential sensitivity to deviations from modeling assumptions, and neither approach has been widely applied by practitioners in the pretest–posttest context.

In summary, although missing follow-up response is commonplace in the pretest–posttest setting, there is no widely accepted or used methodology for handling it. In this paper we demonstrate how a unified framework for pretest–posttest analysis under MAR may be developed by exploiting the results in a landmark paper by Robins, Rotnitzky and Zhao (1994).

1.2 Semiparametric Models, Influence Functions, and Robins, Rotnitzky and Zhao

A popular modeling approach that acknowledges concerns over sensitivity to parametric assumptions is to take a semiparametric perspective. A semiparametric model may involve both parametric and nonparametric components, where the nonparametric component represents features on which the analyst is unwilling or unable to make parametric assumptions, and interest may focus on a parametric component or on some functional of the nonparametric component. For example, in a regression context one may adopt a parametric model for the conditional expectation of a continuous response given covariates and seek inference on the model parameters but be uncomfortable assuming the full conditional distribution is normal, instead leaving it unspecified. Under the semiparametric model for the pretest–posttest trial we consider, features of the joint distribution of V = (X1,Y1,X2,Y2,Z) beyond the independence of (X1,Y1) and Z induced by randomization are left unspecified and thus constitute the nonparametric component, and interest focuses on the functional β of this distribution defined in (1). When Y2 is MAR, this semiparametric view not only offers protection against incorrect assumptions on V, but allows us to exploit the theory of Robins, Rotnitzky and Zhao (1994) to deduce estimators for β. These authors derived an asymptotic theory for inference in general semiparametric models with data MAR that may be used to identify a class of consistent estimators for parametric components or such functionals, as we now outline.

Robins, Rotnitzky and Zhao (1994) restricted attention to estimators that are regular and asymptotically linear. Regularity is a technical condition that rules out “pathological” estimators with undesirable local properties (Newey, 1990), such as the “superefficient” estimator of Hodges (e.g., Casella and Berger, 2002, page 515). Generically, an estimator β̂ for β (p × 1) in a parametric or semiparametric statistical model for a random vector W based on i.i.d. data Wi, i = 1,...,n, is asymptotically linear if it satisfies, for a function φ(W),

$$
n^{1/2}(\hat{\beta} - \beta_0) = n^{-1/2}\sum_{i=1}^{n} \varphi(W_i) + o_p(1), \tag{2}
$$

where β0 is the true value of β generating the data, E{φ(W)} = 0, E{φT(W)φ(W)} < ∞ and expectation is with respect to the true distribution of W. The function φ(W) is referred to as the influence function of the estimator β̂, as to first order φ(W) is the influence of a single observation on β̂ in the sense given in Casella and Berger (2002, Section 10.6.4). An estimator that is both regular and asymptotically linear (RAL) with influence function φ(W) is consistent and asymptotically normal with asymptotic covariance matrix E{φ(W)φT(W)}. Although not all consistent estimators need be RAL, almost all reasonable estimators are. For RAL estimators, there exists an influence function φeff(W) such that E{φ(W)φT(W)} − E{φeff(W)φeffT(W)} is nonnegative definite for any influence function φ(W); φeff(W) is referred to as the efficient influence function and the corresponding estimator is called the efficient estimator. In fact, for any regular estimator, asymptotically linear or not, with asymptotic covariance matrix Σ, Σ − E{φeff(W)φeffT(W)} is nonnegative definite; thus, the best estimator in the sense of “smallest” asymptotic covariance matrix is RAL, so that restricting attention to RAL estimators is not a limitation. We use the term “influence function” unqualified to mean the influence function of an RAL estimator.
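As a small worked example of asymptotic linearity (a standard textbook case, not one treated in this paper), take scalar W, let β = E(W) and let the estimator be the sample mean. The defining expansion then holds exactly, with no remainder term:

$$
n^{1/2}(\bar{W} - \beta_0) = n^{-1/2}\sum_{i=1}^{n}(W_i - \beta_0),
\qquad \varphi(W) = W - \beta_0,
\qquad E\{\varphi(W)\} = 0,
\qquad E\{\varphi^2(W)\} = \operatorname{var}(W).
$$

The asymptotic variance E{φ²(W)} = var(W) agrees with the usual central limit theorem for the sample mean, which is the sense in which the influence function determines the large-sample behavior of the estimator.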

As indicated by (2), there is a relationship between influence functions and consistent and asymptotically normal estimators; thus, by identifying influence functions, one may deduce corresponding estimators. In missing data problems, Robins, Rotnitzky and Zhao (1994) distinguished between full-data and observed-data influence functions. “Full data” refers to the data that would be observed if there were no missingness; in the pretest–posttest setting the full data are V . Accordingly, full-data influence functions correspond to estimators that could be calculated if full data were available and are hence functions of the full data. “Observed data” refers to the data observed when some components of the full data are potentially missing; hence observed-data influence functions correspond to estimators that can be computed from observed data only and are functions of the observed data. For a general semiparametric model, the pioneering contribution of Robins, Rotnitzky and Zhao (1994) was to characterize the class of all observed-data influence functions when data are MAR, including the efficient influence function, and to demonstrate that observed-data influence functions may be expressed in terms of full-data influence functions. Because for many popular semiparametric models the form of full-data influence functions is known or straightforwardly derived, this provides an attractive basis for identifying estimators when data are MAR, including the efficient one.

In summary, the Robins, Rotnitzky and Zhao (1994) theory provides a series of steps for deducing estimators for a semiparametric model of interest when data are MAR: (1) Characterize the class of full-data influence functions, (2) characterize the observed data under MAR and apply the Robins, Rotnitzky and Zhao theory to obtain the class of observed-data influence functions, including the efficient one and (3) identify observed-data estimators with influence functions in this class. In this paper, for the semiparametric pretest–posttest model when Y2 is MAR, β is a scalar (p = 1), and we carry out each of these steps and show how they lead to closed-form estimators for β suitable for routine practical use. Interestingly, despite the ubiquity of the pretest–posttest study and the simplicity of the model when no data are missing, to our knowledge explicit application of this powerful theory to pretest–posttest inference with data MAR with an eye toward developing practical estimators has not been reported.

1.3 Objectives and Summary

The goals of this paper are twofold. The first main objective is to develop accessible practical strategies for inference on β in a semiparametric pretest–posttest model with follow-up data MAR by using the fundamental theory of Robins, Rotnitzky and Zhao (1994) as described above. Although this theory is well known to experts, many researchers have only passing familiarity with its essential elements. Thus, the second main goal of this paper is to use the pretest–posttest problem as a backdrop to provide a detailed and mostly self-contained demonstration of application of the theory of semiparametric models and the powerful, general Robins, Rotnitzky and Zhao results in a concrete, familiar context. This account hopefully will serve as a resource to researchers and practitioners wishing to appreciate the scope and underpinnings of the Robins, Rotnitzky and Zhao theory by systematically tracing the key concepts and steps involved in its application and explicating how it can lead to practical insight and tools.

In Section 2 we summarize the semiparametric pretest–posttest model and outline how the class of full-data influence functions for estimators for β may be derived. In Section 3 we characterize the observed data when Y2 is MAR, review the essential Robins, Rotnitzky and Zhao (1994) results and apply them to derive the class of observed-data influence functions. Sections 4 and 5 present strategies for constructing estimators based on observed-data influence functions, and we demonstrate the new estimators by application to the ACTG 175 data in Section 6. Results and practical implications are presented in the main narrative; technical supporting material and details of derivations are given in the Appendix.

As in any missing-data context, validity of the assumption of MAR follow-up response is critical and is best justified with availability of rich baseline and intervening information. We assume throughout that the analyst is well equipped to invoke this assumption.

2. MODEL AND FULL-DATA INFLUENCE FUNCTIONS

2.1 Semiparametric Pretest–Posttest Model

First, consider the full data (no missing posttest response). Suppose each subject i = 1,...,n is randomized to treatment with known probability δ, so Zi = 0 or 1 as i is assigned to control or treatment; in ACTG 175, δ = 0.75. Then Y1i and Y2i are i's pretest and posttest responses (baseline and 96±5 week CD4 in ACTG 175), X1i is i's vector of baseline covariates and X2i is the vector of additional covariates collected on i after intervention but prior to follow-up, which may include intermediate measures of response. Assuming subjects' responses evolve independently, the full data on i are Vi = (X1i,Y1i,X2i,Y2i,Zi), i.i.d. across i with density p(v) = p(x1,y1,x2,y2,z); we often suppress the subscript i for brevity. From (1), interest focuses on β = µ2(1) − µ2(0), where µ2(c) = E(Y2|Z = c), c = 0, 1; throughout, expectation and variance are with respect to the true distribution of V.

From Section 1.2, under a semiparametric perspective the analyst may be unwilling to make specific parametric assumptions on p(x1,y1,x2,y2,z) such as normality or equality of variances of Y1 and Y2. For example, in HIV research it is customary to assume that CD4 counts are normally distributed on some transformed scale and to carry out analyses on this scale; however, as there is no consensus on an appropriate transformation, methods that do not require this assumption are desirable. Thus, in arguments to deduce the form of full- and observed-data influence functions here and in Sections 3 and 4, we do not impose any specific assumptions beyond independence of (X1,Y1) and Z induced by randomization and assumptions on the form of the mechanism governing missingness. As our objective is to outline the salient features of the arguments without dwelling on technicalities, we assume needed moments, derivatives and matrix inverses exist without comment.

2.2 Full-Data Influence Functions

As presented in Section 1.2, our first step in applying the Robins, Rotnitzky and Zhao (1994) theory is to characterize the class of all full-data influence functions for RAL estimators for β; these will be functions of V . This may be accomplished by appealing to the theory of semiparametric models (e.g., Newey, 1990; Bickel, Klaassen, Ritov and Wellner, 1993), which provides a formal framework for characterizing influence functions for RAL estimators in such models, including the efficient influence function. The theory takes a geometric perspective, where, generically, influence functions based on data V for RAL estimators for a p-dimensional parameter or functional β in a statistical model for V are viewed as elements of a particular “space” of mean-zero, p-dimensional functions of V for which there is a certain relationship between the distance of any element of the space from the origin and the covariance matrix of the function. From (2), as the covariance matrix of an influence function is equal to the asymptotic covariance matrix of the corresponding estimator, the search for estimators with small covariance matrices, especially the efficient estimator, may thus be focused on functions in this space and guided by geometric distance considerations.

In Appendix A.1 we first sketch an argument that demonstrates that any RAL estimator has a unique influence function, supporting the premise of working with influence functions. We then review familiar results for fully parametric models and show how they may be regarded from this geometric perspective. Finally, we indicate how this perspective is extended to handle semiparametric models. The key results are a representation of the form of all influence functions for RAL estimators in a particular model and a convenient characterization of the efficient influence function that corresponds to the efficient estimator.

In Appendix A.2 we apply these results to show that all full-data influence functions for estimators for β in the semiparametric pretest–posttest model must be of the form

| (3) |

where h(c)(X1,Y1), c = 0, 1, are arbitrary functions with var{h(c)(X1,Y1)} < ∞. Technically, the influence function (3) depends on µ2 and β through their true values. As is conventional, here and in the sequel we write influence functions as functions of parameters, which highlights their practical use as the basis for deriving estimators, shown in Section 5. From (3), influence functions and hence all RAL estimators for β are functions only of (X1,Y1,Y2,Z) and hence do not depend on X2. This is intuitively reasonable; because X2 is a post-intervention covariate, we would not expect it to play a role in estimation of β when Y2 is observed on all subjects. In Section 3, however, we will observe that when Y2 is MAR for some subjects, such covariates are important not only for validating the MAR assumption, but for increasing efficiency of estimation of β, as discussed in Robins, Rotnitzky and Zhao (1994, page 848).

The results in Appendix A.2 also show that the efficient influence function, that with smallest variance among all influence functions in class (3), is found by taking

| (4) |

Thus, if full data were available in ACTG 175, the optimal estimator for β would involve the true regression of 96±5 week CD4 on pretest CD4 and other baseline covariates listed in Section 1.1. Leon, Tsiatis and Davidian (2003) identified class (3) when no intervening covariate X2 is observed and showed that influence functions for the popular estimators discussed in Section 1.1 are members; for example, the two-sample t test estimator

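$$
\hat{\beta} = \frac{\sum_{i=1}^{n} Z_i Y_{2i}}{\sum_{i=1}^{n} Z_i} - \frac{\sum_{i=1}^{n} (1 - Z_i) Y_{2i}}{\sum_{i=1}^{n} (1 - Z_i)} \tag{5}
$$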

has influence function (3) with h(c) ≡ 0, c = 0, 1 (see Appendix A.2). Thus, popular estimators are RAL and valid under the semiparametric model, and hence, contrary to widespread belief, are consistent and asymptotically normal even if Y1 and Y2 are not normally distributed. Leon, Tsiatis and Davidian (2003) also showed that none of the popular estimators has the efficient influence function, suggesting that improved estimators are possible, and proposed estimators based on (4) that offer dramatic efficiency gains over popular methods.
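To make the link between an influence function and a standard error concrete, here is a minimal Python sketch for the two-sample estimator. It assumes that, with h(c) ≡ 0, the influence function reduces to the familiar form Z(Y2 − m1)/δ − (1 − Z)(Y2 − m0)/(1 − δ), where m1 and m0 are the two posttest means. The function name, the plug-in of the sample proportion for δ, and the exponential data-generating model are illustrative assumptions.

```python
import math
import random

def two_sample_with_se(y2, z):
    """Two-sample estimate of beta and a standard error from its influence function.

    Uses phi = Z(Y2 - m1)/d - (1 - Z)(Y2 - m0)/(1 - d), i.e. h == 0,
    with the sample means m1, m0 and the sample proportion d plugged in.
    """
    n = len(z)
    n1 = sum(z)
    d = n1 / n
    m1 = sum(y for y, zi in zip(y2, z) if zi == 1) / n1
    m0 = sum(y for y, zi in zip(y2, z) if zi == 0) / (n - n1)
    phi = [zi * (y - m1) / d - (1 - zi) * (y - m0) / (1 - d)
           for y, zi in zip(y2, z)]
    var_hat = sum(p * p for p in phi) / n   # estimates E{phi^2}
    return m1 - m0, math.sqrt(var_hat / n)

rng = random.Random(7)
n = 2000
z = [1 if rng.random() < 0.5 else 0 for _ in range(n)]
# Deliberately non-normal (exponential) responses; the true beta is 1.0 here.
y2 = [rng.expovariate(1.0) + 1.0 * zi for zi in z]
beta_hat, se = two_sample_with_se(y2, z)
print(f"beta_hat = {beta_hat:.3f}, SE = {se:.3f}")
```

With the sample proportion plugged in, this standard error is essentially the unpooled two-sample one, and nothing in the calculation relies on normality of the responses.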

In fact, (3) is the difference of the forms of all influence functions for estimators for the treatment means µ2(1) = E(Y2|Z = 1) and µ2(0) = E(Y2|Z = 0), respectively, which may themselves be deduced separately by arguments analogous to those in Appendix A.2. In Appendix A.3 we argue that, for the purposes of identifying observed-data estimators for β, it suffices to identify observed-data influence functions for estimators for µ2(1) and µ2(0) separately. We thus focus for simplicity in Section 3 on estimation of µ2(1).

3. OBSERVED-DATA INFLUENCE FUNCTIONS

3.1 Semiparametric Pretest–Posttest Model with MAR Follow-Up Response

Suppose now that Y2 is missing for some subjects, with all other variables observed, and define R = 0 or 1 as Y2 is missing or observed. Then the observed data for subject i are Oi = (X1i,Y1i,X2i,Ri,RiY2i,Zi), i.i.d. across i. We represent the assumption that Y2 is MAR as

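$$
P(R = 1 \mid X_1, Y_1, X_2, Y_2, Z) = P(R = 1 \mid X_1, Y_1, X_2, Z) = \pi(X_1, Y_1, X_2, Z) \ge \varepsilon > 0, \tag{6}
$$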

reflecting the reasonable view for a pretest–posttest trial that there is a positive probability of observing Y2 for any subject. Equation (6) formalizes that missingness does not depend on the unobserved Y2, but may be associated with baseline and intermediate characteristics and be differential by intervention, the latter highlighted by the equivalent representation

$$
\pi(X_1, Y_1, X_2, Z) = Z\,\pi^{(1)}(X_1, Y_1, X_2) + (1 - Z)\,\pi^{(0)}(X_1, Y_1, X_2) \tag{7}
$$

for π(c)(X1,Y1,X2) = π(X1,Y1,X2,c) ≥ ε > 0, c = 0, 1. For ACTG 175, (6) and (7) make explicit the belief that subjects may have been more or less likely to drop out (and hence be missing CD4 at 96±5 weeks) depending on their baseline CD4 and other characteristics as well as intermediate measures of CD4 and CD8 and off-treatment status, where this relationship may be different for patients treated with ZDV only versus the other therapies, but that dropout does not depend on unobserved 96±5 week CD4. Relaxation of the assumption that X1,Y1,X2 are observed for all subjects is discussed in Section 7.

3.2 Complete-Case Analysis and Inverse Weighting

As noted in Section 1.1, a naive approach under these conditions is to conduct a complete-case analysis. For example, using the two-sample t test, estimate β by

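$$
\frac{\sum_{i=1}^{n} R_i Z_i Y_{2i}}{\sum_{i=1}^{n} R_i Z_i} - \frac{\sum_{i=1}^{n} R_i (1 - Z_i) Y_{2i}}{\sum_{i=1}^{n} R_i (1 - Z_i)}, \tag{8}
$$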

the difference in sample means based only on data for subjects with Y2 observed. Under the semiparametric model, as E(RZY2) = E{ZY2E(R|X1,Y1,X2,Y2,Z)} = E{ZY2π(1)(X1,Y1,X2)} by (6) and (7), and E{RI(Z = 1)} = E(RZ) = E{Zπ(1)(X1,Y1,X2)}, the first term in (8) converges in probability to E{ZY2π(1)(X1,Y1,X2)}/E{Zπ(1)(X1,Y1,X2)}, which is not equal to µ2(1) = E(Y2|Z = 1) in general. Similarly, the second term is not consistent for µ2(0) = E(Y2|Z = 0). Thus, (8) is not a consistent estimator for β in general.

A simple remedy is to incorporate inverse weighting of the complete cases (IWCC; e.g., Horvitz and Thompson, 1952). Here, whereas the estimator for µ2(1) = E(Y2|Z = 1) in (8) solves Σi RiZi(Y2i − µ2(1)) = 0, weight each contribution by the inverse of the probability of seeing a complete case; that is, solve Σi RiZi(Y2i − µ2(1))/π(1)(X1i,Y1i,X2i) = 0, yielding the estimator for µ2(1),

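$$
\hat{\mu}_2^{(1)} = \left\{ \sum_{i=1}^{n} \frac{R_i Z_i}{\pi^{(1)}(X_{1i}, Y_{1i}, X_{2i})} \right\}^{-1} \sum_{i=1}^{n} \frac{R_i Z_i Y_{2i}}{\pi^{(1)}(X_{1i}, Y_{1i}, X_{2i})}, \tag{9}
$$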

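and analogously for µ2(0). It is straightforward to show that such inverse weighting yields consistent estimators for µ2(c) = E(Y2|Z = c), c = 0, 1; for example, for (9) (c = 1), using (6) and (7),

$$
E\left\{ \frac{R Z Y_2}{\pi^{(1)}(X_1, Y_1, X_2)} \right\} = E\left\{ \frac{Z Y_2\, E(R \mid X_1, Y_1, X_2, Y_2, Z)}{\pi^{(1)}(X_1, Y_1, X_2)} \right\} = E(Z Y_2) = \delta\, \mu_2^{(1)},
$$

and similarly E{RZ/π(1)(X1,Y1,X2)} = δ, so that (9) converges in probability to µ2(1). Subtracting µ2(c) for each of c = 0, 1, the associated influence functions are seen to be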

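$$
\frac{R Z (Y_2 - \mu_2^{(1)})}{\delta\, \pi^{(1)}(X_1, Y_1, X_2)} \quad \text{and} \quad \frac{R (1 - Z)(Y_2 - \mu_2^{(0)})}{(1 - \delta)\, \pi^{(0)}(X_1, Y_1, X_2)}, \tag{10}
$$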

which have the form of the corresponding full-data influence functions in (3) weighted by 1/π(c), c = 0, 1, for the complete cases only (R = 1). IWCC may be applied to any RAL estimator with influence function in class (3), including popular ones. However, although such simple IWCC leads to consistent inference, methods with greater efficiency are possible.
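The contrast between the complete-case estimator and its inverse-weighted version can be seen in a small simulation. The Python sketch below is illustrative only: the missingness mechanism `pi_true` (logistic in Y1, with opposite slopes in the two arms), the outcome model and the true β = 1 are all assumptions, and the true probabilities are treated as known.

```python
import math
import random

def expit(x):
    return 1.0 / (1.0 + math.exp(-x))

def pi_true(y1, z):
    """Hypothetical MAR mechanism: P(R = 1 | Y1, Z), differential by arm."""
    return expit(0.5 + y1) if z == 1 else expit(0.5 - y1)

def simulate(n=20000, beta=1.0, delta=0.5, seed=3):
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        y1 = rng.gauss(0, 1)
        z = 1 if rng.random() < delta else 0
        y2 = y1 + beta * z + rng.gauss(0, 1)
        r = 1 if rng.random() < pi_true(y1, z) else 0
        rows.append((y1, y2, z, r))       # y2 is used only when r == 1
    return rows

def complete_case(rows):
    """Difference of posttest means among subjects with Y2 observed."""
    t = [y2 for _, y2, z, r in rows if r == 1 and z == 1]
    c = [y2 for _, y2, z, r in rows if r == 1 and z == 0]
    return sum(t) / len(t) - sum(c) / len(c)

def iwcc(rows, pi):
    """Inverse-weighted complete-case difference of weighted means."""
    num = {0: 0.0, 1: 0.0}
    den = {0: 0.0, 1: 0.0}
    for y1, y2, z, r in rows:
        if r == 1:
            w = 1.0 / pi(y1, z)
            num[z] += w * y2
            den[z] += w
    return num[1] / den[1] - num[0] / den[0]

rows = simulate()
cc_est = complete_case(rows)
iwcc_est = iwcc(rows, pi_true)
print(f"complete case: {cc_est:.3f}   IWCC: {iwcc_est:.3f}   truth: 1.000")
```

Under this assumed mechanism the complete cases over-represent high-baseline subjects on treatment and low-baseline subjects on control, so the unweighted difference overstates β, while the weighted version recovers it.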

3.3 The Robins, Rotnitzky and Zhao Theory

As noted in Section 1.2, the pioneering advance of Robins, Rotnitzky and Zhao (1994) was to derive, for a general semiparametric model, the class of all observed data influence functions for estimators for a parameter β under complex forms of MAR and to characterize the efficient influence function. The theory reveals, perhaps not unexpectedly, that there is a relationship between full- and observed-data influence functions and that the latter involve inverse weighting.

Denote the subset of the full data V that is always observed for all subjects as O*; O* = (X1,Y1,X2,Z) here. Under MAR, the probability that full data are observed depends only on O*, which we write as π(O*). Assuming π(O*) is known for now, if φF (V) is any full-data influence function, Robins, Rotnitzky and Zhao showed that, in general, all observed-data influence functions have the form RφF (V)/π(O*) − g(O), where g(O) is an arbitrary square-integrable function of the observed data that satisfies E{g(O)|V} = 0. For situations like that here, where a particular subset of V (Y2) is either missing or not for all subjects, this becomes

$$
\frac{R\,\varphi^F(V)}{\pi(O^*)} - \frac{R - \pi(O^*)}{\pi(O^*)}\, g(O^*), \tag{11}
$$

where g(O*) is an arbitrary square-integrable function of the data always observed. In (11), the first term has the form of an IWCC full-data influence function; the second term, which has mean zero, depending only on data observed for all subjects, “augments” (e.g., Robins, 1999) the first, which leads to increased efficiency provided that g is chosen judiciously.
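The efficiency gain from augmentation can be illustrated by Monte Carlo. The Python sketch below assumes the familiar augmented form in which the augmentation term is (R − π)/π times a function of the always-observed data, and it works with a single arm (every subject has Z = 1) to stay short. The outcome model, the known missingness probability, and the choice m = Y1 for the regression of Y2 on Y1 are illustrative assumptions.

```python
import math
import random

def expit(x):
    return 1.0 / (1.0 + math.exp(-x))

def one_trial(rng, n=400):
    """Single arm: Y2 = Y1 + noise, observed with probability expit(0.5 + Y1)."""
    s_w = s_wy = s_aug = 0.0
    for _ in range(n):
        y1 = rng.gauss(0, 1)
        y2 = y1 + rng.gauss(0, 0.5)
        p = expit(0.5 + y1)               # true pi(O*), treated as known
        r = 1 if rng.random() < p else 0
        m = y1                            # assumed regression E(Y2 | Y1)
        s_w += r / p
        s_wy += r * y2 / p
        s_aug += r * y2 / p - (r - p) / p * m
    iwcc = s_wy / s_w                     # weighted mean of complete cases
    aipw = s_aug / n                      # IWCC term plus augmentation term
    return iwcc, aipw

def variance(xs):
    mu = sum(xs) / len(xs)
    return sum((x - mu) ** 2 for x in xs) / (len(xs) - 1)

rng = random.Random(11)
results = [one_trial(rng) for _ in range(500)]
iwcc_draws = [a for a, _ in results]
aipw_draws = [b for _, b in results]
var_iwcc, var_aipw = variance(iwcc_draws), variance(aipw_draws)
print(f"Monte Carlo variance  IWCC: {var_iwcc:.5f}  augmented: {var_aipw:.5f}")
```

Both estimators target the same mean (zero in this setup); the augmented version recovers information from subjects with Y2 missing through their baseline values, which is why its Monte Carlo variance should be visibly smaller.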

3.4 Observed-Data Influence Functions for the Pretest–Posttest Problem

In the special case of the pretest–posttest problem, focusing on estimation of the treatment mean , with O* = (X1,Y1,X2,Z), (3) and (11) immediately imply that the class of all observed-data influence functions for estimators for when Y2 is MAR is

| (12) |

for arbitrary h(1) and g(1) such that var{h(1)(X1,Y1)} < ∞ and var{g(1)(X1,Y1,X2,Z)} < ∞. Defining g(1)′(X1,Y1,X2,Z), we may write (12) equivalently in a way that is convenient in subsequent developments as

| (13) |

there is a one-to-one correspondence between (12) and (13).

As in the full-data problem, it is of interest to identify the optimal choices of h(1) and g(1), or, equivalently, h(1) and g(1)′ , that is, those that yield the efficient observed-data influence function with smallest variance among all influence functions of form (12) or, equivalently, (13). In Appendix A.4 we show that the optimal choices of h(1) and g(1)′ in (13) are

| (14) |

The forms geff(1)′ and heff(1) show explicitly how augmentation exploits relationships among variables to gain efficiency. In ACTG 175, then, (14) shows that the optimal estimator for β involves knowledge of the true regressions of 96±5 week CD4 on baseline CD4 and other baseline covariates, and on this baseline information plus post-intervention CD4 and CD8 measures and off-treatment status, respectively.

To develop estimators for practical use with good properties, it is sensible to consider influence functions with form close to that of the efficient influence function. Accordingly, from the expression for geff(1)′ in (14) and the representation of π in (7), we restrict attention in the sequel to the subclass of (13) with elements of the form, for g(1)′ (X1,Y1,X2,Z) = Zq(1)(X1,Y1,X2) for arbitrary square-integrable q(1)(X1,Y1,X2),

| (15) |

Equation (15) includes the optimal g(1)′ , but rules out choices that cannot have the efficient form.

3.5 Estimation of the Missingness Mechanism

The foregoing results take π and, hence, π(1)(X1,Y1,X2) to be known, which is unlikely unless Y2 is missing purposefully by design for some subjects in a way that depends on a subject's baseline and intermediate information. In practice, unknown π(1) is often addressed by positing a parametric model for π(1); intuition suggests that such a model be correctly specified, although we discuss this further in Section 4.2. For now, then, suppose that a parametric model π(1)(X1,Y1,X2;γ), say, for γ (s × 1) has been proposed and is correct, where γ0 is the true value of γ so that evaluation at γ0 yields the true probability π(1)(X1,Y1,X2). For definiteness, we focus henceforth on the logistic regression model

| (16) |

where d(X1,Y1,X2) is a vector of functions of its argument, but a development analogous to that below is possible for other choices (e.g., a probit model). In the ACTG 175 analysis in Section 6 we model the probability of observing CD4 at 96±5 weeks by a logistic function, where d(X1,Y1,X2) includes functions of baseline and intermediate characteristics.
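As a rough illustration of fitting such a model, the following sketch estimates γ by ML separately in each randomized group. It assumes the usual logistic form, in which the probability of observing Y2 is exp(γ'd)/{1 + exp(γ'd)}. The function names, the simulated data and the "true" coefficient values are hypothetical and are not taken from the ACTG 175 analysis.

```python
import numpy as np

def expit(u):
    """Inverse logit."""
    return 1.0 / (1.0 + np.exp(-u))

def fit_logistic_ml(D, r, n_iter=25):
    """ML fit of P(R = 1 | D) = expit(D @ gamma) by Newton-Raphson.

    D is the n x s matrix whose rows play the role of d(X1, Y1, X2);
    r is the 0/1 indicator that Y2 is observed.
    """
    gamma = np.zeros(D.shape[1])
    for _ in range(n_iter):
        p = expit(D @ gamma)
        score = D.T @ (r - p)                        # gradient of the log-likelihood
        info = (D * (p * (1.0 - p))[:, None]).T @ D  # observed information
        gamma = gamma + np.linalg.solve(info, score)
    return gamma

def fit_missingness_by_arm(D, r, z):
    """Fit the missingness model separately for Z = 0 and Z = 1."""
    return {c: fit_logistic_ml(D[z == c], r[z == c]) for c in (0, 1)}

# Simulated illustration (all numerical values are assumptions).
rng = np.random.default_rng(0)
n = 4000
y1 = rng.normal(size=n)                   # stands in for baseline response
x2 = 0.5 * y1 + rng.normal(size=n)        # stands in for an intermediate covariate
z = rng.binomial(1, 0.5, size=n)          # randomized treatment indicator
D = np.column_stack([np.ones(n), y1, x2])
true_gamma = {0: np.array([0.5, 0.8, -0.4]), 1: np.array([1.0, -0.5, 0.6])}
lin = np.where(z == 1, D @ true_gamma[1], D @ true_gamma[0])
r = rng.binomial(1, expit(lin))           # 1 if the posttest response is observed

gamma_hat = fit_missingness_by_arm(D, r, z)
# Estimated probability of observing Y2 for every subject, using that subject's arm.
pi_hat = np.where(z == 1, expit(D @ gamma_hat[1]), expit(D @ gamma_hat[0]))
```

Because every subject contributes (X1, Y1, X2, R, Z), the fit uses all n subjects; only the posttest response itself is missing for those with R = 0.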

Under these conditions, a natural strategy is to derive an estimator for µ2(1) from an influence function of the form (15), assuming that π(1)(X1,Y1,X2) is known; estimate γ based on the i.i.d. data (X1i,Y1i,X2i,Ri,Zi), i = 1,...,n, and substitute the estimated value for γ in the (correct) parametric model π(1)(X1,Y1,X2;γ); and estimate µ2(1) acting as if π(1) were known. Robins, Rotnitzky and Zhao (1994) showed that, for any choice of h(1) and q(1) in (15), as long as an efficient procedure [e.g., maximum likelihood (ML)] is used to estimate γ, the resulting influence function for the estimator for β obtained by this strategy has the form

| (17) |

where

and π(1)(X1,Y1,X2) is the true probability (i.e., the parametric model evaluated at γ0). In Appendix A.5 we give the basis for this result. Thus, estimators for µ2(1) with influence functions in class (17) may be derived by finding estimators with influence functions in class (15) (so for “γ known” in the context of a correct parametric model for π(1)) and substituting the ML estimator for γ. Hence, although influence functions of the form (17) are useful for understanding the properties of estimators for µ2(1) when γ is estimated, one need only work with influence functions of the form (15) to derive estimators.

When q(1)(X1,Y1,X2) has the efficient form E(Y2|X1,Y1,X2,Z = 1) − µ2(1), b(1) = bq(1). Hence, as long as the parametric model for π(1) is correct, even if γ is estimated, the last term in (17) is identically equal to zero, but this will not necessarily be true otherwise. This reflects the general result shown by Robins, Rotnitzky and Zhao (1994) that an estimator derived from the efficient influence function will have the same properties whether the parameters in a (correct) model for the missingness mechanism are known or estimated. For general h(1) and q(1) not necessarily equal to the optimal choices, the theory also implies the seemingly counterintuitive result that, even if γ is known, estimating it anyway can lead to a gain in efficiency; that is, for a specific (nonoptimal) choice of h(1) and q(1), the variance of (17) is at least as small as that of (15). In Appendix A.5 we give a justification of this claim.

3.6 Summary

By a development entirely similar to that above for influence functions for estimators for µ2(1), we may obtain similar influence functions for estimators for µ2(0). Here, influence functions in a subclass that contains the efficient influence function are of the form (15) with Z, π(1), δ, h(1) and q(1) replaced by 1 − Z, π(0), (1 − δ) and analogous functions h(0) and q(0), respectively, with similar modifications in (17). The efficient influence function has, analogous to (14), heff(0) = E(Y2|X1,Y1,Z = 0) − µ2(0) and the corresponding efficient q(0) equal to E(Y2|X1,Y1,X2,Z = 0) − µ2(0). To deduce estimators for β when Y2 is MAR, we derive estimators for µ2(1) and µ2(0) from these developments and take their difference, which is justified by the argument in Appendix A.3.

It may be shown that if the true missingness mechanism follows a parametric model π(X1,Y1,X2,Z; γ) inducing models π(c)(X1,Y1,X2;γ), c = 0, 1, correctly specifying this model and estimating γ by ML from the data for subjects with Z = 0 and 1 separately leads to estimators for µ2(1) and µ2(0) at least as efficient as those found by estimating γ by fitting π(X1,Y1,X2,Z; γ) to all the data jointly. We recommend this approach in practice.

4. ESTIMATORS FOR β

4.1 Derivation of Estimators from Influence Functions

As a generic principle, based on (2), to identify an estimator from a given influence function, one sets the sum of terms that have the form of the influence function for each subject i = 1,...,n to zero, regarding the influence function as a function of the parameter of interest, and solves for this parameter, possibly substituting estimators for other unknown quantities. In complex models, particularly when p > 1, it may be impossible to solve for the parameter explicitly, and this and additional considerations can lead to computational and other challenges. However, for the simple pretest–posttest model, this strategy straightforwardly leads to closed-form estimators for β, as we now demonstrate.

The form of the efficient influence function is a natural starting point from which to derive estimators with good properties. Thus, focusing on µ2(1), applying this strategy to (15) with the optimal choices of h(1) and q(1) implied by (14), simple algebra yields

| (18) |

and similarly for µ2(0). Thus, to estimate β, one would take the difference of (18) and the analogous expression for µ2(0). In practice this is complicated by the fact that π(X1,Y1,X2) must be modeled and fitted; moreover, it is evident that suitable regression models for E(Y2|X1,Y1,Z) and E(Y2|X1,Y1,X2,Z) must be identified and fitted. We discuss strategies for resolving these practical challenges in Section 5.
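To make the structure of such a closed-form estimator concrete, the sketch below computes an augmented inverse-probability-weighted estimate of the mean posttest response in one arm. The exact form is an assumption here: it is what results from summing an influence function with an inverse-weighted leading term, an h-type term in (Z − δ) and a q-type term in (R − π) and solving for the mean, with δ replaced by the observed proportion in the arm. The function name, arguments and simulated data are hypothetical, and the true probabilities and regressions are plugged in only to keep the example short.

```python
import numpy as np

def aipw_arm_mean(y2, r, z, pi, e_h, e_q, arm=1):
    """Augmented IPW estimate of E(Y2 | Z = arm).

    pi  : P(R = 1 | X1, Y1, X2, Z = arm) for each subject
    e_h : values of E(Y2 | X1, Y1, Z = arm)
    e_q : values of E(Y2 | X1, Y1, X2, Z = arm)
    Entries of y2 with r == 0 are ignored and may be NaN.
    """
    n = len(z)
    zc = (z == arm).astype(float)
    delta_c = zc.mean()                     # observed proportion randomized to this arm
    y_obs = np.where(r == 1, y2, 0.0)       # unobserved responses never enter the sum
    ipw = r * zc * y_obs / pi               # inverse-weighted complete cases
    aug_h = (zc - delta_c) * e_h            # baseline covariate adjustment
    aug_q = (r - pi) * zc * e_q / pi        # augmentation for missing Y2
    return np.sum(ipw - aug_h - aug_q) / (n * delta_c)

# Simulated illustration (all numerical values are assumptions).
rng = np.random.default_rng(1)
n = 20000
y1 = rng.normal(size=n)
x2 = 0.5 * y1 + rng.normal(size=n)
z = rng.binomial(1, 0.5, size=n)
y2_full = 1.0 + 0.5 * z + 0.5 * y1 + 0.7 * x2 + rng.normal(size=n)
pi_true = 1.0 / (1.0 + np.exp(-(0.5 + 0.8 * y1 - 0.4 * x2)))   # MAR: depends on (Y1, X2) only
r = rng.binomial(1, pi_true)
y2 = np.where(r == 1, y2_full, np.nan)

# True regressions implied by the simulation; E(X2 | Y1) = 0.5 * Y1.
e_q = {c: 1.0 + 0.5 * c + 0.5 * y1 + 0.7 * x2 for c in (0, 1)}
e_h = {c: 1.0 + 0.5 * c + 0.85 * y1 for c in (0, 1)}

mu1_hat = aipw_arm_mean(y2, r, z, pi_true, e_h[1], e_q[1], arm=1)
mu0_hat = aipw_arm_mean(y2, r, z, pi_true, e_h[0], e_q[0], arm=0)
beta_hat = mu1_hat - mu0_hat   # the simulated treatment difference is 0.5
```

In an actual analysis the probabilities and both sets of predicted values would come from fitted models rather than from known functions.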

4.2 Double Robustness

So far we have assumed that postulated models for π(c), c = 0, 1, are correctly specified. If the postulated model is incorrect, substituting this incorrect model into an influence function of the form (15) or (17) when c = 1 with arbitrary h(1) and q(1) yields an expression that need not have mean zero; for example, the leading term in ψ(X1,Y1, X2,R,RY2,Z) in (15) has expectation zero only if P(R = 1|X1,Y1, X2,Z = 1) = π(1) (X1,Y1,X2), the true probability, and similarly for c = 0. Because a defining characteristic of an influence function is zero mean, estimators derived under such conditions need no longer be consistent. However, there is an exception when the optimal h(1) and q(1) are used as in (18), which we now describe.

In general, the augmentation in (11) induces the interesting property that estimators derived from (11) will be consistent if either (1) the choice g(O*) does not correspond to the optimal choice but π(O*) is correctly specified or (2) the optimal choice of g(O*) is used but π(O*) is misspecified. This property is referred to as double robustness (e.g., Scharfstein, Rotnitzky and Robins, 1999, Section 3.2.3; van der Laan and Robins, 2003, Section 1.6).

We may demonstrate the double robustness property for estimators for the pretest–posttest model; for definiteness, consider µ2(1). Under option 1, with any arbitrary choices for h(1) and q(1), if the model π(1)(X1,Y1,X2;γ) corresponds to the true mechanism, that (15) has mean zero is immediate. Thus, even if one models E(Y2|X1,Y1,Z) and E(Y2|X1,Y1,X2,Z) incorrectly in (18), the resulting estimator still has a corresponding legitimate influence function in class (17) (assuming γ is estimated) and hence is consistent. Conversely, under option 2, suppose E(Y2|X1,Y1,Z) and E(Y2|X1,Y1,X2,Z) are correctly specified in (18), but π(1)(X1,Y1,X2) is specified incorrectly by some π*(X1,Y1,X2), say. Substituting π* for π(1) in (18), it is straightforward to show that the right-hand side converges in probability to µ2(1) (see Appendix A.6), suggesting that an estimator based on (18) would still be consistent. In fact, the second term in (18) converges in probability to zero even if E(Y2|X1,Y1,Z = 1) is replaced by any arbitrary function of (X1,Y1), so that the double robustness property holds if only E(Y2|X1,Y1,X2,Z) is correct. Of course, if both π(1) and E(Y2|X1,Y1,X2,Z) are specified incorrectly, we cannot expect (18) to yield consistent inference in general.
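The double robustness property can be checked numerically. The sketch below is a simulation under assumed data-generating values, not a reproduction of any analysis in the paper. It evaluates an augmented inverse-weighted estimator of the treatment-arm mean three ways: with the correct missingness probabilities but both regressions set to zero, with a deliberately wrong constant missingness probability but the correct regression on (X1, Y1, X2), and with both wrong. The estimator's algebraic form and all names are assumptions made for illustration.

```python
import numpy as np

def aipw_mean_arm1(y2, r, z, pi, e_h, e_q):
    """Augmented IPW estimate of E(Y2 | Z = 1); entries of y2 with r == 0 are ignored."""
    n1 = z.sum()
    delta = z.mean()
    y_obs = np.where(r == 1, y2, 0.0)
    total = np.sum(r * z * y_obs / pi - (z - delta) * e_h - (r - pi) * z * e_q / pi)
    return total / n1

# Simulated data; the true treatment-arm mean is 2 by construction.
rng = np.random.default_rng(2)
n = 20000
y1 = rng.normal(size=n)
x2 = 0.5 * y1 + rng.normal(size=n)
z = rng.binomial(1, 0.5, size=n)
y2_full = 1.5 + 0.5 * z + 0.5 * y1 + 1.0 * x2 + rng.normal(size=n)
pi_true = 1.0 / (1.0 + np.exp(-(0.3 + 1.2 * x2)))    # missingness depends on X2
r = rng.binomial(1, pi_true)
y2 = np.where(r == 1, y2_full, np.nan)

e_q_true = 2.0 + 0.5 * y1 + 1.0 * x2                 # correct E(Y2 | X1, Y1, X2, Z = 1)
zeros = np.zeros(n)                                  # a grossly wrong regression "model"
pi_wrong = np.full(n, r[z == 1].mean())              # wrongly ignores dependence on X2

# Option 1: missingness model correct, regressions wrong.
est_pi_right = aipw_mean_arm1(y2, r, z, pi_true, zeros, zeros)
# Option 2: missingness model wrong, regression on (X1, Y1, X2) correct, h arbitrary.
est_q_right = aipw_mean_arm1(y2, r, z, pi_wrong, zeros, e_q_true)
# Neither correct: reduces to the complete-case mean in the treatment arm.
est_both_wrong = aipw_mean_arm1(y2, r, z, pi_wrong, zeros, zeros)
```

In this setup the first two estimates should fall close to 2, whereas the third is pulled upward because subjects with larger X2, and hence larger Y2, are more likely to be observed.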

As we discuss in Section 5, in practice one must develop and fit models for π(c), E(Y2|X1,Y1,Z) and E(Y2|X1,Y1,X2,Z), so the results above are somewhat idealized. However, if the analyst uses his or her best judgment and efforts to develop these models, the chance of coming very close to specifying at least one of them correctly may be high. The theoretical double robustness property suggests that, by using estimators like (18) based on the efficient influence function, the analyst has some protection against inadvertent mis-modeling. In our experience, even if both types of models are mildly incorrectly specified, valid inferences may be obtained; if one model is grossly incorrect, that with the mild misspecification error tends to dominate, so that reliable inferences are still possible.

5. PRACTICAL IMPLEMENTATION

To obtain estimators for β based on (18) and the analogous expression for µ2(0) suitable for practice, π(c)(X1,Y1,X2), E(Y2|X1,Y1,Z = c) and E(Y2|X1,Y1,X2,Z = c), c = 0, 1, must be modeled and fitted. Given parametric models π(c)(X1,Y1,X2;γ), if γ is estimated by ML separately from the data for Z = 0 and 1 as at the end of Section 3.6, yielding estimators , c = 0, 1, we may form estimated probabilities , say. Similarly, given fits of some regression models E(Y2|X1,Y1,Z = c) and E(Y2|X1,Y1,X2,Z = c), we may obtain predicted values and , c = 0, 1, say, for E(Y2i|X1i,Y1i,Zi = c) and E(Y2i|X1i,Y1i,X2i,Zi = c), respectively. Letting , substituting in (18) and its analog for c = 0 then yields the estimator , where

and

Intuitively, replacing the unknown quantities in (18) and its analog for c = 0 by consistent estimators should not alter the implications for consistency of discussed earlier. We now review considerations involved in obtaining , and , c = 0, 1.

A natural approach to modeling E(Y2|X1,Y1,Z = c) and E(Y2|X1,Y1,X2,Z = c) is to adopt parametric models based on usual regression considerations. For example, in ACTG 175, Y2 = CD4 at 96±5 weeks is a continuous measurement, suggesting that standard linear regression models may be used. Because of the assumption of MAR, E(Y2|X1,Y1,X2,Z,R) does not depend on R; thus, E(Y2|X1,Y1,X2,Z) = E(Y2|X1,Y1,X2,Z,R = 1), implying that this model may be postulated and fitted based only on the complete cases. Thus, standard techniques for model selection and diagnostics may be applied to the data from subjects with R = 1. For example, inspection of plots like those in Figure 1, which shows only data for subjects for whom CD4 at 96±5 weeks is observed, may be used. Figure 1 suggests that such reasonable models might include both linear and quadratic terms in Y1 = baseline CD4.

Considerations for developing and fitting models for E(Y2|X1,Y1,Z = c) are trickier. Ideally, the chosen model for this quantity must be compatible with that for E(Y2|X1,Y1,X2,Z), as E(Y2|X1,Y1,Z) = E{E(Y2|X1,Y1,X2,Z)|X1,Y1,Z}. Several practical strategies are possible, although none is guaranteed to achieve this property and hence yield the efficient estimators for µ2(c), c = 0, 1. One approach is to adopt a model directly for E(Y2|X1,Y1,Z) that is likely “close enough” to be “approximately compatible.” For example, if E(Y2|X1,Y1,X2,Z) is a linear model in functions of (X1,Y1,X2), one may be comfortable with a linear model for E(Y2|X1,Y1,Z) that includes the same functions of X1,Y1. We demonstrate this ad hoc strategy for the ACTG 175 data in Section 6. If all of X1,Y1,X2,Y2 are continuous, assuming joint normality may be a reasonable approximation, in which case standard results may be used to deduce both models. Alternatively, one might use the relationship E(Y2|X1,Y1,Z) = E{E(Y2|X1,Y1,X2,Z)|X1,Y1,Z}. For example, for low-dimensional X2, a distributional model for X2|X1,Y1,Z might be fitted based on the (X1i,Y1i,X2i,Zi), i = 1,...,n, which are observed for all subjects; integration with respect to this model would yield the desired conditional quantities for c = 0, 1. For univariate binary X2, a logistic model for P(X2 = 1|X1,Y1,Z) may be used; this is straightforward, but could be more challenging for mixed continuous and discrete and/or high-dimensional X2. Instead, one might invoke an empirical approximation, for example, obtaining the predicted value , c = 0, 1, for each i by averaging estimates of E(Y2i|X1i,Y1i,X2j,Zi = c) over subjects j that share the same values for (X1,Y1,Z) as i, which would likely be feasible only in specialized circumstances. A cruder version would be to average over all X2j for j in the same group as i; this would yield the desired result only if X2 is conditionally independent of (X1,Y1) given Z.
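For the univariate binary X2 case just described, the integration reduces to a two-term weighted sum. The sketch below shows this step only; the regression function and the probability model are hypothetical stand-ins for fitted models, and the coefficients are assumptions chosen for illustration.

```python
import numpy as np

def marginalize_binary_x2(e_q_fn, p_x2, x1, y1):
    """Compatible values of E(Y2 | X1, Y1, Z = c) for binary X2.

    e_q_fn(x1, y1, x2) returns E(Y2 | X1, Y1, X2 = x2, Z = c);
    p_x2 holds P(X2 = 1 | X1, Y1, Z = c) for each subject.
    """
    ones = np.ones_like(p_x2)
    zeros = np.zeros_like(p_x2)
    return p_x2 * e_q_fn(x1, y1, ones) + (1.0 - p_x2) * e_q_fn(x1, y1, zeros)

# Hypothetical "fitted" models for one treatment group.
def e_q_fn(x1, y1, x2):
    return 1.0 + 0.5 * x1 + 0.8 * y1 + 2.0 * x2 + 0.3 * y1 * x2

rng = np.random.default_rng(3)
n = 1000
x1 = rng.normal(size=n)
y1 = rng.normal(size=n)
p_x2 = 1.0 / (1.0 + np.exp(-(-0.2 + 0.6 * y1)))   # logistic model for P(X2 = 1 | X1, Y1, Z = c)

e_h = marginalize_binary_x2(e_q_fn, p_x2, x1, y1)
```

Because the model for X2 given (X1, Y1, Z) involves only variables observed for all subjects, it can be fitted to all n subjects regardless of whether Y2 is missing.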

A further complication is that, for any chosen model for E(Y2|X1,Y1,Z), it is no longer appropriate to fit the model based on the complete cases only. Ideally this fitting should be carried out by a procedure that accounts for the fact that Y2 is MAR, such as an IWCC version of standard regression techniques. However, if the model is an approximation anyway, complete-case-only fitting may not be seriously detrimental. Even if the fit of the chosen model is not consistent for that model, the discussion of double robustness in Section 4.2 suggests that the resulting estimators for µ2(c), c = 0, 1, and hence for β should be consistent regardless.

Another approach would be to use nonparametric smoothing to estimate E(Y2|X1,Y1,X2,Z) and E(Y2|X1,Y1,Z) and obtain predicted values and , for example, locally weighted polynomial smoothing (Cleveland, Grosse and Shyu, 1993) or generalized additive modeling (Hastie and Tibshirani, 1990). Ideally, smoothing for E(Y2|X1,Y1,Z) should be modified to take into account that Y2 is MAR, although this may not be critical by double robustness. Instead, an estimate of E(Y2|X1,Y1,Z) could be derived from integration of the nonparametric fit of E(Y2|X1,Y1,X2,Z). Feasibility of smoothing might be limited in high dimensions.

However one approaches developing and fitting models for E(Y2|X1,Y1,X2,Z = c) and E(Y2|X1,Y1,Z = c), c = 0, 1, we have found that it may be advantageous, at least for large n, to fit separate models for c = 0, 1. We also recommend including in all four models the same functions of components of X1, Y1 and X2 (if appropriate) if they were found to be important in any one model, as it may be prudent to overmodel rather than undermodel.

Similarly, standard techniques for parametric binary regression may be used to fit models π(c)(X1,Y1,X2; γ) for each c = 0, 1, as all n subjects will have the requisite data. We recommend including in these models all covariates found to be important in any of the regression models above, as it may be shown that including covariates in this model that are correlated with Y2, even if they are not associated with missingness, can lead to gains in efficiency. Lunceford and Davidian (2004) demonstrated this phenomenon in a simple related setting. Thus, we suggest developing this model after building and fitting of the models for E(Y2|X1,Y1,X2,Z = c) and E(Y2|X1,Y1,Z = c) are complete.

Theoretically, if all of these models are correctly specified, then the estimator for β should be efficient in the sense described earlier. For parametric regression models for E(Y2|X1,Y1,X2,Z = c) and E(Y2|X1,Y1,Z = c), although additional regression parameters must be estimated, because of the geometry there is no effect asymptotically; a similar phenomenon for nonparametric estimation of these quantities is suggested by the results of Newey (1990, pages 118–119) as long as this is at a rate faster than n^{−1/4}. The double robustness property discussed in Section 4.2 ensures that consistent estimators for β and µ2(c), c = 0, 1, will be obtained as long as either set of models is correct; however, efficiency is no longer guaranteed.

The asymptotic variance of the estimator for β is obtained from the expectation of the square of the difference of (15) and the analogous control influence function, given by

| (19) |

Implicit here is the assumption that the models for π(c)(X1,Y1,X2), E(Y2|X1,Y1,X2,Z = c) and E(Y2|X1,Y1,Z = c) are correct. Equation (19) may be estimated by replacing the first two terms by and , where , and replacing the remaining terms by sample averages with estimates substituted for needed quantities. Alternatively, var() may be estimated by , corresponding to the so-called sandwich technique, where is the difference of the influence functions with estimates substituted, that is,

If E(Y2|X1,Y1,X2,Z) and E(Y2|X1,Y1,Z) are mismodeled, the influence function of the estimator for µ2(1) would instead be of the form (17) to account for estimation of γ, and similarly for µ2(0). Although technically then the above formulae would seem to require modification, we have extensive empirical evidence to suggest that they yield reliable estimates of precision even if incorrect models are used.
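A sandwich-type standard error of this kind can be sketched as follows. The sketch assumes that the variance estimate is the sum of squared estimated influence-function differences divided by n squared, and that each arm's influence function has an inverse-weighted leading term plus h-type and q-type augmentation terms; both are assumptions about form, and all function and variable names are hypothetical. The demonstration uses complete data with constant regressions, where the result reduces to a familiar two-sample quantity.

```python
import numpy as np

def influence_values(y2, r, zc, pi, e_h, e_q, mu):
    """Per-subject influence-function values for one arm's mean.

    zc is the 0/1 indicator of the arm, mu the estimated arm mean;
    h and q are taken as e_h - mu and e_q - mu.
    """
    dc = zc.mean()
    y_obs = np.where(r == 1, y2, 0.0)
    lead = r * zc * (y_obs - mu) / (dc * pi)
    aug_h = (zc - dc) * (e_h - mu) / dc
    aug_q = (r - pi) * zc * (e_q - mu) / (dc * pi)
    return lead - aug_h - aug_q

def sandwich_se(phi1, phi0):
    """Standard error from the difference of the two arms' influence values."""
    phi = phi1 - phi0
    return np.sqrt(np.sum(phi ** 2)) / len(phi)

# Complete-data sanity check with simulated values (all numbers are assumptions).
rng = np.random.default_rng(5)
n = 500
z = rng.binomial(1, 0.5, size=n)
y2 = 1.0 + 0.5 * z + rng.normal(size=n)
r = np.ones(n)                       # nothing missing
pi = np.ones(n)                      # so the observation probability is 1
mu1, mu0 = y2[z == 1].mean(), y2[z == 0].mean()

phi1 = influence_values(y2, r, (z == 1).astype(float), pi, mu1, mu1, mu1)
phi0 = influence_values(y2, r, (z == 0).astype(float), pi, mu0, mu0, mu0)
se = sandwich_se(phi1, phi0)

# With no missing data and constant regressions the augmentation terms vanish,
# leaving the sum over arms of squared deviations divided by the squared arm size.
n1, n0 = (z == 1).sum(), (z == 0).sum()
se_two_sample = np.sqrt(np.sum((y2[z == 1] - mu1) ** 2) / n1 ** 2
                        + np.sum((y2[z == 0] - mu0) ** 2) / n0 ** 2)
```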

6. TREATMENT EFFECT IN ACTG 175

We now apply the proposed methods to the data from ACTG 175, where β is the difference in mean CD4 count at 96±5 weeks for subjects receiving ZDV (control) and those receiving any of the other three therapies (treatment), so that δ = 0.75. The analysis here is not definitive, but is meant to illustrate the typical steps in an analysis based on these techniques.

Following Section 5, we begin by modeling E(Y2|X1,Y1,X2,Z = c), c = 0, 1. As reviewed in Section 1.1, X1 contains 11 baseline covariates in addition to baseline CD4 (Y1). For X2, we considered three covariates available for all subjects: CD4 at 20±5 weeks postrandomization, CD8 at 20±5 weeks and an indicator of whether the subject went off his/her assigned treatment prior to 96 weeks; reasons could include death, dropout or other patient or physician decisions. Because of the high dimension of X1 and the fact that both X1 and X2 contain a mixture of continuous and discrete variables, we considered parametric linear regression modeling. Based on the 1342 of n = 2139 subjects that are complete cases, standard model selection techniques indicate that weight, indicators of HIV symptoms and prior antiretroviral therapy, Karnofsky score, CD8 count and CD4 count (linear and quadratic terms in CD4 and CD8) at baseline, CD8 and CD4 count at 20±5 weeks (linear and quadratic terms), and off-treatment status are associated with Y2 = CD4 count at 96±5 weeks in one or both treatment groups. Thus, we fit separately for c = 0, 1, the models

| (20) |

by ordinary least squares (OLS), obtaining predicted values , c = 0, 1 for each i = 1,...,n. Adopting the ad hoc strategy in Section 5, we directly modeled E(Y2|X1,Y1,Z = c), c = 0, 1, by including the same terms in X1 and Y1 as in (20), that is,

again fitting the model for each c by OLS and obtaining predicted values , c = 0, 1 for all n subjects. Finally, based on standard techniques for logistic regression and the guidelines in Section 5, we arrived at

where γ was estimated separately by ML for each group.

Table 1 shows the estimate of β and the estimated standard error obtained by the sandwich technique, and appears to provide strong evidence that mean CD4 at 96±5 weeks is higher in the treatment group relative to the control. Table 1 also presents the estimate of β obtained via the IWCC method. The IWCC estimated standard error is larger than that for the proposed methods, consistent with the implication of the theory that incorporation of baseline and intervening covariate information should improve precision. For comparison, Table 1 shows estimates of β obtained by the two most popular approaches in practice based on the complete cases only. These results suggest there may be nonnegligible bias associated with these naive methods, in this case suggesting an overly optimistic treatment difference.

Table 1.

Treatment effect estimates for 96±5 week CD4 counts for ACTG 175

| Method | Estimate | SE |
|---|---|---|
| New method | 57.24 | 10.20 |
| IWCC | 54.69 | 11.79 |
| ANCOVA | 64.54 | 9.33 |
| Paired t test | 67.14 | 9.23 |

Note. Standard errors for the new method and the IWCC estimate were obtained using the sandwich approach. ANCOVA denotes ordinary analysis of covariance with no interaction term. Standard errors for the popular estimators based on complete cases were obtained from standard formulae.

7. DISCUSSION

We have shown how the theory developed by Robins, Rotnitzky and Zhao (1994) may be applied to the ubiquitous pretest–posttest problem to deduce analysis procedures that take appropriate account of MAR follow-up data, yield consistent inferences and lead to efficiency gains over simpler methods by exploiting auxiliary covariate information. This perspective provides a general framework for pretest–posttest analysis with missing data that illuminates how relationships among variables play a role in both accounting for missingness and enhancing precision, thus offering the analyst guidance for selecting appropriate methods in practice. We hope that this explicit, detailed demonstration of this theory in a familiar context will help researchers who are not well versed in its underpinnings appreciate the fundamental concepts and how the theoretical results may be translated into practical methods.

We have carried out extensive simulations that show that the proposed methods lead to consistent inference and considerable efficiency gains over simpler methods such as IWCC estimators; a detailed account is available at http://www4.stat.ncsu.edu/~davidian.

We considered the situation where only follow-up response is potentially missing; baseline and intermediate covariates are assumed observable for all subjects. In some settings covariate information in the period between baseline and follow-up may be censored due to dropout, leading to only partially observed X2. The development may be extended to this case via the Robins, Rotnitzky and Zhao (1994) theory and is related to that for causal inference for time-dependent treatments, requiring assumptions similar to those of sequential randomization identified by Robins (e.g., Robins, 1999; van der Laan and Robins, 2003).

Although our presentation is in the context of the pretest–posttest study, it is evident that the results are equally applicable to the problem of comparing two means in a randomized study with adjustment for baseline covariates to improve efficiency, as discussed, for example, by Koch et al. (1998), because the pretest response Y1 may be viewed as simply another baseline covariate. Thus, the developments also clarify how such optimal adjustment should be carried out to achieve efficient inferences on a difference in means in this setting; moreover, they provide a systematic approach to accounting for missing response.

ACKNOWLEDGMENTS

The authors are grateful to Michael Hughes, Heather Gorski and the AIDS Clinical Trials Group for providing the ACTG 175 data. This research was supported in part by Grants R01-CA051962, R01-CA085848 and R37-AI031789 from the National Institutes of Health.

APPENDIX

A.1. INFLUENCE FUNCTIONS AND SEMIPARAMETRIC THEORY

Correspondence between influence functions and RAL estimators

Before we describe semiparametric theory, we sketch an argument that more fully justifies why working with influence functions is informative for identifying (RAL) estimators. It is straightforward to show by contradiction that an asymptotically linear estimator [i.e., an estimator satisfying (2)] has a unique (almost surely) influence function. In the notation in (2), if this were not the case, there would exist another influence function φ*(W) with E{φ*(W)} = 0 that also satisfies (2). If (2) holds for both φ(W) and φ*(W), it must be that A = n^{−1/2} ∑ {φ(Wi) − φ*(Wi)} = op(1), where the sum is over i = 1,...,n. Because the Wi are i.i.d., A converges in distribution to a normal random vector with mean zero and covariance matrix Σ = E[{φ(W) − φ*(W)}{φ(W) − φ*(W)}T]. Because A is op(1), this limiting distribution must be degenerate at zero, so that Σ = 0, implying φ(W) = φ*(W) almost surely.

Parametric model

We begin by considering fully parametric models. Formally, a parametric model for data V is characterized by all densities p(v) in a class indexed by a q-dimensional parameter θ, so that p(v) = p(v, θ) is fully specified by θ. Suppose that interest focuses on a parameter β in this model. In the most familiar case, θ may be partitioned explicitly as θ = (βT,ηT)T for β (p × 1) and η (r × 1), r = q − p, so that η is a nuisance parameter, and we may write p(v, β, η). Alternatively, β = β(θ) may be some function of θ, and identifying the nuisance parameter may be less straightforward, but the principles are the same. For simplicity, because β in the pretest–posttest problem is a scalar, we restrict attention to p = 1.

Maximum likelihood estimator in a parametric model

For definiteness, consider the case where θ = (β, ηT)T. Define as usual the score vector Sθ(V, θ) = {Sβ(V, θ), SηT(V, θ)}T = [∂/∂β {log p(V, β, η)}, ∂/∂ηT {log p(V, β, η)}]T and let θ0 = (β0, η0T)T be the true value of θ. Then E{Sθ(V, θ0)} = 0 and the information matrix is

where expectation is with respect to the true density p(v, β0,η0). Writing to denote the maximum likelihood estimator for θ found by maximizing , it is well known (e.g., Bickel et al., 1993, Section 2.4) that, under regularity conditions,

| (A.1) |

| (A.2) |

so that E{φeff(V)} = 0 and is RAL with influence function φeff(V). Whereas has variance , is consistent and asymptotically normal with asymptotic variance , the well-known Cramér–Rao lower bound, the smallest possible variance for (regular) estimators for β. Thus, is the efficient estimator and, accordingly, Seff(V) is often called the efficient score. Evidently φeff(V) is the efficient influence function, and these familiar results emphasize the connection between efficiency and the score vector.

We are now in position to place these results in a geometric context. Our discussion of this geometric construction for both parametric and semiparametric models is not meant to be rigorous and complete, but serves only to highlight the crucial elements.

Hilbert space

A Hilbert space H is a linear vector space, so that ah1 + bh2 ∈ H for h1, h2 ∈ H and any real a, b, equipped with an inner product; see Luenberger (1969, Chapter 3). The key feature that underlies the geometric perspective is that influence functions based on data V for estimators for a p-dimensional parameter β in a statistical model may be viewed as elements in the particular Hilbert space H of all p-dimensional, mean-zero random functions h(V) such that E{hT(V)h(V)} < ∞, with inner product E{h1T(V)h2(V)} for h1, h2 ∈ H and corresponding norm ∥h∥ = [E{hT(V)h(V)}]^{1/2}, measuring distance from h ≡ 0. Thus, the geometry of Hilbert spaces provides a unified framework for deducing results with regard to influence functions in both parametric and semiparametric models.

Some general results concerning Hilbert spaces are important. For any linear subspace M of H, the set of all elements of H orthogonal to those in M, denoted M⊥ (i.e., such that if h1 ∈ M and h2 ∈ M⊥, the inner product of h1,h2 is zero), is also a linear subspace of H. Moreover, for two linear subspaces M and N, M ⊕ N is the direct sum of M and N if every element in M ⊕ N has a unique representation of the form m + n for m ∈ M, n ∈ N. Intuitively, it is the case that the entire Hilbert space H = M ⊕ M⊥. As we will see momentarily, a further essential concept is the notion of a projection. The projection of h ∈ H onto a closed linear subspace M of H is the element in M, denoted by Π(h|M), such that ∥h − Π(h|M)∥ ≤ ∥h − m∥ for all m ∈ M and the residual h − Π(h|M) is orthogonal to all m ∈ M; such a projection is unique (e.g., Luenberger, 1969, Section 3.3).

In light of the pretest–posttest problem, we again take p = 1. Let θ0 be the true value of θ.

Geometric perspective on the parametric model

Consider first the case where θ may be partitioned as θ = (β, ηT)T, η (r × 1). Let Λ be the linear subspace of H that consists of all linear combinations of Sη(V, θ0) of the form BSη(V, θ0), that is, Λ = {BSη(V, θ0) for all (1 × r) B}, the linear subspace of H spanned by Sη(V, θ0). Because Λ depends on the score for nuisance parameters, it is referred to as the nuisance tangent space. A fundamental result in this case is that all influence functions for RAL estimators for β may be shown to lie in the subspace Λ⊥ orthogonal to Λ. Although a proof of this is beyond our scope, it is straightforward to provide an example by demonstrating that the efficient influence function in (A.2) lies in Λ⊥. In particular, we must show that E{φeff(V) · BSη(V, θ0)} = 0 for all B (1 × r). By taking B successively to be a vector with a 1 in one component and 0s elsewhere, this may be seen to be equivalent to showing that E{φeff(V)SηT(V, θ0)} = 0, which follows immediately. Thus, one approach to identifying influence functions for a particular model with θ = (β, ηT)T is to characterize the form of elements in Λ⊥ directly.

Alternatively, other representations are possible. For general p(v, θ), the tangent space Γ is the linear subspace of H spanned by the entire score vector Sθ(V, θ0), where Sθ(V, θ) = ∂/∂θ {log p(V, θ)}, that is, Γ = {BSθ(V, θ0) for all (1 × q) B}. We have the following key result.

Result A.1

Representation of influence functions

All influence functions for (RAL) estimators for β may be represented as φ(V) = φ*(V) + ψ(V), where φ*(V) is any influence function and ψ(V) ∈ Γ⊥, the subspace of H orthogonal to Γ.

This may be shown for general β(θ); we demonstrate when θ = (β, ηT)T. In this case, a defining property of influence functions φ(V) which is related to regularity is that (1) E{φ(V)Sβ(V, θ0)} = 1 and (2) E{φ(V)SηT(V, θ0)} = 0; the proof is outside our scope here. Given this, we now show that all influence functions can be represented as in Result A.1. First, we demonstrate that if φ(V) can be written as φ*(V) + ψ(V), where φ*(V) and ψ(V) satisfy the conditions of Result A.1, then φ(V) is an influence function. Letting Γβ = {BSβ(V, θ0) for all real B} be the space spanned by the score for β, it may be shown that Γ = Λ ⊕ Γβ. Thus, if ψ ∈ Γ⊥, ψ(V) is orthogonal to functions in both Λ and Γβ, so that E{ψ(V)Sβ(V, θ0)} = 0 and E{ψ(V)SηT(V, θ0)} = 0. Moreover, because φ*(V) is an influence function, it satisfies properties 1 and 2, whence it follows that φ(V) also satisfies properties 1 and 2 and, hence, is itself an influence function. Conversely, we show that if φ(V) is an influence function, it can be represented as in Result A.1. If φ(V) is an influence function, it must satisfy properties 1 and 2, and, writing φ(V) = φ*(V) + {φ(V) − φ*(V)} for some other influence function φ*(V), it is straightforward to use properties 1 and 2 to show that ψ(V) = {φ(V) − φ*(V)} ∈ Γ⊥. Thus, in general, by identifying any influence function and Γ⊥, one may exploit Result A.1 to characterize all influence functions.

Depending on the particular model and nature of β, one method for characterizing influence functions may be more straightforward than another. When using Result A.1 in models where θ = (β, ηT)T, Γ may be most easily determined by finding Λ and Γβ separately; for general β(θ), Γ may often be identified directly.

From Result A.1, we may also deduce a useful characterization of the efficient influence function φeff(V) that satisfies E{φ^2(V)} − E{φeff^2(V)} ≥ 0 for all influence functions φ(V). Because, for arbitrary φ(V), φeff(V) = φ(V) − ψ(V) for some ψ ∈ Γ⊥ and E{φeff^2(V)} = ∥φ − ψ∥^2 must be as small as possible, it must be that ψ = Π(φ|Γ⊥). Thus, we have the following result.

Result A.2

Representation of the efficient influence function

The function φeff(V) may be represented as φ(V) − Π(φ|Γ⊥)(V) for any influence function φ(V).

In the case θ = (β, ηT)T, it is in fact possible to identify explicitly the form of the efficient influence function. Here, the efficient score is defined as the residual of the score vector for β after projecting it onto the nuisance tangent space, Seff(V, θ0) = Sβ(V, θ0) − Π(Sβ|Λ)(V), and the efficient influence function is an appropriately scaled version of Seff given by φeff(V) = [E{Seff^2(V, θ0)}]^{−1}Seff(V, θ0). It is straightforward to observe that φeff(V) is an influence function by showing it satisfies 1 and 2 above. Specifically, by construction Seff ∈ Λ⊥, so property 2 holds. This implies E{φeff(V)Π(Sβ|Λ)(V)} = 0, so that

demonstrating property 1. That φeff(V) has the smallest variance among influence functions may be seen by using the fact that all influence functions may be written as φ(V) = φeff(V) + ψ(V) for some ψ(V) ∈ Γ⊥. Because Sβ ∈ Γβ and Π(Sβ|Λ) ∈ Λ are both in Γ, it follows that E{ψ(V)φeff(V)} = 0. Thus, E{φ^2(V)} = E[{φeff(V) + ψ(V)}^2] = E{φeff^2(V)} + E{ψ^2(V)}, so that any other influence function φ(V) has variance at least as large as that of φeff(V), and this smallest variance is immediately seen to be [E{Seff^2(V, θ0)}]^{−1}.

Finally, we may relate this development to the familiar maximum likelihood results when θ = (β, ηT)T . By definition, Π(Sβ|Λ) ∈ Λ is the unique element B0Sη ∈ Λ such that E[{Sβ(V, θ0) − B0Sη(V, θ0)} · BSη(V, θ0)] = 0 for all B (1 × r). As above, this is equivalent to requiring , implying . Thus, and , as expected.
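The link to maximum likelihood can be made explicit from the orthogonality condition that defines B0. The following is a sketch for scalar β and an (r × 1) nuisance score Sη, with arguments suppressed and E(SηSηT) assumed invertible.

```latex
\begin{align*}
E\{(S_\beta - B_0 S_\eta)\, S_\eta^T\}\, B^T = 0 \ \text{ for all } B
  &\iff E(S_\beta S_\eta^T) - B_0\, E(S_\eta S_\eta^T) = 0, \\
B_0 &= E(S_\beta S_\eta^T)\{E(S_\eta S_\eta^T)\}^{-1}, \\
S_{\mathrm{eff}} &= S_\beta - E(S_\beta S_\eta^T)\{E(S_\eta S_\eta^T)\}^{-1} S_\eta, \\
E(S_{\mathrm{eff}}^2) &= E(S_\beta^2)
   - E(S_\beta S_\eta^T)\{E(S_\eta S_\eta^T)\}^{-1} E(S_\eta S_\beta).
\end{align*}
```

By the partitioned-inverse formula, the reciprocal of the last expression is the (β, β) element of the inverse Fisher information matrix, which is the familiar large-sample variance of the maximum likelihood estimator for β.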

For a parametric model, it is usually unnecessary to appeal to the foregoing geometric construction to identify the efficient estimator and influence functions. In contrast, in the more complex case of a semiparametric model such results often may not be derived readily. However, as we now discuss, the geometric perspective may be generalized to semiparametric models, providing a systematic framework for identifying influence functions.

Geometric perspective on the semiparametric model

In its most general form, a semiparametric model for data V is characterized by the class of all densities p{v, θ(·)} that depend on an infinite-dimensional parameter θ(·). Often, analogous to the familiar parametric case, θ(·) ={β, η(·)}, where β is (p × 1) and η(·) is an infinite-dimensional nuisance parameter, and interest focuses on β. For example, in the regression situation in Section 1.2, β specifies a parametric model for the conditional expectation of a response given covariates, and η(·) represents all remaining aspects, such as other features of the conditional distribution, that are left unspecified. Alternatively, interest may focus on a functional β{θ(·)} of θ(·). This is the case in the semiparametric pretest–posttest model, where θ(·) represents all aspects of the distribution of V = (X1,Y1,X2,Y2,Z) that are left unspecified and β is given in (1).

The key to generalization of the results for parametric models to this setting is the notion of a parametric submodel. A parametric submodel is a parametric model contained in the semiparametric model that contains the truth. In the most general case, with densities p{v, θ(·)} and functional of interest β{θ(·)}, there is a true θ0(·) such that p0(v) = p{v, θ0(·)} is the density that generates the data. A parametric submodel is the class of all densities p(v, ξ) characterized by a finite-dimensional parameter ξ such that every density in the class belongs to the semiparametric model and the true density p0(v) belongs to the class, where the dimension r of ξ varies according to the particular choice of submodel. That is, there exists a density identified by the parameter ξ0 within the parameter space of the parametric submodel such that p0(v) = p(v, ξ0). In Appendix A.2 below, we give an explicit example of parametric submodels in the pretest–posttest setting.

The importance of this concept is that an estimator is an (RAL) estimator for β under the semiparametric model if it is an estimator under every parametric submodel. Thus, the class of estimators for β for the semiparametric model must be contained in the class of estimators for a parametric submodel and, hence, any influence function for the semiparametric model must be an influence function for a parametric submodel. Now, if Γξ is the tangent space for a given submodel p(v, ξ) with score vector Sξ(v, ξ) = ∂/∂ξ {log p(v, ξ)}, by Result A.1 the corresponding influence functions for estimators for β must be representable as φ(V) = φ*(V) + γ(V), where φ*(V) is any influence function in the parametric submodel and γ(V) ∈ Γξ⊥. Thus, intuitively, defining Γ to be the mean square closure of all parametric submodel tangent spaces [i.e., Γ = {h(V) such that there exists a sequence of parametric submodels with ∥h(V) − BjSξ(V, ξ0j)∥2 → 0 as j → ∞}, where Bj are (1 × rj) constant matrices], it may be shown that Result A.1 holds for semiparametric model influence functions. That is, all influence functions φ(V) for estimators for β in the semiparametric model may be represented as φ*(V) + ψ(V), where φ*(V) is any semiparametric model influence function and ψ(V) ∈ Γ⊥. Moreover, Result A.2 also holds: as in the parametric case, the efficient estimator with smallest variance has influence function φeff(V) and may be represented as φeff(V) = φ(V) − Π(φ|Γ⊥)(V) for any semiparametric model influence function φ(V). In Appendix A.2 we use these results to deduce full-data influence functions for the semiparametric pretest–posttest model.

Although the pretest–posttest model may be handled using the above development, it is worth noting that a framework analogous to the parametric case ensues when θ(·) = {β, η(·)}, so that p(v) = p{v, β, η(·)}, with true values β0, η0(·) such that the true density is p0(v) = p{v, β0, η0(·)}. Here, a parametric submodel is the class of all densities characterized by β and finite-dimensional ξ such that the class is contained in the semiparametric model and contains the true density. As a parametric model, a submodel has a corresponding nuisance tangent space and, as above, because the class of estimators for β for the semiparametric model must be contained in the class of estimators for a parametric submodel, influence functions for estimators for β for the semiparametric model must lie in a space orthogonal to all submodel nuisance tangent spaces. Thus, defining the semiparametric model nuisance tangent space Λ as the mean square closure of all parametric submodel nuisance tangent spaces, it may be shown that all influence functions for the semiparametric model lie in Λ⊥. Moreover, the semiparametric model tangent space is Γ = Λ ⊕ Γβ, where Γβ is the space spanned by Sβ{V,β0,η0(·)} = ∂/∂β [log p{V,β,η0(·)}] evaluated at β0, and the semiparametric model efficient score Seff is Sβ{V,β0,η0(·)} − Π(Sβ|Λ)(V), with efficient influence function φeff{V,β0,η0(·)} = {E([Seff{V,β0,η0(·)}]2)}−1 · Seff{V,β0,η0(·)}. The variance of φeff, {E([Seff{V,β0,η0(·)}]2)}−1, achieves the so-called semiparametric efficiency bound, that is, the supremum over all parametric submodels of the Cramér–Rao lower bounds for β.

A.2. DERIVATION OF FULL-DATA INFLUENCE FUNCTIONS

We apply the theory in Appendix A.1 to identify the class of all influence functions φ(V) for estimators for β depending on the full data V = (X1,Y1,X2,Y2,Z) under the semiparametric pretest–posttest model with no assumptions on p(v) beyond independence of (X1,Y1) and Z. By Result A.1, these may be written as φ(V) = φ*(V) + ψ(V), where ψ(V) ∈ Γ⊥ and φ* is any influence function, so we proceed by identifying a φ* and characterizing Γ⊥.

To identify a φ* under the semiparametric model, consider the two-sample t test estimator in (5). Because E(ZY2) = E{ZE(Y2|Z)} = δE(Y2|Z = 1), and similarly for E{(1 − Z)Y2}, this estimator is clearly consistent under the minimal assumptions on p(v), and from its large-sample expansion it is straightforward to derive the corresponding influence function

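$$\varphi^*(V) = \frac{Z\{Y_2 - E(Y_2 \mid Z = 1)\}}{\delta} - \frac{(1 - Z)\{Y_2 - E(Y_2 \mid Z = 0)\}}{1 - \delta}, \tag{A.3}$$

where we write this as a function of E(Y2|Z = 1) and E(Y2|Z = 0), following the convention noted after (3).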

To find Γ⊥, we consider the class of all densities for our semiparametric model. Incorporating the only restriction on such densities, independence of (X1,Y1) and Z, it follows that the class has elements of the form, in obvious notation, p(v) = p(x1,y1)p(x2|x1,y1,z)p(y2|x1,y1,x2,z)p(z|x1,y1), where p(z|x1,y1) = δ^z(1 − δ)^(1−z) and δ is known. The tangent space Γ is the mean square closure of the tangent spaces of parametric submodels

| (A.4) |

say. Each of the first three components of (A.4) must contain the truth. For example, if p0(X2|x1,y1,y2,z) is the true conditional density of X2 given (X1,Y1,Y2,Z), then, for h3 such that E{h3(X1,Y1,X2,Y2,Z)|X1,Y1,Y2,Z} = 0, a typical submodel for this component is

where ξ3 is sufficiently small so that p(x2|x1,y1,y2,z; ξ3) is a density, and the score with respect to ξ3 may be shown to be h3(X1,Y1,X2,Y2,Z), and similarly for the first two components of (A.4). Evidently Γ = Γ1 ⊕ Γ2 ⊕ Γ3, where (e.g., Newey, 1990)

It is easy to verify that Γ1, Γ2 and Γ3 are all mutually orthogonal; e.g., for h2 ∈ Γ2,h3 ∈ Γ3,

Thus, Γ⊥ is the space orthogonal to all of Γ1, Γ2 and Γ3. It is straightforward to verify that the space Γ4 = [h4(X1,Y1,Z) such that E{h4(X1,Y1,Z)|X1,Y1} = 0] is orthogonal to all of Γ1, Γ2 and Γ3. Moreover, it may also be deduced that Γ1 ⊕ Γ2 ⊕ Γ3 ⊕ Γ4 is in fact the entire Hilbert space of mean-zero functions of V. Thus, it follows that Γ4 contains all elements of this Hilbert space orthogonal to Γ, so that Γ⊥ = Γ4. Because Z is binary, we may write any element in Γ⊥ equivalently as Zh(1)(X1,Y1) + (1 − Z)h(0)(X1,Y1) for some h(c)(X1,Y1), c = 0,1, with finite variance such that E{Zh(1)(X1,Y1) + (1 − Z)h(0)(X1,Y1)|X1,Y1} = 0. This implies h(1)(X1,Y1) = −h(0)(X1,Y1) · (1 − δ)/δ for arbitrary h(0)(X1,Y1), showing that elements in Γ⊥ may be written as (Z − δ)h(X1,Y1) for h with var{h(X1,Y1)} < ∞. Equivalently, we may write these elements as −(Z − δ)h(X1,Y1), which proves convenient in later arguments.
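Two steps in the argument above can be written out. The first display is one standard way to build a submodel for the conditional density of X2 whose score at ξ3 = 0 is a given h3; this particular form is an assumption made for illustration and requires h3 to be bounded. The second display is the algebra that reduces elements of Γ⊥ to the form (Z − δ)h(X1,Y1), using P(Z = 1|X1,Y1) = δ.

```latex
\[
p(x_2 \mid x_1,y_1,y_2,z;\xi_3)
  = p_0(x_2 \mid x_1,y_1,y_2,z)\,\{1 + \xi_3\, h_3(x_1,y_1,x_2,y_2,z)\},
\qquad
\frac{\partial}{\partial \xi_3} \log p(x_2 \mid x_1,y_1,y_2,z;\xi_3)\Big|_{\xi_3 = 0} = h_3 .
\]
\begin{align*}
0 = E\{Z h^{(1)} + (1-Z) h^{(0)} \mid X_1,Y_1\}
  &= \delta\, h^{(1)} + (1-\delta)\, h^{(0)}
  \;\Longrightarrow\; h^{(1)} = -\frac{1-\delta}{\delta}\, h^{(0)}, \\
Z h^{(1)} + (1-Z) h^{(0)}
  &= h^{(0)} \Big\{ (1-Z) - \frac{Z(1-\delta)}{\delta} \Big\}
   = -\frac{Z-\delta}{\delta}\, h^{(0)}
   = (Z-\delta)\, h, \qquad h = -h^{(0)}/\delta .
\end{align*}
```

The submodel integrates to one because E(h3|X1,Y1,Y2,Z) = 0, and it is nonnegative for ξ3 small enough when h3 is bounded.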

Recalling that and and combining the foregoing results, we thus have that all influence functions for RAL estimators for β must be of the form

| (A.5) |

which may also be expressed in the equivalent form given in (3).
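As a numerical illustration of this class, the sketch below simulates a toy pretest–posttest model and compares the empirical variance of the two-sample influence function with that of another member of the class, obtained by subtracting (Z − δ)h(Y1). Everything specific here is an assumption chosen for illustration and is not taken from the paper: there is no X1 or X2, Y1 is standard normal, Y2 = Y1 + βZ + noise, δ = 0.5, and the function name `simulate_influence_functions` is hypothetical.

```python
import random
import statistics


def simulate_influence_functions(n=20000, delta=0.5, beta=1.0, seed=0):
    """Draw n subjects from a toy model and evaluate two influence functions.

    Toy model (an assumption for illustration only):
        Y1 ~ N(0, 1), Z ~ Bernoulli(delta) independent of Y1,
        Y2 = Y1 + beta * Z + N(0, 1) noise.
    Returns two lists: the two-sample influence function and the member of
    the class obtained by subtracting (Z - delta) * h(Y1).
    """
    rng = random.Random(seed)
    mu1, mu0 = beta, 0.0  # E(Y2 | Z = 1) and E(Y2 | Z = 0) in the toy model
    phi_two_sample, phi_adjusted = [], []
    for _ in range(n):
        y1 = rng.gauss(0.0, 1.0)
        z = 1 if rng.random() < delta else 0
        y2 = y1 + beta * z + rng.gauss(0.0, 1.0)
        two_sample = z * (y2 - mu1) / delta - (1 - z) * (y2 - mu0) / (1 - delta)
        # In this toy model E(Y2 | Y1, Z = c) - E(Y2 | Z = c) = Y1 for both arms,
        # so the variance-minimizing choice is h(Y1) = Y1/delta + Y1/(1 - delta).
        h = y1 / delta + y1 / (1 - delta)
        phi_two_sample.append(two_sample)
        phi_adjusted.append(two_sample - (z - delta) * h)
    return phi_two_sample, phi_adjusted


if __name__ == "__main__":
    unadjusted, adjusted = simulate_influence_functions()
    print("mean, variance (two-sample):",
          statistics.mean(unadjusted), statistics.pvariance(unadjusted))
    print("mean, variance (adjusted):  ",
          statistics.mean(adjusted), statistics.pvariance(adjusted))
```

Both functions have mean zero, so each corresponds to a consistent estimator. In this toy model the theoretical variances are 2/δ + 2/(1 − δ) for the two-sample function and 1/δ + 1/(1 − δ) for the adjusted one, so the baseline adjustment halves the variance.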

We may in fact identify the efficient influence function φeff in class (A.5). By Result A.2 we may represent φeff(X1,Y1,Y2,Z) = φ*(X1,Y1,Y2,Z) − Π(φ*|Γ⊥) for any arbitrary influence function φ*, and, from above, we know that Π(φ*|Γ⊥) must be of the form −(Z − δ)heff(X1,Y1) for some heff. Projection is a linear operation; hence, taking φ* to be (A.3), the projection may be found as the difference of the projections of each term in (A.3) separately. Moreover, by definition the residual for each term must be orthogonal to Γ⊥. Thus, we wish to find heff(c)(X1,Y1), c = 0, 1, such that

| (A.6) |

| (A.7) |

for all h(X1,Y1). For (A.6), then, we require

and similarly for (A.7). Using independence of (X1,Y1) and Z, we obtain

For example, for c = 1 this follows from

where P(Z = 1|X1,Y1) = δ, and similarly

Substituting in φ*(X1,Y1,Y2,Z) − Π(φ*|Γ⊥), the efficient influence function is
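For reference, the projection argument of this section can be carried through explicitly. The following is a sketch under the stated assumptions, taking φ* to be the two-sample influence function and using the shorthand μ_c = E(Y2|Z = c) and m_c(X1,Y1) = E(Y2|X1,Y1,Z = c), c = 0,1, both introduced here for illustration. The scaling and sign convention of the h functions below is ours and may differ from that used in (A.6) and (A.7).

```latex
\begin{align*}
&E\Big[\Big\{\frac{Z(Y_2-\mu_1)}{\delta} - (Z-\delta)\,h^{(1)}\Big\}(Z-\delta)\,h\Big] = 0
  \ \text{ for all } h(X_1,Y_1) \\
&\quad\Longrightarrow\;
h^{(1)}(X_1,Y_1)
  = \frac{E\{Z(Z-\delta)(Y_2-\mu_1) \mid X_1,Y_1\}}{\delta\,E\{(Z-\delta)^2 \mid X_1,Y_1\}}
  = \frac{(1-\delta)\,\delta\,\{m_1(X_1,Y_1)-\mu_1\}}{\delta \cdot \delta(1-\delta)}
  = \frac{m_1(X_1,Y_1)-\mu_1}{\delta}, \\[4pt]
&E\Big[\Big\{\frac{(1-Z)(Y_2-\mu_0)}{1-\delta} - (Z-\delta)\,h^{(0)}\Big\}(Z-\delta)\,h\Big] = 0
  \;\Longrightarrow\;
h^{(0)}(X_1,Y_1) = -\frac{m_0(X_1,Y_1)-\mu_0}{1-\delta}, \\[4pt]
&\varphi_{\mathrm{eff}}
  = \frac{Z(Y_2-\mu_1)}{\delta} - \frac{(1-Z)(Y_2-\mu_0)}{1-\delta}
    - (Z-\delta)\Big\{\frac{m_1(X_1,Y_1)-\mu_1}{\delta} + \frac{m_0(X_1,Y_1)-\mu_0}{1-\delta}\Big\}.
\end{align*}
```

The conditional expectations use P(Z = 1|X1,Y1) = δ, so that E{(Z − δ)2|X1,Y1} = δ(1 − δ), Z(Z − δ) = (1 − δ)Z and (1 − Z)(Z − δ) = −δ(1 − Z).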

A.3. REPRESENTATION OF OBSERVED-DATA INFLUENCE FUNCTIONS

Robins, Rotnitzky and Zhao (1994) derived the form of observed-data influence functions in (11) by adopting the geometric perspective on semiparametric models outlined in Appendix A.1. In contrast to the full-data situation of Appendix A.2, the relevant Hilbert space in which observed-data influence functions are elements is now that of all mean-zero, finite-variance random functions h(O), that is, such functions depending on the observed data, with analogous inner product and norm. The key is to identify the appropriate linear subspaces of this space (e.g., Γobs⊥, say) to deduce a representation of the influence functions, which in the general semiparametric model is a considerably more complex and delicate enterprise than for full-data problems.

We noted in Section 2.2 that, for purposes of deriving estimators for β based on the observed data, it suffices to identify observed-data influence functions for estimators for E(Y2|Z = 1) and E(Y2|Z = 0) separately. We now justify this claim. It is immediate from the definition (2) of an influence function that the differences of all observed-data influence functions for estimators for E(Y2|Z = 1) and E(Y2|Z = 0) are influence functions for observed-data estimators for β. Conversely, we may show that all observed-data influence functions for estimators for β can be written as the difference of observed-data influence functions for estimators for E(Y2|Z = 1) and E(Y2|Z = 0). In particular, if φ1(O) and φ0(O) are any observed-data influence functions for estimators for E(Y2|Z = 1) and E(Y2|Z = 0), respectively, then φ1(O) − φ0(O) is an influence function for β by the above reasoning. By Result A.1 it follows that any observed-data influence function for an estimator for β can be written as φ1(O) − φ0(O) + ψ(O), where ψ(O) ∈ Γobs⊥. We may rewrite this as {φ1(O) + ψ(O)} − φ0(O). However, by Result A.1, {φ1(O) + ψ(O)} is an observed-data influence function for an estimator for E(Y2|Z = 1), concluding the argument.

A.4. DERIVATION OF THE EFFICIENT OBSERVED DATA INFLUENCE FUNCTION

Robins, Rotnitzky and Zhao (1994) provide a general mechanism for deducing the form of the efficient influence function. In the pretest–posttest problem this approach may be used to find the optimal choices for h(1) and g(1)′ in (13) given in (14). However, because this mechanism is very general, for a simple model as in the pretest–posttest problem it is more direct and instructive to identify these choices via geometric arguments, as we now demonstrate.

We wish to determine heff(1) and geff(1)′ such that the variance of (13) is minimized; that is, writing (13) as A − B1 − B2, as E(A − B1 − B2) = 0, we wish to minimize E{(A − B1 − B2)2}. Geometrically, this is equivalent to finding the projection of A onto the linear subspace of (mean-zero) functions of the form B1 + B2. It is straightforward to show that B1 and B2 are uncorrelated, whence it follows that, as E{(A − B1 − B2)2} = E{(A − B1)2} + E{(A − B2)2} − E(A2) under these conditions, this minimization is equivalent to minimizing the variances of A − B1 and A − B2 separately. Because B1 and B2 are uncorrelated, they define orthogonal subspaces, so that these minimizations may be viewed as finding the separate projections of A onto these subspaces. Thus, as for the full-data case in Section A.2, we wish to find heff(1)(X1,Y1) and geff(1)′(X1,Y1,X2,Z) such that, for all h(1) and g(1)′,

A conditioning argument as in Section A.2 using E(R|X1,Y1,X2,Y2,Z) = π(X1,Y1,X2,Z) under MAR then leads to (14). In (14), geff(1)′ does not depend on heff(1), and heff(1) is identical to the optimal full-data choice in (4). These features need not hold for general semiparametric models; in particular, the choice of φF(V) in (11) that yields the efficient observed-data influence function will not be the efficient full-data influence function in general. Here, this is a consequence of the simple pretest–posttest structure.
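The variance decomposition that justifies treating the two minimizations separately can be checked directly. The following is a sketch for mean-zero A, B1 and B2 with E(B1B2) = 0.

```latex
\begin{align*}
E\{(A - B_1 - B_2)^2\}
  &= E(A^2) + E(B_1^2) + E(B_2^2) - 2E(AB_1) - 2E(AB_2) + 2E(B_1B_2) \\
  &= \{E(A^2) - 2E(AB_1) + E(B_1^2)\} + \{E(A^2) - 2E(AB_2) + E(B_2^2)\} - E(A^2) \\
  &= E\{(A - B_1)^2\} + E\{(A - B_2)^2\} - E(A^2).
\end{align*}
```

Because E(A2) does not depend on the choice of h(1) or g(1)′, minimizing the left-hand side is the same as minimizing the two squared-error terms on the right one at a time.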

A.5. DEMONSTRATION OF (17)

The form of the influence function (17) when γ in (16) is estimated follows from a general result shown by Robins, Rotnitzky and Zhao (1994). In particular, Robins, Rotnitzky and Zhao showed precisely that, in our context, the influence function for the estimator for E(Y2|Z = 1) found by deriving an estimator for E(Y2|Z = 1) from the influence function ψ(X1,Y1,X2,R,RY2,Z) in (15) (assuming π(1) is known) and then substituting an estimator for γ, where γ is estimated efficiently (e.g., by ML), is the residual from projection of ψ(X1,Y1,X2,R,RY2,Z) onto the linear subspace spanned by the score for γ. To demonstrate this, consider the special case of IWCC in (10), that is, (15) with h(1) ≡ q(1) ≡ 0. Suppose γ is estimated by ML from data with Z = 1 only. The score for γ is Sγ(X1,Y1,X2,Z;γ0) = d(X1,Y1,X2) · {R − π(1)(X1,Y1,X2;γ0)}Z and the relevant linear subspace is {BSγ(X1,Y1,X2,Z;γ0) for all (p × s) matrices B}. Here, bq(1) = 0 and the projection of ψ onto this space, B0Sγ(X1,Y1,X2,Z;γ0), say, must satisfy

for all B. By a conditioning argument similar to those in Appendices A.2 and A.4, we may find B0 and show the projection is equal to the second term in the influence function

| (A.8) |

and that (A.8) is (17) in this special case.