Abstract

Speech–sign or “bimodal” bilingualism is exceptional because distinct modalities allow for simultaneous production of two languages. We investigated the ramifications of this phenomenon for models of language production by eliciting language mixing from eleven hearing native users of American Sign Language (ASL) and English. Instead of switching between languages, bilinguals frequently produced code-blends (simultaneously produced English words and ASL signs). Code-blends resembled co-speech gesture with respect to synchronous vocal–manual timing and semantic equivalence. When ASL was the Matrix Language, no single-word code-blends were observed, suggesting stronger inhibition of English than ASL for these proficient bilinguals. We propose a model that accounts for similarities between co-speech gesture and code-blending and assumes interactions between ASL and English Formulators. The findings constrain language production models by demonstrating the possibility of simultaneously selecting two lexical representations (but not two propositions) for linguistic expression and by suggesting that lexical suppression is computationally more costly than lexical selection.

Introduction

Separate perceptual and motoric systems provide speech–sign or “bimodal” bilinguals with the unique opportunity to produce and perceive their two languages at the same time. In contrast, speech–speech or “unimodal” bilinguals cannot simultaneously produce two spoken words or phrases because they have only a single output channel available (the vocal tract). In addition, for unimodal bilinguals both languages are perceived by the same sensory system (audition), whereas for bimodal bilinguals one language is perceived auditorily and the other is perceived visually. We investigated the implications of bimodal bilingualism for models of language production by examining the nature of bimodal language mixing in adults who are native users of American Sign Language (ASL) and who are also native English speakers. Below we briefly present some important distinctions between ASL and English, describe some of the sociolinguistic characteristics of this bimodal bilingual population, and then highlight some of the implications that this population has for understanding bilingualism and language production in general.

A brief comparison of American Sign Language and English

American Sign Language has a grammar that is independent of and quite distinct from English (see Emmorey, 2002, for a review). For example, ASL allows much freer word order compared to English. English marks tense morphologically on verbs, whereas ASL expresses tense lexically via temporal adverbs (like many Southeast Asian languages). ASL contains several verbal aspect markers (expressed as distinct movement patterns superimposed on a verb root) that are not found in English, but are found in many other spoken languages (e.g., habitual, punctual, and durational aspect). ASL, unlike English, is a pro-drop language (like many Romance languages) and allows null pronouns in tensed clauses (Lillo-Martin, 1986). Obviously, ASL and English also differ in structure at the level of phonology. Signed languages, like spoken languages, exhibit a level of sublexical structure that involves segments and combinatorial rules, but phonological features are manual rather than oral (see Corina and Sandler, 1993, and Brentari, 1998, for reviews). Finally, English and ASL differ quite dramatically with respect to how spatial information is encoded. English, like many spoken languages, expresses locative information with prepositions, such as in, on, or under. In contrast, ASL encodes locative and motion information with verbal classifier predicates, somewhat akin to verbal classifiers in South American Indian languages. In these constructions, handshape morphemes specify object type, and the position of the hands in signing space schematically represents the spatial relation between two objects. Movement of the hand specifies the movement of an object through space (for whole-entity classifier constructions, see Emmorey, 2003). Thus, English and ASL are quite distinct from each other within phonological, morphological, and syntactic domains.

Some characteristics of the bimodal bilingual population

We examined HEARING ASL–English bilinguals because although Deaf1 signers are generally bilingual in ASL and English, many Deaf people prefer not to speak English, and comprehension of spoken English via lip reading is quite difficult. Hearing adults who grew up in Deaf families constitute a bilingual community, and this community exhibits characteristics observed in other bilingual communities. Many in this community identify themselves as Codas or Children of Deaf Adults who have a cultural identity defined in part by their bimodal bilingualism, as well as by shared childhood experiences in Deaf families. Bishop and Hicks (2005) draw a parallel between the marginalized Spanish–English bilinguals in New York City (Zentella, 1997) and the oppression of ASL and the Deaf community. Even within the educated public, battles continue over whether ASL can fulfill foreign language requirements (Wilcox, 1992) and over whether ASL should be part of a bilingual approach to deaf education (Lane, 1992). In addition, Deaf cultural values and norms are often not recognized or understood by the majority of the hearing English-speaking community (Lane, Hoffmeister and Bahan, 1996; Padden and Humphries, 2005). For example, within the Deaf community and in contrast to American society in general, face-to-face communication and prolonged eye contact are valued and expected behaviors. Hearing ASL–English bilinguals born into Deaf families, henceforth Codas, have an affiliation with both the Deaf community and the majority English-speaking community (Preston, 1994). Codas are not only bilingual, they are bicultural.

Issues raised by bimodal bilingualism

Bimodal bilingualism is distinct from unimodal bilingualism because the phonologies of the two languages are expressed by different articulators, thus allowing simultaneous articulation. Unimodal bilinguals cannot physically produce two words or phrases at the same time, e.g., saying dog and perro (“dog” in Spanish) simultaneously. Unimodal bilinguals therefore code-SWITCH between their two languages when producing bilingual utterances. Bimodal bilinguals have the option of switching between sign and speech or producing what we term code-BLENDS in which ASL signs are produced simultaneously with spoken English. We have coined the term CODE-BLEND to capture the unique simultaneous nature of bimodal language production that is obscured by the term CODE-SWITCH, which implies a change from one language to another.

The first question that we addressed was whether adult bimodal bilinguals prefer code-blending over code-switching. Previously, Petitto et al. (2001) reported that two young children acquiring spoken French and Langue des Signes Québécoise (LSQ) produced many more “simultaneous language mixes” (code-blends in our terminology) than code-switches: 94% vs. 6% of language mixing was simultaneous. However, it is possible that children acquiring sign and speech exhibit a different pattern of language mixing than adults. The language input to children acquiring a signed and a spoken language is often bimodal, i.e., words and signs are often produced simultaneously by deaf caregivers (Petitto et al., 2001; Baker and van den Bogaerde, in press). It is possible that in adulthood, bimodal bilinguals prefer to switch between languages, rather than produce simultaneous sign–speech expressions. A major goal of our study was to document the nature of code-switching and code-blending, using both conversation and narrative elicitation tasks.

If bimodal bilinguals prefer code-blending to code-switching, this has several important theoretical implications for models of bilingual language production. First, most models assume that one language must be chosen for production to avoid spoken word blends, such as saying English Spring simultaneously with German Frühling, producing the nonce form Springling (Green, 1986). However, models differ with respect to where this language output choice is made. Some models propose that language choice occurs early in production at the preverbal message stage (e.g., Poulisse and Bongaerts, 1994; La Heij, 2005); others propose that dual language activation ends at lemma selection with language output choice occurring at this stage (e.g., Hermans, Bongaerts, De Bot, and Schreuder, 1998), while still others argue that both languages remain active even at the phonological level (e.g., Costa, Caramazza, and Sebastián-Gallés, 2000; Colomé, 2001; Gollan and Acenas, 2004). If language choice occurs at the preverbal or lemma selection levels, then bimodal bilinguals should pattern like unimodal bilinguals and commonly switch between languages because one language has been selected for expression early in the production process. However, if the choice of output language arises primarily because of physical limitations on simultaneously producing two spoken words, then bimodal bilinguals should be free to produce signs and words at the same time, and code-blending can be frequent. If so, it will indicate that the architecture of the bilingual language production system does not require early language selection, thereby supporting models that propose a late locus for language selection.

Second, whether code-blending or code-switching is preferred by bimodal bilinguals will provide insight into the relative processing costs of lexical inhibition versus lexical selection. For code-blends, TWO lexical representations must be selected (an English word and an ASL sign). In contrast, for a code-switch, only one lexical item is selected, and the translation equivalent must be suppressed (Green, 1998; Meuter and Allport, 1999). If lexical selection is more computationally costly than suppression, then the expense of selecting two lexical representations should lead to a preference for code-switching (avoiding the cost of dual selection). On the other hand, if lexical inhibition is more costly than lexical selection, code-blending should be preferred by bimodal bilinguals to avoid the expense of suppressing the translation equivalent in one of the languages.

Another question of theoretical import is whether code-blending allows for the concurrent expression of different messages in the bilingual's two languages. If bimodal bilinguals can produce distinct propositions simultaneously, it will indicate that limits on the simultaneous processing of propositional information for ALL speakers arise from biological constraints on simultaneous articulation, rather than from more central constraints on information processing (Levelt, 1980). That is, monolinguals and unimodal bilinguals might have the cognitive capacity to produce distinct and unique propositions in each language, but are forced to produce information sequentially because only one set of articulators is available. On the other hand, if bimodal bilinguals rarely or never produce distinct propositions during code-blending, then it will suggest that there is a central limit on our cognitive ability to simultaneously encode distinct propositions.

When unimodal bilinguals produce code-switches, there is often an asymmetry between the participating languages. Myers-Scotton (1997, 2002) identifies the language that makes the larger contribution as the Matrix Language and the other language as the Embedded Language. The Matrix Language contributes the syntactic frame and tends to supply more words and morphemes to the sentence. To characterize the nature of bimodal bilingual language mixing, we investigated whether a Matrix Language – Embedded Language opposition can be identified during natural bimodal bilingual communication. Because ASL and English can be produced simultaneously with distinct articulators, it is conceivable that each language could provide a different syntactic frame with accompanying lexical items. Such a code-blending pattern would argue against the Matrix Language Frame (MLF) model and would suggest that models of language production are not constrained to encode a single syntactic structure for production.

It is important to distinguish between NATURAL bimodal interactions and SIMULTANEOUS COMMUNICATION or SIMCOM. Simcom is a communication system frequently used by educators of the deaf, and the goal is to produce grammatically correct spoken English and ASL at the same time. Grammatically correct English is important for educational purposes, and ASL is usually mixed with some form of Manually Coded English (an invented sign system designed to represent the morphology and syntax of English). Simcom is also sometimes used by bimodal bilinguals with a mixed audience of hearing people who do not know ASL and of Deaf people for whom spoken English is not accessible. Simcom differs from natural bimodal language because it is forced (both languages MUST be produced concurrently), and English invariably provides the syntactic frame, particularly within educational settings.

We also documented the distributional pattern of syntactic categories within code-blends. For unimodal bilinguals, nouns are more easily code-switched than verbs (Myers-Scotton, 2002; Muysken, 2005). Verbs may be resistant to intra-sentential code-switching because they are less easily integrated into the syntactic frame of the sentence (Myers-Scotton and Jake, 1995). We investigated whether this noun/verb asymmetry is present during language mixing when lexical items can be produced simultaneously. For example, bimodal bilinguals may have less difficulty “inserting” an ASL verb into an English sentence frame when the inflectional morphology of the spoken verb can be produced simultaneously with the signed verb.

Finally, the simultaneous production of signs and words has certain parallels with the monolingual production of co-speech gestures and spoken words. Co-speech gestures, like signs, are meaningful manual productions that can be produced concurrently with spoken words or phrases (McNeill, 1992). We explored the parallels and distinctions between code-blending and co-speech gesture in order to compare the language production system of monolingual speaker-gesturers with that of bilingual speaker-signers.

In sum, a major goal of this study was to characterize the nature of bimodal bilingual language mixing, and we addressed the following specific questions: a) Do bimodal bilinguals prefer to code-switch or code-blend? b) Are distinct propositions conveyed in each language within a code-blend? c) Is there a Matrix/Embedded Language asymmetry between the participating languages during bimodal code-blending? d) What is the distribution of syntactic categories for code-blends and code-switches? e) How are code-blends similar to and different from co-speech gesture?

A second major goal of this study was to put forth a model of bimodal bilingual language production that provides a framework for understanding the nature of code-blending. The model is laid out in the Discussion section below and is based on the model of speech production proposed by Levelt and colleagues (Levelt, 1989; Levelt, Roelofs, and Meyer, 1999) and integrates the model of co-speech gesture production proposed by Kita and Özyürek (2003).

Methods

Participants

Eleven ASL–English fluent bilinguals participated in the study (four males, seven females) with a mean age of 34 years (range: 22–53 years). All were hearing and grew up in families with two Deaf parents, and seven participants had Deaf siblings or other Deaf relatives. All participants rated themselves as highly proficient in both ASL and in English (a rating of 6 or higher on a 7-point fluency scale), with a marginal trend [t(10)=1.79, SE=.20, p=.10] towards higher proficiency ratings in English (M=6.91, SD=.30) than in ASL (M=6.55, SD=.52) perhaps reflecting immersion in an English-dominant environment. All but two participants reported using ASL in everyday life, and five participants were professional ASL interpreters.2 In addition, most of the participants (nine) had participated in cultural events for Codas where language mixing often occurs.
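The reported t statistic can be cross-checked against the other reported values. The following is a minimal sketch, assuming that the comparison is a paired t-test across the eleven participants (which is consistent with the reported 10 degrees of freedom) and that the reported SE is the standard error of the paired difference. The variable names are illustrative only.

```python
# Values reported in the Participants section.
mean_english = 6.91
mean_asl = 6.55
se_difference = 0.20   # assumed to be the SE of the paired difference
n_participants = 11

# Assumption: paired t-test, so df = n - 1 and t = mean difference / SE.
df = n_participants - 1
t_value = (mean_english - mean_asl) / se_difference

print(f"df = {df}, t = {t_value:.2f}")
```

Under these assumptions the result is approximately 1.8, which agrees with the reported t(10)=1.79 up to rounding of the published means and SE.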

Procedure

Participants were told that we were interested in how Codas talked to each other, and they were asked to perform two tasks. First, each participant conversed with another ASL–English bilingual whom they knew, either one of the experimenters (HB) or another friend. Participants were given a list of conversation topics that were related to Coda experiences, for example, parent–teacher meetings in which the participant was asked to interpret for his or her parents. The conversations lasted 15–20 minutes. Participants were told that although they might begin talking in English, it was fine to use ASL “whenever you feel like it” and that “we want you to have both languages ‘on’”. We designed the interaction in this way because participants would be more likely to code-mix if they were in a bilingual mode, interacting with a bilingual friend and discussing ASL and deafness-related topics (see Grosjean, 2001).3 In the second task, participants watched a seven-minute cartoon (Canary Row) and then re-told the cartoon to their conversational partner. This particular cartoon was chosen because it is commonly used to elicit co-speech gesture (e.g., McNeill, 1992) and therefore allowed us to easily compare the simultaneous production of ASL and spoken English with co-speech gesture. In addition, the cartoon re-telling task enabled us to elicit the same narrative for all participants.

All interactions were videotaped for analysis. Filming took place at the Salk Institute in San Diego, at Gallaudet University in Washington DC, or in Iowa City, Iowa, where a group of ASL–English bilinguals were participating in a neuroimaging study at the University of Iowa.

Results

All bilinguals produced at least one example of language mixing during their 15–20 minute conversation with an ASL–English bilingual. Only two bilinguals chose not to mix languages at all during the cartoon re-telling task; participant 11 (P11) signed her narrative without any speech, and P6 re-told the cartoon completely in English. For quantitative analyses, we extracted and transcribed a segment of speech/sign within each conversation and cartoon narrative (mean language sample length: 3.5 minutes). For the conversation, each extraction contained at least one continuous anecdote told by the participant and occurred five minutes into the conversation. This time-point was chosen to avoid formulaic introductory conversation. For the cartoon narrative, the extraction contained the first three scenes of the cartoon in which Tweety the bird and Sylvester the cat view each other through binoculars, and Sylvester makes two attempts to climb up a drainpipe to capture Tweety.

The videotaped excerpts were stamped with time-code that allowed for the analysis of the timing between the production of ASL and English. An utterance was coded as containing a code-blend if sign and speech overlapped, as determined by the time-code and frame-by-frame tracking with a jog shuttle control. An utterance was coded as containing a code-switch from English to ASL if no spoken words could be heard during the articulation of the ASL sign(s).
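The coding rule can be illustrated with a short sketch. It assumes, hypothetically, that each sign and each spoken word has been annotated with onset and offset times in seconds; the interval representation and function names are not part of the original coding procedure. The sketch also simplifies the code-switch criterion: it treats any sign produced without audible speech as a switch and does not check that the surrounding utterance was in English.

```python
from typing import List, Set, Tuple

Interval = Tuple[float, float]  # (onset, offset) in seconds; hypothetical annotation format


def overlaps(a: Interval, b: Interval) -> bool:
    """Return True if two time intervals share any stretch of time."""
    return a[0] < b[1] and b[0] < a[1]


def code_utterance(signs: List[Interval], words: List[Interval]) -> Set[str]:
    """Label an utterance with the types of language mixing it contains.

    A sign that overlaps any spoken word counts as a code-blend; a sign
    articulated with no audible speech counts as a code-switch. A single
    utterance may receive both labels.
    """
    labels: Set[str] = set()
    for sign in signs:
        if any(overlaps(sign, word) for word in words):
            labels.add("code-blend")
        else:
            labels.add("code-switch")
    return labels


# Hypothetical timings: the first sign overlaps speech, the second is produced in silence.
example = code_utterance(signs=[(0.5, 0.9), (2.0, 2.6)], words=[(0.4, 1.0), (1.1, 1.8)])
print(sorted(example))
```

Returning a set of labels reflects the fact that an utterance could contain both a code-blend and a code-switch.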

Code-switching versus code-blending

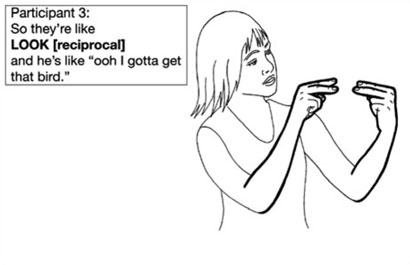

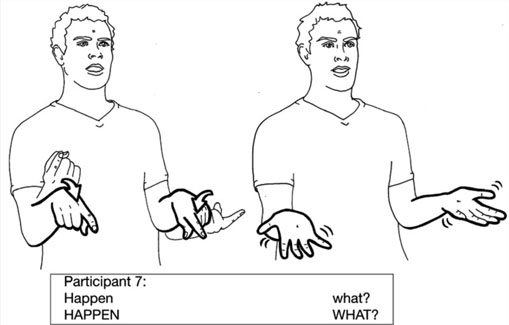

The results indicated that speech–sign bilinguals rarely code-switch. Of the 910 utterances in the data set, only 57 (6.26%) contained examples of code-switches in which the participant stopped talking and switched to signing without speech. The vast majority (91.84%) of intra-sentential code-switches consisted of insertions of a single sign, as illustrated in Figure 1. The code-switch to the ASL sign LOOK[reciprocal] shown in Figure 1 was produced by P3 to describe the cartoon scene in which Tweety and Sylvester look at each other through binoculars, and the same code-switch was also produced by P1 and P7 (see the Appendix for ASL transcription conventions).

Figure 1.

Example of a code-switch from English to an ASL sign that does not have a simple single-word translation equivalent in English. The ASL sign is bolded and written in capital letters. ASL illustration copyright © Karen Emmorey.

In contrast, bimodal bilinguals frequently produced code-blends, in which ASL signs were produced simultaneously with spoken English. Of the 910 utterances, 325 (35.71%) contained a code-blend. Of the utterances in the sample that contained language mixing, 98% (325/333) contained a code-blend. As seen in Table 1, the percentage of language mixing was similar for the narrative and the conversation data sets.
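The proportions reported for code-switches and code-blends follow directly from the utterance counts. The sketch below recomputes them and, assuming that 333 is the number of utterances containing a code-switch, a code-blend, or both, derives how many utterances contained both types of mixing. The derived counts are an inference from the reported figures, not values stated in the text.

```python
total_utterances = 910
with_code_switch = 57
with_code_blend = 325
with_any_mixing = 333  # assumed: utterances with a code-switch, a code-blend, or both


def pct(part: int, whole: int) -> float:
    """Percentage of `whole` made up by `part`, rounded to two decimals."""
    return round(100 * part / whole, 2)


print(pct(with_code_switch, total_utterances))  # 6.26
print(pct(with_code_blend, total_utterances))   # 35.71
print(pct(with_code_blend, with_any_mixing))    # 97.6

# Inclusion-exclusion: |switch AND blend| = |switch| + |blend| - |switch OR blend|
with_both = with_code_switch + with_code_blend - with_any_mixing
switch_only = with_any_mixing - with_code_blend
print(with_both, switch_only)                   # 49 8
```

On this reading, only 8 utterances contained a code-switch without any code-blend.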

Table 1.

Mean percent (M) and standard deviation (SD) of utterances containing a code-switch or a code-blend (note: utterances could contain both) for each language sample (N=11).

| Condition | Code-switches, M | Code-switches, SD | Code-blends, M | Code-blends, SD |
|---|---|---|---|---|
| Narrative | 5.66 | 11.25 | 29.27 | 39.93 |
| Conversation | 3.72 | 6.34 | 35.03 | 38.87 |

A microanalysis of the timing of ASL–English code-blends was conducted using Apple Final Cut Pro 5 software, which displays the speech waveform with the digitized video clip and allows slow motion audio. We selected 180 word–sign combinations from the narrative and the conversation data sets for digitized analysis in the following way. For 10 participants,4 we selected the first code-blends in each data set up to 20, excluding finger-spelled words and semantically non-equivalent code-blends (mean number of code-blends per participant: 16). For each blend, two independent raters judged the onset of the ASL sign and the onset of the auditory waveform for timing. The onset of an ASL sign was defined as when the handshape formed and/or the hand began to move toward the target location. The results indicated that the production of ASL signs and English words was highly synchronized. For the vast majority of code-blends (161/180 or 89.44%), the onset of the ASL sign was articulated simultaneously with the onset of the English word. For the remaining slightly asynchronous code-blends, the onset of the ASL sign preceded the onset of the English word (8.34%) by a mean of 230 ms (6.9 video frames) or slightly followed the English word (2.22%) by a mean of 133 ms (4.0 video frames).
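The correspondence between video frames and milliseconds can be reproduced with a simple conversion. The sketch below assumes a frame rate of 30 frames per second; the frame rate is not stated in the text and is chosen here only because it is consistent with the reported pairs of values.

```python
FRAME_RATE = 30.0  # frames per second; an assumption, not stated in the text


def frames_to_ms(frames: float, fps: float = FRAME_RATE) -> float:
    """Convert a duration in video frames to milliseconds."""
    return frames * 1000.0 / fps


print(round(frames_to_ms(6.9)))  # 230 (mean lead of the ASL sign)
print(round(frames_to_ms(4.0)))  # 133 (mean lag of the ASL sign)

# Share of the 180 analyzed code-blends with simultaneous sign and word onsets.
print(round(100 * 161 / 180, 2))  # 89.44
```

At this frame rate a single frame spans about 33 ms, which bounds the temporal resolution of the onset judgments.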

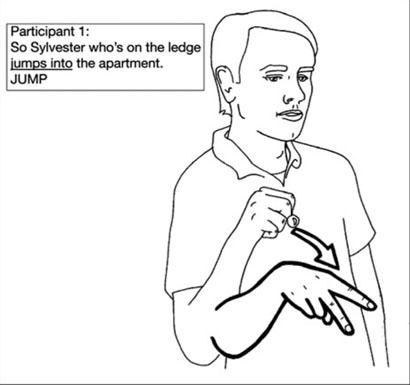

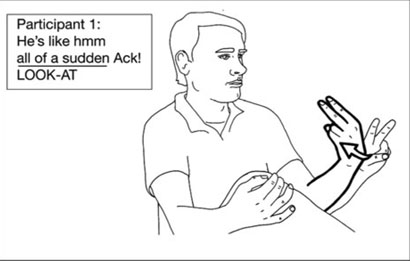

Figure 2 illustrates an example of an ASL–English code-blend from the cartoon narrative produced by P1. In this example, a single sign is articulated during the production of an English sentence. Further examples of single-sign code-blends are provided in examples (1a–c). Underlining indicates the speech that co-occurred with the ASL sign(s). In addition, participants produced longer code-blends, in which an ASL phrase or sentence was produced simultaneously with an English sentence, as illustrated in examples (2a–d). The language sample contained more multi-sign code-blends (205/325; 63.08%) than single-sign code-blends (120/325; 36.92%).

Figure 2.

An example of an ASL–English single-sign code-blend. Underlining indicates the speech that co-occurred with the illustrated sign. ASL illustration copyright © Karen Emmorey.

(1) Single-sign code-blends

a. P8 (conversation):

“She didn't even sign until fourteen.”

SIGN

b. P7 (cartoon narrative):

“He goes over and uh sees the gutter going up the side of the building.”

CL:1[upright person moves from a to b]

c. P5 (cartoon narrative):

“And there's the bird.”

BIRD

(2) Multi-sign code-blends

a. P9 (conversation):

“So, it's just like you don't like communicate very much at all.”

NOT COMMUNICATE

b. P4 (conversation):

“(He) was responsible for printing all of the school forms.”

RESPONSIBLE PRINT

c. P7 (cartoon narrative):

“I don't think he would really live.”

NOT THINK REALLY LIVE

d. P2 (conversation):

“Now I recently went back.”

NOW I RECENTLY GO-TO

Grammatical categories of ASL–English code-blends and code-switches

To investigate whether ASL–English intra-sentential code-blends or code-switches were constrained by grammatical category, we identified each ASL sign produced within a single-sign code-blend or code-switch as a verb, noun, pronoun, adjective, adverb, or other (e.g., preposition, conjunction). The percentage of single-sign code-blends that contained a verb (67.50%) was much higher than the percentage that contained a noun (6.67%). The same pattern was observed for code-switches (57.90% vs. 7.02%; see Table 2).

Table 2.

The percentages of ASL signs in each grammatical category for intra-sentential single-sign code-blends and code-switches, and the percent of multi-sign code-blends containing at least one sign within a grammatical category. Number of utterances is given in parentheses.

| Utterance type | Verb | Noun | Pronoun | Adjective | Adverb | Other |
|---|---|---|---|---|---|---|
| Code-blends (N=120) | 67.50 (81) | 6.67 (8) | 1.67 (2) | 17.50 (21) | 1.67 (2) | 5.0 (6) |
| Code-switches (N=57) | 57.90 (33) | 7.02 (4) | 15.79 (9) | 14.04 (8) | 0 | 5.26 (3) |
| Multi-sign Code-blends (N=205) | 66.34 (136) | 64.39 (132) | 27.80 (57) | 35.61 (73) | 19.51 (40) | 36.10 (74) |

Because multi-sign code-blends rarely contained more than one verb, we did not find a preference for verbs in this context (see examples (2a–d)), with 66.34% of multi-sign code-blends containing at least one ASL verb and 64.39% containing at least one ASL noun (see Table 2). About half of the multi-sign code-blends (102/205; 49.76%) contained both a verb and a noun or pronoun.
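The single-sign percentages in Table 2 can be recomputed from the category counts as a consistency check. In the sketch below, the noun count for code-switches (4) is inferred from the 7.02% reported in the text together with N=57; it is an assumption rather than a value quoted directly. The multi-sign row is omitted because its categories are not mutually exclusive.

```python
categories = ["verb", "noun", "pronoun", "adjective", "adverb", "other"]

# Counts of single-sign code-blends and code-switches per grammatical category.
single_sign_blends = [81, 8, 2, 21, 2, 6]
code_switches = [33, 4, 9, 8, 0, 3]  # noun count of 4 inferred from 7.02% of 57


def percentages(counts):
    """Map each category to its share of the row total, in percent."""
    total = sum(counts)
    return {cat: round(100 * n / total, 2) for cat, n in zip(categories, counts)}


# Each row should sum to its reported N.
assert sum(single_sign_blends) == 120
assert sum(code_switches) == 57

blend_pct = percentages(single_sign_blends)
switch_pct = percentages(code_switches)
print(blend_pct["verb"], blend_pct["noun"])    # 67.5 6.67
print(switch_pct["verb"], switch_pct["noun"])  # 57.89 7.02
```

The recomputed values match the reported ones up to small rounding differences in the second decimal place.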

Semantic characteristics of code-blends and code-switches

One consequence of lifting articulatory constraints on language mixing is that it becomes possible to produce information in one language while simultaneously producing additional, distinct information in the second language. However, as seen in Table 3, the majority of code-blends contained semantically equivalent words or phrases.5 Words or phrases were considered semantically equivalent in ASL and English if they constituted translation equivalents – the examples in (1a–c), (2a–d), and in Figure 2 are all translation equivalents. To define semantic equivalence, we used dictionary translation equivalence (e.g., “bird” and BIRD), and ASL classifier constructions that conveyed essentially the same information as the accompanying English were also considered semantically equivalent (e.g., “pipe” and CL:F handshape, 2-hands[narrow cylindrical object]). The percentage of semantically equivalent code-blends in our adult ASL–English bilinguals (82%) is similar to what Petitto et al. (2001) reported for their two bimodal bilingual children. They found that 89% of simultaneously produced LSQ signs and French words were “semantically congruent mixes”.

Table 3.

The semantic characteristics of ASL–English code-blends. The number of code-blended utterances is given in parentheses.

| Condition | Translation equivalents | Semantically non-equivalent | Timing discrepancy | Lexical retrieval error |
|---|---|---|---|---|
| Conversation (N=153) | 76.47% (117) | 19.61% (30) | 3.92% (6) | 0% (0) |
| Narrative (N=172) | 86.05% (148) | 12.79% (22) | 0% (0) | 1.16% (2) |
| Total (N=325) | 81.54% (265) | 16.00% (52) | 1.85% (6) | 0.61% (2) |

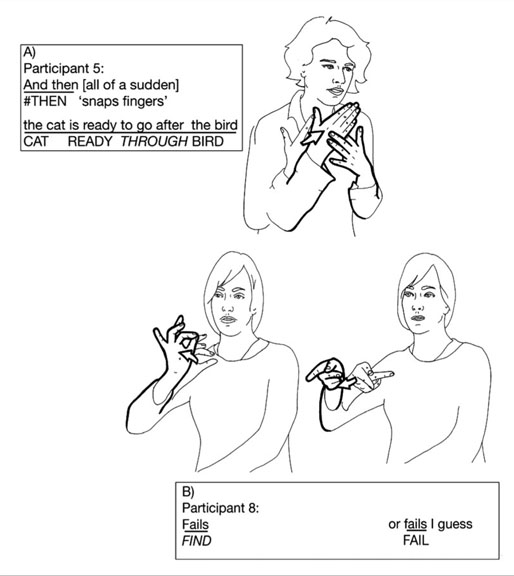

Semantically non-equivalent code-blends fell into three categories: a) the ASL sign(s) and English word(s) did not constitute translation equivalents and conveyed different information; b) there was a misalignment between the production of the ASL sign(s) and the articulation of the English word(s), e.g., an ASL sign was produced several seconds before the translation-equivalent word/phrase and simultaneously with a different English word/phrase; or c) a clearly incorrect sign was retrieved, i.e., a sign error occurred (errors were rare, but informative, see Discussion section and Figure 8 below). Examples of the first two categories are given in (3a–b).

Figure 8.

Examples of lexical retrieval errors during code-blending. The incorrect ASL signs are indicated by italics. A) The sign THROUGH is produced instead of the sign AFTER. The signs THROUGH and AFTER are phonologically related in ASL. B) The sign FIND is initially produced in a code-blend with “fail”, and then corrected to the semantically equivalent sign FAIL. ASL illustrations copyright © Karen Emmorey.

(3) Semantically non-equivalent code-blends

a. The bolded English word and ASL sign are not translation equivalents

P7 (cartoon narrative):

“Tweety has binoculars too.”

BIRD HAVE BINOCULARS

b. The bolded English and ASL classifier convey distinct information, and the English verb phrase and ASL classifier verb are not temporally aligned

P3 (conversation):

“And my mom's you know walking down.”

CL:Vwgl[walk down stairs]

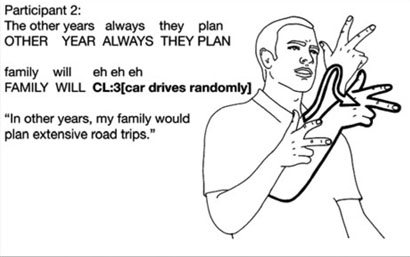

The code-switched signs fell into the following semantic categories: a) classifier constructions expressing motion or location; b) ASL expressions or signs that do not have an exact English translation (e.g., SCENE-CLOSE, which describes the fade-to-black transition between cartoon scenes); c) a lexical sign that has an English translation; or d) ASL pronouns (a point to a location in signing space associated with a referent). The proportion of signs within each category is presented in Table 4. The majority of code-switches were to ASL classifier constructions that express motion or location, which frequently do not have exact English translations, as illustrated in Figure 3. In addition, this example contains a vocalization (“eh, eh, eh”) produced during the code-switch. Participants P1, P2, and P7 also occasionally produced vocalizations with classifier constructions. These vocalizations were either sound effects (a type of iconic vocal gesture) or vocalizations that are sometimes produced by Deaf signers to accompany such constructions (the example in Figure 3 falls into this category). The latter type of vocalization would only be produced by bimodal bilinguals (i.e., it is not a vocal gesture that hearing non-signers would produce) and may or may not express meaning (“eh, eh, eh” in Figure 3 may indicate extensive duration).

Table 4.

Semantic characteristics of code-switched ASL signs in the language sample (N=57). The number of signs is given in parentheses.

| Classifier construction | No exact English translation | English translation equivalent exists | Pronoun |
|---|---|---|---|
| 42.10% (24) | 22.81% (13) | 19.30% (11) | 15.79% (9) |

Figure 3.

Example of a code-switch to an ASL classifier construction. The code-switched ASL sign is bolded. The utterance preceding the code-switch is an example of multi-word code-blending with ASL as the Matrix Language and English as the Accompanying Language. ASL illustration copyright © Karen Emmorey.

After classifier constructions, code-switches were most likely to be ASL lexical signs that do not have simple single-word translations in English, as exemplified by the code-switched sign LOOK[reciprocal] shown in Figure 1. This verb may have been frequently produced as a code-switch because it easily (and iconically) captures the scene with a single lexical form. The following are other unique code-switched signs that occurred in our language sample (only rough English glosses are given): LESS-SKILLED, FANCY, BUTTER-UP, SCENE-OPEN, DECORATED.

Thus, intra-sentential code-switches exhibited a semantic pattern that differed from code-blends. While code-blends were generally semantically equivalent, the majority of code-switches (65%) contained either novel ASL lexical signs or classifier constructions that do not occur in English and often do not have clear translation equivalents. In addition, for the majority of ASL code-switches (90%), the information that was signed in ASL was not repeated in English. Similarly, for unimodal bilinguals, switched constituents are rarely repeated (Poplack, 1980).

Finally, a unique reason that one bimodal bilingual code-switched was related to the physical properties of speech and sign. During his cartoon narrative, P7 was speaking (with some language mixing) and switched to signing without speech when he had a small coughing episode (this particular example of code-switching was not part of the extracted language sample).

Asymmetries between English and ASL: The Matrix Language and Embedded (or Accompanying) Language contrast

We observed many bimodal utterances in which English would be considered the Matrix Language providing the syntactic frame and the majority of morphemes. For these cases, ASL would be considered the Embedded Language, although Accompanying Language is a more appropriate term since ASL is produced simultaneously with English rather than embedded within the English sentence. Such examples are given in (1a–c), (2a–b), (3b), and in Figures 1 and 2. We also observed examples in which ASL would be considered the Matrix Language and English the Accompanying Language. In these cases, English phrases are clearly influenced by the syntax of ASL, and this type of speech has been informally called “Coda-talk” (Bishop and Hicks, 2005; Preston, 1994). An example of such ASL-influenced English can be found in the transcription that precedes the code-switch in Figure 3, and another example is illustrated in Figure 4. In the example in Figure 4, the spoken English phrase “Happen what?” is a word-for-sign translation of the ASL phrase that would be interpreted in this context as “And guess what?”

Figure 4.

Illustration of a bimodal utterance with ASL as the Matrix Language. The ASL phrase “HAPPEN WHAT?” would be translated as “And guess what?” in this context. The participant was describing a cartoon scene in which Sylvester notices that there is a drainpipe running up the side of Tweety's building and that the drainpipe is (conveniently!) right next to Tweety's window. The rhetorical question (HAPPEN WHAT?) was immediately followed by the answer (that the pipe was right next to the window where Tweety was sitting). ASL illustrations copyright © Karen Emmorey.

Eight participants produced at least one example of ASL-influenced English in their language sample, and two participants were particularly prone to this type of speech (P2 and P7). Of the 910 utterances in the data set, 146 (16%) contained ASL-influenced English with accompanying ASL signs. Further examples are given in (4a–c). Within Myers-Scotton's (1997, 2002) model of code-switching, ASL would be considered the Matrix Language for these examples since ASL provides the syntactic frame for code-blending, and English would be considered the Accompanying Language.

(4) Examples of ASL-influenced English: ASL is the Matrix Language and English is the Accompanying Language

a. P2 (cartoon narrative):

“Wonder what do.”

WONDER DO-DO

Translation: [Sylvester] wonders what to do.

b. P2 (conversation):

“show me when I am a little bit bad behavior …”

SHOW ME WHEN I LITTLE-BIT BAD BEHAVIOR

Translation: [My parents] would explain to me when I was behaving a little badly … [that I was lucky because our family took many vacations unlike our neighbors].

c. P7 (cartoon narrative):

“rolls happen to where? Right there”

ACROSS HAPPEN TO WHERE POINT

Translation: “[The bowling ball] rolls across [the street] to guess where? Right there! [the bowling alley].”

Note: The combination of “rolls” and ACROSS is an example of a semantically non-equivalent code-blend.

For some bimodal utterances, it was not possible to determine whether ASL or English was the Matrix or the Accompanying Language because neither language contributed more morphemes and the phrases are grammatically correct in each language. Examples without a clear Matrix Language choice can be found in (2c–d) and (3a).

Finally, we observed NO examples in which ASL was the Matrix Language and single-word English code-blends were produced, whereas we observed many examples in which English was the Matrix Language and single-sign code-blends were produced (e.g., examples (1a–c) and Figure 2). The participant (P11) who signed the entire cartoon narrative did not produce any accompanying spoken English during her ASL narrative. A hypothetical example of a single-word code-blend during a signed utterance is given in (5).

(5) Invented example of a single-word code-blend with ASL as the Matrix Language

“think”

THEN LATER CAT THINK WHAT-DO

Similarities and differences between ASL–English code-blending and co-speech gesture

Our analysis of code-blends reveals several parallels between code-blending and co-speech gesture. First, code-blends pattern like the gestures that accompany speech with respect to the distribution of information across modalities. For the vast majority of code-blends, the same concept is encoded in speech and in sign. Similarly, McNeill (1992) provides evidence that “gestures and speech are semantically and pragmatically coexpressive” (p. 23). That is, gestures that accompany a spoken utterance tend to present the same (or closely related) meanings and perform the same pragmatic functions. Second, ASL signs and English words are generally synchronous, as are gestures and speech. Timing studies by Kendon (1980) and McNeill (1992) indicate that the stroke of a gesture (the main part of the gesture) co-occurs with the phonological peak of the associated spoken utterance. Similarly, the onset of articulation for an ASL sign was synchronized with the onset of the associated spoken word, such that the phonological movement of the sign co-occurred with the phonological peak of the accompanying English word. Third, our data reveal that code-blending is common, but code-switching is rare. Similarly, switching from speech to gesture is rare; McNeill reports “90% of all gestures by speakers occur when the speaker is actually uttering something” (p. 23).

Code-blending and co-speech gesture appear to differ primarily with respect to conventionalization and the linguistic constraints that result from the fact that ASL is a separate language, independent of English. For example, co-speech gestures are idiosyncratic and convey meaning globally and synthetically (McNeill, 1992). In contrast, signs, like words, exhibit phonological and morphological structure and combine in phrases to create meaning, as seen particularly in multi-sign code-blends. Because co-speech gesture lacks this linguistic structure, it cannot drive the syntax of speech production, whereas ASL clearly can (see Figures 3 and 4, and examples (4a–c)). Gestures are dependent upon speech, whereas ASL signs are part of an independent linguistic system.

Finally, it should be noted that code-blending and co-speech gesture are not mutually exclusive. Participants produced both co-speech gestures and code-blends during their cartoon narratives and conversations.

Discussion

Bimodal bilingual language mixing was characterized by a) frequent code-blending, b) a clear preference for code-blending over code-switching, c) a higher proportion of verbs than nouns in single-sign code-switches and code-blends, and d) a strong tendency to produce semantically equivalent code-blends. In addition, asymmetries between the languages participating in bimodal language mixing were observed. Most often English was selected as the Matrix Language, but we also observed examples in which ASL was the Matrix Language and English the Accompanying Language. Finally, code-blending was similar to co-speech gesture with respect to the timing and coexpressiveness of the vocal and manual modalities, but differed with respect to the conventionality, combinational structure, and dependency on speech.

A model of ASL–English code-blending

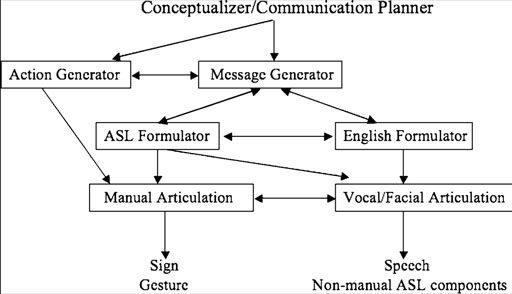

To account for the production of simultaneous ASL–English code blends, we adopt Levelt's speech production model (Levelt, 1989; Levelt et al., 1999), assuming separate but linked production mechanisms for sign and speech (see Emmorey, 2002, 2007 for evidence that Levelt et al.'s model can be adapted for sign language). We assume two separate Formulators for ASL and English to capture the fact that the lexicon, grammatical encoding, phonological assembly, and phonetic encoding are distinct for the two languages. However, we hypothesize that some or all of these components may be linked for bilinguals. A schematic representation of our proposed model of bimodal bilingual language production is shown in Figure 5.

Figure 5.

A model of ASL–English code-blend production based on Levelt (1989), which integrates Kita and Özyürek's (2003) model of speech and gesture production. Connections to the Action Generator from the Environment and Working Memory are not shown. The ASL and English Formulators are hypothesized to contain the lexicons and grammatical, morphological, and phonological encoding processes for each language.

To account for some of the similarities between code-blending and co-speech gesture, our model also incorporates relevant portions of the model of co-speech gesture production put forth by Kita and Özyürek (2003). Specifically, Kita and Özyürek (2003) propose that co-speech gestures arise from an Action Generator (a general mechanism for creating an action plan), which interacts with the Message Generator (Levelt, 1989) within the speech production system. By proposing that the Action Generator interacts with the Message Generator, Kita and Özyürek's model accounts for the fact that language can constrain the form of gesture. For example, Turkish speakers produce manner-of-motion gestures that differ from those of English speakers because Turkish encodes manner with a separate clause whereas English encodes manner and path information within the same clause.

The fact that co-speech gesture and ASL–English code-blends generally convey the same information in the two modalities indicates the centrality of the Message Generator for linguistic performance. Levelt (1989) argued that as soon as concepts are to be expressed, they are coded in a propositional mode of representation, which encodes the semantic structure of a preverbal message or interfacing representation (Bock, 1982). We propose that the Action Generator, ASL Formulator, and English Formulator all operate with respect to this interfacing representation. This architecture accounts for the production of the same (or complementary) information in gesture and speech and in ASL and English code-blends.

For semantically-equivalent code-blends, we hypothesize that the same concept from the Message Generator simultaneously activates lexical representations within both the English and ASL Formulators. Grammatical encoding occurs within the English Formulator when English is the Matrix Language and within the ASL Formulator when ASL is the Matrix Language. When English is the Matrix Language (examples (1)–(2) and Figures 1 and 2), both single-sign and multi-sign code-blends can be produced, and the syntax of English constrains the order in which ASL signs are produced. When ASL is selected as the Matrix Language (examples (4a–c) and Figures 3 and 4), we hypothesize that grammatical encoding in ASL drives the production of English, such that English words are inserted into the constituent structure assembled within the ASL Formulator and are produced simultaneously with their corresponding ASL signs. Self-monitoring for speech must be adjusted to permit function word omissions and non-English syntactic structures. To a monolingual English speaker, this type of speech would be considered “bad English”, and it would be avoided during Simcom because the goal of Simcom is to produce grammatically correct English and ASL at the same time.

However, for some examples it was not clear whether English or ASL should be considered the Matrix Language because the syntactic frame of the bimodal utterance was consistent with both languages (e.g., examples (2c–d)). It is possible that for these utterances, grammatical encoding occurs simultaneously in both English and ASL or both languages may tap shared syntactic structure (Hartsuiker, Pickering, & Veltkamp, 2004). Another possibility is that a Matrix Language has indeed been selected for these examples, but it must be identified based on other factors, such as the characteristics of preceding utterances, the frequency of the syntactic structure in ASL versus English, or other morpho-syntactic factors that indicate one language is serving as the Matrix Language.

Additional cross-linguistic work is needed to determine to what extent the ASL-influenced English (Coda-talk) that occurred during natural bilingual interactions is a phenomenon that is or is not unique to bimodal bilinguals. Such utterances may serve in part to define ASL–English bilinguals, particularly Codas, as a part of a bicultural group. Bishop and Hicks (2005) found similar English examples from a corpus of e-mails sent to a private listserv for Codas. Examples are given in (6) below. Bishop and Hicks (2005) found that the written English of these bimodal bilinguals often contained phrases and sentences that were clearly influenced by ASL, such as dropped subjects, missing determiners, topicalized sentence structure, and ASL glossing. While the written English examples from Bishop and Hicks (2005) were presumably produced without simultaneous signing, our spoken examples were almost always produced with ASL.

(6) Examples of written “Coda-talk” or ASL-influenced English from Bishop and Hicks (2005, pp. 205f)

Now is 6 AM, I am up 5 AM, go work soon

Sit close must sign

Have safe trip on plane

I am big excite

Finally, as noted in the introduction, deaf mothers and fathers produce code-blends when communicating with their children (Petitto et al., 2001; Baker and van den Bogaerde, in press). However, Petitto et al. (2001) argue that bimodal bilingual children differentiate their two languages very early in development, prior to first words; and of course, adult Codas can separately produce English or ASL without code-blending. Nonetheless, it is possible that the pattern of code-blending observed in our Coda participants was influenced by their early childhood language input. An investigation of code-blending by late-learners of ASL will elucidate which code-blending behaviors arise for all bimodal bilinguals and which are linked to exposure to language mixing in childhood.

Accounting for semantically non-equivalent code-blends

Although the majority of code-blends were semantically equivalent, the language sample nonetheless contained a fair number of semantically non-equivalent code-blends (see above, Table 3 and the examples in (3)). Figures 6–8 illustrate additional examples of such code-blends. In Figure 6, the participant is talking about a cartoon scene in which Sylvester the cat has climbed up the outside of the drainpipe next to Tweety's window. In this example, “he” refers to Sylvester who is nonchalantly watching Tweety swinging inside his birdcage on the window sill. Then all of a sudden, Tweety turns around and looks right at Sylvester and screams (“Ack!”). The sign glossed as LOOK-AT is produced at the same time as the English phrase “all of a sudden”. In this example, the ASL and English expressions each convey different information, although both expressions refer to the same event. To account for this type of code-blend, we propose that the preverbal message created by the Message Generator contains the event concept (e.g., Tweety suddenly looking at Sylvester), but in these relatively rare cases, different portions of the event concept are sent to the two language Formulators. The temporal aspect of the event (i.e., that it was sudden) is encoded in English, while the action (looking toward someone) is simultaneously encoded in ASL.

Figure 6.

Example of a semantically non-equivalent code-blend. In this example, the participant's body represents the object of LOOK-AT (i.e., Sylvester). ASL illustration copyright © Karen Emmorey.

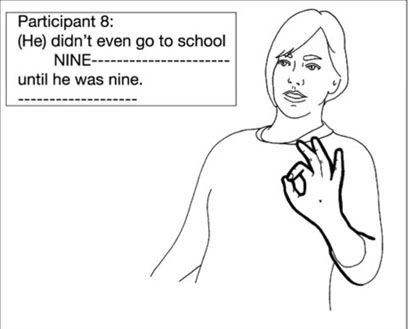

Another example of a semantically non-equivalent code-blend in which English and ASL simultaneously express distinct aspects of a concept is shown in Figure 7. Almost the entire utterance (“didn't go to school until he was”) was produced simultaneously with the ASL sign NINE, which foreshadowed the final English word (“nine”). Similarly, in example (3b) the ASL classifier verb foreshadows the following English verb phrase (“you know, walking down”), which is produced without accompanying ASL. Examples like these indicate that the timing of the production of ASL and English can be decoupled during language mixing. In addition, these code-blends may be similar to examples of co-speech gesture in which the gesture precedes the affiliated speech. For example, in a puzzle game in which speakers had to describe where to place blocks on a grid, one speaker produced a rotation gesture (her index finger circled counter-clockwise) while saying “the red one” but before describing how to rotate the block (“180 degrees on the left side”; Emmorey and Casey, 2001). Like ASL and English within a code-blended utterance, gesture and speech can occasionally be decoupled during production, but they are more commonly produced in synchrony (McNeill, 1992).

Figure 7.

Example of a semantically non-equivalent code-blend due to a large mismatch in the timing between the production of ASL and English. The dashed line indicates that the sign was held in space. ASL illustration copyright © Karen Emmorey.

Finally, we observed two examples of semantic non-equivalency due to lexical retrieval errors in ASL (see Figure 8). Both examples were from the cartoon re-telling (one from the language sample and one from a later scene). In Figure 8A, the participant produced the ASL sign THROUGH instead of the semantically appropriate sign AFTER. The sign THROUGH is semantically and phonologically related to the sign AFTER. Both signs are prepositions and both are two-handed signs produced with two B handshapes (for the sign AFTER, the dominant hand moves across the back of the non-dominant hand, rather than through the fingers). This error is likely a sign production error, parallel to a speech error. Like word substitution errors, sign substitution errors are semantically and sometimes phonologically related to the intended sign (Hohenberger, Happ and Leuninger, 2002).

In contrast, the sign error FIND illustrated in Figure 8B is neither semantically nor phonologically related to the intended sign FAIL. In this example, P8 was indicating that Sylvester had failed yet again to capture Tweety, and she catches her sign error and then produces a corrected code-blend. Although FAIL and FIND are not phonologically related in ASL (see Figure 8B for the sign illustrations), the ENGLISH TRANSLATIONS are phonologically related. Since ASL and English do not share phonological nodes, in order for English phonology to influence ASL production, there would need to be links from English phonological representations to ASL lexical representations. This code-blend error suggests that sign and speech production mechanisms might be linked such that occasionally, a weakly activated English phonological representation (e.g., /faynd/) can directly activate the corresponding ASL lexical representation (FIND) which is then encoded and manually produced, while at the same time, the more strongly activated English phonological representation (e.g., /feyl/) is phonetically encoded and produced by the vocal articulators. However, further investigation is needed to determine the frequency of this type of production error and the mechanism that might lead to such errors during bimodal bilingual language mixing.

Predictions for non-manual components of signed languages

The model shown in Figure 5 above also accounts for the fact that ASL, like many signed languages, makes use of facial (non-manual) articulations (Zeshan, 2004). For example, ASL adverbials are marked by distinct mouth configurations (Baker-Schenk, 1983; Anderson and Reilly, 1998); conditional clauses are marked by raised eyebrows and a slight head tilt (Baker and Padden, 1978); and wh-questions are marked by furrowed brows, squinted eyes, and a slight head shake (Baker and Cokley, 1980). Grammatical facial expressions have a relatively sharp onset and offset and are produced simultaneously with an associated manual sign or syntactic clause. In addition, some ASL signs are produced with MOUTH PATTERNS or with MOUTHING. Mouth patterns are mouth movements that are unrelated to spoken words (Boyes-Braem and Sutton-Spence, 2001). For example, the ASL sign variously glossed as FINALLY/AT-LAST/SUCCESS is accompanied by an opening of the mouth (“pah”) that is synchronized with the outward movement of the hands. ASL signs with obligatory mouth patterns are not particularly common and generally do not have a straightforward English translation. Mouthing refers to mouth movements that are related to a spoken word (or part of a word). For example, in ASL the sign FINISH is often accompanied by mouthing “fsh”. Full spoken words (e.g., finish) are usually not produced during mouthing, and ASL signers vary in the degree to which they produce mouthings with signs.

The architecture of our proposed model makes some interesting predictions regarding the production of non-manual ASL components during code-blending. ASL adverbials and mouth patterns are produced by ASL-specific mouth configurations and are therefore incompatible with a code-blended spoken English word. For example, it is not possible to produce the mouth pattern “pah” and say “finally” at the same time or to produce a spoken English verb along with an ASL mouth adverbial. We predict that this incompatibility prevents code-blending for ASL signs that contain an obligatory mouth pattern and that bimodal bilinguals may be more likely to code-switch for these ASL signs. Our language sample did not contain any examples of code-blends or code-switches for signs with obligatory mouth patterns, but again, such signs are not particularly abundant. ASL mouth adverbials are relatively common but are not obligatory, and therefore they could be dropped during a code-blend. Of the 22 code-switches to ASL verbs in our language sample, two were produced with a mouth pattern or adverbial. P2 produced the ASL sign glossed as DROOL (meaning “to strongly desire”) with his tongue out (a common mouth pattern for this sign), and P7 produced the verb BUTTER-UP (sometimes glossed as FLATTER) with the mm adverbial (lips protrude slightly), which indicates “with ease”; this code-switch occurred in the context of describing how easy it was to con his mother into something.

In contrast, mouthings are much more compatible with spoken English words, and we observed examples of code-blending with ASL signs that are normally accompanied by mouthings. In these cases, however, the full English word was produced. For example, P2 produced the sign FINISH while saying “finish” rather than mouthing “fsh”. In other contexts, bimodal bilinguals sometimes say “fish” while producing the sign FINISH, particularly when FINISH is produced as a single phrase meaning either “cut it out!” or “that's all there is”. Such examples, however, are not true code-blends between the English word fish and the ASL sign FINISH. Rather, they are examples of voiced ASL mouthings that create a homophone with an unrelated English word. In general, during code-blending ASL mouthings are supplanted by the articulation of the corresponding English word.

Finally, linguistic facial expressions produced by changes in eyebrow configuration do not conflict with the production of spoken English words. Our filming setup did not allow for a detailed examination of the facial expressions produced during bilingual language mixing because there was no second camera focused on the face of the participants. However, in a separate study, Pyers and Emmorey (in press) found that bimodal bilinguals (Codas) produced ASL facial expressions that mark conditional clauses (raised eyebrows) and wh-questions (furrowed brows) while conversing with English speakers who did not know ASL. For example, while asking, “Where did you grow up?” ASL–English bilinguals were significantly more likely to furrow their brows than monolingual English speakers, and while saying “If I won five hundred dollars”, they were more likely to raise their eyebrows. Furthermore, the production of these ASL facial expressions was timed to co-occur with the beginning of the appropriate syntactic clause in spoken English. Given that ASL linguistic markers conveyed by changes in eyebrow configuration were unintentionally produced when bimodal bilinguals conversed with monolingual non-signers, it is likely that they would also produce such facial expressions while conversing and code-blending with a bimodal bilingual. We predict that only non-manual linguistic markers that are compatible with the articulation of speech will be produced during code-blending. In essence, we hypothesize that speech is dominant for the vocal articulators during code-blending, which forces a code-switch to ASL for signs with obligatory mouth patterns or for verbs with mouth adverbials.

Accounting for similarities between co-speech gesture and code-blending

In the Kita and Özyürek (2003) model of co-speech gesture, the Action Generator also interfaces with the environment and working memory, which accounts for cases in which gesture conveys additional or distinct information from speech. For example, a spatio-motoric representation of an event in working memory can interface with the Action Generator to create a gesture that encodes manner of motion (e.g., “rolling”), while the simultaneously produced spoken verb expresses only path information (e.g., iniyor “descends” in Turkish). In contrast, when sign and speech express distinct information (i.e., semantically non-equivalent code-blends), we hypothesize that the Message Generator sends separate information to the ASL and English Formulators (as in Figure 6 above) or that non-equivalent lexical representations are selected (e.g., saying “Tweety” and signing BIRD). However, for both co-speech gesture and code-blends, expressing distinct information in the two modalities is the exception rather than the rule.

The primary goal of Kita and Özyürek's model is to specify how the content of a representational (iconic) gesture is determined, rather than to account for the synchronization between speech and gesture. Similarly, the primary aim of our model of bimodal language mixing is to account for the content of ASL–English code-blends, rather than to explicate the timing of their production. Nonetheless, our data indicate that there is a tight synchronous coupling between words and signs that parallels that observed for speech and gesture. Furthermore, in a study specifically comparing ASL and co-speech gesture production by non-native ASL–English bilinguals, Naughton (1996) found that the timing of their ASL signs with respect to co-occurring speech had the same tight temporal alignment as their co-speech gestures.

Therefore, as a step toward understanding the synchronization of co-speech gesture and of code-blending, our model specifies a link between manual and vocal/facial articulation. The link is bi-directional because current evidence suggests that gestures are synchronized with speech (rather than vice versa) and that ASL mouth patterns and non-manual markers are synchronized with signs (rather than vice versa) (McNeill, 1992; Woll, 2001). We hypothesize that both manual and vocal/facial articulations are dominated by a single motor control system, which accounts for the exquisite timing between a) manual gestures and speech, b) ASL signs and English words within code-blends, and c) manual and non-manual articulations during signing.

One further point concerning the output of the manual articulation component is the ability to simultaneously produce linguistic and gestural information within the same modality. An example of simultaneous gesture and sign can be found in the code-blend example illustrated in Figure 6. In this example, the ASL sign LOOK-AT is produced with a rapid twisting motion. However, the velocity of this movement is not a grammatical morpheme indicating speed; rather, the shortened motion is a gestural overlay, analogous to shortening the vowel within a spoken verb to emphasize the speed of an action. We propose that the output overlap between linguistic and gestural expression arises from a single motor control component that receives information from an Action Generator (that interfaces with the knowledge of the environment) and from the ASL Formulator.6

Finally, an intriguing possibility is that the Action Generator for gesture might influence ASL production via the Message Generator during ASL–English code-blending. According to Kita and Özyürek (2003, p. 29), “[t]he Action Generator is a general mechanism for generating a plan for action in real or imagined space”, and the Action Generator and the Message Generator “constantly exchange information and the exchange involves transformations between the two informational formats [i.e., spatio-motoric and propositional, respectively]”. This interaction might help explain the frequency of verbs produced during single-sign code-blends. That is, the Message Generator may be primed to express action information manually, and therefore ASL verbs may be more likely to be produced during an English utterance.

Implications for models of language production

Code-blending versus code-switching

Bimodal bilinguals frequently, and apparently easily, produced signs and words at the same time. Code-blends vastly outnumbered code-switches, and the majority of code-blends expressed semantically equivalent information in the two languages. The most parsimonious way to explain this pattern of bimodal bilingual language production is to assume that the architecture of the language production system does not REQUIRE that a single lexical representation be selected at the preverbal message level or at the lemma level. In addition, when semantically equivalent representations are available for selection it may be easier for the system to select two representations for further processing (i.e., for production) than it is to select just one representation and suppress the other. In other words, our findings imply that, for all speakers (whether bimodal, unimodal, or monolingual), lexical selection is computationally inexpensive relative to lexical suppression. Here we assume that bilinguals do not code-blend 100% of the time (see Table 1) because code-blending only occurs when both English and ASL become highly active at roughly the same time. On this view, all bilinguals would code-blend if they could, and to the extent that synonyms exist within language (Peterson and Savoy, 1998), even monolinguals would simultaneously produce two words if they could.

The frequency of code-blending in bimodal bilinguals is also consistent with the many experimental results suggesting that both languages are active all the way to the level of phonology for bilingual speakers (for a review see Kroll, Bobb and Wodniecka, 2006). Such data pose an interesting problem for modeling unimodal bilingualism in which physical limitations require that just one language be produced at a time. That is, if both languages are active all the way to phonology, then how does one representation ultimately get selected for production? The overwhelming evidence for dual-language activation motivated some extreme solutions to this problem. For example, some have argued that lexical representations do not compete for selection between languages (Costa and Caramazza, 1999), and others have suggested completely abandoning the notion of competition for selection even within the context of monolingual production (Finkbeiner, Gollan, and Caramazza, 2006). Our results build on previous findings by indicating that not only can both of a bilingual's languages be active at all levels of production, but it is also possible to actually produce both languages simultaneously provided that the articulatory constraints are removed. Although future work is needed to determine the locus at which bimodal bilinguals select two representations for production, it may be simplest to assume that the locus of lexical selection (for all bilinguals) arises relatively late. If the system required that a single representation be selected for output early in production, this would have created a preference for code-switching instead of the preference for code-blending that we observed.

Why and when do code-blends arise?

The fact that the majority of code-blends contained the same information in both languages indicates that the primary function of code-blending is not to convey distinct information in two languages. Lifting the biological constraints on simultaneous articulation does not result in different messages encoded by the two modalities. This result is consistent with an early proposal by Levelt (1980), who suggested that there are central processing constraints for all natural languages that impede the production or interpretation of two concurrent propositions. For example, the availability of two independent articulators for signed languages (the two hands) does not result in the simultaneous production of distinct messages with each hand by signers. Similarly, bimodal bilinguals do not take advantage of the independence of the vocal tract from the manual articulators to simultaneously produce distinct propositions when mixing their two languages. Models of language production are thus constrained at the level of conceptualization to encode a single proposition for linguistic expression, even when two languages can be expressed simultaneously.

Importantly, the choice of a Matrix Language is likely to be made at early stages in production, because one language must provide the syntactic frame. When English is selected as the Matrix Language, either single-sign or multi-sign code-blends are produced. What determines which lexical concepts are selected for expression in ASL? We hypothesize that the salience or importance of a concept to the discourse may be one factor that determines lexical selection. Evidence for this hypothesis is the fact that verbs were most frequently produced in single-sign code-blends. Verbs are not only the heads of sentential clauses but they also express critical event-related information within a narrative. The Message Generator may be more likely to activate lexical representations for ASL verbs because of the semantic and syntactic centrality of verbs. However, there are likely to be many linguistic and social factors that trigger code-blending, just as there are many linguistic and social factors that trigger code-switching for unimodal bilinguals (see for example, Muysken, 2005). For bimodal bilinguals, code-blending likely serves the same social and discourse functions that code-switching serves for unimodal bilinguals.

Why do code-switches (instead of code-blends) happen in bimodal bilinguals?

The semantic characteristics of bimodal code-switching suggest that switches from speaking to signing often occur when ASL is able to convey information in a unique manner, not captured by English. Unimodal bilinguals are also likely to switch between languages when one language does not have a relevant translation for a word, concept, or expression that they wish to express. However, the primary function of code-switching for fluent unimodal bilinguals is not to convey untranslatable lexical items since the majority of code-switches are made up of constituents that are larger than a single lexical item (Poplack, 1980). Rather, code-switching appears to serve discourse and social functions such as creating identity, establishing linguistic proficiency, signaling topic changes, creating emphasis, etc. (e.g., Romaine, 1989; Zentella, 1997).

For unimodal bilinguals, nouns are easily code-switched, but verbs switch much less frequently (Myers-Scotton, 1997; Muysken, 2005). For example, Poplack (1980) reported that verbs made up only 7% of intra-sentential code-switches for Spanish–English bilinguals. However, we found that ASL verbs were much more likely to be produced within a single-sign code-blend or a code-switch (see Table 2 above). Verbs may be easily code-blended because the inflectional morphology of ASL and English does not have to be integrated. That is, English tense inflections can remain in the speech while the ASL verb is produced simultaneously. For example, for the code-blend in Figure 2 above, the English verb is inflected for third person (jumps), and the ASL verb JUMP is produced at the same time. Furthermore, there are ways in which the languages can be adapted to match the morphology of the other. We observed examples in which an English verb was repeated in order to match the aspectual morphology of the ASL verb. For example, P2 used the reduplicative form of the ASL verb CHASE to indicate that Sylvester was chased all around the room. The English verb chase was repeated with each repetition of the ASL verb.

Finally, bimodal code-switching, unlike unimodal code-switching, can be motivated by differing physical constraints on the two languages. For example, bimodal bilinguals have been observed switching from speaking to signing during dinner conversations when eating impedes speech, or switching to ASL when the ambient noise level in an environment becomes too loud (e.g., in a bar when a band starts to play loudly). Thus, one reason for code-switching that is unique to bimodal bilinguals stems from the biology of speech and sign.

The possible role of suppression

Our analysis of language asymmetries within code-blended utterances also revealed a surprising and interesting gap: there were no examples in which ASL was the Matrix Language and a single-word English code-blend was produced, as illustrated by the invented example (5). This gap may arise because of the need to suppress English (Green, 1998) during production of ASL. Although the Codas in our study are native ASL signers and English speakers and highly proficient in both languages, on average they reported slightly higher proficiency ratings in English than in ASL. In addition, English is the majority language within the U.S.A., and so participants were immersed in an English-dominant environment. Codas arguably perceive English more often than ASL (e.g., on television, on radio, in non-Deaf businesses, etc.), and they probably produce English more often as well.

Studies of unimodal bilingual language production demonstrate that it is harder for bilinguals to switch from speaking their less dominant language to speaking their more dominant language than the reverse (e.g., Meuter and Allport, 1999). Switching to the dominant language is hypothesized to be more difficult because its lexical representations have been strongly inhibited to allow production in the less dominant language. On this view, bimodal bilinguals may not produce single-word English code-blends while signing ASL because English, the dominant language, has been strongly suppressed. In contrast, bimodal bilinguals can produce multi-word continuous code-blending while signing because English in these cases has not been inhibited (e.g., examples (4a–c) and Figures 3–4), and the unique nature of bimodal bilingualism allows the production of two languages simultaneously.

When bimodal bilinguals are speaking English, we assume that ASL is not strongly suppressed, and code-blends occur whenever the activation level of an ASL sign reaches a certain selection threshold. The hypothesis that ASL remains weakly active while speaking English is supported by the fact that bimodal bilinguals sometimes unintentionally produce ASL signs while talking with hearing non-signers (Casey and Emmorey, in press). In sum, if bimodal bilinguals suppress English while signing more than they suppress ASL while speaking, this asymmetric inhibition pattern explains why single-sign code-blends occur while speaking, but the reverse is unattested (or at least very rare). If our interpretation is correct, the results support models of bilingual language production that incorporate asymmetric inhibition (e.g., Green, 1998; Meuter and Allport, 1999; but see Costa, Santesteban, and Ivanova, 2006 for evidence that asymmetric switch costs only occur when one language is dominant over the other).
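
The threshold account above can be made concrete with a small toy sketch. It is purely illustrative and is not part of the model or analyses reported here: the activation values, the selection threshold, and the suppression strengths are all hypothetical numbers chosen only to show how asymmetric inhibition could yield single-sign code-blends while speaking English but no single-word English code-blends while signing ASL. Multi-word continuous code-blending, in which English is assumed not to be inhibited, is outside the scope of the sketch.

```python
from dataclasses import dataclass

# All numbers below are hypothetical, chosen only for illustration.
SELECTION_THRESHOLD = 0.6

# Suppression applied to the non-matrix language, keyed by Matrix Language.
# Assumption: English is strongly suppressed while signing ASL,
# whereas ASL is only weakly suppressed while speaking English.
SUPPRESSION_OF_OTHER = {"English": 0.1, "ASL": 0.7}


@dataclass
class LexicalConcept:
    gloss: str
    asl_activation: float      # hypothetical activation of the ASL sign (0..1)
    english_activation: float  # hypothetical activation of the English word (0..1)


def produce(concept: LexicalConcept, matrix_language: str) -> str:
    """Return 'code-blend' if the non-matrix item reaches threshold after
    suppression; otherwise return '<matrix language> only'."""
    if matrix_language == "English":
        other_activation = concept.asl_activation
    elif matrix_language == "ASL":
        other_activation = concept.english_activation
    else:
        raise ValueError("matrix_language must be 'English' or 'ASL'")

    effective = other_activation - SUPPRESSION_OF_OTHER[matrix_language]
    if effective >= SELECTION_THRESHOLD:
        return "code-blend"
    return f"{matrix_language} only"


if __name__ == "__main__":
    salient_verb = LexicalConcept("JUMP", asl_activation=0.9, english_activation=0.9)
    function_word = LexicalConcept("THE", asl_activation=0.3, english_activation=0.9)
    for concept in (salient_verb, function_word):
        for matrix in ("English", "ASL"):
            print(concept.gloss, matrix, "->", produce(concept, matrix))
```

With these invented values, a highly active concept is blended when English is the Matrix Language, but no concept can be blended when ASL is the Matrix Language, because even a maximally active English word falls below threshold after suppression.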

The current investigation demonstrated how bimodal bilingualism offers novel insight into the nature of language production. In future work, bimodal bilingualism can provide a unique vantage point from which to study the temporal and linguistic constraints on language mixing. For example, to the extent that shared phonological representations give rise to competition or cuing between spoken languages, such effects should not be found for bimodal bilinguals because phonological nodes cannot be shared between sign and speech. To the extent that switch costs arise from the need to access the same articulators for the production of two different languages, such switch costs should not be observed in bimodal bilinguals. By illuminating the cognitive mechanisms that allow for simultaneous output produced by the hands and vocal tract, a full characterization of bimodal bilingual code-blending is also likely to inform our understanding of co-speech gesture and – more broadly – of how people perform more than one task at a time.

Appendix. ASL transcription conventions

The following notational conventions and symbols are commonly used by sign language researchers:

APPLE Words in capital letters represent English glosses (the nearest equivalent translation) for ASL signs.

JOT-DOWN Multi-word glosses connected by hyphens are used when more than one English word is required to translate a single sign.

GIVE [durational] A bracketed word following a sign indicates a change in meaning associated with grammatical morphology, e.g., the durational inflection in this example.

DANCE++ ++ indicates that the sign is repeated.

#BACK A finger-spelled sign.

CL:3 [car moves randomly] A classifier construction produced with a three handshape (a vehicle classifier). The meaning of the classifier construction follows in brackets.

CL:F A classifier construction produced with F handshapes (thumb and index finger touch, remaining fingers extended).

It's funny now FUNNY NOW Underlining indicates the speech that co-occurred with the signs.

[all of a sudden] ‘snaps fingers’ Manual gestures are indicated by single quotes and brackets indicate the associated speech.

NINE— A dashed line indicates that a sign was held in space.

Footnotes

This research was supported by a grant from the National Institute of Child Health and Human Development (HD-047736) awarded to Karen Emmorey and San Diego State University. Tamar Gollan was supported by a grant from the National Institute on Deafness and Other Communication Disorders (K23 DC 001910). We thank Danielle Lucien, Rachel Morgan, Jamie Smith and Monica Soliongco for help with data coding and transcription. We thank David Green and two anonymous reviewers for helpful comments on an earlier draft of the manuscript. We also thank Rob Hills, Peggy Lott, and Daniel Renner for creating the ASL illustrations. We are especially grateful to all of our bilingual participants.

Following convention, lower-case deaf refers to audiological status, and upper-case Deaf is used when membership in the Deaf community and use of a signed language is at issue.

The pattern of results did not differ between participants who were interpreters and those who were not.

We also collected data from participants in a monolingual mode, i.e., interacting with an English speaker whom they did not know and who did not know any ASL while discussing topics of general interest (e.g., differences between men and women). Whether participants initially interacted with a bilingual or a monolingual partner was counterbalanced across participants. Results from the monolingual condition are presented in Casey and Emmorey (in press).

We excluded one participant because he did not produce any code-blends in his narrative re-telling and only a few code-blends during his conversation.

A larger proportion of semantically equivalent code-blends (94%) was reported in Emmorey, Borinstein and Thompson (2005), which had a smaller language sample.

It is possible that the Action Generator also connects with the mechanism controlling vocal articulation because speech and vocal gesture can also be produced simultaneously. For example, vowel length or pitch can be manipulated as gestural overlays, produced simultaneously with speech. One can say “It was a looooong time” (a vocal gesture illustrating length) or “The bird went up [rising pitch] and down [falling pitch]”. The changes in pitch are vocal gestures metaphorically representing rising and falling motion (Okrent, 2002).

References

- Anderson DE, Reilly J. PAH! The acquisition of adverbials in ASL. Sign Language and Linguistics. 1998;1-2:117–142.

- Baker A, Van den Bogaerde B. Codemixing in signs and words in input to and output from children. In: Plaza-Pust C, Morales López E, editors. Sign bilingualism: Language development, interaction, and maintenance in sign language contact situations. John Benjamins; Amsterdam: in press.

- Baker C, Cokely D. American Sign Language: A teacher's resource text on grammar and culture. TJ Publishers; Silver Spring, MD: 1980.

- Baker C, Padden C. Focusing on the nonmanual components of American Sign Language. In: Siple P, editor. Understanding language through sign language research. Academic Press; New York: 1978. pp. 27–57.

- Baker-Shenk C. A micro-analysis of the nonmanual components of questions in American Sign Language. University of California; Berkeley: 1983. Ph.D. dissertation.

- Bishop M, Hicks S. Orange Eyes: Bimodal bilingualism in hearing adults from Deaf families. Sign Language Studies. 2005;5(2):188–230.

- Bock K. Toward a cognitive psychology of syntax: Information processing contributions to sentence formulation. Psychological Review. 1982;89:1–47.

- Boyes-Braem P, Sutton-Spence R, editors. The hands are the head of the mouth: The mouth as articulator in sign languages. Signum-Verlag; Hamburg: 2001.

- Brentari D. A prosodic model of sign language phonology. MIT Press; Cambridge, MA: 1998.