Abstract

Objective

To use the neural signals preceding movement and motor imagery to predict which of four movements/motor imageries is about to occur, and to assess the utility of this prediction for brain-computer interface (BCI) applications.

Methods

Eight naive subjects performed or kinesthetically imagined four movements while electroencephalogram (EEG) was recorded from 29 channels over sensorimotor areas. The task was instructed with a specific stimulus (S1) and performed at a second stimulus (S2). A classifier was trained and tested offline at differentiating the EEG signals from movement/imagery preparation (the 1.5 seconds preceding movement/imagery execution).

Results

Accuracy of movement/imagery preparation classification varied between subjects. The system preferentially selected event related (de)synchronization (ERD/ERS) signals for classification, and high accuracies were associated with classifications that relied heavily on the ERD/ERS to discriminate movement/imagery planning.

Conclusions

The ERD/ERS preceding movement and motor imagery can be used to predict which of four movements/imageries is about to occur. Prediction accuracy depends on this signal’s accessibility.

Significance

The ERD/ERS is the most specific pre-movement/imagery signal to the movement/imagery about to be performed.

Keywords: Electroencephalography (EEG), event related (de)synchronization (ERD/ERS), brain-computer interface (BCI), movement, motor imagery

Introduction

The goal of the present study was to prove the viability of a movement/imagery prediction brain computer interface (BCI), and to discover which electroencephalography (EEG) neural signals would be best for controlling such a device. We focused on EEG because it is non-invasive and mobile. Additionally, we restricted the device to decoding pre-movement/imagery signals with cued movements/imageries.

BCIs are important medical devices for patients who have lost control of their motor faculties, and can no longer outwardly express their needs and thoughts. A BCI enables these “locked in” patients to control a computer with their brain activity for communication, mobility, and other purposes. In practice, the most common neural signals to use as BCI inputs are movement-related signals, because they are well defined and natural to the user. Movement-controlled BCIs allow the patient to indicate a choice by making or imagining one of several predefined movements.

Current movement-controlled BCIs have two major drawbacks. First, they rapidly fatigue the patient by requiring sustained movement/imagery for several seconds. Neural signals associated with non-sustained movement/imagery subside quickly, and a longer sample of data is required for classification (Neuper et al. 2005). Second, after the neural signal is obtained, the device can require several seconds to classify it, especially if sophisticated techniques are involved (Brunner et al. 2006). This causes a waiting time between the patient’s actions and the BCI response, decreasing the BCI’s usability and convenience. To address these problems, we proposed a BCI that used the brain signals preceding movement execution and imagery (a prediction BCI). This enabled the extraction of more than one second of preparatory neural information from a single non-sustained movement/imagery, and had the potential to reduce the perceived waiting time between the patient’s actions and BCI response by using earlier neural signals.

We created a prediction BCI that selected the neural signals that gave it the best results for predicting which of 4 movements was about to be executed or imagined: right hand squeeze, left hand squeeze, tongue press on the roof of the mouth, or right foot toe curl. The movement was instructed with a specific stimulus (S1) and performed at a second stimulus (S2). We anticipated that the BCI would rely on one of the two predominant EEG signals known to precede cued movement and motor imagery in this circumstance: the contingent negative variation (CNV) or the event related (de)synchronization (ERD/ERS). These signals are unique to movement/imagery preparation, and dissimilar from those during movement/imagery execution. Specifically, the CNV only occurs preceding movement/imagery and subsides immediately upon movement/imagery execution. Additionally, the preparatory ERD/ERS is functionally distinct from the ERD/ERS that can occur during movement/imagery (Neuper, 1999). While the CNV and its coincident ERD/ERS are extracted from the contemporaneous EEG, they represent separate processes of motor/imagery preparation within the motor cortices (Filipovic et al. 2001).

The contingent negative variation (CNV) is a widespread negative shift occurring during the interval between a warning stimulus (S1) and a subsequent imperative stimulus (S2) that requires execution of a movement or motor imagery (Walter et al. 1964; Tecce 1972; Tecce and Cattanach 1993). In a choice situation, the instruction for which movement to make could be given with either the S1 or the S2; in our experiments, the instruction is given at S1. The earlier part of the CNV is maximal over the frontal cortex, and generated in response to S1. The later, or terminal CNV (tCNV) can begin up to 1.5 seconds before S2; and reflects both cognitive preparation, causing maximal activity within and negativity over the frontal and prefrontal cortices, and motor preparation, causing maximal activity within and negativity over the primary motor cortex (M1) and supplementary motor area (SMA) (Rohrbaugh et al. 1976; Rosahl and Knight 1995; Ikeda et al. 1996).

The tCNV’s motor-related activity is associated with a sustained ERD/ERS in the alpha (8-13 Hz) and beta (14-30 Hz) range. The ERD, a reduction of a specific frequency component, is related to increased neural activity and appears over the performed/imagined movement’s M1 representation. The ERS, an enhancement of a specific frequency component, is related to neural suppression, and sometimes appears over non-performed/imagined movements’ cortical representations (Pfurtscheller 1989; Neuper and Pfurtscheller 1992; Pfurtscheller et al. 1997; Pfurtscheller 2003; Caldara et al. 2004).

We anticipated that the prediction BCI would work best with the ERD/ERS due to the straightforward relationship between the ERD/ERS and motor cortex activity, in contrast to the CNV. Success would indicate that movement and motor imagery contain a substantial and specific preparation within the primary motor cortex, which is reflected in the ERD/ERS more than the CNV and other movement/imagery preparation neural signals. Furthermore, this 4-movement BCI could greatly improve the lives of locked-in patients by offering them 2 dimensions of control (4 directions, one derived from each of the 4 movements).

Methods

Subjects

Subjects were eight right-handed, healthy volunteers (five male) between the ages of 23 and 53. None of the subjects had prior BCI training or instruction on the current study’s procedure. The subjects were not informed of the experimental hypothesis, or of the experimental design beyond the activities they performed. Each subject provided written informed consent prior to participating in the study. The Institutional Review Board approved the protocol.

Experimental protocol and data acquisition

Subjects were seated in a comfortable chair with their arms extended, supported by pillows, and their right leg extended, supported by a footrest. Stimulus presentation, data acquisition, and data processing and visualization were done using custom MATLAB (MathWorks, Natick, MA) scripts. Stimuli were presented on a nineteen-inch LCD computer screen, and occupied about 1.2 degrees of visual angle.

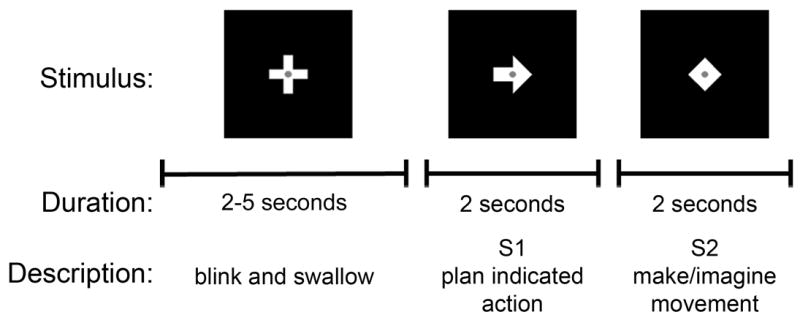

Each subject completed at least six blocks of trials, each block consisting of 100 trials. During each trial, a cross stimulus was presented for 2-5 seconds, followed by an arrow stimulus (S1) for 2 seconds, and then a diamond stimulus (S2) for 2 seconds (Fig. 1). Subjects were instructed to blink and swallow only during the cross presentation and to minimize eye movements during S1 and S2 presentations. We asked the subjects to relax their muscles and pay attention to the visual stimulus. We stressed the importance of their relaxation, because the presence of any S1-related artifacts could potentially contaminate our results. The duration of the cross stimulus was adjusted for each subject’s comfort and varied randomly between trials by at least one second. For each trial, S1 was randomly chosen to be an arrow in one of 4 directions: right, left, up, or down, which indicated the movement to be performed or imagined: a right hand squeeze, left hand squeeze, press of the tongue on the roof of the mouth, or right foot toe curl, respectively.
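The trial structure described above can be sketched in a few lines of Python. This is only an illustration, not the presentation script used in the study: the helper name `make_block`, the uniform draw of the cross duration, and the dictionary layout are assumptions made for the example.

```python
import random

# Arrow direction (S1) -> instructed action, as described in the protocol.
ARROW_TO_ACTION = {
    "right": "right hand squeeze",
    "left": "left hand squeeze",
    "up": "tongue press on the roof of the mouth",
    "down": "right foot toe curl",
}

S1_DURATION_S = 2.0  # arrow shown for 2 seconds
S2_DURATION_S = 2.0  # diamond shown for 2 seconds


def make_block(n_trials=100, cross_range_s=(2.0, 5.0), seed=None):
    """Return a list of trial descriptions for one block (hypothetical helper).

    Assumption: the cross duration is drawn uniformly from cross_range_s.
    The text only states that it lasted 2-5 seconds and varied randomly.
    """
    rng = random.Random(seed)
    trials = []
    for _ in range(n_trials):
        arrow = rng.choice(list(ARROW_TO_ACTION))
        trials.append({
            "cross_s": rng.uniform(*cross_range_s),
            "s1_arrow": arrow,
            "action": ARROW_TO_ACTION[arrow],
            "s1_s": S1_DURATION_S,
            "s2_s": S2_DURATION_S,
        })
    return trials


block = make_block(seed=0)
```

Each entry of `block` describes one trial: how long the cross is shown, which arrow appears as S1, and which action the subject should perform or imagine at S2.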

Fig. 1.

Trial stimuli. Stimuli presented during a single trial included a cross, an arrow (S1), and a diamond (S2). Subjects were asked to blink and swallow only during the cross presentation. S1 indicated the movement or imagery the subject should perform immediately upon S2 appearance.

During the first three blocks of trials, subjects were instructed to perform physical movement immediately upon S2 appearance, and not before it. During the last three blocks, subjects were instructed to perform kinesthetic motor imagery immediately upon S2 appearance, and not before it. Subjects were explicitly warned against making muscle contractions before S2. Instead of recording electromyography (EMG) on the arms and leg, we made sure that subjects were not making movements before S2 by watching them via a closed-circuit video camera. Tongue movements were detectable through the EEG scalp electrodes, and we used this to ensure that subjects were not making tongue movements before S2. Additionally, subject self-reports indicated that subjects were not performing imagery or muscle contractions before S2. The closed-circuit video was also used to ensure that subjects performed movement trials correctly and were awake and attentive. Extra blocks of trials were collected from subjects who failed to remain attentive; such bad trials were discarded. Each subject was enrolled in the study for 1.5 to 2 hours.

EEG was recorded from 29 tin surface electrodes mounted on an elastic cap (Electro-Cap International, Inc., Eaton, OH, USA). Electrodes were placed over central and parietal areas, spaced roughly 2.5 centimeters apart. These included the following, labeled as channels 1-29: FC1, FC3, FC5, C1, C3, C5, FC2, FC4, FC6, C2, C4, C6, CP1, CP3, CP5, P1, P3, P5, CP2, CP4, CP6, P2, P4, P6, FZ, FCZ, CZ, CPZ, and PZ (Sharbrough et al. 1991). Reference was taken from the right ear lobe and ground from the forehead. Bipolar recordings of the vertical and horizontal electrooculogram (EOG) were taken to monitor eye movements and blinks. All signals were amplified (Neuroscan Inc., El Paso, TX), passed through a 100 Hz low-pass filter, and digitized with a sampling frequency of 250 Hz.

Trial exclusion

Trials that contained muscle tension, tongue movement, face movement, eye movement, or eye blink artifacts in the 1.5 seconds preceding S2 appearance were identified offline and excluded from all subsequent visualizations and analyses. Tongue movements that occurred before S2 were not part of the experimental task, because subjects were instructed to make movements/imagery after S2. To assess the impact of trial quantity on classification success, the average quantity of movement and imagery trials retained for each subject was compared to the mean and variance of the results using a linear regression (movement and imagery were considered separately).

CNV and ERD/ERS visualizations

To create CNV and ERD/ERS visualizations, trials belonging to the same subject, channel, and movement or imagery were averaged offline over the 3 seconds preceding and 1 second following S2 appearance.

CNV visualizations were created by low-pass filtering (second order Butterworth filter with a 5 Hz cutoff) and averaging voltages after subtracting the average voltage between 3 to 2 seconds before S2 appearance. 95% confidence intervals were made using bootstrapping with 500 samples (Good 2006). ERD/ERS visualizations were created by generating power spectra for each trial (using a fast Fourier transformation (FFT) with Hanning windows, a 256-millisecond segment length, and no overlap between consecutive segments), and averaging 3.9 Hz, 256 millisecond bins of the power spectra (Bai et al. 2005).
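The 3.9 Hz bin width follows directly from the recording parameters: a 256-millisecond segment at 250 Hz contains 64 samples, so the frequency resolution is 250/64 ≈ 3.9 Hz. The sketch below illustrates this with a Hann-windowed discrete Fourier transform over non-overlapping segments. It is a minimal illustration using only the Python standard library, not the study's MATLAB code; the function names and the power scaling (squared magnitude divided by segment length) are assumptions.

```python
import cmath
import math

FS_HZ = 250                     # sampling frequency of the recording
SEGMENT_S = 0.256               # segment length used for the power spectra
N = round(FS_HZ * SEGMENT_S)    # 64 samples per segment
BIN_HZ = FS_HZ / N              # 3.90625 Hz, reported as 3.9 Hz


def hann(n):
    """Symmetric Hann (Hanning) window of length n."""
    return [0.5 - 0.5 * math.cos(2 * math.pi * i / (n - 1)) for i in range(n)]


def segment_power(segment):
    """Power at each non-negative frequency bin of one windowed segment."""
    n = len(segment)
    windowed = [s * w for s, w in zip(segment, hann(n))]
    power = []
    for k in range(n // 2 + 1):
        coeff = sum(windowed[t] * cmath.exp(-2j * math.pi * k * t / n)
                    for t in range(n))
        power.append(abs(coeff) ** 2 / n)  # scaling is an assumption
    return power


def spectrogram(signal):
    """One power spectrum per consecutive, non-overlapping segment."""
    return [segment_power(signal[i:i + N])
            for i in range(0, len(signal) - N + 1, N)]


# Synthetic test signal (not EEG): a sinusoid centred on frequency bin 3.
tone_hz = 3 * BIN_HZ
signal = [math.sin(2 * math.pi * tone_hz * t / FS_HZ) for t in range(4 * N)]
spec = spectrogram(signal)
peak_bin = max(range(len(spec[0])), key=lambda k: spec[0][k])
```

Averaging the rows of `spec` across trials of the same subject, channel, and action would give a time-frequency map of the kind described above, with one column per 256-millisecond segment and one row per 3.9 Hz bin.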

Visualizations were inspected manually to identify CNV waveforms and sustained ERD/ERSs over motor areas. To assess the impact of ERD/ERSs on classification accuracy, the number of movement/imagery preparations displaying sustained motor channel ERD/ERSs was compared to average testing accuracies using linear regression (movement and imagery were considered separately).
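The regression comparisons used throughout the paper reduce to a simple least-squares fit and its coefficient of determination. A minimal sketch follows; the function name is hypothetical and the input values are placeholders invented for illustration, not data from this study.

```python
def linear_regression(x, y):
    """Ordinary least-squares fit of y on x; returns (slope, intercept, r_squared)."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    syy = sum((yi - mean_y) ** 2 for yi in y)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    r_squared = sxy ** 2 / (sxx * syy)
    return slope, intercept, r_squared


# Placeholder values only: number of actions (0-4) showing a sustained
# ERD/ERS per subject, and a made-up average testing accuracy in percent.
placeholder_erd_counts = [0, 1, 2, 3, 4]
placeholder_accuracy = [30.0, 34.0, 41.0, 43.0, 52.0]
slope, intercept, r_squared = linear_regression(
    placeholder_erd_counts, placeholder_accuracy
)
```

Assessing significance (the p-values reported in the Results) would additionally require a test on the slope, which is omitted here.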

Training and testing accuracies

Trials were separated into two groups per subject, one for movement and one for imagery. Each group was pseudo-randomly divided into a training set (80% of trials) and a testing set (20% of trials). All data were independently filtered using a spatial filter (independent component analysis, ICA), which attenuated the signal components associated with noise, and a temporal filter (discrete wavelet transformation, DWT), which applied a transformation to make relevant neural signals more accessible (it extracted features). Optimum filter parameters were determined using data in the training set. The filters were chosen based on their ability to produce better testing accuracies than other methods (spatial filters: principal component analysis, common spatial patterns analysis, and surface Laplacian derivation; and temporal filter: power spectral density estimation) in Bai et al. (2007), where specific implementation details can be found.
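The 80/20 division can be sketched as a seeded shuffle followed by a cut. This is an illustrative assumption about how such a split might be done; the text does not say whether the split was stratified by movement/imagery class, and the helper name `split_trials` is hypothetical.

```python
import random


def split_trials(trials, train_fraction=0.8, seed=None):
    """Pseudo-randomly divide trials into (training, testing) lists."""
    rng = random.Random(seed)
    shuffled = list(trials)       # copy so the caller's list is untouched
    rng.shuffle(shuffled)
    n_train = round(train_fraction * len(shuffled))
    return shuffled[:n_train], shuffled[n_train:]


# Example with 220 hypothetical trial indices.
trial_ids = list(range(220))
train, test = split_trials(trial_ids, seed=1)
```

Changing `seed` gives a different division, which is one reason the classification is repeated several times and the accuracies averaged.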

After filtering, the data were rescaled to have zero mean and unit variance. This eliminated the possibility of high variance features dominating the classification training (Bai et al. 2007). The training set was searched by a genetic algorithm, using Bayesian cross-validation and Bhattacharyya distances, to identify the best one hundred features for differentiating the movement/imagery preparations during the 1.5 seconds before S2 appearance. The Bhattacharyya distance of each feature quantified the feature’s impact; the larger the feature’s Bhattacharyya distance, the greater separability of the data by that feature (Marques De Sa 2001). Only 1.5 seconds before S2 were included in the classification, since this was the time period known to contain the motor related tCNV and ERD/ERS. We avoided using the 0.5 seconds after S1 appearance, to ensure that any S1-related artifacts were not used by the classification.
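To make the two quantities in this paragraph concrete, the sketch below standardizes a feature and computes a Bhattacharyya distance between two classes for that feature. It assumes each class is modeled as a univariate Gaussian, for which the distance has a closed form; the text does not state how the distances were estimated or how the four classes were combined, so this is an illustration rather than the study's procedure. All names are hypothetical.

```python
import math
import statistics


def standardize(values):
    """Rescale one feature to zero mean and unit variance."""
    mean = statistics.fmean(values)
    sd = statistics.pstdev(values)
    return [(v - mean) / sd for v in values]


def bhattacharyya_gaussian(a, b):
    """Bhattacharyya distance between two samples of a single feature.

    Assumption: each sample is summarized by a univariate Gaussian, giving
    D = (mu_a - mu_b)^2 / (4 (var_a + var_b))
        + 0.5 * ln((var_a + var_b) / (2 * sqrt(var_a * var_b))).
    """
    mu_a, mu_b = statistics.fmean(a), statistics.fmean(b)
    var_a, var_b = statistics.pvariance(a), statistics.pvariance(b)
    mean_term = 0.25 * (mu_a - mu_b) ** 2 / (var_a + var_b)
    var_term = 0.5 * math.log((var_a + var_b) / (2 * math.sqrt(var_a * var_b)))
    return mean_term + var_term


# Toy samples (not EEG): same variance, means two units apart.
class_a = [-1.0, 1.0]
class_b = [1.0, 3.0]
distance = bhattacharyya_gaussian(class_a, class_b)
```

A distance of zero means the two class distributions coincide for that feature; larger values mean the feature separates the classes better, which is why features are ranked by this quantity.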

A naïve Bayesian (BSC) classifier used the best features (those with the largest Bhattacharyya distances), in the best quantity (the number between 1 and 16 that produced the best training set results), to classify each trial in the training set, yielding a training accuracy, and each trial in the testing set, yielding a testing accuracy. As with the filtering techniques, BSC was chosen based on its ability to produce better testing accuracies than other methods (Mahalanobis distance classifier, quadratic Mahalanobis distance classifier, multi-layer perceptron neural network, probabilistic neural network, and support vector machine) in Bai et al. (2007).
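A naive Bayes classifier with a search over the number of features might look like the sketch below. It assumes Gaussian class-conditional likelihoods and scores each candidate feature count by accuracy on the training set itself; both choices, and all names, are assumptions for illustration, since implementation details are given only in Bai et al. (2007).

```python
import math
from collections import defaultdict


class GaussianNaiveBayes:
    """Naive Bayes with an independent Gaussian per feature and class."""

    def fit(self, X, y):
        by_class = defaultdict(list)
        for row, label in zip(X, y):
            by_class[label].append(row)
        self.stats = {}
        for label, rows in by_class.items():
            cols = list(zip(*rows))
            means = [sum(c) / len(c) for c in cols]
            # Small constant keeps the variance strictly positive.
            variances = [sum((v - m) ** 2 for v in c) / len(c) + 1e-9
                         for c, m in zip(cols, means)]
            self.stats[label] = (math.log(len(rows) / len(X)), means, variances)
        return self

    def predict_one(self, row):
        def log_posterior(label):
            log_prior, means, variances = self.stats[label]
            return log_prior + sum(
                -0.5 * math.log(2 * math.pi * var) - (v - m) ** 2 / (2 * var)
                for v, m, var in zip(row, means, variances))
        return max(self.stats, key=log_posterior)

    def accuracy(self, X, y):
        hits = sum(self.predict_one(r) == label for r, label in zip(X, y))
        return hits / len(X)


def best_feature_count(X, y, ranked_features, max_features=16):
    """Try the top 1..max_features ranked features; keep the best count."""
    best_k, best_acc = 1, -1.0
    for k in range(1, min(max_features, len(ranked_features)) + 1):
        cols = ranked_features[:k]
        X_k = [[row[c] for c in cols] for row in X]
        acc = GaussianNaiveBayes().fit(X_k, y).accuracy(X_k, y)
        if acc > best_acc:            # ties keep the smaller feature count
            best_k, best_acc = k, acc
    return best_k, best_acc


# Toy data (not EEG): feature 0 separates the classes, feature 1 does not.
X_toy = [[0.0, 5.0], [0.2, 1.0], [0.1, 3.0], [2.0, 4.0], [2.2, 2.0], [2.1, 3.5]]
y_toy = ["L", "L", "L", "R", "R", "R"]
k_best, acc_best = best_feature_count(X_toy, y_toy, ranked_features=[0, 1])
```

Here `ranked_features` stands in for the feature indices ordered by decreasing Bhattacharyya distance.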

This process was repeated five times, using data preceding all 4 movements/imageries, to account for variation from the random division of trials into training and testing sets and the genetic algorithm used in feature identification. All analyses used data preceding movement/imagery execution, although explicit statement of this is omitted from now on for brevity.

Six subjects were used for 2-movement/imagery classifications. Two subjects were excluded from this analysis based on their failure to adequately participate in the experiment. The results of their CNV visualizations (one lacked an imagery CNV and the other lacked movement and imagery CNVs) and their poor behavior during the experiment indicated that they were not investing effort in the task. The six subjects’ 2-movement/imagery data were classified ten times using the same procedure. This was done for each combination of 2 movements/imageries from the original 4. The results were collapsed across subjects.
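Choosing 2 of the 4 actions gives six pairs, each of which was classified separately. A short enumeration, using the single-letter action codes from the tables and figures:

```python
from itertools import combinations

# R: right hand squeeze, L: left hand squeeze,
# T: tongue press on the roof of the mouth, F: right foot toe curl.
ACTIONS = ["R", "L", "T", "F"]

pairs = list(combinations(ACTIONS, 2))
# pairs == [("R", "L"), ("R", "T"), ("R", "F"), ("L", "T"), ("L", "F"), ("T", "F")]
```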

The 2-movement/imagery classification results from subject 6 were examined in detail, because this subject had an ERD/ERS for every movement/imagery, and therefore had equally substantive data for every movement/imagery. We used this subject to investigate which pairs of movements/imageries were the easiest and hardest to discriminate. To reveal any relationship between 2-movement and 2-imagery testing accuracies, 2-movement testing accuracies were compared to 2-imagery testing accuracies using a linear regression.

ICA Topographies

Based on the training data, ICA separated the channel data into mutually independent components. The number of ICA components was automatically chosen based on classification performance in the training procedure (measured by cross-validation accuracy). This is physiologically sound because neuronal sources separated into ICA components are likely to be mutually independent from each other, and surely independent from environmental noise. Therefore, meaningful neural components associated with the movement/imagery task were relatively amplified compared to the un-associated neural components and noise, which were attenuated (Bai et al. 2007).

The ICA spatial filter attenuated the EEG signal over some scalp sites, and amplified the EEG signal over other scalp sites. To visualize typical signals amplified by ICA spatial filtering, we created ICA topography plots for one subject (subject 6). We made four plots, one each for 4-movement, 4-imagery, 2-movement, and 2-imagery classification. The plots depict which scalp locations were attenuated and which were amplified. Each plot was made from only one classification (out of five 4-movement/imagery classifications and ten 2-movement/imagery classifications), because the ICA weights and component numbers differed between classifications due to the different training data.

Classification features

Our choice of DWT for temporal filtering determined that classification features were a combination of channel and variable width frequency bin (Bai et al. 2007). The features had a time window encompassing the entire 1.5 seconds before movement/imagery execution (S2). Features above 40 Hz were excluded, since there was no expectation of finding relevant neural signals above this frequency.

Classification features were visualized by creating Bhattacharyya distance matrices and scalp plots. The matrices depicted the Bhattacharyya distance of each feature (features were broken into 3.9 Hz bins, instead of variable width bins, for visualization) for a particular subject’s movement or imagery preparation. Bhattacharyya distances beyond the 100 highest were corrected to zero. The scalp plots indicated the Bhattacharyya distance of each channel for a particular 3.9 Hz frequency bin. Points between channel locations were interpolated to create continuous gradients of color. Within both types of plots, colors indicated the Bhattacharyya distance of the associated feature. A larger Bhattacharyya distance indicated a greater separability of the data by the associated feature.
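The zeroing step used for the visualizations can be sketched as follows. The helper name is hypothetical, and how ties at the cutoff were handled is not stated in the text; here they are broken by original order.

```python
def keep_top(distances, n_keep=100):
    """Keep the n_keep largest distances and set all others to zero."""
    order = sorted(range(len(distances)),
                   key=lambda i: distances[i], reverse=True)
    keep = set(order[:n_keep])
    return [d if i in keep else 0.0 for i, d in enumerate(distances)]


# Small example with n_keep=2 instead of 100.
example = keep_top([0.1, 0.9, 0.5, 0.3], n_keep=2)
# example == [0.0, 0.9, 0.5, 0.0]
```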

The traditional method of ERD/ERS analysis, which is based on power spectral estimation, yields measures similar to the DWT features used for classification. Therefore, the spatial, temporal, and spectral characteristics of the ERD/ERS were describable by the DWT features.

A single ERD/ERS pattern likely extended over several frequency bins, and several channels. Therefore, the ERD/ERS was available to the BCI through many different features (each feature was a combination of channel and frequency bin). If, as we had hypothesized, the ERD/ERS signals were the best for differentiating the 4 movements/imageries, the BCI would preferentially select ERD/ERS features. To assess the impact of using ERD/ERS features on classification, we examined the top ten features (determined by their Bhattacharyya distances). If a feature was composed of an M1 channel (C1, C3, C5, CP1, CP3, CP5, C2, C4, C6, CP2, CP4, or CP6) and frequency between 8-30 Hz, it was regarded as an ERD/ERS feature. If a feature was composed of a frequency below 4 Hz, it was regarded as a CNV feature. We compared the presence of only ERD/ERS features in the top ten features to average testing accuracies using a linear regression (movement and imagery were considered separately). This was not done for CNV features, because of the extremely small number of CNV features.
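The labeling rule for the top ten features can be written as a small function. The function name is hypothetical, and representing each feature's frequency bin by a single frequency in Hz (for example its center) is an assumption made to keep the sketch short.

```python
# M1 channels as listed in the text.
M1_CHANNELS = {
    "C1", "C3", "C5", "CP1", "CP3", "CP5",
    "C2", "C4", "C6", "CP2", "CP4", "CP6",
}


def label_feature(channel, freq_hz):
    """Label a (channel, frequency) feature as CNV, ERD/ERS, or Other."""
    if freq_hz < 4:
        return "CNV"          # any channel, frequency below 4 Hz
    if channel in M1_CHANNELS and 8 <= freq_hz <= 30:
        return "ERD/ERS"      # M1 channel in the 8-30 Hz range
    return "Other"


labels = [
    label_feature("C3", 19.5),   # "ERD/ERS"
    label_feature("FZ", 2.0),    # "CNV"
    label_feature("C3", 39.0),   # "Other": M1 channel, above 30 Hz
    label_feature("FZ", 12.0),   # "Other": non-M1 channel, above 4 Hz
]
```

Counting these labels over each subject's ten highest-ranked features gives the kind of tally reported in Table 2.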

Results

Trial exclusion

Trial exclusion retained, per subject, an average of 55.9 (standard deviation 10.1) trials per movement/imagery, from an original average of 78.4 (standard deviation 12.4) trials collected per movement/imagery. The correlation between the average quantity of included trials and average 4-movement/imagery testing accuracy was not significant (p>0.05); similarly not significant was the correlation between the average quantity of included trials and variance of 4-movement/imagery testing accuracies (p>0.05).

CNV and ERD/ERS visualizations

CNV visualizations, except for subject 7 motor imagery and subject 8 movement and motor imagery, displayed the characteristic widespread negativity. Subject 7 completed movement trials correctly, but lost interest in performing imagery trials in anticipation of the experiment ending. Subject 8 was unable to maintain attention during any portion of the experiment due to excessive sleepiness. Therefore, CNV absence indicated failure to adequately participate in the experiment.

The CNV visualizations for subject 7 right hand movement (containing a CNV) and motor imagery (not containing a CNV), from C3 (channel 5), are included to depict what we deemed as present and absent CNVs. The CNV visualizations for subject 5 tongue movement and motor imagery, from CP2 (channel 19), are also included (Fig. 2). Movement and motor imagery produced similar CNV waveforms when CNVs were present. CNVs tended to be widespread, being visible in almost all channels, but with particularly large amplitudes in more central and frontal channels. Negativity began about 1.2 seconds before S2, following a positive peak that occurred about 1.5 seconds before S2. This positive peak represented a P100 response to visual S1 stimuli. Negativity persisted, often with slight attenuation, until S2, when it subsided.

Fig. 2.

CNV visualization examples. CNV visualizations from C3 (channel 5) associated with subject 7 right hand movement (a) and motor imagery (b); and from CP2 (channel 19) associated with subject 5 tongue movement (c) and motor imagery (d). Time is measured relative to S2 appearance. Dashed lines represent the 95% confidence interval. All visualizations, except subject 7’s motor imagery visualization, reveal a CNV waveform, a negative shift in potential beginning about 1.5 seconds before S2 appearance.

The ERD/ERS visualizations for subject 7 right hand movement (containing an ERD, a reduction of a specific frequency component, related to increased neural activity) and motor imagery (not containing an ERD), from C3 (channel 5), are included to depict what we deemed as present and absent ERDs. The ERD/ERS visualizations for subject 5 tongue movement and motor imagery (both containing an ERS, an enhancement of a specific frequency component, related to neural suppression), from CP2 (channel 19), are included as examples of ERSs (Fig. 3). ERD/ERSs appeared mostly in motor channels. They were characterized by a sustained power decrease/increase in the 8-30 Hz range, beginning about 1.0-1.5 seconds before S2 and persisting at least until S2, and often beyond S2 appearance. The number of movements or motor imageries displaying an ERD/ERS correlated well with average testing accuracies (movement r² ≈ 0.43, imagery r² ≈ 0.59, p<0.05).

Fig. 3.

ERD/ERS visualization examples. ERD/ERS visualizations from C3 (channel 5) associated with subject 7 right hand movement (a) and motor imagery (b); and from CP2 (channel 19) associated with subject 5 tongue movement (c) and motor imagery (d). Colors depict the relative amplitudes, or power, of the frequencies in the signals’ power spectra. Time is measured relative to S2 appearance. For subject 7, the movement visualization, but not the motor imagery visualization, reveals an ERD, a sustained reduction of power, for frequencies around 10 Hz beginning about 1.5 seconds before S2 appearance. For subject 5, both visualizations reveal an ERS, a sustained increase of power, for frequencies around 12 Hz beginning about 1.0 seconds before S2 appearance.

Subjects that displayed ERD/ERSs for movement did not necessarily display ERD/ERSs for motor imagery, or vice versa. Subjects were not limited to displaying an ERD or ERS exclusively, since they could display an ERD for some movements or imageries and an ERS for others. A movement/imagery never displayed both an ERD and an ERS. Also, a movement ERD could correspond to an imagery ERS, or vice versa (Table 1).

Table 1.

ERD/ERS presence. Subject’s movements and motor imageries that displayed a preparatory ERD or ERS. Action entries indicate the movement/imagery: R for right hand squeeze, L for left hand squeeze, T for tongue press on the roof of the mouth, and F for right foot toe curl. Movement and imagery entries indicate the display of an ERD, an ERS, or neither (-).

| Subject | Action | Movement | Imagery |
|---|---|---|---|
| 1 | R | - | - |
| 1 | L | ERD | ERD |
| 1 | T | - | ERD |
| 1 | F | ERD | - |
| 2 | R | - | ERD |
| 2 | L | - | ERD |
| 2 | T | - | ERD |
| 2 | F | - | - |
| 3 | R | - | - |
| 3 | L | - | - |
| 3 | T | - | - |
| 3 | F | ERD | - |
| 4 | R | - | - |
| 4 | L | ERD | - |
| 4 | T | - | - |
| 4 | F | ERD | - |
| 5 | R | ERS | - |
| 5 | L | ERS | ERD |
| 5 | T | ERS | ERS |
| 5 | F | ERS | - |
| 6 | R | ERD | ERS |
| 6 | L | ERD | ERD |
| 6 | T | ERS | ERS |
| 6 | F | ERS | ERD |
| 7 | R | ERD | - |
| 7 | L | ERD | - |
| 7 | T | ERD | - |
| 7 | F | ERD | - |
| 8 | R | - | - |
| 8 | L | - | - |
| 8 | T | - | - |
| 8 | F | ERD | - |

Training and testing accuracies

Five training and testing accuracies were collected from each subject for 4 movements and 4 motor imageries (Fig. 4). Ten training and testing accuracies were obtained for each 2-movement/imagery pair from subjects 1-6 (Fig. 5). For subject 6, the correlation between average 2-movement testing accuracy and average 2-imagery testing accuracy was not significant (p>0.05). The highest average 2-movement/imagery testing accuracies involved right foot and left hand responses. The lowest average 2-movement/imagery testing accuracies involved tongue responses.

Fig. 4.

4-movement/imagery results. Average training and testing accuracies for 4-movement (a) and 4-imagery (b) classification. Error bars indicate standard deviation. Chance accuracy for 4-movement/imagery classification was 25%.

Fig. 5.

2-movement/imagery results from subjects 1-6 (a) and subject 6 (b). Average training and testing accuracies for 2-movement/imagery classification. Error bars indicate standard deviation. Pairs of letters indicate the movements/imageries: R for right hand squeeze, L for left hand squeeze, T for tongue press on the roof of the mouth, and F for right foot toe curl. Chance accuracy for 2-movement/imagery classification was 50%.

ICA Topographies

To illustrate the neural signals amplified by ICA, we created four example plots of ICA topographies (Fig. 6). Heavy contributions from sensorimotor areas were detected, and circled in the figure. This illustrates the emphasis of our system on motor-related signals.

Fig. 6.

ICA topography examples. ICA topographies for subject 6 2-movement/imagery classification (a) and 4-movement/imagery classification (b). Colors indicate the ICA weights of the associated channels. Weights of large magnitude (-1 or 1) are associated with signal amplification. Weights of small magnitude (0) are associated with signal attenuation. Relative amplification of motor-related channels was detected in many of the plots and circled.

Classification features

Example feature visualizations are shown for subject 6 (Fig. 7). They show feature preference for ERD/ERS signals (over M1 channels, with frequencies between 8-30 Hz). Our system selected mostly ERD/ERS features as top ten features, instead of CNV features or other features. The only exception to the preference for ERD/ERS over CNV features was subject 1 imagery (Table 2). Additionally, the presence of only ERD/ERS features in the top ten was highly correlated with testing accuracy (movement r² ≈ 0.51, imagery r² ≈ 0.53, p<0.05). Classifications that did not rely on ERD/ERS or CNV features, but were in motor channels, had high frequencies (around 39 Hz), which were unlikely to carry relevant neural information, and probably represented recording equipment or environmental noise. Other classifications that did not rely on ERD/ERS or CNV features used features that were dispersed through more frontal channels. These features probably represented cognitive preparation (in the frontal and prefrontal cortices) for ensuing movement/imagery or cognitive noise from subject inattention. This selection by the BCI of non-CNV and non-ERD/ERS features was probably due to the failure of the BCI to identify ERD/ERS or CNV features. Use of these non-CNV and non-ERD/ERS features was not associated with high classification accuracy.

Fig. 7.

Feature visualization examples. Feature visualizations for subject 6 movement (a) and motor imagery (b) classification. Colors indicate the Bhattacharyya distance of the associated feature. Scalp plots (a1 and b1) are for the 3.9 Hz frequency bin centered at 19.5 Hz. Bhattacharyya distance matrices (a2 and b2) are scaled to match the associated scalp plot.

Table 2.

Top ten features. This table shows the distribution of subjects’ top ten features for 4-movement/imagery classifications. The features were split between being ERD/ERS features (from M1 channels with frequencies between 8-30 Hz), CNV features (with frequencies below 4 Hz), or other features (from non-M1 channels with frequencies above 4 Hz, or from M1 channels with frequencies 4-8 Hz or above 30 Hz).

| Subject | Movement: ERD/ERS | Movement: CNV | Movement: Other | Imagery: ERD/ERS | Imagery: CNV | Imagery: Other |
|---|---|---|---|---|---|---|
| 1 | 9 | 0 | 1 | 0 | 9 | 1 |
| 2 | 9 | 0 | 1 | 0 | 0 | 10 |
| 3 | 9 | 1 | 0 | 8 | 0 | 2 |
| 4 | 8 | 0 | 2 | 6 | 0 | 4 |
| 5 | 10 | 0 | 0 | 10 | 0 | 0 |
| 6 | 10 | 0 | 0 | 10 | 0 | 0 |
| 7 | 10 | 0 | 0 | 8 | 0 | 2 |
| 8 | 0 | 0 | 10 | 7 | 1 | 2 |

Discussion

Movement and motor imagery preparation

Every subject displayed a typical CNV waveform unless they were not performing the task, in which case they also lacked ERD/ERSs. Performing subjects with CNVs sometimes lacked ERD/ERSs, confirming independence between the CNV and ERD/ERS phenomena (Filipovic et al. 2001).

The 2-movement/imagery results averaged across subjects 1-6 were nearly identical for each pair of movement/imagery. Each subject had particular pairs of movements/imageries that produced better results than the rest, but these trends were not consistent across subjects. This may be related to the diversity we found in our subjects’ ERD/ERSs. For subject 6, who was the only subject with ERD/ERSs for every movement/imagery, 2-movement and 2-imagery average testing accuracies were not significantly correlated. This agrees with a functional difference in preparation between movement and kinesthetic motor imagery. Specifically, motor imagery preparation involves less M1 activity than does movement preparation (Caldara et al. 2004; Hanakawa et al. 2008). The dissimilarities between each subject’s movement and imagery ERD/ERSs also agree with this finding. Insufficient work has been conducted to compare the ERD/ERS patterns for movement preparation to ERD/ERS patterns for motor imagery preparation. Such research could possibly explain why, in this experiment, preparatory ERD/ERS presence correlated better with imagery prediction accuracy than movement prediction accuracy. More generally, such research could lead to a better understanding of the different neural activity involved in preparation for movement and motor imagery, and how this activity is related to the movement being performed or imagined.

The large differences among subjects’ BCI accuracies (testing accuracies) reflected variation in our BCI’s ability to extract motor-related information, in particular the ERD/ERS. Our system preferentially extracted this information, but it was not always available. This suggests that the ERD/ERS is the neural signal preceding movement and motor imagery that is most specific to the particular movement/imagery. The observed variability may have been due to differences in the shape and position of M1 with respect to the skull, which would change the relationship between channel locations and M1 and make channels more or less effective at detecting M1 activity. Alternatively, it may have been due to differences in the amount and location of cortical activity during preparation for movement/imagery. Such functional diversity may explain why some subjects with a CNV waveform had ERD/ERSs (the two representing separate motor processes) and some did not. This explanation would be supported if, through training, a subject could improve his or her BCI accuracy by making M1 information more available.

Implications for BCI applications

Collecting more trials neither improved our BCI’s accuracy nor reduced the variability of its results. Our BCI performed best when using the ERD/ERS movement/imagery preparation signals from M1. We expected our BCI to produce the best results when differentiating between right hand and right foot preparations, since EEG channels would detect these signals on opposite sides of the head. This is because, even though both right hand and right foot M1 representations are in the left hemisphere, right foot activity appears in right hemisphere EEG channels (Osman et al. 2005). We did not have high expectations for differentiating right and left hand preparations, since these can activate the same areas of the left hemisphere in right-handed subjects (Bai et al. 2005). We also did not expect tongue preparations to produce readily discernible signals, for reasons explained below.

Overall, no particular movement/imagery produced better results than any other. However, the 2-movement/imagery results from subject 6, who had strong neural signals (ERD/ERSs) for each movement/imagery, offer some insight. Contrary to our expectations, right foot and left hand signals were the easiest for our BCI to differentiate. Perhaps, even though these EEG signals were detected on the same side of the head, the underlying activity in opposite hemispheres kept the signals from sharing similar noise or other characteristics, making them easier to differentiate.

We expected our BCI to produce the worst results when differentiating between tongue and other preparations, which it did. The tongue representation is relatively small and distributed across both hemispheres. Additionally, tongue movements created a glossokinetic potential (GKP) that disrupted the EEG signal (Satow et al. 2004). Trials contaminated by this GKP were identified in our trial exclusion process. In future applications, our BCI’s results may be improved by replacing tongue movement/imagery with an alternative, such as left foot movement/imagery.

It would be informative to compare our results to those from a similar BCI that used actual movement/imagery signals instead of the preceding ERD/ERS. This could be done in a future study; we did not ask subjects to make 1.5 seconds of movement/imagery for comparison. Instead, we directed our subjects to make non-sustained movements/imageries, because non-sustained movement/imagery is a major advantage of using a preparation BCI (as discussed in the introduction).

Overall, our BCI has the following advantages over other BCIs: 2-dimensional control through 4 commands; a natural control scheme (especially for right-left-up-down); a system that minimizes fatigue by using natural and non-sustained movements/imageries; and response on an acceptable time scale. If patients could enhance their preparatory ERD/ERS contributions through training, our BCI could greatly improve the lives of patients suffering from ailments such as amyotrophic lateral sclerosis (ALS) or spinal cord injury. These patients have severe paralysis, and a BCI could offer them control of mechanical, entertainment, and communication devices.
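To make the idea of 2-dimensional control through 4 commands concrete, the following is a minimal sketch of how predicted classes might drive a cursor. The assignment of each movement/imagery to a direction, the class label strings, and the `Cursor` type are all hypothetical choices made for illustration; the study does not specify such a mapping.

```python
from dataclasses import dataclass

# Hypothetical mapping from predicted movement/imagery class to a unit step.
# The pairing of classes with directions is an assumption, not from the study.
COMMAND_TO_STEP = {
    "right_hand": (1, 0),   # move right
    "left_hand": (-1, 0),   # move left
    "tongue": (0, 1),       # move up
    "right_foot": (0, -1),  # move down
}


@dataclass
class Cursor:
    """A cursor on an integer grid, moved one step per classified trial."""
    x: int = 0
    y: int = 0

    def apply(self, predicted_class: str) -> None:
        """Move one step in the direction assigned to the predicted class."""
        dx, dy = COMMAND_TO_STEP[predicted_class]
        self.x += dx
        self.y += dy


# Example: a sequence of classifier outputs, one per cued trial.
cursor = Cursor()
for label in ["right_hand", "right_hand", "tongue", "left_hand", "right_foot"]:
    cursor.apply(label)

print(cursor)  # Cursor(x=1, y=0)
```

Because each command is a single non-sustained movement/imagery, one classified trial corresponds to one discrete step in this sketch.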

Acknowledgments

This research was supported by the Intramural Research Program of the NIH, National Institute of Neurological Disorders and Stroke. Ms. Morash was also partly supported by the NINDS Summer Intern Program. The authors thank Ms. D.G. Schoenberg for skillful editing.

References

- Bai O, Lin P, Vorbach S, Li J, Furlani S, Hallett M. Exploration of computational methods for classification of movement intention during human voluntary movement from single trial EEG. Clin Neurophysiol. 2007. doi: 10.1016/j.clinph.2007.08.025. In press.

- Bai O, Mari Z, Vorbach S, Hallett M. Asymmetric spatiotemporal patterns of event-related desynchronization preceding voluntary sequential finger movements: a high-resolution EEG study. Clin Neurophysiol. 2005;116:1213–1221. doi: 10.1016/j.clinph.2005.01.006.

- Brunner C, Scherer R, Graimann B, Supp G, Pfurtscheller G. Online control of a brain-computer interface using phase synchronization. IEEE Trans Biomed Eng. 2006;53:2501–2506. doi: 10.1109/TBME.2006.881775.

- Caldara R, Deiber MP, Andrey C, Michel C, Thut G, Hauert CA. Actual and mental motor preparation and execution: a spatiotemporal ERP study. Exp Brain Res. 2004;159:389–399. doi: 10.1007/s00221-004-2101-0.

- Filipovic SR, Jahanshahi M, Rothwell JC. Uncoupling of contingent negative variation and alpha band event-related desynchronization in a go/no-go task. Clin Neurophysiol. 2001;112:1307–1315. doi: 10.1016/s1388-2457(01)00558-2.

- Good PI. Resampling methods: a practical guide to data analysis. Boston: Birkhäuser; 2006.

- Hanakawa T, Dimyan MA, Hallett M. Motor Planning, Imagery, and Execution in the Distributed Motor Network: A Time-Course Study with Functional MRI. Cereb Cortex. 2008. doi: 10.1093/cercor/bhn036.

- Ikeda A, Luders HO, Collura TF, Burgess RC, Morris HH, Hamano T, Shibasaki H. Subdural potentials at orbitofrontal and mesial prefrontal areas accompanying anticipation and decision making in humans: a comparison with Bereitschaftspotential. Electroenceph clin Neurophysiol. 1996;98:206–212. doi: 10.1016/0013-4694(95)00239-1.

- Marques De Sa JP. Pattern Recognition: Concepts, Methods, and Applications. Berlin: Springer-Verlag; 2001.

- Neuper C, Pfurtscheller G. Event-Related Negativity and Event-Related Desynchronization During Preparation for Motor Acts. Eeg-Emg-Zeitschrift Fur Elektroenzephalographie Elektromyographie Und Verwandte Gebiete. 1992;23:55–61.

- Neuper C, Scherer R, Reiner M, Pfurtscheller G. Imagery of motor actions: differential effects of kinesthetic and visual-motor mode of imagery in single-trial EEG. Brain Res Cogn Brain Res. 2005;25:668–677. doi: 10.1016/j.cogbrainres.2005.08.014.

- Osman A, Muller KM, Syre P, Russ B. Paradoxical lateralization of brain potentials during imagined foot movements. Brain Res Cogn Brain Res. 2005;24:727–731. doi: 10.1016/j.cogbrainres.2005.04.004.

- Pfurtscheller G. Functional Topography during Sensorimotor Activation Studied with Event-Related Desynchronization Mapping. J Clin Neurophysiol. 1989;6:75–84. doi: 10.1097/00004691-198901000-00003.

- Pfurtscheller G. Induced oscillations in the alpha band: Functional meaning. Epilepsia. 2003;44:2–8. doi: 10.1111/j.0013-9580.2003.12001.x.

- Pfurtscheller G, Neuper C, Andrew C, Edlinger G. Foot and hand area mu rhythms. Int J Psychophysiol. 1997;26:121–135. doi: 10.1016/s0167-8760(97)00760-5.

- Rohrbaugh JW, Syndulko K, Lindsley DB. Brain wave components of the contingent negative variation in humans. Science. 1976;191:1055–1057. doi: 10.1126/science.1251217.

- Rosahl SK, Knight RT. Role of prefrontal cortex in generation of the contingent negative variation. Cereb Cortex. 1995;5:123–134. doi: 10.1093/cercor/5.2.123.

- Satow T, Ikeda A, Yamamoto J, Begum T, Thuy DH, Matsuhashi M, Mima T, Nagamine T, Baba K, Mihara T, Inoue Y, Miyamoto S, Hashimoto N, Shibasaki H. Role of primary sensorimotor cortex and supplementary motor area in volitional swallowing: a movement-related cortical potential study. Am J Physiol Gastrointest Liver Physiol. 2004;287:G459–G470. doi: 10.1152/ajpgi.00323.2003.

- Sharbrough F, Chatrian G-E, Lesser RP, Luders H, Nuwer M, Picton TW. American Electroencephalographic Society guidelines for standard electrode position nomenclature. J Clin Neurophysiol. 1991;8:200–202.

- Tecce JJ. Contingent negative variation (CNV) and psychological processes in man. Psychol Bull. 1972;77:73–108. doi: 10.1037/h0032177.

- Tecce JJ, Cattanach L. Contingent negative variation. In: Niedermeyer E, Lopes da Silva FH, editors. Electroencephalography, Basic Principles, Clinical Applications and Related Fields. Baltimore: Williams and Wilkins; 1993. pp. 887–910.

- Walter WG, Cooper R, Aldridge VJ, McCallum WC, Winter AL. Contingent Negative Variation: An Electric Sign of Sensorimotor Association and Expectancy in the Human Brain. Nature. 1964;203:380–384. doi: 10.1038/203380a0.