Abstract

Templates play a fundamental role in Computational Anatomy. In this paper, we present a Bayesian model for template estimation. It is assumed that observed images I1, I2, …, IN are generated by shooting the template J through Gaussian-distributed random initial momenta θ1, θ2, …, θN. The template J is modeled as a deformation from a given hypertemplate J0 with initial momentum μ, which has a Gaussian prior. We apply a mode approximation of the EM (MAEM) procedure, where the conditional expectation is replaced by a Dirac measure at the mode. This leads us to an image matching problem with a Jacobian weight term, which we solve by deriving the weighted Euler-Lagrange equation. We present results of template estimation for hippocampus and cardiac data.

Keywords: Template estimation, Computational anatomy, Bayesian, Weighted Euler-Lagrange equation

1 Introduction

Computational Anatomy (CA) is the mathematical study of the variability of anatomical and biological shapes. The framework was pioneered by Grenander [11] through the notion of deformable templates. Given a template Itemp, the group of diffeomorphisms 𝒢 acts on it to generate an orbit ℐ = 𝒢.Itemp, a whole family of new objects with a structure similar to that of Itemp. Hence, one can model elements of the orbit ℐ via diffeomorphic transformations.

Templates play an important role in CA. They are usually used to generate digital anatomical atlases and act as a reference when computing shape variability. Often, templates have been chosen as manually selected “typical” observed images. It is, however, preferable to build a template based on statistical properties of the observed population. There have by now been several publications addressing the issue of shape averaging over a dataset. In this context, the average is based on metric properties of the space of shapes: assuming a distance in shape space is given, the average of a set of shapes is a minimizer of the sum of squared distances to each element of the set (Fréchet or Karcher mean). When the shape space is modeled as a Riemannian manifold, a local minimum of this sum of squared distances must be such that the sum of the initial velocities of the geodesics between the average and each element of the set vanishes. This leads to the following averaging procedure (sometimes called procrustean averaging), which consists in (i) starting with an initial guess of the average, (ii) computing all the geodesics between this current average and each element in the set of shapes, (iii) averaging their initial velocities and (iv) displacing the current average to the endpoint of the geodesic starting from it with this averaged initial velocity, this being iterated until convergence [12, 20, 9, 16, 21]. A different variational definition of the average has been provided in [15, 5, 4]. In the present work, however, we do not build the template as a metric average, but as the central component of a generative statistical model for the anatomy. This is reminiscent of the construction developed in [1] for linear models of deformations, and in [10] for thin-plate models of point sets.

In our application of Grenander’s pattern theory, anatomical shapes are modeled as an orbit under the action of the group of diffeomorphisms. Because of this, diffeomorphisms play an important role in our statistical model. The nonlinear space of diffeomorphisms can be studied as an infinite dimensional Riemannian manifold, on which, with a suitable choice of metric, geodesic equations are described by a momentum conservation law [2, 3, 14, 17]. In our context, the methodology of geodesic shooting [23, 19] relies on this conservation law to derive statistical models on diffeomorphisms and deformable objects. Through the geodesic equations, the flow at any point along the geodesic is completely determined (once a template is fixed) by the momentum at the origin. The initial momentum therefore provides a linear representation of the nonlinear diffeomorphic shape space in a local chart around the template, to which linear statistical analysis can be applied. Note that metric averaging can be reconnected to geodesic shooting [23, 13]. In this case, the algorithm is: first, compute the geodesics from a given template I0 to several target images and obtain the initial momentum of each transformation; second, compute the mean initial momentum m̄; then, shoot I0 with initial momentum m̄ to get a new image Ī; and iterate this procedure. This approach was used in landmark matching [23], 3D average digital atlas construction [6] and quantifying variability in heart geometry [13].

In this paper, we introduce a random statistical model on the initial momentum to represent random deformations of a template. The generative model we use combines this deformation with some observation noise. We will then develop a strategy to estimate the template from observations, based on a mode approximation of the EM algorithm (MAEM), under a Bayesian framework.

The paper is organized as follows. We first provide some background material and notation related to diffeomorphisms and their use in computational anatomy. We then discuss template estimation and detail the statistical model and the implementation of the MAEM procedure. This will require, in particular, introducing an extension of the LDDMM algorithm [7, 18] to the case where the data attachment term has a nonstationary spatial weight. We finally provide experimental results, with a comparison to a simplified, non-Bayesian approach.

1.1 Background

Let the background space Ω ⊂ ℝ^d be a bounded domain on which the images are defined. To a template Itemp corresponds the orbit ℐ = {Itemp ∘ g^{−1} : g ∈ 𝒢} under the group of diffeomorphisms 𝒢. For any two anatomical images J, I ∈ ℐ, there exists a set of diffeomorphisms that register the given images, i.e., such that I = J ∘ g^{−1} for any element g of this set. Following [8, 22], when we define the orbit ℐ, we restrict to diffeomorphisms that can be generated as flows g_t, t ∈ [0, 1], controlled by a velocity field υ_t, with the relation

$$\frac{\partial g_t}{\partial t}(y) = \upsilon_t\big(g_t(y)\big), \qquad y \in \Omega, \tag{1}$$

with initial condition g_0 = id. To ensure that the ODEs generate diffeomorphisms, the vector fields are constrained to be sufficiently smooth [8, 22]. More specifically, they are assumed to belong to (V, ‖·‖_V), a Hilbert space with squared norm defined as $\|\upsilon\|_V^2 = (A\upsilon, \upsilon)$ through an operator A : V → V*, where V* is the dual space of V. For υ ∈ V, Aυ can be considered as a linear form on V (a mapping from V to ℝ) through the identification (Aυ, w) = ⟨υ, w⟩_V, where (Aυ, w) is the standard notation for a linear form Aυ applied to w. Interpreting $\|\upsilon\|_V^2$ as an energy, Aυ will be called the momentum associated with the velocity υ. We assume that V can be embedded in a space of smooth functions, which makes it a reproducing kernel Hilbert space with kernel K = A^{−1} : V* → V.

The geodesics in the group of diffeomorphisms are time-dependent diffeomorphisms t ↦ gt defined by (1) such that the integrated energy

$$\int_0^1 \|\upsilon_t\|_V^2\,dt \tag{2}$$

is minimal with fixed boundary conditions g_0 and g_1. The image matching problem between J and I is formalized as the search for the optimal geodesic starting at g_0 = id such that $J\circ g_1^{-1} = I$. From this is derived the inexact matching problem, which consists in finding a time-dependent vector field υ_t solving

$$\hat\upsilon = \operatorname*{argmin}_{\upsilon}\; \int_0^1 \|\upsilon_t\|_V^2\,dt + \frac{1}{\sigma^2}\,\big\|J\circ g_1^{-1} - I\big\|_2^2 \tag{3}$$

Geodesics are characterized by the following Euler equation (sometimes called EPDiff [14]), which can be interpreted as a conservation equation for the momentum Aυ [2, 3]. The equation is

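$$\frac{\partial}{\partial t}A\upsilon_t + \operatorname{ad}^*_{\upsilon_t}(A\upsilon_t) = 0, \tag{4}$$

where, when the momentum $m = A\upsilon$ is given by a vector density, $\operatorname{ad}^*_{\upsilon}m = (D\upsilon)^T m + (Dm)\,\upsilon + (\operatorname{div}\upsilon)\,m$.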

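The conservation of momentum is described as follows. Let $w_0$ be a vector field, and let $w_t = (Dg_t\,w_0)\circ g_t^{-1}$ be the vector field transported along the geodesic. Then, if $A\upsilon_t$ satisfies Eq. (4), we have

$$\big(A\upsilon_t, w_t\big) = \big(A\upsilon_0, w_0\big), \qquad t \in [0, 1]. \tag{5}$$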

This implies that, for every vector field w, $(A\upsilon_t, w) = \big(A\upsilon_0, (D(g_t^{-1})\,w)\circ g_t\big)$: the momentum at time t is determined by $A\upsilon_0$ and $g_t$, so the geodesic evolution in the orbit depends only on J and the momentum at time 0. The solution of (3) satisfies this property in an even simpler form, which explicitly provides the momentum Aυ_t as a function of the deformed images and the diffeomorphism g_t [7, 18]. Moreover, Eq. (4) can be shown to have singular solutions that propagate over time. In fluid mechanics, EPDiff is used to model the propagation of waves in shallow water. In our context, it provides a very simple way to build finitely generated models of deformation (see section 3.2).

Hence, the nonlinear diffeomorphic shapes can be represented by the initial momenta which lie on a linear space (the dual of V ). This provides a powerful vehicle for statistical analysis of shapes. In this paper, we investigate a statistical model of deformable template estimation using this property.

Note that, if υ̂, with associated diffeomorphism ĝ, is a (local) minimum of (3), it is also a (local) minimum of the problem:

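$$\min_{\upsilon}\; \int_0^1 \|\upsilon_t\|_V^2\,dt \quad \text{subject to } g_0 = \mathrm{id},\; g_1 = \hat g_1 \tag{6}$$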

since the data term in (3) only depends on g_1. Therefore (since (6) is equivalent to geodesic minimization on groups of diffeomorphisms), υ̂ satisfies the EPDiff equation, which is therefore also relevant for inexact matching (as noted in [19]).

2 Methodology of Template Estimation

2.1 Statistical model for the anatomy

In addition to the conservation of momentum discussed in the previous section, solutions of (3) also conserve their energy (since they are geodesics): $\|\upsilon_t\|_V^2$ is independent of time. Because of this, problem (3) is equivalent to

$$\min_{\upsilon_0}\; \|\upsilon_0\|_V^2 + \frac{1}{\sigma^2}\,\big\|J\circ g_1^{-1} - I\big\|_2^2 \tag{7}$$

where υ_0 is the initial velocity. So the minimization is now restricted to time-dependent vector fields υ that satisfy (4). The minimized expression may formally be interpreted as minus a joint log-likelihood (up to constants) for the initial velocity υ_0 and the observed image I, in which υ_0 would be a random field (with V as a reproducing space) generating, via (4), a diffeomorphism g_1, and I would be obtained from the deformed template by the addition of a white noise.

This is essentially the model we adopt in this paper, under a discrete form that will be more amenable to rigorous computation. Recall that we have defined the duality operator A on V as associating to υ in V the linear form Aυ ∈ V* defined by (Aυ,w) = 〈υ,w〉V . By the Riesz representation theorem, A is invertible with inverse A−1 = K. The discretization will be done on the momentum, m = Aυ, instead of the velocity field υ.

Note that the sets V and V* are isometric if V* is equipped with the product 〈m,m̃〉V* = (m, Km̃) for m, m̃ ∈ V*. Therefore, the norm of initial velocity is equal to the norm of corresponding initial momentum, that is, for m0 = Aυ0, we have

$$\|\upsilon_0\|_V^2 = (A\upsilon_0, \upsilon_0) = (m_0, K m_0) = \|m_0\|_{V^*}^2 \tag{8}$$

Formally again, this may be interpreted as the log-likelihood of a Gaussian distribution on V* with covariance operator A = K−1, characterized by the property that, for any w ∈ V , (m0,w) is a centered Gaussian distribution with variance

$$\operatorname{Var}\big((m_0, w)\big) = (Aw, w) = \|w\|_V^2 \tag{9}$$

We now discuss a discrete version of this random field. For $x, a \in \mathbb{R}^d$, denote by $a\odot\delta_x$ the linear form $w \mapsto (a\odot\delta_x, w) := a^T w(x)$. Noting that $K(a\odot\delta_x) \in V$ is, by definition, a vector field in V that depends linearly on a, we make the abuse of notation

$$K(a\odot\delta_x)(y) = K(y, x)\,a,$$

where K(y, x) is a d by d matrix (the reproducing kernel of V). It can be checked that $K(x, y) = K(y, x)^T$ and

$$\big(a\odot\delta_x,\; K(b\odot\delta_y)\big) = a^T K(x, y)\,b.$$

We model the random momentum θ as a sum of such measures

$$\theta = \sum_{s=1}^S a_s\odot\delta_{x_s} \tag{10}$$

where x_1, x_2, …, x_S ∈ Ω form a set of fixed (deterministic) points (for example, the grid supporting the image discretization) and a = (a_1, a_2, …, a_S) are random variables such that a ~ 𝒩(0, Σ) in ℝ^{Sd}. We want to choose Σ consistently with our formal interpretation of Eq. (8). For this, we compute, for w ∈ V,

$$(\theta, w) = \sum_{s=1}^S a_s^T\,w(x_s), \tag{11}$$

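which is a Gaussian with mean 0 and variance

$$\operatorname{Var}\big((\theta, w)\big) = \sum_{i,j=1}^S w(x_i)^T\,\Sigma_{ij}\,w(x_j),$$

where $\Sigma_{ij} = E[a_i a_j^T]$ is a d by d matrix. We want to compare this to Eq. (9), and in particular ensure that both expressions coincide when w = K(·, x_k)b for some b ∈ ℝ^d and k ∈ {1, …, S}. In this case, $w(x_i) = K(x_i, x_k)\,b$ and $\|w\|_V^2 = b^T K(x_k, x_k)\,b$. Requiring equality for all such w, and (by polarization) for sums of two of them, yields the constraint: for all k, l,

$$\sum_{i,j=1}^S K(x_k, x_i)\,\Sigma_{ij}\,K(x_j, x_l) = K(x_k, x_l).$$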

Define K̃ij = K(xi, xj) and assume that the block matrix K̃ = (K̃ij) is invertible. Then the equation above is equivalent to ∑ = K̃−1, which completely describes the distribution of (a1,…, aS).
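To make the discrete prior concrete, here is a minimal sketch in plain Python under illustrative assumptions that are not taken from the paper: d = 1, a scalar Gaussian kernel K(x, y) = exp(−(x − y)²/(2s²)), and a small 1D grid; all function names are hypothetical. It draws a ~ 𝒩(0, K̃⁻¹) through a Cholesky factorization K̃ = LLᵀ (solving Lᵀa = z for a standard normal z, so that Cov(a) = L⁻ᵀL⁻¹ = K̃⁻¹) and evaluates the velocity Kθ(y) = Σ_s K(y, x_s)a_s. At the grid points this velocity equals K̃a = Lz, whose covariance is K̃, in line with Eq. (9).

```python
import math
import random


def gauss_kernel(x, y, scale=1.0):
    """Illustrative scalar kernel K(x, y) = exp(-(x - y)^2 / (2 scale^2)), d = 1."""
    return math.exp(-((x - y) ** 2) / (2.0 * scale ** 2))


def cholesky(M):
    """Lower-triangular L with M = L L^T, for a symmetric positive definite M."""
    n = len(M)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = M[i][j] - sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                L[i][i] = math.sqrt(s)
            else:
                L[i][j] = s / L[j][j]
    return L


def solve_upper_transposed(L, z):
    """Solve L^T a = z by back substitution (L lower triangular)."""
    n = len(L)
    a = [0.0] * n
    for i in reversed(range(n)):
        s = z[i] - sum(L[k][i] * a[k] for k in range(i + 1, n))
        a[i] = s / L[i][i]
    return a


def sample_momentum(points, scale=1.0, rng=random):
    """Draw the coefficients a ~ N(0, Ktilde^{-1}) of theta = sum_s a_s delta_{x_s}."""
    Kt = [[gauss_kernel(xi, xj, scale) for xj in points] for xi in points]
    L = cholesky(Kt)
    z = [rng.gauss(0.0, 1.0) for _ in points]
    a = solve_upper_transposed(L, z)  # Cov(a) = L^{-T} L^{-1} = Ktilde^{-1}
    return Kt, L, z, a


def velocity(y, points, a, scale=1.0):
    """Velocity field K theta evaluated at y: sum_s K(y, x_s) a_s."""
    return sum(gauss_kernel(y, xs, scale) * a_s for xs, a_s in zip(points, a))
```

For example, with `pts = [float(i) for i in range(8)]` and `sample_momentum(pts, rng=random.Random(0))`, the velocities `velocity(x, pts, a)` at the grid points reproduce the vector Lz up to rounding, which is a quick way to check the construction.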

The probability density function (p.d.f) of a is given by

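$$p(a) = \frac{1}{Z}\exp\Big(-\frac12\sum_{i,j=1}^S a_i^T K(x_i, x_j)\,a_j\Big), \tag{12}$$

where $Z = (2\pi)^{Sd/2}\,|\tilde K|^{-1/2}$.

It is interesting to notice that we have, with $\theta = \sum_{s=1}^S a_s\odot\delta_{x_s}$,

$$K\theta(y) = \sum_{j=1}^S K(y, x_j)\,a_j, \tag{13}$$

$$(\theta, K\theta) = \sum_{i,j=1}^S a_i^T K(x_i, x_j)\,a_j. \tag{14}$$

So the p.d.f. of θ can be written as

$$p(\theta) = \frac{1}{Z}\exp\Big(-\frac12(\theta, K\theta)\Big)\,\mathbf{1}_{V^*(x)}(\theta), \tag{15}$$

where $\mathbf{1}$ represents the indicator function and $V^*(x) = \big\{\sum_{s=1}^S a_s\odot\delta_{x_s} : a_1, \dots, a_S \in \mathbb{R}^d\big\}$. Viewed in the infinite-dimensional space V*, our model is a singular Gaussian distribution supported by the finite-dimensional space V*(x). When restricted to this space, it is absolutely continuous with respect to the Lebesgue measure, with density given by Eq. (15).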

This describes the deformation part of the model, represented as the distribution of the initial momentum θ. We can solve (4) with initial condition Aυ_0 = θ from time t = 0 to t = 1 and integrate the velocity field t ↦ υ_t to obtain a diffeomorphism at time t = 1, which we denote by g_θ. Although we will not use this fact in our numerical methods, it is important to notice that g_θ can be obtained from θ (given by Eq. (10)) by solving a system of ordinary differential equations to which Eq. (4) reduces. It can be shown that this system has solutions over all times, so our model is theoretically consistent. With this model, the image is therefore a random deformation of the template (since θ is random). We assume that the observed image I is obtained from the deformed template after discretization and addition of noise. More precisely, the observation I is a discrete image given by

$$I(x_s) = J\circ g_\theta^{-1}(x_s) + \sigma\,\varepsilon_s, \qquad s = 1, \dots, S, \tag{16}$$

where ε_1, …, ε_S are independent standard Gaussian variables, independent of θ.

The complete model is thus described by the pair (θ, I). Our goal is, given observations I_1, …, I_N having the same distribution as I above, to estimate the template J and the noise variance σ².
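The remark that g_θ is obtained from θ through a finite system of ODEs can be illustrated with a minimal sketch, again under illustrative assumptions (d = 1, scalar Gaussian kernel, hypothetical function names). For θ = Σ_s a_s ⊙ δ_{x_s}, the standard point-momentum form of EPDiff is the Hamiltonian system dx_i/dt = Σ_j K(x_i, x_j)a_j, da_i/dt = −Σ_j a_i a_j ∂₁K(x_i, x_j). The code integrates it with classical RK4, so x_i(1) approximates g_θ(x_i), and lets one check that the energy ½(θ, Kθ) is conserved along the geodesic. The paper does not use this system numerically; this is only an illustration.

```python
import math


def kernel(x, y, scale):
    """Illustrative scalar Gaussian kernel (d = 1)."""
    return math.exp(-((x - y) ** 2) / (2.0 * scale ** 2))


def rhs(x, a, scale):
    """Right-hand side of the point-momentum Hamiltonian system."""
    n = len(x)
    dx = [sum(kernel(x[i], x[j], scale) * a[j] for j in range(n)) for i in range(n)]
    # da_i/dt = -dH/dx_i; for this kernel, -dK/dx_i = (x_i - x_j) / scale^2 * K.
    da = [sum(a[i] * a[j] * (x[i] - x[j]) / scale ** 2 * kernel(x[i], x[j], scale)
              for j in range(n)) for i in range(n)]
    return dx, da


def energy(x, a, scale):
    """H = 1/2 (theta, K theta) = 1/2 sum_ij a_i K(x_i, x_j) a_j."""
    n = len(x)
    return 0.5 * sum(a[i] * a[j] * kernel(x[i], x[j], scale)
                     for i in range(n) for j in range(n))


def shoot(x0, a0, scale=1.0, steps=100):
    """Integrate from t = 0 to t = 1 with RK4; x(1) approximates g_theta at the x_s."""
    h = 1.0 / steps
    x, a = list(x0), list(a0)

    def shifted(u, du, c):
        return [ui + c * d for ui, d in zip(u, du)]

    for _ in range(steps):
        k1x, k1a = rhs(x, a, scale)
        k2x, k2a = rhs(shifted(x, k1x, h / 2), shifted(a, k1a, h / 2), scale)
        k3x, k3a = rhs(shifted(x, k2x, h / 2), shifted(a, k2a, h / 2), scale)
        k4x, k4a = rhs(shifted(x, k3x, h), shifted(a, k3a, h), scale)
        x = [xi + h / 6 * (p + 2 * q + 2 * r + s)
             for xi, p, q, r, s in zip(x, k1x, k2x, k3x, k4x)]
        a = [ai + h / 6 * (p + 2 * q + 2 * r + s)
             for ai, p, q, r, s in zip(a, k1a, k2a, k3a, k4a)]
    return x, a
```

In 1D a diffeomorphic flow cannot reorder points, so a quick sanity check after `shoot([0.0, 1.0, 2.0, 3.0], [0.5, -0.3, 0.2, 0.4])` is that the returned positions are still increasing and that `energy` is unchanged up to integration error.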

2.2 Prior distribution on the template

We want to constrain the virtually infinite-dimensional template estimation problem within a Bayesian strategy. For this, we introduce a hypertemplate J_0 and describe J as a random deformation of J_0. The hypertemplate is given, usually provided by an anatomical atlas. The template J is modeled as $J = J_0\circ g_\mu^{-1}$, with μ the initial momentum. We model μ as a discrete momentum as in the previous section, with distribution

$$p_\pi(\mu) = \frac{1}{Z_\pi}\exp\Big(-\frac12(\mu, K_\pi\mu)\Big)\,\mathbf{1}_{V^*(x)}(\mu), \tag{17}$$

for a reproducing kernel Kπ. In our experiments, we made the simple choice Kπ = λK, for some regularization parameter λ > 0.

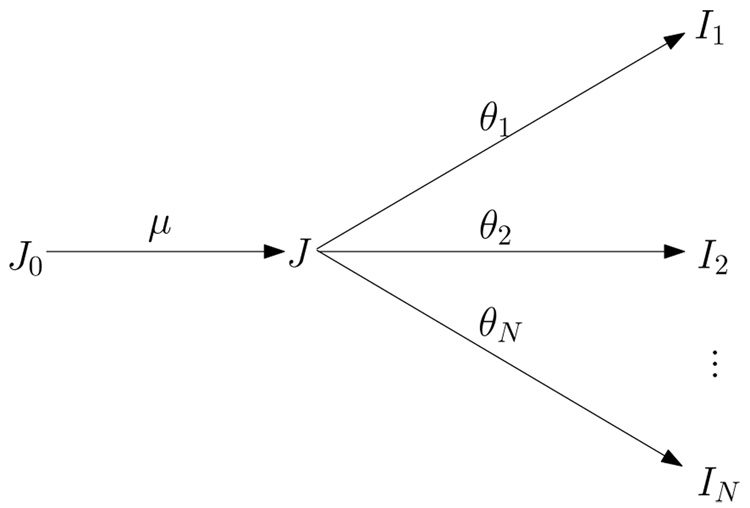

The relations between J0, J and I1, I2, …, IN are illustrated in Figure 1.

Figure 1.

The template J is to be estimated given the hypertemplate J0 and the observed images I1, I2, …, IN. We model the template as $J = J_0\circ g_\mu^{-1}$, where μ is the random initial momentum. In the EM algorithm that follows, μ is computed iteratively, with the random initial momenta θ1, θ2, …, θN treated as hidden variables.

Remark

In this model, J0 and J are continuous. J0 is a given continuous function defined on Ω ⊂ ℝ^d, although it may be finitely generated (using a finite element representation, for example). Observations I1, …, IN are discrete images. From these images, we will estimate the initial momentum μ, and then obtain the continuous template $J = J_0\circ g_\mu^{-1}$. For simplicity, with a slight abuse of notation, we will still denote by J its discretization $(J(x_s))_{s=1,\dots,S}$.

Remark

The kernels K and Kπ are important components of the model, and can be considered as infinite dimensional parameters. While not an impossible task, trying to estimate them would significantly complicate our procedure. Consequently, the kernels have been selected a priori, and left fixed during the estimation procedure.

3 Template Estimation with the MAEM Algorithm

3.1 The complete-data log-likelihood

We here describe the estimation of the parameters μ (which uniquely determines the template) and σ² based on the observation of I = {I1, I2, …, IN}. Our goal is to compute $\operatorname*{argmax}_{\mu,\sigma^2} p_\sigma(\mu\mid I)$. We let Θ = {θ1, θ2, …, θN} denote the sequence of hidden initial momenta.

To use the EM algorithm we first write down the complete-data log-likelihood. The joint likelihood of the model given the observations is

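$$p_\sigma(I, \Theta, \mu) = p_\pi(\mu)\prod_{n=1}^N p(\theta_n)\,p_\sigma(I_n\mid\theta_n, \mu), \tag{18}$$

where $p(\theta_n)$ is given by Eq. (15) and, following Eq. (16), $p_\sigma(I_n\mid\theta_n,\mu) = (2\pi\sigma^2)^{-S/2}\exp\big(-\frac{1}{2\sigma^2}\|J_0\circ g_\mu^{-1}\circ g_{\theta_n}^{-1} - I_n\|^2\big)$, with $\|\cdot\|^2$ the sum of squares over the S grid points.

The EM algorithm generates a sequence $(\mu^{(k)}, \sigma^{2(k)})$ according to the transition

$$\big(\mu^{(k+1)}, \sigma^{2(k+1)}\big) = \operatorname*{argmax}_{\mu,\sigma^2}\; E_{\mu^{(k)},\sigma^{2(k)}}\big[\log p_\sigma(I, \Theta, \mu)\,\big|\,I\big],$$

where $E_{\mu^{(k)},\sigma^{2(k)}}$ is the expectation under the assumption that the true parameters are $\mu^{(k)}$ and $\sigma^{2(k)}$.

The maximization is decomposed into two steps, which yields a generalized EM algorithm:

$$\begin{aligned} \sigma^{2(k+1)} &= \operatorname*{argmax}_{\sigma^2}\; E_{\mu^{(k)},\sigma^{2(k)}}\big[\log p_\sigma(I, \Theta, \mu^{(k)})\,\big|\,I\big],\\ \mu^{(k+1)} &= \operatorname*{argmax}_{\mu}\; E_{\mu^{(k)},\sigma^{2(k)}}\big[\log p_{\sigma^{(k+1)}}(I, \Theta, \mu)\,\big|\,I\big]. \end{aligned}$$

Define the expected complete-data log-likelihood $Q(\mu, \sigma^2\mid\mu^{(k)}, \sigma^{2(k)}, I)$ as the conditional expectation on the right-hand side above, which is maximized alternately in σ² and μ:

$$\begin{aligned} Q(\mu, \sigma^2\mid\mu^{(k)}, \sigma^{2(k)}, I) &= E_{\mu^{(k)},\sigma^{2(k)}}\Big[\log p_\pi(\mu) + \sum_{n=1}^N \log p_\sigma(I_n\mid\theta_n, \mu)\,\Big|\,I\Big] + C\\ &= -\frac12(\mu, K_\pi\mu) - \frac{NS}{2}\log\sigma^2 - \frac{1}{2\sigma^2}\sum_{n=1}^N E_{\mu^{(k)},\sigma^{2(k)}}\Big[\big\|J_0\circ g_\mu^{-1}\circ g_{\theta_n}^{-1} - I_n\big\|^2\,\Big|\,I_n\Big] + \tilde C \end{aligned} \tag{19}$$

where S is the number of grid points (pixels or voxels), and C and C̃ are expressions that do not depend on μ or σ². The M-step at transition k yields

$$\sigma^{2(k+1)} = \frac{1}{NS}\sum_{n=1}^N E_{\mu^{(k)},\sigma^{2(k)}}\Big[\big\|J^{(k)}\circ g_{\theta_n}^{-1} - I_n\big\|^2\,\Big|\,I_n\Big], \qquad J^{(k)} = J_0\circ g_{\mu^{(k)}}^{-1}, \tag{20}$$

$$\mu^{(k+1)} = \operatorname*{argmin}_{\mu}\; \frac12(\mu, K_\pi\mu) + \frac{1}{2\sigma^{2(k+1)}}\sum_{n=1}^N E_{\mu^{(k)},\sigma^{2(k)}}\Big[\big\|J_0\circ g_\mu^{-1}\circ g_{\theta_n}^{-1} - I_n\big\|^2\,\Big|\,I_n\Big]. \tag{21}$$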

Remark

The resulting MAEM algorithm formally coincides with Maximum a Posteriori (MAP) estimation, which maximizes the joint density with respect to all unknowns, the hidden momenta included. We use the name MAEM instead of MAP as a reminder that the deformation (with respect to which the mode is computed) is not a parameter but a hidden variable, and that our procedure should be considered as an approximation of the maximization of the likelihood of the observations.

3.2 Maximization via the Euler Equation with Jacobian weight

This completes the EM framework for template estimation. The main remaining difficulty is the minimization in (21). To derive the Euler-Lagrange equation for the minimizer, we use the integral form of the norm, which avoids the interpolation problem associated with the discrete-sum definition (the one corresponding to signal plus additive white noise). This makes the change of variables formula straightforward and links us to the previously published Euler-Lagrange equations on vector fields. Our implementation is then a discretization of that continuum equation. Note that one could also provide a fully discrete analysis of the problem, relying on a representation of the images using linear interpolations as in [1], where both images and deformations are linear combinations of kernels centered at the landmark points.
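The Jacobian weight comes from the change of variables x = g(y) in the integral form of the norm: ∫|J∘g⁻¹(x) − I(x)|² dx = ∫|J(y) − I∘g(y)|² |Dg(y)| dy. Below is a minimal numerical check in 1D. The diffeomorphism g(y) = y + ε sin(2πy) of [0, 1], the two images and the midpoint quadrature are illustrative assumptions, not choices made in the paper.

```python
import math

EPS = 0.05  # amplitude of the illustrative deformation; 2*pi*EPS < 1 keeps g invertible


def g(y):
    """Illustrative diffeomorphism of [0, 1]: g(y) = y + EPS sin(2 pi y)."""
    return y + EPS * math.sin(2.0 * math.pi * y)


def dg(y):
    """Derivative (1D Jacobian) of g, positive on [0, 1]."""
    return 1.0 + 2.0 * math.pi * EPS * math.cos(2.0 * math.pi * y)


def g_inv(x, tol=1e-12):
    """Invert the increasing map g on [0, 1] by bisection."""
    lo, hi = 0.0, 1.0
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if g(mid) < x:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def template(y):
    """Hypothetical template J."""
    return math.sin(math.pi * y) ** 2


def observation(x):
    """Hypothetical observed image I."""
    return math.exp(-10.0 * (x - 0.4) ** 2)


def midpoint(f, n=4000):
    """Midpoint-rule approximation of the integral of f over [0, 1]."""
    h = 1.0 / n
    return h * sum(f((i + 0.5) * h) for i in range(n))


# Data term in observation space: int |J o g^{-1}(x) - I(x)|^2 dx
lhs = midpoint(lambda x: (template(g_inv(x)) - observation(x)) ** 2)
# Same term pulled back to template space, weighted by the Jacobian |Dg(y)|
rhs = midpoint(lambda y: (template(y) - observation(g(y))) ** 2 * dg(y))
print(lhs, rhs)
```

The two numbers agree up to quadrature error; dropping the factor `dg(y)` on the right-hand side would break the equality, which is why the weight appears in the M-step.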

For the variation, let Vπ be the reproducing kernel Hilbert space associated to the prior kernel Kπ. Since the energy is conserved along geodesics, we have

$$(\mu, K_\pi\mu) = \|\upsilon_0\|_{V_\pi}^2 = \int_0^1 \|\upsilon_t\|_{V_\pi}^2\,dt, \tag{22}$$

where υ0 = Kπμ is the initial velocity. This connects our optimization in the MAEM algorithm to the original LDDMM Euler-Lagrange equation of [7].

If $g_t$ is a time-dependent flow of diffeomorphisms, we let $g_{s,t}:\Omega\to\Omega$ denote the composition $g_{s,t}(y) = g_t\circ(g_s)^{-1}(y)$, i.e., the position at time t of a particle that is at position y at time s. Let $Dg_{s,t}$ denote the Jacobian matrix of $g_{s,t}$, i.e., the matrix of its spatial derivatives. We add a superscript υ, writing $g^\upsilon_{s,t}$, to indicate the flow arising from (1). The following lemma states how perturbations of the vector field affect the mapping.

Lemma 3.1

The variation of the mapping $g^\upsilon_{s,t}$ when $\upsilon \in L^2([0,1], V_\pi)$ is perturbed along $h \in L^2([0,1], V_\pi)$ is given by

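$$\partial_h g^\upsilon_{s,t}(y) = \frac{d}{d\epsilon}g^{\upsilon+\epsilon h}_{s,t}(y)\Big|_{\epsilon=0} = Dg^\upsilon_{s,t}(y)\int_s^t \big(Dg^\upsilon_{s,r}(y)\big)^{-1}\,h_r\big(g^\upsilon_{s,r}(y)\big)\,dr. \tag{23}$$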

Refer to [7] for proof.

Then the maximization is reduced to what we term the weighted-LDDMM image matching problem.

Proposition 3.1

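(i) (Reduction to a weighted-LDDMM problem) At stage k + 1, define the following auxiliary image, a Jacobian-weighted average of conditional means:

$$\bar I^{(k+1)}(y) = \frac{\sum_{n=1}^N E_{\mu^{(k)},\sigma^{2(k)}}\big[I_n\circ g_{\theta_n}(y)\,|Dg_{\theta_n}(y)|\,\big|\,I_n\big]}{\sum_{n=1}^N E_{\mu^{(k)},\sigma^{2(k)}}\big[|Dg_{\theta_n}(y)|\,\big|\,I_n\big]}. \tag{24}$$

Then the M-step of the generalized EM algorithm reduces to the weighted-LDDMM algorithm:

$$\mu^{(k+1)} = \operatorname*{argmin}_{\mu}\; \frac12(\mu, K_\pi\mu) + \frac{1}{2\sigma^{2(k+1)}}\int_\Omega \alpha^{(k+1)}(y)\,\big|J_0\circ g_\mu^{-1}(y) - \bar I^{(k+1)}(y)\big|^2\,dy, \tag{25}$$

with the Jacobian weight

$$\alpha^{(k+1)}(y) = \sum_{n=1}^N E_{\mu^{(k)},\sigma^{2(k)}}\big[|Dg_{\theta_n}(y)|\,\big|\,I_n\big], \tag{26}$$

where $|Dg_{\theta_n}(y)|$ denotes the determinant of the Jacobian matrix of $g_{\theta_n}$ at y.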

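(ii) (The weighted Euler-Lagrange equation) Given a continuously differentiable template image J_0, a target image Ī and a Jacobian weight α, the optimal velocity field $\hat\upsilon \in L^2([0,1], V_\pi)$, with $\hat\upsilon_0 = K_\pi\hat\mu$, for inexact matching of J_0 and Ī, defined as

$$\hat\upsilon = \operatorname*{argmin}_{\upsilon\in L^2([0,1],V_\pi)} E(\upsilon), \qquad E(\upsilon) = \int_0^1 \|\upsilon_t\|_{V_\pi}^2\,dt + \frac{1}{\sigma^2}\int_\Omega \alpha(y)\,\big|J_0\circ g^\upsilon_{1,0}(y) - \bar I(y)\big|^2\,dy, \tag{27}$$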

satisfies the Euler-Lagrange equation

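$$\hat\upsilon_t(x) = \frac{1}{\sigma^2}\int_\Omega K_\pi(x, z)\,\alpha\big(g_{t,1}(z)\big)\,\big|Dg_{t,1}(z)\big|\,\big(J^0_t(z) - J^1_t(z)\big)\,\nabla J^0_t(z)\,dz, \qquad t \in [0, 1], \tag{28}$$

where $g_{s,t} = g^{\hat\upsilon}_{s,t}$, $J^0_t = J_0\circ g_{t,0}$ and $J^1_t = \bar I\circ g_{t,1}$.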

The key point is that this reduces to the basic LDDMM image matching problem of Beg et al. [7] when α ≡ 1; in general, the Jacobian weight α modulates the data attachment term.

Proof

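(i) Let $J = J_0\circ g_\mu^{-1}$. Using the integral form of the norm and the change of variables $x = g_{\theta_n}(y)$, we have

$$\big\|J\circ g_{\theta_n}^{-1} - I_n\big\|^2 = \int_\Omega \big|J\circ g_{\theta_n}^{-1}(x) - I_n(x)\big|^2\,dx = \int_\Omega \big|J(y) - I_n\circ g_{\theta_n}(y)\big|^2\,|Dg_{\theta_n}(y)|\,dy. \tag{29}$$

With $\bar I^{(k+1)}$ as defined in Eq. (24), and writing $E_k[\,\cdot\,]$ for $E_{\mu^{(k)},\sigma^{2(k)}}[\,\cdot\mid I_n]$, we have, for all y ∈ Ω,

$$\sum_{n=1}^N E_k\Big[\big|J(y) - I_n\circ g_{\theta_n}(y)\big|^2\,|Dg_{\theta_n}(y)|\Big] \overset{(a)}{=} \alpha^{(k+1)}(y)\,\big|J(y) - \bar I^{(k+1)}(y)\big|^2 + \sum_{n=1}^N E_k\Big[\big|\bar I^{(k+1)}(y) - I_n\circ g_{\theta_n}(y)\big|^2\,|Dg_{\theta_n}(y)|\Big]. \tag{30}$$

In (a), the vanishing of the cross term

$$\sum_{n=1}^N E_k\Big[\big(\bar I^{(k+1)}(y) - I_n\circ g_{\theta_n}(y)\big)\,|Dg_{\theta_n}(y)|\Big] = 0$$

follows directly from the definition of $\bar I^{(k+1)}(y)$.

Since the second term of Eq. (30) does not depend on μ, substituting Eqs. (29) and (30) into Eq. (21), we see that $\mu^{(k+1)}$ must minimize

$$\frac12(\mu, K_\pi\mu) + \frac{1}{2\sigma^{2(k+1)}}\int_\Omega \alpha^{(k+1)}(y)\,\big|J_0\circ g_\mu^{-1}(y) - \bar I^{(k+1)}(y)\big|^2\,dy, \tag{31}$$

with the Jacobian weight

$$\alpha^{(k+1)}(y) = \sum_{n=1}^N E_k\big[|Dg_{\theta_n}(y)|\big]. \tag{32}$$

With the optimal $\mu^{(k+1)}$, we can compute $g_{\mu^{(k+1)}}$ by geodesic shooting (Eq. (4)), and obtain the newly estimated template $J^{(k+1)} = J_0\circ g_{\mu^{(k+1)}}^{-1}$. This proves the first part.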

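(ii) The proof of the second part follows the derivation in [7]. Suppose the velocity $\upsilon \in L^2([0,1], V_\pi)$ is perturbed along the direction $h \in L^2([0,1], V_\pi)$ by an amount ε. The Gâteaux variation $\partial_h E(\upsilon)$ of the energy functional is expressed in terms of the Fréchet derivative $\nabla_\upsilon E$ as

$$\partial_h E(\upsilon) = \frac{d}{d\epsilon}E(\upsilon + \epsilon h)\Big|_{\epsilon=0} = \int_0^1 \big\langle(\nabla_\upsilon E_t)_{V_\pi}, h_t\big\rangle_{V_\pi}\,dt. \tag{33}$$

The variation of the first part of the energy, $E_1(\upsilon) = \int_0^1 \|\upsilon_t\|_{V_\pi}^2\,dt$, is given by

$$\partial_h E_1(\upsilon) = 2\int_0^1 \langle\upsilon_t, h_t\rangle_{V_\pi}\,dt. \tag{34}$$

The second part of the energy is

$$E_2(\upsilon) = \frac{1}{\sigma^2}\int_\Omega \alpha(y)\,\big|J_0\circ g_{1,0}(y) - \bar I(y)\big|^2\,dy, \tag{35}$$

where $g_{s,t} = g^\upsilon_{s,t}$. The variation of $E_2(\upsilon)$ is

$$\begin{aligned} \partial_h E_2(\upsilon) &= \frac{2}{\sigma^2}\int_\Omega \alpha(y)\big(J_0\circ g_{1,0}(y) - \bar I(y)\big)\,DJ_0\big(g_{1,0}(y)\big)\,\partial_h g_{1,0}(y)\,dy\\ &\overset{(a)}{=} -\frac{2}{\sigma^2}\int_0^1\!\!\int_\Omega \alpha(y)\big(J_0\circ g_{1,0}(y) - \bar I(y)\big)\,DJ_0\big(g_{1,0}(y)\big)\,Dg_{1,0}(y)\big(Dg_{1,t}(y)\big)^{-1}h_t\big(g_{1,t}(y)\big)\,dy\,dt\\ &\overset{(b)}{=} -\frac{2}{\sigma^2}\int_0^1\!\!\int_\Omega \alpha(y)\big(J_0\circ g_{1,0}(y) - \bar I(y)\big)\,D(J_0\circ g_{t,0})\big(g_{1,t}(y)\big)\,h_t\big(g_{1,t}(y)\big)\,dy\,dt \end{aligned} \tag{36}$$

with (a) derived directly from Lemma 3.1 (with s = 1 and t = 0) and (b) from the chain rule $D(J_0\circ g_{1,0}) = (DJ_0\circ g_{1,0})\,Dg_{1,0}$ applied to $J_0\circ g_{1,0} = (J_0\circ g_{t,0})\circ g_{1,t}$. Changing variables with $z = g_{1,t}(y)$, i.e. $g_{t,1}(z) = y$, gives $dy = |Dg_{t,1}(z)|\,dz$. The flow property gives $g_{1,0}\circ g_{t,1} = g_{t,0}$. In addition, $D(I\circ g) = (\nabla(I\circ g))^T$. With these substitutions, we get

$$\partial_h E_2(\upsilon) = -\frac{2}{\sigma^2}\int_0^1\!\!\int_\Omega \alpha\big(g_{t,1}(z)\big)\,|Dg_{t,1}(z)|\,\big(J_0\circ g_{t,0}(z) - \bar I\circ g_{t,1}(z)\big)\,\nabla(J_0\circ g_{t,0})(z)^T h_t(z)\,dz\,dt.$$

Combining the two parts of the energy functional, the gradient is thus

$$(\nabla_\upsilon E_t)_{V_\pi} = 2\upsilon_t - \frac{2}{\sigma^2}K_\pi\Big[(\alpha\circ g_{t,1})\,|Dg_{t,1}|\,\big(J_0\circ g_{t,0} - \bar I\circ g_{t,1}\big)\,\nabla(J_0\circ g_{t,0})\Big], \tag{37}$$

where $K_\pi[f](x) = \int_\Omega K_\pi(x, z)\,f(z)\,dz$, and the subscript V_π in $(\nabla_\upsilon E_t)_{V_\pi}$ indicates that the gradient is taken in the space $L^2([0,1], V_\pi)$. The optimizing velocity fields satisfy the Euler-Lagrange equation

$$\int_0^1 \big\langle(\nabla_\upsilon E_t)_{V_\pi}, h_t\big\rangle_{V_\pi}\,dt = 0 \quad \text{for all } h \in L^2([0,1], V_\pi). \tag{38}$$

Since h is arbitrary in $L^2([0,1], V_\pi)$, we get Eq. (28).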

Equation (37) provides the gradient flow that minimizes (27). Recall that this problem must be solved to obtain the next deformation of the hypertemplate: given the solution υ̂, compute the initial momentum μ̂ = (Kπ)−1υ̂0, the optimal diffeomorphism gμ̂ and the new template .

Since the Euler-Lagrange equation for the weighted LDDMM differs from the original equation only by the factor α∘g_{t,1}, its implementation is a minor modification of the basic one, for which we refer to [7] for details.
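As an illustration of how small that modification is, here is a discrete sketch of the gradient of Eq. (37) at a single time t on a uniform 1D grid. It assumes K_π = λ × (Gaussian kernel), central finite differences for ∇J_t^0 and a Riemann sum for K_π; the function name and its arguments are hypothetical, and the flows g_{t,0}, g_{t,1} are assumed to have already been used to resample the images onto the grid. The weight α∘g_{t,1} enters only as one extra factor in the image force.

```python
import math


def weighted_lddmm_gradient(v, J0t, J1t, alpha_t, jac_t, dx, sigma2, scale, lam=1.0):
    """Discretized gradient (37) at one time t on a uniform 1D grid (sketch).

    v       -- current velocity v_t at the grid points
    J0t     -- J_0 o g_{t,0} at the grid points
    J1t     -- I_bar o g_{t,1} at the grid points
    alpha_t -- alpha o g_{t,1} at the grid points
    jac_t   -- |Dg_{t,1}| at the grid points
    Assumes K_pi = lam * Gaussian kernel of width `scale` (d = 1).
    """
    n = len(v)
    # Gradient of J_t^0 by central differences, one-sided at the two ends.
    grad = []
    for i in range(n):
        lo, hi = max(i - 1, 0), min(i + 1, n - 1)
        grad.append((J0t[hi] - J0t[lo]) / ((hi - lo) * dx))
    # Jacobian-weighted force: (alpha o g_{t,1}) |Dg_{t,1}| (J_t^0 - J_t^1) grad J_t^0.
    force = [alpha_t[i] * jac_t[i] * (J0t[i] - J1t[i]) * grad[i] for i in range(n)]
    # Apply K_pi by a Riemann sum: (K_pi f)(x_i) ~ sum_j K_pi(x_i, x_j) f(x_j) dx.
    smoothed = [sum(lam * math.exp(-(((i - j) * dx) ** 2) / (2.0 * scale ** 2)) * force[j] * dx
                    for j in range(n))
                for i in range(n)]
    return [2.0 * v[i] - (2.0 / sigma2) * smoothed[i] for i in range(n)]
```

Setting `alpha_t` to all ones recovers the unweighted LDDMM gradient, and when the two images already agree the data term vanishes and the gradient reduces to 2υ_t.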

3.3 Computing the conditional mean via the mode

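Another difficulty is computing the conditional expectations, which cannot be done analytically given the highly nonlinear relation between θ_n and I_n∘g_{θ_n}. The crudest approximation of the conditional distribution is to replace it by a Dirac measure at its mode, and this is the one we select for the time being. Given $J^{(k)}$, the estimate of the template at the k-th iteration, denote by $\hat\theta_n^{(k)}$ the minimizer of

$$\frac12(\theta, K\theta) + \frac{1}{2\sigma^{2(k)}}\big\|J^{(k)}\circ g_\theta^{-1} - I_n\big\|^2, \tag{39}$$

that is, the mode of the conditional distribution of θ_n given I_n. The computation of $\bar I^{(k+1)}$ now becomes

$$\bar I^{(k+1)}(y) = \frac{\sum_{n=1}^N I_n\circ g_{\hat\theta_n^{(k)}}(y)\,|Dg_{\hat\theta_n^{(k)}}(y)|}{\sum_{n=1}^N |Dg_{\hat\theta_n^{(k)}}(y)|}. \tag{40}$$

This also provides an approximation of the Jacobian weight

$$\alpha^{(k+1)}(y) = \sum_{n=1}^N |Dg_{\hat\theta_n^{(k)}}(y)|. \tag{41}$$

Concerning the implementation, $\hat\theta_n^{(k)}$ is computed using the LDDMM algorithm between the template $J^{(k)}$ and the target $I_n$. Note that $\hat\theta_n^{(k)}$ itself is not needed for Eq. (40); only the deformed target $I_n\circ g_{\hat\theta_n^{(k)}}$ and the Jacobian determinant $|Dg_{\hat\theta_n^{(k)}}|$ are.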
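Under the mode approximation, Eqs. (40) and (41) are voxelwise weighted averages. The plain-Python sketch below (function names hypothetical; images flattened to lists of voxel values) computes Ī and α from the deformed targets I_n∘g_n and the Jacobian determinants |Dg_n|. It also checks, voxel by voxel, the decomposition behind Eq. (30) once the expectations are replaced by the mode: Σ_n |Dg_n|(J − I_n∘g_n)² = α(J − Ī)² + Σ_n |Dg_n|(Ī − I_n∘g_n)² for any J.

```python
def weighted_mean_and_weight(warped_targets, jac_dets):
    """Voxelwise Jacobian weight alpha and Jacobian-weighted mean I_bar.

    warped_targets[n][s] -- (I_n o g_n)(y_s); jac_dets[n][s] -- |Dg_n(y_s)| > 0.
    """
    S = len(warped_targets[0])
    alpha = [sum(jd[s] for jd in jac_dets) for s in range(S)]
    i_bar = [sum(w[s] * jd[s] for w, jd in zip(warped_targets, jac_dets)) / alpha[s]
             for s in range(S)]
    return i_bar, alpha


def decomposition_sides(J, warped_targets, jac_dets):
    """Both sides of the weighted bias-variance split, voxel by voxel."""
    i_bar, alpha = weighted_mean_and_weight(warped_targets, jac_dets)
    pairs = list(zip(warped_targets, jac_dets))
    lhs = [sum(jd[s] * (J[s] - w[s]) ** 2 for w, jd in pairs) for s in range(len(J))]
    rhs = [alpha[s] * (J[s] - i_bar[s]) ** 2
           + sum(jd[s] * (i_bar[s] - w[s]) ** 2 for w, jd in pairs)
           for s in range(len(J))]
    return lhs, rhs
```

For instance, with deformed targets `[[10.0, 0.0, 5.0], [20.0, 4.0, 5.0]]` and Jacobians `[[1.0, 2.0, 0.5], [3.0, 2.0, 1.5]]`, the weight is `[4.0, 4.0, 2.0]` and the mean image is `[17.5, 2.0, 5.0]`. The first voxel is pulled toward the target with the larger Jacobian, which a plain average would not do.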

3.4 Template Estimation Algorithm

Now, the template estimation algorithm can be summarized as the following:

Algorithm 3.1 (Template estimation)

Having the hypertemplate J0 and N observations I1, I2, …, IN, we wish to estimate the template J and noise variance σ2. Let J(k) denote the estimated template after k iterations with initial guess J(0) = J0. Then, the (k + 1)th step is

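(i) Map the current estimated template $J^{(k)}$ to $I_n$, n = 1, 2, …, N, using basic LDDMM, and obtain the deformed targets $I_n\circ g_n^{(k)}$ and the Jacobian determinants $|Dg_n^{(k)}|$ of the deformations.

(ii) Compute the mean image $\bar I^{(k+1)}$ and the Jacobian weight $\alpha^{(k+1)}$ defined by

$$\bar I^{(k+1)} = \frac{\sum_{n=1}^N \big(I_n\circ g_n^{(k)}\big)\,|Dg_n^{(k)}|}{\sum_{n=1}^N |Dg_n^{(k)}|} \tag{42}$$

and

$$\alpha^{(k+1)} = \sum_{n=1}^N |Dg_n^{(k)}|, \tag{43}$$

where $g_n^{(k)}$ is the optimal diffeomorphic mapping from $J^{(k)}$ to $I_n$ and $|Dg_n^{(k)}|$ is the determinant of its Jacobian matrix.

(iii) Update the noise variance σ²:

$$\sigma^{2(k+1)} = \frac{1}{NS}\sum_{n=1}^N \big\|J^{(k)}\circ\big(g_n^{(k)}\big)^{-1} - I_n\big\|^2 \tag{44}$$

(iv) Find $\mu^{(k+1)}$ minimizing

$$\frac12(\mu, K_\pi\mu) + \frac{1}{2\sigma^{2(k+1)}}\int_\Omega \alpha^{(k+1)}(y)\,\big|J_0\circ g_\mu^{-1}(y) - \bar I^{(k+1)}(y)\big|^2\,dy \tag{45}$$

using the weighted Euler-Lagrange equation described previously.

(v) The newly estimated template is $J^{(k+1)} = J_0\circ g_{\mu^{(k+1)}}^{-1}$.

(vi) Stop if $J^{(k)}$ is stable or the number of iterations exceeds a prescribed maximum. Otherwise, repeat steps (i)–(v).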
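The steps of Algorithm 3.1 can be sketched as a driver loop in plain Python. The two registration routines are passed in as callables because their implementation (basic LDDMM for step (i), weighted LDDMM for step (iv)) does not fit in a short example. `register` and `weighted_match` are hypothetical interfaces, images are flattened lists of S voxel values, and the stopping test uses a mean squared voxel difference between successive templates, which is our assumption for "stable".

```python
def mean_sq_diff(a, b):
    """Mean squared voxel difference between two templates (assumed stopping measure)."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def estimate_template(J0, images, register, weighted_match, max_iter=10, tol=1e-6):
    """Driver loop following steps (i)-(vi) of Algorithm 3.1 (sketch).

    register(J, I) -> (I o g, |Dg|, ||J o g^{-1} - I||^2)        [hypothetical LDDMM wrapper]
    weighted_match(J0, I_bar, alpha, sigma2) -> J_0 o g_mu^{-1}   [hypothetical weighted LDDMM]
    Returns (template, sigma2, number of iterations performed).
    """
    N, S = len(images), len(J0)
    J, sigma2, iterations = list(J0), None, 0
    for k in range(max_iter):
        iterations = k + 1
        # (i) register the current template to every observation
        results = [register(J, I) for I in images]
        # (ii) Jacobian weight and Jacobian-weighted mean image
        alpha = [sum(jac[s] for _, jac, _ in results) for s in range(S)]
        I_bar = [sum(w[s] * jac[s] for w, jac, _ in results) / alpha[s] for s in range(S)]
        # (iii) noise variance from the residuals of the current template
        sigma2 = sum(res for _, _, res in results) / (N * S)
        # (iv)-(v) weighted matching from the hypertemplate gives the new template
        J_new = weighted_match(J0, I_bar, alpha, sigma2)
        # (vi) stop once successive templates agree
        converged = mean_sq_diff(J_new, J) < tol
        J = J_new
        if converged:
            break
    return J, sigma2, iterations
```

With an identity "registration" (no deformation, unit Jacobians) and a matcher that simply returns Ī, the loop reduces to a plain voxelwise mean and a residual variance. This is a convenient smoke test before plugging in real LDDMM routines.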

4 Results and Discussions

Here we present numerical results of template estimation for 3D hippocampus data and 3D cardiac data. All data are binarized segmented images with grayscale 0–255 (the images are not strictly binary because of smoothing and interpolation). For these experiments, we have used Kπ = λK with a suitable value of λ.

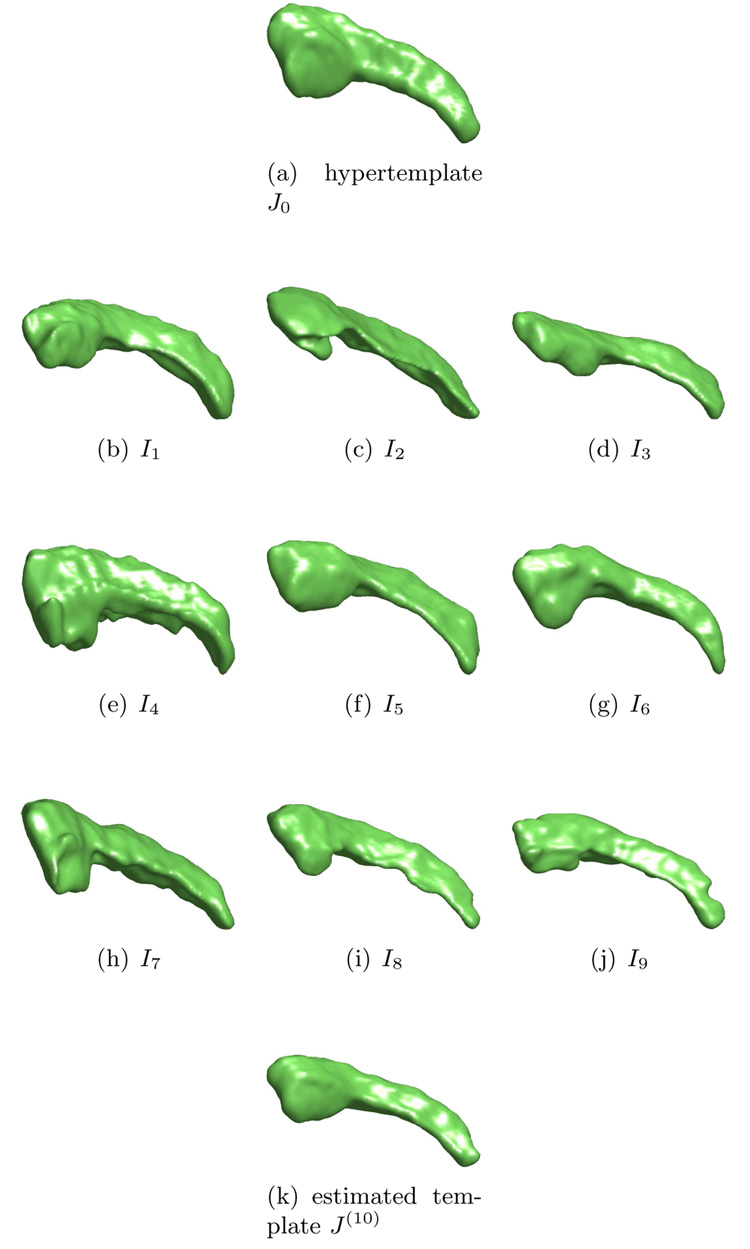

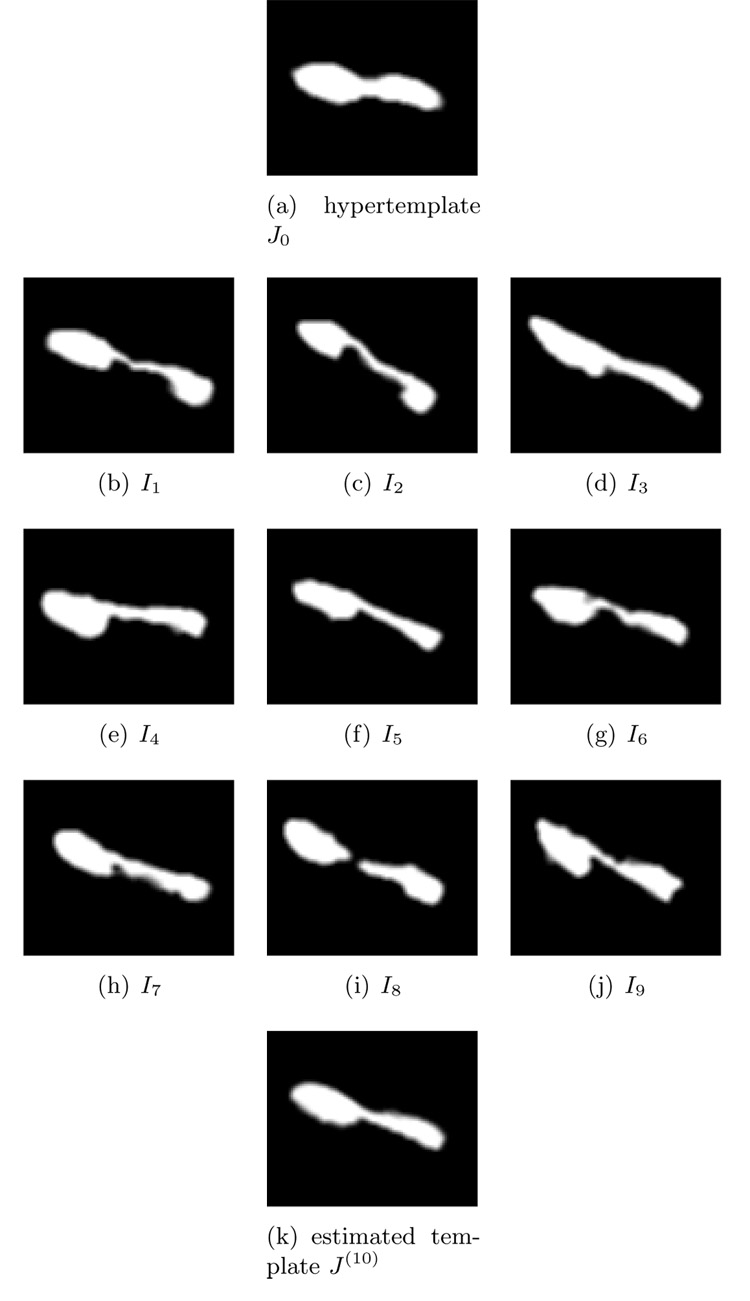

Figure 2 shows an example of template estimation on 3D hippocampus data. Panel (a) is the hypertemplate and panels (b)–(j) are observations. Panel (k) is the estimated template with λ = 0.01.

Figure 2.

Estimating the template from 3D hippocampus data. Panel (a) is the hypertemplate. Panel (b)–(j) are observations In, n = 1, 2, …, 9. Panel (k) is the estimated template after 10 iterations. Data courtesy of Biomedical Informatics Research Network.

We present sections of the 3D data in Figure 3 to show more clearly that the estimated template adapts to the shapes of the observations.

Figure 3.

Section view of 3D hippocampus data. Panel (a) is the hypertemplate. Panel (b)–(j) are observations In, n = 1, 2, …, 9. Panel (k) is the estimated template after 10 iterations. Data courtesy of Biomedical Informatics Research Network.

We define the deformation metric ρℐ(J, In) as the square root of the deformation energy, $\rho_\mathcal{I}(J, I_n) = \big(\int_0^1 \|\hat\upsilon_t\|_V^2\,dt\big)^{1/2}$, for the optimal velocity $\hat\upsilon$ provided by the LDDMM algorithm between J and In. To show that the estimated template is a considerable improvement over the hypertemplate, we list the metrics ρℐ(J0, In) and ρℐ(J(10), In) in Table 1, computed with the same parameters. The metric decreases for every observation, showing a substantial reduction from the original hypertemplate to the template estimated after 10 iterations.

Table 1.

The metric between observations and J0, J(10)

| ρℐ(·, ·) | J0 | J(10) |
|---|---|---|
| I1 | 5.2995 | 4.2773 |
| I2 | 7.6836 | 4.1446 |
| I3 | 4.7706 | 3.7492 |
| I4 | 4.9767 | 2.4993 |
| I5 | 4.3480 | 3.3836 |
| I6 | 4.2751 | 2.6810 |
| I7 | 5.3083 | 3.2349 |
| I8 | 5.1540 | 3.4601 |
| I9 | 5.8151 | 3.6120 |
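The relative reductions in Table 1 can be recomputed directly from the tabulated values. This short Python snippet only rearranges the numbers above and introduces no new data.

```python
# Deformation metrics from Table 1, for observations I1..I9.
rho_J0 = [5.2995, 7.6836, 4.7706, 4.9767, 4.3480, 4.2751, 5.3083, 5.1540, 5.8151]
rho_J10 = [4.2773, 4.1446, 3.7492, 2.4993, 3.3836, 2.6810, 3.2349, 3.4601, 3.6120]

# Percentage reduction of the metric for each observation.
reductions = [100.0 * (a - b) / a for a, b in zip(rho_J0, rho_J10)]
mean_J0 = sum(rho_J0) / len(rho_J0)
mean_J10 = sum(rho_J10) / len(rho_J10)

for n, r in enumerate(reductions, start=1):
    print(f"I{n}: {r:.1f}% reduction")
print(f"mean metric: {mean_J0:.4f} (J0) -> {mean_J10:.4f} (J(10))")
```

Every reduction is positive. They range from roughly 19% (I1) to roughly 50% (I4), and the mean metric drops from about 5.29 to about 3.45.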

To assess the convergence of the results, we investigate the differences between the estimated templates in successive iterations

$$d^{(k)} = \frac{1}{S}\sum_{s=1}^S \big(J^{(k+1)}(x_s) - J^{(k)}(x_s)\big)^2, \tag{46}$$

where S is the number of voxels and k = 0, 1, …, 9. The results are shown in Table 2. After the first few iterations, the differences between J(k+1) and J(k) decrease rapidly to a small value (approximately 0) within 10 iterations, which indicates that the estimates converge to a stable shape. Table 2 also shows the estimated noise levels, which converge as well.

Table 2.

Differences between the estimated templates in successive iterations and estimated noise variances.

| iteration k | difference (46) between J(k+1) and J(k) | σ(k) |
|---|---|---|
| 0 | 29.7629 | 1.0000 |
| 1 | 228.3461 | 8.5799 |
| 2 | 232.6406 | 22.1642 |
| 3 | 13.7406 | 27.1063 |
| 4 | 0.3902 | 24.5795 |
| 5 | 0.1638 | 23.8118 |
| 6 | 0.0776 | 23.6478 |
| 7 | 0.0036 | 23.5560 |
| 8 | 0.0014 | 23.5726 |
| 9 | 0.0003 | 23.5772 |

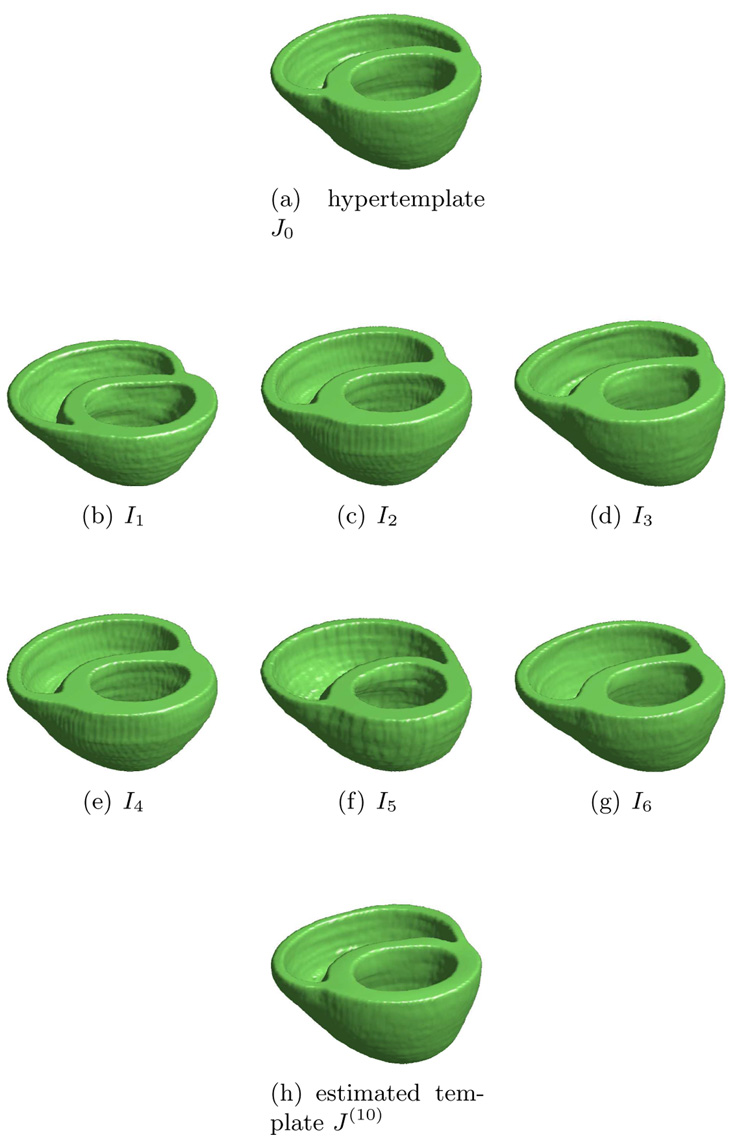

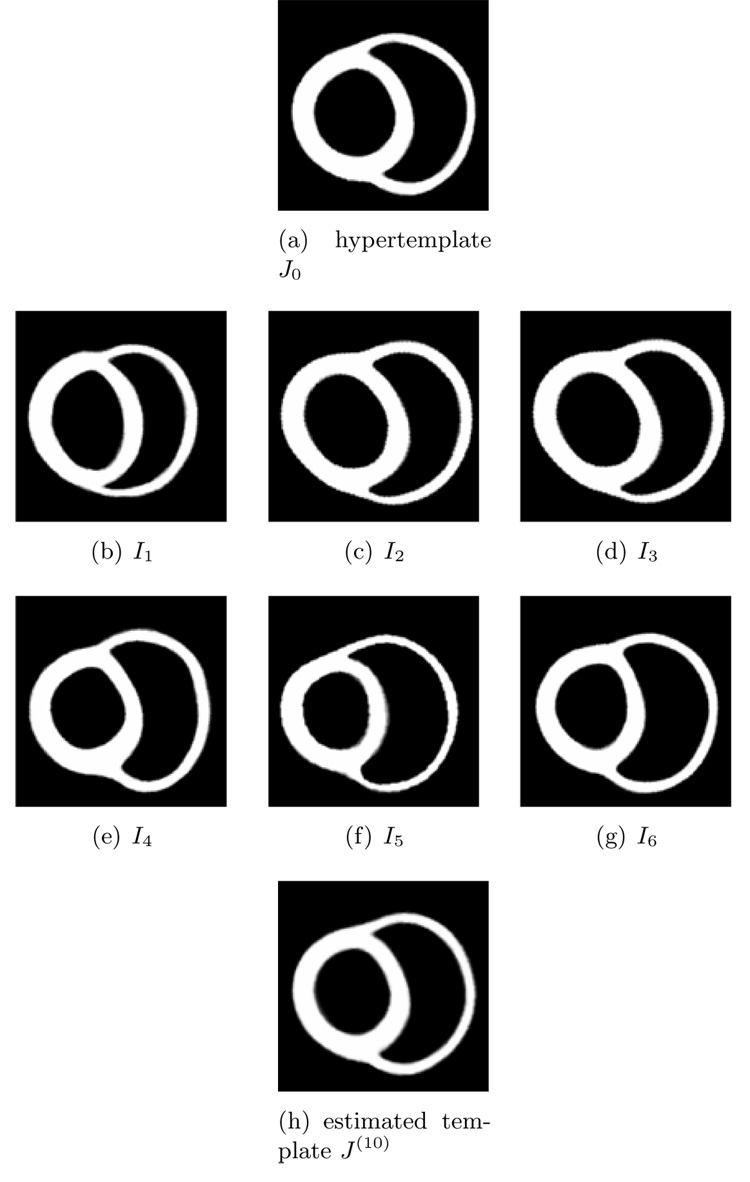

Finally, we present the result of 3D heart template estimation. Panel (a) of Figure 4 is the hypertemplate, panels (b)–(g) are observations In, n = 1, 2, …, 6, and panel (h) is the estimated template with λ = 0.0001 after 10 iterations. Figure 5 shows the section view.

Figure 4.

Estimating the template from 3D heart data. Panel (a) is the hypertemplate. Panel (b)–(g) are observations In, n = 1, 2, …, 6. Panel (h) is the estimated template after 10 iterations. Data courtesy of Dr. Patrick Helm, previously of Dept. of Biomedical Engineering, Johns Hopkins University.

Figure 5.

Section view of 3D heart data. Panel (a) is the hypertemplate. Panel (b)–(g) are observations In, n = 1, 2, …, 6. Panel (h) is the estimated template after 10 iterations. Data courtesy of Dr. Patrick Helm, previously of Dept. of Biomedical Engineering, Johns Hopkins University.

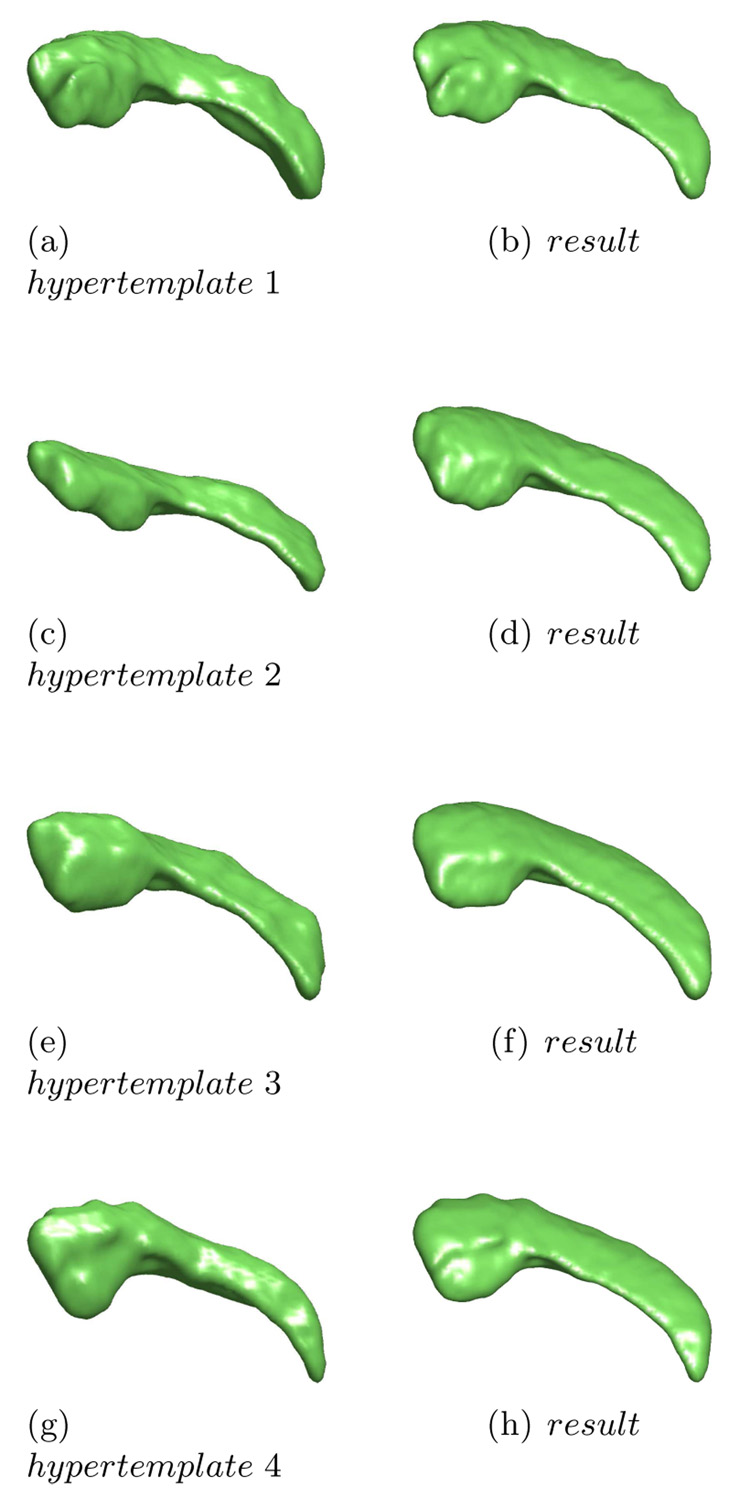

In our model, the hypertemplate is considered as an “ideal” continuous image with fine structure, which can be provided by an atlas obtained from other studies, although here we simply choose a representative image from the population. As Figure 6 shows, different hypertemplates yield similar results, with only minor differences.

Figure 6.

For the same observed population, we choose different images as hypertemplate, λ = 0.01. The results only have minor differences.

The parameter λ controls how strongly the estimated template depends on the hypertemplate. It has been fixed by hand, but our experiments show that it can vary over a large range without noticeable difference in the final result: we took values between 0.0001 and 1 and obtained stable results. For small values of λ, the prior reduces to an almost uniform distribution over the orbit of the hypertemplate.

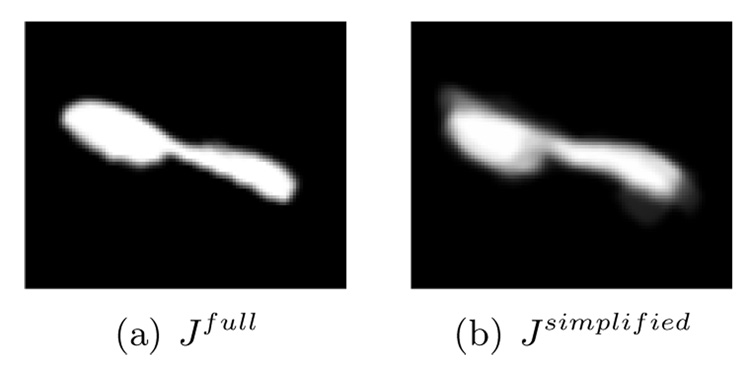

Remark

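In the above model, we assume that the initial momentum μ follows a prior distribution pπ(μ) and estimate the template given the observations. We call this the “full model”. We can simplify this model by neglecting the prior and directly estimating the template J by maximum likelihood. The MAEM algorithm in this setting leads us to iterate the procedure

$$J^{(k+1)}(y) = \frac{\sum_{n=1}^N I_n\circ g_n^{(k)}(y)\,|Dg_n^{(k)}(y)|}{\sum_{n=1}^N |Dg_n^{(k)}(y)|}, \tag{47}$$

where $g_n^{(k)}$ is the diffeomorphism obtained by LDDMM from $J^{(k)}$ to $I_n$ and $|Dg_n^{(k)}|$ is its Jacobian determinant.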

The resulting algorithm is similar to [15]. The difference is that the model in [15] warps the observations to match the template and places the white noise between the deformed target and the template, which does not induce a Jacobian in the averaging process. However, for a generative model, the logical progression is from template to target. From this point of view, [15] implies an observation noise that is proportional to the inverse Jacobian of the deformation, which is hard to justify.

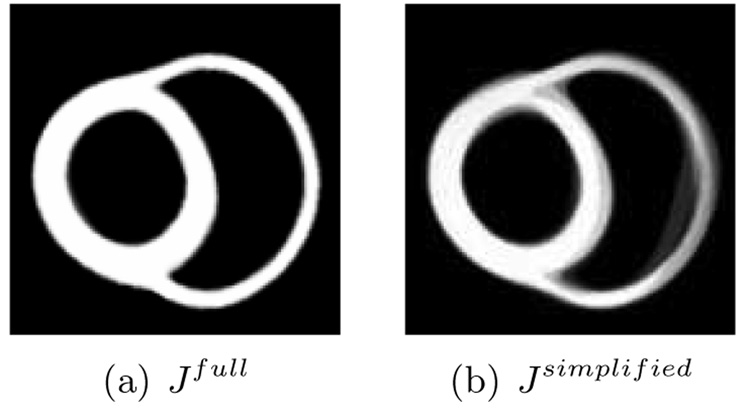

Figure 7 and Figure 8 compare the full model and the simplified model. The simplified model performed relatively poorly compared with the full model that used the prior. This discrepancy comes from the fact that we simultaneously estimate the template and the noise variance. This estimated value of the noise variance allows for some difference between the deformed template and the targets, yielding fuzzy boundaries in the simplified model. If we set the noise variance to a small number, we may obtain sharper boundaries using the simplified model, but this would estimate a template essentially as a metric average (a Fréchet mean) of the targets and would not be consistent with our generative model.

Figure 7.

The template estimation results of full model and simplified model for hippocampus data. Data courtesy of Biomedical Informatics Research Network.

Figure 8.

The template estimation results of full model and simplified model for cardiac data. Data courtesy of Dr. Patrick Helm, previously of Dept. of Biomedical Engineering, Johns Hopkins University.

5 Conclusion

In conclusion, we have presented a Bayesian model for template estimation in CA. By the momentum conservation law, the space of initial momenta is a linear space where statistical analysis can be applied. It is assumed that the observed images I1, I2, …, IN are generated by shooting the template J through Gaussian-distributed random initial momenta θ1, θ2, …, θN. The template J is modeled as a deformation of a given hypertemplate J0 with initial momentum μ, which has a Gaussian prior. This allows us to apply a generalized EM algorithm (MAEM) to compute the Bayesian estimate of the initial momentum μ, in which the conditional expectation of the EM is approximated by a Dirac measure at the mode, so that one can take advantage of the LDDMM algorithm. The MAEM procedure finally leads to an image matching problem from J0 to Ī with Jacobian weight α in the energy term, which is solved via the weighted Euler-Lagrange equation. We applied this method to template estimation for hippocampus and cardiac images. We showed that the estimated template is “closer” to the observations than the hypertemplate, and that the differences between the estimated templates in successive iterations decrease to almost 0, which indicates convergence of the algorithm. We also showed that the results are stable with respect to the choice of hypertemplate.

Acknowledgements

This work is partially supported by NSF DMS-0456253, NIH R01-EB000975, NIH P41-RR15241, NIH R01-MH064838, NIH 5 U24 RR021382-04, NIH 2 P01 AG003991-24, NIH 1 P02 AG02627601, NIH 5 P50 MH071616-04.

Footnotes

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

Contributor Information

Jun Ma, Email: junma@cis.jhu.edu, Center for Imaging Science & Department of Biomedical Engineering, The Johns Hopkins University, 320 Clark Hall, Baltimore, MD 21218, USA.

Michael I. Miller, Email: mim@cis.jhu.edu, Center for Imaging Science & Department of Biomedical Engineering, The Johns Hopkins University, 301 Clark Hall, Baltimore, MD 21218, USA.

Alain Trouvé, Email: trouve@cmla.ens-cachan.fr, CMLA, Ecole Normale Supérieure de Cachan, 61 Avenue du President Wilson, F-94 235 Cachan CEDEX, France.

Laurent Younes, Email: laurent.younes@jhu.edu, Center for Imaging Science & Department of Applied Math and Statistics, The Johns Hopkins University, 3245 Clark Hall, Baltimore, MD 21218, USA.

References

1. Allassonnière S, Amit Y, Trouvé A. Toward a coherent statistical framework for dense deformable template estimation. Journal of the Royal Statistical Society Series B. 2007;69(1):3–29.

2. Arnold VI. Sur un principe variationnel pour les écoulements stationnaires des liquides parfaits et ses applications aux problèmes de stabilité non linéaires. Journal de Mécanique. 1966;5:29–43.

3. Arnold VI. Mathematical Methods of Classical Mechanics. Springer; 1978. Second edition: 1989.

4. Avants BB, Gee JC. Geodesic estimation for large deformation anatomical shape averaging and interpolation. NeuroImage. 2004;23 Supplement 1:S139–S150. doi: 10.1016/j.neuroimage.2004.07.010.

5. Avants BB, Gee JC. Symmetric geodesic shape averaging and shape interpolation. ECCV Workshops CVAMIA and MMBIA. 2004:99–110.

6. Beg MF, Khan A. Computation of average atlas using LDDMM and geodesic shooting. IEEE International Symposium on Biomedical Imaging; 2006.

7. Beg MF, Miller MI, Trouvé A, Younes L. Computing large deformation metric mappings via geodesic flows of diffeomorphisms. International Journal of Computer Vision. 2005;61(2):139–157.

8. Dupuis P, Grenander U, Miller MI. Variational problems on flows of diffeomorphisms for image matching. Quarterly of Applied Mathematics. 1998;56:587–600.

9. Fletcher PT, Joshi S, Lu C, Pizer SM. Principal geodesic analysis for the study of nonlinear statistics of shape. IEEE Transactions on Medical Imaging. 2004;23(8):995–1005. doi: 10.1109/TMI.2004.831793.

10. Glasbey CA, Mardia KV. A penalized likelihood approach to image warping. Journal of the Royal Statistical Society Series B. 2001;63(3):465–492.

11. Grenander U. General Pattern Theory. Oxford Univ. Press; 1994.

12. Guimond A, Meunier J, Thirion JP. Average brain models: A convergence study. Computer Vision and Image Understanding. 2000;77(2):192–210.

13. Helm PA, Younes L, Beg MF, Ennis DB, Leclercq C, Faris OP, McVeigh E, Kass D, Miller MI, Winslow RL. Evidence of structural remodeling in the dyssynchronous failing heart. Circulation Research. 2006;98:125–132. doi: 10.1161/01.RES.0000199396.30688.eb.

14. Holm DD, Marsden JE, Ratiu TS. The Euler–Poincaré equations and semidirect products with applications to continuum theories. Advances in Mathematics. 1998;137:1–81.

15. Joshi S, Davis B, Jomier M, Gerig G. Unbiased diffeomorphic atlas construction for computational anatomy. NeuroImage; Supplement issue on Mathematics in Brain Imaging. 2004;23 Supplement 1:S151–S160. doi: 10.1016/j.neuroimage.2004.07.068.

16. Le H, Kume A. The Fréchet mean shape and the shape of means. Advances in Applied Probability. 2000;32:101–113.

17. Marsden JE, Ratiu TS. Introduction to Mechanics and Symmetry. Springer; 1999.

18. Miller MI, Trouvé A, Younes L. On the metrics, Euler equations and normal geodesic image motions of computational anatomy. Proceedings of the 2003 International Conference on Image Processing, IEEE; 2003. pp. 635–638.

19. Miller MI, Trouvé A, Younes L. Geodesic shooting for computational anatomy. Journal of Mathematical Imaging and Vision. 2006 January;24(2):209–222. doi: 10.1007/s10851-005-3624-0.

20. Mio W, Srivastava A, Joshi S. On shape of plane elastic curves. International Journal of Computer Vision. 2007;73(3):307–324.

21. Pennec X. Intrinsic statistics on Riemannian manifolds: Basic tools for geometric measurements. Journal of Mathematical Imaging and Vision. 2006;25(1):127–154.

22. Trouvé A. An infinite dimensional group approach for physics based model. Technical report. 1995. Unpublished; electronically available at http://www.cis.jhu.edu.

23. Vaillant M, Miller MI, Younes L, Trouvé A. Statistics on diffeomorphisms via tangent space representations. NeuroImage. 2004;23:S161–S169. doi: 10.1016/j.neuroimage.2004.07.023.