Abstract

To explore the effects of acoustic and behavioral context on neuronal responses in the core of auditory cortex (fields A1 and R), two monkeys were trained on a go/no-go discrimination task in which they learned to respond selectively to a four-note target (S+) melody and withhold response to a variety of other nontarget (S−) sounds. We analyzed evoked activity from 683 units in A1/R of the trained monkeys during task performance and from 125 units in A1/R of two naive monkeys. We characterized two broad classes of neural activity that were modulated by task performance. Class I consisted of tone-sequence–sensitive enhancement and suppression responses. Enhanced or suppressed responses to specific tonal components of the S+ melody were frequently observed in trained monkeys, but enhanced responses were rarely seen in naive monkeys. Both facilitatory and suppressive responses in the trained monkeys showed a temporal pattern different from that observed in naive monkeys. Class II consisted of nonacoustic activity, characterized by a task-related component that correlated with bar release, the behavioral response leading to reward. We observed a significantly higher percentage of both Class I and Class II neurons in field R than in A1. Class I responses may help encode a long-term representation of the behaviorally salient target melody. Class II activity may reflect a variety of nonacoustic influences, such as attention, reward expectancy, somatosensory inputs, and/or motor set and may help link auditory perception and behavioral response. Both types of neuronal activity are likely to contribute to the performance of the auditory task.

INTRODUCTION

A key question in auditory physiology is how complex sounds are encoded and how this sensory representation is linked to behavior. In the present study, we explored the neural representation of a target melody (four-note sequence) in core areas of auditory cortex of monkeys engaged in a simple auditory-discrimination task. Although higher-order representations of the complete target melody, or auditory object, may occur in auditory association cortex, we conjectured that such higher-order representations were likely to be based on simpler building blocks in the auditory core that encode component note frequencies and order (Kilgard and Merzenich 2002). Thus our experimental goals were to measure the response pattern to a highly learned target tone sequence in order to test whether neuronal responses to the target melody at the level of auditory core cortex in an awake, behaving animal were modulated by behavioral context, and to resolve whether the core neural representation of the melody could be parsimoniously explained as the linear sum of dyadic tone interactions between adjacent notes in the melodic sequence.

From earlier behavioral lesion studies, there is evidence that the auditory core plays an important role in the representation and discrimination of complex spectrotemporal patterns such as melody. Bilateral ablation of auditory cortex in cats impairs melody (tone pattern) discrimination (Diamond and Neff 1957; Dewson et al. 1970; Elliott and Trahiotis 1972; Neff et al. 1975; Tramo et al. 2002). Moreover, damage to the auditory cortex in humans is associated with impairments of discrimination of tone sequences (Pinheiro 1976), the order of tonal stimuli (Carmon and Nachshon 1971; Efron 1963; Swisher and Hirsh 1972), tone intervals and temporal rhythms (Liegeois-Chauvel et al. 1998), frequency change or direction discrimination (Tramo et al. 2002), and melodic contour (Peretz 1990). Imaging studies indicate that melody processing activates primary auditory cortex (A1) as well as secondary auditory association areas of the superior temporal gyrus and planum polare (Patterson et al. 2002).

In parallel, neurophysiological studies of the activity of the core or A1 in the behaving animal have been the focus of considerable current interest in the exploration of ongoing dynamic behavioral modulation of cortical responses and in the functional contribution of these changes to acoustic signal processing and behavior. In recent years, there has been considerable advancement in the understanding of neuronal response properties in A1 (reviewed in Eggermont and Ponton 2002; Ehret 1997; Nelken 2004; Read et al. 2002; Schreiner et al. 2000; Semple and Scott 2003). Earlier studies demonstrated that the activity and responses of some units in the core of auditory cortex depend on the attentional or behavioral state of the animal (Beaton et al. 1975; Benson and Hienz 1978; Benson et al. 1981; Fritz et al. 2003, 2005a,b, 2007a,b; Hocherman et al. 1976, 1981; Hubel et al. 1959; Miller et al. 1972; Pfingst et al. 1977; Vaadia et al. 1982) or on whether acoustic stimuli are externally presented or self-generated vocalizations (Eliades and Wang 2003, 2005, 2008). Previous work has also shown that A1 responses can be modulated by the history of previously presented sounds (Bartlett and Wang 2005; Brosch et al. 1997, 1998, 1999, 2000; Fishman et al. 2001, 2004; McKenna et al. 1998; Micheyl et al. 2005; O'Neill 1995; Ulanovsky et al. 2003, 2004; Wehr and Zador 2005; Werner-Reiss et al. 2006; Zhang et al. 2005), suggesting that sensory context plays an influential role in shaping cortical responses, even in the anesthetized animal. Other studies have shown that A1 responses can be strongly modulated by auditory short-term memory (Gottlieb et al. 1989; Sakurai 1994) and influenced by long-term training on discrimination tasks (Bao et al. 2004; Beitel et al. 2003; Blake et al. 2002; Brosch et al. 2005; Recanzone et al. 1993).
Behavioral training can lead to persistent memory traces and long-term changes in cortical organization (Keuroghlian and Knudsen 2007; Suga and Ma 2003; Weinberger 2004). Many, if not all, of these features of auditory core processing are likely to contribute to tone sequence and melody encoding.

We report results from recordings in two areas of the primary auditory cortex (fields A1 and R) of monkeys performing a go/no-go auditory-discrimination task. We analyzed the activity of neurons whose responses to a behaviorally salient tonal target (S+) sequence could not be predicted by their receptive field properties (as measured in response to single pure-tone transients). We reasoned that such task-dependent responses were likely to contribute to the cortical representation of salient auditory objects. We characterized two classes of neurons in the trained monkeys that showed nonlinear response patterns to the target (S+) melody.

Class I: The first class comprised sequence-selective neurons that showed short-latency highly facilitated responses or long-latency suppressive responses to tonal components of the four-tone target sequence. The short-latency facilitation was rarely observed in two naive monkeys in response to the target (S+) melody, suggesting that the short-latency facilitation observed in the two trained monkeys was indeed behavioral in origin. Similarly, the extent of suppression as well as the temporal pattern of suppressive responses in the trained monkeys differed from those observed in task-naive monkeys.

Class II: A second class of neurons was characterized by “anticipatory” activity that was highly correlated with reward and/or with task-related bar release, a behavioral response that could lead to reward.

These results suggest that the activity of auditory core neurons not only reflects past as well as present acoustic inputs but may also reflect a variety of nonacoustic influences, such as heteromodal sensory inputs, attention, reward, expectation, or motor set.

METHODS

Four adult male rhesus monkeys (Macaca mulatta), weighing 8–10 kg, were used. Experiments were performed in a double-walled sound-attenuating booth (IAC). All experimental procedures followed National Institutes of Health (NIH) guidelines. The protocol for experimental studies on the two trained monkeys (conducted at NIH) was approved by the National Institute of Mental Health animal care and use committee. The protocol for experimental procedures on the two task-naive monkeys (conducted at the University of California at Davis) was approved by the University of California, Davis animal care and use committee.

Behavioral task

All four monkeys were conditioned and habituated to being restrained in an acoustically transparent primate chair and head-fixed for physiological recordings. Two monkeys were trained to perform a go/no-go auditory-discrimination task.

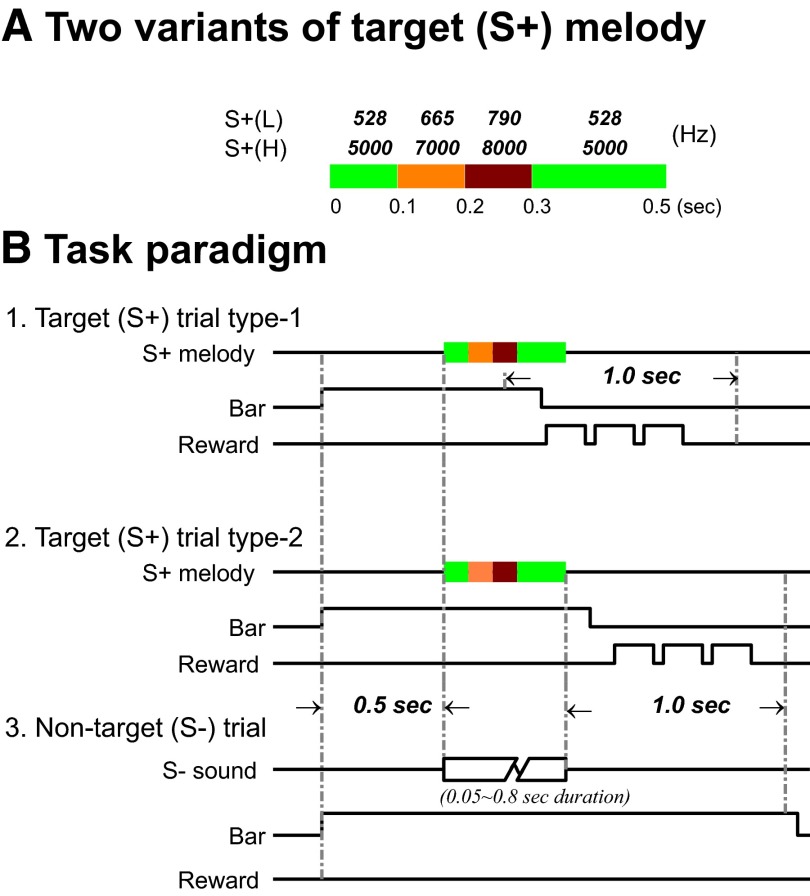

Monkeys learned to grasp a touch bar mounted on the chair for 500 ms to initiate a trial. For target (S+) trials, the monkeys were required to release the bar within a 1.0-s behavioral response window to obtain a fluid reward (typically three drops of juice or water presented successively over a period of 600 ms). For nontarget (S−) trials, the monkeys were required to withhold release for ≥1.0 s after stimulus offset to avoid a 3.0-s timeout (Fig. 1B). Two slightly different behavioral response windows were used. For monkey B1, the 1.0-s response window began 250 ms after stimulus onset (S+ trial type 1 in Fig. 1B), whereas for monkey B2 it began from stimulus offset (S+ trial type 2 in Fig. 1B). For both monkeys, the ratio of S+ stimulus presentations to S− stimulus presentations was 1:5, in random order.
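The response-window rules above can be summarized in a short sketch. This is an illustration only, not the behavioral control software used in the study: the function name `score_trial`, the outcome labels, and the treatment of early releases on S+ trials as errors are assumptions.

```python
def score_trial(is_target, release_s, window_start_s, stim_dur_s, window_s=1.0):
    """Score one go/no-go trial.

    release_s      : bar-release time in seconds re: sound onset, or None if the
                     bar was held throughout the trial.
    window_start_s : start of the S+ response window re: sound onset
                     (0.25 s for monkey B1; stimulus offset, 0.5 s, for monkey B2).
    stim_dur_s     : stimulus duration in seconds.
    """
    if is_target:
        # S+ trial: release must fall inside the 1.0-s response window.
        # Assumption: releases before the window count as errors.
        in_window = (release_s is not None
                     and window_start_s <= release_s <= window_start_s + window_s)
        return "hit" if in_window else "error"
    # S- trial: the bar must be held for at least 1.0 s after stimulus offset.
    released_early = release_s is not None and release_s < stim_dur_s + window_s
    return "false_alarm" if released_early else "correct_rejection"
```

For example, a release 320 ms after S+ onset would be scored as a hit under the B1 window (`window_start_s=0.25`) but as an error under the B2 window (`window_start_s=0.5`).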

FIG. 1.

Task schematic. The monkeys were trained to discriminate an S+ target melody (fixed duration of 500 ms) from a large set of acoustic stimuli, the nontarget (S−) sounds. The final nontarget (S−) stimulus set included single pure-tone transients, white and band-passed noise, frequency-modulated (FM) sweeps, and monkey vocalizations (with variable duration ranging from 50 to 800 ms). One of the monkeys (B1) learned only the low-frequency version of the S+ target melody and one monkey (B2) also learned a high-frequency version. An S+ target melody consisted of 4 sequential tones (1–2–3–1), illustrated as a color-coded bar (A). The frequencies of the component tones of the two S+ target melodies are given in A. Each of the first 3 tones had a duration of 100 ms, whereas the duration of the last tone was 200 ms. A trial was initiated when the monkey held the touch bar for 0.5 s. A sound [either a target sound (S+) or nontarget sound (S−)] was then presented. In one task variant, one monkey (B1) was trained to release the touch bar for the S+ target melody within a 1.0-s response time window that began 250 ms after sound onset [B1: target sound (S+), trial type 1]. The second monkey (B2) learned another variant of the task, in which it was required to hold the touch bar throughout the target melody, and to release the touch bar immediately after offset of the target melody [B2: target sound (S+), trial type 2]. In nontarget sound (S−) trials, both monkeys were required to continue holding the touch bar for 1.0 s (B3). The monkey was rewarded for correct bar release on target sound (S+) trials with juice or water. Typically, the reward consisted of 3 drops, delivered one by one over a period of 600 ms. The intertrial interval for correct nontarget sound (S−) and target sound (S+) trials was about 1.5 s. The monkey received a 3.0-s timeout penalty for incorrect responses to target sound (S+) or nontarget sound (S−) trials (see text for details).

Behavioral training

Behavioral training to the performance criterion (>80% correct for both S+ and S− trials for three behavioral sessions) required about 6 mo for monkeys B1 and B2. The set of S− stimuli was gradually enlarged over this period to an acoustically varied set of 21 sounds (see the next section). Neither naive monkey (N1 or N2) was trained on the go/no-go task described earlier, but one monkey (N2) had received previous training on an unrelated acoustic task (discriminating sinusoidal amplitude modulation [AM]).

Acoustic stimuli: S+ and S− sounds

S+ sounds included only two variants, a high- and a low-frequency version of a four-note melody. Each of the two S+ melodies was a sequence of four single pure-tone notes with the same frequency for the first and last notes. Tone duration was 100 ms for notes 1–3 and 200 ms for note 4, for a total S+ duration of 500 ms (Fig. 1A). Although the onset and offset of the S+ melody were ramped by 5-ms cosine rise/fall times (as were all S− stimuli as well), there was no gap or ramp between the individual four single pure-tone notes that comprised the melody. To reduce transients, each note used the final phase of the preceding note as its initial phase, to provide a smooth transition. The S+(L) melody, used with both trained monkeys, was composed of the four low frequencies of 528, 665, 790, and 528 Hz, in that order. Monkey B2 also received training with a second melody S+(H), composed of four high frequencies of 5, 7, 8, and 5 kHz, in that order (Fig. 1A).
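The gapless, phase-continuous construction of the melody can be sketched as follows. This is a minimal illustration, not the stimulus-generation code used in the study: the sampling rate (44.1 kHz), the unit amplitude, and the function name `synthesize_melody` are assumptions.

```python
import math

def synthesize_melody(freqs_hz, durs_s, fs=44100, ramp_s=0.005):
    """Return samples of a tone sequence with no gaps between notes.

    A single running phase is carried across note boundaries, so each note
    starts at the final phase of the preceding note (no click at transitions).
    """
    samples = []
    phase = 0.0  # running phase in radians
    for f, d in zip(freqs_hz, durs_s):
        n = int(round(d * fs))
        step = 2.0 * math.pi * f / fs  # phase increment per sample for this note
        for _ in range(n):
            samples.append(math.sin(phase))
            phase += step
    # Raised-cosine ramps applied only at the onset and offset of the whole melody.
    n_ramp = int(round(ramp_s * fs))
    total = len(samples)
    for i in range(n_ramp):
        gain = 0.5 * (1.0 - math.cos(math.pi * i / n_ramp))
        samples[i] *= gain
        samples[total - 1 - i] *= gain
    return samples

# S+(L): 528, 665, 790, 528 Hz; notes 1-3 last 100 ms, note 4 lasts 200 ms.
melody_low = synthesize_melody([528, 665, 790, 528], [0.1, 0.1, 0.1, 0.2])
```

Because the phase is accumulated rather than reset, the largest sample-to-sample change at a note boundary is no larger than within a note, which is the property the smooth-transition rule is meant to guarantee.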

Physiological recording began when the S− stimulus set comprised 21 sounds, which included 10 rhesus monkey calls, 10 frequency-modulated (FM) sweeps (varied in sweep rates and directions), and white noise (500 ms). S− stimuli had durations from 50 to 800 ms. Eventually, in the final version of the task, the class of S− sounds included over 120 diverse stimuli divided into five sound sets: single pure tones, band-passed noise, AM or FM tones, and monkey calls. Since one of the key questions addressed in this study was whether auditory cortical responses to well-learned tone sequences (i.e., the S+ melodies) could be predicted from the linear summation of responses to single pure-tone transients, the main set of S− sounds included 24 pure-tone transients, with evenly spaced (1/3 octave) frequencies from 100 to 20,300 Hz in pseudorandom order. This set included tones with matched frequencies near those of individual tones within the S+ four-note melody. In this report, we describe neuronal responses to the pure-tone transient (S−) stimuli and to the melody (S+).
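As a quick check of the tone set described above, 24 tones spaced at 1/3 octave starting from 100 Hz end near 20.3 kHz. The sketch below assumes exact 1/3-octave steps from exactly 100 Hz and measures "nearest" on a log-frequency axis; the helper name `nearest_tone` is hypothetical.

```python
import math

# 24 pure-tone frequencies at 1/3-octave spacing, starting at 100 Hz (assumed exact).
tone_freqs = [100.0 * 2 ** (k / 3) for k in range(24)]

def nearest_tone(target_hz, freqs=tone_freqs):
    """Return the S- tone frequency closest to target_hz in log-frequency."""
    return min(freqs, key=lambda f: abs(math.log2(f / target_hz)))

# Closest S- tones to the component notes of the two S+ melodies.
matches = {note: nearest_tone(note) for note in (528, 665, 790, 5000, 7000, 8000)}
```

Under these assumptions the highest tone falls at roughly 20.3 kHz, consistent with the stated range, and each S+ note has an S− tone within a fraction of a 1/3-octave step.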

In addition to receiving the two S+ sequences presented to the trained monkeys, the naive monkeys were also presented with other three-tone (four-note) control sequences (C-sequences) that were customized for each recording site based on the best frequency (BF) of the multineuron recording at that site (see Supplemental Material for further details). These additional, customized (target-like) stimuli were needed to elicit a sufficient number of facilitatory and suppressive physiological responses in the naive animals to allow comparison with the pattern of responses in the trained animals (see the section entitled “Comparison of enhancement and suppression patterns to four-note sequences (S+ melodies and C-sequences) in trained and naive monkeys” in RESULTS). For the naive monkeys, all of the sounds were devoid of behavioral meaning or context, and random juice and water rewards were administered occasionally between passive sound presentation trials to keep the naive animals alert.

Presentation of acoustic stimuli

For all training and recording sessions, sounds were delivered free field via a single loudspeaker (trained monkeys: AP-7, Digital Phase; naive monkeys: Radio Shack PA-110) located in front of the monkey (trained monkeys: 0.75 m; naive monkeys: 1.5 m). Digitally synthesized sounds (16-bit output resolution) were calibrated and/or equalized, attenuated, and amplified so that the frequency output was relatively constant over the frequency range of 0.1–16.0 kHz (varying <5 dB). The two sound delivery setups were well matched in sound delivery (see Supplemental Material for further details). All experimental sessions were conducted in double-walled, sound-attenuating booths.

Surgical procedures and single-unit recording

After reaching the behavioral criterion, the trained monkeys were prepared for physiological studies. A titanium head-restraint post and a CILUX recording chamber (ICO-J2A, Crist Instruments) were implanted under aseptic conditions while the animals were deeply anesthetized with 2% isoflurane. Using the coordinates derived from MRI images, the recording chamber was centered on the dorsal (monkey B2) or lateral (monkey B1) surface of the skull, allowing access to the superior surface of the superior temporal gyrus.

Neurophysiological recordings were made using customized tungsten or glass-coated Pt/Ir microelectrodes (1.5–3.0 MΩ, FHC). Neuronal activity was filtered and amplified (TDT Bioamp system or A-M Systems Model 1800) and digitally acquired (POWER-1401, CED). Single-unit activity was isolated on-line and then confirmed off-line by spike-sorting software (SPSS or Spike2) that uses a template-matching algorithm. The primary auditory cortex was mapped in each monkey's left hemisphere. Successive penetrations were separated by ≥0.5 or 1.0 mm in the mediolateral or rostrocaudal plane and recording sites in a given penetration were separated by ≥200 μm in depth. The electrode was advanced slowly and the depth of first auditory-responsive spikes was noted. We recorded from core auditory cortex at depths ranging from 100 to 2,000 μm below the first recorded auditory spike and thus we are likely to have recorded from most cortical layers (except possibly layer I and layer VI; see Supplemental Fig. S1).

The physiological data from the two naive monkeys were gathered using similar recording techniques and with data-acquisition software developed at the University of California, Davis (O'Connor et al. 2005; Petkov et al. 2007).

Data analysis for Class I cells

Frequency-response areas (FRAs) were assessed using pure tones presented as S− stimuli. For trained monkeys, FRAs derived with S− tones during task performance were similar to those derived while not performing a task. The best frequency (BF) for the cell was taken to be either: 1) the frequency that elicited the largest response at the lowest intensity level tested or 2) the characteristic frequency (CF), if BF measurements were made at threshold intensity. To construct tonotopic maps, we used the median BF values of all units recorded from the same penetration to represent the BF for each cortical location.

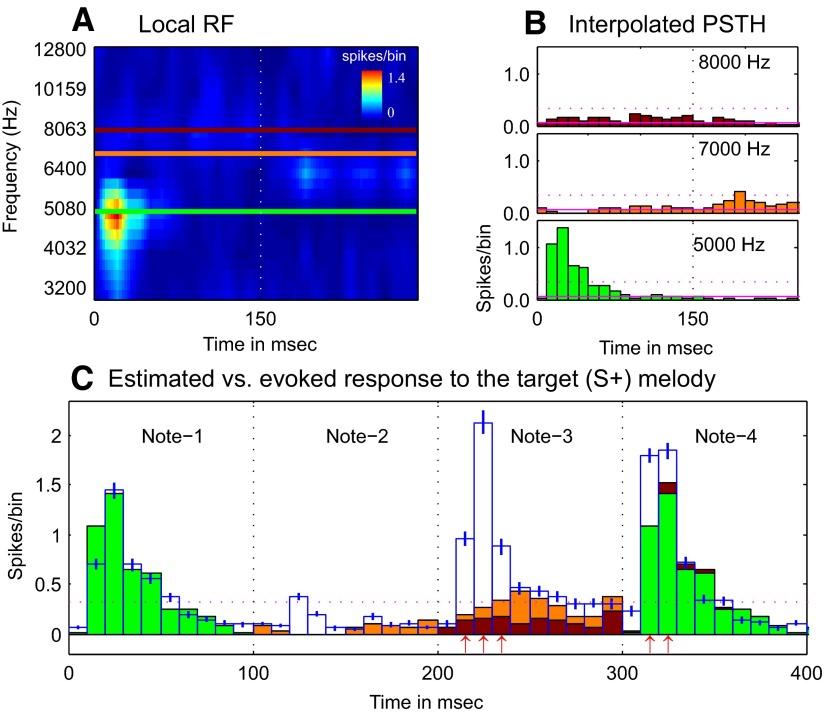

Since monkeys B1 and B2 were highly trained to respond to the tones that composed the S+ melody, we used interpolation from responses to nearby S− tones to calculate a linear prediction of expected responses to those individual tones of the melody (Fig. 2). In detail, we derived the estimated poststimulus time histogram (e-PSTH) of the S+ melody with the following procedure: We 1) calculated the PSTHs (with 10-ms bin size) for the two local frequency ranges, which included all of the frequencies used in the two S+ melodies, and thus obtained a local FRA (Fig. 2A); 2) derived e-PSTHs for each of the three individual component tone frequencies in each S+ melody from the local FRA by using two-dimensional data interpolation (Fig. 2B); and 3) estimated the time-varying PSTH (e-PSTH) for the complete S+ melody by linear summation of the estimated responses to the individual components. In this linear summation we included both estimated “on” and “off” responses (through 100 ms poststimulus) of the individual tonal components (Fig. 2C). This predicted PSTH (e-PSTH) for the S+ melody was then compared with the observed PSTH using a t-test (10-ms bin-by-bin basis).
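The three-step procedure above can be sketched in simplified form. This is not the authors' analysis code. It assumes that each single-tone PSTH is expressed as an evoked rate (baseline already subtracted, so that summation does not count spontaneous activity more than once), that interpolation is linear along a log-frequency axis between the two flanking tested tones, and that the target frequency lies within the tested range. The function names are hypothetical.

```python
import math

BIN_MS = 10  # PSTH bin size used in the text

def interpolate_psth(local_fra, freq_hz):
    """Estimate a single-tone PSTH at freq_hz from a local FRA.

    local_fra maps tested tone frequency (Hz) -> PSTH (list of rates per bin).
    Assumes freq_hz lies within the range of tested frequencies.
    """
    tested = sorted(local_fra)
    lo = max(f for f in tested if f <= freq_hz)
    hi = min(f for f in tested if f >= freq_hz)
    if lo == hi:
        return list(local_fra[lo])
    w = math.log2(freq_hz / lo) / math.log2(hi / lo)  # 0 at lo, 1 at hi
    return [(1 - w) * a + w * b for a, b in zip(local_fra[lo], local_fra[hi])]

def estimate_melody_psth(local_fra, note_freqs_hz, note_onsets_ms, n_bins):
    """Linear prediction (e-PSTH): sum of onset-shifted single-tone estimates."""
    e_psth = [0.0] * n_bins
    for f, onset_ms in zip(note_freqs_hz, note_onsets_ms):
        tone_psth = interpolate_psth(local_fra, f)
        start = onset_ms // BIN_MS
        for i, rate in enumerate(tone_psth):
            if start + i < n_bins:
                # "On" and "off" responses extending past the note overlap
                # with, and add to, the response to the following note.
                e_psth[start + i] += rate
    return e_psth
```

For the S+ melodies, the note onsets would be 0, 100, 200, and 300 ms, and each interpolated single-tone PSTH would extend through the off response.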

FIG. 2.

Interpolation method for estimating neuronal response to the S+ target melody. A: an example of a neuron's local receptive field plot generated from its responses to a subset of the single pure tones used as nontarget S− stimuli. The figure shows a color-coded display of the poststimulus onset-time spike rate response to fixed-amplitude (70 dB) single pure-tone transients of 150-ms duration. Three horizontal lines indicate the 3 frequencies used in the 4-tone S+(H) target melody. B: interpolated poststimulus time histogram (PSTH; bin = 10 ms), i.e., receptive-field–based linearly estimated responses, to the individual pure-tone components of the S+(H) melody. C: an estimate of the complete response (filled color bars) to the first 400 ms of the entire S+ target melody was computed by linear addition of all interpolated on responses and off responses for the 4 notes (see methods for details). The actual evoked response to the S+ target melody is plotted as the means ± SE (the unfilled bar plots in blue outline, with blue error bars). The red arrows beneath the bar plots indicate those bins in notes 3 and 4 that showed a significant difference between the evoked response and the estimated response (upward arrow indicates enhancement).

An enhanced response to the S+ melody was defined as a minimum of two consecutive 10-ms bins in the observed PSTH with a significantly greater response (P < 0.01) than the predicted PSTH. A suppressed response was defined as at least two consecutive 10-ms bins in the observed PSTH with a significantly smaller response than the predicted PSTH. Thus the enhanced and suppressed responses were defined in relation to specific S+ notes rather than to the S+ melody as a whole. We also computed a strength index (SI) to quantify the magnitude of enhancement or suppression to the identified S+ melody notes. SI was defined as: SI = (|R − Re|)/(R + Re), where R is the average response of two successive bins showing maximal activity in the observed response to the identified notes in the S+ melodies and Re is the average of the two corresponding bins in the estimated response. The SI varied from 0 to 1 and was applicable in cases of either suppression or enhancement. A low SI indicated minor differences between R and Re (i.e., SI = 0 when R = Re), whereas a high SI indicated major differences between R and Re and thus strong suppression or enhancement.
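The classification rule and the strength index can be written compactly. The sketch below assumes that per-bin P values from the bin-by-bin t-test have already been computed elsewhere and that the same P < 0.01 threshold applies to suppression as to enhancement; the function names are hypothetical.

```python
def classify_bins(p_values, observed, predicted, alpha=0.01):
    """Label each 10-ms bin: +1 (enhanced), -1 (suppressed), or 0 (no effect)."""
    labels = []
    for p, r, r_e in zip(p_values, observed, predicted):
        if p < alpha and r > r_e:
            labels.append(1)
        elif p < alpha and r < r_e:
            labels.append(-1)
        else:
            labels.append(0)
    return labels

def has_run(labels, sign, min_run=2):
    """True if at least min_run consecutive bins carry the given label."""
    run = 0
    for lab in labels:
        run = run + 1 if lab == sign else 0
        if run >= min_run:
            return True
    return False

def strength_index(r, r_e):
    """SI = |R - Re| / (R + Re); defined here as 0 when both rates are 0."""
    if r + r_e == 0:
        return 0.0
    return abs(r - r_e) / (r + r_e)
```

As a worked example, an observed rate of R = 30 spikes/s against a predicted Re = 10 spikes/s gives SI = 20/40 = 0.5, and complete suppression (R = 0 with Re > 0) gives SI = 1.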

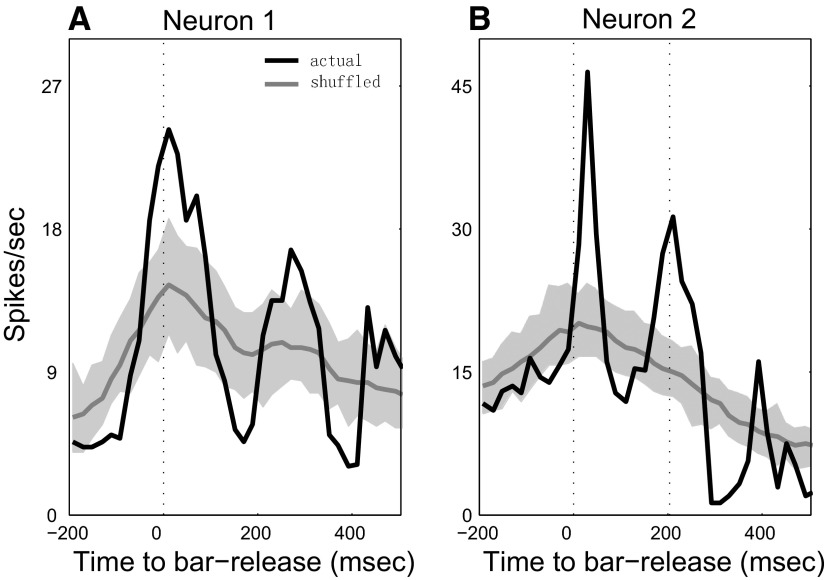

Data analysis for Class II cells

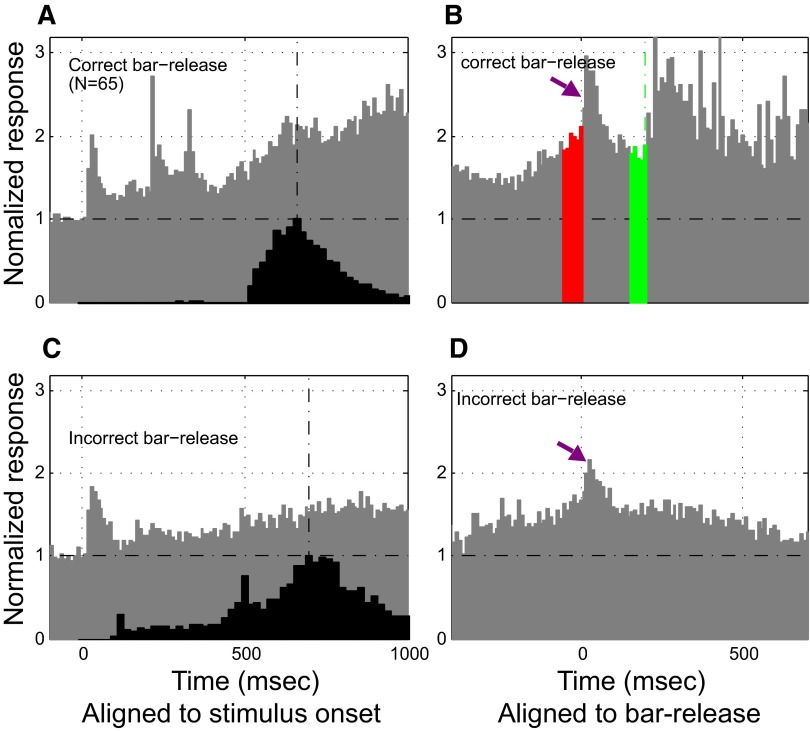

Each neuron was tested to ascertain whether its spiking activity was correlated with bar release. Initially, the neuronal activity associated with bar release was computed by temporally aligning all the correct S+ trials to the bar release, generating a bar-release–aligned histogram for a given behavioral block. Then bar-release timings were shuffled across trials and we recomputed the neuronal response by realigning the trials to the newly shuffled bar-release times. This shuffling procedure was repeated 100 times, from which we obtained the average shuffled response and determined the 99% confidence interval. If the actual bar-release–aligned activity crossed the 99% confidence boundary of the shuffled neuronal activity within a 100-ms window before reward delivery (for monkey B1) or around bar release (for monkey B2), the activity was considered to be time-locked more strongly to the bar release than to the S+ sound. A neuron with such properties was classified as a Class II neuron. Two typical examples of Class II neurons are shown in Fig. 3, one from monkey B1 (Fig. 3A) and one from monkey B2 (Fig. 3B). In the figure, the black curve indicates the actual neuronal responses aligned to bar-release times. The gray curve indicates the average shuffled neuronal response and the shaded area shows the 99% confidence interval.
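The shuffling test can be sketched as follows. This is a simplified illustration rather than the analysis code used in the study. The text does not state how the 99% confidence interval was derived from the 100 shuffles, so the sketch assumes a normal approximation (mean ± 2.576 SD across shuffles); it also tests only for excursions above the band. Function names, the ±300-ms analysis window, and the fixed random seed are assumptions.

```python
import random
import statistics

def aligned_psth(spike_trains, align_times, t_min=-0.3, t_max=0.3, bin_s=0.01):
    """Mean firing rate (spikes/s) per bin, with each trial aligned to its own event time.

    spike_trains : list of per-trial spike times (s, re: sound onset).
    align_times  : list of per-trial alignment times (s, re: sound onset).
    """
    n_bins = int(round((t_max - t_min) / bin_s))
    counts = [0] * n_bins
    for spikes, t0 in zip(spike_trains, align_times):
        for s in spikes:
            idx = int((s - t0 - t_min) // bin_s)
            if 0 <= idx < n_bins:
                counts[idx] += 1
    scale = 1.0 / (len(spike_trains) * bin_s)
    return [c * scale for c in counts]

def shuffle_band(spike_trains, release_times, n_shuffles=100, z=2.576, seed=0):
    """Mean and assumed 99% band of PSTHs aligned to shuffled bar-release times."""
    rng = random.Random(seed)
    shuffled = []
    for _ in range(n_shuffles):
        perm = list(release_times)
        rng.shuffle(perm)  # reassign release times across trials
        shuffled.append(aligned_psth(spike_trains, perm))
    mean = [statistics.mean(col) for col in zip(*shuffled)]
    sd = [statistics.stdev(col) for col in zip(*shuffled)]
    lower = [m - z * s for m, s in zip(mean, sd)]
    upper = [m + z * s for m, s in zip(mean, sd)]
    return mean, lower, upper

def exceeds_band(actual, upper, bins):
    """True if the actual release-aligned PSTH exceeds the band in any listed bin."""
    return any(actual[b] > upper[b] for b in bins)
```

Activity that is locked to the sound rather than to the bar release is smeared equally in the actual and shuffled alignments, so only release-locked activity is expected to rise above the shuffled band.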

FIG. 3.

Method for identifying Class II neurons. Neuronal response PSTHs were generated by aligning all correct trials to bar release (black curve) and to the shuffled bar-release time (gray curve; flanking gray-shaded area indicates the 99% confidence interval). The shuffling procedure is described in methods. In the 2 examples, neurons from monkey B1 (A) and monkey B2 (B) show a larger and sharper peak in actual PSTH than in the shuffled PSTH, thus showing that the neural activity was more closely time-locked to the bar release than to auditory stimulus onset. The vertical dotted lines indicate the bar-release time and the beginning of reward-delivery time (∼200 ms in B).

We extended this method to extract the approximate nonacoustic component of activity from identified Class II neurons. As before, we first computed the average PSTH aligned to the S+ onset. We then subtracted this average acoustic PSTH from each individual trial of neuronal responses to remove the acoustic response evoked by the S+ sounds. Finally, we realigned each “acoustic-response-free” trial according to the timing of bar-release and thus obtained the activity evoked only by nonacoustic task components, such as motor command or hand tactile sensation of bar-release, attention, expectancy, or postbar-release reward delivery.
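The subtraction-and-realignment step can be sketched in a few lines. This is a minimal illustration under stated assumptions: each trial is represented as spike counts in 10-ms bins relative to S+ onset, bar-release times are given as bin indices, and the function name `nonacoustic_component` and the window half-width are hypothetical.

```python
def nonacoustic_component(trial_counts, release_bins, half_window=10):
    """Average release-aligned activity after removing the mean sound-evoked response.

    trial_counts : list of per-trial spike counts per bin (aligned to S+ onset).
    release_bins : list of per-trial bin indices at which the bar was released.
    Returns the mean residual in 2 * half_window + 1 bins centered on bar release.
    """
    n_trials = len(trial_counts)
    n_bins = len(trial_counts[0])
    # Step 1: average acoustic PSTH, aligned to S+ onset.
    acoustic = [sum(trial[b] for trial in trial_counts) / n_trials
                for b in range(n_bins)]
    # Step 2: subtract it from every trial ("acoustic-response-free" trials).
    residuals = [[c - a for c, a in zip(trial, acoustic)] for trial in trial_counts]
    # Step 3: realign each residual trial to its own bar-release bin and average.
    width = 2 * half_window + 1
    sums = [0.0] * width
    n_used = [0] * width
    for res, rb in zip(residuals, release_bins):
        for k in range(width):
            b = rb - half_window + k
            if 0 <= b < n_bins:
                sums[k] += res[b]
                n_used[k] += 1
    return [s / n if n else 0.0 for s, n in zip(sums, n_used)]
```

In a toy case with two trials that share an onset response but each fire one extra spike at their own release time, the onset response cancels in the residuals and a single peak remains at the release-aligned center bin.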

RESULTS

Behavior

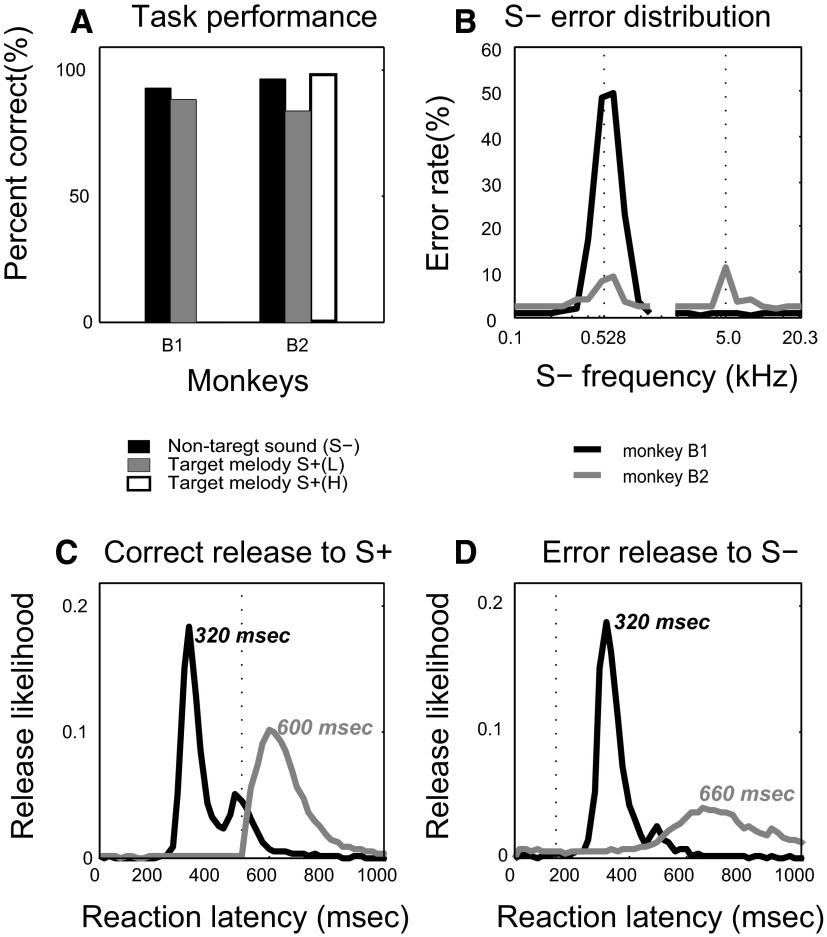

Overall task performance for each trained monkey, averaged over all physiological recording sessions, is presented in Fig. 4A. Pooled performance level was high (>90% correct overall). However, both monkeys made incorrect go responses to a range of S− pure tones that were close in frequency to the individual notes comprising the S+ melodies (Fig. 4B). As shown in Fig. 4B, when the S− sounds were single pure tones, monkey B1 had an error rate of 49% for frequencies that most closely matched the first two notes in the S+(L) melody and 22% for the frequency that matched the third note of the S+(L) melody. For the same set of S− sounds, monkey B2 made fewer errors, responding incorrectly to an average of only 8 and 10%, respectively, of the frequencies that most closely matched the notes in the S+(L) and S+(H) target melodies.

FIG. 4.

Task performance. A: average percentage correct performance is shown for each monkey (B1 and B2) during physiological recording sessions. B: S− (nontarget) error distribution shows the greatest likelihood of false-positive task errors at frequencies near those of the target melody S+ component tone frequencies. The peak nontarget S− error rate for matched frequencies reached nearly 50% for monkey B1, but was <10% for monkey B2 [when pooling performance from all behavioral blocks with both target S+ melodies, i.e., S+(L) and S+(H)]. C: distributions of reaction latency (bar-release likelihood) for each monkey for correct target melody S+ trials. The black curve illustrates the data from monkey B1, which was trained only on melody S+(L). The gray line illustrates the pooled correct bar-releases to both S+(L) and S+(H) by monkey B2; the data for the 2 target melodies were combined because there was no observed difference between them in the distributions of bar-release latency. The vertical dashed line indicates the offset time of the S+ sound. D: distributions of reaction latency (bar-release likelihood) for each monkey for incorrect nontarget S− trials. Note similar timing to curves in C. The vertical dashed line indicates the offset time of the S− sound.

This pattern of errors (particularly pronounced in monkey B1) suggests that one behavioral strategy that the monkeys used was a purely spectral “narrow-band, absolute-frequency” strategy to detect the presence of the S+ target sounds. This strategy relied on matching the pitches of individual notes to those of the very similar component notes in the S+ melodies, rather than on matching to the template of the complete melody. This strategy was not surprising, since the initial S− sound set used in training consisted of nontonal sounds (either broader-band FM sweeps, white noise, or monkey calls). Thus for the initial training set, such a “narrow-band, absolute-frequency” strategy would be sufficient.

However, given the monkeys' overall performance, this cannot have been the only strategy they used; otherwise, they would have shown an even higher error rate (i.e., >49% for B1 and >10% for B2) for S− pure-tone stimuli that closely matched the components of the S+ melody. Therefore both monkeys (particularly B2) presumably used an additional strategy to identify the S+ target melody, one that was sensitive to both its temporal and its spectral properties. The difference between the two monkeys in error rate may be due to the fact that monkey B2 was required to listen to the entire S+ melody before responding. This interpretation is supported by the response-latency curves shown in Fig. 4C, in which the response-latency distribution was bimodal for monkey B1, with the major peak at 320 ms and a minor peak at 470 ms after sound onset. This latency distribution, with responses that began as early as 250 ms, was clearly shaped by the timing of reward, in that monkey B1 could obtain a reward by responding immediately after an obligatory 250-ms waiting period following the onset of the S+ sound. The response latencies for monkey B2 were longer, with a peak at 600–620 ms, reflecting the fact that this monkey was required to wait until the S+ melody finished (500 ms) before responding. For comparison, the distributions of response latency for erroneous releases to single S− tones are shown in Fig. 4D. These error latencies are similar to the correct response latencies for both monkeys, as shown for the correct responses to the S+ in Fig. 4C.

Overview of the physiological recordings

This neurophysiological report is based on a comparison of the extracellular responses of 683 single neurons from multiple depths in primary auditory cortex (A1 and R) of two trained monkeys performing the auditory-discrimination task with the extracellular responses of 125 single neurons in primary auditory cortex to similar acoustic stimuli in two naive monkeys. The comparison is limited to neuronal responses to the S+ and S− sounds. (Responses to the other S− sound sets—FM sweeps, band-passed noise bursts, and monkey calls—will be the subject of future reports.)

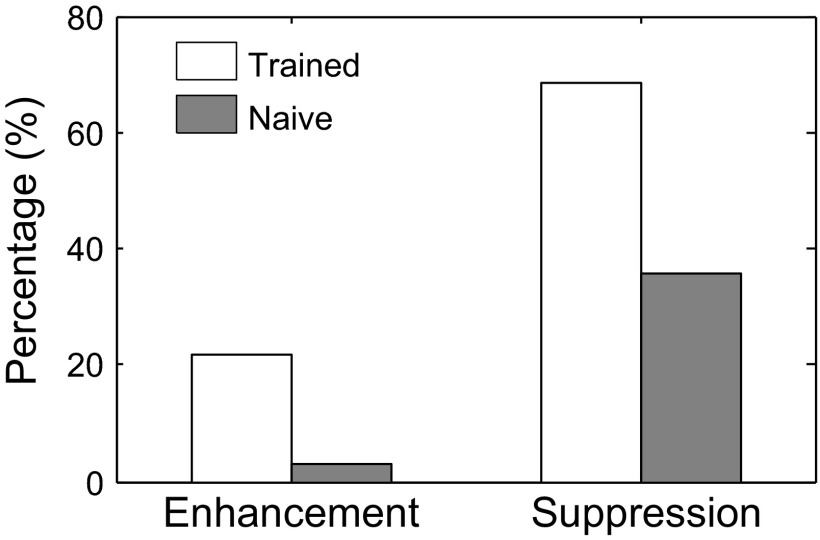

Overall, we found that neuronal firing was strongly modulated during presentation of the S+ melodies in the majority of units in trained monkeys, with 22% (135/615) showing enhancement and 67% (412/615) showing suppression (Fig. 5, white bars). Such widespread modulation to the same four-note S+ sequence was not observed in recorded units of naive monkeys, which showed only 3% (2/73) enhancement and 36% (26/73) suppression (Fig. 5, gray bars). Thus there was an eightfold increase in enhancement effects to the S+ melody in the trained monkeys compared with that found in the naive monkeys and a nearly twofold increase in suppressive effects to the S+ melody in the trained compared with the naive monkeys.

FIG. 5.

Differential likelihood of Class I enhancement and suppression in trained and naive monkeys. There was a significantly greater likelihood of Class I enhancement in trained monkeys compared with naive monkeys (22 vs. 3%), and also a significantly greater likelihood of Class I suppression in trained compared with naive monkeys (67 vs. 36%).

In detail, the neurons in the trained monkeys were recorded in the course of 248 electrode penetrations (113 in monkey B1; 135 in monkey B2). We obtained a frequency-tuning curve for 615/683 neurons, based on responses to single pure tones during task performance. Of these cells, 73% (449/615) showed either enhancement or suppression (as defined earlier) in response to the S+ melody during task performance (Table 1). The fraction of enhancement or suppressive effects was similar in recordings in the two monkeys and similar for both S+ melodies in monkey B2. Specifically, for both monkeys, in response to the S+(L) melody, 15% of units (92/615) showed an enhanced response and 60% of units (371/615) were suppressed. A similar pattern of responses was found to the high-frequency target melody S+(H), given only to monkey B2, in which 56% of units (205/368) showed enhancement or suppression. Specifically, in response to the S+(H) melody, 17% of neurons (63/368) showed enhanced responses and 51% (186/368) showed suppression.

TABLE 1.

Summary of distribution of Class I neurons in trained and naive monkeys

| | (+) | (−) | (+) and (−) | No Effect | Total |
|---|---|---|---|---|---|
| Trained (n = 2) | | | | | |
| S+(L) | 92 (15.0%) | 371 (60.3%) | 64 (10.4%) | 216 (35.1%) | 615 |
| S+(H) | 63 (17.1%) | 186 (50.5%) | 44 (12.0%) | 163 (44.3%) | 368 |
| S+(L) or S+(H) | 135 (21.9%) | 412 (67.0%) | 98 (15.9%) | 166 (27.0%) | 615 |
| Naive (n = 2) | | | | | |
| S+(L) | 1 (1.4%) | 15 (20.5%) | 1 (1.4%) | 58 (79.5%) | 73 |
| S+(H) | 1 (1.4%) | 13 (17.8%) | 1 (1.4%) | 60 (82.2%) | 73 |
| S+(L) or S+(H) | 2 (2.7%) | 26 (35.6%) | 2 (2.7%) | 47 (64.4%) | 73 |

(+), enhancement; (−), suppression. See text for further explanation.
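The percentages in each row of Table 1 sum to more than 100% because units showing both effects appear to be counted in the (+) column, the (−) column, and the "(+) and (−)" column. Under that reading (an assumption, but one that every row's counts satisfy), the number of modulated units follows from inclusion-exclusion, as the following sketch checks. All names are hypothetical.

```python
# (plus, minus, both, no_effect, total) for each row of Table 1.
TABLE_1 = {
    ("trained", "S+(L)"): (92, 371, 64, 216, 615),
    ("trained", "S+(H)"): (63, 186, 44, 163, 368),
    ("trained", "either"): (135, 412, 98, 166, 615),
    ("naive", "S+(L)"): (1, 15, 1, 58, 73),
    ("naive", "S+(H)"): (1, 13, 1, 60, 73),
    ("naive", "either"): (2, 26, 2, 47, 73),
}

def modulated(plus, minus, both):
    """Units with enhancement, suppression, or both (inclusion-exclusion)."""
    return plus + minus - both

def row_is_consistent(plus, minus, both, no_effect, total):
    """True when modulated and unmodulated units add up to the row total."""
    return modulated(plus, minus, both) + no_effect == total

checks = {key: row_is_consistent(*row) for key, row in TABLE_1.items()}
print(all(checks.values()))                      # True
print(modulated(*TABLE_1[("trained", "either")][:3]))  # 449
```

The value 449 is the count behind the 73% (449/615) of trained-monkey units reported as showing either enhancement or suppression.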

The neurons in the naive monkeys were recorded in the course of 37 electrode penetrations (23 in N1, 14 in N2). The distribution of neuronal BF tuning in the naive monkeys overlapped that in the trained monkeys across five octaves (Supplemental Fig. S2), yet, as indicated earlier, they showed far less modulation during presentation of the S+ melodies.

In addition to the S+ melodies, a set of customized four-note sequences centered at neuronal BF was also presented to the task-naive monkeys (see methods). As mentioned earlier, the rationale for using these additional, customized (target-like) stimuli was to elicit a sufficient number of enhanced and suppressed neuronal responses in the naive animals to allow comparison with the pattern of neuronal responses in the trained animals. Hence, in the naive monkeys, all 125 units were studied with a set of six 1/3-octave customized-sequence stimuli (C-sequences). As expected, we observed a greater proportion of enhancement and suppression in these neurons than in those studied earlier with a single set. Overall, 23% (29/125) showed enhancement and 58% (72/125) showed suppression to at least one of the six permuted C-sequences that were constructed by using the frequencies around each neuron's BF (see methods).

Frequency maps

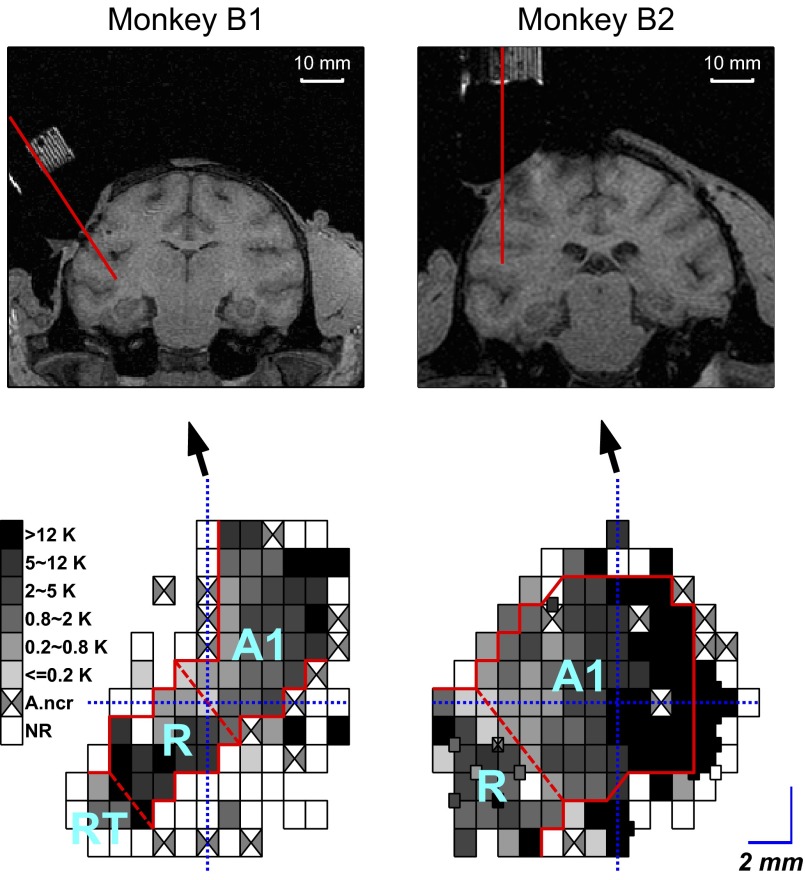

Recording sites covered much of the core auditory cortex and included a frequency reversal at the A1 and R border. This is shown in the grayscale plots (Fig. 6, bottom panels) of the BF maps in the two trained monkeys, ranging from low frequency (light gray) to high frequency (black). In both trained monkeys, the tonotopic axis in A1 ran from caudomedial to rostrolateral, with the highest BFs located caudally and the lowest BFs located rostrally. Continuing rostrally, there was a reversal of the tonotopic organization in both monkeys, consistent with the mirrored tonotopic organization in area R relative to that in A1.

FIG. 6.

Tonotopic maps of primary auditory cortex (A1 and R) in the 2 trained monkeys (B1 and B2). Top: MRI images show coronal sections through the center of the recording chambers in the 2 monkeys. The red lines indicate the path of an electrode penetration through the center of the chamber for B1 and B2. Bottom: best-frequency (BF) map shows the tonotopic maps for A1 and R in the 2 monkeys and the characteristic frequency reversal between A1 and R. The grayscale bar indicates BF, with white squares indicating cortical areas where we could not elicit auditory responses (NR, no response) and squares with crosses indicating cortical areas with auditory responses, which were poorly tuned (A.ncr = auditory, but no clear response to single pure tones). The solid red line indicates the approximate boundary between the primary and nonprimary auditory cortex. The dashed lines divide A1 and R and also divide R and rostrotemporal field (RT) in one monkey (B1).

The BFs in fields A1 and R in the trained monkeys showed less variation in the mediolateral direction than that in the rostrocaudal direction. This tonotopic organization is consistent with the isofrequency organization of A1 and R described in anesthetized macaque monkeys (Hackett et al. 2001; Kaas and Hackett 2000; Kosaki et al. 1997; Merzenich and Brugge 1973; Rauschecker et al. 1997) and in awake macaque monkeys (Recanzone 2000). In monkey B1, there was also a second reversal of the tonotopic axis rostral to R, which may mark the boundary with the rostrotemporal field (area RT), one of the three auditory core areas defined in the primate (Bendor and Wang 2008; Kaas and Hackett 2000; Morel and Kaas 1992).

Although less densely sampled, recording sites in the two naive monkeys were also centered on the core auditory cortex (Supplemental Fig. S3). Based on the tonotopic maps, the recordings in monkey N1 were from A1 and those in N2 were from A1 and R.

Enhanced responses to S+ melodies in trained monkeys

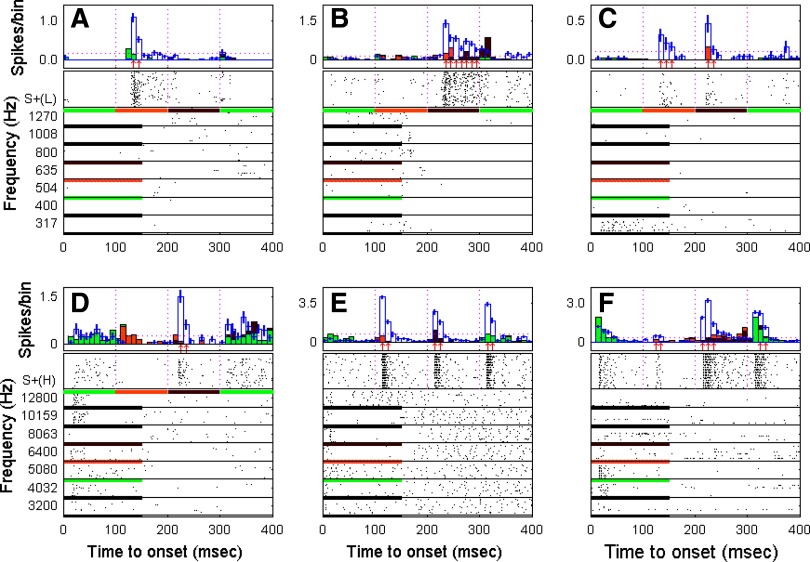

As noted earlier, the responses to the frequencies in the S+ melodies were not always predicted by the linear estimation of the responses to the component single tones alone (e-PSTH). An enhanced response was found to the frequencies in the melodies S+(L) or S+(H) in 22% (135/615) of the neurons (Table 1, column 2). Enhancement could be striking. Even when there were virtually no responses observed to the single tones presented alone, there could be a large response evoked by the melodies in the same frequency region as the component tones. The enhanced responses evoked by the S+ sound occurred at any one or more of the four note positions in the melodic sequence. Individual examples of enhancement to tone components in the melody, from six different neurons, are given in Fig. 7. A population profile of enhancement is given in Fig. 10.

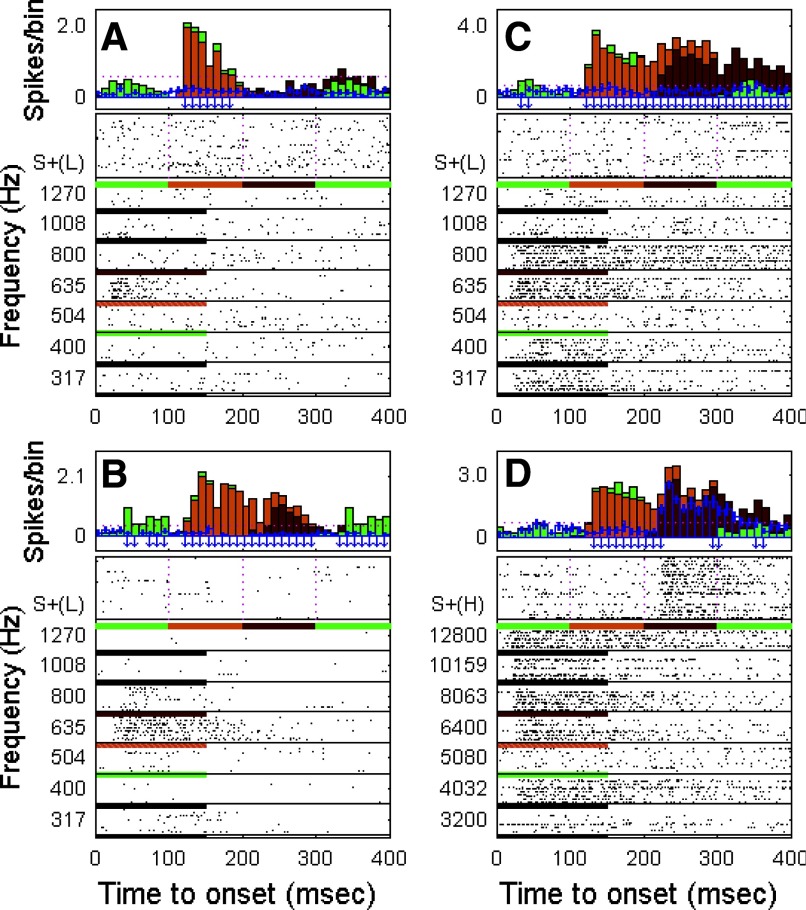

FIG. 7.

Class I neuronal target melody S+ enhancement profiles. Enhanced responses of 6 neurons to individual tone components in the low- and high-frequency S+ target melodies. Each of the 6 panels represents response profiles of different neurons to nontarget S− and target S+ sounds. Each panel contains 2 charts: in the top chart, the bars filled with color indicate the estimation of the response to the S+ target melody that was interpolated from the response to individually presented nontarget S− tones (see methods and Fig. 2). The unfilled blue bars represent the actual evoked response to the S+ target melody. An upward red arrow beneath the PSTH indicates an enhanced response compared with the estimated response for that 10-ms bin. The bottom chart in each panel shows the raster of the evoked responses to the S+ target melody and to the individual nontarget S− tones in the frequency range corresponding to the S+ target melody components. The horizontal bar below each raster block shows the S+ target melody (first 400 ms) and the duration of nontarget S− tones (the colors below the nontarget S− tones indicate the tones that are the nearest neighbors in frequency to the tone components in the S+ target melody).

FIG. 10.

Population profile of facilitatory and suppressive effects; “heat” map of Class I neurons. All Class I neurons were ordered by the time of occurrence of the first bin showing enhancement or suppression effects. The red and blue in the map indicate the presence of enhancement and suppression bins, respectively. See text for further explanation.

As shown in Fig. 7, the enhanced responses evoked by the S+ melody could occur at the second (Fig. 7, A, C, E, and F), third (Fig. 7, B–F), or fourth note (Fig. 7, E and F) in the melodic sequence. In three neurons, there were enhanced responses to only one note in the melody (Fig. 7, A, B, and D), whereas the other three neurons showed enhanced responses to multiple notes in the melody (Fig. 7, C, E, and F). Two neurons showed enhanced responses to all three notes (Fig. 7, E and F). In more detail, the unit in Fig. 7A showed no response at all to any tones in the frequency range from 317 to 1,270 Hz presented as the S− single tones, but responded vigorously with an enhanced response to the second note of the S+(L) target melody, which had a frequency of 665 Hz (in the middle of the S− pure-tone unresponsive frequency region). The neuron in Fig. 7B showed only a weak off-response to S− pure tones in the frequency range of 800–1,008 Hz, although the note in the S+(L) melody with a frequency of 790 Hz evoked an enhanced on-response and a strong, sustained response that was maintained for the full duration of the note. The neuron in Fig. 7C displayed a sustained response to S− pure tones in the frequency range <400 Hz and did not respond at all to tones with frequencies >400 Hz when presented alone as S− stimuli. This neuron, however, responded strongly with marked enhancement to the second and third notes of the S+(L) melody (i.e., 665 and 790 Hz). The neuron shown in Fig. 7D responded to S− tones in the frequency range of 4,032–12,800 Hz with onset or weak sustained responses, with the exception of the S− tone at 8,063 Hz, to which the cell did not respond at all when it was presented as an S− tone. If anything, there may have been a suppressed response to this S− tone. However, as shown in Fig. 7D, there was a strongly enhanced response to the third note of the high-frequency target melody S+(H), which had a frequency of 8 kHz, very close to the frequency that had elicited virtually no response when presented as an S− sound. In Fig. 7E, there was a very strong onset response evoked by the second, third, and fourth notes of the S+(H) melody, even though there was little or no response to any S− tone except for a very small onset response at 8,063 Hz. This neuron also offers an interesting example of differential responses to the same frequency tone (5,000 Hz) located in different positions of the target melody (first and last positions). Although there was no response or only a faint response to the first note, strongly enhanced activity occurred in response to the fourth note, a sequence position that made it more clearly part of the S+ melody. The final example is shown in Fig. 7F. The responsive frequency range for this neuron to S− tones was narrow, restricted to an onset response to a single frequency (5,080 Hz). However, the neuron showed a strongly enhanced response to the third note (8 kHz) of the S+(H) target melody. As in the earlier case (Fig. 7E), this neuron too showed an enhanced response to the fourth note in the S+(H) melody (5 kHz), although the response to the first note was predictable from the S− tone response (Fig. 7, E and F). These examples illustrate striking enhancement to the notes in the S+ melody and also the selective nature of the enhancement, i.e., selectivity not only for the frequency of a given component note, but also for its position in the melodic sequence.

Suppressed responses to target (S+) melodies

We also observed sequence-selective suppressive effects in many neurons, e.g., neurons that responded well to single pure-tone transients when presented as S− stimuli but showed a relatively diminished response when the same frequencies were presented as notes in the four-tone S+ melodies. As shown in Table 1, in the trained animals there was a threefold greater likelihood of suppressive effects [67% (412/615) to S+(L) or S+(H) melodies] than enhancement effects [22% (135/615)]. Individual examples of sequence-specific suppression from three neurons are illustrated in Fig. 8 and a population profile of suppression is shown later in Fig. 10.

FIG. 8.

Class I neuronal target melody S+ suppression profiles. Suppressed responses of 3 neurons to individual tone components in the low- and high-frequency S+ target melodies. The panel layout is the same as described in Fig. 7. The blue downward arrows indicate the bins showing suppression. A and B: 2 neurons showed suppression to the S+(L) target melody, particularly to the 2nd note. Although both narrowly tuned neurons displayed a strong response to the single nontarget S− tone frequency of 635 Hz (bottom rasters in A and B), neither responded to the component notes of the S+(L) target melody at nearby frequencies such as the 2nd tone frequency of 665 Hz. In addition to strong suppression of the 2nd tone frequency in the S+(L) target melody, the 2nd neuron (B) also showed significant (though less) suppression to the 3rd and 4th tone frequencies in the S+(L) target melody. C and D: responses of a 3rd neuron both to the S+(L) target melody (C) and also to the S+(H) melody (D). In this broadly tuned neuron, individual nontarget S− single pure tones evoked strong and sustained responses to tones over a wide frequency range that encompassed all component frequencies in both target melodies S+(L) and S+(H). However, as shown in C, there were virtually no responses to the target melody S+(L), and thus marked suppression. Also, as shown in D, there was a strongly suppressed response to the 2nd tone of the S+(H) target melody, although there was a clear response to the 3rd S+(H) component note in the high-frequency target melody.

The two neurons shown in Fig. 8, A and B did not respond at all to the S+(L) melody, even though they showed a marked response to the S− tone with a frequency of 635 Hz, close to the frequency of the second note (665 Hz) of the S+(L) melody. Another example of suppression is shown in Fig. 8, C and D. This neuron had at least two responsive frequency areas (317–1,008 and 4,032–12,800 Hz, Fig. 8, C and D, respectively) that correspond to the frequency range of the component notes in the low- and high-frequency S+ melodies. Although this unit showed a sustained/prolonged response to a wide range of S− tones, it showed profound suppression to the S+(L) melody (i.e., no response at all) and also showed a parallel, suppressed response to the S+(H) melody.

Comparison of enhancement and suppression patterns to four-note sequences (S+ melodies and C-sequences) in trained and naive monkeys

In recording from primary auditory cortices in the two naive monkeys, we found only 2 of 73 units that showed response enhancement to either of the S+ melodies. Using C-sequences, however (see methods), the enhancement percentage increased eightfold to about 23% (29/125) from <3% (2/73) and was thus comparable to the enhancement distribution seen in the trained animals. The construction of the C-sequences optimized the likelihood of obtaining context-dependent response modulation in the naive animals, since we 1) customized the C-sequences to be close to each neuron's BF and 2) included modulations to all six possible combinations of notes (rather than simply to the 1–2–3–1 sequence). Had we correspondingly modified the task and customized the S+ melody for each recording in the trained animal, or included all modulations for all note combinations, it is likely that we would have observed a much higher prevalence of modulation effects in the trained animals.

However, the modulation effects obtained from the C-sequences in the naive animals were useful for comparison with modulation observed to S+ melodies in the trained monkeys. We first analyzed the data to determine whether there were any differences between the two populations in the strength of enhancement or suppression in response to the tone sequences, using the strength index (SI; see methods). The median SI value for the enhancement effect to the S+ melodies in the neuronal population from the trained monkeys (0.723, n = 190) was greater than that observed in naive monkeys (0.43, n = 2) and it was also greater than the median SI value of 0.603 (n = 72; P < 0.01, Wilcoxon rank-sum test) for the 1/3-octave C-sequences presented to the naive monkeys. The comparison is shown in Fig. 9A1 (top).

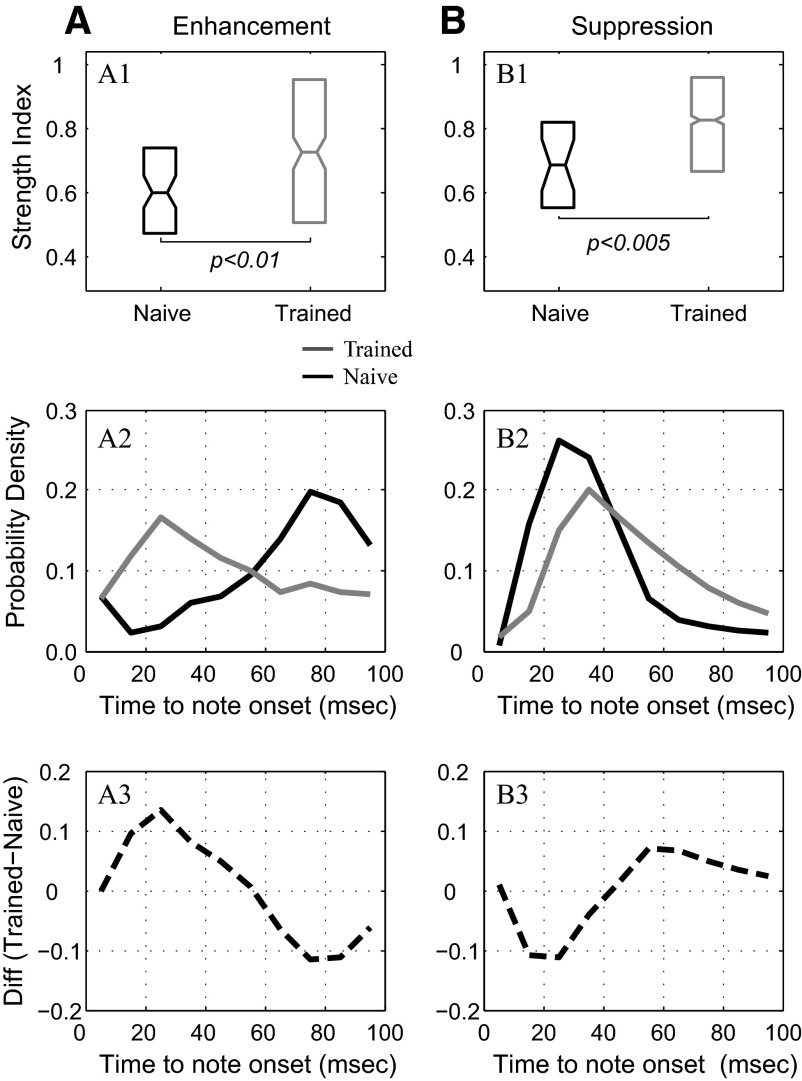

FIG. 9.

Comparison of responses in trained and naive monkeys; strength index (SI) and time course of responses. A1 and B1: population summaries of SI data from all Class I neurons in the trained and naive monkeys. The box plots in the figures show the median (horizontal midline in plot), the ±95% confidence intervals (side notches), and the 25th and 75th percentiles (bottom and top edges of plot) of each population distribution. The neuronal populations from trained monkeys (gray box plots) showed a significantly greater SI value in both enhancements (A1) and suppression (B1) than did the neuronal population recorded in naive monkeys (black box plots) in response to 1/3-octave customized sequences (C-sequences; with frequencies close to BF). A2 and A3: probabilistic time course of enhancement in trained monkeys (gray curves) and naive monkeys (black curves). The probability density distribution of the enhancement bins is displayed in relation to note transitions in the target melody sequence. As shown in A2, the likelihood of enhancement peaked in the 20- to 30-ms bin in trained monkeys, and overall, the greatest likelihood of enhanced responses occurred in the first 50 ms of note duration. In contrast, the enhancement peaked in the 70- to 80-ms bin in naive monkeys and the greatest likelihood of enhanced responses occurred in the last 50 ms of note duration. These differential time courses for enhancement in the trained and naive monkeys are also evident in the difference plot (A3). B2 and B3: the probabilistic time course of responses displaying suppression in trained (dashed gray lines) and naive monkeys (black lines). The likelihood of suppression peaked in the 30- to 40-ms bin for the trained monkeys and about 10 ms earlier in time for the naive monkeys in response to the 1/3-octave C-sequences (B2). This different time course for suppression is also evident in the cumulative density function plot (B3). 
Although less pronounced than in the differential time course for enhancement shown for trained vs. naive monkeys in A2 and A3, the differential time course for suppression, shown in B2 and B3, is also significant.

A similar result was obtained when we compared the magnitude of the suppression effect in the two neuronal populations. The neurons that exhibited suppression in the trained monkeys showed a significantly larger median SI value (0.823, n = 1,107) than the SI value found in the task-naive monkeys both for S+ melodies (0.775, n = 28, P < 0.001) and for C-sequences (0.685, n = 316, P < 0.005, Wilcoxon rank-sum test for both). This comparison is shown in Fig. 9B1 (top).
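For readers unfamiliar with the Wilcoxon rank-sum test used in these SI comparisons, the following is a minimal sketch of the test using the large-sample normal approximation, without tie or continuity corrections. It is not the authors' analysis code, the function names are hypothetical, and the SI values are made-up illustrative numbers, not data from this study.

```python
import math

def midranks(values):
    """Rank values from 1..n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2.0 + 1.0  # positions i..j hold ranks i+1..j+1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks

def rank_sum_test(x, y):
    """Two-sided rank-sum test (normal approximation). Returns (z, p)."""
    n1, n2 = len(x), len(y)
    ranks = midranks(list(x) + list(y))
    w = sum(ranks[:n1])                      # rank sum of the first sample
    mean_w = n1 * (n1 + n2 + 1) / 2.0        # expected rank sum under H0
    sd_w = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12.0)
    z = (w - mean_w) / sd_w
    p = math.erfc(abs(z) / math.sqrt(2.0))   # two-sided tail probability
    return z, p

# Made-up SI values for illustration only.
si_trained = [0.81, 0.66, 0.74, 0.90, 0.72, 0.69, 0.85, 0.77]
si_naive = [0.58, 0.61, 0.49, 0.70, 0.55, 0.63, 0.52, 0.60]

z, p = rank_sum_test(si_trained, si_naive)
print(f"z = {z:.2f}, p = {p:.4f}")
```

A positive z indicates that the first sample tends to rank higher than the second. For small samples or many ties, an exact or tie-corrected implementation from a statistics library would be preferable.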

We also found that the temporal pattern of the enhanced responses in the naive and trained monkeys was strikingly different. In the task-naive monkeys, most of the enhancement responses occurred during the latter half of a given note in the C-sequence (i.e., with a latency >50 ms). This observation is compatible with the results from previous research studying responses to tone pairs in naive monkeys (Bartlett and Wang 2005; Brosch et al. 1999), which found that in most neurons, the enhanced responses to the second tone occurred during a late response phase after the initial response. In sharp contrast, the enhanced response in our trained monkeys had a much shorter latency, occurring during the onset response to a given note in the S+ melody.

To quantify the temporal differences between enhanced (and suppressed) responses in the trained and naive animals, we directly compared the time course of the neuronal population responses from the two pairs of animals. We computed probability density functions (PDFs) on a 10-ms bin-by-bin basis over a time course spanning the second, third, and fourth notes of the tonal sequences. For this analysis we used 400 bins from 135 neurons showing enhanced responses in the trained monkeys and 221 bins from 29 neurons with enhanced responses in the naive monkeys. As shown in Fig. 9A2 (middle), the likelihood of a bin showing an enhanced response in the trained monkeys peaked at 25 ms (corresponding to the third bin 20–30 ms following note onset within the S+ melody). In contrast, the likelihood of an enhanced response in the naive monkeys peaked much later, at 75 ms (corresponding to the eighth bin 70–80 ms following note onset within the C-sequences). The two-sample Kolmogorov–Smirnov (KS) test showed a highly significant difference (P < 0.001) in the distributions of the temporal occurrence of enhancement in the two neuronal populations. Figure 9A3 shows this difference in time course of enhancement between trained and naive monkeys.
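The bin-by-bin analysis described above can be sketched as follows. This is an illustration under stated assumptions, not the authors' code: modulated bins are pooled relative to note onset, each note is taken to last 100 ms (inferred from the 400-ms four-note melody), only the KS statistic is computed (no P value), and the latency lists are made-up values chosen to mimic the reported peaks. All function names are hypothetical.

```python
def bin_pdf(latencies_ms, bin_width=10, note_duration=100):
    """Fraction of modulated bins falling in each bin after note onset."""
    n_bins = note_duration // bin_width
    counts = [0] * n_bins
    for t in latencies_ms:
        counts[int(t // bin_width)] += 1
    total = sum(counts)
    return [c / total for c in counts]

def peak_bin_center(pdf, bin_width=10):
    """Center (ms) of the bin with the highest probability."""
    peak = max(range(len(pdf)), key=lambda i: pdf[i])
    return peak * bin_width + bin_width / 2

def ks_statistic(a, b):
    """Two-sample KS statistic: largest gap between the empirical CDFs."""
    d = 0.0
    for v in sorted(set(a) | set(b)):
        cdf_a = sum(1 for x in a if x <= v) / len(a)
        cdf_b = sum(1 for x in b if x <= v) / len(b)
        d = max(d, abs(cdf_a - cdf_b))
    return d

# Made-up bin start times (ms after note onset), for illustration only.
trained_bins = [20, 20, 20, 30, 10, 20, 40, 30, 0, 20]
naive_bins = [70, 70, 60, 80, 70, 50, 70, 90, 40, 70]

trained_peak = peak_bin_center(bin_pdf(trained_bins))
naive_peak = peak_bin_center(bin_pdf(naive_bins))
d_stat = ks_statistic(trained_bins, naive_bins)
print(trained_peak, naive_peak, d_stat)
```

With these illustrative inputs the peaks fall in the 20- to 30-ms and 70- to 80-ms bins, mirroring the pattern reported for the trained and naive populations.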

Figure 9B2 shows the same analysis applied to suppressive effects. In the trained monkeys, the probability density functions were constructed from bins with suppressive effects to the S+ melodies (a total of 5,373 bins from a total of 412 neurons; gray curve in the figure). In the naive monkeys, the probability density functions were constructed from bins showing suppression to the C-sequences (a total of 1,433 bins from 72 suppression neurons; black curve). As with the enhancement effects, there were significantly different patterns in the time course of the suppressive response in the trained and naive monkeys. The PDF from the naive monkeys showed an early sharp peak (at 25 ms, corresponding to the third bin, 20–30 ms from note onset), whereas the peak of the PDF for the trained monkeys was relatively broader and occurred significantly later in time (at 35 ms, corresponding to the fourth bin, 30–40 ms from onset). As earlier, the two-sample KS test showed a highly significant difference (P < 0.001) between the distributions of the temporal occurrence of suppression in naive and trained monkeys. Figure 9B3 shows this difference in time course of suppression between the two groups.

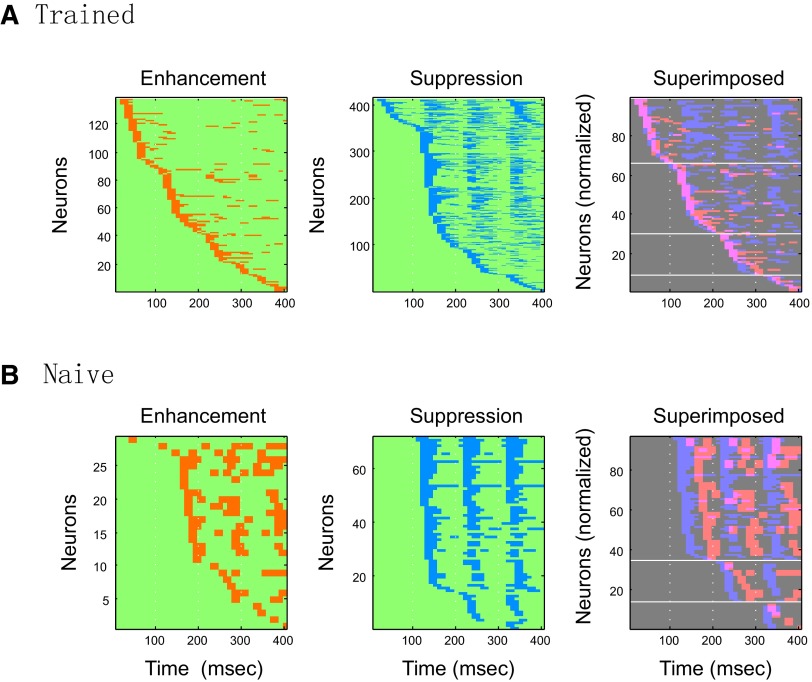

The “heat” maps in Fig. 10 show the population profiles of enhancement effects (in red) and suppression effects (in blue) across neurons and time course. The heat maps are sorted in the order of the timing of the first modulated (enhanced or suppressed) 10-ms bin for individual neurons. The maps reveal several interesting differences in enhancement and suppression response patterns between trained and naive monkeys. First, the onset times of the modulated bins (for both enhancement and suppression) are more sparsely distributed in neuronal responses of the trained monkeys than in those of naive monkeys. Also, in accord with the results shown in Fig. 9, in naive monkeys, enhancement effects (to C-sequences) typically occurred with a relatively long latency after each of the note transitions or occurred following a prior wave of suppressive effects (Fig. 10B). The amplitude of these enhancement effects in the naive monkeys was comparatively weak. Thus the overall pattern, shown in Fig. 10B (rightmost panel), reveals an alternating pattern of successive waves of suppression and enhancement for each note transition in the four-note C-sequence. At a global level, this pattern did not vary much between successive note transitions. In marked contrast, a very different overall population pattern occurred in the responses of trained monkeys, since the enhancement effects showed greater amplitude and occurred with a short latency, at about the same time as the suppressive effects (Fig. 9). The net effect of this differential timing in responses in the trained animal was to form a striking “coincidence wave” of modulation that continuously progressed across the neuronal population from target (S+) melody onset to offset and was followed by periods of subsequent suppression (Fig. 10A). 
This moving “coincidence wave,” beginning with the response to the first note, may encode the occurrence of the initial, salient S+ “event” and the subsequent tone transitions that characterize the S+ melody.
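The row ordering used for the heat maps can be illustrated with a short sketch. It assumes each unit is represented as a list of 10-ms bins coded +1 for enhancement, −1 for suppression, and 0 for no modulation; this encoding, the function names, and the example rows are hypothetical.

```python
def first_modulated_bin(row):
    """Index of the first non-zero bin, or len(row) if never modulated."""
    for i, value in enumerate(row):
        if value != 0:
            return i
    return len(row)

def sort_for_heat_map(matrix):
    """Order units (rows) by the time of their first modulated bin."""
    return sorted(matrix, key=first_modulated_bin)

# Illustrative units: +1 = enhancement bin, -1 = suppression bin.
units = [
    [0, 0, -1, -1, 0, 1],
    [1, 0, 0, 0, 0, 0],
    [0, -1, 0, 1, 1, 0],
    [0, 0, 0, 0, 0, 0],
]

ordered = sort_for_heat_map(units)
onsets = [first_modulated_bin(row) for row in ordered]
print(onsets)  # [0, 1, 2, 6]
```

Plotting the sorted rows with red for +1 and blue for −1 yields a diagonal band when modulation onsets are spread evenly across the population, which is one way a progressing wave of modulation would appear in such a map.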

Nonauditory neuronal activity: Class II

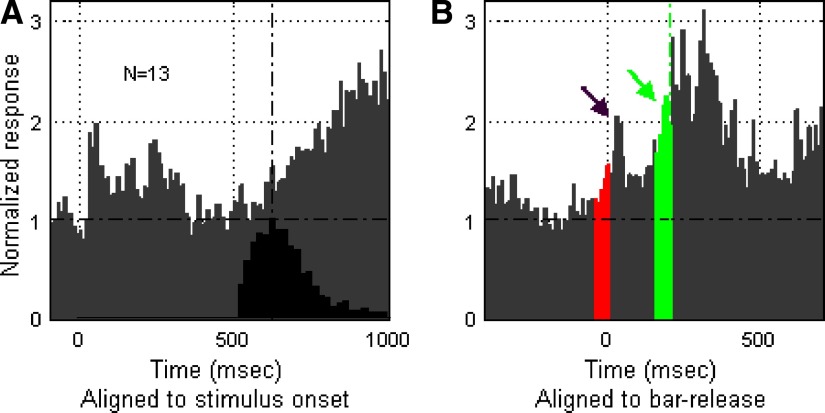

In analyzing neuronal activity during task performance, we observed neurons that showed nonauditory activity that was highly correlated with 1) task-related bar release, the behavioral response that could lead to reward, and/or 2) task-related onset of bar grasping, and/or 3) the anticipation of reward. We also observed neurons that responded during reward delivery; however, since there were unavoidable, multiple acoustic features associated with reward delivery (clicking of solenoid for water release, licking of the monkey) we focused our analysis instead on reward-related activity that preceded bar release and actual reward delivery (see methods for description of criteria for classification as Class II neurons). Out of a total of 683 single neurons isolated during the performance of the task, 13% (90/683) showed pre-reward-related activity (see Table 2). Of these 90 units, 20 cells from monkey B1 and 65 from monkey B2 showed bar-release–related activity. Moreover, 13/70 cells from monkey B2 showed a separate and distinct increase in activity prior to reward delivery (which closely coincided in time with the onset of bar grasping).

TABLE 2.

Class II neurons showing nonauditory responses during task performance

| Monkey | Total Class II Neurons | Bar Release–Related Response | Reward-Related Response | Total Neurons Analyzed |
|---|---|---|---|---|
| B1 | 20 (15.6%) | NA* | NA* | 128** |
| B2 | 70 (12.6%) | 65 | 13 (8 overlap) | 555** |

*Since the rewards were delivered immediately after the bar release in monkey B1, it was unclear whether the response was associated with bar release or with anticipation of reward.

**This number includes 68 neurons that were excluded from Table 1 because they had been tested not with the S− tone set but with the other S− sound sets instead.

The neuronal responses shown in Fig. 11 (A and B) were recorded from two neurons in monkey B1. This monkey had been trained to release the touch bar as soon as possible after detecting the S+ melody for immediate reward following a correct bar release (Fig. 1B1, S+ trial type 1). In this behavioral paradigm, the quickest bar release took ≥250 ms from the onset of the S+ and bar-release probability peaked at 320 ms following S+ onset. Neuron 1 (Fig. 11A) had a predictable transient on-response to the S+ and a striking long-lasting response that began at about 300 ms (Fig. 11A, left). This activity was only loosely correlated with any feature of the S+ melody. However, by realigning all the spikes in relation to bar release and delivery of the reward (Fig. 11A, right), a much more tightly correlated pattern emerged. Spike activity began at about 50 ms before the bar release or the delivery of reward (see blue arrow). A similar pattern was observed in the spiking activity of neuron 2 (Fig. 11B). Although neuron 2 was tuned to a high frequency at around 12.8 kHz, it had a response evoked by the S+(L) melody that began about 300 ms after S+(L) onset (Fig. 11B, left). As in neuron 1, the activity in neuron 2 also showed a better correlation to the upcoming bar release or reward delivery (Fig. 11B, right). An increase in activity in neuron 2 appeared about 50 ms before bar release or reward delivery. To ascertain whether this activity was related to bar release or to anticipation of reward, we delayed the delivery of the reward to 100 ms after a correct bar release (as shown in Supplemental Fig. S4). Spikes from neuron 2 showed a better correlation with bar release than with reward delivery, thus suggesting that this spiking activity component might be related either to tactile sensation or motor output during bar release. In contrast, the neuron shown in Fig. 11C from monkey B2, to which reward was delivered 200 ms after the bar release rather than simultaneously with it (Fig. 1B2, S+ trial type 2), shows clear postbar-release–related activity as well as activity in anticipation of reward (Fig. 11C, right). However, in general, bar-release–related activity was not as strong or as long-lasting in monkey B2 as in monkey B1 (Fig. 11A), even though the activity of 65 neurons in monkey B2 was of this type. This physiological difference may be related to the distinct task variants learned by each monkey [see methods (Fig. 1B) and also discussion].
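The realignment step described above, in which spikes are re-referenced from stimulus onset to the bar release on each trial, can be sketched as follows. This is a minimal illustration with hypothetical function names and made-up spike and release times; it is not the authors' analysis code.

```python
def realign(spike_times_ms, event_time_ms):
    """Express spike times relative to a per-trial event (e.g., bar release)."""
    return [t - event_time_ms for t in spike_times_ms]

def psth(trials, events, window=(-100, 100), bin_width=10):
    """Mean spike count per bin per trial, each trial aligned to its own event."""
    start, stop = window
    n_bins = (stop - start) // bin_width
    counts = [0] * n_bins
    for spikes, event in zip(trials, events):
        for t in realign(spikes, event):
            if start <= t < stop:
                counts[int((t - start) // bin_width)] += 1
    return [c / len(trials) for c in counts]

# Made-up data: spike times (ms from S+ onset) and bar-release times per trial.
trials = [[10, 255, 262, 290], [12, 295, 301, 330], [9, 335, 342, 371]]
release_times = [300, 340, 380]

# Aligned to stimulus onset (event at 0 ms), viewed from 200 to 400 ms.
stim_psth = psth(trials, [0, 0, 0], window=(200, 400))
# Aligned to bar release, viewed from -100 to +100 ms around release.
release_psth = psth(trials, release_times, window=(-100, 100))

print(max(stim_psth), max(release_psth))
```

In this toy example the late spikes are smeared across bins when aligned to stimulus onset but stack into the same bins when aligned to bar release, which is the signature used in the text to attribute the activity to the behavioral event instead of the stimulus.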

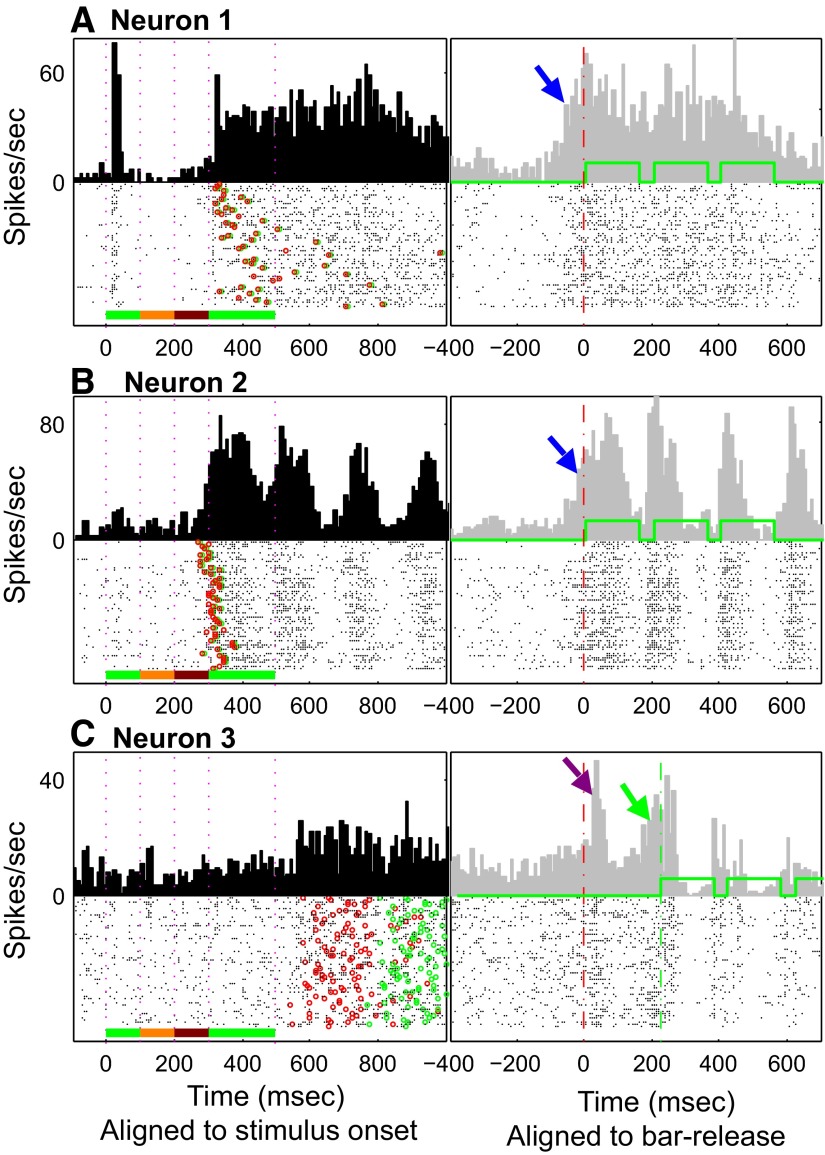

FIG. 11.

Neurons with Class II (nonauditory) activity. Three neurons with Class II type responses are shown in this figure. In each plot, the raster and PSTH were aligned to the onset of the S+ target melody (left) and aligned to bar-release (right). Each black dot in all rasters marks the temporal position of one spike during a trial. Left: the vertical red dotted lines indicate the onset and offset times of the notes in the S+ target melody; the red circles indicate the bar-release time; the green circles indicate the onset time of the reward delivery. Right panels: the red vertical dashed line indicates the time of bar-release. The 2 neurons in examples A and B are from monkey B1, trained on the type 1 version of the task, which allowed rapid bar-release 250 ms after S+ target melody onset followed immediately by delivery of reward. Thus in A and B the red vertical dashed line also indicates the onset time of water reward delivery, which consisted of 3 drops of water (the corresponding 3 solenoid open times for water delivery are shown by solid green line). A, left: neuron 1 showed a large, transient response to the onset of the S+ target melody and, after nearly 200 ms of silence, began sustained activity starting around 300 ms after S+ target melody onset. This latter activity built up immediately before the bar-release/reward delivery (blue arrow, right). As seen in the PSTH aligned to bar-release (right), the sustained response is time-locked better to bar-release/reward delivery than it is to the sensory stimulus. B: neuron 2 shows the same type of release-associated activity (blue arrow, right) immediately before the bar/reward events as shown in A. This neuron, unlike neuron 1, also showed a strong response to the onset and offset of acoustic solenoid noise associated with opening and closing the valve for water reward delivery (green line: 3 square waves showing solenoid open times). 
C: responses from neuron recorded in monkey B2, trained on the type 2 version of the task, which required delaying the response until the offset of the S+ target melody, followed by reward delivery at a fixed delay (200 ms) after bar-release. The green dashed line indicates the time of the reward delivery for this example. This neuron gave no response to the S+ target melody, but it gave a clear, transient response after bar-release and it also showed increased activity before the onset of reward delivery.

The firing patterns of the three individual Class II neurons shown in Fig. 11 clearly illustrate activity related to nonacoustic events. To provide an overview of the time course of all Class II activity in relation to these events, we summarize population activity in Figs. 12 and 13 (from monkeys B1 and B2, respectively).

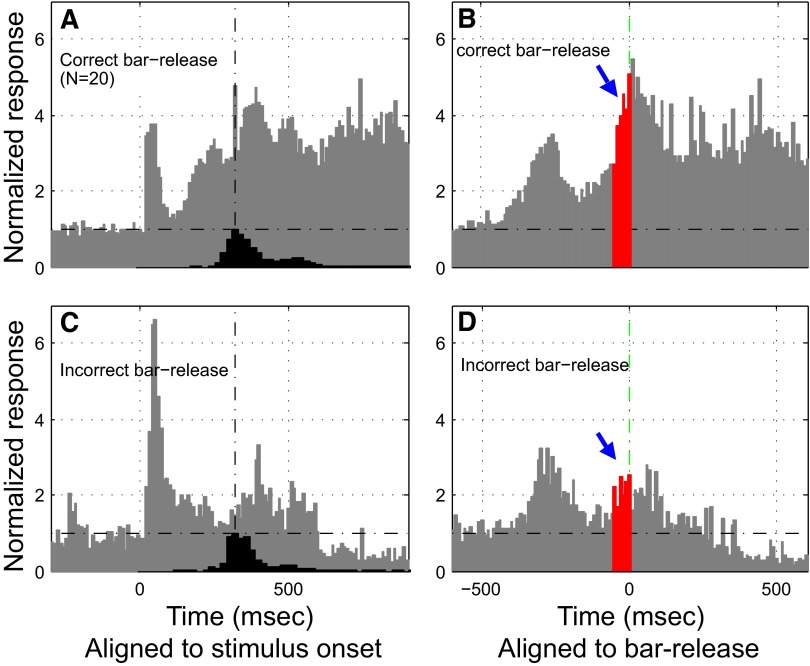

FIG. 12.

Population analysis of Class II nonacoustic responses; evidence for prebar-release activity. The population PSTHs (gray histograms in A–D) represent the average of individual normalized PSTHs (one from each neuron in the population). The PSTH from each individual neuron was normalized to the mean spontaneous baseline, which is indicated by a horizontal dot-dashed line in each population plot. The black histograms in A and C show the time distribution of bar release during task performance and the vertical dot-dashed lines mark the peak time of the distributions. A and B: population response (20 neurons) of correct bar-releases to S+ melodies, aligned to the onset of these stimuli (A) and to bar-release (B), respectively. A: this population PSTH was derived from responses of Class II neurons in monkey B1 during performance of the type 1 version of the task, which permitted bar-release immediately after onset of the S+ melody followed by immediate reward delivery. Note responses to onset of the S+ stimulus as well as to bar-release and reward delivery (seen more clearly in B, where the same spike data are aligned to bar-release). The red portion of the release-aligned histogram (B) indicates premovement activity (50 ms prior to bar-release) and water delivery time (indicated by the green dotted line). C and D: population activity of incorrect bar-release to the nontarget S− stimuli. The population PSTH in C was aligned to onset of the acoustic stimuli and in D it was aligned to bar-release. Unlike the case in B for correct release to the S+ melody, in D there is relatively little buildup to bar-release for incorrect releases to S− sounds.

FIG. 13.

Population analysis of Class II nonauditory responses; evidence for postbar-release activity. Population PSTH and release-aligned histograms (in gray) from Class II neurons (n = 65) in monkey B2 during performance of the type 2 version of the task, which required delaying bar-release until offset of the S+ melody, with reward delivery occurring 200 ms later. A and B: population activity for all correct behavioral responses (bar-release after S+ melodies) aligned to stimulus onset (A) and to bar-release (B). C and D: population response for all incorrect bar-releases to S− stimuli. The red portion (width = 50 ms) of the bar-release–aligned histogram (B) indicates an increase in activity that begins ≥50 ms prior to bar-release (as can be seen in B the increase in activity begins ∼150 ms before bar-release). However, as clearly shown in B and D, there is much greater activity following bar-release than before bar release [not only for correct responses (B) but also for incorrect responses (D)]. The green dotted line in B marks the water reward delivery onset time and the green portion (duration = 50 ms) of the PSTH (B) indicates an absence of anticipatory activity prior to water reward. The PSTH in B indicates that most of the increased activity occurred after the onset of water delivery. The black histograms in A and C show the temporal distribution of bar-release during task performance.

The population activity of Class II neurons from monkey B1 was aligned to stimulus onset and showed an initial transient onset response to the S+ melody (Fig. 12A). All Class II neurons recorded in A1 or R showed auditory responses of this kind. Following the sensory onset response, there was a gradual increase leading to a second, strong, long-lasting period of activity that occurred about 250 ms after stimulus onset (Fig. 12A). This second, long-lasting period of activity, observed in correct trials, was strikingly shortened and diminished in the population spiking activity from incorrect bar-release trials to S− tones (Fig. 12C). After realigning the population activity for all the experimental trials to bar release/reward delivery, we observed a large peak (blue arrow) during correct trials that had a clear buildup phase (Fig. 12B, red area). In contrast, during incorrect bar release to S− tones, relatively weak bar-release activity was observed (Fig. 12D). This population pattern of auditory cortical activity, preceding and following bar release, illustrates the contribution of nonacoustic inputs in modulating Class II neuronal activity. This population activity is in accord with results from single neurons shown in Fig. 11.

When population activity for correct trials of monkey B2 was aligned to stimulus onset, it showed a small acoustic onset response to each of the four notes of the S+ melodies (initial four gray peaks in Fig. 13A) as well as a long-lasting buildup response following offset of the melodies. A large transient peak following bar release (purple arrow) became visible when the activity was realigned to bar release (as shown in Fig. 13, B and D). This peak of activity associated with bar release was preceded by relatively weak activity (Fig. 13, B and D). As in monkey B1, the population response during correct bar-release trials was greater than the response from incorrect bar release to S− tones (Fig. 13, C and D).
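The realignment and averaging steps described above can be illustrated with a minimal sketch. It is not the analysis code used in this study: the function names, the use of milliseconds, the bin width, and the choice to normalize by dividing each PSTH by its mean spontaneous rate are all assumptions made for illustration.

```python
from typing import List, Sequence, Tuple


def aligned_psth(spike_times_ms: Sequence[Sequence[float]],
                 event_times_ms: Sequence[float],
                 window_ms: Tuple[float, float],
                 bin_ms: float) -> List[float]:
    """Trial-averaged firing rate (spikes/s) in bins relative to an event.

    The event can be stimulus onset or bar release; only the alignment
    times change, the spike data stay the same.
    """
    start, stop = window_ms
    n_bins = int((stop - start) // bin_ms)
    counts = [0] * n_bins
    for spikes, event in zip(spike_times_ms, event_times_ms):
        for t in spikes:
            idx = int((t - event - start) // bin_ms)
            if 0 <= idx < n_bins:
                counts[idx] += 1
    n_trials = len(event_times_ms)
    return [c / (n_trials * bin_ms / 1000.0) for c in counts]


def normalize_to_baseline(rates: Sequence[float],
                          baseline_rate: float) -> List[float]:
    """Express a PSTH relative to the spontaneous rate (assumed: division)."""
    if baseline_rate <= 0:
        raise ValueError("baseline rate must be positive")
    return [r / baseline_rate for r in rates]


def population_psth(normalized: Sequence[Sequence[float]]) -> List[float]:
    """Bin-by-bin mean of one normalized PSTH per neuron."""
    n = len(normalized)
    return [sum(column) / n for column in zip(*normalized)]


# Hypothetical neuron: two trials with bar release at 300 and 400 ms.
trials = [[100, 290, 310], [120, 390, 410]]
release = [300, 400]
rates = aligned_psth(trials, release, (-50, 50), 50)
```

Aligning the same spike trains to stimulus onset instead only requires passing the onset times as `event_times_ms`, which is why a release-locked peak can be smeared in the onset-aligned histogram when release latency varies from trial to trial.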

Additional evidence for reward-related activity is given in Fig. 14, which shows the population activity from a small subset of Class II neurons (n = 13 from monkey B2). The histograms in this figure, aligned to stimulus onset (Fig. 14A) and to bar release and reward delivery (Fig. 14B), illustrate a pattern of gradual buildup activity prior to reward delivery (green arrow) that was clearly distinct from the bar-release response (purple arrow). As mentioned, one important caveat is that bar regrasp occurred close to the time of reward delivery, so that it is difficult to disentangle the contributions to this activity of motor, cognitive, and nonauditory sensory inputs.

FIG. 14.

Population analysis of Class II nonauditory responses; evidence for pre-reward activity. Population PSTH and release-aligned histograms (in gray) from Class II neurons (n = 13) with responses highly correlated with reward delivery. All responses were from monkey B2 during performance of the type 2 version of the task. The green dotted line in B indicates the water delivery time (200 ms after a bar-release). The red portion (duration = 50 ms) of the release-aligned histogram (B) indicates pre-movement activity that begins ≥50 ms prior to bar-release. The green portion (duration = 50 ms) of the release-aligned histogram (B) indicates anticipatory activity that begins ≥50 ms prior to water reward. The black histogram in A shows the temporal distribution of bar-release during task performance. Note the presence of an auditory response to the S+ melody in A and, in B, a small response preceding and following bar-release, as well as strong activity time-locked to the start of reward delivery.

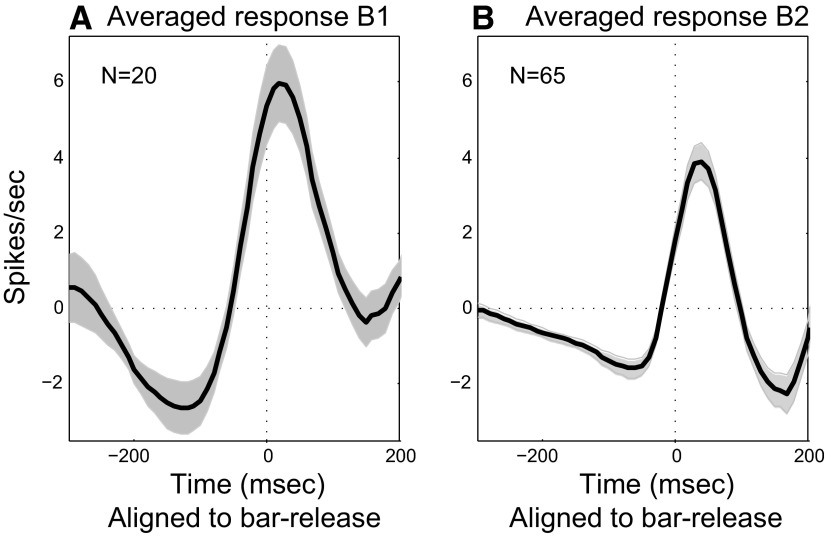

By extracting the nonacoustic responses of Class II neurons (see methods) and pooling these responses for each monkey, it was possible to obtain population profiles of Class II effects. As shown in the two panels of Fig. 15, the population responses from identified Class II neurons in the two trained monkeys had similar response patterns: suppression began approximately 250 ms before bar release and reached a valley 50–100 ms before bar release, which was followed by an excitatory peak near the time of bar release.

FIG. 15.

Overall analysis of Class II responses. Average response to bar-release for the population of Class II neurons in each trained monkey. The shaded area indicates the SE of the average response.

In conjunction with observational evidence that neither of the trained monkeys was vocalizing or making task-related sounds during task performance (see Supplemental Fig. S5), these individual neuronal and population responses of bar-release–related activity provide strong evidence for nonacoustic activity in the auditory cortical core of the macaque monkey.

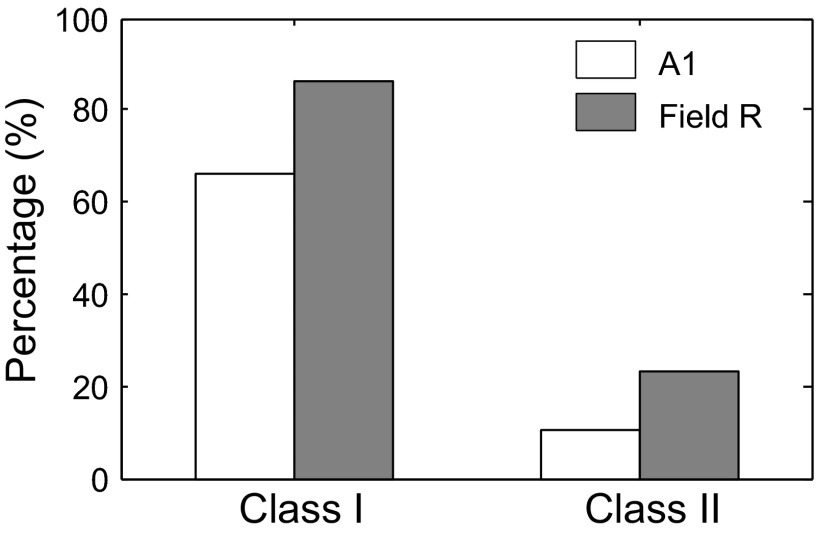

Differential distribution of Class I and Class II neurons in A1 and R

We analyzed the location of Class I and Class II neurons in the auditory core areas (Fig. 16), based on the mediolateral position of the penetrations relative to the tonotopic maps (Fig. 6). In field A1, 66% (292/441) of the cells were classified as Class I and 11% (47/441) were classified as Class II. By contrast, in field R, 86% (150/174) of the cells were classified as Class I and 23% (40/174) as Class II (16 neurons had both Class I and Class II properties). These interareal distributions were significantly different using the chi-square test (P < 0.001 for both Class I and Class II).
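As a check on the arithmetic, the interareal comparison can be reproduced from the counts given above. The sketch below assumes a Pearson chi-square test on a 2 × 2 table (area × class membership) without continuity correction; the text does not state which variant was used, and the function name is hypothetical.

```python
import math


def chi_square_2x2(a: int, b: int, c: int, d: int):
    """Pearson chi-square (no continuity correction) for [[a, b], [c, d]].

    Returns the statistic and the P value for 1 degree of freedom.
    """
    n = a + b + c + d
    rows = (a + b, c + d)
    cols = (a + c, b + d)
    observed = ((a, b), (c, d))
    chi2 = 0.0
    for i in range(2):
        for j in range(2):
            expected = rows[i] * cols[j] / n
            chi2 += (observed[i][j] - expected) ** 2 / expected
    # For 1 df, P(X > chi2) equals erfc(sqrt(chi2 / 2)).
    p_value = math.erfc(math.sqrt(chi2 / 2.0))
    return chi2, p_value


# Rows: A1 (n = 441) and R (n = 174); columns: in class / not in class.
chi2_class1, p_class1 = chi_square_2x2(292, 441 - 292, 150, 174 - 150)
chi2_class2, p_class2 = chi_square_2x2(47, 441 - 47, 40, 174 - 40)

print(f"Class I:  chi2 = {chi2_class1:.2f}, P = {p_class1:.2g}")
print(f"Class II: chi2 = {chi2_class2:.2f}, P = {p_class2:.2g}")
```

Under these assumptions both comparisons give P < 0.001, consistent with the values reported in the text.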

FIG. 16.

Differential distribution of Class I and Class II neurons in fields A1 and R. Class I neurons were more prevalent in R than in A1 (86 vs. 66%). Class II neurons were more prevalent in R than in A1 (23 vs. 11%). Both differences are highly significant (see text).

DISCUSSION

Until recently, the function of the primary auditory cortex has been viewed mainly from the perspective of auditory information processing. However, new evidence suggests that activity in A1 and R can also be modulated by top-down influences such as attention and expectation (Fritz et al. 2007a,b) and by other nonacoustic influences (Foxe and Schroeder 2005; Ghazanfar and Schroeder 2006). In these experiments, we observed the presence of neuronal activity that was dependent on behavioral as well as acoustic context and, accordingly, we have defined two new classes of neuronal effects: 1) Class I: sequence selective and 2) Class II: nonauditory. Since we found neurons with these properties at all cortical depths, our data do not provide evidence for laminar segregation of these two classes (see Supplemental Fig. S1). However, our data do indicate significant quantitative differences in the proportion of these classes in A1 and R (Fig. 16). We speculate that the Class I responses may comprise a set of mechanisms that constitute an early stage of encoding of a behaviorally salient melody and may contribute to a long-term sensory representation of the melody. These mechanisms offer insight into the neural correlates in the auditory cortical core of melody processing for a very simple tone sequence. In contrast, we propose that Class II activity may help link auditory perception and behavior (since all Class II neurons also responded to acoustic stimuli). Both types of activity are likely to contribute to performance of audiomotor tasks and illustrate the role of the auditory core in developing an early cortical representation of salient acoustic stimuli and a unified representation of auditory as well as relevant nonacoustic events.

In the following two sections, we examine our results showing Class I enhancement and suppressive effects to tone sequences in the context of previous studies. In the two sections after those, we discuss these sequence-related response modulations in terms of possible behavioral strategies used by the trained monkeys and the implications for representation of auditory sequences in A1 and R. Finally, we discuss our observations of Class II nonauditory responses in the context of other recent findings of multisensory representations in primary sensory cortices.

Class I effects and tone-sequence enhancement of responses in auditory cortex

Some of the earliest studies to examine facilitated responses to pairs of stimuli (Feng et al. 1978; O'Neill and Suga 1979) observed neurons in the auditory system of echolocating bats that responded selectively with enhanced responses to pairs of tones or FM chirps at specific interstimulus delays between acoustic stimuli, corresponding to behaviorally relevant echo delays. In nonecholocating species such as cat and monkey, enhanced responses to two-tone combinations have been demonstrated (Bartlett and Wang 2005; Brosch and Schreiner 1997, 2000; Brosch et al. 1999). A high proportion of neurons in primate primary auditory cortex exhibited intrinsic facilitation to two-tone combinations if the appropriate tone pairs were presented (Bartlett and Wang 2005; Brosch and Schreiner 1997, 2000; Brosch et al. 1999; De Ribaupierre et al. 1972). To elicit maximal facilitation, the tone pairs had to be chosen carefully and often required highly specific spectral and temporal separation of the tone-pair stimuli (Bartlett and Wang 2005; Brosch and Schreiner 2000). Brosch and colleagues (1999, 2000), in recordings from auditory cortex in anesthetized macaque monkey and cat, found that the combination of two tones (T1 and T2) most likely to elicit the greatest T2 facilitation consisted of tones separated by about 1.0 octave in frequency and about 100 ms in onset time. Earlier studies as well as the present study generally find context dependence that is not predictable from responses taken outside of the context of the sequence.

Although a handful of neurophysiological studies have investigated cortical responses to two-tone combinations, even fewer have explored responses to longer tone sequences. Nevertheless, sequence-sensitive neurons have been described in the auditory cortex of the cat and monkey (Espinosa and Gerstein 1988; McKenna et al. 1989; Yin et al. 2001). The study by McKenna and colleagues (1989) explored responses of neurons in cat primary and secondary auditory cortex to a variety of tone sequences (each composed of five isointensity 300-ms tones with 300- to 900-ms intertone intervals) and demonstrated that the response to the tones within the sequence was not predictable from the responses to isolated tones (presented with 3,200-ms intertone intervals). Espinosa and Gerstein (1988) reported order sensitivity in neural networks in A1 by showing that cross-correlated activity between pairs of neurons in auditory cortex during presentation of a given tone was highly dependent on the presentation order of the tone frequencies in sequences of three tones. In the task-naive rhesus monkeys in the present study, we found some facilitated responses to four-tone control sequences with 1/3-octave separation between the successive tones (C-sequences).

Thus it is possible that many, if not most, neurons in the primary auditory cortex are sequence sensitive when stimulated with the appropriate sound sequence. In interpreting the results of our experiments, the key question is whether the response enhancement that we observed to the second, third, and fourth tones in the four-tone target melody S+ sequences is the result of learning and/or attention, or whether the enhancement can be more parsimoniously explained as the result of purely automatic, preattentive mechanisms enhancing tonal responses in the context of a tonal sequence (sensory facilitation). If it were the latter, similar facilitation should occur in untrained monkeys. To discriminate between these two possible explanations of our data (sensory vs. learned and/or attention-driven enhancement), we compared: 1) S+ melody enhancement in the trained versus task-naive animals and 2) S+ melody enhancement in the trained animals versus C-sequence facilitation in task-naive animals. The data support differences between trained and untrained animals, thus ruling out purely sensory facilitation as the explanation (see Supplemental Fig. S2). These results therefore support facilitation due to learning (by re-representing the behaviorally important tone sequence) and/or attention. In these experiments, the data are not sufficient to distinguish between the contributions of learning and attention to the observed enhancement.

To compare the characteristics of responses to the S+ melody in the auditory core areas of trained and naive monkeys, we sought to match the distribution of cell tuning in the two populations of neurons from primary auditory cortex. As shown in Supplemental Fig. S2, A and B, the BF distributions of these two populations overlap across more than five octaves. Moreover, facilitatory and suppressive effects are largely independent of neuronal best frequency (Supplemental Fig. S2C).

A methodological limitation of our data set is the lack of physiological responses to S− tones precisely matched to the component notes in the S+ melody. Although our interpolation techniques, based on responses to near-frequency–matched single S− tones, allowed us to estimate a predicted response to the component notes of the S+ melody, it might not be possible to predict the responses to the third and fourth tones without taking into consideration the offset responses of all the previous tones, rather than just the immediately preceding tone. In our interpolation procedure, the predicted response to a component tone in the target melody is based on the sum of the off-response of the immediately preceding tone and the on-response of the current tone (both derived from responses to the component tones presented alone). Although our justification for doing so is that we did not observe off-responses to single tones that persisted for >100 ms, more subtle effects of the previous sequence of notes may have persisted beyond that limit.
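The linear-sum prediction described above can be written compactly. The sketch below is illustrative only and assumes that the on-response of the current tone and the off-response of the preceding tone have already been estimated from single-tone responses and binned on a common time axis starting at the onset of the current note; the function names and the example values are hypothetical.

```python
from typing import List, Sequence


def predict_note_response(on_current: Sequence[float],
                          off_previous: Sequence[float]) -> List[float]:
    """Predicted PSTH for one melody note as a bin-by-bin linear sum.

    on_current:   on-response to the current tone presented alone.
    off_previous: off-response to the immediately preceding tone
                  presented alone, on the same time axis.
    """
    if len(on_current) != len(off_previous):
        raise ValueError("both PSTHs must share the same bins")
    return [on + off for on, off in zip(on_current, off_previous)]


def residual(observed: Sequence[float],
             predicted: Sequence[float]) -> List[float]:
    """Observed minus predicted rate per bin.

    Positive values suggest enhancement and negative values suggest
    suppression relative to the linear-sum prediction.
    """
    if len(observed) != len(predicted):
        raise ValueError("both PSTHs must share the same bins")
    return [o - p for o, p in zip(observed, predicted)]


# Hypothetical rates (spikes/s) in three consecutive bins.
on_tone = [10.0, 30.0, 12.0]
off_prev = [5.0, 0.0, 0.0]
predicted = predict_note_response(on_tone, off_prev)
difference = residual([20.0, 45.0, 10.0], predicted)
```

Because only the immediately preceding tone contributes an off-response term, any influence of earlier notes in the sequence would appear in the residual rather than in the prediction, which is the limitation noted above.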