Abstract

In this primer, we give a review of the inverse problem for EEG source localization. It is intended for researchers new to the field, to give them insight into the state-of-the-art techniques used to find approximate solutions for the brain sources that give rise to a scalp potential recording. Furthermore, a review of the performance results of the different techniques is provided to compare these inverse solutions. The authors also include the results of a Monte-Carlo analysis which they performed to compare four non-parametric algorithms, thereby adding to what is presently reported in the literature. An extensive list of references to the work of other researchers is also provided.

This paper starts off with a mathematical description of the inverse problem and proceeds to discuss the two main categories of methods that were developed to solve the EEG inverse problem, namely non-parametric and parametric methods. The main difference between the two is whether or not a fixed number of dipoles is assumed a priori. Various techniques falling within these categories are described, including minimum norm estimates and their generalizations, LORETA, sLORETA, VARETA, S-MAP, ST-MAP, Backus-Gilbert, LAURA, Shrinking LORETA FOCUSS (SLF), SSLOFO and ALF for non-parametric methods, and beamforming techniques, BESA, subspace techniques such as MUSIC and methods derived from it, FINES, simulated annealing and computational intelligence algorithms for parametric methods. From a review of the performance of these techniques as documented in the literature, one could conclude that in most cases the LORETA solution gives satisfactory results. In situations involving clusters of dipoles, however, higher resolution algorithms such as MUSIC or FINES are preferred. Imposing reliable biophysical and psychological constraints, as done by LAURA, has given superior results. The Monte-Carlo analysis performed, comparing WMN, LORETA, sLORETA and SLF for different noise levels and different simulated source depths, has shown that for single source localization, regularized sLORETA gives the best solution in terms of both localization error and ghost sources. Furthermore, the computationally intensive solution given by SLF was not found to give any additional benefits under such simulated conditions.

1 Introduction

Over the past few decades, a variety of techniques for non-invasive measurement of brain activity have been developed, one of which is source localization using electroencephalography (EEG). It uses measurements of the voltage potential at various locations on the scalp (of the order of microvolts (μV)) and then applies signal processing techniques to estimate the current sources inside the brain that best fit this data.

It is well established [1] that neural activity can be modelled by currents, with activity during fits being well-approximated by current dipoles. The procedure of source localization works by first finding the scalp potentials that would result from hypothetical dipoles, or more generally from a current distribution inside the head (the forward problem). This is calculated or derived only once or several times, depending on the approach used in the inverse problem, and has been discussed in the corresponding review on solving the forward problem [2]. Then, in conjunction with the actual EEG data measured at specified positions of (usually fewer than 100) electrodes on the scalp, it can be used to work back and estimate the sources that fit these measurements (the inverse problem). The accuracy with which a source can be located is affected by a number of factors, including head-modelling errors, source-modelling errors and EEG noise (instrumental or biological) [3]. The standard adopted by Baillet et al. in [4] is that spatial and temporal accuracy should be better than 5 mm and 5 ms, respectively.

In this primer, we give a review of the inverse problem in EEG source localization. It is intended to give researchers who are new to the field insight into the state-of-the-art techniques used to obtain approximate solutions. It also provides an extensive list of references to the work of other researchers. The primer starts with a mathematical formulation of the problem. Then, in Section 3, we proceed to discuss the two main categories of inverse methods: non-parametric methods and parametric methods. For the first category we discuss minimum norm estimates and their generalizations, the Backus-Gilbert method, Weighted Resolution Optimization, LAURA, shrinking and multiresolution methods. For the second category, we discuss the non-linear least-squares problem, beamforming approaches, the Multiple-signal Classification Algorithm (MUSIC), the Brain Electric Source Analysis (BESA), subspace techniques, simulated annealing and finite elements, and computational intelligence algorithms, in particular neural networks and genetic algorithms. In Section 4 we then give an overview of source localization errors and a review of the performance analysis of the techniques discussed in the previous section. This is followed by a discussion and conclusion in Section 5.

2 Mathematical formulation

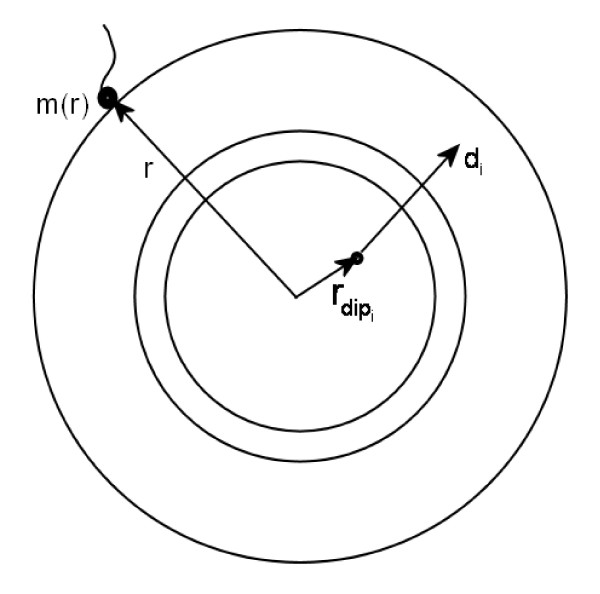

In symbolic terms, the EEG forward problem is that of finding, in a reasonable time, the potential $g(\mathbf{r}, \mathbf{r}_{dip}, \mathbf{d})$ at an electrode positioned on the scalp at a point with position vector $\mathbf{r}$, due to a single dipole with dipole moment $\mathbf{d} = d\,\mathbf{e}_d$ (with magnitude $d$ and orientation $\mathbf{e}_d$) positioned at $\mathbf{r}_{dip}$ (see Figure 1). This amounts to solving Poisson's equation to find the potentials $V$ on the scalp for different configurations of $\mathbf{r}_{dip}$ and $\mathbf{d}$. For multiple dipole sources, the electrode potential would be $m(\mathbf{r}) = \sum_i g(\mathbf{r}, \mathbf{r}_{dip_i}, \mathbf{d}_i)$. Assuming the principle of superposition, this can be rewritten as $m(\mathbf{r}) = \sum_i \mathbf{g}(\mathbf{r}, \mathbf{r}_{dip_i})\,(d_{ix}, d_{iy}, d_{iz})^T = \sum_i \mathbf{g}(\mathbf{r}, \mathbf{r}_{dip_i})\,\mathbf{e}_i\,d_i$, where $\mathbf{g}(\mathbf{r}, \mathbf{r}_{dip_i})$ now has three components corresponding to the Cartesian x, y, z directions, $\mathbf{d}_i = (d_{ix}, d_{iy}, d_{iz})$ is a vector consisting of the three dipole moment components, $^T$ denotes the transpose of a vector, $d_i = \|\mathbf{d}_i\|$ is the dipole magnitude and $\mathbf{e}_i = \mathbf{d}_i / d_i$ is the dipole orientation. In practice, one calculates the potential between an electrode and a reference (which can be another electrode or an average reference).

Figure 1.

A three layer head model.

For N electrodes and p dipoles:

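$$\mathbf{m} = \begin{bmatrix} m(\mathbf{r}_1) \\ \vdots \\ m(\mathbf{r}_N) \end{bmatrix} = \begin{bmatrix} \mathbf{g}(\mathbf{r}_1, \mathbf{r}_{dip_1}) & \cdots & \mathbf{g}(\mathbf{r}_1, \mathbf{r}_{dip_p}) \\ \vdots & \ddots & \vdots \\ \mathbf{g}(\mathbf{r}_N, \mathbf{r}_{dip_1}) & \cdots & \mathbf{g}(\mathbf{r}_N, \mathbf{r}_{dip_p}) \end{bmatrix} \begin{bmatrix} \mathbf{d}_1^T \\ \vdots \\ \mathbf{d}_p^T \end{bmatrix} = G(\{\mathbf{r}_j, \mathbf{r}_{dip_i}\}) \begin{bmatrix} \mathbf{d}_1^T \\ \vdots \\ \mathbf{d}_p^T \end{bmatrix} \tag{1}$$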

where i = 1, ..., p and j = 1, ..., N. Each row of the gain matrix G is often referred to as the lead-field and it describes the current flow for a given electrode through each dipole position [5].
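To make the structure of Eq. (1) concrete, the sketch below assembles a gain matrix from per-dipole lead-field rows and checks that the stacked product reproduces the superposition sum. The function `toy_leadfield` is a hypothetical stand-in (an infinite homogeneous medium kernel without physical constants), not a head model; in practice G comes from a forward solver.

```python
import numpy as np

def toy_leadfield(r_elec, r_dip):
    """Hypothetical 1 x 3 lead-field row g(r, r_dip): potential per unit dipole
    moment along x, y, z. Uses the infinite homogeneous medium kernel
    (r - r_dip) / |r - r_dip|^3 without physical constants, for illustration only."""
    diff = r_elec - r_dip
    return diff / np.linalg.norm(diff) ** 3

def gain_matrix(electrodes, dipoles):
    """Stack lead-field rows into the N x 3p gain matrix G of Eq. (1)."""
    N, p = len(electrodes), len(dipoles)
    G = np.zeros((N, 3 * p))
    for j, r in enumerate(electrodes):
        for i, r_dip in enumerate(dipoles):
            G[j, 3 * i:3 * i + 3] = toy_leadfield(r, r_dip)
    return G

rng = np.random.default_rng(0)
elec = rng.normal(size=(8, 3))
elec /= np.linalg.norm(elec, axis=1, keepdims=True)   # electrodes on a unit sphere
dips = rng.uniform(-0.5, 0.5, size=(3, 3))             # dipoles well inside the sphere
moments = rng.normal(size=(3, 3))                       # row i is d_i = (d_ix, d_iy, d_iz)

G = gain_matrix(elec, dips)
m_stacked = G @ moments.reshape(-1)                     # m = G [d_1^T; ...; d_p^T]
m_superposed = np.array([
    sum(toy_leadfield(r, r_dip) @ d for r_dip, d in zip(dips, moments))
    for r in elec
])
```

The column ordering (x, y, z of dipole 1, then dipole 2, and so on) is a convention chosen here; any fixed ordering works as long as the moment vector is stacked the same way.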

For N electrodes, p dipoles and T discrete time samples:

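$$\begin{bmatrix} m(\mathbf{r}_1, 1) & \cdots & m(\mathbf{r}_1, T) \\ \vdots & \ddots & \vdots \\ m(\mathbf{r}_N, 1) & \cdots & m(\mathbf{r}_N, T) \end{bmatrix} = G(\{\mathbf{r}_j, \mathbf{r}_{dip_i}\}) \begin{bmatrix} \mathbf{d}_1^T(1) & \cdots & \mathbf{d}_1^T(T) \\ \vdots & \ddots & \vdots \\ \mathbf{d}_p^T(1) & \cdots & \mathbf{d}_p^T(T) \end{bmatrix} \tag{2a}$$

$$M = G(\{\mathbf{r}_j, \mathbf{r}_{dip_i}\})\,D \tag{2b}$$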

where M is the matrix of data measurements at different times m(r, t) and D is the matrix of dipole moments at different time instants.

In the formulation above it was assumed that both the magnitude and orientation of the dipoles are unknown. However, based on the fact that apical dendrites producing the measured field are oriented normal to the surface [6], dipoles are often constrained to have such an orientation. In this case only the magnitude of the dipoles will vary and the formulation in (2a) can therefore be re-written as:

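$$M = \begin{bmatrix} \mathbf{g}(\mathbf{r}_1, \mathbf{r}_{dip_1})\,\mathbf{e}_1 & \cdots & \mathbf{g}(\mathbf{r}_1, \mathbf{r}_{dip_p})\,\mathbf{e}_p \\ \vdots & \ddots & \vdots \\ \mathbf{g}(\mathbf{r}_N, \mathbf{r}_{dip_1})\,\mathbf{e}_1 & \cdots & \mathbf{g}(\mathbf{r}_N, \mathbf{r}_{dip_p})\,\mathbf{e}_p \end{bmatrix} \begin{bmatrix} d_1(1) & \cdots & d_1(T) \\ \vdots & \ddots & \vdots \\ d_p(1) & \cdots & d_p(T) \end{bmatrix} \tag{3a}$$

$$M = G(\{\mathbf{r}_j, \mathbf{r}_{dip_i}, \mathbf{e}_i\})\,D \tag{3b}$$

$$M = GD \tag{3c}$$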

where $D$ is now a $p \times T$ matrix of dipole magnitudes at different time instants. Since each dipole now contributes one unknown per time sample instead of three, this formulation is less underdetermined than the previous one.
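With orientations fixed, each triple of columns of the free-orientation gain matrix collapses to a single column $\mathbf{g}(\mathbf{r}_j, \mathbf{r}_{dip_i})\mathbf{e}_i$, so G shrinks from N × 3p to N × p. A minimal NumPy sketch of that reduction, with a random matrix standing in for a real gain matrix and random unit vectors as the assumed orientations $\mathbf{e}_i$:

```python
import numpy as np

def fix_orientations(G_free, orientations):
    """Collapse an N x 3p free-orientation gain matrix to N x p using unit
    orientation vectors e_i (rows of `orientations`, shape p x 3)."""
    N, three_p = G_free.shape
    p = three_p // 3
    # Reshape to N x p x 3 so the last axis holds the x, y, z columns of dipole i,
    # then contract that axis with e_i.
    return np.einsum("npk,pk->np", G_free.reshape(N, p, 3), orientations)

rng = np.random.default_rng(1)
N, p = 6, 10
G_free = rng.normal(size=(N, 3 * p))        # placeholder for a forward-model G
e = rng.normal(size=(p, 3))
e /= np.linalg.norm(e, axis=1, keepdims=True)
mags = rng.normal(size=p)                   # dipole magnitudes d_i

G_fixed = fix_orientations(G_free, e)
# The reduced model and the full model with d_i = d_i * e_i give the same data.
m_fixed = G_fixed @ mags
m_free = G_free @ (mags[:, None] * e).reshape(-1)
```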

Generally a noise or perturbation matrix n is added to the system such that the recorded data matrix M is composed of:

$$M = GD + n \tag{4}$$

Under this notation, the inverse problem then consists of finding an estimate $\hat{D}$ of the dipole magnitude matrix given the electrode positions and scalp readings $M$, using the gain matrix $G$ calculated in the forward problem. In what follows, unless otherwise stated, $T = 1$ without loss of generality.

3 Inverse solutions

The EEG inverse problem is an ill-posed problem because for all admissible output voltages, the solution is non-unique (since p >> N) and unstable (the solution is highly sensitive to small changes in the noisy data). There are various methods to remedy the situation (see e.g. [7-9]). As regards the EEG inverse problem, there are six parameters that specify a dipole: three spatial coordinates (x, y, z) and three dipole moment components (orientation angles (θ, φ) and strength d), but these may be reduced if some constraints are placed on the source, as described below.
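Non-uniqueness follows directly from p ≫ N: G has a null space of dimension at least p - N, and adding any null-space vector to a source configuration leaves the scalp data unchanged. A small NumPy illustration with a random, hypothetical fixed-orientation G:

```python
import numpy as np

rng = np.random.default_rng(2)
N, p = 5, 40                                 # few electrodes, many candidate sources
G = rng.normal(size=(N, p))                  # placeholder gain matrix (fixed orientations)
d_true = np.zeros(p)
d_true[7] = 1.0                              # a single active source

# Right singular vectors beyond rank(G) span the null space of G.
_, s, Vt = np.linalg.svd(G)
null_basis = Vt[len(s):]                     # shape (p - N, p) for full-rank G
d_other = d_true + 3.0 * null_basis[0]       # a very different source pattern

m_true = G @ d_true
m_other = G @ d_other                        # identical measurements
```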

Various mathematical models are possible depending on the number of dipoles assumed in the model and whether one or more of dipole position(s), magnitude(s) and orientation(s) is/are kept fixed and which, if any, of these are assumed to be known. In the literature [10] one can find the following models: a single dipole with time-varying unknown position, orientation and magnitude; a fixed number of dipoles with fixed unknown positions and orientations but varying amplitudes; fixed known dipole positions and varying orientations and amplitudes; variable number of dipoles (i.e. a dipole at each grid point) but with a set of constraints. As regards dipole moment constraints, which may be necessary to limit the search space for meaningful dipole sources, Rodriguez-Rivera et al. [11] discuss four dipole models with different dipole moment constraints. These are (i) constant unknown dipole moment; (ii) fixed known dipole moment orientation and variable moment magnitude; (iii) fixed unknown dipole moment orientation, variable moment magnitude; (iv) variable dipole moment orientation and magnitude.

There are two main approaches to the inverse solution: non-parametric and parametric methods. Non-parametric optimization methods are also referred to as Distributed Source Models, Distributed Inverse Solutions (DIS) or Imaging methods. In these models several dipole sources with fixed locations and possibly fixed orientations are distributed in the whole brain volume or cortical surface. As it is assumed that sources are intracellular currents in the dendritic trunks of the cortical pyramidal neurons, which are oriented normal to the cortical surface [6], fixed orientation dipoles are generally set to be normally aligned. The amplitudes (and directions) of these dipole sources are then estimated. Since the dipole location is not estimated, the problem is a linear one. This means that in Equation (4), $\{\mathbf{r}_{dip_i}\}$ and possibly $\mathbf{e}_i$ are determined beforehand, yielding $p \gg N$, which makes the problem underdetermined. In the parametric approach, on the other hand, a few dipoles whose locations and orientations are unknown are assumed in the model. Equation (4) is solved for $D$, $\{\mathbf{r}_{dip_i}\}$ and $\mathbf{e}_i$, given $M$ and what is known of $G$. This is a non-linear problem because the parameters $\{\mathbf{r}_{dip_i}\}$ and $\mathbf{e}_i$ appear non-linearly in the equation.

These two approaches will now be discussed in more detail.

3.1 Non-parametric optimization methods

Besides the Bayesian formulation explained below, the linear inverse operators that will be described can also be derived by other approaches, such as minimization of expected error and generalized Wiener filtering. Details are given in [12]. Bayesian methods can also be used to estimate a probability distribution of solutions rather than a single 'best' solution [13].

3.1.1 The Bayesian framework

In general, this technique consists of finding an estimator $\hat{x}$ of $x$ that maximizes the posterior distribution of $x$ given the measurements $y$ [4,12-15]. This estimator can be written as

$$\hat{x} = \arg\max_x\, p(x \mid y)$$

where $p(x \mid y)$ denotes the conditional probability density of $x$ given the measurements $y$. This estimator is the most probable one with regard to the measurements and a priori considerations.

According to Bayes' law,

$$p(x \mid y) = \frac{p(y \mid x)\, p(x)}{p(y)}$$

The Gaussian or Normal density function

Assuming the posterior density to have a Gaussian distribution, we find

$$p(x \mid y) = \frac{1}{z} \exp\left(-F_\alpha(x)\right)$$

where $z$ is a normalization constant called the partition function, $F_\alpha(x) = U_1(x) + \alpha L(x)$, where $U_1(x)$ and $L(x)$ are energy functions associated with $p(y \mid x)$ and $p(x)$ respectively, and $\alpha$ (a positive scalar) is a tuning or regularization parameter. Then

$$\hat{x} = \arg\min_x F_\alpha(x)$$

If measurement noise is assumed to be white, Gaussian and zero-mean, one can write U1(x) as

$$U_1(x) = \|Kx - y\|^2$$

where $K$ is a compact linear operator [7,16] (representing the forward solution) and $\|\cdot\|$ is the usual $L_2$ norm. $L(x)$ may be written as $U_s(x) + U_t(x)$, where $U_s(x)$ introduces spatial (anatomical) priors and $U_t(x)$ temporal ones [4,15]. Combining the data attachment term with the prior term,

$$\hat{x} = \arg\min_x \left( \|Kx - y\|^2 + \alpha \left( U_s(x) + U_t(x) \right) \right)$$

This equation reflects a trade-off between fidelity to the data and spatial/temporal smoothness, depending on $\alpha$.

In the above, $p(y \mid x) \propto \exp(-X^T X)$ where $X = Kx - y$. More generally, $p(y \mid x) \propto \exp(-\mathrm{Tr}(X^T \sigma^{-1} X))$, where $\sigma$ is the data (noise) covariance matrix, $\sigma^{-1}$ is its inverse and $\mathrm{Tr}$ denotes the trace of a matrix.
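The trace form of the Gaussian negative log-likelihood is an ordinary squared Frobenius norm once the residual is whitened with the noise covariance. The sketch below checks this numerically, writing `sigma` for the (assumed known) noise covariance matrix and whitening with its Cholesky factor; all values are synthetic.

```python
import numpy as np

rng = np.random.default_rng(3)
N, T = 4, 6
A = rng.normal(size=(N, N))
sigma = A @ A.T + N * np.eye(N)              # a symmetric positive-definite covariance
X = rng.normal(size=(N, T))                  # residual K x - y, one column per sample

# Negative log-likelihood up to a constant: Tr(X^T sigma^{-1} X).
nll_trace = np.trace(X.T @ np.linalg.solve(sigma, X))

# Whitening: with sigma = L L^T, ||L^{-1} X||_F^2 equals the same quantity.
L = np.linalg.cholesky(sigma)
X_white = np.linalg.solve(L, X)
nll_white = np.sum(X_white ** 2)
```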

The general Normal density function

Even more generally, $p(y \mid x) \propto \exp(-\mathrm{Tr}((X - \mu)^T \sigma^{-1} (X - \mu)))$, where $\mu$ is the mean value of $X$. Suppose $R$ is the variance-covariance matrix when a Gaussian noise component is assumed and $Y$ is the matrix corresponding to the measurements $y$. The $R$-norm is defined as follows:

$$\|Y\|_R^2 = \mathrm{Tr}\left(Y^T R^{-1} Y\right)$$

Non-Gaussian priors

Non-Gaussian priors include entropy metrics and $L_p$ norms with $p < 2$, i.e. $L(x) = \|x\|_p$.

Entropy is a probabilistic concept appearing in information theory and statistical mechanics. Assuming $x \in \mathbb{R}^n$ consists of positive entries $x_i > 0$, $i = 1, \ldots, n$, the entropy is defined as

$$\mathcal{E}(x) = -\sum_{i=1}^{n} x_i \log\frac{x_i}{x_{0,i}}$$

where $x_0 > 0$ is a given constant. The information contained in $x$ relative to $x_0$ is the negative of the entropy. If it is required to find $x$ such that only the data $Kx = y$ is used, the information subject to the data needs to be minimized, that is, the entropy has to be maximized. The mathematical justification for the choice $L(x) = -\mathcal{E}(x)$ is that it yields the solution which is most 'objective' with respect to missing information. The maximum entropy method has been used with success in image restoration problems where prominent features are to be determined from noisy data.

As regards $L_p$ norms with $p < 2$, we start by defining these norms. For a matrix $A$,

$$\|A\|_p = \left( \sum_{i,j} |a_{ij}|^p \right)^{1/p}$$

where $a_{ij}$ are the elements of $A$. The defining feature of these prior models is that they are concentrated on images with low average amplitude with few outliers standing out. Thus, they are suitable when the prior information is that the image contains small and well localized objects as, for example, in the localization of cortical activity by electric measurements.

As $p$ is reduced, the solutions become increasingly sparse. When $p = 1$ [17], the problem can be modified slightly to be recast as a linear program, which can be solved by a simplex method. In this case it is the sum of the absolute values of the solution components that is minimized. Although the solutions obtained with this norm are sparser than those obtained with the $L_2$ norm, the orientation results were found to be less clear [17]. Another difference is that while the localization results improve if the number of electrodes is increased in the case of the $L_2$ approach, this is not the case with the $L_1$ approach, which requires an increase in the number of grid points for correct localization. A third difference is that while both approaches perform badly in the presence of noisy data, the $L_1$ approach performs even worse than the $L_2$ approach. For $p < 1$ it is possible to show that there exists a value $0 < p < 1$ for which the solution is maximally sparse. The non-quadratic formulation of the priors may be linked to previous works using Markov Random Fields [18,19]. Experiments in [20] show that the $L_1$ approach demands more computational effort in comparison with $L_2$ approaches. It also produced some spurious sources, and the source distribution of the solution was very different from the simulated distribution.
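The $p = 1$ case can be posed as a linear program by splitting $x = u - v$ with $u, v \ge 0$ and minimizing $\sum(u + v)$ subject to $K(u - v) = y$. The sketch below, assuming SciPy's `linprog` with the HiGHS dual simplex (`method="highs-ds"`), compares that solution with the $L_2$ minimum-norm solution on a random, hypothetical K. The vertex solution returned by a simplex method has at most N non-zero entries, while the $L_2$ solution is generically dense.

```python
import numpy as np
from scipy.optimize import linprog

rng = np.random.default_rng(4)
N, n = 6, 30
K = rng.normal(size=(N, n))
x_true = np.zeros(n)
x_true[[3, 17]] = [1.0, -0.5]                # two focal sources
y = K @ x_true                               # noise-free data

# L1: minimize sum(u + v) subject to K(u - v) = y, u >= 0, v >= 0.
c = np.ones(2 * n)
A_eq = np.hstack([K, -K])
res = linprog(c, A_eq=A_eq, b_eq=y, bounds=(0, None), method="highs-ds")
x_l1 = res.x[:n] - res.x[n:]

# L2: minimum-norm solution x = K^T (K K^T)^{-1} y.
x_l2 = K.T @ np.linalg.solve(K @ K.T, y)

def nnz(x, tol=1e-8):
    """Number of entries whose magnitude exceeds tol."""
    return int(np.sum(np.abs(x) > tol))
```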

Regularization methods

Regularization is the approximation of an ill-posed problem by a family of neighbouring well-posed problems. There are various regularization methods in the literature, depending on the choice of $L(x)$. The aim is to find an approximation $x^\delta$ to the best-approximate solution of $Kx = y$ in the situation where the 'noiseless data' $y$ are not known precisely and only a noisy representation $y^\delta$ with $\|y^\delta - y\| \le \delta$ is available. Typically, $y^\delta$ would be the real (noisy) signal. In general, an estimate $\hat{x}_\alpha$ is found which minimizes

$$F_\alpha(x) = \|Kx - y^\delta\|^2 + \alpha L(x)$$

In Tikhonov regularization, $L(x) = \|x\|^2$, so that an estimate $\hat{x}_\alpha$ is found which minimizes

$$F_\alpha(x) = \|Kx - y^\delta\|^2 + \alpha \|x\|^2$$

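It can be shown (see Appendix) that

$$\hat{x}_\alpha = (K^*K + \alpha I)^{-1} K^* y^\delta$$

where $K^*$ is the adjoint of $K$. Since $(K^*K + \alpha I)^{-1}K^* = K^*(KK^* + \alpha I)^{-1}$ (proof in Appendix),

$$\hat{x}_\alpha = K^* (KK^* + \alpha I)^{-1} y^\delta$$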
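Both closed forms of the Tikhonov estimate can be checked numerically. In the sketch below a random matrix stands in for K (so the adjoint is the transpose); it confirms that the two expressions agree and that the result makes the gradient of $F_\alpha$ vanish. The N × N form is the cheaper one when the unknowns outnumber the measurements.

```python
import numpy as np

def tikhonov_primal(K, y, alpha):
    """x = (K^T K + alpha I)^{-1} K^T y  (solves an n x n system)."""
    n = K.shape[1]
    return np.linalg.solve(K.T @ K + alpha * np.eye(n), K.T @ y)

def tikhonov_dual(K, y, alpha):
    """x = K^T (K K^T + alpha I)^{-1} y  (solves an N x N system)."""
    N = K.shape[0]
    return K.T @ np.linalg.solve(K @ K.T + alpha * np.eye(N), y)

rng = np.random.default_rng(5)
K = rng.normal(size=(8, 50))                 # N = 8 measurements, n = 50 unknowns
y = rng.normal(size=8)
alpha = 0.1
x_p = tikhonov_primal(K, y, alpha)
x_d = tikhonov_dual(K, y, alpha)
```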

Another choice of L(x) is

$$L(x) = \|Ax\|^2 \tag{5}$$

where A is a linear operator. The minimum is obtained when

$$\hat{x}_\alpha = (K^*K + \alpha A^*A)^{-1} K^* y^\delta \tag{6}$$

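In particular, if $A = \nabla$, where $\nabla$ is the gradient operator, then $\hat{x}_\alpha = (K^*K + \alpha \nabla^T \nabla)^{-1} K^* y^\delta$. If $A = \Delta B$, where $\Delta$ is the Laplacian operator, then $\hat{x}_\alpha = (K^*K + \alpha B^* \Delta^T \Delta B)^{-1} K^* y^\delta$.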
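For $L(x) = \|Ax\|^2$ the regularized estimate is the point where the gradient of $F_\alpha$ vanishes, $K^*(Kx - y^\delta) + \alpha A^*Ax = 0$. A minimal sketch, assuming a one-dimensional source line and a first-difference matrix as a discrete stand-in for $\nabla$ (K and the data are random placeholders):

```python
import numpy as np

def first_difference(n):
    """(n-1) x n forward-difference matrix, a discrete 1-D gradient."""
    D = np.zeros((n - 1, n))
    idx = np.arange(n - 1)
    D[idx, idx] = -1.0
    D[idx, idx + 1] = 1.0
    return D

def general_tikhonov(K, y, A, alpha):
    """x = (K^T K + alpha A^T A)^{-1} K^T y."""
    return np.linalg.solve(K.T @ K + alpha * A.T @ A, K.T @ y)

rng = np.random.default_rng(6)
N, n = 10, 40
K = rng.normal(size=(N, n))
y = rng.normal(size=N)
A = first_difference(n)
alpha = 0.5
x_hat = general_tikhonov(K, y, A, alpha)
grad = K.T @ (K @ x_hat - y) + alpha * A.T @ (A @ x_hat)   # should be ~0
```

The system matrix is invertible here because the null space of the difference operator (constant vectors) is not annihilated by K.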

The regularization parameter $\alpha$ must strike a good compromise between the residual norm $\|Kx - y^\delta\|$ and the norm of the solution $\|Ax\|$. In other words, it must balance the perturbation error in $y$ against the regularization error in the regularized solution.

Various methods [7-9] exist to estimate the optimal regularization parameter and these fall mainly in two categories:

1. Those based on a good estimate of ||ϵ|| where ϵ is the noise in the measured vector y δ .

2. Those that do not require an estimate of ||ϵ||.

The discrepancy principle is the main method based on ||ϵ||. In effect it chooses α such that the residual norm for the regularized solution satisfies the following condition:

$$\|K\hat{x}_\alpha - y^\delta\| = \|\epsilon\|$$

As expected, failure to obtain a good estimate of ϵ will yield a value for α which is not optimal for the expected solution.
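Because the Tikhonov residual $\|K\hat{x}_\alpha - y^\delta\|$ increases monotonically with $\alpha$, the discrepancy principle can be implemented as a one-dimensional root search. A sketch using bisection on $\log_{10}\alpha$, assuming the noise norm $\|\epsilon\|$ is known (here it is, because the data are synthetic) and that it lies between the residuals at the ends of the search interval:

```python
import numpy as np

def tikhonov(K, y, alpha):
    """Tikhonov solution in the N x N form K^T (K K^T + alpha I)^{-1} y."""
    N = K.shape[0]
    return K.T @ np.linalg.solve(K @ K.T + alpha * np.eye(N), y)

def discrepancy_alpha(K, y_delta, noise_norm, lo=1e-8, hi=1e4, iters=100):
    """Find alpha with ||K x_alpha - y_delta|| = noise_norm by bisection in log10(alpha)."""
    def resid(a):
        return np.linalg.norm(K @ tikhonov(K, y_delta, a) - y_delta)
    a_lo, a_hi = np.log10(lo), np.log10(hi)
    for _ in range(iters):
        mid = 0.5 * (a_lo + a_hi)
        if resid(10 ** mid) < noise_norm:
            a_lo = mid          # residual too small: more regularization needed
        else:
            a_hi = mid
    return 10 ** (0.5 * (a_lo + a_hi))

rng = np.random.default_rng(7)
N, n = 20, 60
K = rng.normal(size=(N, n))
x_true = np.zeros(n)
x_true[10] = 1.0
eps = 0.05 * rng.normal(size=N)
y_delta = K @ x_true + eps
alpha = discrepancy_alpha(K, y_delta, np.linalg.norm(eps))
final_resid = np.linalg.norm(K @ tikhonov(K, y_delta, alpha) - y_delta)
```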

Various other methods of estimating the regularization parameter exist and these fall mainly within the second category. These include, amongst others, the

1. L-curve method

2. Generalized Cross Validation (GCV) method

3. Composite Residual and Smoothing Operator (CRESO)

4. Minimal Product method

5. Zero crossing

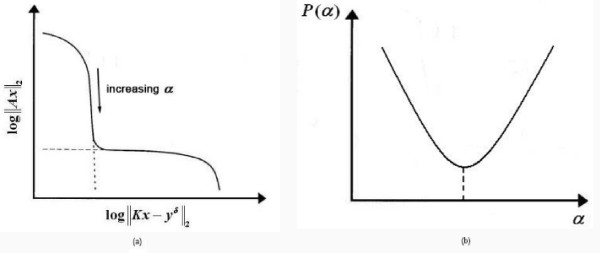

The L-curve method [21-23] provides a log-log plot of the semi-norm $\|Ax\|$ of the regularized solution against the corresponding residual norm $\|Kx - y^\delta\|$ (Figure 2a). The resulting curve has the shape of an 'L', hence its name, and it clearly displays the compromise between minimizing these two quantities. Thus, the best choice of $\alpha$ is the one corresponding to the corner of the curve. When the regularization method is continuous, as is the case in Tikhonov regularization, the L-curve is a continuous curve. When, however, the regularization method is discrete, the L-curve is also discrete and is then typically represented by a spline curve in order to find its corner.

Figure 2.

Methods to estimate the regularization parameter. (a) L-curve (b) Minimal Product Curve.
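One way to locate the L-curve corner numerically is to sample $\alpha$ on a logarithmic grid, compute the two norms, and pick the point of maximum curvature of the log-log curve. This maximum-curvature rule is one common heuristic, assumed here for illustration; the sketch uses Tikhonov regularization (A = I), finite differences for the curvature, and a synthetic K with decaying singular values.

```python
import numpy as np

def l_curve(K, y, alphas):
    """Log residual norm and log solution norm of Tikhonov solutions (A = I)."""
    N = K.shape[0]
    rho, eta = [], []
    for a in alphas:
        x = K.T @ np.linalg.solve(K @ K.T + a * np.eye(N), y)
        rho.append(np.log(np.linalg.norm(K @ x - y)))
        eta.append(np.log(np.linalg.norm(x)))
    return np.array(rho), np.array(eta)

def l_curve_corner(K, y, alphas):
    """Return the alpha of maximum signed curvature of the (rho, eta) curve."""
    rho, eta = l_curve(K, y, alphas)
    t = np.log(alphas)
    dr, de = np.gradient(rho, t), np.gradient(eta, t)
    ddr, dde = np.gradient(dr, t), np.gradient(de, t)
    kappa = (dr * dde - ddr * de) / (dr ** 2 + de ** 2) ** 1.5
    return alphas[np.argmax(kappa)], rho, eta

rng = np.random.default_rng(8)
# Synthetic ill-conditioned K with singular values decaying from 1 to 1e-3.
U, _ = np.linalg.qr(rng.normal(size=(20, 20)))
V, _ = np.linalg.qr(rng.normal(size=(60, 20)))
K = U @ np.diag(np.logspace(0, -3, 20)) @ V.T
y = K @ rng.normal(size=60) + 1e-3 * rng.normal(size=20)
alphas = np.logspace(-8, 1, 60)
alpha_corner, rho, eta = l_curve_corner(K, y, alphas)
```

Along increasing $\alpha$ the residual grows and the solution norm shrinks, so the corner is where the curve turns most sharply from its vertical to its horizontal branch.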

Similar to the L-curve method, the Minimal Product method [24] aims at minimizing the upper bound of the solution and the residual simultaneously (Figure 2b). In this case the optimum regularization parameter is the one corresponding to the minimum value of the function $P$, which gives the product of the norm of the solution and the norm of the residual. This approach can be applied to both continuous and discrete regularization.

$$P(\alpha) = \|Ax(\alpha)\| \cdot \|Kx(\alpha) - y^\delta\|$$
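On a sampled grid of $\alpha$ values the minimal product rule reduces to an argmin. A sketch for Tikhonov regularization (A = I); the grid and the synthetic data are assumptions for illustration:

```python
import numpy as np

def minimal_product_alpha(K, y, alphas):
    """Return the alpha minimizing P(alpha) = ||x_alpha|| * ||K x_alpha - y||, and P."""
    N = K.shape[0]
    P = []
    for a in alphas:
        x = K.T @ np.linalg.solve(K @ K.T + a * np.eye(N), y)
        P.append(np.linalg.norm(x) * np.linalg.norm(K @ x - y))
    P = np.array(P)
    return alphas[np.argmin(P)], P

rng = np.random.default_rng(9)
K = rng.normal(size=(15, 45))
y = K @ rng.normal(size=45) + 0.1 * rng.normal(size=15)
alphas = np.logspace(-6, 3, 60)
alpha_mp, P = minimal_product_alpha(K, y, alphas)
```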

Another well-known method for choosing the regularization parameter is Generalized Cross Validation (GCV) [21,25], which is based on the assumption that $y$ is affected by normally distributed noise. The optimum $\alpha$ for GCV is the one corresponding to the minimum value of the function $G$:

$$G(\alpha) = \frac{\|K\hat{x}_\alpha - y^\delta\|^2}{\left[\mathrm{Tr}(I - KT)\right]^2}$$

where $T$ is the (regularized) inverse operator of matrix $K$, so that $\hat{x}_\alpha = T y^\delta$. Hence the numerator measures the discrepancy between the estimated and measured signal $y^\delta$, while the denominator measures the discrepancy of matrix $KT$ from the identity matrix.
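For Tikhonov regularization with A = I, $T = (K^TK + \alpha I)^{-1}K^T$, and the GCV function $\|K\hat{x}_\alpha - y^\delta\|^2 / [\mathrm{Tr}(I - KT)]^2$ can be evaluated cheaply from the SVD $K = USV^T$, since $\mathrm{Tr}(KT) = \sum_i s_i^2/(s_i^2 + \alpha)$. A sketch under those assumptions, cross-checked against the direct formula for one $\alpha$:

```python
import numpy as np

def gcv_curve(K, y, alphas):
    """GCV(alpha) = ||K x_alpha - y||^2 / (trace(I - K T_alpha))^2 for Tikhonov (A = I)."""
    U, s, Vt = np.linalg.svd(K, full_matrices=False)
    beta = U.T @ y                             # data in the left singular basis
    N = K.shape[0]
    # Component of y outside the range of K (zero when K has full row rank).
    out_of_range = np.linalg.norm(y - U @ beta) ** 2
    g = []
    for a in alphas:
        f = s ** 2 / (s ** 2 + a)              # Tikhonov filter factors
        resid2 = np.sum(((1 - f) * beta) ** 2) + out_of_range
        g.append(resid2 / (N - np.sum(f)) ** 2)
    return np.array(g)

rng = np.random.default_rng(10)
K = rng.normal(size=(12, 30))
y = K @ rng.normal(size=30) + 0.2 * rng.normal(size=12)
alphas = np.logspace(-4, 3, 50)
g = gcv_curve(K, y, alphas)
alpha_gcv = alphas[np.argmin(g)]

# Cross-check one value against the direct formula with T = (K^T K + a I)^{-1} K^T.
a = alphas[20]
T = np.linalg.solve(K.T @ K + a * np.eye(30), K.T)
direct = np.linalg.norm(K @ T @ y - y) ** 2 / np.trace(np.eye(12) - K @ T) ** 2
```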

The regularization parameter as estimated by the Composite Residual and Smoothing Operator (CRESO) [23,24] is that which maximizes the derivative of the difference between the residual norm and the semi-norm i.e. the derivative of B(α):

$$B(\alpha) = \alpha^2 \|Ax(\alpha)\|^2 - \|Kx(\alpha) - y^\delta\|^2 \tag{7}$$

Unlike the other described methods for finding the regularization parameter, this method works only for continuous regularization such as Tikhonov.

The final approach to be discussed here is the zero-crossing method [23] which finds the optimum regularization parameter by solving B(α) = 0 where B is as defined in Equation (7). Thus the zero-crossing is basically another way of obtaining the L-curve corner.
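Both the CRESO and zero-crossing rules can be evaluated on a sampled $\alpha$ grid. The sketch below follows Equation (7) literally for Tikhonov regularization (A = I), takes the CRESO choice as the grid point where a finite-difference estimate of $dB/d\alpha$ is largest, and reports a zero crossing of B only if a sign change actually occurs on the grid. The grid, the synthetic data and the use of `np.gradient` are assumptions for illustration, not a reference implementation.

```python
import numpy as np

def creso_and_zero_crossing(K, y, alphas):
    """Tikhonov (A = I): evaluate B(alpha) of Eq. (7) on a grid of alphas."""
    N = K.shape[0]
    B = []
    for a in alphas:
        x = K.T @ np.linalg.solve(K @ K.T + a * np.eye(N), y)
        B.append(a ** 2 * np.linalg.norm(x) ** 2 - np.linalg.norm(K @ x - y) ** 2)
    B = np.array(B)
    dB = np.gradient(B, alphas)               # finite-difference dB/dalpha
    alpha_creso = alphas[np.argmax(dB)]       # CRESO: largest derivative on the grid
    # Zero crossing: first grid interval where B changes sign (None if none).
    change = np.nonzero(np.sign(B[:-1]) != np.sign(B[1:]))[0]
    alpha_zero = alphas[change[0]] if change.size else None
    return alpha_creso, alpha_zero, B

rng = np.random.default_rng(11)
K = 2.0 * rng.normal(size=(10, 30))
y = K @ rng.normal(size=30) + 0.5 * rng.normal(size=10)
alphas = np.logspace(-3, 3, 80)
alpha_creso, alpha_zero, B = creso_and_zero_crossing(K, y, alphas)
```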

One must note that the above estimators for $\hat{x}$ are the same as those that result from the minimization of $\|Ax\|$ subject to $Kx = y$. In this case $x = K^{(*)}(KK^{(*)})^{-1}y$, where $K^{(*)} = (A^*A)^{-1}K^*$ is the adjoint of $K$ with respect to the inner product $\langle\langle x, y\rangle\rangle = \langle Ax, Ay\rangle$. This leads to the estimator

$$\hat{x} = (A^*A)^{-1}K^*\left(K(A^*A)^{-1}K^*\right)^{-1}y$$

which, if regularized, can be shown to be equivalent to (6).
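The closed form for minimizing $\|Ax\|$ subject to $Kx = y$ can be checked directly: the estimate should satisfy $Kx = y$ exactly and should have a smaller semi-norm $\|Ax\|$ than any other exact solution, for instance the plain minimum-norm one $K^T(KK^T)^{-1}y$. A sketch with a random, invertible A (an assumption; for a singular A such as a pure gradient, $(A^*A)^{-1}$ does not exist):

```python
import numpy as np

rng = np.random.default_rng(12)
N, n = 6, 25
K = rng.normal(size=(N, n))
y = rng.normal(size=N)
A = np.eye(n) + 0.1 * rng.normal(size=(n, n))   # invertible weighting operator (assumed)

W = np.linalg.inv(A.T @ A)                        # (A^* A)^{-1}
x_a = W @ K.T @ np.linalg.solve(K @ W @ K.T, y)   # argmin ||Ax|| subject to Kx = y
x_mn = K.T @ np.linalg.solve(K @ K.T, y)          # plain minimum-norm solution

# Another exact solution: add a random null-space component of K.
_, _, Vt = np.linalg.svd(K)
x_other = x_a + Vt[N:].T @ rng.normal(size=n - N)
```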

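As regards the EEG inverse problem, using the notation introduced in the description of the forward problem in Section 2, the Bayesian methods find an estimate $\hat{D}$ of $D$ such that

$$\hat{D} = \arg\min_D \left( \|M - GD\|^2 + \alpha L(D) \right)$$

where $L(D)$ is the prior term, for example $L(D) = \|AD\|^2$ as in Equation (5).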

As an example, in [26] one finds that the linear operator A in Equation (5) is taken to be a matrix A whose rows represent the averages (linear combinations) of the true sources. One choice of the matrix A is given by

In the above equation, the subscripts $p, q$ are used to indicate grid points in the volume representing the brain and the subscripts $k, m$ are used to represent the Cartesian coordinates x, y and z (i.e. they take values 1, 2, 3); $d_{pq}$ represents the Euclidean distance between the $p$th and $q$th grid points. The coefficients $w_j$ can be used to describe a column scaling by a diagonal matrix, while $\sigma_i$ controls the spatial resolution. In particular, if $\sigma_i \to 0$ and $w_j = 1$, the minimum norm solution described below is obtained.

In the next subsections we review some of the most common choices for L(D).

Minimum norm estimates (MNE)

Minimum norm estimates [5,27,28] are based on a search for the solution with minimum power and correspond to Tikhonov regularization. This kind of estimate is well suited to distributed source models where the dipole activity is likely to extend over some areas of the cortical surface.

$$L(D) = \|D\|^2$$

$$\hat{D} = (G^TG + \alpha I_{3p})^{-1} G^T M$$

or

$$\hat{D} = G^T (GG^T + \alpha I_N)^{-1} M$$

The first equation is more suitable when $N > p$, while the second is more suitable when $p > N$. If we let $T_{MNE}$ be the inverse operator $G^T(GG^T + \alpha I_N)^{-1}$, then $T_{MNE}G$ is called the resolution matrix, and this would ideally be the identity matrix. It is claimed [5,27] that MNEs produce very poor estimation of the true source locations with both realistic and spherical head models.
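A minimal sketch of the MNE inverse operator $T_{MNE} = G^T(GG^T + \alpha I_N)^{-1}$ and its resolution matrix, with a random stand-in for the N × 3p gain matrix G. Because $T_{MNE}G$ has rank at most N, it cannot equal the 3p × 3p identity when 3p > N; its trace, the sum of the filter factors $s^2/(s^2 + \alpha)$ over the singular values s of G, stays below N.

```python
import numpy as np

rng = np.random.default_rng(13)
N, p = 16, 50
G = rng.normal(size=(N, 3 * p))              # placeholder gain matrix
M = rng.normal(size=(N, 1))                  # one time sample
alpha = 1.0

T_mne = G.T @ np.linalg.inv(G @ G.T + alpha * np.eye(N))   # inverse operator
D_hat = T_mne @ M                                           # N x N form
D_hat_first = np.linalg.solve(G.T @ G + alpha * np.eye(3 * p), G.T @ M)  # 3p x 3p form

R = T_mne @ G                                # resolution matrix (ideally identity)
s = np.linalg.svd(G, compute_uv=False)
```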

A more general minimum-norm inverse solution assumes that both the noise vector $n$ and the dipole strengths $D$ are normally distributed with zero mean; their covariance matrices are denoted by $C$ and $R$ respectively and, in the simplest case, are proportional to the identity matrix. The inverse solution is given in [14]:

$$\hat{D} = RG^T\left(GRG^T + C\right)^{-1} M$$

$R_{ij}$ can also be taken to be equal to $\sigma_i \sigma_j \mathrm{Corr}(i, j)$, where $\sigma_i^2$ is the variance of the strength of the $i$th dipole and $\mathrm{Corr}(i, j)$ is the correlation between the strengths of the $i$th and $j$th dipoles. Thus any a priori information about correlation between the dipole strengths at different locations can be used as a constraint. $R$ can also be constructed from a function of $\zeta_i$ that is large when the measure $\zeta_i$ of projection onto the noise subspace is small. The matrix $C$ can be taken as $\sigma^2 I$ if it is assumed that the sensor noise is additive and white with constant variance $\sigma^2$. $R$ can also be constructed in such a way that it is equal to $UU^T$, where $U$ is an orthonormal set of arbitrary basis vectors [12]. The new inverse operator using these arbitrary basis functions is the original forward solution projected onto the new basis functions.
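Assuming the usual form of this estimator, $\hat{D} = RG^T(GRG^T + C)^{-1}M$, the sketch below shows that with $R = I$ and $C = \sigma^2 I$ it reduces to the MNE with $\alpha = \sigma^2$, and how a correlated source prior $R_{ij} = \sigma_i\sigma_j\mathrm{Corr}(i, j)$ enters. The exponential distance-decay correlation on a line of sources is purely a hypothetical choice; G and M are random placeholders.

```python
import numpy as np

def general_mn(G, M, R, C):
    """D = R G^T (G R G^T + C)^{-1} M for source covariance R and noise covariance C."""
    return R @ G.T @ np.linalg.solve(G @ R @ G.T + C, M)

rng = np.random.default_rng(14)
N, p = 12, 40
G = rng.normal(size=(N, p))                  # fixed-orientation placeholder gain matrix
M = rng.normal(size=(N, 1))
noise_var = 0.5

# R = I, C = sigma^2 I reproduces the MNE with alpha = sigma^2.
D_general = general_mn(G, M, np.eye(p), noise_var * np.eye(N))
D_mne = G.T @ np.linalg.solve(G @ G.T + noise_var * np.eye(N), M)

# Correlated prior: R_ij = sigma_i sigma_j Corr(i, j) for hypothetical sources on a line.
pos = np.linspace(0.0, 1.0, p)
corr = np.exp(-np.abs(pos[:, None] - pos[None, :]) / 0.1)
sig = np.ones(p)
R = np.outer(sig, sig) * corr
D_smooth = general_mn(G, M, R, noise_var * np.eye(N))
```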

Weighted minimum norm estimates (WMNE)

The Weighted Minimum Norm algorithm compensates for the tendency of MNEs to favour weak and surface sources. This is done by introducing a 3p × 3p weighting matrix W:

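$$\hat{D} = (G^TG + \alpha W^TW)^{-1} G^T M \tag{8}$$

or

$$\hat{D} = (W^TW)^{-1} G^T \left(G (W^TW)^{-1} G^T + \alpha I_N\right)^{-1} M$$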

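$W$ can have different forms, but the simplest one is based on the norm of the columns of the matrix $G$: $W = \Omega \otimes I_3$, where $\otimes$ denotes the Kronecker product and $\Omega$ is a diagonal $p \times p$ matrix with $\Omega_{\beta\beta} = \sqrt{\sum_{j=1}^{N} \mathbf{g}(\mathbf{r}_j, \mathbf{r}_{dip_\beta})\, \mathbf{g}(\mathbf{r}_j, \mathbf{r}_{dip_\beta})^T}$, for $\beta = 1, \ldots, p$.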
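A sketch of the column-norm weighting: $\Omega_{\beta\beta}$ is taken as the norm of the three columns of G belonging to dipole β, as the text describes, $W = \Omega \otimes I_3$, and the two equivalent weighted forms $(G^TG + \alpha W^TW)^{-1}G^TM$ and $(W^TW)^{-1}G^T(G(W^TW)^{-1}G^T + \alpha I_N)^{-1}M$ are compared. G is a random placeholder whose columns are scaled so that some dipoles have much weaker lead fields, mimicking deep sources.

```python
import numpy as np

rng = np.random.default_rng(15)
N, p = 10, 30
depth_gain = np.linspace(1.0, 0.05, p)      # hypothetical: later dipoles are "deeper"
G = rng.normal(size=(N, 3 * p)) * np.repeat(depth_gain, 3)
M = rng.normal(size=(N, 1))
alpha = 0.1

# Omega_bb = norm of the three columns of G that belong to dipole b.
omega = np.linalg.norm(G.reshape(N, p, 3), axis=(0, 2))
W = np.kron(np.diag(omega), np.eye(3))       # W = Omega (x) I_3, size 3p x 3p
WtW = W.T @ W

D1 = np.linalg.solve(G.T @ G + alpha * WtW, G.T @ M)
WtW_inv = np.linalg.inv(WtW)
D2 = WtW_inv @ G.T @ np.linalg.solve(G @ WtW_inv @ G.T + alpha * np.eye(N), M)
```

Penalizing strong-gain (superficial) dipoles more heavily is what counteracts the MNE's bias towards surface sources.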

MNE with FOCUSS (Focal underdetermined system solution)

This is a recursive procedure of weighted minimum norm estimations, developed to give some focal resolution to linear estimators on distributed source models [5,27,29,30]. Weighting of the columns of $G$ is based on the magnitudes of the sources from the previous iteration. The Weighted Minimum Norm compensates for the lower gains of deeper sources by using lead-field normalization.

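$$\hat{D}_i = W_i W_i^T G^T \left(G W_i W_i^T G^T + \alpha I_N\right)^{-1} M \tag{9}$$

where $i$ is the index of the iteration and $W_i$ is a diagonal matrix computed using

$$W_i = B\, \mathrm{diag}\left(\hat{D}_{i-1}(1), \hat{D}_{i-1}(2), \ldots, \hat{D}_{i-1}(p)\right) \tag{10}$$

$B = \mathrm{diag}\left(1/\|G(:, j)\|\right)$, $j \in [1, 2, \ldots, p]$, is a diagonal matrix for deeper source compensation, where $G(:, j)$ is the $j$th column of $G$. The algorithm is initialized with the minimum norm solution $\hat{D}_0 = \hat{D}_{MNE}$, that is, $W_1 = B\,\mathrm{diag}(\hat{D}_{MNE}(n))$, where $\hat{D}_{MNE}(n)$ represents the $n$th element of the vector $\hat{D}_{MNE}$. If continued long enough, FOCUSS converges to a set of concentrated solutions equal in number to the number of electrodes.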

The localization accuracy is claimed to be impressively improved in comparison to MNE. However, localization of deeper sources cannot be properly estimated. In addition to Minimum Norm, FOCUSS has also been used in conjunction with LORETA [31] as discussed below.
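A minimal FOCUSS-style loop for fixed-orientation sources, assuming the re-weighting $W_i = B\,\mathrm{diag}(\hat{D}_{i-1})$ with $B = \mathrm{diag}(1/\|G(:, j)\|)$ and the regularized weighted minimum-norm update $\hat{D}_i = W_iW_i^TG^T(GW_iW_i^TG^T + \alpha I_N)^{-1}M$. These choices, the fixed iteration count and the synthetic data are illustrative assumptions, not the exact settings of [5,27,29,30].

```python
import numpy as np

def focuss(G, M, alpha=1e-3, n_iter=20):
    """FOCUSS-style re-weighted minimum norm for fixed orientations.
    G: N x p gain matrix, M: length-N data vector."""
    N, p = G.shape
    B = np.diag(1.0 / np.linalg.norm(G, axis=0))   # lead-field (depth) compensation
    # Initial estimate: plain minimum norm.
    D = G.T @ np.linalg.solve(G @ G.T + alpha * np.eye(N), M)
    history = [D]
    for _ in range(n_iter):
        W = B @ np.diag(D)                           # weights from the previous estimate
        WWt = W @ W.T
        D = WWt @ G.T @ np.linalg.solve(G @ WWt @ G.T + alpha * np.eye(N), M)
        history.append(D)
    return D, history

rng = np.random.default_rng(16)
N, p = 12, 60
G = rng.normal(size=(N, p))                  # placeholder gain matrix
d_true = np.zeros(p)
d_true[[5, 40]] = [1.0, 0.7]
M = G @ d_true
D_final, hist = focuss(G, M)
```

Entries that become small receive small weights in the next iteration, which is what drives the estimate towards a few concentrated sources.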

Low resolution electrical tomography (LORETA)

LORETA [5,27] combines lead-field normalization with the Laplacian operator and thus gives a depth-compensated inverse solution under the constraint of smoothly distributed sources. It is based on maximizing the smoothness of the solution. It normalizes the columns of $G$ to give all sources (close to the surface and deeper ones) the same opportunity of being reconstructed. This is better than minimum-norm methods, in which deeper sources cannot be recovered because dipoles located at the surface of the source space with smaller magnitudes are privileged. In LORETA, sources are distributed in the whole inner head volume. In this case, $L(D) = \|\Delta B D\|^2$ and $B = \Omega \otimes I_3$ is a diagonal matrix for the column normalization of $G$:

$$\hat{D} = (G^TG + \alpha B\Delta^T\Delta B)^{-1} G^T M$$

or

$$\hat{D} = (B\Delta^T\Delta B)^{-1} G^T \left(G (B\Delta^T\Delta B)^{-1} G^T + \alpha I_N\right)^{-1} M$$

Experiments using LORETA [27] showed that some spurious activity was likely to appear and that this technique was not well suited for focal source estimation.
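A minimal LORETA-style sketch on a hypothetical one-dimensional source line with fixed orientations, so that the Laplacian $\Delta$ is the tridiagonal second-difference matrix (with zero boundary values, which keeps it non-singular) and B holds the column norms of G. The two closed forms $(G^TG + \alpha B\Delta^T\Delta B)^{-1}G^TM$ and $(B\Delta^T\Delta B)^{-1}G^T(G(B\Delta^T\Delta B)^{-1}G^T + \alpha I_N)^{-1}M$ are compared; a real implementation would use a 3-D grid Laplacian and three-component blocks $B = \Omega \otimes I_3$.

```python
import numpy as np

def laplacian_1d(n):
    """Second-difference matrix with Dirichlet boundaries (non-singular)."""
    return 2 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)

rng = np.random.default_rng(17)
N, p = 8, 20
G = rng.normal(size=(N, p)) * np.linspace(1.0, 0.1, p)   # weaker "deep" columns
M = rng.normal(size=(N, 1))
alpha = 0.05

B = np.diag(np.linalg.norm(G, axis=0))      # column normalization
lap = laplacian_1d(p)
P = B @ lap.T @ lap @ B                      # prior precision B Delta^T Delta B

D1 = np.linalg.solve(G.T @ G + alpha * P, G.T @ M)
P_inv = np.linalg.inv(P)
D2 = P_inv @ G.T @ np.linalg.solve(G @ P_inv @ G.T + alpha * np.eye(N), M)
```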

LORETA with FOCUSS [31]

This approach is similar to MNE with FOCUSS but based on LORETA rather than MNE. It is a combination of LORETA and FOCUSS, according to the following steps:

1. The current density is computed using LORETA to get $\hat{D}_{LOR}$.

2. The weighting matrix $W$ is constructed using (10), the initial matrix being given by $W_1 = B\,\mathrm{diag}(\hat{D}_{LOR}(n))$, where $\hat{D}_{LOR}(n)$ represents the $n$th element of the vector $\hat{D}_{LOR}$.

3. The current density is computed using (9).

4. Steps (2) and (3) are repeated until convergence.
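The four steps can be sketched by seeding a FOCUSS re-weighting with a LORETA estimate instead of the minimum norm. The one-dimensional source line, fixed orientations, the weighting $W = B\,\mathrm{diag}(\hat{D})$ with $B = \mathrm{diag}(1/\|G(:, j)\|)$, the regularized update and the relative-change convergence test are all assumptions made for illustration.

```python
import numpy as np

def loreta(G, M, alpha):
    """LORETA-style estimate on a hypothetical 1-D source line (fixed orientations)."""
    p = G.shape[1]
    lap = 2 * np.eye(p) - np.eye(p, k=1) - np.eye(p, k=-1)   # Dirichlet second differences
    omega = np.diag(np.linalg.norm(G, axis=0))               # column-norm normalization
    return np.linalg.solve(G.T @ G + alpha * omega @ lap.T @ lap @ omega, G.T @ M)

def loreta_focuss(G, M, alpha=1e-2, max_iter=15, tol=1e-8):
    N = G.shape[0]
    comp = np.diag(1.0 / np.linalg.norm(G, axis=0))          # deep-source compensation
    D = loreta(G, M, alpha)                                   # step 1
    for _ in range(max_iter):
        W = comp @ np.diag(D)                                 # step 2: weights from current D
        WWt = W @ W.T
        D_new = WWt @ G.T @ np.linalg.solve(G @ WWt @ G.T + alpha * np.eye(N), M)  # step 3
        converged = np.linalg.norm(D_new - D) <= tol * np.linalg.norm(D)
        D = D_new
        if converged:                                         # step 4: stop on convergence
            break
    return D

rng = np.random.default_rng(20)
N, p = 10, 40
G = rng.normal(size=(N, p))                  # placeholder gain matrix
d_true = np.zeros(p)
d_true[25] = 1.0
M = G @ d_true
D_lf = loreta_focuss(G, M)
```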

Standardized low resolution brain electromagnetic tomography

Standardized low resolution brain electromagnetic tomography (sLORETA) [32] sounds like a modification of LORETA, but the concept is quite different and it does not use the Laplacian operator. It is a method in which localization is based on images of standardized current density. It uses the current density estimate given by the minimum norm estimate and standardizes it by using its variance, which is hypothesized to be due to the actual source variance $S_D = I_{3p}$ and to variation due to noisy measurements, $S_M^{noise} = \alpha I_N$. The electrical potential variance is $S_M = G S_D G^T + S_M^{noise}$ and the variance of the estimated current density is $S_{\hat{D}} = T_{MNE} S_M T_{MNE}^T = G^T(GG^T + \alpha I_N)^{-1}G$. This is equivalent to the resolution matrix $T_{MNE}G$. For the case of EEG with unknown current density vector, sLORETA gives the following estimate of standardized current density power:

$$\hat{D}_l^T \left\{ [S_{\hat{D}}]_{ll} \right\}^{-1} \hat{D}_l \tag{11}$$

where $\hat{D}_l \in \mathbb{R}^{3 \times 1}$ is the current density estimate at the $l$th voxel given by the minimum norm estimate and $[S_{\hat{D}}]_{ll} \in \mathbb{R}^{3 \times 3}$ is the $l$th diagonal block of the resolution matrix $S_{\hat{D}}$. It was found [32] that in all noise-free simulations, although the image was blurred, sLORETA had exact, zero-error localization when reconstructing single sources, that is, the maximum of the current density power estimate coincided with the exact dipole location. In all noisy simulations, it had the lowest localization errors when compared with the minimum norm solution and the Dale method [33]. The Dale method is similar to the sLORETA method in that the current density estimate given by the minimum norm solution is used and source localization is based on standardized values of the current density estimates. However, the variance of the current density estimate is based only on the measurement noise, in contrast to sLORETA, which takes into account the actual source variance as well.
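A sketch of the standardized power for the fixed-orientation case, where each 3 × 3 block reduces to a scalar and the power at voxel l is $\hat{D}_l^2 / [S_{\hat{D}}]_{ll}$ with $S_{\hat{D}} = T_{MNE}G$. The gain matrix is a random placeholder with weaker columns at high indices. In the noise-free single-source test the maximum lands on the true source j: the estimate is column j of $S_{\hat{D}}$, and since $S_{\hat{D}}$ is symmetric positive semi-definite, $[S_{\hat{D}}]_{lj}^2 \le [S_{\hat{D}}]_{ll}[S_{\hat{D}}]_{jj}$, so no voxel can exceed the value at j.

```python
import numpy as np

def sloreta_power(G, M, alpha):
    """Standardized current density power for fixed orientations (scalar Eq. 11)."""
    N = G.shape[0]
    T = G.T @ np.linalg.inv(G @ G.T + alpha * np.eye(N))   # T_MNE
    D_hat = T @ M                                          # minimum norm estimate
    S = T @ G                                              # resolution matrix S_D_hat
    return D_hat ** 2 / np.diag(S)

rng = np.random.default_rng(18)
N, p = 20, 200
G = rng.normal(size=(N, p)) * np.linspace(1.0, 0.2, p)   # weaker "deep" columns
j = 150
M = G[:, j]                                  # noise-free unit source at voxel j
power = sloreta_power(G, M, alpha=0.5)
```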

Variable resolution electrical tomography (VARETA)

VARETA [34] is a weighted minimum norm solution in which the regularization parameter varies spatially at each point of the solution grid. At points where the regularization parameter is small, the source is treated as concentrated; where it is large, the source is estimated to be zero.

where $L$ is a nonsingular univariate discrete Laplacian, $L_3 = L \otimes I_{3 \times 3}$, where $\otimes$ denotes the Kronecker product, $W$ is a certain weight matrix defined in the weighted minimum norm solution, $\Lambda$ is a diagonal matrix of regularizing parameters, and parameters $\tau$ and $\alpha$ are introduced. $\tau$ controls the amount of smoothness and $\alpha$ the relative importance of each grid point. Estimators are calculated iteratively, starting with a given initial estimate $D_0$ (which may be taken to be ): $\Lambda_i$ is estimated from $D_{i-1}$, then $D_i$ from $\Lambda_i$, until one of them converges.

Simulations carried out with VARETA indicate the necessity of very fine grid spacing [34].

Quadratic regularization and spatial regularization (S-MAP) using dipole intensity gradients

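In quadratic regularization using dipole intensity gradients [4], $L(D) = \|\nabla D\|^2$, which results in a source estimator given by

$$\hat{D} = (G^TG + \alpha \nabla^T\nabla)^{-1} G^T M$$

or

$$\hat{D} = (\nabla^T\nabla)^{-1} G^T \left(G (\nabla^T\nabla)^{-1} G^T + \alpha I_N\right)^{-1} M$$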

The use of dipole intensity gradients gives rise to smooth variations in the solution.

Spatial regularization is a modification of Quadratic regularization. It is an inversion procedure based on a non-quadratic choice for L(D) which makes the estimator become non-linear and more suitable to detect intensity jumps [27].

where $N_v = p \times N_n$ and $N_n$ is the number of neighbours for each source $j$, $\nabla D|_v$ is the $v$th element of the gradient vector and $\Phi_v(u) = \frac{u^2}{1 + (u/K_v)^2}$. $K_v = \alpha_v \times \beta_v$, where $\alpha_v$ depends on the distance between a source and its current neighbour and $\beta_v$ depends on the discrepancy between the orientations of the two sources considered. For small gradients the local cost is quadratic, thus producing areas with smooth spatial changes in intensity, whereas for higher gradients the associated cost is finite, $\Phi_v(u) \approx K_v^2$, thus allowing the preservation of discontinuities. The estimator at the $i$th iteration is of the form

$$\hat{D}_i = \Theta(\hat{D}_{i-1})\, M$$

where $\Theta$ is a $p \times N$ matrix depending on $G$ and on priors computed from the previous source estimate $\hat{D}_{i-1}$.

Spatio-temporal regularization (ST-MAP)

Time is taken into account in this model, whereby the assumption is made that dipole magnitudes evolve slowly with respect to the sampling frequency [4,15]. For a measurement taken at time $t$, assuming that $\hat{D}(t)$ and $\hat{D}(t-1)$ are very close to each other means that the orthogonal projection of $\hat{D}(t)$ on the hyperplane perpendicular to $\hat{D}(t-1)$ is 'small'. The following nonlinear equation is obtained:

where

is a weighted Laplacian and

with

is the projector onto .

Spatio-temporal modelling

Apart from imposing temporal smoothness constraints, Galka et al. [35] solved the inverse problem by recasting it as a spatio-temporal state space model, which they solved using Kalman filtering. The computational complexity of this approach, which arises from the high dimensionality of the state vector, was addressed by decomposing the model into a set of coupled low-dimensional problems requiring only moderate computational effort. The initial state estimates for the Kalman filter are provided by LORETA. It is shown that by choosing appropriate dynamical models, better solutions than those obtained by instantaneous inverse solutions (such as LORETA) are obtained.
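The core recursion behind such state-space approaches is the Kalman filter. The sketch below is a generic filter for a random-walk source model $x_t = x_{t-1} + w_t$, $m_t = Gx_t + n_t$, not the decomposed high-dimensional scheme of Galka et al.; it is deliberately tiny and overdetermined (more sensors than sources) so that convergence can be checked, and the noise variances `q` and `r` are assumed values.

```python
import numpy as np

def kalman_random_walk(G, Y, q, r, x0, P0):
    """Kalman filter for x_t = x_{t-1} + w_t, y_t = G x_t + n_t.
    q, r: isotropic process and measurement noise variances."""
    N, p = G.shape
    x, P = x0.copy(), P0.copy()
    Q, R = q * np.eye(p), r * np.eye(N)
    for y in Y:                                   # Y has one row per time sample
        P = P + Q                                 # predict (random walk: mean unchanged)
        S = G @ P @ G.T + R                       # innovation covariance
        K = np.linalg.solve(S, G @ P).T           # gain P G^T S^{-1} (S, P symmetric)
        x = x + K @ (y - G @ x)                   # update with the innovation
        P = (np.eye(p) - K @ G) @ P
    return x, P

rng = np.random.default_rng(19)
N, p, T = 6, 3, 300
G = rng.normal(size=(N, p))                      # placeholder gain matrix
x_true = np.array([1.0, -2.0, 0.5])
Y = x_true @ G.T + 0.05 * rng.normal(size=(T, N))
x_est, P = kalman_random_walk(G, Y, q=1e-8, r=0.05 ** 2, x0=np.zeros(p), P0=np.eye(p))
```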

3.1.2 The Backus-Gilbert method

The Backus-Gilbert method [5,7,36] finds an approximate inverse operator T of G that projects the EEG data M onto the solution space. The estimated primary current density, $\hat{D} = TM$, should be as close as possible, in a least-squares sense, to the real primary current density inside the brain. This is done by making the 1 × p resolution vectors (for u, v = 1, 2, 3 and γ = 1, ..., p) as close as possible to $\delta_{uv} I_\gamma^T$, where δ is the Kronecker delta and $I_\gamma$ is the γth column of the p × p identity matrix. $G_v$ is an N × p matrix derived from G by keeping, in each row, only the elements of G corresponding to the vth direction. The Backus-Gilbert method thus seeks to minimize the spread of the resolution matrix R, that is, to maximize the resolving power. The generalized inverse matrix T optimizes the resolution matrix in a weighted sense.

We reproduce the discrete version of the Backus-Gilbert problem as given in [5]:

subject to a normalization constraint, where $1_p$ is a p × 1 vector of ones.

One choice for the p × p diagonal matrix is:

where $v_i$ is the position vector of grid point i in the head model. The first part of the functional to be minimized attempts to ensure the correct position of the localized dipoles, while the second part ensures their correct orientation.

The solution for this EEG Backus-Gilbert inverse operator is:

where:

'†' denotes the Moore-Penrose pseudoinverse.
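Below is a minimal sketch of the Backus-Gilbert idea, simplified to fixed-orientation sources. Only the position term of the functional is kept and the orientation term is dropped. For each target point γ, the row $t_\gamma$ of T minimizes the spread $t_\gamma^T G W_\gamma G^T t_\gamma$, with $W_\gamma = \mathrm{diag}(\|v_i - v_\gamma\|^2)$ (our notation), subject to $t_\gamma^T G 1_p = 1$. The Lagrange-multiplier solution is $t_\gamma = (G W_\gamma G^T)^\dagger G 1_p / (1_p^T G^T (G W_\gamma G^T)^\dagger G 1_p)$. The random lead field and one-dimensional grid are placeholders.

```python
import numpy as np


def backus_gilbert(G, positions):
    """Simplified Backus-Gilbert inverse operator for fixed-orientation sources.

    For each target point g, the row t_g minimizes the weighted spread
        sum_i ||v_i - v_g||^2 (t_g^T G[:, i])^2 = t_g^T G W_g G^T t_g
    subject to t_g^T G 1_p = 1 (each resolution row sums to one).
    G: (N, p) lead field, positions: (p, 3) grid coordinates v_i.
    Returns T of shape (p, N), so that D_hat = T @ M.
    """
    N, p = G.shape
    u = G @ np.ones(p)                                     # G 1_p
    T = np.zeros((p, N))
    for g in range(p):
        w = np.sum((positions - positions[g]) ** 2, axis=1)  # diagonal of W_g
        Q = G @ (w[:, None] * G.T)                         # G W_g G^T (N x N)
        Qu = np.linalg.pinv(Q) @ u
        T[g] = Qu / (u @ Qu)                               # Lagrange-multiplier solution
    return T


# Placeholder data: 8 electrodes, 30 grid points on a line.
rng = np.random.default_rng(1)
positions = np.column_stack([np.arange(30.0), np.zeros(30), np.zeros(30)])
G = rng.standard_normal((8, 30))
T = backus_gilbert(G, positions)
R = T @ G    # resolution matrix; each row sums to one by construction
```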

3.1.3 The weighted resolution optimization

An extension of the Backus-Gilbert method is the Weighted Resolution Optimization (WROP) [37]. This modification, due to Grave de Peralta Menendez, is described in [5]. It replaces the diagonal weight matrix of the Backus-Gilbert functional with a modified weight matrix.

The second part of the functional to be minimized is replaced by

where

$\alpha_{GdeP}$ and $\beta_{GdeP}$ are scalars greater than zero. In practice, this allows a more flexible trade-off between correct localization and correct orientation than the Backus-Gilbert formulation above.

In this case the inverse operator is:

In [5], five inverse methods from the class of instantaneous, 3D, discrete linear solutions for the EEG inverse problem were analyzed and compared for noise-free measurements. These were minimum norm, weighted minimum norm, Backus-Gilbert, weighted resolution optimization (WROP) and LORETA. Of the five, only LORETA demonstrated correct localization in 3D space.

The WROP method is a family of linear distributed solutions that includes all weighted minimum norm solutions. Two particular cases of the WROP family are LAURA and EPIFOCUS. LAURA [26,38] is a local autoregressive average that incorporates physical constraints into the solution. EPIFOCUS [38] is a linear inverse (quasi-)solution especially suited to single, but not necessarily point-like, generators in realistic head models. EPIFOCUS has shown remarkable robustness against noise.

LAURA

As stated in [39], a norm minimization approach requires several assumptions in order to choose the optimal mathematical solution, since the inverse problem is underdetermined. The validity of these assumptions therefore determines the success of the inverse solution. Unfortunately, in most approaches the criteria are purely mathematical and do not incorporate biophysical and psychological constraints. LAURA (Local AUtoRegressive Average) [40] attempts to incorporate biophysical laws into the minimum norm solution.

According to Maxwell's laws of the electromagnetic field, the strength of each source falls off with the inverse cube of the distance for vector fields and with the inverse square of the distance for potential fields. The LAURA method assumes that the electromagnetic activity occurs according to these two laws.

In LAURA the current estimate is given by the following equation:

The W j matrix is constructed as follows:

1. Denote by $V_k$ the vicinity of each solution point k, defined as the hexahedron centred at the point and comprising at most 26 points.

2. For each solution point k, denote by $N_k$ the number of neighbours of that point and by $d_{ki}$ the Euclidean distance from point k to point i (and vice versa).

3. Compute the matrix A using $e_i = 2$ for scalar fields and $e_i = 3$ for vector fields:

and

4. The weight matrix $W_j$ is defined by:

$$W_j = P^T P$$

where:

$$P = W_m A \otimes I_3$$

where $I_3$ is the 3 × 3 identity matrix and ⊗ denotes the Kronecker product. $W_m$ is a diagonal matrix whose ith diagonal element is the mean of the norms of the three columns of the lead field matrix associated with the ith point.
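Step 4 can be written directly with NumPy. The sketch assumes that G stores three consecutive columns (x, y, z) per solution point. It takes the matrix A from step 3 as an input, since the entries of A are not reproduced here. The identity used for A in the example is only a placeholder. Because $W_m$ has one entry per point, P is computed as $(W_m A) \otimes I_3$, which equals $(W_m \otimes I_3)(A \otimes I_3)$.

```python
import numpy as np


def laura_weight_matrix(G, A):
    """W_j = P^T P with P = (W_m A) kron I_3 (sketch).

    G: (N, 3p) lead field, assumed ordered as three consecutive columns
       (x, y, z) per solution point.
    A: (p, p) local autoregressive matrix from step 3 (computed elsewhere).
    """
    N, cols = G.shape
    p = cols // 3
    # Mean norm of the three lead-field columns of each point -> diagonal W_m.
    col_norms = np.linalg.norm(G, axis=0).reshape(p, 3)
    W_m = np.diag(col_norms.mean(axis=1))
    P = np.kron(W_m @ A, np.eye(3))
    return P.T @ P


rng = np.random.default_rng(10)
G = rng.standard_normal((16, 12))     # 16 electrodes, 4 points x 3 orientations
A = np.eye(4)                         # placeholder for the step-3 matrix
W_j = laura_weight_matrix(G, A)
```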

3.1.4 Shrinking methods and multiresolution methods

By applying suitable iterations to the solution of a distributed source model, a concentrated source solution may be obtained. Ways of doing this are explained in the following subsections.

S-MAP with iterative focusing

This modified version [27] of Spatial Regularization is dedicated to the recovery of focal sources when the spatial sampling of the cortical surface is sparse. The source space dimension is reduced by iteratively focusing on the regions previously estimated to have significant dipole activity. An energy criterion is used that takes into account both the source intensities and their contributions to the data:

$$E = 2E_c + E_a$$

where $E_c$ measures the contribution of every dipole source to the data and $E_a$ is an indicator of relative dipole magnitudes. Sources with energy greater than a certain threshold are selected for the next iteration. The estimator at the $i$th iteration is given by

where $G_i$ is the column-reduced version of G, and Θ is a $p_i \times N$ matrix ($p_i \le p$) depending on $G_i$ and on priors computed from the previous source estimate $\hat{D}^{(i-1)}$. A similar approach was used in [31], where the source region was contracted several times, but LORETA was used at each iteration to estimate the source tomography.

Shrinking LORETA-FOCUSS

This algorithm combines the ideas of LORETA and FOCUSS and makes iterative adjustments to the solution space in order to reduce computation time and increase source resolution [?, 20]. Starting from the smooth LORETA solution, it enhances the strength of some prominent dipoles in the solution and diminishes the strength of other dipoles. The steps [20] are as follows:

1. The current density is computed using LORETA to get $\hat{D}_{LORETA}$.

2. The weighting matrix W is constructed using (10), its initial value being derived from $\hat{D}_{LORETA}$.

3. The current density is computed using (9).

4. (Smoothing operation) The prominent nodes (e.g. those with values larger than 1% of the maximum value) and their neighbours are retained. The current density values on these prominent nodes and their neighbours are readjusted by smoothing, the new values being given by

where $r_l$ is the position vector of the lth node and $s_l$ is the number of neighbouring nodes around the lth node at a distance equal to the minimum inter-node distance d.

5. (Shrinking operation) The corresponding elements in $\hat{D}$ and G are retained and the matrix $M = G\hat{D}$ is computed.

6. Steps (2) to (5) are repeated until convergence.

7. The solution of the last iteration before smoothing is the final solution.
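Steps 4 and 5 can be sketched as follows. The smoothing formula is not reproduced above, so the code assumes that the readjusted value at node l is the plain average of the node and its $s_l$ neighbours at distance d. The 1% threshold follows the example in step 4. The function name `prominent_and_smooth` and the toy one-dimensional grid are hypothetical. The shrinking step then keeps only the returned indices, e.g. `G[:, idx]`.

```python
import numpy as np


def prominent_and_smooth(D_hat, positions, frac=0.01):
    """Step 4 of Shrinking LORETA-FOCUSS (sketch, assumed smoothing rule).

    Keeps nodes whose magnitude exceeds frac * max, plus their nearest
    neighbours. Each retained value is replaced by the average of the node
    and its s_l neighbours at the minimum inter-node distance d.
    Returns (retained indices, smoothed values at those indices).
    """
    dist = np.linalg.norm(positions[:, None, :] - positions[None, :, :], axis=-1)
    d = dist[dist > 0].min()                    # minimum inter-node distance
    is_nb = np.isclose(dist, d)                 # neighbour relation (self excluded)
    prominent = np.abs(D_hat) > frac * np.abs(D_hat).max()
    keep = prominent | is_nb[prominent].any(axis=0)
    idx = np.flatnonzero(keep)
    smoothed = np.array([(D_hat[l] + D_hat[is_nb[l]].sum()) / (1 + is_nb[l].sum())
                         for l in idx])
    return idx, smoothed


# Hypothetical 1-D grid with a single prominent node at index 2.
positions = np.column_stack([np.arange(5.0), np.zeros(5), np.zeros(5)])
D_hat = np.array([0.0, 0.0, 10.0, 0.0, 0.0])
idx, smoothed = prominent_and_smooth(D_hat, positions)
print(idx, smoothed)
```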

Steps (4) and (5) are stopped if the new solution space has fewer nodes than the number of electrodes or the solution of the current iteration is less sparse than that estimated by the previous iteration. Once steps (4) and (5) are stopped, the algorithm becomes a FOCUSS process. Results [20] using simulated noiseless data show that Shrinking LORETA-FOCUSS is able to reconstruct a three-dimensional source distribution with smaller localization and energy errors compared to Weighted Minimum Norm, the L1 approach and LORETA with FOCUSS. It is also 10 times faster than LORETA with FOCUSS and several hundred times faster than the L1 approach.
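Once the shrinking steps stop, the algorithm reduces to FOCUSS. Equations (9) and (10) are not reproduced in this section. The sketch below therefore uses the basic FOCUSS recursion of Gorodnitsky and Rao, $W_k = \mathrm{diag}(\hat{D}_{k-1})$ and $\hat{D}_k = W_k (G W_k)^\dagger m$, for a single time sample and fixed-orientation sources. The exact form of (9) and (10) may differ. A minimum-norm start stands in for the LORETA initialization, and the test data are hypothetical.

```python
import numpy as np


def focuss(G, m, x0, n_iter=50, tol=1e-10):
    """Basic FOCUSS re-weighted minimum norm iterations (sketch).

    W_k = diag(x_{k-1}) and x_k = W_k (G W_k)^+ m, for one time sample.
    Entries that shrink towards zero are progressively switched off, so the
    iterates become sparse while still fitting the data.
    """
    x = np.asarray(x0, dtype=float).copy()
    for _ in range(n_iter):
        W = np.diag(x)
        x_new = W @ np.linalg.pinv(G @ W) @ m
        converged = np.linalg.norm(x_new - x) <= tol * max(np.linalg.norm(x), 1.0)
        x = x_new
        if converged:
            break
    return x


# Toy example (assumed values): 6 electrodes, 20 candidate sources, 2 active.
rng = np.random.default_rng(2)
G = rng.standard_normal((6, 20))
x_true = np.zeros(20)
x_true[3], x_true[11] = 1.5, -2.0
m = G @ x_true
x0 = np.linalg.pinv(G) @ m          # smooth minimum-norm start (LORETA in SLF)
x = focuss(G, m, x0)
print(np.round(x, 3))
```

Every iterate keeps fitting the data. If $G\hat{D}_{k-1} = m$, then m lies in the range of $G W_k$, so the pseudoinverse step reproduces it exactly.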

Standardized shrinking LORETA-FOCUSS (SSLOFO)

SSLOFO [41] combines the features of high resolution (FOCUSS) and low resolution (WMN, sLORETA) methods. In this way, it can extract regions of dominant activity as well as localize multiple sources within those regions. The procedure is similar to that in Shrinking LORETA-FOCUSS with the exception of the first three steps which are:

1. The current density is computed using sLORETA to get $\hat{D}_{sLORETA}$.

2. The weighting matrix W is constructed using (10), its initial value being derived from $\hat{D}_{sLORETA}$.

3. The current density is computed using (9). The power of the source estimate is then normalized as

(12)

where $[R_i]_{ll}$ is the lth diagonal block of the matrix $R_i$.
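For fixed-orientation sources the diagonal blocks $[R]_{ll}$ become scalars, which makes the standardization easy to illustrate. The sketch below computes sLORETA-style standardized power $\hat{D}_l^2 / R_{ll}$ with $R = G^T (G G^T + \alpha I)^{-1} G$. It omits the average-reference operator and the 3 × 3 blocks of the free-orientation case. α is a hypothetical regularization parameter. In the noise-free single-source example, the peak falls exactly on the simulated source.

```python
import numpy as np


def sloreta_power(G, m, alpha=0.0):
    """Fixed-orientation sLORETA standardized power (sketch).

    D_hat = G^T (G G^T + alpha I)^{-1} m     (regularized minimum norm)
    R     = G^T (G G^T + alpha I)^{-1} G     (resolution matrix)
    Returns D_hat[l]**2 / R[l, l] for every node l.
    """
    N = G.shape[0]
    C = np.linalg.inv(G @ G.T + alpha * np.eye(N))
    D_hat = G.T @ C @ m
    R_diag = np.einsum("nl,nk,kl->l", G, C, G)   # diagonal of G^T C G
    return D_hat ** 2 / R_diag


# Placeholder lead field: 8 electrodes, 50 nodes; single source at node 17.
rng = np.random.default_rng(3)
G = rng.standard_normal((8, 50))
m = 2.0 * G[:, 17]
power = sloreta_power(G, m)
print(int(np.argmax(power)))
```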

In [41], SSLOFO reconstructed various source configurations better than WMN and sLORETA, and it outperformed FOCUSS when there were many extended sources. A spatio-temporal version of SSLOFO is also given in [41]. An important feature of this algorithm is that it clearly reconstructs the temporal waveforms of single or multiple sources in the simulation studies. This enables neural dynamics to be estimated directly from the cortical sources. Neither Shrinking LORETA-FOCUSS nor FOCUSS is able to accurately reconstruct the time series of source activities.

Adaptive standardized LORETA/FOCUSS (ALF)

The algorithms described above require a full computation of the matrix G. On the other hand, ALF [42] requires only 6%–11% of this matrix. ALF localizes sources from a sparse sampling of the source space. It minimizes forward computations through an adaptive procedure that increases source resolution as the spatial extent is reduced. The algorithm has the following steps:

1. A set of successive decimation ratios on the set of possible sources is defined. These ratios determine successively higher resolutions. The first ratio is selected so as to produce a target number of sources chosen by the user, and the last one produces the full resolution of the model.

2. Starting with the first decimation ratio, only the corresponding dipole locations and columns in G are retained.

3. sLORETA (Equation (11)) is used to obtain a smooth solution. The source with maximum normalized power is selected as the centre point for spatial refinement in the next iteration, in which the next decimation ratio is applied. Successive iterations include sources within a spherical region at successively higher resolutions.

4. Steps 2 and 3 are repeated until the last decimation ratio is reached. The solution produced by the final iteration of sLORETA is used to initialize the FOCUSS algorithm. Standardization (Equation (12)) is incorporated into each FOCUSS iteration as well.

5. Iterations are continued until there is no change in solution.

It is shown in [42] that the localization accuracy achieved is not significantly different from that obtained by an exhaustive search in a fully sampled source space. A multiresolution framework was also used in [15]. At each iteration of that algorithm, the source space on the cortical surface was scanned at a higher spatial resolution. At every resolution except the highest, the number of source candidates was kept constant.

3.1.5 Summary

Referring to Equation (8), Table 1 summarizes the weight matrices used in the different algorithms. Referring to Subsection 3.1.4, Table 2 summarizes the steps involved in the iterative methods discussed.

Table 1.

Summary of weighting strategies for the various non-parametric methods. For definition of notation, refer to the respective subsection.

| Algorithm | Weight Matrix W |
|---|---|
| MNE | $I_{3p}$ |
| WMNE | $\Omega \otimes I_3$ |
| LORETA | $(\Omega \otimes I_3)\Delta^T\Delta(\Omega \otimes I_3)$ |
| Quadratic Regularization | ∇ |
| LAURA | $W_m A \otimes I_3$ |

Table 2.

Steps involved in the iterative methods

| Iterative Method | Description |
|---|---|
| S-MAP with Iterative Focusing | Uses the S-MAP algorithm; an energy criterion is used to reduce the dimension of G; priors computed from the previous source estimate are used at each new iteration. |
| Shrinking LORETA-FOCUSS | LORETA solution computed; weighting matrix W constructed; FOCUSS algorithm used to estimate $\hat{D}$; smoothing of current density values of prominent dipoles and their neighbours; shrinking of $\hat{D}$ and G; computation of $M = G\hat{D}$; process (computation of W etc.) repeated. |
| SSLOFO | sLORETA solution computed; weighting matrix W constructed; FOCUSS algorithm used to estimate $\hat{D}$; source estimation power normalized; smoothing of current density values of prominent dipoles and their neighbours; shrinking of $\hat{D}$ and G; computation of $M = G\hat{D}$; process (computation of W etc.) repeated. |
| ALF | Decimation ratios defined; first ratio used to retain the corresponding dipole locations and columns of G; sLORETA computed; source with maximum normalized power selected as centre point for spatial refinement; next decimation ratio used; process repeated until the last ratio is reached; final sLORETA solution used to initialize the FOCUSS algorithm with standardization. |

3.2 Parametric methods

Parametric methods are also referred to as Equivalent Current Dipole Methods, Concentrated Source Models or Spatio-Temporal Dipole Fit Models. In this approach, a search is made for the best dipole position(s) and orientation(s). The models range in complexity from a single dipole in a spherical head model to multiple dipoles (up to ten or more) in a realistic head model. Dynamic models also take dipole changes over time into account. Constraints may also be imposed on the dipole orientations, which may be fixed or variable.

3.2.1 The non-linear least-squares problem

The best location and dipole moment (six parameters in all for each dipole) are usually obtained by finding the global minimum of the residual energy. This is the L2-norm $\|V_{in} - V_{model}\|$, where $V_{model} \in \mathbb{R}^N$ represents the electrode potentials produced by the hypothetical dipoles and $V_{in} \in \mathbb{R}^N$ represents the recorded EEG for a single time instant. Finding it requires a non-linear minimization of the cost function $\|M - G(\{r_j\})D\|$ over all of the dipole locations $\{r_j\}$ and moments D. Common search methods include gradient methods and downhill or standard simplex search methods (such as Nelder-Mead) [43-46], normally with multiple starts. Genetic algorithms and the very time-consuming simulated annealing [45,47,48] are also used. In these iterative processes, the dipolar source is moved about in the head model while its orientation and magnitude are also changed. The aim is the best fit between the recorded EEG and the potentials produced by the source in the model. Each iterative step requires several forward solution calculations using test dipole parameters, to compare the fit produced by the test dipole with that of the previous step.

3.2.2 Beamforming approaches

Beamformers are also called spatial filters or virtual sensors. They have the advantage that the number of dipoles need not be assumed a priori. The output y(t) of the beamformer is the product of a 3 × N spatial filtering matrix $W^T$ (one row per Cartesian axis) with m(t), i.e. $y(t) = W^T m(t)$. Here m(t) is the N × 1 vector representing the signal at the array at time instant t associated with a single dipole source. This output is an estimate of the activity of the dipole at the location of interest at time t.

In beamforming approaches [6], the signals from the electrodes are filtered so that only those coming from sources of interest are maintained. If the location of interest is $r_{dip}$, the spatial filter should satisfy the following constraints:

$$W^T G(r) = \begin{cases} I, & \|r - r_{dip}\| \le \delta \\ 0, & \|r - r_{dip}\| > \delta \end{cases}$$

where $G(r) = [g(r, e_x), g(r, e_y), g(r, e_z)]$ is the N × 3 forward matrix for three orthogonal dipoles at location r with orientation vectors $e_x$, $e_y$ and $e_z$ respectively. I is the 3 × 3 identity matrix and δ represents a small distance.

In linearly constrained minimum variance (LCMV) beamforming [49], nulls are placed at positions corresponding to interfering sources, i.e. neural sources at locations other than $r_{dip}$ (so δ = 0). The LCMV problem can be written as:

$$\min_{W} \mathrm{Tr}\{C_y\} \quad \text{subject to} \quad W^T G(r_{dip}) = I$$

where $C_y = E[yy^T] = W^T C_m W$ and $C_m = E[mm^T]$ is the signal covariance matrix estimated from the available data. The beamformer thus minimizes the output energy $W^T C_m W$ under the constraint that only the dipole at $r_{dip}$ is active at that time. Minimizing the variance optimally allocates the stop-band response of the filter to attenuate activity originating at other locations. Applying Lagrange multipliers and completing the square (proof in Appendix) gives:

$$W(r_{dip}) = C_m^{-1} G(r_{dip}) \left[G^T(r_{dip}) C_m^{-1} G(r_{dip})\right]^{-1}$$

The filter $W(r_{dip})$ is then applied to each of the vectors m(t) in M to obtain an estimate of the dipole moment at $r_{dip}$. To perform localization, the variance or strength $\widehat{\mathrm{Var}}(r_{dip})$ of the activity is computed as a function of location. This is the value of the cost function $\mathrm{Tr}\{W^T(r_{dip}) C_m W(r_{dip})\}$ at the minimum, which equals $\mathrm{Tr}\{[G^T(r_{dip}) C_m^{-1} G(r_{dip})]^{-1}\}$.

This approach can produce an estimate of the neural activity at any location by changing the location $r_{dip}$. It assumes that any source can be explained as a weighted combination of dipoles. Hence the geometry of sources is not restricted to points; it may be distributed according to the variance values. Moreover, this approach does not require prior knowledge of the number of sources. Anatomical information is easily included by evaluating the variance only at physically realistic source locations.

The level of detail resolved by this approach depends on the filter's passband and on the SNR associated with the feature of interest. The SNR (signal-to-noise ratio) is defined as the ratio of source variance to noise variance. To minimize the effect of low SNRs, the estimated variance is normalized by the corresponding estimate obtained from the noise covariance. The result is called the neural activity index:

$$\frac{\mathrm{Tr}\{[G^T(r_{dip}) C_m^{-1} G(r_{dip})]^{-1}\}}{\mathrm{Tr}\{[G^T(r_{dip}) Q^{-1} G(r_{dip})]^{-1}\}}$$

where Q is the noise covariance matrix estimated from data known to be source-free.
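The LCMV filter, the source variance and the neural activity index can be computed in a few lines. This is a sketch for a single location with a given N × 3 forward matrix. The random lead field, the simulated covariance and the white noise covariance Q are assumptions made for the example. Linear solves are used instead of explicitly inverting $C_m$.

```python
import numpy as np


def lcmv(C_m, G_r, Q=None):
    """LCMV beamformer at one location r_dip (sketch).

    C_m: (N, N) data covariance, G_r: (N, 3) forward matrix G(r_dip),
    Q: optional (N, N) noise covariance for the neural activity index.
    Returns (W, variance, nai); W is N x 3 and y(t) = W.T @ m(t).
    """
    Ci_G = np.linalg.solve(C_m, G_r)          # C_m^{-1} G
    B = np.linalg.inv(G_r.T @ Ci_G)           # (G^T C_m^{-1} G)^{-1}
    W = Ci_G @ B                              # satisfies W^T G = I
    var = np.trace(B)                         # Tr{W^T C_m W} at the minimum
    nai = None
    if Q is not None:
        noise = np.trace(np.linalg.inv(G_r.T @ np.linalg.solve(Q, G_r)))
        nai = var / noise
    return W, var, nai


# Simulated data (assumed): one 3-component source seen by 16 electrodes.
rng = np.random.default_rng(4)
G_r = rng.standard_normal((16, 3))
M = G_r @ rng.standard_normal((3, 1000)) + 0.1 * rng.standard_normal((16, 1000))
C_m = M @ M.T / M.shape[1]
Q = 0.01 * np.eye(16)
W, var, nai = lcmv(C_m, G_r, Q)
print(var, nai)
```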

Sekihara et al. [50] proposed an 'eigenspace projection' beamformer technique to reconstruct source activities at each instant in time. For a general beamformer, the matrix is assumed to be $W = [w_x, w_y, w_z]$. Its column weight vectors $w_x$, $w_y$ and $w_z$ respectively detect the x, y and z components of the source moment to be determined, and are of the form

where μ = x, y or z, $f_x = [1, 0, 0]^T$, $f_y = [0, 1, 0]^T$, $f_z = [0, 0, 1]^T$ and

The weight vectors $\bar{w}_\mu$ of the proposed beamformer are derived by projecting the weight vectors $w_\mu$ onto the signal subspace of the measurement covariance matrix:

$$\bar{w}_\mu = E_S E_S^T w_\mu$$

where $E_S$ is the matrix whose columns are the signal-level eigenvectors of $C_m$. When tested in magnetoencephalography (MEG) experiments, this beamformer considerably improved not only the SNR but also the spatial resolution. In [50], it is further extended to a prewhitened eigenspace projection beamformer to reduce interference arising from background brain activity.
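The projection step amounts to $\bar{W} = E_S E_S^T W$. The sketch assumes that the signal-subspace dimension is known (here 2). It takes the eigenvectors of $C_m$ with the largest eigenvalues as $E_S$. The prewhitening extension of [50] is not included, and the random weights stand in for the beamformer weights $w_\mu$.

```python
import numpy as np


def eigenspace_projected_weights(C_m, W, n_signal):
    """Project beamformer weights onto the signal subspace of C_m (sketch).

    W: (N, 3) weight matrix [w_x, w_y, w_z]; n_signal: assumed dimension of
    the signal subspace. Returns E_S E_S^T W, where the columns of E_S are the
    eigenvectors of C_m with the n_signal largest eigenvalues.
    """
    eigvals, eigvecs = np.linalg.eigh(C_m)                    # ascending order
    E_S = eigvecs[:, np.argsort(eigvals)[::-1][:n_signal]]
    return E_S @ (E_S.T @ W)


# Toy data (assumed): two sources seen by 12 sensors, plus weak noise.
rng = np.random.default_rng(5)
L = rng.standard_normal((12, 2))
M = L @ rng.standard_normal((2, 500)) + 0.05 * rng.standard_normal((12, 500))
C_m = M @ M.T / M.shape[1]
W = rng.standard_normal((12, 3))      # stands in for the weights w_mu
W_bar = eigenspace_projected_weights(C_m, W, n_signal=2)
```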

3.2.3 Brain electric source analysis (BESA)

In a particular dipole-fit model called Brain Electric Source Analysis (BESA) [27], a set of consecutive time points is considered in which dipoles are assumed to have fixed positions and fixed or varying orientations. The method minimizes a cost function that is a weighted combination of four criteria:

1. the Residual Variance (RV), which is the amount of signal that remains unexplained by the current source model;

2. a Source Activation Criterion, which increases when the sources tend to be active outside their a priori time interval of activation;

3. an Energy Criterion, which avoids interaction between two sources in which a large amplitude of the waveform of one source is compensated by a large amplitude of the waveform of the second source;

4. a Separation Criterion, which encourages solutions in which as few sources as possible are simultaneously active.

3.2.4 Subspace techniques

We now consider parametric methods that process the EEG data before performing dipole localization. As with beamforming techniques, the number of dipoles need not be known a priori. These methods can be more robust, since they can take the signal noise into account when performing dipole localization.

Multiple-signal Classification algorithm (MUSIC)

The multiple signal classification algorithm (MUSIC) [6,51] is a version of the spatio-temporal approach. The dipole model can consist of fixed-orientation dipoles, rotating dipoles or a mixture of both. For a model with fixed-orientation dipoles, a signal subspace is first estimated from the data. This is done by computing the singular value decomposition (SVD) [8] $M = U\Sigma V^T$ and letting $U_S$ be the signal subspace spanned by the first p left singular vectors (the first p columns of U). Two other methods of estimating the signal subspace are given in [52]; they are claimed to be better because they are less affected by spatial covariance in the noise. The first prewhitens the data matrix using an estimate of the spatial noise covariance matrix, so that the spatial covariance matrix of the transformed noise is the identity matrix. The second is based on an eigendecomposition of a matrix product of stochastically independent sweeps. The MUSIC algorithm then scans a single dipole model through the head volume and computes projections onto this subspace. The MUSIC cost function to be minimized is

$$\frac{\|P^{\perp}_{U_S}\, g(r, e)\|_2^2}{\|g(r, e)\|_2^2}$$

where $P^{\perp}_{U_S} = I - U_S U_S^T$ is the orthogonal projector onto the noise subspace, and r and e are position and orientation vectors, respectively. This cost function is zero when g(r, e) corresponds to one of the true source locations and orientations, $r = r_i$ and $e = e_i$, i = 1, ..., p. An advantage over least-squares estimation is that each source is found in turn, rather than searching simultaneously for all sources.
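For fixed-orientation candidates, with one column of G per candidate location and orientation, the scan reduces to evaluating the cost above for every column. The sketch assumes noise-free data and a known number of sources, and the random lead field is a placeholder. Rotating dipoles would require a minimization over e at each location, which is not shown.

```python
import numpy as np


def music_cost(M, G, n_sources):
    """MUSIC cost ||P_perp g||^2 / ||g||^2 for every column g of G (sketch).

    U_S: the first n_sources left singular vectors of the data M;
    P_perp = I - U_S U_S^T projects onto the noise subspace.
    """
    U, _, _ = np.linalg.svd(M, full_matrices=False)
    U_S = U[:, :n_sources]
    residual = G - U_S @ (U_S.T @ G)                 # P_perp applied to each column
    return np.sum(residual ** 2, axis=0) / np.sum(G ** 2, axis=0)


# Toy example (assumed): 10 electrodes, 40 fixed-orientation candidates,
# sources at candidates 5 and 30 with independent time courses.
rng = np.random.default_rng(6)
G = rng.standard_normal((10, 40))
M = G[:, [5, 30]] @ rng.standard_normal((2, 100))
cost = music_cost(M, G, n_sources=2)
print(np.argsort(cost)[:2])
```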

In MUSIC, errors in the estimate of the signal subspace can make localization of multiple sources difficult (subjective) as regards distinguishing between 'true' and 'false' peaks. Moreover, finding several local maxima in the MUSIC metric becomes difficult as the dimension of the source space increases. Problems also arise when the subspace correlation is computed at only a finite set of grid points.

Recursive MUSIC (R-MUSIC) [53] automates the MUSIC search, extracting the location of the sources through a recursive use of subspace projection. It uses a modified source representation, referred to as the spatio-temporal independent topographies (IT) model, where a source is defined as one or more nonrotating dipoles with a single time course rather than an individual current dipole. It recursively builds up the IT model and compares this full model to the signal subspace.

In the recursively applied and projected MUSIC (RAP-MUSIC) extension [54,55], each source is found as a global maximizer of a different cost function. Assuming g(r, e) = h(r)e, the first source is found as the source location that maximizes the metric

over the allowed source space, where r is the nonlinear location parameter. The function $\mathrm{subcorr}(h(r), U_S)_1$ is the cosine of the first principal angle between the subspaces spanned by the columns of h(r) and $U_S$, given by:

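The k-th recursion of RAP-MUSIC is

$$\hat{r}_k = \arg\max_{r}\ \mathrm{subcorr}\left(\Pi^{\perp}_{\hat{A}_{k-1}} h(r),\ \Pi^{\perp}_{\hat{A}_{k-1}} U_S\right)_1 \qquad (13)$$

where $\hat{A}_{k-1} = [h(\hat{r}_1)\hat{e}_1, \ldots, h(\hat{r}_{k-1})\hat{e}_{k-1}]$ is formed from the array manifold estimates of the previous k - 1 recursions. $\Pi^{\perp}_{\hat{A}_{k-1}} = I - \hat{A}_{k-1}\hat{A}_{k-1}^{\dagger}$ is the projector onto the left-null space of $\hat{A}_{k-1}$. The recursions are stopped once the maximum of the subspace correlation in (13) drops below a minimum threshold.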

A key feature of the RAP-MUSIC algorithm is the orthogonal projection operator which removes the subspace associated with previously located source activity. It uses each successively located source to form an intermediate array gain matrix and projects both the array manifold and the estimated signal subspace into its orthogonal complement, away from the subspace spanned by the sources that have already been found. The MUSIC projection to find the next source is then performed in this reduced subspace. Other sequential subspace methods besides R-MUSIC and RAP-MUSIC are S-MUSIC and IES-MUSIC [54]. Although they all find the first source in the same way, in these latter methods the projection operator is applied just to the array manifold, rather than to both arguments as in the case of RAP-MUSIC.
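The recursion in (13) can be sketched for fixed-orientation candidates, where each column of G plays the role of $h(r)e$. After each source is found, both the candidate columns and the signal subspace are projected away from the sources already located. The stopping threshold of 0.9 and the random test data are assumptions.

```python
import numpy as np


def _orth(A, tol=1e-10):
    """Orthonormal basis of the column space of A, discarding tiny singular values."""
    U, s, _ = np.linalg.svd(A, full_matrices=False)
    if s.size == 0 or s[0] == 0.0:
        return U[:, :0]
    return U[:, s > tol * s[0]]


def rap_music(M, G, n_sources, threshold=0.9):
    """RAP-MUSIC for fixed-orientation candidates (columns of G), sketch."""
    N = G.shape[0]
    U_S = np.linalg.svd(M, full_matrices=False)[0][:, :n_sources]
    found = []
    P = np.eye(N)                                   # no sources removed yet
    col_scale = np.linalg.norm(G, axis=0).max()
    for _ in range(n_sources):
        Q = _orth(P @ U_S)                          # projected signal subspace
        PG = P @ G                                  # projected array manifold
        norms = np.linalg.norm(PG, axis=0)
        corr = np.zeros(G.shape[1])
        ok = norms > 1e-12 * col_scale              # skip columns already removed
        # subcorr for a single vector: cosine of its angle to span(Q)
        corr[ok] = np.linalg.norm(Q.T @ PG[:, ok], axis=0) / norms[ok]
        k = int(np.argmax(corr))
        if corr[k] < threshold:                     # stop when correlation is too low
            break
        found.append(k)
        A = G[:, found]                             # array manifold of found sources
        P = np.eye(N) - A @ np.linalg.pinv(A)       # projector onto left-null space of A
    return found


rng = np.random.default_rng(9)
G = rng.standard_normal((10, 40))
M = G[:, [5, 30]] @ rng.standard_normal((2, 100))
print(rap_music(M, G, n_sources=2))
```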

FINES subspace algorithm

An alternative signal subspace algorithm [56] is FINES (First Principal Vectors). This approach, used in order to estimate the source locations, employs projections onto a subspace spanned by a small set of particular vectors (FINES vector set) in the estimated noise-only subspace instead of the entire estimated noise-only subspace as in the case of classic MUSIC.

In FINES the principal angle between two subspaces is defined according to the closeness criterion [56]. FINES creates a vector set for a region of the brain in order to form a projection operator and search for dipoles in this specific region.

An algorithmic description of the FINES algorithm can be found in [56]. Simulation results in [56] show that FINES produces more distinguishable localization results than classic MUSIC and RAP-MUSIC even when two sources are very close spatially.

3.2.5 Simulated annealing and finite elements

In [47], an objective function based on the current-density boundary integral associated with standard finite-element formulations in two dimensions is used instead of measured potential differences, as the basis for optimization performed using the method of simulated annealing. The algorithm also enables user-defined target search regions to be incorporated. In this approach, the optimization objective is to vary the modelled dipole such that the Neumann boundary condition is satisfied, that is, the current density at each electrode approaches zero.

where $C(x_p, y_p, \theta_p, d_p)_l$ is the objective function associated with the lth electrode resulting from p dipoles, and N is the number of electrodes. $J_l$ is the current density associated with the lth electrode, $\psi_l$ represents the weighting function associated with the lth electrode, and $\hat{n}$ is the outward-pointing normal direction to the boundary of the problem domain. With this formulation, the inverse matrix (for direct solvers) or the preconditioner matrix (for iterative solvers) needs to be computed only once. This significantly reduces the computational time associated with 3-D finite element solutions.

3.2.6 Computational intelligence algorithms

Neural networks

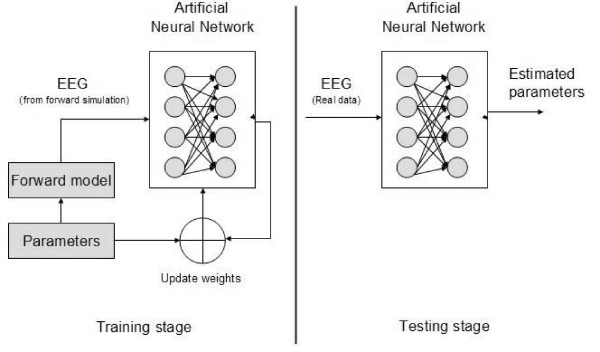

Since the inverse source localization problem can be considered a minimization problem – find the optimal coordinates and orientation for each dipole – the optimization can be performed with an artificial neural network (ANN) based system.

The main advantage of neural network approaches [57] is that, once trained, they require no further iterative process. In addition, iterative methods have been shown to perform better in noise-free environments, whereas ANNs perform best in environments with a low signal-to-noise ratio [58]. ANNs therefore appear to be more robust to noise. Many research works [59-67] report localization errors of less than 5% for ANN methods.

A general ANN system for EEG source localization is illustrated in Figure 3. According to [65], the number of neurons in the input layer is equal to the number of electrodes, and the input features can be the measured voltage values themselves. The network also has one or two hidden layers of N neurons each and an output layer of six neurons: three for the coordinates and three for the dipole components. In addition, each hidden layer neuron is connected to the output layer with weights equal to one in order to permit a non-zero threshold of the activation function. The interconnection weights are determined in a training phase, in which the neural network is trained with preconstructed examples from forward modeling simulations.

Figure 3.

General block diagram for an artificial neural network system used for inverse source localization.

Genetic algorithms

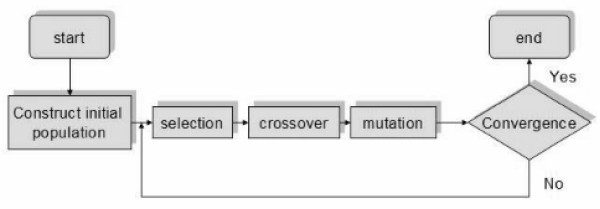

Genetic algorithms offer an alternative way to solve the inverse source localization problem as a minimization problem. In this case, each dipole is modelled as a set of parameters that determine its orientation and location. The error between the projected and measured potentials is then minimized using evolutionary techniques.

The minimization can be used to localize multiple sources either in the brain [68] or in Independent Component back-projections [69,70]. If component back-projections are used, the correlation between the projected model and the measured one has to be maximized, instead of minimizing the energy of their difference.

Figure 4 shows how the minimization approach develops. An initial population, i.e. a set of potential solutions, is created. Every solution in the set is encoded (e.g. in binary code). A new population is then created by applying three operators: selection, crossover and mutation. The procedure is repeated until convergence is reached.
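The loop in Figure 4 is illustrated below with a minimal real-coded genetic algorithm. Unlike the binary encoding mentioned above, its individuals are real-valued parameter vectors. It uses tournament selection, arithmetic crossover, Gaussian mutation and elitism. The cost function is a placeholder; for dipole fitting it would be the error between projected and measured potentials.

```python
import numpy as np


def genetic_minimize(cost, bounds, pop_size=40, n_gen=100, mut_sigma=0.1, seed=0):
    """Minimal real-coded genetic algorithm (sketch).

    cost: callable mapping a parameter vector to a scalar error.
    bounds: (n_params, 2) array of [low, high] limits for each parameter.
    Returns the best parameter vector found and its cost.
    """
    rng = np.random.default_rng(seed)
    lo, hi = bounds[:, 0], bounds[:, 1]
    pop = rng.uniform(lo, hi, size=(pop_size, len(lo)))      # initial population
    fit = np.array([cost(x) for x in pop])
    for _ in range(n_gen):
        elite = pop[np.argmin(fit)].copy()                    # best so far
        # Selection: binary tournaments pick two parents per child.
        a = rng.integers(pop_size, size=(pop_size, 2))
        b = rng.integers(pop_size, size=(pop_size, 2))
        pa = np.where(fit[a[:, 0]] < fit[a[:, 1]], a[:, 0], a[:, 1])
        pb = np.where(fit[b[:, 0]] < fit[b[:, 1]], b[:, 0], b[:, 1])
        # Crossover: random convex combination of the two parents.
        w = rng.uniform(size=(pop_size, 1))
        children = w * pop[pa] + (1.0 - w) * pop[pb]
        # Mutation: Gaussian noise scaled to the parameter range.
        children += mut_sigma * (hi - lo) * rng.standard_normal(children.shape)
        pop = np.clip(children, lo, hi)
        pop[0] = elite                                         # elitism
        fit = np.array([cost(x) for x in pop])
    best = int(np.argmin(fit))
    return pop[best], fit[best]


# Placeholder cost (assumed): squared distance to a hypothetical target.
target = np.array([1.0, -0.5, 2.0])


def cost(x):
    return float(np.sum((x - target) ** 2))


bounds = np.array([[-5.0, 5.0]] * 3)
best_x, best_f = genetic_minimize(cost, bounds)
print(best_x, best_f)
```

Elitism guarantees that the best cost never increases from one generation to the next.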

Figure 4.

Genetic algorithm schema.

3.2.7 Estimation of initial dipoles for parametric methods

In most parametric methods, the final result depends strongly on the initial guess regarding the number of dipoles to estimate and their initial locations and orientations. Such estimates can be obtained as explained below.

The optimal dipole

In this model, any point inside the sphere has an associated optimal dipole [71], which fits the observed data better than any other dipole at the same location with a different orientation. The unknown parameters of an optimal dipole are the magnitude components $d_x$, $d_y$ and $d_z$, whose estimation is a linear least-squares problem.

There are two possible ways of finding the required optimal dipoles. The first is a minimization iteration, in which a few optimal dipoles are found in an arbitrary region. The steepest slope with respect to the spatial coordinates is then found and a new search region is obtained. Optimal dipoles in that region are found, a new slope is determined, and so on, until the best of all optimal dipoles is found.

The second way is to find optimal dipoles at a dense grid of points that covers the volume in which the dipolar source is expected to be. The best of all the optimal dipoles is the chosen optimal dipole.
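The second approach is easy to sketch. At each grid point the optimal dipole moment follows from a linear least-squares fit, and the grid point with the smallest residual is kept. To keep the example self-contained, the forward model is the potential of a current dipole in an infinite homogeneous medium with unit conductivity, not a spherical or realistic head model. Electrode positions, grid and dipole parameters are hypothetical.

```python
import numpy as np


def lead_field(r, electrodes, sigma=1.0):
    """N x 3 lead field of a dipole at r in an infinite homogeneous medium
    (toy forward model, not a spherical or realistic head model)."""
    diff = electrodes - r                                   # (N, 3)
    dist3 = np.linalg.norm(diff, axis=1, keepdims=True) ** 3
    return diff / (4 * np.pi * sigma * dist3)


def best_optimal_dipole(v, electrodes, grid):
    """For each grid point, fit the optimal dipole moment (d_x, d_y, d_z) by
    linear least squares; return (residual, location, moment) of the best one."""
    best = (np.inf, None, None)
    for r in grid:
        L = lead_field(r, electrodes)
        d, *_ = np.linalg.lstsq(L, v, rcond=None)          # optimal dipole at r
        res = np.linalg.norm(v - L @ d)
        if res < best[0]:
            best = (res, r, d)
    return best


# Hypothetical set-up: 32 electrodes on a sphere of radius 10, 9 x 9 x 9 grid.
rng = np.random.default_rng(7)
electrodes = rng.standard_normal((32, 3))
electrodes = 10.0 * electrodes / np.linalg.norm(electrodes, axis=1, keepdims=True)
ax = np.arange(-4.0, 5.0)
grid = np.stack(np.meshgrid(ax, ax, ax, indexing="ij"), axis=-1).reshape(-1, 3)
r_true = np.array([1.0, -2.0, 3.0])
d_true = np.array([0.5, -1.0, 2.0])
v = lead_field(r_true, electrodes) @ d_true                 # noise-free data
res, r_hat, d_hat = best_optimal_dipole(v, electrodes, grid)
print(r_hat, d_hat)
```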

Stagnant dipoles

Stagnant dipoles [10] have fixed locations and orientations; only their amplitude (dipole magnitude) varies in time. The number of unknowns is therefore p(5 + T), where p is the number of dipoles and T is the number of time samples. In this model, the minimum number of dipoles L (assumed to be smaller than N or T) required to describe the observed responses adequately is determined. The resulting residual between the data matrix M and the corresponding model predictions is expressed as

where $\hat{M}$ is an N × T matrix of rank L. The resulting residual is found never to exceed the true residual H:

This is equivalent to

where $\lambda_k$ are the singular values of M, arranged in decreasing order. Hence more sources have to be included in the model until the lower bound is smaller than the noise level H.
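The stopping rule can be evaluated directly from the singular values of M. The lower bound on the residual of any rank-L model is assumed here to be $\sqrt{\sum_{k>L} \lambda_k^2}$, which is the Eckart-Young bound for the Frobenius norm. The sketch returns the smallest L for which this bound does not exceed the noise level H. Using the Frobenius norm and knowing H exactly are assumptions made for the example.

```python
import numpy as np


def min_dipoles(M, H):
    """Smallest L such that the residual lower bound of any rank-L model,
    sqrt(sum_{k>L} lambda_k^2), does not exceed the noise level H."""
    lam = np.linalg.svd(M, compute_uv=False)             # decreasing order
    # tail[L] = sqrt(lam[L]**2 + lam[L+1]**2 + ...) with 0-based indexing
    tail = np.sqrt(np.cumsum((lam ** 2)[::-1])[::-1])
    for L in range(len(lam)):
        if tail[L] <= H:
            return L
    return len(lam)


# Synthetic data (assumed): two dipole time courses seen by 8 electrodes.
rng = np.random.default_rng(8)
U, _ = np.linalg.qr(rng.standard_normal((8, 2)))
V, _ = np.linalg.qr(rng.standard_normal((50, 2)))
noise = 0.01 * rng.standard_normal((8, 50))
M = U @ np.diag([10.0, 5.0]) @ V.T + noise
H = np.linalg.norm(noise)                                # noise level (Frobenius)
print(min_dipoles(M, H))
```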

4 Performance analysis

In this section we first review the performance of different methods as recorded in the literature and then report our own Monte-Carlo comparative experiments. Various measures have been used to determine the quality of localization obtained by a given algorithm. Each measure represents some aspect of the algorithm's performance, such as localization error, smoother error or generalization. In the following, we review a number of measures that capture various characteristics of localization performance.

For a single point source, localization error is the distance between the maximum of the estimated solution and the actual source location [20,27,32]. An extension of this which takes into consideration the magnitudes of other sources besides the maximum is the formula

where $d_n = |r_n - r_{dip}|$ is the distance from the nth element, located at $r_n$, to the true source [30].

The energy error [20], which describes the accuracy of the dipole magnitudes, is defined as

where $\|\hat{D}_{max}\|$ is the power of the maxima in the estimated current distribution and ||D|| is the power of the simulated point source.

Baillet and Garnero [4] also use the Data Fit and the Reconstruction Error to evaluate the performance of their algorithms, where the Data Fit is defined as

and the Reconstruction Error (in which orientation of the dipoles is taken into consideration) is defined as

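Baillet [27] also estimates the distance between the actual source location and the position of the centre of gravity of the source estimate by:

$$D_{cg} = \left\| \frac{\sum_n |\hat{J}(n)|\, \mathbf{r}_n}{\sum_n |\hat{J}(n)|} - \mathbf{r}_{dip} \right\|$$

where $\mathbf{r}_n$ is the location of the nth source with intensity $|\hat{J}(n)|$ and $\mathbf{r}_{dip}$ is the original source location.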
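The peak-based localization error and the centre-of-gravity distance can be computed as follows. The sketch takes the intensity of each grid node as the absolute value of the estimate (an assumption), and the function names are hypothetical. The toy example shows why the two measures differ: an estimate peaking at the true node but leaking some energy to a neighbour has zero peak error and a non-zero centre-of-gravity error.

```python
import numpy as np


def peak_localization_error(J, r, r_dip):
    """Distance from the maximum of |J| to the true source location."""
    return float(np.linalg.norm(r[np.argmax(np.abs(J))] - r_dip))


def centre_of_gravity_error(J, r, r_dip):
    """Distance from the |J|-weighted centre of gravity to the true source."""
    w = np.abs(J)                                 # intensity of each node
    cog = (w[:, None] * r).sum(axis=0) / w.sum()  # weighted mean location
    return float(np.linalg.norm(cog - r_dip))


r = np.array([[0.0, 0.0, 0.0], [2.0, 0.0, 0.0], [4.0, 0.0, 0.0]])  # nodes
J = np.array([0.0, 3.0, 1.0])       # peak at the true node, leakage to x = 4
r_dip = np.array([2.0, 0.0, 0.0])   # true source
print(peak_localization_error(J, r, r_dip))   # 0.0
print(centre_of_gravity_error(J, r, r_dip))   # 0.5
```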

$E_{spurious}$ [27] is defined as the energy contained in spurious (phantom) sources relative to the original source energy.

For two dipoles [30], the true sources are first subtracted from the reconstructions. The error is:

where $d_{n1}$ and $d_{n2}$ are the distances from each element to the corresponding source, and $s_{n1}$ and $s_{n2}$ are used to reduce the penalty due to the distance between a source and the part of the solution near the other source. In this way, a large-magnitude solution near one source does not inflate the error merely because it lies far from the other source. $s_{n1}$ can be chosen in the following way:

and similarly for $s_{n2}$ using $d_{n1}$.

In [72], Yao and Dewald used three indicators to determine the accuracy of the inverse solution:

1. the error distance (ED), which is the distance between the true and estimated sources, defined as

$$ED = \frac{1}{N_I} \sum_{i \in I} \min_{j} \left\| \hat{\mathbf{r}}_i - \mathbf{r}_j \right\| + \frac{1}{N_J} \sum_{j \in J} \min_{i} \left\| \mathbf{r}_j - \hat{\mathbf{r}}_i \right\| \qquad (14)$$

where $\mathbf{r}_j$ is the real dipole location of the simulated EEG data and $\hat{\mathbf{r}}_i$ is the source location detected by the inverse calculation method; i and j are the indices of the estimated and the actual sources, and $N_I$ and $N_J$ are the total numbers of estimated and undetected sources respectively. In distributed-source reconstruction methods, a source location is defined as a location where the current strength exceeds a set threshold value. The first term in (14) is the mean distance from each estimated source to its closest real source; that real source is then marked as a detected source. All the undetected real sources form the set J, and the second term is the mean distance from each undetected source to its closest estimated source.

2. the percentage of undetected sources (PUS), defined as $PUS = \frac{N_{un}}{N_{real}} \times 100\%$, where $N_{un}$ and $N_{real}$ are the numbers of undetected and real sources respectively. An undetected source is defined as a real source whose distance to its closest estimated source is larger than 0.6 times the unit distance of the source layer.

3. the percentage of falsely-detected sources (PFS), defined as $PFS = \frac{N_{false}}{N_{estimated}} \times 100\%$, where $N_{false}$ and $N_{estimated}$ are the numbers of falsely-detected and estimated sources respectively. A falsely-detected source is defined as an estimated source whose distance to its closest real source is larger than 0.6 times the unit distance of the source layer.
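The three indicators of Yao and Dewald can be sketched as below. The sketch assumes that the estimated sources have already been extracted by thresholding the current strength and are given, like the real sources, as arrays of 3-D locations; `unit` stands for the unit distance of the source layer, and all function names are hypothetical. Note that ED marks a real source as detected when it is the nearest real source of some estimate, whereas PUS and PFS use the 0.6 × unit distance threshold.

```python
import numpy as np


def _distances(est, real):
    # d[i, j] = Euclidean distance from estimated source i to real source j
    return np.linalg.norm(est[:, None, :] - real[None, :, :], axis=-1)


def error_distance(est, real):
    d = _distances(est, real)
    term1 = d.min(axis=1).mean()            # each estimate to closest real source
    detected = np.unique(d.argmin(axis=1))  # real sources marked as detected
    undetected = np.setdiff1d(np.arange(len(real)), detected)
    # Each undetected real source to its closest estimate (0 if none undetected)
    term2 = d[:, undetected].min(axis=0).mean() if undetected.size else 0.0
    return float(term1 + term2)


def pus_pfs(est, real, unit, factor=0.6):
    d = _distances(est, real)
    n_un = np.sum(d.min(axis=0) > factor * unit)     # real sources left unmatched
    n_false = np.sum(d.min(axis=1) > factor * unit)  # estimates with no real source
    return float(100.0 * n_un / len(real)), float(100.0 * n_false / len(est))


real = np.array([[0.0, 0.0, 0.0], [10.0, 0.0, 0.0]])
est = np.array([[1.0, 0.0, 0.0]])
print(error_distance(est, real))      # 10.0
print(pus_pfs(est, real, unit=5.0))   # (50.0, 0.0)
```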

The accuracy with which a source can be localized is affected by a number of factors including source-modeling errors, head-modeling errors, measurement-location errors and EEG noise [73]. Source localization errors depend also on the type of algorithm used in the inverse problem. In the case of L1 and L2 minimum norm approaches, the localization depends on several factors: the number of electrodes, grid density, head model, the number and depth of the sources and the noise levels.

In [3], the effects of dipole depth and orientation on source localization were investigated in four realistic head models with varying sets of simulated random noise. It was found that, if the signal-to-noise ratio is above a certain threshold, localization errors in realistic head models are on average the same for deep and superficial sources, and no significant difference in accuracy is to be expected between radial and tangential dipoles. As the noise increases, localization errors increase, particularly for deep sources of radial orientation. Similarly, [46] found that the advantage of a realistic head model over a spherical head model decreases as the noise level increases. It has also been found that solutions assuming multiple sources are more sensitive to small changes in the recorded EEG than solutions for a single dipole [73].

4.1 Literature review of performance results of different inverse solutions

This section reviews the performance of the different inverse solutions described above and cites studies in which some of these solutions have been compared.

In [5], Pascual-Marqui compared five state-of-the-art non-parametric algorithms: the minimum norm (MN), weighted minimum norm (WMN), low resolution electromagnetic tomography (LORETA), Backus-Gilbert and Weighted Resolution Optimization (WROP). Using a three-layer spherical head model with 818 grid points (intervoxel distance of 0.133) and 148 electrodes, the results showed that, on average, only LORETA achieves an acceptable localization error of 1 grid unit when a single source is simulated. The other four inverse solutions failed to localize non-boundary sources, and the author therefore states that they cannot be classified as tomographies since they lack depth information. When comparing MN and LORETA solutions with different L_p norms, Jun Yao et al. [72] also found that LORETA with the L1 norm gives the best overall estimation. In this analysis a realistic Boundary Element Method (BEM) head model was used, and the simulated data consisted of three datasets designed to evaluate both localization ability and spatial resolution. Unlike the data in [5], these simulated data included noise, with the SNR set to 9–10 dB in each case, and the reported results were averaged over 10 time samples. In [72] these current distribution methods were also compared to dipole methods, in which only a number of discrete generators are active. Although the dipole methods gave relatively low error distances, suggesting that a priori knowledge of the number of dipoles could improve the inverse results, they showed a medial and posterior shift for all three datasets; this shift was not present for the current distribution methods. Jun Yao et al. also used the percentage of undetected sources (PUS) to compare the solutions: LORETA with the L1 norm resulted in a significantly smaller and less variable PUS than all the other methods tested in [72]. When the techniques were applied to real data, the same approach again gave qualitatively superior results, consistent with those obtained with other neuroimaging techniques or cortical recordings.

Pascual-Marqui also tested the effect on localization when the simulated data are generated by two sources [5], one of which was placed very deep. LORETA and WROP were used to localize these two sources: LORETA identified both but gave a blurred result (hence the name low resolution), whereas WROP did not succeed in identifying the two sources. MN, WMN and Backus-Gilbert gave a similar performance. From this analysis, Pascual-Marqui concluded that LORETA satisfies the minimum necessary condition for being a tomography, although this does not imply that any source distribution can be identified suitably with LORETA [5].

In [32], Pascual-Marqui developed another algorithm called sLORETA. Despite its name, sLORETA is not an updated version of LORETA: it is based on the MN solution, which is known to perform poorly when localizing deep sources. sLORETA handles this limitation by standardizing the MN estimates and basing localization inference on these standardized estimates [32]. Using a three-layer spherical head model registered to the Talairach human brain atlas, with 6340 voxels at 5 mm resolution and the solution space restricted to the cortical gray matter and hippocampus, sLORETA was found to give zero localization error for noiseless, single-source simulations. The same algorithm was tested on noisy data with a noise scalp field standard deviation equal to 0.12 and 0.082 times that of the source with the lowest scalp field standard deviation, the regularization parameter being estimated by cross-validation. When compared to the Dale method, sLORETA had the lowest localization errors and the lowest amount of blurring [32].
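The standardization idea can be illustrated in a simplified setting: fixed source orientations, an identity matrix in place of the average-reference operator, a random lead field and an arbitrary regularization value `alpha` (all assumptions; `sloreta_fixed` is a hypothetical name). The regularized MN estimate $\hat{J}_l$ at each source is squared and divided by the corresponding diagonal element $[TK]_{ll}$ of the resolution matrix. In this noise-free, single-source case the maximum of the standardized values falls exactly at the true source, which follows from the Cauchy–Schwarz inequality.

```python
import numpy as np


def sloreta_fixed(K, phi, alpha=1e-2):
    """Standardized minimum norm estimate for fixed-orientation sources.

    K     : lead field, shape (N electrodes, S sources)
    phi   : scalp potentials, shape (N,)
    alpha : Tikhonov regularization parameter (assumed, not cross-validated)
    """
    C_inv = np.linalg.inv(K @ K.T + alpha * np.eye(K.shape[0]))
    T = K.T @ C_inv                       # regularized MN inverse operator
    j_mn = T @ phi                        # MN current estimate
    r_diag = np.einsum("ij,ji->i", T, K)  # diagonal of resolution matrix T K
    return j_mn, j_mn ** 2 / r_diag       # MN estimate and standardized values


rng = np.random.default_rng(1)
K = rng.standard_normal((16, 200))
phi = 2.0 * K[:, 123]                     # single noise-free source at index 123
j_mn, f = sloreta_fixed(K, phi)
print(int(np.argmax(f)))                  # 123
```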

Another inverse solution, considered as a complement to LORETA, is VARETA [34]. This is a weighted minimum norm solution with a spatially varying regularization parameter, whereas in LORETA this parameter is constant. Varying the parameter allows VARETA to accommodate both concentrated and distributed sources. In [34], this inverse solution is compared to the FOCUSS algorithm [30], an estimation method based on recursive, weighted norm minimization in which each step solves a linear weighted minimum norm problem. When tested on noise-free simulations with single or multiple sources, FOCUSS achieved correct identification, but the algorithm depends on its initialization. At each iteration it updates the weights according to the strength of the solution in the previous iteration, and it converges to a set of concentrated sources equal in number to the number of electrodes. VARETA, on the other hand, is based on a Bayesian approach and does not necessarily converge to a set of dipoles if the simulated sources are distributed [34].
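The FOCUSS recursion can be sketched as follows. This shows only the basic re-weighting idea, assuming a fixed-orientation lead field K, noise-free data and initialization with the MN solution; the function name `focuss` and the iteration count are hypothetical choices, not the settings of the published algorithm. Each step solves a weighted minimum norm problem whose weights are the magnitudes of the previous estimate, which drives most entries towards zero.

```python
import numpy as np


def focuss(K, b, n_iter=50):
    """Basic FOCUSS re-weighting, initialized with the minimum norm solution.

    K : lead field (N electrodes, S sources), b : measurements (N,)
    """
    x = np.linalg.pinv(K) @ b              # minimum norm initialization
    for _ in range(n_iter):
        W = np.diag(np.abs(x))             # weights from the previous iterate
        x = W @ np.linalg.pinv(K @ W) @ b  # weighted minimum norm step
    return x


rng = np.random.default_rng(2)
K = rng.standard_normal((10, 40))
b = K[:, 7]                                # one noise-free source
x = focuss(K, b)
support = np.flatnonzero(np.abs(x) > 1e-3 * np.abs(x).max())
```

Since the weights are inherited from the previous iterate, a different starting point can lead to a different concentrated solution, which is the initialization dependence noted above.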

In [20], Hesheng Liu et al. developed another algorithm based on LORETA and FOCUSS. This algorithm, called Shrinking LORETA FOCUSS (SLF), was tested on single- and multi-source reconstruction and compared to three other inverse solutions, namely WMN, L1-NORM and LORETA-FOCUSS. In all the scenarios considered, consisting of sources in different arrangements and with different strengths and positions, SLF gave the solution closest to the expected one. WMN often gave a very blurred result, L1-NORM produced spurious sources or blurred images with incorrect amplitudes, and LORETA-FOCUSS generally gave a high resolution, but some sources were lost or had varying magnitudes. LORETA-FOCUSS had a localization error 3.2 times larger than that of SLF and an energy error 11.6 times larger. SLF assumes that the neuronal sources are both focal and sparse. If this is not the case and the sources are distributed over a large area, a low resolution solution such as that offered by LORETA would be more appropriate [20].

Hesheng Liu [41] states that when few sources are present and these are clustered, high resolution algorithms such as MUSIC can give better results. Solutions such as FOCUSS are also capable of reconstructing sparse sources. The performance of these algorithms degrades, however, when the sources are numerous and extended, in which case low resolution solutions such as LORETA and sLORETA are better. To achieve satisfactory results in both circumstances, Hesheng Liu developed the SSLOFO algorithm [41]. Apart from localizing the sources with relatively high spatial resolution, this algorithm also gives good estimates of the time series of the individual sources, temporal information that LORETA, sLORETA, FOCUSS and SLF fail to recover satisfactorily. For single source reconstruction, the mean localization error of SSLOFO was 0 with a mean energy error of 2.99%; the mean localization error of sLORETA was also 0, but its mean energy error rose to 99.55%, since sLORETA has very poor resolution and a broad point spread function [41]. In the same paper the authors also analyzed the effect of noise: noise levels ranging from 0 to 30 dB were considered and the mean localization error over a total of 2394 voxel positions was computed. WMN and FOCUSS both resulted in large localization errors, while sLORETA compared well with SSLOFO for a single source, especially when the noise level was high. In terms of temporal resolution, however, SSLOFO gave the best results: WMN mixed signals coming from nearby sources, sLORETA can only give power values and FOCUSS cannot produce a continuous waveform [41].

Another technique that, like SSLOFO, captures both spatial and temporal information is ST-MAP [4]. If only spatial information is taken into account, the so-called S-MAP recovers most edges and preserves the global temporal shape, but with possible sharp magnitude variations. ST-MAP instead gives a much smoother reconstruction of the dipoles, which helps stabilize the algorithm and reduces the computation time by 22% compared to S-MAP. These results were compared to those obtained by Quadratic Regularization (QR) and LORETA, using the data fit and the reconstruction error as error measures. S-MAP and ST-MAP were both found to be superior to these algorithms, giving much smoother solutions and reasonably lower reconstruction errors [4].

Since the inverse problem is underdetermined, most solutions use a mathematical criterion to find the optimal result, which leaves out any reliable biophysical and psychological constraints. LAURA takes these into consideration by incorporating biophysical laws into the minimum norm framework. In simulations comparing it with the minimum norm solutions MN, WMN, LORETA and EPIFOCUS [38], EPIFOCUS and LAURA outperformed LORETA, performance being measured as the percentage of dipole localization errors smaller than 2 grid points.

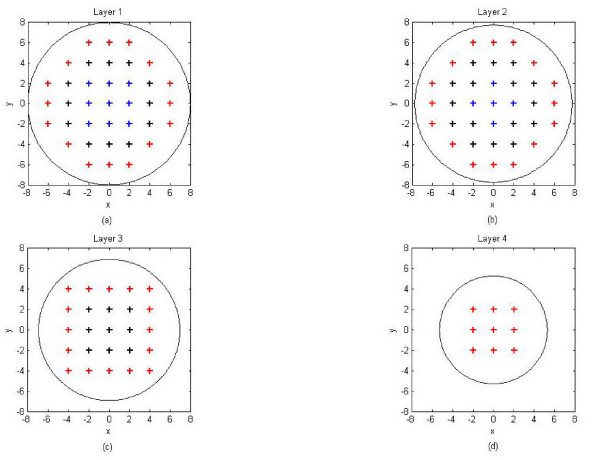

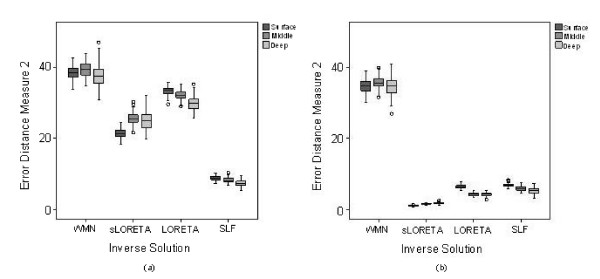

Another solution to the inverse problem which was mentioned in Section 3.2.1 is BESA. This technique is very sensitive to the initial guess of the number of dipoles and therefore is highly dependent on the level of user expertise. In [74] it is shown that the grand average location error of 9 subjects who were familiar with evoked potential data was 1.4 cm with a standard deviation of 1 cm.