Abstract

Objective

Interruptive alerts within electronic applications can cause “alert fatigue” if they fire too frequently or are clinically reasonable only some of the time. We assessed the impact of non-interruptive, real-time medication laboratory alerts on provider lab test ordering.

Design

We enrolled 22 outpatient practices into a prospective, randomized, controlled trial. Clinics either used the existing system or received on-screen recommendations for baseline laboratory tests when prescribing new medications. Since the warnings were non-interruptive, providers did not have to act upon or acknowledge the notification to complete a medication request.

Measurements

Data were collected each time providers performed suggested laboratory testing within 14 days of a new prescription order. Findings were adjusted for patient and provider characteristics as well as patient clustering within clinics.

Results

Among 12 clinics with 191 providers in the control group and 10 clinics with 175 providers in the intervention group, there were 3673 total events where baseline lab tests would have been advised: 1988 events in the control group and 1685 in the intervention group. In the control group, baseline labs were requested for 771 (39%) of the medications. In the intervention group, baseline labs were ordered by clinicians in 689 (41%) of the cases. Overall, no significant association existed between the intervention and the rate of ordering appropriate baseline laboratory tests.

Conclusion

We found that non-interruptive medication laboratory monitoring alerts were not effective in improving receipt of recommended baseline laboratory test monitoring for medications. Further work is necessary to optimize compliance with non-critical recommendations.

Introduction

Safe medication use includes ensuring that a medication is appropriate for an individual patient and then monitoring for subsequent adverse effects. Laboratory values give providers insight into a patient's ability to tolerate a medication, yet too often clinicians fail to order the baseline tests that could prevent or warn of potential adverse drug events. 1–6

Many clinical information systems now employ decision support to improve serum testing in conjunction with new prescriptions. A number of methods for intervention exist, ranging from passive displays of the most recent test results and pharmacotherapy reference links to more active pop-up warnings for excessive chemotherapy dosing and bidirectional feedback between the user and a support tool for antibiotic selection. 7–10 Some types of decision support can also occur after the point of care. For example, one system scanned for patients given diuretics, sent automated reminders to physicians the following day for missing baseline potassium values, and prompted a 9.8% increase in potassium testing. 11 Raebel et al. reported a collaborative approach in which pharmacists, alerted to missing test results, encouraged physicians to place laboratory orders, and this intervention significantly raised testing from 70.2% to 79.1%. 12

The conundrum that surrounds decision support is not its utility but how to ensure its effectiveness. Taking into account that “speed is everything” and that needs must be anticipated and “delivered in real time,” decision support systems must also demonstrate that they can influence the delivery of medicine in a useful and timely fashion. 13–17 In the outpatient setting of today, providers and patients discuss medical issues and develop a plan primarily during office visits. If alerts or reminders are to have maximal influence on the ordering of prescriptions and appropriate laboratory tests, they should be visible during these encounters. Some commercial prescribing applications have included real-time alerts, with a reported significant 19% increase in laboratory requests when interruptive alerts fired for medication orders missing baseline values for potassium, platelets, renal, and/or liver function. 18 Vendors, however, bear the burden of producing one product that must serve varied audiences, and sometimes may include decision support that fires with low thresholds. 19 Recommendations which could result in severe morbidity or mortality are often presented to providers with the same urgency and level of interruption as suggestions with lesser clinical consequences. Providers already hassled by time pressures become “fatigued” by the number of clinically insignificant alerts, and subsequently may bypass warnings that could prevent adverse drug events. 20

To reduce over-alerting, we developed a knowledge base for outpatient clinics with alerts stratified into interruptive and non-interruptive notification levels. Interruptive alerts, the subject of a study by Shah et al., included alerts that either completely prevented completion of an order or permitted order completion contingent upon the provision of an override reason. 20 Non-interruptive alerts provided a warning in a reserved information box on the screen but did not require user intervention to proceed. The tiered system of warnings during medication prescribing demonstrated that two-thirds of interruptive alerts were accepted when paired with non-interruptive alerts for issues of lower clinical severity. In this study, we performed a prospective, randomized, controlled trial to evaluate the impact of non-interruptive alerts on baseline lab monitoring for newly prescribed medications.

Methods

Setting

The study was conducted among 22 adult primary care clinics in the Partners HealthCare System, a large integrated delivery system in the greater Boston area affiliated with two large academic teaching hospitals, Brigham and Women's Hospital and Massachusetts General Hospital. The outpatient practices included 16 hospital-affiliated clinics, two major women's health centers, and four community health centers. Study participants included 173 active staff and affiliated physicians, 29 fellows, 59 house staff, 29 nurse practitioners and physician assistants, and 76 ancillary staff including nurses. Since 2000, Partners HealthCare has used an ambulatory electronic medical record called the Longitudinal Medical Record (LMR) which includes clinical notes, reports (laboratory, radiology, and pathology), and electronic prescribing.

Randomization

We performed a stratified randomization of the primary care clinics based on site characteristics to balance the distributions of gender and socioeconomic factors between the intervention and control groups. The specific site characteristics used were 1) general primary care versus women's health center, and 2) academic clinic versus community health center. All providers were assigned to control or intervention categories based upon their practice affiliation. Randomization was conducted at the clinic level in order to avoid learning biases and treatment arm cross-contamination. 21 If randomized at the patient level, physicians would likely see reminders for some patients but not others. If randomized at the provider level, patients seeking care from multiple providers would receive care both with and without reminders. We excluded six physicians who administered clinical care to patients in more than one clinic. The 23 patients who sought care in more than one clinic were seen exclusively by physicians in either the control or the intervention category, but not both.
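To make the allocation scheme concrete, the following is a minimal sketch of stratified, clinic-level randomization. It is an illustration only, not the procedure used in the study: the clinic names, the stratum labels, the seed, and the alternate-after-shuffle rule are all assumptions introduced for the example.

```python
import random
from collections import defaultdict


def stratified_cluster_randomize(clinics, seed=0):
    """Assign whole clinics to study arms, balancing arms within each stratum.

    `clinics` maps a clinic name to its stratum, here a tuple of
    (care type, setting). Names and strata are hypothetical.
    """
    rng = random.Random(seed)
    by_stratum = defaultdict(list)
    for name, stratum in clinics.items():
        by_stratum[stratum].append(name)

    assignment = {}
    for stratum in sorted(by_stratum):
        members = sorted(by_stratum[stratum])
        rng.shuffle(members)
        # Randomly pick which arm receives the extra clinic in odd-sized strata.
        arms = ["control", "intervention"]
        rng.shuffle(arms)
        for i, name in enumerate(members):
            assignment[name] = arms[i % 2]
    return assignment


# Hypothetical clinics, described by the two site characteristics in the text.
clinics = {
    "clinic_a": ("general", "academic"),
    "clinic_b": ("general", "academic"),
    "clinic_c": ("general", "academic"),
    "clinic_d": ("general", "community"),
    "clinic_e": ("general", "community"),
    "clinic_f": ("womens_health", "academic"),
    "clinic_g": ("womens_health", "academic"),
}
assignment = stratified_cluster_randomize(clinics, seed=42)

# Providers inherit the arm of their clinic, as in a cluster-randomized design.
provider_clinic = {"provider_1": "clinic_a", "provider_2": "clinic_f"}
provider_arm = {p: assignment[c] for p, c in provider_clinic.items()}
```

Because assignment happens once per clinic, every provider and every alert in that clinic falls in the same arm, which is the property that limits cross-contamination between arms.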

Clinical Content Generation

The knowledge content for our outpatient clinical decision support system was developed at Partners HealthCare and evaluated by a physician and pharmacist expert panel with recommendations from First DataBank, Hansten's, and the U.S. Food and Drug Administration, among other sources. 20 Specifically, contributions from preexisting knowledge bases and evidence-based literature helped focus the list and guide clinical importance. The knowledge base only included alerts pertinent to ambulatory care. Through multiple discussions, the expert panel placed each alert into one of three clinical severity tiers, where the non-interruptive tier covered issues with the least likely and least severe consequences based on clinical studies, case findings, and panel experience. The interruptive alerts required providers either to completely abort the order or to give a reason for overriding the warning. With non-interruptive alerts, the recommendations included baseline monitoring, use of a drug with caution, or increased monitoring to capture any unwanted effects. Moreover, providers did not have to supply a response to the warning in order to proceed with the intended medication order. In this particular evaluation, an example of a non-interruptive medication laboratory monitoring alert would be to monitor potassium when prescribing ACE inhibitors. The full list of medication-laboratory monitoring rules is provided in Appendix 1 (available as a JAMIA online supplement at www.jamia.org). All decisions required consensus, and clinical specialists were consulted if the scope extended beyond the panel's expertise.

Provider Workflow and Alert Logic

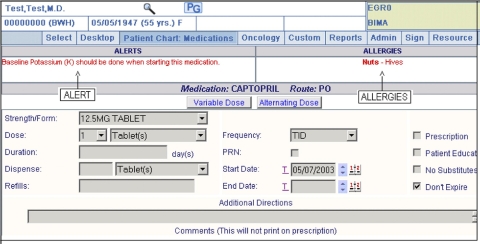

Prior to implementation of the non-interruptive medication laboratory alerts, each prescription was entered sequentially into the LMR but was not evaluated for medication-laboratory test alerts. During the study, non-interruptive medication laboratory monitoring alerts were implemented only for the intervention arm and were displayed at the top of the medication ordering screen (Figure 1).

Figure 1. Screen-shot example of a non-interruptive medication laboratory alert within the Longitudinal Medical Record (LMR), our electronic health record. Here the provider has initiated a computerized medication order entry for Captopril. The tool queries our clinical data repository for a baseline lab. Since no results are found, an alert is triggered in real-time, and a recommendation is displayed in the left, upper box with red-colored text. Because the alert is designed to be non-interruptive, providers are not forced to change their medication order, correct any errors in the system, or provide a reason for their decision to override the alert.

The alert is designed to potentially fire only when the provider has initiated an order for a medication in the LMR. Once the user has selected a medication, even before the order has been submitted, the tool will immediately query our clinical data repository for baseline labs within a time frame (between 14 days and 12 months) defined by the knowledge base. If those baseline labs are missing, a notification will be displayed in real-time on the screen in the left, upper portion of the screen. The warnings were consistently displayed in red and were always positioned in the same text box. No general education messages were ever displayed in this reserved screen space, and the presence of any text in the alert box implicitly meant that the patient was missing a laboratory value. Providers did not have to request the recommended lab tests in order to finalize the prescription. In addition, no reason for bypassing the non-interruptive alert was required. If the clinician wanted to order the suggested test, they did so via the usual paper requisition for lab tests.
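The check described above can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the LMR implementation: the rule table, the lookback values, the function names, and the message wording are hypothetical. Only the captopril and potassium pairing and the 14-day to 12-month range of lookback windows come from the text.

```python
from datetime import date, timedelta

# Hypothetical rule table: medication -> list of (lab test, lookback in days).
# The knowledge base defined lookbacks between 14 days and 12 months; the
# specific values here are illustrative assumptions.
RULES = {
    "captopril": [("potassium", 365)],
    "drug_x": [("creatinine", 14)],  # placeholder medication, not from the study
}


def missing_baseline_labs(medication, lab_history, today, rules=RULES):
    """Return the lab tests with no result inside the rule's lookback window.

    `lab_history` maps a lab test name to the dates on which results exist.
    """
    missing = []
    for test, lookback_days in rules.get(medication.lower(), []):
        window_start = today - timedelta(days=lookback_days)
        dates = lab_history.get(test, [])
        if not any(window_start <= d <= today for d in dates):
            missing.append(test)
    return missing


def alert_text(medication, lab_history, today):
    """Text for the reserved alert box; empty when no baseline lab is missing."""
    missing = missing_baseline_labs(medication, lab_history, today)
    if not missing:
        return ""
    return "Recommend baseline " + ", ".join(missing) + " for " + medication


# Non-interruptive by design: the order proceeds regardless of the return value.
message = alert_text("Captopril", {}, date(2003, 8, 1))
```

Returning an empty string when nothing is missing mirrors the convention that any text in the reserved box implies a missing laboratory value.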

Data Collection

The study was conducted from July 21, 2003 to January 20, 2004, and data were collected each time any study provider, control or intervention, should have ordered a recommended laboratory test when prescribing a medication. If the recommended laboratory test appeared in the Partners Clinical Data Repository within two weeks of the outpatient encounter, then the medication laboratory alert was considered accepted. Patient demographics and provider practice affiliations were also collected during the course of the study.

Statistical Analysis

The unit of analysis was defined as an outpatient visit in a study practice by any patient with any provider in which a medication was ordered for the first time. The primary outcome was the patient's receipt of the appropriate laboratory testing within 14 days of the clinical encounter. In our organization, laboratory tests are routinely completed within 24 hours of the time of blood specimen receipt.
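The outcome definition can be expressed as a simple classification rule. The sketch below is illustrative only: the function names are hypothetical, and it assumes that results dated on the encounter day count and that day 14 is included, neither of which is specified in the text.

```python
from datetime import date, timedelta

FOLLOW_UP = timedelta(days=14)  # window taken from the outcome definition


def alert_accepted(encounter_date, lab_result_dates):
    """True if a recommended lab result is dated within 14 days of the encounter.

    Assumes inclusive boundaries on both ends of the window.
    """
    deadline = encounter_date + FOLLOW_UP
    return any(encounter_date <= d <= deadline for d in lab_result_dates)


def acceptance_rate(events):
    """`events` is an iterable of (encounter_date, [recommended lab result dates])."""
    events = list(events)
    if not events:
        return 0.0
    accepted = sum(alert_accepted(enc, labs) for enc, labs in events)
    return accepted / len(events)


# Hypothetical events: one accepted, one with a late result, one with none.
example_events = [
    (date(2003, 9, 1), [date(2003, 9, 3)]),
    (date(2003, 9, 1), [date(2003, 10, 1)]),
    (date(2003, 9, 1), []),
]
rate = acceptance_rate(example_events)
```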

In univariate analyses, the control and intervention groups were compared using the chi-square statistic. To assess the impact of the alerting system on rates of appropriate laboratory monitoring, we used multi-variable logistic regression models. These were adjusted for patient age, sex, race, and insurance status as well as provider age and sex in order to address potential residual confounding occurring after randomization at the clinic level. The GENMOD procedure in SAS (Version 9.1, Cary, NC) was used to conduct the modeling while accounting for clustering of patients within clinical sites. 22 Odds ratios for each laboratory test were calculated, including 95% confidence intervals around the point estimate.
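For readers who wish to reproduce the univariate comparisons, a chi-square test on a 2x2 table can be computed with the standard library alone. The sketch below is not the SAS code used in the study. The counts are taken from Table 1, and whether a continuity correction was applied in the original analysis is not stated, so the `yates` switch is an assumption added for illustration.

```python
import math


def chi_square_2x2(x1, n1, x2, n2, yates=False):
    """Pearson chi-square test (1 df) comparing x1/n1 with x2/n2.

    Returns (chi-square statistic, two-sided p-value).
    """
    a, b = x1, n1 - x1
    c, d = x2, n2 - x2
    n = n1 + n2
    diff = abs(a * d - b * c)
    if yates:
        # Optional continuity correction; its use in the study is an assumption.
        diff = max(0.0, diff - n / 2)
    chi2 = n * diff ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    # For 1 degree of freedom, the upper tail equals erfc(sqrt(chi2 / 2)).
    p = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p


# Female patients: 767 of 1520 (control) versus 722 of 1245 (intervention).
chi2_female, p_female = chi_square_2x2(767, 1520, 722, 1245)

# African American patients: 181 of 1520 versus 122 of 1245.
chi2_aa, p_aa = chi_square_2x2(181, 1520, 122, 1245, yates=True)
```

With these inputs the p-value for female sex falls below 0.001 and the p-value for African American race stays above 0.05, in line with the significance pattern reported in Table 1.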

Results

Overall

Among 12 clinics with 191 providers in the control group and 10 clinics with 175 providers in the intervention group, there were 3673 events among 2765 patients in which a non-interruptive recommendation would have fired. During this period, 1520 patients were seen in clinics assigned to the control group, and 1245 patients were seen in clinics assigned to the intervention group. A summary and comparison of demographic factors in each study arm are shown in Table 1 and confirm that significant differences exist between control and intervention patients. There were more Hispanics and Medicaid patients in the control group. There were more female, white, and Medicare patients in the intervention group. Among providers, females were more represented in the control group.

Table 1. Demographics of Both Patients and Providers

| PATIENT | Control (1520) | Intervention (1245) | p-value |
|---|---|---|---|
| Female | 767 (50%) | 722 (58%) | <0.001 |
| Race | | | |
| White | 868 (57%) | 865 (69%) | <0.001 |
| African American | 181 (12%) | 122 (10%) | 0.087 |
| Hispanic | 284 (19%) | 111 (9%) | <0.001 |
| Other | 164 (11%) | 119 (10%) | 0.313 |
| Unknown | 23 (2%) | 28 (2%) | 0.158 |
| Insurance | | | |
| Medicare | 425 (28%) | 452 (36%) | <0.001 |
| Medicaid | 216 (14%) | 138 (11%) | 0.016 |
| Private | 823 (54%) | 621 (50%) | 0.027 |
| None | 56 (4%) | 34 (3%) | 0.164 |
| PROVIDER | Control (191) | Intervention (175) | p-value |
| Female | 141 (74%) | 110 (63%) | 0.024 |

Of the 1988 events that occurred in the control group, laboratory tests were requested for 771 (39%) within 2 weeks of the visit. In the intervention group, 1685 events were monitored, and laboratory values were ordered by clinicians in 689 (41%) of the cases. The association between the intervention and the rate of ordering appropriate tests produced an odds ratio of 1.048 with a 95% confidence interval from 0.753 to 1.457 (p = 0.782).
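As a rough check on these figures, a crude (unadjusted) odds ratio can be computed directly from the event counts. The sketch below is not the study's model: it ignores the covariate adjustment and the clustering of patients within clinics, so it is not expected to reproduce the reported estimate of 1.048. The function name and the Wald interval are choices made for this illustration.

```python
import math


def odds_ratio_wald(events_tx, n_tx, events_ctl, n_ctl, z=1.96):
    """Crude odds ratio with a Wald 95% confidence interval from a 2x2 table."""
    a, b = events_tx, n_tx - events_tx      # intervention: ordered, not ordered
    c, d = events_ctl, n_ctl - events_ctl   # control: ordered, not ordered
    odds_ratio = (a * d) / (b * c)
    se_log = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    lower = math.exp(math.log(odds_ratio) - z * se_log)
    upper = math.exp(math.log(odds_ratio) + z * se_log)
    return odds_ratio, lower, upper


# Counts from the text: 689 of 1685 (intervention) and 771 of 1988 (control).
crude_or, crude_lo, crude_hi = odds_ratio_wald(689, 1685, 771, 1988)
```

The crude odds ratio is approximately 1.09 and its interval spans 1, which agrees in direction with the adjusted result. An unadjusted interval of this kind would be expected to be narrower than the published one because it treats every event as independent.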

By Medication and Medication Class

In the study period, medication laboratory monitoring alerts were displayed for 70 different medications. For the 25 most commonly involved medications (at least 27 orders placed), there was no consistent correlation between the intervention and the level of alert compliance. Of these, 14 of the medications demonstrated a non-significant positive correlation with alerts and level of laboratory ordering, and eight had a non-significant negative correlation. Incidentally, the three medications with significant correlations demonstrated a negative association, with odds ratios ranging from 0.104 to 0.121—pravastatin (p = 0.024, 95% confidence interval 0.015 to 0.744), atorvastatin (p = 0.034, 95% confidence interval 0.299 to 0.952), and lithium (p = 0.044, 95% confidence interval 0.016 to 0.947).

The drugs were also classified into 23 medication classes, of which 12 classes had at least 33 orders placed (Table 2). Again, non-significant associations were seen. Odds ratios across all categories ranged from 0.117 to 2.583, where 6 of the medication classes demonstrated a positive association (1.184 to 2.583).

Table 2. By Class of Medication, Odds Ratios for the Association between Non-interruptive Alerts and the Quantity of Labs Ordered

| Medication Class | Total Labs Ordered | Total Alerts Fired | Odds Ratio | 95% Confidence Interval | p-value |
|---|---|---|---|---|---|
| Antimanic Agents | 24 | 71 | 0.117 | (0.016, 0.858) | 0.035 |
| HMG-CoA Reductase Inhibitors | 295 | 1025 | 0.654 | (0.377, 1.136) | 0.132 |
| Diuretics | 404 | 799 | 1.324 | (0.866, 2.023) | 0.196 |
| Angiotensin-Converting Enzyme Inhibitors | 289 | 621 | 1.184 | (0.660, 2.124) | 0.571 |
| Hypoglycemics | 82 | 177 | 1.221 | (0.662, 2.252) | 0.524 |
| Antifungal Antibiotics | 65 | 106 | 0.854 | (0.275, 2.649) | 0.785 |
| Anticonvulsants | 44 | 255 | 0.591 | (0.127, 2.756) | 0.503 |
| Antiarthritics | 25 | 103 | 1.328 | (0.564, 3.129) | 0.517 |
| Cardiotonic Agents | 35 | 56 | 0.346 | (0.024, 4.977) | 0.435 |
| Antituberculosis Agents | 62 | 115 | 1.964 | (0.506, 7.617) | 0.329 |
| Angiotensin II Receptor Antagonists | 53 | 130 | 2.583 | (0.821, 8.131) | 0.105 |

“Total labs ordered” includes the number of times serum testing was completed in both control and intervention groups within 14 days of the alert firing. “Total alerts fired” includes both the number of times the alert potentially could have fired in the control group and the number of times the non-interruptive alert did fire in the intervention group. The sample size for antiarrhythmic agents was too small to generate a representative odds ratio.

By Lab Ordered

Within the knowledge base, 12 laboratory tests could have been recommended by the non-interruptive baseline alerts. Five laboratory tests generated a sufficient sample size to provide an odds ratio. No significant associations were demonstrated between the presence of non-interruptive alerts and whether certain types of labs were ordered more frequently (Table 3).

Table 3. By Laboratory Test Ordered, Odds Ratio between Presence of Non-interruptive Alerts and the Quantity of Labs Ordered

| Laboratory Test | Total Labs Ordered | Total Alerts Fired | Odds Ratio | 95% Confidence Interval | p-value |
|---|---|---|---|---|---|
| Alkaline Phosphatase | 18 | 82 | 0.740 | (0.223, 2.456) | 0.623 |
| Alanine Aminotransferase | 483 | 1453 | 0.789 | (0.502, 1.242) | 0.306 |
| Thyroid Stimulating Hormone | 17 | 56 | 0.811 | (0.235, 2.803) | 0.741 |
| Creatinine | 165 | 384 | 1.267 | (0.738, 2.175) | 0.392 |
| Potassium | 744 | 1526 | 1.288 | (0.852, 1.947) | 0.229 |

“Total labs ordered” includes the number of times serum testing was completed in both control and intervention groups within 14 days of the alert firing. “Total alerts fired” includes both the number of times the alert potentially could have fired in the control group and the number of times the non-interruptive alert did fire in the intervention group.

Discussion

We found that our non-interruptive medication laboratory alerts did not impact provider ordering of baseline labs for new prescriptions, even when analyzed by medication class or laboratory test type. We felt that our warnings merited action from providers in most instances. The attempt, however, to decrease alert fatigue through non-interruptive alerts did not translate into changes in clinician behavior for this set of alerts. In contrast to the Shah et al. study, which demonstrated that alert compliance increased with a limited set of interruptive, severity-tiered alerts, our results suggest that failure to demand action from the user may render alerts ineffective.

Several hypotheses could explain this result. Assuming that providers have every intention of delivering an accepted standard of care, lack of alert compliance implies either a deliberate choice to disagree with the recommendations or a latency that includes unawareness, information deficiencies, and human error. If the decision to ignore the alerts is active, then users are reading the warnings. They may, however, have felt that our knowledge base for the non-interruptive alerts had too low a threshold for firing medication laboratory alerts and/or may not have agreed with the recommendations. We did, however, expect high clinical acceptance from our providers, especially since the knowledge base used in this study was reviewed by a team within our institution.

Latency, or the tendency to let the status quo “slide,” however, is more concerning. The goal of a decision support system is to adjust for human knowledge gaps and the tendency to err, especially when overwhelmed with the tremendous load of information in the delivery of health care. Keeping in mind Bates et al.'s suggestion that “physicians will strongly resist stopping,” we had designed our alerts to be minimally intrusive, occupying a restricted box within the medication order entry screen. We wanted neither to resort to cluttering pop-ups nor to sacrifice on-screen real estate belonging to clinical order entry, yet it is possible the warnings were simply too easy to ignore. Although the alerts were displayed in red, users may require additional stimuli such as alternate color schemes, animations, or screen arrangements to capture their attention. 23 Alternatively, providers could possess an inherent bias against non-interruptive recommendations, mentally linking suggestions that are neither life-threatening nor action-dependent to the assumption that the information is not very important. If the total number of alerts crossed a clinician's subjective threshold of “too much” or “too distracting,” the user could have adopted the “lesser evil” of accepting the higher-tiered, interruptive warnings and bypassing the less critical, non-interruptive warnings. One critical systems aspect is that we did not have an electronic link between computerized physician medication order entry, our automated alerts, and laboratory requisitions at the time of the study. Although the alert may have fired when a request for medications was initiated, providers could submit the recommended blood tests only by hand. The involvement of two media, computer and paper, may simply have been too inconvenient and disruptive to permit a reasonable level of alert compliance. Ultimately, the outcome is likely not dominated by one reason but rather reflects an amalgamation of these many factors.

Our findings do not diminish the overall utility of tiered alerts. As noted by Shah et al. in a study which utilized the identical Partners knowledge base of medication warnings, high acceptance of interruptive, moderate-high acuity alerts depended on a tiered alert system to limit alert burden. 20 By maintaining less critical recommendations in a non-interruptive state, users were less prone to workflow disruption and possibly more amenable to heeding an alert that warranted interruptive decision-making. 23,24 These findings reinforce that the significant value of a non-interruptive alert lies in its minimally intrusive nature. Promoting non-interruptive alerts of low importance to the next tier of interruptive alerts would diminish the overall acceptance rates for interruptive alerts and make it more likely that more important alerts would be overridden.

Encouraging provider awareness of or compliance with embedded electronic alerts remains a formidable task. The range of responses to alerts has been varied, and while studies have shown some compliance with recommendations, the vast majority have demonstrated minimal to modest changes in behavior. 25–28 One example is the Weingart et al. study, which found that 91% of drug-allergy and 89% of high-severity drug-drug interaction automated alerts were overridden by physicians in ambulatory care. 29 In some cases, the reason was justified: the benefits of the drug outweighed the disadvantages or the possible adverse events, the drug selection was limited, or existing patient information was incorrect. However, ignoring or misinterpreting multiple or all alerts as “unjustified overrides” poses a patient safety issue. 18 In fact, the critical balance between the sensitivity and specificity of drug alerts lies in intercepting enough potential adverse drug events while avoiding the factors that contribute to alert overrides: fatigue caused by clinically insignificant alerts, technological problems, unnecessary workflow interruptions, and lack of time. 30

The study has a number of limitations. As we mentioned earlier, it is the unjustified overriding of alerts that poses a notable patient safety concern, but without reasons justifying why providers elected not to order the recommended baseline laboratory tests, we cannot determine the proportion of alerts that were inappropriately disregarded. In addition, we recognize that our results are influenced by the on-screen interface specific to our system, but without further feedback from users we are unable to identify any contribution of system design to human behavior that led to the final outcome. Over-testing may also have gone undetected; it was outside the scope of this study but has been examined in our other work. 31,32 Finally, these practices were all affiliated with large academic medical centers, and while they represented a diverse group, the results may not be generalizable to small, independent practices.

Future studies should include an assessment of interruptive alerts with linked lab order entry to facilitate provider ordering of the recommended baseline labs. Another study could track the eye movements of providers using a tool to register what on-screen elements are effective in capturing the user's initial attention and to what temporal extent users are paying heed to the non-interruptive alerts. Finally, we suggest that the urgency of the interruptive alerts may, in part, be defined by the existence of a lower limit provided by the less critical set of recommendations in non-interruptive alerts; however, we have yet to determine the extent non-interruptive alerts impact behavior toward the two more critical alert levels. A future study would examine the provider medication and lab ordering behavior of critical interruptive alerts with and without the presence of less urgent, non-interruptive alerts.

In conclusion, the delicate art of implementing clinically useful alerts in ambulatory care requires the consideration of many factors including user demands, knowledge base content, and systems design. The clinical importance of non-interruptive medication laboratory rules alone is an insufficient driver of full compliance with alerts, yet safe and high quality patient care is contingent upon baseline laboratory values that should be ordered in conjunction with new prescriptions. As prescribers often have limited time and a strong tendency to maintain an existing workflow, efforts should still be directed at maximizing the utility of non-interruptive alerting. Future studies should examine whether linked order entry or changes in screen design might significantly influence these results.

Acknowledgments

The authors thank the study participants, including the patients and primary care providers within the Partners HealthCare System. The authors also thank Julie Fiskio for her assistance retrieving information from the Partners clinical data repository and the Longitudinal Medical Record. Supported in part by grant HS-11169-01 from the Agency for Healthcare Research and Quality and by grant 1-T15-LM-07092 from the National Library of Medicine.

References

1. Gurwitz JH, Field TS, Harrold LR, et al. Incidence and preventability of adverse drug events among older persons in the ambulatory setting. JAMA. 2003 Mar 5;289(9):1107-1116.

2. Raebel MA, Lyons EE, Andrade SE, et al. Laboratory monitoring of drugs at initiation of therapy in ambulatory care. J Gen Intern Med. 2005 Dec;20(12):1120-1126.

3. Schiff GD, Klass D, Peterson J, et al. Linking laboratory and pharmacy: opportunities for reducing errors and improving care. Arch Intern Med. 2003 Apr 28;163(8):893-900.

4. Howard RL, Avery AJ, Slavenburg S, et al. Which drugs cause preventable admissions to hospital? A systematic review. Br J Clin Pharmacol. 2007 Feb;63(2):136-147. Epub 2006 Jun 26.

5. Matheny ME, Sequist TD, Seger AC, et al. A randomized trial of electronic clinical reminders to improve medication laboratory monitoring. J Am Med Inform Assoc. 2008 Jul-Aug;15(4):424-429. Epub 2008 Apr 24.

6. Overhage JM, Tierney WM, Zhou XH, et al. A randomized trial of “corollary orders” to prevent errors of omission. J Am Med Inform Assoc. 1997 Sep-Oct;4(5):364-375.

7. Miller RA, Waitman LR, Chen S, et al. The anatomy of decision support during inpatient care provider order entry (CPOE): empirical observations from a decade of CPOE experience at Vanderbilt. J Biomed Inform. 2005 Dec;38(6):469-485.

8. Evans RS, Pestotnik SL, Classen DC, et al. A computer-assisted management program for antibiotics and other antiinfective agents. N Engl J Med. 1998 Jan 22;338(4):232-238.

9. Rosenbloom ST, Chiu KW, Byrne DW, et al. Interventions to regulate ordering of serum magnesium levels: report of an unintended consequence of decision support. J Am Med Inform Assoc. 2005 Sep-Oct;12(5):546-553.

10. Gainer A, Pancheri K, Zhang J. Improving the human computer interface design for a physician order entry system. AMIA Annu Symp Proc. 2003:847.

11. Hoch I, Heymann AD, Kurman I, et al. Countrywide computer alerts to community physicians improve potassium testing in patients receiving diuretics. J Am Med Inform Assoc. 2003 Nov-Dec;10(6):541-546. Epub 2003 Aug 4.

12. Raebel MA, Lyons EE, Chester EA, et al. Improving laboratory monitoring at initiation of drug therapy in ambulatory care: a randomized trial. Arch Intern Med. 2005 Nov 14;165(20):2395-2401.

13. Bates DW, Kuperman GJ, Wang S, et al. Ten commandments for effective clinical decision support: making the practice of evidence-based medicine a reality. J Am Med Inform Assoc. 2003 Nov-Dec;10(6):523-530.

14. Kuperman GJ, Bobb A, Payne TH, et al. Medication-related clinical decision support in computerized provider order entry systems: a review. J Am Med Inform Assoc. 2007 Jan-Feb;14(1):29-40.

15. Gross PA, Bates DW. A pragmatic approach to implementing best practices for clinical decision support systems in computerized provider order entry systems. J Am Med Inform Assoc. 2007 Jan-Feb;14(1):25-28.

16. Bates DW, Cohen M, Leape LL, et al. Reducing the frequency of errors in medicine using information technology. J Am Med Inform Assoc. 2001 Jul-Aug;8(4):299-308.

17. Rochon PA, Field TS, Bates DW, et al. Computerized physician order entry with clinical decision support in the long-term care setting: insights from the Baycrest Centre for Geriatric Care. J Am Geriatr Soc. 2005 Oct;53(10):1780-1789.

18. Ash JS, Berg M, Coiera E. Some unintended consequences of information technology in health care: the nature of patient care information system-related errors. J Am Med Inform Assoc. 2004 Mar-Apr;11(2):104-112. Epub 2003 Nov 21.

19. Reichley RM, Resetar E, Doherty J, et al. Implementing a commercial rule base as a medication order safety net. AMIA Annu Symp Proc. 2003:983.

20. Shah NR, Seger AC, Seger DL, et al. Improving acceptance of computerized prescribing alerts in ambulatory care. J Am Med Inform Assoc. 2006 Jan-Feb;13(1):5-11. Epub 2005 Oct 12.

21. Campbell MJ, Donner A, Klar N. Developments in cluster randomized trials and Statistics in Medicine. Stat Med. 2007 Jan 15;26(1):2-19.

22. Localio AR, Berlin JA, Ten Have TR, et al. Adjustments for center in multicenter studies: an overview. Ann Intern Med. 2001 Jul 17;135(2):112-123.

23. Feldstein A, Simon SR, Schneider J, et al. How to design computerized alerts to safe prescribing practices. Jt Comm J Qual Saf. 2004 Nov;30(11):602-613.

24. Bates DW, Kuperman GJ, Wang S, et al. Ten commandments for effective clinical decision support: making the practice of evidence-based medicine a reality. J Am Med Inform Assoc. 2003 Nov-Dec;10(6):523-530. Epub 2003 Aug 4.

25. Judge J, Field TS, DeFlorio M, et al. Prescribers' responses to alerts during medication ordering in the long term care setting. J Am Med Inform Assoc. 2006 Jul-Aug;13(4):385-390.

26. Steele AW, Eisert S, Witter J, et al. The effect of automated alerts on provider ordering behavior in an outpatient setting. PLoS Med. 2005 Sep;2(9):e255.

27. Hsieh TC, Kuperman GJ, Jaggi T, et al. Characteristics and consequences of drug allergy alert overrides in a computerized physician order entry system. J Am Med Inform Assoc. 2004 Nov-Dec;11(6):482-491.

28. Nightingale PG, Adu D, Richards NT, et al. Implementation of rules based computerised bedside prescribing and administration: intervention study. BMJ. 2000 Mar 18;320(7237):750-753.

29. Weingart SN, Toth M, Sands DZ, Aronson MD, Davis RB, Phillips RS. Physicians' decisions to override computerized drug alerts in primary care. Arch Intern Med. 2003 Nov 24;163(21):2625-2631.

30. van der Sijs H, Aarts J, Vulto A, et al. Overriding of drug safety alerts in computerized physician order entry. J Am Med Inform Assoc. 2006 Mar-Apr;13(2):138-147. Epub 2005 Dec 15.

31. Chen P, Tanasijevic MJ, Schoenenberger RA, et al. A computer-based intervention for improving the appropriateness of antiepileptic drug level monitoring. Am J Clin Pathol. 2003 Mar;119(3):432-438.

32. Bates DW, Kuperman GJ, Rittenberg E, et al. A randomized trial of a computer-based intervention to reduce utilization of redundant laboratory tests. Am J Med. 1999 Feb;106(2):144-150.