Abstract

The ability to learn and exploit environmental regularities is important for many aspects of skill learning, of which language may be a prime example. Much of such learning proceeds implicitly; that is, it occurs unintentionally and automatically and results in knowledge that is difficult to verbalize explicitly. An important research goal is to ascertain the neurocognitive mechanisms underlying implicit learning abilities and to understand their contribution to perception, language, and cognition more generally. In this paper, we review recent work that investigates the extent to which implicit learning of sequential structure is mediated by stimulus-specific versus domain-general learning mechanisms. Although much previous implicit learning research has emphasized its domain-general aspects, here we highlight behavioral work suggesting a modality-specific locus. Even so, our data also reveal that individual variability in implicit sequence learning skill correlates with performance on a task requiring sensitivity to the sequential context of spoken language, suggesting that implicit sequence learning is to some extent domain-general. Taking this behavioral work into consideration in conjunction with recent imaging studies, we argue that implicit sequence learning and language processing are both complex, dynamic processes that partially share the same underlying neurocognitive mechanisms, specifically those that rely on the encoding and representation of phonological sequences.

Keywords: implicit learning, sequence learning, language processing, modality-specificity, domain-generality, prefrontal cortex, basal ganglia

INTRODUCTION

An essential characteristic of skill learning is the necessity of encoding, representing, and/or producing structured sequences. Because the environment is characterized by the regular and coherent occurrence of sounds, objects, and events, any organism that can usefully encode and exploit such structure will have an adaptive advantage. Language and communication are excellent examples of structured sequential domains to which humans are sensitive. That is, spoken and written language units (letters, phonemes, syllables, words, etc.) each adhere to a semi-regular sequential structure that can be defined in terms of statistical or probabilistic relationships (Rubenstein, 1973). Sensitivity to such probabilistic information in the speech stream can improve the perception of spoken materials in noise: the more predictable a sentence is, the easier it is to perceive (Kalikow et al., 1977; see also Miller & Selfridge, 1950). Probabilistic, structured patterns are found in almost all aspects of our interaction with the world, whether speaking, listening to music, learning a tennis swing, or perceiving complex scenes.

How the mind, brain, and body encode and use structure that exists in time and space remains a formidable challenge for the cognitive and neural sciences (Port & Van Gelder, 1995). Progress on this issue has come in part from the study of “implicit” learning (Cleeremans, Destrebecqz, & Boyer, 1998; Conway & Christiansen, 2006; Perruchet & Pacton, 2006; Reber, 1993). Implicit learning involves automatic learning mechanisms that are used to extract regularities and patterns distributed across a set of exemplars, typically without conscious awareness of the regularities being learned. Implicit learning is believed to be important for many aspects of skill learning, problem solving, and language processing.

One important research goal is to establish whether implicit learning is subserved by a single, domain-general mechanism that applies across a wide range of tasks, input, and domains, or instead consists of multiple task- or stimulus-specific subsystems. This is especially important if we are to usefully apply knowledge gained from laboratory studies of implicit learning to more real-world examples of language and skill learning. Two bodies of evidence have favored the former conclusion. First, many studies have demonstrated implicit learning across a wide range of stimulus domains and tasks, including but not limited to speech-like stimuli (Gomez & Gerken, 1999; Saffran, Aslin, & Newport, 1996), tone sequences (Saffran, Johnson, Aslin, & Newport, 1999), visual scenes and geometric shapes (Fiser & Aslin, 2001; Pothos & Bailey, 2000), colored light displays (Karpicke & Pisoni, 2004), and visuomotor sequences (Cleeremans & McClelland, 1991). Second, other studies using the artificial grammar learning (AGL) paradigm (Reber, 1967) have shown that participants can transfer their knowledge gained from one stimulus domain (e.g., visual symbols) to a different domain (e.g., nonsense syllables) if the underlying rule structure is the same (Altmann, Dienes, & Goode, 1995; Brooks & Vokey, 1991; Gomez & Gerken, 1999; Reber, 1969). Thus, implicit learning is argued to result in knowledge representations that are abstract or amodal in nature, independent of the physical qualities of the stimulus (Reber, 1993).

Despite this apparent evidence for a single, domain-general, and amodal implicit learning skill, there is reason to believe that implicit learning may be at least partly mediated by a number of separate, specialized neurocognitive mechanisms. First, many views of the mind encompass, to a greater or lesser extent, the notion of functional specialization (Barrett & Kurzban, 2006; Fodor, 1983). For example, working memory (Baddeley, 1986) consists in part of multiple, modality-specific processing components. Second, some of the knowledge-transfer data discussed above may suffer from methodological problems (Redington & Chater, 1996); even if one disregards such concerns, it is not clear that the results necessarily support the notion that implicit learning yields amodal knowledge. Third, the fact that implicit learning has been demonstrated across numerous input types and tasks does not necessarily imply a single, domain-general system. It is logically just as possible that there exist multiple implicit learning subsystems that share similar computational principles but are each engaged only by specific task and input demands (Conway & Christiansen, 2005; Goschke, 1998; Seger, 1998). Finally, consistent with a multiple-subsystems perspective, correlational analyses suggest that implicit learning is relatively task-specific (Feldman, Kerr, & Streissguth, 1995; Gebauer & Mackintosh, 2007).

In the first section below, we review recent behavioral work that examines the issues of domain-generality and modality-specificity in implicit learning. Our investigations explore the effect of sensory modality on implicit learning of sequential structures and the contribution of such abilities to language processing. Following the presentation of these studies, we review recent neural evidence that further illuminates the underlying neurocognitive basis of implicit sequence learning. Finally, we integrate the behavioral and imaging data and offer an account of the relation between implicit learning and language processing.

COGNITIVE BASIS OF IMPLICIT LEARNING: RECENT BEHAVIORAL STUDIES

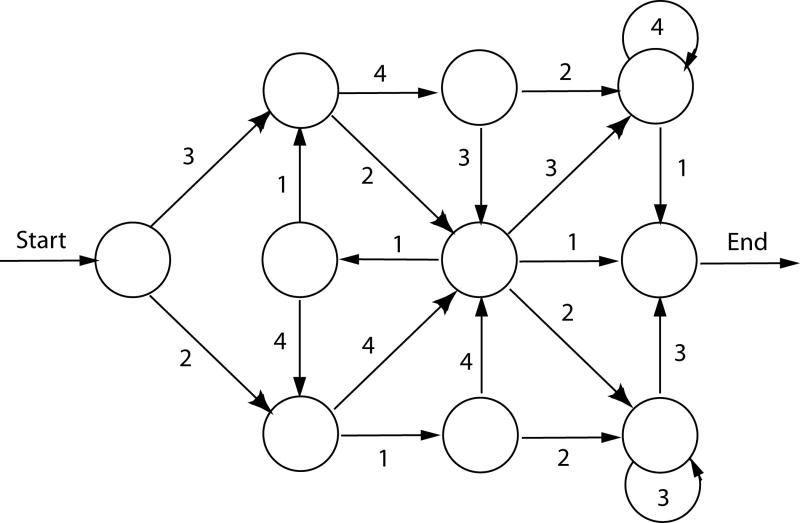

The following three studies all use the artificial grammar learning (AGL) methodology (Reber, 1967). In a standard AGL task, a finite-state grammar is used to generate stimuli that conform to particular rules determining the order in which the elements of a sequence can occur (Figure 1). Participants are exposed to the rule-governed stimuli under incidental and unsupervised learning conditions. Following exposure, participants’ knowledge of the complex sequential structure is tested by asking them to decide whether each of a set of novel stimuli follows the same rules. Generally, participants display adequate knowledge of the sequential structure despite having very little explicit awareness of what the underlying “rules” are; in fact, most participants report that they were guessing during the test. The similarities between implicit learning of artificial grammars and language acquisition are notable: both appear to involve the automatic extraction of sequential structure from a complex input domain, resulting in knowledge that is difficult to verbalize explicitly (Cleeremans et al., 1998; Reber, 1967).

Figure 1.

An example of an artificial grammar used to generate sequences in implicit learning experiments. To generate a sequence, the experimenter follows the paths of the grammar and notes the sequence of numbers that are encountered. For instance, the sequence 3-4-2-4-1 is grammatical with respect to this grammar, whereas the sequence 4-1-3-2-2 is not. The numbers 1-4 are then mapped onto stimulus elements needed for the experiment in question, allowing for the generation of a set of structured patterns occurring in virtually any stimulus modality or dimension as needed (e.g., tones, nonsense syllables, visual patterns, etc.).
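The generation and classification procedure described for Figure 1 can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions: the transition table `GRAMMAR` below is hypothetical and is not the grammar drawn in Figure 1 (whose transitions are not listed in the text); it was merely chosen so that, like Figure 1, it accepts 3-4-2-4-1 and rejects 4-1-3-2-2. The helper names `generate` and `is_grammatical`, the length limits, and the color mapping are likewise illustrative.

```python
import random

# Hypothetical grammar for illustration only: NOT the grammar in Figure 1.
# Each state maps an element (1-4) to the next state; "END" is a legal exit.
GRAMMAR = {
    "S0": {3: "S1", 1: "S2"},
    "S1": {4: "S3", 1: "S2"},
    "S2": {2: "S2", 4: "S3"},  # self-loop permits repeated 2s
    "S3": {2: "S4", 1: "END"},
    "S4": {4: "S3", 3: "END"},
}


def generate(rng, min_len=3, max_len=8):
    """Walk randomly through the grammar; retry until the length is in range."""
    while True:
        state, seq = "S0", []
        while state != "END" and len(seq) < max_len:
            symbol, state = rng.choice(sorted(GRAMMAR[state].items()))
            seq.append(symbol)
        if state == "END" and len(seq) >= min_len:
            return seq


def is_grammatical(seq):
    """True only if seq traces a legal path from S0 to END."""
    state = "S0"
    for symbol in seq:
        if state == "END" or symbol not in GRAMMAR[state]:
            return False
        state = GRAMMAR[state][symbol]
    return state == "END"


# Hypothetical mapping of abstract elements onto one modality's stimuli.
COLORS = {1: "red", 2: "blue", 3: "yellow", 4: "green"}

rng = random.Random(0)
training = [generate(rng) for _ in range(5)]
print([[COLORS[s] for s in seq] for seq in training])
print(is_grammatical([3, 4, 2, 4, 1]))  # True
print(is_grammatical([4, 1, 3, 2, 2]))  # False
```

Keeping the grammar abstract (numbers 1-4) and applying the stimulus mapping only at the end mirrors the point made in the caption: the same structure can be rendered as tones, syllables, colors, or vibrotactile pulses.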

In the following sets of studies, we examine three important issues: the nature of modality constraints affecting implicit learning, the question of whether learning is mediated by multiple, independent learning mechanisms, and the extent to which implicit learning is a fundamental component of language processing abilities.

Modality Constraints

To examine the effect of modality in implicit learning rigorously, we had three groups of participants engage in an AGL task, each assigned to a different sense modality: audition, vision, or touch (Conway & Christiansen, 2005). In these experiments, our strategy was to use comparable experimental procedures and materials in the three sensory conditions in order to ensure a valid comparison of learning across modalities. These studies were also the first investigation of implicit learning in the tactile domain, which had been neglected in earlier research.

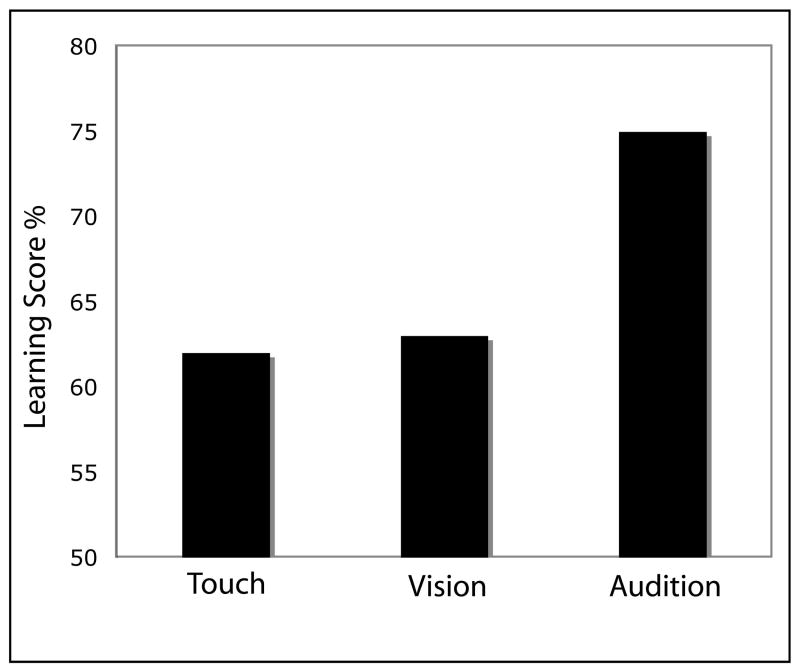

Tactile stimulation was accomplished via vibrotactile pulses delivered to the five fingers of one of the participant’s hands (Figure 2). The pulse sequences were generated from a finite-state grammar in which each “letter” of the grammar corresponded to a pulse delivered to a particular finger. Each sequence generated from the grammar thus represented a series of vibration pulses delivered to the fingers, one finger at a time. For the visual group, sequences consisted of black squares appearing at different spatial locations, one at a time. Auditory sequences consisted of tone patterns. Like the vibrotactile sequences, the visual and auditory stimuli were nonlinguistic, so participants could not easily rely on a verbal encoding strategy.

Figure 2.

Vibrotactile devices attached to the fingers of a participant’s hand. This setup was used by Conway & Christiansen (2005) to assess tactile implicit learning.

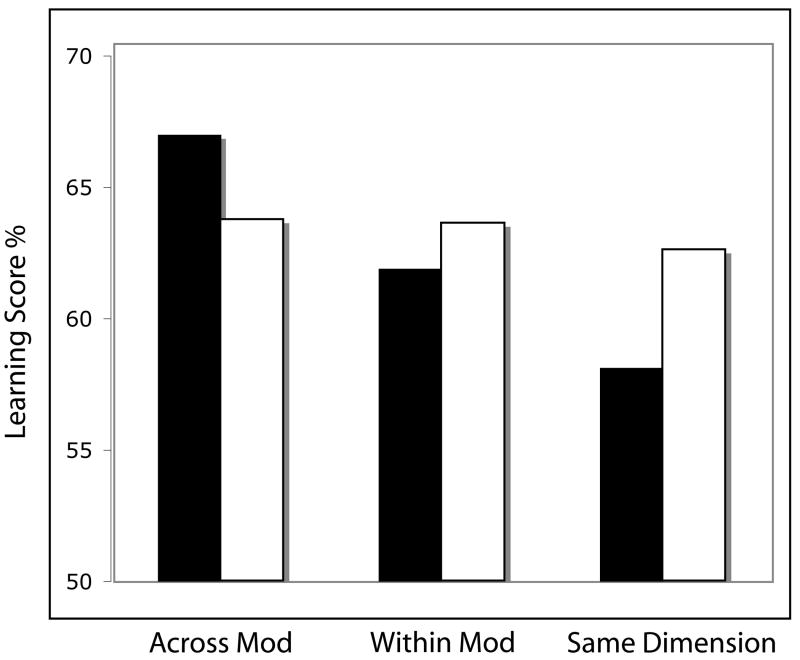

The test results, which assessed participants’ ability to correctly classify novel sequences as being generated from the same grammar, revealed that visual and tactile learning performance were nearly identical (about 62% correct), whereas auditory learning was substantially and significantly better than either (75% correct; Figure 3). These data suggest a commonality among tactile, visual, and auditory implicit sequence learning: all three senses were able to mediate the encoding of the structured input. However, the senses differed strikingly in efficiency: in this task, auditory implicit learning was more efficient than both tactile and visual learning. This is in accord with previous research emphasizing audition as superior among the senses for temporal processing tasks in general (e.g., Friedes, 1974; Handel & Buffardi, 1969).

Figure 3.

Implicit learning results for tactile, visual, and auditory sequences. Figure adapted from Conway & Christiansen (2005).

We were also interested in determining whether there were any other more subtle differences in learning across the senses. Additional analyses revealed that tactile learners were most sensitive to information at the beginning of a sequence, auditory learners were most sensitive to information at the end of a sequence, and visual learners displayed no biases toward either the beginning or the ending of the sequences. We determined this by comprehensively examining all training and test sequences in terms of their statistical structure. Learners in the tactile condition were more likely to make their classification judgments based on the extent to which a test sequence had statistical structure consistent with training items at the beginning of the sequence whereas auditory learners focused on structure at the endings of the sequences.
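One simple way to quantify whether a test sequence shares statistical structure with the training items at its beginning or at its end is to count how often its first (or last) bigram occupied that same position across the training set. The Python sketch below implements this idea; it is an assumption offered for illustration and is not necessarily the measure reported by Conway and Christiansen (2005). The function names `edge_bigrams` and `edge_strength` and the example sequences are hypothetical.

```python
from collections import Counter


def edge_bigrams(items, edge):
    """Count the first ('begin') or last ('end') bigram of each item."""
    if edge not in ("begin", "end"):
        raise ValueError("edge must be 'begin' or 'end'")
    return Counter(tuple(s[:2]) if edge == "begin" else tuple(s[-2:]) for s in items)


def edge_strength(test_item, training, edge):
    """Proportion of training items sharing test_item's bigram at that edge."""
    counts = edge_bigrams(training, edge)
    key = tuple(test_item[:2]) if edge == "begin" else tuple(test_item[-2:])
    return counts[key] / len(training)


# Hypothetical training and test sequences (not the study's materials).
training = [[3, 4, 2, 4, 1], [3, 4, 1], [1, 2, 4, 1]]
for item in ([3, 4, 1], [1, 2, 2, 3]):
    print(item, edge_strength(item, training, "begin"), edge_strength(item, training, "end"))
```

Under this kind of measure, a beginning bias such as that reported for tactile learners would appear as classification judgments that track the "begin" scores of test items more closely than their "end" scores, and an auditory ending bias as the reverse.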

Taken together, the results from these experiments, confirmed and expanded in additional work (Conway & Christiansen, 2007), suggest that not only does auditory implicit learning have a quantitative advantage over tactile and visual learning, but that there also may be qualitative differences in implicit learning among the three modalities. Specifically, tactile learning appears to be more sensitive to statistical structure at the beginnings of sequences whereas auditory learning may be more sensitive to final-item structure. These biases suggest that each sensory system may apply slightly different computational strategies when processing sequential input. The auditory–recency bias is interesting because it mirrors findings on the modality effect in serial recall, in which a more pronounced recency effect (i.e., greater memory for items at the end of a list) is obtained with auditory lists as compared with visual lists (e.g., Crowder, 1986). This may indicate that similar constraints affect both explicit encoding of serial input and implicit learning of statistical structure. In both cases, learners appear to be more sensitive to auditory material at the end of a structured sequence or list of items.

Independent Stimulus-Specific Learning Mechanisms?

We recently attempted to determine whether implicit sequence learning consists of a single learning mechanism or of multiple mechanisms that may operate simultaneously for different kinds of input or tasks (Conway & Christiansen, 2006). To distinguish between these two alternatives, we employed a dual-grammar, modified crossover AGL design. In a standard crossover design (see Redington & Chater, 1996), half of the participants are exposed to items from one grammar (e.g., Grammar A) in the exposure phase and then in the test phase must judge items from both Grammars A and B. The other half of the participants receive Grammar B at exposure but get the same test items as the first half (from both Grammars A and B). Our modification was to include the second grammar during the acquisition phase as well. Thus, participants receive stimuli generated from both Grammars A and B and are tested on novel stimuli also generated from both A and B. With this dual-grammar design, it is possible to assess to what extent learners can extract sequential structure from multiple input streams simultaneously; furthermore, we can test whether dual-grammar learning is easier or harder when both input streams are in the same sensory modality or the same perceptual dimension.

In our study, participants were exposed to a subset of sequences from both grammars, presented in random order. One grammar, Grammar A, was presented in the auditory modality as tone sequences. The other, Grammar B, was presented as visual sequences of colored patches in the center of the screen. After the acquisition phase, participants received novel sequences from both grammars, with some participants receiving visual sequences and others auditory sequences. As in the standard AGL procedure, participants were told to classify each test sequence according to whether it followed the same underlying rules that generated the previous stimuli. This dual-grammar crossover design allowed us to assess the extent to which two streams of sequential structure can be learned simultaneously and independently of one another. In a second experiment, we varied the stimulus format of the two input streams, presenting both within the same sense modality but instantiated along two different perceptual dimensions (e.g., tones versus nonwords, or color sequences versus shape sequences). In a third experiment, both grammars were instantiated in the same perceptual dimension (e.g., two sets of nonword vocabularies or two sets of shape vocabularies).

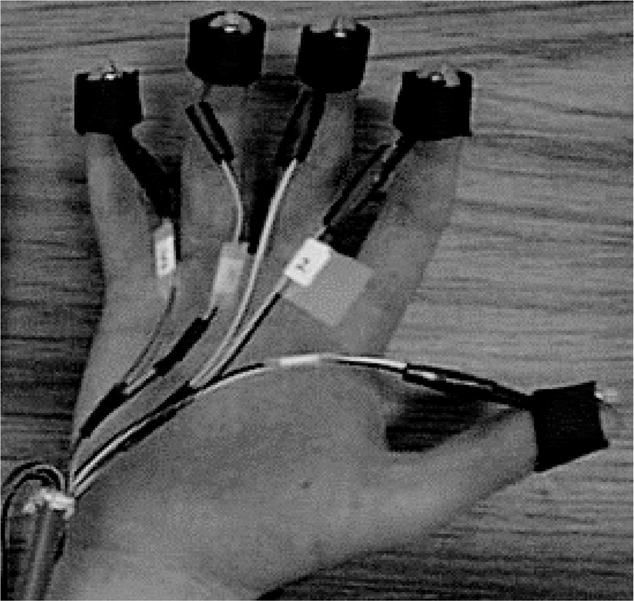

The overall results for each of the three dual-grammar conditions (across modality, within modality, and within the same perceptual dimension) are presented in Figure 4 (black bars). We also compared performance with that of a different group of participants who received only one grammar at acquisition but were tested with the same stimulus materials as the dual-grammar participants (white bars). This allowed us to assess, for each of the three input conditions, whether learning two grammars proceeds as well as learning one. As Figure 4 shows, in the across-modality condition participants learned both input structures, visual and auditory, quite well. In fact, the dual-grammar performance levels were actually higher than those of the single-grammar learners. These data suggest that participants learned the structural regularities from two artificial grammars in two different sense modalities just as well as if they had learned only one grammar, implying that the underlying learning systems operate simultaneously and independently of one another. In the other two input conditions, performance dropped slightly in the within-modality condition, in which the two grammars were in the same sense modality, and broke down completely when the grammars were in the same perceptual dimension (see Figure 4).

Figure 4.

Implicit learning results comparing performance for dual-grammar (black bars) versus single-grammar (white bars) conditions. Figure drawn from data presented in Conway & Christiansen (2006).

Overall, the data revealed, quite remarkably, that participants were just as adept at learning structural regularities from two input streams as they were with one, as long as the two input types were in different sense modalities or perceptual dimensions. These findings suggest the operation of parallel, independent learning mechanisms that each handle a specific type of perceptual input, such as shapes, colors, tones, or word-like sounds (Goschke, Friederici, Kotz, & van Kampen, 2001; Keele, Ivry, Mayr, Hazeltine, & Heuer, 2003).

Contribution to Language Processing

Although it is commonly assumed that implicit learning is important for language processing, the evidence directly linking the two is equivocal. One approach is to assess language-impaired individuals on a putatively non-linguistic implicit learning task; if the group shows a deficit on the implicit learning task, this result is taken as support for a close link between the two cognitive processes. Using this approach, some researchers have found an implicit sequence learning deficit in dyslexics (Howard, Howard, Japikse, & Eden, 2006; Menghini, Hagberg, Caltagirone, Petrosini, & Vicari, 2006; Vicari, Marotta, Menghini, Molinari, & Petrosini, 2003) while others have found no connection between implicit learning, reading abilities, and dyslexia (Kelly, Griffiths, & Frith, 2002; Rüsseler, Gerth, & Münte, 2006; Waber et al., 2003). At least with regard to reading and dyslexia, the role of implicit learning is not clear (though also see Bennett et al., this volume, Folia et al., this volume, and Stoodley et al., this volume).

Given the data described previously, one complication with establishing an empirical link between implicit learning and language processing becomes apparent. If implicit learning involves multiple subsystems that each handle different types of input (e.g., Conway & Christiansen, 2006; Goschke et al., 2001; Seger, 1998), then some implicit learning systems (e.g., perhaps those handling phonological sequences) may be more closely involved with language acquisition and processing than others. To elucidate these issues, we had participants engage in two AGL tasks, one using color patterns and the other using non-color spatial patterns, followed by a spoken sentence perception task (Conway, Karpicke, & Pisoni, 2007). For the AGL tasks, we used a procedure in which participants observed a visual sequential pattern and then attempted to recall and reproduce the pattern by pressing the appropriate buttons on a touch-screen monitor (Pisoni & Cleary, 2004). Implicit learning was assessed as the extent to which serial recall improved for rule-governed sequences relative to non-structured sequences (Karpicke & Pisoni, 2004; Miller, 1958). This procedure has an advantage over traditional AGL performance scores because it provides a more valid assessment of implicit learning by relying on an indirect rather than a direct measure of performance (Redington & Chater, 2002). Additionally, the use of two types of visual sequences, colored patterns and non-colored spatial patterns, allowed us to examine possible differences between stimuli that differ in ease of verbal encoding.
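The indirect learning measure described above, improvement in serial recall for rule-governed relative to non-structured sequences, can be sketched as a simple difference score. The Python below is a minimal sketch that assumes each trial is scored as correct only when the reproduced sequence exactly matches the target; the published study may have scored recall differently (for example, in terms of span length). The function names and trial data are hypothetical.

```python
from statistics import mean


def recall_accuracy(trials):
    """Proportion of (target, response) pairs reproduced exactly."""
    return mean(1.0 if response == target else 0.0 for target, response in trials)


def learning_score(grammatical_trials, random_trials):
    """Recall advantage for rule-governed over unstructured sequences."""
    return recall_accuracy(grammatical_trials) - recall_accuracy(random_trials)


# Hypothetical trials for one participant: (target sequence, reproduced sequence).
grammatical = [([3, 4, 1], [3, 4, 1]), ([1, 2, 4, 1], [1, 2, 4, 1]),
               ([3, 4, 2, 4, 1], [3, 4, 2, 4, 1]), ([1, 4, 1], [1, 4, 2])]
unstructured = [([2, 3, 1], [2, 3, 1]), ([4, 1, 3, 2], [4, 3, 1, 2]),
                ([2, 2, 4, 3, 1], [2, 4, 2, 3, 1]), ([1, 3, 3], [3, 1, 3])]
print(learning_score(grammatical, unstructured))
```

Because the score is a difference between two recall conditions, a participant with a generally long memory span but no sensitivity to the grammar would score near zero, which is what lets this measure be separated from serial recall ability in general.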

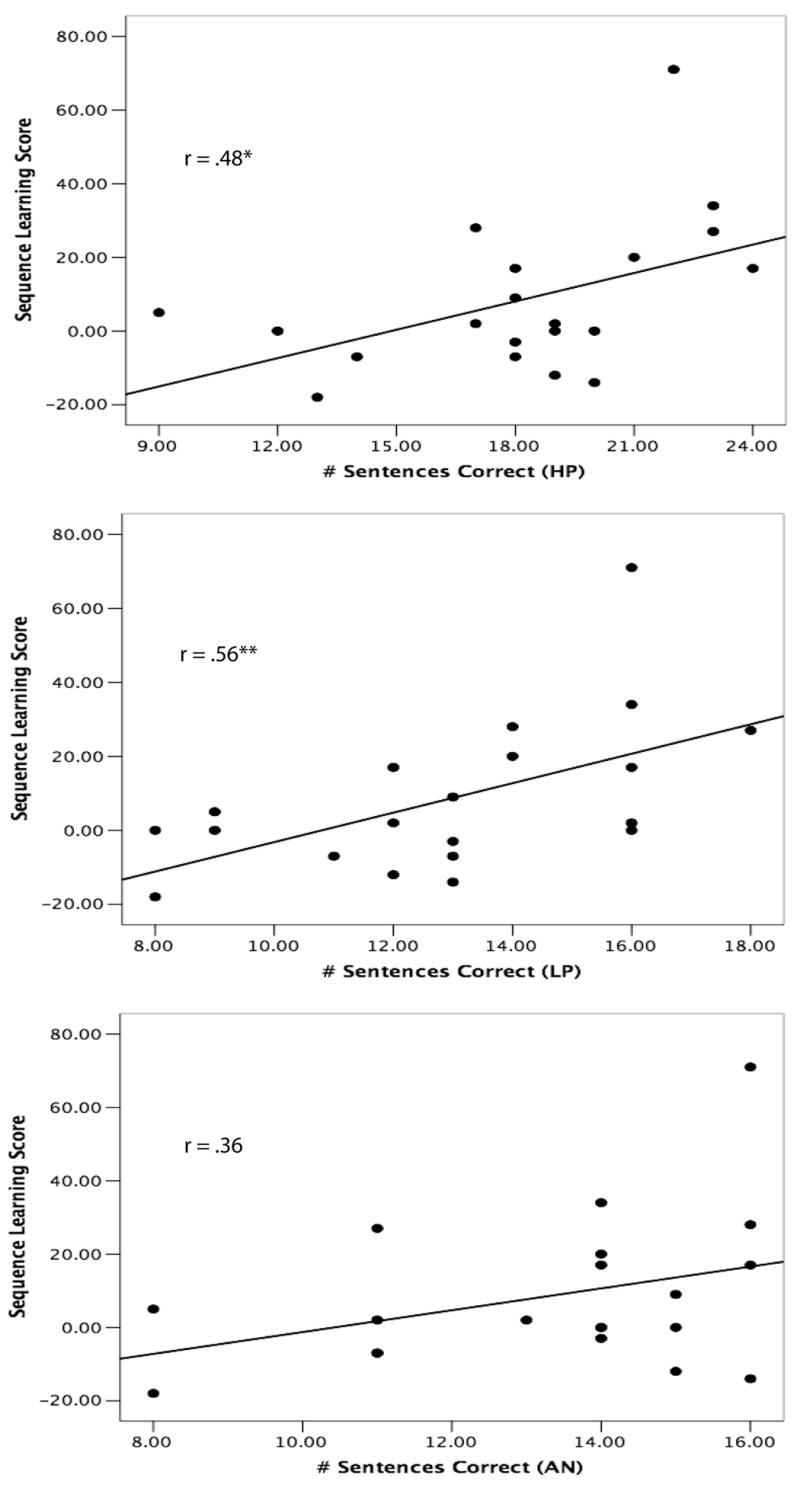

The language processing task involved participants listening to spoken sentences under degraded listening conditions and then identifying and writing down the final word in each sentence. Crucially, the sentences varied in the predictability of the final target word (see Kalikow et al., 1977, and Clopper & Pisoni, 2006). Three types of sentences were used: high-predictability (HP), low-predictability (LP), and anomalous (AN). HP sentences had a final target word that was predictable given the semantic context of the sentence (e.g., “Her entry should win first prize”); LP sentences had a target word that was not predictable given the semantic context (e.g., “The man spoke about the clue”). AN sentences followed the same syntactic form as the HP and LP sentences, but their content words were placed randomly to create semantically anomalous sentences (e.g., “The coat is talking about six frogs”). In this way, we were able to assess whether implicit sequence learning that is or is not phonologically mediated correlates with spoken language perception under degraded listening conditions for sentence materials that vary in their probabilistic structure. We first review the results for the color sequence learning task. Performance on this task was significantly correlated with language processing performance for the HP (r = .48) and LP (r = .56) sentences but not for the anomalous sentences (Figure 5). Importantly, only participants’ learning score on the sequencing task, not their serial recall performance in general, correlated with the language task. That is, the contribution to language processing that we have demonstrated is not due merely to serial recall abilities, which have been shown to be related to language development (Baddeley, 2003). Only when we assessed how much memory span improved for grammatically consistent sequences did we find a significant correlation.
Thus, what is important is the ability to implicitly acquire knowledge about structured sequential patterns, not just the ability to encode and recall a sequence of items from memory.
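The correlations reported here are Pearson correlations between per-participant scores. The Python sketch below computes Pearson's r from its definition and shows how one might correlate both a learning score and a general recall measure with sentence-perception accuracy, mirroring the comparison described above. All score values are hypothetical placeholders, not the study's data, and the function name `pearson_r` is illustrative.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length lists."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length lists with at least 2 values")
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    # Undefined (ZeroDivisionError) if either list has zero variance.
    return sxy / sqrt(sxx * syy)


# Hypothetical per-participant scores (not the study's data).
learning = [0.10, 0.25, 0.05, 0.30, 0.15]     # recall advantage for grammatical items
span = [4.0, 5.5, 5.0, 4.5, 6.0]              # overall serial recall span
hp_correct = [0.55, 0.70, 0.50, 0.75, 0.58]   # proportion of HP final words identified
print(round(pearson_r(learning, hp_correct), 2))
print(round(pearson_r(span, hp_correct), 2))
```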

Figure 5.

Performance on a visual color sequence learning task plotted against performance on a spoken language perception task under degraded listening conditions for high predictability (HP), low predictability (LP), and anomalous (AN) sentences. Figures drawn from data presented in Conway et al. (2007).

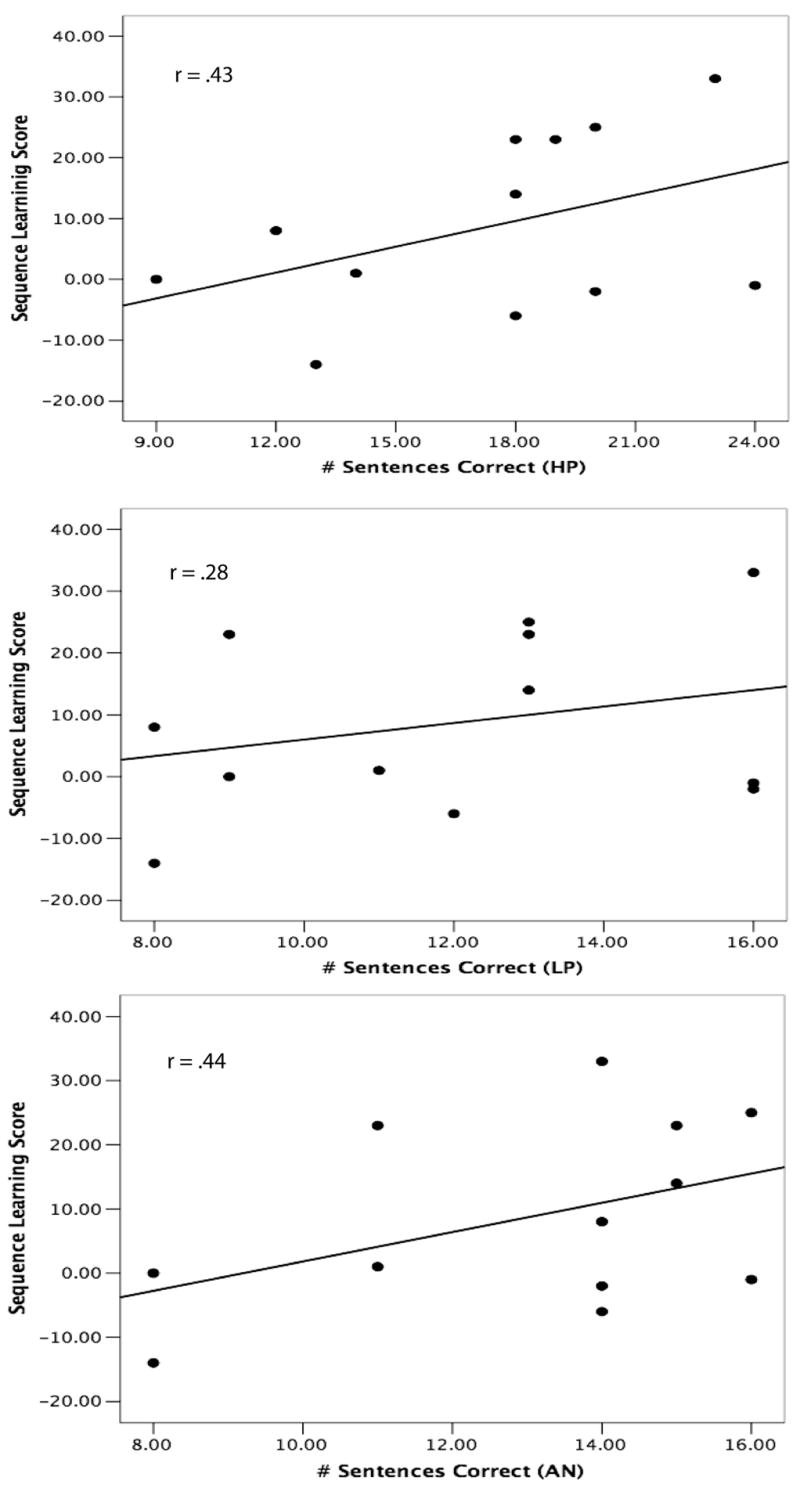

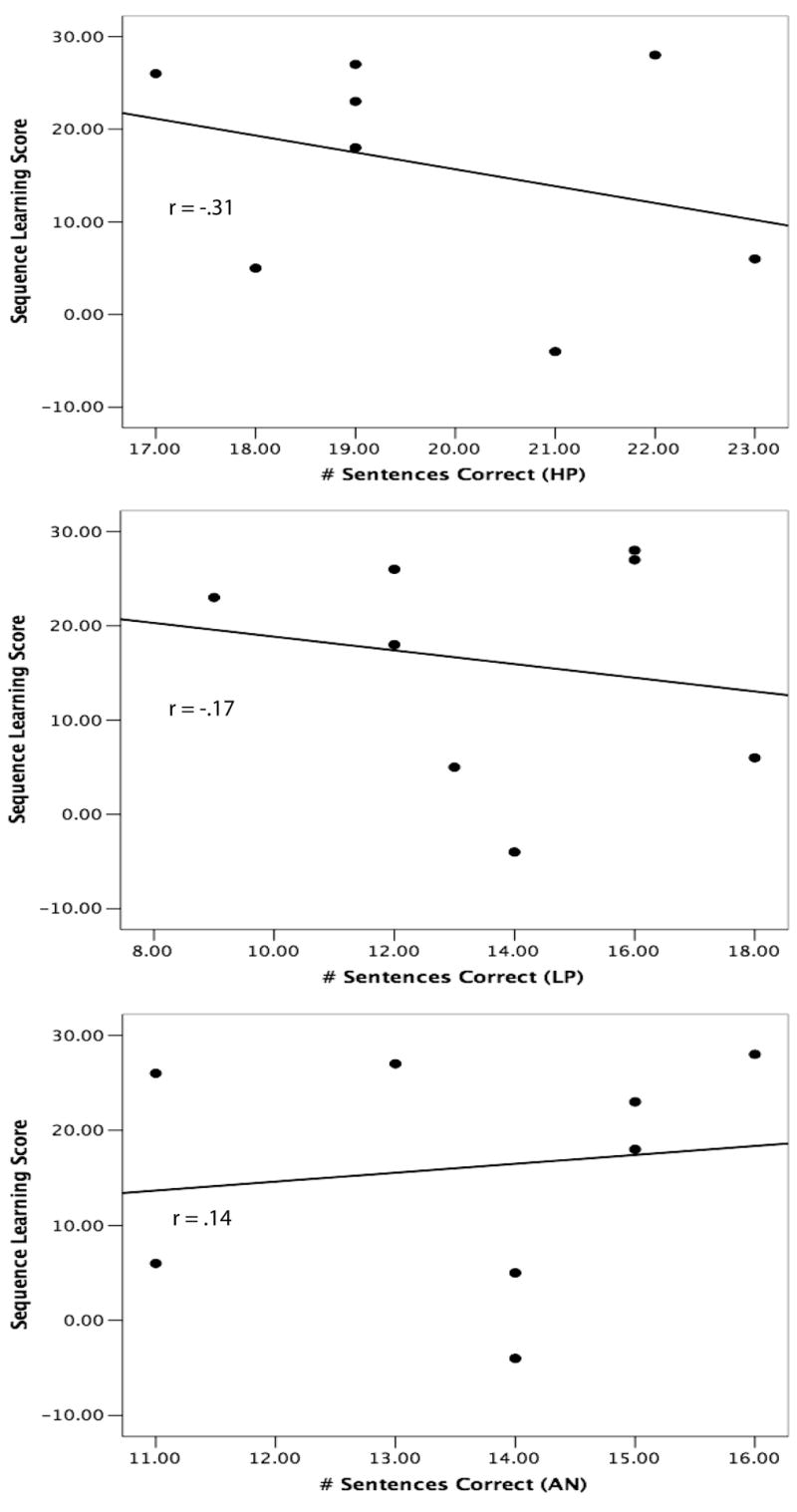

Interestingly, performance on the sequence learning task that did not involve color sequences was not significantly correlated with performance on any of the sentence processing tasks (r’s < .38). Whereas the sequences from the color learning task are readily verbalized and coded into phonological forms (e.g., “red-blue-yellow-red”), those from the other task are not, because they emphasize visual-spatial attributes. Thus, it is possible that implicit learning of phonological representations, not just of spatiotemporal events more generally, contributes to success on the language processing task. To examine this prediction further, we used a post-experiment debriefing on the non-color learning task to divide participants into two groups: those who attempted to encode sequences using some kind of verbal code, such as labeling each of the four spatial positions with a digit (1-4; “phonological coders”), and those who indicated that they did not use a verbal code during the task (“non-phonological coders”). We then assessed correlations between each group’s learning scores and language perception measures. Although none of the correlations quite reached statistical significance (presumably due to a lack of statistical power; N’s = 12 and 8), the difference in the correlations between the two groups was striking. The correlation results for the phonological coders and the non-coders are shown in Figure 6 and Figure 7, respectively. Phonological coders’ performance on the sequence task was positively correlated with their performance on the language task (r’s = .43, .28, and .44 for the HP, LP, and AN sentences, respectively), whereas the correlations for non-coders were much weaker or even negative (r’s = -.31, -.17, and .14 for the HP, LP, and AN sentences, respectively).
This pattern of results further supports the conclusion that a crucial aspect of implicit sequence learning that contributes to spoken language processing is the learning of structured patterns from sequences that can be easily represented using a verbal code.
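With group sizes as small as 12 and 8, formally testing whether two correlations from independent groups differ is difficult. A standard approach is Fisher's r-to-z test, sketched below in Python. This test is not reported in the source; the sketch only illustrates the computation, uses a normal approximation for the p-value, and is undefined for groups of 3 or fewer. The function name and the example values are hypothetical.

```python
from math import atanh, erf, sqrt


def compare_independent_correlations(r1, n1, r2, n2):
    """Fisher r-to-z test for two correlations from independent samples.

    Returns (z, two-tailed p). Requires n1, n2 > 3 and |r1|, |r2| < 1.
    """
    if min(n1, n2) <= 3:
        raise ValueError("each sample needs more than 3 observations")
    z_diff = atanh(r1) - atanh(r2)          # Fisher transform of each r
    se = sqrt(1 / (n1 - 3) + 1 / (n2 - 3))  # standard error of the difference
    z = z_diff / se
    # Two-tailed p from the standard normal CDF, Phi(x) = 0.5 * (1 + erf(x / sqrt(2))).
    p = 2 * (1 - 0.5 * (1 + erf(abs(z) / sqrt(2))))
    return z, p


# Illustrative values only.
z, p = compare_independent_correlations(0.60, 40, 0.10, 40)
print(round(z, 2), round(p, 3))
```

The standard error term grows quickly as n1 and n2 shrink toward 3, which is why even a large numerical difference between two correlations can fail to reach significance in small groups, consistent with the power caveat above.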

Figure 6.

Performance on a visual non-color sequence learning task for subjects who used a verbal coding strategy, plotted against performance on a spoken language perception task under degraded listening conditions for high predictability (HP), low predictability (LP), and anomalous (AN) sentences. Figures drawn from data presented in Conway et al. (2007).

Figure 7.

Performance on a visual non-color sequence learning task for subjects who did not use a verbal coding strategy, plotted against performance on a spoken language perception task under degraded listening conditions for high predictability (HP), low predictability (LP), and anomalous (AN) sentences. Figures drawn from data presented in Conway et al. (2007).

These results provide the first empirical demonstration, to our knowledge, of individual variability in implicit learning performance correlating with language processing in typically developing subjects. We believe the evidence points to an important factor underlying spoken language processing: the ability to implicitly learn complex sequential patterns, especially those that can be represented phonologically.

NEURAL BASIS OF IMPLICIT SEQUENTIAL LEARNING

The behavioral data described above lead to two primary conclusions. First, implicit sequence learning involves multiple, modality-constrained mechanisms, each operating relatively independently of the others and handling different kinds of input. Second, implicit learning for some kinds of stimuli, especially those that can be readily verbalized, shares mechanisms with aspects of language processing. Considered together with data suggesting that implicit learning and sequence learning may also operate at more abstract levels of processing (e.g., Dominey, Ventre-Dominey, Broussolle, & Jeannerod, 1995; Tunney & Altmann, 2001), we should expect to find a combination of both modality-specific and more domain-general neural regions underlying implicit sequence learning. In fact, recent findings from neuroimaging studies support this general prediction. Although it is difficult to compare studies that rely on different methodologies, the general trends implicate both modality-specific and more abstract sequential processing brain areas. Below, we discuss neural evidence related to modality-specificity first and domain-generality second, followed by an integrative discussion to pinpoint the neurocognitive basis of implicit sequential learning and its relation to language processing.

Modality-Specificity

One of the central discoveries that has emerged from the field of cognitive neuroscience is that cognition is grounded in sensorimotor function (Barsalou, Simmons, Barbey, & Wilson, 2003; Glenberg, 1997; Harris, Petersen, & Diamond, 2001). For instance, even conceptual knowledge, which has traditionally been proposed to be amodal or propositional in nature, appears to be based on perceptual representations (James & Gauthier, 2003). That is, neuroimaging studies have shown that concepts like “tools” or “animals” are represented by the same brain areas that are involved in perceiving or interacting with the actual physical items (e.g., motor and visual cortical areas, respectively; Pulvermüller, 2001). In a review of neural evidence from both humans and nonhumans, Harris et al. (2001) concluded that low-level sensory areas are involved not only in the online perception of information but also in the learning, memory, and storage of that information. These insights about the brain are paving the way for a new view of mind and behavior, one that focuses on sensorimotor constraints (Glenberg, 1997), brain-body-environment interactions (Clark, 1997), and the unity of perception, conception, and cognition (Goldstone & Barsalou, 1998).

Because of these insights, it may not be too surprising to discover that low-level, modality-specific brain regions also appear to underlie aspects of the temporal ordering and sequencing of stimuli. For instance, in both humans and rats, the auditory cortex mediates the learning and categorization of tone sequences (Gottselig, Brandeis, Hofer-Tinguely, Borbély, & Achermann, 2006; Kilgard & Merzenich, 2002; Ohl, Scheich, & Freeman, 2001). So too, visual areas such as IT in the monkey appear to be responsible for the learning of conditional associations among visual stimuli occurring in a sequential presentation format (Messinger, Squire, Zola, & Albright, 2001).

The best evidence, however, comes directly from neuroimaging studies of implicit sequence learning in humans. Although brain areas that may be considered to be domain-general are involved in implicit learning, there is also substantial evidence for a modality-specific component. Only a handful of neuroimaging studies have examined implicit learning using the AGL paradigm, but most of those reveal sensory-specific brain regions (e.g., occipital cortex) involved in the learning of rule-governed visual stimuli (e.g., Forkstam, Hagoort, Fernandez, Ingvar, & Petersson, 2006; Lieberman, Chang, Chiao, Bookheimer, & Knowlton, 2004; Petersson, Forkstam, & Ingvar, 2004; Seger, Prabhakaran, Poldrack, & Gabrieli, 2000; Skosnik et al., 2002). For example, Lieberman et al. (2004) and Skosnik et al. (2002) observed increased medial occipital and superior occipital activation respectively when participants viewed grammatical test strings compared to ungrammatical test strings. Consistent with these data, the serial reaction time task (SRT; Nissen & Bullemer, 1987) has also revealed modality-specific brain regions responsible for learning sequential structure (e.g., Rauch et al., 1995; 1997). Based on evidence such as this, Keele et al. (2003) recently presented a neurocognitive theory of implicit sequence learning and suggested the existence of multiple modality- or input-specific learning systems.

The modality-specificity revealed in these implicit learning tasks has a parallel in the implicit memory literature. For example, repetition priming (improvement in the ability to perceive or identify a stimulus due to previous exposure to it) is known to involve modality-specific brain regions (see Schacter, Dobbins, & Schnyer, 2004). Reber, Stark, and Squire (1998) used an implicit memory task not unlike the AGL paradigm, in which participants viewed complex dot patterns that were distortions of an underlying prototype pattern. Following exposure, participants attempted to classify novel dot patterns as either similar to the prototype or not. Visual cortex showed different levels of activity for these new dot patterns depending on whether or not they were similar to the prototype. Thus, the involvement of modality-specific brain regions in both implicit learning and implicit memory (e.g., priming) may point to similar underlying computational processes in each. One likely possibility is that implicit sequence learning, like priming, is based at least partly on perceptual processing mechanisms that become tuned to particular stimuli through previous experience (for similar proposals, see Chang & Knowlton, 2004; Kinder, Shanks, Cock, & Tunney, 2003).
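The prototype-distortion procedure described above can be made concrete with a small simulation. The sketch below is a hypothetical illustration, not the stimuli or analysis of Reber et al. (1998): the number of dots, the Gaussian jitter used to distort the prototype, the assumed one-to-one correspondence between dots, and the classification threshold are all assumptions chosen for clarity. It shows how a learner exposed only to distortions, and never to the prototype itself, could still come to endorse novel distortions of that unseen prototype while rejecting unrelated patterns, which is one simple way to picture perceptual mechanisms becoming "tuned" by prior experience.

```python
import math
import random

# Hypothetical parameters for illustration only; not taken from Reber et al. (1998).
N_DOTS = 9        # dots per pattern
FIELD = 50.0      # patterns live in a FIELD x FIELD square


def make_pattern(rng):
    """Return a random dot pattern (also used as the unseen 'prototype')."""
    return [(rng.uniform(0, FIELD), rng.uniform(0, FIELD)) for _ in range(N_DOTS)]


def distort(prototype, jitter, rng):
    """Displace every dot by independent Gaussian noise (assumed distortion rule)."""
    return [(x + rng.gauss(0, jitter), y + rng.gauss(0, jitter)) for x, y in prototype]


def mean_dot_distance(a, b):
    """Average Euclidean distance between corresponding dots of two patterns."""
    return sum(math.dist(p, q) for p, q in zip(a, b)) / len(a)


def average_pattern(exemplars):
    """Dot-wise average of the training exemplars: an estimate of the prototype."""
    n = len(exemplars)
    return [
        (sum(e[i][0] for e in exemplars) / n, sum(e[i][1] for e in exemplars) / n)
        for i in range(N_DOTS)
    ]


def is_category_member(pattern, learned, threshold):
    """Endorse a test pattern if it lies close enough to the learned prototype."""
    return mean_dot_distance(pattern, learned) < threshold


if __name__ == "__main__":
    rng = random.Random(1)
    prototype = make_pattern(rng)                                 # never shown in training
    training = [distort(prototype, 4.0, rng) for _ in range(40)]  # exposure phase
    learned = average_pattern(training)

    new_member = distort(prototype, 4.0, rng)  # novel distortion of the same prototype
    unrelated = make_pattern(rng)              # random pattern unrelated to the prototype
    for name, pattern in [("new member", new_member), ("unrelated", unrelated)]:
        print(name, round(mean_dot_distance(pattern, learned), 1),
              is_category_member(pattern, learned, threshold=10.0))
```

Averaging the exemplars is only one way to model this kind of tuning; an exemplar-based rule that compares each test item with every stored training pattern would be an equally defensible sketch.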

In sum, consistent with the modality-constrained view outlined above, brain regions traditionally thought to be modality-specific are clearly active during implicit sequence learning tasks. However, other brain networks may also be involved in learning sequential structure, including areas that process information in ways that may be considered more domain-general or abstract.

Domain-Generality

One of the most consistent findings from neuroimaging studies is that frontal cortical areas (e.g., prefrontal cortex, premotor cortex, supplementary motor areas) as well as subcortical areas (e.g., basal ganglia) play an essential role in sequence learning and representation (for general reviews, see Bapi, Pammi, Miyapuram, & Ahmed, 2005; Clegg, DiGirolamo, & Keele, 1998; Curran, 1997; Hikosaka et al., 1999; Pascual-Leone, Grafman, & Hallett, 1995; Rhodes, Bullock, Verwey, Averbeck, & Page, 2004). For instance, the SRT task consistently activates premotor (Berns, Cohen, & Mintun, 1997; Grafton, Hazeltine, & Ivry, 1995; Rauch et al., 1996) and prefrontal (Grafton et al., 1995; Peigneux et al., 2000) cortex, as well as parts of the basal ganglia, including the striatum (Berns et al., 1997; Rauch et al., 1995), the caudate (Peigneux et al., 2000; Rauch et al., 1995), and the putamen (Grafton et al., 1995). A recent meta-analysis indicates that patients with Parkinson’s disease are impaired on the SRT task (Siegert, Taylor, Weatherall, & Abernethy, 2006), highlighting the importance of the basal ganglia for this task. The AGL task also activates premotor (Opitz & Friederici, 2004) and prefrontal cortex (Fletcher, Büchel, Josephs, Friston, & Dolan, 1999; Skosnik et al., 2002), often including Broca’s area (Forkstam et al., 2006; Petersson et al., 2004; Seger et al., 2000). The basal ganglia have also been implicated in some AGL studies (Forkstam et al., 2006; Lieberman et al., 2004), although, unlike performance on the SRT task, AGL may not be adversely affected by Parkinson’s disease (Witt, Nühsman, & Deuschl, 2002). Other sequencing tasks besides SRT and AGL also show involvement of the frontal lobe and basal ganglia (e.g., Bischoff-Grethe, Martin, Mao, & Berns, 2001; Carpenter, Georgopoulos, & Pellizzer, 1999; Huettel, Mack, & McCarthy, 2002).

The basal ganglia consist of a number of components, including the striatum, which is itself made up of the caudate and putamen (Nolte & Angevine, 1995). Although the basal ganglia have long been known to be important for motor function, it is becoming apparent that they are also associated with cognitive functions more generally, especially processes used in sequencing tasks (Middleton & Strick, 2000; Seger, 2006). The basal ganglia connect to multiple cortical sites, including motor cortex, premotor cortex, and prefrontal cortex. The prefrontal cortex in turn is associated with executive function, cognitive control, and working memory (Miller & Cohen, 2001). Most relevant here, the prefrontal cortex is believed to play an essential role in learning, planning, and executing sequences of thoughts and actions (Fuster, 1995, 2001). Its many interconnections with sensory, motor, and subcortical regions make it an ideal candidate for the more abstract, domain-general aspects of cognitive function (Miller & Cohen, 2001).

The corticostriatal loops connecting the basal ganglia with cortex appear to be crucial for various forms of motor skill learning (e.g., Heindel, Butters, & Salmon, 1988) and for non-motor cognitive processing (Seger, 2006). Interestingly, each of these parallel loops is believed to mediate a different aspect of processing, such as “visual”, “motor”, or “executive” functions (Seger, 2006), a form of functional specialization. The ways in which the basal ganglia and prefrontal cortex interact appear to be complex and may depend partly on task demands. In some cases, the prefrontal cortex appears necessary for learning new sequences, while the basal ganglia become active only once the sequences are well practiced (Fuster, 2001). In other cases, learning appears to occur first in the basal ganglia, which then guide learning in prefrontal cortex (Pasupathy & Miller, 2005). A third possibility is that the basal ganglia contribute to reinforcement learning while the cortex is specialized for unsupervised learning (Doya, 1999). Regardless of how these two structures actually interact, sequence learning likely relies heavily on the dynamic interplay of multiple corticostriatal loops that connect the basal ganglia to various cortical areas, including circuits in the prefrontal cortex.

As this brief overview shows, implicit sequence learning appears to depend on a wide network of brain areas, including sensory, motor, frontal, and subcortical regions. Although we have focused here on frontal-striatal circuits, other regions, including the parietal cortex (see Menghini et al., this volume) and the cerebellum (Desmond & Fiez, 1998; Molinari, Filippini, & Leggio, 2002; Paquier & Mariën, 2005), have also been implicated in implicit learning. Implicit sequence learning likely involves multiple levels of learning, ranging from simple stimulus-response associations to higher-order forms of learning that could be considered more abstract (Curran, 1998). Not surprisingly, then, the neural basis of implicit sequence learning involves a number of brain circuits that are active to a greater or lesser extent depending on the task’s demands, the learning situation, and the nature of the input. The final question we address is the extent to which the neural mechanisms for implicit sequence learning are shared with those involved in language processing.

SEQUENTIAL LEARNING AND LANGUAGE: A SYNTHESIS

The traditional Broca-Wernicke model of language is giving way to the recognition that language processing involves a much more distributed network of brain mechanisms, including, interestingly, frontal/basal ganglia circuits (Friederici, Rüschemeyer, Hahne, & Fiebach, 2003; Lieberman, 2002; Ullman, 2001). For example, a recent fMRI study (Obleser, Wise, Dresner, & Scott, 2007) used a speech perception task nearly identical to ours (Conway et al., 2007), in which sentences varied in their semantic predictability, and found that a network of frontal brain regions showed increased activation for high-predictability sentences. Thus, the frontal lobe, and particularly the prefrontal cortex, appears to be important for processing sequential context in spoken language. In addition, the two classic language disorders, Broca’s and Wernicke’s aphasia, are now thought to result at least as much from damage to subcortical basal ganglia structures as from cortical lesions (Lieberman, 2002). Even so, Broca’s area and other frontal lobe structures certainly still play an important role in linguistic tasks, although they do not appear to be language-specific. For example, several theories characterize Broca’s area as a “supramodal” sequence or structural processor, especially for complex hierarchical sequences, whether linguistic or not (Conway & Christiansen, 2001; Forkstam et al., 2006; Friederici, 2004; Greenfield, 1991; Tettamanti et al., 2002). Thus, the frontal lobe, basal ganglia, and corticostriatal circuits appear to be active during both implicit sequence learning and several aspects of language processing.

However, the behavioral data reviewed above indicate that not all aspects of implicit sequence learning necessarily recruit the same neurocognitive mechanisms as language processing. Performance on an implicit learning task correlated with spoken language processing only when the sequences were composed of verbal items. We would therefore expect certain brain regions to be involved specifically in verbal sequencing, and these may be the regions that underlie performance on both the implicit sequence learning and the spoken language tasks. There are two likely candidates for a verbal-specific sequencing system. One possibility is that certain corticostriatal circuits are specifically devoted to sequencing verbally mediated material. This possibility is consistent with suggestions that different corticostriatal loops perform analogous computations (i.e., sequencing) but handle different input domains (Ullman, 2001). A second possibility is that regions of Broca’s area specifically handle phonological sequences. This idea is supported by data showing that patients with Broca’s aphasia can perform a spatio-motor implicit sequence learning task but not one involving phoneme sequences (Goschke et al., 2001).

In sum, both domain-general and modality-specific neurocognitive mechanisms appear to underlie language and implicit sequence learning. The extent to which these neural mechanisms are shared across linguistic and non-linguistic tasks will depend on several factors, one of which is the perceptual dimension of the input (e.g., visual, auditory, verbal). For instance, we predict that neural mechanisms devoted to sequence processing more generally (frontal/basal ganglia circuits) will interact with modality-specific brain regions (e.g., visual and auditory unimodal and association areas) when the task requires it. Furthermore, there may be phonology-specific processing areas (e.g., specific components of the frontal lobe, such as Broca’s region) that are preferentially recruited when the task involves phonological sequencing, whether the input itself is visual or auditory. This interplay of domain-general and modality-specific neural mechanisms was recently demonstrated in a neuroimaging study examining the effects of input modality and linguistic complexity during spoken and written language processing tasks (Jobard, Vigneau, Mazoyer, & Tzourio-Mazoyer, 2007). Both reading and listening tasks engaged a common phonological or supramodal network of brain regions, including the inferior frontal area, whereas visual and auditory unimodal and association areas were preferentially active during reading and listening, respectively.

CONCLUSIONS

We have reviewed evidence that implicit sequence learning is mediated by a combination of modality-specific and domain-general neurocognitive learning mechanisms that likely contribute to the successful acquisition and processing of linguistic input. Sequence learning and language are both complex, dynamic processes that involve a wide network of brain areas acting in concert. The behavioral work suggests both how the underlying neurocognitive mechanisms are constrained by input modality and how they rely on more abstract or supramodal neural mechanisms shared with language processing. We suggest that a full understanding of language acquisition and processing, whether for written or spoken material, will likely benefit from further exploration of the neurocognitive basis of implicit sequence learning.

Acknowledgments

Preparation of this manuscript was supported by NIH DC00012.

References

- Altmann GTM, Dienes Z, Goode A. Modality independence of implicitly learned grammatical knowledge. Journal of Experimental Psychology: Learning, Memory, & Cognition. 1995;21:899–912.

- Baddeley AD. Working memory and language: An overview. Journal of Communication Disorders. 2003;36:189–208. doi: 10.1016/s0021-9924(03)00019-4.

- Baddeley AD. Working memory. Oxford, UK: Oxford University Press; 1986.

- Bapi RS, Pammi VSC, Miyapuram KP, Ahmed. Investigation of sequence processing: A cognitive and computational neuroscience perspective. Current Science. 2005;89:1690–1698.

- Barrett HC, Kurzban R. Modularity in cognition: Framing the debate. Psychological Review. 2006;113:628–647. doi: 10.1037/0033-295X.113.3.628.

- Barsalou LW, Simmons WK, Barbey AK, Wilson CD. Grounding conceptual knowledge in modality-specific systems. Trends in Cognitive Sciences. 2003;7:84–91. doi: 10.1016/s1364-6613(02)00029-3.

- Berns GS, Cohen JD, Mintun MA. Brain regions responsive to novelty in the absence of awareness. Science. 1997;276:1272–1275. doi: 10.1126/science.276.5316.1272.

- Bischoff-Grethe A, Martin M, Mao H, Berns GS. The context of uncertainty modulates the subcortical response to predictability. Journal of Cognitive Neuroscience. 2001;13:986–993. doi: 10.1162/089892901753165881.

- Brooks LR, Vokey JR. Abstract analogies and abstracted grammars: Comments on Reber (1989) and Mathews et al. (1989). Journal of Experimental Psychology: General. 1991;120:316–323.

- Carpenter AF, Georgopoulos AP, Pellizzer G. Motor cortical encoding of serial order in a context-recall task. Science. 1999;283:1752–1757. doi: 10.1126/science.283.5408.1752.

- Chang GY, Knowlton BJ. Visual feature learning in artificial grammar classification. Journal of Experimental Psychology: Learning, Memory, & Cognition. 2004;30:714–722. doi: 10.1037/0278-7393.30.3.714.

- Clark A. Being there: Putting brain, body, and world back together again. Cambridge, MA: MIT Press; 1997.

- Cleeremans A, Destrebecqz A, Boyer M. Implicit learning: News from the front. Trends in Cognitive Sciences. 1998;2:406–416. doi: 10.1016/s1364-6613(98)01232-7.

- Cleeremans A, McClelland JL. Learning the structure of event sequences. Journal of Experimental Psychology: General. 1991;120:235–253. doi: 10.1037//0096-3445.120.3.235.

- Clegg BA, DiGirolamo GJ, Keele SW. Sequence learning. Trends in Cognitive Sciences. 1998;2:275–281. doi: 10.1016/s1364-6613(98)01202-9.

- Clopper CG, Pisoni DB. The Nationwide Speech Project: A new corpus of American English dialects. Speech Communication. 2006;48:633–644. doi: 10.1016/j.specom.2005.09.010.

- Conway CM, Christiansen MH. Sequential learning in non-human primates. Trends in Cognitive Sciences. 2001;5:529–546. doi: 10.1016/s1364-6613(00)01800-3.

- Conway CM, Christiansen MH. Modality-constrained statistical learning of tactile, visual, and auditory sequences. Journal of Experimental Psychology: Learning, Memory, & Cognition. 2005;31:24–39. doi: 10.1037/0278-7393.31.1.24.

- Conway CM, Christiansen MH. Statistical learning within and between modalities: Pitting abstract against stimulus-specific representations. Psychological Science. 2006;17:905–912. doi: 10.1111/j.1467-9280.2006.01801.x.

- Conway CM, Christiansen MH. Seeing and hearing in space and time: Effects of modality and presentation rate on implicit statistical learning. 2007 Manuscript submitted for publication.

- Conway CM, Karpicke J, Pisoni DB. Contribution of implicit sequence learning to spoken language processing: Some preliminary findings from normal-hearing adults. Journal of Deaf Studies and Deaf Education. 2007;12:317–334. doi: 10.1093/deafed/enm019.

- Crowder RG. Auditory and temporal factors in the modality effect. Journal of Experimental Psychology: Learning, Memory, and Cognition. 1986;12:268–278. doi: 10.1037//0278-7393.12.2.268.

- Curran T. Implicit sequence learning from a cognitive neuroscience perspective: What, how, and where? In: Stadler MA, Frensch PA, editors. Handbook of implicit learning. London: Sage Publications; 1998. pp. 365–400.

- Desmond JE, Fiez JA. Neuroimaging studies of the cerebellum: Language, learning, and memory. Trends in Cognitive Sciences. 1998;2:355–361. doi: 10.1016/s1364-6613(98)01211-x.

- Dominey PF, Ventre-Dominey J, Broussolle E, Jeannerod M. Analogical transfer in sequence learning. In: Grafman J, Holyoak KJ, Boller F, editors. Structure and functions of the human prefrontal cortex. New York: New York Academy of Sciences; 1995. pp. 173–181.

- Doya K. What are the computations of the cerebellum, the basal ganglia and the cerebral cortex? Neural Networks. 1999;12:961–974. doi: 10.1016/s0893-6080(99)00046-5.

- Feldman J, Kerr B, Streissguth AP. Correlational analyses of procedural and declarative learning performance. Intelligence. 1995;20:87–114.

- Fiser J, Aslin RN. Unsupervised statistical learning of higher-order spatial structures from visual scenes. Psychological Science. 2001;12:499–504. doi: 10.1111/1467-9280.00392.

- Fletcher P, Büchel C, Josephs O, Friston K, Dolan R. Learning-related neuronal responses in prefrontal cortex studied with functional neuroimaging. Cerebral Cortex. 1999;9:168–178. doi: 10.1093/cercor/9.2.168.

- Fodor JA. The modularity of mind: An essay on faculty psychology. Cambridge, MA: Bradford Books, MIT Press; 1983.

- Forkstam C, Hagoort P, Fernandez G, Ingvar M, Petersson KM. Neural correlates of artificial syntactic structure classification. NeuroImage. 2006;32:956–967. doi: 10.1016/j.neuroimage.2006.03.057.

- Freides D. Human information processing and sensory modality: Cross-modal functions, information complexity, memory, and deficit. Psychological Bulletin. 1974;81:284–310. doi: 10.1037/h0036331.

- Friederici AD. Processing local transitions versus long-distance syntactic hierarchies. Trends in Cognitive Sciences. 2004;8:245–247. doi: 10.1016/j.tics.2004.04.013.

- Friederici AD, Rüschemeyer S-A, Hahne A, Fiebach CJ. The role of left inferior frontal and superior temporal cortex in sentence comprehension: Localizing syntactic and semantic processes. Cerebral Cortex. 2003;13:170–177. doi: 10.1093/cercor/13.2.170.

- Fuster J. The prefrontal cortex – an update: Time is of the essence. Neuron. 2001;30:319–333. doi: 10.1016/s0896-6273(01)00285-9.

- Fuster J. Temporal processing. In: Grafman J, Holyoak KJ, Boller F, editors. Structure and functions of the human prefrontal cortex. New York: New York Academy of Sciences; 1995. pp. 173–181.

- Gebauer GF, Mackintosh NJ. Psychometric intelligence dissociates implicit and explicit learning. Journal of Experimental Psychology: Learning, Memory, & Cognition. 2007;33:34–54. doi: 10.1037/0278-7393.33.1.34.

- Glenberg AM. What memory is for. Behavioral and Brain Sciences. 1997;20:1–55. doi: 10.1017/s0140525x97000010.

- Goldstone RL, Barsalou LW. Reuniting perception and conception. Cognition. 1998;65:231–262. doi: 10.1016/s0010-0277(97)00047-4.

- Gomez RL, Gerken L. Artificial grammar learning by 1-year-olds leads to specific and abstract knowledge. Cognition. 1999;70:109–135. doi: 10.1016/s0010-0277(99)00003-7.

- Goschke T. Implicit learning of perceptual and motor sequences: Evidence for independent learning systems. In: Stadler MA, Frensch PA, editors. Handbook of implicit learning. London: Sage Publications; 1998. pp. 410–444.

- Goschke T, Friederici AD, Kotz SA, van Kampen A. Procedural learning in Broca’s aphasia: Dissociation between the implicit acquisition of spatio-motor and phoneme sequences. Journal of Cognitive Neuroscience. 2001;13:370–388. doi: 10.1162/08989290151137412.

- Gottselig JM, Brandeis D, Hofer-Tinguely G, Borbély AA, Achermann P. Human central auditory plasticity associated with tone sequence learning. Learning & Memory. 2006;11:162–171. doi: 10.1101/lm.63304.

- Grafton ST, Hazeltine E, Ivry R. Functional mapping of sequence learning in normal humans. Journal of Cognitive Neuroscience. 1995;7:497–510. doi: 10.1162/jocn.1995.7.4.497.

- Greenfield PM. Language, tools and brain: The ontogeny and phylogeny of hierarchically organized sequential behavior. Behavioral and Brain Sciences. 1991;14:531–595.

- Handel S, Buffardi L. Using several modalities to perceive one temporal pattern. Quarterly Journal of Experimental Psychology. 1969;21:256–266. doi: 10.1080/14640746908400220.

- Harris JA, Petersen RS, Diamond ME. The cortical distribution of sensory memories. Neuron. 2001;30:315–318. doi: 10.1016/s0896-6273(01)00300-2.

- Heindel WC, Butters N, Salmon DP. Impaired learning of a motor skill in patients with Huntington’s disease. Behavioral Neuroscience. 1988;102:141–147. doi: 10.1037//0735-7044.102.1.141.

- Hikosaka O, Nakahara H, Rand MK, Sakai K, Lu X, Nakamura K, Miyachi S, Doya K. Parallel neural networks for learning sequential procedures. Trends in Neurosciences. 1999;22:464–471. doi: 10.1016/s0166-2236(99)01439-3.

- Howard JH, Jr, Howard DV, Japikse KC, Eden GF. Dyslexics are impaired on implicit higher-order sequence learning, but not on implicit spatial context learning. Neuropsychologia. 2006;44:1131–1144. doi: 10.1016/j.neuropsychologia.2005.10.015.

- Huettel SA, Mack PB, McCarthy G. Perceiving patterns in random series: Dynamic processing of sequence in prefrontal cortex. Nature Neuroscience. 2002;5:485–490. doi: 10.1038/nn841.

- James TW, Gauthier I. Auditory and action semantic features activate sensory-specific perceptual brain regions. Current Biology. 2003;13:1792–1796. doi: 10.1016/j.cub.2003.09.039.

- Jobard G, Vigneau M, Mazoyer B, Tzourio-Mazoyer N. Impact of modality and linguistic complexity during reading and listening tasks. NeuroImage. 2007;34:784–800. doi: 10.1016/j.neuroimage.2006.06.067.

- Kalikow DN, Stevens KN, Elliott LL. Development of a test of speech intelligibility in noise using sentence materials with controlled word predictability. Journal of the Acoustical Society of America. 1977;61:1337–1351. doi: 10.1121/1.381436.

- Karpicke JD, Pisoni DB. Using immediate memory span to measure implicit learning. Memory & Cognition. 2004;32:956–964. doi: 10.3758/bf03196873.

- Keele SW, Ivry R, Mayr U, Hazeltine E, Heuer H. The cognitive and neural architecture of sequence representation. Psychological Review. 2003;110:316–339. doi: 10.1037/0033-295x.110.2.316.

- Kelly SW, Griffiths S, Frith U. Evidence for implicit sequence learning in dyslexia. Dyslexia. 2002;8:43–52. doi: 10.1002/dys.208.

- Kilgard MP, Merzenich MM. Order-sensitive plasticity in adult primary auditory cortex. Proceedings of the National Academy of Sciences. 2002;99:3205–3209. doi: 10.1073/pnas.261705198.

- Kinder A, Shanks DR, Cock J, Tunney RJ. Recollection, fluency, and the explicit/implicit distinction in artificial grammar learning. Journal of Experimental Psychology: General. 2003;132:551–565. doi: 10.1037/0096-3445.132.4.551.

- Lieberman MD, Chang GY, Chiao J, Bookheimer SY, Knowlton BJ. An event-related fMRI study of artificial grammar learning in a balanced chunk strength design. Journal of Cognitive Neuroscience. 2004;16:427–438. doi: 10.1162/089892904322926764.

- Lieberman P. On the nature and evolution of the neural bases of human language. Yearbook of Physical Anthropology. 2002;45:36–62. doi: 10.1002/ajpa.10171.

- Menghini D, Hagberg GE, Caltagirone C, Petrosini L, Vicari S. Implicit learning deficits in dyslexic adults: An fMRI study. NeuroImage. 2006;33:1218–1226. doi: 10.1016/j.neuroimage.2006.08.024.

- Messinger A, Squire LR, Zola SM, Albright TD. Neuronal representations of stimulus associations develop in the temporal lobe during learning. Proceedings of the National Academy of Sciences. 2001;98:12239–12244. doi: 10.1073/pnas.211431098.

- Middleton FA, Strick PL. Basal ganglia output and cognition: Evidence from anatomical, behavioral, and clinical studies. Brain and Cognition. 2000;42:183–200. doi: 10.1006/brcg.1999.1099.

- Miller EK, Cohen JD. An integrative theory of prefrontal cortex function. Annual Review of Neuroscience. 2001;24:167–202. doi: 10.1146/annurev.neuro.24.1.167.

- Miller GA. Free recall of redundant strings of letters. Journal of Experimental Psychology. 1958;56:485–491. doi: 10.1037/h0044933.

- Miller GA, Selfridge JA. Verbal context and the recall of meaningful material. American Journal of Psychology. 1950;63:176–185.

- Molinari M, Filippini V, Leggio MG. Neuronal plasticity of interrelated cerebellar and cortical networks. Neuroscience. 2002;111:863–870. doi: 10.1016/s0306-4522(02)00024-6.

- Nissen MJ, Bullemer P. Attentional requirements of learning: Evidence from performance measures. Cognitive Psychology. 1987;19:1–32.

- Nolte JN, Angevine JB. The human brain: In photographs and diagrams. New York: Mosby; 1995.

- Obleser J, Wise RJS, Dresner MA, Scott SK. Functional integration across brain regions improves speech perception under adverse listening conditions. The Journal of Neuroscience. 2007;27:2283–2289. doi: 10.1523/JNEUROSCI.4663-06.2007.

- Ohl FW, Scheich H, Freeman WJ. Change in pattern of ongoing cortical activity with auditory category learning. Nature. 2001;412:733–736. doi: 10.1038/35089076.

- Opitz B, Friederici AD. Brain correlates of language learning: The neuronal dissociation of rule-based versus similarity-based learning. The Journal of Neuroscience. 2004;24:8436–8440. doi: 10.1523/JNEUROSCI.2220-04.2004.

- Paquier PF, Mariën P. A synthesis of the role of the cerebellum in cognition. Aphasiology. 2005;19:3–19.

- Pascual-Leone A, Grafman J, Hallett M. Procedural learning and prefrontal cortex. In: Grafman J, Holyoak KJ, Boller F, editors. Structure and functions of the human prefrontal cortex. New York: New York Academy of Sciences; 1995. pp. 61–70.

- Pasupathy A, Miller EK. Different time courses of learning-related activity in the prefrontal cortex and striatum. Nature. 2005;433:873–876. doi: 10.1038/nature03287.

- Peigneux P, Maquet P, Meulemans T, Destrebecqz A, Laureys S, Degueldre C, Delfiore G, Aerts J, Luxen A, Franck G, Van der Linden M, Cleeremans A. Striatum forever, despite sequence learning variability: A random effect analysis of PET data. Human Brain Mapping. 2000;10:179–194. doi: 10.1002/1097-0193(200008)10:4<179::AID-HBM30>3.0.CO;2-H.

- Perruchet P, Pacton S. Implicit learning and statistical learning: Two approaches, one phenomenon. Trends in Cognitive Sciences. 2006;10:233–238. doi: 10.1016/j.tics.2006.03.006.

- Petersson KM, Forkstam C, Ingvar M. Artificial syntactic violations activate Broca’s region. Cognitive Science. 2004;28:383–407.

- Pisoni DB, Cleary M. Learning, memory, and cognitive processes in deaf children following cochlear implantation. In: Zeng FG, Popper AN, Fay RR, editors. Springer handbook of auditory research: Auditory prosthesis. SHAR Volume X. 2004. pp. 377–426.

- Port RF, Van Gelder T. Mind as motion: Explorations in the dynamics of cognition. Cambridge, MA: MIT Press; 1995.

- Pothos EM, Bailey TM. The role of similarity in artificial grammar learning. Journal of Experimental Psychology: Learning, Memory, & Cognition. 2000;26:847–862. doi: 10.1037//0278-7393.26.4.847.

- Pulvermüller F. Brain reflections of words and their meaning. Trends in Cognitive Sciences. 2001;5:517–524. doi: 10.1016/s1364-6613(00)01803-9.

- Rauch SL, Savage CR, Brown HD, Curran T, Alpert NM, Kendrick A, Fischman AJ, Kosslyn SM. A PET investigation of implicit and explicit sequence learning. Human Brain Mapping. 1995;3:271–286.

- Rauch SL, Whalen PJ, Savage CR, Curran T, Kendrick A, Brown HD, Bush G, Breiter HC, Rosen BR. Striatal recruitment during an implicit sequence learning task as measured by functional magnetic resonance imaging. Human Brain Mapping. 1997;5:124–132.

- Reber AS. Implicit learning of artificial grammars. Journal of Verbal Learning and Verbal Behavior. 1967;6:855–863.

- Reber AS. Transfer of syntactic structure in synthetic languages. Journal of Experimental Psychology. 1969;81:115–119.

- Reber AS. Implicit learning and tacit knowledge: An essay on the cognitive unconscious. Oxford, England: Oxford University Press; 1993.

- Reber PJ, Stark CEL, Squire LR. Cortical areas supporting category learning identified using functional MRI. Proceedings of the National Academy of Sciences, USA. 1998;95:747–750. doi: 10.1073/pnas.95.2.747.

- Redington M, Chater N. Knowledge representation and transfer in artificial grammar learning (AGL). In: French RM, Cleeremans A, editors. Implicit learning and consciousness: An empirical, philosophical, and computational consensus in the making. Hove, East Sussex: Psychology Press; 2002. pp. 121–143.

- Redington M, Chater N. Transfer in artificial grammar learning: A reevaluation. Journal of Experimental Psychology: General. 1996;125:123–138. [Google Scholar]

- Rhodes BJ, Bullock D, Verwey WB, Averbeck BB, Page MPA. Learning and production of movement sequences: Behavioral, neurophysiological, and modeling perspectives. Human Movement Science. 2004;23:699–746. doi: 10.1016/j.humov.2004.10.008.

- Rubenstein H. Language and probability. In: Miller GA, editor. Communication, language, and meaning: Psychological perspectives. New York: Basic Books, Inc; 1973. pp. 185–195.

- Rüsseler J, Gerth I, Münte TF. Implicit learning is intact in developmental dyslexic readers: Evidence from the serial reaction time task and artificial grammar learning. Journal of Clinical and Experimental Neuropsychology. 2006;28:808–827. doi: 10.1080/13803390591001007.

- Saffran JR, Aslin RN, Newport EL. Statistical learning by 8-month-old infants. Science. 1996;274:1926–1928. doi: 10.1126/science.274.5294.1926.

- Saffran JR, Johnson EK, Aslin RN, Newport EL. Statistical learning of tone sequences by human infants and adults. Cognition. 1999;70:27–52. doi: 10.1016/s0010-0277(98)00075-4.

- Schacter DL, Dobbins IG, Schnyer DM. Specificity of priming: A cognitive neuroscience perspective. Nature Reviews Neuroscience. 2004;5:853–862. doi: 10.1038/nrn1534.

- Seger CA. Multiple forms of implicit learning. In: Stadler MA, Frensch PA, editors. Handbook of implicit learning. London: Sage Publications; 1998. pp. 295–320.

- Seger CA. The basal ganglia in human learning. The Neuroscientist. 2006;12:285–290. doi: 10.1177/1073858405285632.

- Seger CA, Prabhakaran V, Poldrack RA, Gabrieli JDE. Neural activity differs between explicit and implicit learning of artificial grammar strings: An fMRI study. Psychobiology. 2000;28:283–292.

- Siegert RJ, Taylor KD, Weatherall M, Abernethy DA. Is implicit sequence learning impaired in Parkinson’s disease? A meta-analysis. Neuropsychology. 2006;20:490–495. doi: 10.1037/0894-4105.20.4.490.

- Skosnik PD, Mirza F, Gitelman DR, Parrish TB, Mesulam M-M, Reber PJ. Neural correlates of artificial grammar learning. NeuroImage. 2002;17:1306–1314. doi: 10.1006/nimg.2002.1291.

- Tettamanti M, Alkadhi H, Moro A, Perani D, Kollias S, Weniger D. Neural correlates for the acquisition of natural language syntax. NeuroImage. 2002;17:700–709.

- Tunney RJ, Altmann GTM. Two modes of transfer in artificial grammar learning. Journal of Experimental Psychology: Learning, Memory, & Cognition. 2001;27:614–639.

- Ullman MT. Contributions of memory circuits to language: The declarative/procedural model. Cognition. 2004;92:231–270. doi: 10.1016/j.cognition.2003.10.008.

- Vicari S, Marotta L, Menghini D, Molinari M, Petrosini L. Implicit learning deficit in children with developmental dyslexia. Neuropsychologia. 2003;41:108–114. doi: 10.1016/s0028-3932(02)00082-9.

- Waber DP, Marcus DJ, Forbes PW, Bellinger DC, Weiler MD, Sorensen LG, et al. Motor sequence learning and reading ability: Is poor reading associated with sequencing deficits? Journal of Experimental Child Psychology. 2003;84:338–354. doi: 10.1016/s0022-0965(03)00030-4.

- Witt K, Nühsman A, Deuschl G. Intact artificial grammar learning in patients with cerebellar degeneration and advanced Parkinson’s disease. Neuropsychologia. 2002;40:1534–1540. doi: 10.1016/s0028-3932(02)00027-1.