Abstract

Neuroeconomic studies of decision making have emphasized reward learning as critical in the representation of value-driven choice behaviour. However, it is readily apparent that punishment and aversive learning are also significant factors in motivating decisions and actions. In this paper, we review the role of the striatum and amygdala in affective learning and the coding of aversive prediction errors (PEs). We present neuroimaging results showing aversive PE-related signals in the striatum in fear conditioning paradigms with both primary (shock) and secondary (monetary loss) reinforcers. These results and others point to a general role for the striatum in coding PEs across a broad range of learning paradigms and reinforcer types.

Keywords: fear conditioning, striatum, amygdala, prediction error, Neuroeconomics

1. Introduction

The rapid growth of Neuroeconomics has yielded many investigations of valuation signals, absolute and temporal preferences and risky decision making under uncertainty (for reviews see Glimcher & Rustichini (2004), Camerer et al. (2005) and Sanfey et al. (2006)). One key theme emerging is the link between certain neural structures, specifically the striatum, and the subjective value an individual assigns to a particular stimulus (Montague & Berns 2002; Knutson et al. in press). This subjective value is represented prior to choice, encouraging exploratory or approach behaviour, and is dynamically updated according to prior history, such as when an outcome deviates from expectation. Currently, most of these neuroimaging studies focus on reward processing and use instrumental designs where outcomes are contingent on specific actions and subjective value deviates from positive expectations. As a consequence, the striatum's role in affective learning and decision making in the context of Neuroeconomics has been primarily associated with the domain of reward-related processing, particularly with respect to prediction error (PE) signals.

It is unclear, however, whether the role of the striatum in affective learning and decision making can also be extended to aversive processing more generally, including the learning signals necessary for aversive learning. This is a topic of growing interest in Neuroeconomics given classic economic topics such as loss and risk aversion (Tom et al. 2007), framing and the endowment effect (De Martino et al. 2006) and even social aversions that may arise due to betrayals of trust (Baumgartner et al. 2008). To date, most of our knowledge of the neural basis of aversive processing comes from a rich literature that highlights the specific contributions of the amygdala in the acquisition, expression and extinction of fear (for a review see LeDoux 1995). In contrast to the instrumental paradigms typically used in Neuroeconomic studies of decision making, most investigations of aversive learning have used classical or Pavlovian conditioning paradigms, in which a neutral stimulus (the conditioned stimulus, CS) comes to acquire aversive properties by simple pairing with an aversive event (the unconditioned stimulus, US).

Although the amygdala has been the focus of most investigations of aversive learning, whereas the striatum is primarily implicated in studies of reward, there is increasing evidence that the role of both of these structures in affective and particularly reinforcement learning is not so clearly delineated. The goal of the present paper is to explore the role of the striatum in aversive processing. Specifically, we focus on the involvement of the striatum in coding PEs during Pavlovian or classical aversive conditioning. To start, we briefly review the involvement of the amygdala, a structure traditionally linked to fear responses, in affective learning. This is followed by a consideration of the role of the striatum in appetitive or reward conditioning. Finally, we discuss the evidence highlighting the contributions of the striatum in aversive processing, leading to an empirical test of the role of the human striatum in aversive learning. One key finding consistent across the literature on human reward-related processing is the correlation between striatal function and PE signals, or learning signals that adjust expectations and help guide goal-directed behaviour (Schultz & Dickinson 2000). However, if the striatum is involved in affective learning and decision making and not solely reward-related processing, similar signals should be expected during aversive conditioning. We therefore propose to test an analogous correlation between PE signals and striatal function with two separate datasets from our laboratory on aversive learning with either primary (Schiller et al. in press) or secondary (current paper) reinforcers using a classical fear conditioning paradigm, which is usually linked to amygdala function (Phelps et al. 2004).

(a) Amygdala contributions to affective learning

In a typical classical fear conditioning paradigm, a neutral event, such as a tone (the CS), is paired with an aversive event, such as a shock (the US). After several pairings of the tone and the shock, the presentation of the tone itself leads to a fear response (the conditioned response, CR). Studies investigating the neural systems of fear conditioning have shown that the amygdala is a critical structure in its acquisition, storage and expression (LeDoux 2000; Maren 2001). Using this model paradigm, researchers have been able to map the pathways of fear learning from stimulus input to response output. Although the amygdala is often referred to as a unitary structure, several studies indicate that different subregions of the amygdala serve different functions. The lateral nucleus of the amygdala (LA) is the region where the inputs from the CS and US converge (Romanski et al. 1993). Lesions to the LA disrupt learning of the CS–US contingency, thus interfering with the acquisition of conditioned fear (Wilensky et al. 1999; Delgado et al. 2006). The LA projects to the central nucleus (CE) of the amygdala (Pare et al. 1995; Pitkanen et al. 1997). Lesions of CE block the expression of a range of CRs, such as freezing, autonomic changes and potentiated startle, whereas damage to areas that CE projects to interferes with the expression of specific CRs (Kapp et al. 1979; Davis 1998; LeDoux 2000). The LA also projects to the basal nucleus of the amygdala. Damage to this region prevents other means of expressing the CR, such as active avoidance of the CS (Amorapanth et al. 2000).

Investigations of the neural systems of fear conditioning in humans have largely supported and extended these findings from non-human animals. Patients with amygdala lesions fail to show physiological evidence (i.e. skin conductance) of conditioned fear, although they are able to verbally report the parameters of fear conditioning (Bechara et al. 1995; LaBar et al. 1995). This explicit awareness and memory for the events of fear conditioning is impaired in patients with hippocampal damage, who show normal physiological evidence of conditioned fear (Bechara et al. 1995). Brain imaging studies show amygdala activation to a CS that is correlated with the strength of the CR (LaBar et al. 1998). Amygdala activation during fear conditioning occurs when the CS is presented both supraliminally and subliminally (Morris et al. 1999), suggesting awareness and explicit memory are not necessary for amygdala involvement. Technical limitations largely prevent the exploration of roles for specific subregions of the amygdala in humans, but the bulk of evidence suggests that this fear-learning system is relatively similar across species.

Although most investigations of amygdala function focus on fear or the processing of aversive stimuli, it has been suggested that different subregions of the amygdala may also play specific roles in classical conditioning paradigms involving rewards. When adapted to reward learning, the CS would be a neutral stimulus, such as the tone, but the US would be rewarding, for example a food pellet. Owing to the appetitive nature of the US, this type of reward-related learning is often referred to as appetitive conditioning (Gallagher et al. 1990; Robbins & Everitt 1996). It is hypothesized that the basolateral nucleus of the amygdala (BLA) may be particularly important for maintaining and updating the representation of the affective value of an appetitive CS (Parkinson et al. 1999, 2001), specifically through its interactions with the corticostriatal and dopaminergic circuitry (Rosenkranz & Grace 2002; Rosenkranz et al. 2003). Accordingly, the BLA may be involved in specific variations of standard appetitive conditioning paradigms, such as when a secondary reinforcer serves as the US, when the value of the US changes after conditioning, and when Pavlovian and instrumental processes interact (Gallagher et al. 1990). Interestingly, because similar effects were found following disconnection of the BLA and the nucleus accumbens (NAcc), amygdala–striatal interactions appear to be critical for processing information about learned motivational value (Setlow et al. 2002). A number of studies have shown that the CE may be critical for some expressions of appetitive conditioning, such as enhanced attention (orienting) to the CS (Gallagher et al. 1990), and for controlling the general motivational influence of reward-related events (Corbit & Balleine 2005). In humans, there is some evidence for amygdala involvement in appetitive conditioning. For example, patients with amygdala lesions are impaired in conditioned preference tasks involving rewards (Johnsrude et al. 2000). In addition, neuroimaging studies have reported amygdala activation during an appetitive conditioning task using food as a US (Gottfried et al. 2003).

(b) Striatal contributions to affective learning

The striatum is the main input structure of the basal ganglia. It comprises three primary regions (caudate nucleus, putamen and NAcc), grouped into a dorsal subdivision (caudate nucleus and putamen) and a ventral subdivision (NAcc and ventral portions of the caudate and putamen). A vast array of research exists highlighting the role of the striatum and connected regions of the prefrontal cortex during affective learning essential for goal-directed behaviour (for a review see Balleine et al. 2007). These corticostriatal circuits allow for flexible involvement in motor, cognitive and affective components of behaviour (Alexander et al. 1986; Alexander & Crutcher 1990). Anatomical tracing work in non-human primates also highlights the role of midbrain dopaminergic structures (both substantia nigra and ventral tegmental area) in modulating information processed in corticostriatal circuits. Such work suggests that an ascending spiral of projections connecting the striatum and midbrain dopaminergic centres creates a hierarchy of information flow from the ventromedial to the dorsolateral portions of the striatum (Haber et al. 2000). Therefore, given its connectivity and anatomical organization, the striatum is in a prime position to influence different aspects of affective learning, ranging from basic classical and instrumental conditioning, believed to be mediated by more ventral and dorsomedial striatal regions (e.g. O'Doherty 2004; Voorn et al. 2004; Delgado 2007), to procedural and habitual learning, thought to depend on the dorsolateral striatum (e.g. Jog et al. 1999; Yin et al. 2005, 2006). This flow of information would allow an initial goal-directed learning phase that slowly transfers to habitual processing (Balleine & Dickinson 1998).

Since the goal of this paper is to examine whether the role of the striatum during reward-related processing extends to aversive learning particularly in the context of PEs, we will consider striatal function during similar appetitive and aversive classical conditioning paradigms. In our review, we highlight the contributions of the striatum to affective learning by first discussing the role of the striatum in appetitive or reward conditioning that has more often been linked to the integrity of corticostriatal systems. We then consider the role of the striatum in aversive learning, a domain particularly linked to amygdala function as previously discussed.

(i) Appetitive conditioning in the striatum

Neurophysiological evidence outlining the mechanisms of associative learning of rewards has been elegantly demonstrated by Schultz et al. (1997). According to this research, phasic signals originating from dopamine (DA) neurons in the non-human primate midbrain are observed upon unexpected delivery of rewards, such as a squirt of juice (the US). After repeated pairings with a visual or auditory cue (the CS), responses of DA neurons shift to the onset of the CS, rather than the delivery of the liquid. That is, DA responds to the earliest predictor of the reward, a signal that can be modulated by magnitude (Tobler et al. 2005) and probability (Fiorillo et al. 2003) of the rewarding outcome. Additionally, omission of an expected reward leads to a depression in DA firing. These findings and others (e.g. Bayer & Glimcher 2005) led researchers to postulate that dopaminergic neurons play a specific role in reward processing, but not as a hedonic indicator. Rather, the dopaminergic signal can be thought of as coding ‘PEs’, i.e. the difference between the reward received and the expected reward (Schultz & Dickinson 2000), a signal vital for learning and for shaping decisions.

As previously mentioned, both dorsal and ventral striatum are innervated by dopaminergic neurons from midbrain nuclei, contributing to the involvement of the striatum in reward-related learning (Haber & Fudge 1997). Infusion of DA agonists in the rodent striatum, for example, leads to enhanced reward conditioning (Harmer & Phillips 1998). Further, increases in DA release, measured through microdialysis, have been reported in the ventral striatum not only when rats self-administer cocaine (the US), but also when they are solely presented with a tone (the CS) that has been previously paired with cocaine administration (Ito et al. 2000). Consistent with these studies, lesions of the ventral striatum in rats impair the expression of behaviours indicating conditioned reward. For instance, rats with ventral striatum lesions are less likely to approach a reward-predicting CS than non-lesioned rats (Parkinson et al. 2000; Cardinal et al. 2002). Similarly, upon establishing place preference using classical conditioning by exposing hungry rats to sucrose in a distinctive environment, lesions of the ventral striatum abolish this learned response (Everitt et al. 1991). Consistent findings were also demonstrated in non-human primates (e.g. Apicella et al. 1991; Ravel et al. 2003). Striatal neurons show an increased firing rate during presentation of cues that predict a reward, selectively firing at reward-predicting CSs after learning (Schultz et al. 2003). Moreover, associations between actions and rewarding outcomes were also found to be encoded in the primate caudate nucleus (Lau & Glimcher 2007).

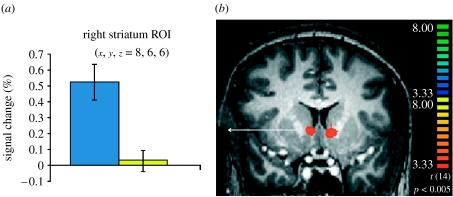

Consistent with the findings from these animal models, brain imaging studies in humans have widely reported activation of the striatum during appetitive conditioning tasks with both primary (e.g. O'Doherty et al. 2001; Pagnoni et al. 2002; Gottfried et al. 2003) and secondary (e.g. Delgado et al. 2000; Knutson et al. 2001b; Kirsch et al. 2003) reinforcers. For instance, in a probabilistic classical conditioning paradigm with instruction (i.e. participants are aware of the contingency), activation of the ventral caudate nucleus is observed when comparing a conditioned reinforcer paired with a monetary reward ($4.00) with a non-predictive CS (Delgado et al. 2008; figure 1). This region of interest (ROI) is similar in location to a ventral caudate ROI identified in a classical conditioning paradigm with food, or primary rewards (O'Doherty et al. 2001). Interestingly, a dichotomy between dorsal and ventral striatum has been suggested in human conditioning studies, building on the ‘actor–critic’ model (Sutton & Barto 1998). According to this model, the ‘critic’ learns to predict future rewards whereas the ‘actor’ processes outcome information to guide future behaviour. While some studies suggest that the ventral parts of the striatum are involved in both classical and instrumental conditioning, in turn serving in the role of the critic (O'Doherty 2004), activity in the dorsal striatum resembles the actor, being linked primarily to instrumental conditioning (Elliott et al. 2004; O'Doherty 2004; Tricomi et al. 2004), when rewards are contingent on a purposeful action and inform future behaviour (Delgado et al. 2005).
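To make the actor–critic division of labour concrete, the following Python sketch implements a minimal single-state actor–critic on a two-option choice task. It is an illustrative assumption rather than a model from the cited studies: the task, the reward probabilities, the parameter values and the function names are all hypothetical. The critic learns a single reward prediction V, the actor learns one preference per action, and both are driven by the same PE.

```python
import math
import random


def softmax(prefs, beta):
    """Convert action preferences into choice probabilities."""
    exps = [math.exp(beta * p) for p in prefs]
    total = sum(exps)
    return [e / total for e in exps]


def run_actor_critic(reward_probs, n_trials=500, alpha_critic=0.1,
                     alpha_actor=0.1, beta=3.0, seed=0):
    """Single-state actor-critic on a hypothetical bandit task.

    reward_probs: probability that each action is rewarded (reward = 1.0).
    Returns the critic's value estimate and the actor's preferences.
    """
    rng = random.Random(seed)
    V = 0.0                                # critic: predicted reward
    prefs = [0.0] * len(reward_probs)      # actor: one preference per action
    for _ in range(n_trials):
        probs = softmax(prefs, beta)
        action = rng.choices(range(len(prefs)), weights=probs)[0]
        reward = 1.0 if rng.random() < reward_probs[action] else 0.0
        delta = reward - V                     # PE computed by the critic
        V += alpha_critic * delta              # critic updates its prediction
        prefs[action] += alpha_actor * delta   # actor reinforces the chosen action
    return V, prefs


V, prefs = run_actor_critic([0.8, 0.2])
print(V, prefs)
```

Because the same PE both updates the prediction and strengthens or weakens the chosen action, the critic alone would suffice for classical conditioning, whereas the actor is only needed when outcomes depend on choices, which mirrors the ventral/dorsal distinction described above.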

Figure 1.

(a,b) Striatal responses during reward conditioning with secondary reinforcers. In this paradigm, the participants are presented with two conditioned stimuli that predict a potential reward (CS+, blue bar) or not (CS−, yellow bar). Adapted with permission from Delgado et al. (2008). ROI, region of interest.

(ii) Temporal difference learning in the striatum

Computational models have been particularly influential in understanding the role of the striatum in reward-related learning. Theoretical formulations of reinforcement learning suggest that learning is driven by deviations of outcomes from expectations, namely PEs. These errors are continuously used to update the value of predictive stimuli (Rescorla & Wagner 1972). Building on this idea, the temporal difference (TD) learning rule (Sutton & Barto 1990) has been shown to account for the previously discussed electrophysiological data from appetitive conditioning (Montague et al. 1996; Schultz et al. 1997).
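The trial-by-trial updating of a predictive stimulus by its PE, as in the Rescorla–Wagner rule, can be sketched in a few lines of Python. This is a minimal illustration under assumed values: a single CS, a US coded as 1.0 when delivered and 0.0 when omitted, and a hypothetical learning rate alpha = 0.2.

```python
def rescorla_wagner(outcomes, alpha=0.2, v0=0.0):
    """Delta-rule learning of the value of a single CS.

    outcomes: US magnitude on each trial (1.0 = US delivered, 0.0 = omitted).
    Returns the expectation held before each trial and the PE on each trial.
    """
    v = v0
    values, errors = [], []
    for outcome in outcomes:
        values.append(v)
        delta = outcome - v   # PE: outcome minus expectation
        errors.append(delta)
        v += alpha * delta    # move the expectation a fraction alpha towards the outcome
    return values, errors


# Ten CS-US pairings followed by one trial on which the US is omitted.
values, errors = rescorla_wagner([1.0] * 10 + [0.0])
print([round(e, 3) for e in errors])
```

With these settings the PE starts at 1.0 on the first pairing, shrinks geometrically (by a factor of 1 − alpha per trial) as the expectation approaches the outcome, and becomes negative when the now-expected US is omitted.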

The PE signal, which is the key component of reinforcement learning models, can be used both to guide learning and to bias action selection. Simply put, positive PEs occur when an unexpected outcome is delivered, while negative PEs occur when an expected outcome is omitted. When the delivery of an outcome is just as expected, the PE signal is zero. This model has been robustly tested in human and non-human animals with reward paradigms. It has been examined to a lesser extent in paradigms involving aversive learning, where some confusion can arise since a PE in an aversive context (i.e. non-delivery of an expected punishment) could be viewed as a positive outcome. Yet, in a TD model where outcomes are treated as indicators, regardless of their valence, PE signals are always negative in the case of the unexpected omission of the outcome (positive or negative). Here, we consider neuroimaging studies of PEs during human reward learning, before discussing PEs during aversive learning in later sections.
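The three cases above (unexpected delivery, fully expected delivery and omission), together with the shift of the phasic response from the US to the CS described for DA neurons, can be reproduced with a small TD(0) simulation. The sketch below is an assumption-laden illustration, not the model used in any cited study: it uses a hypothetical "complete serial compound" representation (one learnable value per time step between CS onset and the US), a discount factor of 1 and arbitrary timing and learning-rate values. Following the indicator convention in the text, an aversive US such as a shock can be entered here as reward = 1.0.

```python
def td_trial(w, cs_t, us_t, n_steps, reward, alpha=0.3, gamma=1.0, learn=True):
    """Run one conditioning trial of TD(0) and return the PE at each time step.

    w[t] is the learned value of time step t. Only steps from CS onset (cs_t)
    to US delivery (us_t) carry a stimulus representation; all other steps
    have a fixed value of 0, so the CS itself cannot be predicted.
    """
    def value(t):
        return w[t] if cs_t <= t <= us_t else 0.0

    deltas = []
    for t in range(n_steps):
        r = reward if t == us_t else 0.0
        # TD error: outcome now plus discounted next prediction, minus current prediction.
        delta = r + gamma * value(t + 1) - value(t)
        deltas.append(delta)
        if learn and cs_t <= t <= us_t:
            w[t] += alpha * delta
    return deltas


n_steps, cs_t, us_t = 10, 2, 6
w = [0.0] * n_steps

first = td_trial(w, cs_t, us_t, n_steps, reward=1.0)      # naive: PE at the US
for _ in range(200):
    td_trial(w, cs_t, us_t, n_steps, reward=1.0)            # repeated CS-US pairings
learned = td_trial(w, cs_t, us_t, n_steps, reward=1.0, learn=False)
omitted = td_trial(w, cs_t, us_t, n_steps, reward=0.0, learn=False)

print([round(d, 2) for d in first])
print([round(d, 2) for d in learned])
print([round(d, 2) for d in omitted])
```

On the first trial the only non-zero PE is at the US. After training, the PE at the fully predicted US is close to zero and a positive PE appears on the transition into the CS (index cs_t − 1 in this indexing); omitting the US leaves that CS response intact but produces a negative PE at the expected US time.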

Sophisticated neuroimaging studies incorporating TD learning models and neural data during appetitive conditioning or reward learning have started to identify the neural correlates of PE signals in the human brain (e.g. McClure et al. 2003; O'Doherty et al. 2003; Schönberg et al. 2007; Tobler et al. 2007). The first reports used classical conditioning paradigms with juice rewards and found that activation, indexed by blood-oxygen-level-dependent (BOLD) signals, in the ventral (O'Doherty et al. 2003) and dorsal (McClure et al. 2003) putamen correlated with a PE signal. Interestingly, the location within the striatum (putamen, NAcc and caudate) varies across paradigms (e.g. classical, instrumental) and even with different types of stimuli (e.g. food, money). PEs in the human striatum have also been observed to correlate with behavioural performance in instrumental-based paradigms (for a review see O'Doherty 2004). In most of these paradigms, PE signals were observed in both dorsal and ventral striatum and were stronger in participants who learned successfully (Schönberg et al. 2007), while being dissociable from pure goal values or the representation of potential rewards by a stimulus or action (Hare et al. 2008). Finally, PE models have been extended to social situations, with PE-related signals observed in both ventral and dorsal striatum for social stimuli such as attractive faces (Bray & O'Doherty 2007), as well as during trust and the acquisition of reputations (King-Casas et al. 2005).

Although the neurophysiological data implicate midbrain DA neurons in coding a PE signal, functional magnetic resonance imaging (fMRI) investigations often focus on dopaminergic targets such as the striatum. This is primarily due to the difficulty of obtaining robust and reliable responses in the midbrain nuclei, and to the view that BOLD signals reflect the inputs to a particular region (Logothetis et al. 2001). Notably, a recent study used high-resolution fMRI to investigate changes in the human ventral tegmental area according to PE signals (D'Ardenne et al. 2008). The BOLD responses in the ventral tegmental area reflected positive PEs for primary and secondary reinforcers, with no detectable responses during non-rewarding events. In sum, there is considerable evidence that corticostriatal circuits, modulated by dopaminergic input, are critically involved in appetitive or reward conditioning, and are particularly involved in representations of PE signals, guiding reward learning.

(iii) Aversive processing and the striatum

Evidence for the role of the striatum in affective learning is not strictly limited to appetitive conditioning, but has also been demonstrated in various tasks involving aversive motivation (for reviews, see Salamone (1994), Horvitz (2000), Di Chiara (2002), White & Salinas (2003), Pezze & Feldon (2004), McNally & Westbrook (2006) and Salamone et al. (2007)). Animal research on aversive learning has implicated, in particular, the DA system in the striatum. In the midbrain, DA neurons appear to respond more selectively to rewards and show weak responses, or even inhibition, to primary and conditioned aversive stimuli (Mirenowicz & Schultz 1996; Ungless et al. 2004). However, elevated DA levels were observed in the NAcc not only in response to various aversive outcomes, such as electric shocks, tail pinch, anxiogenic drugs, restraint stress and social stress (Robinson et al. 1987; Abercrombie et al. 1989; McCullough & Salamone 1992; Kalivas & Duffy 1995; Tidey & Miczek 1996; Young 2004), but also in response to CSs predictive of such outcomes or to exposure to the conditioning context (Young et al. 1993, 1998; Saulskaya & Marsden 1995b; Wilkinson 1997; Murphy et al. 2000; Pezze et al. 2001, 2002; Josselyn et al. 2004; Young & Yang 2004). DA in the NAcc is also important for aversive instrumental conditioning, as seen during active or passive avoidance and escape tasks (Cooper et al. 1974; Neill et al. 1974; Jackson et al. 1977; Schwarting & Carey 1985; Wadenberg et al. 1990; McCullough et al. 1993; Li et al. 2004). The levels of striatal DA reflect the operation of various processes, including the firing of DA neurons, DA reuptake mechanisms and activation of presynaptic glutamatergic inputs, which operate on different time scales. It should be noted, however, that the striatum receives inputs from other monoaminergic systems, which might also convey aversive information, either on their own or through complex interactions with the DA system (Mogenson et al. 1980; Zahm & Heimer 1990; Floresco & Tse 2007; Groenewegen & Trimble 2007).

Lesions of the NAcc or temporary inactivation studies have yielded a rather complex pattern of results, with different effects of whole or partial NAcc damage on cue versus context conditioning (Riedel et al. 1997; Westbrook et al. 1997; Haralambous & Westbrook 1999; Parkinson et al. 1999; Levita et al. 2002; Jongen-Relo et al. 2003; Schoenbaum & Setlow 2003; Josselyn et al. 2004). This has been taken to suggest that the NAcc subdivisions, namely the ventromedial shell and the dorsolateral core, make unique contributions to aversive learning. Accordingly, the shell might signal changes in the valence of the stimuli or their predictive value, whereas the core might mediate the behavioural fear response to the aversive cues (Zahm & Heimer 1990; Deutch & Cameron 1992; Zahm & Brog 1992; Jongen-Relo et al. 1993; Kelley et al. 1997; Parkinson et al. 1999, 2000; Pezze et al. 2001, 2002; Pezze & Feldon 2004).

In addition to the ventral striatum, evidence also exists linking the dorsal striatum with aversive learning. Specifically, lesions to this region have been shown to produce deficits in conditioned emotional response, conditioned freezing and passive and active avoidance (Winocur & Mills 1969; Allen & Davison 1973; Winocur 1974; Prado-Alcala et al. 1975; Viaud & White 1989; White & Viaud 1991; White & Salinas 2003).

Consistent with the rodent data, human fMRI studies also identify the striatum in classical and instrumental learning reinforced by aversive outcomes. Although the striatum is rarely the focus of neuroimaging studies on fear conditioning and fear responses, a number of studies using shock as a US report activation of the striatum in these paradigms, in addition to amygdala activation (Buchel et al. 1998, 1999; LaBar et al. 1998; Whalen et al. 1998; Phelps et al. 2004; Shin et al. 2005). Striatal activation has also been reported in expectation of thermal pain (Ploghaus et al. 2000; Seymour et al. 2005), and even of monetary loss (Delgado 2007; Seymour et al. 2007; Tom et al. 2007). Striatal activation has also been reported during direct experience with noxious stimuli (Becerra et al. 2001) and during avoidance responses (Jensen et al. 2003). Aversive-related activation has been observed throughout the striatum, and the distinct contributions of the different subdivisions (dorsal/ventral) have not been clearly identified to date, potentially due to limitations in existing fMRI techniques. However, activation of the striatum during anticipation of aversive events is not always observed (Breiter et al. 2001; Gottfried et al. 2002; Yacubian et al. 2006), with some reports specifically suggesting that the ventral striatum is involved solely in appetitive events, and is not responsive during anticipation of monetary loss (Knutson et al. 2001a).

(iv) PE and striatum during aversive processing

It is generally agreed that the striatum is likely to be involved in the processing of aversive PEs in learning paradigms. However, the nature of the aversive PE signal is still under debate. For example, the neurotransmitter systems carrying the aversive PE signal are unclear. One possibility is that serotonin released from the dorsal raphe nucleus may be the carrier of the aversive PE and act as an opponent system to the appetitive dopaminergic system (Daw et al. 2002). However, there is evidence that dopaminergic modulations in humans affect PE-related signals not only in appetitive (Pessiglione et al. 2006) but also in aversive conditioning (Menon et al. 2007). Consistent with this finding, there are numerous demonstrations that DA release increases over baseline during aversive learning in rodents (Young et al. 1993, 1998; Saulskaya & Marsden 1995a; Wilkinson 1997; Murphy et al. 2000; Pezze et al. 2001, 2002; Josselyn et al. 2004; Young 2004). These data raise the possibility that DA codes both appetitive and aversive PEs.

Another issue under debate is where these aversive PEs are represented in the brain. Converging evidence from human fMRI experiments suggests that both aversive and appetitive PEs may be represented in the striatum (Seymour et al. 2005, 2007; Kim et al. 2006; Tom et al. 2007), albeit spatially separable along its axis (Seymour et al. 2007). However, in a study using an instrumental conditioning paradigm (Kim et al. 2006), researchers failed to find aversion-related PE signals in the striatum, while observing them in other regions including the insula, the medial prefrontal cortex, the thalamus and the midbrain.

A related question is how exactly the BOLD signal in the striatum corresponds to the aversive error signal. Studies of PEs for rewards have shown that outcome omission (i.e. negative PE) results in deactivation of the striatal BOLD signal (e.g. McClure et al. 2003; O'Doherty et al. 2003; Schönberg et al. 2007; Tobler et al. 2007). It might be argued that reward omission is equivalent to the receipt of an aversive outcome. Accordingly, one might expect that in the case of aversive outcomes, positive PEs would similarly result in deactivation. An alternative hypothesis would be that positive and negative PEs are similarly signed for both appetitive and aversive outcomes. In support of the latter hypothesis, a number of studies suggest that the same relation between striatal BOLD signals and appetitive PEs also applies to aversive PEs (Jensen et al. 2003; Seymour et al. 2004, 2005, 2007). For example, using a higher-order aversive conditioning paradigm, Seymour et al. (2004) showed that BOLD responses in the ventral striatum increase following unexpected delivery of the aversive outcome, and decrease following its unexpected omission.
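The two hypotheses differ only in how the aversive outcome enters the PE computation. The Python sketch below makes the sign difference explicit under simplifying assumptions: a single outcome-time PE, a shock expectation between 0 and 1, and a hypothetical ±1 coding; the function and argument names are ours, not those of any cited study.

```python
def outcome_pe(expected, delivered, coding):
    """PE at outcome time for an aversive US under two coding schemes.

    expected:  learned prediction of the US, from 0 (none) to 1 (certain).
    delivered: True if the US occurs on this trial.
    coding:    "valence"   -> the aversive US is a negative reward (-1);
               "indicator" -> the US is coded +1 regardless of valence.
    """
    if coding == "valence":
        sign = -1.0
    elif coding == "indicator":
        sign = 1.0
    else:
        raise ValueError(f"unknown coding: {coding!r}")
    outcome = sign * (1.0 if delivered else 0.0)
    prediction = sign * expected
    return outcome - prediction


for coding in ("valence", "indicator"):
    surprise = outcome_pe(expected=0.0, delivered=True, coding=coding)   # unexpected shock
    omission = outcome_pe(expected=1.0, delivered=False, coding=coding)  # expected shock omitted
    print(coding, surprise, omission)
```

Under the "indicator" coding, unexpected delivery gives a PE of +1 and omission a PE of −1, matching the direction of the ventral striatal BOLD changes reported by Seymour et al. (2004); under the "valence" coding the signs are reversed, which is the pattern the first hypothesis would predict.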

An interesting question arises when one considers the consequences of this omission in aversive conditioning tasks, i.e. the by-product of relief and its rewarding properties. To examine this using a classical aversive conditioning procedure, participants experienced prolonged, experimentally induced tonic pain and were conditioned to learn pain relief or pain exacerbation (Seymour et al. 2005). The appetitive PE signals related to relief (appetitive learning) and the aversive PE signals related to pain (aversive learning) were both represented in the striatum. These findings support the idea that striatal activity is consistent with the expression of both appetitive and aversive learning signals.

Finally, when examining the neural mechanisms mediating the aversive PE signal, it is important to take into consideration the type of learning procedure and the type of reinforcement driving it. It is possible that the use of either primary or secondary aversive reinforcers, in either classical or instrumental paradigms, underlies some of the inconsistencies reported in the aversive learning and PE literature. For example, Seymour et al. (2007) used a secondary reinforcer (monetary loss or gain) in a probabilistic first-order classical delay conditioning task, but used a higher-order aversive conditioning task (Seymour et al. 2004) when examining the effect of a primary reinforcer (thermal pain). Jensen et al. (2003) conducted a direct comparison between classical and avoidance conditioning but focused on primary reinforcers (electric shock). Given these discrepancies, a more careful examination of the differences and commonalities between the processing of primary versus secondary reinforcers during the same aversive learning paradigm may yield useful insight into the role of the striatum in encoding aversive PEs.

(c) Experiment: PEs during classical aversive conditioning with a monetary reinforcer

The goal of this experiment was to examine the representation of aversive PEs during learning with secondary reinforcers (monetary loss) using a classical conditioning task typically used in aversive conditioning studies. Further, we compare the results with a previous study in our laboratory, which used a similar paradigm with a primary reinforcer (Schiller et al. in press). Unlike previous studies that suggested similarities between primary and secondary reinforcers during aversive learning in the striatum (Seymour et al. 2004, 2007), this experiment takes advantage of paradigms closely matched to those previously used with electric shock (primary reinforcer) to investigate similarities in PE representations with monetary loss (secondary reinforcer), allowing more direct comparisons to be drawn regarding the underlying neural mechanisms of aversive learning.

The data from our study with a primary reinforcer are published elsewhere (Schiller et al. in press) and will only be summarized here. We examined the role of the striatum in aversive learning with a typical primary reinforcer (mild shock to the wrist) using a fear discrimination and reversal paradigm (Schiller et al. in press). During acquisition, the participants learned to discriminate between two faces. One face (CS+) co-terminated with an electric shock (US) on approximately one-third of the trials and the other face (CS−) was never paired with the shock. Following acquisition, with no explicit transition, a reversal phase was instituted in which the same stimuli were presented with reversed reinforcement contingencies. Thus, the previously predictive face was no longer paired with the shock (new CS−), and the other face was now paired with the shock on approximately one-third of the trials (new CS+). Using fMRI, we examined which regions correlated with the predictive value of the stimuli as well as with the errors associated with these fear predictions. For the latter, we used a PE regressor generated by the TD reinforcement-learning algorithm (see below). We found robust bilateral striatal activation tracking the predictive value of the stimuli throughout the task. This region showed stronger responses to the CS+ versus CS−, and flexibly switched this responding during the reversal phase. Moreover, we found that striatal activation correlated with the PEs during fear learning and reversal. The region that showed PE-related activation was located in the head of the caudate nucleus (Talairach coordinates: left: x, y, z=−7, 3, 9; right: x, y, z=9, 5, 8).
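As a rough illustration of how trial-by-trial PE values can be derived for a discrimination and reversal design, the Python sketch below uses a simplified trial-level delta rule in place of the TD algorithm used in the study. The trial order (strict A/B alternation), the labels "A" and "B", the deterministic reinforcement of every third CS+ trial (standing in for "approximately one-third"), the number of trials and the learning rate are all hypothetical choices made for the example. In a typical fMRI analysis such values would then be placed at the corresponding event onsets and convolved with a haemodynamic response function; that step is omitted here.

```python
def build_schedule(n_per_cs, reinforce_every=3):
    """Hypothetical acquisition-then-reversal trial list for faces "A" and "B".

    During acquisition A is the CS+ (every third A trial is shocked) and B the
    CS-; during reversal the contingencies swap. Trials simply alternate A/B.
    """
    acquisition, reversal = [], []
    for i in range(n_per_cs):
        shock = i % reinforce_every == reinforce_every - 1
        acquisition += [("A", shock), ("B", False)]
        reversal += [("A", False), ("B", shock)]
    return acquisition + reversal


def pe_regressor(trials, alpha=0.2):
    """Trial-level delta-rule PEs; each CS keeps its own shock expectation."""
    values = {}
    pes = []
    for cs, shocked in trials:
        v = values.get(cs, 0.0)
        delta = (1.0 if shocked else 0.0) - v   # PE: outcome minus expectation
        pes.append(delta)
        values[cs] = v + alpha * delta
    return pes, values


trials = build_schedule(30)
pes, final_values = pe_regressor(trials)
print(final_values)
```

In this toy schedule the former CS+ produces negative PEs once it stops being reinforced, the new CS+ produces a large positive PE on its first shock, and by the end of reversal the expectation for the new CS+ exceeds that for the old one, qualitatively resembling the switch in responding described above.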

In the present study, we further characterize the role of the striatum during aversive learning by investigating PE signals during learning with a secondary aversive reinforcer, namely, monetary loss. The acquisition phase was similar to the one described above. Also similar was the use of the TD learning rule to assess the neural basis of PEs during aversive conditioning.

2. General methods

(a) Participants

Fourteen volunteers participated in this study. Although behavioural data from all 14 participants are presented, three participants were excluded from the neuroimaging analysis because of excessive motion. The participants responded to posted advertisements, and all gave informed consent. The experiments were approved by the University Committee on Activities Involving Human Subjects.

(b) Procedure

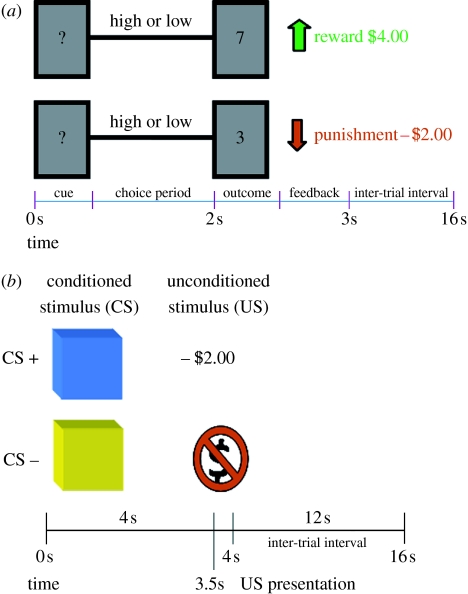

The experiment consisted of two parts (figure 2): a gambling session (adapted from Delgado et al. 2000) and an aversive conditioning session (adapted from Delgado et al. 2006). The goal of the gambling session was to endow the participants with a monetary sum that would be at risk during the aversive conditioning session.

Figure 2.

Experimental paradigm. The experimental task consisted of two parts: (a) a gambling session to allow participants to earn a monetary endowment (adapted from Delgado et al. 2000) and (b) an aversive conditioning paradigm where presentation of the unconditioned stimulus (−$2.00) led to monetary detractions from the total sum earned during the gambling session (adapted from Delgado et al. 2006).

In the gambling session, the participants were told they were playing a ‘card-guessing’ game in which the objective was to determine whether the value of a given card was higher or lower than the number 5 (figure 2a). During each trial, a question mark was presented in the centre of the ‘card’, indicating that the participants had 2 s to make a response. Using an MRI-compatible response unit, the participants made a 50/50 choice regarding the potential outcome of the trial. The outcome was either higher (6, 7, 8, 9) or lower (1, 2, 3, 4) than 5. The outcome was then displayed for 500 ms, followed by a feedback arrow (indicating positive or negative feedback) for another 500 ms and an inter-trial interval of 13 s before the onset of the next trial. A correct guess led to the display of a green upward arrow indicating a monetary reward of $4.00 (reward trials), while an incorrect guess led to the display of a red downward arrow indicating a monetary loss of −$2.00 (punishment trials). In some trials, irrespective of the guess, the outcome was ‘5’ and led to the display of a blue dash, resulting in no monetary gain or loss (neutral trials). Each trial lasted 16 s, and there were three blocks of 18 trials each (total trials: 21 reward, 21 punishment and 12 neutral). Unbeknownst to the participants, the outcomes were predetermined, ensuring a 50 per cent reinforcement rate and a fixed profit across participants. At the end of the gambling session, a screen appeared congratulating the participant for earning $42.00 and informing them that the second part was about to start.
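The trial timing and payoff arithmetic of the gambling session can be checked with a few lines of Python. This is only a consistency check on the numbers reported in the text (no participant data are involved); the constant and function names are illustrative, not part of the original task code.

```python
# Consistency check for the card-guessing session, using only the timings,
# payoffs and trial counts stated in the text.
RESPONSE_S, OUTCOME_S, FEEDBACK_S, ITI_S = 2.0, 0.5, 0.5, 13.0
PAYOFF = {"reward": 4.00, "punishment": -2.00, "neutral": 0.00}
TRIAL_COUNTS = {"reward": 21, "punishment": 21, "neutral": 12}
N_BLOCKS, TRIALS_PER_BLOCK = 3, 18


def trial_duration_s() -> float:
    """Response window + outcome display + feedback arrow + inter-trial interval."""
    return RESPONSE_S + OUTCOME_S + FEEDBACK_S + ITI_S


def session_earnings(counts: dict, payoff: dict) -> float:
    """Net monetary outcome summed over all trial types."""
    return sum(counts[kind] * payoff[kind] for kind in counts)


def reward_rate(counts: dict) -> float:
    """Fraction of non-neutral trials on which the guess was 'correct'."""
    return counts["reward"] / (counts["reward"] + counts["punishment"])


assert sum(TRIAL_COUNTS.values()) == N_BLOCKS * TRIALS_PER_BLOCK  # 54 trials
print(trial_duration_s())                      # 16.0
print(session_earnings(TRIAL_COUNTS, PAYOFF))  # 42.0
print(reward_rate(TRIAL_COUNTS))               # 0.5
```

The three printed values reproduce the 16 s trial length, the fixed $42.00 endowment and the 50 per cent reinforcement rate stated above.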

In the second part of the experiment, the participants were exposed to an aversive conditioning session with monetary reinforcers (figure 2b). The participants were presented with blue and yellow squares (the conditioned stimuli, CSs) for 4 s, followed by a 12 s inter-trial interval. The unconditioned stimulus (US) was a loss of money, depicted by the symbol −$2.00 written in red ink and projected inside the square for 500 ms. In this partial reinforcement design, one coloured square (e.g. blue) was paired with the monetary loss (CS+) on approximately 36 per cent of the trials, while the other coloured square (e.g. yellow) was never paired with the US (CS−). The participants were instructed that they would see different coloured squares and, occasionally, an additional −$2.00 sign indicating that $2.00 would be deducted from the total accumulated during the gambling session. They were not told about the contingencies. There were 54 trials in total, divided evenly into three blocks of 18 trials: 21 CS− trials and 33 CS+ trials, of which 12 were paired with the US.
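A partial-reinforcement schedule with the trial counts given above can be sketched as follows. The shuffling procedure, random seed and function names are hypothetical, because the text does not describe how the trial order was generated; real designs often add ordering constraints (for example, balancing trial types within blocks) that this sketch omits. The summary also checks that 12 of 33 CS+ trials corresponds to a reinforcement rate of about 36 per cent and that 12 US presentations remove $24.00 of the $42.00 endowment.

```python
import random
from collections import Counter

# Hypothetical schedule builder matching the counts in the text:
# 21 CS- trials and 33 CS+ trials, 12 of which end with the -$2.00 US.
N_CS_MINUS, N_CS_PLUS, N_PAIRED = 21, 33, 12
BLOCK_SIZE = 18
US_LOSS = 2.00


def make_schedule(seed: int = 0):
    """Return a list of blocks; each trial is a (cs_label, us_delivered) tuple.

    Assumption: trials are simply shuffled with a fixed seed and cut into
    consecutive blocks of 18; no within-block balancing is enforced.
    """
    trials = ([("CS-", False)] * N_CS_MINUS
              + [("CS+", True)] * N_PAIRED
              + [("CS+", False)] * (N_CS_PLUS - N_PAIRED))
    random.Random(seed).shuffle(trials)
    return [trials[i:i + BLOCK_SIZE] for i in range(0, len(trials), BLOCK_SIZE)]


def summarize(blocks, endowment: float = 42.00) -> dict:
    """Reinforcement rate of the CS+ and the money remaining after the session."""
    counts = Counter(trial for block in blocks for trial in block)
    paired = counts[("CS+", True)]
    cs_plus = paired + counts[("CS+", False)]
    total_loss = paired * US_LOSS
    return {
        "n_blocks": len(blocks),
        "reinforcement_rate": paired / cs_plus,  # 12 / 33, about 0.36
        "total_loss": total_loss,                # 12 x $2.00
        "remaining": endowment - total_loss,     # money left from the endowment
    }


summary = summarize(make_schedule(seed=1))
print(summary)
```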

At the end of the aversive conditioning session, the accumulated monetary penalties totalled $24.00 (12 US presentations × $2.00). The participants then performed a final round of the gambling game, which ensured that each participant was paid $60.00 in compensation following debriefing.

(c) Physiological set-up, assessment and behavioural analysis

Skin conductance responses (SCRs) were acquired from the middle phalanges of the second and third fingers of the participant's left hand using a BIOPAC Systems skin conductance module. Data were acquired with shielded Ag–AgCl electrodes grounded through an RF filter panel, and SCR waveforms were analysed with AcqKnowledge software. The level of SCR was assessed as the base-to-peak difference for an increase in the 0.5–4.5 s window following the onset of a CS, the blue or yellow square (see LaBar et al. 1995). A minimum response criterion of 0.02 μS was used, with lower responses scored as 0. The responses were square-root transformed prior to statistical analysis to reduce skewness (LaBar et al. 1998). SCRs from all three blocks of aversive conditioning were then averaged per participant and per trial type. For trials in which the CS+ was paired with the US (−$2.00), the time of CS+ presentation was separated from the time of US presentation so that only the differential SCR to the CS+ itself was included. Two-tailed paired t-tests comparing CS+ and CS− trials were used to demonstrate effective conditioning.
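The SCR scoring steps above (base-to-peak amplitude in a fixed window, a 0.02 μS floor, a square-root transform, then a paired t statistic across participants) can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the AcqKnowledge procedure: the trace is assumed to be a list of samples in μS starting at CS onset and covering at least 4.5 s, and the "base" is assumed to be the lowest sample preceding the peak within the window.

```python
import math
import statistics

WINDOW_S = (0.5, 4.5)   # scoring window after CS onset (from the text)
MIN_RESPONSE_US = 0.02  # minimum response criterion in microsiemens (from the text)


def score_scr(trace, fs_hz):
    """Square-root-transformed base-to-peak SCR for one CS presentation.

    Assumptions: `trace` starts at CS onset, is sampled at `fs_hz`, and covers
    the whole window; the base is the minimum sample before the window peak.
    """
    lo = int(round(WINDOW_S[0] * fs_hz))
    hi = int(round(WINDOW_S[1] * fs_hz))
    window = trace[lo:hi + 1]
    peak_idx = max(range(len(window)), key=window.__getitem__)
    base = min(window[:peak_idx + 1])
    amplitude = window[peak_idx] - base
    if amplitude < MIN_RESPONSE_US:
        amplitude = 0.0               # responses below criterion are scored as 0
    return math.sqrt(amplitude)       # square-root transform to reduce skewness


def paired_t(x, y):
    """Paired t statistic and degrees of freedom for per-participant means."""
    diffs = [a - b for a, b in zip(x, y)]
    n = len(diffs)
    t = statistics.mean(diffs) / (statistics.stdev(diffs) / math.sqrt(n))
    return t, n - 1
```

In use, `score_scr` would be applied to every trial, the results averaged per participant and trial type, and the CS+ and CS− means passed to `paired_t`; a two-tailed p-value would then come from the t distribution with n − 1 degrees of freedom (for example via a statistics package).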

(d) fMRI acquisition and analysis

A 3T Siemens Allegra head-only scanner and a Siemens standard head coil were used for data acquisition at NYU's Center for Brain Imaging. Anatomical images were acquired using a T1-weighted protocol (256×256 matrix, 176 1-mm sagittal slices). Functional images were acquired using a single-shot gradient echo planar imaging sequence (TR=2000 ms, TE=20 ms, FOV=192 mm, flip angle=75°, bandwidth=4340 Hz per pixel and echo spacing=0.29 ms). Thirty-five contiguous oblique-axial slices (3×3×3 mm voxels) parallel to the AC–PC line were obtained. Imaging data were analysed using Brain Voyager software (Brain Innovation, Maastricht, The Netherlands). The data were first corrected for motion (threshold of 2 mm or less) and for slice scan time using sinc interpolation. Spatial smoothing was then performed using a three-dimensional Gaussian filter (4 mm FWHM), along with voxel-wise linear detrending and high-pass filtering (three cycles per time course). Structural and functional data of each participant were then transformed to standard Talairach stereotaxic space (Talairach & Tournoux 1988).
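The smoothing kernel is specified by its full width at half maximum (FWHM); for a Gaussian, the standard deviation is σ = FWHM / (2√(2 ln 2)) ≈ FWHM / 2.355. The sketch below applies this to the 4 mm kernel and samples a normalized one-dimensional kernel on the 3 mm functional grid. It assumes a separable Gaussian truncated at ±2 voxels; Brain Voyager's own kernel truncation and grid may differ, and the function names are illustrative.

```python
import math

FWHM_MM = 4.0   # smoothing kernel width (from the text)
VOXEL_MM = 3.0  # functional voxel size (from the text)


def fwhm_to_sigma(fwhm: float) -> float:
    """Standard deviation of a Gaussian with the given full width at half maximum."""
    return fwhm / (2.0 * math.sqrt(2.0 * math.log(2.0)))


def gaussian_kernel_1d(fwhm_mm: float, voxel_mm: float, radius: int = 2):
    """Normalized 1-D Gaussian weights at integer voxel offsets -radius..radius.

    Assumption: the 3-D filter is separable, so the same 1-D kernel would be
    applied along x, y and z in turn.
    """
    sigma_vox = fwhm_to_sigma(fwhm_mm) / voxel_mm
    weights = [math.exp(-0.5 * (k / sigma_vox) ** 2) for k in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


sigma_mm = fwhm_to_sigma(FWHM_MM)  # about 1.70 mm, i.e. about 0.57 voxels
kernel = gaussian_kernel_1d(FWHM_MM, VOXEL_MM)
print(round(sigma_mm, 3), [round(w, 4) for w in kernel])
```

Because σ is smaller than one voxel here, most of the weight stays on the centre voxel and its immediate neighbours, which is why a 4 mm kernel counts as light smoothing for 3 mm data.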

A random effects analysis was performed on the functional data from 11 participants using a general linear model (GLM). There were 12 regressors: 3 at CS onset (CS−, CS+ and CS+-US, the last denoting CS+ trials paired with the US); 2 at outcome onset (US or no US); 1 PE regressor; and 6 motion parameter regressors of no interest (translation and rotation in the x, y and z dimensions). The main statistical map of interest (correlation with PE) was thresholded at p<0.001 with a cluster threshold of 10 contiguous voxels.
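A parametric regressor such as the PE regressor is typically built by placing each trial's PE value as a stick at its event onset, convolving with a haemodynamic response function (HRF) and sampling once per TR. The sketch below illustrates that idea in plain Python. The double-gamma HRF shape (peak near 5 s, undershoot near 15 s, undershoot ratio 1/6) follows the common SPM-style canonical form and is an assumption; it is not Brain Voyager's default HRF, and the function names are hypothetical.

```python
import math

TR_S = 2.0   # repetition time (from the text)
DT_S = 0.1   # fine time grid used for convolution (assumption)


def gamma_pdf(t: float, shape: float) -> float:
    """Gamma density with unit scale; zero for t <= 0."""
    if t <= 0:
        return 0.0
    return t ** (shape - 1) * math.exp(-t) / math.gamma(shape)


def double_gamma_hrf(t: float) -> float:
    """Assumed canonical-style HRF: response gamma minus a scaled undershoot gamma."""
    return gamma_pdf(t, 6.0) - gamma_pdf(t, 16.0) / 6.0


def pe_regressor(onsets_s, pe_values, n_volumes, hrf_len_s=32.0):
    """Convolve PE-weighted sticks with the HRF and sample once per TR."""
    n_fine = int(round(n_volumes * TR_S / DT_S))
    sticks = [0.0] * n_fine
    for onset, pe in zip(onsets_s, pe_values):
        idx = int(round(onset / DT_S))
        if 0 <= idx < n_fine:
            sticks[idx] += pe
    hrf = [double_gamma_hrf(k * DT_S) for k in range(int(round(hrf_len_s / DT_S)))]
    fine = [0.0] * n_fine
    for i, amp in enumerate(sticks):
        if amp == 0.0:
            continue
        for k, h in enumerate(hrf):
            if i + k >= n_fine:
                break
            fine[i + k] += amp * h
    step = int(round(TR_S / DT_S))
    return [fine[v * step] for v in range(n_volumes)]
```

Because the regressor is linear in the PE values, a negative PE produces a mirror-image (negative) predicted response, which is what allows a single GLM weight to capture correlation with signed PEs.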

The PE regressor that provided the main analysis of interest was based on traditional TD learning models and is the same as the one used in the aversive conditioning study with primary reinforcers described earlier (Schiller et al. in press). In TD learning, the expectation of the true state value $V(t)$ at time $t$ within a trial is the dot product of the weights $w_i$ and indicator functions $x_i(t)$, where $x_i(t)$ equals 1 if conditioned stimulus $i$ is present at time $t$ and 0 if it is absent,

$$V(t) = \sum_i w_i\, x_i(t). \tag{2.1}$$

Learning proceeds by comparing, at each time point $t$ within a trial, the expected value at time $t+1$ with that at time $t$; together with any reinforcement received, this comparison yields a PE,

$$\delta(t) = r(t) + \gamma\, V(t+1) - V(t), \tag{2.2}$$

where $r(t)$ is the reward harvested at time $t$. As is usual in aversive conditioning models, we treat the aversive stimulus as the ‘reward’ and assign the aversive reinforcer a positive value. The discount factor $\gamma$ weights reinforcement received sooner more heavily than reinforcement received later; typically $0<\gamma<1$, and in the results reported here $\gamma=0.99$. The weights are then updated from trial to trial using a Bellman rule,

$$w_i \leftarrow w_i + \lambda \sum_t x_i(t)\, \delta(t), \tag{2.3}$$

where $\lambda$ is the learning rate, set to 0.2 in our study. We assigned the CS and the outcome to adjacent time points within each trial and set the initial weight of each CS to 0.4, as used in a variety of aversive conditioning paradigms. With these parameters ($\lambda=0.2$, $\gamma=0.99$ and initial $w_i=0.4$), we calculated PEs using equations (2.1)–(2.3) and generated the regressors for the fMRI data analysis.
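The trial-wise TD computation can be sketched in a few lines of Python using the parameters stated above (λ = 0.2, γ = 0.99, initial weights 0.4). Several details are assumptions, not taken from the text: each trial is modelled as a zero-valued pre-CS state, a CS time point and an outcome time point with no value; only the presented CS has $x_i(t)=1$, and only at the CS time point; the US is coded as r = 1 (omission r = 0) and is credited to the CS-to-outcome step. The function name is hypothetical.

```python
LEARNING_RATE = 0.2   # lambda (from the text)
DISCOUNT = 0.99       # gamma (from the text)
INITIAL_WEIGHT = 0.4  # initial w_i for every CS (from the text)
V_PRE_CS = 0.0        # assumption: value of the state before CS onset
V_OUTCOME = 0.0       # assumption: value of the state after the outcome


def td_prediction_errors(trials, lr=LEARNING_RATE, gamma=DISCOUNT, w0=INITIAL_WEIGHT):
    """trials: sequence of (cs_label, us_delivered) pairs in presentation order.

    Returns a list of (pe_at_cs_onset, pe_at_outcome) per trial and the final
    weight of each CS.
    """
    weights = {}
    pes = []
    for cs, us_delivered in trials:
        w = weights.setdefault(cs, w0)
        v_cs = w                                      # eq. (2.1): one active CS
        pe_cs = 0.0 + gamma * v_cs - V_PRE_CS         # eq. (2.2): pre-CS -> CS, r = 0
        r = 1.0 if us_delivered else 0.0              # assumed coding of the US
        pe_outcome = r + gamma * V_OUTCOME - v_cs     # eq. (2.2): CS -> outcome
        weights[cs] = w + lr * pe_outcome             # eq. (2.3): x_cs(t) = 1 only at the CS step
        pes.append((pe_cs, pe_outcome))
    return pes, weights


pes, final_w = td_prediction_errors([("CS+", True), ("CS+", False), ("CS-", False)])
print(pes)
print(final_w)
```

Under these assumptions, the outcome PE is positive when the US is delivered and negative when it is omitted, while the CS-onset PE tracks the current learned weight; the two columns would then be placed at CS and outcome onsets when building the regressor.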

3. Results

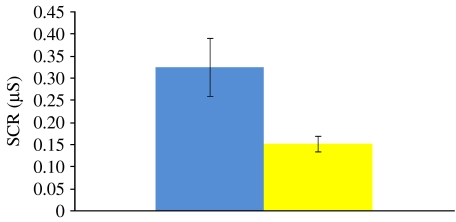

(a) Physiological assessment of aversive conditioning

Analysis of the SCR data confirmed the success of aversive conditioning with monetary reinforcers (figure 3). The participants' SCR to CS+ trials (M=0.33, s.d.=0.25) was significantly higher than to CS− trials (M=0.15, s.d.=0.07) over the course of the experiment (t(13)=3.48, p<0.005). Conditioning levels were sustained across the three blocks: the CR (the difference between CS+ and CS− trials) did not differ between blocks 1 and 2 (t(12)=1.53, p=0.15) or between blocks 1 and 3 (t(12)=0.97, p=0.35); one participant who showed no responses during block 3 was excluded from these comparisons. Finally, excluding the three participants removed from the imaging analysis for excessive motion did not affect the main comparison of CS+ and CS− trials (t(10)=5.49, p<0.0005).

Figure 3.

SCRs during the aversive conditioning: SCR data suggest successful aversive conditioning with a secondary reinforcer such as monetary losses. Blue bar, CS+; yellow bar, CS−.

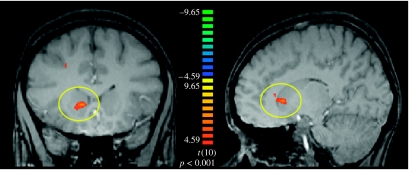

(b) Neuroimaging results

The primary contrast of interest was the correlation with PE described above. A statistical parametric map contrasting the PE regressor with fixation provided the main analysis (p<0.001, cluster threshold of 10 contiguous voxels). This contrast identified five regions (table 1), including the medial prefrontal cortex, the midbrain and a region of the anterior striatum in the head of the caudate nucleus (figure 4). The observation of PE signals in the striatum during aversive conditioning with a secondary reinforcer is consistent with previous accounts of striatal involvement in PEs irrespective of learning context (appetitive or aversive).
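The combination of a voxel-wise threshold (p<0.001) with a cluster-extent threshold (10 contiguous voxels) can be illustrated with a small connected-components sketch. Here the input is assumed to be the set of integer (x, y, z) voxel coordinates that already pass the voxel-wise threshold, and "contiguous" is assumed to mean sharing a face (6-connectivity); analysis packages differ in this choice, and the function names are hypothetical.

```python
from collections import deque
from itertools import product

MIN_CLUSTER = 10  # cluster-extent threshold from the text
# Assumption: face-sharing (6-connected) neighbours define contiguity.
NEIGHBOURS = [d for d in product((-1, 0, 1), repeat=3) if sum(map(abs, d)) == 1]


def clusters(voxels):
    """Group suprathreshold voxel coordinates into connected clusters (BFS)."""
    remaining = set(voxels)
    found = []
    while remaining:
        seed = remaining.pop()
        cluster, queue = [seed], deque([seed])
        while queue:
            x, y, z = queue.popleft()
            for dx, dy, dz in NEIGHBOURS:
                nb = (x + dx, y + dy, z + dz)
                if nb in remaining:
                    remaining.remove(nb)
                    cluster.append(nb)
                    queue.append(nb)
        found.append(cluster)
    return found


def cluster_threshold(voxels, min_size=MIN_CLUSTER):
    """Keep only clusters containing at least `min_size` voxels."""
    return [c for c in clusters(voxels) if len(c) >= min_size]
```

The extent threshold discards isolated suprathreshold voxels, which are more likely to be false positives than spatially extended activations.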

Table 1.

PE during aversive conditioning.

| region of activation | laterality | Talairach x | Talairach y | Talairach z | no. of voxels |
|---|---|---|---|---|---|
| medial prefrontal cortex (BA 9/24) | right | 24 | 10 | 35 | 337 |
| cingulate gyrus (BA 24/32) | left | −13 | 37 | 14 | 869 |
| cingulate gyrus (BA 24/32) | right | 16 | 30 | 8 | 280 |
| caudate nucleus | right | 13 | 20 | 4 | 329 |
| midbrain | right | 6 | −24 | 4 | 334 |
| medial temporal lobe (BA 41/21) | left | −40 | −31 | 2 | 524 |

Figure 4.

Activation of striatum ROI defined by a contrast of PE regressor and fixation. The ROI is located in the right hemisphere, in the anterior portion of the head of the caudate nucleus (x, y, z=13, 20, 4).

4. Discussion

The striatum, although commonly cited for its role in reward processing, also appears to play a role in coding aversive signals as they relate to affective learning, PEs and decision making. In the present study, we used a classical fear conditioning paradigm and demonstrated that BOLD signals in the striatum, particularly the head of the caudate nucleus, are correlated with prediction errors derived from a TD learning model, similar to what has been previously reported in appetitive learning tasks (e.g. O'Doherty et al. 2003). This role for the striatum in coding aversive PEs was observed when conditioned fear was acquired with monetary loss, a secondary reinforcer. These results complement our previously described study, which used a similar PE model and paradigm with a mild shock, a primary reinforcer (Schiller et al. in press). Together, these and other results point to a general role for the striatum in coding PEs across a broad range of learning paradigms and reinforcer types.

Our present results, combined with those of Schiller et al. (in press), demonstrate aversive PE-related signals with both primary and secondary reinforcers and suggest a common role for the striatum. There were, however, some differences between the two studies in both results and design. In the primary reinforcer study, the region of the striatum correlated with PEs was bilateral and located in a more posterior part of the caudate nucleus (right: x, y, z=9, 5, 8; Schiller et al. in press). In the current study with a secondary reinforcer, the correlated striatal region was unilateral (right hemisphere) and located in a more anterior portion of the caudate nucleus (x, y, z=13, 20, 4). These anatomical distinctions raise the possibility that different regions of the striatum code aversive prediction errors for primary and secondary reinforcers, similar to the division within the striatum that has been suggested when comparing appetitive and aversive PEs (Seymour et al. 2007). One potential explanation for the more dorsal striatal ROIs identified in the aversive experiments is that the participants may be contemplating ways of avoiding the potentially negative outcome, engaging dorsal striatal regions previously linked to passive or active avoidance (Allen & Davison 1973; White & Salinas 2003). However, given the nature of neuroimaging data acquisition and analysis techniques, such a conclusion would be premature, as would any conclusion about the parcellation of function within subdivisions of the striatum based on the paradigms discussed. Moreover, although the studies were comparable in design, they differed in ways other than the type of reinforcer, and these differences complicate a careful anatomical comparison. They include the timing and number of trials, the experimental context (e.g. gambling prior to conditioning, reversal learning) and potential individual differences across participants.

Future studies will need to use within-subject designs (e.g. Delgado et al. 2006) with similar paradigms and instructions, and perhaps high-resolution imaging techniques, to fully capture any differences in the coding of aversive PE-related signals for primary and secondary reinforcers within different subdivisions of the striatum.

Interestingly, the amygdala, the region most often implicated in studies of classical fear conditioning, did not show BOLD responses correlated with PEs. In our primary reinforcer study described above (Schiller et al. in press), the amygdala showed anticipatory BOLD responses similar to the pattern observed in the striatum; however, only striatal activation correlated with PEs. Although striatal signals have been shown to correlate with PEs in a range of neuroimaging studies (see above), very few studies have reported PE-related signals in the amygdala (Yacubian et al. 2006). A recent electrophysiological study in monkeys found that amygdala responses did not differentiate PE-related signals from other signals such as CS value, stimulus valence and US responses (Belova et al. 2007). While the amygdala clearly plays a critical role in aversive learning, the computations underlying the representation of value by amygdala neurons may not be fully captured by traditional TD learning models. Alternatively, models that vary other parameters (e.g. stimulus intensity) or take task context into account (e.g. avoidance learning) may prove more sensitive to amygdala activity.

Both the striatum and the amygdala are intrinsically involved in affective learning, but their contributions may differ. A large body of evidence implicates both structures in general appetitive and aversive learning (for reviews see O'Doherty (2004) and Phelps & LeDoux (2005)), with variations due to task context and the type or intensity of stimuli (Anderson et al. 2003). Thus, differences between the striatum and amygdala may emerge in a direct comparison of primary and secondary reinforcers. Further, as discussed above, the present results suggest that the two structures may differ in the processing of PEs. Future studies may focus on similarities and differences between these two structures using matched paradigms and within-subject comparisons, varying valence (appetitive and aversive), intensity (primary and secondary reinforcer) and type of learning (classical and instrumental), to fully understand how the two structures interact during affective learning.

Neuroeconomic studies of decision making have emphasized reward learning as critical in the representation of value driving choice behaviour. However, it is readily apparent that punishment and aversive learning are also significant factors in motivating decisions and actions. As the emerging field of Neuroeconomics progresses, understanding the complex relationship between appetitive and aversive reinforcement and the computational processes underlying the interacting and complementary roles of the amygdala and striatum will become increasingly important in the development of comprehensive models of decision making.

Acknowledgments

The experiments were approved by the University Committee on Activities Involving Human Subjects.

This study was funded by a Seaver Foundation grant to NYU's Center for Brain Imaging and a James S. McDonnell Foundation grant to E.A.P. The authors wish to acknowledge Christa Labouliere for assistance with data collection.

Footnotes

One contribution of 10 to a Theme Issue ‘Neuroeconomics’.

References

- Abercrombie E.D, Keefe K.A, DiFrischia D.S, Zigmond M.J. Differential effect of stress on in vivo dopamine release in striatum, nucleus accumbens, and medial frontal cortex. J. Neurochem. 1989;52:1655–1658. doi: 10.1111/j.1471-4159.1989.tb09224.x. doi:10.1111/j.1471-4159.1989.tb09224.x [DOI] [PubMed] [Google Scholar]

- Alexander G.E, Crutcher M.D. Functional architecture of basal ganglia circuits: neural substrates of parallel processing. Trends Neurosci. 1990;13:266–271. doi: 10.1016/0166-2236(90)90107-l. doi:10.1016/0166-2236(90)90107-L [DOI] [PubMed] [Google Scholar]

- Alexander G.E, DeLong M.R, Strick P.L. Parallel organization of functionally segregated circuits linking basal ganglia and cortex. Annu. Rev. Neurosci. 1986;9:357–381. doi: 10.1146/annurev.ne.09.030186.002041. doi:10.1146/annurev.ne.09.030186.002041 [DOI] [PubMed] [Google Scholar]

- Allen J.D, Davison C.S. Effects of caudate lesions on signaled and nonsignaled Sidman avoidance in the rat. Behav. Biol. 1973;8:239–250. doi: 10.1016/s0091-6773(73)80023-9. doi:10.1016/S0091-6773(73)80023-9 [DOI] [PubMed] [Google Scholar]

- Amorapanth P, LeDoux J.E, Nader K. Different lateral amygdala outputs mediate reactions and actions elicited by a fear-arousing stimulus. Nat. Neurosci. 2000;3:74–79. doi: 10.1038/71145. doi:10.1038/71145 [DOI] [PubMed] [Google Scholar]

- Anderson A.K, Christoff K, Stappen I, Panitz D, Ghahremani D.G, Glover G, Gabrieli J.D, Sobel N. Dissociated neural representations of intensity and valence in human olfaction. Nat. Neurosci. 2003;6:196–202. doi: 10.1038/nn1001. doi:10.1038/nn1001 [DOI] [PubMed] [Google Scholar]

- Apicella P, Ljungberg T, Scarnati E, Schultz W. Responses to reward in monkey dorsal and ventral striatum. Exp. Brain Res. 1991;85:491–500. doi: 10.1007/BF00231732. doi:10.1007/BF00231732 [DOI] [PubMed] [Google Scholar]

- Balleine B.W, Dickinson A. Goal-directed instrumental action: contingency and incentive learning and their cortical substrates. Neuropharmacology. 1998;37:407–419. doi: 10.1016/s0028-3908(98)00033-1. doi:10.1016/S0028-3908(98)00033-1 [DOI] [PubMed] [Google Scholar]

- Balleine B.W, Delgado M.R, Hikosaka O. The role of the dorsal striatum in reward and decision-making. J. Neurosci. 2007;27:8161–8165. doi: 10.1523/JNEUROSCI.1554-07.2007. doi:10.1523/JNEUROSCI.1554-07.2007 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Baumgartner T, Heinrichs M, Vonlanthen A, Fischbacher U, Fehr E. Oxytocin shapes the neural circuitry of trust and trust adaptation in humans. Neuron. 2008;58:639–650. doi: 10.1016/j.neuron.2008.04.009. doi:10.1016/j.neuron.2008.04.009 [DOI] [PubMed] [Google Scholar]

- Bayer H.M, Glimcher P.W. Midbrain dopamine neurons encode a quantitative reward prediction error signal. Neuron. 2005;47:129–141. doi: 10.1016/j.neuron.2005.05.020. doi:10.1016/j.neuron.2005.05.020 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Becerra L, Breiter H.C, Wise R, Gonzalez R.G, Borsook D. Reward circuitry activation by noxious thermal stimuli. Neuron. 2001;32:927–946. doi: 10.1016/s0896-6273(01)00533-5. doi:10.1016/S0896-6273(01)00533-5 [DOI] [PubMed] [Google Scholar]

- Bechara A, Tranel D, Damasio H, Adolphs R, Rockland C, Damasio A.R. Double dissociation of conditioning and declarative knowledge relative to the amygdala and hippocampus in humans. Science. 1995;269:1115–1118. doi: 10.1126/science.7652558. doi:10.1126/science.7652558 [DOI] [PubMed] [Google Scholar]

- Belova M.A, Paton J.J, Morrison S.E, Salzman C.D. Expectation modulates neural responses to pleasant and aversive stimuli in primate amygdala. Neuron. 2007;55:970–984. doi: 10.1016/j.neuron.2007.08.004. doi:10.1016/j.neuron.2007.08.004 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bray S, O'Doherty J. Neural coding of reward-prediction error signals during classical conditioning with attractive faces. J. Neurophysiol. 2007;97:3036–3045. doi: 10.1152/jn.01211.2006. doi:10.1152/jn.01211.2006 [DOI] [PubMed] [Google Scholar]

- Breiter H.C, Aharon I, Kahneman D, Dale A, Shizgal P. Functional imaging of neural responses to expectancy and experience of monetary gains and losses. Neuron. 2001;30:619–639. doi: 10.1016/s0896-6273(01)00303-8. doi:10.1016/S0896-6273(01)00303-8 [DOI] [PubMed] [Google Scholar]

- Buchel C, Morris J, Dolan R.J, Friston K.J. Brain systems mediating aversive conditioning: an event-related fMRI study. Neuron. 1998;20:947–957. doi: 10.1016/s0896-6273(00)80476-6. doi:10.1016/S0896-6273(00)80476-6 [DOI] [PubMed] [Google Scholar]

- Buchel C, Dolan R.J, Armony J.L, Friston K.J. Amygdala-hippocampal involvement in human aversive trace conditioning revealed through event-related functional magnetic resonance imaging. J. Neurosci. 1999;19:10 869–10 876. doi: 10.1523/JNEUROSCI.19-24-10869.1999. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Camerer C, Loewenstein G, Prelec D. Neuroeconomics: how neuroscience can inform economics. J. Econ. Lit. 2005;43:9–64. doi:10.1257/0022051053737843 [Google Scholar]

- Cardinal R.N, Parkinson J.A, Hall J, Everitt B.J. Emotion and motivation: the role of the amygdala, ventral striatum, and prefrontal cortex. Neurosci. Biobehav. Rev. 2002;26:321–352. doi: 10.1016/s0149-7634(02)00007-6. doi:10.1016/S0149-7634(02)00007-6 [DOI] [PubMed] [Google Scholar]

- Cooper B.R, Howard J.L, Grant L.D, Smith R.D, Breese G.R. Alteration of avoidance and ingestive behavior after destruction of central catecholamine pathways with 6-hydroxydopamine. Pharmacol. Biochem. Behav. 1974;2:639–649. doi: 10.1016/0091-3057(74)90033-1. doi:10.1016/0091-3057(74)90033-1 [DOI] [PubMed] [Google Scholar]

- Corbit L.H, Balleine B.W. Double dissociation of basolateral and central amygdala lesions on the general and outcome-specific forms of Pavlovian–instrumental transfer. J. Neurosci. 2005;25:962–970. doi: 10.1523/JNEUROSCI.4507-04.2005. doi:10.1523/JNEUROSCI.4507-04.2005 [DOI] [PMC free article] [PubMed] [Google Scholar]

- D'Ardenne K, McClure S.M, Nystrom L.E, Cohen J.D. BOLD responses reflecting dopaminergic signals in the human ventral tegmental area. Science. 2008;319:1264–1267. doi: 10.1126/science.1150605. doi:10.1126/science.1150605 [DOI] [PubMed] [Google Scholar]

- Davis M. Anatomic and physiologic substrates of emotion in an animal model. J. Clin. Neurophysiol. 1998;15:378–387. doi: 10.1097/00004691-199809000-00002. doi:10.1097/00004691-199809000-00002 [DOI] [PubMed] [Google Scholar]

- Daw N.D, Kakade S, Dayan P. Opponent interactions between serotonin and dopamine. Neural Netw. 2002;15:603–616. doi: 10.1016/s0893-6080(02)00052-7. doi:10.1016/S0893-6080(02)00052-7 [DOI] [PubMed] [Google Scholar]

- Delgado M.R. Reward-related responses in the human striatum. Ann. NY Acad. Sci. 2007;1104:70–88. doi: 10.1196/annals.1390.002. doi:10.1196/annals.1390.002 [DOI] [PubMed] [Google Scholar]

- Delgado M.R, Nystrom L.E, Fissell C, Noll D.C, Fiez J.A. Tracking the hemodynamic responses to reward and punishment in the striatum. J. Neurophysiol. 2000;84:3072–3077. doi: 10.1152/jn.2000.84.6.3072. [DOI] [PubMed] [Google Scholar]

- Delgado M.R, Frank R.H, Phelps E.A. Perceptions of moral character modulate the neural systems of reward during the trust game. Nat. Neurosci. 2005;8:1611–1618. doi: 10.1038/nn1575. doi:10.1038/nn1575 [DOI] [PubMed] [Google Scholar]

- Delgado M.R, Labouliere C.D, Phelps E.A. Fear of losing money? Aversive conditioning with secondary reinforcers. Soc. Cogn. Affect. Neurosci. 2006;1:250–259. doi: 10.1093/scan/nsl025. doi:10.1093/scan/nsl025 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Delgado M.R, Gillis M.M, Phelps E.A. Regulating the expectation of reward via cognitive strategies. Nat. Neurosci. 2008;11:880–881. doi: 10.1038/nn.2141. doi:10.1038/nn.2141 [DOI] [PMC free article] [PubMed] [Google Scholar]

- De Martino B, Kumaran D, Seymour B, Dolan R.J. Frames, biases, and rational decision-making in the human brain. Science. 2006;313:684–687. doi: 10.1126/science.1128356. doi:10.1126/science.1128356 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Deutch A.Y, Cameron D.S. Pharmacological characterization of dopamine systems in the nucleus accumbens core and shell. Neuroscience. 1992;46:49–56. doi: 10.1016/0306-4522(92)90007-o. doi:10.1016/0306-4522(92)90007-O [DOI] [PubMed] [Google Scholar]

- Di Chiara G. Nucleus accumbens shell and core dopamine: differential role in behavior and addiction. Behav. Brain Res. 2002;137:75–114. doi: 10.1016/s0166-4328(02)00286-3. doi:10.1016/S0166-4328(02)00286-3 [DOI] [PubMed] [Google Scholar]

- Elliott R, Newman J.L, Longe O.A, William Deakin J.F. Instrumental responding for rewards is associated with enhanced neuronal response in subcortical reward systems. Neuroimage. 2004;21:984–990. doi: 10.1016/j.neuroimage.2003.10.010. doi:10.1016/j.neuroimage.2003.10.010 [DOI] [PubMed] [Google Scholar]

- Everitt B.J, Morris K.A, O'Brien A, Robbins T.W. The basolateral amygdala–ventral striatal system and conditioned place preference: further evidence of limbic–striatal interactions underlying reward-related processes. Neuroscience. 1991;42:1–18. doi: 10.1016/0306-4522(91)90145-e. doi:10.1016/0306-4522(91)90145-E [DOI] [PubMed] [Google Scholar]

- Fiorillo C.D, Tobler P.N, Schultz W. Discrete coding of reward probability and uncertainty by dopamine neurons. Science. 2003;299:1898–1902. doi: 10.1126/science.1077349. doi:10.1126/science.1077349 [DOI] [PubMed] [Google Scholar]

- Floresco S.B, Tse M.T. Dopaminergic regulation of inhibitory and excitatory transmission in the basolateral amygdala–prefrontal cortical pathway. J. Neurosci. 2007;27:2045–2057. doi: 10.1523/JNEUROSCI.5474-06.2007. doi:10.1523/JNEUROSCI.5474-06.2007 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gallagher M, Graham P.W, Holland P.C. The amygdala central nucleus and appetitive Pavlovian conditioning: lesions impair one class of conditioned behavior. J. Neurosci. 1990;10:1906–1911. doi: 10.1523/JNEUROSCI.10-06-01906.1990. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Glimcher P.W, Rustichini A. Neuroeconomics: the consilience of brain and decision. Science. 2004;306:447–452. doi: 10.1126/science.1102566. doi:10.1126/science.1102566 [DOI] [PubMed] [Google Scholar]

- Gottfried J.A, O'Doherty J, Dolan R.J. Appetitive and aversive olfactory learning in humans studied using event-related functional magnetic resonance imaging. J. Neurosci. 2002;22:10 829–10 837. doi: 10.1523/JNEUROSCI.22-24-10829.2002. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gottfried J.A, O'Doherty J, Dolan R.J. Encoding predictive reward value in human amygdala and orbitofrontal cortex. Science. 2003;301:1104–1107. doi: 10.1126/science.1087919. doi:10.1126/science.1087919 [DOI] [PubMed] [Google Scholar]

- Groenewegen H.J, Trimble M. The ventral striatum as an interface between the limbic and motor systems. CNS Spectr. 2007;12:887–892. doi: 10.1017/s1092852900015650. [DOI] [PubMed] [Google Scholar]

- Haber S.N, Fudge J.L. The primate substantia nigra and VTA: integrative circuitry and function. Crit. Rev. Neurobiol. 1997;11:323–342. doi: 10.1615/critrevneurobiol.v11.i4.40. [DOI] [PubMed] [Google Scholar]

- Haber S.N, Fudge J.L, McFarland N.R. Striatonigrostriatal pathways in primates form an ascending spiral from the shell to the dorsolateral striatum. J. Neurosci. 2000;20:2369–2382. doi: 10.1523/JNEUROSCI.20-06-02369.2000. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Haralambous T, Westbrook R.F. An infusion of bupivacaine into the nucleus accumbens disrupts the acquisition but not the expression of contextual fear conditioning. Behav. Neurosci. 1999;113:925–940. doi: 10.1037//0735-7044.113.5.925. doi:10.1037/0735-7044.113.5.925 [DOI] [PubMed] [Google Scholar]

- Hare T.A, O'Doherty J, Camerer C.F, Schultz W, Rangel A. Dissociating the role of the orbitofrontal cortex and the striatum in the computation of goal values and prediction errors. J. Neurosci. 2008;28:5623–5630. doi:10.1523/JNEUROSCI.1309-08.2008

- Harmer C.J, Phillips G.D. Enhanced appetitive conditioning following repeated pretreatment with d-amphetamine. Behav. Pharmacol. 1998;9:299–308. doi:10.1097/00008877-199807000-00001

- Horvitz J.C. Mesolimbocortical and nigrostriatal dopamine responses to salient non-reward events. Neuroscience. 2000;96:651–656. doi:10.1016/S0306-4522(00)00019-1

- Ito R, Dalley J.W, Howes S.R, Robbins T.W, Everitt B.J. Dissociation in conditioned dopamine release in the nucleus accumbens core and shell in response to cocaine cues and during cocaine-seeking behavior in rats. J. Neurosci. 2000;20:7489–7495. doi:10.1523/JNEUROSCI.20-19-07489.2000

- Jackson D.M, Ahlenius S, Anden N.E, Engel J. Antagonism by locally applied dopamine into the nucleus accumbens or the corpus striatum of alpha-methyltyrosine-induced disruption of conditioned avoidance behaviour. J. Neural Transm. 1977;41:231–239. doi:10.1007/BF01252018

- Jensen J, McIntosh A.R, Crawley A.P, Mikulis D.J, Remington G, Kapur S. Direct activation of the ventral striatum in anticipation of aversive stimuli. Neuron. 2003;40:1251–1257. doi:10.1016/S0896-6273(03)00724-4

- Jog M.S, Kubota Y, Connolly C.I, Hillegaart V, Graybiel A.M. Building neural representations of habits. Science. 1999;286:1745–1749. doi:10.1126/science.286.5445.1745

- Johnsrude I.S, Owen A.M, White N.M, Zhao W.V, Bohbot V. Impaired preference conditioning after anterior temporal lobe resection in humans. J. Neurosci. 2000;20:2649–2656. doi:10.1523/JNEUROSCI.20-07-02649.2000

- Jongen-Relo A.L, Groenewegen H.J, Voorn P. Evidence for a multi-compartmental histochemical organization of the nucleus accumbens in the rat. J. Comp. Neurol. 1993;337:267–276. doi:10.1002/cne.903370207

- Jongen-Relo A.L, Kaufmann S, Feldon J. A differential involvement of the shell and core subterritories of the nucleus accumbens of rats in memory processes. Behav. Neurosci. 2003;117:150–168. doi:10.1037/0735-7044.117.1.150

- Josselyn S.A, Kida S, Silva A.J. Inducible repression of CREB function disrupts amygdala-dependent memory. Neurobiol. Learn. Mem. 2004;82:159–163. doi:10.1016/j.nlm.2004.05.008

- Kalivas P.W, Duffy P. Selective activation of dopamine transmission in the shell of the nucleus accumbens by stress. Brain Res. 1995;675:325–328. doi:10.1016/0006-8993(95)00013-G

- Kapp B.S, Frysinger R.C, Gallagher M, Haselton J.R. Amygdala central nucleus lesions: effect on heart rate conditioning in the rabbit. Physiol. Behav. 1979;23:1109–1117. doi:10.1016/0031-9384(79)90304-4

- Kelley A.E, Smith-Roe S.L, Holahan M.R. Response–reinforcement learning is dependent on N-methyl-d-aspartate receptor activation in the nucleus accumbens core. Proc. Natl Acad. Sci. USA. 1997;94:12174–12179. doi:10.1073/pnas.94.22.12174

- Kim H, Shimojo S, O'Doherty J.P. Is avoiding an aversive outcome rewarding? Neural substrates of avoidance learning in the human brain. PLoS Biol. 2006;4:e233. doi:10.1371/journal.pbio.0040233

- King-Casas B, Tomlin D, Anen C, Camerer C.F, Quartz S.R, Montague P.R. Getting to know you: reputation and trust in a two-person economic exchange. Science. 2005;308:78–83. doi:10.1126/science.1108062

- Kirsch P, Schienle A, Stark R, Sammer G, Blecker C, Walter B, Ott U, Burkart J, Vaitl D. Anticipation of reward in a nonaversive differential conditioning paradigm and the brain reward system: an event-related fMRI study. Neuroimage. 2003;20:1086–1095. doi:10.1016/S1053-8119(03)00381-1

- Knutson B, Adams C.M, Fong G.W, Hommer D. Anticipation of increasing monetary reward selectively recruits nucleus accumbens. J. Neurosci. 2001a;21:RC159. doi:10.1523/JNEUROSCI.21-16-j0002.2001

- Knutson B, Fong G.W, Adams C.M, Varner J.L, Hommer D. Dissociation of reward anticipation and outcome with event-related fMRI. Neuroreport. 2001b;12:3683–3687. doi:10.1097/00001756-200112040-00016

- Knutson B, Delgado M.R, Phillips P.E.M. Representation of subjective value in the striatum. In: Glimcher P.W, Camerer C.F, Fehr E, Poldrack R.A, editors. Neuroeconomics: decision making and the brain. Oxford University Press; Oxford, UK: In press.

- LaBar K.S, LeDoux J.E, Spencer D.D, Phelps E.A. Impaired fear conditioning following unilateral temporal lobectomy in humans. J. Neurosci. 1995;15:6846–6855. doi:10.1523/JNEUROSCI.15-10-06846.1995

- LaBar K.S, Gatenby J.C, Gore J.C, LeDoux J.E, Phelps E.A. Human amygdala activation during conditioned fear acquisition and extinction: a mixed-trial fMRI study. Neuron. 1998;20:937–945. doi:10.1016/S0896-6273(00)80475-4

- Lau B, Glimcher P.W. Action and outcome encoding in the primate caudate nucleus. J. Neurosci. 2007;27:14502–14514. doi:10.1523/JNEUROSCI.3060-07.2007

- LeDoux J.E. Emotion: clues from the brain. Annu. Rev. Psychol. 1995;46:209–235. doi:10.1146/annurev.ps.46.020195.001233

- LeDoux J.E. Emotion circuits in the brain. Annu. Rev. Neurosci. 2000;23:155–184. doi:10.1146/annurev.neuro.23.1.155

- Levita L, Dalley J.W, Robbins T.W. Disruption of Pavlovian contextual conditioning by excitotoxic lesions of the nucleus accumbens core. Behav. Neurosci. 2002;116:539–552. doi:10.1037/0735-7044.116.4.539

- Li M, Parkes J, Fletcher P.J, Kapur S. Evaluation of the motor initiation hypothesis of APD-induced conditioned avoidance decreases. Pharmacol. Biochem. Behav. 2004;78:811–819. doi:10.1016/j.pbb.2004.05.023

- Logothetis N.K, Pauls J, Augath M, Trinath T, Oeltermann A. Neurophysiological investigation of the basis of the fMRI signal. Nature. 2001;412:150–157. doi:10.1038/35084005

- Maren S. Neurobiology of Pavlovian fear conditioning. Annu. Rev. Neurosci. 2001;24:897–931. doi:10.1146/annurev.neuro.24.1.897

- McClure S.M, Berns G.S, Montague P.R. Temporal prediction errors in a passive learning task activate human striatum. Neuron. 2003;38:339–346. doi:10.1016/S0896-6273(03)00154-5

- McCullough L.D, Salamone J.D. Increases in extracellular dopamine levels and locomotor activity after direct infusion of phencyclidine into the nucleus accumbens. Brain Res. 1992;577:1–9. doi:10.1016/0006-8993(92)90530-M

- McCullough L.D, Sokolowski J.D, Salamone J.D. A neurochemical and behavioral investigation of the involvement of nucleus accumbens dopamine in instrumental avoidance. Neuroscience. 1993;52:919–925. doi:10.1016/0306-4522(93)90538-Q

- McNally G.P, Westbrook R.F. Predicting danger: the nature, consequences, and neural mechanisms of predictive fear learning. Learn. Mem. 2006;13:245–253. doi:10.1101/lm.196606

- Menon M, Jensen J, Vitcu I, Graff-Guerrero A, Crawley A, Smith M.A, Kapur S. Temporal difference modeling of the blood-oxygen level dependent response during aversive conditioning in humans: effects of dopaminergic modulation. Biol. Psychiatry. 2007;62:765–772. doi:10.1016/j.biopsych.2006.10.020

- Mirenowicz J, Schultz W. Preferential activation of midbrain dopamine neurons by appetitive rather than aversive stimuli. Nature. 1996;379:449–451. doi:10.1038/379449a0

- Mogenson G.J, Jones D.L, Yim C.Y. From motivation to action: functional interface between the limbic system and the motor system. Prog. Neurobiol. 1980;14:69–97. doi:10.1016/0301-0082(80)90018-0

- Montague P.R, Berns G.S. Neural economics and the biological substrates of valuation. Neuron. 2002;36:265–284. doi:10.1016/S0896-6273(02)00974-1

- Montague P.R, Dayan P, Sejnowski T.J. A framework for mesencephalic dopamine systems based on predictive Hebbian learning. J. Neurosci. 1996;16:1936–1947. doi:10.1523/JNEUROSCI.16-05-01936.1996

- Morris J.S, Ohman A, Dolan R.J. A subcortical pathway to the right amygdala mediating “unseen” fear. Proc. Natl Acad. Sci. USA. 1999;96:1680–1685. doi:10.1073/pnas.96.4.1680

- Murphy C.A, Pezze M, Feldon J, Heidbreder C. Differential involvement of dopamine in the shell and core of the nucleus accumbens in the expression of latent inhibition to an aversively conditioned stimulus. Neuroscience. 2000;97:469–477. doi:10.1016/S0306-4522(00)00043-9

- Neill D.B, Boggan W.O, Grossman S.P. Impairment of avoidance performance by intrastriatal administration of 6-hydroxydopamine. Pharmacol. Biochem. Behav. 1974;2:97–103. doi:10.1016/0091-3057(74)90140-3

- O'Doherty J.P. Reward representations and reward-related learning in the human brain: insights from neuroimaging. Curr. Opin. Neurobiol. 2004;14:769–776. doi:10.1016/j.conb.2004.10.016

- O'Doherty J, Kringelbach M.L, Rolls E.T, Hornak J, Andrews C. Abstract reward and punishment representations in the human orbitofrontal cortex. Nat. Neurosci. 2001;4:95–102. doi:10.1038/82959

- O'Doherty J.P, Dayan P, Friston K, Critchley H, Dolan R.J. Temporal difference models and reward-related learning in the human brain. Neuron. 2003;38:329–337. doi:10.1016/S0896-6273(03)00169-7

- Pagnoni G, Zink C.F, Montague P.R, Berns G.S. Activity in human ventral striatum locked to errors of reward prediction. Nat. Neurosci. 2002;5:97–98. doi:10.1038/nn802

- Pare D, Smith Y, Pare J.F. Intra-amygdaloid projections of the basolateral and basomedial nuclei in the cat: Phaseolus vulgaris-leucoagglutinin anterograde tracing at the light and electron microscopic level. Neuroscience. 1995;69:567–583. doi:10.1016/0306-4522(95)00272-K

- Parkinson J.A, Olmstead M.C, Burns L.H, Robbins T.W, Everitt B.J. Dissociation in effects of lesions of the nucleus accumbens core and shell on appetitive Pavlovian approach behavior and the potentiation of conditioned reinforcement and locomotor activity by d-amphetamine. J. Neurosci. 1999;19:2401–2411. doi:10.1523/JNEUROSCI.19-06-02401.1999

- Parkinson J.A, Willoughby P.J, Robbins T.W, Everitt B.J. Disconnection of the anterior cingulate cortex and nucleus accumbens core impairs Pavlovian approach behavior: further evidence for limbic cortical–ventral striatopallidal systems. Behav. Neurosci. 2000;114:42–63. doi:10.1037/0735-7044.114.1.42

- Parkinson J.A, Crofts H.S, McGuigan M, Tomic D.L, Everitt B.J, Roberts A.C. The role of the primate amygdala in conditioned reinforcement. J. Neurosci. 2001;21:7770–7780. doi:10.1523/JNEUROSCI.21-19-07770.2001

- Pessiglione M, Seymour B, Flandin G, Dolan R.J, Frith C.D. Dopamine-dependent prediction errors underpin reward-seeking behaviour in humans. Nature. 2006;442:1042–1045. doi:10.1038/nature05051

- Pezze M.A, Feldon J. Mesolimbic dopaminergic pathways in fear conditioning. Prog. Neurobiol. 2004;74:301–320. doi:10.1016/j.pneurobio.2004.09.004

- Pezze M.A, Heidbreder C.A, Feldon J, Murphy C.A. Selective responding of nucleus accumbens core and shell dopamine to aversively conditioned contextual and discrete stimuli. Neuroscience. 2001;108:91–102. doi:10.1016/S0306-4522(01)00403-1

- Pezze M.A, Feldon J, Murphy C.A. Increased conditioned fear response and altered balance of dopamine in the shell and core of the nucleus accumbens during amphetamine withdrawal. Neuropharmacology. 2002;42:633–643. doi:10.1016/S0028-3908(02)00022-9

- Phelps E.A, Delgado M.R, Nearing K.I, LeDoux J.E. Extinction learning in humans: role of the amygdala and vmPFC. Neuron. 2004;43:897–905. doi:10.1016/j.neuron.2004.08.042

- Phelps E.A, LeDoux J.E. Contributions of the amygdala to emotion processing: from animal models to human behavior. Neuron. 2005;48:175–187. doi:10.1016/j.neuron.2005.09.025

- Pitkanen A, Savander V, LeDoux J.E. Organization of intra-amygdaloid circuitries in the rat: an emerging framework for understanding functions of the amygdala. Trends Neurosci. 1997;20:517–523. doi:10.1016/S0166-2236(97)01125-9

- Ploghaus A, Tracey I, Clare S, Gati J.S, Rawlins J.N, Matthews P.M. Learning about pain: the neural substrate of the prediction error for aversive events. Proc. Natl Acad. Sci. USA. 2000;97:9281–9286. doi:10.1073/pnas.160266497