Abstract

Ten years have passed since the Japanese ‘Century of the Brain’ was promoted, and its most notable objective, the unique ‘creating the brain’ approach, has led us to apply a humanoid robot as a neuroscience tool. Here, we aim to understand the brain to the extent that we can make humanoid robots solve tasks typically solved by the human brain by essentially the same principles. I postulate that this ‘Understanding the Brain by Creating the Brain’ approach is the only way to fully understand neural mechanisms in a rigorous sense. Several humanoid robots and their demonstrations are introduced. A theory of cerebellar internal models and a systems biology model of cerebellar synaptic plasticity are discussed. Both models are experimentally supported, but the latter is more easily verifiable while the former is still controversial. I argue that the major reason for this difference is that essential information can be experimentally manipulated in molecular and cellular neuroscience while it cannot be manipulated at the system level. I propose a new experimental paradigm, manipulative neuroscience, to overcome this difficulty and allow us to prove cause-and-effect relationships even at the system level.

Keywords: computational neuroscience, creating the brain, humanoid robot, cerebellar learning, long-term depression, manipulative neuroscience

1. ‘Creating the brain’ in the Japanese century of the brain

In April 1996, an ad hoc committee on brain science of the Science Council of Japan, the nation's most prestigious association of scientists, issued an advisory called ‘Promotion of Brain Science’ to the Japanese government. In August of the same year, a committee for brain science promotion in the Science and Technology Agency of the Japanese government proposed a huge national brain research project funded at 2 trillion yen (approx. US$20 billion) for 20 years within a blueprint for the ‘Century of the Brain’. In May 1997, a brain science committee of the Prime Minister's Council of Science and Technology, the nation's highest science advisory body, established three major research areas for its long-term plan: Understanding the Brain, Protecting the Brain, and Creating the Brain. The Brain Science Institute of RIKEN (The Institute of Physical and Chemical Research) was started in 1997, closely following this long-term plan (Normile 1997). Ten years have passed since the Japanese Century of the Brain was launched but, surprisingly, according to Shun-ichi Amari, president of RIKEN's Brain Science Institute, the 2005 competitive budget for Japanese brain science was only 18 billion yen, and it has been decreasing over the past 7 years after peaking in 2000 (Amari 2006), in sharp contrast to the magnitude of the original proposal (the 2007 total budget was estimated as approximately 30 billion yen by the Japanese Ministry of Education, Science and Culture).

Among the three ways of exploring the brain, Creating the Brain is unique to Japan. Computers and robots have improved dramatically, but they are still greatly inferior to humans in most brain functions, such as natural language processing, visual scene understanding, smooth and dexterous manipulation of objects and common-sense reasoning, all of which humans execute almost effortlessly, at least at the conscious level. This situation indicates that computer science and robotics have not made as much progress as was expected 50 years ago and that neuroscience has not provided sufficient information in these areas. The ‘Creating the Brain’ area was motivated by this reflection, with the aim of developing brain-style information processing and communication technologies. In particular, the development of ‘brain-style’ computer systems with human-like intellectual and emotional capabilities was set as its long-term goal.

Over the past 10 years, millions of artificial retinal chips have been sold, for several billion yen per year, and many kinds of ‘neuro-’electric home appliances with learning capabilities based on artificial neural networks have become popular in the Japanese market. However, research is still far from the development of brain-style computer systems. The main difficulty is that conventional medical and biological approaches in neuroscience do not provide the type of knowledge that is essential for computer development. The accumulation of a vast amount of data on brain locations (which function is lost when a specific brain region is destroyed), on substances (which specific molecule is critical for some brain function) and on correlations (which neurons in a specific brain area have firing rates correlated with a hypothetical element of cognition) is unfortunately nearly useless for unravelling neural mechanisms, at least to the extent that principles of information processing can be learnt from the brain. On the other hand, these kinds of knowledge are useful for Protecting the Brain (e.g. avoiding the speech area in neurosurgical ablation) or at some levels of Understanding the Brain.

We cannot state that we fully understand brain functions by simply accumulating the above types of data on locations, substances and correlations. In the deepest sense of ‘Understanding the Brain’, we should reveal the secrets of neural mechanisms of brain functions to the extent that we can ‘Create the Brain’. Furthermore, definitions of computational neuroscience vary depending on the researchers involved in this area. For some, approaches that depend on computers, mathematics, physics, engineering or David Marr's computational theory could be called ‘Computational Neuroscience’. From the mid-1980s, we initiated our own approach to computational neuroscience with the following definition: ‘We elucidate information processing of the brain to the extent that artificial machines, either computer programs or robots, can be built to solve the same computational problems that are solved by the human brain, using essentially the same principles.’ Many researchers, including our group, started to believe that the only possible methodology to fully understand how the brain works is to build or reconstruct artificial systems that can realize brain functions. We call this approach ‘Understanding the Brain by Creating the Brain’, and it is currently the major approach taken in the Japanese ‘Creating the Brain’ area; furthermore, this might be regarded as the Japanese definition of computational neuroscience. In general, this is the new direction of ‘analysis by synthesis’ in biological disciplines, and it has common ground with synthetic biology in systems biology or bioinformatics of molecular and cellular biology, where the goal is to create artificial organisms in silico or in wet biology.

2. Humanoid robots as a neuroscience tool

Even if we could create an artificial brain, we could not investigate its functions, such as vision or motor control, if we just let it float in incubation fluid in a jar. The brain must be connected to sensors and a motor apparatus so that it can interact with its environment. A humanoid robot controlled by an artificial brain, which is implemented as software based on computational models of brain functions, seems to be the most plausible candidate for this purpose, given currently available technology. With the slogan of Understanding the Brain by Creating the Brain, in the mid-1980s, we started to use robots for brain research (Miyamoto et al. 1988), and approximately 10 different kinds of robots have been used by our group at Osaka University's Department of Biophysical Engineering, ATR Laboratories, ERATO Kawato Dynamic Brain Project (ERATO 1996–2001; http://www.kawato.jst.go.jp/) and ICORP Kawato Computational Brain Project (ICORP 2004–2009; http://www.cns.atr.jp/hrcn/ICORP/project.html).

An optimal computational theory for one type of body may not be optimal for other types. Thus, if a humanoid robot is used for exploring and examining neuroscience theories rather than for engineering, it should be as close as possible to a human body. Within the ERATO project, in collaboration with the SARCOS research company led by Prof. Stephen C. Jacobsen of the University of Utah, Dr Stefan Schaal as robot-group leader and his colleagues developed a humanoid robot called DB (Dynamic Brain; figure 1), aiming to replicate a human body as closely as the robotics technology of 1996 allowed. DB possessed 30 degrees of freedom (d.f.) and human-like size and weight. Like a human body, and unlike most electric-motor-driven and highly geared humanoid robots, DB is mechanically compliant, because SARCOS's hydraulic actuators are powerful enough to make reduction mechanisms at the joints unnecessary. Within its head, DB is equipped with an artificial vestibular organ (gyro sensor), which measures head velocity, and four cameras with vertical and horizontal d.f. Two of the cameras have telescopic lenses corresponding to foveal vision, while the other two have wide-angle lenses corresponding to peripheral vision. SARCOS developed the hardware and low-level analogue feedback loops, while the ERATO project developed high-level digital feedback loops and all of the sensory motor coordination software.

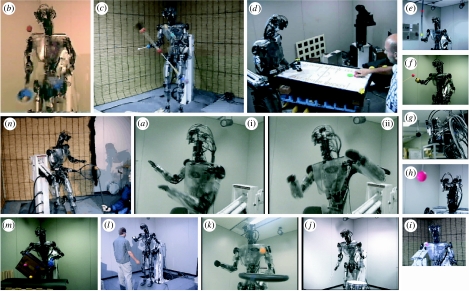

Figure 1.

Demonstrations of 14 different tasks by the ERATO humanoid robot DB.

The photographs in figure 1 introduce 14 of the more than 30 different tasks that can be performed by DB (Atkeson et al. 2000). Most of the algorithms used for these task demonstrations are based roughly on the principles of information processing in the brain, and many contain some or all of the three learning elements: imitation learning (Miyamoto et al. 1996; Schaal 1999; Ude & Atkeson 2003; Nakanishi et al. 2004; Ude et al. 2004), reinforcement learning and supervised learning. Imitation learning (‘Learning by Watching’, ‘Learning by Mimicking’ or ‘Teaching by Demonstration’) was involved in (a(i),(ii)) the Okinawan folk dance ‘Katya-shi’ (Riley et al. 2000), (b) three-ball juggling (Atkeson et al. 2000), (c) devil sticking, (d) air hockey (Bentivegna et al. 2004a,b), (e) pole balancing, (l) sticky hands interaction with a human (Hale & Pollick 2005), (m) tumbling a box (Pollard et al. 2002) and (n) a tennis swing (Ijspeert et al. 2002). The (d) air-hockey demonstration (Bentivegna et al. 2004a,b) uses not only imitation learning but also a reinforcement learning algorithm with reward (a puck enters the opponent's goal) and penalty (a puck enters the robot's goal), as well as skill learning (a kind of supervised learning). Demonstrations of (e) pole balancing and (f) visually guided arm reaching towards a target used a supervised learning scheme (Schaal & Atkeson 1998), which was motivated by our approach to cerebellar internal model learning, introduced in the next section. Demonstrations of (g) adaptation of the vestibulo-ocular reflex (Shibata & Schaal 2001), (h) adaptation of smooth pursuit eye movement and simultaneous realization of these two kinds of eye movements together with (i) saccadic eye movements were based on computational models of eye movements and their learning (Shibata et al. 2005). Demonstrations of (j) drumming, (k) paddling a ball and (n) a tennis swing were based on central pattern generators.

The ICORP Computational Brain Project (2004–2009), which is an international collaboration project with Prof. Chris Atkeson of Carnegie Mellon University, follows the ERATO Dynamic Brain Project in its slogan Understanding the Brain by Creating the Brain and ‘Humanoid Robots as a Tool for Neuroscience’. Again in collaboration with SARCOS, at the beginning of 2007, Dr Gordon Cheng as a group leader and his colleagues developed a new humanoid robot called CB-i (Computational Brain; figure 2). CB-i is even closer to a human body than DB. To improve the mechanical compliance of the body, CB-i also uses hydraulic actuators rather than electric motors. The biggest improvement of CB-i over DB is its autonomy. DB was mounted at the pelvis because it needed to be powered by an external hydraulic pump through oil hoses arranged around the mount, and its computer system was connected to it by wires. Thus, DB could not function autonomously. In contrast, CB-i carries both onboard power supplies (electric and hydraulic) and a computing system on its back, and thus it can function fully autonomously. CB-i was designed for full-body autonomous interaction, for walking and simple manipulations. It is equipped with a total of 51 d.f.: 2×7 d.f. legs, 2×7 d.f. arms, 2×2 d.f. eyes, 3 d.f. neck/head, 1 d.f. mouth, 3 d.f. torso and 2×6 d.f. hands. CB-i is designed to have configurations, range of motion, power and strength similar to those of a human body, allowing it to better reproduce natural human-like movements, in particular for locomotion and object manipulation.

Figure 2.

New humanoid robot called CB-i (Computational Brain).

Locomotion and posture control are the most challenging research topics for a human-like and autonomous humanoid robot such as CB-i, owing to its mechanically compliant body with 51 d.f. Within the ICORP CB-i Project, biologically inspired control algorithms for locomotion have been studied using the three different humanoid robots shown in figure 3 (a, DB-chan (Nakanishi et al. 2004); b, Fujitsu Automation HOAP-2 (Matsubara et al. 2006); and c, CB-i (Morimoto et al. 2006)) as well as the SONY small-size humanoid robot QRIO (Endo et al. 2005) as test beds. Successful locomotion algorithms use various aspects of biological control systems, such as neural networks for central pattern generators, phase resetting by various sensory feedbacks including adaptive gains, and hierarchical reinforcement learning algorithms. In the demonstration of robust locomotion (figure 3a), three biologically important aspects of control algorithms are used: imitation learning, a nonlinear dynamical system as a central pattern generator and phase resetting by a foot-ground contact signal (Nakanishi et al. 2004). First, a neural network model developed by Schaal et al. (2003) quickly learns locomotion trajectories correctly demonstrated by humans or other robots. Then, to synchronize this limit cycle oscillator (central pattern generator) with the mechanical oscillator formed by the robot body and the environment, the neural oscillator is phase reset at each foot-ground contact. This guarantees stable synchronization of the neural and mechanical oscillators in both phase and frequency. The achieved locomotion is quite robust against different surfaces with various frictions and slopes, and it is human-like in that the robot body's centre of gravity is high and the knee is nearly fully extended at foot contact.
This is in sharp contrast to locomotion engineered by zero-moment point control, a traditional control method for biped robots that was proposed by Vukobratovic 35 years ago and subsequently implemented successfully by Ichiro Kato and in Honda and Sony humanoid robots; it usually produces a low centre of gravity and bent knees.
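The phase-resetting idea in the locomotion paragraph above can be illustrated with a deliberately simplified sketch. It is not the Nakanishi et al. (2004) controller: the neural oscillator is reduced to a single phase variable with natural frequency `omega`, the mechanical gait is assumed to produce foot contacts at a fixed `contact_period`, and the optional frequency adaptation rule (`adapt_gain`) is an assumption added for illustration. The function name `phases_at_contact` is hypothetical.

```python
import math

TWO_PI = 2.0 * math.pi


def phases_at_contact(omega, contact_period, n_contacts,
                      reset=True, reset_phase=0.0, adapt_gain=0.0):
    """Phase of a neural phase oscillator just before each foot contact.

    omega          -- initial natural frequency of the oscillator (rad/s)
    contact_period -- period of the mechanical gait (s); assumed constant here
    reset          -- if True, the phase is reset to reset_phase at each contact
    adapt_gain     -- if > 0, omega is nudged toward 2*pi/contact_period
                      (a hypothetical frequency-adaptation rule)
    """
    phase = 1.0  # arbitrary initial phase (rad)
    phases = []
    for _ in range(n_contacts):
        # Free-running phase advance between two contacts (constant omega).
        phase = (phase + omega * contact_period) % TWO_PI
        phases.append(phase)
        if reset:
            phase = reset_phase  # foot-ground contact resets the neural phase
        if adapt_gain > 0.0:
            omega += adapt_gain * (TWO_PI / contact_period - omega)
    return phases, omega


omega0 = TWO_PI / 0.9  # neural period 0.9 s versus a 1.0 s gait (assumed values)
free, _ = phases_at_contact(omega0, 1.0, 10, reset=False)
locked, _ = phases_at_contact(omega0, 1.0, 10, reset=True)
_, omega_adapted = phases_at_contact(omega0, 1.0, 40, reset=True, adapt_gain=0.5)
```

Without resetting, the phase at which contact occurs drifts from step to step; with resetting, it is the same at every contact, and with adaptation the oscillator's frequency also converges to the gait frequency. In a real robot the contact period itself depends on the commands the oscillator generates, which this sketch ignores.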

Figure 3.

Biologically motivated biped locomotion of three humanoid robots. (a) DB-chan with learning from demonstration and a nonlinear dynamical system for motor primitives (Nakanishi et al. 2004). (b) HOAP-2 with central pattern generators and reinforcement learning algorithms (Matsubara et al. 2006). (c) CB-i with coupled oscillators that are phase regulated by reaction forces from the floor (Morimoto et al. 2006).

3. Cerebellar internal models

Many of the DB demonstrations mentioned in the previous section are based on the cerebellar internal model theory, which we have been developing over the past 25 years (Kawato et al. 1987; Kawato & Gomi 1992; Kawato 1999): each microzone of the cerebellar cortex learns to acquire an internal model of some specific object in the external world, such as an arm or an eye of one's own body, manipulative tools or another person's brain. In this section, we briefly describe the background of this theory, the theory itself and some experimental support of this theory.

Among the many different models of the cerebellum, learning models have always been the most attractive, versatile and influential. The learning models are based on unique structures of the cerebellar cortex. The Purkinje cell, the only output neuron in the cerebellar cortex, receives two major excitatory inputs from climbing and parallel fibres. A single climbing fibre axon makes multiple synapses on a single Purkinje cell and induces very strong excitatory postsynaptic potentials, which lead to complex spikes that consist of a few Ca2+ spikes. By contrast, 200 000 synapses from parallel fibres, which are the axons of 10^10–10^11 granule cells, generate simple spikes, each of which is a single Na+ spike. Although early cerebellar learning models (Marr 1969; Ito 1970; Albus 1971) proposed that the cerebellum performs pattern recognition, probably influenced by the ‘perceptron’, they are not consistent with physiological data showing that the simple spike-firing rates of Purkinje cells change over time and encode dynamic and kinematic features of movements. Recent studies suggest that the human cerebellum is important for sensory and cognitive functions as well as for motor control. Might there be a general computation realized by the cerebellar cortex that covers the sensory, cognitive and motor domains and also conforms to existing hypotheses on such matters as timing, coordination and error correction? Internal models seem to provide the most versatile and hypothesis-compatible computational entity satisfying these requirements. Internal models are neural networks inside the brain (‘internal’) that can mimic (‘model’) the input–output characteristics of some dynamical process outside the brain. In a motor control context, a forward model of a body can predict the sensory consequences of movement from the efference copy of a motor command. This is because the controlled objects in movements generate a trajectory from the motor commands.
By contrast, an inverse model of a body and the external world can compute the necessary motor commands from a desired movement pattern. Internal models are useful for predictive computations in sensory, cognitive and motor functions. If an inverse dynamics model for a controlled object possessing dynamics and kinematics with multiple d.f. is destroyed, the movement becomes clumsy, slow and not well corrected. This is because neither feed-forward control (dependent on the inverse model) nor sophisticated feedback control (dependent on the forward model) is available. Thus, control must rely on poor and crude feedback control alone.
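To make the distinction between forward and inverse models concrete, here is a minimal sketch assuming a one-joint "limb" with hypothetical mass `M` and viscosity `B`, obeying M·a + B·v = u. The forward model maps the current state and an efference copy of the command `u` to a predicted next state; the inverse model maps a desired acceleration and velocity to the command that produces them. Both models are given the plant's exact parameters, which is an idealization; in the brain they would have to be learned.

```python
import math

M, B = 2.0, 0.5   # hypothetical mass (kg) and viscosity (N s/m) of a one-joint limb
DT = 0.01         # integration step (s)


def plant_step(x, v, u):
    """Controlled object outside the brain: M*a + B*v = u (explicit Euler)."""
    a = (u - B * v) / M
    return x + v * DT, v + a * DT


def forward_model(x, v, u_copy):
    """Forward model: predicts the next state from an efference copy of u."""
    a_pred = (u_copy - B * v) / M
    return x + v * DT, v + a_pred * DT


def inverse_model(v_des, a_des):
    """Inverse model: motor command that produces the desired acceleration."""
    return M * a_des + B * v_des


x_des, v_des = 0.0, 0.0   # desired state, integrated from a prescribed acceleration
x, v = 0.0, 0.0           # actual state of the plant
max_track_err = 0.0
max_pred_err = 0.0
for k in range(200):
    a_des = math.sin(2 * math.pi * k * DT)   # assumed 1 Hz acceleration profile
    u = inverse_model(v_des, a_des)          # pure feed-forward command, no feedback
    x_pred, v_pred = forward_model(x, v, u)  # prediction from the efference copy
    x, v = plant_step(x, v, u)               # what the body actually does
    x_des, v_des = x_des + v_des * DT, v_des + a_des * DT
    max_track_err = max(max_track_err, abs(x - x_des))
    max_pred_err = max(max_pred_err, abs(x_pred - x), abs(v_pred - v))
```

Because both internal models match the plant, the feed-forward command alone reproduces the desired trajectory and the forward model predicts each next state; an error in either model would have to be absorbed by feedback control.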

Approximately 25 years ago, my colleagues and I proposed that different parts of the cerebellum contain either a forward or an inverse model (Tsukahara & Kawato 1982; Kawato et al. 1987) and also that a computational scheme called feedback-error-learning is used to acquire an inverse model within the supervised learning scheme (figure 4; Kawato et al. 1987; Kawato 1990; Kawato & Gomi 1992). If supervised learning takes place in the brain for motor control, a difficult computational problem, the ‘distal teacher’ problem formulated by Jordan & Rumelhart (1992), must be resolved. The error between desired and actual movement patterns can be measured by sensory organs, but these movement errors could be very different, both in spatial coordinates and in temporal dynamics, from the error signal needed for the motor command. Furthermore, it should be emphasized that no teaching signal for motor commands exists in supervised motor learning, since if it did exist, it would be used directly for motor control and there would be no need for learning.

Figure 4.

(a) General feedback-error-learning model. (b) Cerebellar feedback-error-learning model (Kawato 1999). The ‘controlled object’ is a physical entity that needs to be controlled by the central nervous system, such as the eyes, hands, legs or torso.

In the feedback-error-learning model depicted in figure 4, the inverse model first transforms a desired trajectory into a feed-forward motor command. Second, a crude feedback controller generates a feedback motor command from the sensory error. Third, the sum of the feed-forward and feedback motor commands is sent to the controlled object. Finally, and most importantly, the feedback motor command is used as the error signal for motor commands in the supervised learning that acquires the inverse model. The stability and convergence of the feedback-error-learning algorithm have been mathematically proven (Nakanishi & Schaal 2004), and the algorithm has been successfully applied in several robotic demonstrations (e.g. Miyamoto et al. 1988). In the cerebellar version of the model, simple spikes represent feed-forward motor commands, and the parallel fibre inputs represent the desired trajectory as well as sensory feedback on the current state of the controlled object. A microzone of the cerebellar cortex constitutes part of an inverse model of a specific controlled object, such as the eye or the arm. Most importantly, climbing fibre inputs are assumed to carry a copy of the feedback motor commands generated by the feedback control circuit. Thus, the complex spikes are assumed to be trajectory error signals already expressed in motor-command coordinates. Climbing fibre inputs are delayed by approximately 100 ms with respect to the parallel fibre inputs responsible for the movement and the resulting error, but the spike-timing dependency of parallel fibre-Purkinje cell long-term depression (LTD), explained in the next section, ensures that only the responsible parallel fibre synapses are depressed.
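The four steps above can be sketched in a few lines of Python. This is an illustrative toy, not the original implementation: the plant (M·a + B·v = u with hypothetical `M_TRUE` and `B_TRUE`), the PD feedback gains, the learning rate and the choice of desired acceleration and velocity as the inverse model's inputs are all assumptions. The key line is the weight update, in which the feedback command `u_fb` plays the role of the error signal.

```python
import math

M_TRUE, B_TRUE = 2.0, 0.5       # hypothetical plant parameters, unknown to the learner
KP, KD = 50.0, 10.0             # crude feedback controller gains (assumed)
DT = 0.01                       # time step (s)
STEPS = int(2 * math.pi / DT)   # one period of the desired movement


def run_trial(w, eta):
    """One movement trial along x_d(t) = sin(t).

    w = [w_acc, w_vel] are the inverse-model weights, updated in place by
    feedback-error-learning. Returns the RMS feedback command of the trial.
    """
    x, v = 0.0, 1.0             # start on the desired trajectory
    sq_sum = 0.0
    for k in range(STEPS):
        t = k * DT
        x_d, v_d, a_d = math.sin(t), math.cos(t), -math.sin(t)
        phi = (a_d, v_d)                           # desired-trajectory inputs
        u_ff = w[0] * phi[0] + w[1] * phi[1]       # 1. inverse model: feed-forward command
        u_fb = KP * (x_d - x) + KD * (v_d - v)     # 2. crude feedback command
        u = u_ff + u_fb                            # 3. summed command to the plant
        # 4. Feedback-error-learning: the feedback command is the error signal.
        w[0] += eta * DT * u_fb * phi[0]
        w[1] += eta * DT * u_fb * phi[1]
        # Controlled object: M*a + B*v = u, explicit Euler step.
        a = (u - B_TRUE * v) / M_TRUE
        x, v = x + v * DT, v + a * DT
        sq_sum += u_fb ** 2
    return math.sqrt(sq_sum / STEPS)


w = [0.0, 0.0]
history = [run_trial(w, eta=0.1) for _ in range(30)]
```

As the inverse model improves over trials, the RMS feedback command in `history` shrinks and the weights in `w` approach values close to the plant's mass and viscosity (close rather than equal, because of the time discretization), mirroring the shift from feedback to feed-forward control that the scheme is meant to produce.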

Kenji Kawano and his colleagues supported the cerebellar feedback-error-learning model with neurophysiological studies in the ventral paraflocculus of the monkey cerebellum during ocular following responses (Shidara et al. 1993; Gomi et al. 1998; Kobayashi et al. 1998; Kawano 1999; Kawato 1999; Takemura et al. 2001; Yamamoto et al. 2002). The ocular following responses are tracking movements of the eyes evoked by movements in a large visual scene that are thought to be important for visual stabilization of gaze. In the neural circuit controlling this response, the phylogenetically older and cruder feedback circuit comprises the retina, the accessory optic system and the brain stem. The phylogenetically newer and more sophisticated feed-forward pathway and the inverse dynamics model correspond to the cerebral and cerebellar cortical pathways and the cerebellum, respectively. The sensory error signal is computed as the image motion on the retina (retinal slip). This is different from the scheme of figure 4, where some neural mechanisms explicitly compute the difference (error) between the desired and actual states. However, in the case of eye movement control, such as ocular following responses or vestibulo-ocular reflexes, the desired state is eye movement in which the retinal image does not move, and thus the optical computation (retinal slip detection) can replace subtraction of the desired and actual states. On the other hand, the desired trajectory information transmitted to the flocculus for the vestibulo-ocular reflex is the head motion signal detected by semicircular canals, whereas for the ocular following responses the desired trajectory information for the eye is easily computed from the image motion signal conveyed by the visual areas in the cerebral cortex.

During ocular following responses, the time courses of simple spike-firing frequency show complicated patterns. However, they were quite accurately reconstructed using an inverse dynamics representation of eye movement (Shidara et al. 1993; Gomi et al. 1998). The model fit was good for the majority of the neurons studied, across a wide range of visual stimulus conditions, eye movements and firing patterns. The same inverse dynamics analysis for the medial superior temporal area (MST) of the cerebral cortex and the dorsolateral pontine nucleus, which provide visual mossy fibre inputs, revealed that their firing time courses were well reconstructed from visual retinal slip but not from motor commands (Takemura et al. 2001). Taken together, these data suggest that the ventral paraflocculus is the major site of the inverse dynamics model of the eye for ocular following responses.
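The inverse dynamics reconstruction of firing can be sketched as an ordinary least-squares fit of firing rate to eye acceleration, velocity and position plus a constant, f = M·ë + B·ė + K·e + f0. This linear second-order form is assumed here as a representative of such analyses; the time delay between firing and movement is omitted, and the eye movement, firing data and coefficients below are synthetic and hypothetical, not recorded values.

```python
import numpy as np


def fit_inverse_dynamics(firing, acc, vel, pos):
    """Least-squares fit of firing = M*acc + B*vel + K*pos + f0.

    Returns the coefficients (M, B, K, f0) and the coefficient of determination.
    """
    X = np.column_stack([acc, vel, pos, np.ones_like(acc)])
    coef, *_ = np.linalg.lstsq(X, firing, rcond=None)
    residual = firing - X @ coef
    r2 = 1.0 - np.sum(residual ** 2) / np.sum((firing - firing.mean()) ** 2)
    return coef, r2


# Synthetic ocular-following-like eye movement (all values are assumptions).
T, dt = 0.3, 0.001
t = np.arange(0.0, T, dt)
w = 2 * np.pi / T
vel = 30.0 * np.sin(np.pi * t / T) ** 2          # eye velocity (deg/s)
acc = 30.0 * (np.pi / T) * np.sin(w * t)          # its time derivative (deg/s^2)
pos = 30.0 * (t / 2 - np.sin(w * t) / (2 * w))   # its integral (deg)

rng = np.random.default_rng(0)
true_coef = np.array([0.03, 1.2, 4.0, 80.0])      # hypothetical M, B, K, f0
firing = np.column_stack([acc, vel, pos, np.ones_like(t)]) @ true_coef
firing += rng.normal(0.0, 2.0, t.size)             # added noise (spikes/s)

coef, r2 = fit_inverse_dynamics(firing, acc, vel, pos)
```

The same function could be applied to MST-like firing; in the study described above, the point was that such firing is better explained by retinal slip than by these motor-command terms.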

Motor commands, conveyed by simple spikes, should be directly modified and acquired by LTD while guided by motor-command errors, which are conveyed by climbing fibre inputs. For this to work, the climbing fibre inputs need to convey temporal and spatial information as detailed as that of the motor commands, but their ultra-low discharge rates would appear to rule this out. This apparently discrete and sporadic firing led climbing fibres to be characterized as unexpected-event detectors, and it suggested a reinforcement learning-type theory. However, when thousands of trials are averaged, the firing rates actually convey very accurate and reliable time courses of motor-command error within a few hundred milliseconds (Kobayashi et al. 1998). More specifically, complex spike time courses are better reconstructed from retinal slip than from eye movements, but in motor-command coordinates rather than in visual coordinates, as detailed below. Because LTD has recently been shown to integrate a temporally leaky postsynaptic Ca2+ signal (Tanaka et al. 2007), as introduced in the next section, it can perform this temporal averaging. Consequently, the firing probability of climbing fibre inputs can convey a temporal resolution fine enough to match the dynamic motor-command signal.
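The averaging argument can be checked with a small simulation. The sketch assumes a hypothetical error time course expressed as a climbing fibre firing rate of about 1 Hz at baseline with a transient increase, so a single trial contains fewer than one complex spike on average. Spikes are drawn independently in 1-ms bins, and a first-order leaky integrator (`tau` = 20 ms, an assumed value) stands in for temporally leaky Ca2+ integration; it is not a model of the actual LTD machinery.

```python
import numpy as np

DT = 0.001                              # 1-ms bins
t = np.arange(0.0, 0.5, DT)             # 500-ms window after stimulus onset (s)
# Hypothetical motor-command error time course, expressed as a CF firing rate (Hz).
rate = 1.0 + 4.0 * np.exp(-((t - 0.15) / 0.04) ** 2)


def trial_average(n_trials, rng):
    """Trial-averaged firing rate (Hz) from independent Bernoulli spikes per bin."""
    spikes = rng.random((n_trials, t.size)) < rate * DT
    return spikes.mean(axis=0) / DT


def leaky_integrate(x, tau=0.02):
    """Causal first-order low-pass filter with time constant tau (s)."""
    decay = np.exp(-DT / tau)
    y = np.empty_like(x, dtype=float)
    acc = 0.0
    for k, xk in enumerate(x):
        acc = decay * acc + (1.0 - decay) * xk
        y[k] = acc
    return y


rng = np.random.default_rng(1)
target = leaky_integrate(rate)          # filtered underlying error signal
corr = {}
for n in (20, 5000):
    estimate = leaky_integrate(trial_average(n, rng))
    corr[n] = np.corrcoef(estimate, target)[0, 1]
```

With 5000 trials the filtered average tracks the filtered underlying rate closely, whereas with 20 trials it is dominated by spike-count noise, which is the sense in which sporadic firing can still carry a detailed error time course.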

The preferred directions of MST and pontine neurons were evenly distributed over 360°. Thus, the visual coordinates are uniformly distributed over all possible directions. On the other hand, projections of the three-dimensional spatial coordinates of the extraocular muscles were in either a horizontal or a vertical direction, and they were entirely different from the visual coordinates. Preferred directions of simple spikes were either downward or ipsiversive (i.e. towards the same side), and at the site of each recording, electrical stimulation of a Purkinje cell elicited eye movement towards the preferred direction of the simple spike (Kawano 1999). These data indicate that the simple spike coordinate framework is already that of the motor commands. Thus, at the parallel fibre-Purkinje cell synapse, a drastic visuomotor coordinate transformation occurs. The neural representation dramatically changes from population coding in the MST and pontine nucleus to firing-rate coding of Purkinje cells at the parallel fibre-Purkinje cell synapse. What, then, is the origin of this drastic transformation? The theory proposes that the complex spikes, and ultimately the accessory optic system, are the source of this motor-command spatial framework. The preferred directions of pretectum neurons are upward and those of the contralateral nucleus of optic tract neurons are ipsiversive (thus contraversive for the cerebellum). Their signals are conveyed to the inferior olive and further to the cerebellum to produce the complex spikes. In summary, the coordinate frame of the climbing fibres was vertical and horizontal, forming the motor coordinates, not the visual coordinates of MST or pontine nucleus. A realistic neural network model successfully simulated all of these experimental findings (Yamamoto et al. 2002). 
The coordinates of the semicircular canals and the extraocular muscles are very similar, so it is rather difficult to evaluate coordinate transformation in the vestibulo-ocular reflex and flocculus compared with the ocular following responses. However, recent physiological studies indicate that climbing fibre inputs encode motor error rather than sensory error (Winkelman & Frens 2006). This argues against a class of models that assume sensorimotor transformation downstream of the Purkinje cells, including decorrelation models (Dean et al. 2002).
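The proposal that climbing fibre errors impose the motor coordinate frame can be illustrated with a toy supervised learner. Eight hypothetical visual units with cosine tuning and preferred directions spread evenly over 360° stand in for MST/pontine population coding; two output channels stand in for horizontal and vertical Purkinje-cell channels; and the error signal, like the climbing fibre input in the theory, is expressed in motor (horizontal/vertical) coordinates. The delta (LMS) rule and all parameters are assumptions, not the Yamamoto et al. (2002) network.

```python
import numpy as np

N_UNITS = 8
PREF = np.arange(N_UNITS) * 2 * np.pi / N_UNITS   # preferred directions, uniform over 360 deg


def visual_population(theta):
    """Hypothetical MST/pontine-like population code: non-negative cosine tuning."""
    return 1.0 + np.cos(theta - PREF)


def train(n_iter=3000, eta=0.05, seed=0):
    """Delta-rule learning with an error signal expressed in motor coordinates."""
    rng = np.random.default_rng(seed)
    W = np.zeros((2, N_UNITS))          # rows: horizontal and vertical output channels
    for _ in range(n_iter):
        theta = rng.uniform(0.0, 2 * np.pi)             # direction of visual motion
        r = visual_population(theta)
        target = np.array([np.cos(theta), np.sin(theta)])  # required command on motor axes
        error = target - W @ r          # climbing-fibre-like error, motor coordinates
        W += eta * np.outer(error, r)   # error x presynaptic activity
    return W


W = train()
```

After training, the horizontal channel's weights vary as the cosine, and the vertical channel's as the sine, of the units' preferred directions, so the uniformly distributed visual code has been mapped onto two motor axes solely because the error was expressed on those axes.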

For arm movements under multiple force fields, the firing of many Purkinje cells correlates with dynamics (Yamamoto et al. 2007). fMRI studies have mapped forward and inverse models of tools and manipulated objects in the cerebellar cortex (Imamizu et al. 2000; Kawato et al. 2003). Thus, experimental support for the cerebellar internal model theory has been accumulating for arm movements, for tool use in the human cerebellum and for forward models in addition to inverse models. Having said this, I must admit that the experimental support beyond the inverse dynamics model for ocular following responses in the ventral paraflocculus is rather indirect and circumstantial, and these issues are still controversial (cf. Pasalar et al. 2006; Yamamoto et al. 2007). However, because the neural circuit of the cerebellar cortex, unlike that of the cerebral cortex, is so uniform across regions and functions, I predict that a theory fully supported for even a single function in a small region of the cerebellum should embody computational principles that generalize to all functions and regions of the cerebellum.

4. Systems biology models of cerebellar LTD

If the LTD of parallel fibre-Purkinje cell synapses is the elementary cellular process of supervised learning, as postulated in cerebellar learning theory, it must satisfy the following three requirements. First, LTD should be associative; that is, LTD should be induced only when parallel and climbing fibre inputs are conjunctively activated. Second, LTD should be maximally induced when climbing fibre activation is delayed by 50–200 ms with respect to parallel fibre activation. This is because the time window of LTD should compensate for the delay with which the reafferent climbing fibre inputs feed back the consequences of the movements induced by parallel fibre-driven simple spikes. Third, LTD should be synapse specific: only a parallel fibre synapse that is conjunctively activated with a climbing fibre input should be depressed, and not other synapses. However, a considerable number of studies have reported violations of these three requirements under some experimental conditions; consequently, LTD has been questioned several times as the elementary process of cerebellar supervised learning (Llinas et al. 1997), and other functions have been postulated for it, such as the normalization of total synaptic efficacies on a single Purkinje cell (De Schutter 1995). The kinetic simulation model of LTD signal transduction pathways in a Purkinje cell dendritic spine (figure 5), which has been developed in a series of publications from our group (Kuroda et al. 2001; Doi et al. 2005; Ogasawara et al. 2007) and was recently supported experimentally (Tanaka et al. 2007), provides a coherent perspective on these confusing experimental data and competing theoretical hypotheses, and it is introduced in this section.

Figure 5.

Signal transduction model of cerebellar LTD. AA, arachidonic acid; CF, climbing fibres; ER, endoplasmic reticulum; Glu, glutamate; Gq, G-proteins; IP3, inositol 1,4,5-trisphosphate; IP3Rs, IP3 receptors; MAP, mitogen-activated protein; MEK, MAP/extracellular signal-regulated kinase kinase; mGluRs, metabotropic glutamate receptors; NO, nitric oxide; PF, parallel fibres; PKC, protein kinase C; PKG, cGMP-dependent protein kinase; PP2A, protein phosphatase 2A; PLA2, cytosolic phospholipase A2; PLC, phospholipase C; sGC, soluble guanylyl cyclase; VGCCs, voltage-gated calcium channels.

Climbing fibre inputs activate AMPA receptors (AMPA-Rs) on dendritic shafts and induce depolarization, which opens the voltage-gated calcium channels (VGCCs) on a spine as indicated by the dashed line in figure 5, leading to Ca2+ influx from the extracellular space into the spine head, reaching a concentration of approximately 1 μM. Parallel fibre inputs also induce AMPA-R activation on dendritic spines, just as climbing fibre input does but with a smaller depolarization and Ca2+ influx. In addition, they simultaneously activate metabotropic glutamate receptors (mGluRs). Furthermore, they presynaptically synthesize nitric oxide (NO) that diffuses into the spine. In the metabotropic pathway, activated mGluRs induce production of inositol 1,4,5-triphosphate (IP3) and diacylglycerol (DAG) via G-proteins (Gq) and phospholipase-Cβ (PLCβ) at the postsynaptic density. IP3 then diffuses into the cytosol and binds to IP3 receptors (IP3Rs), which are IP3-gated Ca2+ channels on the intracellular Ca2+ stores, such as the endoplasmic reticulum (ER). Cytosolic Ca2+ above some threshold triggers a regenerative cycle of IP3-dependent and Ca2+-induced Ca2+ release (depicted as a positive feedback loop (red) between Ca2+ and IP3Rs in figure 5) from the ER via IP3Rs opened by binding of both IP3 and Ca2+. This means that the open probability of IP3Rs increases with Ca2+ concentration, and the Ca2+ threshold of this regenerative cycle decreases with IP3 concentration. An increase in cytosolic IP3 concentration was simulated to be maximal at 3 μM approximately 100 ms after the parallel fibre input. This slow rate of increase in IP3 concentration is due to the slow dynamics of the metabotropic pathway. 
Because Ca2+ influx via VGCCs following climbing fibre activation is immediate, when the climbing fibre input is delayed by approximately 100 ms relative to the parallel fibre input, the Ca2+ increase coincides with the IP3 increase, and together they trigger Ca2+-induced Ca2+ release that raises Ca2+ to about 10 μM. If the climbing fibre input or the parallel fibre input is activated alone, this large Ca2+ release does not occur because IP3 or Ca2+, respectively, does not reach the threshold for the regenerative cycle. Consequently, the Ca2+ nonlinear excitable dynamics model of Doi et al. (2005; right-hand third of figure 5) reproduced the associative nature and the spike-timing-dependent plasticity (STDP) of cerebellar LTD.
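The coincidence-detection logic above can be illustrated with a toy calculation. The sketch below is not the Doi et al. (2005) model: it replaces the full kinetics with assumed shapes, namely an alpha-function IP3 rise peaking at about 3 μM roughly 100 ms after a parallel fibre input, exponentially decaying VGCC-driven Ca2+ transients (about 1 μM for a climbing fibre input), and a hypothetical Ca2+ threshold that falls as IP3 rises. All other parameter values are illustrative assumptions.

```python
import math

# Hypothetical toy parameters (NOT the fitted values of Doi et al. 2005).
IP3_PEAK_UM = 3.0   # peak IP3 after a PF input (~3 uM, as in the text)
IP3_TAU_MS = 100.0  # time to IP3 peak (~100 ms, as in the text)
CA_BASAL_UM = 0.05  # assumed resting Ca2+
CA_PF_UM = 0.2      # assumed small Ca2+ influx from a PF input
CA_CF_UM = 1.0      # Ca2+ influx from a CF input (~1 uM, as in the text)
CA_TAU_MS = 20.0    # assumed decay time of VGCC-driven Ca2+ transients
THETA0_UM = 5.0     # assumed Ca2+ threshold when no IP3 is present
K_IP3_UM = 0.5      # assumed IP3 sensitivity of the threshold


def ip3(t_ms, pf):
    """Slow alpha-function rise of IP3 after a PF input at t = 0."""
    if not pf or t_ms < 0:
        return 0.0
    x = t_ms / IP3_TAU_MS
    return IP3_PEAK_UM * x * math.exp(1.0 - x)


def calcium(t_ms, pf, cf_delay_ms):
    """Fast, exponentially decaying Ca2+ transients from PF and CF inputs."""
    ca = CA_BASAL_UM
    if pf and t_ms >= 0:
        ca += CA_PF_UM * math.exp(-t_ms / CA_TAU_MS)
    if cf_delay_ms is not None and t_ms >= cf_delay_ms:
        ca += CA_CF_UM * math.exp(-(t_ms - cf_delay_ms) / CA_TAU_MS)
    return ca


def threshold(ip3_um):
    """Ca2+ threshold of the regenerative cycle; it falls as IP3 rises."""
    return THETA0_UM / (1.0 + ip3_um / K_IP3_UM)


def regenerative_release(pf, cf_delay_ms):
    """True if Ca2+ reaches the IP3-dependent threshold at any 1 ms step.

    pf: whether a PF input arrives at t = 0.
    cf_delay_ms: CF arrival time relative to the PF input, or None for no CF.
    """
    for t in range(-300, 801):
        if calcium(t, pf, cf_delay_ms) >= threshold(ip3(t, pf)):
            return True
    return False
```

With these assumed values, regenerative release occurs when the climbing fibre input follows the parallel fibre input by 100 ms, but not for simultaneous inputs, a 300 ms delay, a climbing fibre input arriving 50 ms first, or either input alone. This mirrors, qualitatively, the associative and timing-dependent behaviour described above.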

In the model proposed by Kuroda et al. (2001), the large Ca2+ increase caused by conjunctive parallel fibre input and delayed climbing fibre input activates a linear cascade in which protein kinase C (PKC) is activated and AMPA-Rs are then phosphorylated and internalized; this cascade explains the early phase of LTD, lasting up to approximately 10 minutes. The intermediate phase of LTD, lasting up to several tens of minutes, is mediated by a mitogen-activated protein (MAP) kinase-dependent positive feedback loop consisting of PKC, Raf, MAP/extracellular signal-regulated kinase (MEK), MAP kinase, cytosolic phospholipase A2 (PLA2), arachidonic acid (AA) and back to PKC (the magenta loop in the middle of figure 5). This positive feedback loop has two stable equilibrium points separated by a saddle point that sets the threshold for LTD. At the low equilibrium point, the MAP kinase-positive feedback loop is inactive; if a strong or long-lasting Ca2+ elevation occurs, the state jumps over the saddle point to the high equilibrium, and AMPA-Rs continue to be phosphorylated and internalized for as long as the loop remains active. Consequently, this MAP kinase-positive feedback model (middle third of figure 5) explains the long-term nature of LTD.
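The threshold behaviour of a positive feedback loop with two stable states can be illustrated with a one-variable caricature. The sketch below lumps the PKC–MAPK–PLA2 loop into a single hypothetical activity variable with Hill-type self-activation and first-order decay, and drives it with a rectangular input standing in for Ca2+ elevation. All parameter values are assumptions chosen only to produce bistability (a low state near 0.05, a threshold near 0.95 and a high state near 1.9); they are not the kinetic constants of Kuroda et al. (2001).

```python
# Hypothetical dimensionless parameters chosen only to make the loop bistable.
BASAL = 0.05   # basal production of the lumped loop variable
GAIN = 2.0     # maximal rate of feedback-driven activation
K_HALF = 1.0   # half-activation level of the feedback
HILL_N = 4     # steepness of the feedback
DECAY = 1.0    # first-order inactivation rate


def loop_rate(x, ca_drive):
    """dx/dt for one lumped loop variable x (e.g. active MAP kinase)."""
    feedback = GAIN * x**HILL_N / (K_HALF**HILL_N + x**HILL_N)
    return BASAL + feedback - DECAY * x + ca_drive


def simulate(amplitude, duration, t_end=60.0, dt=0.01):
    """Forward-Euler integration with a rectangular Ca2+-like input.

    The input has height `amplitude` for 0 <= t < `duration`, then stops.
    Returns the loop activity at t_end, long after the input has ended.
    """
    x = BASAL  # start close to the low stable state
    for step in range(int(round(t_end / dt))):
        t = step * dt
        drive = amplitude if t < duration else 0.0
        x += dt * loop_rate(x, drive)
    return x
```

For example, `simulate(0.5, 1.0)` returns to the low state, whereas `simulate(0.5, 20.0)` and `simulate(2.0, 3.0)` settle on the high state and stay there after the input ends. In this caricature, as in the text, either a strong or a long-lasting input is needed to cross the threshold, and the high state then persists without further input.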

In the NO model (left-hand third of figure 5), which was first proposed by Kuroda et al. (2001) and then studied extensively in combination with the electrical properties of dendrites by Ogasawara et al. (2007), NO activates soluble guanylyl cyclase (sGC), which catalyses the conversion of GTP into cGMP; cGMP in turn activates cGMP-dependent protein kinase (PKG). PKG phosphorylates its substrate, G-substrate, and phosphorylated G-substrate preferentially inhibits protein phosphatase 2A (PP2A). Active PP2A dephosphorylates MEK and AMPA-Rs, and in the model, inhibition of MEK dephosphorylation was required for activation of the MAP kinase-positive feedback loop. Therefore, by ultimately inhibiting PP2A and thereby enabling the positive feedback loop, NO plays a permissive role in the induction of LTD.

The Ca2+ dynamics model coherently reproduced the associative and non-associative natures of LTD, as well as the spike-timing dependency of associative LTD, as follows. Within the physiological range of input strengths, conjunctive stimulation of parallel fibre input and delayed climbing fibre input is essential to induce a large regenerative Ca2+ increase, which in turn is necessary for crossing the threshold of the MAP kinase-positive feedback loop and inducing LTD. However, if stimulation of parallel fibre bundles is strong enough, or if enough Ca2+ or IP3 is uncaged, these stimuli alone can raise the Ca2+ concentration above the threshold of the Ca2+ dynamics, producing Ca2+-induced Ca2+ release from the ER large enough to reproduce non-associative LTD. In hippocampal and cerebral cortical pyramidal neurons, by contrast, backpropagating somatic action potentials remove the magnesium block of NMDA receptors; when this coincides with glutamate release triggered by a presynaptic spike, NMDA receptors are fully activated and produce supralinear Ca2+ influx, inducing LTP. There, glutamate release must precede backpropagation within a window on the order of 10 ms for NMDA receptor-dependent STDP. Backpropagation of somatic action potentials requires firing of the postsynaptic cell, which is the essential condition of Hebbian learning. Purkinje cells have neither backpropagating action potentials nor functional NMDA receptors, suggesting that the cellular mechanisms of Purkinje cell supervised learning, with STDP on a 100 ms time scale, are completely different from those of cerebral Hebbian learning, with STDP on a time scale of tens of milliseconds. These systems biology models explain diverse LTD experiments and clearly demonstrate that LTD is a supervised learning rule, not an anti-Hebbian rule as it has been characterized elsewhere (Dayan & Abbott 1999).

The excitable dynamics of the Ca2+ model and the bistable dynamics of the MAP kinase-positive feedback loop model may provide a molecular mechanism for resolving the ‘plasticity–stability’ dilemma: keeping memory stable while remaining sensitive to subtle environmental changes. Each spine contains a surprisingly small number of molecules (e.g. only 40 AMPA-Rs). Through a cascade of excitable and bistable dynamics, starting with the Ca2+ dynamics and followed by the MAP kinase-positive feedback loop, internalization of AMPA receptors, possible changes in cytoskeletal and membrane proteins and, finally, a morphological change in the spine, LTD could be induced by very subtle, low-energy inputs and yet maintain acquired memory over days, even under the stochasticity caused by the small numbers of molecules.

The synapse specificity of LTD is also explained well by combining the NO model with an electrical cable model of the Purkinje cell dendrite and spines, the Ca2+ dynamics model and the MAP kinase-positive feedback loop model (Ogasawara et al. 2007). When no nearby parallel fibre is activated and NO is low, not even conjunctive parallel and climbing fibre inputs can induce LTD. If too many surrounding parallel fibres are stimulated and NO is very high, even an unstimulated synapse exhibits LTD in response to climbing fibre stimulation alone; LTD then spreads and its synapse specificity is lost. Synapse-specific LTD occurs only when a modest number of parallel fibres are activated and NO lies within an intermediate range; the contextual information for storing different motor skills might be conveyed by this gating mechanism. The model therefore strongly predicts sparse parallel fibre coding in vivo, which has recently gained much experimental support (e.g. Chadderton et al. 2004).
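The NO-gated rule just described can be written as a toy classifier. The sketch below is only a rule-level caricature, not the cable and kinetic model of Ogasawara et al. (2007): it assumes that NO at a synapse is the sum of contributions from active parallel fibres decaying exponentially with distance (hypothetical length constant of 10 μm, arbitrary NO units), and it uses two hypothetical NO thresholds (1.5 and 5) to separate the low, intermediate and high regimes.

```python
import math


def no_at(target_um, active_pf_um, length_um=10.0):
    """Toy NO level at a synapse: the sum of exponentially decaying
    contributions from active parallel fibres (hypothetical length constant,
    arbitrary units)."""
    return sum(math.exp(-abs(p - target_um) / length_um) for p in active_pf_um)


def ltd_induced(no, pf_active, cf_active, no_low=1.5, no_high=5.0):
    """Rule-level caricature of NO gating; both thresholds are hypothetical.

    - NO below no_low: no LTD, even with conjunctive PF + CF input.
    - NO at or above no_high: LTD with CF input alone (specificity lost).
    - Otherwise: LTD only at synapses receiving conjunctive PF + CF input.
    """
    if not cf_active:
        return False
    if no < no_low:
        return False
    if no >= no_high:
        return True
    return pf_active
```

Under these assumptions, a single active fibre at the target (`no_at(0.0, [0.0])`) produces too little NO for LTD; three nearby fibres at -5, 0 and 5 μm yield LTD at the stimulated synapse but not at an unstimulated one 20 μm away; and a dense bundle of 21 fibres spaced 1 μm apart drives NO above the upper threshold even at an unstimulated synapse 12 μm away, so LTD spreads.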

Taken together, the LTD kinetic simulation models provide a comprehensive account of several seemingly diverse, conflicting and unrelated experimental findings within the framework of complex nonlinear dynamics regulated by Ca2+, IP3 and NO concentrations. Note that, owing to the nonlinear summation of these signalling molecules, non-physiological stimuli in vitro, such as parallel fibre bundle stimulation and uncaging of Ca2+ or IP3 (i.e. direct and instantaneous release of a molecule of interest by photolysis of its caged compound), might induce LTD whose associative nature, spike-timing dependency and synapse specificity differ qualitatively from those of LTD in vivo.

The systems biology models of LTD have recently gained strong experimental support. First, IP3 was found to increase slowly, on a 100 ms time scale, in Purkinje cell dendrites after parallel fibre stimulation (Okubo et al. 2004), confirming the key prediction of our model. Note, however, that technical difficulties prevented this measurement from being made in a single spine; future experiments with higher spatial resolution are expected to test this prediction more precisely. Second, very recently, George Augustine and his colleagues examined Ca2+ dose–response curves of Purkinje cell LTD in slices by systematically varying the magnitude and duration of Ca2+ elevation, which they controlled by uncaging while simultaneously measuring Ca2+ (Tanaka et al. 2007). They demonstrated that LTD is a highly cooperative phenomenon with large Hill coefficients, and they suggested that the MAPK-positive feedback loop acts as a leaky integrator of the incoming Ca2+ time course and decides, in an all-or-nothing manner, whether to trigger the entire LTD process by comparing the leaky-integrated Ca2+ with the threshold of its bistable dynamics. Because the postsynaptic currents were summed over roughly 30 spines rather than measured in a single spine, this suggestion is not yet conclusive. Nonetheless, it points to a scenario that may nearly resolve one enigma regarding the stability of memory: bistability of the nonlinear dynamics within the MAPK-positive feedback loop guarantees memory maintenance in the first place, and cerebellar memory is discrete at the single-spine level.
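The leaky-integrator interpretation can be made concrete for a rectangular Ca2+ step of amplitude A and duration D. If the integrator obeys dI/dt = -I/τ + Ca(t) with I(0) = 0, its peak is Aτ(1 - exp(-D/τ)), and LTD is triggered when this peak reaches a threshold θ. The sketch below assumes τ = 1 s and θ = 1 μM·s purely for illustration. It also includes the standard Hill-function identity that the input ratio needed to move a response from 10% to 90% of maximum is 81^(1/n), which shows why large Hill coefficients make the response switch-like. None of these numbers is taken from Tanaka et al. (2007).

```python
import math


def leaky_peak(ca_amp_um, duration_s, tau_s=1.0):
    """Peak of I(t), where dI/dt = -I / tau + Ca(t) and I(0) = 0, for a
    rectangular Ca2+ step of height ca_amp_um lasting duration_s.
    The peak occurs at the end of the step: A * tau * (1 - exp(-D / tau))."""
    return ca_amp_um * tau_s * (1.0 - math.exp(-duration_s / tau_s))


def ltd_triggered(ca_amp_um, duration_s, theta_um_s=1.0, tau_s=1.0):
    """All-or-none decision: compare the leaky-integrated Ca2+ with a
    hypothetical threshold theta (in uM*s)."""
    return leaky_peak(ca_amp_um, duration_s, tau_s) >= theta_um_s


def hill(x, k, n):
    """Hill function x^n / (k^n + x^n) with Hill coefficient n."""
    return x**n / (k**n + x**n)


def fold_change_10_to_90(n):
    """Input ratio that moves a Hill response from 10% to 90% of maximum.

    Solving hill(x, k, n) = 0.1 and 0.9 gives x = k * 9**(-1/n) and
    x = k * 9**(1/n), so the ratio is 81**(1/n)."""
    return 81.0 ** (1.0 / n)
```

With these assumed values, a 2 μM step for 1 s or a 5 μM step for 0.5 s triggers LTD, whereas a 5 μM step for only 0.1 s does not, and a 0.5 μM step never does however long it lasts, because the integral saturates at Aτ = 0.5 μM·s. For n = 1 the 10% to 90% range spans an 81-fold change in input; for n = 5 it spans only about 2.4-fold.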

5. Towards manipulative neuroscience

Fewer than ten years after the cerebellar LTD MAPK-positive feedback loop model was first published (Kuroda et al. 2001), experiments firmly supported its essential proposition that the bistability of nonlinear dynamics is the elementary process of memory (Tanaka et al. 2007; see §4). This is in sharp contrast to the cerebellar internal model theory, for which two experimental papers with completely opposing conclusions were published (Pasalar et al. 2006; Yamamoto et al. 2007), although 25 years have passed since its first proposal in a preliminary form (Tsukahara & Kawato 1982; see §3). One possible reason for this marked difference is that LTD within a single spine is a much smaller-scale and simpler phenomenon than the learning of internal models for sensory-motor coordination and cognitive function, which involves not only the cerebellum but also all of its loops with the cerebral cortex and other brain regions. Alternatively, some might argue that the former theory is correct and the latter is wrong. I do not agree with this view; rather, I believe that the biggest difference between the two lies in the availability of experimental methods that permit manipulation of essential information within a given system. In the LTD example, within the molecular–cellular domain, the time course of Ca2+ concentration, the most important intracellular signal, can be directly manipulated by uncaging and directly measured by bioimaging techniques. By contrast, systems neuroscience has no experimental technique for directly manipulating or controlling information at the system level; in the case of cerebellar internal model theory, such information includes the error signal in motor-command coordinates carried by climbing fibres and the feed-forward motor commands represented by simple spikes. Anatomical ablation or electrocoagulation can destroy neural substrates but cannot manipulate information.
Electrical stimulation may excite or suppress neurons indiscriminately but cannot manipulate information such as the error signal or motor commands. Furthermore, in most cases at the system level, we do not even know how neural firing and its coherence represent information. Consequently, computational theories are essential for making initial assumptions about what is represented in the brain and how it is represented by neurons.

Several decades ago, systems neuroscientists could get excited merely by finding a temporal correlation between neural firing rates or an fMRI signal and some experimental parameter (e.g. attributes of sensory stimuli or movement parameters) or some hypothetical cognitive aspect of the task performed by subjects. However, as explained in §1, the mere accumulation of such correlational data does not lead to an understanding of the neural mechanism of any brain function; in other words, it does not lead to a rigorous science that explains cause-and-effect relationships. I recently heard scientists in other disciplines criticize systems neuroscientists for not knowing what must be revealed before they can claim to have ultimately understood the brain. In answer to this criticism, I define the ultimate goal of systems neuroscience as follows: we would be able to simultaneously measure the activities of all neurons in the brain and freely change these activities experimentally. We could thereby control arbitrary information in the brain on the basis of computational theories that explain how information is represented by neural activity, and these theories could predict the resulting changes in the organism's sensations, movements, emotions and thoughts. Systems neuroscience is currently far from this ultimate goal in every respect: measurement, control, manipulation of information and theory. However, much progress has been made in measuring neuronal and brain activity, for example optical imaging of Ca2+ or voltage and many non-invasive techniques for measuring brain activity. For controlling neuronal activity, promising techniques under development include the juxtacellular clamp, the voltage clamp for simulating synaptic currents (dynamic clamp) and genetic methods using channelrhodopsin-2 and its photostimulation (Boyden et al. 2005).
For simpler organisms such as Caenorhabditis elegans, simultaneous measurement and control of all neural activity might be achieved within 10 years. In mammals in vivo, however, this will remain very difficult for at least several decades, and in humans it seems almost impossible unless new physical principles of observation and control are invented.

Much progress has been made in close interactions between computational theories and neurophysiological and neuroimaging experiments. Many experiments are now designed to prove or disprove the predictions of computational models, or are at least motivated by them. In the new experimental paradigms named ‘computational model-based neurophysiology’ and ‘computational model-based neuroimaging’, theories and experimental designs interact even more intimately. Here, animal or human subjects are given sensory stimuli and a series of reward/penalty signals and are asked to execute tasks and make movements. Computational models, such as the cerebellar internal model theory or the basal ganglia reinforcement learning theory, are used to simulate the subjects' behaviour quantitatively while being given exactly the same sensory stimuli and series of reward/penalty signals as the subjects. First, the computational models are expected to reproduce the subjects' behaviour reasonably well. Then, each computational model contains hypothetical computational variables, such as the error signal or the feed-forward motor command in the cerebellar internal model theory, or the reward prediction error or value functions in the basal ganglia reinforcement learning theory. Each of these hypothetical variables can be used as an explanatory variable in a correlation analysis with an fMRI signal or neuronal firing frequency. Using this correlation analysis, researchers can make statements such as ‘climbing fibre inputs may represent error signals in motor-command coordinates’ (Kobayashi et al. 1998) or ‘putamen neurons may encode action and state-dependent reward prediction…’ (Samejima et al. 2005; Haruno & Kawato 2006a) ‘while the caudate nucleus may encode reward prediction error’ (Haruno & Kawato 2006b).
Our group, as well as others, has recently published a number of studies using this experimental paradigm (Imamizu et al. 2000, 2003; Haruno et al. 2004; Tanaka et al. 2004; Samejima et al. 2005; Haruno & Kawato 2006a,b). Computational model-based neuroscience is a major advance over the classical approach of simply correlating neural or brain activity with stimulus attributes, movement parameters or some conceptual aspect of the task. If only the first two had been used as explanatory variables, cognitive functions remote from the sensory and motor interfaces of the brain could never have been studied directly. An ad hoc conceptual aspect of the task can be informative in giving clues to brain mechanisms, but this falls far short of quantitative understanding or of revealing cause-and-effect relationships. The computational model-based approach is attractive for three reasons. First, it is a quantitative theory that makes testable predictions, so it can be disproved. Second, it tries to reproduce behaviour as well as neural or brain activity. Third, computational models try to explain the whole sequence of information processing needed for a task, whereas ad hoc cognitive hypotheses tend to explain just a fragment of that sequence. However, even after advocating this approach for 10 years, we remain frustrated by the difficulties with computational models and by the approach's weakness in demonstrating cause-and-effect relationships.

The first difficulty lies in the computational models themselves. We have not yet developed a computational model powerful enough to explain a large variety of behaviours and whole-brain activity simultaneously. Thus, in many cases, a single model can reproduce only a small part of the behavioural data; many models may then reproduce the data equally well, making it difficult to select the best one. The second difficulty is the probably complicated relationship between information and its carriers, such as neural firing rates and coherence or fMRI signals, owing to possible population, sparse or temporal coding. The third and most critical difficulty is that what we can demonstrate in computational model-based neuroscience is still just a temporal correlation between hypothetical computational variables and neural/brain activity. We can never prove cause-and-effect relationships, no matter how high the correlation coefficient, how much of the behavioural data the model explains or how deeply the theory reaches into the core of cognition.

Consequently, of the four key factors necessary for ultimate systems neuroscience, namely (i) measurement and (ii) control of neural/brain activity, (iii) manipulation of information and (iv) computational theories, we have made significant progress, or are about to make major improvements, on all except the manipulation of information. Manipulation of information is theoretically and technically the most difficult: first, because we do not know how information is represented; second, because the relationships between neural/brain activity and the represented information are complicated; and third, because it requires simultaneous control of the activity of many neurons in many areas, which is extremely difficult. However, without the manipulation of information, we can never prove cause-and-effect relationships at the system level.

Based on these reflections on the computational model-based approach, we have started to advocate and promote a new approach named ‘manipulative neuroscience’, in which we aim to manipulate information even in human subjects. Technically, this approach is based on computational theories, a brain–network interface and non-invasive decoding algorithms. Sato et al. (2004) at ATR Computational Neuroscience Laboratories (ATR-CNS) have been developing a ‘brain–network interface’ based on a hierarchical variational Bayesian technique that combines information from fMRI and magnetoencephalography (MEG). They succeeded in estimating brain activity with a spatial resolution of a few millimetres and a temporal resolution of milliseconds in various domains, such as visual perception, visual feature attention and voluntary finger movements. In collaboration with the Shimadzu Corporation, we aim to develop within 10 years a portable, wireless Bayesian estimator based on combined EEG/NIRS (electroencephalography/near-infrared spectroscopy) with millimetre and millisecond accuracy. ‘Brain–network interface’ is a term we coined for this project; it resembles a brain–machine interface or a brain–computer interface. A brain–network interface non-invasively estimates brain activity by solving the inverse problem, and it also estimates neural activity and reconstructs the represented information. Accordingly, it is not a brain–machine interface, because it is non-invasive, nor a brain–computer interface, because it decodes information and therefore does not require extensive user training. Researchers at ATR-CNS have already succeeded, for example, in estimating the velocity of wrist movements from single-trial data without subject training.
Furthermore, the Honda Research Institute of Japan, in collaboration with ATR-CNS, demonstrated real-time control of a robot hand by decoding three motor primitives (rock, paper and scissors, as in the children's game) from fMRI data of a subject's primary motor cortex activity (Press Release 2006; http://www.atr.jp/html/topics/press_060526_e.html). This was based on machine learning algorithms previously developed by Kamitani & Tong (2005, 2006) for decoding the attributes of visual stimuli from fMRI data.
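The decoding step, mapping a multivoxel activity pattern to one of a few discrete states, can be sketched with a very simple classifier. This is not the algorithm of Kamitani & Tong or of the Honda/ATR-CNS demonstration; it substitutes a nearest-centroid classifier (Euclidean distance to the mean training pattern of each class) and runs on purely synthetic voxel patterns, whose size and noise level are assumptions.

```python
import math
import random

LABELS = ("rock", "paper", "scissors")


def centroid(patterns):
    """Voxel-wise mean of a list of equal-length activity patterns."""
    n = len(patterns)
    return [sum(values) / n for values in zip(*patterns)]


def train(samples):
    """samples: iterable of (label, pattern); returns a label -> centroid map."""
    grouped = {}
    for label, pattern in samples:
        grouped.setdefault(label, []).append(pattern)
    return {label: centroid(patterns) for label, patterns in grouped.items()}


def decode(model, pattern):
    """Return the label whose centroid is closest (Euclidean) to the pattern."""
    return min(model, key=lambda label: math.dist(model[label], pattern))


def synthetic_accuracy(n_voxels=50, n_train=20, n_test=20, noise=0.5, seed=1):
    """Train and test on synthetic patterns only (no real fMRI data):
    each label has a random prototype pattern, and every trial is that
    prototype plus independent Gaussian noise."""
    rng = random.Random(seed)
    prototypes = {
        lab: [rng.gauss(0.0, 1.0) for _ in range(n_voxels)] for lab in LABELS
    }

    def sample(label):
        return [v + rng.gauss(0.0, noise) for v in prototypes[label]]

    model = train((lab, sample(lab)) for lab in LABELS for _ in range(n_train))
    trials = [(lab, sample(lab)) for lab in LABELS for _ in range(n_test)]
    correct = sum(decode(model, pattern) == lab for lab, pattern in trials)
    return correct / len(trials)
```

In a real-time setting, `decode` would be applied to each incoming pattern and its label sent to the robot hand; the nearest-centroid rule is chosen here only because it is short and transparent, not because it matches the published decoders.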

Manipulative neuroscience can be achieved by combining computational theories, brain–network interfaces and non-invasive decoding algorithms as follows. Human subjects execute a task. We assume that we have already developed a computational theory postulating that specified neural networks solve the necessary computational problems and that some information is represented in a specified way by brain activity within a certain area. During task execution, this information is reconstructed in real time by a brain–network interface and decoding algorithm, and it is then fed back to the subject through any sensory modality, or even by transcranial magnetic or electrical stimulation. Then, guided by a prediction of the computational model, some manipulation is applied to the extracted information, and the predicted changes in brain activity and behaviour are examined experimentally. Although we have discussed a human example with non-invasive measurements, the same idea can be applied to animals with neural recordings. In that case, information is decoded from multiple neural activities, manipulated and then fed back to the animal by any available method.

With manipulative neuroscience defined in this way, it becomes apparent that almost all systems neuroscience experiments try to change, or at least influence, information representations in the brain by giving sensory stimuli, assigning rewards/penalties, imposing tasks, requiring movements and so on, and then examine changes in behaviour and cognition as well as in neural/brain activity. Computational model-based neuroscience further employs a computational model to reproduce behaviour and correlates its hypothetical variables with neural/brain activity that should somehow represent the relevant information. The new approach also uses a computational model, but for prediction rather than correlation, and it manipulates information directly rather than attempting to influence it indirectly. If subjects' behaviour and neural/brain activity change as predicted by a computational model when the extracted information is manipulated under the guidance of the computational theory, we are in a much better position to state that the model is experimentally supported and that the hypothetical information is causally related to the altered behaviour and neural/brain activity. This is because, in the new paradigm, the information and the neural/brain activity that carries it are manipulated directly, and the effects of this manipulation are compared with model predictions. This level of understanding has been very rare in traditional systems neuroscience and in computational model-based neuroscience. We are well aware of the difficulties and risks of the new approach, but at the same time we believe that it is probably the only persuasive way to fully understand brain functions.

Footnotes

One contribution of 17 to a Theme Issue ‘Japan: its tradition and hot topics in biological sciences’.

References

- Albus J.S. A theory of cerebellar function. Math. Biosci. 1971;10:25–61. doi:10.1016/0025-5564(71)90051-4

- Amari S. Crisis of Japanese brain science. Kagaku. 2006;76:433–437.

- Atkeson C.G, et al. Using humanoid robots to study human behavior. IEEE Intell. Syst. Spec. Issue Hum. Robot. 2000;15:46–56. doi:10.1109/5254.867912

- Bentivegna D.C, Atkeson C.G, Cheng G. Learning tasks from observation and practice. Robot. Auton. Syst. 2004a;47:163–169. doi:10.1016/j.robot.2004.03.010

- Bentivegna D.C, Atkeson C.G, Ude A, Cheng G. Learning to act from observation and practice. Int. J. Hum. Robot. 2004b;1:585–611. doi:10.1142/S0219843604000307

- Boyden E.S, Zhang F, Bamberg E, Nagel G, Deisseroth K. Millisecond-timescale, genetically targeted optical control of neural activity. Nat. Neurosci. 2005;8:1263–1268. doi:10.1038/nn1525

- Chadderton P, Margrie T.W, Hausser M. Integration of quanta in cerebellar granule cells during sensory processing. Nature. 2004;428:856–860. doi:10.1038/nature02442

- Dayan P, Abbott L.F. Theoretical neuroscience. Cambridge, MA: MIT Press; 1999.

- Dean P, Porrill J, Stone J.V. Decorrelation control by the cerebellum achieves oculomotor plant compensation in simulated vestibulo-ocular reflex. Proc. R. Soc. B. 2002;269:1895–1904. doi:10.1098/rspb.2002.2103

- De Schutter E. Cerebellar long-term depression might normalize excitation of Purkinje cells: a hypothesis. Trends Neurosci. 1995;18:291–295. doi: 10.1016/0166-2236(95)93916-l. doi:10.1016/0166-2236(95)93916-L [DOI] [PubMed] [Google Scholar]

- Doi T, Kuroda S, Michikawa T, Kawato M. Inositol 1,4,5- trisphosphate-dependent Ca2+ threshold dynamics detect spike timing in cerebellar Purkinje cells. J. Neurosci. 2005;25:950–961. doi: 10.1523/JNEUROSCI.2727-04.2005. doi:10.1523/JNEUROSCI.2727-04.2005 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Endo, G., Morimoto, J., Matsubara, T., Nakanishi, J. & Cheng, G. 2005 Learning CPG sensory feedback with policy gradient for biped locomotion for a full body humanoid. In The Twentieth National Conf. on Artificial Intelligence (AAAI-05 Proceedings), Pittsburgh, USA, July 9–13, 2005, 1237–1273.

- Gomi H, Shidara M, Takemura A, Inoue Y, Kawano K, Kawato M. Temporal firing patterns of Purkinje cells in the cerebellar ventral paraflocculus during ocular following responses in monkeys. I. Simple spikes. J. Neurophysiol. 1998;80:818–831. doi: 10.1152/jn.1998.80.2.818. [DOI] [PubMed] [Google Scholar]

- Hale J.G, Pollick F.E. ‘Sticky hands’: learning and generalisation for cooperative physical interactions with a humanoid robot. IEEE Trans. Syst. Man Cybern. 2005;35:512–521. doi:10.1109/TSMCC.2004.840063 [Google Scholar]

- Haruno M, Kawato M. Different neural correlates of reward expectation and reward expectation error in the putamen and caudate nucleus during stimulus–action–reward association learning. J. Neurophysiol. 2006a;95:948–959. doi: 10.1152/jn.00382.2005. doi:10.1152/jn.00382.2005 [DOI] [PubMed] [Google Scholar]

- Haruno M, Kawato M. Heterarchical reinforcement-learning model for integration of multiple cortico-striatal loops; fMRI examination in stimulus–action–reward association learning. Neural Netw. 2006b;19:1242–1254. doi: 10.1016/j.neunet.2006.06.007. doi:10.1016/j.neunet.2006.06.007 [DOI] [PubMed] [Google Scholar]

- Haruno M, Kuroda T, Doya K, Toyama K, Kimura M, Samejima K, Imamizu H, Kawato M. A neural correlate of reward-based behavioral learning in caudate nucleus: a functional magnetic resonance imaging study of a stochastic decision task. J. Neurosci. 2004;24:1660–1665. doi: 10.1523/JNEUROSCI.3417-03.2004. doi:10.1523/JNEUROSCI.3417-03.2004 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ijspeert, A. J., Nakanishi, J. & Schaal, S. 2002 Movement imitation with nonlinear dynamical systems in humanoid robots. In Proc. IEEE Int. Conf. on Robotics and Automation (ICRA2002), May 11–15, Washington, USA 2002, pp. 1398–1403. (doi:10.1109/ROBOT.2002.1014739)

- Imamizu H, Miyauchi S, Tamada T, Sasaki Y, Takino R, Puetz B, Yoshioka T, Kawato M. Human cerebellar activity reflecting an acquired internal model of a new tool. Nature. 2000;403:192–195. doi: 10.1038/35003194. doi:10.1038/35003194 [DOI] [PubMed] [Google Scholar]

- Imamizu H, Kuroda T, Miyauchi S, Yoshioka T, Kawato M. Modular organization of internal models of tools in the human cerebellum. Proc. Natl Acad. Sci. USA. 2003;100:5461–5466. doi: 10.1073/pnas.0835746100. doi:10.1073/pnas.0835746100 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ito M. Neurophysiological basis of the cerebellar motor control system. Int. J. Neurol. 1970;7:162–176. [PubMed] [Google Scholar]

- Jordan M.I, Rumelhart D.E. Forward models: supervised learning with a distal teacher. Cogn. Sci. 1992;16:307–354. doi:10.1016/0364-0213(92)90036-T [Google Scholar]

- Kamitani Y, Tong F. Decoding the visual and subjective contents of the human brain. Nat. Neurosci. 2005;8:679–685. doi: 10.1038/nn1444. doi:10.1038/nn1444 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kamitani Y, Tong F. Decoding seen and attended motion directions from activity in the human visual cortex. Curr. Biol. 2006;16:1096–1102. doi: 10.1016/j.cub.2006.04.003. doi:10.1016/j.cub.2006.04.003 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kawano K. Ocular tracking: behavior and neurophysiology. Curr. Opin. Neurobiol. 1999;9:467–473. doi: 10.1016/S0959-4388(99)80070-1. doi:10.1016/S0959-4388(99)80070-1 [DOI] [PubMed] [Google Scholar]

- Kawato M. Feedback–error–learning neural network for supervised motor learning. In: Eckmiller R, editor. Advanced neural computers. Elsevier; North-Holland, UK: 1990. pp. 365–372. [Google Scholar]

- Kawato M. Internal models for motor control and trajectory planning. Curr. Opin. Neurobiol. 1999;9:718–727. doi: 10.1016/s0959-4388(99)00028-8. doi:10.1016/S0959-4388(99)00028-8 [DOI] [PubMed] [Google Scholar]

- Kawato M, Gomi H. A computational model of four regions of the cerebellum based on feedback–error–learning. Biol. Cybern. 1992;68:95–103. doi: 10.1007/BF00201431. doi:10.1007/BF00201431 [DOI] [PubMed] [Google Scholar]

- Kawato M, Furukawa K, Suzuki R. A hierarchical neural-network model for control and learning of voluntary movement. Biol. Cybern. 1987;57:169–185. doi: 10.1007/BF00364149. doi:10.1007/BF00364149 [DOI] [PubMed] [Google Scholar]

- Kawato M, Kuroda T, Imamizu H, Nakano E, Miyauchi S, Yoshioka T. Internal forward models in the cerebellum: fMRI study on grip force and load force coupling. Prog. Brain Res. 2003;142:171–188. doi: 10.1016/S0079-6123(03)42013-X. [DOI] [PubMed] [Google Scholar]

- Kobayashi Y, Kawano K, Takemura A, Inoue Y, Kitama T, Gomi H, Kawato M. Temporal firing patterns of Purkinje cells in the cerebellar ventral paraflocculus during ocular following responses in Monkeys II. Complex spikes. J. Neurophysiol. 1998;80:832–848. doi: 10.1152/jn.1998.80.2.832. [DOI] [PubMed] [Google Scholar]

- Kuroda S, Schweighofer N, Kawato M. Exploration of signal transduction pathways in cerebellar long-term depression by kinetic simulation. J. Neurosci. 2001;21:5693–5702. doi: 10.1523/JNEUROSCI.21-15-05693.2001. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Llinas R, Lang E.J, Welsh J.P. The cerebellum, LTD, and memory: alternative views. Learn. Memory. 1997;3:445–455. doi: 10.1101/lm.3.6.445. doi:10.1101/lm.3.6.445 [DOI] [PubMed] [Google Scholar]

- Marr D. A theory of cerebellar cortex. J. Physiol. (Lond.) 1969;202:437–470. doi: 10.1113/jphysiol.1969.sp008820. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Matsubara T, Morimoto J, Nakanishi J, Sato M, Doya K. Learning CPG-based biped locomotion with a policy gradient method. Robot. Auton. Syst. 2006;54:911–920. doi:10.1016/j.robot.2006.05.012

- Miyamoto H, Kawato M, Setoyama T, Suzuki R. Feedback–error–learning neural network for trajectory control of a robotic manipulator. Neural Netw. 1988;1:251–265. doi:10.1016/0893-6080(88)90030-5

- Miyamoto H, Schaal S, Gandolfo F, Gomi H, Koike Y, Osu R, Nakano E, Wada Y, Kawato M. A Kendama learning robot based on dynamic optimization theory. Neural Netw. 1996;9:1281–1302. doi:10.1016/S0893-6080(96)00043-3

- Morimoto J, Endo G, Nakanishi J, Hyon S, Cheng G, Bentivegna D.C, Atkeson C.G. Modulation of simple sinusoidal patterns by a coupled oscillator model for biped walking. In: IEEE Int. Conf. on Robotics and Automation (ICRA2006) Proc. Orlando, USA: 15–19 May 2006. pp. 1579–1584.

- Nakanishi J, Schaal S. Feedback error learning and nonlinear learning adaptive control. Neural Netw. 2004;17:1453–1465. doi:10.1016/j.neunet.2004.05.003

- Nakanishi J, Morimoto J, Endo G, Cheng G, Schaal S, Kawato M. Learning from demonstration and adaptation of biped locomotion. Robot. Auton. Syst. 2004;47:79–91. doi:10.1016/j.robot.2004.03.003

- Normile D. Japanese neuroscience: new institute seen as brains behind big boost in spending. Science. 1997;275:1562–1563. doi:10.1126/science.275.5306.1562

- Ogasawara H, Doi T, Doya K, Kawato M. Nitric oxide regulates input specificity of long-term depression and context dependence of cerebellar learning. PLoS Comput. Biol. 2007;3:e179. doi:10.1371/journal.pcbi.0020179

- Okubo Y, Kakizawa S, Hirose K, Iino M. Cross talk between metabotropic and ionotropic glutamate receptor-mediated signaling in parallel fiber-induced inositol 1,4,5-trisphosphate production in cerebellar Purkinje cells. J. Neurosci. 2004;24:9513–9520. doi:10.1523/JNEUROSCI.1829-04.2004

- Pasalar S, Roitman A.V, Durfee W.K, Ebner T.J. Force field effects on cerebellar Purkinje cell discharge with implications for internal models. Nat. Neurosci. 2006;9:1404–1411. doi:10.1038/nn1783

- Pollard N.S, Hodgins J.K, Riley M.J, Atkeson C.G. Adapting human motion for the control of a humanoid robot. In: Proc. IEEE Int. Conf. on Robotics and Automation (ICRA2002). Washington, USA: 11–15 May 2002. pp. 1390–1397. doi:10.1109/ROBOT.2002.1014737

- Riley M, Ude A, Atkeson C.G. Methods for motion generation and interaction with a humanoid robot: case studies of dancing and catching. In: Proc. Workshop on Interactive Robotics and Entertainment (WIRE-2000). Pittsburgh, USA: 30 April–1 May 2000. pp. 35–42.

- Samejima K, Ueda Y, Doya K, Kimura M. Representation of action-specific reward values in the striatum. Science. 2005;310:1337–1340. doi:10.1126/science.1115270

- Sato M, Yoshioka T, Kajiwara S, Toyama K, Goda N, Doya K, Kawato M. Hierarchical Bayesian estimation for MEG inverse problem. NeuroImage. 2004;23:806–826. doi:10.1016/j.neuroimage.2004.06.037

- Schaal S. Is imitation learning the route to humanoid robots? Trends Cogn. Sci. 1999;3:233–242. doi:10.1016/S1364-6613(99)01327-3

- Schaal S, Atkeson C.G. Constructive incremental learning from only local information. Neural Comput. 1998;10:2047–2084. doi:10.1162/089976698300016963

- Schaal S, Peters J, Nakanishi J, Ijspeert A. Control, planning, learning and imitation with dynamic movement primitives. In: IEEE/RSJ Int. Conf. on Intelligent Robots and Systems (IROS2003) Workshop on Bilateral Paradigms of Human and Humanoid. Las Vegas, USA: 27–31 October 2003. pp. 39–58.

- Shibata T, Schaal S. Biomimetic gaze stabilization based on feedback–error learning with nonparametric regression networks. Neural Netw. 2001;14:201–216. doi:10.1016/S0893-6080(00)00084-8

- Shibata T, Tabata H, Schaal S, Kawato M. A model of smooth pursuit based on learning of the target dynamics using only retinal signals. Neural Netw. 2005;18:213–225. doi:10.1016/j.neunet.2005.01.001

- Shidara M, Kawano K, Gomi H, Kawato M. Inverse-dynamics model eye movement control by Purkinje cells in the cerebellum. Nature. 1993;365:50–52. doi:10.1038/365050a0

- Takemura A, Inoue Y, Gomi H, Kawato M, Kawano K. Change in neuronal firing patterns in the process of motor command generation for the ocular following response. J. Neurophysiol. 2001;86:1750–1763. doi:10.1152/jn.2001.86.4.1750

- Tanaka S.C, Doya K, Okada G, Ueda K, Okamoto Y, Yamawaki S. Prediction of immediate and future rewards differentially recruits cortico-basal ganglia loops. Nat. Neurosci. 2004;7:887–893. doi:10.1038/nn1279

- Tanaka K, Khiroug L, Santamaria F, Doi T, Ogasawara H, Ellis-Davies G, Kawato M, Augustine G.J. Ca2+ requirements for cerebellar long-term synaptic depression: role for a postsynaptic leaky integrator. Neuron. 2007;54:787–800. doi:10.1016/j.neuron.2007.05.014

- Tsukahara N, Kawato M. Dynamic and plastic properties of the brain stem neuronal networks as the possible neuronal basis of learning and memory. In: Amari S, Arbib M.A, editors. Competition and cooperation in neural nets. Lecture Notes in Biomathematics 45. Springer; New York, NY: 1982. pp. 430–441.

- Ude A, Atkeson C.G. Online tracking and mimicking of human movements by a humanoid robot. J. Adv. Robot. 2003;17:165–178. doi:10.1163/156855303321165114

- Ude A, Atkeson C.G, Riley M. Programming full-body movements for humanoid robots by observation. Robot. Auton. Syst. 2004;47:93–108. doi:10.1016/j.robot.2004.03.004

- Winkelman B, Frens M. Motor coding in floccular climbing fibers. J. Neurophysiol. 2006;95:2342–2351. doi:10.1152/jn.01191.2005

- Yamamoto K, Kobayashi Y, Takemura A, Kawano K, Kawato M. Computational studies on acquisition and adaptation of ocular following responses based on cerebellar synaptic plasticity. J. Neurophysiol. 2002;87:1554–1571. doi:10.1152/jn.00166.2001

- Yamamoto K, Kawato M, Kotosaka S, Kitazawa S. Encoding of movement dynamics by Purkinje cell simple spike activity during fast arm movements under resistive and assistive force fields. J. Neurophysiol. 2007;97:1588–1599. doi:10.1152/jn.00206.2006